- Number of slides: 62

Towards an Autonomous Corpora: Khurshid Ahmad, Professor of Artificial Intelligence, Department of Computing, University of Surrey, England. Dial-a-Corpus, Tuscan Word Centre/University of Siena, Certosa di Pontignano, Siena, Italy. June 27th, 2005.

Towards an Autonomous Corpora: Preamble
Linguists often rely on a “corpus” of language, a body of recorded speech and writing, nowadays usually computerised, to study the structure and function of the language system [1]. But language, if anything, is an eco-system, where new constituents are born, infants grow into mature beings, and some constituents die or are pruned. There are social norms, biological limitations, and psychological innovations.

[1] Corpus colossal. (2005). The Economist. Jan 20th 2005 (http://www.economist.com/)

Towards an Autonomous Corpora: Preamble
There are some corpora with virtual life:
- Conversation and dialogue recordings: these sub-corpora of major corpora have a sense of life through the interaction between people, with hesitations and retractions.
- Monitor corpora: the continual addition (and pruning) of texts, particularly news texts, induces life in the corpus.
- Monitor corpora were ahead of their time, and brave souls built them with minimal tools and scarce data.

Corpus colossal. (2005). The Economist. Jan 20th 2005 (http://www.economist.com/)

Towards an Autonomous Corpora: Preamble
There are some corpora with virtual life:
- Conversation and dialogue recordings: these sub-corpora of major corpora have a sense of life through the interaction between people, with hesitations and retractions.
- Monitor corpora: the continual addition (and pruning) of texts, particularly news texts, induces life in the corpus.
- Texts collected by large-scale hypertextual web search engines (Google, Yahoo, WebCrawler) can be organised into a text corpus; let us call it a web-enabled corpus.

Towards an Autonomous Corpora: Preamble
How does one collect texts from the ‘web’?
- You find a search engine (Google);
- Type in keywords or web site names;
- Get thousands of web documents, texts, images, e-mails, travel agents ...
- Select texts YOURSELVES;
- Store texts;
- Analyse texts.

Towards an Autonomous Corpora: Preamble
How does one collect texts from the ‘web’?
- You find a search engine (Google);
- Type in keywords or web site names;
- Get thousands of web documents, texts, images, e-mails, travel agents ...
- Select texts YOURSELVES;
- Store texts;
- Analyse texts.

But this is feasible only for a few hundred thousand documents; what happens when millions of new documents are created every week?
- The deluge of information is such that we need helpers/servants, robots, to do some of the spade work.
- THIS IS THE BURDEN OF MY TALK

Towards an Autonomous Corpora: Preamble
How does one collect texts from the ‘web’?
- You find a search engine (Google);
- Type in keywords or web site names;
- Get thousands of web documents, texts, images, e-mails, travel agents ...
- Select texts YOURSELVES;
- Store texts;
- Analyse texts.

How the documents reach you:
- The documents you get ‘off’ the web are collected by search engines or web crawlers AUTOMATICALLY, without much human intervention.
- 200 million pages are trawled every day.
- The quality of the data sometimes reflects this approach.

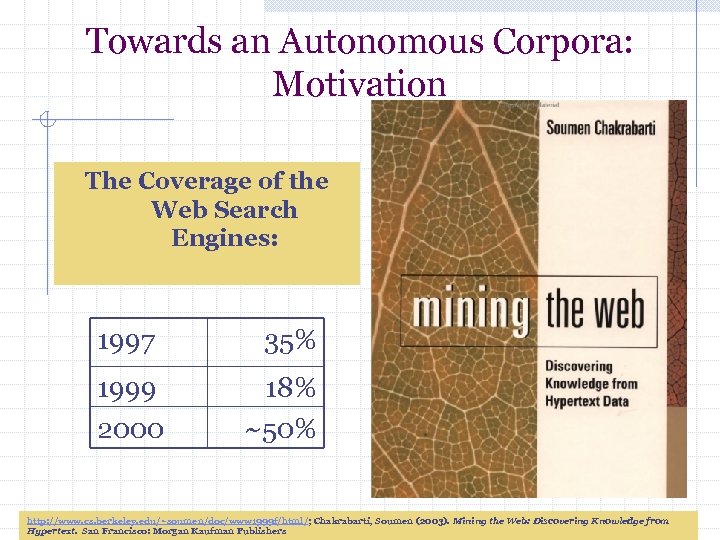

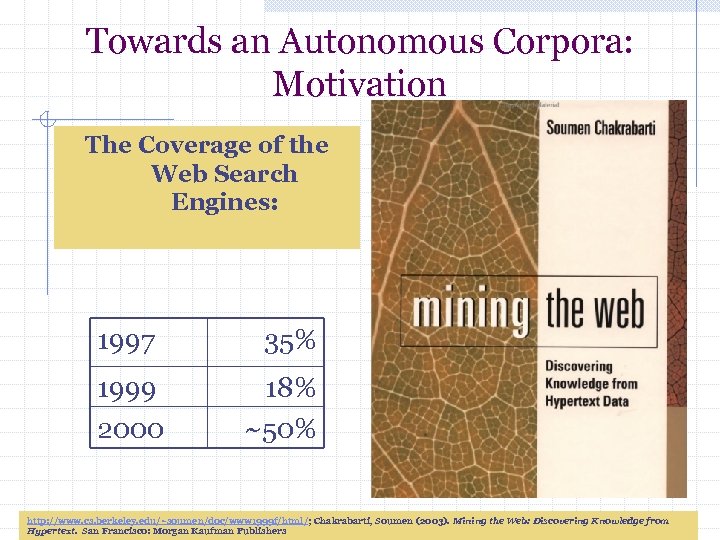

Towards an Autonomous Corpora: Motivation
The Coverage of the Web Search Engines:

| Year | 1997 | 1999 | 2000 |
|---|---|---|---|
| Coverage | 35% | 18% | ~50% |

http://www.cs.berkeley.edu/~soumen/doc/www1999f/html/; Chakrabarti, Soumen (2003). Mining the Web: Discovering Knowledge from Hypertext. San Francisco: Morgan Kaufmann Publishers.

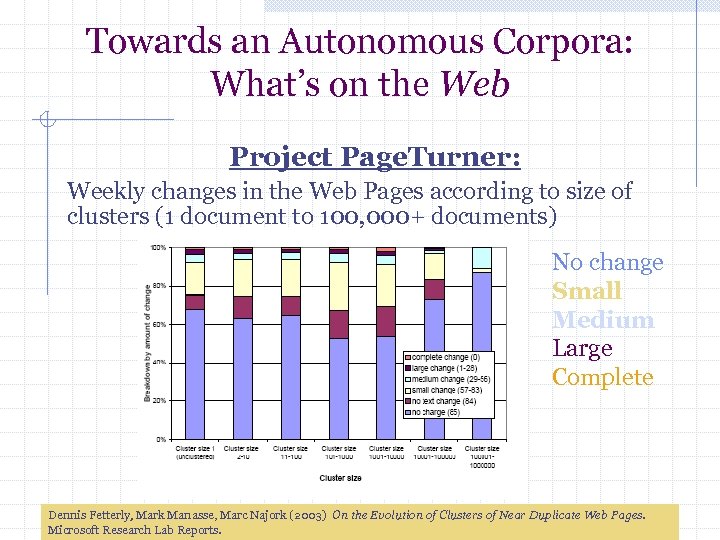

Towards an Autonomous Corpora: What’s on the Web
- Microsoft Research’s “PageTurner” project measured the amount of textual change in individual web pages over time.
- PageTurner crawled a set of slightly over 150 million web pages once a week, starting in November 2002 and continuing for 11 weeks.
- For every downloaded page, a vector of 84 features was recorded, together with other salient information, such as the URL, the HTTP status code (or any DNS or TCP error), the document’s length, the number of words, etc.

Dennis Fetterly, Mark Manasse, Marc Najork (2003). On the Evolution of Clusters of Near-Duplicate Web Pages. Microsoft Research Lab Reports.

Towards an Autonomous Corpora: What’s on the Web
- Microsoft Research’s “PageTurner” project measured the amount of textual change in individual web pages over time.
- 150 million documents were downloaded in one week.
- 57 million were similar to some other document downloaded that week.
- 93 million documents were not similar to any other document.
- The documents that were similar to others fell into 13,283,856 clusters. In other words, 44,022,091 documents, or 29.2% of all the documents downloaded, were near duplicates of the 13,283,856 “canonical” documents representing the clusters.

Dennis Fetterly, Mark Manasse, Marc Najork (2003). On the Evolution of Clusters of Near-Duplicate Web Pages. Microsoft Research Lab Reports.
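The cluster figures on this slide can be cross-checked with a little arithmetic. The sketch below uses only the numbers quoted above; the variable names are illustrative, and the "implied total" is simply derived from the 29.2% figure rather than taken from the cited report.

```python
# Figures quoted on the slide (Fetterly, Manasse & Najork, 2003).
clusters = 13_283_856          # clusters of mutually similar documents
near_duplicates = 44_022_091   # documents duplicating a cluster's canonical page
share = 0.292                  # near duplicates as a fraction of all downloads

# Each cluster contributes one canonical document plus its near duplicates,
# so the two figures together give the number of "similar" documents.
similar_documents = clusters + near_duplicates

# Total crawl size implied by the 29.2% figure (a derived value, not a quoted one).
implied_total = near_duplicates / share

print(f"similar documents: {similar_documents:,}")
print(f"implied crawl size: {implied_total / 1e6:.1f} million")
```

The sum comes to roughly 57 million similar documents, and the implied crawl size is slightly over 150 million, which agrees with the rounded figures on the slide.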

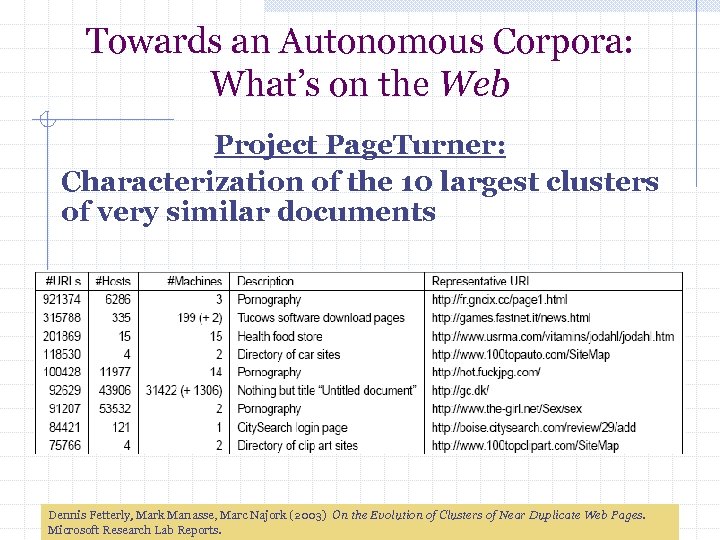

Towards an Autonomous Corpora: What’s on the Web
Project PageTurner: Characterization of the 10 largest clusters of very similar documents.
Dennis Fetterly, Mark Manasse, Marc Najork (2003). On the Evolution of Clusters of Near-Duplicate Web Pages. Microsoft Research Lab Reports.

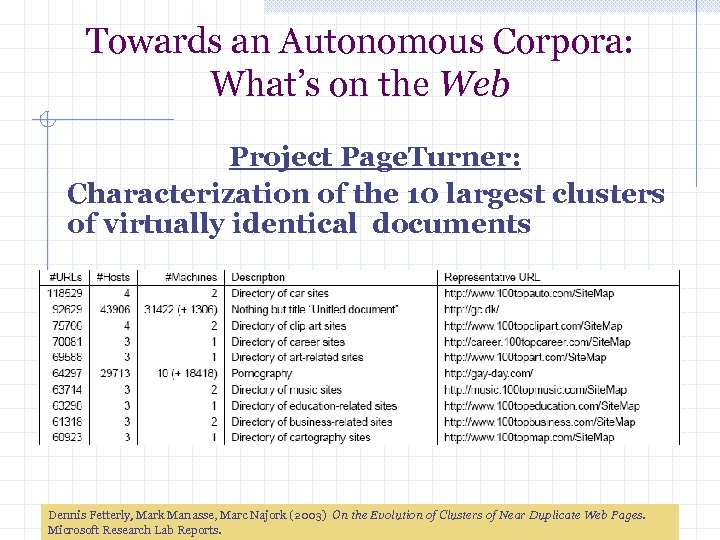

Towards an Autonomous Corpora: What’s on the Web
Project PageTurner: Characterization of the 10 largest clusters of virtually identical documents.
Dennis Fetterly, Mark Manasse, Marc Najork (2003). On the Evolution of Clusters of Near-Duplicate Web Pages. Microsoft Research Lab Reports.

Towards an Autonomous Corpora: What’s on the Web
Project PageTurner: Weekly changes in web pages according to cluster size (1 document to 100,000+ documents). Change categories: no change, small, medium, large, complete.
Dennis Fetterly, Mark Manasse, Marc Najork (2003). On the Evolution of Clusters of Near-Duplicate Web Pages. Microsoft Research Lab Reports.

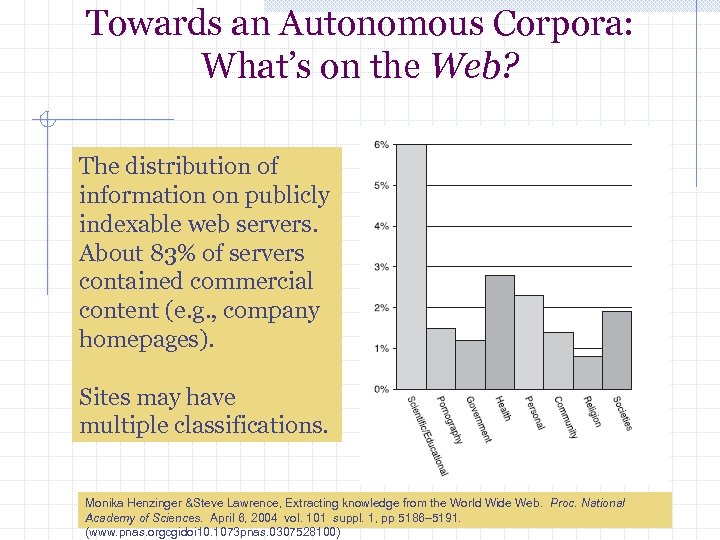

Towards an Autonomous Corpora: What’s on the Web?
The distribution of information on publicly indexable web servers. About 83% of servers contained commercial content (e.g., company homepages). Sites may have multiple classifications.
Monika Henzinger & Steve Lawrence (2004). Extracting knowledge from the World Wide Web. Proc. National Academy of Sciences, April 6, 2004, vol. 101, suppl. 1, pp. 5186–5191. (www.pnas.org/cgi/doi/10.1073/pnas.0307528100)

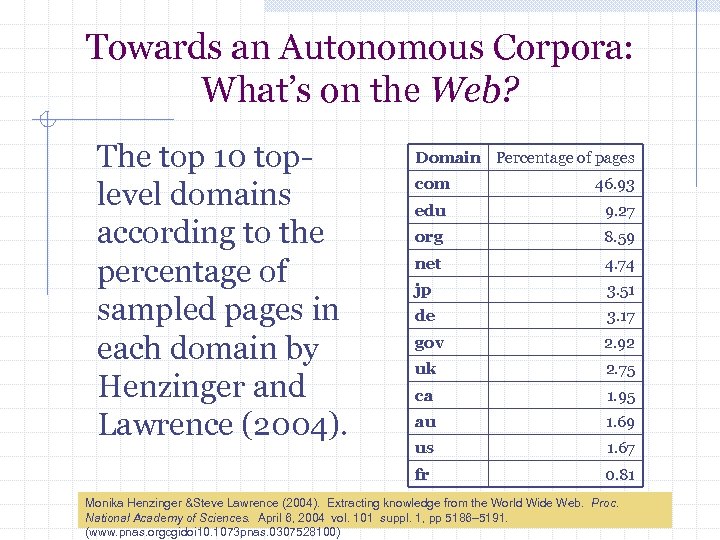

Towards an Autonomous Corpora: What’s on the Web?
The top 10 top-level domains according to the percentage of sampled pages in each domain, by Henzinger and Lawrence (2004).

| Domain | Percentage of pages |
|---|---|
| com | 46.93 |
| edu | 9.27 |
| org | 8.59 |
| net | 4.74 |
| jp | 3.51 |
| de | 3.17 |
| gov | 2.92 |
| uk | 2.75 |
| ca | 1.95 |
| au | 1.69 |
| us | 1.67 |
| fr | 0.81 |

Monika Henzinger & Steve Lawrence (2004). Extracting knowledge from the World Wide Web. Proc. National Academy of Sciences, April 6, 2004, vol. 101, suppl. 1, pp. 5186–5191. (www.pnas.org/cgi/doi/10.1073/pnas.0307528100)

Towards an Autonomous Corpora: What’s on the Web?
- There are specialist search engines that already have expert-selected texts (images, maps, time series, ...) for a number of domains.
- For instance, Infomine advertises imaginative, informative and instructional texts, both expert-selected and robot-selected, in a range of ‘domains’:
  - Biological, Agricultural and Medical Sciences
  - Business Economics
  - Cultural Diversity
  - Ejournals
  - Government Information
  - Maps & GIS
  - Physical Sciences, Engineering, Computing and Maths
  - Social Sciences and Humanities
  - Visual & Performing Arts

http://infomine.ucr.edu/about/

Towards an Autonomous Corpora: What’s on the Web?

Towards an Autonomous Corpora: What’s on the Web? Searching the web for texts on corpus linguistics.

WebCrawler: Searching the web for texts on downward collocates.

WebCrawler

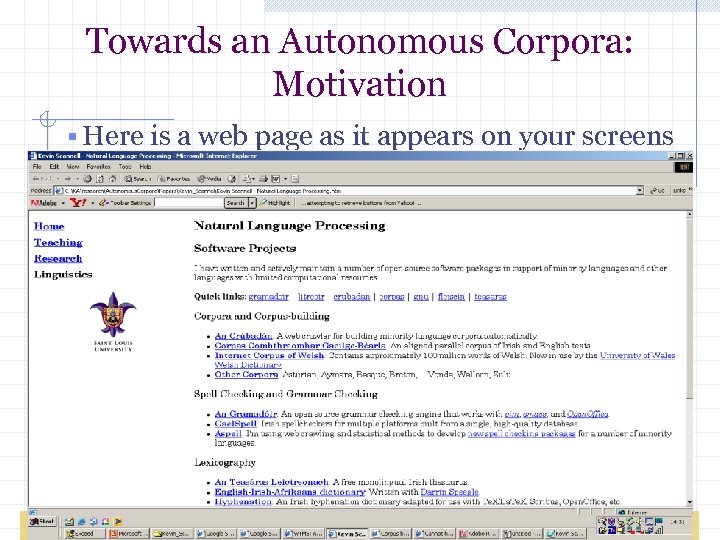

Towards an Autonomous Corpora: Motivation
- Here is a web page as it appears on your screen.

Sergey Brin & Lawrence Page (1998). "The anatomy of a large-scale hypertextual Web search engine". Computer Networks and ISDN Systems, Volume 30, pp. 107-117. (Available at citeseer.ist.psu.edu/brin98anatomy.html.)

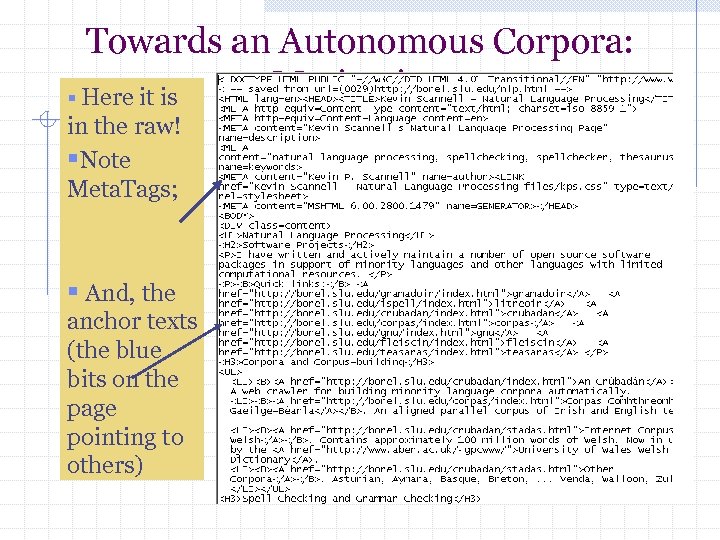

Towards an Autonomous Corpora: Motivation
- Here it is in the raw!
- Note the meta tags;
- And the anchor texts (the blue bits on the page pointing to other pages).

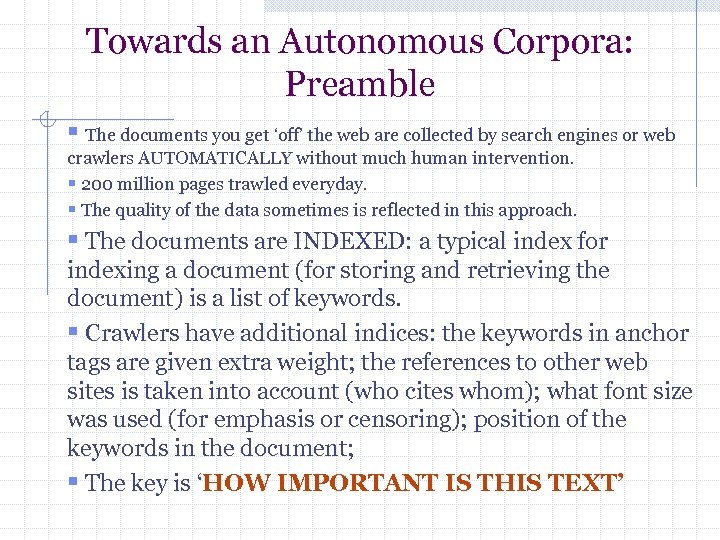

Towards an Autonomous Corpora: Preamble
- The documents you get ‘off’ the web are collected by search engines or web crawlers AUTOMATICALLY, without much human intervention.
- 200 million pages are trawled every day.
- The quality of the data sometimes reflects this approach.
- The documents are INDEXED: a typical index for a document (for storing and retrieving it) is a list of keywords.
- Crawlers have additional indices: the keywords in anchor tags are given extra weight; the references to other web sites are taken into account (who cites whom); what font size was used (for emphasis or censoring); the position of the keywords in the document.
- The key is ‘HOW IMPORTANT IS THIS TEXT’.
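A keyword index of the kind described here can be sketched in a few lines. This is a minimal illustration, assuming whitespace tokenisation and in-memory documents; the function names `build_index` and `search` and the sample texts are hypothetical, and no real search engine is claimed to work this way.

```python
from collections import defaultdict


def build_index(documents):
    """Map each keyword to {doc_id: [word positions]} (an inverted index)."""
    index = defaultdict(dict)
    for doc_id, text in documents.items():
        for position, word in enumerate(text.lower().split()):
            index[word].setdefault(doc_id, []).append(position)
    return index


def search(index, word):
    """Return the ids of documents containing the keyword, in sorted order."""
    return sorted(index.get(word.lower(), {}))


# Two toy documents standing in for crawled pages.
docs = {
    "d1": "corpus linguistics uses a corpus",
    "d2": "web corpus",
}
index = build_index(docs)
print(search(index, "corpus"))
print(index["corpus"])
```

Recording the word positions as well as the document ids is what allows the position of a keyword to be taken into account later, as the slide mentions.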

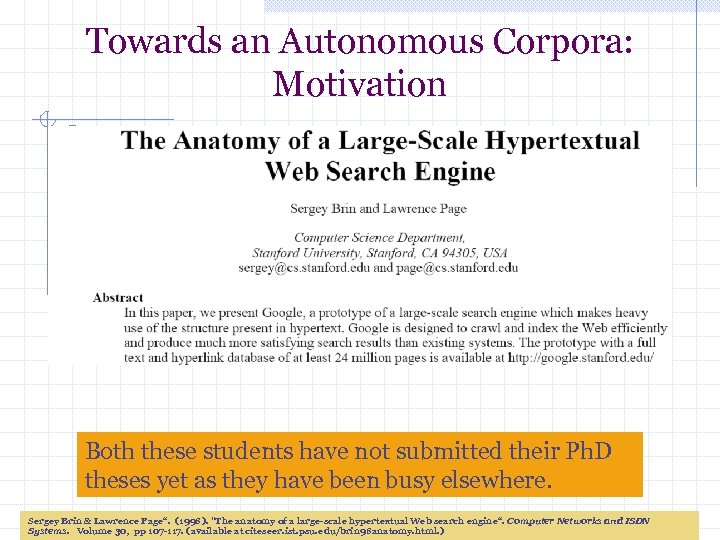

Towards an Autonomous Corpora: Motivation
- Neither of these students has submitted a PhD thesis yet, as they have been busy elsewhere.

Sergey Brin & Lawrence Page (1998). "The anatomy of a large-scale hypertextual Web search engine". Computer Networks and ISDN Systems, Volume 30, pp. 107-117. (Available at citeseer.ist.psu.edu/brin98anatomy.html.)

Towards an Autonomous Corpora: Motivation
- Texts collected by large-scale hypertextual web search engines (Google, Yahoo, Alta Vista) can be organised into a text corpus; let us call it a web-enabled corpus.
- The web search engines are now an essential fact of life, complete with the verb "to google".
- The number of texts collected every day and, more importantly, the number of texts created every day on the Web can now only be estimated.
- But compare the TREC 96 ‘very large corpus’ (c. 20 GB) with a one-day trawl by the then ‘prototype of a large-scale search engine’ (Google) in 1998: 147 GB from a trawl of 24 million web pages.
- Now over 200 million pages are trawled per day.

Sergey Brin & Lawrence Page (1998). "The anatomy of a large-scale hypertextual Web search engine". Computer Networks and ISDN Systems, Volume 30, pp. 107-117. (Available at citeseer.ist.psu.edu/brin98anatomy.html.)

Towards an Autonomous Corpora: Some Definitions
HYPERLINK
- A hyperlink, or simply a link, is a reference in a hypertext document to another document or other resource.
- A hyperlink is similar to a citation in literature.
- Combined with a data network and a suitable access protocol, a hyperlink can be used to fetch the resource referenced. This can then be saved, viewed, or displayed as part of the referencing document.
- Hyperlinks are part of the foundation of the World Wide Web.
- A link has two ends, called anchors, and a direction. The link starts at the source anchor and points to the destination anchor. However, the term link is often used for the source anchor, while the destination anchor is called the link target.

http://en.wikipedia.org/wiki/Hyperlink
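To make the source anchor and link target concrete, here is a small sketch that pulls both out of an HTML fragment using Python's standard `html.parser` and `urllib.parse.urljoin`. The class name `LinkExtractor`, the sample page and the example URLs are assumptions made for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collect (link target, anchor text) pairs from <a href="..."> elements."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []      # list of (absolute target URL, anchor text)
        self._href = None    # target of the <a> element currently open, if any
        self._text = []      # text fragments seen inside that element

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative targets against the page's own URL.
                self._href = urljoin(self.base_url, href)
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


page = (
    '<p>See the <a href="/corpus.html">corpus page</a> and '
    '<a href="http://example.org/">an example</a>.</p>'
)
parser = LinkExtractor("http://example.com/index.html")
parser.feed(page)
for target, anchor_text in parser.links:
    print(target, "<-", anchor_text)
```

The page being parsed plays the role of the source, each `href` is a link target, and the text between the tags is the visible anchor text.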

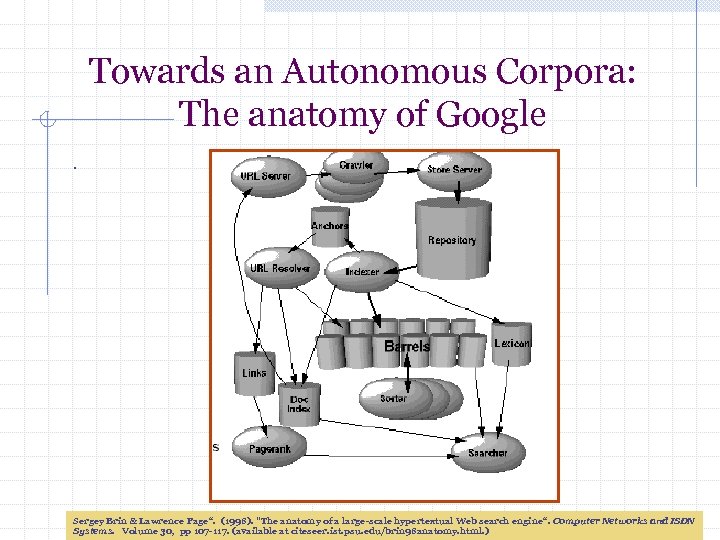

Towards an Autonomous Corpora: The anatomy of Google.
Sergey Brin & Lawrence Page (1998). "The anatomy of a large-scale hypertextual Web search engine". Computer Networks and ISDN Systems, Volume 30, pp. 107-117. (Available at citeseer.ist.psu.edu/brin98anatomy.html.)

Towards an Autonomous Corpora: How do the crawlers work?
Plain Hits and Fancy Hits:
- Fancy hits: Uniform Resource Locator (URL); web page title; anchor text; meta tag.
- Plain hits: word lists in text documents.

http://en.wikipedia.org/wiki/Hyperlink

Towards an Autonomous Corpora: How do the crawlers work?
PageRank and Plain Hits and Fancy Hits: In addition to plain and fancy hits, Google’s hit list contains information about the frequency of usage of the web pages cited (the authority of the web page/site), and there is much inference about the pragmatic intent of the author of the web page:
- Font size; position of the keywords;
- Title of the page; keywords in anchor text.

http://en.wikipedia.org/wiki/Hyperlink
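The distinction between plain and fancy hits can be illustrated with a toy scoring function. This is only a sketch: the field names, the weights in `TYPE_WEIGHTS` and the function names are invented for the example and are not taken from Google or from the cited papers.

```python
# Fields whose hits count as "fancy"; everything else is a plain hit.
FANCY_FIELDS = {"url", "title", "anchor", "meta"}

# Illustrative weights only (assumed values, not published ones).
TYPE_WEIGHTS = {"title": 5.0, "anchor": 4.0, "url": 3.0, "meta": 2.0, "plain": 1.0}


def hit_type(field):
    """Classify a hit by the part of the page in which the keyword occurred."""
    return "fancy" if field in FANCY_FIELDS else "plain"


def score(hit_fields):
    """Sum the weight of every hit; unknown fields count as plain hits."""
    return sum(TYPE_WEIGHTS.get(f, TYPE_WEIGHTS["plain"]) for f in hit_fields)


# A keyword found once in the title, once in anchor text, twice in the body.
hits = ["title", "anchor", "body", "body"]
print([hit_type(f) for f in hits])
print(score(hits))
```

A real ranking function would combine such hit weights with further signals such as font size, keyword position and PageRank, as the slide lists.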

Towards an Autonomous Corpora: The Use of Anchors
The text of links is treated in a special way in the Google search engine. Most search engines associate the text of a link with the page that the link is on. In addition, the Google search engine associates it with the page the link points to. This has several advantages. First, anchors often provide more accurate descriptions of web pages than the pages themselves. Second, anchors may exist for documents which cannot be indexed by a text-based search engine, such as images, programs, and databases.
Amy Langville and Carl Meyer (2005). The Use of the Linear Algebra by Web Search Engines. http://citeseer.ist.psu.edu/718721.html. (Bulletin of the International Linear Algebra Society, No. 33, Dec. 2005, pp. 2-6.)
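Associating anchor text with the page a link points to can be sketched as follows. The triples in `links` are made-up examples, and `index_anchor_text` is a hypothetical helper; the point is only that a target such as an image becomes findable through words that appear on other pages.

```python
from collections import defaultdict

# (source page, link target, anchor text) triples; all values are invented.
links = [
    ("http://a.example/index.html", "http://b.example/map.png", "map of Siena"),
    ("http://c.example/notes.html", "http://b.example/map.png", "Siena street map"),
    ("http://a.example/index.html", "http://d.example/cv.html", "author homepage"),
]


def index_anchor_text(links):
    """Index each anchor-text word under the page the link points TO."""
    index = defaultdict(set)
    for _source, target, anchor in links:
        for word in anchor.lower().split():
            index[word].add(target)
    return index


anchor_index = index_anchor_text(links)
# The image itself contains no indexable text, yet it is found via its anchors.
print(anchor_index["siena"])
```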

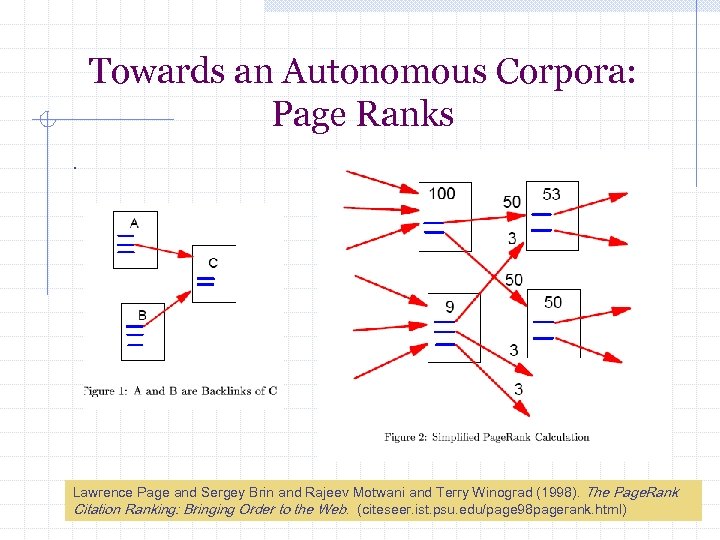

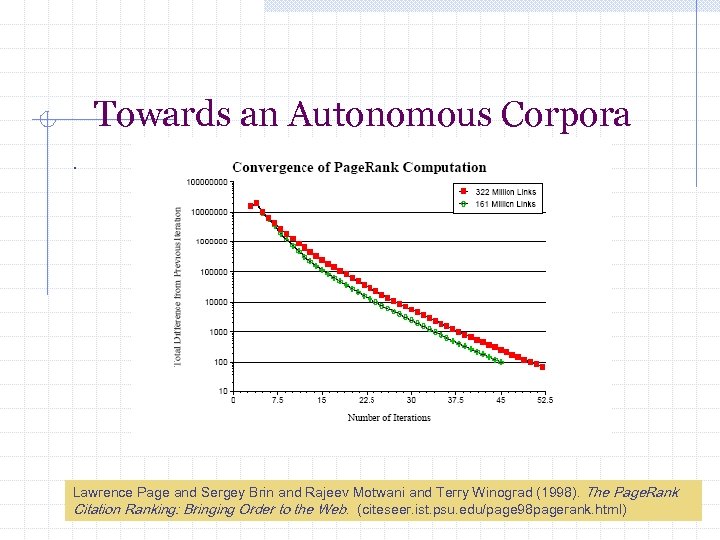

Towards an Autonomous Corpora: Page Ranks.
Lawrence Page, Sergey Brin, Rajeev Motwani and Terry Winograd (1998). The PageRank Citation Ranking: Bringing Order to the Web. (citeseer.ist.psu.edu/page98pagerank.html)

Towards an Autonomous Corpora: The PageRank Algorithm
We assume page $A$ has pages $T_1, \ldots, T_n$ which point to it (i.e., are citations). The parameter $d$ is a damping factor which can be set between 0 and 1. We usually set $d$ to 0.85. Also, $C(A)$ is defined as the number of links going out of page $A$. The PageRank of a page $A$ is given as follows:

$$PR(A) = (1 - d) + d \left( \frac{PR(T_1)}{C(T_1)} + \cdots + \frac{PR(T_n)}{C(T_n)} \right)$$

Note that the PageRanks form a probability distribution over web pages, so the sum of all web pages’ PageRanks will be one.
Amy Langville and Carl Meyer (2005). The Use of the Linear Algebra by Web Search Engines. http://citeseer.ist.psu.edu/718721.html. (Bulletin of the International Linear Algebra Society, No. 33, Dec. 2005, pp. 2-6.)
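The formula can be tried out on a toy graph by repeated substitution. The sketch below is an illustration under stated assumptions: every page has at least one outgoing link, all ranks start at 1.0, and the three-page graph `web` is invented. One caveat worth knowing: with the $(1 - d)$ term written exactly as above, the values sum to the number of pages rather than to one; dividing that term by the number of pages would give values that sum to one.

```python
def pagerank(links, d=0.85, iterations=50):
    """Iterate PR(A) = (1 - d) + d * sum(PR(T) / C(T)) over pages T linking to A.

    `links` maps each page to the list of pages it points to.
    Assumes every page has at least one outgoing link.
    """
    pages = list(links)
    pr = {page: 1.0 for page in pages}
    for _ in range(iterations):
        new_pr = {}
        for page in pages:
            # Contribution of every page T that points to `page`.
            incoming = sum(pr[t] / len(links[t]) for t in pages if page in links[t])
            new_pr[page] = (1 - d) + d * incoming
        pr = new_pr
    return pr


# Toy web: A links to B and C, B links to C, C links back to A.
web = {"A": ["B", "C"], "B": ["C"], "C": ["A"]}
ranks = pagerank(web)
for page, rank in sorted(ranks.items()):
    print(page, round(rank, 3))
```

In this toy graph, page C is pointed to by both other pages and ends up with the highest score, while B, cited only by A, ends up with the lowest.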

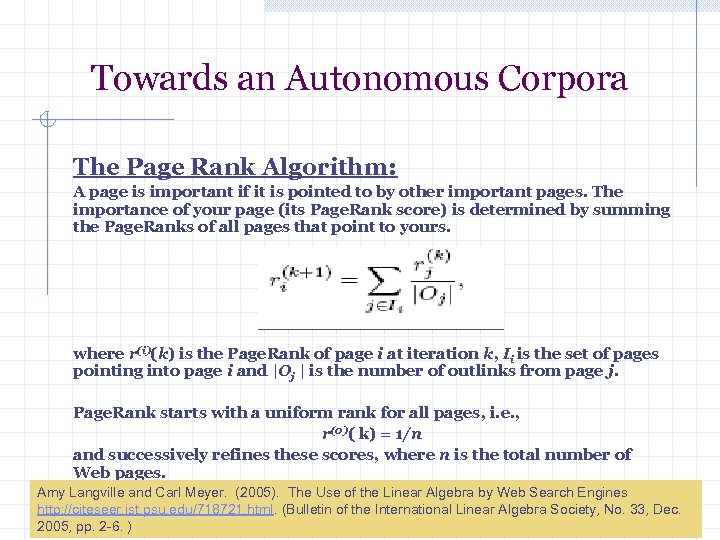

Towards an Autonomous Corpora: The PageRank Algorithm
A page is important if it is pointed to by other important pages. The importance of your page (its PageRank score) is determined by summing the PageRanks of all pages that point to yours. In the iterative formulation, $r_i^{(k)}$ is the PageRank of page $i$ at iteration $k$, $I_i$ is the set of pages pointing into page $i$, and $|O_j|$ is the number of outlinks from page $j$. PageRank starts with a uniform rank for all pages, i.e., $r_i^{(0)} = 1/n$, where $n$ is the total number of Web pages, and successively refines these scores.
Amy Langville and Carl Meyer (2005). The Use of the Linear Algebra by Web Search Engines. http://citeseer.ist.psu.edu/718721.html. (Bulletin of the International Linear Algebra Society, No. 33, Dec. 2005, pp. 2-6.)
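The update rule that these symbols describe does not survive in the slide text. A form consistent with the definitions given (page $i$, iteration $k$, in-link set $I_i$, out-link count $|O_j|$) would be the following; it is a reconstruction from those definitions, not a copy of the original slide.

```latex
r_i^{(k+1)} = \sum_{j \in I_i} \frac{r_j^{(k)}}{|O_j|},
\qquad
r_i^{(0)} = \frac{1}{n}
```

Each page $j$ that points to page $i$ passes on its current rank divided equally among its $|O_j|$ outlinks, and page $i$ sums what it receives.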

Towards an Autonomous Corpora: Some Definitions
A web crawler (also known as a web spider) is a program which browses the World Wide Web in a methodical, automated manner. Web crawlers are mainly used to create a copy of all the visited pages for later processing by a search engine, which will index the downloaded pages to provide fast searches. A web crawler is one type of bot, or software agent. In general, it starts with a list of URLs to visit. As it visits these URLs, it identifies all the hyperlinks in the page and adds them to the list of URLs to visit, recursively browsing the Web according to a set of policies.
http://en.wikipedia.org/wiki/Web_crawler
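The crawl loop described above can be sketched without touching the network. In this illustration `fetch_links` is a hypothetical callback that returns the hyperlinks found on a page, the `toy_web` dictionary stands in for real web pages, and the only policy applied is a cap on the number of pages visited; a queue is used in place of literal recursion.

```python
from collections import deque


def crawl(seeds, fetch_links, max_pages=100):
    """Visit pages breadth-first, starting from the seed URLs.

    `fetch_links(url)` must return the list of URLs linked from `url`.
    Returns the URLs in the order they were visited.
    """
    frontier = deque(seeds)   # the list of URLs still to visit
    seen = set(seeds)         # URLs already queued, to avoid revisiting
    visited = []
    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        visited.append(url)
        for link in fetch_links(url):
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return visited


# A toy web: each key is a page, each value the links found on it.
toy_web = {"a": ["b", "c"], "b": ["c", "d"], "c": ["a"], "d": []}
order = crawl(["a"], lambda url: toy_web.get(url, []))
print(order)
```

A production crawler would add the policies the definition alludes to, such as politeness delays, robots.txt handling and revisit schedules, none of which are shown here.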

Towards an Autonomous Corpora: Some Definitions
‘Running a web crawler is a challenging task. There are tricky performance and reliability issues and even more importantly, there are social issues. Crawling is the most fragile application since it involves interacting with hundreds of thousands of web servers and various name servers which are all beyond the control of the system.’ (Brin & Page 1998)
http://en.wikipedia.org/wiki/Web_crawler

Towards an Autonomous Corpora: Some Defintions §Anchor text is the visible text in a hyperlink. §Anchor text gets a lot of weight in search engine algorithms because the linked text is usually relevant to the landing page. §The objective of search engines is to provide highly relevant search results; this is where anchor text helps as the tendency is, more often than not, to hyperlink words relevant to the landing page. Usually this is exploited by webmasters to procure high results in SERPS (search engine results pages). The concept of Google Bombing was/is possible through anchor text manipulation. Much has been written on anchor text which is available on the web today. Although the search engines are well aware of anchor text manipulation, not much change can be expected in the SE algorithms in the near future because the brighter side of the picture cannot be overlooked: anchor text delivers relevance. http: //en. wikipedia. org/wiki/ Anchor_text

Towards an Autonomous Corpora: Some Definitions Hit Lists A hit list corresponds to a list of occurrences of a particular word in a particular document including position, font, and capitalization information. Hit lists account for most of the space used in both the forward and the inverted indices. Because of this, it is important to represent them as efficiently as possible. Sergey Brin & Lawrence Page. (1998). "The anatomy of a large-scale hypertextual Web search engine". Computer Networks and ISDN Systems. Volume 30, pp 107-117. (available at citeseer.ist.psu.edu/brin98anatomy.html)
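
Brin & Page describe a compact encoding in which a plain hit occupies two bytes: one capitalization bit, three bits of relative font size and twelve bits of word position. The sketch below packs hits in that spirit. The exact bit order, the clamping of long positions to 4095 and the function names are assumptions made for illustration, not details taken from the Google implementation.

```python
from collections import defaultdict

MAX_POSITION = 4095  # largest value that fits in 12 bits (assumed clamp)


def pack_hit(capitalized, font_size, position):
    """Pack one plain hit into 16 bits: 1 cap bit, 3 font bits, 12 position bits."""
    if not 0 <= font_size <= 7:
        raise ValueError("font_size must fit in 3 bits")
    position = min(position, MAX_POSITION)
    return (int(capitalized) << 15) | (font_size << 12) | position


def unpack_hit(hit):
    """Inverse of pack_hit: returns (capitalized, font_size, position)."""
    return bool(hit >> 15), (hit >> 12) & 0b111, hit & 0xFFF


def build_hit_lists(tokens):
    """Return {word: [packed hits]} for the tokens of a single document."""
    hits = defaultdict(list)
    for position, token in enumerate(tokens):
        # Plain text carries no font information, so font_size is 0 here.
        hits[token.lower()].append(pack_hit(token[:1].isupper(), 0, position))
    return dict(hits)


if __name__ == "__main__":
    for word, packed in build_hit_lists("The cat saw the Cat".split()).items():
        print(word, [unpack_hit(h) for h in packed])
```

Two bytes per occurrence is what makes it feasible to store a hit for every word of every document in both the forward and the inverted index.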

Towards an Autonomous Corpora: Some more definitions A Google bomb or Google wash is an attempt to influence the ranking of a given site in results returned by the Google search engine. Due to the way that Google's PageRank algorithm works, a website will be ranked higher if the sites that link to that page all use consistent anchor text. Googlebomb is used both as a verb and a noun. http://en.wikipedia.org/wiki/Google_Bomb

Towards an Autonomous Corpora: Motivation § Texts collected by large-scale hypertextual web search engines (Google, Yahoo, AltaVista) can be organised into a text corpus – let us call it a web-enabled corpus. § A whole new technology has developed around the search engines – and web crawlers are one of the main artefacts of this technology. § Web crawling technology draws from bibliometrics, information retrieval, mark-up languages, Internet technology, and from marketing theory and practice. § Crawlers have to deal with duplications, erroneous and incomplete documents, misleading titles, and for all practical purposes an uncountable number of actors and stakeholders. Sergey Brin & Lawrence Page. (1998). "The anatomy of a large-scale hypertextual Web search engine". Computer Networks and ISDN Systems. Volume 30, pp 107-117. (available at citeseer.ist.psu.edu/brin98anatomy.html)

Towards an Autonomous Corpora: Motivation § A whole new technology has developed around the search engines – and web crawlers are one of the main artefacts of this technology. § A web-enabled corpus, in my view, will not depend only on traditional keyword-based trawls, but rather will depend on an ontological description provided by the corpus builder. § Yes, web trawls bring in all sorts of ‘mean texts’ (says Mark Liberman) but what comes back is living language: duplications, errors, misleading titles, confused content, frequent revisions, and always some exciting new text or text type. § The trawl brings back different genres and different modalities of communication (images, sounds and even ongoing computer games). Sergey Brin & Lawrence Page. (1998). "The anatomy of a large-scale hypertextual Web search engine". Computer Networks and ISDN Systems. Volume 30, pp 107-117. (available at citeseer.ist.psu.edu/brin98anatomy.html)

Towards an Autonomous Corpora: Motivation § Crawler technology – powered by an array of computers, clever programs that can run over a distributed network, and limitless permission to crawl – has its own “craft theory of meaning”. § ‘Hits’ – word occurrences – are the centre-piece here, including both plain and fancy hits. § The sociology of links is critical to the success of a search engine – the more cited links (and by implication the content of the linked page) are regarded as more credible than the less cited ones: there are authorities and hubs. (Remember the first corpus of Educated British English?) Wilks, Yorick, Slator, Brian M., & Guthrie, Louise M. (1996). Electric Words: Dictionaries, Computers, Meaning. Cambridge (USA) & London: The

Towards an Autonomous Corpora: Motivation § Crawler technology – powered by an array of computers, clever programs that can run over a distributed network, and limitless permission to crawl – has its own “craft theory of meaning”. § Crawler technologists are compiling a lexicon – including a base lexicon and a list of neologisms – for indexing and retrieving stored documents. § The Google lexicon had 14 million entries in 1998 and hits were added on a daily basis. § Text-enabled thesaurus construction? Wilks, Yorick, Slator, Brian M., & Guthrie, Louise M. (1996). Electric Words: Dictionaries, Computers, Meaning. Cambridge (USA) & London: The

Towards an Autonomous Corpora: Motivation § The googlers, both crawlers and users, have raised the interesting question as to ‘how and why did the notion arise that one could write down not only word lists of parts and wholes but then move on to explaining or describing the meanings of words?’ § ‘Here is one of those historical-cultural shifts, full of philosophical significance in the European tradition of thought, where words come explicitly to refer to one another in an organised manner.’ § The question now is whether computers can help lexicographers and information retrieval experts to create the networks of meanings we find not only in a single text but in a text collection. Wilks, Yorick, Slator, Brian M., & Guthrie, Louise M. (1996). Electric Words: Dictionaries, Computers, Meaning. Cambridge (USA) & London: The

Towards an Autonomous Corpora: Motivation: Examples from Minority Languages § Kevin Scannell’s web crawling software An Crúbadán for minority languages (i.e. any language other than English) § An Crúbadán is designed to exploit the vast quantities of text freely available on the web as a way of bringing the benefits of statistical NLP to languages with small numbers of speakers and/or limited computational resources. § Initially it was deployed for the six Celtic languages, but more recently support has been added for a number of other languages from all parts of the world. § http://borel.slu.edu/index.html

Towards an Autonomous Corpora: Motivation: Examples from Minority Languages § Rayid Ghani, Rosie Jones, and Dunja Mladenic’s “Mining the web to create a minority language corpus”. § Language recognition § Web crawling § Comparison of information retrieval techniques § Aim: to create a corpus of Web documents in a minority language, e.g. Slovenian. This is difficult because Slovenian is very similar to Czech, Slovak and Croatian, and there are certainly more Czech and Croatian documents on the Web. § http://citeseer.nj.nec.com/492009.html

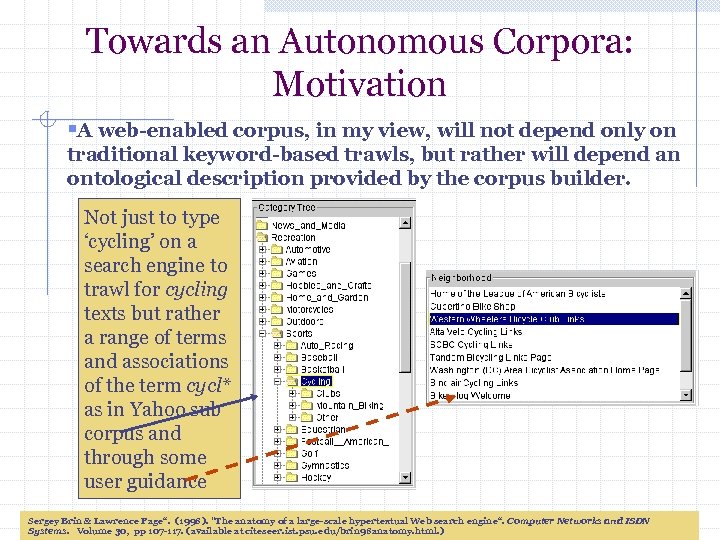

Towards an Autonomous Corpora: Motivation § A web-enabled corpus, in my view, will not depend only on traditional keyword-based trawls, but rather will depend on an ontological description provided by the corpus builder. The aim is not just to type ‘cycling’ into a search engine to trawl for cycling texts, but rather to use a range of terms and associations of the term cycl*, as in the Yahoo sub-corpus, together with some user guidance. Sergey Brin & Lawrence Page. (1998). "The anatomy of a large-scale hypertextual Web search engine". Computer Networks and ISDN Systems. Volume 30, pp 107-117. (available at citeseer.ist.psu.edu/brin98anatomy.html)

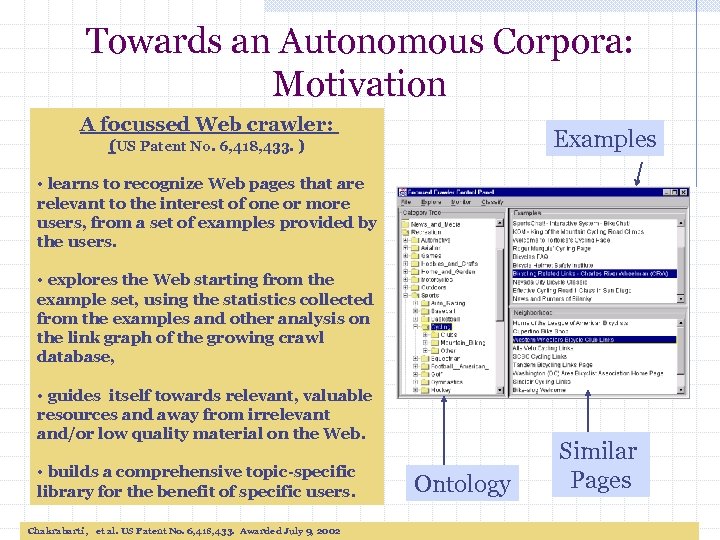

Towards an Autonomous Corpora: Motivation A focussed Web crawler: Examples (US Patent No. 6,418,433) • learns to recognize Web pages that are relevant to the interest of one or more users, from a set of examples provided by the users; • explores the Web starting from the example set, using the statistics collected from the examples and other analysis on the link graph of the growing crawl database; • guides itself towards relevant, valuable resources and away from irrelevant and/or low quality material on the Web; • builds a comprehensive topic-specific library for the benefit of specific users. Chakrabarti et al. US Patent No. 6,418,433. Awarded July 9, 2002. (Diagram labels: Ontology; Similar Pages)
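
The four behaviours listed for a focussed crawler can be illustrated with a very small best-first crawl. This is a sketch under stated assumptions and not the method claimed in the patent: relevance is approximated by a unigram profile learned from the user's example texts, links inherit the score of the page they were found on, and pages below a threshold are neither kept nor expanded. The function names and the toy `web` dictionary (URL mapped to page text and outlinks) are hypothetical.

```python
import heapq
from collections import Counter


def learn_profile(example_texts):
    """Relative word frequencies over the user-supplied example pages."""
    counts = Counter()
    for text in example_texts:
        counts.update(text.lower().split())
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()}


def relevance(text, profile):
    """Average profile weight of the words on a page (0.0 if empty)."""
    words = text.lower().split()
    if not words:
        return 0.0
    return sum(profile.get(word, 0.0) for word in words) / len(words)


def focused_crawl(seeds, web, profile, threshold, max_pages=50):
    """Best-first crawl: expand links found on the most relevant pages first."""
    frontier = [(-1.0, url) for url in seeds]   # min-heap on negated score
    heapq.heapify(frontier)
    seen = set(seeds)
    library = []                                 # the topic-specific collection
    visited = 0
    while frontier and visited < max_pages:
        _, url = heapq.heappop(frontier)
        if url not in web:
            continue
        visited += 1
        text, links = web[url]
        score = relevance(text, profile)
        if score < threshold:
            continue                             # steer away: do not expand
        library.append(url)
        for link in links:
            if link not in seen:
                seen.add(link)
                heapq.heappush(frontier, (-score, link))
    return library


# Hypothetical toy web: url -> (page text, outlinks).
TOY_TOPIC_WEB = {
    "seed": ("cycling race bicycle", ["bike", "cook"]),
    "bike": ("bicycle cycling tour", ["tour"]),
    "cook": ("pasta recipe sauce", ["pasta"]),
    "tour": ("cycling tour race", []),
    "pasta": ("cycling pasta", []),
}

if __name__ == "__main__":
    profile = learn_profile(["cycling race", "bicycle cycling tour"])
    print(focused_crawl(["seed"], TOY_TOPIC_WEB, profile, threshold=0.1))
```

In the toy web the cooking page scores zero, so its outlink is never followed: the crawl stays on the cycling pages, which is the self-guidance the slide describes.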

Towards an Autonomous Corpora: Motivation §A web-enabled corpus, in my view, will not depend only on one search engine but on many search engines

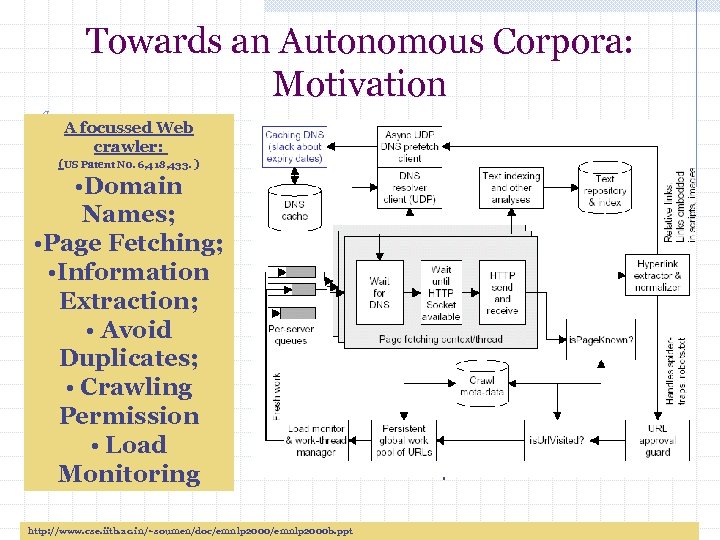

Towards an Autonomous Corpora: Motivation A focussed Web crawler (US Patent No. 6,418,433): • Domain Names; • Page Fetching; • Information Extraction; • Avoid Duplicates; • Crawling Permission; • Load Monitoring. http://www.cse.iitb.ac.in/~soumen/doc/emnlp2000b.ppt
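
Two of the concerns in this list, crawling permission and duplicate avoidance, are easy to illustrate. The sketch below checks URLs against a robots.txt policy with Python's standard `urllib.robotparser` and drops pages whose whitespace- and case-normalised text has already been seen. The robots.txt content, the `corpus-bot` agent name and the helper functions are hypothetical; exact-match hashing is a deliberate simplification and will not catch near-duplicates.

```python
import hashlib
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an example site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def make_permission_checker(robots_txt):
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def fingerprint(text):
    """Hash of the text after lower-casing and collapsing whitespace."""
    normalised = " ".join(text.lower().split())
    return hashlib.sha1(normalised.encode("utf-8")).hexdigest()


def filter_pages(pages, parser, agent="corpus-bot"):
    """Keep URLs the site allows us to crawl and whose content is new."""
    kept, seen = [], set()
    for url, text in pages:
        if not parser.can_fetch(agent, url):
            continue              # crawling permission refused
        digest = fingerprint(text)
        if digest in seen:
            continue              # duplicate content
        seen.add(digest)
        kept.append(url)
    return kept


if __name__ == "__main__":
    checker = make_permission_checker(ROBOTS_TXT)
    pages = [
        ("http://example.org/a", "Hello  World"),
        ("http://example.org/b", "hello world"),
        ("http://example.org/private/c", "secret"),
    ]
    print(filter_pages(pages, checker))
```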

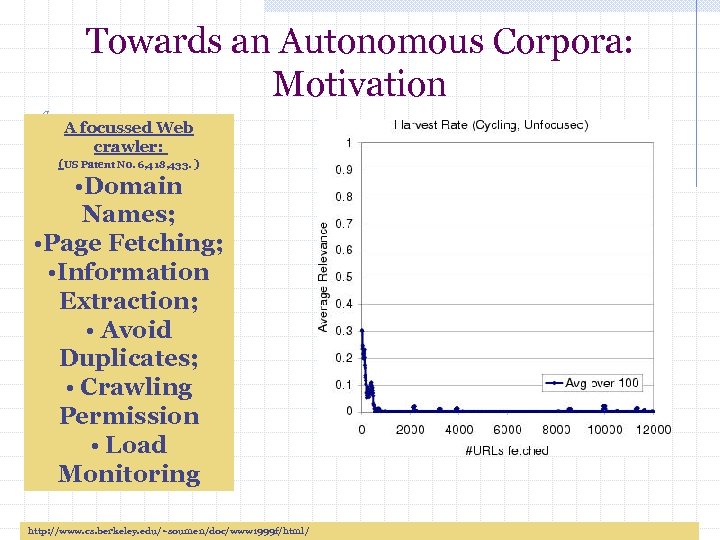

Towards an Autonomous Corpora: Motivation A focussed Web crawler (US Patent No. 6,418,433): • Domain Names; • Page Fetching; • Information Extraction; • Avoid Duplicates; • Crawling Permission; • Load Monitoring. http://www.cs.berkeley.edu/~soumen/doc/www1999f/html/

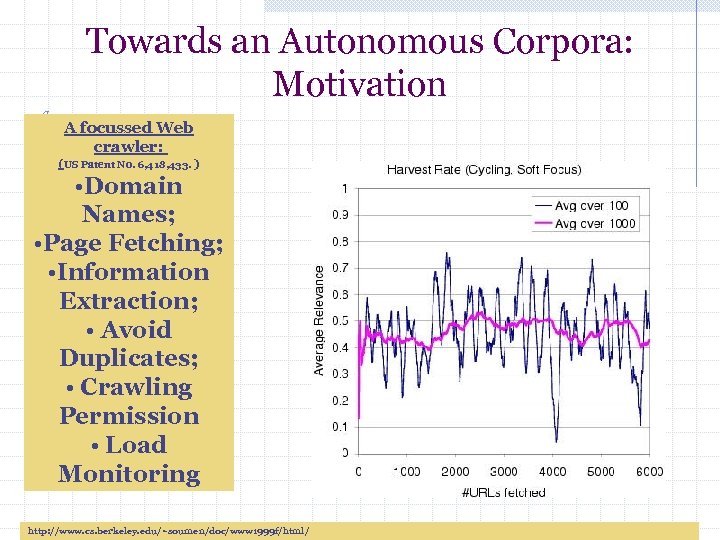

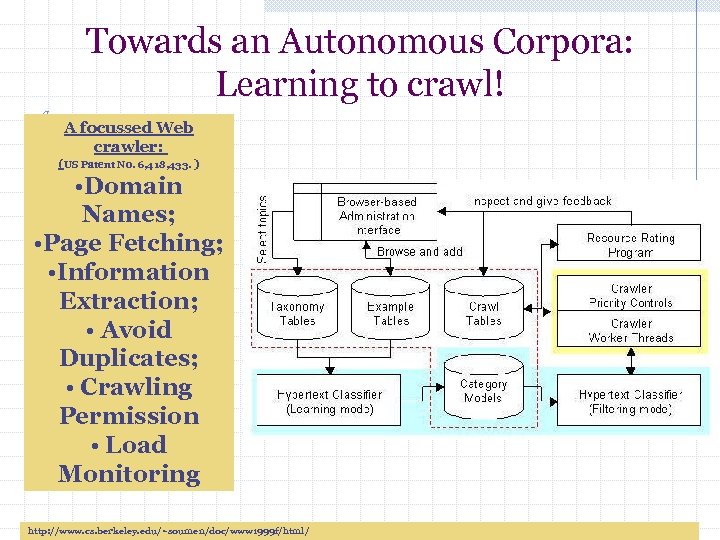

Towards an Autonomous Corpora: Learning to crawl! A focussed Web crawler (US Patent No. 6,418,433): • Domain Names; • Page Fetching; • Information Extraction; • Avoid Duplicates; • Crawling Permission; • Load Monitoring. http://www.cs.berkeley.edu/~soumen/doc/www1999f/html/

Towards an Autonomous Corpora: Motivation The Coverage of the Web Search Engines: 1997: 35%; 1999: 18%; 2000: ~50%. http://www.cs.berkeley.edu/~soumen/doc/www1999f/html/; Chakrabarti, Soumen (2003). Mining the Web: Discovering Knowledge from Hypertext. San Francisco: Morgan Kaufmann Publishers

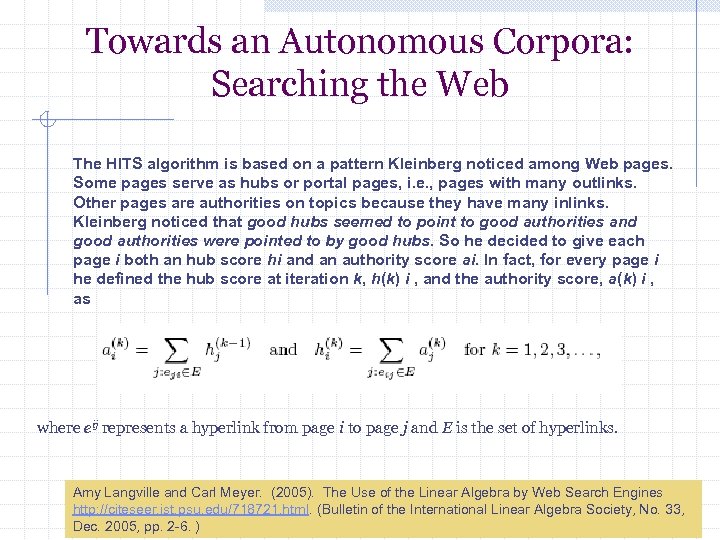

Towards an Autonomous Corpora: Searching the Web The HITS algorithm is based on a pattern Kleinberg noticed among Web pages. Some pages serve as hubs or portal pages, i.e., pages with many outlinks. Other pages are authorities on topics because they have many inlinks. Kleinberg noticed that good hubs seemed to point to good authorities and good authorities were pointed to by good hubs. So he decided to give each page i both a hub score $h_i$ and an authority score $a_i$. In fact, for every page i he defined the hub score at iteration k, $h_i^{(k)}$, and the authority score, $a_i^{(k)}$, as $a_i^{(k)} = \sum_{j:\, e_{ji} \in E} h_j^{(k-1)}$ and $h_i^{(k)} = \sum_{j:\, e_{ij} \in E} a_j^{(k)}$ for $k = 1, 2, 3, \ldots$, where $e_{ij}$ represents a hyperlink from page i to page j and E is the set of hyperlinks. Amy Langville and Carl Meyer. (2005). The Use of the Linear Algebra by Web Search Engines. http://citeseer.ist.psu.edu/718721.html. (Bulletin of the International Linear Algebra Society, No. 33, Dec. 2005, pp. 2-6.)
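
The mutual reinforcement described here (an authority score is the sum of the hub scores of the pages linking in; a hub score is the sum of the authority scores of the pages linked to) can be iterated directly. The sketch below is a minimal version over an edge list. The rescaling of scores to sum to one after every step, the fixed iteration count and the function names are assumptions added to keep the numbers bounded.

```python
def normalise(scores):
    """Rescale scores to sum to 1 (left unchanged if they are all zero)."""
    total = sum(scores.values())
    if total == 0:
        return dict(scores)
    return {node: value / total for node, value in scores.items()}


def hits(edges, iterations=50):
    """Return (hub, authority) score dicts for a list of (source, target) links."""
    nodes = sorted({node for edge in edges for node in edge})
    hub = {node: 1.0 for node in nodes}
    auth = {node: 1.0 for node in nodes}
    for _ in range(iterations):
        # Authority update: sum hub scores of the pages that link to each page.
        new_auth = {node: 0.0 for node in nodes}
        for source, target in edges:
            new_auth[target] += hub[source]
        auth = normalise(new_auth)
        # Hub update: sum the fresh authority scores of the pages linked to.
        new_hub = {node: 0.0 for node in nodes}
        for source, target in edges:
            new_hub[source] += auth[target]
        hub = normalise(new_hub)
    return hub, auth


if __name__ == "__main__":
    # Hypothetical graph: h1 links to a1 and a2, h2 links only to a1.
    hub, auth = hits([("h1", "a1"), ("h1", "a2"), ("h2", "a1")])
    print("hubs:", hub)
    print("authorities:", auth)
```

In the example graph `a1` ends up the strongest authority because both hubs point to it, and `h1` the strongest hub because it points to both authorities.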

Towards an Autonomous Corpora: Searching the Web PageRank, the second link analysis algorithm from 1998, is the heart of Google. Brin and Page use a recursive scheme similar to Kleinberg’s. Their original idea was that a page is important if it is pointed to by other important pages. That is, they decided that the importance of your page (its PageRank score) is determined by summing the PageRanks of all pages that point to yours. In building a mathematical definition of PageRank, Brin and Page also reasoned that when an important page points to several places, its weight (PageRank) should be distributed proportionately. In other words, if YAHOO! points to your Web page, that’s good, but you shouldn’t receive the full weight of YAHOO! because they point to many other places. If YAHOO! points to 999 pages in addition to yours, then you should only get credit for 1/1000 of YAHOO!’s PageRank. Amy Langville and Carl Meyer. (2005). The Use of the Linear Algebra by Web Search Engines. http://citeseer.ist.psu.edu/718721.html. (Bulletin of the International Linear Algebra Society, No. 33, Dec. 2005, pp. 2-6.)

Towards an Autonomous Corpora: The Google Lexicon The lexicon has several different forms. § One important change from earlier systems is that the lexicon can fit in memory for a reasonable price. In the current implementation we can keep the lexicon in memory on a machine with 256 MB of main memory. § The current lexicon contains 14 million words (though some rare words were not added to the lexicon). § It is implemented in two parts -- a list of the words (concatenated together but separated by nulls) and a hash table of pointers. Sergey Brin & Lawrence Page. (1998). "The anatomy of a large-scale hypertextual Web search engine". Computer Networks and ISDN Systems. Volume 30, pp 107-117. (available at citeseer.ist.psu.edu/brin98anatomy.html)
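
The two-part layout described here, a single null-separated word list plus a hash table of pointers into it, can be mimicked in a few lines. In this sketch a Python `dict` stands in for the hash table and the byte offset of each word serves as its pointer; both choices, like the class and method names, are assumptions for illustration and say nothing about how Google assigns wordIDs.

```python
class Lexicon:
    """Word list stored as one null-separated byte string plus an offset table."""

    def __init__(self):
        self._words = bytearray()   # b"word1\0word2\0..."
        self._offsets = {}          # word -> byte offset into _words

    def add(self, word):
        """Insert a word if it is new and return its offset in the word list."""
        if word in self._offsets:
            return self._offsets[word]
        offset = len(self._words)
        self._words += word.encode("utf-8") + b"\0"
        self._offsets[word] = offset
        return offset

    def lookup(self, word):
        """Return the offset of a known word, or None."""
        return self._offsets.get(word)

    def word_at(self, offset):
        """Read the word that starts at the given offset."""
        end = self._words.index(b"\0", offset)
        return self._words[offset:end].decode("utf-8")

    def raw(self):
        """The concatenated word list, for inspection."""
        return bytes(self._words)


if __name__ == "__main__":
    lexicon = Lexicon()
    for token in "corpus web corpus crawler".split():
        lexicon.add(token)
    print(lexicon.raw(), lexicon.lookup("web"), lexicon.word_at(7))
```

Storing the strings contiguously avoids per-word allocation overhead, which is part of why a lexicon of millions of entries could fit in main memory.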

Towards an Autonomous Corpora The web is a vast collection of completely uncontrolled heterogeneous documents. Documents on the web have extreme variation internal to the documents, and also in the external meta information that might be available. § Documents differ internally in their language (both human and programming), vocabulary (email addresses, links, zip codes, phone numbers, product numbers), type or format (text, HTML, PDF, images, sounds), and may even be machine generated (log files or output from a database). § External meta information is information that can be inferred about a document, but is not contained within it. Examples of external meta information include things like reputation of the source, update frequency, quality, popularity or usage, and citations. Sergey Brin & Lawrence Page. (1998). "The anatomy of a large-scale hypertextual Web search engine". Computer Networks and ISDN Systems. Volume 30, pp 107-117. (available at citeseer.ist.psu.edu/brin98anatomy.html)

Towards an Autonomous Corpora: Searching 1. Parse the query. 2. Convert words into wordIDs. 3. Seek to the start of the doclist in the short barrel for every word. 4. Scan through the doclists until there is a document that matches all the search terms. 5. Compute the rank of that document for the query. 6. If we are in the short barrels and at the end of any doclist, seek to the start of the doclist in the full barrel for every word and go to step 4. 7. If we are not at the end of any doclist go to step 4. 8. Sort the documents that have matched by rank and return the top k. Sergey Brin & Lawrence Page. (1998). "The anatomy of a large-scale hypertextual Web search engine". Computer Networks and ISDN Systems. Volume 30, pp 107-117. (available at citeseer.ist.psu.edu/brin98anatomy.html)
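
The query evaluation steps can be compressed into a short sketch. It is a simplification under several assumptions: each barrel is a plain dictionary from wordID to a list of docIDs, the streaming scan of doclists is replaced by a set intersection, and `rank` is a caller-supplied scoring function. All names here are hypothetical, and none of the on-disk layout of the original system is modelled.

```python
import heapq


def matching_docs(barrel, word_ids):
    """Documents that appear in the doclist of every query word."""
    doclists = [set(barrel.get(word_id, ())) for word_id in word_ids]
    return set.intersection(*doclists) if doclists else set()


def search(query, lexicon, short_barrel, full_barrel, rank, k=10):
    """Return the top-k docIDs for a query, best first."""
    words = query.lower().split()                        # step 1: parse
    if not words or any(word not in lexicon for word in words):
        return []                                        # unknown word: no match
    word_ids = [lexicon[word] for word in words]         # step 2: wordIDs
    matches = matching_docs(short_barrel, word_ids)      # steps 3-4: short barrels
    matches |= matching_docs(full_barrel, word_ids)      # step 6: full barrels
    scored = [(rank(doc, word_ids), doc) for doc in matches]   # step 5: rank
    return [doc for _, doc in heapq.nlargest(k, scored)]       # step 8: top k


if __name__ == "__main__":
    # Hypothetical index: wordID -> docIDs containing that word.
    lexicon = {"web": 1, "corpus": 2}
    short_barrel = {1: [10, 11], 2: [11]}
    full_barrel = {1: [10, 11, 12], 2: [11, 12, 13]}
    toy_scores = {11: 2.0, 12: 1.0}
    print(search("web corpus", lexicon, short_barrel, full_barrel,
                 lambda doc, ids: toy_scores.get(doc, 0.0)))
```

Consulting the short barrels first means that documents matching in titles or anchors are found before the much larger full barrels need to be read.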

Towards an Autonomous Corpora: Searching For a multi-word search, the situation is more complicated. Now multiple hit lists must be scanned through at once so that hits occurring close together in a document are weighted higher than hits occurring far apart. The hits from the multiple hit lists are matched up so that nearby hits are matched together. For every matched set of hits, a proximity is computed. The proximity is based on how far apart the hits are in the document (or anchor) but is classified into 10 different value "bins" ranging from a phrase match to "not even close". Sergey Brin & Lawrence Page. (1998). "The anatomy of a large-scale hypertextual Web search engine". Computer Networks and ISDN Systems. Volume 30, pp 107-117. (available at citeseer.ist.psu.edu/brin98anatomy.html)
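
A sketch of the proximity step for a two-word query follows. The source gives only the number of bins (10) and their extremes, so the bin boundaries in `BIN_LIMITS` are invented for illustration, as are the function names; the matching of nearby hits is reduced to finding the smallest gap between two sorted position lists.

```python
# Hypothetical upper bounds, in word positions, for bins 0..8.
# Bin 0 is a phrase match (adjacent words); bin 9 is "not even close".
BIN_LIMITS = [1, 2, 3, 5, 8, 16, 32, 64, 128]


def proximity_bin(distance):
    """Classify a distance between two hits into one of 10 bins."""
    for bin_index, limit in enumerate(BIN_LIMITS):
        if distance <= limit:
            return bin_index
    return len(BIN_LIMITS)


def closest_distance(hits_a, hits_b):
    """Smallest gap between two sorted lists of word positions, or None."""
    i = j = 0
    best = None
    while i < len(hits_a) and j < len(hits_b):
        gap = abs(hits_a[i] - hits_b[j])
        if best is None or gap < best:
            best = gap
        # Advance whichever list currently points at the earlier position.
        if hits_a[i] < hits_b[j]:
            i += 1
        else:
            j += 1
    return best


if __name__ == "__main__":
    gap = closest_distance([3, 40], [4, 100])
    print(gap, proximity_bin(gap))
```

Binning keeps the ranking function small: it needs one weight per bin rather than a weight for every possible distance.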

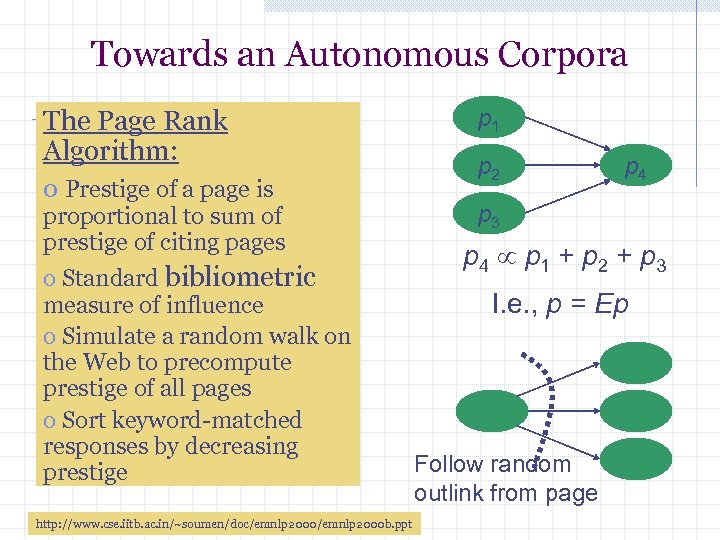

Towards an Autonomous Corpora The PageRank Algorithm: o Prestige of a page is proportional to sum of prestige of citing pages o Standard bibliometric measure of influence o Simulate a random walk on the Web to precompute prestige of all pages (follow a random outlink from each page) o Sort keyword-matched responses by decreasing prestige. Example (figure): pages p1, p2 and p3 all cite p4, so $p_4 \propto p_1 + p_2 + p_3$, i.e., $p = Ep$. http://www.cse.iitb.ac.in/~soumen/doc/emnlp2000b.ppt
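
The random-walk reading of prestige can be sketched as a power iteration: every page repeatedly shares its current prestige equally among the pages it links to. Two ingredients below are not on the slide and are assumptions taken from the wider PageRank literature: a damping factor (0.85 by default) that lets the walker jump to a random page, and uniform redistribution of the prestige of pages with no outlinks. The function name and the four-page graph are hypothetical.

```python
def pagerank(links, damping=0.85, iterations=100):
    """Power iteration over a dict mapping each page to the pages it links to."""
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page receives the same small share from random jumps.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # Prestige is split equally among the page's outlinks.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Dangling page: spread its prestige over all pages.
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank


if __name__ == "__main__":
    # Hypothetical graph: p1, p2 and p3 all cite p4; p4 cites p1.
    graph = {"p1": ["p4"], "p2": ["p4"], "p3": ["p4"], "p4": ["p1"]}
    for page, score in sorted(pagerank(graph).items(), key=lambda item: -item[1]):
        print(page, round(score, 4))
```

Sorting keyword-matched documents by these precomputed scores is the final step listed on the slide.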

Towards an Autonomous Corpora. Lawrence Page, Sergey Brin, Rajeev Motwani and Terry Winograd (1998). The PageRank Citation Ranking: Bringing Order to the Web. (citeseer.ist.psu.edu/page98pagerank.html)

Towards an Autonomous Corpora: Some Observations on Dialling Corpora The World-Wide Web: A SWOT Analysis Strength: open access to a vast array of genre-diverse, multilingual documents for building corpora of real language, particularly with focused crawling; Weakness: the documents are captured and indexed automatically; machine-supported capture does lead to the same data being captured more than once, and some of the indexing techniques are ad hoc; this leads to unwanted, ‘mean’ texts being trawled; Opportunity: Webbies have a naïve view of texts and text collections; corpus linguists can help here in the description of texts and text corpora; Threats: an open environment of heterogeneous users and interests, dominated by techno solutions.
