- Number of slides: 33

Towards Acceleration of Fault Simulation Using Graphics Processing Units

Kanupriya Gulati, Sunil P. Khatri
Department of ECE, Texas A&M University, College Station

Outline
- Introduction
- Technical Specifications of the GPU
- CUDA Programming Model
- Approach
- Experimental Setup and Results
- Conclusions

Introduction
- Fault Simulation (FS) is crucial in the VLSI design flow
  - Given a digital design and a set of vectors V, FS evaluates the number of stuck-at faults (Fsim) tested by applying V
  - The ratio Fsim/Ftotal is a measure of fault coverage
- Current designs have millions of logic gates
  - The number of faulty variations is proportional to design size
  - Each of these variations needs to be simulated for the V vectors
- Therefore, it is important to explore ways to accelerate FS
- The ideal FS approach should be
  - Fast
  - Scalable
  - Cost effective

Introduction
- We accelerate FS using graphics processing units (GPUs)
  - By exploiting fault and pattern parallel approaches
- A GPU is essentially a commodity stream processor
  - Highly parallel
  - Very fast
  - Operating paradigm is SIMD (Single-Instruction, Multiple-Data)
- GPUs, owing to their massively parallel architecture, have been used to accelerate
  - Image/stream processing
  - Data compression
  - Numerical algorithms
    - LU decomposition, FFT, etc.

Introduction
- We implemented our approach on the NVIDIA GeForce 8800 GTX GPU
  - By careful engineering, we maximally harness the GPU's
    - Raw computational power and
    - Huge memory bandwidth
- We used the Compute Unified Device Architecture (CUDA) framework
  - Open source C-like GPU programming and interfacing tool
- When using a single 8800 GTX GPU card
  - ~35X speedup is obtained compared to a commercial FS tool
  - Accounts for CPU processing and data transfer times as well
- Our runtimes are projected for the NVIDIA Tesla server
  - Can house up to 8 GPU devices
  - ~238X speedup is possible compared to the commercial engine

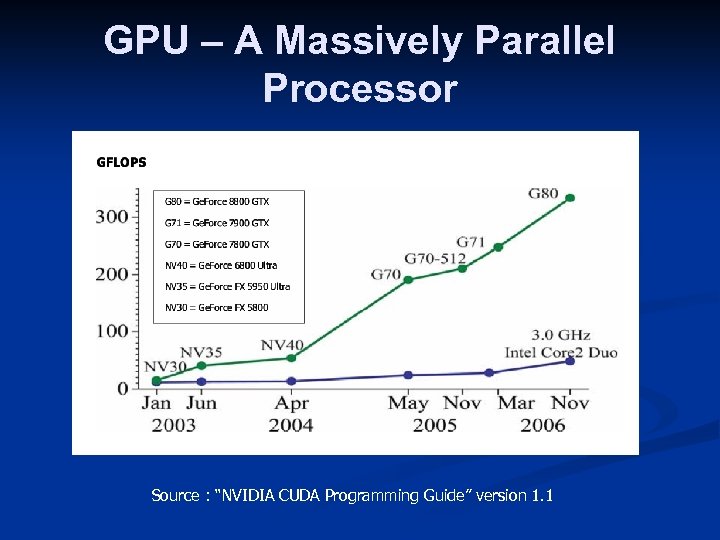

GPU – A Massively Parallel Processor

Source: “NVIDIA CUDA Programming Guide”, version 1.1

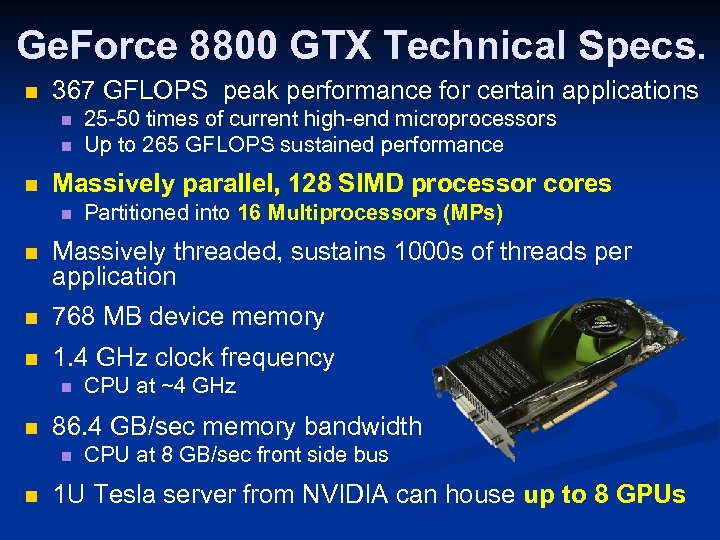

GeForce 8800 GTX Technical Specs
- 367 GFLOPS peak performance for certain applications
  - 25-50 times that of current high-end microprocessors
  - Up to 265 GFLOPS sustained performance
- Massively parallel, 128 SIMD processor cores
  - Partitioned into 16 Multiprocessors (MPs)
- Massively threaded, sustains 1000s of threads per application
- 768 MB device memory
- 1.4 GHz clock frequency
  - CPU at ~4 GHz
- 86.4 GB/sec memory bandwidth
  - CPU at 8 GB/sec front side bus
- 1U Tesla server from NVIDIA can house up to 8 GPUs

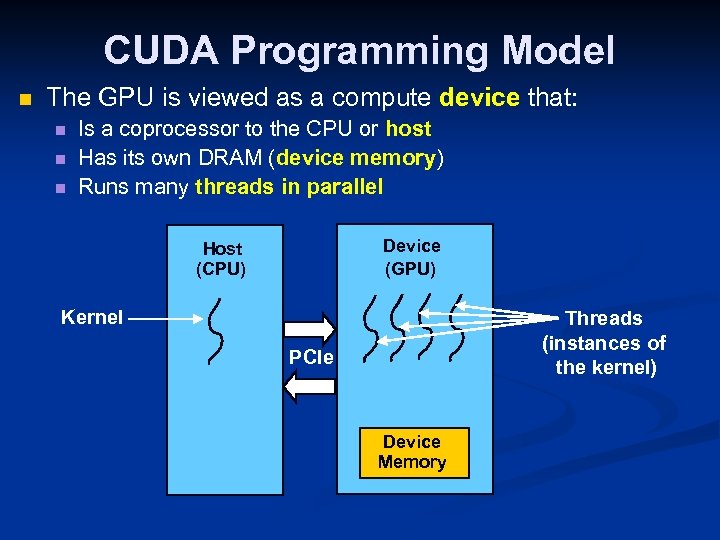

CUDA Programming Model
- The GPU is viewed as a compute device that:
  - Is a coprocessor to the CPU or host
  - Has its own DRAM (device memory)
  - Runs many threads in parallel

[Figure: the host (CPU) is connected over PCIe to the device (GPU), which runs the kernel as many threads (instances of the kernel) and has its own device memory]

CUDA Programming Model
- Data-parallel portions of an application are executed on the device in parallel on many threads
  - Kernel: code routine executed on GPU
  - Thread: instance of a kernel
- Differences between GPU and CPU threads
  - GPU threads are extremely lightweight
    - Very little creation overhead
  - GPU needs 1000s of threads to achieve full parallelism
    - Allows memory access latencies to be hidden
  - Multi-core CPUs require fewer threads, but the available parallelism is lower

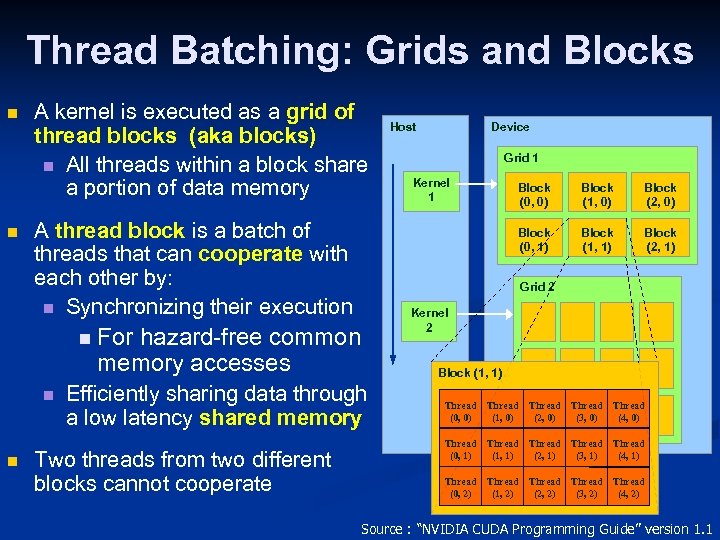

Thread Batching: Grids and Blocks
- A kernel is executed as a grid of thread blocks (aka blocks)
  - All threads within a block share a portion of data memory
- A thread block is a batch of threads that can cooperate with each other by:
  - Synchronizing their execution
    - For hazard-free common memory accesses
  - Efficiently sharing data through a low latency shared memory
- Two threads from two different blocks cannot cooperate

[Figure: the host launches Kernel 1 on Grid 1 (blocks (0, 0) to (2, 1)) and Kernel 2 on Grid 2 of the device; Block (1, 1) is expanded into threads (0, 0) to (4, 2)]

Source: “NVIDIA CUDA Programming Guide”, version 1.1

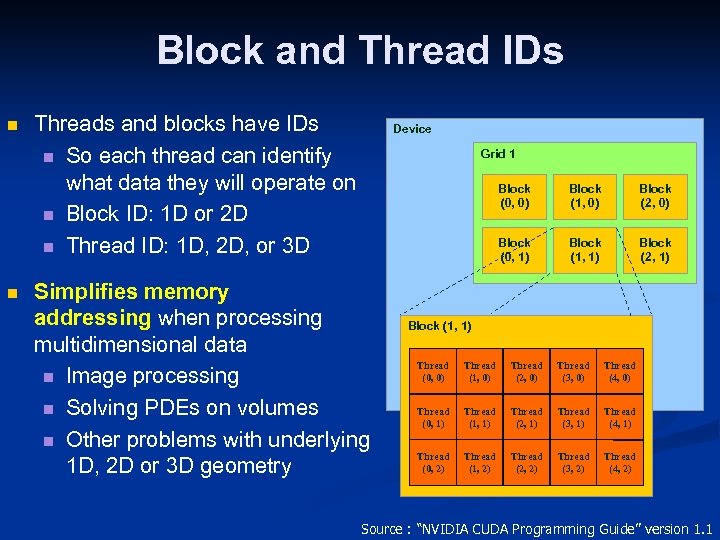

Block and Thread IDs
- Threads and blocks have IDs
  - So each thread can identify what data it will operate on
  - Block ID: 1D or 2D
  - Thread ID: 1D, 2D, or 3D
- Simplifies memory addressing when processing multidimensional data
  - Image processing
  - Solving PDEs on volumes
  - Other problems with underlying 1D, 2D or 3D geometry

[Figure: Grid 1 on the device with blocks (0, 0) to (2, 1); Block (1, 1) is expanded into threads (0, 0) to (4, 2)]

Source: “NVIDIA CUDA Programming Guide”, version 1.1
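
To make the role of the IDs concrete, the sketch below turns a 2D block ID and a 2D thread ID into one linear data index. It is plain C, not CUDA: the `Dim2` type, the function name and the row-major ordering are assumptions made for illustration, standing in for CUDA's built-in `gridDim`, `blockDim`, `blockIdx` and `threadIdx` variables.

```c
#include <stddef.h>

/* Hypothetical plain-C stand-in for CUDA's 2D block/thread coordinates. */
typedef struct { unsigned x, y; } Dim2;

/* Row-major linear index of thread `t` inside block `b`, for a grid of
   `grid` blocks that each hold `block` threads. */
size_t global_thread_index(Dim2 grid, Dim2 block, Dim2 b, Dim2 t)
{
    size_t block_id = (size_t)b.y * grid.x + b.x;           /* which block */
    size_t threads_per_block = (size_t)block.x * block.y;   /* block size  */
    size_t local_id = (size_t)t.y * block.x + t.x;          /* id in block */
    return block_id * threads_per_block + local_id;
}
```

With the 3 x 2 grid of 5 x 3 blocks drawn in the figure, thread (0, 0) of block (1, 1) would map to index 60 under this ordering, so each thread gets a distinct element to work on.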

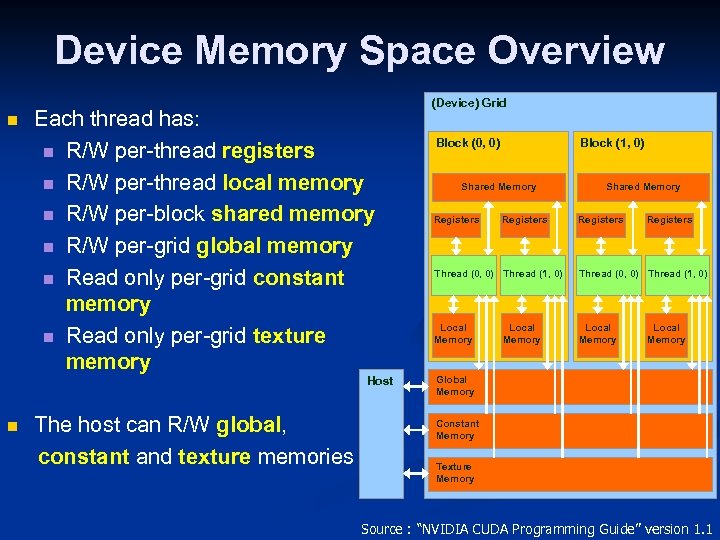

Device Memory Space Overview
- Each thread has:
  - R/W per-thread registers
  - R/W per-thread local memory
  - R/W per-block shared memory
  - R/W per-grid global memory
  - Read only per-grid constant memory
  - Read only per-grid texture memory
- The host can R/W global, constant and texture memories

[Figure: device grid with blocks (0, 0) and (1, 0), each with its own shared memory and per-thread registers and local memory; global, constant and texture memories are shared by the whole grid and accessible to the host]

Source: “NVIDIA CUDA Programming Guide”, version 1.1

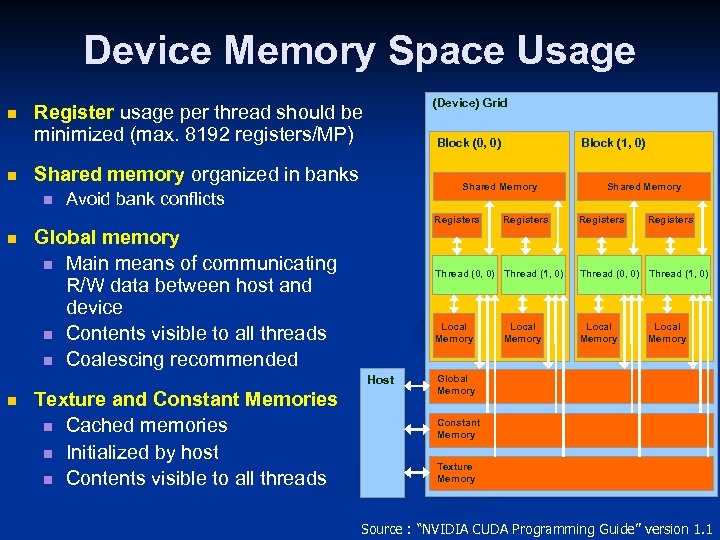

Device Memory Space Usage
- Register usage per thread should be minimized (max. 8192 registers/MP)
- Shared memory organized in banks
  - Avoid bank conflicts
- Global memory
  - Main means of communicating R/W data between host and device
  - Contents visible to all threads
  - Coalescing recommended
- Texture and Constant Memories
  - Cached memories
  - Initialized by host
  - Contents visible to all threads

[Figure: device grid with blocks (0, 0) and (1, 0), their shared memory, registers and local memory, plus the global, constant and texture memories reachable from the host]

Source: “NVIDIA CUDA Programming Guide”, version 1.1

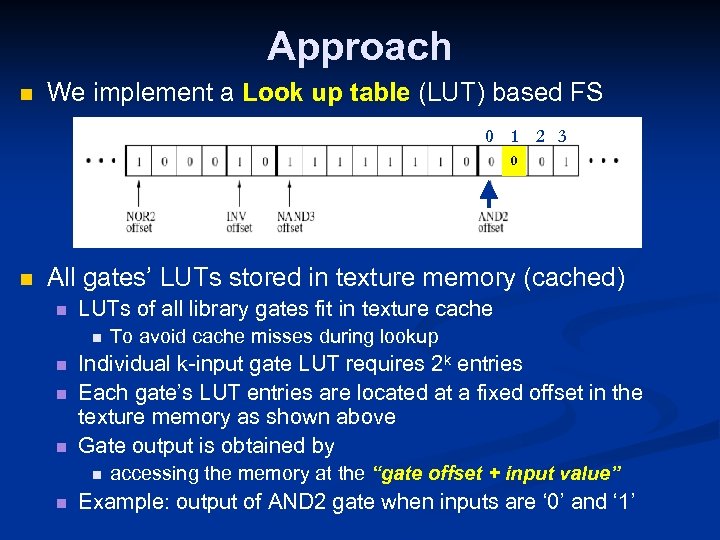

Approach
- We implement a Look up table (LUT) based FS
  - All gates' LUTs stored in texture memory (cached)
  - LUTs of all library gates fit in texture cache
    - To avoid cache misses during lookup
  - Individual k-input gate LUT requires 2^k entries
  - Each gate's LUT entries are located at a fixed offset in the texture memory as shown above
  - Gate output is obtained by accessing the memory at the “gate offset + input value”
  - Example: output of AND2 gate when inputs are ‘0’ and ‘1’
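
The “gate offset + input value” lookup can be sketched in a few lines of plain C. This is a CPU-side illustration only: the table layout (INV at offset 0, AND2 at offset 2), the bit packing of the inputs and all names are assumptions, and an ordinary array stands in for texture memory.

```c
#include <stdint.h>

/* Hypothetical layout: INV at offset 0 (2 entries), AND2 at offset 2 (4 entries). */
enum { INV_OFFSET = 0, AND2_OFFSET = 2, LUT_SIZE = 6 };

/* Truth tables stored back to back; entry index = gate offset + input value. */
static const uint8_t lut[LUT_SIZE] = {
    1, 0,        /* INV:  in = 0 -> 1, in = 1 -> 0 */
    0, 0, 0, 1   /* AND2: input values 0, 1, 2, 3  */
};

/* Packs the two input bits into one value and reads the output in one lookup. */
int eval_and2(int a, int b)
{
    int input_value = a | (b << 1);
    return lut[AND2_OFFSET + input_value];
}

/* Same scheme for the single-input inverter. */
int eval_inv(int a)
{
    return lut[INV_OFFSET + a];
}
```

For the slide's example, `eval_and2(0, 1)` reads entry 2 + 2 = 4 of the table, which holds 0. Every gate type is evaluated by the same instruction sequence, with only the offset differing.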

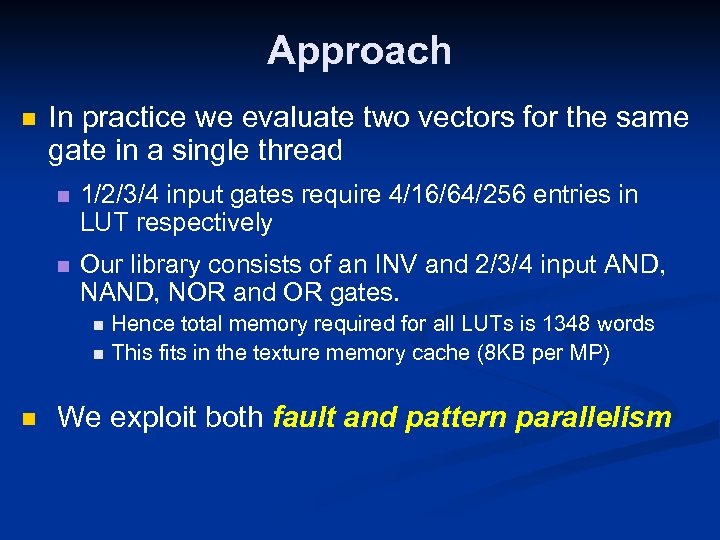

Approach
- In practice we evaluate two vectors for the same gate in a single thread
  - 1/2/3/4 input gates require 4/16/64/256 entries in LUT respectively
  - Our library consists of an INV and 2/3/4 input AND, NOR and OR gates. Hence total memory required for all LUTs is 1348 words
  - This fits in the texture memory cache (8 KB per MP)
- We exploit both fault and pattern parallelism
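
The LUT sizes follow from simple arithmetic, sketched below in plain C. Evaluating two vectors of a k-input gate needs 2k input bits, hence 4^k entries. Note that the stated total of 1348 words matches one inverter plus four 2/3/4-input gate families (4 + 4 × 336); the three families listed on the slide would give 1012 words, so the list may be missing a family. The function names and the 4-byte word size are assumptions of this sketch.

```c
/* Entries in a LUT that evaluates two vectors of a k-input gate:
   2k input bits, hence 2^(2k) = 4^k entries. */
int two_vector_entries(int k)
{
    return 1 << (2 * k);
}

/* Total LUT words for one inverter plus `families` gate families,
   each provided in 2-, 3- and 4-input versions. */
int total_lut_words(int families)
{
    int per_family = two_vector_entries(2)
                   + two_vector_entries(3)
                   + two_vector_entries(4);   /* 16 + 64 + 256 = 336 */
    return two_vector_entries(1) + families * per_family;
}

/* Checks a table of `words` entries against a cache of `cache_bytes`,
   assuming 4-byte words. */
int fits_in_cache(int words, int cache_bytes)
{
    return words * 4 <= cache_bytes;
}
```

Under the 4-byte assumption, 1348 words occupy 5392 bytes, which is below the 8 KB texture cache per MP.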

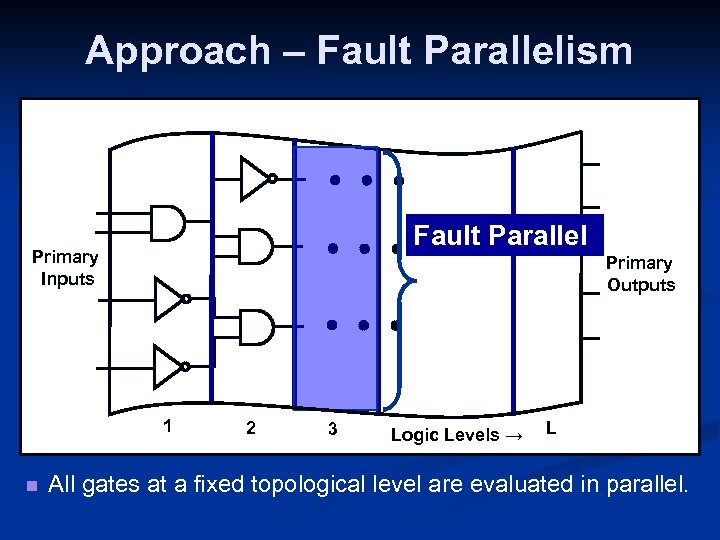

Approach – Fault Parallelism

[Figure: fault-parallel view of the circuit from primary inputs to primary outputs, with logic levels 1, 2, 3, ..., L]

- All gates at a fixed topological level are evaluated in parallel.
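
Evaluating one level at a time presupposes that every gate has been assigned a topological level. A minimal CPU-side sketch of that levelization step is shown below; the `Gate` encoding (up to four fanins, -1 for an unused fanin or a primary input, gates listed in topological order) is a hypothetical format chosen for illustration, not the authors' data structure.

```c
#include <stddef.h>

/* Hypothetical netlist encoding: gate i has up to 4 fanins; a fanin of -1
   means "unused or primary input". Gates are assumed to be listed in
   topological order, so every fanin index is smaller than i. */
typedef struct { int fanin[4]; } Gate;

/* Fills level[i] with the topological level of gate i (1 = fed only by
   primary inputs) and returns the number of levels L. */
int levelize(const Gate *gates, size_t n, int *level)
{
    int max_level = 0;
    for (size_t i = 0; i < n; ++i) {
        int lvl = 1;
        for (int k = 0; k < 4; ++k) {
            int f = gates[i].fanin[k];
            if (f >= 0 && level[f] + 1 > lvl)
                lvl = level[f] + 1;      /* one past the deepest fanin */
        }
        level[i] = lvl;
        if (lvl > max_level)
            max_level = lvl;
    }
    return max_level;
}
```

Gates that share a level have no data dependency on one another, which is what allows them to be issued as parallel threads.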

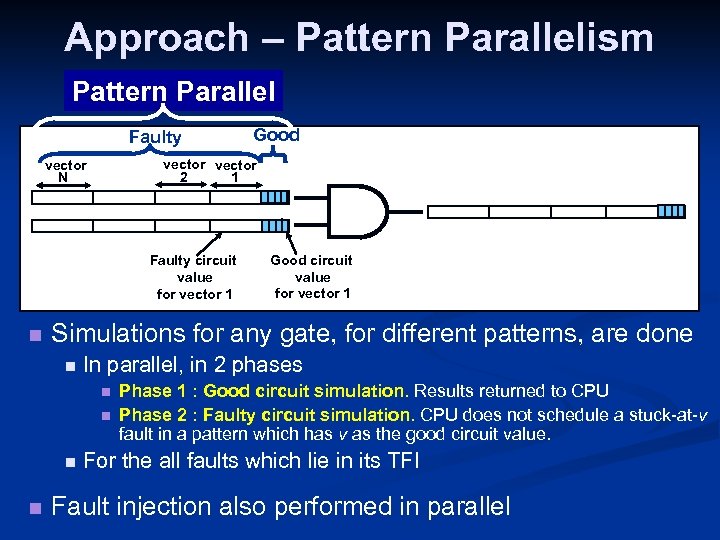

Approach – Pattern Parallelism

[Figure: pattern-parallel view showing the good circuit value and the faulty circuit value for vector 1, vector 2, ..., vector N]

- Simulations for any gate, for different patterns, are done
  - In parallel, in 2 phases
    - Phase 1: Good circuit simulation. Results returned to CPU
    - Phase 2: Faulty circuit simulation. CPU does not schedule a stuck-at-v fault in a pattern which has v as the good circuit value
  - For all the faults which lie in its transitive fan-in (TFI)
- Fault injection also performed in parallel
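
The scheduling rule in Phase 2 can be sketched as a simple filter on the Phase 1 results: a stuck-at-v fault cannot change anything in a pattern whose good-circuit value at the fault site is already v. The plain-C function below is an illustration under assumed names and data layout (one byte per pattern), not the authors' scheduler.

```c
#include <stddef.h>
#include <stdint.h>

/* good[p] is the good-circuit value (0 or 1) at the fault site for
   pattern p. Writes the indices of the patterns worth simulating for a
   stuck-at-`v` fault into `out` and returns how many there are. */
size_t schedule_patterns(const uint8_t *good, size_t n_patterns,
                         uint8_t v, size_t *out)
{
    size_t count = 0;
    for (size_t p = 0; p < n_patterns; ++p) {
        if (good[p] != v)        /* the fault flips this value: simulate it */
            out[count++] = p;
    }
    return count;
}
```

For good values {0, 1, 1, 0, 1}, a stuck-at-1 fault would be scheduled only for patterns 0 and 3.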

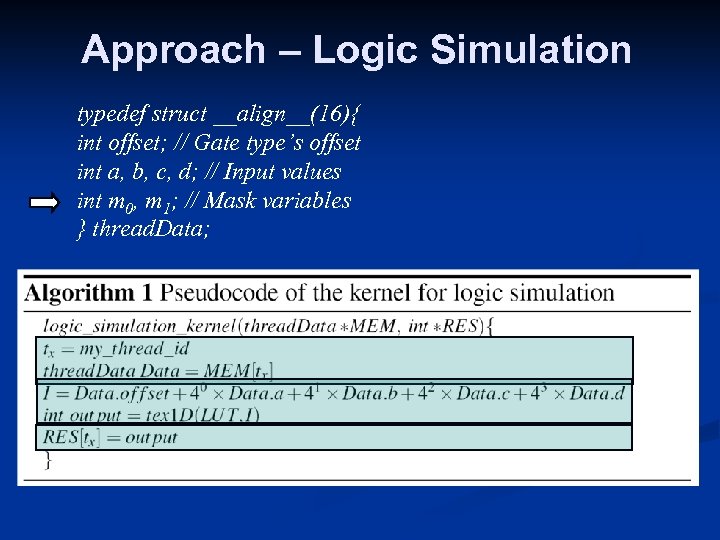

Approach – Logic Simulation

    typedef struct __align__(16) {
        int offset;      // Gate type's offset
        int a, b, c, d;  // Input values
        int m0, m1;      // Mask variables
    } threadData;
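
One way a thread could use these fields is sketched below in plain C. The slides do not give the index formula, so the base-4 packing (each 2-bit input contributes one digit, bit 0 for the first vector and bit 1 for the second) is an assumption, as are the `ThreadInputs` stand-in struct and the function names. An ordinary array replaces texture memory, and the mask fields are left out.

```c
#include <stdint.h>

/* Plain-C stand-in for the slide's struct (masks omitted here). */
typedef struct {
    int offset;       /* start of this gate type's LUT */
    int a, b, c, d;   /* 2-bit inputs: bit 0 = vector 1, bit 1 = vector 2 */
} ThreadInputs;

/* Assumed index layout: each input contributes one base-4 digit. */
int lut_index(const ThreadInputs *t)
{
    return t->offset + t->a + 4 * t->b + 16 * t->c + 64 * t->d;
}

/* Fills a 16-entry, two-vector AND2 table at lut[offset .. offset + 15]. */
void fill_and2(uint8_t *lut, int offset)
{
    for (int a = 0; a < 4; ++a)
        for (int b = 0; b < 4; ++b)
            lut[offset + a + 4 * b] = (uint8_t)(a & b); /* AND per vector bit */
}

/* One "thread": a single lookup yields the 2-bit output for both vectors. */
int eval_gate(const uint8_t *lut, const ThreadInputs *t)
{
    return lut[lut_index(t)];
}
```

With an AND2 table at offset 4, inputs a = 3 (1 in both vectors) and b = 1 (1 in the first vector only) give the output 1, meaning the gate outputs 1 for the first vector and 0 for the second.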

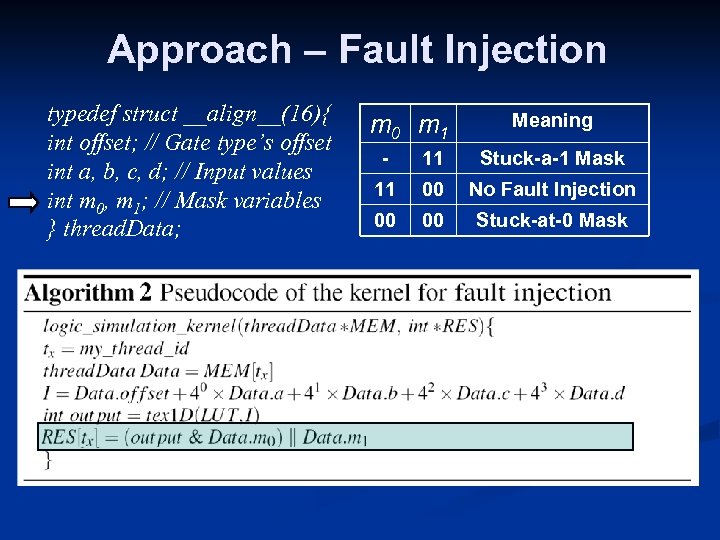

Approach – Fault Injection

    typedef struct __align__(16) {
        int offset;      // Gate type's offset
        int a, b, c, d;  // Input values
        int m0, m1;      // Mask variables
    } threadData;

| m0 | m1 | Meaning |
|----|----|---------|
| -  | 11 | Stuck-at-1 mask |
| 11 | 00 | No fault injection |
| 00 | 00 | Stuck-at-0 mask |
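
The slide gives only the mask table, not the operation that applies it. One formula consistent with all three rows is an AND with m0 followed by an OR with m1, sketched below in plain C; treat the formula and the function name as assumptions rather than the authors' kernel code.

```c
/* Applies the 2-bit masks to a 2-bit gate output (one bit per vector).
   The AND-then-OR combination is an assumption that reproduces the
   mask table: m0 clears bits (stuck-at-0), m1 sets bits (stuck-at-1). */
int inject_fault(int val, int m0, int m1)
{
    return (val & m0) | m1;
}
```

With m0 = 11 and m1 = 00 the value passes through unchanged, m0 = 00 and m1 = 00 forces 00, and m1 = 11 forces 11 whatever m0 is, which matches the "-" entry in the table. Because every thread executes the same two operations, fault-free and faulty evaluations do not diverge.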

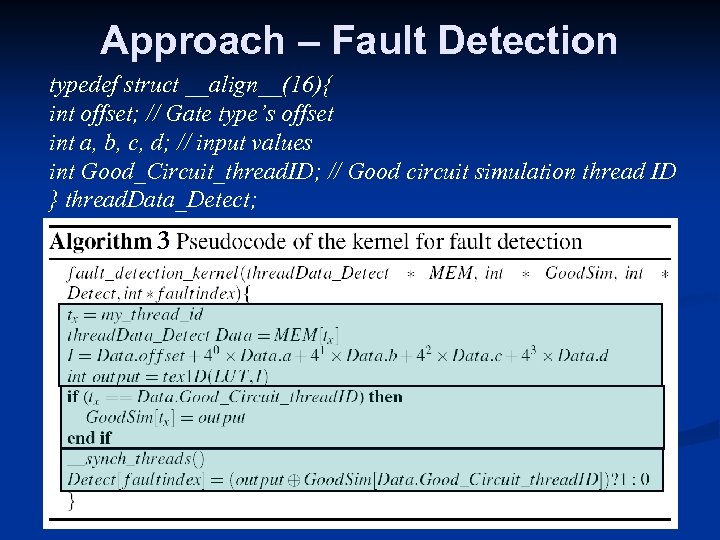

Approach – Fault Detection

    typedef struct __align__(16) {
        int offset;                 // Gate type's offset
        int a, b, c, d;             // Input values
        int Good_Circuit_threadID;  // Good circuit simulation thread ID
    } threadData_Detect;
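
A fault is detected when the faulty output differs from the good-circuit output for the same vector. The plain-C sketch below assumes that good-circuit results are stored in an array indexed by thread ID and that the comparison is a bitwise XOR; neither detail is stated on the slide, and the `DetectInputs` struct is a simplified, hypothetical stand-in for `threadData_Detect`.

```c
/* Simplified stand-in for the slide's detection struct: the faulty output
   is taken as already evaluated, so the LUT inputs are omitted. */
typedef struct {
    int faulty_val;              /* 2-bit faulty output (one bit per vector) */
    int good_circuit_thread_id;  /* index of the matching good-circuit result */
} DetectInputs;

/* Returns a 2-bit flag: bit i is set when vector i exposes the fault. */
int detect(const int *good_results, const DetectInputs *t)
{
    return (good_results[t->good_circuit_thread_id] ^ t->faulty_val) & 3;
}
```

A result of 0 means that neither of the two vectors distinguishes the faulty circuit from the good one at this output.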

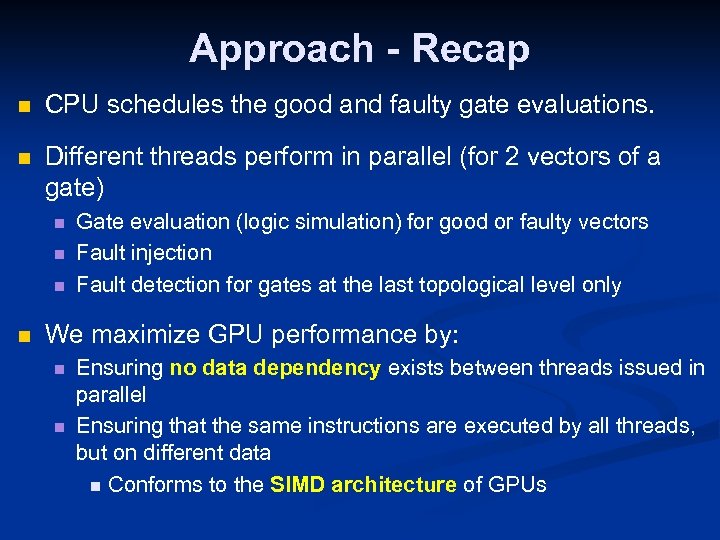

Approach - Recap
- CPU schedules the good and faulty gate evaluations.
- Different threads perform in parallel (for 2 vectors of a gate)
  - Gate evaluation (logic simulation) for good or faulty vectors
  - Fault injection
  - Fault detection for gates at the last topological level only
- We maximize GPU performance by:
  - Ensuring no data dependency exists between threads issued in parallel
  - Ensuring that the same instructions are executed by all threads, but on different data
    - Conforms to the SIMD architecture of GPUs

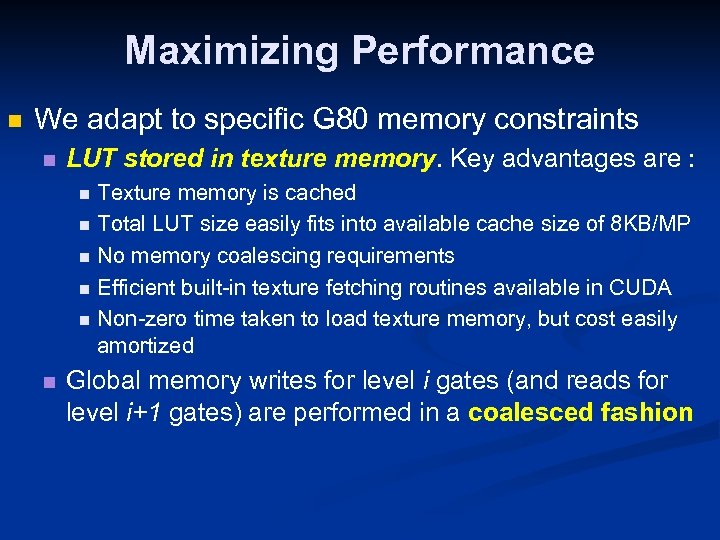

Maximizing Performance
- We adapt to specific G80 memory constraints
  - LUT stored in texture memory. Key advantages are:
    - Texture memory is cached
    - Total LUT size easily fits into available cache size of 8 KB/MP
    - No memory coalescing requirements
    - Efficient built-in texture fetching routines available in CUDA
    - Non-zero time taken to load texture memory, but cost easily amortized
  - Global memory writes for level i gates (and reads for level i+1 gates) are performed in a coalesced fashion

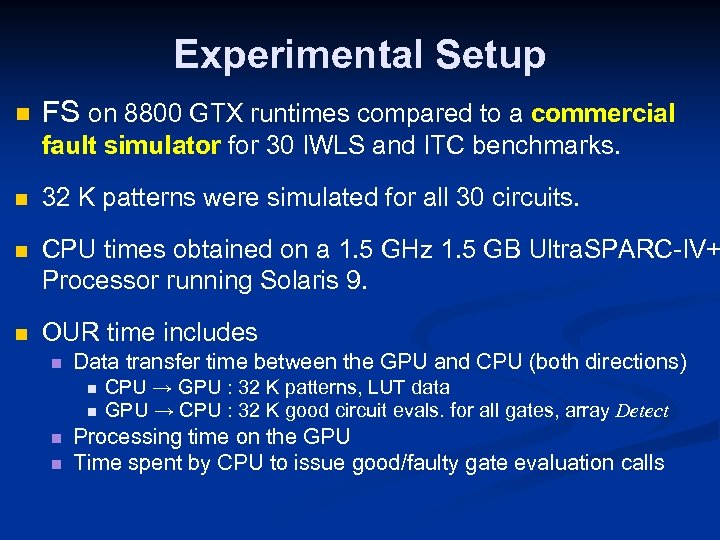

Experimental Setup
- FS on 8800 GTX runtimes compared to a commercial fault simulator for 30 IWLS and ITC benchmarks.
- 32K patterns were simulated for all 30 circuits.
- CPU times obtained on a 1.5 GHz 1.5 GB UltraSPARC-IV+ processor running Solaris 9.
- OUR time includes
  - Data transfer time between the GPU and CPU (both directions)
    - CPU → GPU: 32K patterns, LUT data
    - GPU → CPU: 32K good circuit evals. for all gates, array Detect
  - Processing time on the GPU
  - Time spent by CPU to issue good/faulty gate evaluation calls

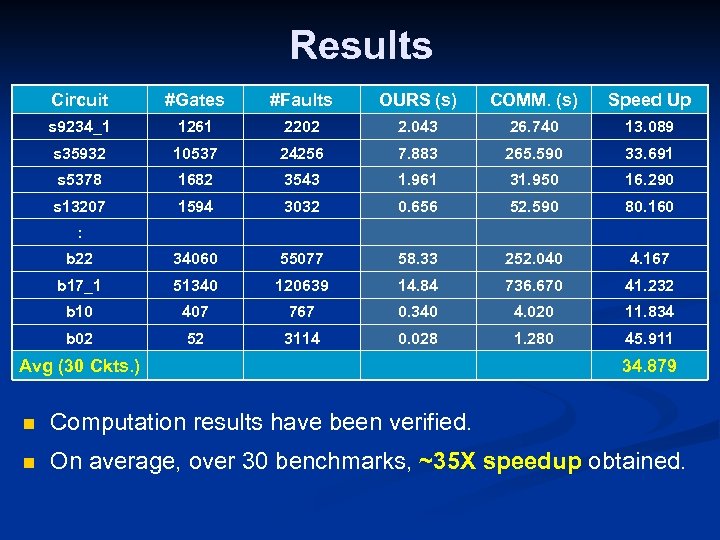

Results

| Circuit | #Gates | #Faults | OURS (s) | COMM. (s) | Speed Up |
|---------|--------|---------|----------|-----------|----------|
| s9234_1 | 1261 | 2202 | 2.043 | 26.740 | 13.089 |
| s35932 | 10537 | 24256 | 7.883 | 265.590 | 33.691 |
| s5378 | 1682 | 3543 | 1.961 | 31.950 | 16.290 |
| s13207 | 1594 | 3032 | 0.656 | 52.590 | 80.160 |
| b22 | 34060 | 55077 | 58.33 | 252.040 | 4.167 |
| b17_1 | 51340 | 120639 | 14.84 | 736.670 | 41.232 |
| b10 | 407 | 767 | 0.340 | 4.020 | 11.834 |
| b02 | 52 | 3114 | 0.028 | 1.280 | 45.911 |
| ... | | | | | |
| Avg (30 Ckts.) | | | | | 34.879 |

- Computation results have been verified.
- On average, over 30 benchmarks, ~35X speedup obtained.

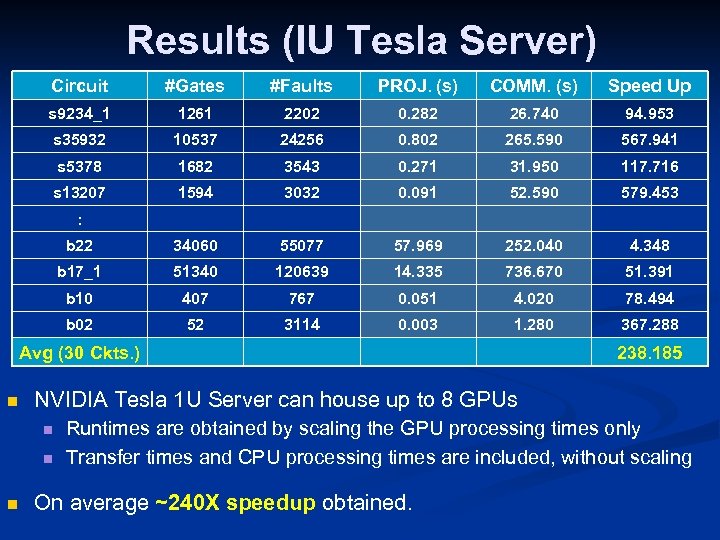

Results (1U Tesla Server)

| Circuit | #Gates | #Faults | PROJ. (s) | COMM. (s) | Speed Up |
|---------|--------|---------|-----------|-----------|----------|
| s9234_1 | 1261 | 2202 | 0.282 | 26.740 | 94.953 |
| s35932 | 10537 | 24256 | 0.802 | 265.590 | 567.941 |
| s5378 | 1682 | 3543 | 0.271 | 31.950 | 117.716 |
| s13207 | 1594 | 3032 | 0.091 | 52.590 | 579.453 |
| b22 | 34060 | 55077 | 57.969 | 252.040 | 4.348 |
| b17_1 | 51340 | 120639 | 14.335 | 736.670 | 51.391 |
| b10 | 407 | 767 | 0.051 | 4.020 | 78.494 |
| b02 | 52 | 3114 | 0.003 | 1.280 | 367.288 |
| ... | | | | | |
| Avg (30 Ckts.) | | | | | 238.185 |

- NVIDIA Tesla 1U Server can house up to 8 GPUs
  - Runtimes are obtained by scaling the GPU processing times only
  - Transfer times and CPU processing times are included, without scaling
- On average ~240X speedup obtained.
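
The projection rule can be written out as a short calculation. The sketch below assumes ideal scaling, that is, the GPU processing time is divided by the number of GPUs, which the slides do not state explicitly. The per-circuit split into GPU, transfer and CPU time is not reported either, so the function arguments are hypothetical inputs.

```c
/* Projected runtime when only the GPU-processing share is divided across
   n_gpus devices; transfer and CPU times are carried over unscaled.
   Ideal 1/n_gpus scaling is an assumption of this sketch. */
double projected_runtime(double t_gpu, double t_transfer, double t_cpu,
                         int n_gpus)
{
    return t_gpu / n_gpus + t_transfer + t_cpu;
}

/* Speedup of our runtime relative to a reference runtime. */
double speedup(double t_reference, double t_ours)
{
    return t_reference / t_ours;
}
```

Under this model, circuits whose runtime is dominated by transfer or CPU time gain little from additional GPUs, which is consistent with the small change for b22 between the two tables.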

Conclusions
- We have accelerated FS using GPUs
  - Implement a pattern and fault parallel technique
- By careful engineering, we maximally harness the GPU's
  - Raw computational power and
  - Huge memory bandwidths
- When using a single 8800 GTX GPU
  - ~35X speedup compared to commercial FS engine
- When projected for a 1U NVIDIA Tesla Server
  - ~238X speedup is possible over the commercial engine
- Future work includes exploring parallel fault simulation on the GPU

Thank You
