1485a65fab1e25efce34e5d5e37240c2.ppt

- Number of slides: 51

Towards a Grid-enabled Analysis Environment
Harvey B. Newman, California Institute of Technology
Grid-enabled Analysis Environment Workshop, June 23, 2003

Welcome to Caltech and Our Workshop
- Caltech
- Logistics
- Agenda
- Our Staff

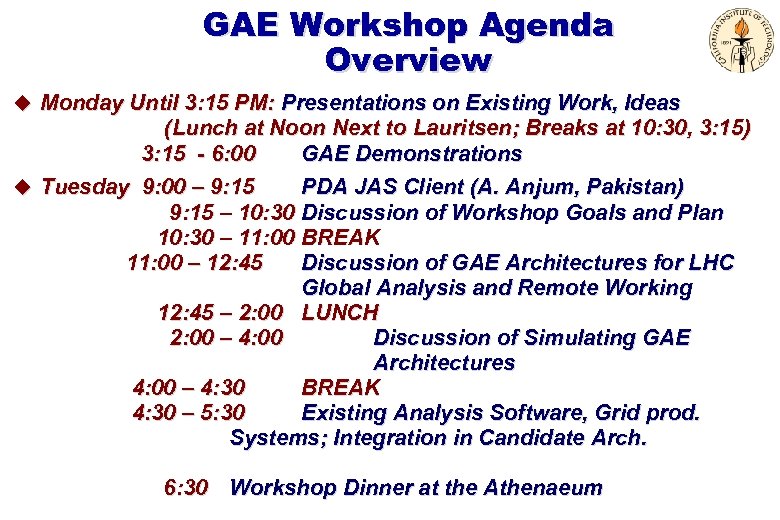

GAE Workshop Agenda Overview
- Monday
  - Until 3:15 PM: Presentations on Existing Work, Ideas (Lunch at noon next to Lauritsen; breaks at 10:30 and 3:15)
  - 3:15 – 6:00: GAE Demonstrations
- Tuesday
  - 9:00 – 9:15: PDA JAS Client (A. Anjum, Pakistan)
  - 9:15 – 10:30: Discussion of Workshop Goals and Plan
  - 10:30 – 11:00: BREAK
  - 11:00 – 12:45: Discussion of GAE Architectures for LHC Global Analysis and Remote Working
  - 12:45 – 2:00: LUNCH
  - 2:00 – 4:00: Discussion of Simulating GAE Architectures
  - 4:00 – 4:30: BREAK
  - 4:30 – 5:30: Existing Analysis Software, Grid Production Systems; Integration in Candidate Architectures
  - 6:30: Workshop Dinner at the Athenaeum

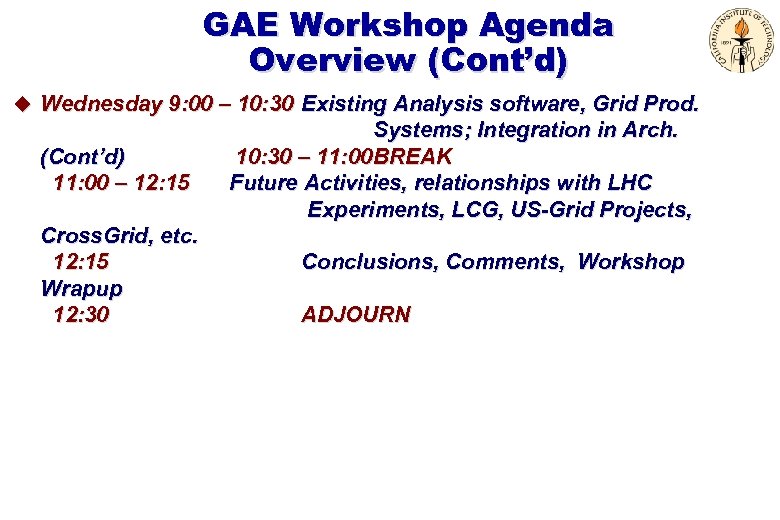

GAE Workshop Agenda Overview (Cont’d)
- Wednesday
  - 9:00 – 10:30: Existing Analysis Software, Grid Production Systems; Integration in Architectures (Cont’d)
  - 10:30 – 11:00: BREAK
  - 11:00 – 12:15: CrossGrid, etc.
  - 12:15: Wrapup: Future Activities, Relationships with LHC Experiments, LCG, US-Grid Projects; Conclusions, Comments
  - 12:30: Workshop ADJOURN

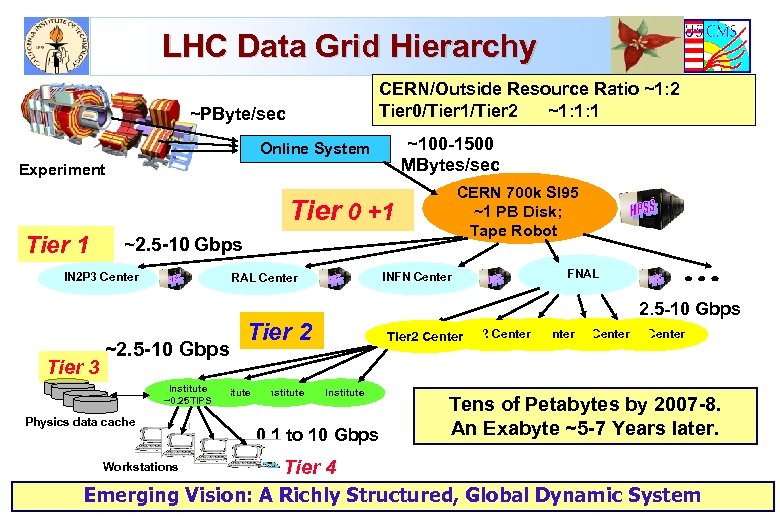

LHC Data Grid Hierarchy
- CERN/Outside resource ratio ~1:2; Tier 0 / Tier 1 / Tier 2 ~1:1:1
- Experiment to Online System: ~PByte/sec; Online System to Tier 0+1 at CERN (700k SI95, ~1 PB disk, tape robot): ~100–1500 MBytes/sec
- Tier 0+1 to Tier 1 centers (IN2P3, INFN, RAL, FNAL): ~2.5–10 Gbps
- Tier 2 centers and Tier 3 institutes (~0.25 TIPS; physics data cache): ~2.5–10 Gbps links
- Tier 4 workstations: 0.1 to 10 Gbps
- Tens of Petabytes by 2007–8; an Exabyte ~5–7 years later
- Emerging Vision: A Richly Structured, Global Dynamic System

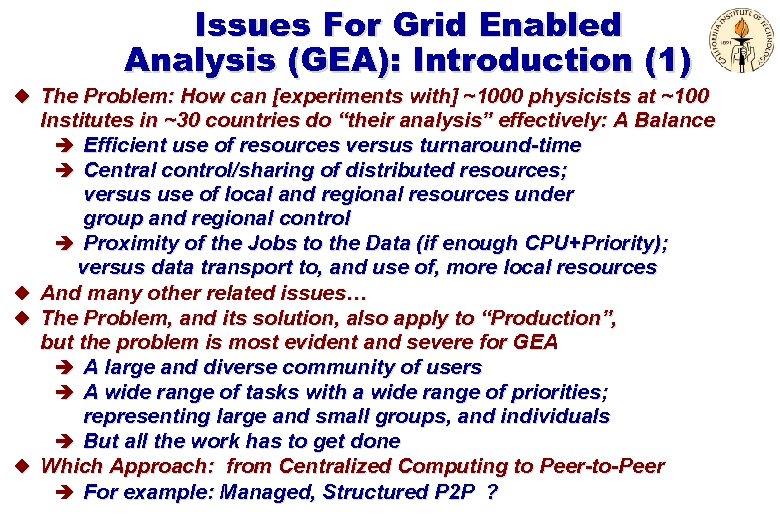

Issues For Grid Enabled Analysis (GEA): Introduction (1)
- The Problem: How can [experiments with] ~1000 physicists at ~100 institutes in ~30 countries do “their analysis” effectively?
- A Balance:
  - Efficient use of resources versus turnaround time
  - Central control/sharing of distributed resources, versus use of local and regional resources under group and regional control
  - Proximity of the jobs to the data (if enough CPU + priority), versus data transport to, and use of, more local resources
  - And many other related issues…
- The Problem, and its solution, also apply to “Production”, but the problem is most evident and severe for GEA:
  - A large and diverse community of users
  - A wide range of tasks with a wide range of priorities, representing large and small groups, and individuals
  - But all the work has to get done
- Which Approach: from Centralized Computing to Peer-to-Peer?
  - For example: Managed, Structured P2P?

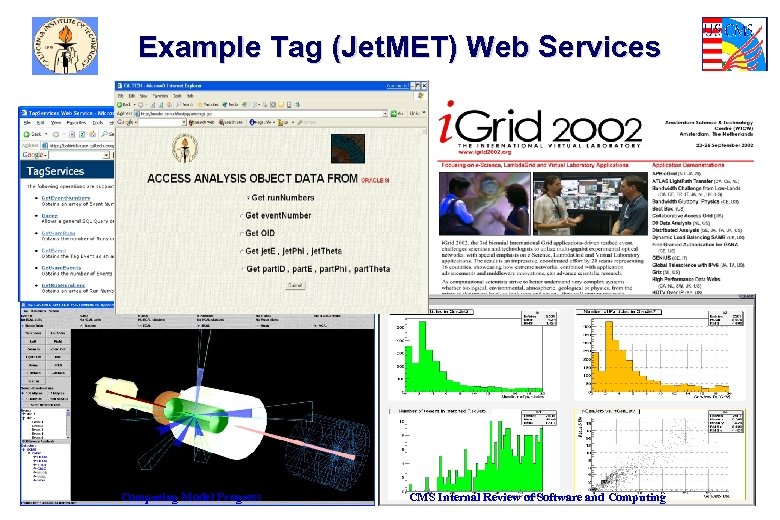

Example Tag (JetMET) Web Services
Computing Model Progress, CMS Internal Review of Software and Computing

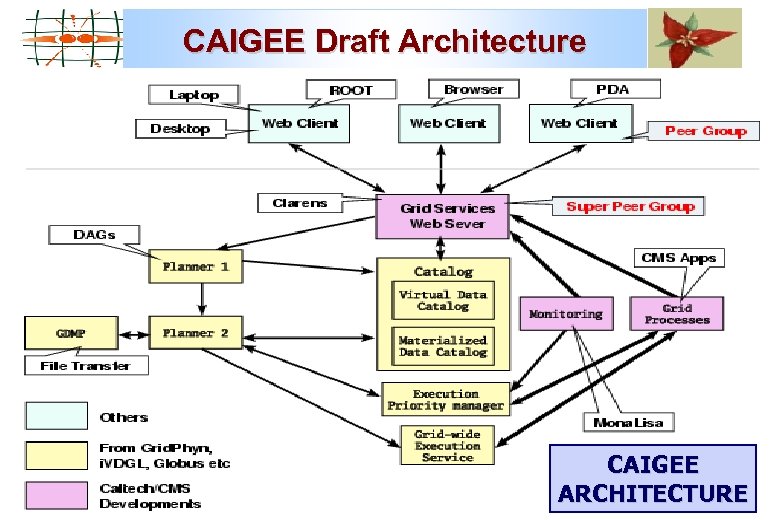

CAIGEE Draft Architecture

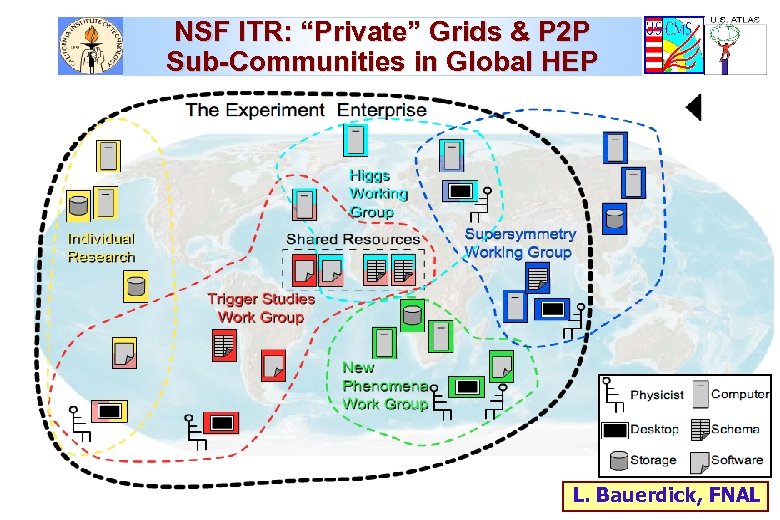

NSF ITR: “Private” Grids & P2P Sub-Communities in Global HEP (L. Bauerdick, FNAL)

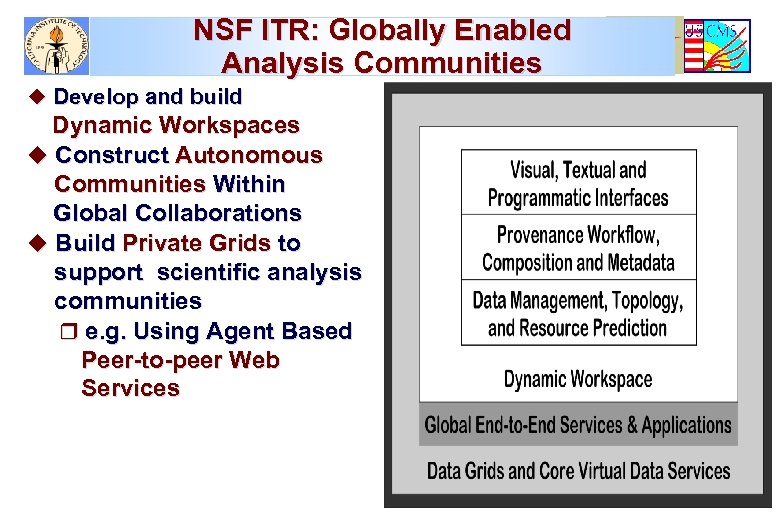

NSF ITR: Globally Enabled Analysis Communities
- Develop and build Dynamic Workspaces
- Construct Autonomous Communities Within Global Collaborations
- Build Private Grids to support scientific analysis communities
  - e.g. using agent-based peer-to-peer Web Services

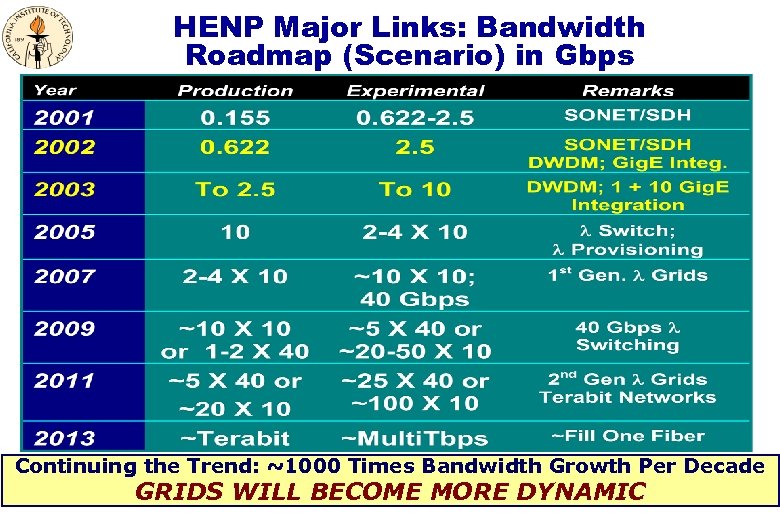

HENP Major Links: Bandwidth Roadmap (Scenario) in Gbps
Continuing the Trend: ~1000 Times Bandwidth Growth Per Decade
GRIDS WILL BECOME MORE DYNAMIC
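The roadmap's headline figure can be turned into a yearly rate. A minimal sketch, assuming smooth compound (exponential) growth at exactly 1000 times per decade; the function names are illustrative and the 10 Gbps starting point in the usage lines is an assumption, not a value from the roadmap:

```python
import math


def annual_growth_factor(factor_per_decade: float) -> float:
    """Yearly multiplier that compounds to `factor_per_decade` over ten years."""
    return factor_per_decade ** (1.0 / 10.0)


def doubling_time_years(factor_per_decade: float) -> float:
    """Years for bandwidth to double at the same compound rate."""
    return math.log(2.0) / math.log(annual_growth_factor(factor_per_decade))


def projected_gbps(start_gbps: float, years: float, factor_per_decade: float = 1000.0) -> float:
    """Bandwidth after `years` years, assuming uninterrupted exponential growth."""
    return start_gbps * annual_growth_factor(factor_per_decade) ** years


if __name__ == "__main__":
    print(f"yearly factor: {annual_growth_factor(1000.0):.3f}")  # about 2 per year
    print(f"doubling time: {doubling_time_years(1000.0):.2f} years")
    # Illustrative starting point (assumption): a 10 Gbps link today.
    print(f"10 Gbps after 5 years: {projected_gbps(10.0, 5):.0f} Gbps")
```

Since 1000^(1/10) is about 1.995, "~1000 times per decade" is essentially a doubling of major-link bandwidth every year.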

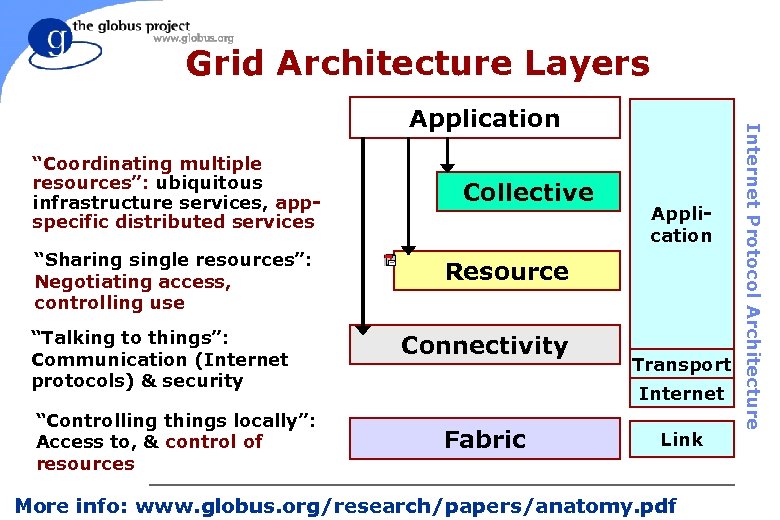

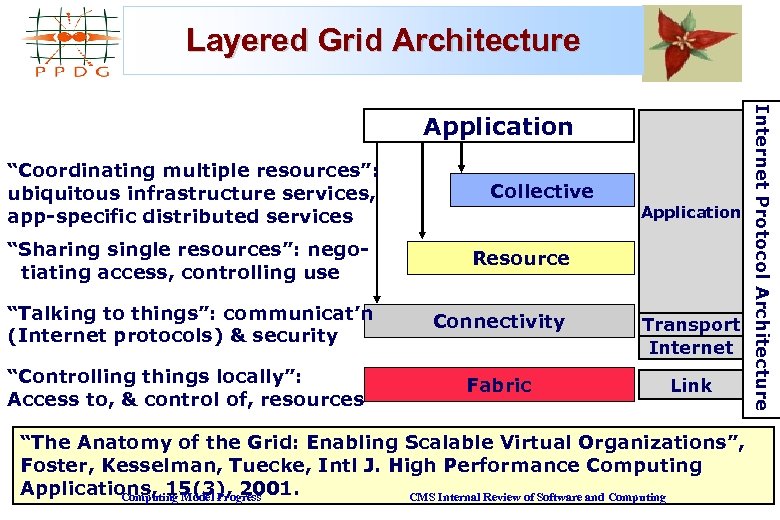

Grid Architecture Layers
- Application
- Collective: “Coordinating multiple resources”: ubiquitous infrastructure services, app-specific distributed services
- Resource: “Sharing single resources”: negotiating access, controlling use
- Connectivity: “Talking to things”: communication (Internet protocols) & security
- Fabric: “Controlling things locally”: access to, & control of, resources
- Internet Protocol Architecture correspondence: Internet Application ↔ Application, Collective, Resource; Transport, Internet ↔ Connectivity; Link ↔ Fabric
foster@mcs.anl.gov; more info: www.globus.org/research/papers/anatomy.pdf

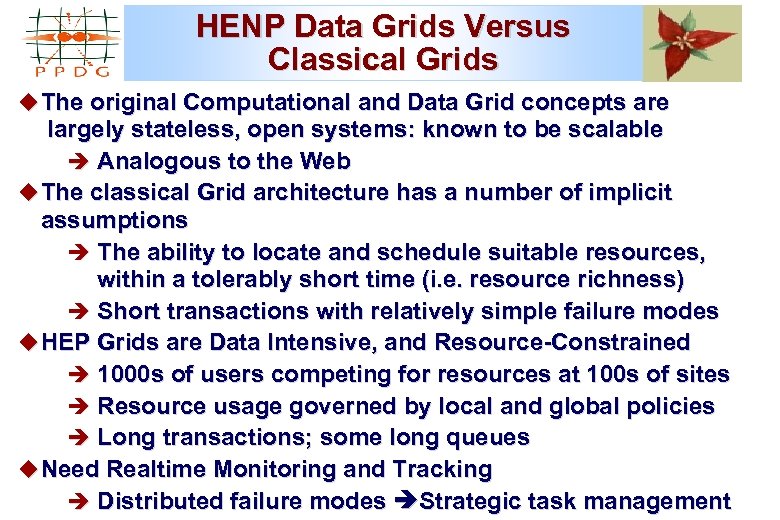

HENP Data Grids Versus Classical Grids
- The original Computational and Data Grid concepts are largely stateless, open systems, known to be scalable
  - Analogous to the Web
- The classical Grid architecture has a number of implicit assumptions:
  - The ability to locate and schedule suitable resources within a tolerably short time (i.e. resource richness)
  - Short transactions with relatively simple failure modes
- HEP Grids are Data Intensive and Resource-Constrained:
  - 1000s of users competing for resources at 100s of sites
  - Resource usage governed by local and global policies
  - Long transactions; some long queues
- Need Realtime Monitoring and Tracking:
  - Distributed failure modes
  - Strategic task management

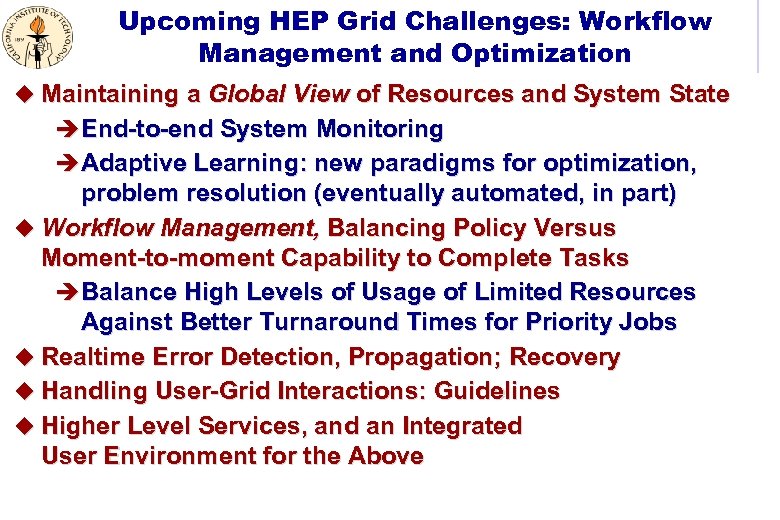

Upcoming HEP Grid Challenges: Workflow Management and Optimization
- Maintaining a Global View of Resources and System State
  - End-to-end System Monitoring
  - Adaptive Learning: new paradigms for optimization, problem resolution (eventually automated, in part)
- Workflow Management, Balancing Policy Versus Moment-to-moment Capability to Complete Tasks
  - Balance High Levels of Usage of Limited Resources Against Better Turnaround Times for Priority Jobs
- Realtime Error Detection, Propagation; Recovery
- Handling User-Grid Interactions: Guidelines
- Higher Level Services, and an Integrated User Environment for the Above

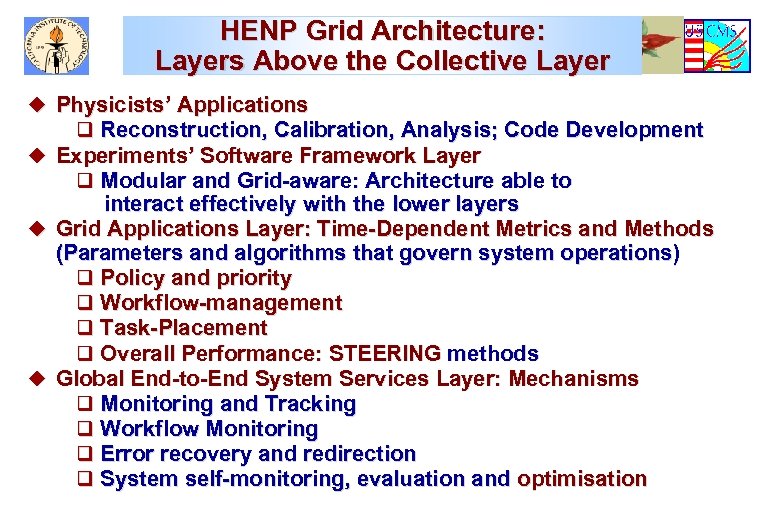

HENP Grid Architecture: Layers Above the Collective Layer
- Physicists’ Applications
  - Reconstruction, Calibration, Analysis; Code Development
- Experiments’ Software Framework Layer
  - Modular and Grid-aware: architecture able to interact effectively with the lower layers
- Grid Applications Layer: Time-Dependent Metrics and Methods (parameters and algorithms that govern system operations)
  - Policy and priority
  - Workflow management
  - Task placement
  - Overall performance: STEERING methods
- Global End-to-End System Services Layer: Mechanisms
  - Monitoring and tracking
  - Workflow monitoring
  - Error recovery and redirection
  - System self-monitoring, evaluation and optimisation

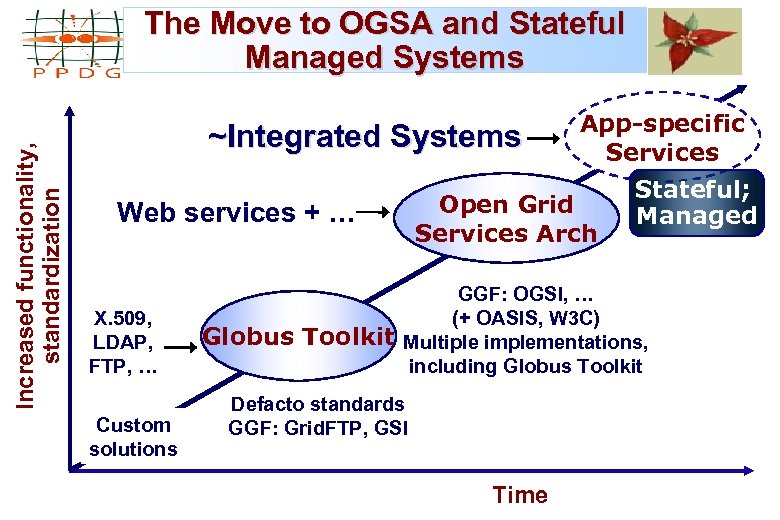

The Move to OGSA and Stateful Managed Systems
(Chart over time; vertical axis: increased functionality, standardization)
- Custom solutions
- Globus Toolkit: X.509, LDAP, FTP, …; de facto standards (GGF: GridFTP, GSI)
- Open Grid Services Architecture: Web services + …; GGF: OGSI, … (+ OASIS, W3C); multiple implementations, including Globus Toolkit
  - App-specific services; stateful; managed
- ~Integrated systems

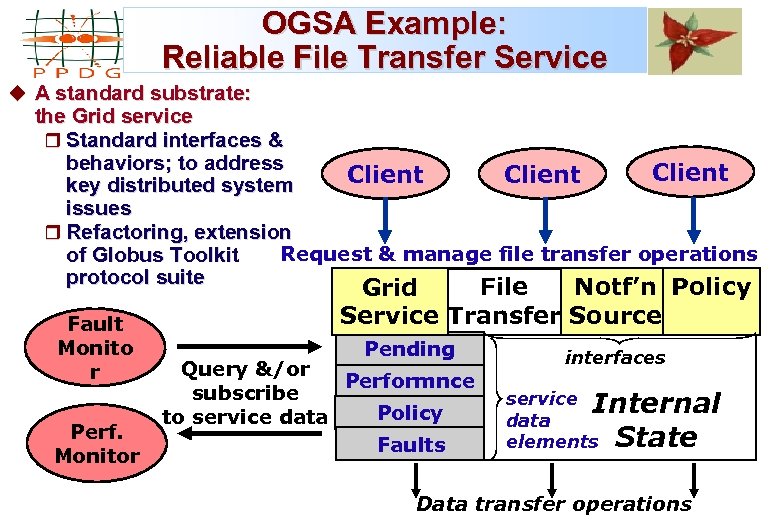

OGSA Example: Reliable File Transfer Service
- A standard substrate: the Grid service
  - Standard interfaces & behaviors to address key distributed system issues
  - Refactoring, extension of Globus Toolkit protocol suite
- The client requests & manages file transfer operations, and can query &/or subscribe to service data
- Service interfaces: Notification, Policy, Fault Monitor, Performance Monitor, Grid Service, File Transfer
- Service data elements: Pending, Policy, Faults, Performance; internal state drives the data transfer operations
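The slide describes a service that keeps its own state (pending requests, faults, performance), exposes it to clients, notifies subscribers and applies a retry policy. A minimal Python sketch of that pattern, under stated assumptions: the class and method names are hypothetical, the transfer itself is a stand-in callable, and this is not the Globus Toolkit RFT interface.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class TransferRequest:
    source: str
    destination: str
    attempts: int = 0


@dataclass
class ReliableTransferService:
    """Hypothetical RFT-style service: it keeps its own state (pending requests,
    faults, performance) and notifies subscribers as that state changes."""

    transfer: Callable[[str, str], float]  # one attempt; returns a rate or raises OSError
    max_attempts: int = 3                  # policy: how many times to try each request
    pending: List[TransferRequest] = field(default_factory=list)
    faults: List[str] = field(default_factory=list)
    performance: Dict[str, float] = field(default_factory=dict)
    subscribers: List[Callable[[str, str], None]] = field(default_factory=list)

    def subscribe(self, callback: Callable[[str, str], None]) -> None:
        self.subscribers.append(callback)

    def _notify(self, event: str, key: str) -> None:
        for callback in self.subscribers:
            callback(event, key)

    def request(self, source: str, destination: str) -> None:
        self.pending.append(TransferRequest(source, destination))
        self._notify("queued", f"{source} -> {destination}")

    def run(self) -> None:
        """Work through pending requests, re-queueing failures until the policy limit."""
        while self.pending:
            req = self.pending.pop(0)
            req.attempts += 1
            key = f"{req.source} -> {req.destination}"
            try:
                rate = self.transfer(req.source, req.destination)
            except OSError as err:
                self.faults.append(f"{key}: {err}")
                if req.attempts < self.max_attempts:
                    self.pending.append(req)
                    self._notify("retrying", key)
                else:
                    self._notify("failed", key)
                continue
            self.performance[key] = rate
            self._notify("done", key)


if __name__ == "__main__":
    calls = {"n": 0}

    def flaky_transfer(src: str, dst: str) -> float:
        """Stand-in for a real transfer: fails once, then succeeds at 200 MB/s."""
        calls["n"] += 1
        if calls["n"] == 1:
            raise OSError("connection reset")
        return 200e6

    svc = ReliableTransferService(transfer=flaky_transfer)
    svc.subscribe(lambda event, key: print(event, key))
    svc.request("siteA:/data/run1.root", "siteB:/data/run1.root")  # hypothetical paths
    svc.run()
    print(svc.faults, svc.performance)
```

Keeping faults and performance as inspectable state, rather than only logging them, is what lets a client or monitor query or subscribe to them, as the slide's "service data" suggests.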

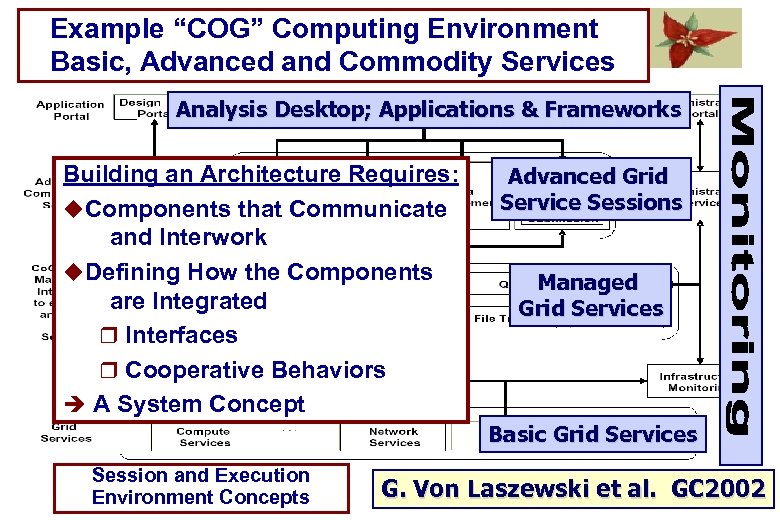

Example “COG” Computing Environment: Basic, Advanced and Commodity Services
- Layers: Analysis Desktop; Applications & Frameworks; Advanced Grid Service Sessions; Managed Grid Services; Basic Grid Services
- Building an Architecture Requires:
  - Components that Communicate and Interwork
  - Defining How the Components are Integrated
    - Interfaces
    - Cooperative Behaviors
  - A System Concept
- Session and Execution Environment Concepts (G. von Laszewski et al., GC 2002)

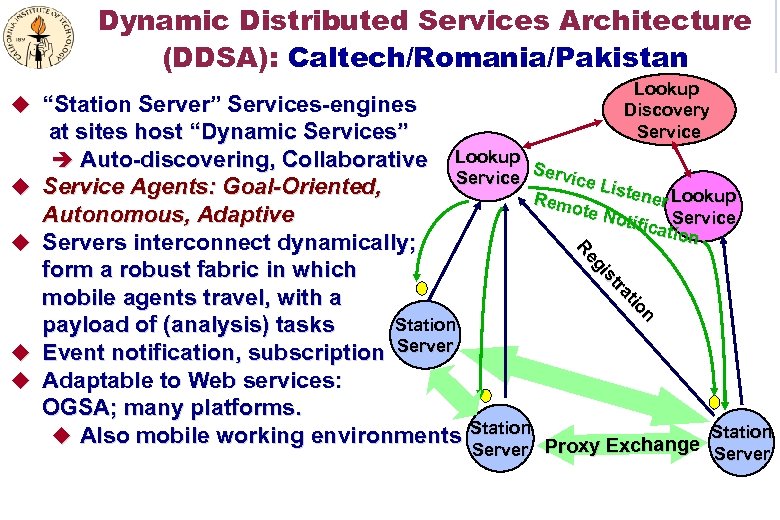

Dynamic Distributed Services Architecture (DDSA): Caltech/Romania/Pakistan
- “Station Server” services-engines at sites host “Dynamic Services”
  - Auto-discovering, collaborative
- Service Agents: Goal-Oriented, Autonomous, Adaptive
- Servers interconnect dynamically; form a robust fabric in which mobile agents travel, with a payload of (analysis) tasks
- Adaptable to Web services: OGSA; many platforms
- Also mobile working environments
- Diagram: Station Servers register with Lookup Services (Lookup Discovery Service); a Service Listener receives Remote Notification; event notification and subscription; Proxy Exchange between Station Servers
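Registration with a lookup service, discovery by type and remote notification can be sketched as a small lease-based registry. Lease-based registration is the style used by Jini lookup services; here it is re-implemented in plain Python, and every name (class, methods, service types, addresses) is hypothetical rather than part of the DDSA code.

```python
import time
from typing import Callable, Dict, List, Tuple


class LookupService:
    """Hypothetical lookup/discovery service in the spirit of the DDSA slide:
    station servers register services under a time-limited lease, clients look
    them up by type, and listeners hear about newly registered services."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        # service id -> (service type, address, lease expiry time)
        self._entries: Dict[str, Tuple[str, str, float]] = {}
        self._listeners: List[Tuple[str, Callable[[str, str], None]]] = []

    def _expire(self) -> None:
        """Drop every registration whose lease has run out."""
        now = self._clock()
        for sid in [s for s, (_, _, expiry) in self._entries.items() if expiry <= now]:
            del self._entries[sid]

    def add_listener(self, service_type: str, callback: Callable[[str, str], None]) -> None:
        self._listeners.append((service_type, callback))

    def register(self, service_id: str, service_type: str, address: str, lease_s: float) -> None:
        """Register or renew a service; it vanishes unless renewed before the lease ends."""
        self._expire()
        is_new = service_id not in self._entries
        self._entries[service_id] = (service_type, address, self._clock() + lease_s)
        if is_new:
            for wanted, callback in self._listeners:
                if wanted == service_type:
                    callback(service_id, address)

    def lookup(self, service_type: str) -> List[str]:
        """Addresses of services of this type whose leases are still valid."""
        self._expire()
        return sorted(addr for (stype, addr, _) in self._entries.values() if stype == service_type)


if __name__ == "__main__":
    now = [0.0]  # fake clock so the example is deterministic
    registry = LookupService(clock=lambda: now[0])
    registry.add_listener("analysis", lambda sid, addr: print("new service:", sid, addr))
    registry.register("st1", "analysis", "station1:9000", lease_s=30)
    registry.register("st2", "analysis", "station2:9000", lease_s=10)
    now[0] = 20.0  # st2 did not renew its lease in time
    print(registry.lookup("analysis"))
```

Leases give the robustness the slide asks for: a station server that crashes simply stops renewing, and its services drop out of lookups without any explicit deregistration.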

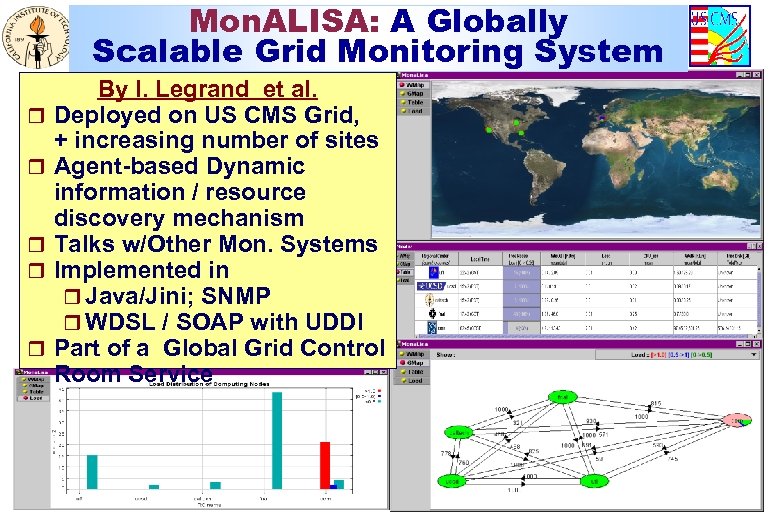

MonALISA: A Globally Scalable Grid Monitoring System (by I. Legrand et al.)
- Deployed on US CMS Grid, + increasing number of sites
- Agent-based dynamic information / resource discovery mechanism
- Talks w/ other monitoring systems
- Implemented in
  - Java/Jini; SNMP
  - WSDL / SOAP with UDDI
- Part of a Global Grid Control Room Service

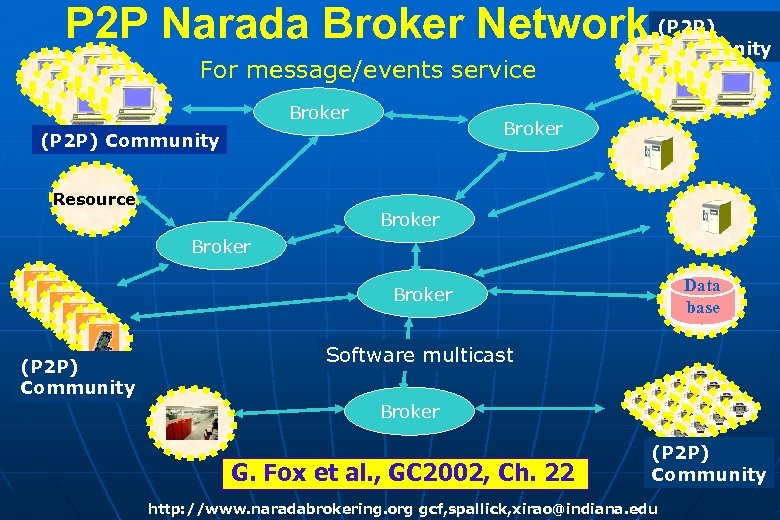

P2P NaradaBroker Network: brokers provide message/event services, linking (P2P) communities, resources and databases via software multicast
G. Fox et al., GC 2002, Ch. 22
http://www.naradabrokering.org; gcf, spallick, xirao@indiana.edu

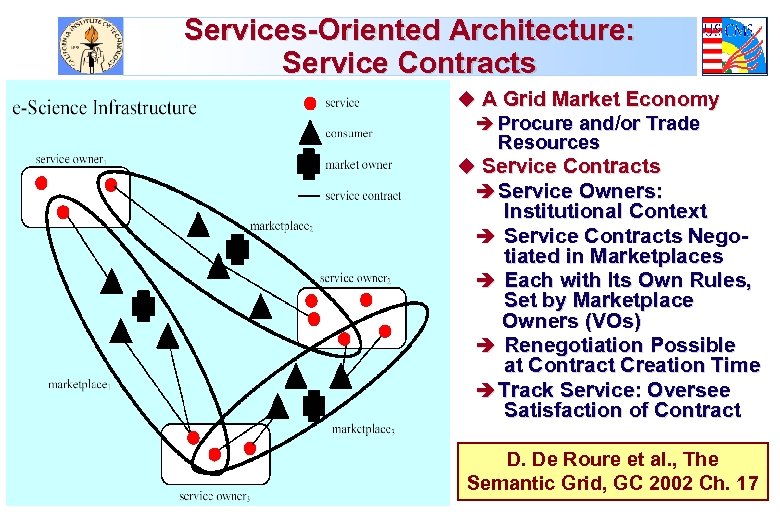

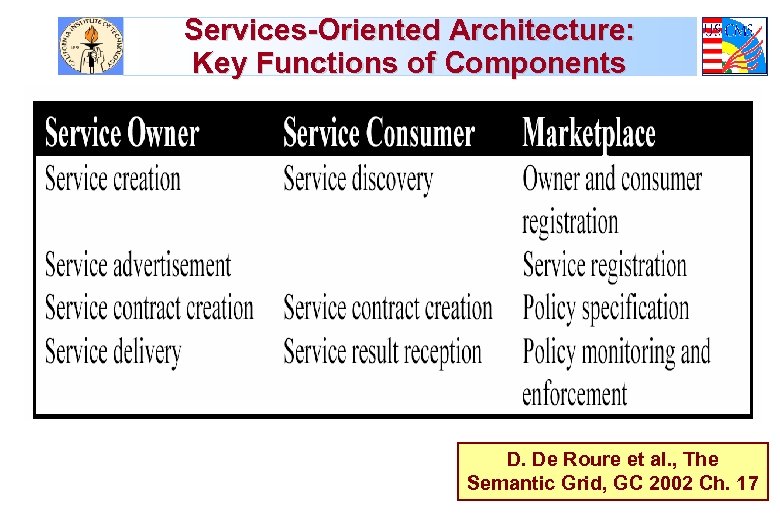

Services-Oriented Architecture: Service Contracts
- A Grid Market Economy
  - Procure and/or Trade Resources
- Service Contracts
  - Service Owners: Institutional Context
  - Service Contracts Negotiated in Marketplaces
  - Each with Its Own Rules, Set by Marketplace Owners (VOs)
  - Renegotiation Possible at Contract Creation Time
  - Track Service: Oversee Satisfaction of Contract
D. De Roure et al., The Semantic Grid, GC 2002 Ch. 17

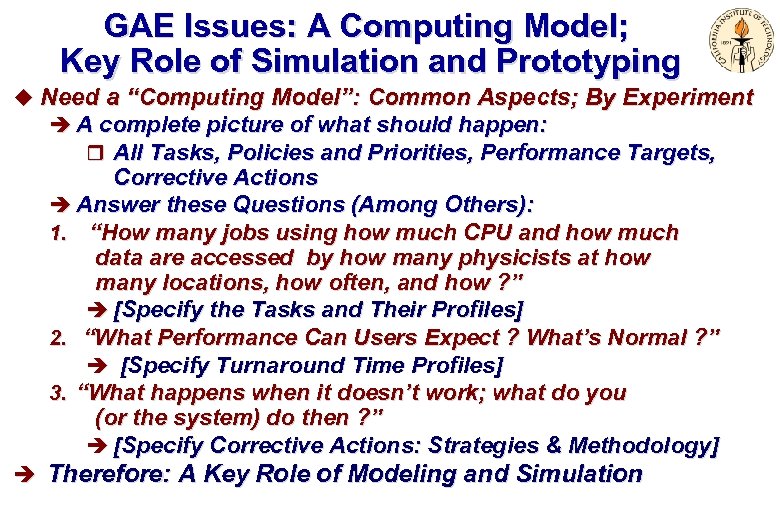

GAE Issues: A Computing Model; Key Role of Simulation and Prototyping
- Need a “Computing Model”: Common Aspects; By Experiment
  - A complete picture of what should happen: all tasks, policies and priorities, performance targets, corrective actions
- Answer these Questions (Among Others):
  1. “How many jobs using how much CPU and how much data are accessed by how many physicists at how many locations, how often, and how?” [Specify the Tasks and Their Profiles]
  2. “What Performance Can Users Expect? What’s Normal?” [Specify Turnaround Time Profiles]
  3. “What happens when it doesn’t work; what do you (or the system) do then?” [Specify Corrective Actions: Strategies & Methodology]
- Therefore: A Key Role of Modeling and Simulation

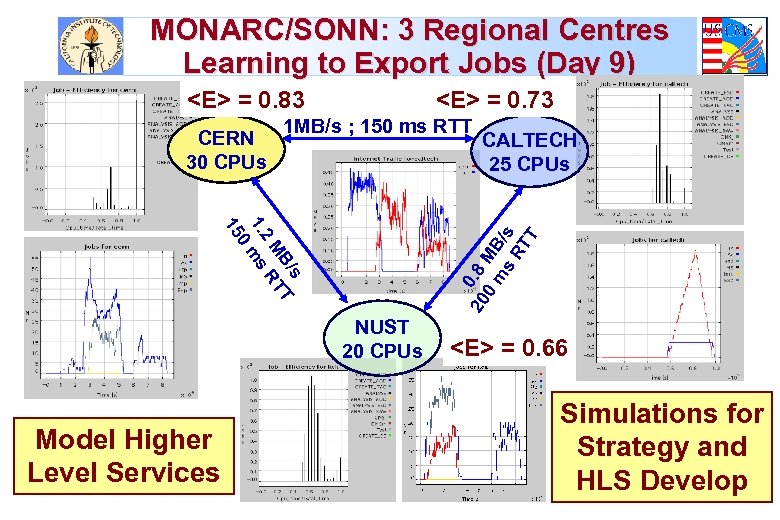

MONARC/SONN: 3 Regional Centres Learning to Export Jobs (Day 9)
- Simulated centres: CERN (30 CPUs, <E> = 0.83), CALTECH (25 CPUs) and NUST (20 CPUs); the other two centres show mean efficiencies <E> = 0.73 and 0.66
- Links between centres: 1 MB/s, 150 ms RTT; 0.8 MB/s, 200 ms RTT; 1.2 MB/s, 150 ms RTT
- Simulations for Day = 9: Model Higher Level Services; Develop Strategy and HLS
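A plain greedy rule for "exporting" a job: estimate, for each centre, the input transfer time plus queue wait plus run time, and pick the smallest. This is a hypothetical baseline for illustration only, not the learning approach (SONN) that the MONARC simulation used; the cost model, field names, queue lengths and the assignment of the 1 MB/s, 150 ms link to CALTECH are all assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Centre:
    name: str
    cpus: int
    queued_jobs: int        # jobs already running or waiting at the centre
    bandwidth_mb_s: float   # link bandwidth from the submitting centre (MB/s)
    rtt_s: float            # round-trip time from the submitting centre (s)


def estimated_completion_s(c: Centre, cpu_time_s: float, input_mb: float, local: bool) -> float:
    """Crude estimate: input transfer (remote only) + queue wait + the job's own run time."""
    transfer = 0.0 if local else input_mb / c.bandwidth_mb_s + c.rtt_s
    waves_ahead = c.queued_jobs // c.cpus  # full batches of jobs that must finish first
    return transfer + waves_ahead * cpu_time_s + cpu_time_s


def choose_centre(home: Centre, remotes: List[Centre], cpu_time_s: float, input_mb: float) -> str:
    """Run at home unless some remote centre is expected to finish the job sooner."""
    best_time = estimated_completion_s(home, cpu_time_s, input_mb, local=True)
    best_name = home.name
    for c in remotes:
        t = estimated_completion_s(c, cpu_time_s, input_mb, local=False)
        if t < best_time:
            best_time, best_name = t, c.name
    return best_name


if __name__ == "__main__":
    # Illustrative numbers only: CPU counts from the slide, queues and link assignment assumed.
    cern = Centre("CERN", cpus=30, queued_jobs=90, bandwidth_mb_s=float("inf"), rtt_s=0.0)
    caltech = Centre("CALTECH", cpus=25, queued_jobs=0, bandwidth_mb_s=1.0, rtt_s=0.15)
    print(choose_centre(cern, [caltech], cpu_time_s=600, input_mb=100))   # small input: export
    print(choose_centre(cern, [caltech], cpu_time_s=600, input_mb=2000))  # large input: stay
```

With a busy home centre, a 100 MB job is worth exporting over a 1 MB/s link, while a 2000 MB job is not: the kind of trade-off between proximity to the data and free CPU that a learned strategy has to capture.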

GAE Workshop Goals (1)
- “Getting Our Arms Around” the Grid-Enabled Analysis “Problem”
- Review Existing Work Towards a GAE: Components, Interfaces, System Concepts
- Review Client Analysis Tools; Consider How to Integrate Them
- User Interfaces: What does the GAE Desktop Look Like? (Different Flavors)
- Look At Requirements, Ideas for a GAE Architecture
  - A Vision of the System’s Goals and Workings
    - Attention to Strategy and Policy
- Develop (Continue) a Program of Simulations of the System
  - For the Computing Model, and Defining the GAE
  - Essential for Developing a Feasible Vision; Developing Strategies, Solving Problems and Optimizing the System
  - With a Complementary Program of Prototyping

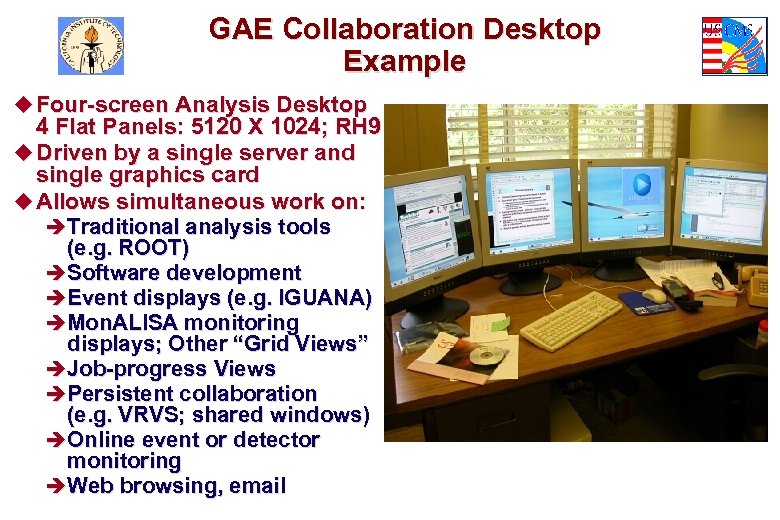

GAE Collaboration Desktop Example
- Four-screen Analysis Desktop: 4 Flat Panels, 5120 × 1024; RH 9
- Driven by a single server and single graphics card
- Allows simultaneous work on:
  - Traditional analysis tools (e.g. ROOT)
  - Software development
  - Event displays (e.g. IGUANA)
  - MonALISA monitoring displays; other “Grid Views”
  - Job-progress views
  - Persistent collaboration (e.g. VRVS; shared windows)
  - Online event or detector monitoring
  - Web browsing, email

GAE Workshop Goals (2)
- Architectural Approaches: Choose A Feasible Direction
  - For example a Managed Services Architecture
  - Be Prepared to Learn by Doing; Simulating and Prototyping
- Where to Start, and the Development Strategy
  - Existing and Missing Parts of the System [Layers; Concepts]
  - When to Adapt Existing Components, Or to Re-Build Them “from Scratch”
- Manpower Available to Meet the Goals; Shortfalls
- Allocation of Tasks; Including Generating a Plan
- Linkage Between Analysis and Grid-Enabled Production
- Planning for Closer Relationship with LCG, Trillium, and the Experiments’ Starting Efforts in this Area

Some Extra Slides Follow

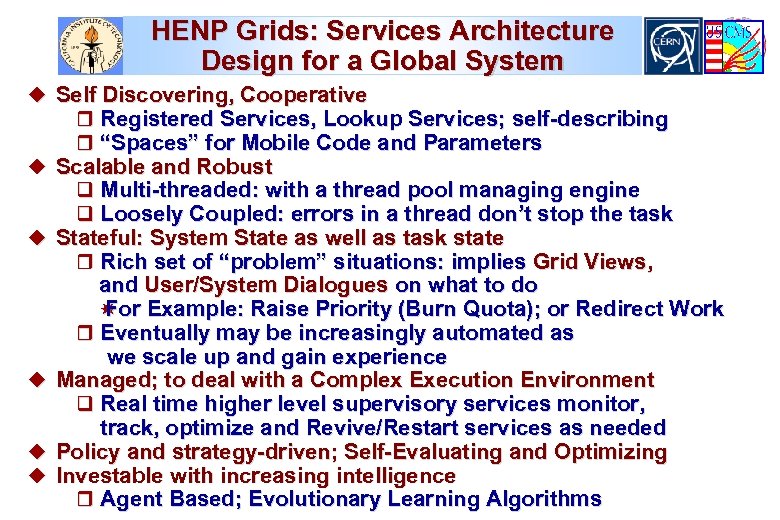

HENP Grids: Services Architecture Design for a Global System
- Self-Discovering, Cooperative
  - Registered services, lookup services; self-describing
  - “Spaces” for mobile code and parameters
- Scalable and Robust
  - Multi-threaded: with a thread pool managing engine
  - Loosely coupled: errors in a thread don’t stop the task
- Stateful: system state as well as task state
  - Rich set of “problem” situations: implies Grid Views, and user/system dialogues on what to do
    - Example: raise priority (burn quota), or redirect work
  - Eventually may be increasingly automated as we scale up and gain experience
- Managed, to deal with a complex execution environment
  - Real-time higher level supervisory services monitor, track, optimize and revive/restart services as needed
- Policy- and strategy-driven; self-evaluating and optimizing
- Investable with increasing intelligence
  - Agent-based; evolutionary learning algorithms

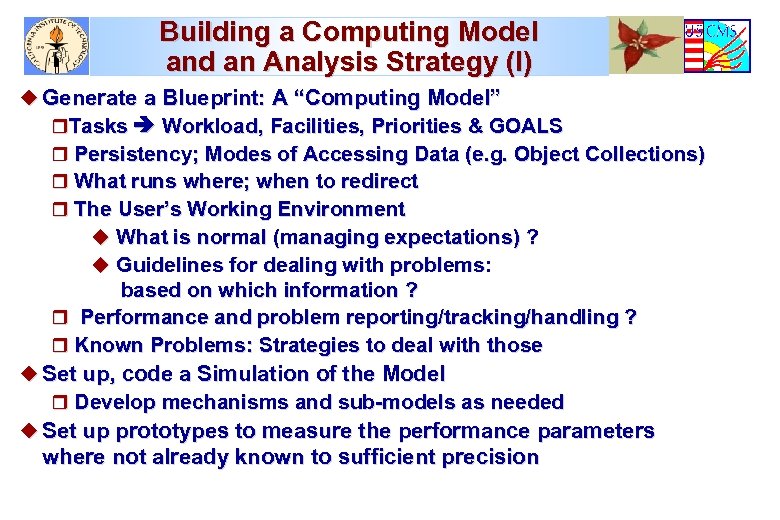

Building a Computing Model and an Analysis Strategy (I)
- Generate a Blueprint: A “Computing Model”
  - Tasks, Workload, Facilities, Priorities & GOALS
  - Persistency; Modes of Accessing Data (e.g. Object Collections)
  - What runs where; when to redirect
  - The User’s Working Environment
  - What is normal (managing expectations)?
  - Guidelines for dealing with problems: based on which information?
    - Performance and problem reporting/tracking/handling?
    - Known Problems: Strategies to deal with those
- Set up, code a Simulation of the Model
  - Develop mechanisms and sub-models as needed
- Set up prototypes to measure the performance parameters where not already known to sufficient precision

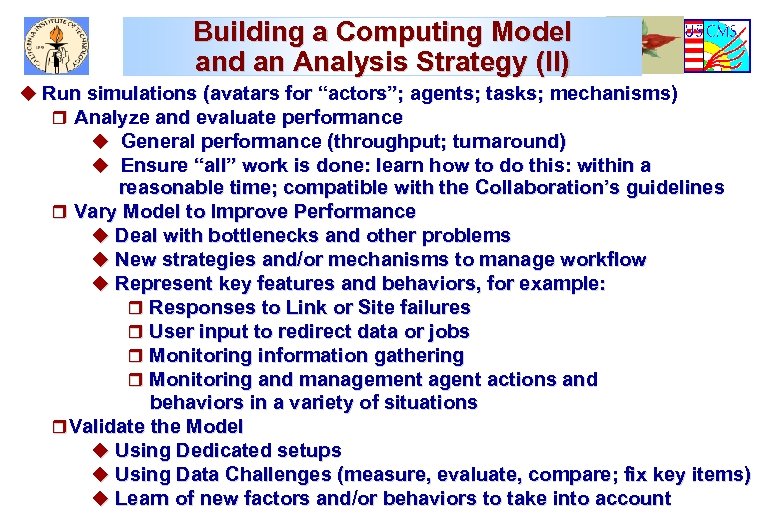

Building a Computing Model and an Analysis Strategy (II)
- Run simulations (avatars for “actors”; agents; tasks; mechanisms)
  - Analyze and evaluate performance
    - General performance (throughput; turnaround)
    - Ensure “all” work is done: learn how to do this within a reasonable time, compatible with the Collaboration’s guidelines
  - Vary Model to Improve Performance
    - Deal with bottlenecks and other problems
    - New strategies and/or mechanisms to manage workflow
  - Represent key features and behaviors, for example:
    - Responses to Link or Site failures
    - User input to redirect data or jobs
    - Monitoring information gathering
    - Monitoring and management agent actions and behaviors in a variety of situations
  - Validate the Model
    - Using Dedicated setups
    - Using Data Challenges (measure, evaluate, compare; fix key items)
    - Learn of new factors and/or behaviors to take into account

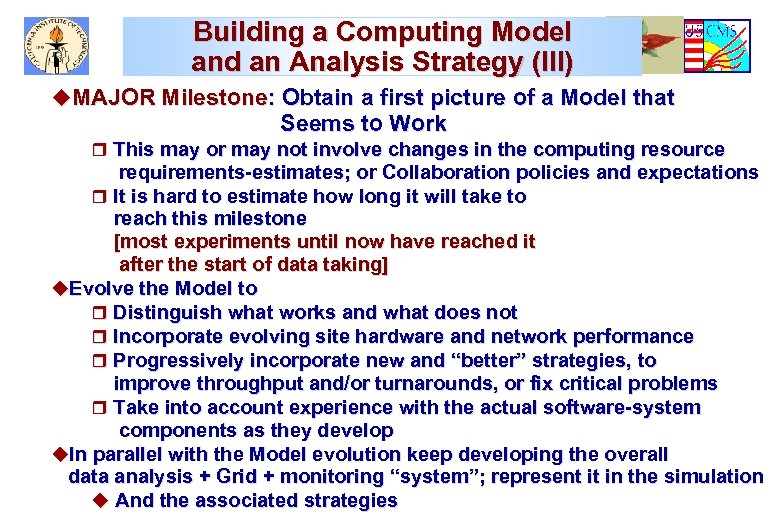

Building a Computing Model and an Analysis Strategy (III)
- MAJOR Milestone: Obtain a first picture of a Model that Seems to Work
  - This may or may not involve changes in the computing resource requirement estimates, or in Collaboration policies and expectations
  - It is hard to estimate how long it will take to reach this milestone [most experiments until now have reached it after the start of data taking]
- Evolve the Model to
  - Distinguish what works and what does not
  - Incorporate evolving site hardware and network performance
  - Progressively incorporate new and “better” strategies, to improve throughput and/or turnarounds, or fix critical problems
  - Take into account experience with the actual software-system components as they develop
- In parallel with the Model evolution, keep developing the overall data analysis + Grid + monitoring “system”; represent it in the simulation
  - And the associated strategies

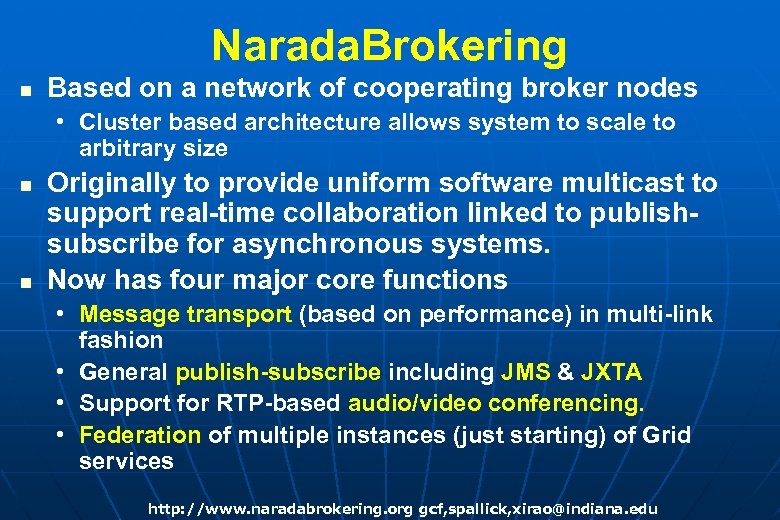

NaradaBrokering
- Based on a network of cooperating broker nodes
  - Cluster-based architecture allows the system to scale to arbitrary size
- Originally to provide uniform software multicast to support real-time collaboration, linked to publish-subscribe for asynchronous systems
- Now has four major core functions:
  - Message transport (based on performance) in multi-link fashion
  - General publish-subscribe including JMS & JXTA
  - Support for RTP-based audio/video conferencing
  - Federation of multiple instances (just starting) of Grid services

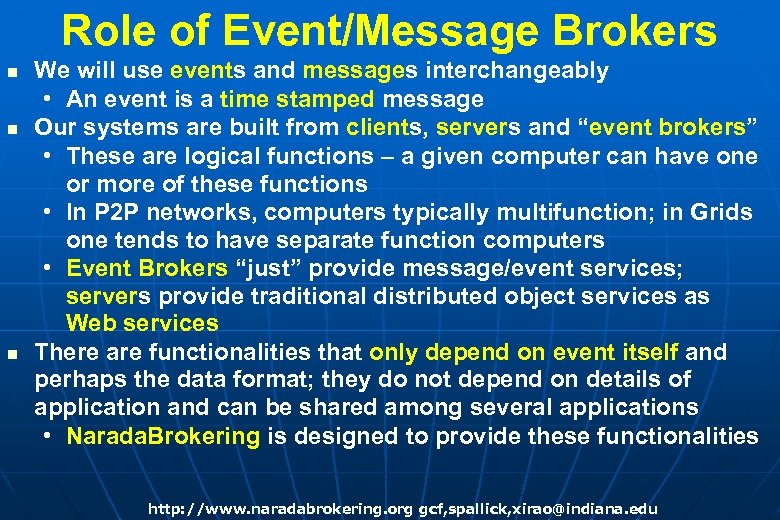

Role of Event/Message Brokers
- We will use events and messages interchangeably
  - An event is a time-stamped message
- Our systems are built from clients, servers and “event brokers”
  - These are logical functions: a given computer can have one or more of these functions
  - In P2P networks, computers are typically multifunction; in Grids one tends to have separate-function computers
  - Event brokers “just” provide message/event services; servers provide traditional distributed object services as Web services
- There are functionalities that depend only on the event itself and perhaps the data format; they do not depend on details of the application and can be shared among several applications
  - NaradaBrokering is designed to provide these functionalities
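The broker role described above, routing time-stamped events by topic without knowing anything about the application, can be sketched in a few lines. This is a hypothetical single-broker illustration, not the NaradaBrokering API; a real broker network adds multi-broker routing, JMS/JXTA compatibility and transport choices.

```python
import time
from collections import defaultdict
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass(frozen=True)
class Event:
    """An event is a time-stamped message published on a topic."""
    topic: str
    payload: Any
    timestamp: float


class Broker:
    """Hypothetical single-node topic broker: it routes events to subscribers
    without knowing anything about the applications that produce them."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[Event], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[Event], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, payload: Any) -> int:
        """Stamp and deliver an event; returns how many subscribers received it."""
        event = Event(topic, payload, time.time())
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(event)
        return len(handlers)


if __name__ == "__main__":
    broker = Broker()
    broker.subscribe("monitoring/cpu", lambda e: print("grid view got", e.payload))
    broker.subscribe("monitoring/cpu", lambda e: print("job tracker got", e.payload))
    print(broker.publish("monitoring/cpu", {"site": "siteA", "load": 0.7}))
    print(broker.publish("jobs/status", "nobody listening"))
```

Because the broker sees only topics and payloads, the same routing code can serve monitoring, job tracking or collaboration traffic, which is the application-independent functionality the slide attributes to event brokers.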

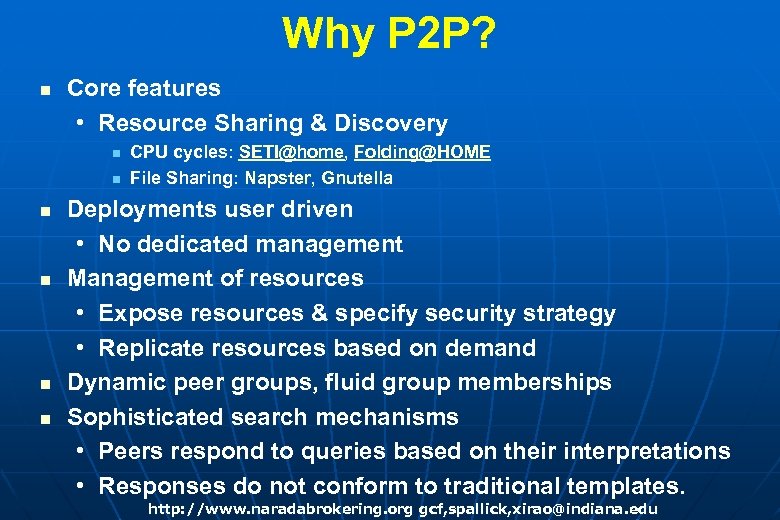

Why P2P?
- Core features
  - Resource Sharing & Discovery
    - CPU cycles: SETI@home, Folding@HOME
    - File Sharing: Napster, Gnutella
  - Deployments user driven
    - No dedicated management
  - Management of resources
    - Expose resources & specify security strategy
    - Replicate resources based on demand
  - Dynamic peer groups, fluid group memberships
  - Sophisticated search mechanisms
    - Peers respond to queries based on their interpretations
    - Responses do not conform to traditional templates

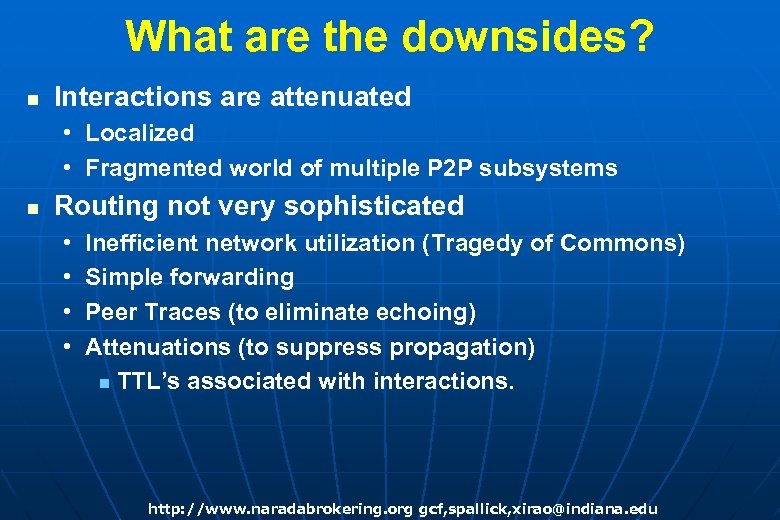

What are the downsides?
- Interactions are attenuated
  - Localized
  - Fragmented world of multiple P2P subsystems
- Routing not very sophisticated
  - Inefficient network utilization (Tragedy of the Commons)
  - Simple forwarding
  - Peer traces (to eliminate echoing)
  - Attenuations (to suppress propagation)
    - TTLs associated with interactions
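Simple forwarding with peer traces and TTLs, as listed above, is essentially Gnutella-style flooding. A minimal sketch on a toy peer graph (the graph, peer names and function name are illustrative): the TTL bounds how far a query travels, which is the attenuation the slide describes, and the duplicate check stops messages echoing around cycles.

```python
from collections import deque
from typing import Deque, Dict, List, Optional, Set, Tuple


def flood(graph: Dict[str, List[str]], origin: str, ttl: int) -> Tuple[Set[str], int]:
    """Flood a query from `origin` through a peer graph.

    Each peer forwards to every neighbour except the one the query came from,
    drops copies it has already seen (the peer trace that stops echoing), and
    stops forwarding when the time-to-live is exhausted. Returns the set of
    peers reached and the number of messages sent on the network."""
    seen: Set[str] = {origin}
    messages = 0
    queue: Deque[Tuple[str, Optional[str], int]] = deque([(origin, None, ttl)])
    while queue:
        peer, came_from, hops_left = queue.popleft()
        if hops_left == 0:
            continue  # TTL exhausted: the query is attenuated here
        for neighbour in graph.get(peer, []):
            if neighbour == came_from:
                continue
            messages += 1  # sent even if the receiver will drop it
            if neighbour in seen:
                continue  # duplicate: suppressed, not forwarded again
            seen.add(neighbour)
            queue.append((neighbour, peer, hops_left - 1))
    return seen, messages


if __name__ == "__main__":
    # Six peers in a ring: A-B-C-D-E-F-A
    ring = {"A": ["B", "F"], "B": ["A", "C"], "C": ["B", "D"],
            "D": ["C", "E"], "E": ["D", "F"], "F": ["E", "A"]}
    for ttl in (1, 2, 3):
        reached, sent = flood(ring, "A", ttl)
        print(ttl, sorted(reached), sent)
```

On the ring, a TTL of 2 leaves peer D unreached (the "localized" interaction), while a TTL of 3 reaches everyone but sends one redundant message that the duplicate check discards: the wasted traffic behind the "inefficient network utilization" point.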

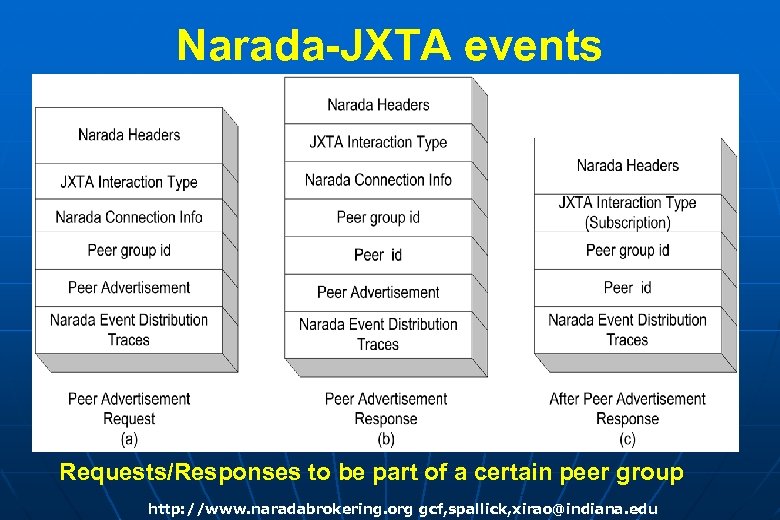

Narada-JXTA events: Requests/Responses to be part of a certain peer group

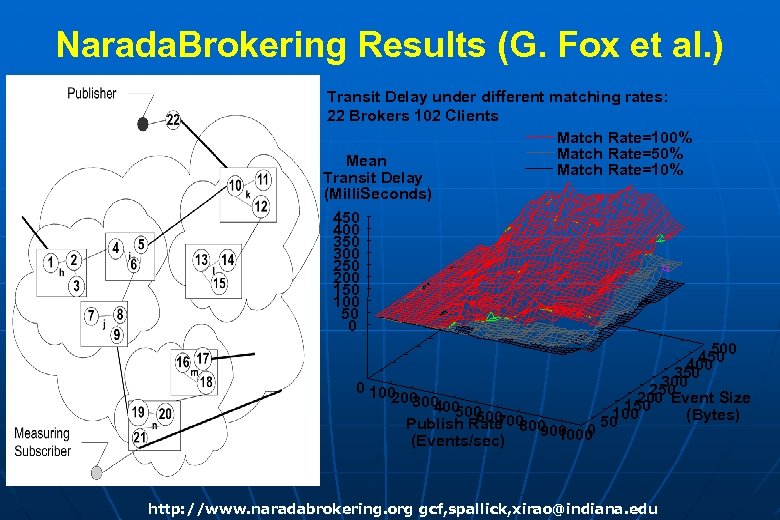

NaradaBrokering Results (G. Fox et al.): Transit Delay under Different Matching Rates
[Figure: mean transit delay (milliseconds) versus publish rate (events/sec) and event size (bytes), for match rates of 100%, 50% and 10%; 22 brokers, 102 clients]

Services-Oriented Architecture: Key Functions of Components (D. De Roure et al., The Semantic Grid, GC 2002 Ch. 17)

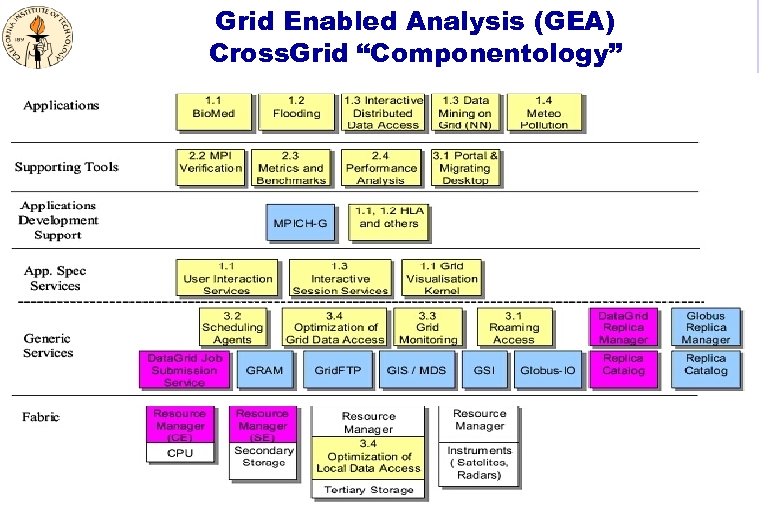

Grid Enabled Analysis (GEA): CrossGrid “Componentology”

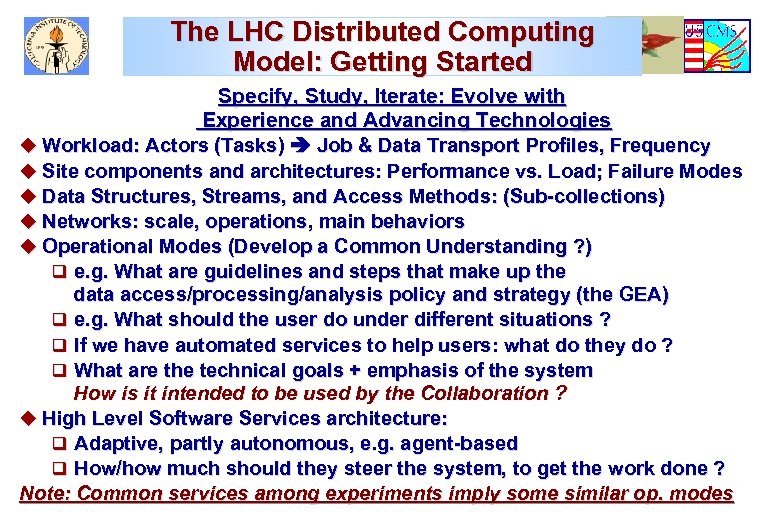

The LHC Distributed Computing Model: Getting Started
Specify, Study, Iterate: Evolve with Experience and Advancing Technologies
- Workload: Actors (Tasks); Job & Data Transport Profiles, Frequency
- Site components and architectures: Performance vs. Load; Failure Modes
- Data Structures, Streams, and Access Methods (Sub-collections)
- Networks: scale, operations, main behaviors
- Operational Modes (Develop a Common Understanding?)
  - e.g. What are the guidelines and steps that make up the data access/processing/analysis policy and strategy (the GEA)?
  - e.g. What should the user do under different situations?
  - If we have automated services to help users: what do they do?
  - What are the technical goals + emphasis of the system? How is it intended to be used by the Collaboration?
- High Level Software Services architecture:
  - Adaptive, partly autonomous, e.g. agent-based
  - How/how much should they steer the system, to get the work done?
Note: Common services among experiments imply some similar operational modes

![2001 Transatlantic Net WG Bandwidth Requirements](https://present5.com/presentation/1485a65fab1e25efce34e5d5e37240c2/image-42.jpg)

2001 Transatlantic Net WG Bandwidth Requirements [*]
[*] See http://gate.hep.anl.gov/lprice/TAN.
The 2001 LHC requirements outlook now looks very conservative in 2003.

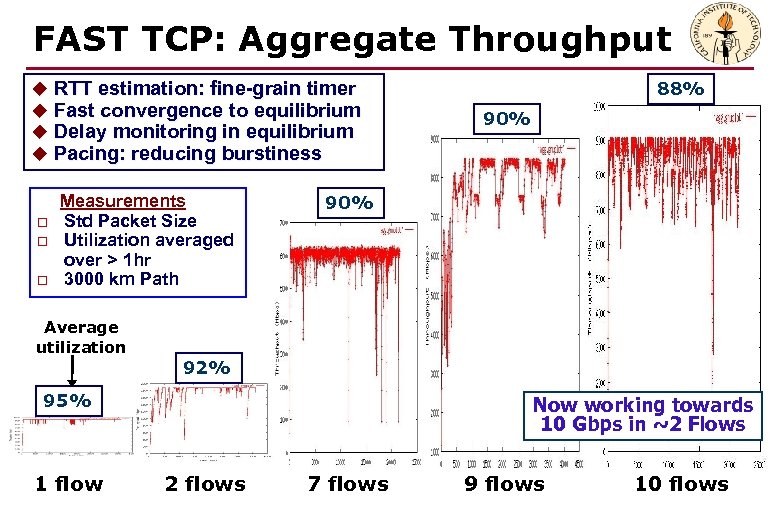

FAST TCP: Aggregate Throughput
- RTT estimation: fine-grain timer
- Fast convergence to equilibrium
- Delay monitoring in equilibrium
- Pacing: reducing burstiness
- Measurements: standard packet size; utilization averaged over > 1 hr; 3000 km path
  - Average utilization between 88% and 95%, for 1, 2, 7, 9 and 10 flows
- Now working towards 10 Gbps in ~2 flows
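The bullets above describe FAST TCP's delay-based design: it estimates RTT and steers the window toward an equilibrium in which a fixed number of its own packets, alpha, sit in the bottleneck queue. A minimal sketch of the published FAST window update, iterated on a simple fluid model of one bottleneck; the fluid model, the constant alpha and all numbers are assumptions for illustration, and the pacing and loss handling of a real implementation are omitted.

```python
def fast_tcp_window(capacity_pkts_s: float, base_rtt_s: float, alpha_pkts: float,
                    gamma: float = 0.5, w0: float = 10.0, steps: int = 200) -> float:
    """Iterate the FAST TCP window update for one flow on one bottleneck link.

    Update rule: w <- min(2w, (1 - gamma) * w + gamma * (base_rtt / rtt * w + alpha)).
    Fluid model (assumption): no losses, no cross traffic, and any window beyond
    the bandwidth-delay product sits in the bottleneck queue."""
    bdp = capacity_pkts_s * base_rtt_s              # packets that fit "in the pipe"
    w = w0
    for _ in range(steps):
        queue = max(0.0, w - bdp)                   # excess packets wait in the buffer
        rtt = base_rtt_s + queue / capacity_pkts_s  # queueing delay raises the measured RTT
        w = min(2.0 * w, (1.0 - gamma) * w + gamma * (base_rtt_s / rtt * w + alpha_pkts))
    return w


if __name__ == "__main__":
    # Hypothetical path: 10,000 packets/s bottleneck, 100 ms base RTT, alpha = 200 packets
    w = fast_tcp_window(10_000, 0.1, 200)
    print(f"window = {w:.1f} packets, queued = {w - 10_000 * 0.1:.1f} packets")
```

Once a queue forms, base_rtt / rtt * w equals the bandwidth-delay product, so the update pulls w geometrically toward C * base_rtt + alpha: the pipe stays full with only about alpha packets queued, which is how a delay-based scheme can sustain high utilization without driving the link into loss.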

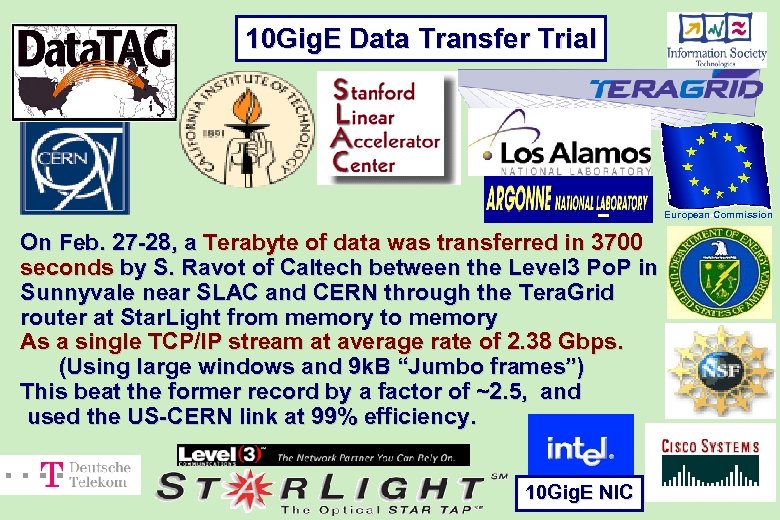

10 GigE Data Transfer Trial
On Feb. 27–28, a terabyte of data was transferred in 3700 seconds by S. Ravot of Caltech between the Level 3 PoP in Sunnyvale near SLAC and CERN, through the TeraGrid router at StarLight, from memory to memory, as a single TCP/IP stream at an average rate of 2.38 Gbps (using large windows and 9 kB “jumbo frames”). This beat the former record by a factor of ~2.5, and used the US-CERN link at 99% efficiency.
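A quick consistency check on the quoted figures. The byte convention is an assumption, since the slide does not state it: 3700 seconds at 2.38 Gbps matches a "terabyte" of 2^40 bytes, while 10^12 bytes would average about 2.16 Gbps.

```python
TB_DECIMAL = 10**12  # 1 TB = 10^12 bytes
TB_BINARY = 2**40    # 1 TiB = 2^40 bytes


def average_gbps(n_bytes: float, seconds: float) -> float:
    """Average throughput in gigabits per second (10^9 bit/s)."""
    return n_bytes * 8 / seconds / 1e9


if __name__ == "__main__":
    print(f"10^12 bytes in 3700 s: {average_gbps(TB_DECIMAL, 3700):.2f} Gbps")
    print(f"2^40 bytes in 3700 s:  {average_gbps(TB_BINARY, 3700):.2f} Gbps")
```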

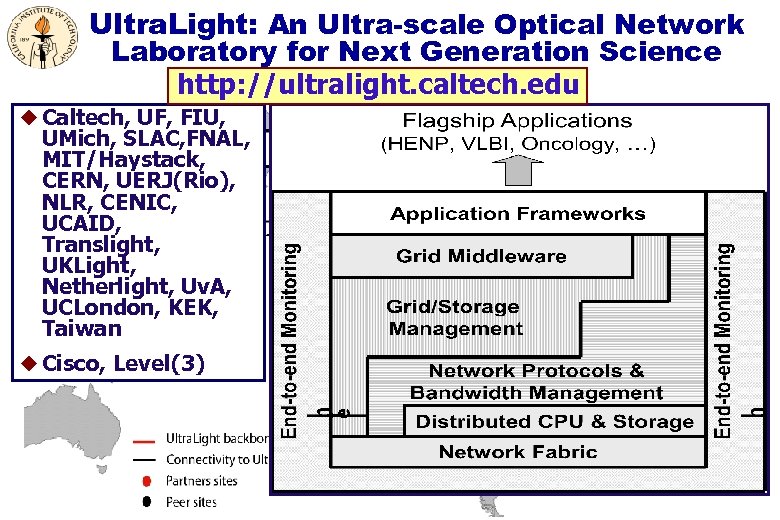

UltraLight: An Ultra-scale Optical Network Laboratory for Next Generation Science (http://ultralight.caltech.edu)
- Caltech, UF, FIU, UMich, SLAC, FNAL, MIT/Haystack, CERN, UERJ (Rio), NLR, CENIC, UCAID, Translight, UKLight, Netherlight, UvA, UCLondon, KEK, Taiwan
- Cisco, Level(3)

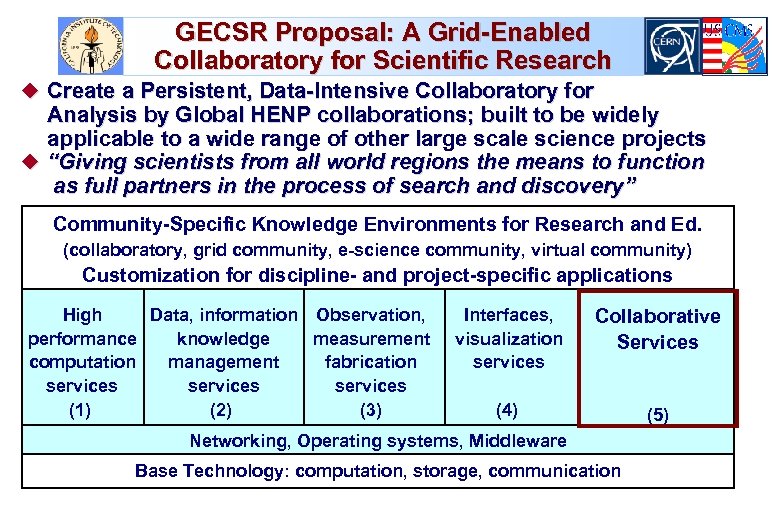

GECSR Proposal: A Grid-Enabled Collaboratory for Scientific Research
- Create a Persistent, Data-Intensive Collaboratory for Analysis by Global HENP Collaborations, built to be applicable to a wide range of other large-scale science projects
- “Giving scientists from all world regions the means to function as full partners in the process of search and discovery”
- Layered diagram (top to bottom):
  - Community-Specific Knowledge Environments for Research and Education (collaboratory, grid community, e-science community, virtual community)
  - Customization for discipline- and project-specific applications
  - Services: (1) high performance computation; (2) data, information, knowledge management; (3) observation, measurement, fabrication; (4) interfaces, visualization services; (5) collaborative services
  - Networking, operating systems, middleware
  - Base Technology: computation, storage, communication

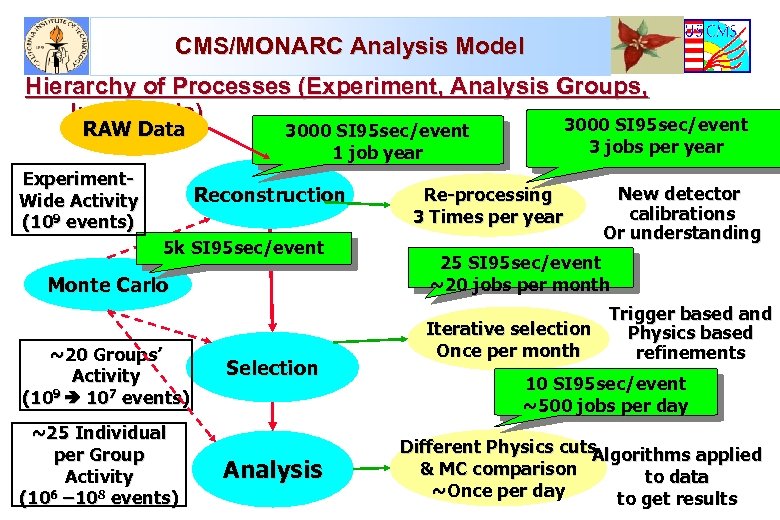

CMS/MONARC Analysis Model: Hierarchy of Processes (Experiment, Analysis Groups, Individuals)
- Experiment-Wide Activity (10^9 events)
  - Reconstruction of RAW data: 3000 SI95 sec/event; 1 job per year
  - Re-processing: 3000 SI95 sec/event; 3 times per year (new detector calibrations or understanding)
  - Monte Carlo: 5000 SI95 sec/event
- ~20 Groups’ Activity (10^9 → 10^7 events)
  - Selection: 25 SI95 sec/event; ~20 jobs per month; trigger-based and physics-based iterative selection, refinements once per month
- ~25 Individuals per Group Activity (10^6 – 10^8 events)
  - Analysis: 10 SI95 sec/event; ~500 jobs per day; different physics cuts & MC comparison, algorithms applied to data to get results ~once per day
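A back-of-the-envelope conversion of the per-event costs above into sustained CPU capacity. Assumptions, flagged in the code too: work is spread evenly over a 365-day year at 100% efficiency, and an analysis job reads 10^7 events (a hypothetical value inside the slide's 10^6 to 10^8 range). The 25 SI95 per PC conversion is taken from the CMS totals slide.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600  # assumption: work spread evenly over a calendar year
PC_SI95 = 25                        # from the CMS totals slide: 1 PC (ca. 2000) ~ 25 SI95


def sustained_ksi95(si95_s_per_event: float, events_per_job: float, jobs_per_year: float) -> float:
    """Average CPU capacity (kSI95) needed to finish the yearly workload at 100% efficiency."""
    return si95_s_per_event * events_per_job * jobs_per_year / SECONDS_PER_YEAR / 1e3


ACTIVITIES = {
    "reconstruction": sustained_ksi95(3000, 1e9, 1),
    "re-processing": sustained_ksi95(3000, 1e9, 3),
    # hypothetical: 1e7 events per analysis job (the slide gives a 1e6 to 1e8 range)
    "analysis": sustained_ksi95(10, 1e7, 500 * 365),
}

if __name__ == "__main__":
    for name, ksi95 in ACTIVITIES.items():
        print(f"{name:15s} {ksi95:7.1f} kSI95  ~{ksi95 * 1e3 / PC_SI95:,.0f} PCs")
```

Under these assumptions a single yearly reconstruction pass needs roughly 95 kSI95 sustained, and the many small analysis jobs can add up to more than the experiment-wide passes, which is why the analysis workload weighs so heavily in the computing model.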

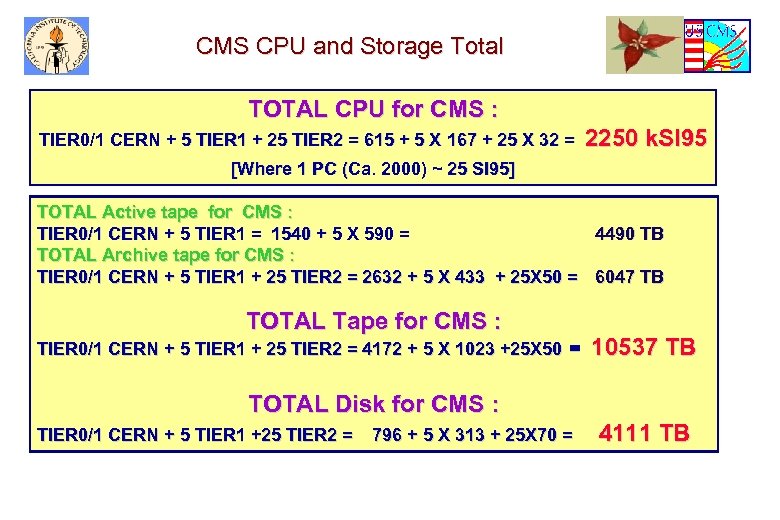

CMS CPU and Storage Totals (CERN Tier 0/1 + 5 Tier 1 + 25 Tier 2)

| Resource | CERN Tier 0/1 | 5 Tier 1 | 25 Tier 2 | Total |
|---|---|---|---|---|
| CPU (kSI95) | 615 | 5 × 167 | 25 × 32 | 2250 |
| Active tape (TB) | 1540 | 5 × 590 | – | 4490 |
| Archive tape (TB) | 2632 | 5 × 433 | 25 × 50 | 6047 |
| Total tape (TB) | 4172 | 5 × 1023 | 25 × 50 | 10537 |
| Disk (TB) | 796 | 5 × 313 | 25 × 70 | 4111 |

[Where 1 PC (ca. 2000) ~ 25 SI95]
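The totals can be re-derived from the per-site figures. A short check, assuming (as the slide's sums imply) that every Tier 1 and every Tier 2 centre has identical resources and that Tier 2 centres hold no active tape; it also confirms that total tape equals active plus archive tape at every kind of site.

```python
N_TIER1, N_TIER2 = 5, 25

# Per-site figures from the slide: (CERN Tier 0/1, each Tier 1, each Tier 2, quoted total)
REQUIREMENTS = {
    "CPU (kSI95)": (615, 167, 32, 2250),
    "Active tape (TB)": (1540, 590, 0, 4490),
    "Archive tape (TB)": (2632, 433, 50, 6047),
    "Total tape (TB)": (4172, 1023, 50, 10537),
    "Disk (TB)": (796, 313, 70, 4111),
}


def site_total(cern: int, per_tier1: int, per_tier2: int) -> int:
    """CERN plus all Tier 1 and Tier 2 centres."""
    return cern + N_TIER1 * per_tier1 + N_TIER2 * per_tier2


def check() -> bool:
    ok = True
    for name, (cern, t1, t2, quoted) in REQUIREMENTS.items():
        computed = site_total(cern, t1, t2)
        print(f"{name:18s} computed {computed:6d}  quoted {quoted:6d}")
        ok = ok and computed == quoted
    # Total tape should equal active + archive tape at every site type.
    active = REQUIREMENTS["Active tape (TB)"]
    archive = REQUIREMENTS["Archive tape (TB)"]
    total = REQUIREMENTS["Total tape (TB)"]
    ok = ok and all(a + b == t for a, b, t in zip(active[:3], archive[:3], total[:3]))
    return ok


if __name__ == "__main__":
    print("all totals consistent:", check())
```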

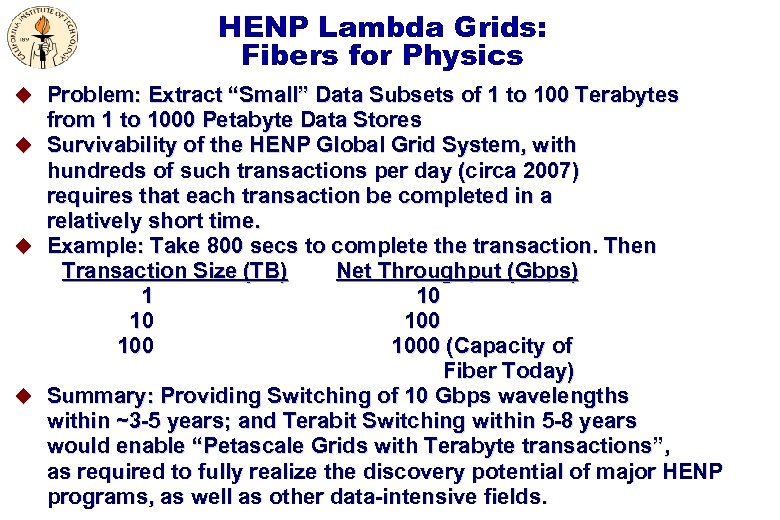

HENP Lambda Grids: Fibers for Physics
- Problem: Extract “Small” Data Subsets of 1 to 100 Terabytes from 1 to 1000 Petabyte Data Stores
- Survivability of the HENP Global Grid System, with hundreds of such transactions per day (circa 2007), requires that each transaction be completed in a relatively short time.
- Example: Take 800 secs to complete the transaction. Then:

| Transaction Size (TB) | Net Throughput (Gbps) |
|---|---|
| 1 | 10 |
| 10 | 100 |
| 100 | 1000 (Capacity of Fiber Today) |

- Summary: Providing switching of 10 Gbps wavelengths within ~3–5 years, and Terabit switching within 5–8 years, would enable “Petascale Grids with Terabyte transactions”, as required to fully realize the discovery potential of major HENP programs, as well as other data-intensive fields.
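The table follows from throughput = size / time. A minimal sketch reproducing it, assuming decimal terabytes (10^12 bytes) and a link running at full rate with no protocol overhead; the function names are illustrative.

```python
def required_gbps(transaction_tb: float, seconds: float = 800.0) -> float:
    """Throughput needed to move `transaction_tb` terabytes (10^12 bytes) in `seconds`."""
    return transaction_tb * 1e12 * 8 / seconds / 1e9


def transfer_time_s(transaction_tb: float, link_gbps: float) -> float:
    """Time to move a transaction over a link running flat out at `link_gbps`."""
    return transaction_tb * 1e12 * 8 / (link_gbps * 1e9)


if __name__ == "__main__":
    for size_tb in (1, 10, 100):
        print(f"{size_tb:>4} TB in 800 s needs {required_gbps(size_tb):6.0f} Gbps")
    # For contrast: one 10 Gbps wavelength moving a 100 TB subset
    print(f"100 TB over 10 Gbps: {transfer_time_s(100, 10) / 3600:.1f} hours")
```

The contrast line shows why the summary calls for terabit switching: a single 10 Gbps wavelength needs close to a day for a 100 TB subset, far from the 800 second target.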

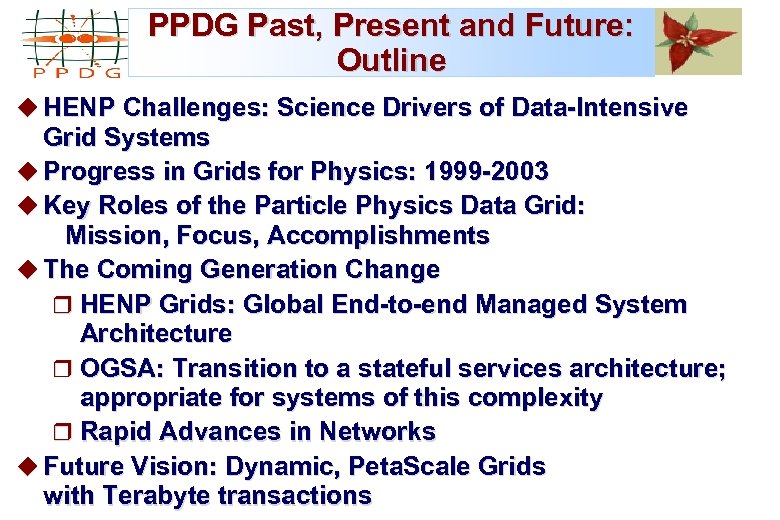

PPDG Past, Present and Future: Outline
- HENP Challenges: Science Drivers of Data-Intensive Grid Systems
- Progress in Grids for Physics: 1999–2003
- Key Roles of the Particle Physics Data Grid: Mission, Focus, Accomplishments
- The Coming Generation Change
  - HENP Grids: Global End-to-end Managed System Architecture
  - OGSA: Transition to a stateful services architecture, appropriate for systems of this complexity
  - Rapid Advances in Networks
- Future Vision: Dynamic, PetaScale Grids with Terabyte transactions

Layered Grid Architecture
- Application
- Collective: “Coordinating multiple resources”: ubiquitous infrastructure services, app-specific distributed services
- Resource: “Sharing single resources”: negotiating access, controlling use
- Connectivity: “Talking to things”: communication (Internet protocols) & security
- Fabric: “Controlling things locally”: access to, & control of, resources
- Internet Protocol Architecture correspondence: Internet Application ↔ Application, Collective, Resource; Transport, Internet ↔ Connectivity; Link ↔ Fabric
“The Anatomy of the Grid: Enabling Scalable Virtual Organizations”, Foster, Kesselman, Tuecke, Intl J. High Performance Computing Applications, 15(3), 2001.
