- Number of slides: 24

Toward a Taxonomy of Communications Security Models
PROOFS 2012, Leuven, Belgium
Mark Brown
RedPhone Security, Saint Paul, MN, USA
mark@redphonesecurity.com

Introduction: Outline
• Introduction
  – What are security requirements?
• Problem
  – What organization of requirements can prevent surprises?
• Solution
  – Our idea “toward” organizing the subject matter
• Examples
  – AES Example
  – Analyzing Axioms and Assumptions
Sep 13, 2012 (C) RedPhone Security 2012 2

Introduction: Our Context and Project
• This research was sponsored by the Joint Tactical Radio System (JTRS)
  – $6 billion R&D spend over a 15-year lifetime
  – Performers include DoD, primes, security contractors, NSA, etc.
• MLS Remote Administration Project
  – Secure the multi-level secure (MLS) operation of the JTRS radio sets
  – Allow for “system high” remote administration
  – Implement “on a chip”:
    • SSL/TLS protocol
    • Suite B cryptography
    • Classic MLS flows
• We identified an absence of security requirements
  – What are “security requirements”?

Introduction: Missing Security Requirements
• General requirements existed
  – Thousands of pages of requirements and specifications
  – Criticized by the US Government Accountability Office (GAO)
  – Included lists of things developers must and must not do
• We found no clear specification of “What must the system do in order to be secure?”
• We took up the task of “security requirements”:
  – Describing the system and its security in plain language
  – Identifying assumptions
  – Documenting “shall nevers”
  – Modeling the system and its attackers
  – Finding proofs
• Deliver not merely our judgment that “it’s secure,” but assurance

Problem: Our Challenge
• What subject matter is included in “security requirements”?
  – How shall it be organized?
  – What system/layer boundaries and granularity? (Littlewood et al. 1993)
  – How should we deal with assumptions?
• What formal model(s) can assure a secure system? Really? Consider:
  – Covert channels (multi-level secure systems)
  – Side channels (cryptographic cores)
  – Untrusted toolchain, fabrication, and distribution (refinement)
  – Classical concerns (bypass, subversion, reverse engineering, assurance of “no backdoors,” etc.)
• Hard cases: the full attack code is not present at evaluation time but emerges operationally (when the system is used in a particularly bad way).

Problem: Meaningful Requirements (1)
• Our first topic is operational requirements. “All of the systems met or came close to meeting most of their documented requirements,” Mike began. Actually, the program office designed the systems to the specifications it was given and was largely successful in doing so. “Yet,” he continued, “in operational testing, these systems demonstrated little useful military capability.”
• In operational test and evaluation, our focus is necessarily on answering the question: “Is a unit’s ability to accomplish its mission improved when equipped with a system?” and much less so on answering the question: “Does this system meet its system specifications?” In this case, the systems under test contributed little to improve mission accomplishment. (continued…)

Problem: Meaningful Requirements (2)
• I attribute this lack of demonstrated military utility in large measure to the established system requirements and specifications not being descriptive of a meaningful and useful operational capability. In my view, the system requirements document did not sufficiently link its largely technical specifications to desired operational outcomes. The requirements and specifications were necessary, but well short of sufficient, to assure military utility.
• This situation is not unique to this program. Program requirements must be operational in nature and clearly linked to a useful and measurable operational capability. Contract specifications must be both necessary and sufficient to assure operational effectiveness in combat.
March 2011 testimony to the House Armed Services Subcommittee on Tactical Air and Land Forces. Emphasis added; names omitted.

Problem: Shall vs. Shall Never
• Security asserts what cannot be (nonexistence)
  – Cannot be done, accomplished, known, …
  – Granted a context in which the secured acts might otherwise occur…
  – Granted the correct functioning of a system
• So, security models typically assume “No Other Changes”
• Security-assertions…
  – Take the form “shall never”
  – Concern survival or basic enterprise functioning
  – Include: secrecy, integrity, availability, authenticity, authorization, audit, and assurance
  – Argue for the priority of some concern over other concerns (Waever)
  – Involve cost-risk “tradeoffs” (Schneier)
• System-assertions take the form “shall”
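The difference between a “shall” and a “shall never” can be made concrete. This is a minimal Python sketch built on a hypothetical toy key store; the class-free `step` model, its five actions, and both properties were invented for illustration and do not come from the paper. A “shall” is a functional property, so one intended run is enough to show it. A “shall never” is a safety property that must hold in every reachable state, so the sketch checks it by exhaustively exploring short action sequences.

```python
from itertools import product

# Hypothetical toy component: a key store. State = (locked, key_loaded).
ACTIONS = ("unlock", "lock", "load_key", "zeroize", "read_key")


def step(state, action):
    """Return (next_state, output) for one action; output is the key or None."""
    locked, key_loaded = state
    if action == "unlock":
        return (False, key_loaded), None
    if action == "lock":
        return (True, key_loaded), None
    if action == "load_key":
        return (locked, True), None
    if action == "zeroize":
        return (locked, False), None
    if action == "read_key":
        # The key is released only when the store is unlocked and loaded
        return state, ("KEY" if (not locked and key_loaded) else None)
    raise ValueError(action)


def shall_release_key_when_unlocked():
    """'Shall' (functional): one intended run produces the key."""
    state = (True, False)
    for a in ("load_key", "unlock"):
        state, _ = step(state, a)
    _, out = step(state, "read_key")
    return out == "KEY"


def shall_never_release_key_when_locked(depth=4):
    """'Shall never' (safety): no sequence of `depth` modeled actions
    ever outputs the key from a locked state."""
    for seq in product(ACTIONS, repeat=depth):
        state = (True, False)
        for a in seq:
            was_locked = state[0]
            state, out = step(state, a)
            if was_locked and out is not None:
                return False
    return True


print(shall_release_key_when_unlocked())      # True
print(shall_never_release_key_when_locked())  # True
```

The exhaustive search covers only the five modeled actions. An attacker who can flip the `locked` bit directly falls outside the model, and that gap is exactly the “No Other Changes” assumption.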

Problem Shall vs. Shall Never • Security asserts what cannot be (nonexistence) – – • So, security models typically assume “No Other Changes” Security-assertions… – – – • Cannot be done, accomplished, known, … Granted a context in which the secured acts might otherwise occur… Granted the correct functioning of a system Take the form “shall never” Concern survival or basic enterprise functioning Include: secrecy, integrity, availability, authenticity, authorization, audit, and assurance Argue for the priority of some concern over other concerns (Waever) Involve cost-risk “tradeoffs” (Schneier) System-assertions take the form “shall” Sep 13, 2012 (C) Red. Phone Security 2012 8

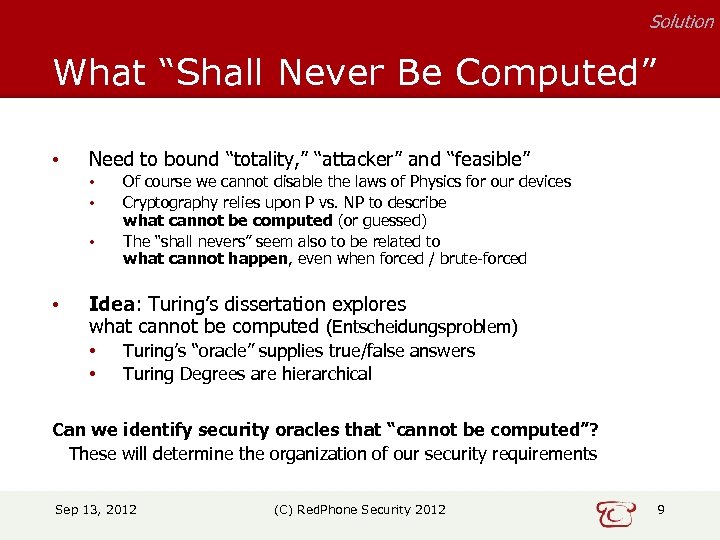

Solution: What “Shall Never Be Computed”
• Need to bound “totality,” “attacker,” and “feasible”
  – Of course, we cannot disable the laws of physics for our devices
  – Cryptography relies upon P vs. NP to describe what cannot be computed (or guessed)
• The “shall nevers” also seem to be related to what cannot happen, even when forced or brute-forced
• Idea: Turing’s work explores what cannot be computed (the Entscheidungsproblem)
  – Turing’s “oracle” (introduced in his dissertation) supplies true/false answers
  – Turing degrees are hierarchical
• Can we identify security oracles that “cannot be computed”? These will determine the organization of our security requirements
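The link between “oracle” and “feasible” can be illustrated with a minimal Python sketch. It assumes a toy setting in which the attacker may only ask a true/false question (“is this the key?”); `make_oracle` and `brute_force` are hypothetical helpers. With a k-bit key, brute force needs up to 2^k queries. A “shall never” of this kind is therefore bounded by feasibility, not by logical impossibility.

```python
import secrets


def make_oracle(key_bits):
    """Return (oracle, secret); the oracle answers only True/False."""
    secret = secrets.randbelow(2 ** key_bits)

    def oracle(guess):
        return guess == secret

    return oracle, secret


def brute_force(oracle, key_bits):
    """Query every candidate in order; return (key, number_of_queries)."""
    for queries, guess in enumerate(range(2 ** key_bits), start=1):
        if oracle(guess):
            return guess, queries
    return None, 2 ** key_bits


oracle, secret = make_oracle(16)
found, queries = brute_force(oracle, 16)
print(found == secret, queries <= 2 ** 16)   # True True
# A 128-bit key would need up to 2**128 queries: "cannot be guessed" in practice.
```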

Solution What “Shall Never Be Computed” • Need to bound “totality, ” “attacker” and “feasible” • • Of course we cannot disable the laws of Physics for our devices Cryptography relies upon P vs. NP to describe what cannot be computed (or guessed) The “shall nevers” seem also to be related to what cannot happen, even when forced / brute-forced Idea: Turing’s dissertation explores what cannot be computed (Entscheidungsproblem) • • Turing’s “oracle” supplies true/false answers Turing Degrees are hierarchical Can we identify security oracles that “cannot be computed”? These will determine the organization of our security requirements Sep 13, 2012 (C) Red. Phone Security 2012 9

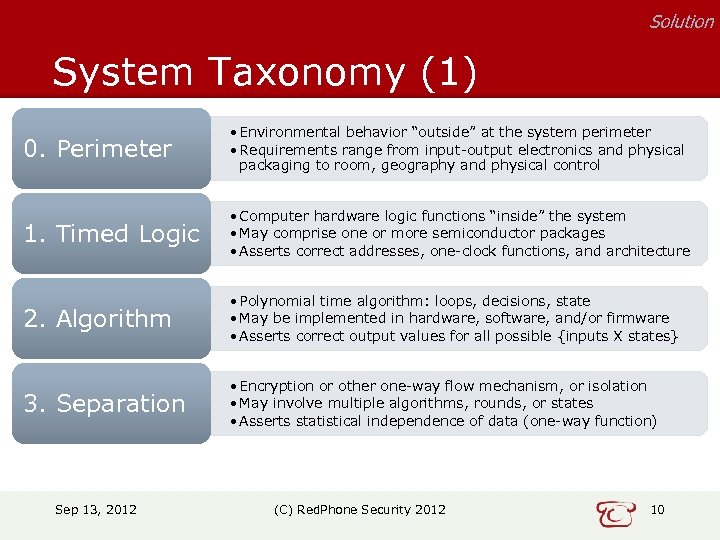

Solution: System Taxonomy (1)
0. Perimeter
  • Environmental behavior “outside,” at the system perimeter
  • Requirements range from input-output electronics and physical packaging to room, geography, and physical control
1. Timed Logic
  • Computer hardware logic functions “inside” the system
  • May comprise one or more semiconductor packages
  • Asserts correct addresses, one-clock functions, and architecture
2. Algorithm
  • Polynomial-time algorithm: loops, decisions, state
  • May be implemented in hardware, software, and/or firmware
  • Asserts correct output values for all possible {inputs × states}
3. Separation
  • Encryption or other one-way flow mechanism, or isolation
  • May involve multiple algorithms, rounds, or states
  • Asserts statistical independence of data (one-way function)
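For a small enough component, the Algorithm (Epoch 2) assertion “correct output values for all possible {inputs × states}” can be checked literally, by enumeration. This is a minimal Python sketch that uses a hypothetical 4-bit saturating counter as the component and compares it against a separate reference specification. The component and its width are assumptions. Realistic components quickly outgrow this approach, and proof must take the place of enumeration.

```python
from itertools import product

WIDTH = 4
MAX = 2 ** WIDTH - 1


def spec(state, inc):
    """Reference specification: add, but saturate at MAX."""
    return min(state + inc, MAX)


def impl(state, inc):
    """Implementation under test: bit-level add with explicit saturation."""
    total = state + inc
    carry_out = total >> WIDTH          # non-zero if the sum overflowed WIDTH bits
    return MAX if carry_out else total & MAX


def check_all(states=range(MAX + 1), inputs=range(MAX + 1)):
    """Return every (state, input) pair where impl disagrees with spec."""
    return [(s, i) for s, i in product(states, inputs) if impl(s, i) != spec(s, i)]


print(check_all())   # [] : the assertion holds over all 16 x 16 pairs
```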

Solution System Taxonomy (1) 0. Perimeter • Environmental behavior “outside” at the system perimeter • Requirements range from input-output electronics and physical packaging to room, geography and physical control 1. Timed Logic • Computer hardware logic functions “inside” the system • May comprise one or more semiconductor packages • Asserts correct addresses, one-clock functions, and architecture 2. Algorithm • Polynomial time algorithm: loops, decisions, state • May be implemented in hardware, software, and/or firmware • Asserts correct output values for all possible {inputs X states} 3. Separation • Encryption or other one-way flow mechanism, or isolation • May involve multiple algorithms, rounds, or states • Asserts statistical independence of data (one-way function) Sep 13, 2012 (C) Red. Phone Security 2012 10

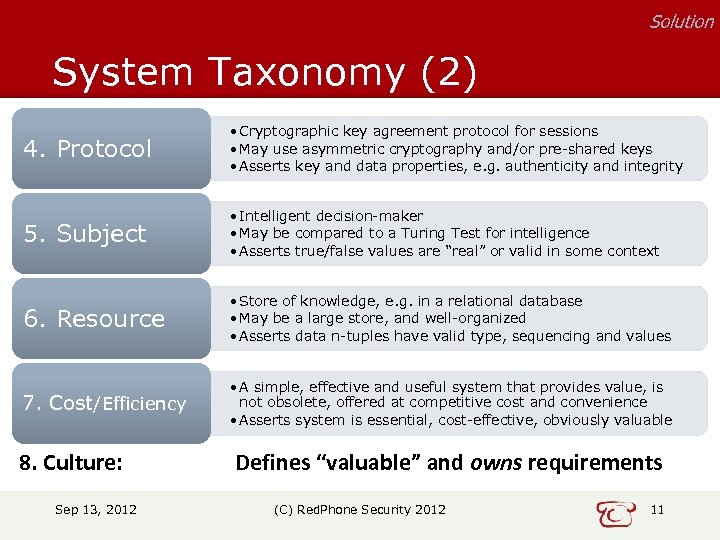

Solution: System Taxonomy (2)
4. Protocol
  • Cryptographic key agreement protocol for sessions
  • May use asymmetric cryptography and/or pre-shared keys
  • Asserts key and data properties, e.g. authenticity and integrity
5. Subject
  • Intelligent decision-maker
  • May be compared to a Turing Test for intelligence
  • Asserts true/false values are “real” or valid in some context
6. Resource
  • Store of knowledge, e.g. in a relational database
  • May be a large store, and well-organized
  • Asserts data n-tuples have valid type, sequencing, and values
7. Cost/Efficiency
  • A simple, effective, and useful system that provides value, is not obsolete, and is offered at competitive cost and convenience
  • Asserts system is essential, cost-effective, obviously valuable
8. Culture: defines “valuable” and owns requirements
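Epoch 6 (Resource) asserts that data n-tuples have valid type, sequencing, and values. This is a minimal Python sketch of such an assertion for a hypothetical audit-log record `(seq, user, clearance)`. The record layout and the set of clearance labels are invented for illustration and are not taken from the paper.

```python
from typing import Iterable, List, Tuple

# Hypothetical record: (sequence number, user id, clearance label)
Record = Tuple[int, str, str]
CLEARANCES = {"UNCLASSIFIED", "SECRET", "TOP SECRET"}


def validate(records: Iterable[Record]) -> List[str]:
    """Return a list of violations; an empty list means every tuple passed."""
    errors = []
    expected_seq = 0
    for idx, rec in enumerate(records):
        # Type: exactly three fields of the expected Python types
        if (len(rec) != 3 or not isinstance(rec[0], int)
                or not isinstance(rec[1], str) or not isinstance(rec[2], str)):
            errors.append(f"{idx}: bad type {rec!r}")
            continue
        seq, user, clearance = rec
        # Sequencing: sequence numbers must be consecutive, starting at 0
        if seq != expected_seq:
            errors.append(f"{idx}: expected seq {expected_seq}, got {seq}")
        expected_seq = seq + 1
        # Values: non-empty user id and a known clearance label
        if not user or clearance not in CLEARANCES:
            errors.append(f"{idx}: bad value {rec!r}")
    return errors


print(validate([(0, "alice", "SECRET"), (1, "bob", "TOP SECRET")]))  # []
print(validate([(0, "alice", "SECRET"), (2, "", "PUBLIC")]))
```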

Solution System Taxonomy (2) 4. Protocol • Cryptographic key agreement protocol for sessions • May use asymmetric cryptography and/or pre-shared keys • Asserts key and data properties, e. g. authenticity and integrity 5. Subject • Intelligent decision-maker • May be compared to a Turing Test for intelligence • Asserts true/false values are “real” or valid in some context 6. Resource • Store of knowledge, e. g. in a relational database • May be a large store, and well-organized • Asserts data n-tuples have valid type, sequencing and values 7. Cost/Efficiency • A simple, effective and useful system that provides value, is not obsolete, offered at competitive cost and convenience • Asserts system is essential, cost-effective, obviously valuable 8. Culture: Sep 13, 2012 Defines “valuable” and owns requirements (C) Red. Phone Security 2012 11

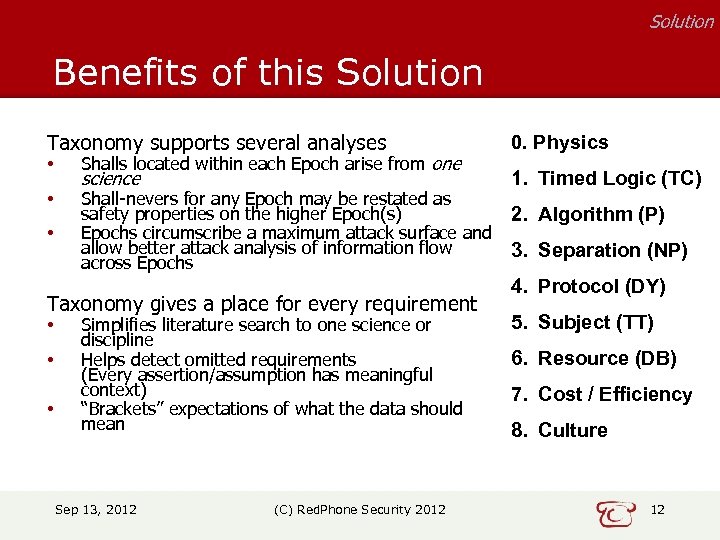

Solution: Benefits of this Solution
• Taxonomy supports several analyses
  – Shalls located within each Epoch arise from one science
  – Shall-nevers for any Epoch may be restated as safety properties on the higher Epoch(s)
  – Epochs circumscribe a maximum attack surface and allow better attack analysis of information flow across Epochs
• Taxonomy gives a place for every requirement
  – Simplifies literature search to one science or discipline
  – Helps detect omitted requirements (every assertion/assumption has meaningful context)
  – “Brackets” expectations of what the data should mean
Epochs: 0. Physics; 1. Timed Logic (TC); 2. Algorithm (P); 3. Separation (NP); 4. Protocol (DY); 5. Subject (TT); 6. Resource (DB); 7. Cost / Efficiency; 8. Culture
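The nine Epochs, together with the rule that an Epoch's shall-nevers may be restated as safety properties on higher Epochs, can be encoded directly. This is a minimal Python sketch. The `Epoch` enum mirrors the slide's labels. `higher_epochs` and `restate` are hypothetical helpers, and the `always not (...)` text is only a placeholder for whatever temporal-logic form a real model would use.

```python
from enum import IntEnum


class Epoch(IntEnum):
    PHYSICS = 0
    TIMED_LOGIC = 1      # TC
    ALGORITHM = 2        # P
    SEPARATION = 3       # NP
    PROTOCOL = 4         # DY
    SUBJECT = 5          # TT
    RESOURCE = 6         # DB
    COST_EFFICIENCY = 7
    CULTURE = 8


def higher_epochs(e: Epoch):
    """Epochs strictly above e, where e's shall-nevers may be restated."""
    return [x for x in Epoch if x > e]


def restate(shall_never: str, e: Epoch):
    """Pair a shall-never from Epoch e with each higher Epoch as a safety property."""
    return [(x.name, f"always not ({shall_never})") for x in higher_epochs(e)]


for epoch, prop in restate("key leaves the crypto core in plaintext", Epoch.SEPARATION):
    print(epoch, prop)
```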

Solution Benefits of this Solution Taxonomy supports several analyses • Shalls located within each Epoch arise from one • Shall-nevers for any Epoch may be restated as safety properties on the higher Epoch(s) Epochs circumscribe a maximum attack surface and allow better attack analysis of information flow across Epochs • science Taxonomy gives a place for every requirement • • • Simplifies literature search to one science or discipline Helps detect omitted requirements (Every assertion/assumption has meaningful context) “Brackets” expectations of what the data should mean Sep 13, 2012 (C) Red. Phone Security 2012 0. Physics 1. Timed Logic (TC) 2. Algorithm (P) 3. Separation (NP) 4. Protocol (DY) 5. Subject (TT) 6. Resource (DB) 7. Cost / Efficiency 8. Culture 12

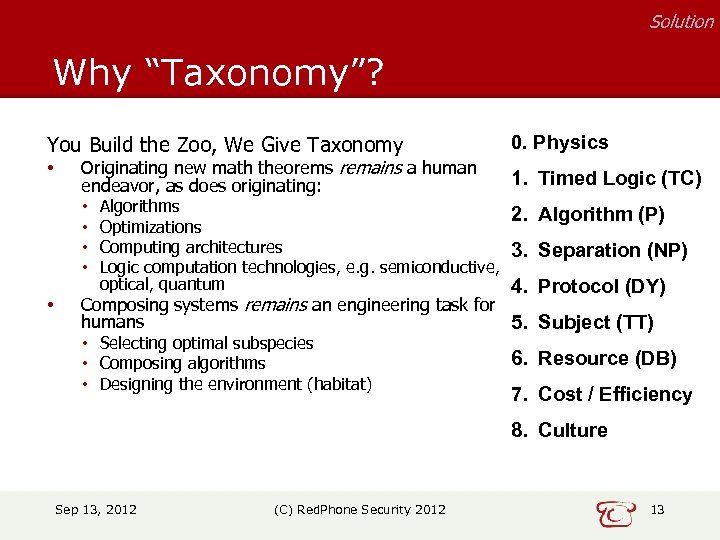

Solution: Why “Taxonomy”? You Build the Zoo, We Give Taxonomy
• Originating new math theorems remains a human endeavor, as does originating:
  – Algorithms
  – Optimizations
  – Computing architectures
  – Logic computation technologies, e.g. semiconductive, optical, quantum
• Composing systems remains an engineering task for humans
  – Selecting optimal subspecies
  – Composing algorithms
  – Designing the environment (habitat)

Solution Why “Taxonomy”? You Build the Zoo, We Give Taxonomy • Originating new math theorems remains a human 1. Timed Logic (TC) endeavor, as does originating: • Algorithms 2. Algorithm (P) • Optimizations • Computing architectures 3. Separation (NP) • Logic computation technologies, e. g. semiconductive, optical, quantum • 0. Physics 4. Protocol (DY) Composing systems remains an engineering task for humans 5. Subject (TT) • Selecting optimal subspecies 6. Resource (DB) • Composing algorithms • Designing the environment (habitat) 7. Cost / Efficiency 8. Culture Sep 13, 2012 (C) Red. Phone Security 2012 13

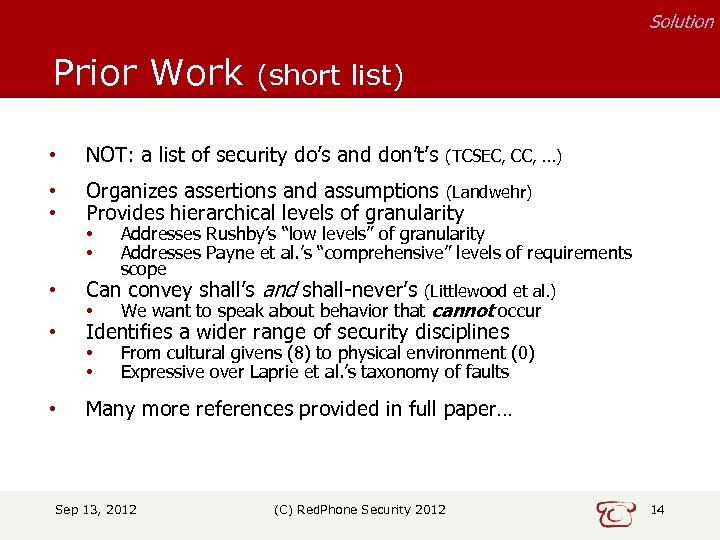

Solution: Prior Work (short list)
• NOT a list of security dos and don’ts (TCSEC, CC, …)
• Organizes assertions and assumptions (Landwehr)
• Provides hierarchical levels of granularity
  – Addresses Rushby’s “low levels” of granularity
  – Addresses Payne et al.’s “comprehensive” levels of requirements scope
• Can convey shalls and shall-nevers (Littlewood et al.)
  – We want to speak about behavior that cannot occur
  – More expressive than Laprie et al.’s taxonomy of faults
• Identifies a wider range of security disciplines
  – From cultural givens (8) to physical environment (0)
• Many more references are provided in the full paper…

Solution Prior Work (short list) • NOT: a list of security do’s and don’t’s (TCSEC, CC, …) • • Organizes assertions and assumptions (Landwehr) Provides hierarchical levels of granularity • Can convey shall’s and shall-never’s (Littlewood et al. ) • Identifies a wider range of security disciplines • Many more references provided in full paper… • • Addresses Rushby’s “low levels” of granularity Addresses Payne et al. ’s “comprehensive” levels of requirements scope • We want to speak about behavior that cannot occur • • From cultural givens (8) to physical environment (0) Expressive over Laprie et al. ’s taxonomy of faults Sep 13, 2012 (C) Red. Phone Security 2012 14

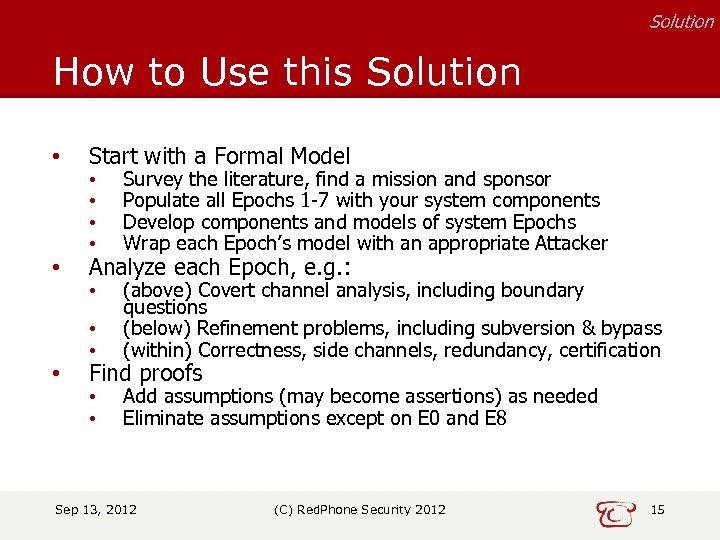

Solution: How to Use this Solution
• Start with a formal model
  – Survey the literature; find a mission and sponsor
  – Populate all Epochs 1–7 with your system components
  – Develop components and models of system Epochs
• Analyze each Epoch, e.g.:
  – Wrap each Epoch’s model with an appropriate Attacker
    • (above) Covert channel analysis, including boundary questions
    • (below) Refinement problems, including subversion and bypass
    • (within) Correctness, side channels, redundancy, certification
  – Add assumptions (which may become assertions) as needed
  – Eliminate assumptions except on E0 and E8
• Find proofs
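The assumption-handling steps above amount to bookkeeping that can be tracked mechanically. This is a minimal Python sketch built around a hypothetical `SecurityModel` class. It records assumptions and assertions per Epoch. Discharging an assumption turns it into an assertion, which then becomes a proof obligation. The method counts as done for a model only when no open assumptions remain outside E0 and E8. The example assumption texts are invented.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Epochs where the method allows assumptions to remain (E0 and E8)
ALLOWED_ASSUMPTION_EPOCHS = {0, 8}


@dataclass
class SecurityModel:
    """Hypothetical bookkeeping: assumptions vs. assertions per Epoch."""
    assumptions: Dict[int, List[str]] = field(default_factory=dict)
    assertions: Dict[int, List[str]] = field(default_factory=dict)

    def assume(self, epoch: int, text: str) -> None:
        self.assumptions.setdefault(epoch, []).append(text)

    def discharge(self, epoch: int, text: str) -> None:
        """Turn an assumption into an assertion (a proof obligation)."""
        self.assumptions[epoch].remove(text)
        self.assertions.setdefault(epoch, []).append(text)

    def remaining(self) -> Dict[int, List[str]]:
        """Open assumptions outside E0 and E8; the goal is an empty dict."""
        return {e: a for e, a in self.assumptions.items()
                if a and e not in ALLOWED_ASSUMPTION_EPOCHS}


m = SecurityModel()
m.assume(0, "device is not physically captured")
m.assume(3, "AES key has full entropy")
m.assume(4, "peer is authenticated")
print(m.remaining())   # {3: ['AES key has full entropy'], 4: ['peer is authenticated']}
m.discharge(3, "AES key has full entropy")
m.discharge(4, "peer is authenticated")
print(m.remaining())   # {}
```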

Solution How to Use this Solution • Start with a Formal Model • Analyze each Epoch, e. g. : • • • Survey the literature, find a mission and sponsor Populate all Epochs 1 -7 with your system components Develop components and models of system Epochs Wrap each Epoch’s model with an appropriate Attacker • • • (above) Covert channel analysis, including boundary questions (below) Refinement problems, including subversion & bypass (within) Correctness, side channels, redundancy, certification • • Add assumptions (may become assertions) as needed Eliminate assumptions except on E 0 and E 8 Find proofs Sep 13, 2012 (C) Red. Phone Security 2012 15

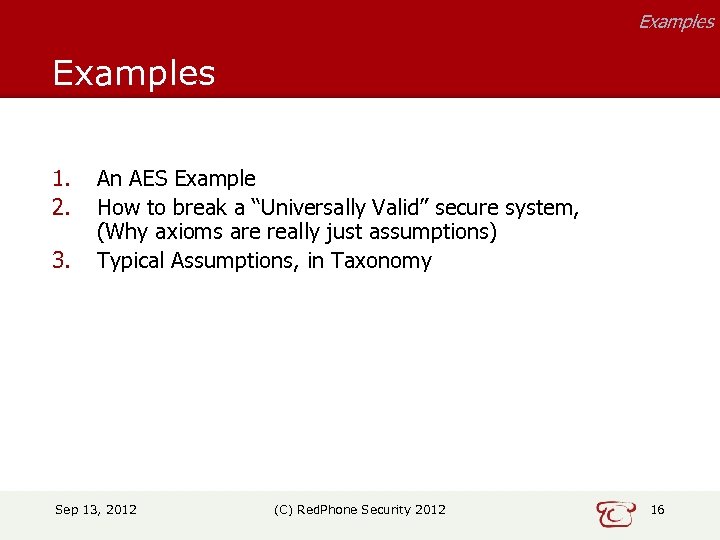

Examples
1. An AES Example
2. How to break a “Universally Valid” secure system (why axioms are really just assumptions)
3. Typical Assumptions, in Taxonomy

Examples 1. 2. 3. An AES Example How to break a “Universally Valid” secure system, (Why axioms are really just assumptions) Typical Assumptions, in Taxonomy Sep 13, 2012 (C) Red. Phone Security 2012 16

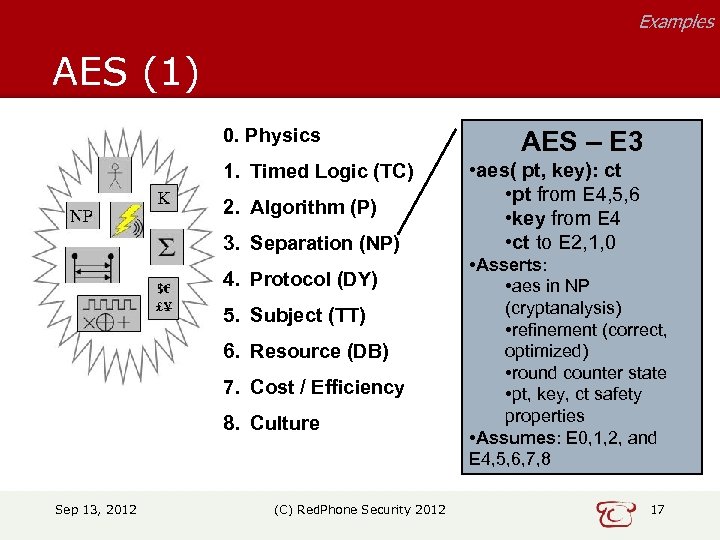

Examples: AES (1)
AES – E3
• aes(pt, key): ct
  – pt from E4, 5, 6
  – key from E4
  – ct to E2, 1, 0
• Asserts:
  – aes in NP (cryptanalysis)
  – refinement (correct, optimized)
  – round counter state
  – pt, key, ct safety properties
• Assumes: E0, 1, 2, and E4, 5, 6, 7, 8
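The AES placement above can be written down as a small machine-checkable record. This is a minimal Python sketch built on a hypothetical `Component` class. It encodes which Epochs each port may exchange data with, and it derives the assumed Epochs as every Epoch other than the component's own. It does not implement AES. Only the taxonomy bookkeeping from the slide is represented.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet

ALL_EPOCHS = frozenset(range(9))


@dataclass
class Component:
    """Hypothetical record of a component's placement in the taxonomy."""
    name: str
    epoch: int
    inputs: Dict[str, FrozenSet[int]]   # input port -> Epochs it may come from
    outputs: Dict[str, FrozenSet[int]]  # output port -> Epochs it may flow to

    def assumed_epochs(self) -> FrozenSet[int]:
        """Everything outside the component's own Epoch is assumed, not asserted."""
        return ALL_EPOCHS - {self.epoch}

    def flow_allowed(self, port: str, epoch: int) -> bool:
        allowed = self.inputs.get(port) or self.outputs.get(port) or frozenset()
        return epoch in allowed


aes = Component(
    name="aes(pt, key): ct",
    epoch=3,
    inputs={"pt": frozenset({4, 5, 6}), "key": frozenset({4})},
    outputs={"ct": frozenset({2, 1, 0})},
)

print(sorted(aes.assumed_epochs()))   # [0, 1, 2, 4, 5, 6, 7, 8]
print(aes.flow_allowed("key", 6))     # False: keys may only arrive from E4
```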

Examples AES (1) 0. Physics 1. Timed Logic (TC) 2. Algorithm (P) 3. Separation (NP) 4. Protocol (DY) 5. Subject (TT) 6. Resource (DB) 7. Cost / Efficiency 8. Culture Sep 13, 2012 (C) Red. Phone Security 2012 AES – E 3 • aes( pt, key): ct • pt from E 4, 5, 6 • key from E 4 • ct to E 2, 1, 0 • Asserts: • aes in NP (cryptanalysis) • refinement (correct, optimized) • round counter state • pt, key, ct safety properties • Assumes: E 0, 1, 2, and E 4, 5, 6, 7, 8 17

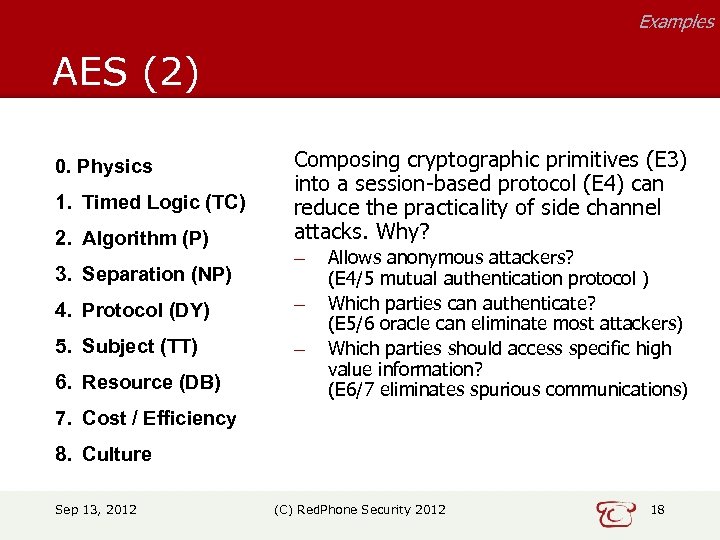

Examples: AES (2)
Composing cryptographic primitives (E3) into a session-based protocol (E4) can reduce the practicality of side channel attacks. Why?
  – Allows anonymous attackers? (E4/5: mutual authentication protocol)
  – Which parties can authenticate? (E5/6: oracle can eliminate most attackers)
  – Which parties should access specific high-value information? (E6/7: eliminates spurious communications)
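One reading of this argument is that an attacker who cannot authenticate never gets to exercise the E3 primitive at all. Without access to the primitive, the attacker cannot collect the traces a side-channel attack relies on. This is a minimal Python sketch of that reading. It assumes a pre-shared key and an HMAC-SHA256 challenge-response built from the standard-library `hmac`, `hashlib`, and `secrets` modules. The `Gate` class, `client_response`, and the XOR stand-in for the primitive are all hypothetical. Only one direction of a mutual authentication is shown.

```python
import hashlib
import hmac
import secrets

PSK = secrets.token_bytes(32)   # hypothetical pre-shared key (E4)


def encrypt_primitive(data: bytes) -> bytes:
    """Stand-in for the E3 primitive (e.g. AES); NOT real encryption."""
    return bytes(b ^ 0x5A for b in data)


class Gate:
    """Hypothetical E4 gate: only authenticated peers reach the E3 primitive."""

    def __init__(self, psk: bytes):
        self._psk = psk
        self._nonce = None
        self.primitive_calls = 0   # how often E3 was exercised (trace count)

    def challenge(self) -> bytes:
        self._nonce = secrets.token_bytes(16)
        return self._nonce

    def request(self, response: bytes, data: bytes):
        nonce, self._nonce = self._nonce, None   # each challenge answers once
        if nonce is None:
            return None
        expected = hmac.new(self._psk, nonce, hashlib.sha256).digest()
        if not hmac.compare_digest(expected, response):
            return None                          # unauthenticated: E3 untouched
        self.primitive_calls += 1
        return encrypt_primitive(data)


def client_response(psk: bytes, nonce: bytes) -> bytes:
    return hmac.new(psk, nonce, hashlib.sha256).digest()


gate = Gate(PSK)
for _ in range(1000):                            # anonymous attacker guessing
    gate.challenge()
    gate.request(secrets.token_bytes(32), b"chosen plaintext")
print(gate.primitive_calls)                      # 0

n = gate.challenge()                             # legitimate peer with the PSK
print(gate.request(client_response(PSK, n), b"hi") is not None)   # True
```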

Examples AES (2) 0. Physics 1. Timed Logic (TC) 2. Algorithm (P) 3. Separation (NP) Composing cryptographic primitives (E 3) into a session-based protocol (E 4) can reduce the practicality of side channel attacks. Why? – 4. Protocol (DY) – 5. Subject (TT) – 6. Resource (DB) Allows anonymous attackers? (E 4/5 mutual authentication protocol ) Which parties can authenticate? (E 5/6 oracle can eliminate most attackers) Which parties should access specific high value information? (E 6/7 eliminates spurious communications) 7. Cost / Efficiency 8. Culture Sep 13, 2012 (C) Red. Phone Security 2012 18

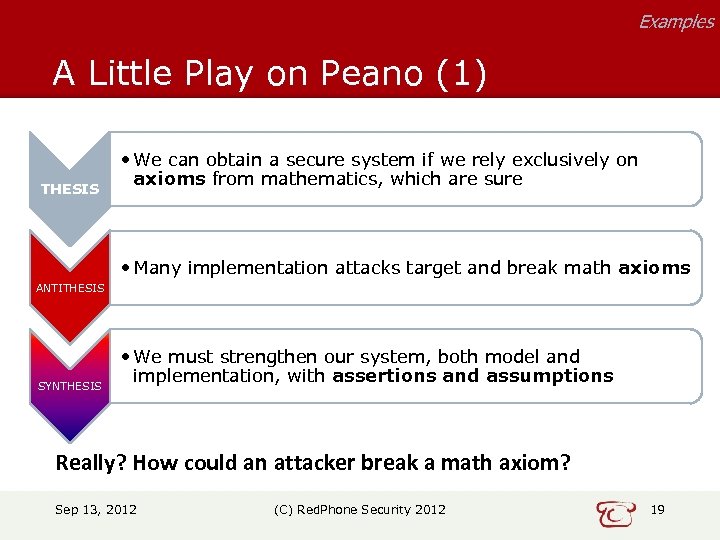

Examples: A Little Play on Peano (1)
THESIS
• We can obtain a secure system if we rely exclusively on axioms from mathematics, which are sure
ANTITHESIS
• Many implementation attacks target and break math axioms
SYNTHESIS
• We must strengthen our system, both model and implementation, with assertions and assumptions
Really? How could an attacker break a math axiom?

Examples A Little Play on Peano (1) THESIS • We can obtain a secure system if we rely exclusively on axioms from mathematics, which are sure • Many implementation attacks target and break math axioms ANTITHESIS SYNTHESIS • We must strengthen our system, both model and implementation, with assertions and assumptions Really? How could an attacker break a math axiom? Sep 13, 2012 (C) Red. Phone Security 2012 19

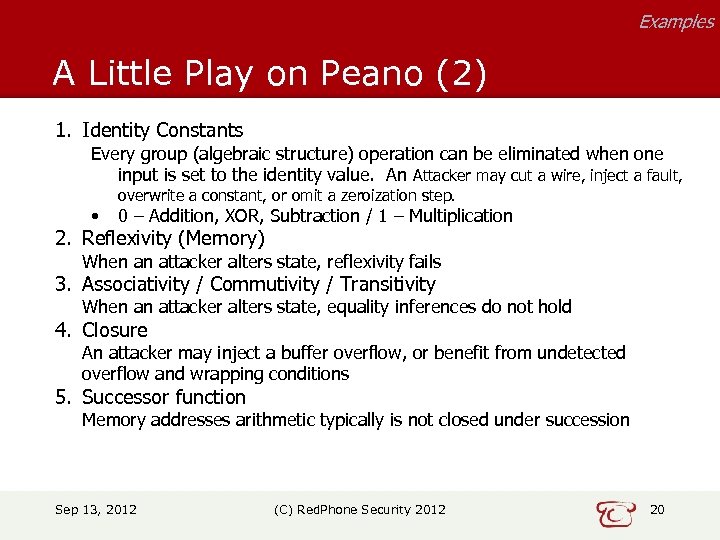

Examples: A Little Play on Peano (2)
1. Identity constants
  • Every group (algebraic structure) operation can be eliminated when one input is set to the identity value: the output then simply equals the other input
  • An attacker may cut a wire, inject a fault, overwrite a constant, or omit a zeroization step
  • 0 for addition, XOR, subtraction; 1 for multiplication
2. Reflexivity (memory)
  • When an attacker alters state, reflexivity fails
3. Associativity / commutativity / transitivity
  • When an attacker alters state, equality inferences do not hold
4. Closure
  • An attacker may inject a buffer overflow, or benefit from undetected overflow and wrapping conditions
5. Successor function
  • Memory address arithmetic typically is not closed under succession
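Two of these breaks fit in a few lines. This is a minimal Python sketch that uses a toy XOR “cipher” as a stand-in for a real primitive (an illustrative assumption, not a real cipher). A fault that forces the key to 0, the XOR identity, eliminates the operation, so the output equals the plaintext. Fixed-width arithmetic also breaks succession. In Peano arithmetic, 0 is not the successor of any natural number. In an 8-bit register, the successor of 255 wraps to 0.

```python
MASK8 = 0xFF


def xor_encrypt(pt: bytes, key: bytes) -> bytes:
    """Toy XOR 'cipher' (illustration only): ct[i] = pt[i] XOR key[i]."""
    return bytes(p ^ k for p, k in zip(pt, key))


def successor8(n: int) -> int:
    """Successor in an 8-bit register: wraps instead of growing."""
    return (n + 1) & MASK8


pt = b"ATTACK AT DAWN"
key = bytes(range(1, len(pt) + 1))      # every key byte is non-zero

# 1. Identity constant: a fault that zeroes the key eliminates the XOR
faulted_key = bytes(len(key))
print(xor_encrypt(pt, faulted_key) == pt)   # True: "ciphertext" is the plaintext

# 5. Successor: 0 becomes the successor of 255, contradicting Peano
print(successor8(255))                      # 0
```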

Examples A Little Play on Peano (2) 1. Identity Constants Every group (algebraic structure) operation can be eliminated when one input is set to the identity value. An Attacker may cut a wire, inject a fault, • overwrite a constant, or omit a zeroization step. 0 – Addition, XOR, Subtraction / 1 – Multiplication 2. Reflexivity (Memory) When an attacker alters state, reflexivity fails 3. Associativity / Commutivity / Transitivity When an attacker alters state, equality inferences do not hold 4. Closure An attacker may inject a buffer overflow, or benefit from undetected overflow and wrapping conditions 5. Successor function Memory addresses arithmetic typically is not closed under succession Sep 13, 2012 (C) Red. Phone Security 2012 20

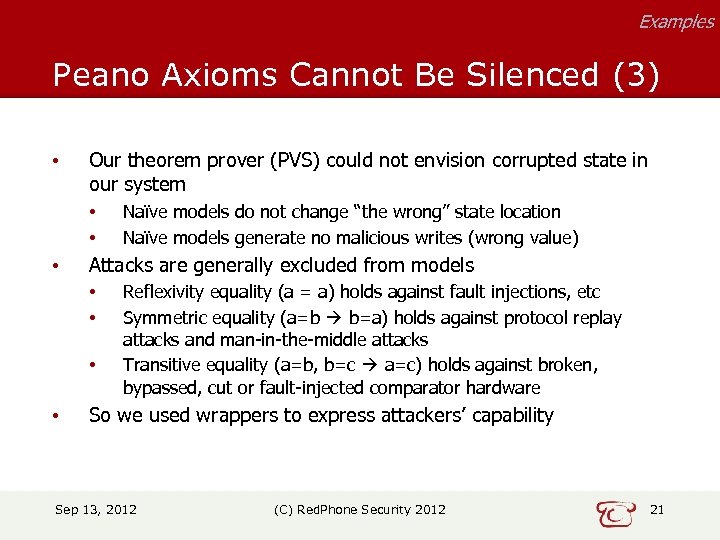

Examples: Peano Axioms Cannot Be Silenced (3)
• Our theorem prover (PVS) could not envision corrupted state in our system
  – Naïve models do not change “the wrong” state location
  – Naïve models generate no malicious writes (wrong value)
• Attacks are generally excluded from models; in the model:
  – Reflexive equality (a = a) still holds against fault injections, etc.
  – Symmetric equality (a = b ⇒ b = a) still holds against protocol replay attacks and man-in-the-middle attacks
  – Transitive equality (a = b, b = c ⇒ a = c) still holds against broken, bypassed, cut, or fault-injected comparator hardware
• So we used wrappers to express attackers’ capability
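The wrappers described on this slide were PVS constructs. What follows is only a loose Python analogue of the idea, not the paper's construction. It assumes a hypothetical naive `Memory` model in which a read always returns the last write, and a hypothetical `AttackerWrapper` with the same interface that may overwrite one location before each read. The same reflexivity check passes against the naive model and fails once the attacker's capability is modeled.

```python
from typing import Dict, Optional, Tuple


class Memory:
    """Naive model: a read returns exactly what was last written."""

    def __init__(self):
        self.cells: Dict[int, int] = {}

    def write(self, addr: int, value: int) -> None:
        self.cells[addr] = value

    def read(self, addr: int) -> int:
        return self.cells.get(addr, 0)


class AttackerWrapper(Memory):
    """Same interface, plus an attacker who may alter one cell before each read."""

    def __init__(self, fault: Optional[Tuple[int, int]] = None):
        super().__init__()
        self.fault = fault  # (address, injected value), or None for no attack

    def read(self, addr: int) -> int:
        if self.fault is not None:
            bad_addr, bad_value = self.fault
            self.cells[bad_addr] = bad_value   # fault injection / malicious write
        return super().read(addr)


def reflexive(mem: Memory, addr: int, value: int) -> bool:
    """Check that the value written is the value read back."""
    mem.write(addr, value)
    return mem.read(addr) == value


print(reflexive(Memory(), 0x10, 42))                          # True
print(reflexive(AttackerWrapper(fault=(0x10, 0)), 0x10, 42))  # False
```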

Examples Peano Axioms Cannot Be Silenced (3) • Our theorem prover (PVS) could not envision corrupted state in our system • • • Attacks are generally excluded from models • • Naïve models do not change “the wrong” state location Naïve models generate no malicious writes (wrong value) Reflexivity equality (a = a) holds against fault injections, etc Symmetric equality (a=b b=a) holds against protocol replay attacks and man-in-the-middle attacks Transitive equality (a=b, b=c a=c) holds against broken, bypassed, cut or fault-injected comparator hardware So we used wrappers to express attackers’ capability Sep 13, 2012 (C) Red. Phone Security 2012 21

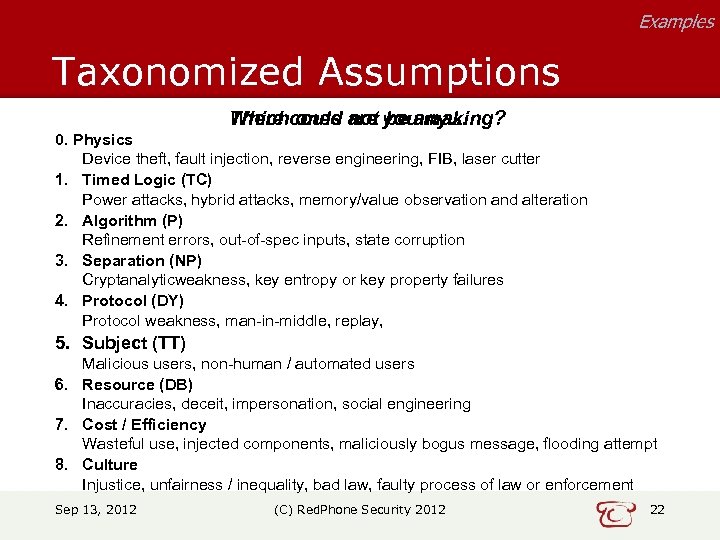

Examples: Taxonomized Assumptions
Which ones are you making? (There could not be any…)
0. Physics: device theft, fault injection, reverse engineering, FIB, laser cutter
1. Timed Logic (TC): power attacks, hybrid attacks, memory/value observation and alteration
2. Algorithm (P): refinement errors, out-of-spec inputs, state corruption
3. Separation (NP): cryptanalytic weakness, key entropy or key property failures
4. Protocol (DY): protocol weakness, man-in-the-middle, replay
5. Subject (TT): malicious users, non-human / automated users
6. Resource (DB): inaccuracies, deceit, impersonation, social engineering
7. Cost / Efficiency: wasteful use, injected components, maliciously bogus messages, flooding attempts
8. Culture: injustice, unfairness / inequality, bad law, faulty process of law or enforcement

Summary
• Requirements can be operationalized either as assumptions or as assertions
  – Attackers break assumptions
  – Engineers make assertions
    • for a system
    • within an Epoch
• We must organize requirements to discover our hidden assumptions (Husserl’s epoché)
• This work proposes a taxonomy for modeling secure communications systems

Toward a Taxonomy of Communications Security Models
THANK YOU!
Mark Brown
RedPhone Security, Saint Paul, MN, USA
mark@redphonesecurity.com