
- Number of slides: 90

Topics in BMI: Grid Computing (CSE 300)
Grid Computing and its Applications in the Biomedical Informatics Domain
May 2008
Jay Coppola, Database Architect
Information Technologies Department, The University of Connecticut Health Center
263 Farmington Ave., MC-5210, Farmington, CT 06030
jcoppola@uchc.edu, (860) 679-1682

What is a Computer Grid?
- A computer grid is a grouping of computer resources (CPU, disk, memory, peripherals, etc.) for use as a single, albeit large and powerful, virtual computer.
- “Distributed computing across virtualized resources” [1].
- “Coordinates resources that are not subject to centralized control… using standard, open, general-purpose interfaces and protocols… to deliver nontrivial quality of service” [3].

What is a Computer Grid?
- The basic premise is to harness as many of the grid's unused CPU cycles (and other grid resources) as possible so that a program executes more quickly.
  - Years and months become days and hours!
- Application requirements for grid usage:
  - The application must be capable of executing remotely on the distributed grid architecture.
  - The application must be divisible into smaller “jobs” to take advantage of parallel processing.
  - Required data must be available without undue latency. This may require replicating data sets across the grid.

Why Grid?
- The term “grid” refers to the electric power grid.
  - Virtually unlimited power.
  - The customer is abstracted from the type and location of the power source.
    - Nuclear, coal, solar, wind…
    - Local power plant or remote generation facility: Millstone 2 or 3, or Niagara Falls.
    - The customer doesn't know and doesn't care!
  - Pays an hourly usage rate.
    - $0.16/kilowatt-hour for electricity versus $1.00/CPU-hour for computing.
  - Plug ‘n Play: grid computing is not quite there yet.

Grid Topologies
- Grids come in all sizes but are typically grouped into four basic configurations that span physical location as well as geopolitical boundaries.
  - Cluster: similar H/W and S/W on a local LAN.
  - Intragrid: dissimilar H/W and S/W on a local LAN (departments in the same company share resources).
  - Extragrid: two or more intragrids spanning multiple LANs, typically within the same geopolitical environment (corporate-wide).
  - Intergrid: a worldwide combination of multiple extragrids.
    - Possibly dedicated H/W and standalone mainframe and supercomputer systems.
    - Spans corporations as well as countries.
    - Internet or private network backbone.

Computer Grid Topologies (Intergrid) (figure with elements labeled Cluster, Intragrid, and Extragrid)

Computer Grid Types
- Computer grids are divided into three types:
  - Computational (CPU) grid
    - The most common (and most mature) of all grids.
    - A logical extension of distributed computing.
    - The shared resource is CPU cycles.
  - Data grid
    - Focuses on data storage capacity as the main shared resource.
    - Manages massive data sets ranging in size from megabytes (10^6 bytes) to petabytes (10^15 bytes).
  - Network grid
    - Focuses on the communication aspects of the available resources.
    - Provides fault-tolerant, high-performance communication services.
  - Each grid type requires some aspect of the others to be truly functional.

Grid Benefits and Issues
- Exploit underutilized resources.
  - Business desktop PCs are only 5% utilized [2].
  - 2–3 GHz dual/quad-core CPUs, 1+ GB of memory, 0.5 to 1 TB of disk, Gigabit Ethernet.
  - Servers are also underutilized, with even more performance and resources.
  - Performance and capacity are continually growing.
- This unused computing power can be exploited by a computer grid architecture.
  - More efficient use of underutilized H/W.
  - Create a supercomputer for the cost of the software!
- May require an application rewrite to produce a “Grid Ready” app.
- A remote grid “node” (computer) must meet any special H/W, S/W, or resource requirements of the executing app.

Benefits and Issues – Parallel CPU Capacity
- A computer grid offers the potential for massive parallel processing.
- To truly exploit a grid, the application must be subdivided into multiple sub-jobs for parallel processing.
  - This is not practical or workable for many applications.
  - Currently no practical tool exists that can transform an arbitrary application into sub-jobs that take advantage of parallel processing.
- Applications that can be subdivided will experience huge performance gains!
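The subdivide-then-combine pattern described above can be sketched in a few lines of Python. This is a minimal local illustration, not grid middleware: a thread pool stands in for remote grid nodes, and `split_into_subjobs`, `run_subjob`, and `run_on_grid` are hypothetical names. On a real grid, each chunk would be shipped to a different node by the scheduler.

```python
from concurrent.futures import ThreadPoolExecutor

# Illustrative sketch only: function names are hypothetical, and threads
# stand in for the remote grid nodes that would run each sub-job.


def split_into_subjobs(items, n_subjobs):
    """Partition a list of work items into at most n_subjobs contiguous chunks."""
    if not items or n_subjobs < 1:
        return []
    size = -(-len(items) // n_subjobs)  # ceiling division
    return [items[i:i + size] for i in range(0, len(items), size)]


def run_subjob(chunk):
    """Hypothetical unit of work: sum the squares of the values in one chunk."""
    return sum(x * x for x in chunk)


def run_on_grid(items, n_subjobs=4):
    """Scatter chunks to parallel workers, then gather and combine the partial results."""
    chunks = split_into_subjobs(items, n_subjobs)
    with ThreadPoolExecutor(max_workers=max(1, len(chunks))) as pool:
        partials = list(pool.map(run_subjob, chunks))
    return sum(partials)


if __name__ == "__main__":
    data = list(range(1000))
    print(run_on_grid(data))
```

The parallel result equals the sequential one only because the partial results combine by simple addition; applications without such a combining step are the ones the slide calls impractical to subdivide.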

Benefits and Issues – Virtual Resources
- Virtualization of resources
  - The fundamental point of the grid.
    - Physical characteristics are abstracted.
    - Underlying H/W and S/W are transparent to the grid user.
    - The user “sees” one large and powerful computer system.
    - The user can focus on the task, not the computer system.

Benefits and Issues – Access to Additional Resources
- Each computer (node) of the grid adds its resources to the entire grid.
  - CPU, memory, disk, and network.
  - Software licenses.
  - Specialized peripherals:
    - Remotely controlled electron microscopes.
    - Sensors.
    - May require a reservation system to guarantee availability.

Benefits and Issues – Resource Balancing and Reliability
- The grid system maintains metadata about resources:
  - Availability of each node.
  - Available resources on a particular node.
  - Average throughput/performance.
  - Failure detection/long-running jobs.
- The system directs a sub-job to an available node that can deliver the required performance.
  - If a node is busy, the sub-job is redirected to a different node.
- The system can detect failed nodes and sub-jobs, and can:
  - Restart a job on the same or a different node.
  - Resubmit a job to a different node.
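A minimal sketch of how node metadata and failure detection might combine, assuming each node advertises its free CPUs and that a failed execution surfaces as an exception. `Node`, `NodeFailure`, `pick_node`, and `submit_with_retry` are hypothetical names, and `execute` stands in for whatever actually launches the sub-job. This version always resubmits a failed sub-job to a different node; restarting on the same node would simply skip the exclusion bookkeeping.

```python
from dataclasses import dataclass

# Illustrative sketch: all names are hypothetical, not a real middleware API.


@dataclass
class Node:
    name: str
    free_cpus: int
    available: bool = True


class NodeFailure(Exception):
    """Raised by an executor when a node dies or a sub-job times out."""


def pick_node(nodes, cpus_needed, exclude=frozenset()):
    """Choose the available node with the most free CPUs that meets the requirement."""
    candidates = [
        n for n in nodes
        if n.available and n.free_cpus >= cpus_needed and n.name not in exclude
    ]
    return max(candidates, key=lambda n: n.free_cpus, default=None)


def submit_with_retry(job, nodes, cpus_needed, execute, max_attempts=3):
    """Run a sub-job, resubmitting it to a different node whenever a node fails."""
    tried = set()
    for _ in range(max_attempts):
        node = pick_node(nodes, cpus_needed, exclude=tried)
        if node is None:
            break  # no suitable node left
        try:
            return node.name, execute(job, node)
        except NodeFailure:
            node.available = False  # record the failure in the node metadata
            tried.add(node.name)
    raise RuntimeError(f"sub-job {job!r} could not be completed")
```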

Benefits and Issues – Management and Virtual Organizations
- The grid system can manage priorities among different projects and jobs.
  - Requires cooperation among grid users.
- Virtual Organizations (VOs)
  - A political entity.
  - Formed by users, groups, teams, companies, countries…
  - Form a collaboration among users to achieve a common goal.
  - Defines/provides protocols and mechanisms for access to resources.
  - Can be standalone or a hierarchy of regional, national, or international VOs.

Benefits and Issues – Security
- Security is an important issue, made even more important in a grid architecture.
  - Applications and data are now exposed to multiple computers (nodes), any of which may be communicating directly with, or executing, your application.
  - Addressed with authentication, authorization, and encryption.
    - Each node must be authenticated by the grid it belongs to.
    - Once authenticated, specific nodes can be authorized to perform certain tasks.
    - Encryption is required to protect communication against interception.
  - Use technologies such as public-key encryption, Certificate Authorities (CA), digital certificates, and SSL.

Software Components
- A grid system requires a layer of software (middleware) to manage the grid.
- Management tasks:
  - Scheduling of jobs.
  - Monitoring resource availability.
  - Gathering node capacity and utilization information.
  - Tracking job status for recovery.
- Local node S/W is needed to:
  - Allow the node to accept a sub-job for execution.
  - Allow it to register its resources with the grid.
  - Monitor job progress and send status to the grid.

Software Components – Job Scheduler
- A major component of grid “middleware”.
  - Can vary in complexity:
    - Blindly submit jobs round-robin.
    - A job queuing system with several priority queues.
  - Advanced features include:
    - Maintaining metadata for each node:
      - Performance
      - Resources
      - Availability (idle/busy)
      - Status (online/offline)
    - Automatically finding the most appropriate node for the next job in the queue.
    - Job monitoring for recovery.
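The advanced end of that spectrum (priority queues plus per-node metadata) can be sketched with Python's `heapq`. This is a simplified illustration under stated assumptions: `NodeInfo` and `PriorityScheduler` are hypothetical, “most appropriate node” is reduced to “fastest idle, online node with enough memory”, and jobs that fit nowhere simply stay queued for the next dispatch round.

```python
import heapq
import itertools
from dataclasses import dataclass

# Illustrative sketch: class names and metadata fields are hypothetical.


@dataclass
class NodeInfo:
    """Scheduler-side metadata for one node."""
    name: str
    speed: float        # relative performance score
    free_mem_gb: int
    online: bool = True
    busy: bool = False


class PriorityScheduler:
    def __init__(self, nodes):
        self.nodes = nodes
        self.queue = []
        self._seq = itertools.count()  # tie-breaker: FIFO order within a priority level

    def submit(self, job, priority, mem_gb):
        """Queue a job; lower priority numbers are dispatched first (heapq is a min-heap)."""
        heapq.heappush(self.queue, (priority, next(self._seq), job, mem_gb))

    def dispatch(self):
        """Assign each queued job to the fastest idle, online node with enough memory."""
        assignments, deferred = [], []
        while self.queue:
            entry = heapq.heappop(self.queue)
            _, _, job, mem_gb = entry
            fits = [n for n in self.nodes
                    if n.online and not n.busy and n.free_mem_gb >= mem_gb]
            if not fits:
                deferred.append(entry)  # wait for a suitable node to free up
                continue
            best = max(fits, key=lambda n: n.speed)
            best.busy = True
            assignments.append((job, best.name))
        for entry in deferred:
            heapq.heappush(self.queue, entry)
        return assignments
```

Replacing `dispatch` with a loop that hands jobs to `self.nodes` in turn, ignoring metadata, would give the “blind round-robin” variant from the slide.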

Software Components – Node Software
- Grid systems can have two different node types:
  - Resource only: cannot submit jobs.
  - Participating node: can submit jobs as well.
- Every node of a grid system requires interface S/W, regardless of type.
- All nodes require…
  - Monitoring software that notifies the grid middleware/scheduler about:
    - Node availability
    - Current load
    - Available resources
    - Status of the grid management software
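One common way to turn those monitoring reports into an availability view is a heartbeat with a timeout. The slides do not say how the middleware detects a silent node, so the timeout rule here is an assumption, and `NodeStatus`, `StatusRegistry`, and their fields are hypothetical. A node counts as available only if its last report is recent, its grid agent is healthy, and its load is below a threshold.

```python
import time
from dataclasses import dataclass

# Illustrative sketch: names, fields, and the timeout policy are hypothetical.


@dataclass
class NodeStatus:
    """One status report sent by a node's monitoring agent."""
    node: str
    load: float           # normalized load, 0.0 (idle) to 1.0 (saturated)
    free_disk_gb: float
    agent_ok: bool        # is the local grid-management software healthy?
    timestamp: float      # seconds since the epoch when the report was sent


class StatusRegistry:
    """Scheduler-side view of the most recent report from each node."""

    def __init__(self, timeout_s=60.0):
        self.timeout_s = timeout_s
        self._latest = {}

    def report(self, status):
        self._latest[status.node] = status  # the latest report received replaces the previous one

    def available_nodes(self, now=None, max_load=0.8):
        """Nodes that reported recently, run a healthy agent, and are not overloaded."""
        now = time.time() if now is None else now
        return sorted(
            s.node for s in self._latest.values()
            if now - s.timestamp <= self.timeout_s and s.agent_ok and s.load <= max_load
        )
```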

Software Components – Node Software
- All nodes require (continued)…
  - Software that allows the node to accept and execute a job. It must:
    - Accept the executable file or select the appropriate file from a local copy.
    - Locate any required dataset, whether local or remote.
    - Communicate job status during execution.
    - Return results once completed.
    - Allow communication between sub-jobs, whether or not they are local to that node.
    - Dynamically adjust the priority of a job to meet the “level of service” requirements of others.
- Participating grid nodes have additional requirements:
  - Allow jobs to be submitted to the grid scheduler.
    - May have their own scheduler or an interface to the grid's common scheduler.

Globus Toolkit
- A framework for creating a grid solution.
- Created by the Globus Alliance (http://www.ggf.org).
- 80% of all computer grid systems are implemented using a version of the Globus Toolkit [2].
- The current release is Version 4.0.6 (GT4).
- Version 3 introduced SOA to the framework.
- Version 4 expanded SOA and uses Web Services (WS) as the underlying technology.

Globus Toolkit
- Global Grid Forum (GGF)
  - Created the Open Grid Services Architecture (OGSA).
    - Uses SOA for grid implementation.
  - Two OGSA-compliant grid service implementations based on the Web Service (WS) architecture:
    - Open Grid Services Infrastructure (OGSI)
    - Web Services Resource Framework (WSRF)
  - WSRF is the latest and the most faithful to the WS architecture.
    - Uses standard XML schemas.
    - Distinguishes between a service and the state of that service, which grid services (GS) require.
    - Defines a WS-Resource, which holds “state” data, using WSs:
      - Maintained in an XML document.
      - Has a defined life cycle.
      - Known to and accessed by one or more WSs.

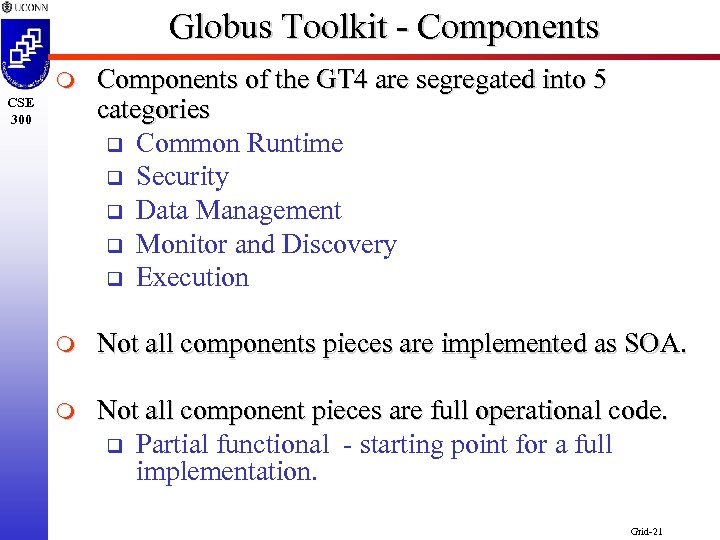

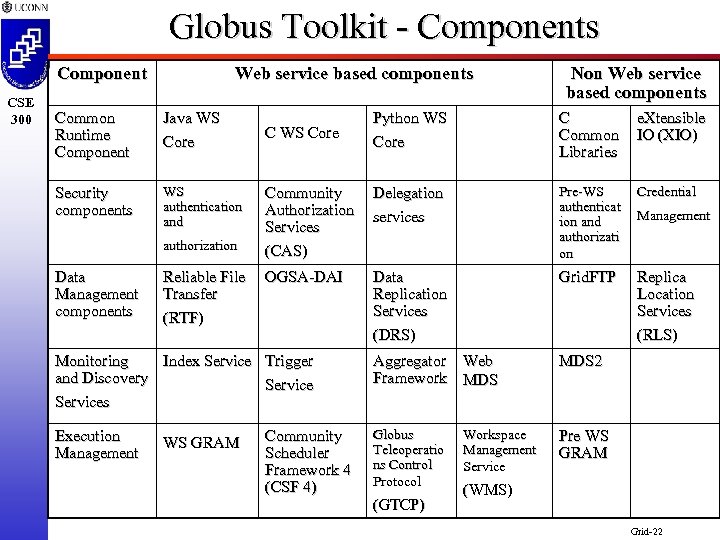

Globus Toolkit - Components
- Components of GT4 are grouped into five categories:
  - Common Runtime
  - Security
  - Data Management
  - Monitoring and Discovery
  - Execution
- Not all component pieces are implemented as SOA.
- Not all component pieces are fully operational code.
  - Some are only partially functional: a starting point for a full implementation.

Globus Toolkit - Components

| Component | Web service based components | Non-web service based components |
|---|---|---|
| Common Runtime | Java WS Core; C WS Core; Python WS Core | C Common Libraries; eXtensible IO (XIO) |
| Security | WS authentication and authorization; Community Authorization Services (CAS); Delegation services | Pre-WS authentication and authorization; Credential management |
| Data Management | Reliable File Transfer (RFT); OGSA-DAI; Data Replication Services (DRS) | GridFTP; Replica Location Services (RLS) |
| Monitoring and Discovery Services | Index Service; Trigger Service; Aggregator Framework; WebMDS | MDS2 |
| Execution Management | WS GRAM; Community Scheduler Framework 4 (CSF4); Globus Teleoperations Control Protocol (GTCP); Workspace Management Service (WMS) | Pre-WS GRAM |

Globus Toolkit - Components
- Common Runtime components
  - “Building blocks” for most toolkit components.
  - Web services implemented in three languages:
    - Java
    - C
    - Python (pyGridWare)
  - All three consist of APIs and tools that implement the WSRF and WS-Notification standards.
  - Act as base components for various default services.
  - Java WS Core provides the development base libraries and tools for custom WSRF services.

Globus Toolkit - Components
- eXtensible IO (XIO)
  - Extensible I/O library written in C.
  - Provides a single API that:
    - Supports multiple protocols.
    - Encapsulates each protocol implementation as a driver.
    - Provides a framework for error handling.
    - Supports asynchronous message delivery.
    - Supports timeouts.
  - Driver approach:
    - Supports the concept of driver stacks.
    - Maximizes code reuse.
    - Drivers are written as atomic units and “stacked” on top of one another.
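Globus XIO itself is a C library, and the Python below does not reproduce its API; it only illustrates the driver-stack idea the slide describes. Each hypothetical driver is an atomic unit that transforms data on the way down (write) and undoes the transformation on the way up (read), and the caller sees one `write`/`read` interface regardless of which drivers are stacked. The compression and Base64 drivers are arbitrary examples, and the in-memory transport stands in for a real TCP or file driver.

```python
import base64
import zlib

# Illustrative sketch of driver stacking; class names are hypothetical, not the XIO API.


class Driver:
    """One atomic I/O layer; the base class passes data through unchanged."""

    def on_write(self, data: bytes) -> bytes:
        return data

    def on_read(self, data: bytes) -> bytes:
        return data


class CompressionDriver(Driver):
    def on_write(self, data):
        return zlib.compress(data)

    def on_read(self, data):
        return zlib.decompress(data)


class Base64Driver(Driver):
    def on_write(self, data):
        return base64.b64encode(data)

    def on_read(self, data):
        return base64.b64decode(data)


class MemoryTransport:
    """Bottom of the stack; stands in for a TCP or file transport driver."""

    def __init__(self):
        self.wire = []

    def send(self, data):
        self.wire.append(data)

    def recv(self):
        return self.wire.pop(0)


class DriverStack:
    """Single read/write API over an ordered stack of drivers (top of stack first)."""

    def __init__(self, transport, drivers):
        self.transport = transport
        self.drivers = list(drivers)

    def write(self, data: bytes) -> None:
        for driver in self.drivers:            # top of stack down to the transport
            data = driver.on_write(data)
        self.transport.send(data)

    def read(self) -> bytes:
        data = self.transport.recv()
        for driver in reversed(self.drivers):  # transport back up to the caller
            data = driver.on_read(data)
        return data
```

Because each driver only knows how to transform bytes, the same `CompressionDriver` can be reused on top of any transport, which is the code-reuse benefit the slide attributes to stacking.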

Globus Toolkit - Components
- Security components
  - Implemented using the Grid Security Infrastructure (GSI).
    - Uses public key cryptography as its basis.
  - Three primary functions of GSI:
    - Provide secure authentication and confidentiality between elements of the grid.
    - Support security across organizational boundaries, i.e., without a centrally managed security system.
    - Support “single sign-on” for grid users.

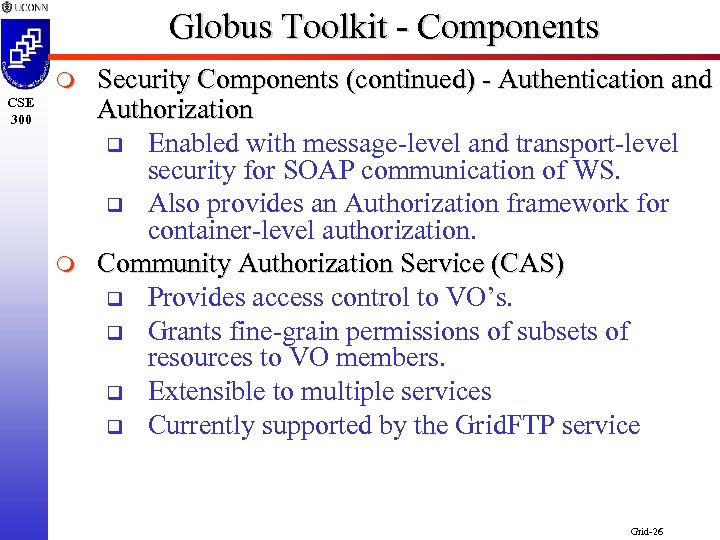

Globus Toolkit - Components
- Security components (continued): authentication and authorization
  - Enabled with message-level and transport-level security for the SOAP communication of WS.
  - Also provides an authorization framework for container-level authorization.
- Community Authorization Service (CAS)
  - Provides access control for VOs.
  - Grants VO members fine-grained permissions on subsets of resources.
  - Extensible to multiple services.
  - Currently supported by the GridFTP service.

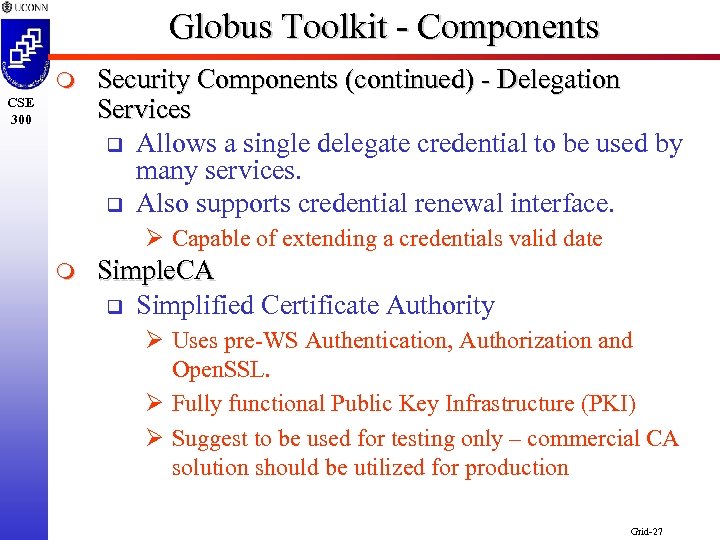

Globus Toolkit - Components
- Security components (continued): Delegation Services
  - Allows a single delegated credential to be used by many services.
  - Also supports a credential renewal interface.
    - Capable of extending a credential's valid date.
- SimpleCA
  - Simplified Certificate Authority.
    - Uses pre-WS authentication and authorization, and OpenSSL.
    - Fully functional Public Key Infrastructure (PKI).
    - Recommended for testing only; a commercial CA solution should be used for production.

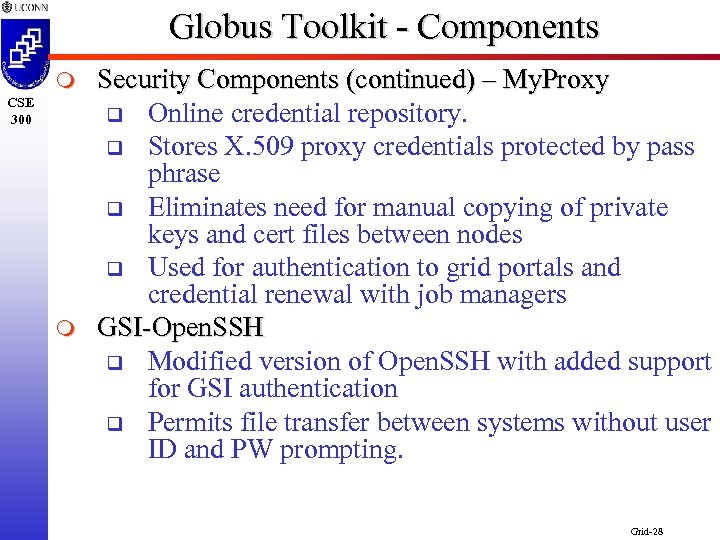

Globus Toolkit - Components
- Security components (continued): MyProxy
  - Online credential repository.
  - Stores X.509 proxy credentials protected by a pass phrase.
  - Eliminates the need to manually copy private keys and certificate files between nodes.
  - Used for authentication to grid portals and for credential renewal with job managers.
- GSI-OpenSSH
  - A modified version of OpenSSH with added support for GSI authentication.
  - Permits file transfer between systems without user ID and password prompts.

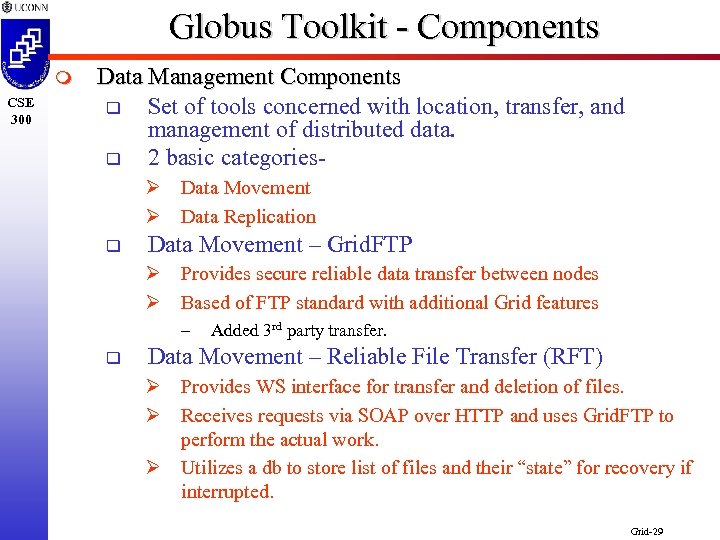

Globus Toolkit - Components
- Data Management components
  - A set of tools concerned with the location, transfer, and management of distributed data.
  - Two basic categories:
    - Data movement
    - Data replication
  - Data movement – GridFTP
    - Provides secure, reliable data transfer between nodes.
    - Based on the FTP standard with additional grid features, such as third-party transfer.
  - Data movement – Reliable File Transfer (RFT)
    - Provides a WS interface for the transfer and deletion of files.
    - Receives requests via SOAP over HTTP and uses GridFTP to perform the actual work.
    - Uses a database to store the list of files and their “state” for recovery if interrupted.
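RFT's recovery behavior (persist each file's state in a database so an interrupted request resumes where it left off) can be sketched with Python's built-in `sqlite3`. This is an illustration of the idea, not RFT's actual schema or code: the table layout and the functions `open_state_db`, `enqueue`, and `run_transfers` are hypothetical, and `copy_file` is a stand-in for the GridFTP transfer that RFT delegates to.

```python
import sqlite3

# Illustrative sketch: schema and function names are hypothetical, not RFT's.


def open_state_db(path=":memory:"):
    """Create (or reopen) the table that records each requested transfer and its state."""
    db = sqlite3.connect(path)
    db.execute(
        "CREATE TABLE IF NOT EXISTS transfers ("
        " source TEXT PRIMARY KEY,"
        " destination TEXT NOT NULL,"
        " state TEXT NOT NULL DEFAULT 'pending')"
    )
    return db


def enqueue(db, pairs):
    """Record (source, destination) requests; re-enqueueing a known source is a no-op."""
    db.executemany(
        "INSERT OR IGNORE INTO transfers (source, destination) VALUES (?, ?)", pairs
    )
    db.commit()


def run_transfers(db, copy_file):
    """Attempt every transfer not yet done; safe to call again after an interruption."""
    rows = db.execute(
        "SELECT source, destination FROM transfers WHERE state != 'done' ORDER BY rowid"
    ).fetchall()
    for source, destination in rows:
        try:
            copy_file(source, destination)
            state = "done"
        except OSError:
            state = "failed"  # retried on the next call
        db.execute("UPDATE transfers SET state = ? WHERE source = ?", (state, source))
        db.commit()  # commit per file so progress survives a crash
```

Committing after every file is the design choice that matters here: if the process dies mid-request, a restart re-reads the table and skips everything already marked `done`.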

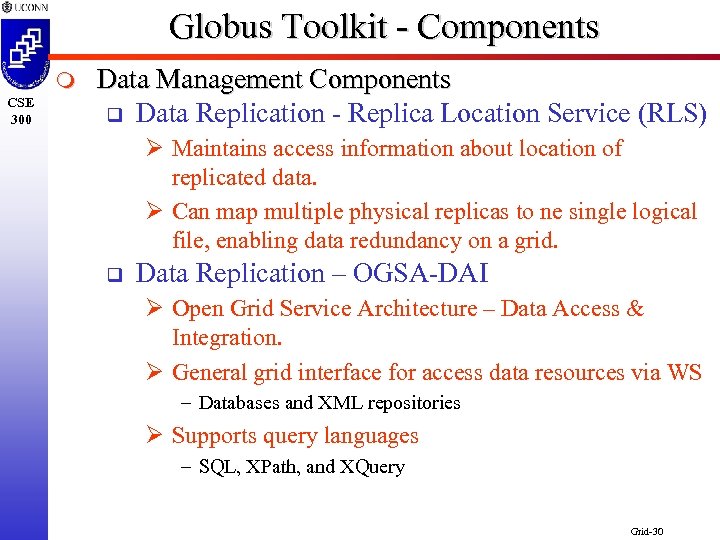

Globus Toolkit - Components
- Data Management components
  - Data replication – Replica Location Service (RLS)
    - Maintains access information about the location of replicated data.
    - Can map multiple physical replicas to one logical file, enabling data redundancy on a grid.
  - Data access – OGSA-DAI
    - Open Grid Services Architecture – Data Access and Integration.
    - General grid interface for accessing data resources, such as databases and XML repositories, via WS.
    - Supports the query languages SQL, XPath, and XQuery.
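The core of what RLS records, a one-to-many mapping from a logical file name to the physical locations of its replicas, fits in a small in-memory class. The real RLS is a networked, distributed service; this sketch only illustrates the mapping, and the `ReplicaCatalog` class, the `lfn://` and `gsiftp://` names, and the example host names are all hypothetical. `best_replica` shows one plausible use: prefer a copy on the requesting host and otherwise fall back to any registered replica.

```python
from collections import defaultdict
from urllib.parse import urlparse

# Illustrative sketch: an in-memory stand-in for a replica catalog, not the RLS API.


class ReplicaCatalog:
    """Maps one logical file name to the physical file names of its replicas."""

    def __init__(self):
        self._replicas = defaultdict(set)

    def register(self, lfn, pfn):
        self._replicas[lfn].add(pfn)

    def unregister(self, lfn, pfn):
        self._replicas[lfn].discard(pfn)
        if not self._replicas[lfn]:
            del self._replicas[lfn]  # drop logical names with no replicas left

    def lookup(self, lfn):
        return sorted(self._replicas.get(lfn, ()))

    def best_replica(self, lfn, local_host):
        """Prefer a replica on the requesting host; otherwise fall back to any replica."""
        replicas = self.lookup(lfn)
        local = [p for p in replicas if urlparse(p).hostname == local_host]
        return (local or replicas or [None])[0]
```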

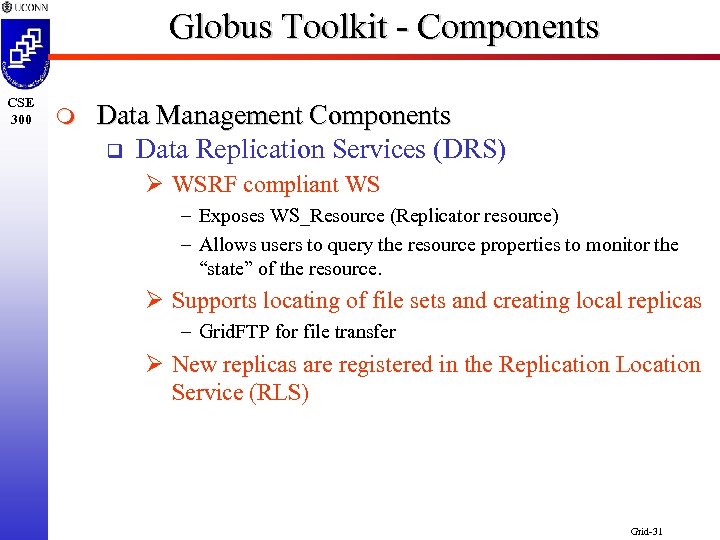

Globus Toolkit - Components
- Data Management components
  - Data Replication Services (DRS)
    - WSRF-compliant WS.
      - Exposes a WS-Resource (the Replicator resource).
      - Allows users to query the resource properties to monitor the “state” of the resource.
    - Supports locating file sets and creating local replicas.
      - Uses GridFTP for file transfer.
    - New replicas are registered in the Replica Location Service (RLS).

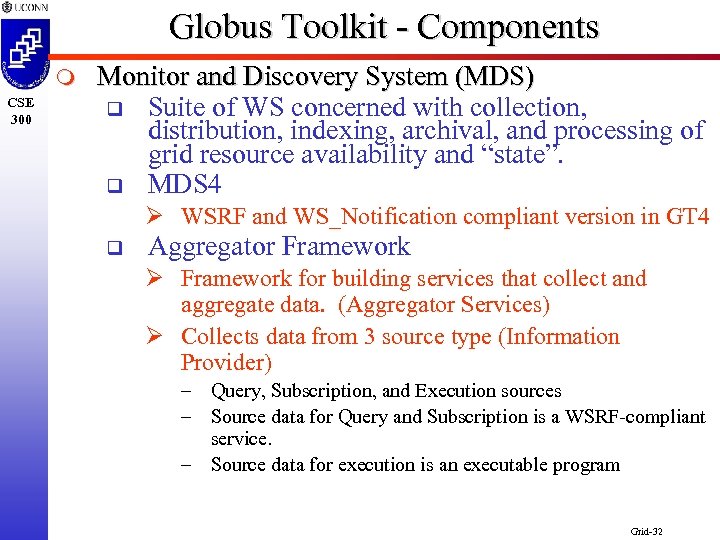

Globus Toolkit - Components
- Monitoring and Discovery System (MDS)
  - A suite of WSs concerned with the collection, distribution, indexing, archival, and processing of grid resource availability and “state”.
  - MDS4
    - The WSRF- and WS-Notification-compliant version in GT4.
  - Aggregator Framework
    - A framework for building services that collect and aggregate data (aggregator services).
    - Collects data from three source types (information providers):
      - Query, subscription, and execution sources.
      - Query and subscription sources are WSRF-compliant services.
      - Execution sources are executable programs.

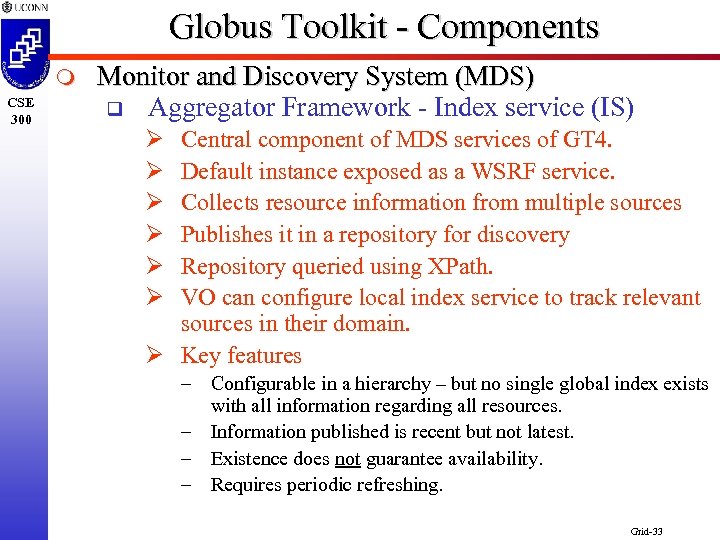

Globus Toolkit - Components
- Monitoring and Discovery System (MDS)
  - Aggregator Framework – Index Service (IS)
    - Central component of the GT4 MDS services.
    - Default instance exposed as a WSRF service.
    - Collects resource information from multiple sources.
    - Publishes it in a repository for discovery; the repository is queried using XPath.
    - A VO can configure a local index service to track relevant sources in its domain.
    - Key features:
      - Configurable in a hierarchy, but no single global index holds all information about all resources.
      - Published information is recent but not necessarily the latest.
      - The existence of an entry does not guarantee availability.
      - Requires periodic refreshing.
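To make “the repository is queried using XPath” concrete, here is a small Python sketch using `xml.etree.ElementTree`, which supports a subset of XPath. The XML layout below is a made-up snapshot, not the schema of real MDS4 resource property documents, and `online_hosts` and `total_free_cpus` are hypothetical helpers.

```python
import xml.etree.ElementTree as ET

# Hypothetical snapshot of an index repository; real MDS4 documents use a richer schema.
INDEX_XML = """<IndexRepository>
  <Host name="node1.example.org" site="siteA">
    <Status>online</Status><FreeCPUs>12</FreeCPUs>
  </Host>
  <Host name="node2.example.org" site="siteA">
    <Status>offline</Status><FreeCPUs>0</FreeCPUs>
  </Host>
  <Host name="node3.example.org" site="siteB">
    <Status>online</Status><FreeCPUs>4</FreeCPUs>
  </Host>
</IndexRepository>"""


def online_hosts(xml_text, site=None):
    """Return names of hosts whose <Status> child is 'online', optionally for one site."""
    root = ET.fromstring(xml_text)
    hosts = root.findall(".//Host[Status='online']")  # XPath-style predicate on a child's text
    return [h.get("name") for h in hosts if site is None or h.get("site") == site]


def total_free_cpus(xml_text):
    """Sum <FreeCPUs> over every online host."""
    root = ET.fromstring(xml_text)
    return sum(int(h.findtext("FreeCPUs", "0"))
               for h in root.findall(".//Host[Status='online']"))
```

Because the repository is only periodically refreshed, a host listed as online here may already be gone; the sketch reports what was published, not what is currently available.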

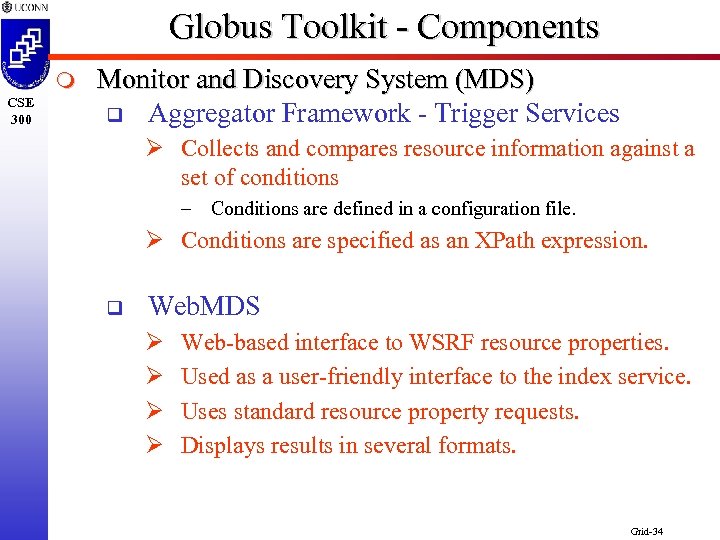

Globus Toolkit - Components
- Monitoring and Discovery System (MDS)
  - Aggregator Framework – Trigger Service
    - Collects resource information and compares it against a set of conditions.
      - Conditions are defined in a configuration file.
    - Conditions are specified as XPath expressions.
  - WebMDS
    - Web-based interface to WSRF resource properties.
    - Used as a user-friendly interface to the index service.
    - Uses standard resource property requests.
    - Displays results in several formats.
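The Trigger Service's “compare collected information against configured conditions” loop can be sketched as follows. Two assumptions to flag: the real service expresses conditions as full XPath expressions, but Python's `ElementTree` supports no numeric comparisons, so this sketch selects values with ElementTree and performs the comparison in Python; and the `TriggerRule` fields, XML tags, and rule names are hypothetical. In the real service a satisfied condition triggers a configured action; here the sketch just returns the matches.

```python
import operator
import xml.etree.ElementTree as ET
from dataclasses import dataclass

# Illustrative sketch: rule format and XML tags are hypothetical, not the Trigger Service config.
OPS = {"<": operator.lt, "<=": operator.le, ">": operator.gt, ">=": operator.ge}


@dataclass
class TriggerRule:
    """One condition; field names a numeric child element of <Host>."""
    name: str
    field: str
    op: str
    threshold: float


def evaluate_triggers(xml_text, rules):
    """Return (rule name, host name) for every host whose value satisfies a rule."""
    root = ET.fromstring(xml_text)
    fired = []
    for host in root.iterfind(".//Host"):
        for rule in rules:
            value = host.findtext(rule.field)
            if value is not None and OPS[rule.op](float(value), rule.threshold):
                fired.append((rule.name, host.get("name")))
    return fired
```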

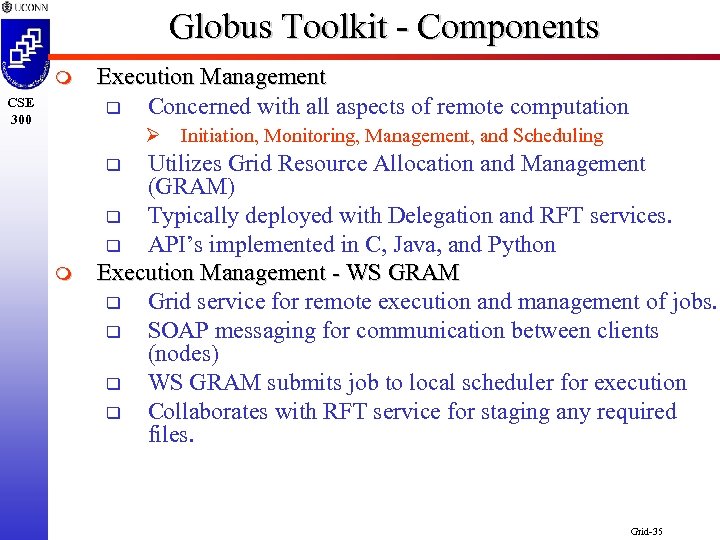

Globus Toolkit - Components
- Execution Management
  - Concerned with all aspects of remote computation:
    - Initiation, monitoring, management, and scheduling.
  - Uses Grid Resource Allocation and Management (GRAM).
  - Typically deployed with the Delegation and RFT services.
  - APIs implemented in C, Java, and Python.
- Execution Management – WS GRAM
  - Grid service for remote execution and management of jobs.
  - SOAP messaging for communication between clients (nodes).
  - WS GRAM submits jobs to a local scheduler for execution.
  - Collaborates with the RFT service to stage any required files.

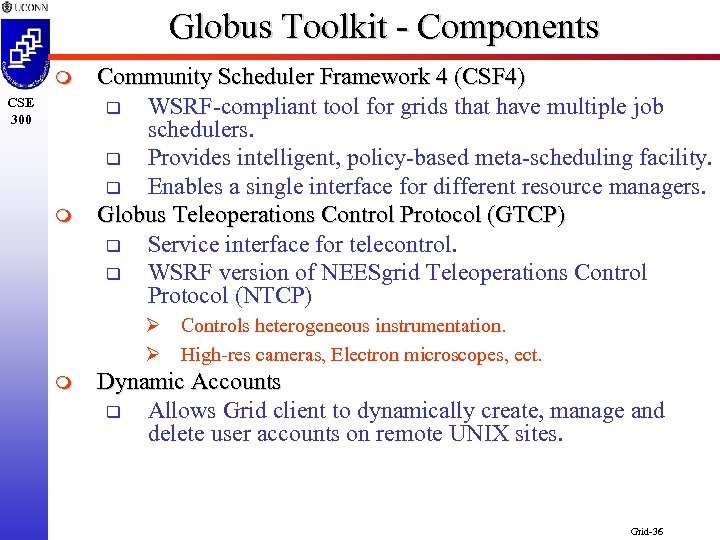

Globus Toolkit - Components
- Community Scheduler Framework 4 (CSF4)
  - WSRF-compliant tool for grids that have multiple job schedulers.
  - Provides an intelligent, policy-based meta-scheduling facility.
  - Enables a single interface to different resource managers.
- Globus Teleoperations Control Protocol (GTCP)
  - Service interface for telecontrol.
  - WSRF version of the NEESgrid Teleoperations Control Protocol (NTCP).
    - Controls heterogeneous instrumentation.
    - High-resolution cameras, electron microscopes, etc.
- Dynamic Accounts
  - Allows a grid client to dynamically create, manage, and delete user accounts on remote UNIX sites.

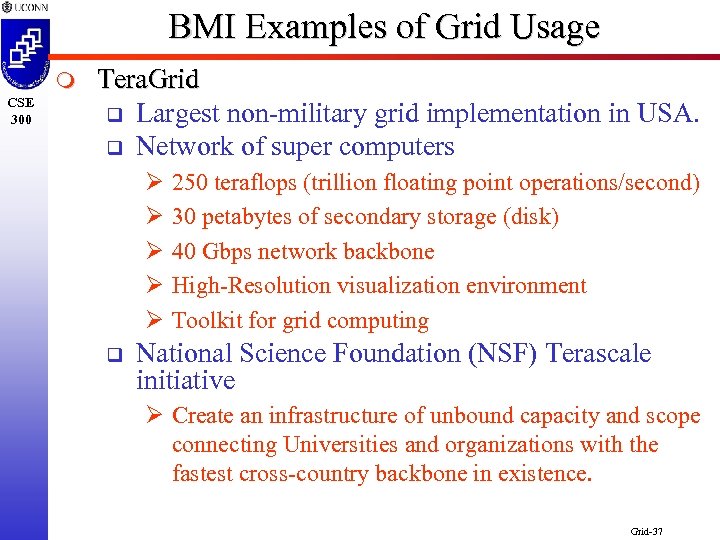

BMI Examples of Grid Usage
- TeraGrid
  - Largest non-military grid implementation in the USA.
  - Network of supercomputers:
    - 250 teraflops (trillion floating-point operations per second)
    - 30 petabytes of secondary storage (disk)
    - 40 Gbps network backbone
    - High-resolution visualization environment
    - Toolkit for grid computing
  - National Science Foundation (NSF) Terascale initiative
    - Create an infrastructure of unbounded capacity and scope connecting universities and organizations with the fastest cross-country backbone in existence.

BMI Examples of Grid Usage
- TeraGrid
  - Currently composed of 11 supercomputing sites across the USA.
  - Each site contributes resources and expertise to create the largest computer grid in the USA.
  - Primary usage is to support scientific research.
  - Medical field usage:
    - Brain imaging.
    - Drug interaction with cancer cells.

BMI Examples of Grid Usage
- TeraGrid – 11 sites
  - Indiana University (IU)
    - “Big Red”: a distributed shared-memory cluster of 768 IBM JS21 blades, each with two dual-core PowerPC 970MP processors, 8 GB of memory, and a PCI-X Myrinet 2000 adapter for high-bandwidth, low-latency Message Passing Interface (MPI) applications.
  - Joint Institute for Computational Sciences (JICS)
    - University of Tennessee and ORNL.
    - Planned expansions would add a 40-teraflops Cray XT3 system to the TeraGrid.
    - Further plans expand to a 170-teraflops Cray XT4 system, which in turn will be upgraded to a Cray system of 10,000+ compute sockets and approximately 1 petaflop.

BMI Examples of Grid Usage
- TeraGrid – 11 sites
  - Louisiana Optical Network Initiative (LONI)
    - “Queen Bee”, the core cluster of LONI, is a 668-node Dell PowerEdge 1950 cluster with 50.7 teraflops of peak performance, running the Red Hat Enterprise Linux 4 operating system. Each node contains two quad-core 64-bit Intel Xeon 2.33 GHz processors and 8 GB of memory.
    - The cluster is interconnected with 10 GB/sec InfiniBand and has 192 TB of storage in a Lustre file system.
    - Half of Queen Bee's computational cycles have been contributed to the TeraGrid community.

BMI Examples of Grid Usage
- TeraGrid – 11 sites
  - Oak Ridge National Laboratory (ORNL)
    - More of a user than a provider.
    - Users of its neutron science facilities (the High Flux Isotope Reactor and the Spallation Neutron Source) will be able to access TeraGrid resources and services for data storage, analysis, and simulation.
  - National Center for Supercomputing Applications (NCSA)
    - University of Illinois at Urbana-Champaign.
    - Provides 10 teraflops of capability computing through its IBM Linux cluster, which consists of 1,776 Itanium 2 processors.
    - NCSA also provides 600 terabytes of secondary storage and 2 petabytes of archival storage capacity.

BMI Examples of Grid Usage
- TeraGrid – 11 sites
  - Pittsburgh Supercomputing Center (PSC)
    - Provides computational power via its 3,000-processor HP AlphaServer system, TCS-1, which offers 6 teraflops of capability coupled uniquely to a 21-node visualization system. It also provides a 128-processor, 512-gigabyte shared-memory HP Marvel system, a 150-terabyte disk cache, and a mass storage system with a capacity of 2.4 petabytes.
  - Purdue University
    - Provides 6 teraflops of computing capability.
    - 400 terabytes of data storage capacity.
    - Visualization resources, access to life science data sets, and a connection to the Purdue Terrestrial Observatory.

BMI Examples of Grid Usage
- TeraGrid – 11 sites
  - San Diego Supercomputer Center (SDSC)
    - Leads the TeraGrid data and knowledge management effort.
    - Provides a data-intensive IBM Linux cluster based on Itanium processors that reaches over 4 teraflops, with 540 terabytes of network disk storage.
    - In addition, a portion of SDSC's 10-teraflops IBM supercomputer is assigned to the TeraGrid.
    - An IBM HPSS archive currently stores a petabyte of data.

BMI Examples of Grid Usage
- TeraGrid – 11 sites
  - Texas Advanced Computing Center (TACC)
    - Provides a 1024-processor Cray/Dell Xeon-based Linux cluster.
    - A 128-processor Sun E25K Terascale visualization machine with 512 gigabytes of shared memory.
    - A total of 6.75 teraflops of computing/visualization capacity.
    - Provides a 50-terabyte Sun storage area network.
    - Only half of the cycles produced by these resources are available to TeraGrid users.

BMI Examples of Grid Usage
- TeraGrid – 11 sites
  - University of Chicago/Argonne National Laboratory (UC/ANL)
    - Provides users with high-resolution rendering and remote visualization capabilities via a 1-teraflop IBM Linux cluster with parallel visualization hardware.
  - National Center for Atmospheric Research (NCAR)
    - Located in Boulder, CO.
    - “Frost”: a 2048-processor BlueGene/L computing system contributed to the TeraGrid. Together, the TeraGrid sites provide more than 250 teraflops of computing capability and more than 30 petabytes of online and archival data storage.

BMI Examples of Grid Usage
- TeraGrid applications
  - The Center for Imaging Science (CIS) at Johns Hopkins University has deployed shape-based morphometric tools on the TeraGrid to support the Biomedical Informatics Research Network, a National Institutes of Health initiative involving 15 universities and 22 research groups whose work centers on brain imaging of human neurological disorders and associated animal models.
  - A project at the University of Illinois at Urbana-Champaign uses massive parallelism on the TeraGrid to make major advances in the understanding of membrane proteins.
  - Another project harnesses the TeraGrid to attack problems in the mechanisms of bioenergetic proteins, the recognition and regulation of DNA by proteins, the molecular basis of lipid metabolism, and the mechanical properties of cells.

BMI Examples of Grid Usage
- GridMol – Molecular modeling on a computer grid
  - Molecular visualization and modeling tool.
  - Studies the geometry and properties of molecules.
  - GridMol features include…
    - Modifying bond lengths and angles.
    - Changing dihedral angles (the angle between the two planes defined by four consecutively bonded atoms: atoms 1-2-3 and atoms 2-3-4).
    - Adding or deleting atoms.
    - Adding radicals.
  - Based on the Globus Toolkit.
    - The scheduling tool is non-GT middleware.
  - Coded in Java, Java 3D, C/C++, and OpenGL.
  - Runs as a standalone application or as an applet in a browser.
  - Runs on the China National Grid (CNGrid).
    - Composed of 8 supercomputer sites across China.
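The geometric quantities GridMol lets users edit can be computed directly from atomic coordinates. The sketch below is not GridMol's code; it uses the standard cross-product/`atan2` formula for the signed dihedral angle of four bonded atoms, plus `math.dist` for bond length. The helper names are hypothetical, and coordinates are plain `(x, y, z)` tuples.

```python
import math

# Illustrative sketch using the standard dihedral formula; not GridMol's implementation.


def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def bond_length(p0, p1):
    """Distance between two atom centers (same units as the coordinates)."""
    return math.dist(p0, p1)


def dihedral(p0, p1, p2, p3):
    """Signed dihedral angle in degrees for four consecutively bonded atoms p0-p1-p2-p3.

    It is the angle between the plane through (p0, p1, p2) and the plane through
    (p1, p2, p3), measured about the central p1-p2 bond.
    """
    b1, b2, b3 = _sub(p1, p0), _sub(p2, p1), _sub(p3, p2)
    n1, n2 = _cross(b1, b2), _cross(b2, b3)  # normals of the two planes
    x = _dot(n1, n2)
    y = math.sqrt(_dot(b2, b2)) * _dot(b1, n2)
    return math.degrees(math.atan2(y, x))
```

Using `atan2` rather than `acos` of the normalized dot product keeps the sign of the rotation and stays numerically stable near 0° and 180°, which matters when a tool lets users rotate a bond continuously.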

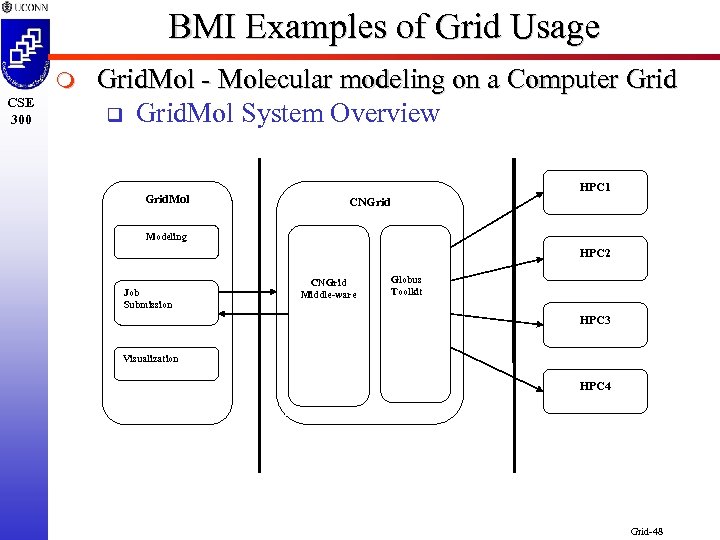

BMI Examples of Grid Usage
- GridMol – Molecular modeling on a computer grid
  - GridMol system overview (figure with elements labeled GridMol: Modeling, Job Submission, Visualization; CNGrid middleware; Globus Toolkit; HPC 1 to HPC 4).

BMI Examples of Grid Usage
- GridMol – Molecular modeling on a computer grid
  - Overview figure points:
    - A job is submitted to the CNGrid middleware, which executes the application on available high-performance computer systems (HPCs) based on performance requirements.
    - GridMol maintains a history of job descriptions so that jobs can be recalled for future operations.
    - After a job is submitted, users can query its status to determine whether it was submitted successfully or has failed.
    - After a job has finished, GridMol can be used to analyze the results with several different visualization tools.
    - GridMol completely abstracts the underlying grid infrastructure from the user. Users do not need to know how to submit a job to the grid or on which HPC(s) it will run, which lets researchers focus on the molecular modeling problem rather than on the mechanics of the grid system.

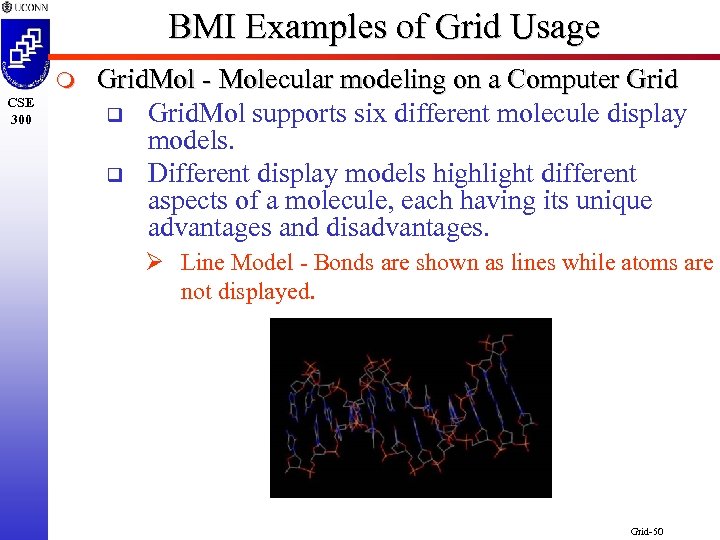

BMI Examples of Grid Usage
- GridMol – Molecular modeling on a computer grid
  - GridMol supports six different molecule display models.
  - Each display model highlights different aspects of a molecule and has its own advantages and disadvantages.
    - Line model: bonds are shown as lines, while atoms are not displayed.

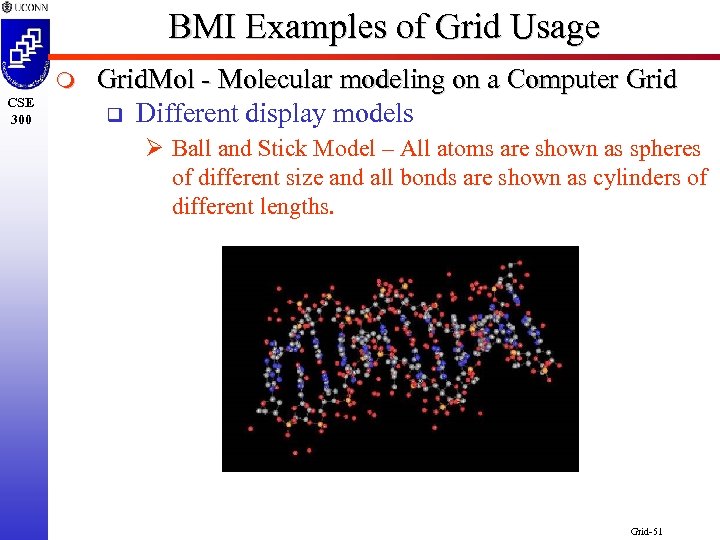

BMI Examples of Grid Usage
- GridMol – Molecular modeling on a computer grid
  - Different display models
    - Ball-and-stick model: all atoms are shown as spheres of different sizes, and all bonds are shown as cylinders of different lengths.

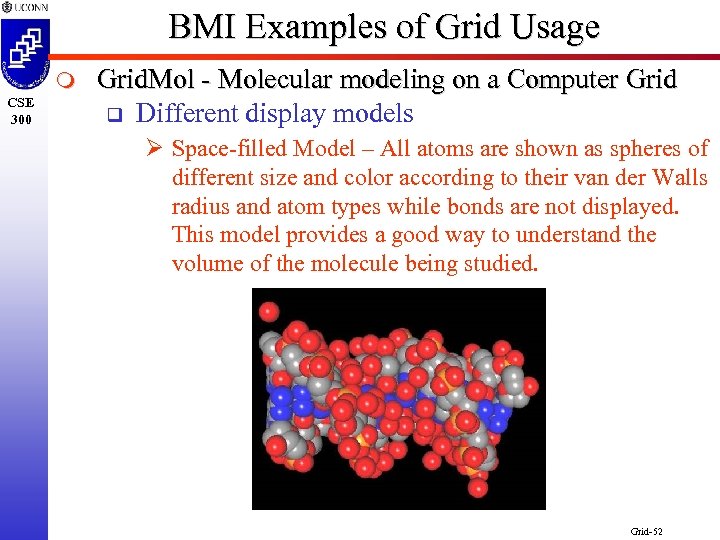

BMI Examples of Grid Usage
- GridMol – Molecular modeling on a computer grid
  - Different display models
    - Space-filling model: all atoms are shown as spheres sized and colored according to their van der Waals radii and atom types, while bonds are not displayed. This model gives a good sense of the volume of the molecule being studied.

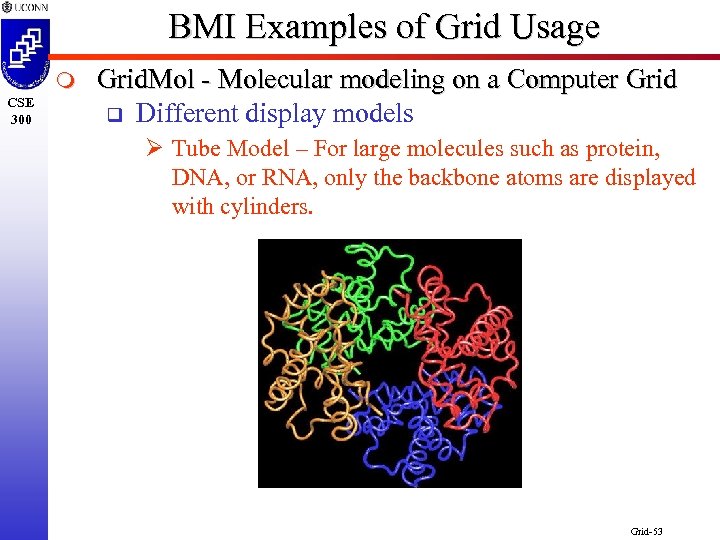

BMI Examples of Grid Usage
- GridMol – Molecular modeling on a computer grid
  - Different display models
    - Tube model: for large molecules such as proteins, DNA, or RNA, only the backbone atoms are displayed, using cylinders.

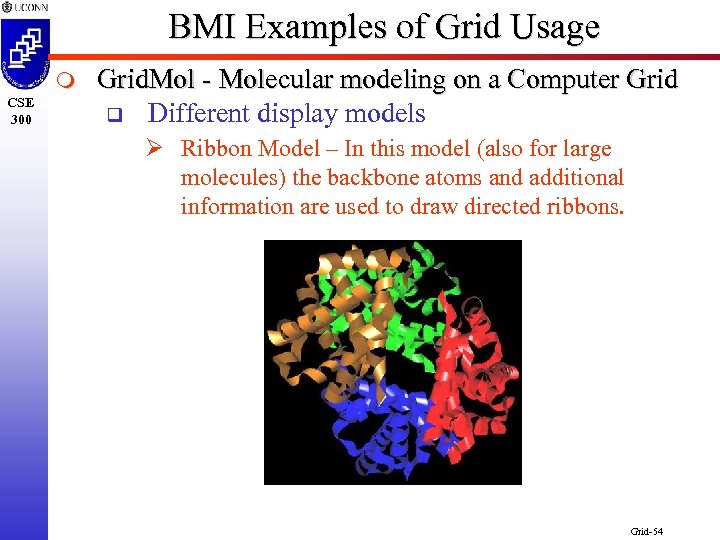

BMI Examples of Grid Usage
- GridMol – Molecular modeling on a computer grid
  - Different display models
    - Ribbon model: in this model (also for large molecules), the backbone atoms and additional information are used to draw directed ribbons.

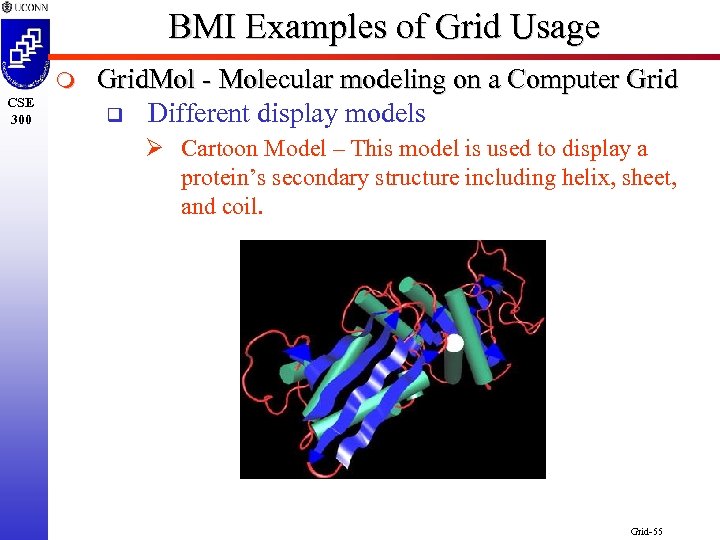

BMI Examples of Grid Usage
- GridMol – Molecular modeling on a computer grid
  - Different display models
    - Cartoon model: used to display a protein's secondary structure, including helices, sheets, and coils.

BMI Examples of Grid Usage
- Genetic research
  - Analysis and discovery of gene sequences requires massive computational power.
  - Current techniques in this field generate increasingly large and complex data sets.
    - Knowledge discovery algorithms
    - Data mining
    - Remote collaboration between experts
  - A perfect candidate for a computational grid architecture.

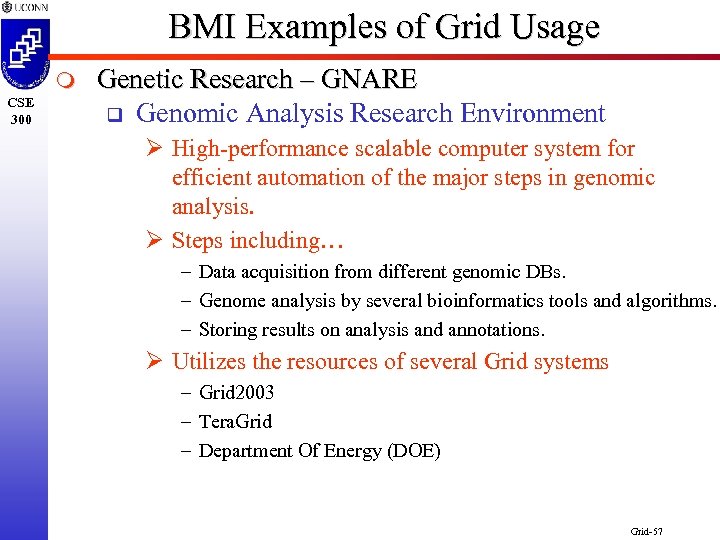

BMI Examples of Grid Usage
- Genetic research – GNARE
  - Genomic Analysis Research Environment
    - High-performance, scalable computer system for efficient automation of the major steps in genomic analysis.
    - Steps include…
      - Data acquisition from different genomic databases.
      - Genome analysis by several bioinformatics tools and algorithms.
      - Storing the results of analyses and annotations.
    - Uses the resources of several grid systems:
      - Grid 2003
      - TeraGrid
      - Department of Energy (DOE)

BMI Examples of Grid Usage
- Genetic research – GNARE
  - Composed of three major parts:
    - GADU
    - Integrated database
    - Web-based apps to run the system
  - GADU
    - The “heart” of the system.
    - Gateway to the grid, handling all computational analysis.
    - Automated, scalable, high-throughput workflow engine.
    - Executes computationally intensive workflows on the grid.
    - Interfaces to the integrated database.

BMI Examples of Grid Usage
- Genetic research – GNARE
  - GNARE architecture (figure).

BMI Examples of Grid Usage
- Genetic research – GNARE
  - Integrated database
    - Holds genome sequence data and annotations from monitored public databases.
    - Holds the results of data analysis from the GADU update engine.
  - Web-based applications
    - Front end for GADU's analysis services.
    - Front end for the integrated database.
  - GADU details
    - Heart of the system.
    - Diagram on next slide…

BMI Examples of Grid Usage
- Genetic research – GNARE
  - GADU architecture (figure).

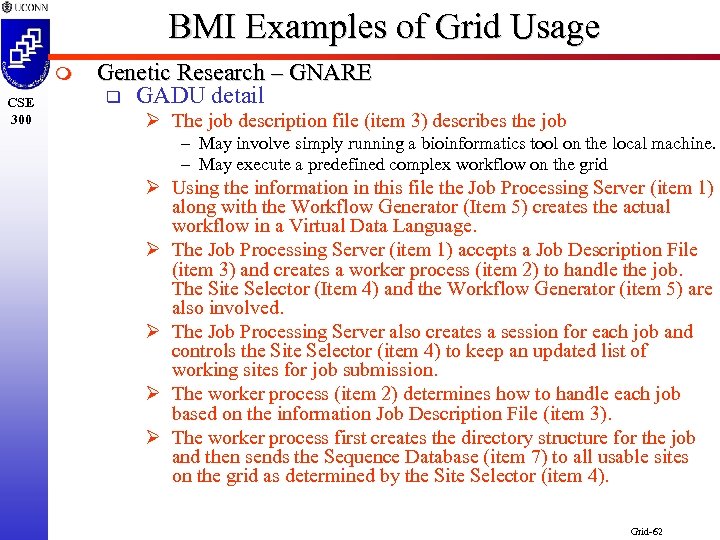

BMI Examples of Grid Usage
- Genetic research – GNARE
  - GADU detail
    - The Job Description File (item 3) describes the job, which:
      - May simply run a bioinformatics tool on the local machine.
      - May execute a predefined complex workflow on the grid.
    - Using the information in this file, the Job Processing Server (item 1), together with the Workflow Generator (item 5), creates the actual workflow in a Virtual Data Language.
    - The Job Processing Server (item 1) accepts a Job Description File (item 3) and creates a worker process (item 2) to handle the job. The Site Selector (item 4) and the Workflow Generator (item 5) are also involved.
    - The Job Processing Server also creates a session for each job and directs the Site Selector (item 4) to keep an updated list of working sites for job submission.
    - The worker process (item 2) determines how to handle each job based on the information in the Job Description File (item 3).
    - The worker process first creates the directory structure for the job and then sends the Sequence Database (item 7) to all usable sites on the grid, as determined by the Site Selector (item 4).

BMI Examples of Grid Usage
- Genetic research – GNARE
  - Additional uses include:
    - Biodefense research
    - Structural biology
    - Bioremediation
  - Benefits
    - Reduced human intervention in processing genome sequences.
    - Dramatic reduction in the time needed to process a sequence.
    - Simplified analysis of newly sequenced genomes.

BMI Examples of Grid Usage
- Genetic research – Grid-Allegro
  - “Marries” the Allegro genome analysis S/W with a computational grid architecture.
    - Goal: improve performance.
  - Allegro is a genome linkage analysis tool.
    - Readily subdividable into multiple sub-jobs.
      - Perfect for the massive parallel processing power of a grid.
    - Genotype simulation on a standalone PC is “doable”…
      - …but takes weeks or months, depending on the number of genotypes and pedigrees.
      - A grid can help with this!
  - Grid-Allegro is implemented on the Swegrid VO.
    - Globus-based Swedish national computer grid.
    - 600 computers in 6 clusters located across Sweden.

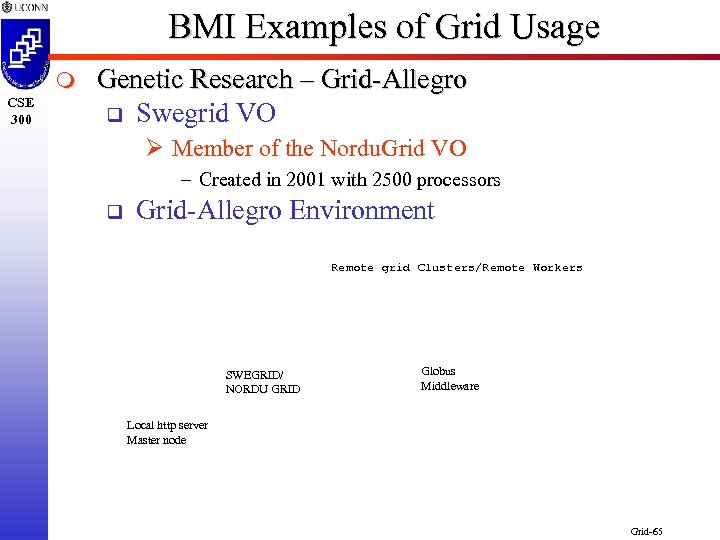

BMI Examples of Grid Usage m CSE 300 Genetic Research – Grid-Allegro q Swegrid VO Ø Member of the NorduGrid VO – Created in 2001 with 2500 processors q Grid-Allegro Environment (diagram): master node and local HTTP server, Globus middleware, SWEGRID/NorduGrid remote grid clusters and remote workers. Grid-65

BMI Examples of Grid Usage m CSE 300 Genetic Research – Grid-Allegro q Grid-Allegro system overview Ø Two programs, written in Perl, were created to implement the Grid-Allegro system. Ø The first program (gridallegrosteep1.pl) runs locally on the master node and – prepares the input files that will be submitted to the grid – creates a specified number of grid jobs using the Globus Resource Specification Language (RSL). Ø The grid-broker program (the second program, gridallegrosteep2.pl) handles the distribution of the jobs to the remote nodes of the grid. – It constantly evaluates the status of each job, managing resubmissions in case of failure or excessive delay in a grid scheduling queue. – It collects the output results when a remote node completes a job. Grid-66
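The broker's polling-and-resubmission behavior can be sketched as follows. This is a Python illustration of the idea, not a translation of gridallegrosteep2.pl. `FakeGrid` is a hypothetical scripted stand-in for querying Globus job status. The thresholds `max_wait_polls` and `max_attempts` are assumed parameters.

```python
PENDING, RUNNING, DONE, FAILED = "pending", "running", "done", "failed"

class FakeGrid:
    """Scripted stand-in for the grid: a status sequence per (job, attempt)."""
    def __init__(self, scripts):
        self.scripts = scripts          # job -> list of status sequences, one per attempt
        self.attempt, self.step = {}, {}

    def submit(self, job):
        self.attempt[job] = self.attempt.get(job, -1) + 1
        self.step[job] = 0

    def poll(self, job):
        seq = self.scripts[job][self.attempt[job]]
        status = seq[min(self.step[job], len(seq) - 1)]   # last status repeats
        self.step[job] += 1
        return status, (f"{job}-output" if status == DONE else None)

def broker(jobs, grid, max_wait_polls=3, max_attempts=5):
    """Poll every job; resubmit on failure or excessive queue delay; collect outputs."""
    attempts = {j: 1 for j in jobs}
    waited = {j: 0 for j in jobs}
    for j in jobs:
        grid.submit(j)
    results = {}
    while len(results) < len(jobs):      # a real broker would sleep between rounds
        for j in jobs:
            if j in results:
                continue
            status, output = grid.poll(j)
            if status == DONE:
                results[j] = output      # collect the finished job's output
                continue
            if status == PENDING:
                waited[j] += 1
            stuck = status == PENDING and waited[j] > max_wait_polls
            if status == FAILED or stuck:
                if attempts[j] >= max_attempts:
                    raise RuntimeError(f"job {j} gave up after {max_attempts} attempts")
                attempts[j] += 1
                waited[j] = 0
                grid.submit(j)
    return results, attempts

grid = FakeGrid({
    "job1": [[RUNNING, FAILED], [RUNNING, DONE]],   # fails once, then succeeds
    "job2": [[PENDING] * 10, [DONE]],               # stuck in a queue, resubmitted
    "job3": [[DONE]],
})
results, attempts = broker(["job1", "job2", "job3"], grid)
print(results, attempts)
```

Counting only polls spent in the PENDING state means a job that is actually running is never resubmitted just for being slow. A production broker would also cap total running time.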

BMI Examples of Grid Usage m CSE 300 Genetic Research – Grid-Allegro q Performance improvements Ø The Grid-Allegro system was tested with an analysis of Swedish families with Alzheimer’s disease (AD). Ø The study was conducted on 109 families consisting of 470 individuals. Ø A test of this size (requiring 1000 simulations) using the Allegro system on a 2 GHz/512 MB PC was estimated to take 1200 days, or about 3.3 years. Ø Including 12 hours of application movement latency and running with the full complement of nodes (600), the complete analysis took 2.6 days. Ø 62.4 hours versus 1200 days (28,800 hours) is a 461-fold improvement. Ø No hardware costs, only software! Grid-67
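The speedup arithmetic on this slide can be checked directly. The only added figure is the compute-only speedup, which is derived by subtracting the stated 12 hours of movement latency from the 2.6-day total.

```python
serial_days = 1200                 # estimated single-PC time (2 GHz, 512 MB)
grid_days = 2.6                    # measured with the full 600 nodes
latency_hours = 12                 # application movement, included in grid_days

serial_hours = serial_days * 24                  # 28,800 hours
grid_hours = grid_days * 24                      # 62.4 hours
speedup = serial_hours / grid_hours              # ~461.5
compute_only_speedup = serial_hours / (grid_hours - latency_hours)   # ~571.4

print(f"serial: {serial_hours} h = {serial_days / 365:.1f} years")
print(f"grid: {grid_hours:.1f} h, speedup {speedup:.1f}x")
print(f"excluding movement latency: {compute_only_speedup:.1f}x")
```

The exact ratio is about 461.5, so "461-fold" is the truncated value. The latency accounts for roughly a fifth of the grid run time.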

BMI Examples of Grid Usage m CSE 300 Medical Imaging q Mammography Ø Important tool for the detection of breast cancer. Ø Old film-based images made storage and retrieval difficult. – They must be converted to digital. Ø Modern images are digital, so no conversion is required. Ø Benefits of digital images – Faster retrieval and more efficient storage. – Analysis by doctors and researchers in remote locations. – Easier access to images by hospitals, universities, and research facilities, which would improve breast cancer screening and diagnosis. Ø There is a need for a system that can provide large-scale digital image storage and analysis services; allow multiple medical sites to store, process, and data-mine the images; manage mammograms as digital images; and make these images available to other hospitals, universities, and research institutions. Ø A data grid architecture would be an excellent choice for these requirements. Grid-68

BMI Examples of Grid Usage m CSE 300 Medical Imaging q Mammography system requirements Ø A typical digital mammographic image is 32 MB, with four images taken per patient examination, for a total of 128 MB per patient [3]. Ø Each record would need to include patient demographics, attending physician information, and related examination notes. Ø The system must scale to support thousands of patients, with thousands more added every year, across multiple hospitals and clinics. Ø Patient privacy and image access must be controlled to protect against unauthorized viewing and modification. Grid-69
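The per-exam figure above turns directly into a yearly storage estimate. The sketch assumes decimal units (1 TB = 1,000,000 MB) and counts only raw images. Demographics, notes, and replicas are excluded. The workload in the example (9 sites, 10,000 exams each) is hypothetical.

```python
IMAGE_MB = 32           # typical digital mammogram [3]
IMAGES_PER_EXAM = 4     # four images per patient examination

def exam_mb():
    return IMAGE_MB * IMAGES_PER_EXAM                   # 128 MB per patient exam

def yearly_storage_tb(exams_per_site, sites):
    """Raw image storage per year in decimal terabytes (1 TB = 1,000,000 MB)."""
    return exam_mb() * exams_per_site * sites / 1_000_000

# Hypothetical load: 9 sites each performing 10,000 exams per year.
print(yearly_storage_tb(10_000, 9))   # 11.52
```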

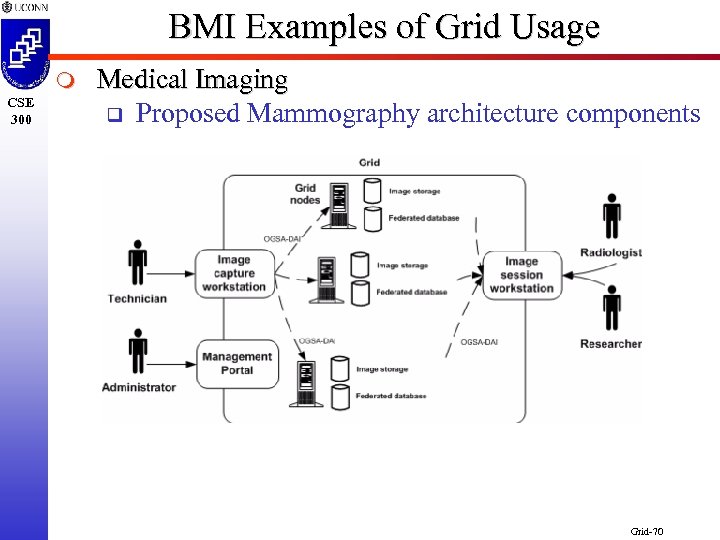

BMI Examples of Grid Usage m CSE 300 Medical Imaging q Proposed Mammography architecture components Grid-70

BMI Examples of Grid Usage m CSE 300 Medical Imaging q Mammography architecture component descriptions Ø Image capture workstation – Technicians digitize images and convert them to high-quality data images in the open Digital Imaging and Communications in Medicine (DICOM) format. – The DICOM standard was developed by the American College of Radiology along with others. – A common format simplifies the development of image recognition and analysis programs. Ø Grid nodes – Grid nodes are the resources contributed to the grid. – Each participating university, clinic, or research facility adds servers along with image storage for DICOM files. – Each node would have a federated relational database to store patient data and image metadata. Grid-71

BMI Examples of Grid Usage m CSE 300 Medical Imaging q Mammography architecture component descriptions Ø Image session workstation – Radiologists and researchers retrieve the images and related data to perform diagnostic and research activities. Ø Management portal – A centrally located component that administrators use to perform management tasks, such as managing the system workload and capacity. Ø OGSA-DAI – Open Grid Services Architecture – Data Access and Integration – provides a standard interface that lets a distributed query processing system access data in different databases using SOA and open standards. Grid-72
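The core idea of querying several node databases through one interface can be sketched with Python's built-in `sqlite3`. This does not use the OGSA-DAI API. The table layout, site names, patient IDs, and file paths are hypothetical. Each in-memory database stands in for one grid node's metadata store.

```python
import sqlite3

def make_node(rows):
    """Create one hypothetical grid node holding image metadata."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE images (patient_id TEXT, site TEXT, view TEXT, path TEXT)")
    db.executemany("INSERT INTO images VALUES (?, ?, ?, ?)", rows)
    return db

def federated_query(nodes, sql, params=()):
    """Send the same query to every node and merge the results."""
    results = []
    for db in nodes:
        results.extend(db.execute(sql, params).fetchall())
    return sorted(results)

nodes = [
    make_node([("p1", "clinicA", "CC", "/dicom/p1_cc.dcm"),
               ("p1", "clinicA", "MLO", "/dicom/p1_mlo.dcm")]),
    make_node([("p2", "univB", "CC", "/dicom/p2_cc.dcm")]),
]
for row in federated_query(nodes, "SELECT patient_id, site, path FROM images WHERE view = ?", ("CC",)):
    print(row)
```

The client never needs to know which node holds a given image. A real deployment would also enforce the access controls listed in the system requirements before returning rows.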

BMI Examples of Grid Usage m CSE 300 Medical Imaging q Mammography architecture component descriptions Ø Security and Privacy – Data is protected using cryptography and other security strategies defined in the OGSA standard. q Current implementation Ø Uses the GT4 architecture. Ø Includes four screening centers and five universities, with approximately 35 staff members and 256 terabytes of data stored per year. Ø Supports only mammography, but future plans include expanding to other digital images and increasing the number of participating members to include facilities worldwide. Ø Data mining technologies that let a researcher find “similar” images are also planned for future releases. Grid-73

BMI Examples of Grid Usage m CSE 300 Neuroimaging q Helps physicians and researchers expand their knowledge and understanding of the brain. q Helps with detecting abnormalities and cancers. q Includes imaging technologies such as Ø Positron Emission Tomography (PET) Ø functional Magnetic Resonance Imaging (fMRI) q Advances in computational infrastructure are required for the storage, analysis, and sharing of fMRI data. q Properly analyzing fMRI scans of the brain requires repeatedly averaging subsections of time-series (TS) data and correlating these TS data. Grid-74
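The averaging-and-correlation step can be illustrated in plain Python. This is one simple interpretation: non-overlapping windows are averaged, then compared with a Pearson correlation. It is not the exact procedure of the cited paper. The two region signals are made-up numbers.

```python
from math import sqrt

def window_means(series, length):
    """Average consecutive, non-overlapping subsections of a time series."""
    return [sum(series[s:s + length]) / length
            for s in range(0, len(series) - length + 1, length)]

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

# Hypothetical signals from two brain regions, 12 samples each.
region_a = [1, 3, 2, 4, 6, 5, 7, 9, 8, 6, 4, 5]
region_b = [2, 2, 3, 5, 5, 6, 8, 8, 9, 5, 5, 4]
a_means = window_means(region_a, 3)
b_means = window_means(region_b, 3)
print(a_means, b_means, round(pearson(a_means, b_means), 3))
```

Repeating this for every pair of regions and many window choices is what makes the workload large. Each pair is independent, so the pairs can be farmed out to separate machines.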

BMI Examples of Grid Usage m CSE 300 Neuroimaging q System proposed in paper [Uri Hasson. Improving the analysis, storage and sharing of neuroimaging data using relational databases and distributed computing. NeuroImage 39 (2008)] q Proposes a grid-enabled, DBMS-based approach to handle the computational demands of imaging research and the storage and analysis of fMRI data. q Requirements of the proposed system Ø Efficiently store data. Ø Enable rapid selection of data. Ø Make data easily accessible to both local and remote users. Grid-75

BMI Examples of Grid Usage m CSE 300 Neuroimaging q The cited advantages of a database-centric framework include Ø Using the DBMS for storing and sharing data. Ø Taking advantage of DBMS capabilities by making the database an integral part of the fMRI data analysis workflow. q System Architecture Ø Distributed clients pull data from a central server and work independently and simultaneously to conduct their analyses. Ø The server maintains a relational database that stores the data to be analyzed as well as the metadata (the assignment of nodes to anatomical regions of interest). Ø Regional replicas of the database are maintained for locality, performance, and backup. – A TS data set can be 10 GB or more, so localized replicas reduce network latency. – Replicas also improve database concurrency performance. Grid-76
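Making the database "an integral part of the analysis workflow" can mean pushing work such as region averaging into SQL. The sketch below uses an in-memory SQLite database as a hypothetical stand-in for the central server. It has one table of time-series samples and one table mapping nodes to regions of interest. The schema and values are assumptions, not the paper's schema.

```python
import sqlite3

def build_demo_db():
    """In-memory stand-in for the central server's relational database."""
    db = sqlite3.connect(":memory:")
    db.executescript("""
        CREATE TABLE ts  (node INTEGER, t INTEGER, value REAL);  -- time-series samples
        CREATE TABLE roi (node INTEGER, region TEXT);            -- node -> region of interest
    """)
    db.executemany("INSERT INTO roi VALUES (?, ?)", [(1, "A"), (2, "A"), (3, "B")])
    db.executemany("INSERT INTO ts VALUES (?, ?, ?)", [
        (1, 0, 1.0), (2, 0, 3.0), (3, 0, 5.0),
        (1, 1, 2.0), (2, 1, 4.0), (3, 1, 7.0),
    ])
    return db

def region_means(db):
    """Let the DBMS average each region's nodes at every time point."""
    return db.execute("""
        SELECT roi.region, ts.t, AVG(ts.value)
        FROM ts JOIN roi ON ts.node = roi.node
        GROUP BY roi.region, ts.t
        ORDER BY roi.region, ts.t
    """).fetchall()

print(region_means(build_demo_db()))
# [('A', 0, 2.0), ('A', 1, 3.0), ('B', 0, 5.0), ('B', 1, 7.0)]
```

A client pulling only these per-region averages transfers far less data than it would by pulling raw samples. The same reasoning motivates the regional replicas for large TS data sets.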

BMI Examples of Grid Usage m CSE 300 Neuroimaging q System diagram Grid-77

BMI Examples of Grid Usage m CSE 300 Drug Discovery and Design q Drug design uses a molecular modeling technique that requires screening millions of molecular compounds stored in a chemical database (CDB) to identify those that are potentially useful drugs. q It is a lengthy process that can take up to 15 years from the first compound synthesis in the laboratory to the drug being available to the consumer. q This process has been estimated to cost an average of $800 million per drug [3]. q The computational screening technique is referred to as molecular docking. q Docking helps scientists predict how small molecules chemically bind to an enzyme or a protein receptor of known three-dimensional molecular structure. Grid-78

BMI Examples of Grid Usage m CSE 300 Drug Discovery and Design Grid-79

BMI Examples of Grid Usage m CSE 300 Drug Discovery and Design q The docking process is both a computationally intensive and a data-intensive task, Ø which makes it a perfect candidate for computer grid technologies. q Molecular docking requires information about the molecule, which is located in one of many large CDBs. Ø Each CDB can require as much as 1 terabyte of storage. Ø However, each docking process requires only one molecular compound record (a ligand[1]), not the entire database. [1] An ion, a molecule, or a molecular group that binds to another chemical entity to form a larger complex entity. [4] Grid-80
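Because each docking job needs only one ligand record, a CDB server can index record positions once and then return single records on demand. The sketch below assumes the CDB is stored as an SD file, where each record ends with a `$$$$` line. The tiny in-memory file and the function names are hypothetical.

```python
import io

DELIM = b"$$$$"   # SD-file record terminator

def build_index(stream):
    """Return the byte offset at which each record in a binary stream starts."""
    offsets, pos = [0], 0
    for line in stream:
        pos += len(line)
        if line.strip() == DELIM:
            offsets.append(pos)
    if offsets[-1] == pos:      # nothing starts after the final delimiter
        offsets.pop()
    return offsets

def fetch_record(stream, offset):
    """Seek to one record and read it up to (and including) its delimiter."""
    stream.seek(offset)
    lines = []
    for line in stream:
        lines.append(line)
        if line.strip() == DELIM:
            break
    return b"".join(lines)

# Tiny in-memory stand-in for a multi-gigabyte CDB file.
cdb = io.BytesIO(b"ligand-1\n  body\nM  END\n$$$$\n"
                 b"ligand-2\n  body\nM  END\n$$$$\n")
index = build_index(cdb)
print(fetch_record(cdb, index[1]).decode())
```

The index is built in a single pass. After that, each request reads only the bytes of one record, so only that record has to cross the network to a grid node.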

BMI Examples of Grid Usage m CSE 300 Drug Discovery and Design q Designing a drug requires screening millions of ligands located in different CDBs. q Depending on the complexity of the compound, a single screening may take anywhere from a few minutes to a few hours on a standard PC. Ø Screening all compounds in a single database can take years! Ø A drug design problem that involves screening 180,000 compounds, with each compound screening job taking three hours on a desktop PC, requires (180,000 × 3) 540,000 hours, or more than 61 years! Grid-81

BMI Examples of Grid Usage CSE 300 m Drug Discovery and Design q The screening process can be implemented in parallel using grid technology. Ø Depending on the grid size, this time (61 years) can be significantly reduced. q Reference [4] proposes a “Virtual Laboratory Tool” that transforms existing molecular modeling applications so they can be processed in parallel. q Sub-jobs require minimal CDB access but are computationally intense. Grid-82
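The effect of grid size on the 540,000-hour screening problem can be estimated as follows. This is an idealized sketch. It assumes every screening job takes exactly three hours, jobs are independent, and data movement and scheduling overhead are zero. The node counts are hypothetical.

```python
COMPOUNDS = 180_000
HOURS_PER_COMPOUND = 3
HOURS_PER_YEAR = 24 * 365

def wall_clock_hours(nodes):
    """Ideal time when independent screening jobs are spread evenly over `nodes`."""
    jobs_on_busiest_node = -(-COMPOUNDS // nodes)   # ceiling division
    return jobs_on_busiest_node * HOURS_PER_COMPOUND

for nodes in (1, 100, 1000):   # hypothetical grid sizes
    hours = wall_clock_hours(nodes)
    print(f"{nodes:>5} nodes: {hours:>7,} h ({hours / HOURS_PER_YEAR:.2f} years)")
```

Under these assumptions, 1,000 nodes bring the job down to 540 hours, about three weeks. Real runs would be slower because of queueing and data transfer.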

BMI Examples of Grid Usage m CSE 300 Drug Discovery and Design q Virtual Laboratory (VL) workflow… Ø The drug designer formulates the molecular docking problem. Ø The problem is submitted to the Grid Resource Broker, along with performance and optimization requirements. Ø The broker discovers the resources, establishing their cost and capabilities. Ø A schedule is then prepared to map docking jobs to resources. Ø The broker dispatcher deploys its agents to the appropriate resources. Ø The agent executes a list of commands specified in the job’s task specification. Grid-83
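The scheduling step (mapping docking jobs to resources based on their cost and capabilities) can be illustrated with a simple greedy heuristic: use the cheapest resources first, as far as each can go before a deadline. This is a simplified sketch in the spirit of deadline-and-budget brokering. It is not Nimrod/G's actual scheduling algorithm. The resource names, prices, and speeds are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Resource:
    name: str
    cost_per_job: float    # price quoted by the resource owner (hypothetical units)
    hours_per_job: float   # estimated run time of one docking job on this resource
    slots: int = 1         # docking jobs it can run at the same time

def cost_optimized_schedule(num_jobs, resources, deadline_hours):
    """Map jobs to the cheapest resources first while still meeting the deadline."""
    plan, remaining = {}, num_jobs
    for r in sorted(resources, key=lambda r: r.cost_per_job):
        # Jobs this resource can finish before the deadline.
        capacity = int(deadline_hours // r.hours_per_job) * r.slots
        take = min(capacity, remaining)
        if take:
            plan[r.name] = take
            remaining -= take
        if remaining == 0:
            break
    if remaining:
        raise ValueError(f"{remaining} jobs cannot finish before the deadline")
    return plan

resources = [
    Resource("commercial", cost_per_job=5.0, hours_per_job=1.0, slots=50),
    Resource("campus", cost_per_job=1.0, hours_per_job=3.0, slots=10),
    Resource("partner", cost_per_job=2.0, hours_per_job=2.0, slots=20),
]
plan = cost_optimized_schedule(200, resources, deadline_hours=12)
print(plan)   # {'campus': 40, 'partner': 120, 'commercial': 40}
```

The expensive but fast resource is used only for the 40 jobs that the cheaper resources cannot finish in time. A tighter deadline shifts more work onto it, which raises the total cost.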

BMI Examples of Grid Usage m CSE 300 Drug Discovery and Design q Virtual Laboratory (VL) workflow… Ø Typical tasks may include – Copy executables and input files from the user machine, or extract records from a remote CDB. – Substitute parameters declared in the input file. – Execute the program. – Copy the results back to the user. q Virtual Laboratory features Ø The VL builds on existing grid technologies. Ø It provides new tools for managing and accessing remote CDBs as a network service. Grid-84
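An agent executing a job's task list amounts to filling declared parameters into each command and running the commands in order. The sketch uses Python's `string.Template` for the substitution. Every command name (`copy`, `cdb_extract`, `run_dock`, and so on), the host prefixes, and the parameter names are hypothetical placeholders, not Nimrod or DOCK syntax. The `execute` callback simply records commands here.

```python
from string import Template

def run_task(commands, params, execute):
    """Substitute declared parameters into each command, then run them in order."""
    for command in commands:
        execute(Template(command).substitute(params))   # KeyError if a parameter is missing

# Hypothetical task specification for one docking job; every command is illustrative.
task_spec = [
    "copy user:dock_template.in node:dock_template.in",
    "cdb_extract $cdb $ligand node:$ligand.mol2",
    "substitute node:dock_template.in node:dock.in ligand=$ligand",
    "execute run_dock node:dock.in node:$ligand.out",
    "copy node:$ligand.out user:results/$ligand.out",
]
executed = []
run_task(task_spec, {"cdb": "cdb-server-1", "ligand": "lig00042"}, executed.append)
print("\n".join(executed))
```

Passing `execute` as a parameter keeps the substitution logic separate from how commands actually run. A real agent would dispatch each command to a file-transfer or process-launch service.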

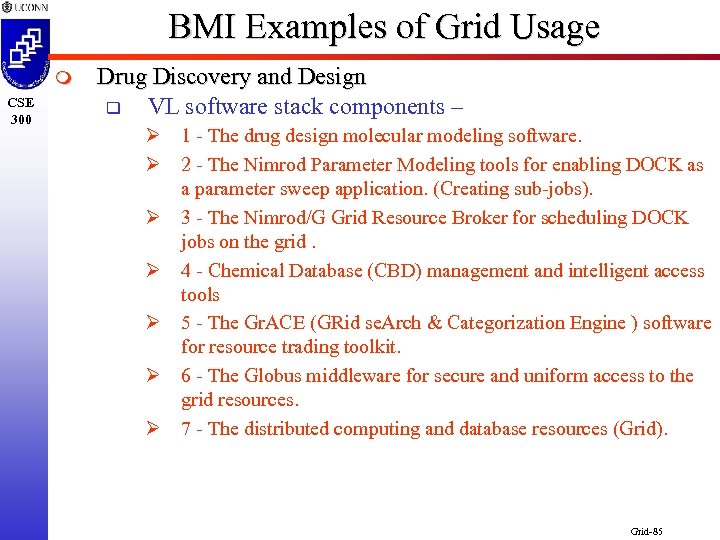

BMI Examples of Grid Usage m CSE 300 Drug Discovery and Design q VL software stack components Ø 1 - The drug design molecular modeling software (DOCK). Ø 2 - The Nimrod parameter modeling tools for enabling DOCK as a parameter sweep application (creating sub-jobs). Ø 3 - The Nimrod/G Grid Resource Broker for scheduling DOCK jobs on the grid. Ø 4 - Chemical Database (CDB) management and intelligent access tools. Ø 5 - The GRACE (GRid Architecture for Computational Economy) resource trading toolkit. Ø 6 - The Globus middleware for secure and uniform access to the grid resources. Ø 7 - The distributed computing and database resources (the grid). Grid-85
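A parameter sweep turns one application into many independent sub-jobs, one for each combination of parameter values. The sketch below shows the expansion with `itertools.product`. It is not Nimrod's plan-file language, and the ligand and receptor names are hypothetical.

```python
from itertools import product

def parameter_sweep(**axes):
    """Expand named parameter lists into one job (dict) per combination."""
    names = list(axes)
    return [dict(zip(names, values)) for values in product(*axes.values())]

# Hypothetical sweep: every ligand docked against every receptor.
jobs = parameter_sweep(ligand=["lig001", "lig002", "lig003"],
                       receptor=["receptorA", "receptorB"])
for job in jobs:
    print(job)
```

The number of jobs is the product of the axis lengths, here 3 × 2 = 6. This is why sweeping over a whole CDB quickly produces enough independent work to fill a grid.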

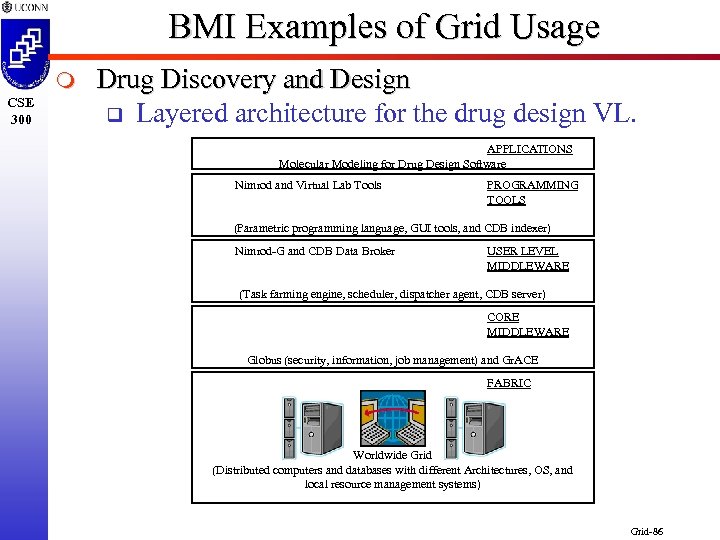

BMI Examples of Grid Usage m CSE 300 Drug Discovery and Design q Layered architecture for the drug design VL (top to bottom): Ø APPLICATIONS – Molecular Modeling for Drug Design Software Ø PROGRAMMING TOOLS – Nimrod and Virtual Lab Tools (parametric programming language, GUI tools, and CDB indexer) Ø USER LEVEL MIDDLEWARE – Nimrod-G and CDB Data Broker (task farming engine, scheduler, dispatcher agent, CDB server) Ø CORE MIDDLEWARE – Globus (security, information, job management) and GRACE Ø FABRIC – Worldwide Grid (distributed computers and databases with different architectures, operating systems, and local resource management systems) Grid-86

Topics in BMI: Grid Computing CSE 300 m Conclusion q Computational grid systems bring together the resources of multiple computer systems. q They create a (virtual) massive supercomputer for use by people and organizations (a virtual organization, VO) to address a particular computational need. q BMI applications can be particularly computationally challenging. Ø Some require years to complete on conventional PCs. q Grid technologies have shown a more than 400-fold increase in performance. Ø 1200 days reduced to 2.6 days (Grid-Allegro). Grid-87

Topics in BMI: Grid Computing m CSE 300 Conclusion q What a grid enables… Ø Lessening the time for mammography analysis and diagnosis. Ø Enabling analysis of the human genome at a much faster pace. Ø Helping with brain scan analysis and diagnosis. Ø Quickening the process of drug discovery. Ø Allowing for better and faster research in all fields. q Grid technology is saving Time, Money, and LIVES! q Can you think of a better use for a computer? Grid-88

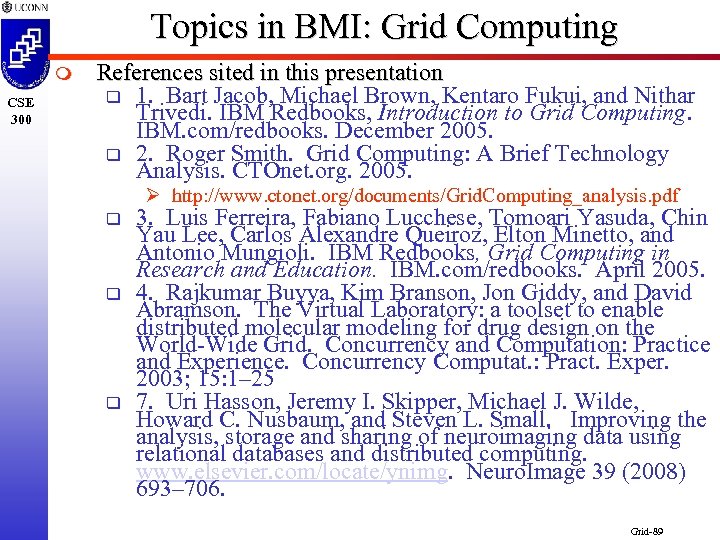

Topics in BMI: Grid Computing m CSE 300 References cited in this presentation q 1. Bart Jacob, Michael Brown, Kentaro Fukui, and Nithar Trivedi. IBM Redbooks, Introduction to Grid Computing. ibm.com/redbooks. December 2005. q 2. Roger Smith. Grid Computing: A Brief Technology Analysis. CTOnet.org. 2005. Ø http://www.ctonet.org/documents/GridComputing_analysis.pdf q 3. Luis Ferreira, Fabiano Lucchese, Tomoari Yasuda, Chin Yau Lee, Carlos Alexandre Queiroz, Elton Minetto, and Antonio Mungioli. IBM Redbooks, Grid Computing in Research and Education. ibm.com/redbooks. April 2005. q 4. Rajkumar Buyya, Kim Branson, Jon Giddy, and David Abramson. The Virtual Laboratory: a toolset to enable distributed molecular modeling for drug design on the World-Wide Grid. Concurrency and Computation: Practice and Experience 2003; 15:1–25. q 7. Uri Hasson, Jeremy I. Skipper, Michael J. Wilde, Howard C. Nusbaum, and Steven L. Small. Improving the analysis, storage and sharing of neuroimaging data using relational databases and distributed computing. NeuroImage 39 (2008) 693–706. www.elsevier.com/locate/ynimg. Grid-89

Topics in BMI: Grid Computing m Questions? m Comments? m Want to join a Grid system to help the world? q http://www.worldcommunitygrid.org/ q http://boinc.berkeley.edu/ m Thank you! CSE 300 Grid-90
