- Number of slides: 44

Three (and a half) Trends: The Near Future of NLP

Eduard Hovy
Information Sciences Institute
University of Southern California

Talk overview

1. Introduction
2. 3 important lessons since 2001
3. NLP is notation transformation
4. Three styles for future work
5. Conclusion

Three Important Lessons Since 2001

Lesson 1: Banko and Brill, HLT-01

- Confusion set disambiguation task: {you’re | your}, {to | too | two}, {its | it’s}
- 5 algorithms: ngram table, winnow, perceptron, transformation-based learning, decision trees
- Training: $10^6$ to $10^9$ words
- Lessons:
  - All methods improved to almost the same point
  - A simple method can end above a complex one
  - Don’t waste your time with algorithms and optimization

You don’t have to be smart, you just need enough training data
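To make the "ngram table" method concrete, here is a minimal sketch of confusion-set disambiguation by table lookup. It is an illustration under stated assumptions, not Banko and Brill's actual system: the trigram context (one word on each side), the tiny training list, and the names `train_ngram_table` and `disambiguate` are all hypothetical.

```python
from collections import Counter

def train_ngram_table(sentences):
    """Count (previous word, word, next word) trigrams in the training text."""
    table = Counter()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for i in range(1, len(tokens) - 1):
            table[(tokens[i - 1], tokens[i], tokens[i + 1])] += 1
    return table

def disambiguate(prev_word, next_word, confusion_set, table):
    """Pick the member of the confusion set seen most often in this context.

    Candidates are sorted first so that ties are broken deterministically.
    """
    return max(sorted(confusion_set),
               key=lambda w: table[(prev_word, w, next_word)])

# Toy training data (hypothetical); the slide's point is that the real
# table would be built from 10^6 to 10^9 words.
table = train_ngram_table([
    "I want to go home",
    "She has two cats",
    "That is too much",
    "I have two dogs",
])
choice = disambiguate("want", "go", {"to", "too", "two"}, table)
```

With more training text the table simply fills in more contexts; nothing about the method itself changes, which is the lesson the slide draws.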

Lesson 2: Och, ACL-02

- Best MT system in the world (Arabic→English, by BLEU and NIST, 2002–2005): Och’s work
- Method: learn ngram correspondence patterns (alignment templates) using MaxEnt (log-linear translation model), trained to maximize BLEU score
- Approximately: EBMT + Viterbi search
- Lesson: the more you store, the better your MT

You don’t have to be smart, you just need enough storage

Storage needs

- Unigram translation table: bilingual dictionary
  - 200K words on each side (= 2 MB if each word is 10 chars)
- Bigram translation table (every bigram):
  - Lexicon: 200K ≈ $2^{18}$ words
  - Table entries: [200K words + translations] = $4 \times 10^{10}$ entries
  - Each entry size = 4 words × 18 bits = 9 bytes
  - $4 \times 10^{10}$ entries × 9 bytes = $36 \times 10^{10}$ ≈ $4 \times 10^{11}$ bytes = 0.4 TB (= $1500 at today’s prices!)
- Trigram translation table (every trigram):
  - $1.2 \times 10^5$ TB: ready in 2008?
- Better: store only attested ngrams (up to 5? 7? 9?), fall back to shorter ones when not in table…
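The bigram arithmetic on this slide can be checked with a few lines of code. This is a sketch under the slide's own assumptions (a 200K-word lexicon, 4 words per entry); the function name `bigram_table_estimate` and the reading of the entry count as 200K × 200K source bigrams are assumptions added here.

```python
import math

def bigram_table_estimate(vocab_size=200_000, words_per_entry=4):
    """Estimate the size of a table holding every possible source bigram."""
    # Bits needed to index one word of the lexicon: ceil(log2(200K)) = 18.
    bits_per_word = math.ceil(math.log2(vocab_size))
    # Assumption: one entry per possible bigram, i.e. vocab_size squared.
    entries = vocab_size ** 2
    # 4 word indices (2 source + 2 target) at 18 bits each = 72 bits = 9 bytes.
    entry_bytes = words_per_entry * bits_per_word / 8
    total_bytes = entries * entry_bytes
    return {
        "bits_per_word": bits_per_word,
        "entries": entries,
        "entry_bytes": entry_bytes,
        "terabytes": total_bytes / 1e12,
    }

estimate = bigram_table_estimate()
```

The exact product is 0.36 TB, which the slide rounds up to 0.4 TB. The same reasoning applied to trigrams gives 200K cubed, or $8 \times 10^{15}$ entries, which is why the slide suggests storing only attested ngrams instead.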

Lesson 3: Fleischman and Hovy, ACL-03

- Text mining studies: classify locations and people from free text into fine-grain classes, sometimes better than humans
- Recent goal: extract all people and organizations from the web
- Method:
  - Download 15 GB text from web and preprocess
  - Identify named entities (BBN’s IdentiFinder (Bikel et al. 93))
  - Extract ones with descriptive phrases (CN/PN and APOS): `${NNP}*${VBG}*${JJ}*${NN}+${NNP}+`
  - Noisy; so filter (WEKA: Naïve Bayes, Decision Tree, Decision List, Support Vector Machines, Boosting, Bagging)
  - Categorize them in ontology: companies, people, rivers…
- Result: over 2 million examples, collapsing into 1 million instances
  - Avg: 2 mentions/instance, 40+ for George W. Bush

Example phrases:

- trainer/NN Victor/NNP Valle/NNP
- ABC/NN spokesman/NN Tom/NNP Mackin/NNP
- official/NN Radio/NNP Vilnius/NNP
- German/NNP expert/NN Riedhart/NNP
- Dumez/NN Investment/NNP
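The tag pattern on this slide (zero or more NNP, VBG, JJ, then one or more NN, then one or more NNP) can be matched with an ordinary regular expression once each tag is mapped to a single character. The sketch below is only an illustration: the `word/TAG` input format follows the slide's examples, while the single-letter encoding and the function names are assumptions, not the authors' implementation.

```python
import re

# Hypothetical one-letter codes so that regex offsets equal token indices.
TAG_CODES = {"NNP": "P", "VBG": "G", "JJ": "J", "NN": "N"}

# NNP* VBG* JJ* NN+ NNP+ expressed over the one-letter codes.
TAG_PATTERN = re.compile(r"P*G*J*N+P+")

def parse_tagged(text):
    """Split 'word/TAG word/TAG ...' into (word, tag) pairs.

    Assumes every token carries a tag after its last slash.
    """
    return [tuple(token.rsplit("/", 1)) for token in text.split()]

def find_descriptive_phrases(tagged):
    """Return the word sequences whose tags match the slide's pattern."""
    codes = "".join(TAG_CODES.get(tag, "x") for _, tag in tagged)
    return [
        " ".join(word for word, _ in tagged[m.start():m.end()])
        for m in TAG_PATTERN.finditer(codes)
    ]

sentence = parse_tagged(
    "the/DT ABC/NN spokesman/NN Tom/NNP Mackin/NNP said/VBD"
)
phrases = find_descriptive_phrases(sentence)
```

As the slide notes, matches of this kind are noisy, so a pattern matcher like this would only be the step before the learned filter.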

Testing quality of instance data

- Test: QA on “who is X?”:
  - 100 questions from AskJeeves
  - Compared against ISI’s TextMap
  - Memory system scored 25% better
  - Over half of questions that TextMap got wrong could have benefited from information in the concept-instance pairs
  - This method took 10 seconds, TextMap took ~9 hours
- Lesson: don’t waste your time building a fancy QA system

You don’t have to be smart, you just need enough time

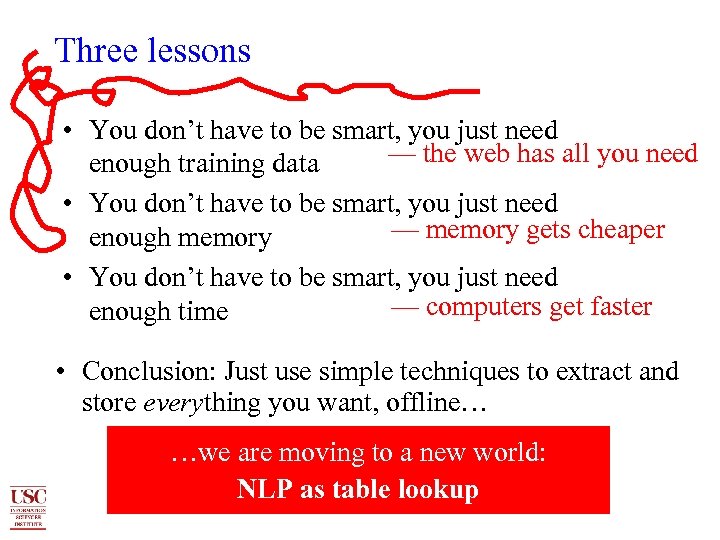

Three lessons

- You don’t have to be smart, you just need enough training data: the web has all you need
- You don’t have to be smart, you just need enough memory: memory gets cheaper
- You don’t have to be smart, you just need enough time: computers get faster
- Conclusion: Just use simple techniques to extract and store everything you want, offline…

…we are moving to a new world: NLP as table lookup

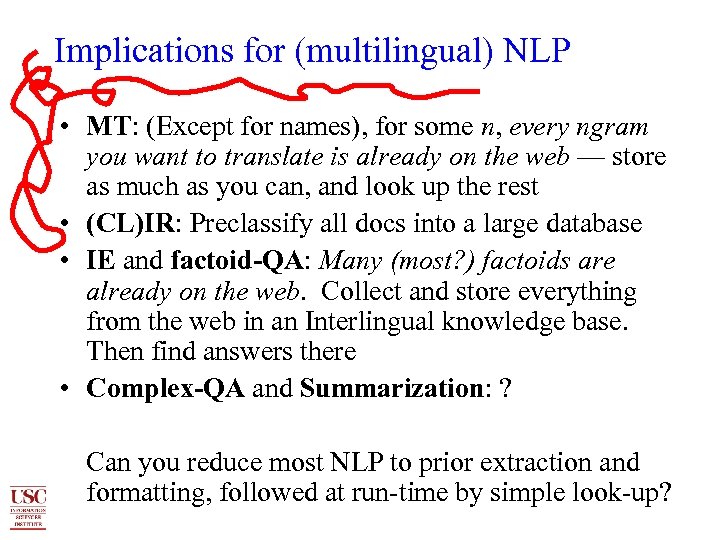

Implications for (multilingual) NLP

- MT: (Except for names), for some n, every ngram you want to translate is already on the web: store as much as you can, and look up the rest
- (CL)IR: Preclassify all docs into a large database
- IE and factoid-QA: Many (most?) factoids are already on the web. Collect and store everything from the web in an Interlingual knowledge base. Then find answers there
- Complex-QA and Summarization: ?

Can you reduce most NLP to prior extraction and formatting, followed at run-time by simple look-up?
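The MT bullet, combined with the earlier suggestion to store only attested ngrams and fall back to shorter ones, amounts to a longest-match lookup. The sketch below shows one way that could look. The toy phrase table, the greedy left-to-right strategy, and the choice to pass unknown tokens (such as names) through unchanged are all assumptions made for illustration.

```python
def translate_by_lookup(tokens, table, max_n=3):
    """Greedy longest-match translation using a stored ngram table.

    At each position, try the longest ngram first and back off to shorter
    ones; tokens with no entry at all are copied through unchanged.
    """
    output = []
    i = 0
    while i < len(tokens):
        for n in range(min(max_n, len(tokens) - i), 0, -1):
            key = tuple(tokens[i:i + n])
            if key in table:
                output.append(table[key])
                i += n
                break
        else:
            # No ngram of any length is stored here (for example, a name).
            output.append(tokens[i])
            i += 1
    return output

# Hypothetical toy table; a real one would hold ngrams harvested offline.
toy_table = {
    ("guten", "morgen"): "good morning",
    ("guten",): "good",
    ("morgen",): "tomorrow",
}
result = translate_by_lookup(["guten", "morgen", "Hovy"], toy_table)
```

The longer entry wins over the two unigram entries, which is the behaviour the "fall back to shorter ones" idea relies on: shorter ngrams are used only when the longer context is not in the table.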

3. NLP as Notation Transformation

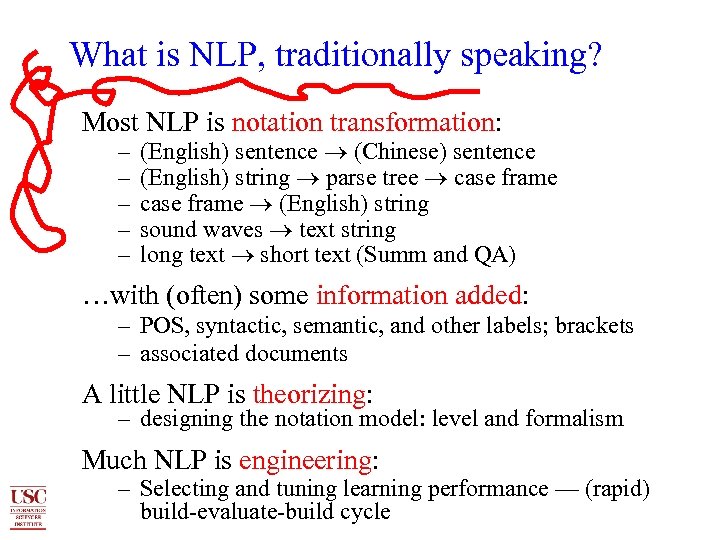

What is NLP, traditionally speaking?

Most NLP is notation transformation:

- (English) sentence → (Chinese) sentence
- (English) string → parse tree → case frame → (English) string
- sound waves → text string
- long text → short text (Summ and QA)

…with (often) some information added:

- POS, syntactic, semantic, and other labels; brackets
- associated documents

A little NLP is theorizing:

- designing the notation model: level and formalism

Much NLP is engineering:

- Selecting and tuning learning performance: (rapid) build-evaluate-build cycle

A new option for you

- Traditional NLP: you build the transformation rules by hand
- Statistical NLP: you build them by machine
- Your new options:
  1. Traditional analysis (new MT system in 3 years)
  2. Statistical approach (new MT language in 1 day)
  3. Table lookup (?)

4. Three Styles of Future Work

Responding to the Change

The world is changing. How do you respond?

1. The new traditional style: analysis of phenomena, corpus annotation of transformations, BUT leave system implementation for others
2. The statistical style: typical NLP using statistics; learn to do the (old-style) transformations as annotated in the corpora
3. The greedy style: build big tables for everything and do lookup at run-time

4.1 The New Traditional Style: Careful Analysis, No Code

Two multilingual annotation projects

- IL-Annot: NSF-funded: New Mexico, Maryland, Columbia, MITRE, CMU, ISI (2003–04)
  - Goal: develop MT / interlingua representations and test them by human annotation on texts from six languages (Japanese, Arabic, Korean, Spanish, French, English)
  - Outcomes:
    - IL design for set of complex representational phenomena
    - Annotation methodology, manuals, tools, evaluations
    - Annotated parallel texts according to IL, for training data
- OntoBank: DARPA-funded: BBN, Penn, ISI (2004–)
  - Goal: annotate 1 million words (semantic symbols for nouns, verbs, adjs, advs, cross-refs, and perhaps case roles)

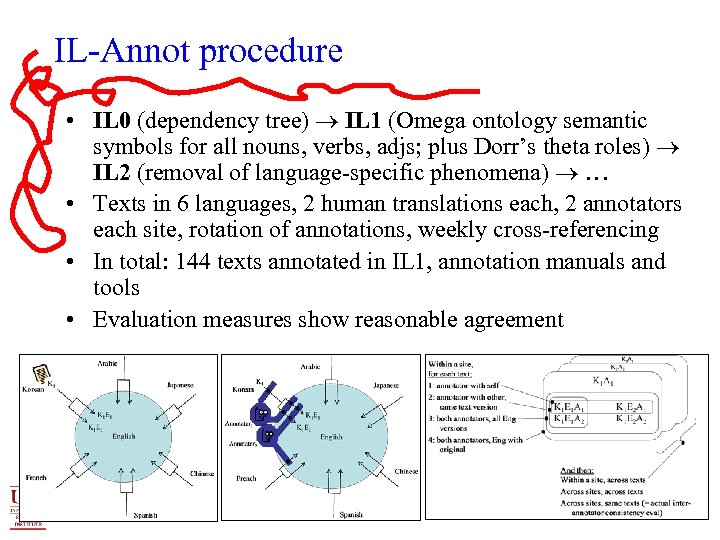

IL-Annot procedure

- IL0 (dependency tree) → IL1 (Omega ontology semantic symbols for all nouns, verbs, adjs; plus Dorr’s theta roles) → IL2 (removal of language-specific phenomena) → …
- Texts in 6 languages, 2 human translations each, 2 annotators at each site, rotation of annotations, weekly cross-referencing
- In total: 144 texts annotated in IL1, annotation manuals and tools
- Evaluation measures show reasonable agreement

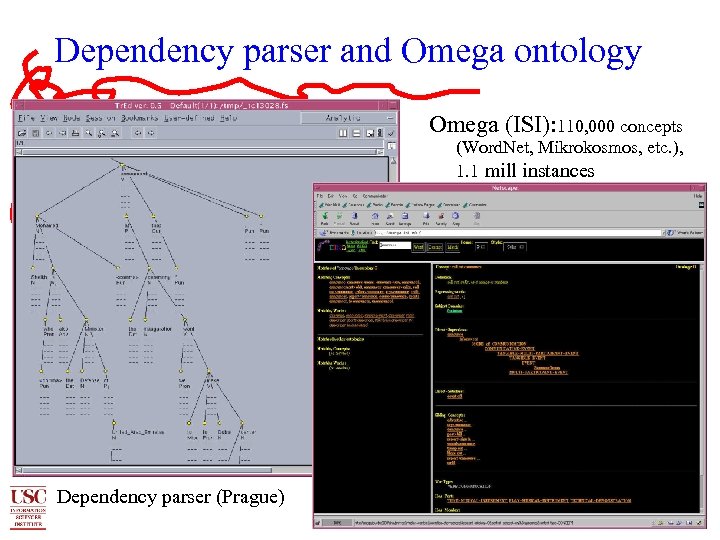

Dependency parser and Omega ontology

- Omega (ISI): 110,000 concepts (WordNet, Mikrokosmos, etc.), 1.1 million instances
- Dependency parser (Prague)

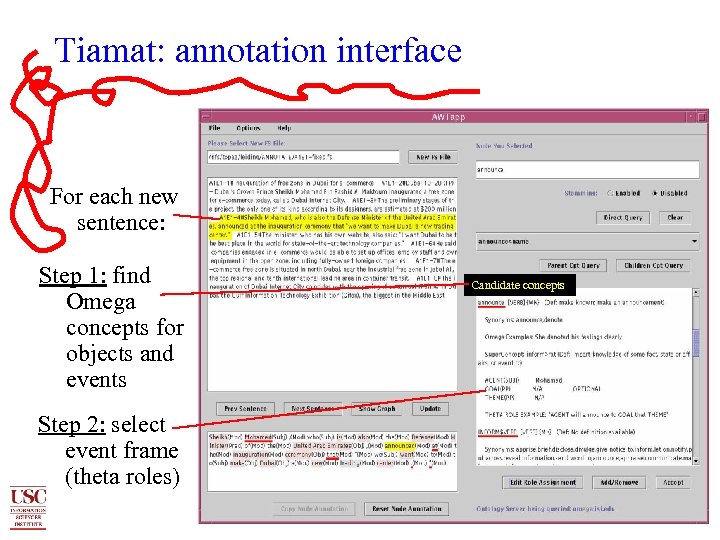

Tiamat: annotation interface

For each new sentence:

- Step 1: find Omega concepts for objects and events
- Step 2: select event frame (theta roles)

Candidate concepts

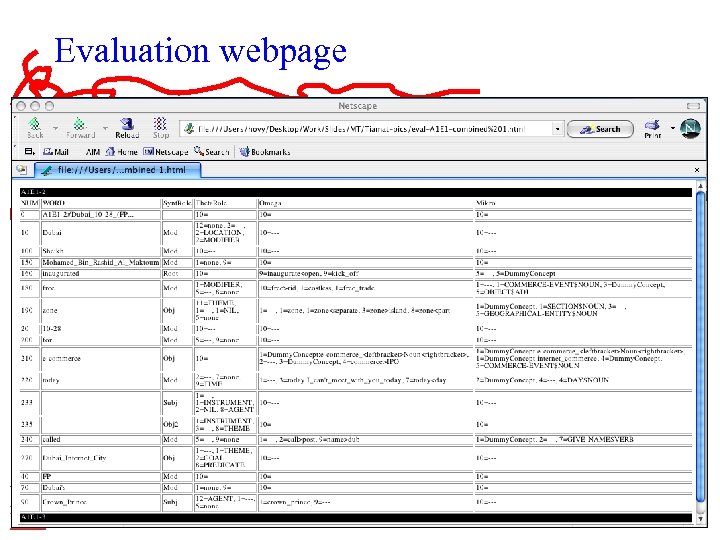

Evaluation webpage

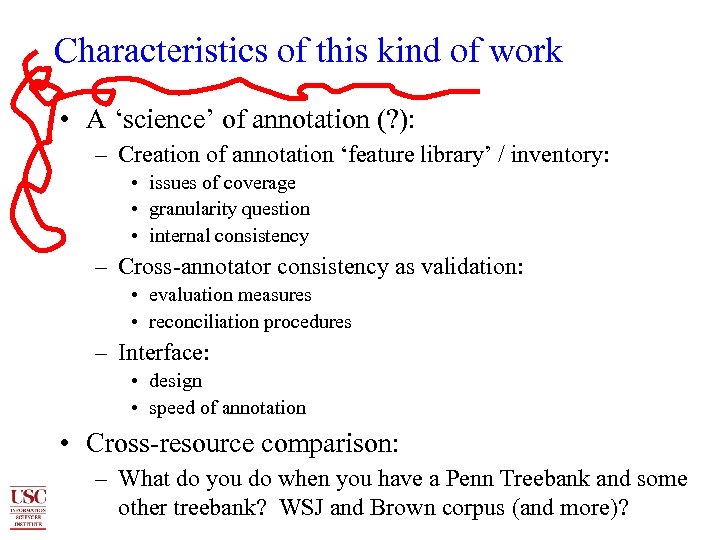

Characteristics of this kind of work

- A ‘science’ of annotation (?):
  - Creation of annotation ‘feature library’ / inventory:
    - issues of coverage
    - granularity question
    - internal consistency
  - Cross-annotator consistency as validation:
    - evaluation measures
    - reconciliation procedures
  - Interface:
    - design
    - speed of annotation
- Cross-resource comparison:
  - What do you do when you have a Penn Treebank and some other treebank? WSJ and Brown corpus (and more)?

4.2 The Statistical Style: Learn Traditional Transformations

A new method for old problems

- Most (?) modern Computational Linguistics:
  - Statistical models for Part-of-Speech tagging, word-sense disambiguation, parsing, semantic analysis, NL generation, anaphora resolution…
  - Statistically based MT, IR, summarization, QA, Information Extraction, opinion analysis…
  - Experimenting with learning algorithms
  - Corpus creation, for learning systems
  - Automated evaluation studies, for MT (BLEU), summarization (ROUGE, BE)…

…probably, this is you!

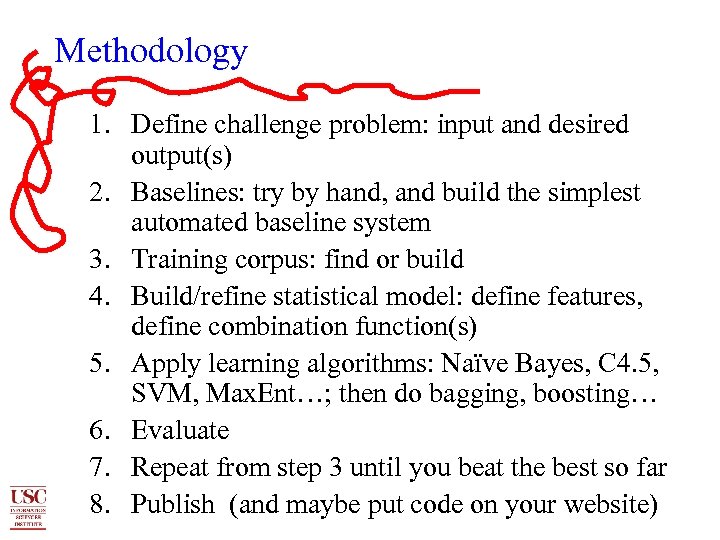

Methodology

1. Define challenge problem: input and desired output(s)
2. Baselines: try by hand, and build the simplest automated baseline system
3. Training corpus: find or build
4. Build/refine statistical model: define features, define combination function(s)
5. Apply learning algorithms: Naïve Bayes, C4.5, SVM, MaxEnt…; then do bagging, boosting…
6. Evaluate
7. Repeat from step 3 until you beat the best so far
8. Publish (and maybe put code on your website)

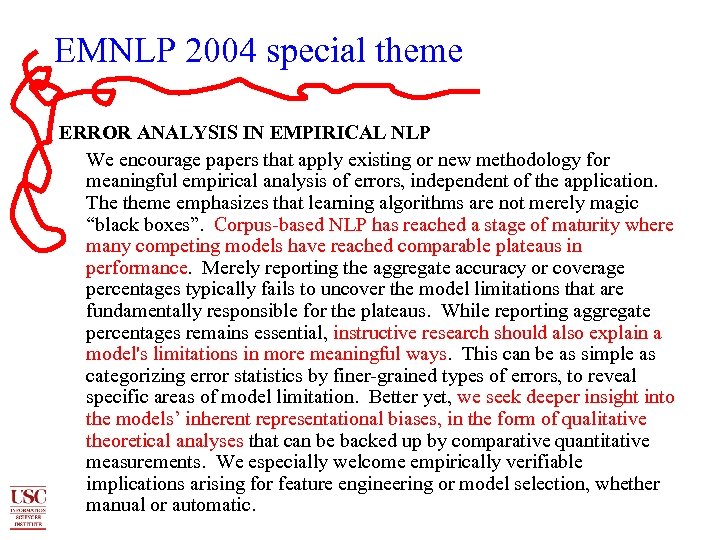

EMNLP 2004 special theme: ERROR ANALYSIS IN EMPIRICAL NLP

We encourage papers that apply existing or new methodology for meaningful empirical analysis of errors, independent of the application. The theme emphasizes that learning algorithms are not merely magic “black boxes”. Corpus-based NLP has reached a stage of maturity where many competing models have reached comparable plateaus in performance. Merely reporting the aggregate accuracy or coverage percentages typically fails to uncover the model limitations that are fundamentally responsible for the plateaus. While reporting aggregate percentages remains essential, instructive research should also explain a model's limitations in more meaningful ways. This can be as simple as categorizing error statistics by finer-grained types of errors, to reveal specific areas of model limitation. Better yet, we seek deeper insight into the models’ inherent representational biases, in the form of qualitative theoretical analyses that can be backed up by comparative quantitative measurements. We especially welcome empirically verifiable implications arising for feature engineering or model selection, whether manual or automatic.

4.3 The Greedy Style: Just Collect Everything

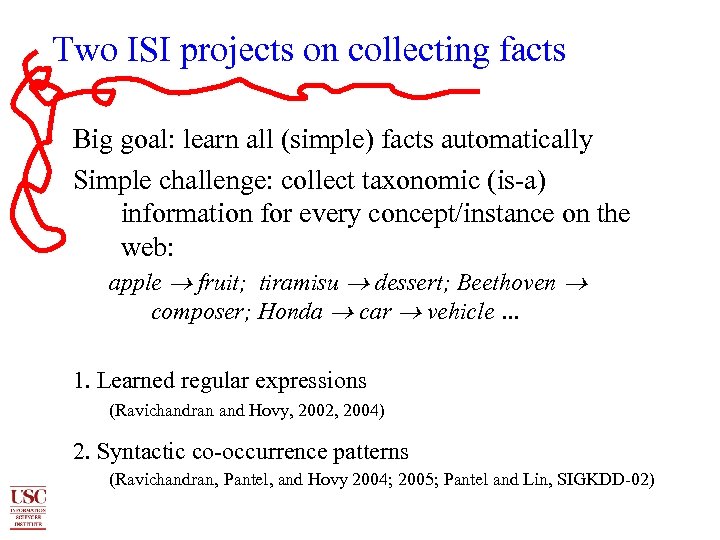

Two ISI projects on collecting facts

Big goal: learn all (simple) facts automatically

Simple challenge: collect taxonomic (is-a) information for every concept/instance on the web: apple → fruit; tiramisu → dessert; Beethoven → composer; Honda → car → vehicle …

1. Learned regular expressions (Ravichandran and Hovy, 2002, 2004)
2. Syntactic co-occurrence patterns (Ravichandran, Pantel, and Hovy 2004; 2005; Pantel and Lin, SIGKDD-02)

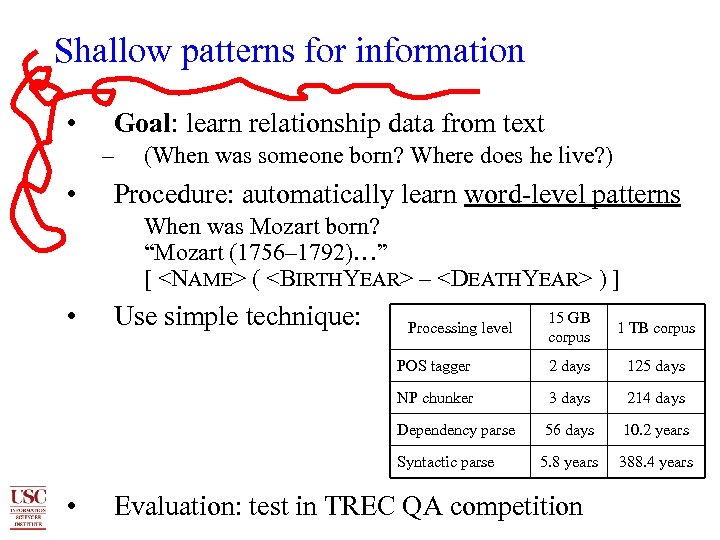

Shallow patterns for information

- Goal: learn relationship data from text
  - (When was someone born? Where does he live?)
- Procedure: automatically learn word-level patterns

When was Mozart born? “Mozart (1756–1791)…” [

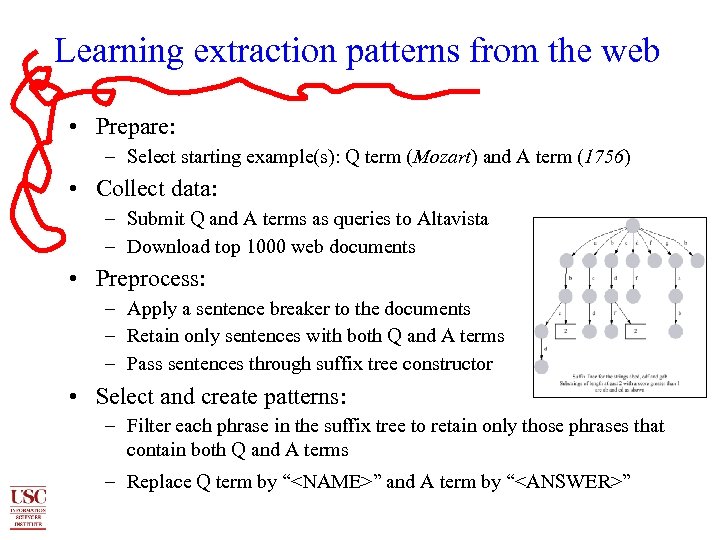

Learning extraction patterns from the web

- Prepare:
  - Select starting example(s): Q term (Mozart) and A term (1756)
- Collect data:
  - Submit Q and A terms as queries to Altavista
  - Download top 1000 web documents
- Preprocess:
  - Apply a sentence breaker to the documents
  - Retain only sentences with both Q and A terms
  - Pass sentences through suffix tree constructor
- Select and create patterns:
  - Filter each phrase in the suffix tree to retain only those phrases that contain both Q and A terms
  - Replace Q term by “
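The preprocessing and pattern-creation steps can be approximated in a few lines. This is a simplified sketch, not the procedure on the slide: it replaces the suffix tree with a plain count of the text spanning the Q and A terms, and the placeholder strings `<Q>` and `<A>` are hypothetical stand-ins, since the slide text breaks off before naming the actual replacement tags.

```python
from collections import Counter

def candidate_patterns(sentences, q_term, a_term, q_tag="<Q>", a_tag="<A>"):
    """Count surface patterns linking a question term and an answer term.

    Keeps only sentences containing both terms, takes the span from the
    first occurrence of one term to the end of the other, and substitutes
    placeholder tags for the two terms.
    """
    counts = Counter()
    for sentence in sentences:
        q_pos = sentence.find(q_term)
        a_pos = sentence.find(a_term)
        if q_pos < 0 or a_pos < 0:
            continue  # retain only sentences with both Q and A terms
        start = min(q_pos, a_pos)
        end = max(q_pos + len(q_term), a_pos + len(a_term))
        span = sentence[start:end]
        counts[span.replace(q_term, q_tag).replace(a_term, a_tag)] += 1
    return counts

# Hypothetical sentences standing in for the downloaded web documents.
patterns = candidate_patterns(
    [
        "Mozart (1756-1791) was a composer.",
        "The great Mozart (1756-1791) lived in Vienna.",
        "Mozart was born in 1756.",
        "Salzburg is a city.",
    ],
    q_term="Mozart",
    a_term="1756",
)
```

Patterns that recur across many sentences, such as the parenthesised birth year here, are the ones worth keeping; a suffix tree finds such shared substrings efficiently over much larger collections.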

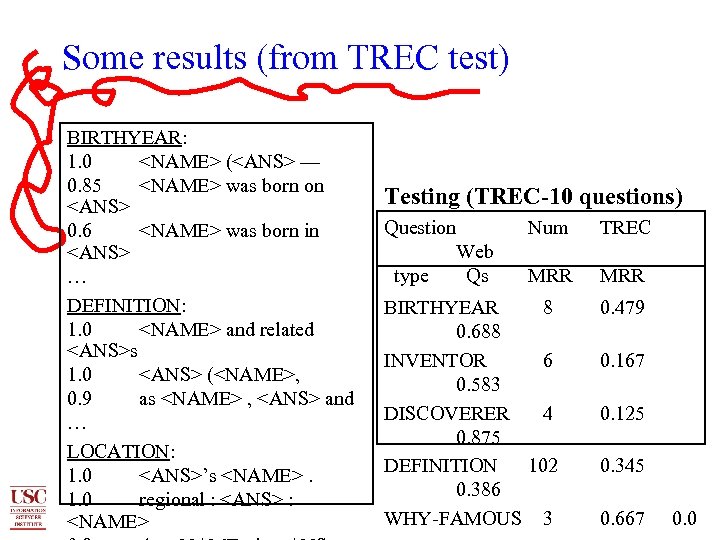

Some results (from TREC test)

BIRTHYEAR: 1.0

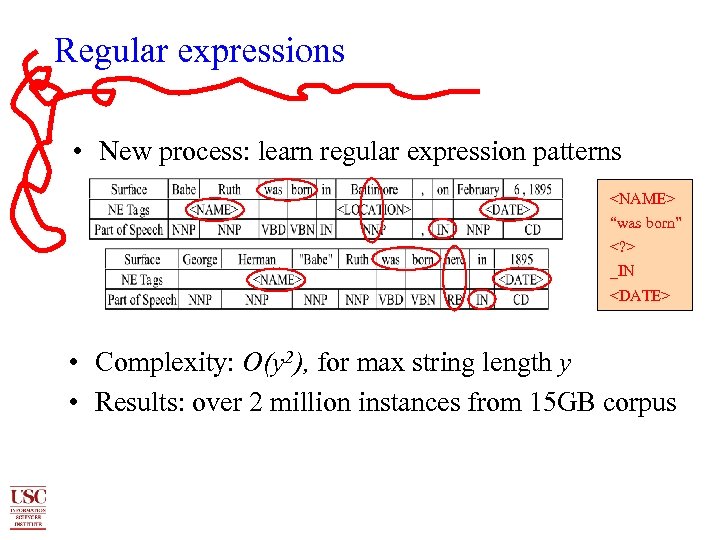

Regular expressions

- New process: learn regular expression patterns

Pantel and Lin: Clustering By Committee

- Very accurate new clustering procedure CBC:
  - Define syntactic/POS patterns as features (N-N; N-subj-V)
  - Parse corpus using MINIPAR (D. Lin)
  - Cluster, using MI on features: (e=word, f=pattern)
  - Find cluster centroids (using strict criteria): committee
  - For non-centroid words, match their pattern features to committee’s; if match, include in cluster, remove features
  - If word has remaining features, try to include in other clusters as well, to handle ambiguity
- Find name for clusters (superconcepts):
  - Word shared in apposition, nominal-subj, etc. templates
- Complexity: $O(n^2 k)$ for n words in corpus, k features
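The "MI on features" step scores how strongly a word e is associated with a syntactic pattern f. A minimal sketch, assuming plain pointwise mutual information over observed (word, feature) pairs: CBC as published may use a different or discounted variant, and the feature strings and function name below are hypothetical.

```python
import math
from collections import Counter

def pointwise_mutual_information(pairs):
    """Compute PMI(e, f) = log( P(e, f) / (P(e) * P(f)) ) for observed pairs.

    `pairs` is a list of (word, feature) observations, for example taken
    from a dependency parse of the corpus.
    """
    pair_counts = Counter(pairs)
    word_counts = Counter(word for word, _ in pairs)
    feature_counts = Counter(feature for _, feature in pairs)
    total = len(pairs)
    return {
        (word, feature): math.log(
            (count / total)
            / ((word_counts[word] / total) * (feature_counts[feature] / total))
        )
        for (word, feature), count in pair_counts.items()
    }

# Toy observations (hypothetical): each pair is one parsed occurrence.
scores = pointwise_mutual_information([
    ("wine", "obj-of:drink"),
    ("beer", "obj-of:drink"),
    ("wine", "obj-of:sip"),
    ("car", "obj-of:drive"),
])
```

Each word then becomes a vector of such scores over its features, and words with similar vectors are candidates for the same cluster.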

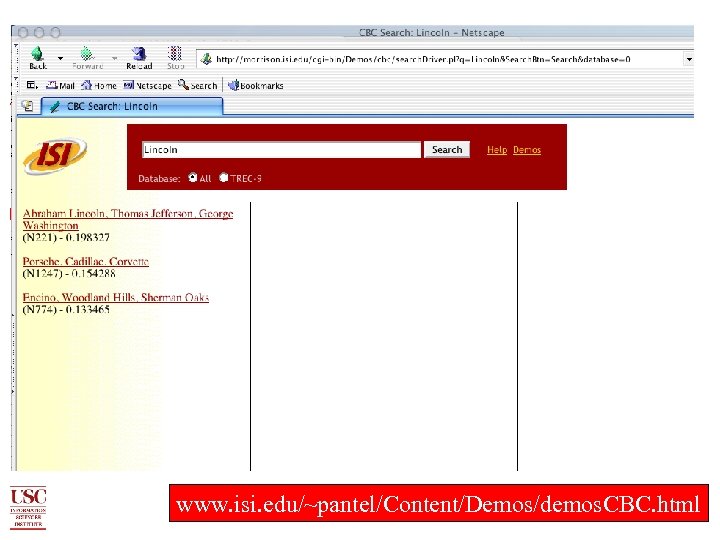

www.isi.edu/~pantel/Content/Demos/demos.CBC.html

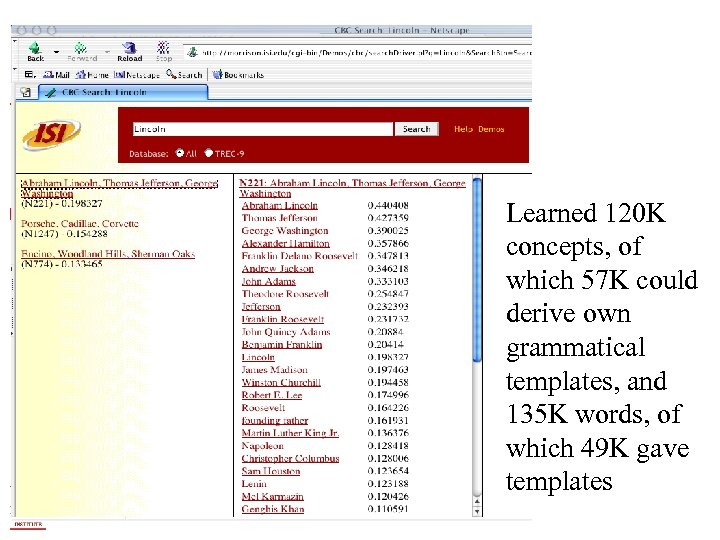

Learned 120K concepts, of which 57K could derive own grammatical templates, and 135K words, of which 49K gave templates

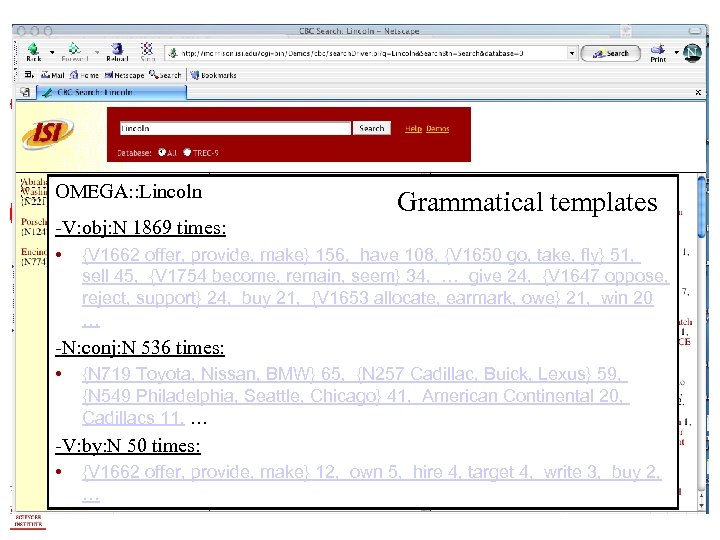

OMEGA::Lincoln

Grammatical templates:

- -V:obj:N 1869 times:
  - {V1662 offer, provide, make} 156, have 108, {V1650 go, take, fly} 51, sell 45, {V1754 become, remain, seem} 34, … give 24, {V1647 oppose, reject, support} 24, buy 21, {V1653 allocate, earmark, owe} 21, win 20 …
- -N:conj:N 536 times:
  - {N719 Toyota, Nissan, BMW} 65, {N257 Cadillac, Buick, Lexus} 59, {N549 Philadelphia, Seattle, Chicago} 41, American Continental 20, Cadillacs 11, …
- -V:by:N 50 times:
  - {V1662 offer, provide, make} 12, own 5, hire 4, target 4, write 3, buy 2, …

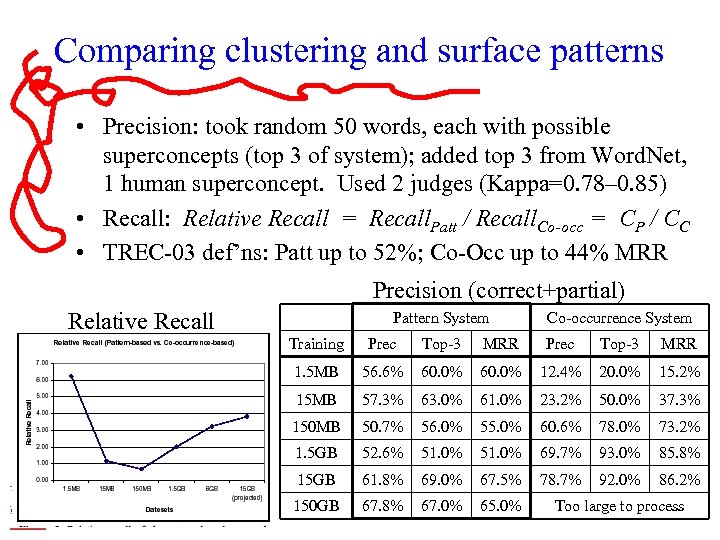

Comparing clustering and surface patterns

- Precision: took random 50 words, each with possible superconcepts (top 3 of system); added top 3 from WordNet, 1 human superconcept. Used 2 judges (Kappa = 0.78–0.85)
- Recall: Relative Recall = $\text{Recall}_{\text{Patt}} / \text{Recall}_{\text{Co-occ}} = C_P / C_C$
- TREC-03 def’ns: Patt up to 52%; Co-Occ up to 44% MRR

Precision (correct+partial):

| Training | Pattern System: Prec | Pattern System: Top-3 | Pattern System: MRR | Co-occurrence System: Prec | Co-occurrence System: Top-3 | Co-occurrence System: MRR |
|---|---|---|---|---|---|---|
| 1.5 MB | 56.6% | 60.0% | ? | 12.4% | 20.0% | 15.2% |
| 15 MB | 57.3% | 63.0% | 61.0% | 23.2% | 50.0% | 37.3% |
| 150 MB | 50.7% | 56.0% | 55.0% | 60.6% | 78.0% | 73.2% |
| 1.5 GB | 52.6% | 51.0% | ? | 69.7% | 93.0% | 85.8% |
| 15 GB | 61.8% | 69.0% | 67.5% | 78.7% | 92.0% | 86.2% |
| 150 GB | 67.8% | 67.0% | 65.0% | Too large to process | | |

(? = value not recoverable from the slide text)

Relative Recall
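For readers unfamiliar with the measures in this comparison, the sketch below computes top-3 precision and mean reciprocal rank (MRR) over ranked superconcept candidates. The definitions used are the common ones (an item counts for top-3 precision if any of its first three candidates is correct; MRR averages 1/rank of the first correct candidate) and are assumed here rather than taken from the slide; the toy data and function names are hypothetical.

```python
def top_k_precision(ranked, gold, k=3):
    """Fraction of items with at least one correct candidate in the top k."""
    hits = sum(
        1 for item, candidates in ranked.items()
        if any(c in gold[item] for c in candidates[:k])
    )
    return hits / len(ranked)

def mean_reciprocal_rank(ranked, gold):
    """Average of 1/rank of the first correct candidate (0 if none)."""
    total = 0.0
    for item, candidates in ranked.items():
        for rank, candidate in enumerate(candidates, start=1):
            if candidate in gold[item]:
                total += 1.0 / rank
                break
    return total / len(ranked)

# Hypothetical system output and judgments for three words.
system_output = {
    "apple": ["fruit", "company", "tree"],
    "tiramisu": ["cake", "dessert", "food"],
    "Honda": ["person", "place", "thing"],
}
judged_correct = {
    "apple": {"fruit"},
    "tiramisu": {"dessert"},
    "Honda": {"car"},
}
precision_at_3 = top_k_precision(system_output, judged_correct)
mrr = mean_reciprocal_rank(system_output, judged_correct)
```

MRR can never exceed top-k precision computed over the same k candidates, because each hit contributes at most 1; this matches the rows of the table where both values are given.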

5. Conclusion

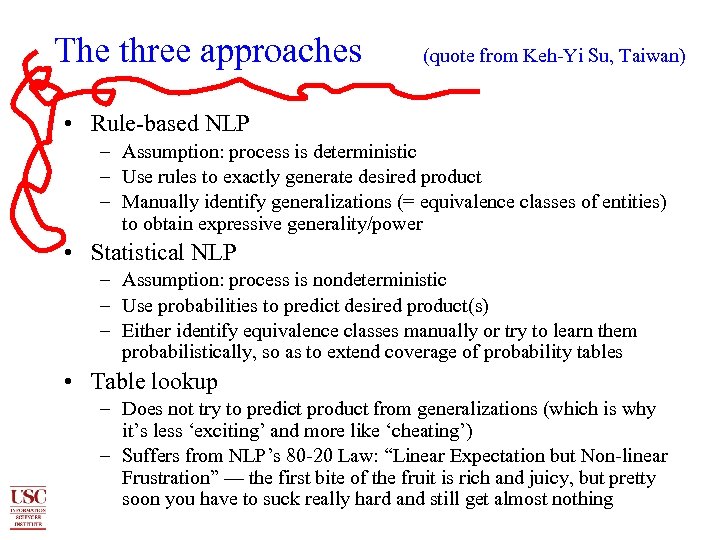

The three approaches (quote from Keh-Yi Su, Taiwan)

- Rule-based NLP
  - Assumption: process is deterministic
  - Use rules to exactly generate desired product
  - Manually identify generalizations (= equivalence classes of entities) to obtain expressive generality/power
- Statistical NLP
  - Assumption: process is nondeterministic
  - Use probabilities to predict desired product(s)
  - Either identify equivalence classes manually or try to learn them probabilistically, so as to extend coverage of probability tables
- Table lookup
  - Does not try to predict product from generalizations (which is why it’s less ‘exciting’ and more like ‘cheating’)
  - Suffers from NLP’s 80-20 Law, “Linear Expectation but Non-linear Frustration”: the first bite of the fruit is rich and juicy, but pretty soon you have to suck really hard and still get almost nothing

Theory in tables

- Traditional NLP doesn’t really work, but lets you theorize
- Statistical approaches tell you how to do it but not why it works this way
- Table lookup tells you nothing except the answer, but beats the competition in speed and (probably) quality
- Before calculators, people used log and trigonometry tables to avoid the hard computations at ‘run-time’
- There was a lot of theory behind the tables
- Do we need theory behind the NLP tables?
  - MT: maybe not? Only to compress better?
  - QA/IE facts: maybe not? Just a little from KR/ontologies?

Boredom and excitement

- Precise models (theory) and Accurate models (performance)
- Why am I doing what I am doing?
- The Four-color Map Theorem, and Brouwer and the Intuitionists: Mathematics in Amsterdam in the 1920s

Ensemble frameworks

Is there a fourth way? …yes of course; you can always go meta.

Build a framework to integrate/compare other work:

- Application performance competitions: TREC + MUC + DUC + etc. (Harman and Voorhees et al., 1991–)
- Application integration architectures: MITRE MITAP (Hirschman et al. 2003) and others
- Meta-evaluation: ISLE-FEMTI for MT (Hovy et al. 2004); ORANGE for MT and summarization (Lin and Och 2004)

Multilingual NLP tomorrow

Your choices:

1. Traditional study
2. Statistical approach
3. Table lookup
4. Ensemble frameworks

Who are you???

Thank you
