- Number of slides: 31

Thinking systemically: Seeing from simple to complex in impact evaluation
Expert lecture for AfrEA Conference, March 30 – April 2, 2009, Cairo, Egypt
Professor Patricia Rogers, Royal Melbourne Institute of Technology, Australia
Dr. Irene Guijt, Learning by Design, the Netherlands
Bob Williams, Independent consultant, New Zealand
(with thanks to Dr. Jim Woodhill, Wageningen International, the Netherlands)

TODAY’S SESSION
• Explore what thinking systemically is and how it relates to evaluation
• Introduce a systems approach that we think has the potential to move IDE forward, plus give you something you can use in your own practice
• Give you an opportunity to reflect and play with systems ideas and this method.

SYSTEMS CONCEPTS IN EVALUATION: AN EXPERT ANTHOLOGY
Eds. Iraj Imam & Bob Williams

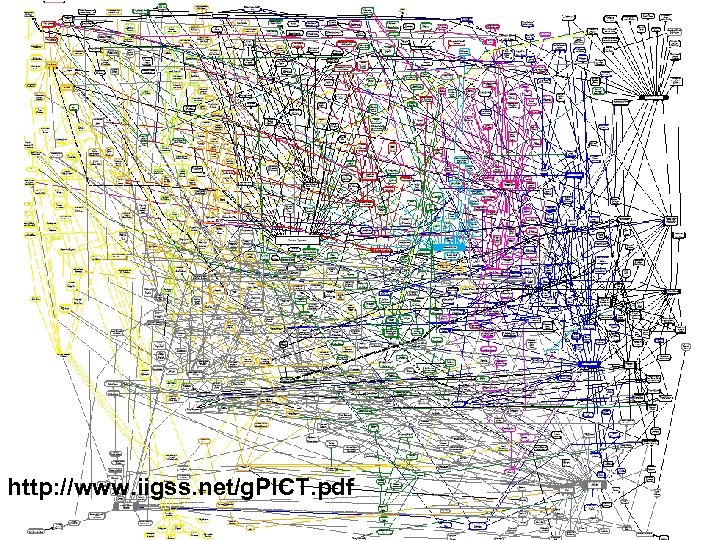

http://www.iigss.net/gPICT.pdf

THREE ELEMENTS OF THINKING SYSTEMICALLY
• INTER-RELATIONSHIPS
• PERSPECTIVES
• BOUNDARIES

INTER-RELATIONSHIPS
Being deeply aware of their significance
• Some inter-relationships matter more than most
• Some only matter over time
• Some are slower in their impact than others
• Some are linear (A affects B), some are non-linear and recursive (A affects B which affects A)
• Most critically, systems thinking focuses on the inter-relationships of ideas, assumptions and beliefs, as well as of actions in the traditional cause-and-effect sense.

PERSPECTIVES Thinking systemically about perspectives is not the same as stakeholder analysis

PERSPECTIVES

PERSPECTIVES
We all bring different perspectives to bear on anything we do. In this workshop I am handling four different perspectives:
1. A session where people learn something
2. Something that allows me to communicate my knowledge
3. A means of expressing friendship and support to colleagues
4. A way of enjoying myself.
You cannot understand how I behave at this session unless you understand how I juggle these perspectives.

BOUNDARIES

BOUNDARIES
Who or what is “in” and who or what is “out”
1. Purpose of evaluation; how will you judge “success”?
2. Resources for evaluation; what is not in your control?
3. What evidence is considered credible, whose expertise is acknowledged, or ignored?
4. Whose or what interests are not being served by an evaluation?

BOUNDARIES
Thinking systemically requires you to do two things around those boundary decisions:
1. identify the consequences of boundary decisions
2. consider how to mitigate any negative consequences of boundary decisions.

Tool for thought - the Cynefin Framework
• Facilitates seeing situational diversity
• Based on recognizing different types of cause and effect relations – a given situation will contain aspects of all
• Draws on theories of: Complexity, Cognitive Systems, Narrative, Networks
• Developed by Dave Snowden – ex-IBM knowledge management researcher

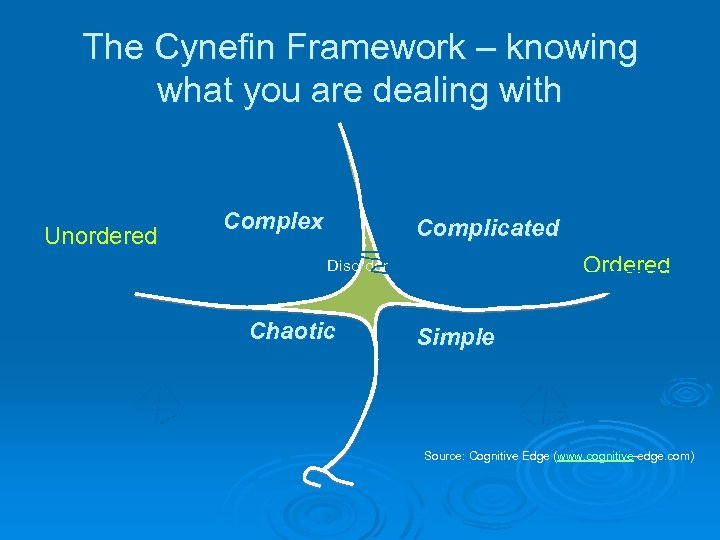

The Cynefin Framework – knowing what you are dealing with
• Unordered: Complex, Chaotic
• Ordered: Complicated, Simple
• Disorder
Source: Cognitive Edge (www.cognitive-edge.com)

Ordered Domain – Simple (known)
• Cause and Effect:
  - repeatable, perceivable, and predictable
• Approach:
  - Sense – Categorise – Respond
• Methods:
  - Standard operating procedures
  - Best practices
  - Process reengineering
Source: Cognitive Edge (www.cognitive-edge.com)

Evaluating the ‘simple’
• Simple aspects of a situation
  - Causal links are tight, clearly observed and understood
  - Key variables to assess can be determined
• For evaluation
  - Need to know activities and some context
  - If activity takes place, outcomes are known (e.g. polio vaccination, once in the person, is guaranteed)
• Monitoring is important
  - “sense, categorise, respond”
• But need to guard against slipping into chaos

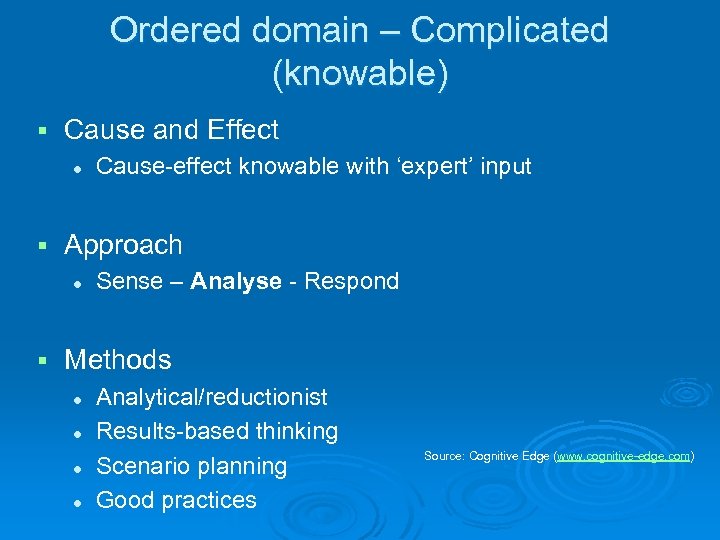

Ordered domain – Complicated (knowable)
• Cause and Effect
  - Cause-effect knowable with ‘expert’ input
• Approach
  - Sense – Analyse – Respond
• Methods
  - Analytical/reductionist
  - Results-based thinking
  - Scenario planning
  - Good practices
Source: Cognitive Edge (www.cognitive-edge.com)

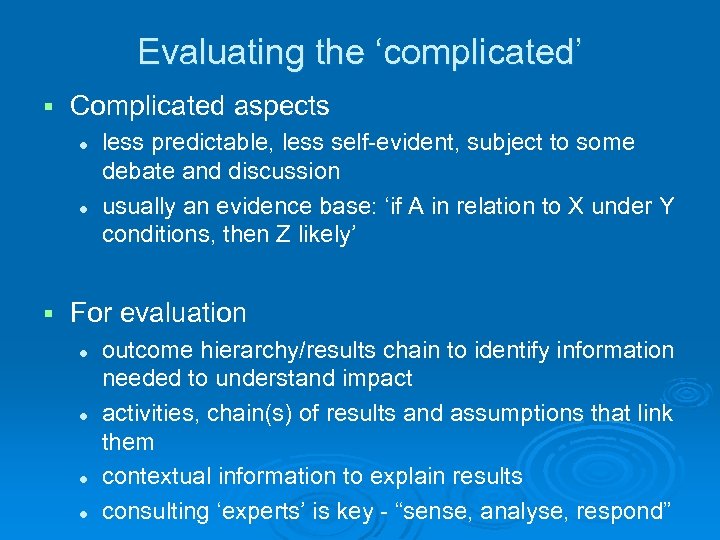

Evaluating the ‘complicated’
• Complicated aspects
  - less predictable, less self-evident, subject to some debate and discussion
  - usually an evidence base: ‘if A in relation to X under Y conditions, then Z likely’
• For evaluation
  - outcome hierarchy/results chain to identify information needed to understand impact
  - activities, chain(s) of results and assumptions that link them
  - contextual information to explain results
  - consulting ‘experts’ is key - “sense, analyse, respond”

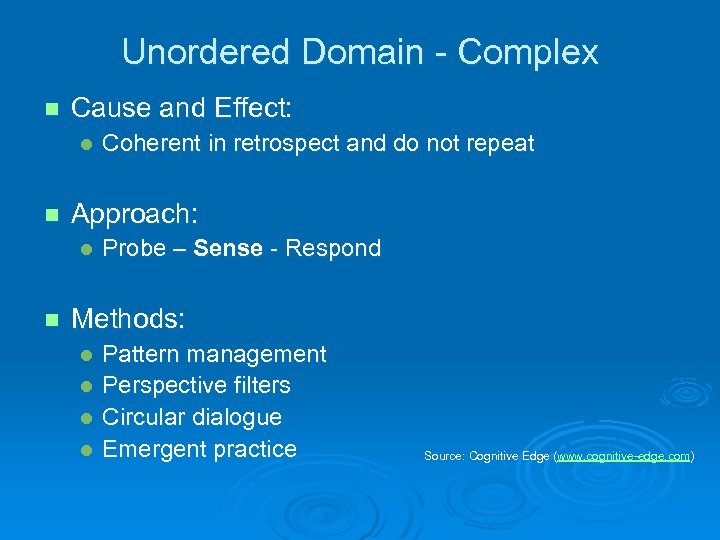

Unordered Domain - Complex
• Cause and Effect:
  - Coherent in retrospect and do not repeat
• Approach:
  - Probe – Sense – Respond
• Methods:
  - Pattern management
  - Perspective filters
  - Circular dialogue
  - Emergent practice
Source: Cognitive Edge (www.cognitive-edge.com)

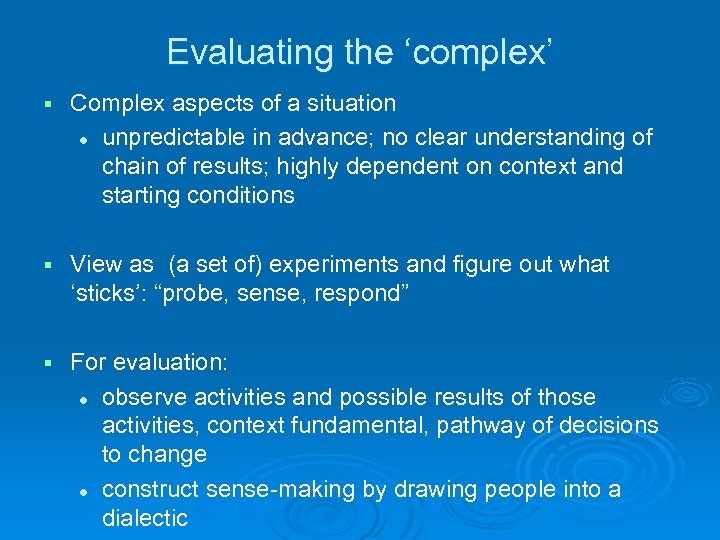

Evaluating the ‘complex’
• Complex aspects of a situation
  - unpredictable in advance; no clear understanding of chain of results; highly dependent on context and starting conditions
• View as (a set of) experiments and figure out what ‘sticks’: “probe, sense, respond”
• For evaluation:
  - observe activities and possible results of those activities, context fundamental, pathway of decisions to change
  - construct sense-making by drawing people into a dialectic

Unordered Domain - Chaotic
• Cause and Effect:
  - not perceivable
• Approach:
  - Act – Sense – Respond
• Methods:
  - Stability-focused intervention
  - Crisis management
  - Novel practice
Source: Cognitive Edge (www.cognitive-edge.com)

Evaluating the ‘chaotic’
• Chaos
  - totally unpredictable; no clear understanding of chain of results
• For evaluation:
  - Observe context, prioritise needs, act, observe again
  - Afterwards (if/when situation stabilises), evaluate if best possible action under the circumstances was taken (real time evaluation)
  - “act, sense, respond” - assess worth of “act” and subsequent effects and follow-up actions
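
The domain slides pair each Cynefin domain with a cause-and-effect pattern and a three-step response. As a rough aide-memoire, that pairing can be written as a lookup table. This is only a sketch in Python: the names `CYNEFIN` and `approach_for` are made up for illustration and are not part of the Cynefin framework or of any Cognitive Edge tool.

```python
# Hypothetical lookup of the four Cynefin domains as summarised on the slides.
# The dictionary layout and function name are illustrative assumptions.
CYNEFIN = {
    "simple": {
        "order": "ordered",
        "cause_effect": "repeatable, perceivable, and predictable",
        "approach": ("sense", "categorise", "respond"),
    },
    "complicated": {
        "order": "ordered",
        "cause_effect": "knowable with 'expert' input",
        "approach": ("sense", "analyse", "respond"),
    },
    "complex": {
        "order": "unordered",
        "cause_effect": "coherent in retrospect and do not repeat",
        "approach": ("probe", "sense", "respond"),
    },
    "chaotic": {
        "order": "unordered",
        "cause_effect": "not perceivable",
        "approach": ("act", "sense", "respond"),
    },
}


def approach_for(domain: str) -> str:
    """Return the response sequence for a domain as a single string."""
    entry = CYNEFIN[domain.lower()]  # raises KeyError for unknown domains
    return " - ".join(entry["approach"])


if __name__ == "__main__":
    for name in CYNEFIN:
        print(f"{name}: {approach_for(name)}")
```

A lookup like this treats a situation as belonging to one domain, whereas the slides stress that a given situation will contain aspects of all of them, so it would be applied to each aspect separately rather than to the situation as a whole.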

Implications for IE Practice
• How do we understand what is happening?
• How do we change what is happening?

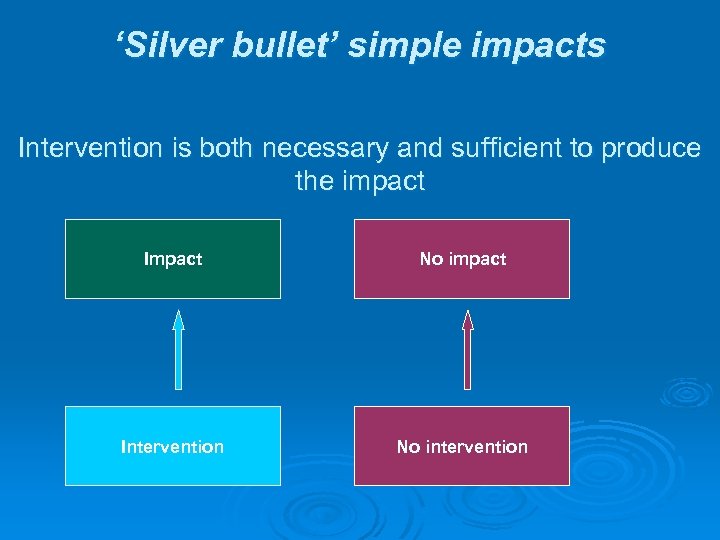

‘Silver bullet’ simple impacts
Intervention is both necessary and sufficient to produce the impact
• Intervention → Impact
• No intervention → No impact

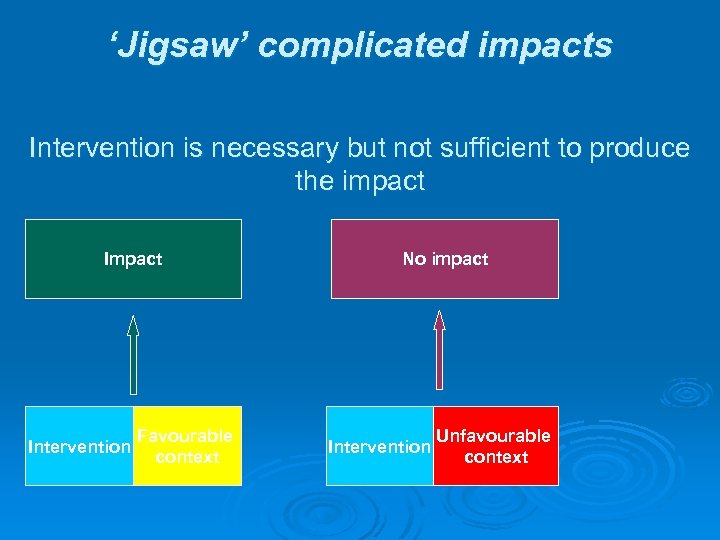

‘Jigsaw’ complicated impacts
Intervention is necessary but not sufficient to produce the impact
• Intervention + Favourable context → Impact
• Intervention + Unfavourable context → No impact

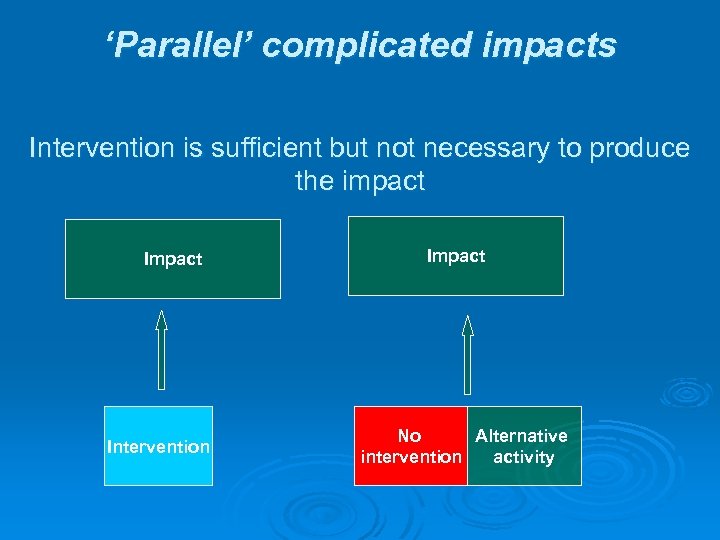

‘Parallel’ complicated impacts
Intervention is sufficient but not necessary to produce the impact
• Intervention → Impact
• No intervention + Alternative activity → Impact

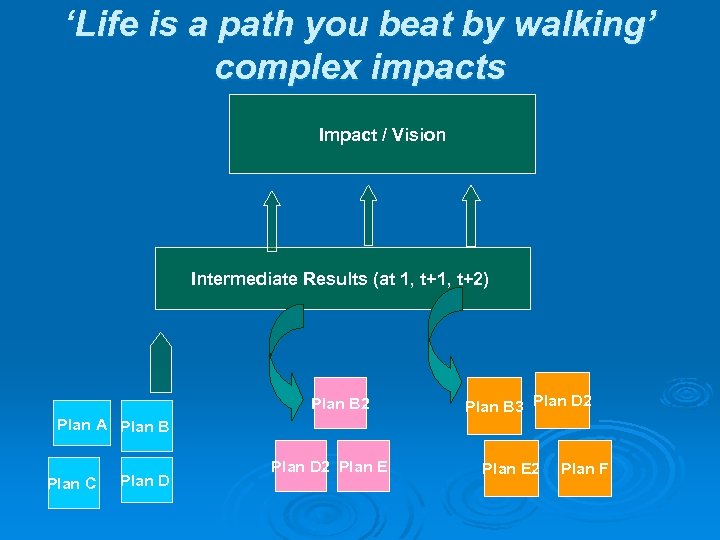

‘Life is a path you beat by walking’ complex impacts
[Diagram: a branching sequence of plans (Plan A, Plan B, Plan B2, Plan B3, Plan C, Plan D2, Plan E, Plan E2, Plan F) passing through Intermediate Results (at t+1, t+2) towards Impact / Vision]

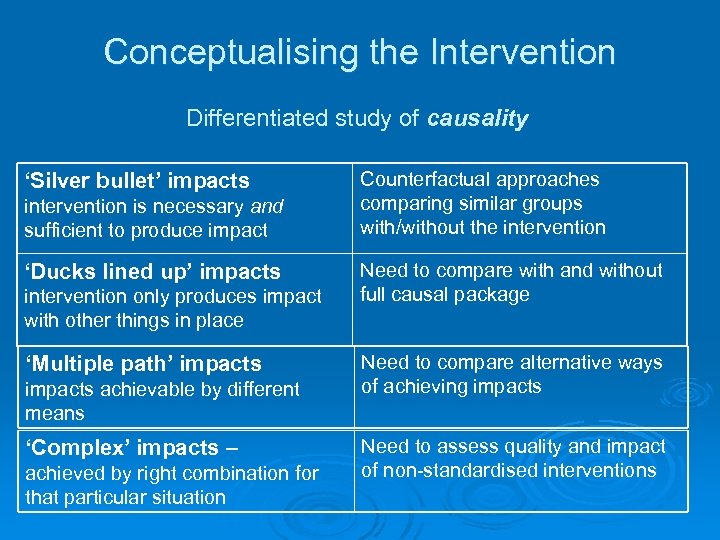

Conceptualising the Intervention
Differentiated study of causality
• ‘Silver bullet’ impacts – intervention is necessary and sufficient to produce impact
  - Counterfactual approaches comparing similar groups with/without the intervention
• ‘Ducks lined up’ impacts – intervention only produces impact with other things in place
  - Need to compare with and without full causal package
• ‘Multiple path’ impacts – achievable by different means
  - Need to compare alternative ways of achieving impacts
• ‘Complex’ impacts – achieved by right combination for that particular situation
  - Need to assess quality and impact of non-standardised interventions
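
The impact types differ in whether the intervention is necessary and/or sufficient for the impact, and each type calls for a different comparison. A minimal sketch of that reasoning in Python follows. The function `impact_type`, its two boolean parameters and the `ImpactType` tuple are hypothetical names introduced here. Treating "neither necessary nor sufficient" as the ‘complex’ case is a simplifying assumption, since the slides describe complex impacts only as achieved by the right combination for that particular situation.

```python
from typing import NamedTuple


class ImpactType(NamedTuple):
    label: str
    evaluation_need: str


def impact_type(necessary: bool, sufficient: bool) -> ImpactType:
    """Map necessity/sufficiency of an intervention to an impact type.

    Labels and evaluation needs are taken from the slide text; the
    function itself is an illustrative assumption, not a published method.
    """
    if necessary and sufficient:
        return ImpactType(
            "silver bullet",
            "compare similar groups with/without the intervention",
        )
    if necessary:  # necessary but not sufficient
        return ImpactType(
            "ducks lined up",
            "compare with and without full causal package",
        )
    if sufficient:  # sufficient but not necessary
        return ImpactType(
            "multiple path",
            "compare alternative ways of achieving impacts",
        )
    # Assumption: neither necessary nor sufficient is treated as 'complex'.
    return ImpactType(
        "complex",
        "assess quality and impact of non-standardised interventions",
    )


if __name__ == "__main__":
    for nec in (True, False):
        for suf in (True, False):
            result = impact_type(nec, suf)
            print(nec, suf, result.label, "->", result.evaluation_need)
```

In practice the two booleans are themselves judgements that an evaluation has to justify, so the sketch only makes the branching explicit; it does not decide which case applies.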

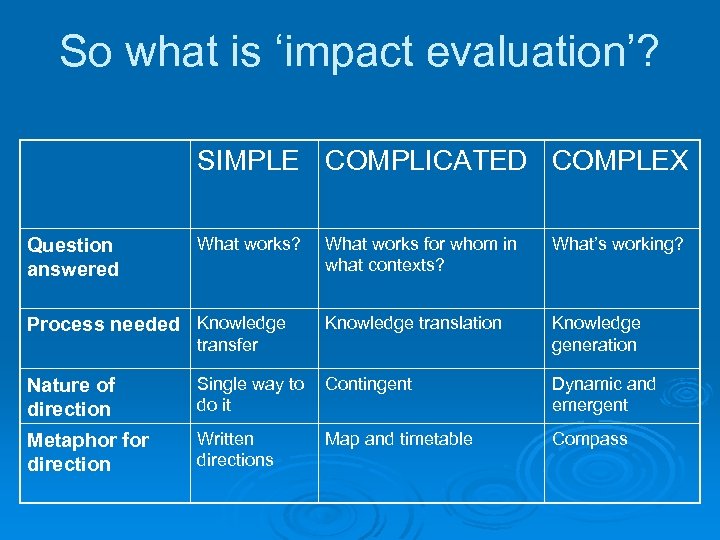

So what is ‘impact evaluation’?

| | SIMPLE | COMPLICATED | COMPLEX |
|---|---|---|---|
| Question answered | What works? | What works for whom in what contexts? | What’s working? |
| Process needed | Knowledge transfer | Knowledge translation | Knowledge generation |
| Nature of direction | Single way to do it | Contingent | Dynamic and emergent |
| Metaphor for direction | Written directions | Map and timetable | Compass |

Take home messages
1. Seeing ‘ontological diversity’ in situations (and interventions) enables a more conscious, appropriate, methodologically mixed approach – it’s about being a good professional.
2. Cynefin is just a heuristic - a tool for thinking systemically.
3. Thinking systemically is about a deep understanding of inter-relationships, perspectives and boundaries. Boundary critique is the area where evaluation can learn most by drawing on the experience of the systems field.

References
Eoyang, G. (2008). So, what about accountability? http://www.cognitive-edge.com/blogs/guest/2008/12/so_what_about_accountability_1.php
Glouberman, S. and Zimmerman, B. (2002). Complicated and Complex Systems: What Would Successful Reform of Medicare Look Like? Commission on the Future of Health Care in Canada. Discussion Paper 8. Available at http://www.healthandeverything.org/pubs/Glouberman_E.pdf
Guijt, I. (2008). Navigating Complexity. Report of an Innovation Dialogue, May 2008. http://portals.wi.wur.nl/files/docs/Innovation%20Dialogue%20on%20Navigating%20Complexity%20%20Full%20Report.pdf
Guijt, I. and Engel, P. (2009). ‘Nine Hot Potatoes. Current Debates and Issues in Results-Oriented Practice.’ Presentation for Hivos In-house Training on Reflection-oriented Practice.
Mackie, J. (1974). The Cement of the Universe. Oxford University Press, Oxford.
Mark, M. R. (2001). What works and how can we tell? Evaluation Seminar 2. Victoria Department of Natural Resources and Environment.
Rogers, P. J. (2008). ‘Using programme theory for complicated and complex programmes’. Evaluation: the international journal of theory, research and practice, 14(1): 29-48.
Rogers, P. J. (2008). ‘Impact Evaluation Guidance. Subgroup 2’. Meeting of NONIE (Network of Networks on Impact Evaluation), Washington, DC.
Rogers, P. J. (2001). Impact Evaluation Research Report. Department of Natural Resources and Environment, Victoria.
Ross, H. L., Campbell, D. T., & Glass, G. V. (1970). Determining the social effects of a legal reform. In S. S. Nagel (Ed.), Law and social change (pp. 15-32). Beverly Hills, CA: SAGE.
Williams, B. and Imam, I. (2007). Systems Concepts in Evaluation: An Expert Anthology. American Evaluation Association.
Woodhill, J. (2008). The Cynefin Framework: What to do about complexity? Implications for Learning, Participation, Strategy and Leadership. Presentation for the ‘Navigating Complexity Workshop’, Wageningen International. http://portals.wi.wur.nl/files/docs/File/navigatingcomplexity/CynefinFramework%20final%20%5BReadOnly%5D.pdf
