28772ca4cf7ab8dd8e3ed666851146be.ppt

- Number of slides: 17

- Theme: Information Extraction for the World Wide Web
- Paper: Unsupervised Learning of Soft Patterns for Generating Definitions from Online News
- Authors: Cui, H., Kan, M.-Y. and Chua, T.-S.
- Presenter: Bei Yu

IE approaches

- Traditional IE (from NLP and CL)
  - Uses syntactic and semantic constraints
- Wrappers (developed independently for the WWW)
  - Use delimiter-based extraction patterns
- This paper
  - Soft patterns + IR (PRF) + summarization techniques (sentence retrieval/ranking, MMR)

Unsupervised Learning of Soft Patterns for Generating Definitions from Online News

- IE from a QA perspective
- Research question: finding definition sentences for terms or person names
- Previous approaches:
  - hand-crafted rules (previous paper), or
  - supervised learning
- Research method:
  - unsupervised soft patterns + IR + summarization
- External tools needed: a commercial POS tagger and a syntactic chunker (NP, VP)

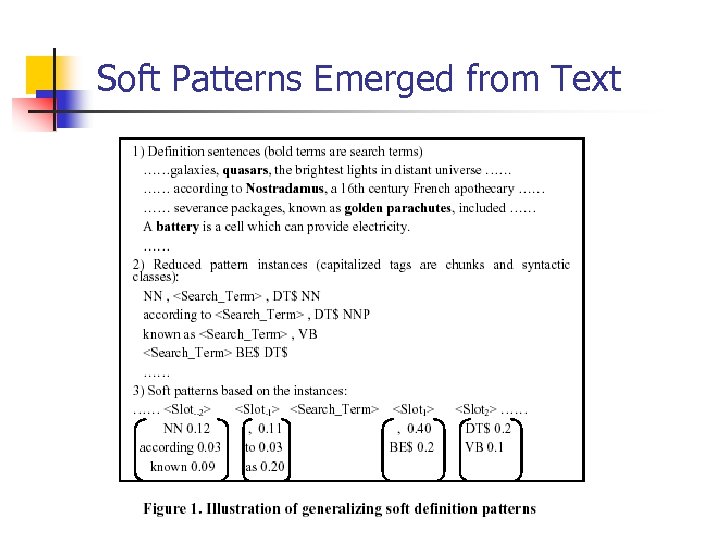

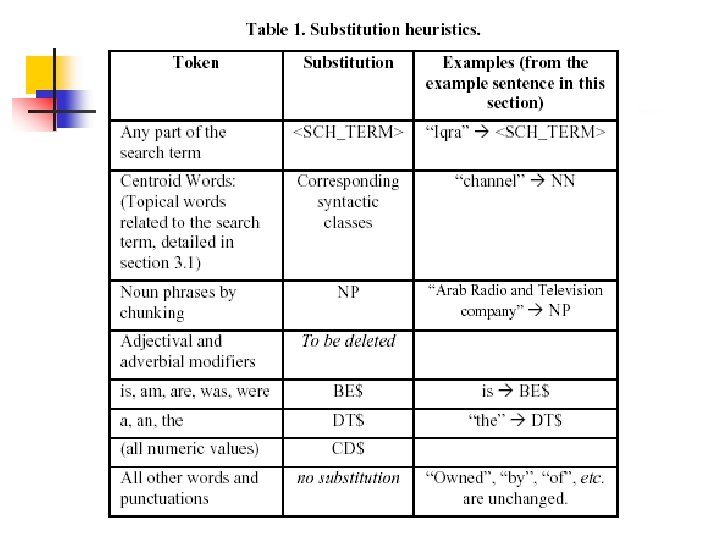

Soft Patterns

- A virtual vector representation (window size 3)
- Slot: a vector of tokens with their probabilities of occurrence
  - Soft pattern: $\langle Slot_{-w}, \ldots, Slot_{-2}, Slot_{-1}, \text{SCH\_TERM}, Slot_{1}, Slot_{2}, \ldots, Slot_{w} : Pa \rangle$
  - Slot: $\langle (token_{i1}, weight_{i1}), (token_{i2}, weight_{i2}), \ldots, (token_{im}, weight_{im}) : Slot_i \rangle$
- Token: a word, punctuation mark, or syntactic tag (substituted?)
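The slot representation can be sketched in Python as below. This is a minimal illustration, not the authors' code: it assumes each training instance is already a token list of length 2w+1 with SCH_TERM in the middle, and it estimates each slot's token weights as plain relative frequencies (maximum likelihood, no smoothing). The function name `build_soft_pattern` and the `<PAD>` padding token are hypothetical.

```python
from collections import Counter

SCH_TERM = "SCH_TERM"  # placeholder that replaces the search term


def build_soft_pattern(instances, window=3):
    """Return {slot_offset: {token: probability}} for offsets -window..-1, 1..window.

    Each instance is a list of 2*window + 1 tokens with SCH_TERM at the centre.
    Slot weights are relative frequencies of the tokens seen in that slot
    (an assumption; the paper may smooth or weight them differently).
    """
    for inst in instances:
        if len(inst) != 2 * window + 1 or inst[window] != SCH_TERM:
            raise ValueError(f"malformed instance: {inst}")
    slots = {}
    for offset in range(-window, window + 1):
        if offset == 0:
            continue  # the centre is always SCH_TERM, not a slot
        counts = Counter(inst[window + offset] for inst in instances)
        total = sum(counts.values())
        slots[offset] = {tok: c / total for tok, c in counts.items()}
    return slots


# Usage: two hypothetical aligned instances ("<PAD>" marks sentence boundaries).
instances = [
    ["the", "famous", ",", "SCH_TERM", ",", "a", "singer"],
    ["<PAD>", "<PAD>", "NP", "SCH_TERM", "is", "a", "NP"],
]
pa = build_soft_pattern(instances)
print(pa[2])  # {'a': 1.0}
```

Each slot ends up as a small probability distribution, which is what makes the pattern "soft": a test token is not accepted or rejected outright but scored by how often it appeared in that position.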

Soft Patterns Emerged from Text

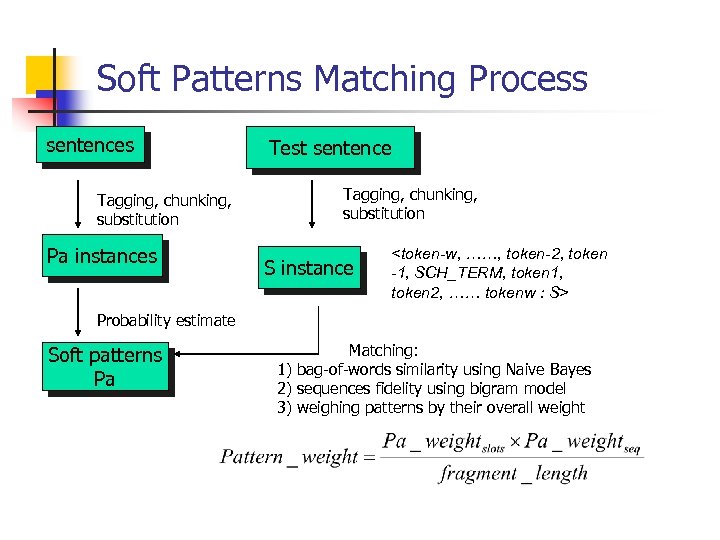

Soft Pattern Matching Process

- Pattern side: sentences → tagging, chunking, substitution → Pa instances → probability estimate → soft patterns Pa
- Test side: test sentence → tagging, chunking, substitution → S instance $\langle token_{-w}, \ldots, token_{-2}, token_{-1}, \text{SCH\_TERM}, token_{1}, token_{2}, \ldots, token_{w} : S \rangle$
- Matching S against Pa:
  1. bag-of-words similarity using Naive Bayes
  2. sequence fidelity using a bigram model
  3. weighting patterns by their overall weight
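The "tagging, chunking, substitution" step that turns one sentence into an instance might look like the sketch below. It assumes the tagger/chunker output is already available as (token, tag) pairs and that the search term is a single token. The substitution rule is hypothetical (keep a small set of function words and punctuation verbatim, replace everything else by its tag), since the paper's actual rules are not given on the slide; `make_instance` and `KEEP` are illustrative names.

```python
PAD = "<PAD>"
SCH_TERM = "SCH_TERM"

# Hypothetical substitution rule: these words/punctuation are kept verbatim,
# every other token is replaced by its syntactic tag (e.g. a chunk label "NP").
KEEP = {",", "(", ")", "is", "was", "a", "an", "the", "of", "who", "which"}


def make_instance(tagged, term, window=3):
    """Build <token_-w, ..., token_-1, SCH_TERM, token_1, ..., token_w>.

    tagged: list of (token, tag) pairs for one sentence (tagger/chunker output).
    term:   the search term, assumed to appear as a single token.
    Raises ValueError if the term does not occur in the sentence.
    """
    tokens = []
    for tok, tag in tagged:
        if tok == term:
            tokens.append(SCH_TERM)
        elif tok.lower() in KEEP:
            tokens.append(tok.lower())
        else:
            tokens.append(tag)
    i = tokens.index(SCH_TERM)  # use the first mention of the term
    padded = [PAD] * window + tokens + [PAD] * window
    centre = i + window
    return padded[centre - window : centre + window + 1]


tagged = [("Bach", "NP"), ("was", "VP"), ("a German composer", "NP"), (".", ".")]
print(make_instance(tagged, "Bach"))
```

Padding keeps every instance the same length, so a term at the start or end of a sentence still yields 2w+1 aligned slots.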

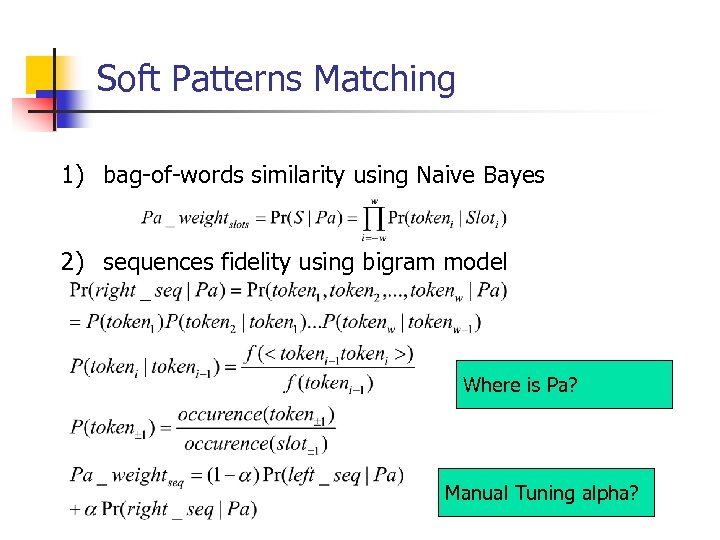

Soft Pattern Matching

1. Bag-of-words similarity using Naive Bayes
2. Sequence fidelity using a bigram model

Open questions: Where is Pa? Is alpha tuned manually?
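A sketch of the two matching scores follows. The exact formulas are in the slide image, so this is an assumption-laden illustration: the bag-of-words score sums log P(token_i | Slot_i) over the slots as in Naive Bayes (with a hypothetical floor probability for unseen tokens), the sequence score is an add-one-smoothed bigram log-likelihood trained on the pattern instances, and the two are combined by linear interpolation with weight `alpha`. All function names are hypothetical.

```python
import math
from collections import Counter


def bow_score(instance, slots, window=3, floor=1e-3):
    """Naive Bayes-style bag-of-words score: sum of log P(token_i | Slot_i),
    treating slots as independent. Unseen tokens get `floor` (assumption)."""
    return sum(
        math.log(slot.get(instance[window + offset], floor))
        for offset, slot in slots.items()
    )


def train_bigrams(instances):
    """Count bigrams and their left contexts over the pattern instances."""
    contexts, bigrams = Counter(), Counter()
    for inst in instances:
        contexts.update(inst[:-1])
        bigrams.update(zip(inst, inst[1:]))
    vocab = {tok for inst in instances for tok in inst}
    return contexts, bigrams, vocab


def seq_score(instance, model):
    """Sequence fidelity: bigram log-likelihood with add-one smoothing."""
    contexts, bigrams, vocab = model
    v = len(vocab) + 1  # reserve one extra type for unseen tokens
    return sum(
        math.log((bigrams[(a, b)] + 1) / (contexts[a] + v))
        for a, b in zip(instance, instance[1:])
    )


def match_score(instance, slots, model, alpha=0.5, window=3):
    """Hypothetical combination: alpha * bag-of-words + (1 - alpha) * sequence."""
    return alpha * bow_score(instance, slots, window) + (1 - alpha) * seq_score(instance, model)


# Usage with window size 1 and a hand-built pattern.
train = [["a", "SCH_TERM", "is"], ["NP", "SCH_TERM", "is"], ["a", "SCH_TERM", ","]]
slots = {-1: {"a": 2 / 3, "NP": 1 / 3}, 1: {"is": 2 / 3, ",": 1 / 3}}
model = train_bigrams(train)
good = ["a", "SCH_TERM", "is"]
bad = ["the", "SCH_TERM", "was"]
```

The two scores live on different scales (the floor and the smoothing constant both shift them), which is one reason the interpolation weight would need tuning, in line with the open question on the slide.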

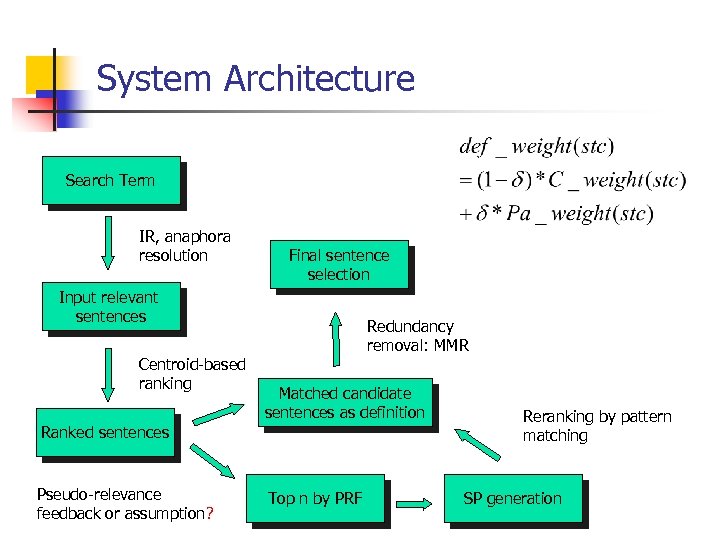

System Architecture

1. Search term → IR, anaphora resolution → input relevant sentences
2. Centroid-based ranking → ranked sentences
3. Top n by PRF (pseudo-relevance feedback, or an assumption?) → SP generation
4. Reranking by pattern matching → matched candidate sentences as definitions
5. Redundancy removal (MMR) → final sentence selection
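The redundancy-removal step uses MMR (Maximal Marginal Relevance). Below is a generic greedy MMR selector, not the paper's implementation: it assumes each candidate already has a relevance score in [0, 1] (for instance its normalized pattern-matching score), uses bag-of-words cosine similarity between sentences, and uses a hypothetical trade-off weight `lam` = 0.5.

```python
import math
from collections import Counter


def cosine(a, b):
    """Bag-of-words cosine similarity between two sentences."""
    ca, cb = Counter(a.lower().split()), Counter(b.lower().split())
    dot = sum(ca[w] * cb[w] for w in ca)
    na = math.sqrt(sum(v * v for v in ca.values()))
    nb = math.sqrt(sum(v * v for v in cb.values()))
    return dot / (na * nb) if na and nb else 0.0


def mmr_select(candidates, scores, k, lam=0.5):
    """Greedily pick k sentences, each maximising
    lam * relevance - (1 - lam) * (max similarity to already-selected sentences)."""
    selected = []
    remaining = list(range(len(candidates)))
    while remaining and len(selected) < k:
        def mmr(i):
            redundancy = max(
                (cosine(candidates[i], candidates[j]) for j in selected), default=0.0
            )
            return lam * scores[i] - (1 - lam) * redundancy
        best = max(remaining, key=mmr)
        selected.append(best)
        remaining.remove(best)
    return [candidates[i] for i in selected]


sents = [
    "Bach was a German composer",
    "Bach was a German composer of the Baroque",
    "Bach had twenty children",
]
scores = [0.9, 0.85, 0.6]
print(mmr_select(sents, scores, 2))
```

With `lam` close to 1 the selection simply follows the ranking; lowering it makes near-duplicates of already chosen sentences (here the second, longer "German composer" sentence) lose out to sentences that add new information.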

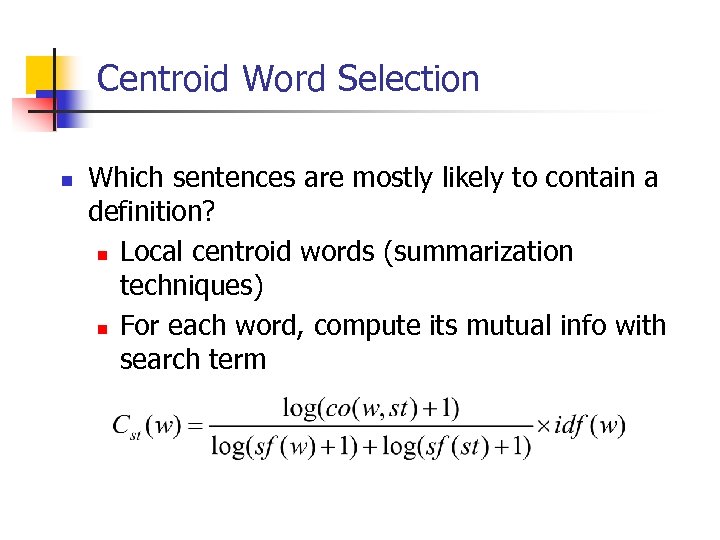

Centroid Word Selection

- Which sentences are most likely to contain a definition?
- Local centroid words (a summarization technique)
- For each word, compute its mutual information with the search term

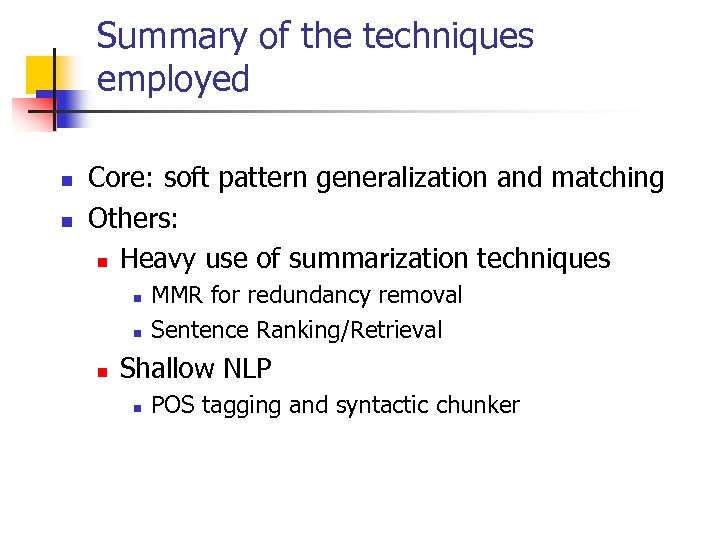

Summary of the techniques employed

- Core: soft pattern generalization and matching
- Others:
  - Heavy use of summarization techniques
    - MMR for redundancy removal
    - Sentence ranking/retrieval
  - Shallow NLP
    - POS tagging and syntactic chunking

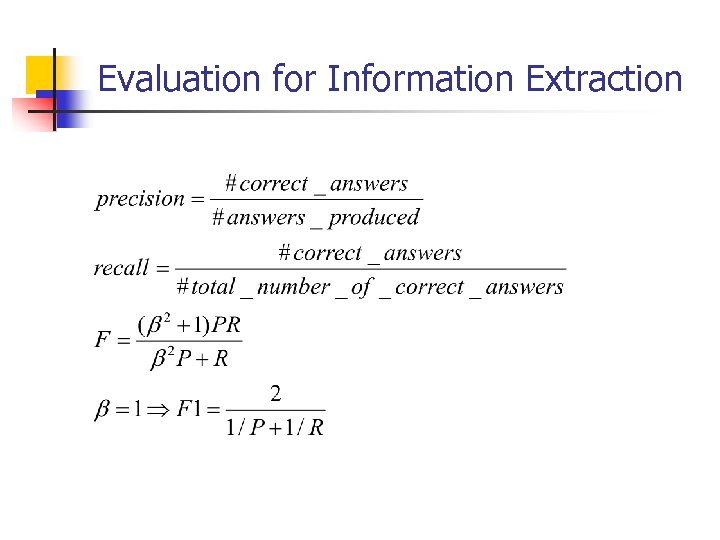

Evaluation for Information Extraction

Evaluation for Definition Extraction

- Test data:
  - TREC QA corpus
  - Online news (heuristics lean toward news text)
- Experiment:
  - Comparison with HCR (hand-crafted rules) and a centroid-based statistical method (baseline)
  - $F_5$-measure
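For reference, the $F_5$-measure is the standard $F_\beta = \frac{(\beta^2 + 1)\,P\,R}{\beta^2 P + R}$ with $\beta = 5$, which weights recall far more heavily than precision. The helper below is a minimal sketch of that formula only; how precision and recall are computed for definition answers is not shown on the slide, and the name `f_beta` is illustrative.

```python
def f_beta(precision, recall, beta=5.0):
    """F_beta = (beta^2 + 1) * P * R / (beta^2 * P + R); beta = 5 favours recall."""
    if precision == 0 and recall == 0:
        return 0.0
    b2 = beta * beta
    return (b2 + 1) * precision * recall / (b2 * precision + recall)


print(f_beta(0.5, 1.0), f_beta(1.0, 0.5))
```

Swapping precision and recall shows the bias: high recall with mediocre precision scores about 0.96, while the reverse scores about 0.51, so a definition system is rewarded mainly for covering the relevant facts.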

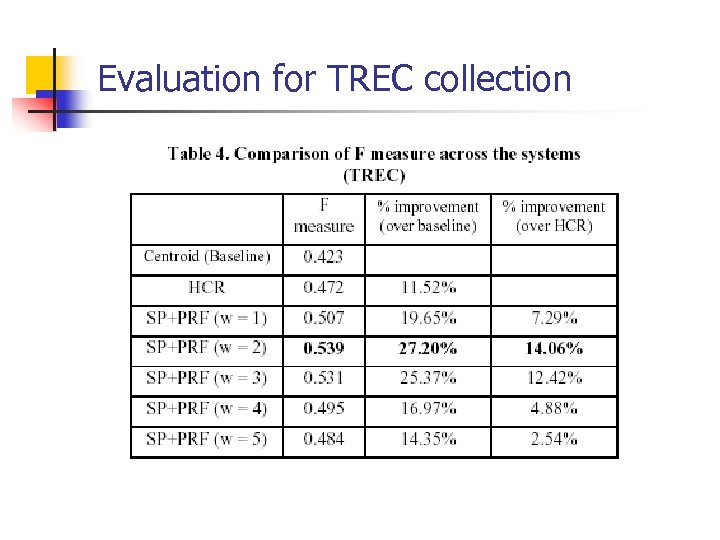

Evaluation for TREC collection

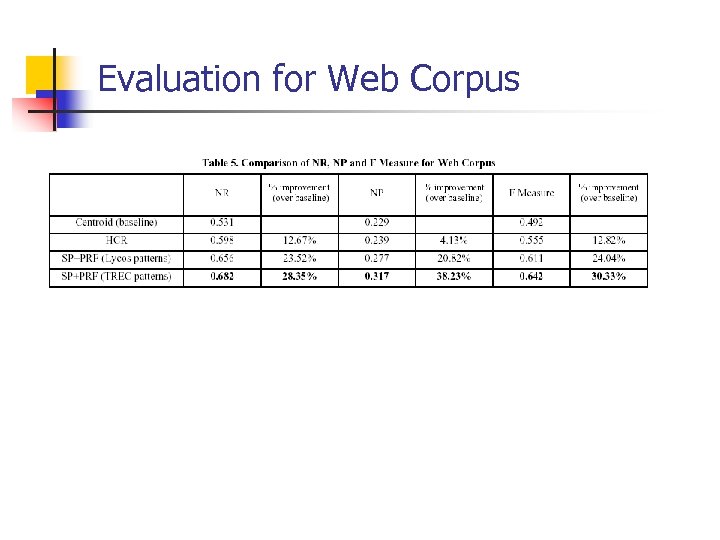

Evaluation for Web Corpus

Questions for this paper

- Does performance vary with the chunker (NP, VP)?
- Manually tuned parameters (alpha, delta)?
- Void PRF?
- Question selection: the seed for pattern generation
- Is it really "patterns", or just one pattern after all?
- Arbitrary window size?
- Is it really "unsupervised learning"?
  - Part of the data is used for rule induction
- Can SP+PRF really beat HCR?

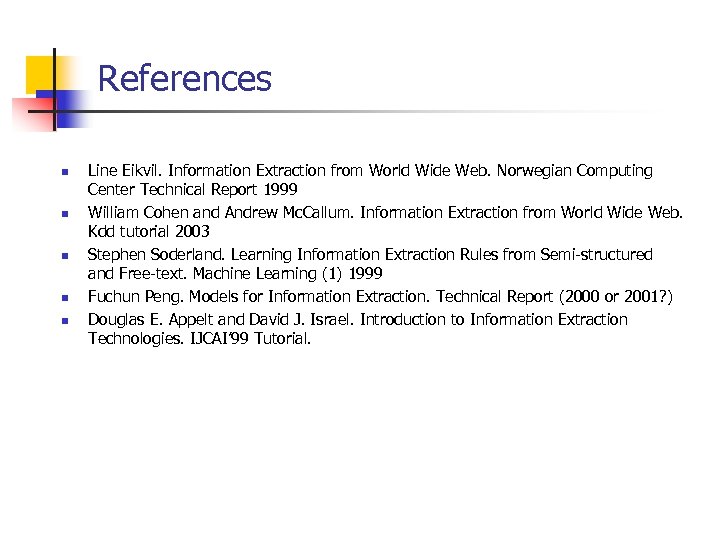

