94937b3a4ad901c5dee80f0ed948ea0a.ppt

- Number of slides: 47

The Worldwide LHC Computing Grid: WLCG Service Schedule

The WLCG Service has been operational (as a service) since late 2005. A few critical components still need to be added or upgraded, but we have no time left before the Full Dress Rehearsals in the summer…


Talk Outline

- Lessons from the past: flashback to CHEP 2K
  - Expecting the unexpected
- Targets set at the Sep ’06 LHCC Comprehensive Review
- What we have achieved so far
  - What we have failed to achieve: the key outstanding issues
- What can we realistically achieve in the very few remaining weeks (until the Dress Rehearsals…)?
- Outlook for 2008…


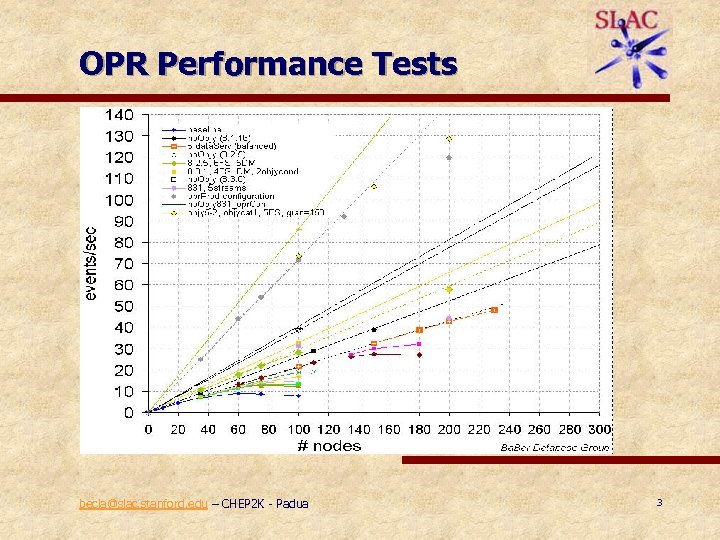

OPR Performance Tests – becla@slac.stanford.edu – CHEP 2K, Padua


“Conventional wisdom” – 2000

- “Either you have been there or you have not”
  - Translation: you need to test everything, both separately and together, under full production conditions before you can be sure that you are really ready. For the expected.
- There are still significant things that have not been tested by a single VO, let alone by all VOs together
- CMS CSA06 preparations: careful preparation and testing of all components over several months; basically everything broke the first time (but was then fixed)…
  - This is a technique that has been proven (repeatedly) to work…
- This is simply “Murphy’s law for the Grid”… How did we manage to forget this so quickly?


The 1st Law of (Grid) Computing

- Murphy's law (also known as Finagle's law or Sod's law) is a popular adage in Western culture which broadly states that things will go wrong in any given situation: “If there's more than one way to do a job, and one of those ways will result in disaster, then somebody will do it that way.” It is most commonly formulated as “Anything that can go wrong will go wrong.” In American culture the law was named after Major Edward A. Murphy, Jr., a development engineer who worked for a brief time on rocket sled experiments conducted by the United States Air Force in 1949.
- … first received public attention during a press conference … it was that nobody had been severely injured during the rocket sled [tests of human tolerance for g-forces during rapid deceleration]. Stapp replied that it was because they took Murphy's Law under consideration.
  - “Expect the unexpected” – Bandits (Bruce Willis)


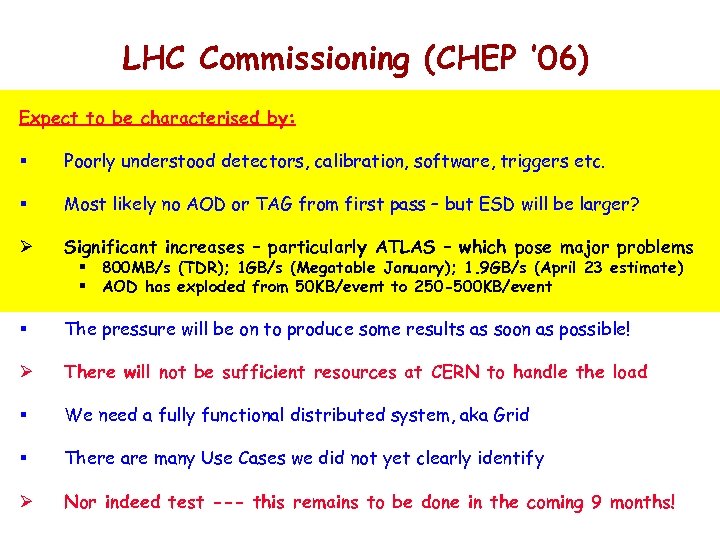

LHC Commissioning (CHEP ’06)

Expect to be characterised by:
- Poorly understood detectors, calibration, software, triggers etc.
- Most likely no AOD or TAG from first pass – but ESD will be larger?
  - Significant increases – particularly ATLAS – which pose major problems
- The pressure will be on to produce some results as soon as possible!
  - There will not be sufficient resources at CERN to handle the load
- We need a fully functional distributed system, aka Grid
- There are many Use Cases we did not yet clearly identify
  - Nor indeed test: this remains to be done in the coming 9 months!
- 800 MB/s (TDR); 1 GB/s (Megatable, January); 1.9 GB/s (April 23 estimate)
- AOD has exploded from 50 KB/event to 250–500 KB/event
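
To put the quoted export-rate estimates in perspective, the short Python sketch below converts each rate into a daily volume and computes the growth factors mentioned on the slide. It assumes each rate is sustained for a full day and uses decimal units (1 TB = 10^6 MB). The names `RATE_ESTIMATES_MB_S`, `daily_volume_tb` and `growth_factor` are illustrative and do not belong to any WLCG tool.

```python
# Illustrative arithmetic only: rates and sizes are those quoted on the slide.
# Assumes each rate is sustained for 24 hours; decimal units (1 TB = 10**6 MB).
SECONDS_PER_DAY = 24 * 60 * 60

RATE_ESTIMATES_MB_S = {
    "TDR": 800,
    "Megatable (January)": 1000,
    "April 23 estimate": 1900,
}


def daily_volume_tb(rate_mb_s: float) -> float:
    """Volume written in one day at a constant rate, in TB."""
    return rate_mb_s * SECONDS_PER_DAY / 1e6


def growth_factor(old: float, new: float) -> float:
    """How many times larger `new` is than `old`."""
    return new / old


for label, rate in RATE_ESTIMATES_MB_S.items():
    print(f"{label:>20}: {rate:5d} MB/s ~ {daily_volume_tb(rate):6.1f} TB/day")

# AOD per-event size grew from 50 KB to 250-500 KB.
print(f"AOD size growth: x{growth_factor(50, 250):.0f} to x{growth_factor(50, 500):.0f}")
print(f"Rate growth, TDR to April estimate: x{growth_factor(800, 1900):.2f}")
```

Under these assumptions the April 23 estimate corresponds to roughly 164 TB per day, about 2.4 times the TDR figure.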


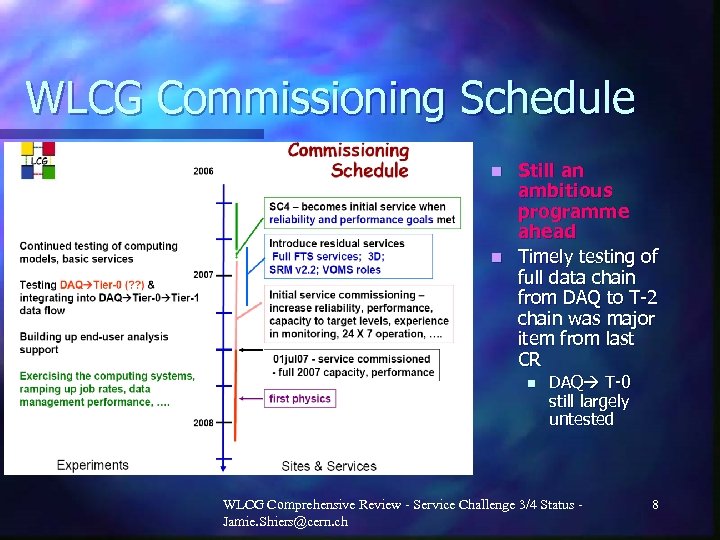

WLCG Commissioning Schedule

Still an ambitious programme ahead:
- Timely testing of the full data chain from DAQ to T-2 was a major item from the last CR
- DAQ → T-0 still largely untested

WLCG Comprehensive Review – Service Challenge 3/4 Status – Jamie.Shiers@cern.ch


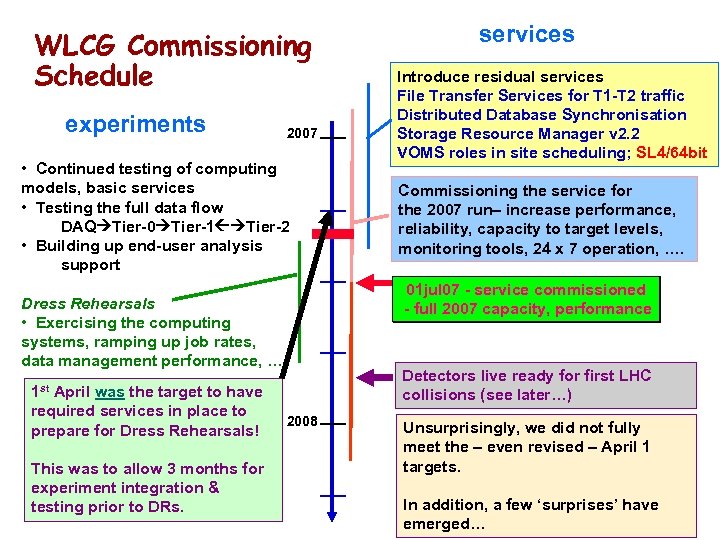

WLCG Commissioning Schedule

Experiments:
- 2007
  - Continued testing of computing models, basic services
  - Testing the full data flow DAQ → Tier-0 → Tier-1 → Tier-2
  - Building up end-user analysis support
- Dress Rehearsals
  - Exercising the computing systems, ramping up job rates, data management performance, …

Services:
- 1st April was the target to have the required services in place to prepare for the Dress Rehearsals! This was to allow 3 months for experiment integration & testing prior to the DRs.
- Introduce residual services: File Transfer Services for T1–T2 traffic; Distributed Database Synchronisation; Storage Resource Manager v2.2; VOMS roles in site scheduling; SL4/64-bit
- Commissioning the service for the 2007 run: increase performance, reliability and capacity to target levels; monitoring tools; 24×7 operation, …
- 01 Jul 07: service commissioned, full 2007 capacity and performance
- 2008: detectors live, ready for first LHC collisions (see later…)

Unsurprisingly, we did not fully meet the (even revised) April 1 targets. In addition, a few ‘surprises’ have emerged…
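
The arithmetic behind the 1 April target is simple: every week by which service readiness slips is taken directly from the experiments' integration window. Below is a minimal Python sketch. It assumes the Dress Rehearsals start on 1 July 2007, the date the schedule gives for the commissioned service. For comparison it also uses a hypothetical slipped readiness date of 15 May.

```python
from datetime import date

# Assumption: Dress Rehearsals start when the service is commissioned (1 July 2007).
DRESS_REHEARSAL_START = date(2007, 7, 1)
PLANNED_SERVICES_READY = date(2007, 4, 1)


def integration_window_days(services_ready: date, dr_start: date = DRESS_REHEARSAL_START) -> int:
    """Days available for experiment integration & testing before the Dress Rehearsals."""
    return (dr_start - services_ready).days


def window_lost(actual_ready: date, planned_ready: date = PLANNED_SERVICES_READY) -> int:
    """Days of integration time lost because services arrived late."""
    return integration_window_days(planned_ready) - integration_window_days(actual_ready)


print("planned window:", integration_window_days(PLANNED_SERVICES_READY), "days")
slipped = date(2007, 5, 15)  # hypothetical readiness date, for illustration only
print("window if ready on", slipped, ":", integration_window_days(slipped), "days")
print("lost:", window_lost(slipped), "days")
```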


What have we (not) achieved?

- Of the list of residual services, what precisely have we managed to deploy?
- How well have we done with respect to the main Q1 milestones?
- What are the key problems remaining?


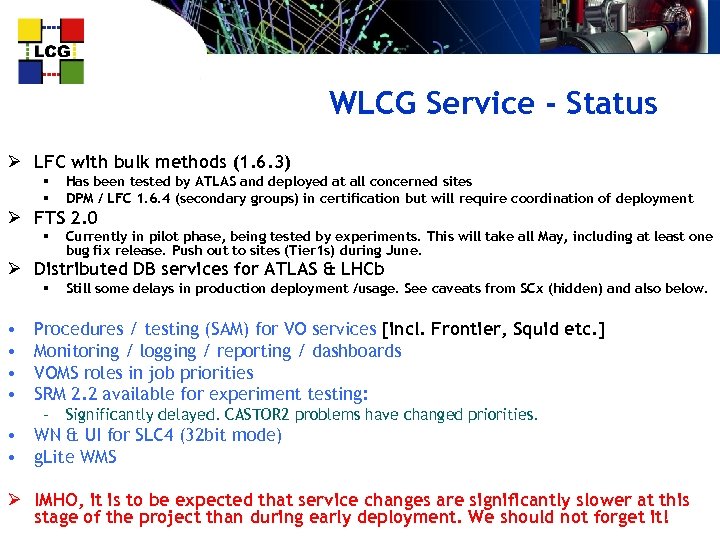

WLCG Service – Status

- LFC with bulk methods (1.6.3)
  - Has been tested by ATLAS and deployed at all concerned sites
  - DPM / LFC 1.6.4 (secondary groups) in certification, but will require coordination of deployment
- FTS 2.0
  - Currently in pilot phase, being tested by experiments. This will take all of May, including at least one bug-fix release. Push out to sites (Tier 1s) during June.
- Distributed DB services for ATLAS & LHCb
  - Still some delays in production deployment / usage. See caveats from SCx (hidden) and also below.
- Procedures / testing (SAM) for VO services [incl. Frontier, Squid etc.]
- Monitoring / logging / reporting / dashboards
- VOMS roles in job priorities
- SRM 2.2 available for experiment testing
  - Significantly delayed. CASTOR2 problems have changed priorities.
- WN & UI for SLC4 (32-bit mode)
- gLite WMS
- IMHO, it is to be expected that service changes are significantly slower at this stage of the project than during early deployment. We should not forget it!


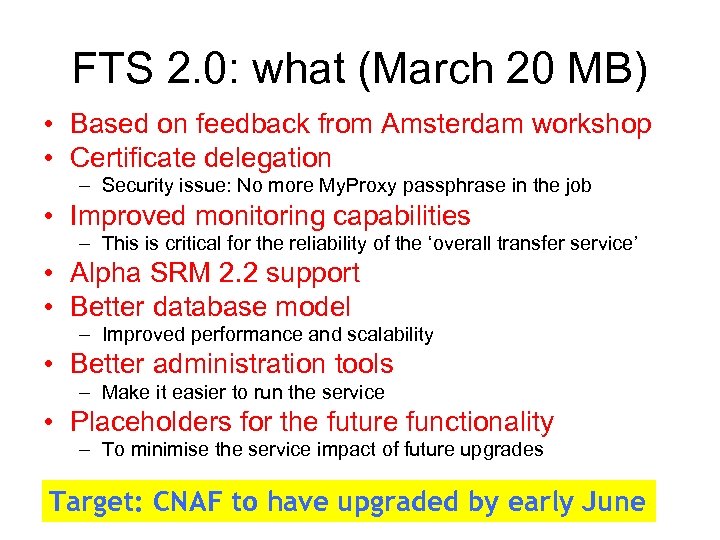

FTS 2.0: what (March 20 MB)

- Based on feedback from Amsterdam workshop
- Certificate delegation
  - Security issue: no more MyProxy passphrase in the job
- Improved monitoring capabilities
  - This is critical for the reliability of the ‘overall transfer service’
- Alpha SRM 2.2 support
- Better database model
  - Improved performance and scalability
- Better administration tools
  - Make it easier to run the service
- Placeholders for future functionality
  - To minimise the service impact of future upgrades

Target: CNAF to have upgraded by early June


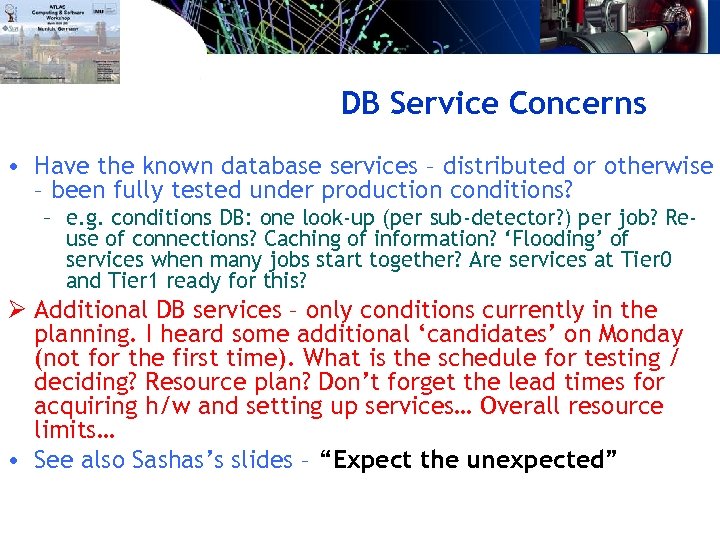

DB Service Concerns

- Have the known database services – distributed or otherwise – been fully tested under production conditions?
  - e.g. conditions DB: one look-up (per sub-detector?) per job? Reuse of connections? Caching of information? ‘Flooding’ of services when many jobs start together? Are services at Tier 0 and Tier 1 ready for this?
- Additional DB services: only the conditions DB is currently in the planning. I heard some additional ‘candidates’ on Monday (not for the first time). What is the schedule for testing / deciding? Resource plan? Don’t forget the lead times for acquiring h/w and setting up services… Overall resource limits…
- See also Sasha’s slides: “Expect the unexpected”


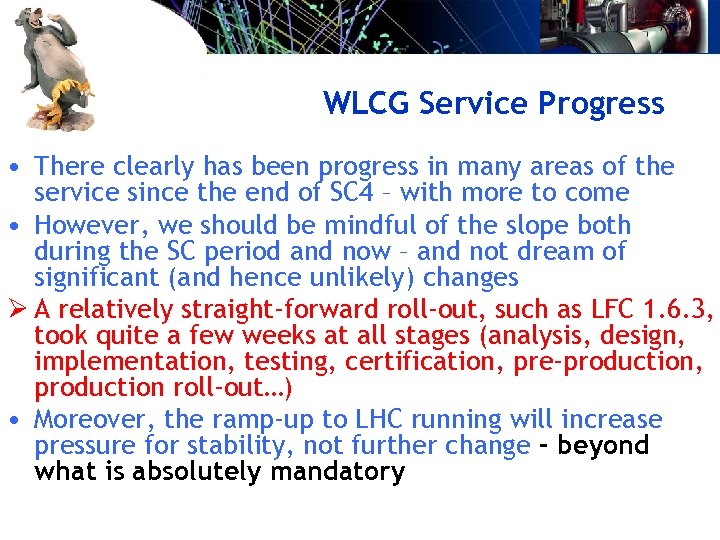

WLCG Service Progress

- There clearly has been progress in many areas of the service since the end of SC4, with more to come
- However, we should be mindful of the slope, both during the SC period and now, and not dream of significant (and hence unlikely) changes
  - A relatively straightforward roll-out, such as LFC 1.6.3, took quite a few weeks at all stages (analysis, design, implementation, testing, certification, pre-production, production roll-out…)
- Moreover, the ramp-up to LHC running will increase pressure for stability, not for further change beyond what is absolutely mandatory


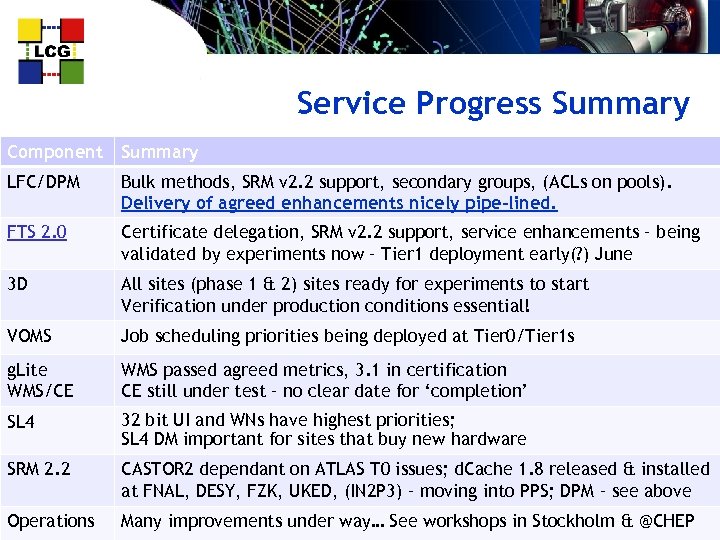

Service Progress Summary

| Component | Summary |
|---|---|
| LFC/DPM | Bulk methods, SRM v2.2 support, secondary groups, (ACLs on pools). Delivery of agreed enhancements nicely pipe-lined. |
| FTS 2.0 | Certificate delegation, SRM v2.2 support, service enhancements: being validated by experiments now; Tier 1 deployment early(?) June |
| 3D | All sites (phase 1 & 2) ready for experiments to start. Verification under production conditions essential! |
| VOMS | Job scheduling priorities being deployed at Tier 0/Tier 1s |
| gLite WMS/CE | WMS passed agreed metrics, 3.1 in certification. CE still under test: no clear date for ‘completion’ |
| SL4 | 32-bit UI and WNs have highest priorities; SL4 DM important for sites that buy new hardware |
| SRM 2.2 | CASTOR2 dependent on ATLAS T0 issues; dCache 1.8 released & installed at FNAL, DESY, FZK, UKED, (IN2P3), moving into PPS; DPM: see above |
| Operations | Many improvements under way… See workshops in Stockholm & @CHEP |


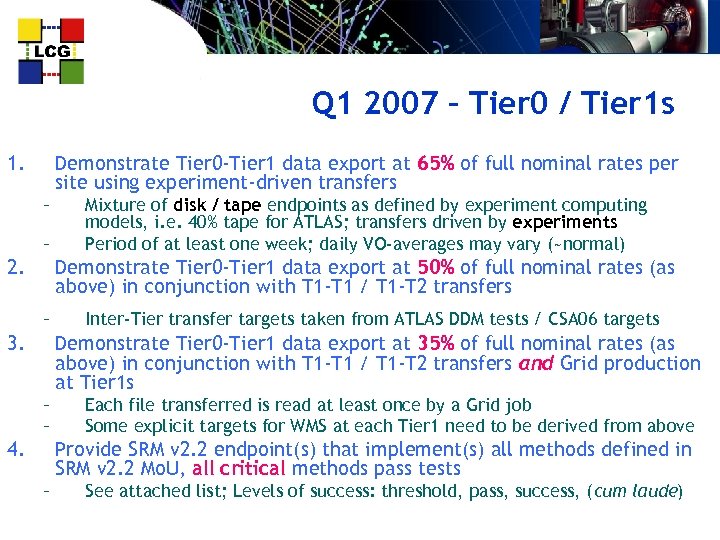

Q1 2007 – Tier 0 / Tier 1s

1. Demonstrate Tier 0–Tier 1 data export at 65% of full nominal rates per site using experiment-driven transfers
   - Mixture of disk / tape endpoints as defined by experiment computing models, i.e. 40% tape for ATLAS; transfers driven by experiments
   - Period of at least one week; daily VO-averages may vary (~normal)
2. Demonstrate Tier 0–Tier 1 data export at 50% of full nominal rates (as above) in conjunction with T1–T1 / T1–T2 transfers
   - Inter-Tier transfer targets taken from ATLAS DDM tests / CSA06 targets
3. Demonstrate Tier 0–Tier 1 data export at 35% of full nominal rates (as above) in conjunction with T1–T1 / T1–T2 transfers and Grid production at Tier 1s
   - Each file transferred is read at least once by a Grid job
   - Some explicit targets for WMS at each Tier 1 need to be derived from the above
4. Provide SRM v2.2 endpoint(s) that implement(s) all methods defined in the SRM v2.2 MoU; all critical methods pass tests
   - See attached list; levels of success: threshold, pass, success, (cum laude)
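
The transfer milestones reduce to a few simple checks per site. The Python sketch below encodes four of them:

- the three export levels (65%, 50% and 35% of nominal)
- the ATLAS disk/tape split (40% tape)
- the "at least one week" period
- the milestone-3 condition that every transferred file is read by a Grid job

The 200 MB/s nominal rate and the sample data are hypothetical. Real nominal rates come from the experiment computing models.

```python
from statistics import mean
from typing import Sequence, Set, Tuple

# Fraction of the full nominal per-site rate required by each Q1 2007 export milestone.
MILESTONE_FRACTION = {1: 0.65, 2: 0.50, 3: 0.35}


def milestone_target(nominal_mb_s: float, milestone: int) -> float:
    """Required sustained Tier 0 -> Tier 1 export rate for a site."""
    return MILESTONE_FRACTION[milestone] * nominal_mb_s


def disk_tape_split(rate_mb_s: float, tape_fraction: float) -> Tuple[float, float]:
    """Split a rate between disk and tape endpoints (e.g. 0.40 tape for ATLAS)."""
    return rate_mb_s * (1 - tape_fraction), rate_mb_s * tape_fraction


def sustained(daily_avg_mb_s: Sequence[float], target_mb_s: float, min_days: int = 7) -> bool:
    """At least one week of data whose average meets the target.

    Daily averages may vary (the milestone allows this), so only the
    period average is compared with the target here.
    """
    return len(daily_avg_mb_s) >= min_days and mean(daily_avg_mb_s) >= target_mb_s


def every_file_read(transferred: Set[str], read_by_jobs: Set[str]) -> bool:
    """Milestone 3: each transferred file is read at least once by a Grid job."""
    return transferred <= read_by_jobs


nominal = 200.0  # hypothetical nominal rate for one site, MB/s
target = milestone_target(nominal, 1)
print("milestone 1 target:", target, "MB/s; disk/tape:", disk_tape_split(target, 0.40))
week = [120, 150, 110, 140, 135, 125, 145]  # hypothetical daily averages, MB/s
print("sustained:", sustained(week, target))
```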


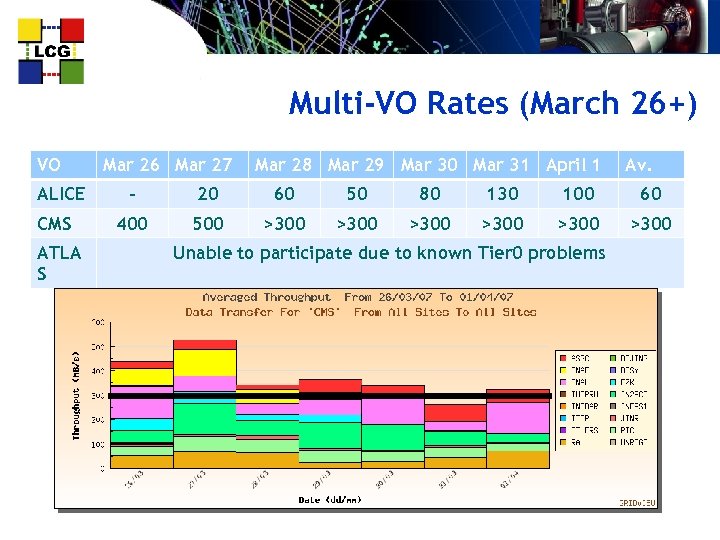

Multi-VO Rates (March 26+)

VOs: ALICE, CMS, ATLAS. Columns: Mar 26, Mar 27, Mar 28, Mar 29, Mar 30, Mar 31, April 1, Av.

Rates: -, 20, 60, 50, 80, 130, 100, 60, 400, 500, >300, >300

Unable to participate due to known Tier 0 problems


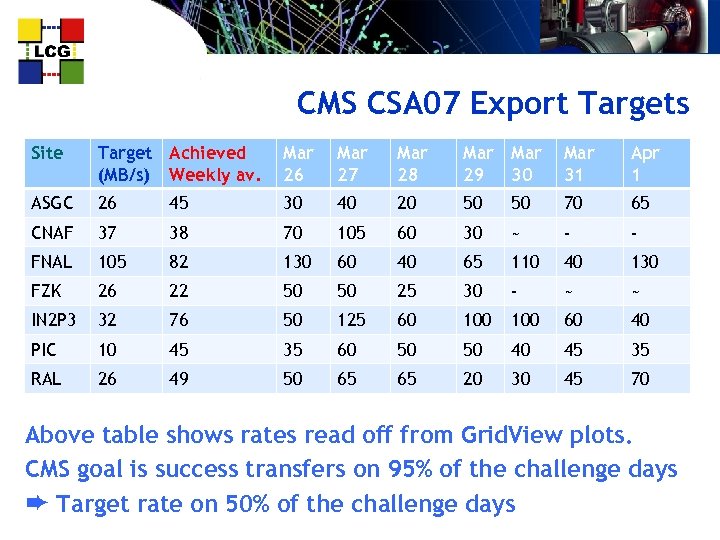

CMS CSA07 Export Targets

| Site | Target (MB/s) | Weekly av. (MB/s) | Daily rates, Mar 26 – Apr 1 (MB/s) |
|---|---|---|---|
| ASGC | 26 | 45 | 30, 40, 20, 50, 50, 70, 65 |
| CNAF | 37 | 38 | 70, 105, 60, 30, ~, -, - |
| FNAL | 105 | 82 | 130, 60, 40, 65, 110, 40, 130 |
| FZK | 26 | 22 | 50, 50, 25, 30, -, ~, ~ |
| IN2P3 | 32 | 76 | 50, 125, 60, 100, 60, 40 |
| PIC | 10 | 45 | 35, 60, 50, 50, 40, 45, 35 |
| RAL | 26 | 49 | 50, 65, 65, 20, 30, 45, 70 |

The table above shows rates read off from GridView plots. CMS goal: successful transfers on 95% of the challenge days ➨ target rate on 50% of the challenge days.
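
The Python sketch below shows one way to read the CMS goal against the table. It rests on three assumptions:

- A day counts as successful if any transfers were recorded.
- "Target rate" means a daily rate at or above the site target.
- The ‘~’ and ‘-’ cells denote days without usable transfers.

IN2P3 is left out because one of its daily values is missing. This is an illustrative reading, not the CMS evaluation procedure.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

Rate = Optional[float]  # None = '~' or '-' in the table (assumed: no usable transfers)


@dataclass
class SiteWeek:
    name: str
    target_mb_s: float
    daily_mb_s: List[Rate]  # Mar 26 .. Apr 1


SITES = [
    SiteWeek("ASGC", 26, [30, 40, 20, 50, 50, 70, 65]),
    SiteWeek("CNAF", 37, [70, 105, 60, 30, None, None, None]),
    SiteWeek("FNAL", 105, [130, 60, 40, 65, 110, 40, 130]),
    SiteWeek("FZK", 26, [50, 50, 25, 30, None, None, None]),
    SiteWeek("PIC", 10, [35, 60, 50, 50, 40, 45, 35]),
    SiteWeek("RAL", 26, [50, 65, 65, 20, 30, 45, 70]),
]


def fraction_of_days(site: SiteWeek, ok: Callable[[Rate], bool]) -> float:
    """Share of challenge days for which `ok` holds."""
    return sum(1 for rate in site.daily_mb_s if ok(rate)) / len(site.daily_mb_s)


def meets_cms_goal(site: SiteWeek, success_share: float = 0.95, target_share: float = 0.50) -> bool:
    transferred = fraction_of_days(site, lambda r: r is not None and r > 0)
    at_target = fraction_of_days(site, lambda r: r is not None and r >= site.target_mb_s)
    return transferred >= success_share and at_target >= target_share


for site in SITES:
    print(f"{site.name:5s} goal met: {meets_cms_goal(site)}")
```

Under these assumptions, ASGC, PIC and RAL meet both criteria for the week. CNAF and FZK fail on the number of transfer days, and FNAL fails on the number of days at target.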


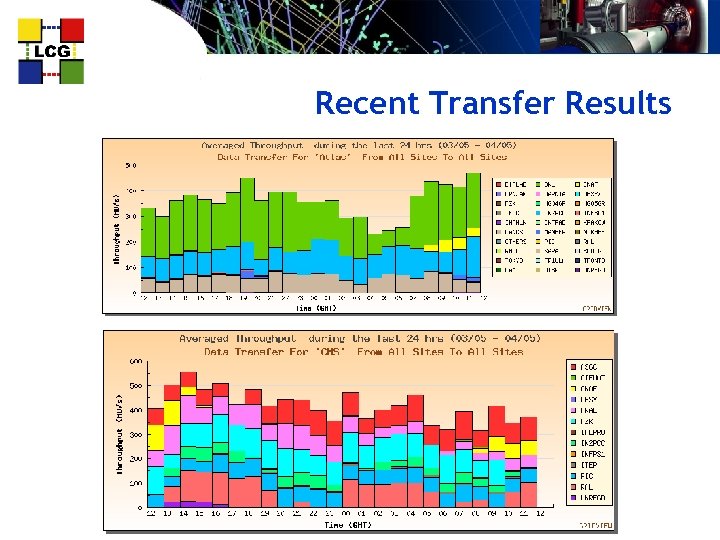

Recent Transfer Results


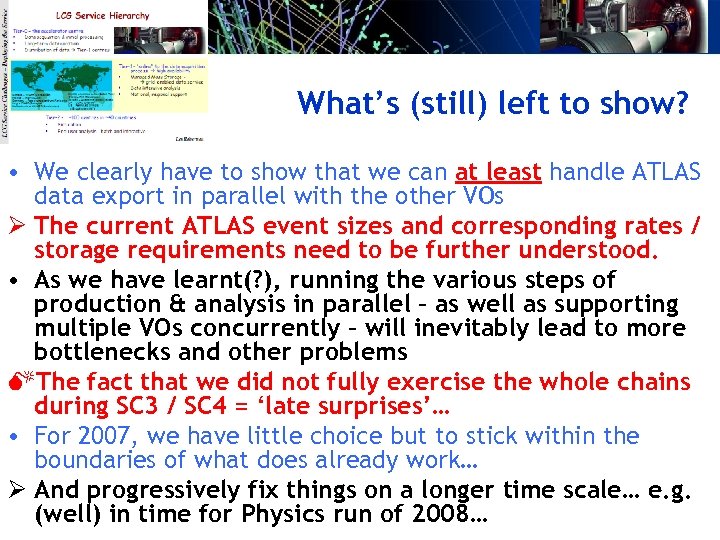

What’s (still) left to show?

- We clearly have to show that we can at least handle ATLAS data export in parallel with the other VOs
  - The current ATLAS event sizes and corresponding rates / storage requirements need to be further understood.
- As we have learnt(?), running the various steps of production & analysis in parallel, as well as supporting multiple VOs concurrently, will inevitably lead to more bottlenecks and other problems
  - Not fully exercising the whole chains during SC3 / SC4 = ‘late surprises’…
- For 2007, we have little choice but to stay within the boundaries of what already works…
  - And progressively fix things on a longer time scale… e.g. (well) in time for the Physics run of 2008…


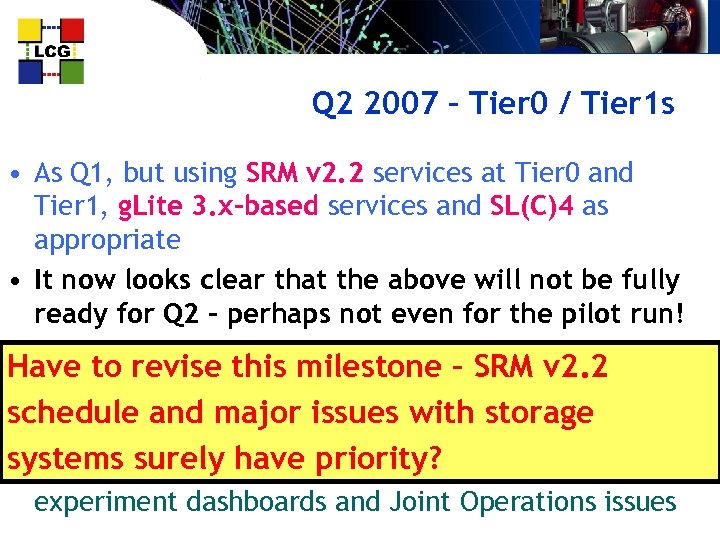

Q2 2007 – Tier 0 / Tier 1s

- As Q1, but using SRM v2.2 services at Tier 0 and Tier 1, gLite 3.x-based services and SL(C)4 as appropriate
- It now looks clear that the above will not be fully ready for Q2, perhaps not even for the pilot run! Have to revise this milestone.
- Provide services required for Q3 dress rehearsals
  - Basically, what we had at end of SC4 + Distributed Database Services; LFC bulk methods; FTS 2.0; VO-specific services, SAM tests, experiment dashboards
  - SRM v2.2 schedule and major issues with storage systems surely have priority?
  - Work also ongoing on Joint Operations issues


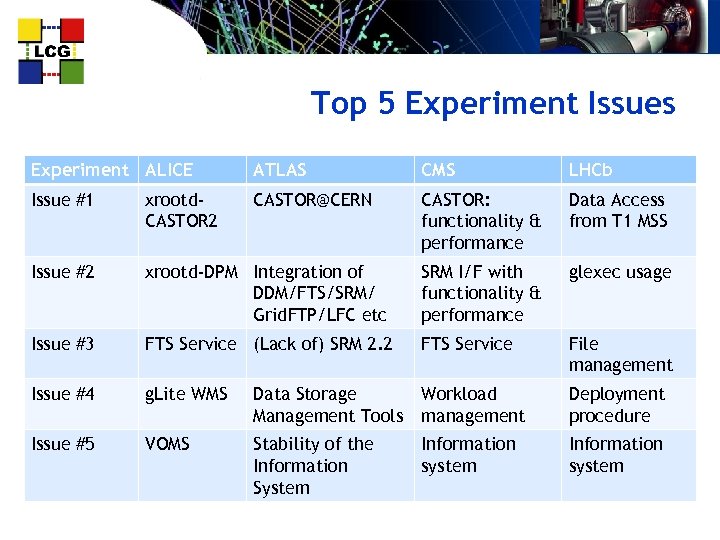

Top 5 Experiment Issues

| Experiment | ALICE | ATLAS | CMS | LHCb |
|---|---|---|---|---|
| Issue #1 | xrootd-CASTOR2 | CASTOR@CERN | CASTOR: functionality & performance | Data access from T1 MSS |
| Issue #2 | xrootd-DPM | Integration of DDM/FTS/SRM/GridFTP/LFC etc. | SRM I/F with functionality & performance | glexec usage |
| Issue #3 | FTS Service | (Lack of) SRM 2.2 | FTS Service | File management |
| Issue #4 | gLite WMS | Data Storage Management Tools | Workload management | Deployment procedure |
| Issue #5 | VOMS | Stability of the Information System | Information system | |


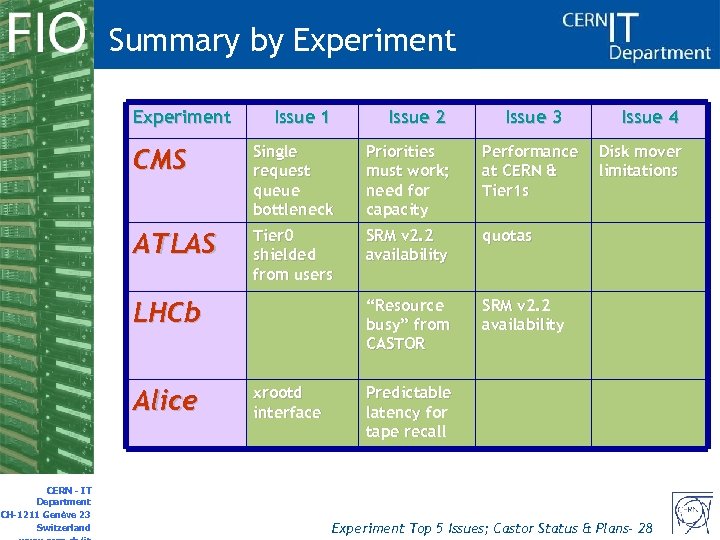

Summary by Experiment (Issues 1–4)

- CMS: Single request queue bottleneck; Priorities must work; need for capacity; Performance at CERN & Tier 1s
- ATLAS: Tier 0 shielded from users; SRM v2.2 availability; quotas
- LHCb: “Resource busy” from CASTOR; SRM v2.2 availability
- Alice: xrootd interface
- Also listed under Issues 3/4: Disk mover limitations; Predictable latency for tape recall

CERN IT Department, CH-1211 Genève 23, Switzerland – Experiment Top 5 Issues; CASTOR Status & Plans


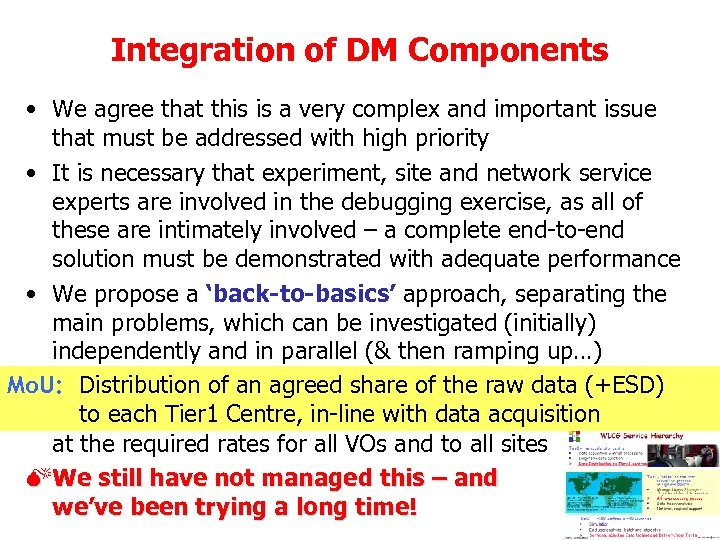

Integration of DM Components

- We agree that this is a very complex and important issue that must be addressed with high priority
- Experiment, site and network service experts must all be involved in the debugging exercise, because each of them is intimately involved; a complete end-to-end solution must be demonstrated with adequate performance
- We propose a ‘back-to-basics’ approach. It separates the main problems so that they can be investigated (initially) independently and in parallel (& then ramping up…)
- We should not forget the role of the host lab in the WLCG model: we must be able to distribute data at the required rates for all VOs and to all sites
  - MoU: Distribution of an agreed share of raw data (+ESD) to each Tier 1 Centre, in-line with data acquisition
- We still have not managed this, and we’ve been trying for a long time!


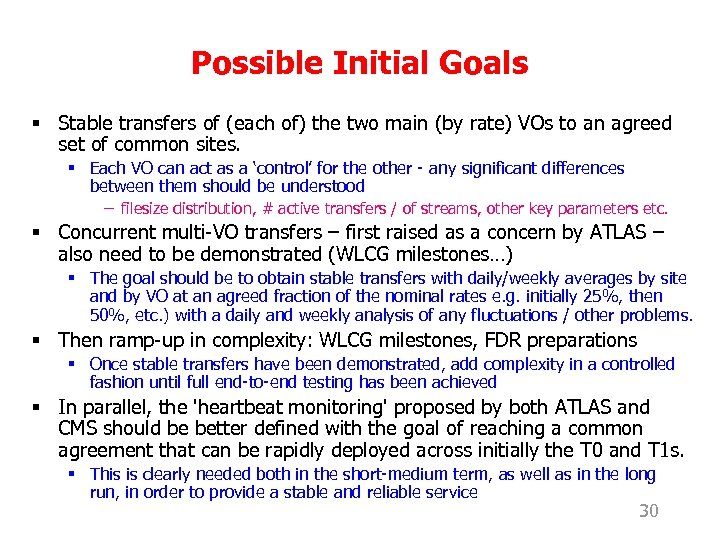

Possible Initial Goals

- Stable transfers of (each of) the two main (by rate) VOs to an agreed set of common sites
- Each VO can act as a ‘control’ for the other. Any significant differences between them should be understood (file-size distribution, # of active transfers / # of streams, other key parameters etc.)
- Concurrent multi-VO transfers, first raised as a concern by ATLAS, also need to be demonstrated (WLCG milestones…)
- The goal should be to obtain stable transfers with daily/weekly averages by site and by VO at an agreed fraction of the nominal rates (e.g. initially 25%, then 50%, etc.). Any fluctuations / other problems should be analysed daily and weekly.
- Then ramp up in complexity: WLCG milestones, FDR preparations
- Once stable transfers have been demonstrated, add complexity in a controlled fashion until full end-to-end testing has been achieved
- In parallel, the 'heartbeat monitoring' proposed by both ATLAS and CMS should be better defined. The goal is a common agreement that can be rapidly deployed, initially across the T0 and T1s.
  - This is clearly needed both in the short-medium term and in the long run, in order to provide a stable and reliable service
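
The ramp-up logic in these goals can be sketched in Python. Transfers must first show stable daily/weekly averages at an agreed fraction of nominal before moving to the next step. The stability criterion used here is an assumption: the weekly average is at or above the threshold and no single day falls below it. The nominal rate and daily values in the example are also assumptions. Only the 25% and 50% steps come from the text.

```python
from statistics import mean
from typing import Dict, Sequence

RAMP = (0.25, 0.50)  # agreed fractions of nominal; later steps ("etc.") not specified here


def stability_report(daily_mb_s: Sequence[float], nominal_mb_s: float, fraction: float) -> Dict:
    """Daily/weekly view of one (site, VO) pair against fraction * nominal."""
    threshold = fraction * nominal_mb_s
    weekly_avg = mean(daily_mb_s)
    return {
        "threshold": threshold,
        "weekly_avg": weekly_avg,
        "weekly_ok": weekly_avg >= threshold,
        # Days to look at in the daily analysis of fluctuations.
        "days_below": [day for day, rate in enumerate(daily_mb_s) if rate < threshold],
    }


def next_fraction(current: float, report: Dict, ramp: Sequence[float] = RAMP) -> float:
    """Move to the next step only once the current one is stable (assumed criterion)."""
    stable = report["weekly_ok"] and not report["days_below"]
    higher = [f for f in ramp if f > current]
    return higher[0] if stable and higher else current


# Hypothetical week for one site and VO, nominal 100 MB/s.
report = stability_report([30, 28, 22, 31, 27, 29, 26], nominal_mb_s=100, fraction=0.25)
print(report)
print("next step:", next_fraction(0.25, report))
```

In this hypothetical week the average clears the 25% threshold, but day 2 dips below it. The sketch therefore holds at 25% until the dip is understood.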


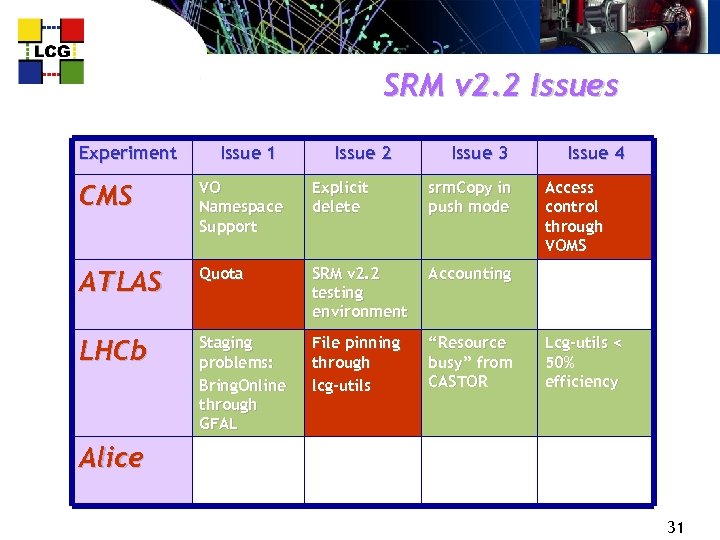

SRM v2.2 Issues

| Experiment | Issue 1 | Issue 2 | Issue 3 |
|---|---|---|---|
| CMS | VO Namespace Support | Explicit delete | srmCopy in push mode |
| ATLAS | Quota | SRM v2.2 testing environment | Accounting |
| LHCb | Staging problems: BringOnline through GFAL | File pinning through lcg-utils | “Resource busy” from CASTOR |
| ALICE | | | |

Issue 4: Access control through VOMS; lcg-utils < 50% efficiency


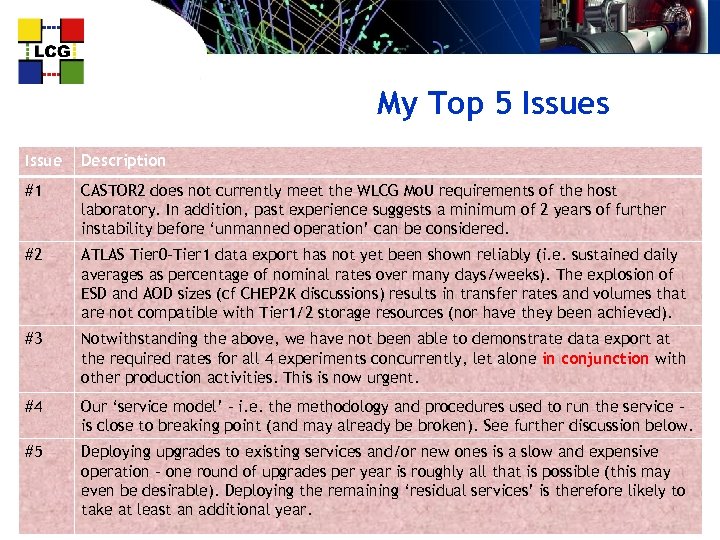

My Top 5 Issues

| Issue | Description |
|---|---|
| #1 | CASTOR2 does not currently meet the WLCG MoU requirements of the host laboratory. In addition, past experience suggests a minimum of 2 years of further instability before ‘unmanned operation’ can be considered. |
| #2 | ATLAS Tier 0–Tier 1 data export has not yet been shown to be reliable (i.e. sustained daily averages as a percentage of nominal rates over many days/weeks). The explosion of ESD and AOD sizes (cf. CHEP 2K discussions) results in transfer rates and volumes that are not compatible with Tier 1/2 storage resources (nor have they been achieved). |
| #3 | Notwithstanding the above, we have not been able to demonstrate data export at the required rates for all 4 experiments concurrently, let alone in conjunction with other production activities. This is now urgent. |
| #4 | Our ‘service model’, i.e. the methodology and procedures used to run the service, is close to breaking point (and may already be broken). See further discussion below. |
| #5 | Deploying upgrades to existing services and/or new ones is a slow and expensive operation: one round of upgrades per year is roughly all that is possible (this may even be desirable). Deploying the remaining ‘residual services’ is therefore likely to take at least an additional year. |


WLCG Service Interventions: Status, Procedures & Directions
Jamie Shiers, CERN, March 22 2007


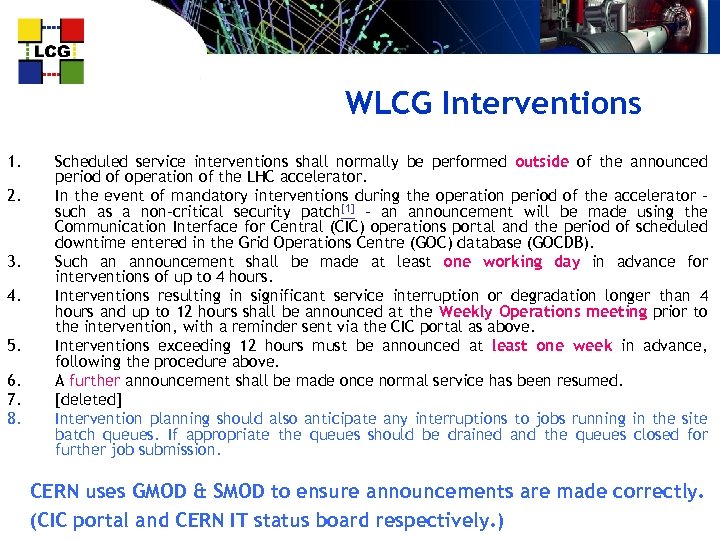

WLCG Interventions
1. Scheduled service interventions shall normally be performed outside of the announced period of operation of the LHC accelerator.
2. In the event of mandatory interventions during the operation period of the accelerator, such as a non-critical security patch[1], an announcement will be made using the Communication Interface for Central (CIC) operations portal and the period of scheduled downtime entered in the Grid Operations Centre (GOC) database (GOCDB).
3. Such an announcement shall be made at least one working day in advance for interventions of up to 4 hours.
4. Interventions resulting in significant service interruption or degradation longer than 4 hours and up to 12 hours shall be announced at the Weekly Operations meeting prior to the intervention, with a reminder sent via the CIC portal as above.
5. Interventions exceeding 12 hours must be announced at least one week in advance, following the procedure above.
6. A further announcement shall be made once normal service has been resumed.
7. [deleted]
8. Intervention planning should also anticipate any interruptions to jobs running in the site batch queues. If appropriate, the queues should be drained and closed to further job submission.
CERN uses GMOD & SMOD to ensure announcements are made correctly (CIC portal and CERN IT status board respectively).
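The lead times in rules 3 to 6 depend only on the expected length of the intervention, so they can be encoded as a small lookup. The Python sketch below is hypothetical (the function name and the wording of each step are ours); only the 4-hour and 12-hour thresholds come from the rules, and we assume a duration of exactly 4 or 12 hours falls into the shorter class, reading "up to" as inclusive.

```python
def announcement_requirements(duration_hours: float) -> list[str]:
    """Announcement steps for a scheduled intervention during LHC operation.

    Thresholds follow the WLCG intervention rules; the step wording is
    illustrative. Exactly 4 (or 12) hours is treated as 'up to' that limit.
    """
    if duration_hours <= 0:
        raise ValueError("duration_hours must be positive")
    steps = ["enter the scheduled downtime in GOCDB"]
    if duration_hours <= 4:
        steps.append("announce via the CIC portal at least 1 working day in advance")
    elif duration_hours <= 12:
        steps.append("announce at the Weekly Operations meeting before the intervention")
        steps.append("send a reminder via the CIC portal")
    else:
        steps.append("announce at least 1 week in advance")
    # Rule 6 applies whatever the duration.
    steps.append("announce when normal service has resumed")
    return steps


for hours in (2, 8, 24):
    print(hours, announcement_requirements(hours))
```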


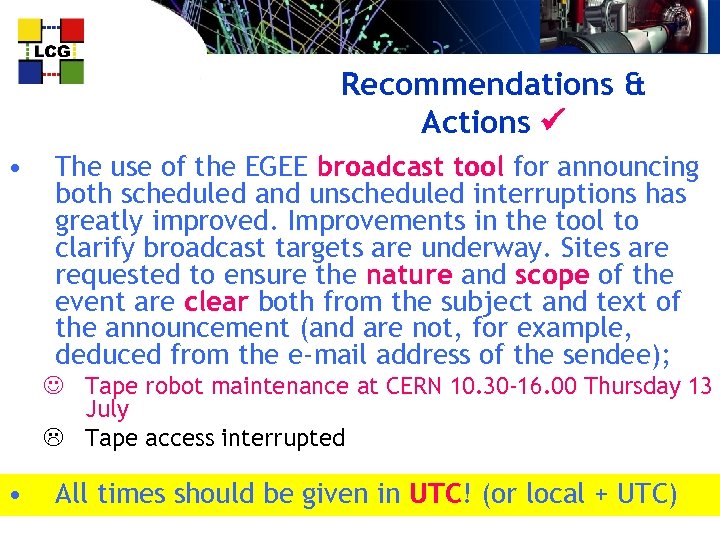

Recommendations & Actions
• The use of the EGEE broadcast tool for announcing both scheduled and unscheduled interruptions has greatly improved. Improvements to the tool to clarify broadcast targets are underway.
• Sites are requested to ensure that the nature and scope of the event are clear from both the subject and the text of the announcement (rather than having to be deduced, for example, from the e-mail address of the sender):
 – Good: “Tape robot maintenance at CERN 10:30-16:00 Thursday 13 July”
 – Poor: “Tape access interrupted”
• All times should be given in UTC! (or local time + UTC)
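Giving local time plus UTC can be automated when the announcement is composed. This is a minimal Python sketch using only the standard `datetime` module; the function name is hypothetical, and we assume CERN local time in July is CEST (UTC+2), using a fixed offset so the sketch does not depend on the system time-zone database. The year 2006 is also an assumption: it is a year in which 13 July fell on a Thursday.

```python
from datetime import datetime, timedelta, timezone

# Assumption: CERN local time in July is CEST (UTC+2). A fixed offset avoids
# depending on the system's time-zone database.
CEST = timezone(timedelta(hours=2), "CEST")


def announcement_window(start: datetime, end: datetime) -> str:
    """Render a same-day intervention window as local time followed by UTC."""
    if start.tzinfo is None or end.tzinfo is None:
        raise ValueError("use timezone-aware datetimes")
    start_utc = start.astimezone(timezone.utc)
    end_utc = end.astimezone(timezone.utc)
    return (f"{start:%A %d %B %Y} {start:%H:%M}-{end:%H:%M} {start.tzname()} "
            f"({start_utc:%H:%M}-{end_utc:%H:%M} UTC)")


# The example subject line, with an assumed year.
start = datetime(2006, 7, 13, 10, 30, tzinfo=CEST)
end = datetime(2006, 7, 13, 16, 0, tzinfo=CEST)
print("Tape robot maintenance at CERN " + announcement_window(start, end))
# Tape robot maintenance at CERN Thursday 13 July 2006 10:30-16:00 CEST (08:30-14:00 UTC)
```

Windows that cross midnight would need the end date printed as well; the sketch deliberately handles only same-day windows.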


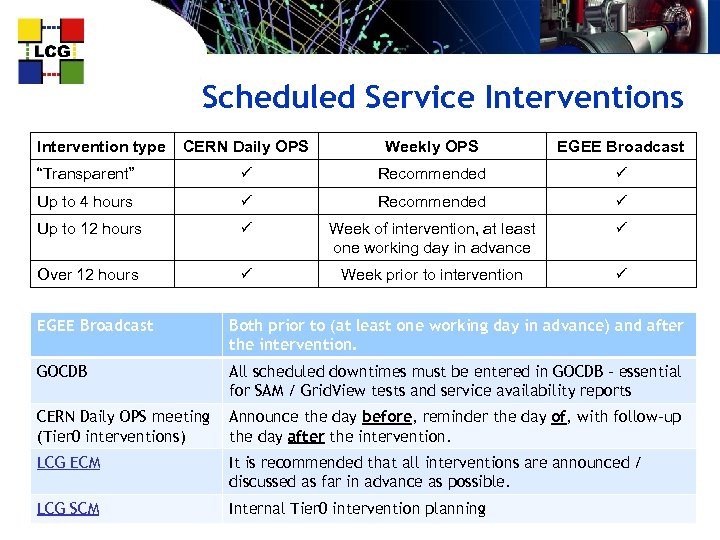

Scheduled Service Interventions
Weekly OPS meeting announcement, by intervention type:
• “Transparent”: recommended
• Up to 4 hours: recommended
• Up to 12 hours: in the week of the intervention, at least one working day in advance
• Over 12 hours: in the week prior to the intervention
Other channels:
• EGEE Broadcast: both prior to (at least one working day in advance) and after the intervention.
• GOCDB: all scheduled downtimes must be entered in GOCDB; this is essential for SAM / GridView tests and service availability reports.
• CERN Daily OPS meeting (Tier 0 interventions): announce the day before, send a reminder on the day, and follow up the day after the intervention.
• LCG ECM: it is recommended that all interventions are announced / discussed as far in advance as possible.
• LCG SCM: internal Tier 0 intervention planning.
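Whether an announcement was made "at least one working day in advance" can be checked mechanically. The Python sketch below makes one assumption explicit: a working day counts if it is a Monday to Friday date on or after the announcement date and strictly before the intervention date. Public holidays are ignored, and the function names are hypothetical.

```python
from datetime import date, timedelta


def working_days_before(announced: date, intervention: date) -> int:
    """Count Monday-Friday dates in [announced, intervention); holidays are ignored."""
    count = 0
    day = announced
    while day < intervention:
        if day.weekday() < 5:  # Monday=0 ... Friday=4
            count += 1
        day += timedelta(days=1)
    return count


def meets_one_working_day_rule(announced: date, intervention: date) -> bool:
    return working_days_before(announced, intervention) >= 1


# Friday announcement for a Monday intervention passes; a Saturday one does not.
print(meets_one_working_day_rule(date(2006, 7, 14), date(2006, 7, 17)))  # True
print(meets_one_working_day_rule(date(2006, 7, 15), date(2006, 7, 17)))  # False
```

A site calendar of public holidays could be subtracted in the loop if a stricter interpretation is needed.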


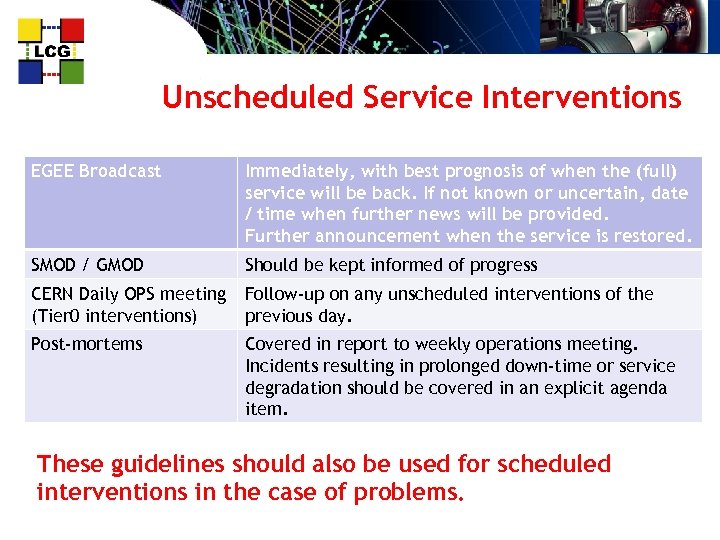

Unscheduled Service Interventions
• EGEE Broadcast: immediately, with the best prognosis of when the (full) service will be back. If this is not known or is uncertain, give the date / time when further news will be provided. Make a further announcement when the service is restored.
• SMOD / GMOD: should be kept informed of progress.
• CERN Daily OPS meeting (Tier 0 interventions): follow-up on any unscheduled interventions of the previous day.
• Post-mortems: covered in the report to the weekly operations meeting. Incidents resulting in prolonged down-time or service degradation should be covered in an explicit agenda item.
These guidelines should also be used for scheduled interventions in the case of problems.
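The broadcast rule (a prognosis if one exists, otherwise a time for the next update) lends itself to a message template. This is a hypothetical Python sketch: the function name, the "UNSCHEDULED:" subject prefix and the example site and service are ours, not part of the EGEE broadcast tool.

```python
from datetime import datetime, timezone
from typing import Optional


def unscheduled_broadcast(site: str, service: str,
                          back_by: Optional[datetime] = None,
                          next_news_by: Optional[datetime] = None) -> str:
    """Compose the immediate broadcast for an unscheduled interruption.

    Give the best prognosis for when the service will be back; if that is
    unknown, give the time by which further news will be provided instead.
    Datetimes must be timezone-aware; they are printed in UTC.
    """
    if back_by is None and next_news_by is None:
        raise ValueError("need a prognosis or a time for the next update")
    for t in (back_by, next_news_by):
        if t is not None and t.tzinfo is None:
            raise ValueError("use timezone-aware datetimes")
    subject = f"UNSCHEDULED: {service} at {site} interrupted"
    if back_by is not None:
        detail = f"Service expected back by {back_by.astimezone(timezone.utc):%Y-%m-%d %H:%M} UTC"
    else:
        detail = f"Prognosis unknown; further news by {next_news_by.astimezone(timezone.utc):%Y-%m-%d %H:%M} UTC"
    return f"{subject}\n{detail}"


print(unscheduled_broadcast("CERN", "CASTOR",
                            next_news_by=datetime(2007, 3, 22, 14, 0, tzinfo=timezone.utc)))
```

The follow-up announcement when the service is restored would be a second message and is not shown here.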


GMOD Role
• The main function of the GMOD is to ensure that problems reported for CERN-IT-GD managed machines are properly followed up and solved.
• Also performs all EGEE broadcasts for CERN services
 ➢ Wiki page: https://twiki.cern.ch/twiki/bin/view/LCG/GmodRoleDescription
• MAIL: it-dep-gd-gmod@cern.ch
• PHONE:
 – primary: 164111 (+41764874111)
 – backup: 164222 (+41764874222)


WLCG Service Meetings
• CERN has a weekday operations meeting at 09:00
 – Dial-in access {sites, experiments} is possible / welcomed: +41227676000, access code 017 5012 (leader 017 5011)
 – A tradition of at least 30 years: it used to be held per mainframe, and has been merged since early LEP
• Regular LCG Service Coordination Meeting
 – Tier 0 services, their impact Grid-wide + roll-out of fixes / features
• LCG Experiment Coordination Meeting
 – Dial-in access: +41227676000, access code 016 6222 (leader 016 6111)
 – Medium-term planning, e.g. FDR preparations & requirements
 – Checks the experiments’ Resource and Production plans
 – Discusses issues such as ‘VO-shares’ on FTS channels
 – Medium-term interventions, impact & scheduling
 – Prepares input to the WLCG-EGEE-OSG operations meeting


Logging Service Operations
• In order to help track / resolve problems, service operations should be logged in the ‘site operations log’
 – Examples include adding / rebooting nodes, tape movements between robots, restarting daemons, deploying new versions of m/w or configuration files, etc.
 – Any interventions by on-call teams / service managers
• At CERN, these logs are reviewed daily at 09:00
• Cross-correlation between problems & operations is key to rapid problem identification & resolution
 – The lack of logging has in the past led to problems that were extremely costly in time & effort
➢ For cross-site issues, i.e. Grid issues, UTC is essential
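The value of a UTC operations log is that entries from different sites can be lined up against the time a problem was seen. Below is a minimal Python sketch of that cross-correlation, assuming a simple in-memory log; the entry type, function names, fixed UTC offsets and example actions are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class OpsLogEntry:
    when_utc: datetime
    site: str
    action: str


def log_operation(when: datetime, site: str, action: str) -> OpsLogEntry:
    """Record an operation, normalising its timestamp to UTC."""
    if when.tzinfo is None:
        raise ValueError("timestamps must be timezone-aware")
    return OpsLogEntry(when.astimezone(timezone.utc), site, action)


def operations_before(log: list[OpsLogEntry], problem_time: datetime,
                      window: timedelta) -> list[OpsLogEntry]:
    """Entries logged within `window` before a problem, most recent first."""
    start = problem_time - window
    hits = [e for e in log if start <= e.when_utc <= problem_time]
    return sorted(hits, key=lambda e: e.when_utc, reverse=True)


# Hypothetical entries from two sites logging with different local offsets.
utc_plus2 = timezone(timedelta(hours=2))
utc_minus4 = timezone(timedelta(hours=-4))
log = [
    log_operation(datetime(2007, 3, 22, 10, 0, tzinfo=utc_plus2), "CERN", "restarted daemon"),
    log_operation(datetime(2007, 3, 22, 3, 30, tzinfo=utc_minus4), "BNL", "new FTS config"),
    log_operation(datetime(2007, 3, 22, 5, 0, tzinfo=timezone.utc), "CERN", "tape moved"),
]
problem = datetime(2007, 3, 22, 8, 15, tzinfo=timezone.utc)
for entry in operations_before(log, problem, timedelta(hours=1)):
    print(f"{entry.when_utc:%H:%M} UTC {entry.site}: {entry.action}")
# 08:00 UTC CERN: restarted daemon
# 07:30 UTC BNL: new FTS config
```

Without the normalisation to UTC, the two sites' 10:00 and 03:30 entries would look hours apart when in fact they were 30 minutes apart.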


(WLCG) Site Contact Details
wlcg-tier1-contacts@cern.ch includes all of the following except GGUS.
Site: e-mail; emergency contact phone number
(GGUS: helpdesk@ggus.org; +49 7247 82 8383)
• CERN: grid-cern-prod-admins@cern.ch; +41 22 767 5011 (operators, 24 x 7)
• ASGC: asgc-t1@lists.grid.sinica.edu.tw; +886-2-2788-0058 ext 1005
• BNL: bnl-sc@rcf.rhic.bnl.gov; +1 631-344 5480
• FNAL: cms-t1@fnal.gov; +1 630-840-2345 (helpdesk, 24 x 7)
• GRIDKA: lcg-admin@listserv.fzk.de; +49-7247-82-8383
• IN2P3: grid.admin@cc.in2p3.fr; +33 4 78 93 08 80
• INFN: tier1-staff@infn.it, sc@infn.it; +39 051 6092851
• NDGF: sc-tech@ndgf.org
• PIC: lcg.support@pic.es; +34 93 581 3308
• RAL: lcg-support@gridpp.rl.ac.uk; +44 (0) 1235 446981
• SARA/NIKHEF: grid.support@sara.nl; +31 20 592 8008
• TRIUMF: lcg_support@triumf.ca; +1 604 222 7333 (main control room, 24 x 7)


Site Offline Procedure
1. If a site goes completely off-line (e.g. major power or network failure), it should contact its Regional Operations Centre (ROC) by phone and ask them to make the broadcast.
2. If the site is also the ROC, then the ROC should phone one of the other ROCs and ask them to make the broadcast.
3. We already have a backup grid operator-on-duty team each week, so if the primary team goes off-line, they call the backup team, who takes over.
This covers WLCG Tier 0, Tier 1 and Tier 2 sites (as well as all EGEE sites).
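Steps 1 and 2 form a simple escalation rule: phone your own ROC, unless you are the ROC, in which case phone another one. The Python sketch below encodes just that rule; the site-to-ROC mapping and names are hypothetical, and picking the first other ROC alphabetically is an arbitrary assumption standing in for a real on-call rota.

```python
def broadcast_requester(site: str, roc_of: dict[str, str], rocs: set[str]) -> str:
    """Return whom an off-line site should phone to make the broadcast.

    Step 1: phone the site's own ROC.
    Step 2: if the site is itself the ROC, phone one of the other ROCs
    (which one is arbitrary here: the first in alphabetical order).
    """
    own_roc = roc_of[site]
    if own_roc != site:
        return own_roc
    others = sorted(r for r in rocs if r != site)
    if not others:
        raise RuntimeError("no other ROC available")
    return others[0]


# Hypothetical topology: SiteX belongs to ROC-A; ROC-B is both a site and a ROC.
roc_of = {"SiteX": "ROC-A", "ROC-B": "ROC-B"}
rocs = {"ROC-A", "ROC-B", "ROC-C"}
print(broadcast_requester("SiteX", roc_of, rocs))  # ROC-A
print(broadcast_requester("ROC-B", roc_of, rocs))  # ROC-A
```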


Interventions - Summary
➢ Essential to consult / inform / log systematically and clearly
➢ Standard tools and procedures should always be used
➢ Templates for service announcements under development
➢ Future goal: make the majority of routine interventions ‘transparent’


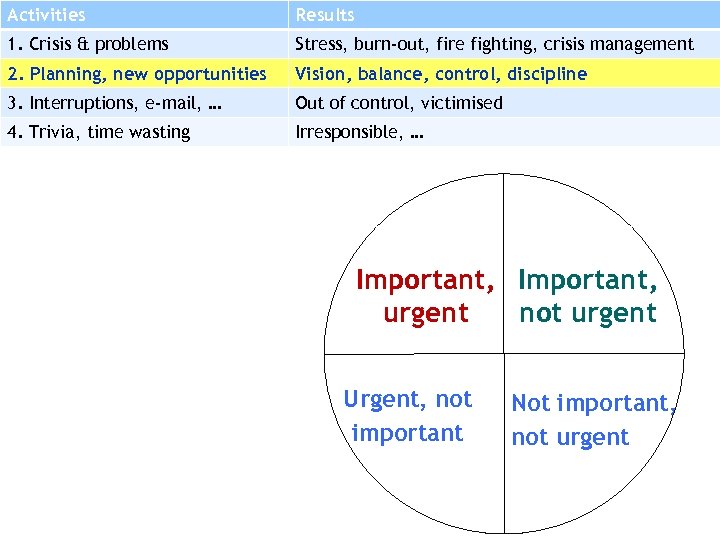

Activities and results, by importance and urgency:
1. Important, urgent: Crisis & problems → Stress, burn-out, fire fighting, crisis management
2. Important, not urgent: Planning, new opportunities → Vision, balance, control, discipline
3. Urgent, not important: Interruptions, e-mail, … → Out of control, victimised
4. Not important, not urgent: Trivia, time wasting → Irresponsible, …


Expecting the un-expected
• The Expected:
 – When services / servers don’t respond or return an invalid status / message;
 – When users use a new client against an old server;
 – When the air-conditioning / power fails (again & again);
 – When 1000 batch jobs start up simultaneously and clobber the system;
 – A disruptive and urgent security incident… (again, we’ve forgotten…)
• The Un-expected:
 – When disks fail and you have to recover from backup, and the tapes have been overwritten;
 – When a ‘transparent’ intervention results in long-term service instability and (significantly) degraded performance;
 – When a service engineer puts a Coke into a machine to ‘warm it up’…
• The Truly Un-expected:
 – When a fishing trawler cuts a trans-Atlantic network cable;
 – When a tsunami does the equivalent in Asia Pacific;
 – When Oracle returns you someone else’s data…
 – When mozzarella is declared a weapon of mass destruction…
➢ All of these (and more) have happened!


Summary
• The basic programme for this year is:
 – Q1 / Q2: prepare for the experiments’ Dress Rehearsals
 – Q3: execute Dress Rehearsals (several iterations)
 – Q4: Engineering run of the LHC, and indeed of WLCG!
• 2008 will probably be:
 – Q1: analyse results of pilot run
 – Q2: further round of Dress Rehearsals
 – Q3: data taking
 – Q4: (re-)processing and analysis
(WLCG workshop around here…)


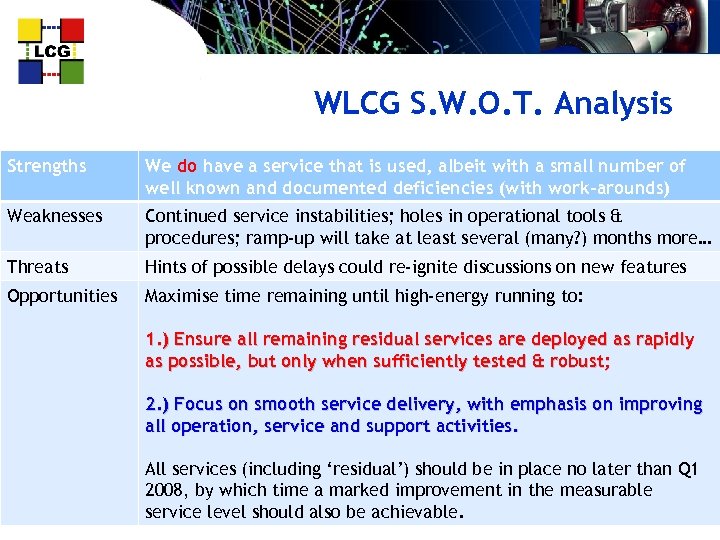

WLCG SWOT Analysis
• Strengths: We do have a service that is used, albeit with a small number of well known and documented deficiencies (with work-arounds)
• Weaknesses: Continued service instabilities; holes in operational tools & procedures; ramp-up will take at least several (many?) more months…
• Threats: Hints of possible delays could re-ignite discussions on new features
• Opportunities: Maximise the time remaining until high-energy running to:
 1.) Ensure all remaining residual services are deployed as rapidly as possible, but only when sufficiently tested & robust;
 2.) Focus on smooth service delivery, with emphasis on improving all operation, service and support activities.
All services (including ‘residual’) should be in place no later than Q1 2008, by which time a marked improvement in the measurable service level should also be achievable.


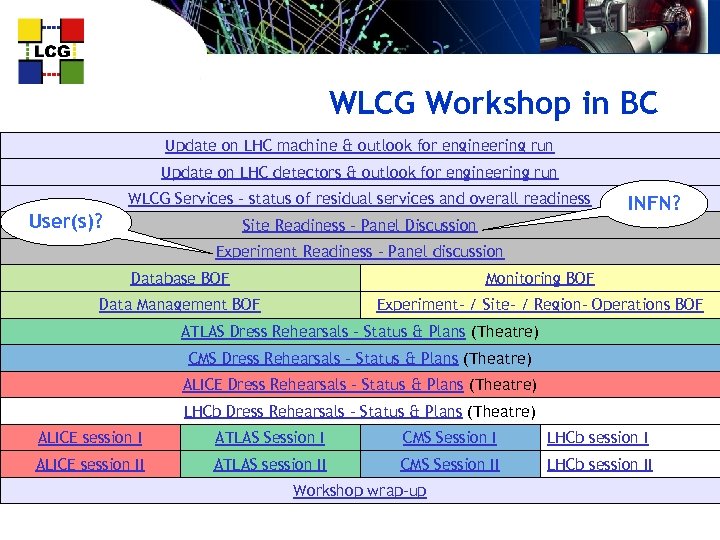

WLCG Workshop in BC
• Update on LHC machine & outlook for engineering run
• Update on LHC detectors & outlook for engineering run
• WLCG Services - status of residual services and overall readiness
• User(s)? INFN?
• Site Readiness - Panel Discussion
• Experiment Readiness - Panel discussion
• Database BOF
• Monitoring BOF
• Data Management BOF
• Experiment- / Site- / Region- Operations BOF
• ATLAS Dress Rehearsals - Status & Plans (Theatre)
• CMS Dress Rehearsals - Status & Plans (Theatre)
• ALICE Dress Rehearsals - Status & Plans (Theatre)
• LHCb Dress Rehearsals - Status & Plans (Theatre)
• ALICE session I, ATLAS session I, CMS session I, LHCb session I
• ALICE session II, ATLAS session II, CMS session II, LHCb session II
• Workshop wrap-up


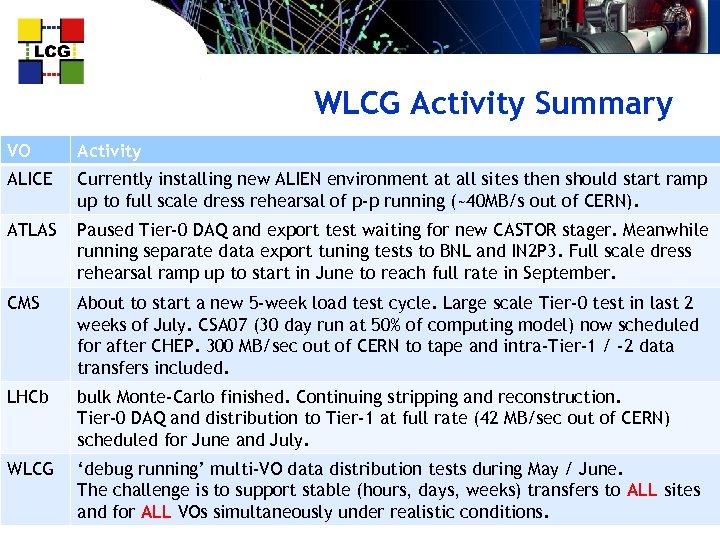

WLCG Activity Summary
• ALICE: Currently installing the new AliEn environment at all sites; should then start the ramp-up to a full-scale dress rehearsal of p-p running (~40 MB/s out of CERN).
• ATLAS: Paused the Tier-0 DAQ and export test while waiting for the new CASTOR stager. Meanwhile running separate data export tuning tests to BNL and IN2P3. Full-scale dress rehearsal ramp-up to start in June, reaching full rate in September.
• CMS: About to start a new 5-week load test cycle. Large-scale Tier-0 test in the last 2 weeks of July. CSA07 (30-day run at 50% of the computing model) now scheduled for after CHEP: 300 MB/sec out of CERN to tape, with intra-Tier-1 / -2 data transfers included.
• LHCb: Bulk Monte-Carlo finished. Continuing stripping and reconstruction. Tier-0 DAQ and distribution to Tier-1 at full rate (42 MB/sec out of CERN) scheduled for June and July.
• WLCG: ‘debug running’ multi-VO data distribution tests during May / June.
The challenge is to support stable (hours, days, weeks) transfers to ALL sites and for ALL VOs simultaneously under realistic conditions.
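To put the quoted rates in perspective, a sustained rate converts to a daily volume as rate × 86 400 s. The Python sketch below does this arithmetic in decimal units (1 TB = 10^6 MB, an assumption about the units intended). ATLAS is left out because no rate is quoted, and the sum is only a hypothetical "if concurrent" figure: these are separate exercises, and concurrent multi-VO export is exactly what has not yet been demonstrated (issue #3).

```python
SECONDS_PER_DAY = 86_400


def tb_per_day(rate_mb_s: float) -> float:
    """Convert a sustained rate in MB/s to TB/day (decimal units, 1 TB = 10**6 MB)."""
    return rate_mb_s * SECONDS_PER_DAY / 1_000_000


# Rates out of CERN quoted in the activity summary; ATLAS has no quoted rate.
rates_mb_s = {"ALICE": 40, "CMS": 300, "LHCb": 42}
for vo, rate in rates_mb_s.items():
    print(f"{vo}: {rate} MB/s -> {tb_per_day(rate):.1f} TB/day")
total = sum(rates_mb_s.values())
print(f"Sum: {total} MB/s -> {tb_per_day(total):.1f} TB/day")
# ALICE: 40 MB/s -> 3.5 TB/day
# CMS: 300 MB/s -> 25.9 TB/day
# LHCb: 42 MB/s -> 3.6 TB/day
# Sum: 382 MB/s -> 33.0 TB/day
```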


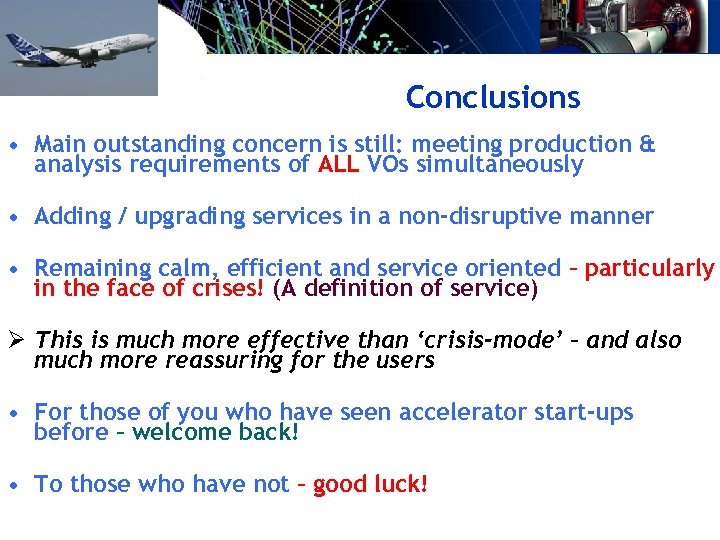

Conclusions
• Main outstanding concern is still: meeting the production & analysis requirements of ALL VOs simultaneously
• Adding / upgrading services in a non-disruptive manner
• Remaining calm, efficient and service oriented, particularly in the face of crises! (A definition of service)
 ➢ This is much more effective than ‘crisis-mode’, and also much more reassuring for the users
• For those of you who have seen accelerator start-ups before: welcome back!
• To those who have not: good luck!


The Future


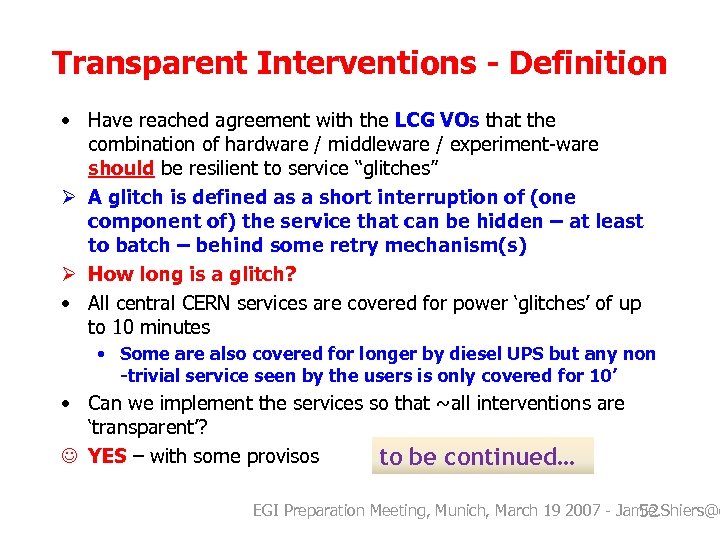

Transparent Interventions - Definition
• We have reached agreement with the LCG VOs that the combination of hardware / middleware / experiment-ware should be resilient to service “glitches”
 ➢ A glitch is defined as a short interruption of (one component of) the service that can be hidden, at least from batch jobs, behind some retry mechanism(s)
 ➢ How long is a glitch?
  – All central CERN services are covered for power ‘glitches’ of up to 10 minutes
  – Some are also covered for longer by diesel UPS, but any non-trivial service seen by the users is only covered for 10 minutes
• Can we implement the services so that ~all interventions are ‘transparent’?
 ➢ YES, with some provisos (to be continued…)
EGI Preparation Meeting, Munich, March 19 2007 - Jamie.Shiers@c
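A glitch is, by definition, something a retry mechanism can hide. The Python sketch below shows one such mechanism under stated assumptions: capped exponential backoff within a total budget of 10 minutes (the glitch length above), retrying only on `OSError` and its subclasses as a stand-in for "transient" errors. The function name, delays and fake clock are hypothetical; real middleware would decide per error type what counts as transient.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_through_glitch(operation: Callable[[], T],
                        budget_s: float = 600.0,        # 10 minutes, the glitch length above
                        first_delay_s: float = 5.0,
                        max_delay_s: float = 60.0,
                        retry_on: tuple = (OSError,),    # assumed transient error types
                        sleep: Callable[[float], None] = time.sleep,
                        clock: Callable[[], float] = time.monotonic) -> T:
    """Retry `operation` with capped exponential backoff until it succeeds
    or the time budget is spent; the last error is then re-raised."""
    deadline = clock() + budget_s
    delay = first_delay_s
    while True:
        try:
            return operation()
        except retry_on:
            remaining = deadline - clock()
            if remaining <= 0:
                raise  # outage longer than a glitch: let the caller see it
            sleep(min(delay, remaining))
            delay = min(delay * 2, max_delay_s)


# Example with a simulated clock, so no real waiting happens.
class FakeClock:
    def __init__(self) -> None:
        self.now = 0.0

    def time(self) -> float:
        return self.now

    def sleep(self, seconds: float) -> None:
        self.now += seconds


clock = FakeClock()
attempts = 0


def flaky_transfer() -> str:
    global attempts
    attempts += 1
    if attempts <= 3:
        raise ConnectionError("glitch")
    return "transferred"


print(call_through_glitch(flaky_transfer, sleep=clock.sleep, clock=clock.time), clock.now)
# transferred 35.0
```

Three failures cost 5 + 10 + 20 = 35 simulated seconds, well inside the budget, so the caller never sees the glitch; an outage longer than 10 minutes surfaces as the original error.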
