
- Number of slides: 42

The Worldwide LHC Computing Grid Service Experiment Plans for SC4
Jamie Shiers, February 2004
Assembled from SC4 Workshop presentations + Les’ plenary talk at CHEP

LCG Introduction
• Global goals and timelines for SC4
• Experiment plans for pre, post and SC4 production
• Medium term outline for WLCG services
➢ The focus of Service Challenge 4 is to demonstrate a basic but reliable service that can be scaled up by April 2007 to the capacity and performance needed for the first beams.
• Development of new functionality and services must continue, but we must be careful that this does not interfere with the main priority for this year – reliable operation of the baseline services
les.robertson@cern.ch

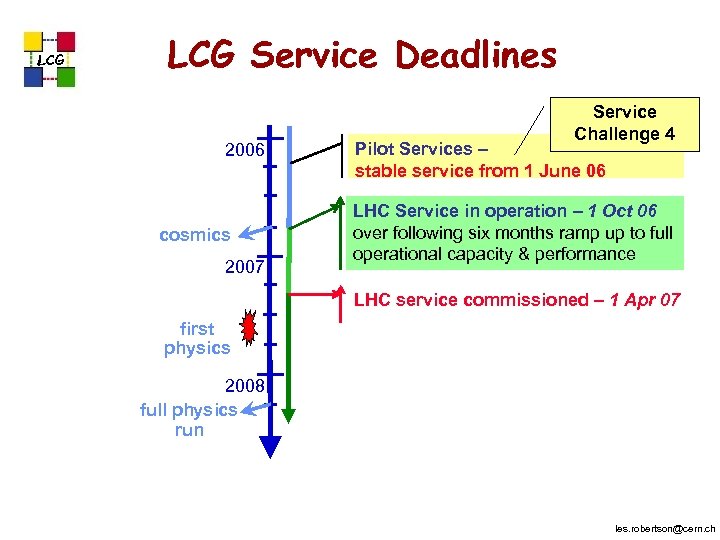

LCG Service Deadlines
Timeline: 2006 cosmics; 2007 first physics; 2008 full physics run
• Service Challenge 4
• Pilot Services – stable service from 1 June 06
• LHC Service in operation – 1 Oct 06; over following six months ramp up to full operational capacity & performance
• LHC service commissioned – 1 Apr 07

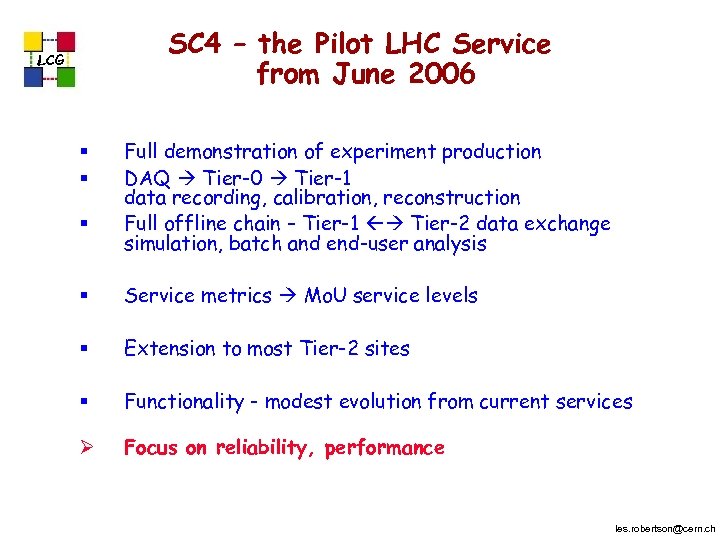

SC4 – the Pilot LHC Service from June 2006
• Full demonstration of experiment production
• DAQ → Tier-0 → Tier-1 data recording, calibration, reconstruction
• Full offline chain – Tier-1 ↔ Tier-2 data exchange – simulation, batch and end-user analysis
• Service metrics → MoU service levels
• Extension to most Tier-2 sites
• Functionality - modest evolution from current services
➢ Focus on reliability, performance

ALICE Data Challenges 2006
• Last chance to show that things are working together (i.e. to test our computing model)
• Whatever does not work here is likely not to work when real data are there
  – So we better plan it well and do it well

ALICE Data Challenges 2006
• Three main objectives
  – Computing Data Challenge
    • Final version of rootifier / recorder
    • Online data monitoring
  – Physics data challenge
    • Simulation of signal events: 10^6 Pb-Pb, 10^8 p-p
    • Final version reconstruction
    • Data analysis
  – PROOF data challenge
    • Preparation of the fast reconstruction / analysis framework

Main points
• Data flow
• Realistic system stress test
• Network stress test
• SC4 Schedule
• Analysis activity

Data Flow
• Not very fancy… always the same
• Distributed Simulation Production
  – Here we stress-test the system with the number of jobs in parallel
• Data back to CERN
• First reconstruction at CERN
  – RAW/ESD Scheduled “push-out” – here we do the network test
• Distributed reconstruction
  – Here we stress test the I/O subsystem
• Distributed (batch) analysis
  – “And here comes the proof of the pudding” - FCA

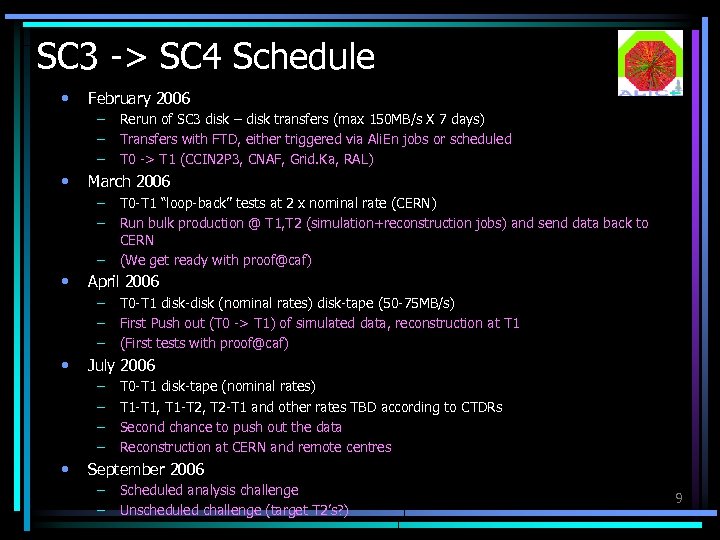

SC3 -> SC4 Schedule
• February 2006
  – Rerun of SC3 disk-disk transfers (max 150 MB/s × 7 days)
  – Transfers with FTD, either triggered via AliEn jobs or scheduled
  – T0 -> T1 (CCIN2P3, CNAF, GridKa, RAL)
• March 2006
  – T0-T1 “loop-back” tests at 2 × nominal rate (CERN)
  – Run bulk production @ T1, T2 (simulation + reconstruction jobs) and send data back to CERN
  – (We get ready with proof@caf)
• April 2006
  – T0-T1 disk-disk (nominal rates), disk-tape (50-75 MB/s)
  – First push out (T0 -> T1) of simulated data, reconstruction at T1
  – (First tests with proof@caf)
• July 2006
  – T0-T1 disk-tape (nominal rates); T1-T1, T1-T2, T2-T1 and other rates TBD according to CTDRs
  – Second chance to push out the data
  – Reconstruction at CERN and remote centres
• September 2006
  – Scheduled analysis challenge
  – Unscheduled challenge (target T2’s?)
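
As a rough cross-check of the February rerun figure, the sketch below converts a sustained transfer rate into a total volume. It assumes decimal units (1 TB = 10^6 MB) and that the 150 MB/s maximum is held for the full 7 days, which the slide does not claim; the function name is illustrative only.

```python
SECONDS_PER_DAY = 86_400

def transfer_volume_tb(rate_mb_s: float, days: float) -> float:
    """Volume in decimal TB moved at a constant rate (assumption: no downtime)."""
    return rate_mb_s * SECONDS_PER_DAY * days / 1_000_000

# Upper bound for the SC3 rerun: 150 MB/s sustained for 7 days.
print(f"{transfer_volume_tb(150, 7):.1f} TB")
```

Under these assumptions the rerun would move at most about 90.7 TB.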

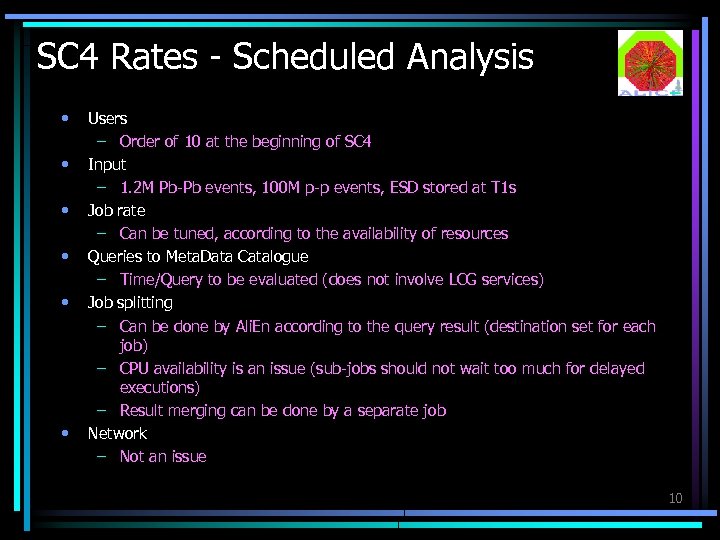

SC4 Rates - Scheduled Analysis
• Users
  – Order of 10 at the beginning of SC4
• Input
  – 1.2M Pb-Pb events, 100M p-p events, ESD stored at T1s
• Job rate
  – Can be tuned, according to the availability of resources
• Queries to MetaData Catalogue
  – Time/Query to be evaluated (does not involve LCG services)
• Job splitting
  – Can be done by AliEn according to the query result (destination set for each job)
  – CPU availability is an issue (sub-jobs should not wait too much for delayed executions)
  – Result merging can be done by a separate job
• Network
  – Not an issue
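
The job-splitting idea above can be pictured with a minimal sketch: group the files returned by a catalogue query by the site that holds them, run one sub-job per site, and combine the partial results in a separate step. This is not AliEn code; the function names, file names and the tuple layout of the query result are all hypothetical.

```python
from collections import defaultdict

def split_by_site(query_result):
    """Group (file, site) pairs from a catalogue query into one sub-job per site."""
    sub_jobs = defaultdict(list)
    for file_name, site in query_result:
        # The destination of each sub-job is fixed by where the ESD file is stored.
        sub_jobs[site].append(file_name)
    return dict(sub_jobs)

def merge_results(partial_counts):
    """Stand-in for the separate merging job: combine per-site partial results."""
    return sum(partial_counts)

# Hypothetical query result: ESD files and the T1 holding each of them.
query_result = [("esd_001", "CNAF"), ("esd_002", "RAL"), ("esd_003", "CNAF")]
sub_jobs = split_by_site(query_result)
# Each sub-job here just counts its input files as a placeholder for real analysis.
partials = [len(files) for files in sub_jobs.values()]
print(sub_jobs, merge_results(partials))
```

Keeping the merge as its own step mirrors the slide's point that result merging can be done by a separate job once the sub-jobs have finished.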

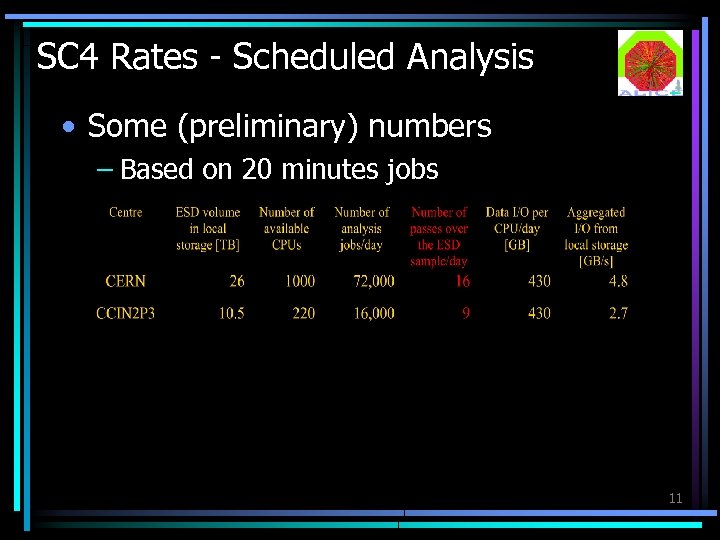

SC4 Rates - Scheduled Analysis
• Some (preliminary) numbers
  – Based on 20-minute jobs

SC4 Rates - Unscheduled Analysis
• To be defined

WLCG SC4 Workshop - Mumbai, 12 February 2006
ATLAS SC4 Tests
• Complete Tier-0 test
  – Internal data transfer from “Event Filter” farm to Castor disk pool, Castor tape, CPU farm
  – Calibration loop and handling of conditions data
    ➢ Including distribution of conditions data to Tier-1s (and Tier-2s)
  – Transfer of RAW, ESD, AOD and TAG data to Tier-1s
  – Transfer of AOD and TAG data to Tier-2s
  – Data and dataset registration in DB (add meta-data information to meta-data DB)
• Distributed production
  – Full simulation chain run at Tier-2s (and Tier-1s)
    ➢ Data distribution to Tier-1s, other Tier-2s and CAF
  – Reprocessing raw data at Tier-1s
    ➢ Data distribution to other Tier-1s, Tier-2s and CAF
• Distributed analysis
  – “Random” job submission accessing data at Tier-1s (some) and Tier-2s (mostly)
  – Tests of performance of job submission, distribution and output retrieval
Dario Barberis: ATLAS SC4 Plans

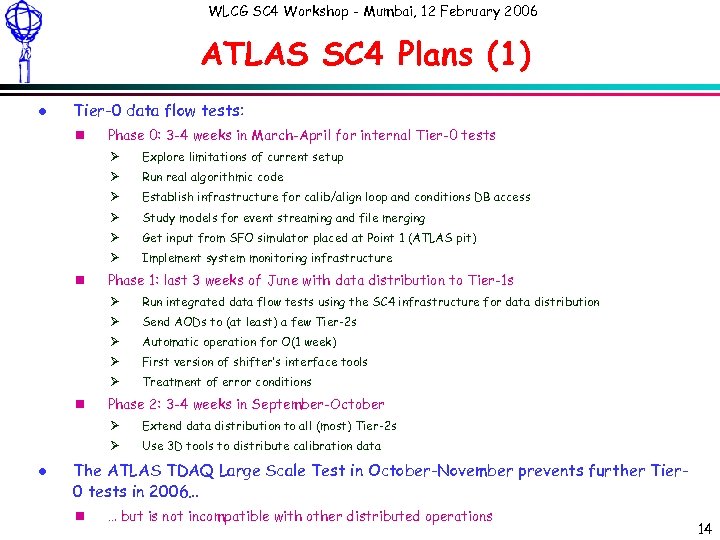

ATLAS SC4 Plans (1)
• Tier-0 data flow tests:
  – Phase 0: 3-4 weeks in March-April for internal Tier-0 tests
    ➢ Explore limitations of current setup
    ➢ Run real algorithmic code
    ➢ Establish infrastructure for calib/align loop and conditions DB access
    ➢ Study models for event streaming and file merging
    ➢ Get input from SFO simulator placed at Point 1 (ATLAS pit)
    ➢ Implement system monitoring infrastructure
  – Phase 1: last 3 weeks of June with data distribution to Tier-1s
    ➢ Run integrated data flow tests using the SC4 infrastructure for data distribution
    ➢ Send AODs to (at least) a few Tier-2s
    ➢ Automatic operation for O(1 week)
    ➢ First version of shifter’s interface tools
    ➢ Treatment of error conditions
  – Phase 2: 3-4 weeks in September-October
    ➢ Extend data distribution to all (most) Tier-2s
    ➢ Use 3D tools to distribute calibration data
• The ATLAS TDAQ Large Scale Test in October-November prevents further Tier-0 tests in 2006…
  – … but is not incompatible with other distributed operations

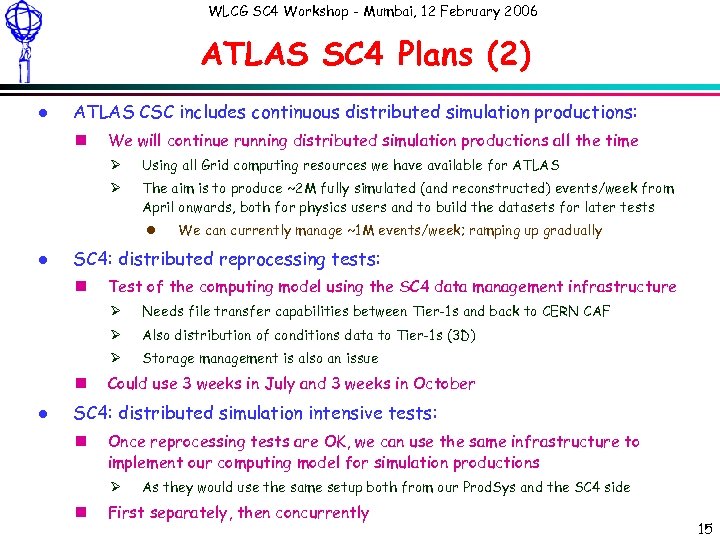

ATLAS SC4 Plans (2)
• ATLAS CSC includes continuous distributed simulation productions:
  – We will continue running distributed simulation productions all the time
    ➢ Using all Grid computing resources we have available for ATLAS
    ➢ The aim is to produce ~2M fully simulated (and reconstructed) events/week from April onwards, both for physics users and to build the datasets for later tests
    ➢ We can currently manage ~1M events/week; ramping up gradually
• SC4: distributed reprocessing tests:
  – Test of the computing model using the SC4 data management infrastructure
    ➢ Needs file transfer capabilities between Tier-1s and back to CERN CAF
    ➢ Also distribution of conditions data to Tier-1s (3D)
    ➢ Storage management is also an issue
  – Could use 3 weeks in July and 3 weeks in October
• SC4: distributed simulation intensive tests:
  – Once reprocessing tests are OK, we can use the same infrastructure to implement our computing model for simulation productions
    ➢ As they would use the same setup both from our ProdSys and the SC4 side
  – First separately, then concurrently

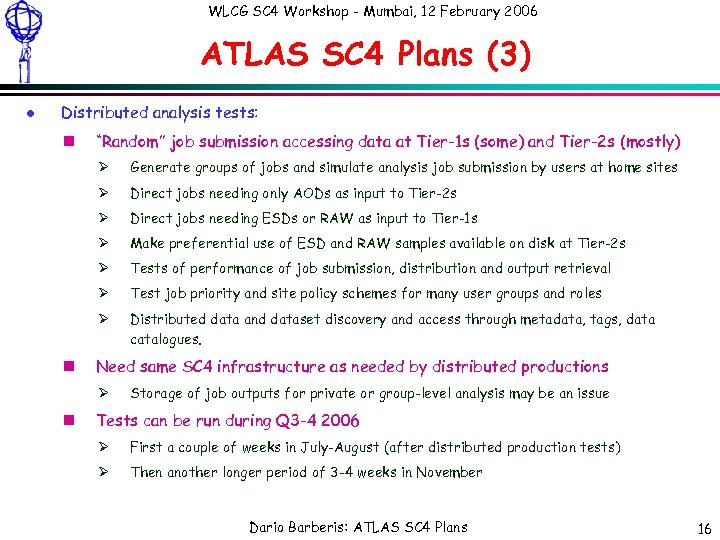

ATLAS SC4 Plans (3)
• Distributed analysis tests:
  – “Random” job submission accessing data at Tier-1s (some) and Tier-2s (mostly)
    ➢ Generate groups of jobs and simulate analysis job submission by users at home sites
    ➢ Direct jobs needing only AODs as input to Tier-2s
    ➢ Direct jobs needing ESDs or RAW as input to Tier-1s
    ➢ Make preferential use of ESD and RAW samples available on disk at Tier-2s
    ➢ Tests of performance of job submission, distribution and output retrieval
    ➢ Test job priority and site policy schemes for many user groups and roles
    ➢ Distributed data and dataset discovery and access through metadata, tags, data catalogues
  – Need same SC4 infrastructure as needed by distributed productions
    ➢ Storage of job outputs for private or group-level analysis may be an issue
  – Tests can be run during Q3-4 2006
    ➢ First a couple of weeks in July-August (after distributed production tests)
    ➢ Then another longer period of 3-4 weeks in November
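
The job-direction rules listed above (AOD-only jobs to Tier-2s, ESD or RAW jobs to Tier-1s, with a preference for ESD and RAW samples already on Tier-2 disk) can be written down as a small decision function. This is only a sketch of the stated policy, not ATLAS production code; the function name, the string labels and the `on_tier2_disk` parameter are assumptions made for illustration.

```python
HEAVY_FORMATS = {"ESD", "RAW"}

def target_tier(input_formats, on_tier2_disk=frozenset()):
    """Pick the tier a job should be directed to, following the slide's rules."""
    needed_heavy = HEAVY_FORMATS & set(input_formats)
    if not needed_heavy:
        # Jobs needing only AODs go to Tier-2s.
        return "Tier-2"
    if needed_heavy <= set(on_tier2_disk):
        # Prefer ESD/RAW samples that are already available on Tier-2 disk.
        return "Tier-2"
    # Otherwise ESD or RAW input means the job goes to a Tier-1.
    return "Tier-1"

print(target_tier({"AOD"}))                          # AOD-only job
print(target_tier({"AOD", "ESD"}))                   # needs ESD, none at Tier-2
print(target_tier({"ESD"}, on_tier2_disk={"ESD"}))   # ESD sample on Tier-2 disk
```

A real scheduler would also weigh job priority and site policy, which the slide lists as separate tests.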

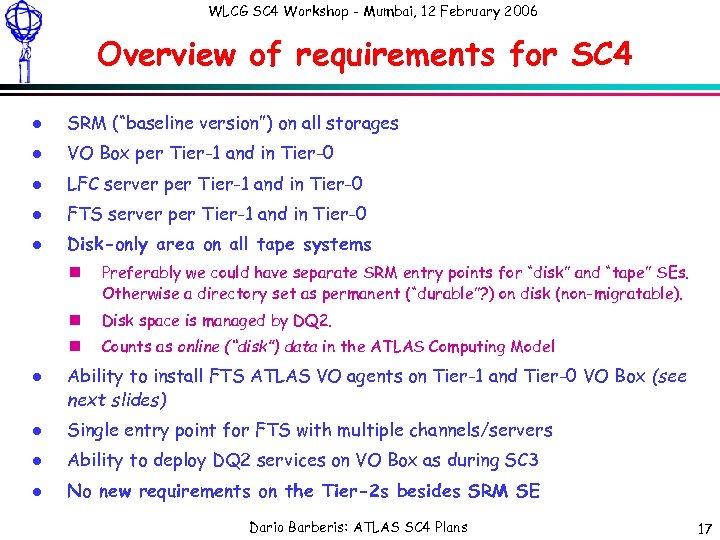

Overview of requirements for SC4
• SRM (“baseline version”) on all storages
• VO Box per Tier-1 and in Tier-0
• LFC server per Tier-1 and in Tier-0
• FTS server per Tier-1 and in Tier-0
• Disk-only area on all tape systems
  – Preferably we could have separate SRM entry points for “disk” and “tape” SEs. Otherwise a directory set as permanent (“durable”?) on disk (non-migratable).
  – Disk space is managed by DQ2.
  – Counts as online (“disk”) data in the ATLAS Computing Model
• Ability to install FTS ATLAS VO agents on Tier-1 and Tier-0 VO Box (see next slides)
• Single entry point for FTS with multiple channels/servers
• Ability to deploy DQ2 services on VO Box as during SC3
• No new requirements on the Tier-2s besides SRM SE

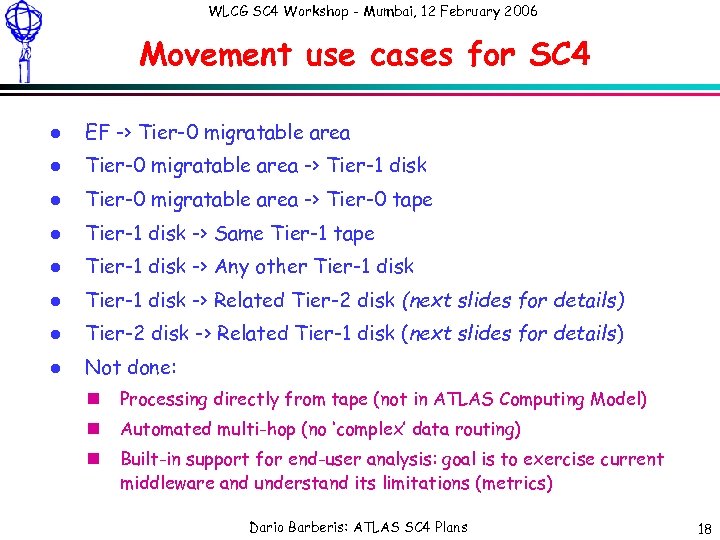

Movement use cases for SC4
• EF -> Tier-0 migratable area
• Tier-0 migratable area -> Tier-1 disk
• Tier-0 migratable area -> Tier-0 tape
• Tier-1 disk -> Same Tier-1 tape
• Tier-1 disk -> Any other Tier-1 disk
• Tier-1 disk -> Related Tier-2 disk (next slides for details)
• Tier-2 disk -> Related Tier-1 disk (next slides for details)
• Not done:
  – Processing directly from tape (not in ATLAS Computing Model)
  – Automated multi-hop (no ‘complex’ data routing)
  – Built-in support for end-user analysis: goal is to exercise current middleware and understand its limitations (metrics)
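
The movement use cases above amount to a small whitelist of single-hop routes. The sketch below encodes that whitelist as a check on a (source, destination) pair. The `Endpoint` type, the site names and the `RELATED_T1` association table are hypothetical and exist only to make the "same" and "related" conditions concrete; this is not DQ2 or FTS code.

```python
from typing import NamedTuple

class Endpoint(NamedTuple):
    site: str   # hypothetical site label, e.g. "CERN", "T1-A", "T2-X"
    kind: str   # "EF", "T0-migratable", "T0-tape", "T1-disk", "T1-tape", "T2-disk"

# Hypothetical association of each Tier-2 with its related Tier-1.
RELATED_T1 = {"T2-X": "T1-A", "T2-Y": "T1-B"}

ALWAYS_ALLOWED = {
    ("EF", "T0-migratable"),
    ("T0-migratable", "T1-disk"),
    ("T0-migratable", "T0-tape"),
}

def is_supported(src: Endpoint, dst: Endpoint) -> bool:
    """Return True if src -> dst is one of the single-hop use cases on the slide."""
    route = (src.kind, dst.kind)
    if route in ALWAYS_ALLOWED:
        return True
    if route == ("T1-disk", "T1-tape"):
        return src.site == dst.site                   # same Tier-1 only
    if route == ("T1-disk", "T1-disk"):
        return src.site != dst.site                   # any other Tier-1
    if route == ("T1-disk", "T2-disk"):
        return RELATED_T1.get(dst.site) == src.site   # related Tier-2 only
    if route == ("T2-disk", "T1-disk"):
        return RELATED_T1.get(src.site) == dst.site   # related Tier-1 only
    return False   # everything else, including reads from tape, is not done

print(is_supported(Endpoint("T1-A", "T1-disk"), Endpoint("T2-X", "T2-disk")))
print(is_supported(Endpoint("T1-A", "T1-tape"), Endpoint("T1-A", "T1-disk")))
```

Because multi-hop routing is explicitly out of scope, the check looks at one hop only and makes no attempt to chain routes.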

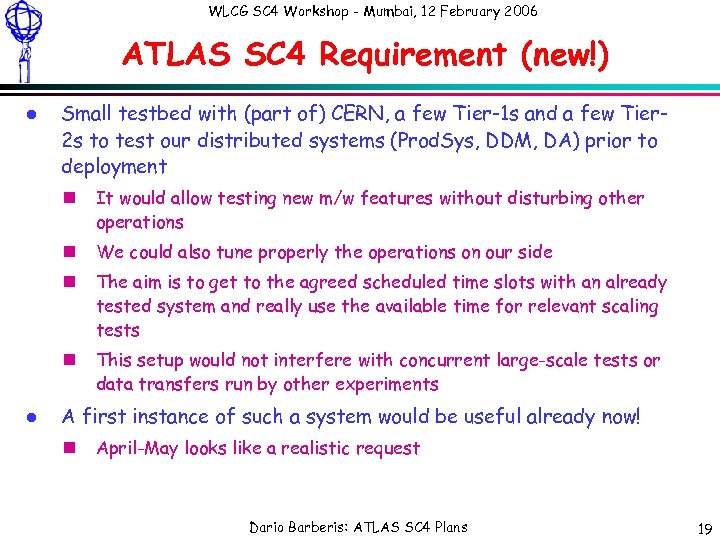

ATLAS SC4 Requirement (new!)
• Small testbed with (part of) CERN, a few Tier-1s and a few Tier-2s to test our distributed systems (ProdSys, DDM, DA) prior to deployment
  – It would allow testing new m/w features without disturbing other operations
  – We could also tune properly the operations on our side
  – The aim is to get to the agreed scheduled time slots with an already tested system and really use the available time for relevant scaling tests
  – This setup would not interfere with concurrent large-scale tests or data transfers run by other experiments
• A first instance of such a system would be useful already now!
  – April-May looks like a realistic request

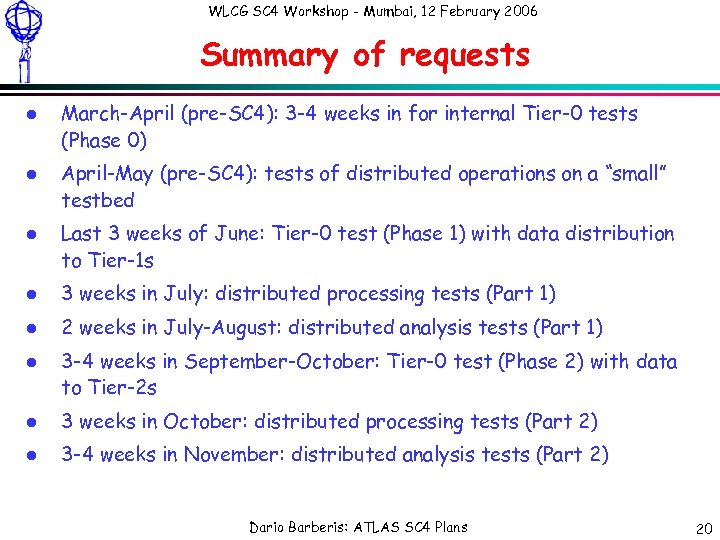

Summary of requests
• March-April (pre-SC4): 3-4 weeks for internal Tier-0 tests (Phase 0)
• April-May (pre-SC4): tests of distributed operations on a “small” testbed
• Last 3 weeks of June: Tier-0 test (Phase 1) with data distribution to Tier-1s
• 3 weeks in July: distributed processing tests (Part 1)
• 2 weeks in July-August: distributed analysis tests (Part 1)
• 3-4 weeks in September-October: Tier-0 test (Phase 2) with data to Tier-2s
• 3 weeks in October: distributed processing tests (Part 2)
• 3-4 weeks in November: distributed analysis tests (Part 2)

LHCb DC06
“Test of LHCb Computing Model using LCG Production Services”
• Distribution of RAW data
• Reconstruction + Stripping
• DST redistribution
• User Analysis
• MC Production
• Use of Condition DB (Alignment + Calibration)

SC4 Aim for LHCb
• Test Data Processing part of CM
• Use 200M MC RAW events:
  – Distribute
  – Reconstruct
  – Strip and re-distribute
• Simultaneous activities:
  – MC production
  – User Analysis

Preparation for SC4
• Event generation, detector simulation & digitization
• 100M B-physics + 100M min bias events:
  – 3.7 MSI2k · month required (~2-3 months)
  – 125 TB on MSS at Tier-0 (keep MC True)
• Timing:
  – Start productions mid March
  – Full capacity end March
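
Two back-of-the-envelope figures follow from the numbers on this slide: the average CPU capacity implied by 3.7 MSI2k · month spread over 2 to 3 months, and the average storage per event if 125 TB holds all 200M events. The sketch assumes decimal units (1 TB = 10^12 bytes) and an even spread of work over the period; the function names are illustrative.

```python
CPU_WORK_MSI2K_MONTHS = 3.7   # total CPU work quoted on the slide
EVENTS = 200_000_000          # 100M B-physics + 100M min bias
STORAGE_TB = 125              # on MSS at Tier-0

def average_capacity_msi2k(work_msi2k_months: float, months: float) -> float:
    """Average capacity needed if the work is spread evenly over `months`."""
    return work_msi2k_months / months

def bytes_per_event(storage_tb: float, events: int) -> float:
    """Average size per stored event, using 1 TB = 10^12 bytes."""
    return storage_tb * 1e12 / events

for months in (2, 3):
    print(f"{months} months: {average_capacity_msi2k(CPU_WORK_MSI2K_MONTHS, months):.2f} MSI2k")
print(f"{bytes_per_event(STORAGE_TB, EVENTS) / 1e3:.0f} kB per event")
```

Under these assumptions the production needs roughly 1.2 to 1.9 MSI2k on average, and each stored event takes about 625 kB.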

LHCb SC4 (I)
• Timing:
  – Start June
  – Duration 2 months
• Distribution of RAW data
  – Tier-0 MSS SRM → Tier 1’s MSS SRM
    • 2 TB/day out of CERN
  – 125 TB on MSS @ Tier 1’s
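
The stated duration can be checked against the stated rate: shipping 125 TB at 2 TB/day should take about two months. The sketch below does that arithmetic and also converts the daily volume into an average rate, assuming decimal units (1 TB = 10^6 MB) and a constant flow; the function names are illustrative.

```python
SECONDS_PER_DAY = 86_400

def days_to_ship(total_tb: float, tb_per_day: float) -> float:
    """Days needed to export `total_tb` at a constant daily volume."""
    return total_tb / tb_per_day

def average_rate_mb_s(tb_per_day: float) -> float:
    """Average rate in MB/s for a given daily volume (1 TB = 10^6 MB)."""
    return tb_per_day * 1_000_000 / SECONDS_PER_DAY

print(f"{days_to_ship(125, 2):.1f} days")
print(f"{average_rate_mb_s(2):.1f} MB/s")
```

This gives 62.5 days, consistent with the 2-month duration on the slide, at an average of about 23 MB/s out of CERN.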

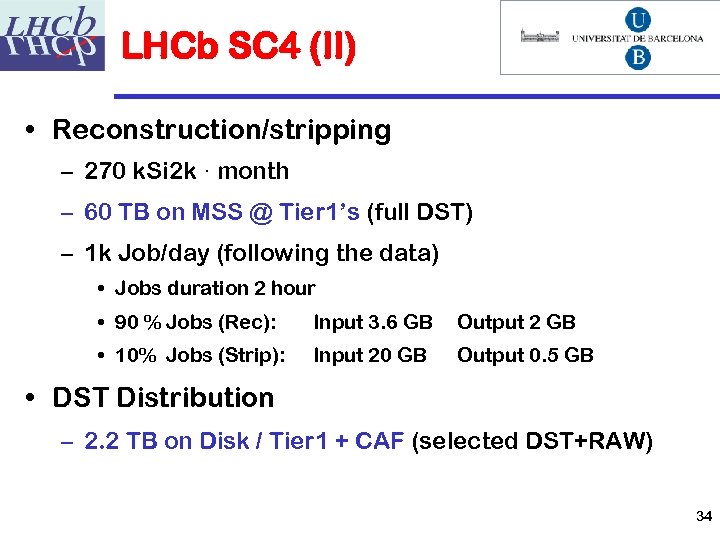

LHCb SC4 (II)
• Reconstruction/stripping
  – 270 kSI2k · month
  – 60 TB on MSS @ Tier 1’s (full DST)
  – 1k jobs/day (following the data)
    • Job duration 2 hours
    • 90% of jobs (Rec): input 3.6 GB, output 2 GB
    • 10% of jobs (Strip): input 20 GB, output 0.5 GB
• DST Distribution
  – 2.2 TB on Disk / Tier 1 + CAF (selected DST+RAW)
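
The job mix on this slide implies a daily I/O volume and an average number of jobs running at once. The sketch below works both out from the slide's figures, assuming jobs are submitted evenly through the day and every job of a type has exactly the quoted input and output size; the names and the `MIX` layout are illustrative.

```python
JOBS_PER_DAY = 1000
JOB_HOURS = 2

# job type -> (share of jobs, input GB per job, output GB per job), from the slide
MIX = {
    "rec": (0.9, 3.6, 2.0),
    "strip": (0.1, 20.0, 0.5),
}

def daily_io_gb(jobs_per_day, mix):
    """Total GB read and written per day across all job types."""
    total_in = sum(jobs_per_day * share * gb_in for share, gb_in, _ in mix.values())
    total_out = sum(jobs_per_day * share * gb_out for share, _, gb_out in mix.values())
    return total_in, total_out

def concurrent_jobs(jobs_per_day, job_hours):
    """Average number of jobs running at once, assuming uniform submission."""
    return jobs_per_day * job_hours / 24

read_gb, written_gb = daily_io_gb(JOBS_PER_DAY, MIX)
print(f"read {read_gb:.0f} GB/day, written {written_gb:.0f} GB/day")
print(f"about {concurrent_jobs(JOBS_PER_DAY, JOB_HOURS):.0f} jobs running on average")
```

With the slide's figures this comes to roughly 5.2 TB read and 1.9 TB written per day, with about 83 jobs running at any time.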

DIRAC Tools & LCG
• DIRAC Transfer Agent @ Tier-0 + Tier-1’s
  – FTS + SRM
• DIRAC Production Tools
  – Production Manager console
  – Transformation Agents
• DIRAC WMS
  – LFC + RB + CE
• Applications:
  – GFAL: Posix I/O via LFN

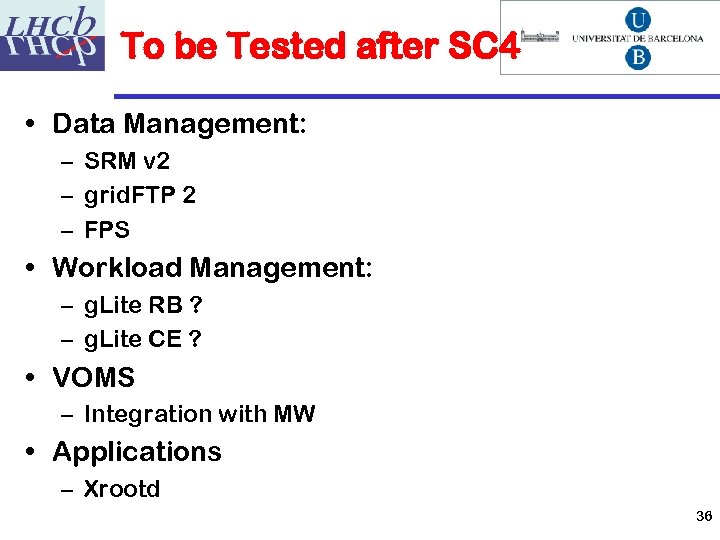

To be Tested after SC4
• Data Management:
  – SRM v2
  – gridFTP 2
  – FPS
• Workload Management:
  – gLite RB ?
  – gLite CE ?
• VOMS
  – Integration with MW
• Applications
  – Xrootd

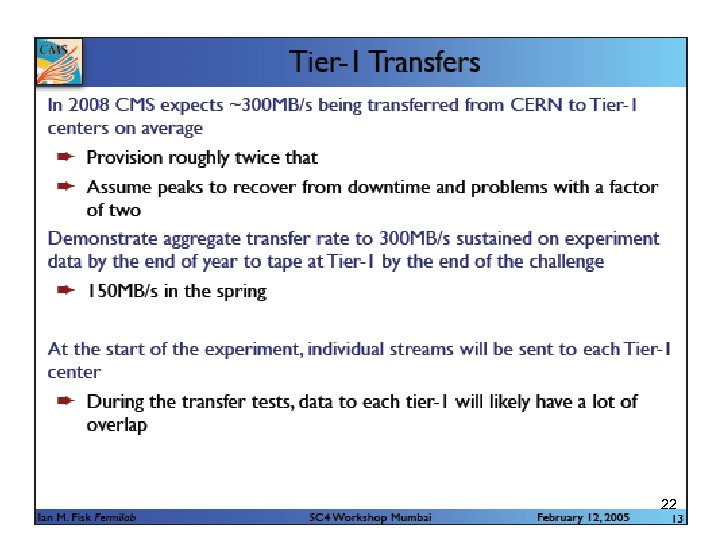

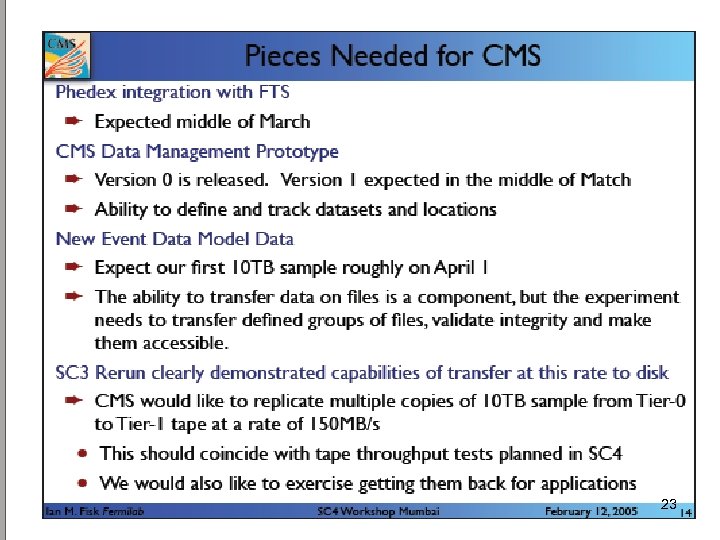

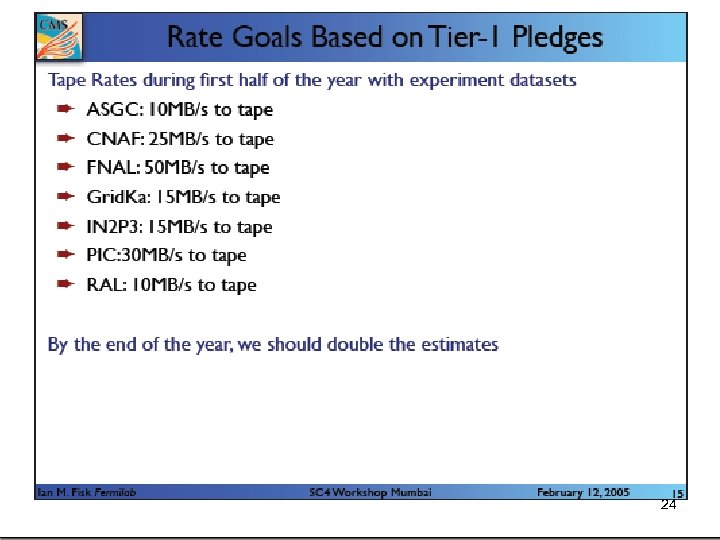

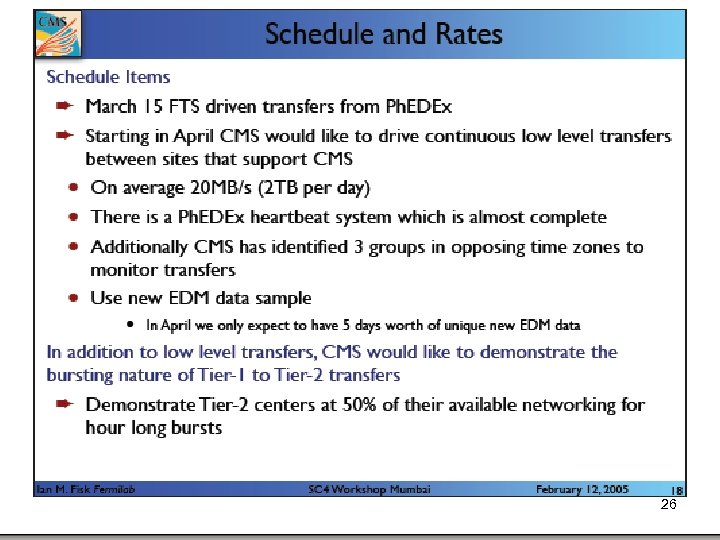

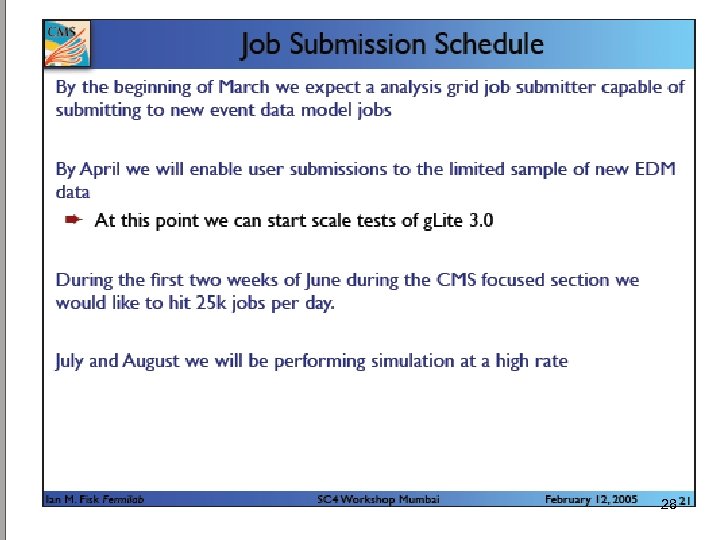

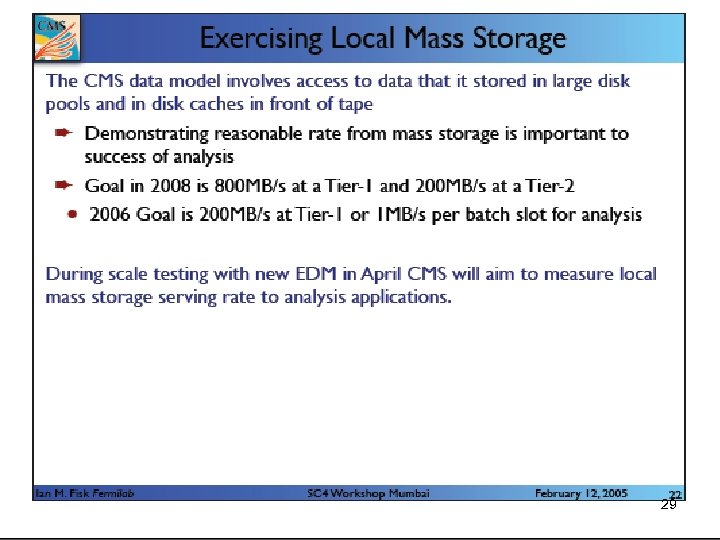

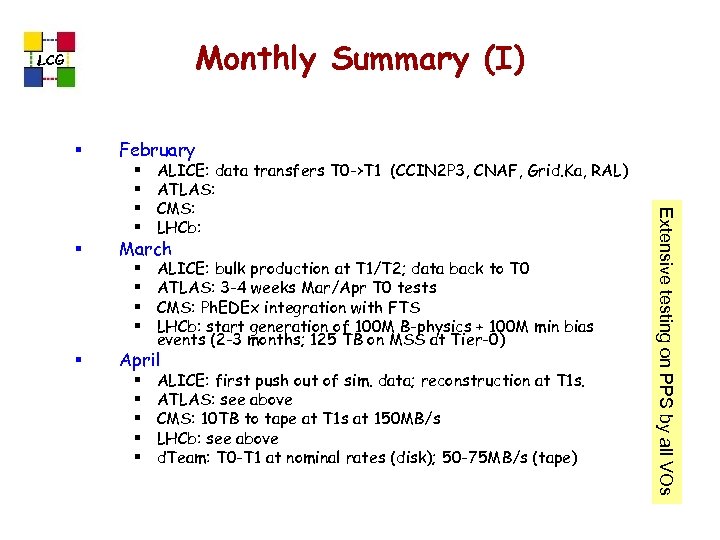

Monthly Summary (I)
• February
  – ALICE: data transfers T0 -> T1 (CCIN2P3, CNAF, GridKa, RAL)
  – ATLAS:
  – CMS:
  – LHCb:
• March
  – ALICE: bulk production at T1/T2; data back to T0
  – ATLAS: 3-4 weeks Mar/Apr T0 tests
  – CMS: PhEDEx integration with FTS
  – LHCb: start generation of 100M B-physics + 100M min bias events (2-3 months; 125 TB on MSS at Tier-0)
• April
  – ALICE: first push out of sim. data; reconstruction at T1s
  – ATLAS: see above
  – CMS: 10 TB to tape at T1s at 150 MB/s
  – LHCb: see above
  – dTeam: T0-T1 at nominal rates (disk); 50-75 MB/s (tape)
Extensive testing on PPS by all VOs

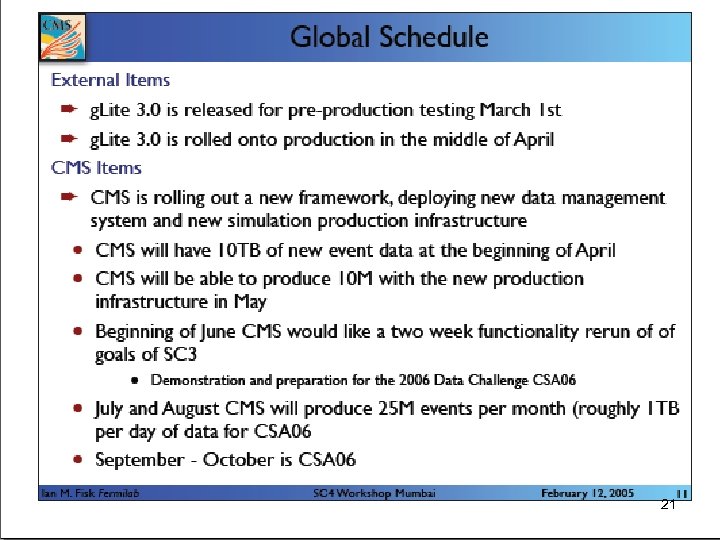

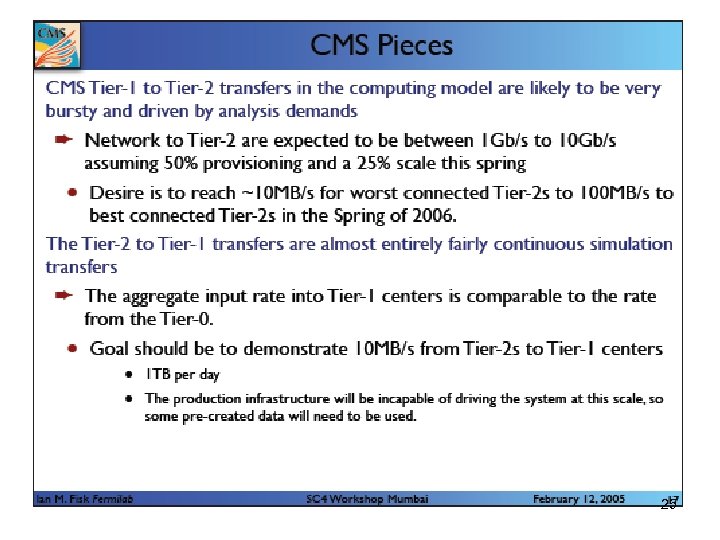

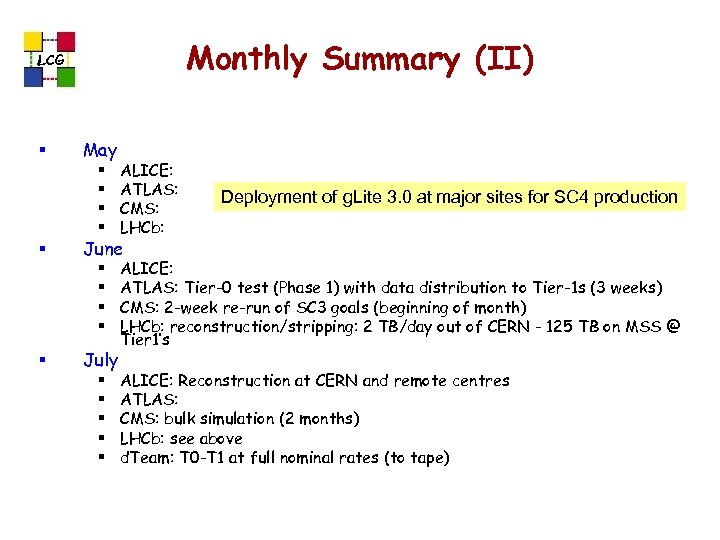

Monthly Summary (II)
• May
  – ALICE:
  – ATLAS:
  – CMS:
  – LHCb:
• June
  – ALICE:
  – ATLAS: Tier-0 test (Phase 1) with data distribution to Tier-1s (3 weeks)
  – CMS: 2-week re-run of SC3 goals (beginning of month)
  – LHCb: reconstruction/stripping: 2 TB/day out of CERN - 125 TB on MSS @ Tier 1’s
Deployment of gLite 3.0 at major sites for SC4 production
• July
  – ALICE: Reconstruction at CERN and remote centres
  – ATLAS:
  – CMS: bulk simulation (2 months)
  – LHCb: see above
  – dTeam: T0-T1 at full nominal rates (to tape)

Monthly Summary (III)
• August
  – ALICE:
  – ATLAS:
  – CMS: bulk simulation continues
  – LHCb: Analysis on data from June/July … until spring 07 or so…
• September
  – ALICE: Scheduled + unscheduled (T2s?) analysis challenges
  – ATLAS:
  – CMS:
  – LHCb: see above

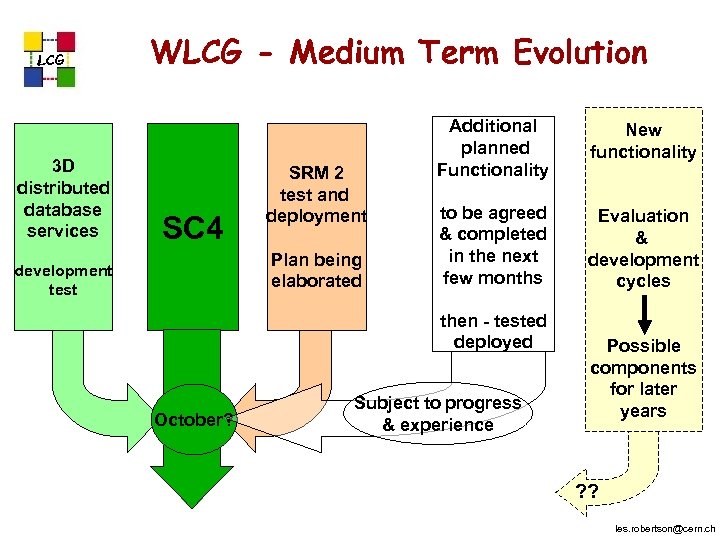

WLCG - Medium Term Evolution
• SC4
• 3D distributed database services: development, test
• SRM 2: test and deployment; plan being elaborated; October?
• Additional planned functionality: to be agreed & completed in the next few months, then tested and deployed; subject to progress & experience
• New functionality: evaluation & development cycles; possible components for later years??

So What Happens at the end of SC4?
• Well prior to October we need to have all structures and procedures in place…
• … to run, and evolve, a production service for the long term
• This includes all aspects – monitoring, automatic problem detection, resolution, reporting, escalation, {site, user} support, accounting, review, planning for new productions, service upgrades …
➢ For the precise reason that things will evolve, we should avoid over-specification…

Summary
• Two grid infrastructures are now in operation, on which we are able to build computing services for LHC
• Reliability and performance have improved significantly over the past year
➢ The focus of Service Challenge 4 is to demonstrate a basic but reliable service that can be scaled up by April 2007 to the capacity and performance needed for the first beams.
• Development of new functionality and services must continue, but we must be careful that this does not interfere with the main priority for this year – reliable operation of the baseline services
