efdc9ddec7ce31e3d22bdd129f82f76a.ppt

- Number of slides: 28

The TeraPaths Testbed: Exploring End-to-End Network QoS
Dimitrios Katramatos, Dantong Yu, Bruce Gibbard, Shawn McKee
TridentCom 2007
Presented by D. Katramatos, BNL

Outline
- Introduction
- The TeraPaths project
- The TeraPaths system architecture
- The TeraPaths testbed
- In progress/future work

Introduction
- Project background: the modern nuclear and high-energy physics community (e.g., LHC experiments) makes extensive use of the grid computing model; US, European, and international networks are being upgraded to multiple 10 Gbps connections to cope with data movements of gigantic proportions
- The problem: support efficient, reliable, and predictable peta-scale data movement in modern grid environments utilizing high-speed networks
  - Multiple data flows with varying priorities
  - Default “best effort” network behavior can cause performance and service disruption problems
- Solution: enhance network functionality with QoS features to allow prioritization and protection of data flows
  - Schedule network usage as a critical grid resource

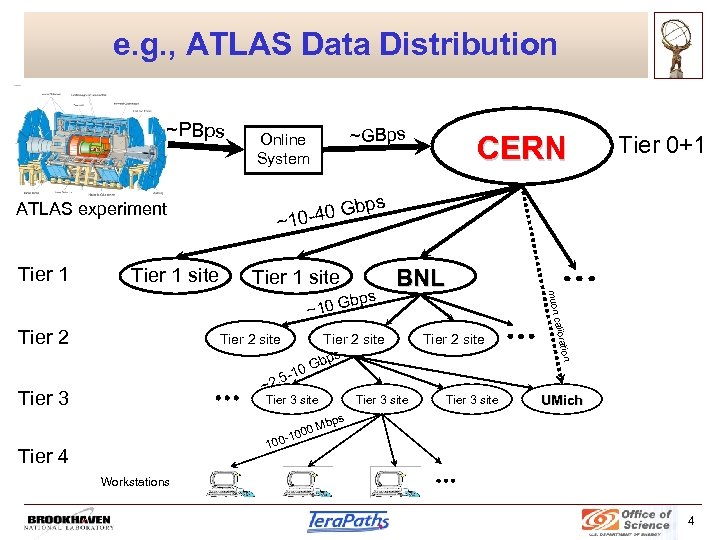

e.g., ATLAS Data Distribution
[Diagram: tiered data distribution from the ATLAS experiment and its online system through Tier 0+1, Tier 1, Tier 2, and Tier 3 sites down to Tier 4 workstations. Sites named in the figure: CERN, BNL, UMich (muon calibration). Link-rate labels in the figure: ~PBps, ~GBps, ~10-40 Gbps, ~10 Gbps, ~2.5-10 Gbps, 100-1000 Mbps.]

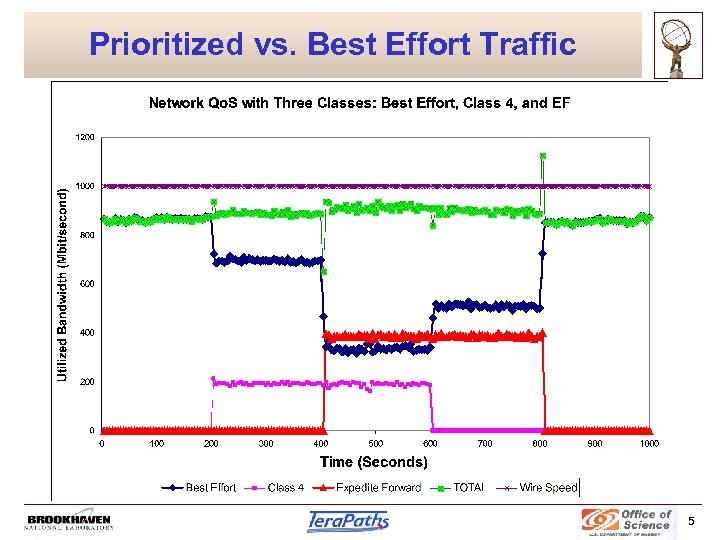

Prioritized vs. Best Effort Traffic

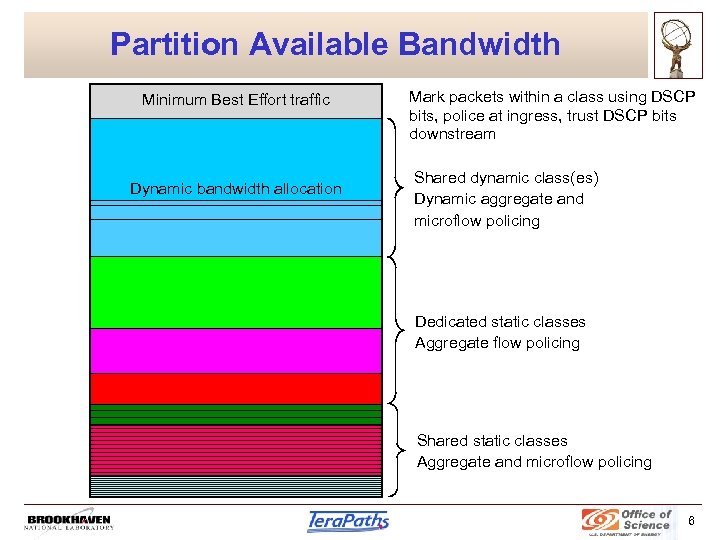

Partition Available Bandwidth
- Minimum best effort traffic
- Dynamic bandwidth allocation
  - Shared dynamic class(es): dynamic aggregate and microflow policing
- Dedicated static classes: aggregate flow policing
- Shared static classes: aggregate and microflow policing
- Mark packets within a class using DSCP bits, police at ingress, trust DSCP bits downstream
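Policing at ingress is commonly implemented with a token bucket. The slides do not say which mechanism the switches use, so the following is only a sketch under that assumption; the class name `TokenBucketPolicer` and its parameters are hypothetical and are not part of TeraPaths.

```python
class TokenBucketPolicer:
    """Admit packets while tokens (bytes) are available; reject the excess."""

    def __init__(self, rate_bps: float, burst_bytes: int):
        self.rate_bytes = rate_bps / 8.0   # refill rate in bytes per second
        self.burst = burst_bytes           # bucket depth in bytes
        self.tokens = float(burst_bytes)   # start with a full bucket
        self.last = 0.0                    # time of the previous update

    def allow(self, size_bytes: int, now: float) -> bool:
        """Return True if a packet of size_bytes arriving at time `now` conforms."""
        elapsed = now - self.last
        self.last = now
        # Refill for the elapsed time, never beyond the bucket depth.
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate_bytes)
        if size_bytes <= self.tokens:
            self.tokens -= size_bytes
            return True
        return False
```

Under this sketch, aggregate policing would use one policer per service class, while microflow policing would add one policer per individual flow inside the class.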

The TeraPaths Project
- BNL’s TeraPaths project:
  - Under the U.S. ATLAS umbrella, funded by DOE
  - Research the use of DiffServ and MPLS/GMPLS in data-intensive distributed computing environments
  - Develop theoretical models for LAN/WAN coordination
  - Develop necessary software for integrating end-site services and WAN services to provide end-to-end (host-to-host) guaranteed-bandwidth network paths to users
  - Create, maintain, and expand a multi-site testbed for QoS network research
- Collaboration includes BNL, University of Michigan, ESnet, Internet2, SLAC; Tier 2 centers being added; Tier 3s to follow

End-to-End QoS… How?
- Within a site’s LAN (administrative domain) DiffServ works and scales fine
  - Assign data flows to service classes
  - Pass-through “difficult” segments
- But once packets leave the site… DSCP markings get reset. Unless…
  - Ongoing effort by new high-speed network providers to offer reservation-controlled dedicated paths with predetermined bandwidth
    - ESnet’s OSCARS
    - Internet2’s BRUW, DRAGON
  - Reserved paths configured to respect DSCP markings
  - Address scalability by grouping data flows with the same destination and forwarding them to common tunnels/circuits

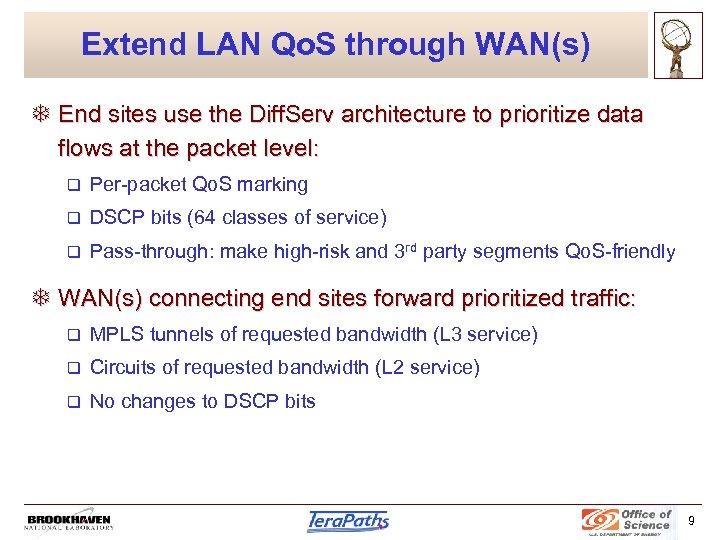

Extend LAN QoS through WAN(s)
- End sites use the DiffServ architecture to prioritize data flows at the packet level:
  - Per-packet QoS marking
  - DSCP bits (64 classes of service)
  - Pass-through: make high-risk and 3rd-party segments QoS-friendly
- WAN(s) connecting end sites forward prioritized traffic:
  - MPLS tunnels of requested bandwidth (L3 service)
  - Circuits of requested bandwidth (L2 service)
  - No changes to DSCP bits
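The figure of 64 classes follows from the DSCP field being 6 bits wide (2^6 = 64). In the deck the marking is performed by network devices; purely as an illustration, the sketch below shows how a host application could mark its own IPv4 packets by writing the DSCP value into the upper six bits of the TOS byte. The helper names `dscp_to_tos` and `open_marked_socket` are hypothetical, and the `IP_TOS` socket option is assumed to be available on the platform (it is on Linux).

```python
import socket

DSCP_BITS = 6                    # DSCP occupies the upper 6 bits of the TOS byte
NUM_CLASSES = 2 ** DSCP_BITS     # 64 possible code points

def dscp_to_tos(dscp: int) -> int:
    """Return the TOS byte value that carries the given DSCP code point."""
    if not 0 <= dscp < NUM_CLASSES:
        raise ValueError(f"DSCP must be in 0..{NUM_CLASSES - 1}")
    return dscp << 2             # the lower 2 bits (ECN) are left at zero

def open_marked_socket(dscp: int) -> socket.socket:
    """Create a UDP socket whose outgoing IPv4 packets carry the DSCP mark."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, dscp_to_tos(dscp))
    return sock
```

For example, the Expedited Forwarding code point 46 maps to a TOS byte of 184. Whether such a host-set mark survives depends on the ingress policy, which may re-mark or police the traffic.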

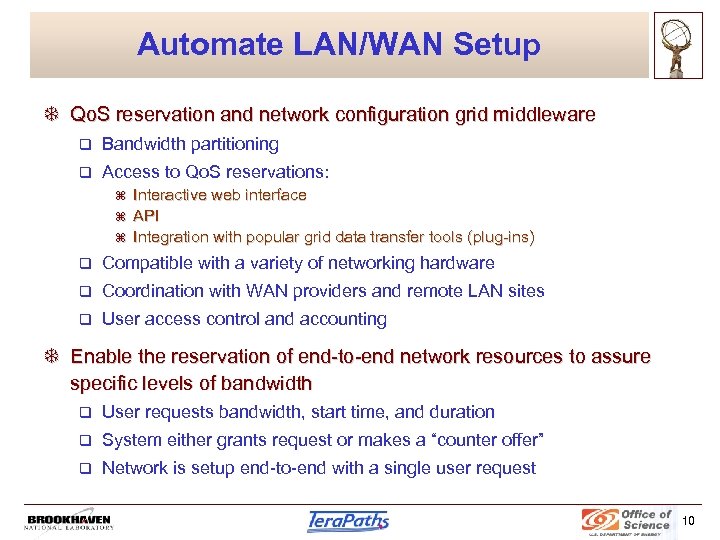

Automate LAN/WAN Setup
- QoS reservation and network configuration grid middleware
  - Bandwidth partitioning
  - Access to QoS reservations:
    - Interactive web interface
    - API
    - Integration with popular grid data transfer tools (plug-ins)
  - Compatible with a variety of networking hardware
  - Coordination with WAN providers and remote LAN sites
  - User access control and accounting
- Enable the reservation of end-to-end network resources to assure specific levels of bandwidth
  - User requests bandwidth, start time, and duration
  - System either grants the request or makes a “counter offer”
  - Network is set up end-to-end with a single user request
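The request/grant/counter-offer behavior described above can be sketched as an admission check against the reservations already booked for a bandwidth class. This is a minimal illustration and not the TeraPaths scheduler: the names `Reservation`, `peak_usage`, and `request` are hypothetical, and the form of the counter offer (reduced bandwidth in the same time window) is an assumption, since the slides do not specify it.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass(frozen=True)
class Reservation:
    start: float      # start time, seconds
    duration: float   # seconds
    mbps: float       # reserved bandwidth

    @property
    def end(self) -> float:
        return self.start + self.duration

def peak_usage(booked: List[Reservation], start: float, end: float) -> float:
    """Highest total bandwidth already booked at any instant in [start, end)."""
    # Usage only rises at reservation start times, so checking those points suffices.
    points = [start] + [r.start for r in booked if start < r.start < end]
    return max(sum(r.mbps for r in booked if r.start <= t < r.end) for t in points)

def request(booked: List[Reservation], capacity: float,
            req: Reservation) -> Tuple[str, Optional[Reservation]]:
    """Grant the request, counter-offer reduced bandwidth, or reject it."""
    free = capacity - peak_usage(booked, req.start, req.end)
    if req.mbps <= free:
        booked.append(req)
        return "granted", req
    if free > 0:
        # Assumed counter-offer policy: same window, whatever bandwidth is left.
        return "counter-offer", Reservation(req.start, req.duration, free)
    return "rejected", None
```

A counter offer is not booked in this sketch; the caller would resubmit it as a new request if the user accepts it.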

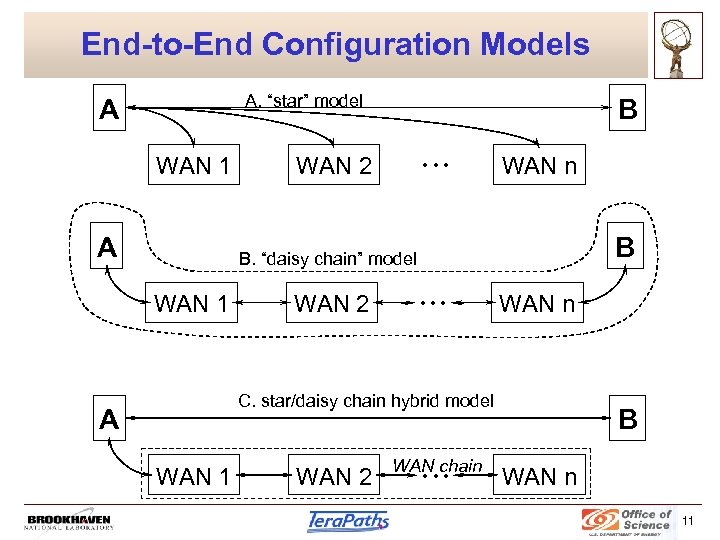

End-to-End Configuration Models
[Diagram: three ways of configuring a path between end sites A and B across WAN 1, WAN 2, …, WAN n]
- A. “star” model
- B. “daisy chain” model
- C. star/daisy chain hybrid model (the WANs form a WAN chain)

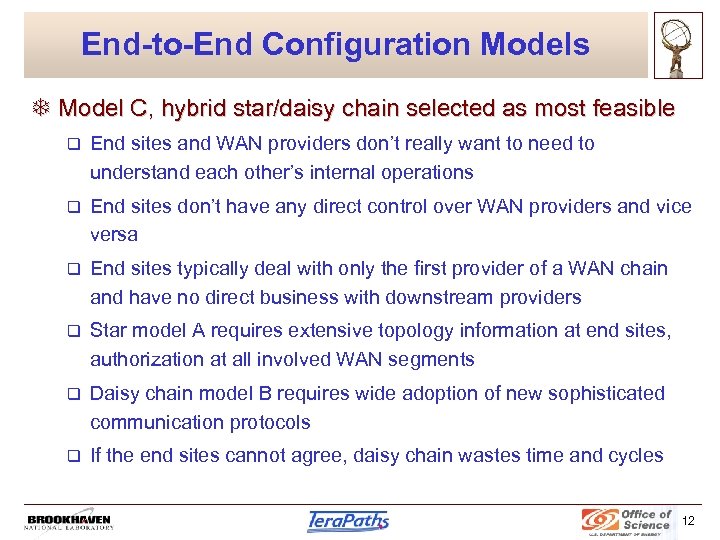

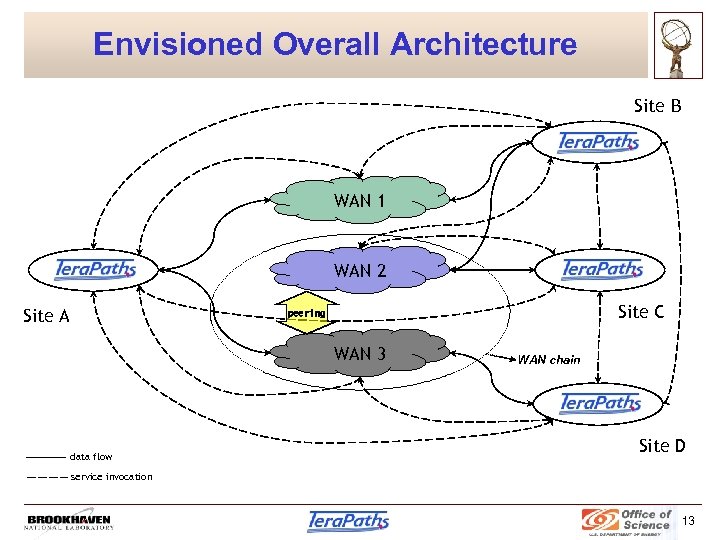

End-to-End Configuration Models
- Model C, the hybrid star/daisy chain, was selected as the most feasible
  - End sites and WAN providers neither want nor need to understand each other’s internal operations
  - End sites don’t have any direct control over WAN providers and vice versa
  - End sites typically deal with only the first provider of a WAN chain and have no direct business with downstream providers
  - Star model A requires extensive topology information at end sites and authorization at all involved WAN segments
  - Daisy chain model B requires wide adoption of new, sophisticated communication protocols
  - If the end sites cannot agree, the daisy chain wastes time and cycles

Envisioned Overall Architecture
[Diagram: TeraPaths instances at Sites A, B, C, and D, interconnected through WAN 1, WAN 2, and WAN 3 (peering, WAN chain). Legend: data flow, service invocation.]

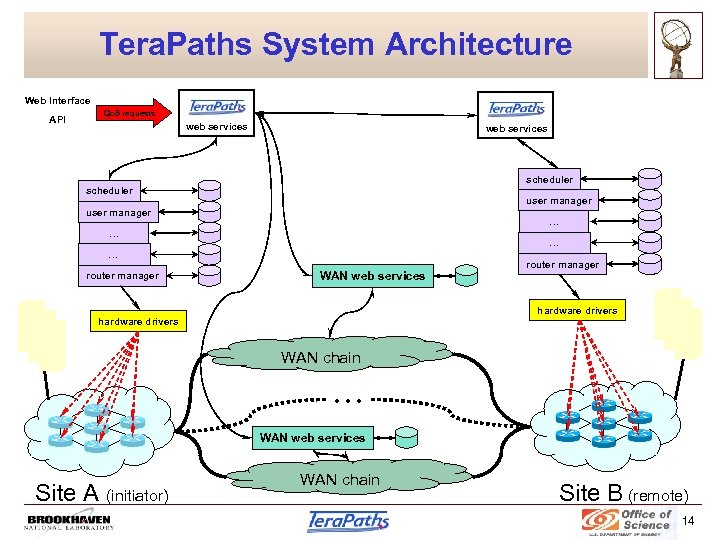

TeraPaths System Architecture
[Diagram labels: Site A (initiator) with web interface, API, QoS requests, web services, scheduler, user manager, router manager, hardware drivers, and WAN web services; WAN chain; Site B (remote) with router manager and WAN web services.]

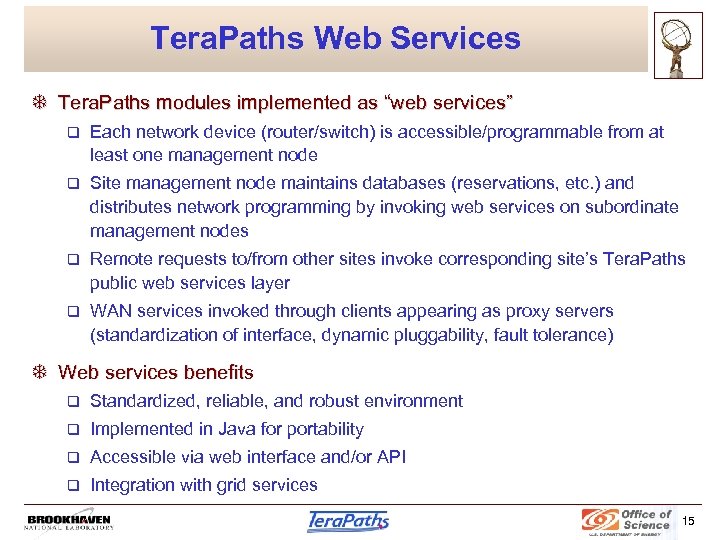

TeraPaths Web Services
- TeraPaths modules implemented as “web services”
  - Each network device (router/switch) is accessible/programmable from at least one management node
  - Site management node maintains databases (reservations, etc.) and distributes network programming by invoking web services on subordinate management nodes
  - Remote requests to/from other sites invoke the corresponding site’s TeraPaths public web services layer
  - WAN services invoked through clients appearing as proxy servers (standardization of interface, dynamic pluggability, fault tolerance)
- Web services benefits
  - Standardized, reliable, and robust environment
  - Implemented in Java for portability
  - Accessible via web interface and/or API
  - Integration with grid services

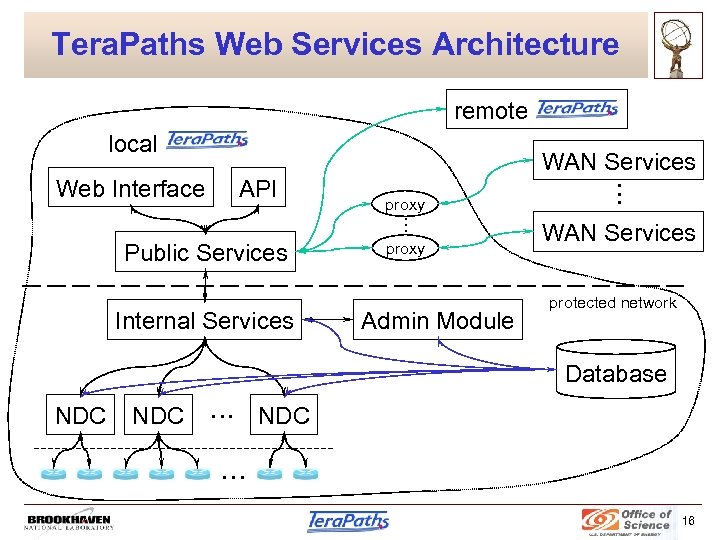

TeraPaths Web Services Architecture
[Diagram labels: remote and local access through API, web interface, and proxies; public services; internal services; admin module; WAN services (via proxies); database; NDCs; protected network.]

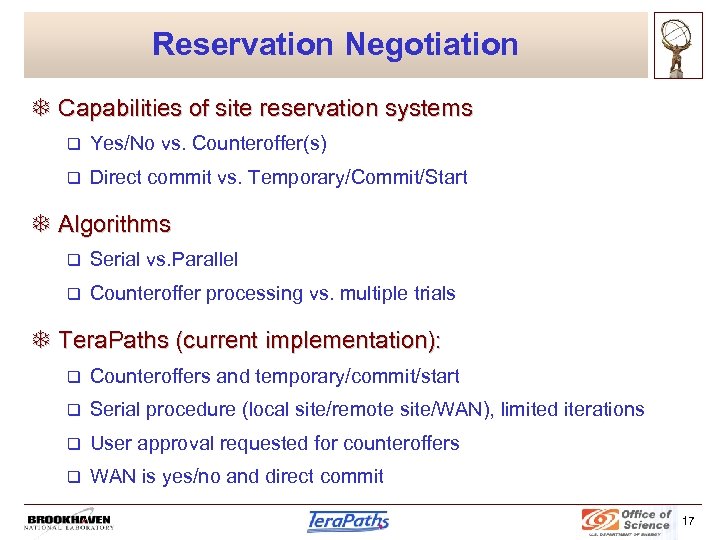

Reservation Negotiation
- Capabilities of site reservation systems
  - Yes/No vs. counteroffer(s)
  - Direct commit vs. temporary/commit/start
- Algorithms
  - Serial vs. parallel
  - Counteroffer processing vs. multiple trials
- TeraPaths (current implementation):
  - Counteroffers and temporary/commit/start
  - Serial procedure (local site/remote site/WAN), limited iterations
  - User approval requested for counteroffers
  - WAN is yes/no and direct commit
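The serial procedure with temporary holds and a yes/no WAN can be sketched as follows. This is an illustrative reading of the slide, not the TeraPaths algorithm: the `Site` class, the `negotiate` function, and the modeling of the WAN as a single free-bandwidth number are all assumptions, and a counteroffer is accepted automatically here, whereas the slide states that user approval is requested.

```python
from typing import List, Optional

class Site:
    """End site that can place a temporary hold or answer with a counteroffer."""

    def __init__(self, name: str, free_mbps: int):
        self.name, self.free, self.held, self.committed = name, free_mbps, 0, 0

    def hold(self, mbps: int) -> int:
        """Temporarily hold mbps if possible; otherwise return the counteroffer."""
        if mbps <= self.free:
            self.free -= mbps
            self.held = mbps
            return mbps
        return self.free                      # counteroffer, nothing is held

    def release(self) -> None:
        self.free += self.held
        self.held = 0

    def commit(self) -> None:
        self.committed += self.held
        self.held = 0

def negotiate(sites: List[Site], wan_free_mbps: int, mbps: int,
              max_rounds: int = 3) -> Optional[int]:
    """Return the committed bandwidth, or None if negotiation fails."""
    for _ in range(max_rounds):               # limited iterations
        if mbps <= 0:
            return None
        counter = None
        for site in sites:                    # serial: local site, then remote site
            offered = site.hold(mbps)
            if offered < mbps:
                counter = offered
                break
        if counter is None and mbps <= wan_free_mbps:   # WAN: yes/no, direct commit
            for site in sites:
                site.commit()
            return mbps
        for site in sites:                    # undo temporary holds
            site.release()
        if counter is None:
            return None                       # WAN said no and makes no counteroffer
        mbps = counter                        # retry with the counteroffer
    return None
```

Holding bandwidth temporarily at each site before asking the WAN means a WAN refusal can be rolled back cleanly, which is the point of the temporary/commit distinction on the slide.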

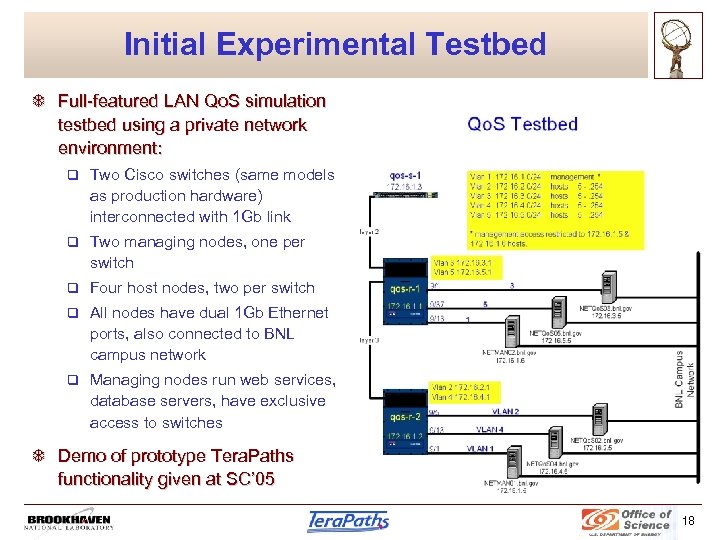

Initial Experimental Testbed
- Full-featured LAN QoS simulation testbed using a private network environment:
  - Two Cisco switches (same models as production hardware) interconnected with a 1 Gb link
  - Two managing nodes, one per switch
  - Four host nodes, two per switch
  - All nodes have dual 1 Gb Ethernet ports, also connected to the BNL campus network
  - Managing nodes run web services and database servers, and have exclusive access to the switches
- Demo of prototype TeraPaths functionality given at SC’05

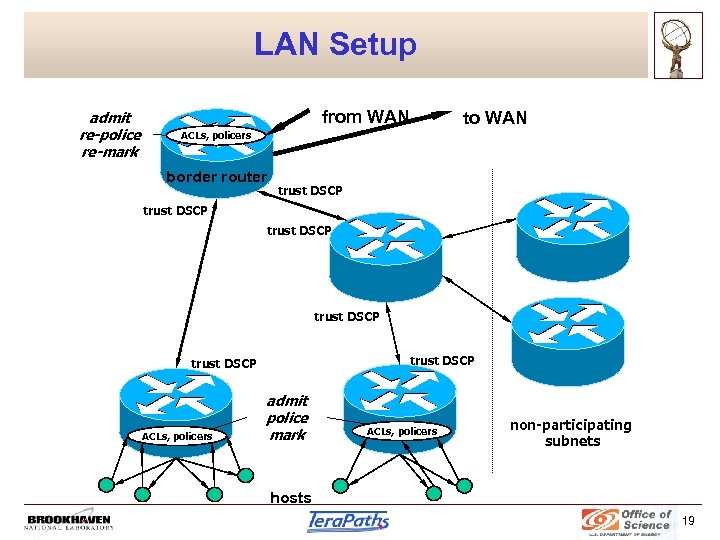

LAN Setup
[Diagram labels: hosts and non-participating subnets; edge devices with ACLs and policers (admit, police, mark); interior devices that trust DSCP; border router with ACLs and policers (admit, re-police, re-mark); traffic from WAN and to WAN.]

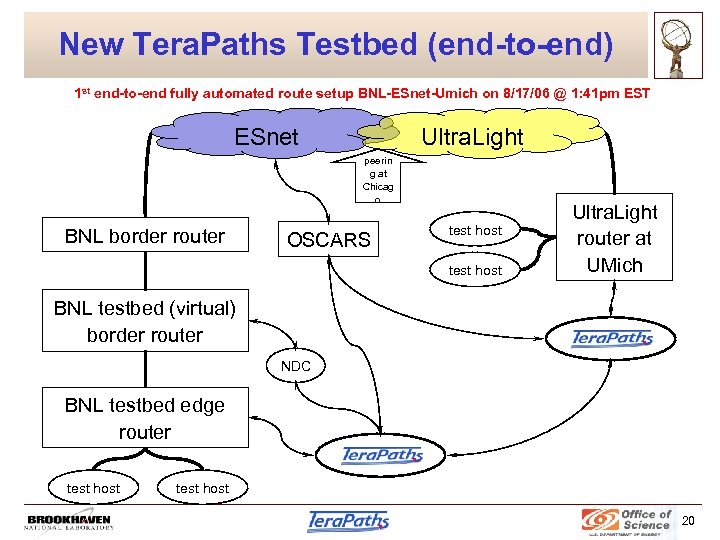

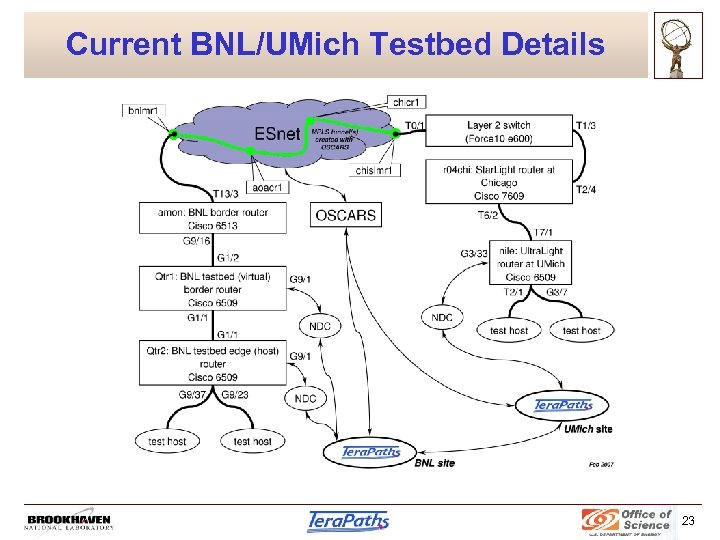

New TeraPaths Testbed (end-to-end)
1st end-to-end fully automated route setup BNL-ESnet-UMich on 8/17/06 @ 1:41 pm EST
[Diagram labels: TeraPaths test host; BNL testbed edge router; NDC; BNL testbed (virtual) border router; BNL border router; OSCARS; ESnet; UltraLight; peering at Chicago; UltraLight router at UMich; TeraPaths test host.]

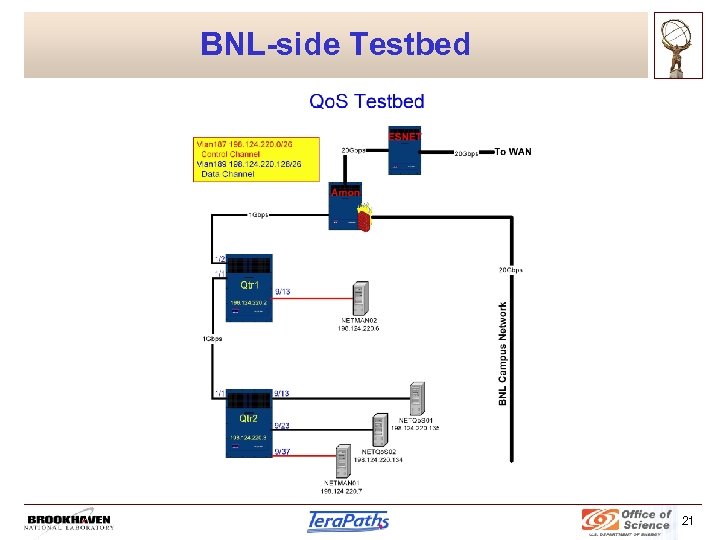

BNL-side Testbed

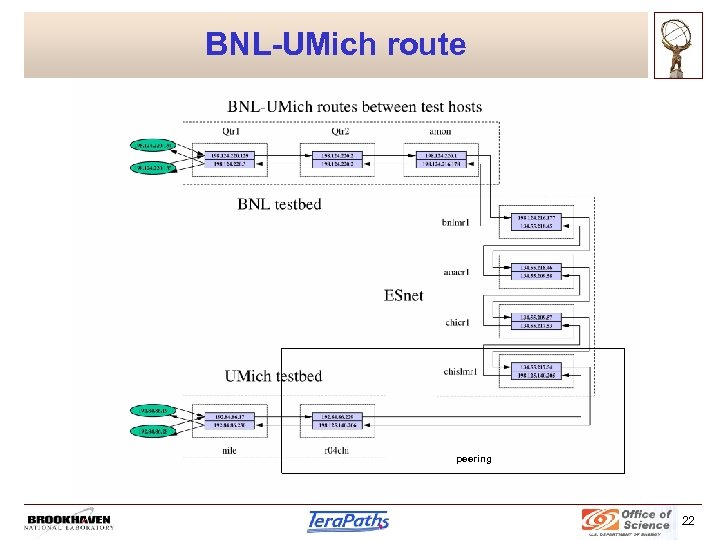

BNL-UMich route peering

Current BNL/UMich Testbed Details

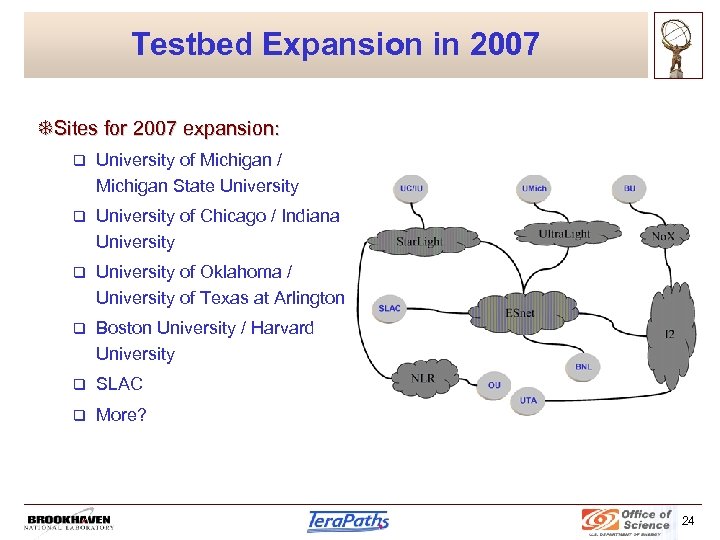

Testbed Expansion in 2007
- Sites for 2007 expansion:
  - University of Michigan / Michigan State University
  - University of Chicago / Indiana University
  - University of Oklahoma / University of Texas at Arlington
  - Boston University / Harvard University
  - SLAC
  - More?

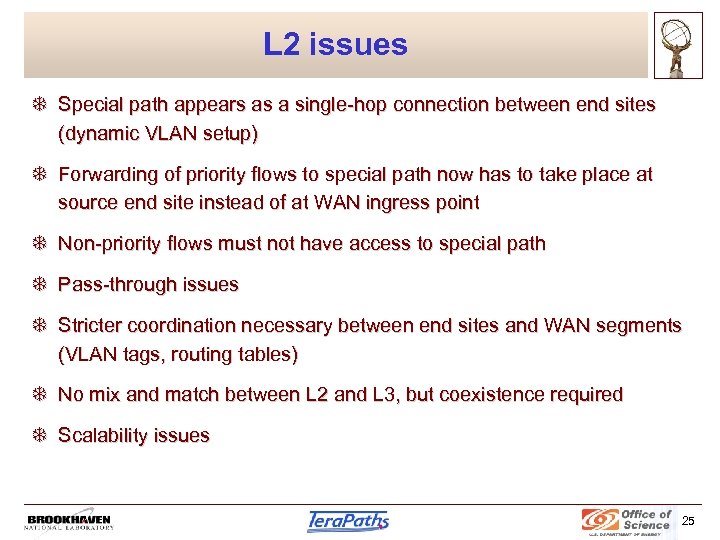

L2 issues
- Special path appears as a single-hop connection between end sites (dynamic VLAN setup)
- Forwarding of priority flows to the special path now has to take place at the source end site instead of at the WAN ingress point
- Non-priority flows must not have access to the special path
- Pass-through issues
- Stricter coordination necessary between end sites and WAN segments (VLAN tags, routing tables)
- No mix and match between L2 and L3, but coexistence required
- Scalability issues

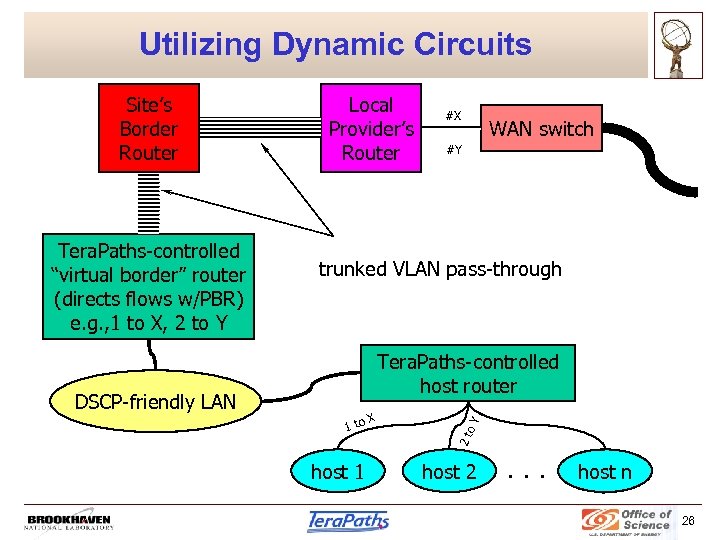

Utilizing Dynamic Circuits
[Diagram labels: host 1, host 2, …, host n on a DSCP-friendly LAN; TeraPaths-controlled host router; TeraPaths-controlled “virtual border” router (directs flows w/PBR), e.g., 1 to X, 2 to Y; trunked VLAN pass-through (VLANs #X and #Y); local provider’s router; site’s border router; WAN switch.]

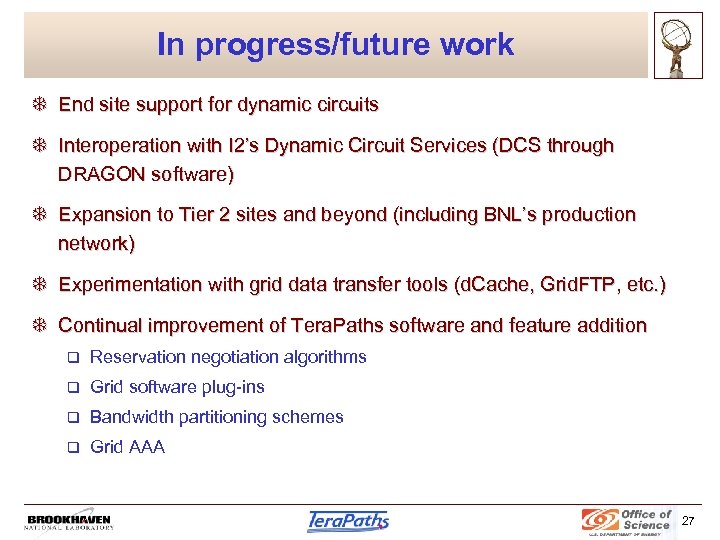

In progress/future work
- End site support for dynamic circuits
- Interoperation with Internet2’s Dynamic Circuit Services (DCS, through DRAGON software)
- Expansion to Tier 2 sites and beyond (including BNL’s production network)
- Experimentation with grid data transfer tools (dCache, GridFTP, etc.)
- Continual improvement of TeraPaths software and feature addition
  - Reservation negotiation algorithms
  - Grid software plug-ins
  - Bandwidth partitioning schemes
  - Grid AAA

Thank you! Questions?
