
- Number of slides: 20

THE STATUS OF PRAGUE COMPUTING FARM

Jan Švec, Institute of Physics AS CR

4.12.2008, Prague


- History and main users
- Hardware description
- Networking
- Software and job distribution
- Management
- Monitoring
- User support


Main users:
- HEP: LHC (ATLAS, ALICE), Tevatron (D0), RHIC (STAR), H1, CALICE
- Astrophysics: Auger
- Solid state physics
- Others (HEP section of the Institute of Physics)

Active participation in grid projects since 2001 (EDG, EGEE II, EGEE III (running), EGI) in collaboration with CESNET.

Czech Tier-2 site, connected to 2 Tier-1 sites (Forschungszentrum Karlsruhe, Germany; ASGC, Taiwan) and 3 Tier-3 sites (MFF Troja, FJFI ČVUT, ÚJF Řež, ÚJF Bulovka, UTEF).


The first computers were bought in 2001 (2 racks) and placed in the main building:
- insufficient cooling
- small space
- small UPS
- inconvenient access (2nd floor)

A new server room was opened in 2004:
- server room and adjacent office
- 18 racks
- 200 kVA UPS
- 350 kVA diesel generator
- 2 cooling units, water cooling planned for 2009
- automatic fire suppression system (Argonite gas)
- good access


- 35 x dual PIII 1.13 GHz
- 67 x dual Xeon 3.06 GHz
- 5 x dual Xeon 2.8 GHz: key services, redundant components
- 3 x dual Opteron 1.6 GHz: file servers
- 36 x BL35p: dual Opteron 275, 280
- 6 x BL20p: dual Xeon 5160
- 8 x BL460: dual Xeon 5160
- 12 x BL465: dual Opteron 2220


- HP Netserver 12 - 1 TB SCSI
- Easy Stor - 10 TB ATA
- Easy Stor - 30 TB SATA
- Promise VTrak M610p - 13 TB SATA
- HP EVA 6100 - 28 TB FATA (SATA over FC)
- Overland Ultamus - 144 TB SATA, DPM pool
- Overland Ultamus - 12 TB, Fibre Channel 4 Gb, tape library cache
- Tape library - 100 TB LTO-4 (expandable to 400 TB)


SGI Altix ICE 8200:
- 512 cores, Intel Xeon 2.5 GHz
- 1 GB RAM per core
- diskless nodes
- external SAS disk array, 7.2 TB
- InfiniBand 4x (20 Gbps)
- SUSE Linux Enterprise Server
- Torque + Maui
- SGI ProPack


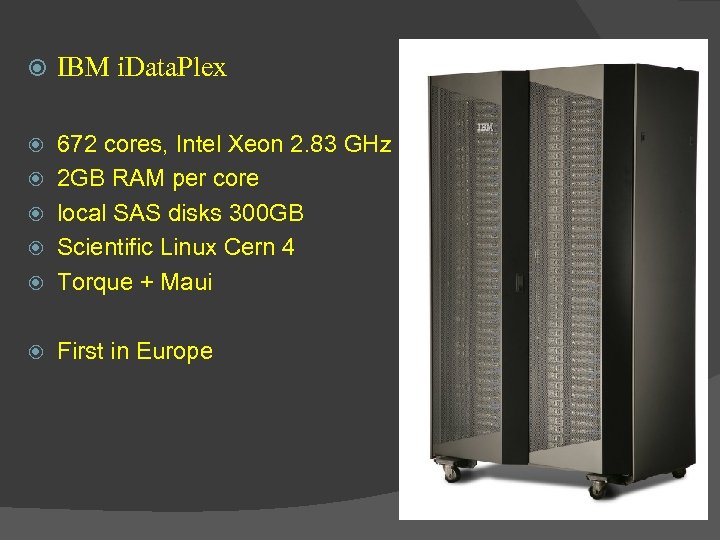

IBM iDataPlex:
- 672 cores, Intel Xeon 2.83 GHz
- 2 GB RAM per core
- local SAS disks, 300 GB
- Scientific Linux CERN 4
- Torque + Maui
- first in Europe


Operating systems:
- Scientific Linux (CERN) 4, 5
- SUSE Linux (SLES 10, openSUSE 11)
- 32 bit, 64 bit testing in progress

Job management:
- PBSPro 9.x, Torque with the Maui scheduler
- fair share used for scheduling
- cputime and walltime multipliers

Legato NetWorker: tape backup (user homes, configuration)

gLite grid middleware (CE, SE, UI, MON box, site BDII, …)

Job submission:
- local: “prak” interface (experiments not supported by the grid)
  - no special requirements
- grid: UI interface (ATLAS, ALICE, Auger)
  - X.509 certificate signed by a grid certification authority (CESNET, CERN)
- interface hosts: merging in progress
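
The slide only names fair share and the cputime/walltime multipliers. The idea can be sketched in Python under stated assumptions. The node types, multipliers and group targets below are hypothetical and do not come from the farm. Walltime is scaled by the relative speed of the node it ran on, so an hour on a fast node counts as more work. A group's priority then falls as its share of normalized usage exceeds its target. Maui's real fair-share calculation adds time-decayed usage windows and configurable weights, which this sketch leaves out.

```python
from dataclasses import dataclass

# Hypothetical walltime multipliers relative to a reference CPU; real values
# would come from benchmarking each node type.
WALLTIME_MULTIPLIER = {
    "piii-1.13": 0.5,
    "xeon-3.06": 1.0,
    "opteron-2220": 1.5,
}


@dataclass
class JobRecord:
    group: str
    node_type: str
    walltime_s: float  # raw wall-clock seconds


def normalized_usage(jobs):
    """Sum walltime per group, scaled by the multiplier of the node used."""
    usage = {}
    for job in jobs:
        scaled = job.walltime_s * WALLTIME_MULTIPLIER[job.node_type]
        usage[job.group] = usage.get(job.group, 0.0) + scaled
    return usage


def fairshare_priority(usage, targets):
    """Target share minus actual share of normalized usage, per group.

    Under-served groups get a positive value and would be scheduled first;
    groups above their target get a negative value.
    """
    total = sum(usage.values()) or 1.0
    return {g: targets[g] - usage.get(g, 0.0) / total for g in targets}


if __name__ == "__main__":
    jobs = [
        JobRecord("atlas", "xeon-3.06", 3600),
        JobRecord("atlas", "opteron-2220", 3600),
        JobRecord("alice", "piii-1.13", 3600),
    ]
    priority = fairshare_priority(normalized_usage(jobs), {"atlas": 0.5, "alice": 0.5})
    for group in sorted(priority, key=priority.get, reverse=True):
        print(group, round(priority[group], 3))
```

With these made-up numbers ATLAS has used 9000 normalized seconds and ALICE 1800, so ALICE gets the higher priority even though both groups ran for the same raw wall-clock time.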


- installation using PXE + kickstart
- system automatically updates from SLC repositories
- gLite middleware configured with YAIM (integrated into cfengine)
- local site changes managed using cfengine
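
PXE installation hands each node a boot configuration chosen by its network identity. The slide does not say which boot loader is used; the sketch below assumes PXELINUX. It lists, in search order, the file names PXELINUX tries under `pxelinux.cfg/` for a given MAC and IPv4 address. The MAC and IP in the usage are invented.

```python
import ipaddress


def pxelinux_candidates(mac: str, ip: str) -> list:
    """File names PXELINUX looks for under pxelinux.cfg/, in search order.

    The client-UUID lookup that PXELINUX tries first is left out.
    """
    # ARP hardware type 01 (Ethernet), then the MAC in lowercase with dashes.
    by_mac = "01-" + mac.lower().replace(":", "-")
    # The IPv4 address as eight uppercase hexadecimal digits.
    by_ip = f"{int(ipaddress.IPv4Address(ip)):08X}"
    # One hex digit is dropped from the right at a time, so a single file
    # can cover a whole address range; "default" is the last resort.
    shortened = [by_ip[:n] for n in range(len(by_ip), 0, -1)]
    return [by_mac, *shortened, "default"]


if __name__ == "__main__":
    # Hypothetical node: the MAC and IP are invented for the example.
    for name in pxelinux_candidates("88:99:AA:BB:CC:DD", "192.168.2.91"):
        print(name)
```

The per-node file found this way would typically point the installer at its kickstart file through the `ks=` boot option.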


Manual administration is tedious and error-prone:
- configuration is scattered among several places
  - kickstart's post-install scripts vs. existing nodes
- ad-hoc changes, no revisions
  - communication among sysadmins
- machines temporarily offline
  - conflicting changes
- issues when reinstalling
  - stuff went missing
- too much work

Cfengine to the rescue!
- managing hundreds of boxes from a central place
- change tracking with Subversion
- describe the end result, not the process
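
"Describe the end result, not the process" means every rule must be safe to run again and again. Below is a minimal Python sketch of that property for a simple line-in-file rule. It is not cfengine code, and the file name and line in the usage are hypothetical. A procedural `echo line >> file` would append a duplicate on every run. The convergent version checks the current state first and acts only when it differs from the desired one.

```python
import tempfile
from pathlib import Path


def ensure_line(path: Path, line: str) -> bool:
    """Converge the file at `path` to a state that contains `line`.

    Returns True when the file had to be changed and False when it already
    matched, so running the rule repeatedly changes nothing after the first run.
    """
    current = path.read_text().splitlines() if path.exists() else []
    if line in current:
        return False  # desired state already holds: do nothing
    current.append(line)
    path.write_text("\n".join(current) + "\n")
    return True


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        target = Path(tmp) / "hosts.allow"  # hypothetical managed file
        for run in range(3):
            changed = ensure_line(target, "sshd: 10.0.0.0/8")
            print(f"run {run}: changed={changed}")
```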


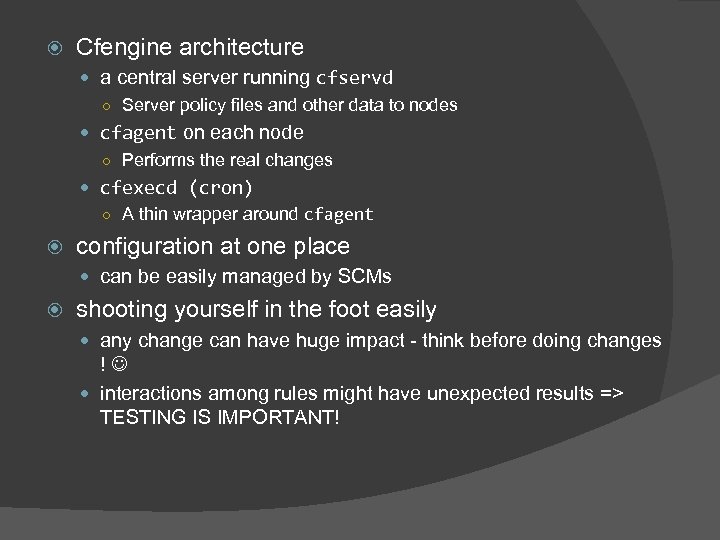

Cfengine architecture:
- a central server running cfservd
  - serves policy files and other data to nodes
- cfagent on each node
  - performs the real changes
- cfexecd (cron)
  - a thin wrapper around cfagent
- configuration in one place, easily managed by an SCM
- easy to shoot yourself in the foot
  - any change can have a huge impact: think before making changes!
  - interactions among rules might have unexpected results => TESTING IS IMPORTANT!
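
A host that belongs to two cfengine classes can receive contradictory settings from rules that look harmless on their own. One way to test for this before deploying is a check that only reports and changes nothing. The sketch below uses a hypothetical, simplified rule model of (class, setting, value) triples with made-up class and host names. It is not cfengine's policy syntax.

```python
from collections import defaultdict


def conflicts_for_host(host_classes, rules):
    """Return {setting: {values}} for settings that applicable rules disagree on.

    A rule (class_name, setting, value) applies when the host is in class_name.
    """
    wanted = defaultdict(set)
    for class_name, setting, value in rules:
        if class_name in host_classes:
            wanted[setting].add(value)
    return {s: values for s, values in wanted.items() if len(values) > 1}


def check_all(hosts, rules):
    """Report conflicts for every host without changing anything (dry run)."""
    report = {}
    for host, classes in hosts.items():
        found = conflicts_for_host(classes, rules)
        if found:
            report[host] = found
    return report


if __name__ == "__main__":
    # Hypothetical rules: the "workers" class disagrees with the site-wide NTP server.
    rules = [
        ("any", "ntp_server", "ntp1"),
        ("workers", "ntp_server", "ntp2"),
        ("servers", "max_procs", "512"),
    ]
    hosts = {"node-a": {"any", "workers"}, "node-b": {"any", "servers"}}
    for host, found in check_all(hosts, rules).items():
        print(host, found)
```

Only `node-a` is reported, because it is the only host whose classes pull in two different values for the same setting.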


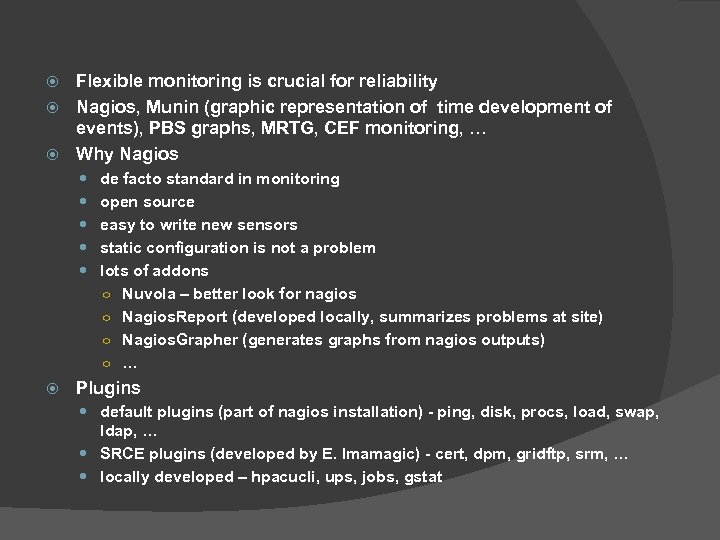

Flexible monitoring is crucial for reliability: Nagios, Munin (graphical representation of how events develop over time), PBS graphs, MRTG, CEF monitoring, …

Why Nagios:
- de facto standard in monitoring
- open source
- easy to write new sensors
- static configuration is not a problem
- lots of add-ons
  - Nuvola: a better look for Nagios
  - NagiosReport (developed locally, summarizes problems at the site)
  - NagiosGrapher (generates graphs from Nagios output)
  - …

Plugins:
- default plugins (part of the Nagios installation): ping, disk, procs, load, swap, ldap, …
- SRCE plugins (developed by E. Imamagic): cert, dpm, gridftp, srm, …
- locally developed: hpacucli, ups, jobs, gstat
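
Writing new sensors is easy because of the plugin contract. A plugin is any executable that prints one status line and exits with 0 (OK), 1 (WARNING), 2 (CRITICAL) or 3 (UNKNOWN). Text after a `|` is treated as performance data. Below is a hypothetical disk-usage check in Python with illustrative thresholds. It is not one of the plugins listed above.

```python
import shutil
import sys

# Standard Nagios plugin return codes.
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3
LABELS = {OK: "OK", WARNING: "WARNING", CRITICAL: "CRITICAL", UNKNOWN: "UNKNOWN"}


def evaluate(value, warn, crit):
    """Map a measured value onto a Nagios return code (higher is worse)."""
    if value >= crit:
        return CRITICAL
    if value >= warn:
        return WARNING
    return OK


def check_disk(path="/", warn=80.0, crit=90.0):
    """Return (exit_code, status_line) for the disk usage of `path`."""
    try:
        usage = shutil.disk_usage(path)
    except OSError as exc:
        return UNKNOWN, f"DISK UNKNOWN - {exc}"
    if usage.total == 0:
        return UNKNOWN, f"DISK UNKNOWN - {path} reports zero size"
    used_pct = 100.0 * usage.used / usage.total
    code = evaluate(used_pct, warn, crit)
    # Text before '|' is shown to admins; text after it is performance data.
    return code, (f"DISK {LABELS[code]} - {path} {used_pct:.1f}% used"
                  f" | used={used_pct:.1f}%;{warn};{crit}")


def main(argv=None):
    """Print the status line and return the exit code Nagios should see."""
    args = sys.argv[1:] if argv is None else argv
    code, line = check_disk(args[0] if args else "/")
    print(line)
    return code
```

Installed as a plugin, the script would end with `sys.exit(main())` so that Nagios receives the return code. Leaving that call out lets the functions be imported and tested on their own.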


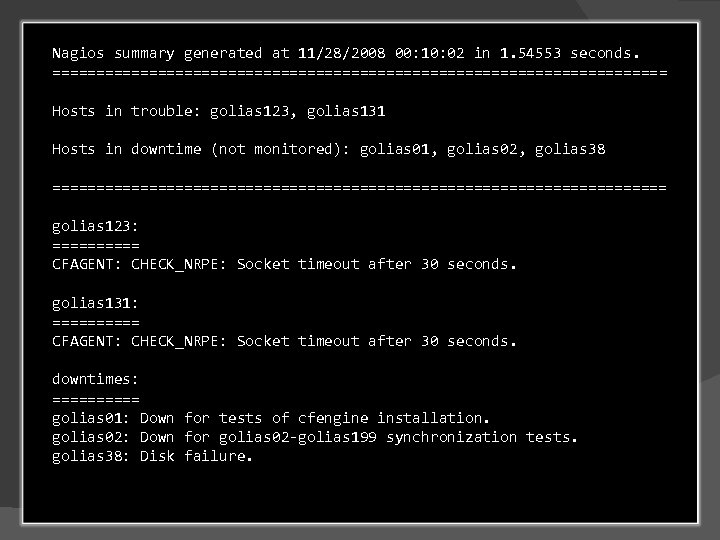

    Nagios summary generated at 11/28/2008 00:10:02 in 1.54553 seconds.
    ===================================
    Hosts in trouble: golias123, golias131
    Hosts in downtime (not monitored): golias01, golias02, golias38
    ===================================
    golias123:
    =====
    CFAGENT: CHECK_NRPE: Socket timeout after 30 seconds.
    golias131:
    =====
    CFAGENT: CHECK_NRPE: Socket timeout after 30 seconds.
    downtimes:
    =====
    golias01: Down for tests of cfengine installation.
    golias02: Down for golias02-golias199 synchronization tests.
    golias38: Disk failure.
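
Once the problem and downtime data are known, a digest like the one above is mostly formatting. The slides do not describe how the locally developed report tool gathers its data. The sketch below therefore assumes the data has already been collected into plain dictionaries and renders it in the same layout. The function and parameter names are hypothetical.

```python
from datetime import datetime

RULE = "=" * 35  # separator line


def build_summary(problems, downtimes, generated_at, elapsed):
    """Render a plain-text digest of hosts in trouble and hosts in downtime.

    problems:  {host: [(service, plugin_output), ...]}
    downtimes: {host: downtime_comment}
    """
    lines = [
        f"Nagios summary generated at {generated_at:%m/%d/%Y %H:%M:%S}"
        f" in {elapsed:.5f} seconds.",
        RULE,
        "Hosts in trouble: " + ", ".join(sorted(problems)),
        "Hosts in downtime (not monitored): " + ", ".join(sorted(downtimes)),
        RULE,
    ]
    for host in sorted(problems):
        lines += [f"{host}:", "====="]
        lines += [f"{service}: {output}" for service, output in problems[host]]
    lines += ["downtimes:", "====="]
    lines += [f"{host}: {downtimes[host]}" for host in sorted(downtimes)]
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_summary(
        {"golias123": [("CFAGENT", "CHECK_NRPE: Socket timeout after 30 seconds.")]},
        {"golias38": "Disk failure."},
        datetime(2008, 11, 28, 0, 10, 2),
        1.54553,
    ))
```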


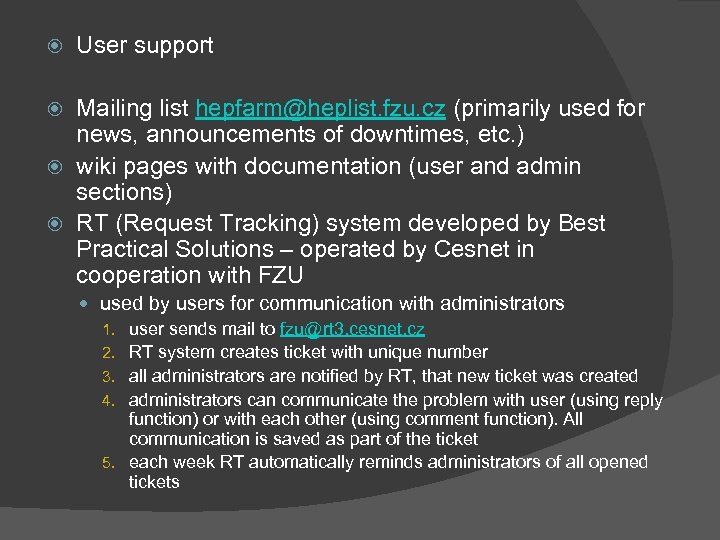

User support:
- mailing list hepfarm@heplist.fzu.cz (primarily used for news, announcements of downtimes, etc.)
- wiki pages with documentation (user and admin sections)
- RT (Request Tracker) system developed by Best Practical Solutions, operated by CESNET in cooperation with FZU, used by users to communicate with administrators:
  1. the user sends mail to fzu@rt3.cesnet.cz
  2. the RT system creates a ticket with a unique number
  3. RT notifies all administrators that a new ticket was created
  4. administrators can discuss the problem with the user (using the reply function) or with each other (using the comment function); all communication is saved as part of the ticket
  5. each week RT automatically reminds administrators of all open tickets
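
The five steps amount to a small ticket life cycle. Below is a toy Python model of it. The class and method names are hypothetical and this is not RT's API. The `resolve` step is an assumption, added so that the weekly reminder has something to filter out.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Ticket:
    number: int
    requester: str
    subject: str
    status: str = "open"
    history: list = field(default_factory=list)  # (kind, author, text) entries


class TicketQueue:
    """Toy model of the mail-driven ticket workflow (hypothetical, not RT's API)."""

    def __init__(self, admins):
        self.admins = list(admins)
        self._numbers = count(1)  # source of unique ticket numbers
        self.tickets = {}
        self.outbox = []  # (recipient, message) pairs that would be mailed

    def receive_mail(self, sender, subject, body):
        # Steps 1-2: an incoming mail becomes a ticket with a unique number.
        ticket = Ticket(next(self._numbers), sender, subject)
        ticket.history.append(("create", sender, body))
        self.tickets[ticket.number] = ticket
        # Step 3: every administrator is notified about the new ticket.
        for admin in self.admins:
            self.outbox.append((admin, f"New ticket #{ticket.number}: {subject}"))
        return ticket.number

    def reply(self, number, admin, text):
        # Step 4: a reply goes to the user and is stored with the ticket.
        ticket = self.tickets[number]
        ticket.history.append(("reply", admin, text))
        self.outbox.append((ticket.requester, f"Re: #{number}: {text}"))

    def comment(self, number, admin, text):
        # Step 4: a comment reaches the other administrators only.
        ticket = self.tickets[number]
        ticket.history.append(("comment", admin, text))
        for other in self.admins:
            if other != admin:
                self.outbox.append((other, f"Comment on #{number}: {text}"))

    def resolve(self, number):
        # Assumed extra step so that finished tickets drop out of reminders.
        self.tickets[number].status = "resolved"

    def weekly_reminder(self):
        # Step 5: each administrator gets the list of tickets still open.
        still_open = sorted(n for n, t in self.tickets.items() if t.status == "open")
        return [(admin, still_open) for admin in self.admins]
```

Keeping every reply and comment in `history` mirrors step 4: the whole conversation stays attached to the ticket, whichever function was used.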
