e54b06a42f8770f96ea8a5ecfdafe890.ppt

- Number of slides: 56

The Social Web, or how Flickr changed my life. Kristina Lerman, USC Information Sciences Institute, http://www.isi.edu/~lerman

Web 1.0

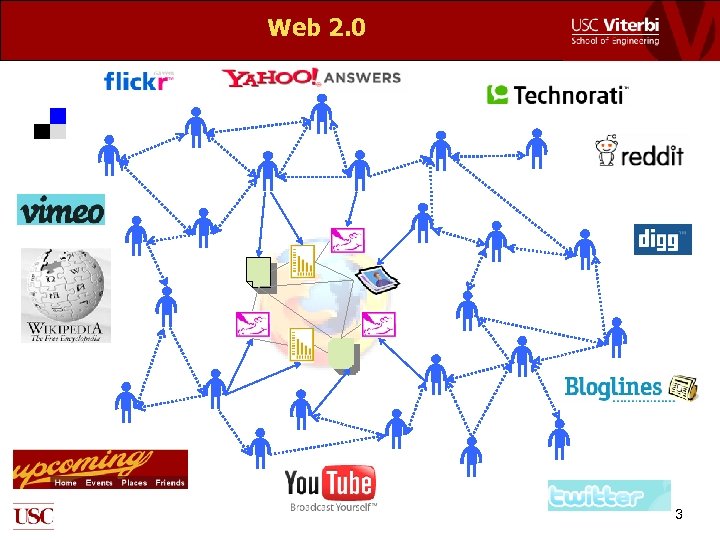

Web 2.0

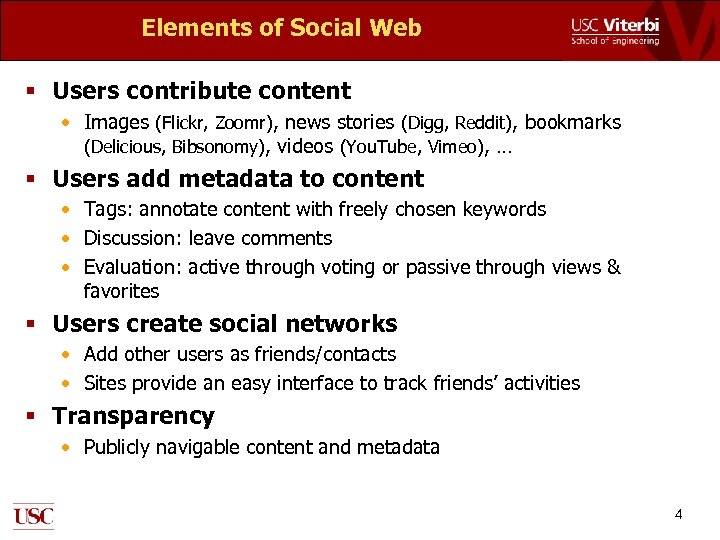

Elements of the Social Web § Users contribute content • Images (Flickr, Zoomr), news stories (Digg, Reddit), bookmarks (Delicious, BibSonomy), videos (YouTube, Vimeo), … § Users add metadata to content • Tags: annotate content with freely chosen keywords • Discussion: leave comments • Evaluation: active through voting, or passive through views and favorites § Users create social networks • Add other users as friends/contacts • Sites provide an easy interface to track friends’ activities § Transparency • Publicly navigable content and metadata

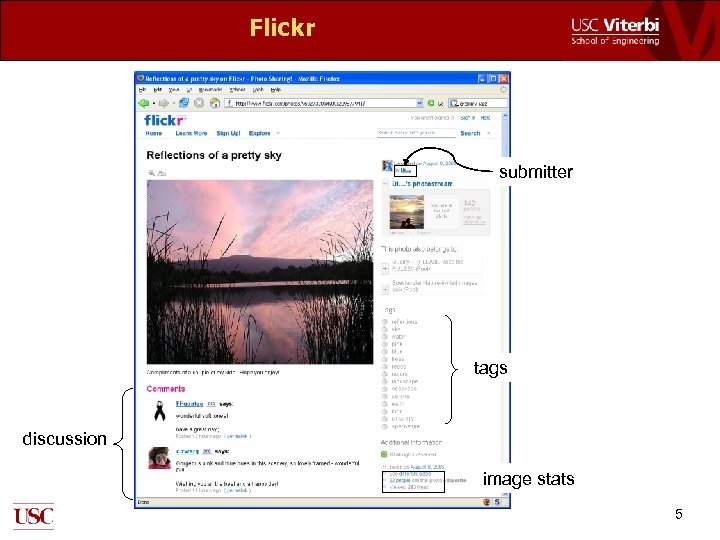

Flickr: submitter, tags, discussion, image stats

User profile

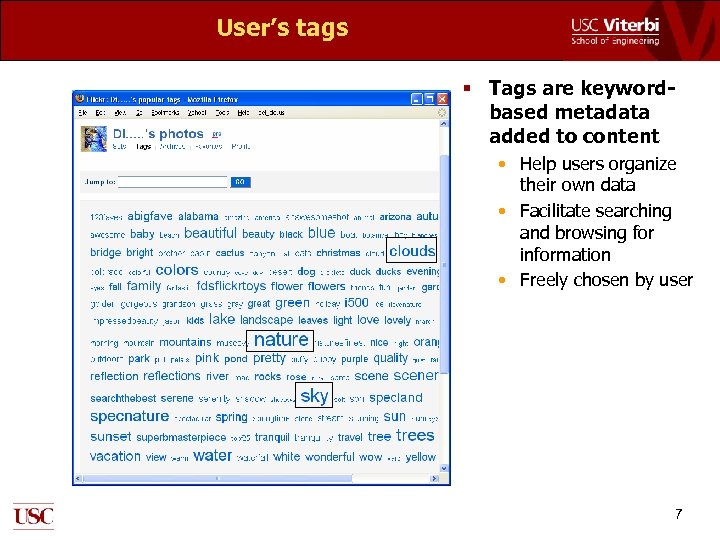

User’s tags § Tags are keyword-based metadata added to content • Help users organize their own data • Facilitate searching and browsing for information • Freely chosen by the user

User’s favorite images (by other photographers)

So what? By exposing human activity, the Social Web allows users to exploit the intelligence and opinions of others to solve problems • New way of interacting with information − Social Information Processing • Exploit collective effects − Word of mouth to amplify good information • Amenable to analysis − Design optimal social information processing systems. Challenge for AI: harness the power of collective intelligence to solve information processing problems

Outline for the rest of the talk: User-contributed metadata can be used to solve the following information processing problems § Discovery: collectively added tags used for information discovery § Personalization: user-added metadata, in the form of tags and social networks, used to personalize search results § Recommendation: social networks for information filtering § Dynamics of collaboration: mathematical study of a collaborative rating system

Discovery / personalization / recommendation / dynamics of collaboration (with Anon Plangprasopchok)

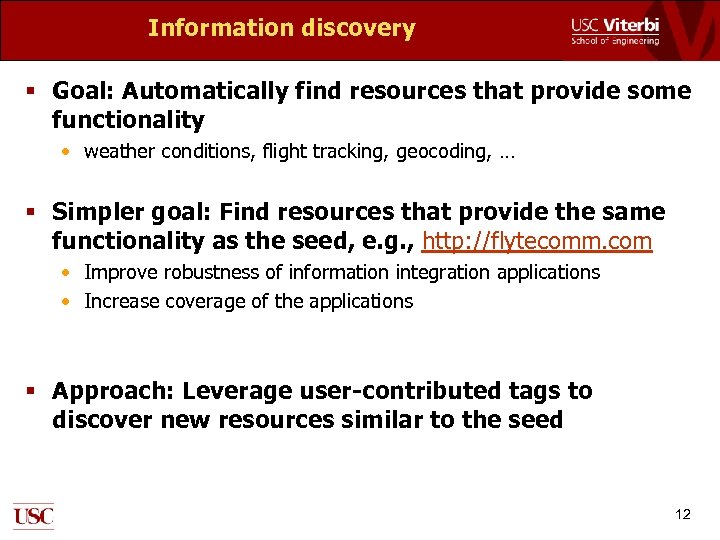

Information discovery § Goal: automatically find resources that provide some functionality • Weather conditions, flight tracking, geocoding, … § Simpler goal: find resources that provide the same functionality as the seed, e.g., http://flytecomm.com • Improve robustness of information integration applications • Increase coverage of the applications § Approach: leverage user-contributed tags to discover new resources similar to the seed

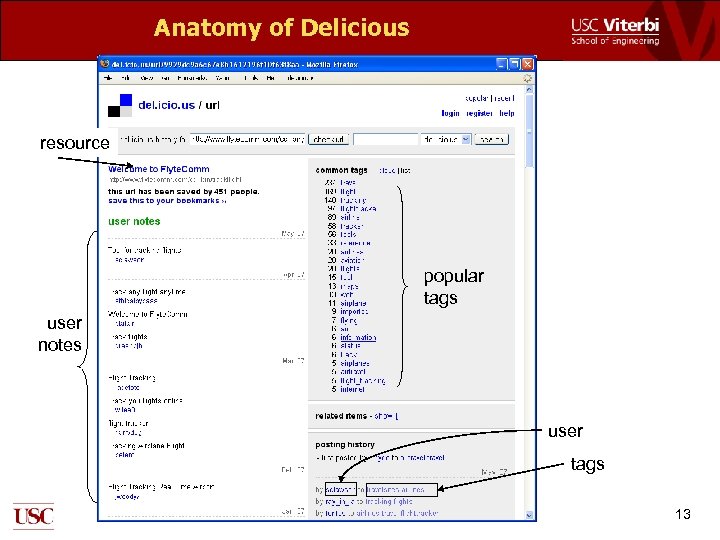

Anatomy of a Delicious resource: popular tags, user notes, user tags

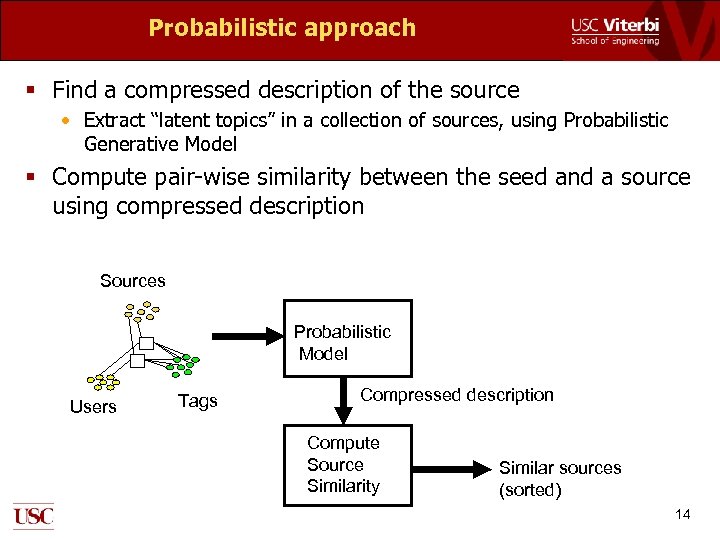

Probabilistic approach § Find a compressed description of each source • Extract “latent topics” in a collection of sources using a probabilistic generative model § Compute pairwise similarity between the seed and each source using the compressed descriptions

Diagram: sources, users, and tags feed a probabilistic model, which yields a compressed description used to compute source similarity and output similar sources (sorted)
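The last step, ranking sources by how close their compressed descriptions are to the seed's, can be sketched as follows. This is a minimal illustration, assuming each source has already been reduced to a topic distribution p(z|source); the talk does not name the similarity measure, so Jensen-Shannon divergence is an assumed stand-in, and the source names and numbers are hypothetical.

```python
import math

def js_divergence(p, q):
    """Jensen-Shannon divergence (base 2, range 0..1) between two distributions."""
    m = [(a + b) / 2 for a, b in zip(p, q)]

    def kl(x, y):
        # Terms with x_i = 0 contribute nothing; y_i > 0 whenever x_i > 0 since y = m.
        return sum(a * math.log2(a / b) for a, b in zip(x, y) if a > 0)

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

def rank_by_similarity(seed, sources):
    """Sort source names from most to least similar to the seed.

    seed: list of p(z|seed); sources: dict name -> list of p(z|source),
    all over the same latent topics z. Similarity = 1 - JS divergence (assumed).
    """
    scores = {name: 1.0 - js_divergence(seed, dist) for name, dist in sources.items()}
    return sorted(scores, key=scores.get, reverse=True)

# Hypothetical topic distributions for three candidate sources.
seed = [0.7, 0.2, 0.1]
sources = {"flightstats": [0.6, 0.3, 0.1],
           "weather": [0.1, 0.1, 0.8],
           "maps": [0.2, 0.7, 0.1]}
print(rank_by_similarity(seed, sources))  # ['flightstats', 'maps', 'weather']
```

Any symmetric, bounded divergence would serve; JS is used here only because it stays finite when one distribution has zeros the other lacks.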

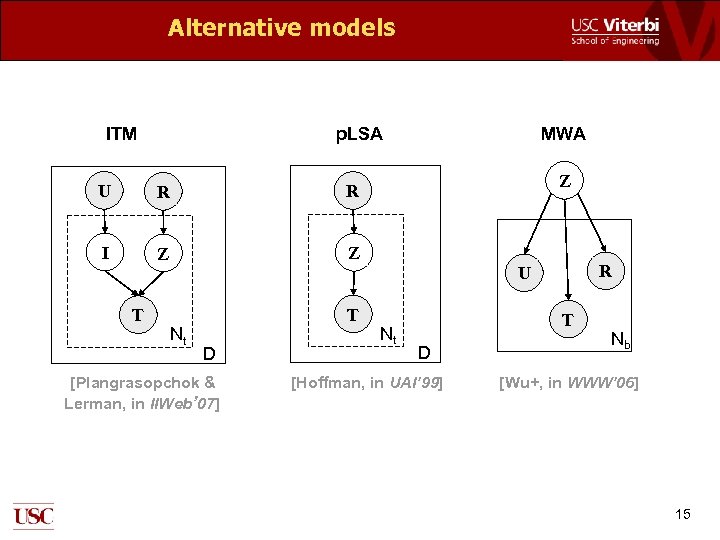

Alternative models (plate diagrams over variables U, R, Z, I, T) • ITM [Plangprasopchok & Lerman, IIWeb’07] • pLSA [Hofmann, UAI’99] • MWA [Wu et al., WWW’06]

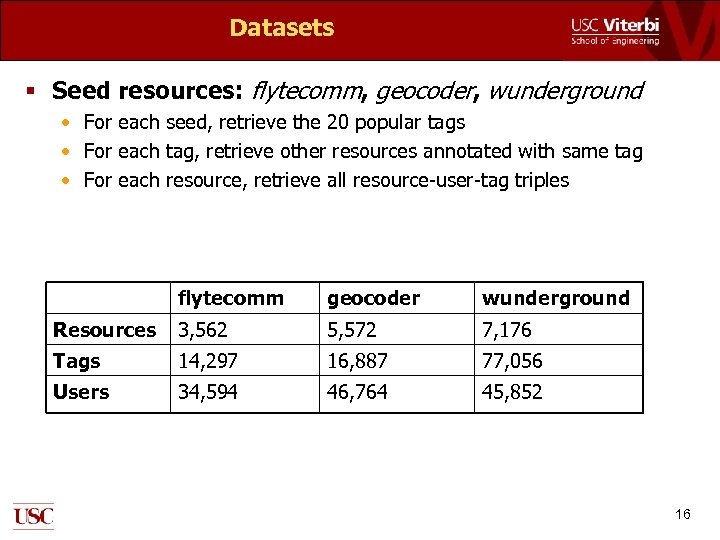

Datasets § Seed resources: flytecomm, geocoder, wunderground • For each seed, retrieve its 20 most popular tags • For each tag, retrieve other resources annotated with the same tag • For each resource, retrieve all resource-user-tag triples

| | flytecomm | geocoder | wunderground |
|---|---|---|---|
| Resources | 3,562 | 5,572 | 7,176 |
| Tags | 14,297 | 16,887 | 77,056 |
| Users | 34,594 | 46,764 | 45,852 |
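The three collection steps above can be mimicked on an in-memory list of triples. This is a sketch only: the real data came from crawling Delicious, whereas here `triples` is a hypothetical stand-in and no Delicious API is used.

```python
from collections import Counter

def build_dataset(seed, triples, n_tags=20):
    """Expand a seed resource into a corpus of (resource, user, tag) triples.

    triples: hypothetical in-memory stand-in for the crawl; each entry records
    that `user` annotated `resource` with `tag`.
    """
    triples = list(triples)
    # 1. The seed's most popular tags, ranked by how many triples carry them.
    tag_counts = Counter(t for r, _, t in triples if r == seed)
    popular = {t for t, _ in tag_counts.most_common(n_tags)}
    # 2. Every resource annotated with at least one of those tags (includes the seed).
    resources = {r for r, _, t in triples if t in popular}
    # 3. All triples for those resources, including tags outside the popular set.
    return [(r, u, t) for r, u, t in triples if r in resources]

toy = [("flytecomm", "u1", "flights"), ("flytecomm", "u2", "flights"),
       ("flytecomm", "u1", "travel"), ("flightaware", "u3", "flights"),
       ("flightaware", "u3", "aviation"), ("cnn", "u4", "news")]
print(len(build_dataset("flytecomm", toy, n_tags=1)))  # 5
```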

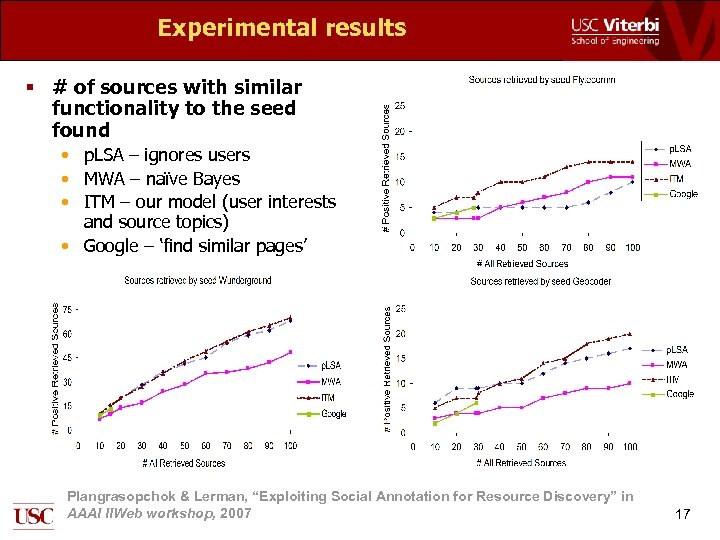

Experimental results § Number of sources found with functionality similar to the seed (chart) • pLSA – ignores users • MWA – naïve Bayes • ITM – our model (user interests and source topics) • Google – ‘find similar pages’. Plangprasopchok & Lerman, “Exploiting Social Annotation for Resource Discovery,” AAAI IIWeb workshop, 2007

Summary and future work § Exploit the tagging activities of different users to find data sources similar to the seed § Future work • Extend the probabilistic model to learn topic hierarchies (aka folksonomies) − e.g., Travel » Flights (Booking, Status) • Hotels (Booking, Reviews) • Car rentals • Destinations

discovery / Personalization / recommendation / dynamics of collaboration (with Anon Plangprasopchok & Michael Wong)

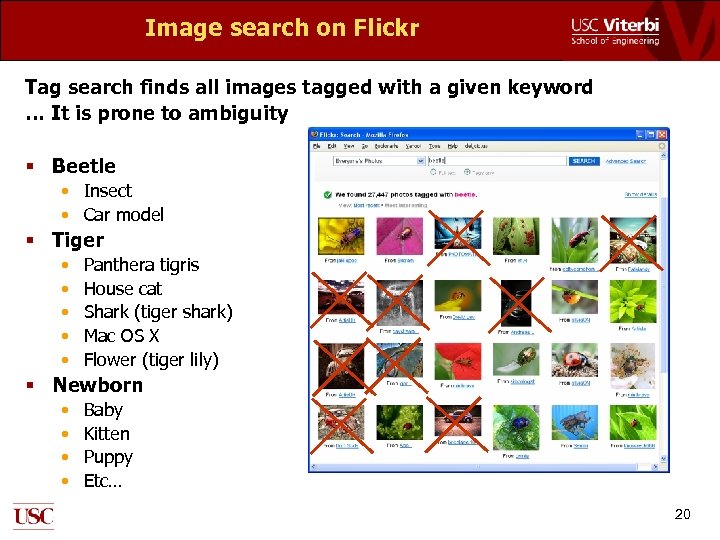

Image search on Flickr: Tag search finds all images tagged with a given keyword… It is prone to ambiguity § Beetle • Insect • Car model § Tiger • Panthera tigris • House cat • Shark (tiger shark) • Mac OS X • Flower (tiger lily) § Newborn • Baby • Kitten • Puppy § Etc…

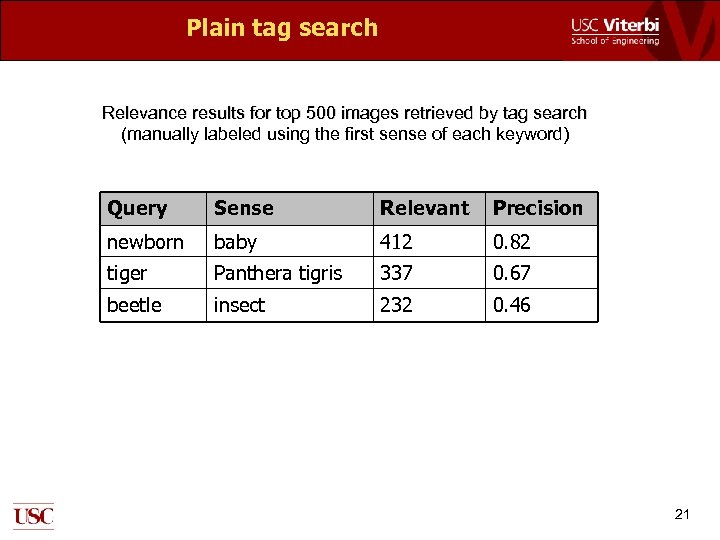

Plain tag search: relevance of the top 500 images retrieved by tag search (manually labeled using the first sense of each keyword)

| Query | Sense | Relevant | Precision |
|---|---|---|---|
| newborn | baby | 412 | 0.82 |
| tiger | Panthera tigris | 337 | 0.67 |
| beetle | insect | 232 | 0.46 |

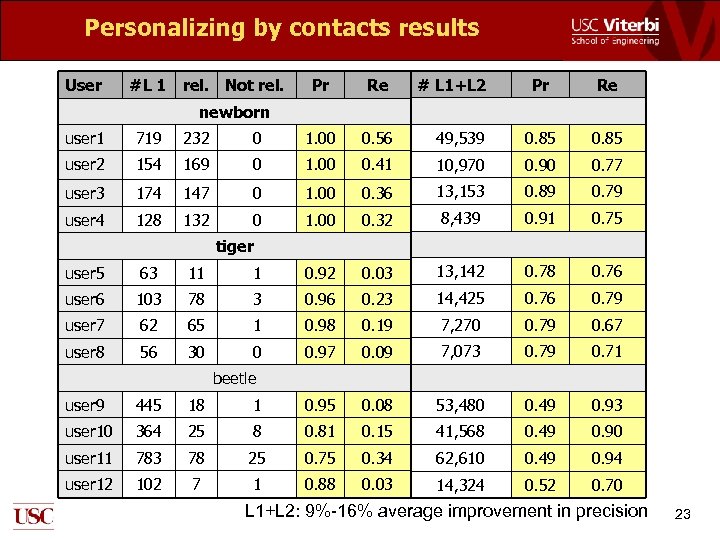

Personalizing search results: Users express their tastes and preferences through the metadata they create • Contacts they add to their social networks • Tags they add to their own images • Images they mark as favorites • Groups they join. Use this metadata to improve image search results! • Personalizing by tags • Personalizing by contacts − Restrict image search results to images submitted by user u’s friends (Level 1 contacts)
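Restricting results to contacts amounts to filtering on the image owner. A minimal sketch, assuming search results arrive as (image_id, owner) pairs and the contact graph as a dict of sets; both layouts are hypothetical, not Flickr API structures. Level 2 adds contacts of contacts, as in the L1+L2 columns of the results table.

```python
def personalize_by_contacts(results, user, contacts, include_level2=False):
    """Keep only search results submitted by the user's contacts.

    results: list of (image_id, owner) from plain tag search (hypothetical layout).
    contacts: dict user -> set of that user's contacts.
    """
    allowed = set(contacts.get(user, set()))       # Level 1 contacts
    if include_level2:
        for c in list(allowed):
            allowed |= contacts.get(c, set())      # add contacts of contacts
    allowed.discard(user)  # sketch choice: the searcher's own images are excluded
    return [(img, owner) for img, owner in results if owner in allowed]

contacts = {"me": {"a", "b"}, "a": {"c", "me"}, "b": set()}
results = [(1, "a"), (2, "c"), (3, "z"), (4, "me")]
print(personalize_by_contacts(results, "me", contacts))                       # [(1, 'a')]
print(personalize_by_contacts(results, "me", contacts, include_level2=True))  # [(1, 'a'), (2, 'c')]
```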

Personalizing by contacts: results (Pr = precision, Re = recall)

| Query | User | # L1 contacts | Relevant | Not relevant | Pr | Re | # L1+L2 contacts | Pr | Re |
|---|---|---|---|---|---|---|---|---|---|
| newborn | user 1 | 719 | 232 | 0 | 1.00 | 0.56 | 49,539 | 0.85 | |
| | user 2 | 154 | 169 | 0 | 1.00 | 0.41 | 10,970 | 0.90 | 0.77 |
| | user 3 | 174 | 147 | 0 | 1.00 | 0.36 | 13,153 | 0.89 | 0.79 |
| | user 4 | 128 | 132 | 0 | 1.00 | 0.32 | 8,439 | 0.91 | 0.75 |
| tiger | user 5 | 63 | 11 | 1 | 0.92 | 0.03 | 13,142 | 0.78 | 0.76 |
| | user 6 | 103 | 78 | 3 | 0.96 | 0.23 | 14,425 | 0.76 | 0.79 |
| | user 7 | 62 | 65 | 1 | 0.98 | 0.19 | 7,270 | 0.79 | 0.67 |
| | user 8 | 56 | 30 | 0 | 0.97 | 0.09 | 7,073 | 0.79 | 0.71 |
| beetle | user 9 | 445 | 18 | 1 | 0.95 | 0.08 | 53,480 | 0.49 | 0.93 |
| | user 10 | 364 | 25 | 8 | 0.81 | 0.15 | 41,568 | 0.49 | 0.90 |
| | user 11 | 783 | 78 | 25 | 0.75 | 0.34 | 62,610 | 0.49 | 0.94 |
| | user 12 | 102 | 7 | 1 | 0.88 | 0.03 | 14,324 | 0.52 | 0.70 |

L1+L2: 9%-16% average improvement in precision
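The Pr and Re columns can be reproduced from the counts. Precision divides the relevant results by everything retrieved; recall appears to divide by the number of relevant images in the plain tag-search set (412 for newborn, 337 for tiger, 232 for beetle). That recall denominator is inferred from the reported numbers rather than stated on the slide, so this sketch is a consistency check, not the authors' evaluation code.

```python
def precision_recall(relevant_retrieved, irrelevant_retrieved, total_relevant):
    """Precision and recall of a filtered result set.

    total_relevant: relevant images in the plain tag-search set, assumed to be
    the recall denominator (e.g. 412 for "newborn").
    """
    retrieved = relevant_retrieved + irrelevant_retrieved
    precision = relevant_retrieved / retrieved if retrieved else 0.0
    recall = relevant_retrieved / total_relevant
    return precision, recall

p, r = precision_recall(11, 1, 337)   # user 5, query "tiger"
print(round(p, 2), round(r, 2))       # 0.92 0.03
```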

Personalizing by tags § Users often add descriptive metadata to images • Tags • Titles • Image descriptions • Adding images to groups § Personalizing by tags • Find (hidden) topics of interest to the user • Find images in the search results related to these topics

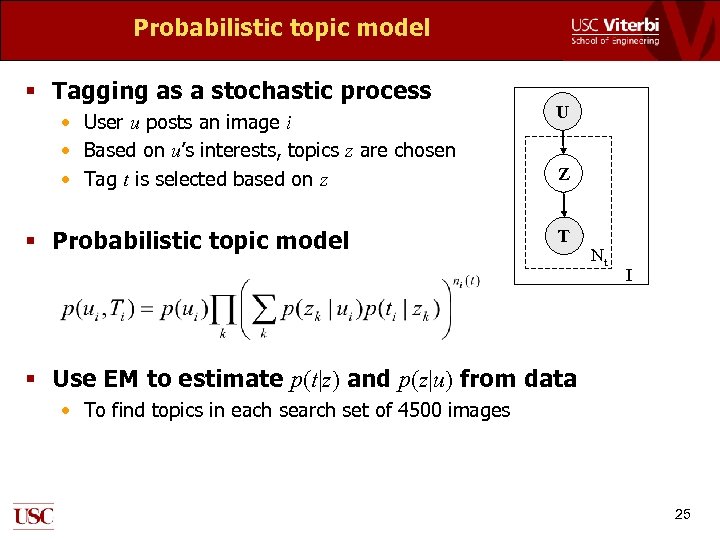

Probabilistic topic model § Tagging as a stochastic process • User u posts an image i • Based on u’s interests, topics z are chosen • Tag t is selected based on z § Probabilistic topic model (plate diagram: U → Z → T, with plates Nt and I) § Use EM to estimate p(t|z) and p(z|u) from data • Topics are found separately in each search set of 4500 images
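Since users choose topics and topics emit tags, the probability that user u applies tag t is p(t|u) = Σ_z p(t|z) p(z|u). The sketch below fits that mixture with a textbook EM loop on aggregated user-tag counts. It is a minimal illustration, not the authors' code: it assumes counts have been pooled per user (ignoring the image plate), and the toy data are hypothetical.

```python
import math
import random

def fit_topic_model(counts, n_topics, n_iter=100, seed=0):
    """EM for the aspect model p(t|u) = sum_z p(t|z) p(z|u).

    counts: dict (user, tag) -> number of times the user applied the tag.
    Returns (p_tz, p_zu): p_tz[z][tag] = p(t|z), p_zu[user][z] = p(z|u).
    """
    rng = random.Random(seed)
    users = sorted({u for u, _ in counts})
    tags = sorted({t for _, t in counts})

    def random_dist(n):
        w = [rng.random() + 1e-3 for _ in range(n)]
        s = sum(w)
        return [x / s for x in w]

    # Random initialization breaks the symmetry between topics.
    p_tz = [dict(zip(tags, random_dist(len(tags)))) for _ in range(n_topics)]
    p_zu = {u: random_dist(n_topics) for u in users}

    for _ in range(n_iter):
        exp_tz = [dict.fromkeys(tags, 0.0) for _ in range(n_topics)]
        exp_zu = {u: [0.0] * n_topics for u in users}
        for (u, t), n in counts.items():
            # E-step: posterior p(z | u, t) is proportional to p(t|z) p(z|u).
            post = [p_tz[z][t] * p_zu[u][z] for z in range(n_topics)]
            total = sum(post) or 1.0  # guard against underflow
            for z in range(n_topics):
                share = n * post[z] / total
                exp_tz[z][t] += share
                exp_zu[u][z] += share
        # M-step: normalize the expected counts into distributions.
        for z in range(n_topics):
            s = sum(exp_tz[z].values()) or 1.0
            p_tz[z] = {t: v / s for t, v in exp_tz[z].items()}
        for u in users:
            s = sum(exp_zu[u]) or 1.0
            p_zu[u] = [v / s for v in exp_zu[u]]
    return p_tz, p_zu

def log_likelihood(counts, p_tz, p_zu):
    """Sum over (u, t) of n(u, t) * log p(t|u); EM never decreases this."""
    return sum(n * math.log(sum(p_tz[z][t] * p_zu[u][z] for z in range(len(p_tz))))
               for (u, t), n in counts.items())
```

EM only guarantees a local optimum, so in practice one would run several random seeds and keep the fit with the highest log-likelihood.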

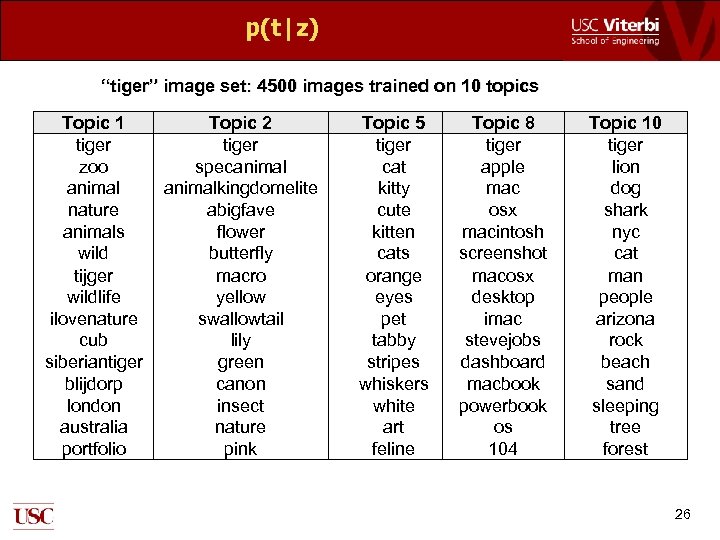

p(t|z) for the “tiger” image set (4500 images, trained on 10 topics):

- Topic 1: tiger zoo animal nature animals wild tijger wildlife ilovenature cub siberiantiger blijdorp london australia portfolio
- Topic 2: tiger specanimalkingdomelite abigfave flower butterfly macro yellow swallowtail lily green canon insect nature pink
- Topic 5: tiger cat kitty cute kitten cats orange eyes pet tabby stripes whiskers white art feline
- Topic 8: tiger apple mac osx macintosh screenshot macosx desktop imac stevejobs dashboard macbook powerbook os 104
- Topic 10: tiger lion dog shark nyc cat man people arizona rock beach sand sleeping tree forest

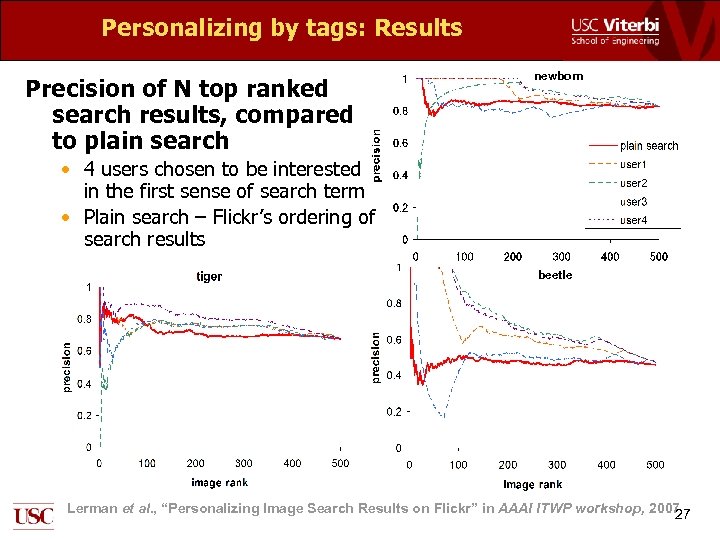

Personalizing by tags: results. Precision of the top N ranked search results compared with plain search (chart panels: newborn, beetle) • 4 users chosen to be interested in the first sense of the search term • Plain search: Flickr’s ordering of search results. Lerman et al., “Personalizing Image Search Results on Flickr,” AAAI ITWP workshop, 2007
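One way to turn fitted p(t|z) and p(z|u) into a personalized ordering is to score each result by how probable its tags are under the searcher's topic mixture. The scoring rule below (mean over an image's tags of Σ_z p(t|z) p(z|u)) is an assumption for illustration; the paper's exact ranking function may differ, and the topic tables are made up.

```python
def rank_images(images, p_tz, p_z_user):
    """Order search results by how well their tags match a user's topics.

    images: dict image_id -> list of tags.
    p_tz: list over topics of dicts tag -> p(t|z); p_z_user: list of p(z|u).
    Score = mean over the image's tags of sum_z p(t|z) p(z|u) (assumed rule).
    """
    def score(tags):
        if not tags:
            return 0.0
        probs = [sum(p_tz[z].get(t, 0.0) * p_z_user[z] for z in range(len(p_tz)))
                 for t in tags]
        return sum(probs) / len(probs)

    return sorted(images, key=lambda i: score(images[i]), reverse=True)

# Hypothetical topics: 0 = big cats, 1 = Mac OS X; this user mostly likes topic 0.
p_tz = [{"tiger": 0.3, "cat": 0.4, "kitten": 0.3},
        {"tiger": 0.3, "mac": 0.4, "osx": 0.3}]
images = {"img_mac": ["tiger", "osx"], "img_cat": ["tiger", "cat"]}
print(rank_images(images, p_tz, [0.9, 0.1]))  # ['img_cat', 'img_mac']
```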

Summary & future work § Improve the results of image search for an individual user, as long as the user has expressed interest in the topic of the search § Future work • Lots of other metadata to exploit − Favorites, groups, image titles and descriptions • Discover relevant synonyms to expand search • Topics that are new to the user? − Exploit collective knowledge to find communities of interest − Identify authorities within those communities

discovery / personalization / Recommendation / dynamics of collaboration (with Dipsy Kapoor)

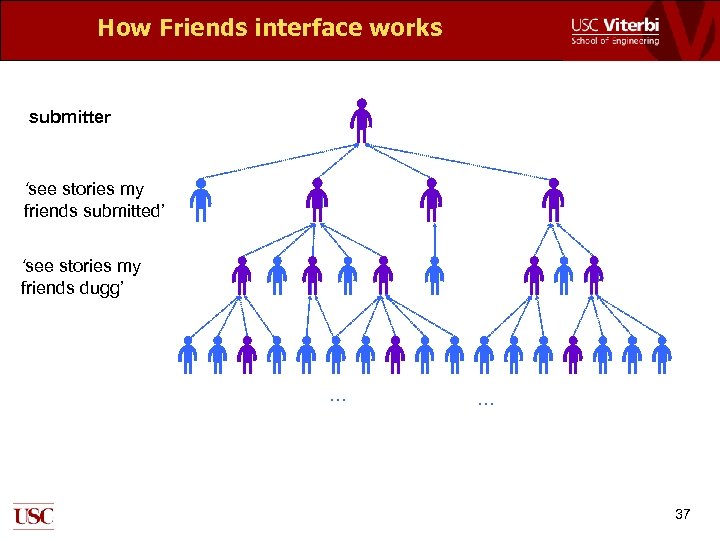

Social News Aggregation on Digg § Users submit stories § Users vote on (digg) stories • Stories are selected for promotion to the front page based on the votes they receive • A collaborative front page emerges from the opinions of many users, not a few editors § Users create social networks by adding others as friends • The Friends Interface makes it easy to track friends’ activities − Stories friends submitted − Stories friends dugg (voted on)

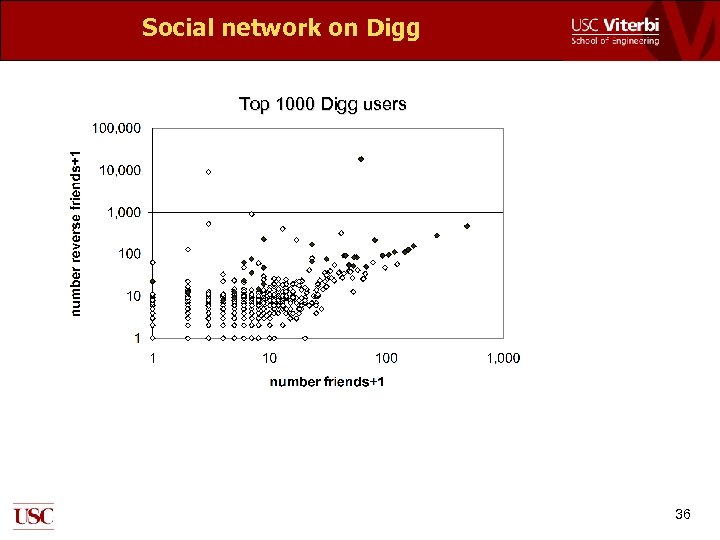

Top users § Digg ranks users based on how many of their stories were promoted to the front page − The user with the most stories is ranked #1, … § Top 1000 users data • Collected by scraping Digg … now available through the API • Usage statistics − User rank − How many stories the user submitted, dugg, and commented on • Social networks − Friends: outgoing links, A → B := B is a friend of A − Reverse friends: incoming links, A → B := A is a reverse friend of B
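The two link directions can be kept as a pair of adjacency maps built from the same edge list. This sketch assumes the scraped data arrive as (A, B) pairs meaning "B is a friend of A"; that edge format is hypothetical.

```python
from collections import defaultdict

def build_networks(edges):
    """edges: iterable of (a, b) meaning "B is a friend of A" (a link A -> B).

    Returns (friends, reverse_friends): friends[a] holds a's outgoing links;
    reverse_friends[b] holds the users who list b as a friend (incoming links).
    """
    friends = defaultdict(set)
    reverse_friends = defaultdict(set)
    for a, b in edges:
        friends[a].add(b)
        reverse_friends[b].add(a)
    return friends, reverse_friends

friends, fans = build_networks([("A", "B"), ("C", "B"), ("B", "A")])
print(sorted(fans["B"]))  # ['A', 'C']
```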

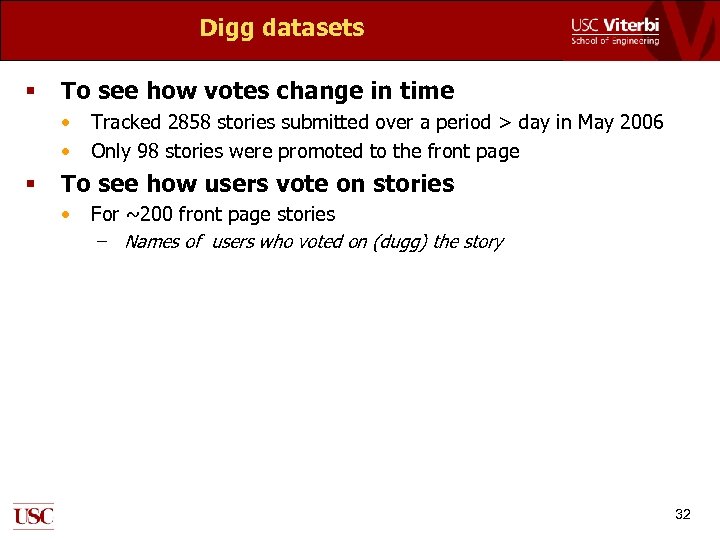

Digg datasets § To see how votes change over time • Tracked 2858 stories submitted over a period of more than a day in May 2006 • Only 98 stories were promoted to the front page § To see how users vote on stories • For ~200 front page stories − Names of users who voted on (dugg) the story

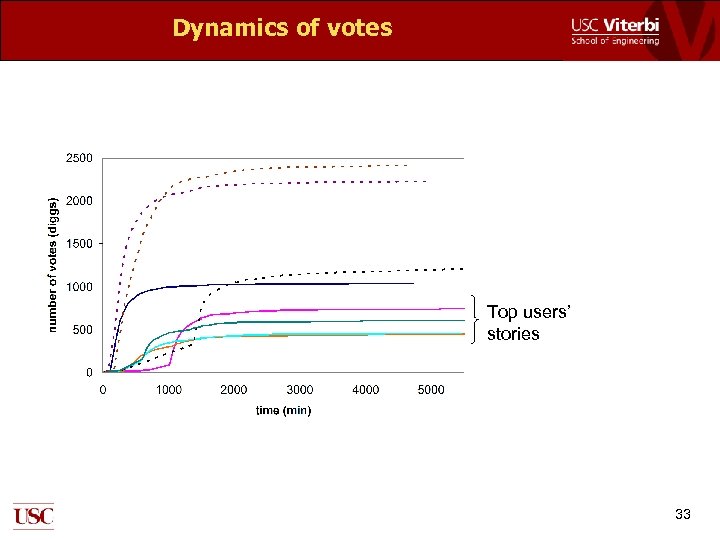

Dynamics of votes (chart: top users’ stories)

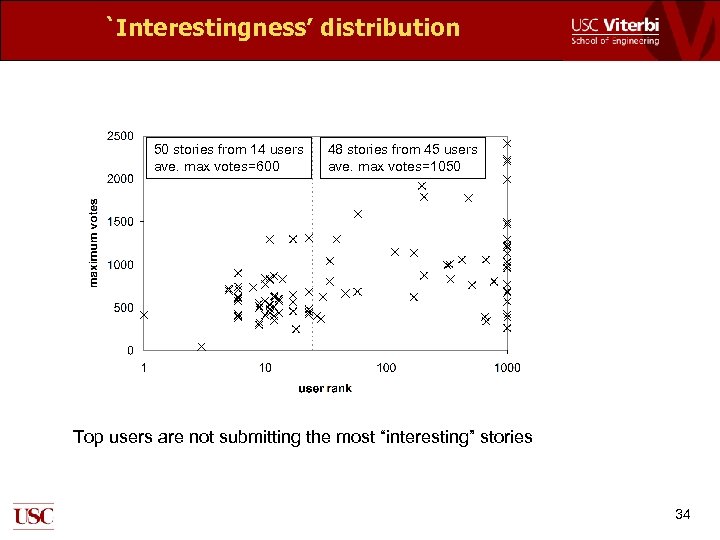

“Interestingness” distribution (chart panels: 50 stories from 14 users, average max votes = 600; 48 stories from 45 users, average max votes = 1050). Top users are not submitting the most “interesting” stories

Social filtering as recommendation: Social filtering explains why top users are so successful § Users express their preferences by creating social networks § They use these networks – through the Friends Interface – to find new stories to read • Claim 1: Users digg stories their friends submit • Claim 2: Users digg stories their friends digg

Social network on Digg (top 1000 Digg users)

How the Friends interface works (diagram: submitter; ‘see stories my friends submitted’; ‘see stories my friends dugg’)
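The two Friends-interface views can be expressed as simple queries over who submitted and who dugg what. The data layout (a dict of friends, a story-to-submitter map, a time-ordered list of diggs) is hypothetical; Digg's internal implementation is not described in the talk.

```python
def friends_interface(user, friends, submissions, diggs):
    """Return the two Friends-interface views for `user`.

    friends: dict user -> set of friends; submissions: dict story -> submitter;
    diggs: list of (voter, story) pairs in time order.
    """
    mine = friends.get(user, set())
    # 'see stories my friends submitted'
    submitted = [s for s, submitter in submissions.items() if submitter in mine]
    # 'see stories my friends dugg', each story listed once, in digg order
    dugg = []
    for voter, story in diggs:
        if voter in mine and story not in dugg:
            dugg.append(story)
    return submitted, dugg

friends = {"me": {"a", "b"}}
submissions = {"s1": "a", "s2": "z", "s3": "b"}
diggs = [("a", "s2"), ("z", "s1"), ("b", "s2"), ("b", "s4")]
print(friends_interface("me", friends, submissions, diggs))  # (['s1', 's3'], ['s2', 's4'])
```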

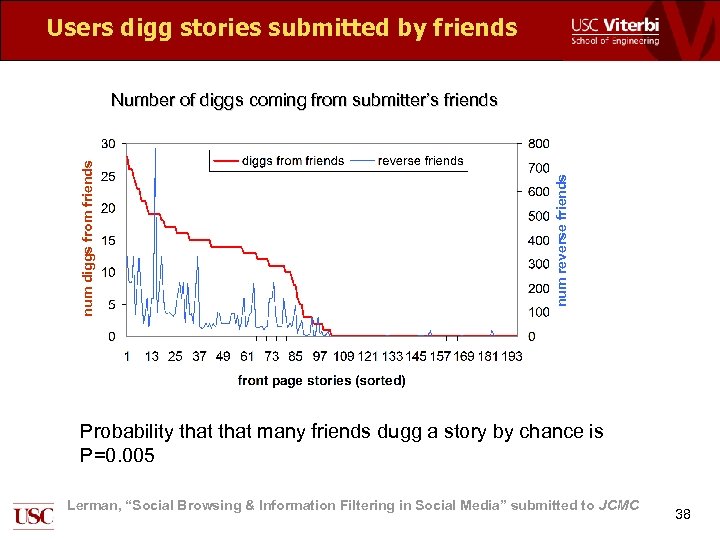

Users digg stories submitted by friends (chart axes: number of reverse friends; number of diggs from friends). Number of diggs coming from the submitter’s friends: the probability that so many friends dugg a story by chance is P=0.005. Lerman, “Social Browsing & Information Filtering in Social Media,” submitted to JCMC
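Counting diggs that come from the submitter's reverse friends (fans) is straightforward; judging whether that count could arise by chance needs a null model. The slide reports P=0.005 without stating how it was computed, so the hypergeometric tail below (voters drawn uniformly at random from a fixed pool of users) is one assumed null model, not necessarily the one used in the paper.

```python
from math import comb

def diggs_from_fans(voters, submitter, reverse_friends):
    """Count how many of a story's voters list the submitter as a friend."""
    fans = reverse_friends.get(submitter, set())
    return sum(1 for v in voters if v in fans)

def chance_probability(n_users, n_fans, n_voters, k):
    """P(at least k of n_voters are fans) if voters were drawn uniformly at
    random without replacement from n_users users, n_fans of them fans
    (upper tail of the hypergeometric distribution)."""
    total = comb(n_users, n_voters)
    tail = sum(comb(n_fans, i) * comb(n_users - n_fans, n_voters - i)
               for i in range(k, min(n_fans, n_voters) + 1))
    return tail / total

print(diggs_from_fans(["a", "b", "c"], "s", {"s": {"a", "c"}}))  # 2
```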

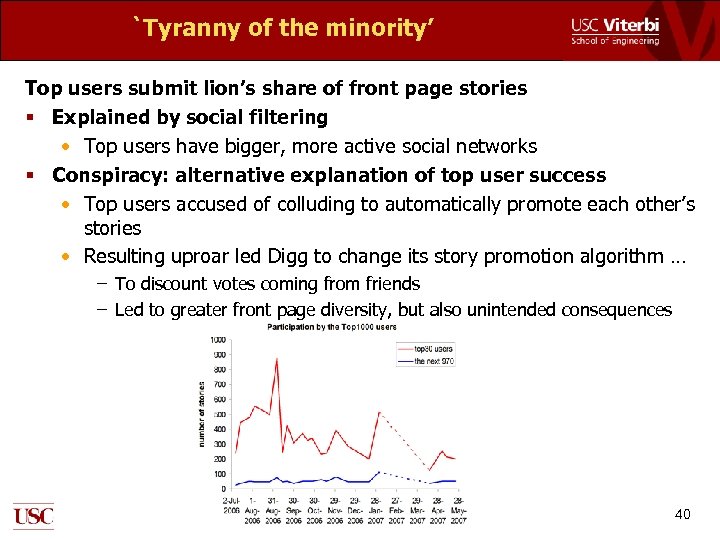

“Tyranny of the minority”: Top users submit the lion’s share of front page stories § Explained by social filtering • Top users have bigger, more active social networks § Conspiracy: an alternative explanation of top users’ success • Top users were accused of colluding to automatically promote each other’s stories • The resulting uproar led Digg to change its story promotion algorithm… − To discount votes coming from friends − This led to greater front page diversity, but also to unintended consequences
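The idea of discounting votes that come from friends can be sketched as a weighted vote count compared against a promotion threshold. Digg's actual promotion algorithm is not described in the talk; the single `friend_weight` discount and the fixed threshold below are hypothetical.

```python
def promotion_score(voters, submitter, reverse_friends, friend_weight=0.5):
    """Weighted vote count in which votes from the submitter's fans count less.

    Each voter is counted once; friend_weight is a hypothetical discount.
    """
    fans = reverse_friends.get(submitter, set())
    return sum(friend_weight if v in fans else 1.0 for v in set(voters))

def is_promoted(voters, submitter, reverse_friends, threshold, friend_weight=0.5):
    """Promote once the discounted vote count reaches the threshold."""
    return promotion_score(voters, submitter, reverse_friends, friend_weight) >= threshold

fans = {"s": {"a", "b"}}
print(promotion_score(["a", "b", "c", "d"], "s", fans))  # 3.0
```

Setting `friend_weight=1.0` recovers a plain vote count, which makes it easy to compare the two policies on the same vote logs.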

Design of collaborative rating systems § Designing a collaborative rating system, which exploits the emergent behavior of many independent evaluators, is difficult • Small changes can have big consequences • Few tools to predict system behavior − Execution − Simulation § Can we explore the effects of promotion algorithms before they are implemented?

discovery / personalization / recommendation / Dynamics of collaboration (with Dipsy Kapoor)

Analysis as a design tool: Mathematical analysis can help us understand and predict the emergent behavior of collaborative information systems • Study the choice of the promotion algorithm before it is implemented • Effect of design choices on system behavior − Story timeliness, interestingness, user participation, incentives to join social networks, etc.

Dynamics of collaborative rating: A story is characterized by § Interestingness r • The probability that a story will receive a vote when seen by a user § Visibility • Visibility on the upcoming stories page − Decreases with time as new stories are submitted • Visibility on the front page − Decreases with time as new stories are promoted • Visibility through the Friends interface − Stories friends submitted − Stories friends dugg (voted on)

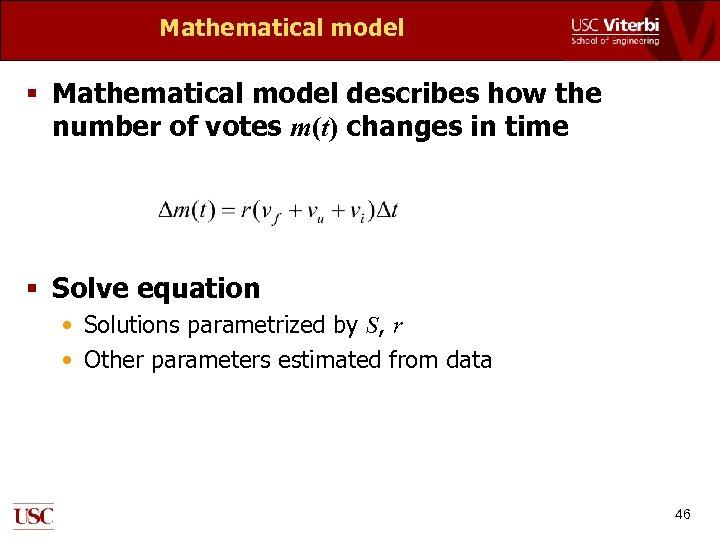

Mathematical model § The model describes how the number of votes m(t) changes over time § Solve the equation • Solutions are parametrized by S and r • Other parameters are estimated from data
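The slide's equation appears only as an image, so the sketch below integrates a toy rate equation of the same general shape: votes arrive at rate r times the story's current visibility, summed over the upcoming page, the front page (after promotion), and the Friends interface. Every functional form and constant here (exponential decay, view rates, a friend term proportional to S, the promotion threshold) is an assumption for illustration, not the published model.

```python
import math

def simulate_votes(r, S, hours=48.0, dt=0.01, threshold=40.0,
                   upcoming_views=50.0, front_views=500.0,
                   tau_upcoming=1.0, tau_front=10.0, fan_views=0.1):
    """Forward-Euler integration of a toy model dm/dt = r * visibility(t).

    Assumed visibility terms (views per hour, all illustrative):
      upcoming page: upcoming_views * exp(-t / tau_upcoming), before promotion
      front page:    front_views * exp(-(t - t_p) / tau_front), after promotion at t_p
      Friends interface: fan_views * S * exp(-t / tau_upcoming), from S fans
    Returns (expected votes after `hours`, promotion time or None).
    """
    m, t, t_promoted = 0.0, 0.0, None
    while t < hours:
        if t_promoted is None:
            visibility = upcoming_views * math.exp(-t / tau_upcoming)
        else:
            visibility = front_views * math.exp(-(t - t_promoted) / tau_front)
        visibility += fan_views * S * math.exp(-t / tau_upcoming)
        m += r * visibility * dt      # each view yields a vote with probability r
        t += dt
        if t_promoted is None and m >= threshold:
            t_promoted = t            # promoted once the vote threshold is reached
    return m, t_promoted

for S in (0, 400, 1000):
    votes, t_p = simulate_votes(0.5, S)
    print(S, round(votes), t_p)
```

With these made-up constants, a story with r = 0.5 and no fans never reaches the threshold, while larger S gets it promoted sooner; that qualitative trade-off between r and S is what the parameter-space charts explore.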

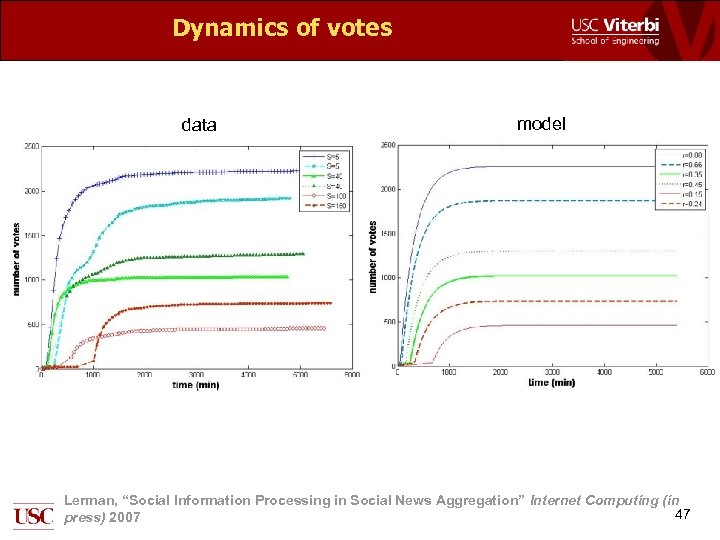

Dynamics of votes (chart: data vs. model). Lerman, “Social Information Processing in Social News Aggregation,” Internet Computing (in press), 2007

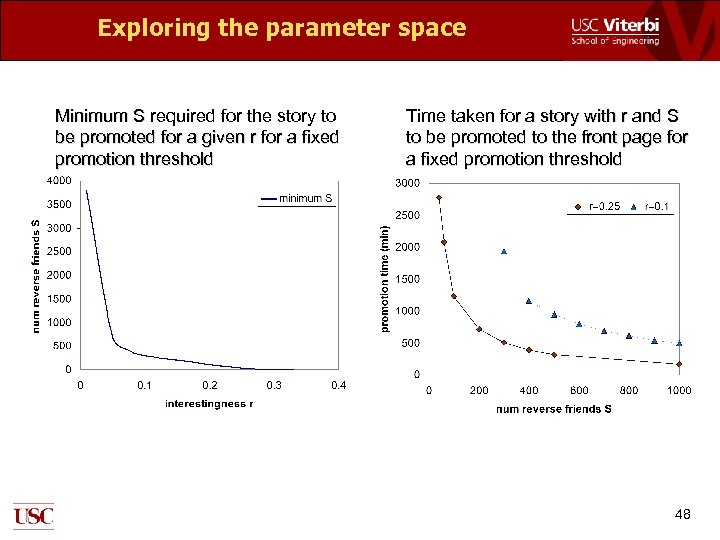

Exploring the parameter space (charts) • Minimum S required for a story with a given r to be promoted, for a fixed promotion threshold • Time taken for a story with given r and S to be promoted to the front page, for a fixed promotion threshold

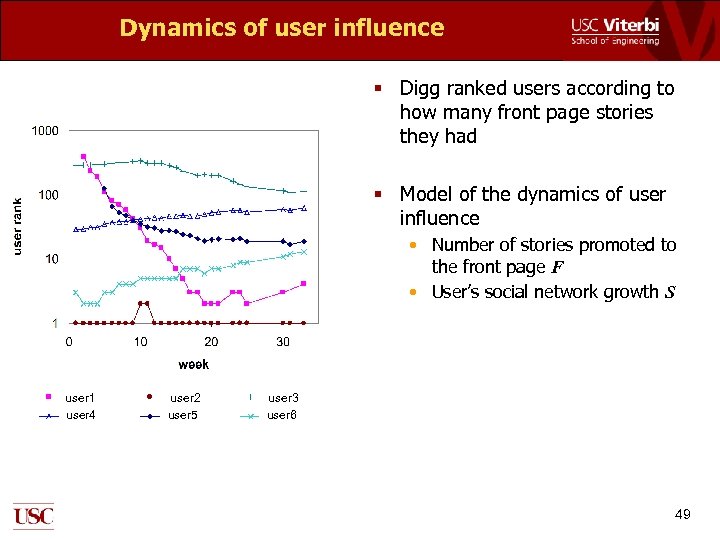

Dynamics of user influence § Digg ranked users according to how many front page stories they had § Model of the dynamics of user influence • Number of stories promoted to the front page F • Growth of the user’s social network S (charts: users 1-6)

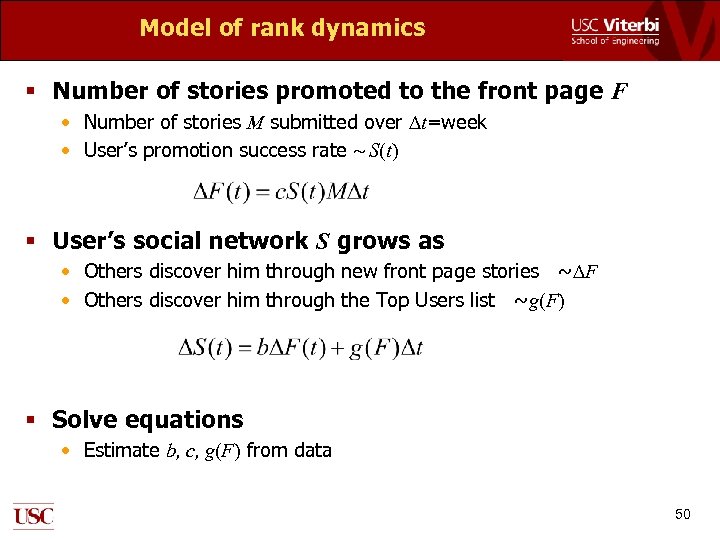

Model of rank dynamics § Number of stories promoted to the front page F • Number of stories M submitted over Δt = one week • User’s promotion success rate ~ S(t) § The user’s social network S grows as • Others discover him through new front page stories ~ΔF • Others discover him through the Top Users list ~g(F) § Solve the equations • Estimate b, c, g(F) from data
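The two growth rules can be iterated week by week. The functional forms below (a success rate c·S capped at 1, so that ΔF = M·c·S, and ΔS = b·ΔF + g(F) with a user-supplied g) and all parameter values are hypothetical placeholders for the quantities the talk estimates from data.

```python
def simulate_rank_dynamics(S0, M, b, c, g, weeks):
    """Weekly iteration of a toy version of the rank-dynamics model.

    Assumed update rules (illustrative only):
      dF = M * min(1, c * S)   front-page stories this week; success rate ~ S
      dS = b * dF + g(F)       new fans via front-page exposure and the Top Users list
    Returns a list of (F, S) after each week.
    """
    F, S = 0.0, float(S0)
    history = []
    for _ in range(weeks):
        dF = M * min(1.0, c * S)
        dS = b * dF + g(F)       # g uses the cumulative F before this week's update
        F += dF
        S += dS
        history.append((F, S))
    return history

# Hypothetical: g rewards users once they have 5+ front-page stories (Top Users list).
print(simulate_rank_dynamics(S0=10, M=10, b=2, c=0.01,
                             g=lambda F: 3.0 if F >= 5 else 0.0, weeks=4))
```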

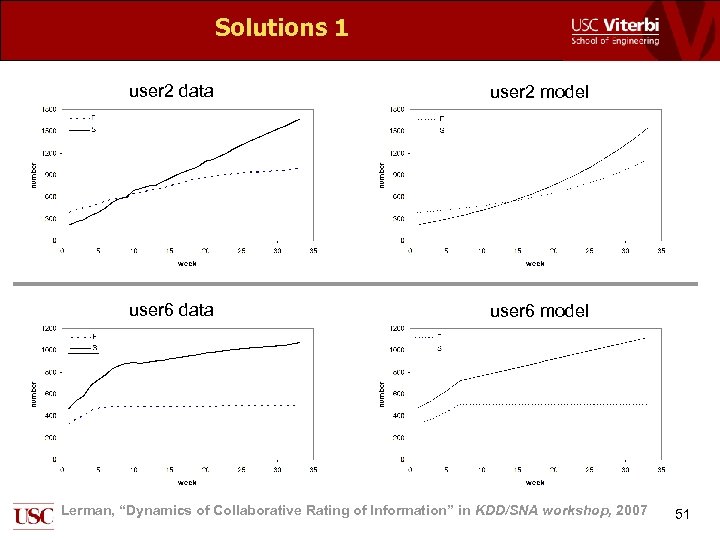

Solutions 1 (charts: data vs. model for users 2 and 6). Lerman, “Dynamics of Collaborative Rating of Information,” KDD/SNA workshop, 2007

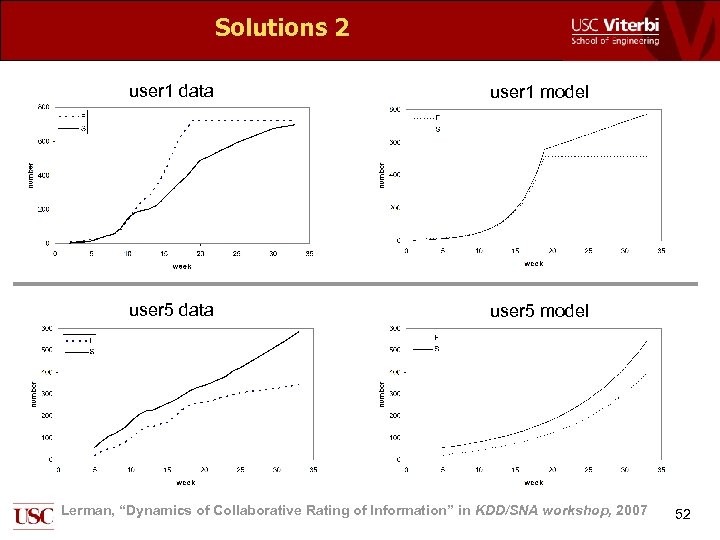

Solutions 2 (charts: data vs. model for users 1 and 5). Lerman, “Dynamics of Collaborative Rating of Information,” KDD/SNA workshop, 2007

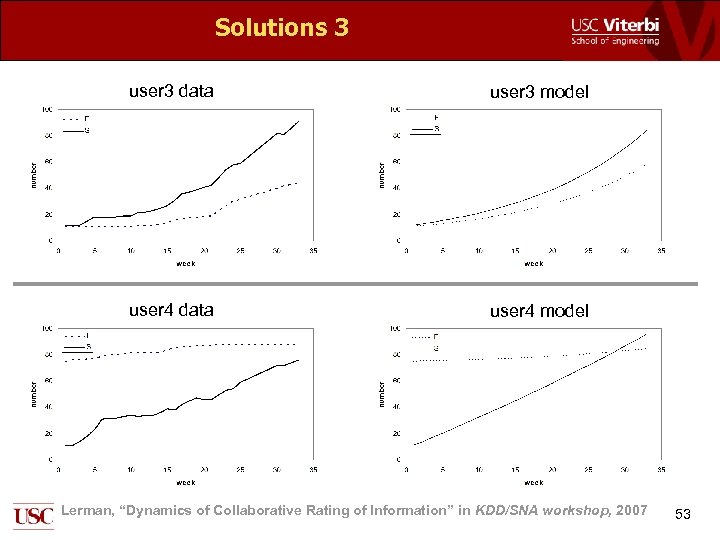

Solutions 3 (charts: data vs. model for users 3 and 4). Lerman, “Dynamics of Collaborative Rating of Information,” KDD/SNA workshop, 2007

Previous work: Technologies that exploit the independent activities of many users for information discovery and recommendation § Collaborative filtering [e.g., GroupLens project, 1997-present] • Users express opinions by rating many products • The system finds users with similar opinions and recommends products liked by those users • Product recommendation used by Amazon & Netflix − Users are reluctant to rate products § Social navigation [Dieberger et al., 2000] • Exposes the activity of others to help guide users to quality information sources − “N users found X helpful” − Best seller lists, “what’s popular” pages, etc.

Conclusions § In their everyday use of Social Web sites, users create large quantities of data that express their knowledge and opinions • Content − Articles, media content, opinion pieces, etc. • Metadata − Tags, ratings, discussion, social networks • Links between users, content, and metadata § The Social Web enables new problem-solving approaches • Social Information Processing − Use the knowledge, opinions, and work of others for one’s own information needs • Collective problem solving − Efficient, robust solutions beyond the scope of individual capabilities

Upcoming events § Social Information Processing Symposium • When: March 2008 • Where: AAAI Spring Symposium series @ Stanford • Organizers: K. Lerman, B. Huberman (HP Labs), D. Gutelius (SRI), S. Merugu (Yahoo) • http://www.isi.edu/~lerman/sss07/

The future of the Social Web 2 Instead of connecting data, the Web connects people data § New applications • Collaboration tools − Collective intelligence: a large group of connected individuals acts more intelligently than individuals on their own • The personalization of everything − The more the system learns about me, the better it should filter • Discovery, not search − What papers do I need to read to know about the research on social networks? • Identifying emerging communities − Community-based vocabulary − Authoritative sources within the community

The future of the Social Web: New challenge for AI: instead of ever cleverer algorithms, harness the Collective Intelligence algorithms § Semantic Web vision [Berners-Lee & Hendler in Scientific American, 2001] • Web content annotated with machine-readable metadata (a formal classification system) to aid automatic information integration • Still unrealized in 2007 − Too complicated: requires specialized training to be used effectively − Costly and time-consuming to produce − Variety of specialized ontologies: ontology alignment problem § Folksonomy • “user generated taxonomy used to categorize and retrieve web content using open-ended labels called tags.” [source: Wikipedia] − Bottom-up: decentralized, emergent, scalable − Dynamic: adapts to changing needs and priorities − Noisy: need tools to extract meaning from data
