d29ff46b5db1529e67396b8c281597b2.ppt

- Number of slides: 25

The Social Science Research Unit (SSRU)
- Stephanie Kane, Project Manager
- Barbara Foltz, Unit Manager
- J. D. Wulfhorst, Director

Evolution of the SSRU
- History as a “service unit”
- Started from the ground up (1989–1999)
- Era of re-development (2000–2005)
- Modernization of technology
- Trained professional staff and management
- Expertise in multiple methodologies
- Self-sustaining for over 80% of our operations
- New tier of projects beginning in 2006

SSRU Outcomes & Benefits
- Scientifically rigorous survey methodology intended to yield high response rates and statistically valid results
- SSRU assists clients in:
  - study design, sampling methodologies, and writing survey instruments;
  - conducting all or some components of studies; and
  - analyzing and summarizing the results of projects

SSRU Facilities & Laboratory
- 1,200 sq ft survey laboratory
- Phone bank with 14 CATI (computer-assisted telephone interviewing) stations using SPSS Data Entry software
- Automated voice recording system with a dedicated server
- Data analysis using the SPSS and SAS statistical software packages
- Interview staff are primarily University of Idaho (UI) students

Project Methodologies
- Telephone and mail surveys
  - Dillman method for survey research
  - Random sample of households
  - Typically 50%–65% response rates
- Person-to-person surveys
- Internet surveys
- Focus groups: qualitative research
  - Also used in conjunction with mail or phone surveys for mixed methodologies

What is the Dillman Method?
- A scientifically tested method for survey research
- Involves an introductory hand-signed letter (mail) or postcard (phone) with real stamps
- Multiple follow-ups: several mailings (mail) or phone calls (phone)
- Can involve incentives

Terminology
- Population: the entire group the researcher is interested in learning something about (e.g., Idaho residents)
- Sample: the smaller group of units that are actually measured
- Parameter: the summary measure for an entire population (the “true value”)
- Statistic: the summary measure computed from sample data (the estimate)
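A minimal Python sketch of the four terms, using a synthetic population (hypothetical data standing in for something like the ages of Idaho residents). The population mean is the parameter; the mean of a random sample drawn from it is the statistic that estimates it.

```python
import random
import statistics

rng = random.Random(42)

# Population: hypothetical ages for 10,000 residents (synthetic, for illustration only)
population = [rng.randint(18, 90) for _ in range(10_000)]

# Parameter: the summary measure over the entire population (the "true value")
parameter = statistics.mean(population)

# Sample: the smaller group of units actually measured (a simple random sample of 400)
sample = rng.sample(population, k=400)

# Statistic: the same summary measure computed from the sample (the estimate)
statistic = statistics.mean(sample)

print(f"parameter (population mean): {parameter:.2f}")
print(f"statistic (sample mean):     {statistic:.2f}")
print(f"difference (sampling error): {statistic - parameter:+.2f}")
```

Rerunning with a different seed changes the statistic but not the parameter, which is exactly the sampling error discussed later in these slides.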

Types of Studies
- Randomized experiments
  - Subjects are randomly assigned to treatments
  - Typical in medical, biological, chemical, engineering, and physical fields
- Observational studies
  - Subjects are randomly selected from the population
  - Typical in social science and marketing research
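The distinction above is where the randomness enters. The hypothetical helpers below (the names are illustrative, not from any library) contrast random assignment of subjects to treatment and control with random selection of units from a population, where nothing is assigned.

```python
import random

def randomly_assign(subjects, seed=None):
    """Randomized experiment: shuffle the subjects, then split them into two groups."""
    rng = random.Random(seed)
    shuffled = list(subjects)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"treatment": shuffled[:half], "control": shuffled[half:]}

def randomly_select(population, n, seed=None):
    """Observational study (e.g., a survey): draw n units at random; nothing is assigned."""
    rng = random.Random(seed)
    return rng.sample(list(population), n)

groups = randomly_assign(range(20), seed=1)
respondents = randomly_select(range(1000), 25, seed=1)
print(len(groups["treatment"]), len(groups["control"]), len(respondents))
```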

Types of Sampling
- Simple random sample: every unit in the population has an equal chance of being in the sample (more precisely, every possible sample of the same size is equally likely)
- Stratified sample: the population is separated into non-overlapping groups (strata), and a simple random sample is drawn from each group
- Cluster sample: each sampling unit is a cluster (group) of elements, such as a city block of households

Simple Random Sample
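A minimal sketch of drawing a simple random sample from a sampling frame, assuming the frame fits in memory as a list (the household IDs are hypothetical). Python's `random.sample` draws without replacement, giving every subset of size n the same chance of selection.

```python
import random

def simple_random_sample(frame, n, seed=None):
    """Draw n units without replacement from the sampling frame."""
    if n > len(frame):
        raise ValueError("sample size exceeds frame size")
    return random.Random(seed).sample(list(frame), n)

# Hypothetical sampling frame of 1,000 households
households = [f"HH-{i:04d}" for i in range(1, 1001)]
chosen = simple_random_sample(households, 10, seed=7)
print(chosen)
```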

Stratified Random Sampling
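A sketch of stratified random sampling with proportional allocation, which is one common allocation rule; the slides do not say which rule SSRU uses. Units are grouped into non-overlapping strata and a simple random sample is drawn within each. The region names and stratum sizes are hypothetical.

```python
import random
from collections import defaultdict

def stratified_sample(units, stratum_of, n, seed=None):
    """Proportional-allocation stratified random sample.

    units: list of sampling units
    stratum_of: function mapping a unit to its (non-overlapping) stratum
    n: total sample size, split across strata in proportion to stratum size
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for unit in units:
        strata[stratum_of(unit)].append(unit)
    total = len(units)
    sample = {}
    for name, members in strata.items():
        # Proportional share, rounded; assumption: take at least 1 unit per stratum
        n_h = min(len(members), max(1, round(n * len(members) / total)))
        sample[name] = rng.sample(members, n_h)  # simple random sample within the stratum
    return sample

# Hypothetical frame: residents tagged by region
units = ([("North", i) for i in range(200)]
         + [("Southwest", i) for i in range(500)]
         + [("East", i) for i in range(300)])
sample = stratified_sample(units, lambda u: u[0], n=50, seed=3)
print({name: len(chosen) for name, chosen in sample.items()})
# {'North': 10, 'Southwest': 25, 'East': 15}
```

Because each stratum's share is rounded separately, the stratum sizes can sum to slightly more or less than n for other inputs.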

Cluster Sampling
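A sketch of one-stage cluster sampling, assuming the frame is a mapping from each cluster (here a hypothetical city block) to its elements (households). A simple random sample of clusters is drawn and every element of each chosen cluster is included; two-stage designs, which subsample within clusters, are not shown.

```python
import random

def cluster_sample(clusters, m, seed=None):
    """Randomly select m clusters, then keep every element in each selected cluster."""
    rng = random.Random(seed)
    chosen = rng.sample(sorted(clusters), m)  # simple random sample of cluster labels
    return {label: list(clusters[label]) for label in chosen}

# Hypothetical frame: 30 city blocks with 8 households each
clusters = {f"block-{b}": [f"block-{b}/hh-{h}" for h in range(8)] for b in range(30)}
selected = cluster_sample(clusters, 5, seed=11)
print(sorted(selected))
```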

Sources of Error in Survey Research
- Errors of non-observation
  1. Sampling error
  2. Coverage bias
  3. Non-response bias
- Errors of observation (measurement error)

Errors of Non-Observation
- Sampling error: deviation of a sample statistic from the population parameter. It can be reduced by good sampling and an appropriate sample size, and it can be quantified with confidence intervals.
- Coverage bias: the selected sample is not representative of the targeted population. It can be reduced by good sampling.
- Non-response bias: error resulting from members of the sample not responding. It can be reduced or controlled for by follow-ups and by sub-sampling non-respondents.
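Sampling error can be quantified with a confidence interval. A minimal sketch for a proportion, assuming a simple random sample and the normal-approximation (Wald) interval; it ignores the finite population correction and any design effect from stratification or clustering. The counts in the usage line are hypothetical.

```python
import math

def proportion_ci(successes, n, z=1.96):
    """Normal-approximation (Wald) confidence interval for a population proportion.

    z = 1.96 gives roughly 95% confidence. Assumes a simple random sample and that
    n * p_hat and n * (1 - p_hat) are both reasonably large.
    """
    p_hat = successes / n
    standard_error = math.sqrt(p_hat * (1 - p_hat) / n)
    margin = z * standard_error
    # Clip to [0, 1] because a proportion cannot fall outside that range
    return p_hat, max(0.0, p_hat - margin), min(1.0, p_hat + margin)

# Hypothetical result: 240 of 600 respondents answered "yes"
estimate, lower, upper = proportion_ci(240, 600)
print(f"estimate {estimate:.3f}, 95% CI [{lower:.3f}, {upper:.3f}]")
```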

Sampling Error
- Sampling error is a result of not taking a complete census (which is often impractical or impossible)
- Statistics are used to place bounds on the error: we can be reasonably confident that the true population parameter falls within a certain range
- National studies usually have a margin of error of about ±3 percentage points
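The ±3 figure can be reproduced with the usual margin-of-error formula for a proportion, MOE = z * sqrt(p(1 - p) / n). A sketch assuming simple random sampling, 95% confidence (z = 1.96), the conservative choice p = 0.5, and no finite population correction; solving for n gives about 1,068 completed interviews.

```python
import math

def margin_of_error(n, p=0.5, z=1.96):
    """Approximate margin of error for a proportion estimated from a simple random sample."""
    return z * math.sqrt(p * (1 - p) / n)

def required_sample_size(moe, p=0.5, z=1.96):
    """Smallest n whose margin of error is at most `moe` (p = 0.5 is the most conservative)."""
    return math.ceil(z ** 2 * p * (1 - p) / moe ** 2)

n = required_sample_size(0.03)
print(n, round(margin_of_error(n), 4))
```

Clustered or weighted designs generally need a larger n than this formula suggests.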

Coverage Bias
- The sample does not adequately reflect the population
- Often a result of an incomplete sampling frame (sometimes unavoidable)
- Equine survey case study

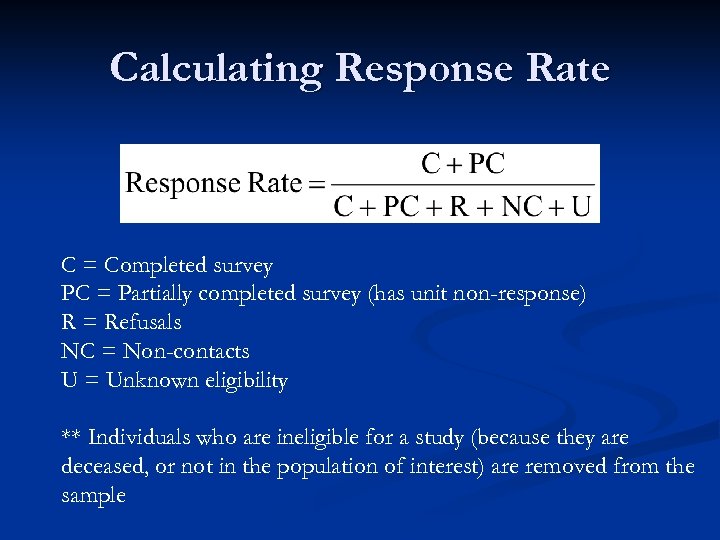

Calculating Response Rate
- C = completed surveys
- PC = partially completed surveys (item non-response)
- R = refusals
- NC = non-contacts
- U = unknown eligibility

Note: Individuals who are ineligible for a study (e.g., because they are deceased or are not in the population of interest) are removed from the sample.
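The slide's formula is not reproduced in this text, so the sketch below is an assumption modeled on the AAPOR Standard Definitions (RR1 to RR4), mapped onto the codes above; AAPOR's separate "other" category has no code on this slide and is omitted. The rate is completes only, or completes plus partials, divided by all eligible and possibly eligible cases. The parameter `e` (estimated share of unknown-eligibility cases that are actually eligible) and the example counts are hypothetical.

```python
def response_rates(C, PC, R, NC, U, e=1.0):
    """Response rates in the style of the AAPOR Standard Definitions (an assumption,
    not necessarily the SSRU's exact formula). Ineligible cases must already be removed.

    C  = completed surveys          PC = partially completed surveys
    R  = refusals                   NC = non-contacts
    U  = cases of unknown eligibility
    e  = estimated share of the U cases that are actually eligible; e = 1 counts all
         of them as eligible, which gives the lowest (most conservative) rates
    """
    denominator = C + PC + R + NC + e * U
    return {
        "completes_only": C / denominator,                 # AAPOR RR1 when e = 1 (RR3 otherwise)
        "completes_and_partials": (C + PC) / denominator,  # AAPOR RR2 when e = 1 (RR4 otherwise)
    }

# Hypothetical final dispositions for a mail survey
rates = response_rates(C=520, PC=30, R=150, NC=200, U=100)
print(rates)
```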

Non-Response Bias
- Unit non-response
  - Unable to reach respondent
    - Cell-phone use case study
  - Respondent refused survey
- Item non-response
  - Respondent refused a question (usually a sensitive question)

Errors of Observation
- Interviewer effects: intonation, staying on script, gender effects
- Respondent effects: “social desirability” bias, not understanding a question, or not wanting to tell the interviewer they don’t know
- Question effects: question order, question wording, response-option order
- Method of data collection, transcription, or data entry errors

Reducing Survey Error
- Improving coverage
  - Multi-frame studies (e.g., landline and cell phone lists)
  - Multi-mode studies (e.g., phone and mail)
  - Random digit dialing (RDD)
- Improving response
  - Increased number of call-backs or mailings
  - Incentives or rewards
  - Pre-notification postcard

Testing for Non-Response Bias
- Call a subset of the non-respondents and test whether they differ from respondents on key questions
- Compare sample demographics to census (population) demographics
- Compare “hard to reach” respondents to “easy to reach” respondents
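One way to check whether followed-up non-respondents (or "hard to reach" respondents) differ on a yes/no key question is a two-proportion z-test. This is a sketch of that standard test, not necessarily the procedure SSRU uses; the counts in the usage line are hypothetical.

```python
import math

def two_proportion_z_test(x1, n1, x2, n2):
    """Two-sided z-test for a difference between two independent proportions.

    x1 of n1 units in group 1 (e.g., respondents) and x2 of n2 units in group 2
    (e.g., followed-up non-respondents) gave a particular answer to a key question.
    Uses the pooled standard error; assumes reasonably large groups.
    """
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        raise ValueError("every unit in both groups gave the same answer; test undefined")
    z = (p1 - p2) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value

# Hypothetical: 300 of 600 respondents vs. 30 of 80 followed-up non-respondents said "yes"
z, p = two_proportion_z_test(300, 600, 30, 80)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A small p-value suggests the groups differ on that question, which is evidence of non-response bias in the estimate.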

Accounting for Non-Response
- Weighting of observations
- “Hot deck” method: random replacement of item non-response with values from respondents (can be done within strata or demographic groups)
- Maximum likelihood
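A sketch of two of the approaches above: random hot-deck imputation within cells, and post-stratification weights. Post-stratification is only one common weighting scheme; the slides do not specify which one is used. The field names, records, and population shares are hypothetical, and maximum likelihood is not illustrated.

```python
import random
from collections import Counter, defaultdict

def hot_deck_impute(records, item, cell_of, seed=None):
    """Random hot-deck imputation for item non-response.

    Each missing value of `item` is replaced by the value from a randomly chosen
    respondent (donor) in the same imputation cell, e.g., the same stratum or
    demographic group. Records whose cell has no donor are left missing.
    """
    rng = random.Random(seed)
    donors = defaultdict(list)
    for rec in records:
        if rec[item] is not None:
            donors[cell_of(rec)].append(rec[item])
    completed = []
    for rec in records:
        rec = dict(rec)  # copy so the caller's records are not modified
        pool = donors.get(cell_of(rec), [])
        if rec[item] is None and pool:
            rec[item] = rng.choice(pool)
        completed.append(rec)
    return completed

def post_stratification_weights(records, cell_of, population_shares):
    """Weight = (population share of the record's cell) / (sample share of that cell),
    so weighted sample proportions match known figures such as census data."""
    counts = Counter(cell_of(rec) for rec in records)
    n = len(records)
    return [population_shares[cell_of(rec)] / (counts[cell_of(rec)] / n) for rec in records]

# Hypothetical survey records, with one missing income value in each group
records = [
    {"sex": "F", "income": 40}, {"sex": "F", "income": None}, {"sex": "F", "income": 55},
    {"sex": "M", "income": 60}, {"sex": "M", "income": None},
]
completed = hot_deck_impute(records, "income", lambda r: r["sex"], seed=1)
weights = post_stratification_weights(records, lambda r: r["sex"], {"F": 0.5, "M": 0.5})
print(completed)
print(weights)
```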

Reducing Measurement Error
- Well-trained interviewers
- Data checks and proofs
- Pre-testing the survey to test for question bias, order bias, or confusing questions
- Rule of thumb: fewer than 5% of respondents should answer “I don’t know”
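The 5% rule of thumb lends itself to a simple automated check on pre-test data. A minimal sketch, assuming answers are stored per question and that "I don't know" is recorded as a fixed string (both hypothetical conventions):

```python
DONT_KNOW = "I don't know"  # hypothetical code for a "don't know" answer

def dont_know_rates(responses):
    """Share of "I don't know" answers per question.

    responses: dict mapping a question ID to the list of answers it received.
    """
    return {
        q: sum(a == DONT_KNOW for a in answers) / len(answers)
        for q, answers in responses.items()
        if answers
    }

def flag_questions(responses, threshold=0.05):
    """Question IDs that break the rule of thumb (5% or more answered "I don't know")."""
    return sorted(q for q, rate in dont_know_rates(responses).items() if rate >= threshold)

# Hypothetical pre-test results for three questions (40 answers each)
pretest = {
    "Q1": ["yes"] * 39 + [DONT_KNOW],      # 2.5% don't know
    "Q2": ["no"] * 40,                     # 0%
    "Q3": ["yes"] * 34 + [DONT_KNOW] * 6,  # 15%
}
print(flag_questions(pretest))  # ['Q3']
```

Flagged questions are candidates for rewording before the survey goes into the field.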

Project Examples
- Crime Victimization (Idaho State Police)
- Equine Census in Idaho (ID Horse Council/Horse Board)
- Youth 4-H (Extension)
- Green Industry of Idaho (INLA, economic impact study)
- Food Thermometer Use (UI – Food & Consumer Science)
- School Facilities (Moscow School District)
- Farm Experiential Learning (UI/WSU Extension & Rural Roots, Inc.)
- Students with Disabilities (UI – Human Resource Services)
- Extension critical issues: dairy wastes, forestry management training, mint production

Suggested Resources
- Utts, J. M., and R. F. Heckard. 2004. Mind on Statistics. Thomson Learning, Inc., Belmont, CA.
- Dillman, D. A. 1978. Mail and Telephone Surveys: The Total Design Method. John Wiley & Sons, Inc., New York, NY.
- Scheaffer, R. L., W. Mendenhall III, and R. L. Ott. 1996. Elementary Survey Sampling, 5th ed. Duxbury Press, New York, NY.
- Levy, P. S., and S. Lemeshow. 1991. Sampling of Populations: Methods and Applications. John Wiley & Sons, Inc., New York, NY.
