c3e1e8ce48a02c21376dfb9732d0c056.ppt

- Количество слайдов: 36

The Skinny on Fat SAS Data Set Processing By Gopal Rajagopal Phila. Sug 2005 Nov 9 th 2005 A quote from a Philly celebrity Benjamin Franklin … ‘Our Constitution is in actual operation; everything appears to promise that it will last; but in this world nothing is certain but death and taxes …. and more data ’ 1

The Skinny on Fat SAS Data Set Processing By Gopal Rajagopal Phila. Sug 2005 Nov 9 th 2005 A quote from a Philly celebrity Benjamin Franklin … ‘Our Constitution is in actual operation; everything appears to promise that it will last; but in this world nothing is certain but death and taxes …. and more data ’ 1

What can I do about routine processing of FAT SAS data sets ? Ask for a computer with more/faster … Memory, Storage, CPU’s, SAS V 9 SPDY server, bigger size monitor (!) etc. OR Start coding without explicitly taking scaling issues into consideration OR Use a ‘concentric ring’ approach i. e. act locally while thinking globally … l l Focus on local SAS program and it’s I/O (the common culprit) If needed, consider global and more ‘expensive’ solutions. 2

What can I do about routine processing of FAT SAS data sets ? Ask for a computer with more/faster … Memory, Storage, CPU’s, SAS V 9 SPDY server, bigger size monitor (!) etc. OR Start coding without explicitly taking scaling issues into consideration OR Use a ‘concentric ring’ approach i. e. act locally while thinking globally … l l Focus on local SAS program and it’s I/O (the common culprit) If needed, consider global and more ‘expensive’ solutions. 2

Agenda ¡Clarify terminology ¡Process Management SOP/Tips for Large data set manipulation ¡Discussion of some coding techniques/approaches ¡QC for Large data set processing ¡Questions 3

Agenda ¡Clarify terminology ¡Process Management SOP/Tips for Large data set manipulation ¡Discussion of some coding techniques/approaches ¡QC for Large data set processing ¡Questions 3

Clarify Terminology Who are you calling ‘FAT’ ? !. ¡ ¡ ¡ FAT is a relative term. A ‘FAT’ data set can have ‘too’ many variables or rows or both. Any data set is ‘FAT’, if you have ‘trouble’ processing it. ‘Trouble’ could mean … 1. 2. 3. 4. ¡ Not ‘enough’ Disk storage, RAM, CPU. Program takes too long to run Ever changing user demands and associated development/QC burden Programmer’s sanity/fortitude related stress Even for smaller data sets, some of the ideas in this presentation may be good SOP. 4

Clarify Terminology Who are you calling ‘FAT’ ? !. ¡ ¡ ¡ FAT is a relative term. A ‘FAT’ data set can have ‘too’ many variables or rows or both. Any data set is ‘FAT’, if you have ‘trouble’ processing it. ‘Trouble’ could mean … 1. 2. 3. 4. ¡ Not ‘enough’ Disk storage, RAM, CPU. Program takes too long to run Ever changing user demands and associated development/QC burden Programmer’s sanity/fortitude related stress Even for smaller data sets, some of the ideas in this presentation may be good SOP. 4

Clarify Terminology What is processing a ‘FAT’ SAS data set ? ¡ ¡ The focus will be on ‘typical’ data step operations (using SAS 8. 2) We will discuss SOPs and profile a few techniques … l l l SOP/Tips to streamline process management Reduce overhead(s) such as Disk space, elapsed time Help make sub-setting of data less onerous Examine alternatives to merging/sorting data Discuss faster ways of frequent searching of data 5

Clarify Terminology What is processing a ‘FAT’ SAS data set ? ¡ ¡ The focus will be on ‘typical’ data step operations (using SAS 8. 2) We will discuss SOPs and profile a few techniques … l l l SOP/Tips to streamline process management Reduce overhead(s) such as Disk space, elapsed time Help make sub-setting of data less onerous Examine alternatives to merging/sorting data Discuss faster ways of frequent searching of data 5

Clarify Terminology ‘Efficient’ Processing … huh ? Efficiency can mean the following positive adjective(s) describe your SAS code … ¡ Quicker than status quo ¡ Smaller than status quo ¡ Better coding i. e. coding is more efficient and streamlined. ¡ For the empirically inclined, a baseline is useful in measuring changes to the status quo. Note : These adjectives do not have to be mutually exclusive. 6

Clarify Terminology ‘Efficient’ Processing … huh ? Efficiency can mean the following positive adjective(s) describe your SAS code … ¡ Quicker than status quo ¡ Smaller than status quo ¡ Better coding i. e. coding is more efficient and streamlined. ¡ For the empirically inclined, a baseline is useful in measuring changes to the status quo. Note : These adjectives do not have to be mutually exclusive. 6

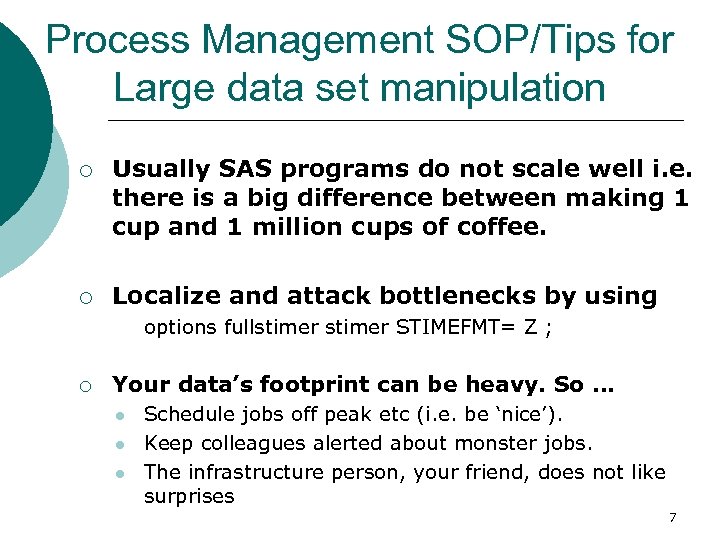

Process Management SOP/Tips for Large data set manipulation ¡ ¡ Usually SAS programs do not scale well i. e. there is a big difference between making 1 cup and 1 million cups of coffee. Localize and attack bottlenecks by using options fullstimer STIMEFMT= Z ; ¡ Your data’s footprint can be heavy. So … l l l Schedule jobs off peak etc (i. e. be ‘nice’). Keep colleagues alerted about monster jobs. The infrastructure person, your friend, does not like surprises 7

Process Management SOP/Tips for Large data set manipulation ¡ ¡ Usually SAS programs do not scale well i. e. there is a big difference between making 1 cup and 1 million cups of coffee. Localize and attack bottlenecks by using options fullstimer STIMEFMT= Z ; ¡ Your data’s footprint can be heavy. So … l l l Schedule jobs off peak etc (i. e. be ‘nice’). Keep colleagues alerted about monster jobs. The infrastructure person, your friend, does not like surprises 7

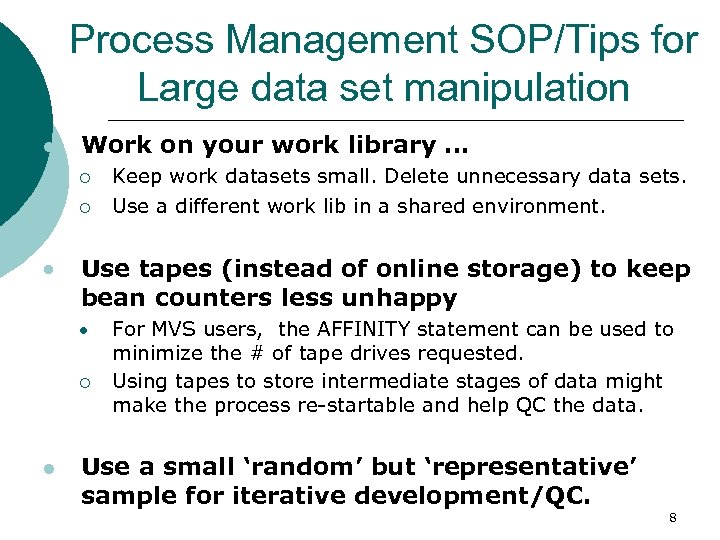

Process Management SOP/Tips for Large data set manipulation l Work on your work library … ¡ ¡ Keep work datasets small. Delete unnecessary data sets. Use a different work lib in a shared environment. Use tapes (instead of online storage) to keep bean counters less unhappy ¡ l For MVS users, the AFFINITY statement can be used to minimize the # of tape drives requested. Using tapes to store intermediate stages of data might make the process re-startable and help QC the data. Use a small ‘random’ but ‘representative’ sample for iterative development/QC. 8

Process Management SOP/Tips for Large data set manipulation l Work on your work library … ¡ ¡ Keep work datasets small. Delete unnecessary data sets. Use a different work lib in a shared environment. Use tapes (instead of online storage) to keep bean counters less unhappy ¡ l For MVS users, the AFFINITY statement can be used to minimize the # of tape drives requested. Using tapes to store intermediate stages of data might make the process re-startable and help QC the data. Use a small ‘random’ but ‘representative’ sample for iterative development/QC. 8

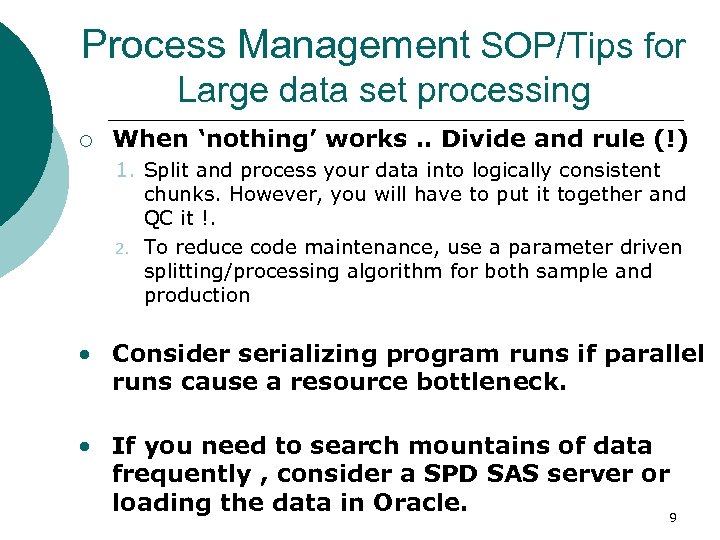

Process Management SOP/Tips for Large data set processing ¡ When ‘nothing’ works. . Divide and rule (!) 1. Split and process your data into logically consistent chunks. However, you will have to put it together and QC it !. 2. To reduce code maintenance, use a parameter driven splitting/processing algorithm for both sample and production Consider serializing program runs if parallel runs cause a resource bottleneck. If you need to search mountains of data frequently , consider a SPD SAS server or loading the data in Oracle. 9

Process Management SOP/Tips for Large data set processing ¡ When ‘nothing’ works. . Divide and rule (!) 1. Split and process your data into logically consistent chunks. However, you will have to put it together and QC it !. 2. To reduce code maintenance, use a parameter driven splitting/processing algorithm for both sample and production Consider serializing program runs if parallel runs cause a resource bottleneck. If you need to search mountains of data frequently , consider a SPD SAS server or loading the data in Oracle. 9

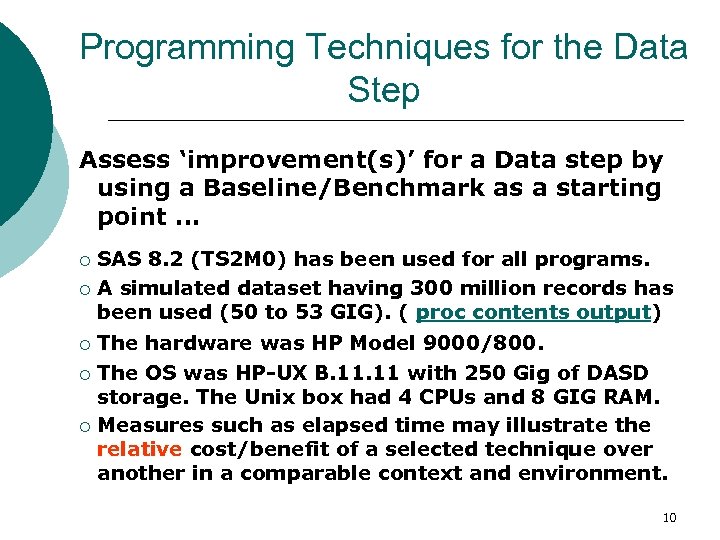

Programming Techniques for the Data Step Assess ‘improvement(s)’ for a Data step by using a Baseline/Benchmark as a starting point … SAS 8. 2 (TS 2 M 0) has been used for all programs. ¡ A simulated dataset having 300 million records has been used (50 to 53 GIG). ( proc contents output) ¡ The hardware was HP Model 9000/800. ¡ The OS was HP-UX B. 11 with 250 Gig of DASD storage. The Unix box had 4 CPUs and 8 GIG RAM. ¡ Measures such as elapsed time may illustrate the relative cost/benefit of a selected technique over another in a comparable context and environment. ¡ 10

Programming Techniques for the Data Step Assess ‘improvement(s)’ for a Data step by using a Baseline/Benchmark as a starting point … SAS 8. 2 (TS 2 M 0) has been used for all programs. ¡ A simulated dataset having 300 million records has been used (50 to 53 GIG). ( proc contents output) ¡ The hardware was HP Model 9000/800. ¡ The OS was HP-UX B. 11 with 250 Gig of DASD storage. The Unix box had 4 CPUs and 8 GIG RAM. ¡ Measures such as elapsed time may illustrate the relative cost/benefit of a selected technique over another in a comparable context and environment. ¡ 10

Programming Techniques for the Data Step An approach to reducing I/O, a significant bottleneck, could be … ¡ ¡ Reduce dataset size (vertically and horizontally) to fit the programming task. Minimize sequential I/O (SAS default). Maximize non sequential (i. e. random) I/O. To make your program faster … l l Re-direct sequential I/O to memory Re-direct non sequential I/O to memory Note : Most systems have lot less of RAM than DASD. 11

Programming Techniques for the Data Step An approach to reducing I/O, a significant bottleneck, could be … ¡ ¡ Reduce dataset size (vertically and horizontally) to fit the programming task. Minimize sequential I/O (SAS default). Maximize non sequential (i. e. random) I/O. To make your program faster … l l Re-direct sequential I/O to memory Re-direct non sequential I/O to memory Note : Most systems have lot less of RAM than DASD. 11

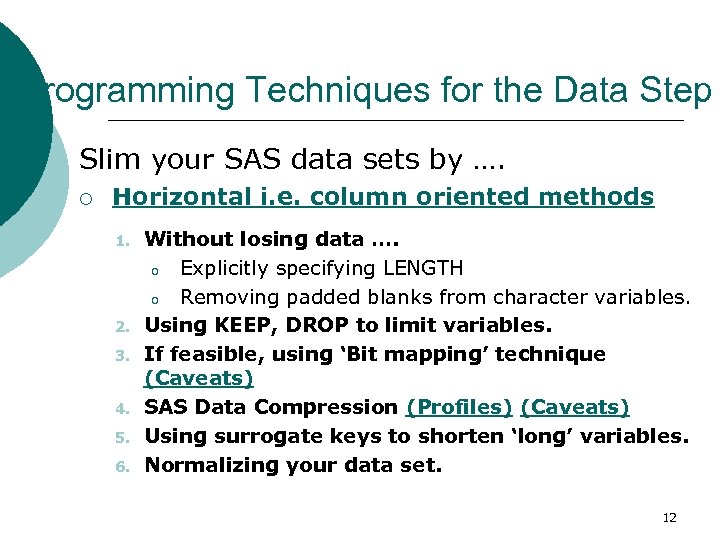

Programming Techniques for the Data Step Slim your SAS data sets by …. ¡ Horizontal i. e. column oriented methods 1. 2. 3. 4. 5. 6. Without losing data …. o Explicitly specifying LENGTH o Removing padded blanks from character variables. Using KEEP, DROP to limit variables. If feasible, using ‘Bit mapping’ technique (Caveats) SAS Data Compression (Profiles) (Caveats) Using surrogate keys to shorten ‘long’ variables. Normalizing your data set. 12

Programming Techniques for the Data Step Slim your SAS data sets by …. ¡ Horizontal i. e. column oriented methods 1. 2. 3. 4. 5. 6. Without losing data …. o Explicitly specifying LENGTH o Removing padded blanks from character variables. Using KEEP, DROP to limit variables. If feasible, using ‘Bit mapping’ technique (Caveats) SAS Data Compression (Profiles) (Caveats) Using surrogate keys to shorten ‘long’ variables. Normalizing your data set. 12

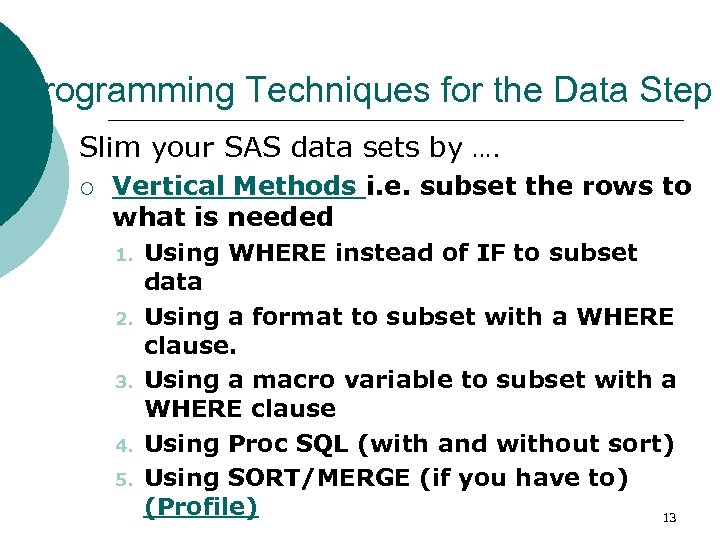

Programming Techniques for the Data Step Slim your SAS data sets by …. ¡ Vertical Methods i. e. subset the rows to what is needed 1. 2. 3. 4. 5. Using WHERE instead of IF to subset data Using a format to subset with a WHERE clause. Using a macro variable to subset with a WHERE clause Using Proc SQL (with and without sort) Using SORT/MERGE (if you have to) (Profile) 13

Programming Techniques for the Data Step Slim your SAS data sets by …. ¡ Vertical Methods i. e. subset the rows to what is needed 1. 2. 3. 4. 5. Using WHERE instead of IF to subset data Using a format to subset with a WHERE clause. Using a macro variable to subset with a WHERE clause Using Proc SQL (with and without sort) Using SORT/MERGE (if you have to) (Profile) 13

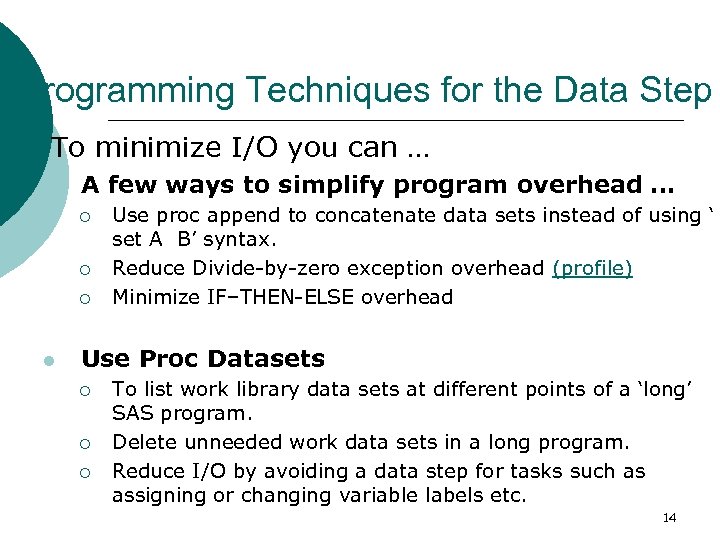

Programming Techniques for the Data Step ¡ To minimize I/O you can … l A few ways to simplify program overhead … ¡ ¡ ¡ l Use proc append to concatenate data sets instead of using ‘ set A B’ syntax. Reduce Divide-by-zero exception overhead (profile) Minimize IF–THEN-ELSE overhead Use Proc Datasets ¡ ¡ ¡ To list work library data sets at different points of a ‘long’ SAS program. Delete unneeded work data sets in a long program. Reduce I/O by avoiding a data step for tasks such as assigning or changing variable labels etc. 14

Programming Techniques for the Data Step ¡ To minimize I/O you can … l A few ways to simplify program overhead … ¡ ¡ ¡ l Use proc append to concatenate data sets instead of using ‘ set A B’ syntax. Reduce Divide-by-zero exception overhead (profile) Minimize IF–THEN-ELSE overhead Use Proc Datasets ¡ ¡ ¡ To list work library data sets at different points of a ‘long’ SAS program. Delete unneeded work data sets in a long program. Reduce I/O by avoiding a data step for tasks such as assigning or changing variable labels etc. 14

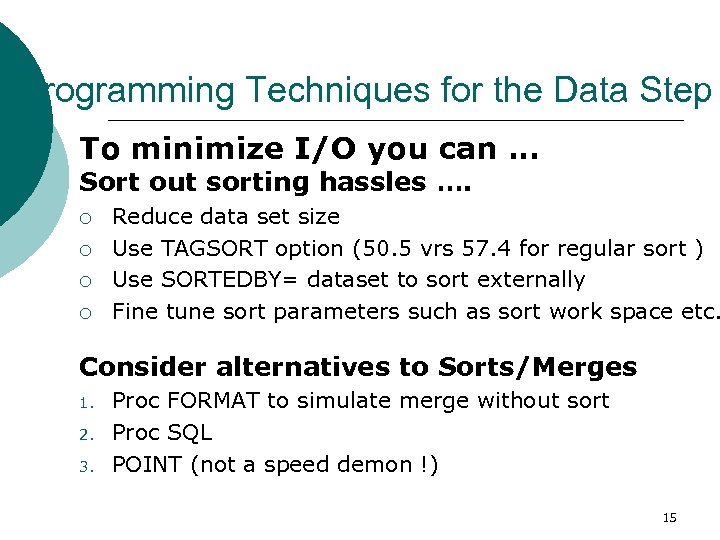

Programming Techniques for the Data Step To minimize I/O you can … Sort out sorting hassles …. ¡ ¡ Reduce data set size Use TAGSORT option (50. 5 vrs 57. 4 for regular sort ) Use SORTEDBY= dataset to sort externally Fine tune sort parameters such as sort work space etc. Consider alternatives to Sorts/Merges 1. 2. 3. Proc FORMAT to simulate merge without sort Proc SQL POINT (not a speed demon !) 15

Programming Techniques for the Data Step To minimize I/O you can … Sort out sorting hassles …. ¡ ¡ Reduce data set size Use TAGSORT option (50. 5 vrs 57. 4 for regular sort ) Use SORTEDBY= dataset to sort externally Fine tune sort parameters such as sort work space etc. Consider alternatives to Sorts/Merges 1. 2. 3. Proc FORMAT to simulate merge without sort Proc SQL POINT (not a speed demon !) 15

Programming Techniques for the Data Step Using Indices I/O is a significant burden for look up(s). 1. Proc Format ( using the put function) 2. Consider Indexing the Large SAS data set for more efficient look up(s) and use … l l l Proc SQL KEY= SET Option WHERE clause (Profile) 16

Programming Techniques for the Data Step Using Indices I/O is a significant burden for look up(s). 1. Proc Format ( using the put function) 2. Consider Indexing the Large SAS data set for more efficient look up(s) and use … l l l Proc SQL KEY= SET Option WHERE clause (Profile) 16

QC issues in processing large volumes of data ¡ ‘Dividing’ may be easier than ‘ruling’ from a QC/validation perspective. ¡ Use a small ‘random’ and ‘representative’ sample for validating processing and intensive QC. ¡ Compare a ‘before’ set of frequencies and record counts (using raw data) for critical variables with the corresponding ‘final’ numbers. 17

QC issues in processing large volumes of data ¡ ‘Dividing’ may be easier than ‘ruling’ from a QC/validation perspective. ¡ Use a small ‘random’ and ‘representative’ sample for validating processing and intensive QC. ¡ Compare a ‘before’ set of frequencies and record counts (using raw data) for critical variables with the corresponding ‘final’ numbers. 17

QC issues in processing large volumes of data ¡ Compare output for critical variables across time to spot anomalous trends (if available) ¡ Output, for a random selection of patient id(s) (for e. g. ), data from the final processed data and follow the algorithm back to the ‘raw’ data. ¡ Use tapes to store different intermediate stages of data being processed to follow the QC trail 18

QC issues in processing large volumes of data ¡ Compare output for critical variables across time to spot anomalous trends (if available) ¡ Output, for a random selection of patient id(s) (for e. g. ), data from the final processed data and follow the algorithm back to the ‘raw’ data. ¡ Use tapes to store different intermediate stages of data being processed to follow the QC trail 18

Acknowledgements I would like to thank my colleagues for their support : Margaret Coughlin Kathy Harkins Donna Usavage Anil Golla Rajavel Ganesan Girish Havildar Lisa Pyle Xingshu Zhu 19

Acknowledgements I would like to thank my colleagues for their support : Margaret Coughlin Kathy Harkins Donna Usavage Anil Golla Rajavel Ganesan Girish Havildar Lisa Pyle Xingshu Zhu 19

Questions ? 20

Questions ? 20

Slim your data Horizontal i. e. column oriented methods (1 of 3) Initial baseline size : 50 Gig (with 13 Numeric variables (length 8) and 2 char variables (39 Chars) Initial baseline IF run (in minutes) : 26. 7 (which returns 199, 800, 001 rows) Technique : Use LENGTH to reduce 8 Numeric variables (of default 8 bytes length) to 3 bytes. Size : 39 Gig Time to run IF: 23. 2 Note : Size is smaller but IF run time is going down a little. Technique : TRIM and reduce length of the 2 char variables from 39 to 8 characters in addition to numeric variable lengths Size : 19 Gig Time to run IF: 15. 4 Note : Size comes down > 50 % and run time also down 21

Slim your data Horizontal i. e. column oriented methods (1 of 3) Initial baseline size : 50 Gig (with 13 Numeric variables (length 8) and 2 char variables (39 Chars) Initial baseline IF run (in minutes) : 26. 7 (which returns 199, 800, 001 rows) Technique : Use LENGTH to reduce 8 Numeric variables (of default 8 bytes length) to 3 bytes. Size : 39 Gig Time to run IF: 23. 2 Note : Size is smaller but IF run time is going down a little. Technique : TRIM and reduce length of the 2 char variables from 39 to 8 characters in addition to numeric variable lengths Size : 19 Gig Time to run IF: 15. 4 Note : Size comes down > 50 % and run time also down 21

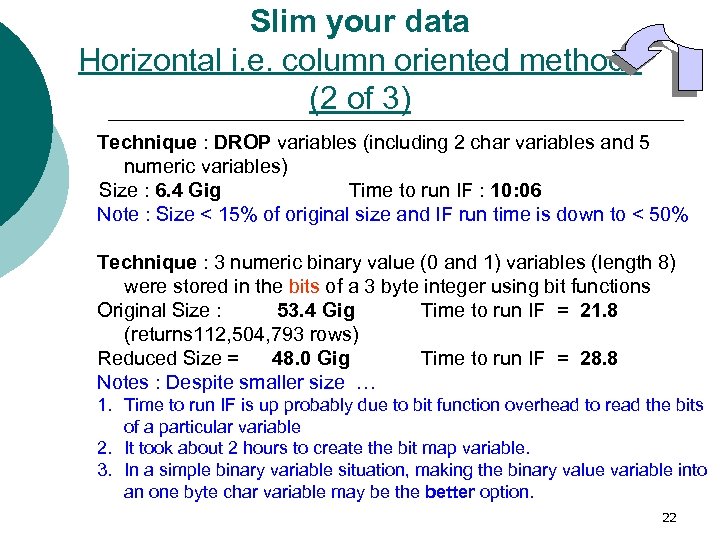

Slim your data Horizontal i. e. column oriented methods (2 of 3) Technique : DROP variables (including 2 char variables and 5 numeric variables) Size : 6. 4 Gig Time to run IF : 10: 06 Note : Size < 15% of original size and IF run time is down to < 50% Technique : 3 numeric binary value (0 and 1) variables (length 8) were stored in the bits of a 3 byte integer using bit functions Original Size : 53. 4 Gig Time to run IF = 21. 8 (returns 112, 504, 793 rows) Reduced Size = 48. 0 Gig Time to run IF = 28. 8 Notes : Despite smaller size … 1. Time to run IF is up probably due to bit function overhead to read the bits of a particular variable 2. It took about 2 hours to create the bit map variable. 3. In a simple binary variable situation, making the binary value variable into an one byte char variable may be the better option. 22

Slim your data Horizontal i. e. column oriented methods (2 of 3) Technique : DROP variables (including 2 char variables and 5 numeric variables) Size : 6. 4 Gig Time to run IF : 10: 06 Note : Size < 15% of original size and IF run time is down to < 50% Technique : 3 numeric binary value (0 and 1) variables (length 8) were stored in the bits of a 3 byte integer using bit functions Original Size : 53. 4 Gig Time to run IF = 21. 8 (returns 112, 504, 793 rows) Reduced Size = 48. 0 Gig Time to run IF = 28. 8 Notes : Despite smaller size … 1. Time to run IF is up probably due to bit function overhead to read the bits of a particular variable 2. It took about 2 hours to create the bit map variable. 3. In a simple binary variable situation, making the binary value variable into an one byte char variable may be the better option. 22

Slim your data Horizontal i. e. column oriented methods (3 of 3) Technique : Compress the data set with Binary option (without keeps or drops) Before compression size : 50 Gig (13 Numeric vars (length 8) and 2 char vars (39 Chars)) Time to run IF : 26. 7 (returning 199, 800, 001 rows) Note : It took 1 hour and 10 minutes to compress this data. After compression size : 21. 9 Gig Time to run IF : 28. 6 Note : If the output data set was also compressed it took 1 hour. 23

Slim your data Horizontal i. e. column oriented methods (3 of 3) Technique : Compress the data set with Binary option (without keeps or drops) Before compression size : 50 Gig (13 Numeric vars (length 8) and 2 char vars (39 Chars)) Time to run IF : 26. 7 (returning 199, 800, 001 rows) Note : It took 1 hour and 10 minutes to compress this data. After compression size : 21. 9 Gig Time to run IF : 28. 6 Note : If the output data set was also compressed it took 1 hour. 23

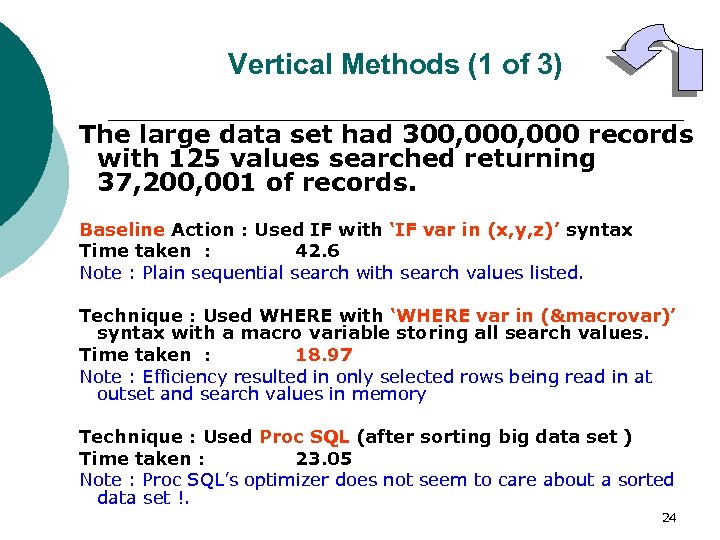

Vertical Methods (1 of 3) The large data set had 300, 000 records with 125 values searched returning 37, 200, 001 of records. Baseline Action : Used IF with ‘IF var in (x, y, z)’ syntax Time taken : 42. 6 Note : Plain sequential search with search values listed. Technique : Used WHERE with ‘WHERE var in (¯ovar)’ syntax with a macro variable storing all search values. Time taken : 18. 97 Note : Efficiency resulted in only selected rows being read in at outset and search values in memory Technique : Used Proc SQL (after sorting big data set ) Time taken : 23. 05 Note : Proc SQL’s optimizer does not seem to care about a sorted data set !. 24

Vertical Methods (1 of 3) The large data set had 300, 000 records with 125 values searched returning 37, 200, 001 of records. Baseline Action : Used IF with ‘IF var in (x, y, z)’ syntax Time taken : 42. 6 Note : Plain sequential search with search values listed. Technique : Used WHERE with ‘WHERE var in (¯ovar)’ syntax with a macro variable storing all search values. Time taken : 18. 97 Note : Efficiency resulted in only selected rows being read in at outset and search values in memory Technique : Used Proc SQL (after sorting big data set ) Time taken : 23. 05 Note : Proc SQL’s optimizer does not seem to care about a sorted data set !. 24

Vertical Methods (2 of 3) The large data set had 300, 000 records with 125 values searched returning 37, 200, 001 records. Technique : Used ‘WHERE var in (x, y, z)’ syntax by listing search values Time taken : 23. 18 Note : WHERE better than IF. However, plain listing of search values does not use memory ( with macro variable it took 18 minutes) Technique : Used Proc SQL (No sorting of the large data set ) Time taken : 23. 61 Note : SAS’s SQL optimizer is optimizing well here. However, sorting the big data set does not help (it took 23. 05). Technique : Created a format to house search values with a WHERE clause using syntax ‘WHERE put(formvar, ? formtmp. ) EQ '999'; ’ Time taken : 31. 26 Note : Only selected rows come in at the outset. Search values are in memory through the format. Not all memory techniques are the same ! 25

Vertical Methods (2 of 3) The large data set had 300, 000 records with 125 values searched returning 37, 200, 001 records. Technique : Used ‘WHERE var in (x, y, z)’ syntax by listing search values Time taken : 23. 18 Note : WHERE better than IF. However, plain listing of search values does not use memory ( with macro variable it took 18 minutes) Technique : Used Proc SQL (No sorting of the large data set ) Time taken : 23. 61 Note : SAS’s SQL optimizer is optimizing well here. However, sorting the big data set does not help (it took 23. 05). Technique : Created a format to house search values with a WHERE clause using syntax ‘WHERE put(formvar, ? formtmp. ) EQ '999'; ’ Time taken : 31. 26 Note : Only selected rows come in at the outset. Search values are in memory through the format. Not all memory techniques are the same ! 25

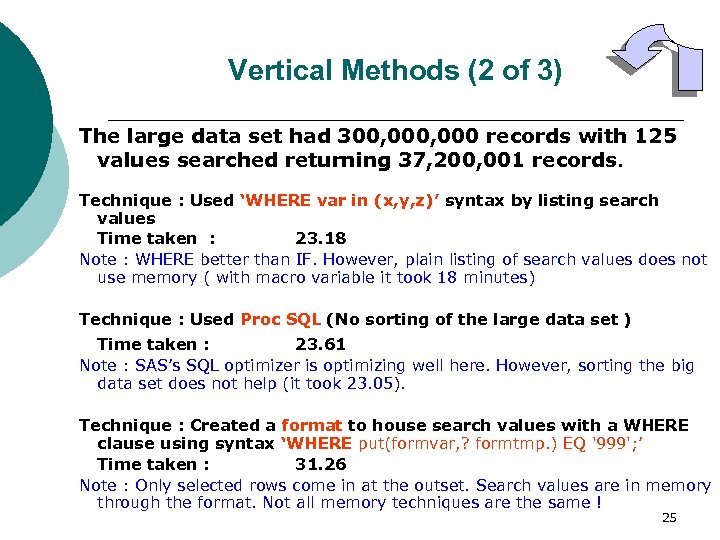

Vertical Methods (3 of 3) The large data set had 300, 000 records with 125 values searched returning 37, 200, 001 records. Technique : Used ‘IF var in (¯ovar)’ in a macro variable Time taken : 35. 4 Note : I/O in memory for search values even with the extra overhead with ‘IF’ seems to help. The plain vanilla syntax took 42. 6 minutes. No sorting used. Technique: SORT/MERGE Time taken for SORT : 60 Time taken for MERGE: 27 Total Time : 87 Note : Sort only if you have to as it is also disk intensive and takes a lot of time 26

Vertical Methods (3 of 3) The large data set had 300, 000 records with 125 values searched returning 37, 200, 001 records. Technique : Used ‘IF var in (¯ovar)’ in a macro variable Time taken : 35. 4 Note : I/O in memory for search values even with the extra overhead with ‘IF’ seems to help. The plain vanilla syntax took 42. 6 minutes. No sorting used. Technique: SORT/MERGE Time taken for SORT : 60 Time taken for MERGE: 27 Total Time : 87 Note : Sort only if you have to as it is also disk intensive and takes a lot of time 26

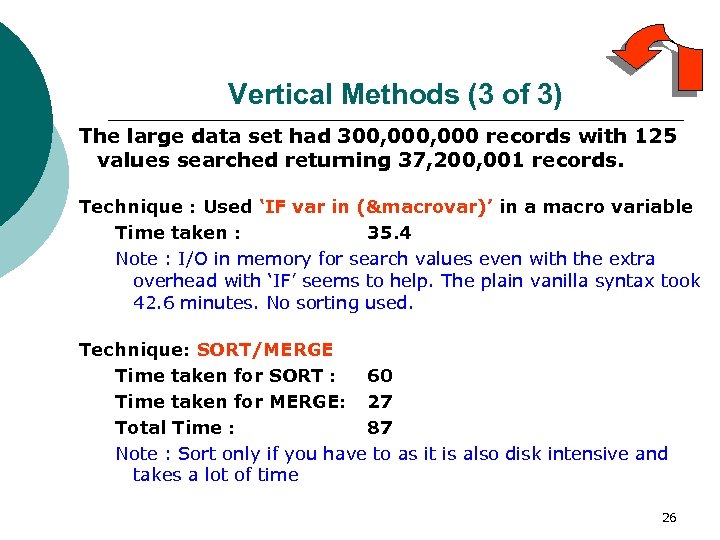

Using SAS indices (1 of 3) We are searching for records with 125 values amongst 300 million records … Scenario 1 : Get back 125 records from 300 million unique values Time taken using IF v_id in (x, y, z) syntax : Time taken using WHERE v_id in (x, y, z) syntax : 43. 1 4. 1 Note : WHERE (but not IF) uses the index and reads in what qualifies. The index on column V_ID is specified as unique. Scenario 2 : Get back 72, 000, 001 rows using Non Unique field with 100 distinct values Time taken using IF formvar in (x, y, z) syntax : 44. 2 Time taken using WHERE formvar in (x, y, z) syntax : 9. 2 Note : WHERE uses the index and reads in what qualifies. The index on column formvar is specified as non-unique. 27

Using SAS indices (1 of 3) We are searching for records with 125 values amongst 300 million records … Scenario 1 : Get back 125 records from 300 million unique values Time taken using IF v_id in (x, y, z) syntax : Time taken using WHERE v_id in (x, y, z) syntax : 43. 1 4. 1 Note : WHERE (but not IF) uses the index and reads in what qualifies. The index on column V_ID is specified as unique. Scenario 2 : Get back 72, 000, 001 rows using Non Unique field with 100 distinct values Time taken using IF formvar in (x, y, z) syntax : 44. 2 Time taken using WHERE formvar in (x, y, z) syntax : 9. 2 Note : WHERE uses the index and reads in what qualifies. The index on column formvar is specified as non-unique. 27

Using SAS indices (2 of 3) We are searching for records with 100 values amongst 300 million records using PROC SQL … Scenario 3 : Get back 100 records from 300 million unique values Time taken using SET=KEY option : . 2 Note : SET=KEY used an unique index which returned a ‘needle’ in a haystack. The index on column V_ID is also specified as unique. Scenario 4 : Try to get back one-to-many i. e. non unique records from 300 million records for 100 distinct values Note : SET = KEY syntax does not work as it picks only the first key value record which qualifies. 28

Using SAS indices (2 of 3) We are searching for records with 100 values amongst 300 million records using PROC SQL … Scenario 3 : Get back 100 records from 300 million unique values Time taken using SET=KEY option : . 2 Note : SET=KEY used an unique index which returned a ‘needle’ in a haystack. The index on column V_ID is also specified as unique. Scenario 4 : Try to get back one-to-many i. e. non unique records from 300 million records for 100 distinct values Note : SET = KEY syntax does not work as it picks only the first key value record which qualifies. 28

Using SAS indices (3 of 3) We are searching for records with 100 values amongst 300 million records using PROC SQL … Scenario 5 : Get back 100 unique values from 300 million unique value records Time taken using PROC SQL syntax : 3. 2 Note : PROC SQL used an unique index which returned a ‘needle’ in a haystack. The index on column V_ID is also specified as unique. Scenario 6 : Get back 297, 000, 001 records from 300 million records for 100 distinct values Time taken using PROC SQL syntax 43. 5 Note : PROC SQL does not use an index on formvar as …. 1. It is not unique 2. It expects to return a lot of records i. e. it is more efficient to do simple sequential search rather than reading the index too. 29

Using SAS indices (3 of 3) We are searching for records with 100 values amongst 300 million records using PROC SQL … Scenario 5 : Get back 100 unique values from 300 million unique value records Time taken using PROC SQL syntax : 3. 2 Note : PROC SQL used an unique index which returned a ‘needle’ in a haystack. The index on column V_ID is also specified as unique. Scenario 6 : Get back 297, 000, 001 records from 300 million records for 100 distinct values Time taken using PROC SQL syntax 43. 5 Note : PROC SQL does not use an index on formvar as …. 1. It is not unique 2. It expects to return a lot of records i. e. it is more efficient to do simple sequential search rather than reading the index too. 29

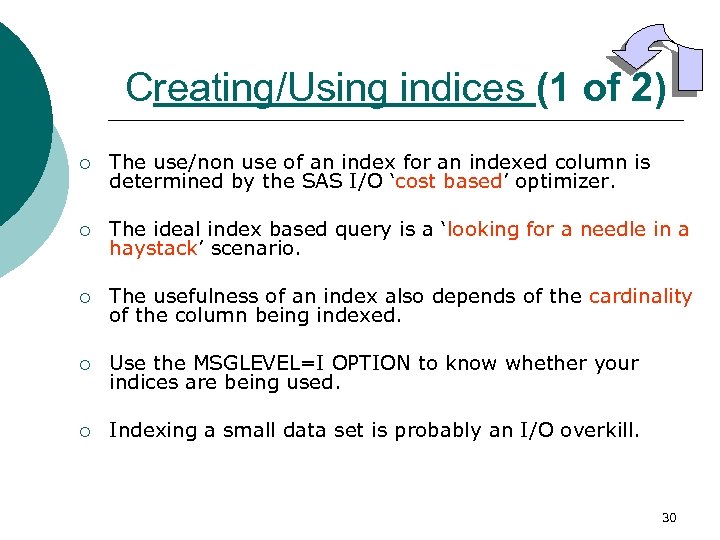

Creating/Using indices (1 of 2) ¡ The use/non use of an index for an indexed column is determined by the SAS I/O ‘cost based’ optimizer. ¡ The ideal index based query is a ‘looking for a needle in a haystack’ scenario. ¡ The usefulness of an index also depends of the cardinality of the column being indexed. ¡ Use the MSGLEVEL=I OPTION to know whether your indices are being used. ¡ Indexing a small data set is probably an I/O overkill. 30

Creating/Using indices (1 of 2) ¡ The use/non use of an index for an indexed column is determined by the SAS I/O ‘cost based’ optimizer. ¡ The ideal index based query is a ‘looking for a needle in a haystack’ scenario. ¡ The usefulness of an index also depends of the cardinality of the column being indexed. ¡ Use the MSGLEVEL=I OPTION to know whether your indices are being used. ¡ Indexing a small data set is probably an I/O overkill. 30

Creating/Using indices (2 of 2) ¡ ¡ B Tree indices, the type that BASE SAS creates, are more effective for highly unique columns and not decision support oriented tasks which typically use non unique columns. Also consider the cost of the index … 1. Index sparingly. An index is updated when the data file is updated. 1. For a 53 Gig file, with 2 indexed columns, the size of the index was 7. 2 Gig. 2. It is better to index a search column whose cardinality is high. 1. An index, to justify its cost of maintenance, needs to be used (i. e. read) often and updated minimally. 31

Creating/Using indices (2 of 2) ¡ ¡ B Tree indices, the type that BASE SAS creates, are more effective for highly unique columns and not decision support oriented tasks which typically use non unique columns. Also consider the cost of the index … 1. Index sparingly. An index is updated when the data file is updated. 1. For a 53 Gig file, with 2 indexed columns, the size of the index was 7. 2 Gig. 2. It is better to index a search column whose cardinality is high. 1. An index, to justify its cost of maintenance, needs to be used (i. e. read) often and updated minimally. 31

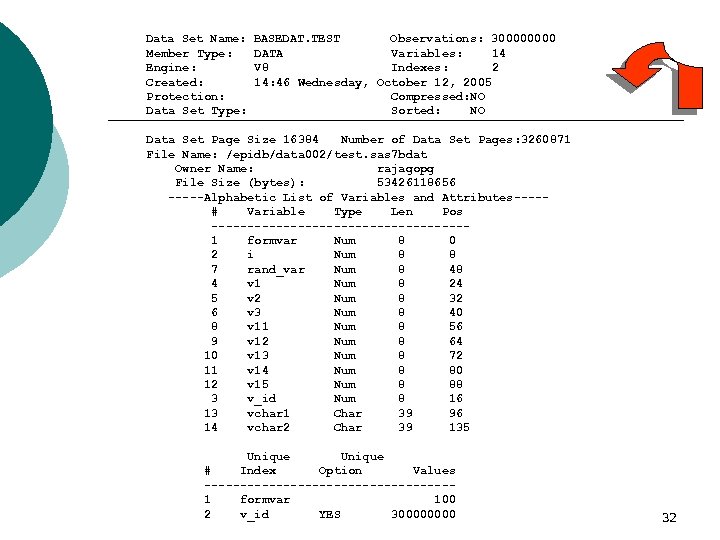

Data Set Name: Member Type: Engine: Created: Protection: Data Set Type: BASEDAT. TEST Observations: 30000 DATA Variables: 14 V 8 Indexes: 2 14: 46 Wednesday, October 12, 2005 Compressed: NO Sorted: NO Data Set Page Size 16384 Number of Data Set Pages: 3260871 File Name: /epidb/data 002/test. sas 7 bdat Owner Name: rajagopg File Size (bytes): 53426118656 -----Alphabetic List of Variables and Attributes----# Variable Type Len Pos ------------------1 formvar Num 8 0 2 i Num 8 8 7 rand_var Num 8 48 4 v 1 Num 8 24 5 v 2 Num 8 32 6 v 3 Num 8 40 8 v 11 Num 8 56 9 v 12 Num 8 64 10 v 13 Num 8 72 11 v 14 Num 8 80 12 v 15 Num 8 88 3 v_id Num 8 16 13 vchar 1 Char 39 96 14 vchar 2 Char 39 135 Unique # Index Option Values -----------------1 formvar 100 2 v_id YES 30000 32

Data Set Name: Member Type: Engine: Created: Protection: Data Set Type: BASEDAT. TEST Observations: 30000 DATA Variables: 14 V 8 Indexes: 2 14: 46 Wednesday, October 12, 2005 Compressed: NO Sorted: NO Data Set Page Size 16384 Number of Data Set Pages: 3260871 File Name: /epidb/data 002/test. sas 7 bdat Owner Name: rajagopg File Size (bytes): 53426118656 -----Alphabetic List of Variables and Attributes----# Variable Type Len Pos ------------------1 formvar Num 8 0 2 i Num 8 8 7 rand_var Num 8 48 4 v 1 Num 8 24 5 v 2 Num 8 32 6 v 3 Num 8 40 8 v 11 Num 8 56 9 v 12 Num 8 64 10 v 13 Num 8 72 11 v 14 Num 8 80 12 v 15 Num 8 88 3 v_id Num 8 16 13 vchar 1 Char 39 96 14 vchar 2 Char 39 135 Unique # Index Option Values -----------------1 formvar 100 2 v_id YES 30000 32

SAS Dataset Compression nuances ¡ Infrequently used/updated data sets are better candidates. ¡ Data sets without ‘long’ character variables are poor candidates for compression. The BINARY option (v 8. 2) compresses numbers too. ¡ All processing with a compressed data set implies on-thefly decompression and related overhead. Original data set size 50 Gig Note : 13 Numeric variables (length 8) and 2 character variables (39 Chars) Compressed data set size 21. 9 Gig Time to compress data set 60 Time to run IF (after compression) : 28. 0 Time to run IF (before compression) : 26. 0 Time to run IF 61. 0 (with compression of input and output datasets) Note : The number of rows returned was 199, 800, 001 rows 33

SAS Dataset Compression nuances ¡ Infrequently used/updated data sets are better candidates. ¡ Data sets without ‘long’ character variables are poor candidates for compression. The BINARY option (v 8. 2) compresses numbers too. ¡ All processing with a compressed data set implies on-thefly decompression and related overhead. Original data set size 50 Gig Note : 13 Numeric variables (length 8) and 2 character variables (39 Chars) Compressed data set size 21. 9 Gig Time to compress data set 60 Time to run IF (after compression) : 28. 0 Time to run IF (before compression) : 26. 0 Time to run IF 61. 0 (with compression of input and output datasets) Note : The number of rows returned was 199, 800, 001 rows 33

Bitwise representation and consolidation for some types of numeric data l The approach may be useful for numeric fields which have binary values and no missing values. l Bit oriented functions are relatively obscure. l The data step may need to be repeated if the underlying values change. l For e. g. , for the 300 million records, 3 numeric binary value (0 and 1) variables (length 8) were stored in the bits of a 3 byte integer using SAS bit functions. Original Size Time to run IF for v 1 = 1 Reduced Size Time to run IF for v 1 = 1 53. 4 Gig 21. 8 (returns 112, 504, 793 rows) 48 Gig 28. 8 Note : It took 2 hours to create the bit mapped field. Using the bit flag to subset data entails higher overhead (CPU, memory etc. ) 34

Bitwise representation and consolidation for some types of numeric data l The approach may be useful for numeric fields which have binary values and no missing values. l Bit oriented functions are relatively obscure. l The data step may need to be repeated if the underlying values change. l For e. g. , for the 300 million records, 3 numeric binary value (0 and 1) variables (length 8) were stored in the bits of a 3 byte integer using SAS bit functions. Original Size Time to run IF for v 1 = 1 Reduced Size Time to run IF for v 1 = 1 53. 4 Gig 21. 8 (returns 112, 504, 793 rows) 48 Gig 28. 8 Note : It took 2 hours to create the bit mapped field. Using the bit flag to subset data entails higher overhead (CPU, memory etc. ) 34

Simplify program overhead and save resources such as time (1 of 2)… ¡ Use ‘proc append’ to concatenate data sets instead of ‘set x y’ method to minimize sequential I/O. . Scenario : 2 identical datasets (large=300 million, small=1 record) are being concatenated Time for ‘set large small’ syntax 27. 2 Note : I/O needed for reading both data sets and creating a new data set. The ‘set’ order does not matter. Time for ‘Proc append base=big data=small’ syntax : 0. 00(!) Note : Very quick as only the small data set is being read Time for ‘Proc append base=small data=big’ syntax : 16. 6 Note : Reading in the ‘big’ data set takes time while a new data set is not being created (as in set x, y method). 35

Simplify program overhead and save resources such as time (1 of 2)… ¡ Use ‘proc append’ to concatenate data sets instead of ‘set x y’ method to minimize sequential I/O. . Scenario : 2 identical datasets (large=300 million, small=1 record) are being concatenated Time for ‘set large small’ syntax 27. 2 Note : I/O needed for reading both data sets and creating a new data set. The ‘set’ order does not matter. Time for ‘Proc append base=big data=small’ syntax : 0. 00(!) Note : Very quick as only the small data set is being read Time for ‘Proc append base=small data=big’ syntax : 16. 6 Note : Reading in the ‘big’ data set takes time while a new data set is not being created (as in set x, y method). 35

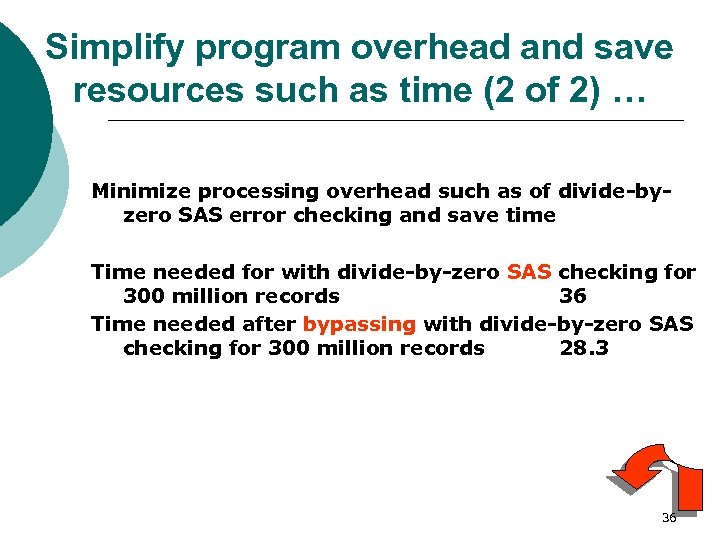

Simplify program overhead and save resources such as time (2 of 2) … Minimize processing overhead such as of divide-byzero SAS error checking and save time Time needed for with divide-by-zero SAS checking for 300 million records 36 Time needed after bypassing with divide-by-zero SAS checking for 300 million records 28. 3 36

Simplify program overhead and save resources such as time (2 of 2) … Minimize processing overhead such as of divide-byzero SAS error checking and save time Time needed for with divide-by-zero SAS checking for 300 million records 36 Time needed after bypassing with divide-by-zero SAS checking for 300 million records 28. 3 36