- Number of slides: 27

The Search Engine Architecture
CSCI 572: Information Retrieval and Search Engines
Summer 2010

Outline
• Introduction
• Google
  – The PageRank algorithm
  – The Google Architecture
  – Architectural components
  – Architectural interconnections
  – Architectural data structures
  – Evaluation of Google
• Summary
May-20-10 CS 572-Summer 2010 CAM-2

Problems with search engines circa the last decade
• Human maintenance
  – Subjective
    • Example: Ranking hits based on $$$
• Automated search engines
  – Quality of result
    • Neglect to take user’s context into account
• Searching process
  – High quality results aren’t always at the top of the list

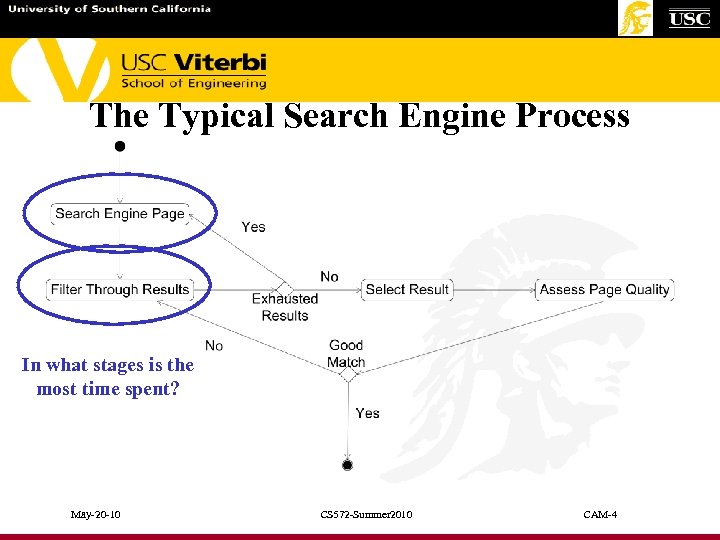

The Typical Search Engine Process
In which stages is the most time spent?

How to scale to modern times?
• Currently
  – Efficient index
  – Petabyte-scale storage space
  – Efficient crawling
  – Cost effectiveness of hardware
• Future
  – Qualitative context
    • Maintaining localization data
  – Perhaps send indexing to clients
  – Client computers help gather Google’s index in a distributed, decentralized fashion?

Google
• The whole idea is to keep up with the growth of the web
• Design Goals:
  – Remove junk results
  – Scalable document indices
• Use of link structure to improve quality filtering
• Use as an academic digital library
  – Provide search engine datasets
  – Search engine infrastructure and evolution

Google
• Archival of information
  – Use of compression
  – Efficient data structures
  – Proprietary file system
• Leverage of usage data
• PageRank algorithm
  – Sort of a “lineage” of a source of information
    • Citation graph

PageRank Algorithm
• Numerical method to calculate a page’s importance
  – This approach might well be followed by people doing research
• PageRank of a page A
  – PR(A) = (1 - d) + d * (PR(T1)/C(T1) + … + PR(Tn)/C(Tn))
  – With damping factor d
  – Where PR(x) = PageRank of page x
  – Where C(x) = the number of outgoing links from page x
  – Where T1…Tn is the set of pages that link to page A
• It’s actually a bit more complicated than it first looks
  – For instance, what’s PR(T1) and PR(T2) and so on?
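As a rough illustration of the PR(A) equation, here is a minimal Python sketch that evaluates it once for a single page. The function name `pagerank_of`, the four-page graph, and the starting ranks of 1.0 are assumptions made up for this example; they are not from the lecture.

```python
def pagerank_of(page, ranks, out_links, d=0.85):
    """Evaluate PR(A) = (1 - d) + d * sum(PR(T) / C(T)) for one page.

    ranks:     current PageRank guess for every page
    out_links: page -> list of pages it links to, so C(T) = len(out_links[T])
    """
    total = 0.0
    for source, targets in out_links.items():
        if page in targets:  # source is one of the pages T1..Tn linking to `page`
            total += ranks[source] / len(targets)
    return (1 - d) + d * total


# Hypothetical four-page graph, used only for illustration.
out_links = {"a": ["b", "c"], "b": ["c"], "c": ["a"], "d": ["c"]}
ranks = {"a": 1.0, "b": 1.0, "c": 1.0, "d": 1.0}

pr_c = pagerank_of("c", ranks, out_links)  # a, b and d all link to c
pr_d = pagerank_of("d", ranks, out_links)  # nothing links to d
print(pr_c, pr_d)
```

A single evaluation depends on the current guesses for PR(T1)…PR(Tn), which is why the calculation has to be repeated until the values settle.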

PageRank Algorithm
• An excellent explanation
  – http://www.iprcom.com/papers/pagerank/
• Since each page T linking to web page A passes on PR(T)/C(T), an equal share of its own PageRank…
  – And because of the (1 - d) term and the d * (PR…) term
  – The PageRanks of all the web pages average 1, that is, they sum to the number of pages N, provided every page has at least one outgoing link
  – Dividing the (1 - d) term by N instead makes the PageRanks a probability distribution that sums to 1

PageRank: Example
• So, where do you start?
• It turns out that you can effectively “guess” what the PageRanks for the web pages are initially
  – In our example, guess 0 for all of the pages
• Then you run the PR function to calculate PR for all the web pages iteratively
• You do this until…
  – The page ranks for each web page stop changing in each iteration
  – They “settle down”

PageRank: Example
Below is the iterative calculation that we would run:
PR(a) = 1 - $damp + $damp * PR(c);
PR(b) = 1 - $damp + $damp * (PR(a)/2);
PR(c) = 1 - $damp + $damp * (PR(a)/2 + PR(b) + PR(d));
PR(d) = 1 - $damp;
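The four update equations can be run to convergence with a short loop. The sketch below is one possible Python rendering, not the script used for the slides. It assumes a damping factor of 0.85, an initial guess of 0 for every page, and in-place updates in the order a, b, c, d. The stopping tolerance and the function name `iterate_pagerank` are choices made for this example.

```python
def iterate_pagerank(damp=0.85, tolerance=1e-6, max_iterations=1000):
    """Repeat the four update equations until no rank changes by more than `tolerance`."""
    pr = {"a": 0.0, "b": 0.0, "c": 0.0, "d": 0.0}  # initial guess: 0 for every page
    for iteration in range(1, max_iterations + 1):
        previous = dict(pr)
        # Updates are applied in place, so b already sees the new value of a, and so on.
        pr["a"] = 1 - damp + damp * pr["c"]
        pr["b"] = 1 - damp + damp * (pr["a"] / 2)
        pr["c"] = 1 - damp + damp * (pr["a"] / 2 + pr["b"] + pr["d"])
        pr["d"] = 1 - damp
        largest_change = max(abs(pr[page] - previous[page]) for page in pr)
        if largest_change < tolerance:  # the ranks have "settled down"
            return pr, iteration
    return pr, max_iterations


ranks, iterations = iterate_pagerank()
average = sum(ranks.values()) / len(ranks)
print(ranks, iterations, average)
```

Under these assumptions the ranks settle near a = 1.49011, b = 0.78330, c = 1.57660, d = 0.15000, with an average of 1.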

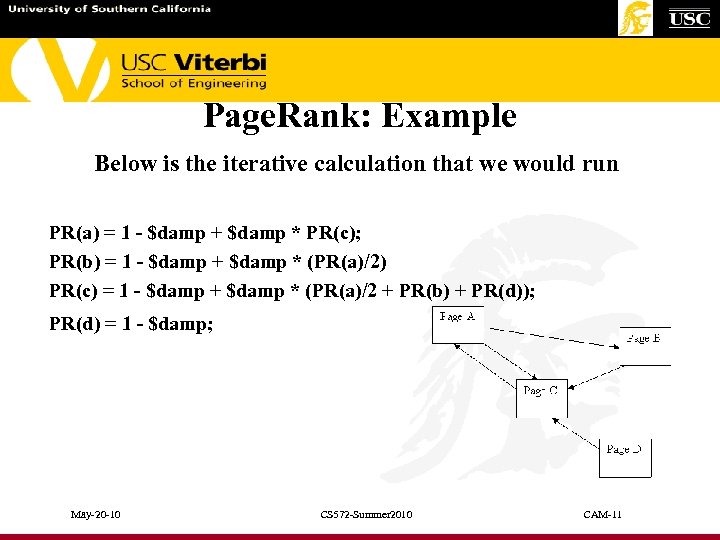

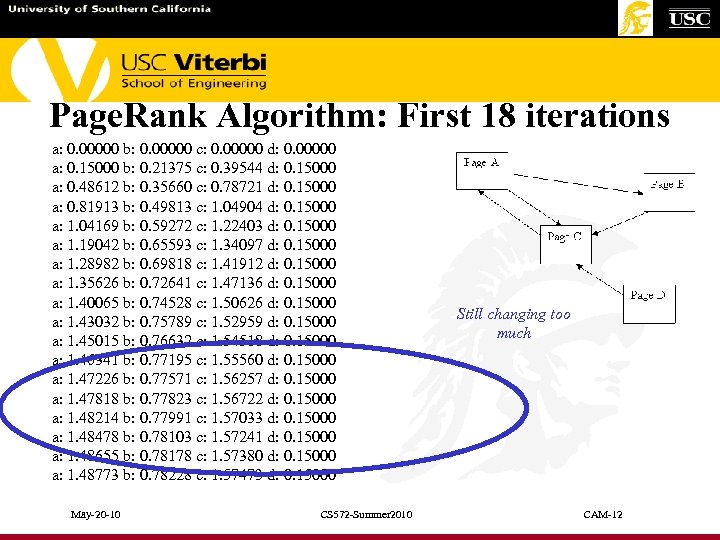

PageRank Algorithm: First 18 iterations
a: 0.00000 b: 0.00000 c: 0.00000 d: 0.00000
a: 0.15000 b: 0.21375 c: 0.39544 d: 0.15000
a: 0.48612 b: 0.35660 c: 0.78721 d: 0.15000
a: 0.81913 b: 0.49813 c: 1.04904 d: 0.15000
a: 1.04169 b: 0.59272 c: 1.22403 d: 0.15000
a: 1.19042 b: 0.65593 c: 1.34097 d: 0.15000
a: 1.28982 b: 0.69818 c: 1.41912 d: 0.15000
a: 1.35626 b: 0.72641 c: 1.47136 d: 0.15000
a: 1.40065 b: 0.74528 c: 1.50626 d: 0.15000
a: 1.43032 b: 0.75789 c: 1.52959 d: 0.15000
a: 1.45015 b: 0.76632 c: 1.54518 d: 0.15000
a: 1.46341 b: 0.77195 c: 1.55560 d: 0.15000
a: 1.47226 b: 0.77571 c: 1.56257 d: 0.15000
a: 1.47818 b: 0.77823 c: 1.56722 d: 0.15000
a: 1.48214 b: 0.77991 c: 1.57033 d: 0.15000
a: 1.48478 b: 0.78103 c: 1.57241 d: 0.15000
a: 1.48655 b: 0.78178 c: 1.57380 d: 0.15000
a: 1.48773 b: 0.78228 c: 1.57473 d: 0.15000
Still changing too much

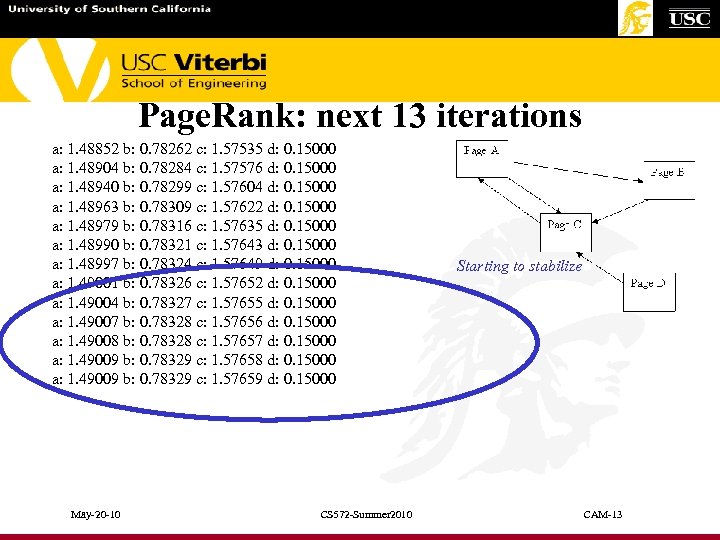

PageRank: next 13 iterations
a: 1.48852 b: 0.78262 c: 1.57535 d: 0.15000
a: 1.48904 b: 0.78284 c: 1.57576 d: 0.15000
a: 1.48940 b: 0.78299 c: 1.57604 d: 0.15000
a: 1.48963 b: 0.78309 c: 1.57622 d: 0.15000
a: 1.48979 b: 0.78316 c: 1.57635 d: 0.15000
a: 1.48990 b: 0.78321 c: 1.57643 d: 0.15000
a: 1.48997 b: 0.78324 c: 1.57649 d: 0.15000
a: 1.49001 b: 0.78326 c: 1.57652 d: 0.15000
a: 1.49004 b: 0.78327 c: 1.57655 d: 0.15000
a: 1.49007 b: 0.78328 c: 1.57656 d: 0.15000
a: 1.49008 b: 0.78328 c: 1.57657 d: 0.15000
a: 1.49009 b: 0.78329 c: 1.57658 d: 0.15000
a: 1.49009 b: 0.78329 c: 1.57659 d: 0.15000
Starting to stabilize

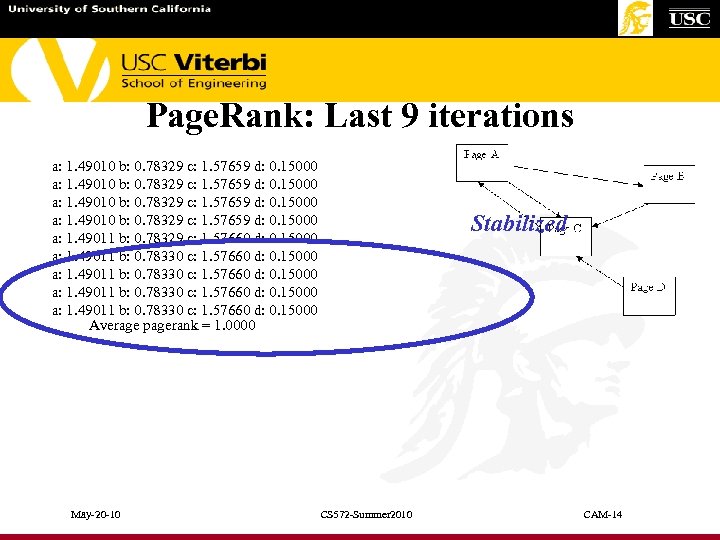

PageRank: Last 9 iterations
a: 1.49010 b: 0.78329 c: 1.57659 d: 0.15000
a: 1.49011 b: 0.78329 c: 1.57660 d: 0.15000
a: 1.49011 b: 0.78330 c: 1.57660 d: 0.15000
Average pagerank = 1.0000
Stabilized

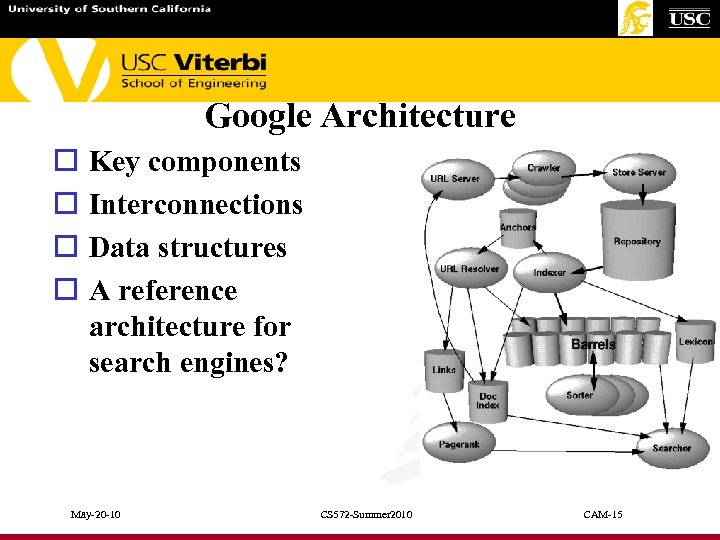

Google Architecture
• Key components
• Interconnections
• Data structures
• A reference architecture for search engines?

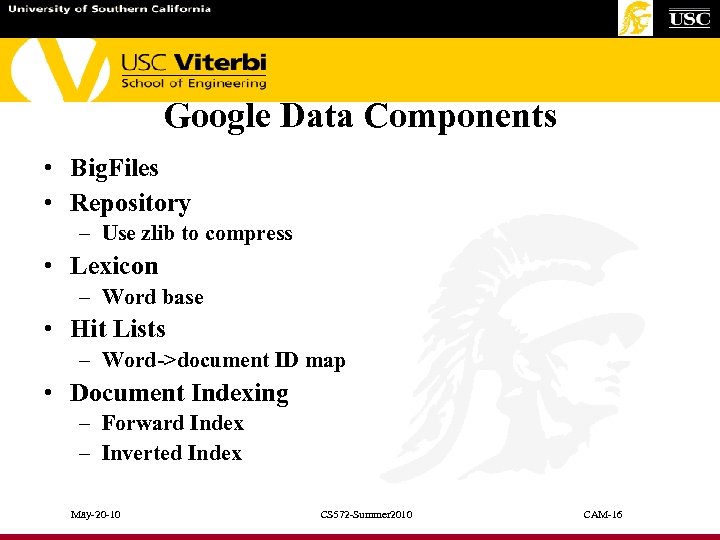

Google Data Components
• BigFiles
• Repository
  – Use zlib to compress
• Lexicon
  – Word base
• Hit Lists
  – Word->document ID map
• Document Indexing
  – Forward Index
  – Inverted Index

Google File System (GFS)
• BigFiles
  – A.k.a. Google’s proprietary filesystem
  – 64-bit addressable
  – Compression
  – Conventional operating systems don’t suffice
    • No explanation of why?
  – GFS: http://labs.google.com/papers/gfs.html

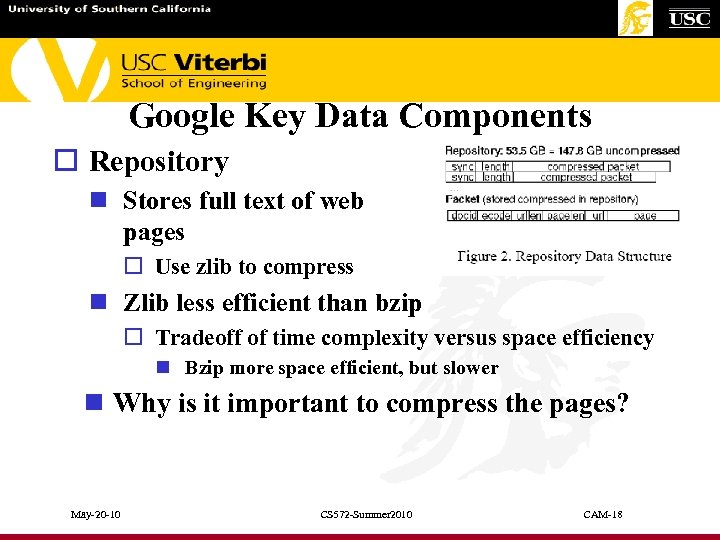

Google Key Data Components
• Repository
  – Stores full text of web pages
• Use zlib to compress
  – zlib is less space-efficient than bzip
• Tradeoff of time complexity versus space efficiency
  – bzip is more space-efficient, but slower
  – Why is it important to compress the pages?
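The time-versus-space tradeoff can be explored with Python's standard `zlib` and `bz2` modules. This is a small sketch under stated assumptions: the sample page is synthetic and highly repetitive, so the sizes and timings it prints say little about real web pages, and the helper name `measure` is made up for this example.

```python
import bz2
import time
import zlib

# Synthetic stand-in for a stored web page (an assumption, not real crawl data).
page = ("<html><body>"
        + "<p>search engines index the web</p>" * 200
        + "</body></html>").encode("utf-8")


def measure(compress, data):
    """Return (compressed bytes, seconds taken) for one compression call."""
    start = time.perf_counter()
    blob = compress(data)
    return blob, time.perf_counter() - start


zlib_blob, zlib_seconds = measure(zlib.compress, page)
bz2_blob, bz2_seconds = measure(bz2.compress, page)

# Both codecs are lossless: the original page comes back unchanged.
zlib_round_trip = zlib.decompress(zlib_blob)
bz2_round_trip = bz2.decompress(bz2_blob)

print(f"original: {len(page)} bytes")
print(f"zlib: {len(zlib_blob)} bytes in {zlib_seconds:.6f} s")
print(f"bz2:  {len(bz2_blob)} bytes in {bz2_seconds:.6f} s")
```

Running the same measurement over a sample of real pages would give a fairer picture of how the two codecs compare for a repository.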

Google Lexicon
• Lexicon
  – Contains 14 million words
  – Implemented as a hash table of pointers to words
  – Full explanation beyond the scope of this discussion
• Why is it important to have a lexicon?
  – Tokenization
  – Analysis
  – Language identification
  – SPAM
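To make the word-to-wordID idea concrete, here is a toy lexicon in Python. It is a sketch only: it uses a plain `dict` rather than a hash table of pointers, and the class name `Lexicon`, its methods, and the simple alphanumeric tokenizer are all assumptions for illustration.

```python
import re


class Lexicon:
    """Toy lexicon: assigns each distinct word a small integer wordID."""

    def __init__(self):
        self._ids = {}    # word -> wordID (Python's dict is a hash table)
        self._words = []  # wordID -> word

    def word_id(self, word):
        """Return the wordID for `word`, adding the word if it is new."""
        word = word.lower()
        if word not in self._ids:
            self._ids[word] = len(self._words)
            self._words.append(word)
        return self._ids[word]

    def lookup(self, word):
        """Return the wordID for a known word, or None if it is not in the lexicon."""
        return self._ids.get(word.lower())

    def tokenize(self, text):
        """Split text into alphanumeric tokens and map each one to its wordID."""
        return [self.word_id(token) for token in re.findall(r"[A-Za-z0-9]+", text)]


lexicon = Lexicon()
ids = lexicon.tokenize("Search engines index the Web; search engines rank pages.")
print(ids)
```

Repeated words map to the same wordID, which is what lets later index structures store compact integers instead of strings.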

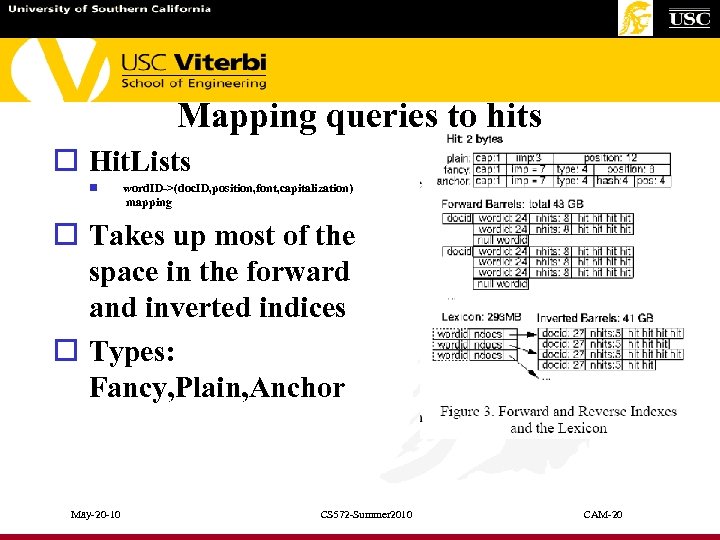

Mapping queries to hits
• HitLists
  – wordID->(docID, position, font, capitalization) mapping
• Takes up most of the space in the forward and inverted indices
• Types: Fancy, Plain, Anchor

Document Indexing
• Document Indexing
  – Forward Index
    • docIDs->wordIDs
    • Partially sorted
    • Duplicated doc IDs
      – Makes it easier for final indexing and coding
  – Inverted Index
    • wordIDs->docIDs
    • 2 sets of inverted barrels
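The relationship between the forward index, the inverted index and hit lists can be sketched in a few lines of Python. This is a simplified illustration, not Google's layout: it keys on lowercased words rather than wordIDs, records only position and capitalization in each hit, and ignores barrels. The function names and sample documents are assumptions.

```python
from collections import defaultdict


def build_forward_index(documents):
    """docID -> {word -> hit list}, where each hit is (position, capitalized)."""
    forward = {}
    for doc_id, text in documents.items():
        hits = defaultdict(list)
        for position, token in enumerate(text.split()):
            hits[token.lower()].append((position, token[0].isupper()))
        forward[doc_id] = dict(hits)
    return forward


def invert(forward):
    """Turn docID -> word -> hits into word -> docID -> hits."""
    inverted = defaultdict(dict)
    for doc_id, hits in forward.items():
        for word, hit_list in hits.items():
            inverted[word][doc_id] = hit_list
    return dict(inverted)


# Hypothetical two-document collection.
documents = {1: "Google ranks pages", 2: "pages link to pages"}
forward = build_forward_index(documents)
inverted = invert(forward)
print(inverted["pages"])
```

The forward index answers "which words are in this document?", while the inverted index answers the question a query needs: "which documents contain this word, and where?"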

Crawling and Indexing
• Crawling
  – Distributed, Parallel
  – Social issues
    • Bringing down web servers: politeness
    • Copyright issues
    • Text versus code
• Indexing
  – Developed their own web page parser
  – Barrels
    • Distribution of compressed documents
  – Sorting
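One common way to address the politeness issue is to enforce a minimum delay between requests to the same host. The sketch below shows that idea in Python. It is not a description of Google's crawler: the class name `PolitenessPolicy`, the default one-second delay, and the explicit `now` argument (passed in so the logic is easy to test) are all assumptions.

```python
from urllib.parse import urlparse


class PolitenessPolicy:
    """Tracks the last fetch time per host and reports how long to wait."""

    def __init__(self, min_delay_seconds=1.0):
        self.min_delay = min_delay_seconds
        self._last_fetch = {}  # host -> time of the most recent fetch

    def seconds_to_wait(self, url, now):
        """Return how long the crawler should wait before fetching `url`."""
        host = urlparse(url).netloc
        last = self._last_fetch.get(host)
        if last is None:
            return 0.0  # this host has not been contacted yet
        return max(0.0, self.min_delay - (now - last))

    def record_fetch(self, url, now):
        """Remember that `url`'s host was contacted at time `now`."""
        self._last_fetch[urlparse(url).netloc] = now


policy = PolitenessPolicy(min_delay_seconds=2.0)
policy.record_fetch("http://example.com/a", now=0.0)
wait_same_host = policy.seconds_to_wait("http://example.com/b", now=0.5)
wait_other_host = policy.seconds_to_wait("http://example.org/", now=0.5)
print(wait_same_host, wait_other_host)
```

In a distributed crawler, each worker would need to share or partition this per-host state so that parallel fetchers do not hit the same server at once.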

Google’s Query Evaluation
• 1: Parse the query
• 2: Convert words into WordIDs
  – Using Lexicon
• 3: Select the barrels that contain documents which match the WordIDs
• 4: Search through documents in the selected barrels until one is discovered that matches all the search terms
• 5: Compute that document’s rank (using PageRank as one of the components)
• 6: Repeat step 4 until no more documents are found and we’ve gone through all the barrels
• 7: Sort the set of returned documents by document rank and return the top k documents
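The seven steps can be sketched as one small Python function. This is a simplified illustration under several assumptions: barrels are collapsed into a single in-memory inverted index, the score is a made-up combination of PageRank and hit count, and the lexicon, index and PageRank values are hypothetical sample data.

```python
def evaluate_query(query, lexicon, inverted_index, page_rank, k=10):
    """Return the top-k docIDs that contain every query term."""
    words = query.lower().split()                    # step 1: parse the query
    word_ids = [lexicon.get(word) for word in words]  # step 2: words -> wordIDs
    if not word_ids or None in word_ids:
        return []  # empty query, or a term that is not in the lexicon

    # Step 3: fetch the postings for each wordID (stands in for selecting barrels).
    postings = [inverted_index.get(word_id, {}) for word_id in word_ids]

    # Steps 4 and 6: keep only documents that match all the search terms.
    matching = set(postings[0])
    for posting in postings[1:]:
        matching &= set(posting)

    # Step 5: toy rank that uses PageRank as one component, plus the hit count.
    scored = []
    for doc_id in matching:
        hit_count = sum(len(posting[doc_id]) for posting in postings)
        scored.append((page_rank.get(doc_id, 0.0) + hit_count, doc_id))

    # Step 7: sort by rank (highest first) and return the top k documents.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [doc_id for _, doc_id in scored[:k]]


# Hypothetical sample data: wordID -> {docID -> list of positions}.
lexicon = {"search": 0, "engine": 1, "google": 2}
inverted_index = {
    0: {10: [0, 5], 11: [2]},
    1: {10: [1], 11: [3], 12: [0]},
    2: {12: [4]},
}
page_rank = {10: 0.5, 11: 2.0, 12: 1.0}

results = evaluate_query("search engine", lexicon, inverted_index, page_rank)
print(results)
```

Here document 11 outranks document 10 because its higher PageRank outweighs its smaller hit count; a real ranking function would weigh hit types, proximity and other signals as well.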

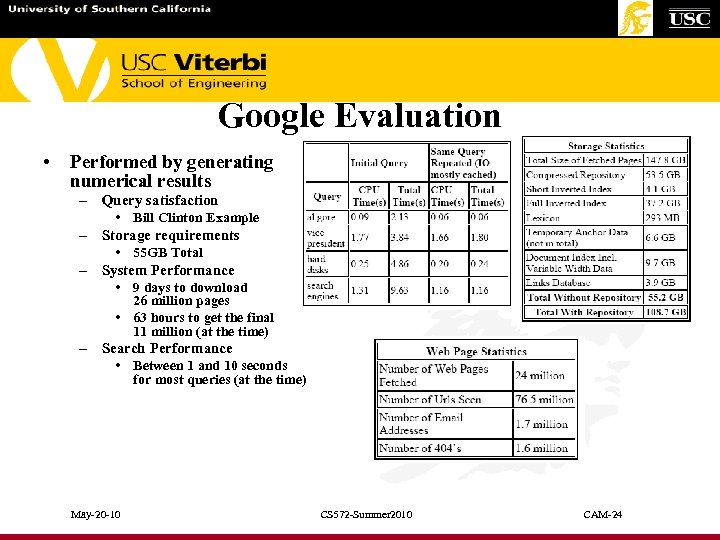

Google Evaluation
• Performed by generating numerical results
  – Query satisfaction
    • Bill Clinton example
  – Storage requirements
    • 55 GB total
  – System performance
    • 9 days to download 26 million pages
    • 63 hours to get the final 11 million (at the time)
  – Search performance
    • Between 1 and 10 seconds for most queries (at the time)

Wrapup
• Loads of future work
  – Even at that time, there were issues of:
    • Information extraction from semi-structured sources (such as web pages)
      – Still an active area of research
    • Search engines as a digital library
      – What services, APIs and toolkits should a search engine provide?
      – What storage methods are the most efficient?
      – From 2005 to 2010 to ???
    • Enhancing metadata
      – Automatic markup and generation
      – What are the appropriate fields?
    • Automatic Concept Extraction
      – Present the searcher with a context
    • Searching languages: beyond context-free queries
    • Other types of search: Facet, GIS, etc.

The Future?
• User poses keyword query search
  – “Google-like” result page comes back
  – Along with each link returned, there will be
    • A “Concept Map” outlining, using extraction methods, what the “real” content of the document is
  – This basically allows you to “visually” see what the page rank is
  – Discover information visually
  – Existing evidence that this works well
    • http://vivisimo.com/
    • Carrot 2/3 clustering

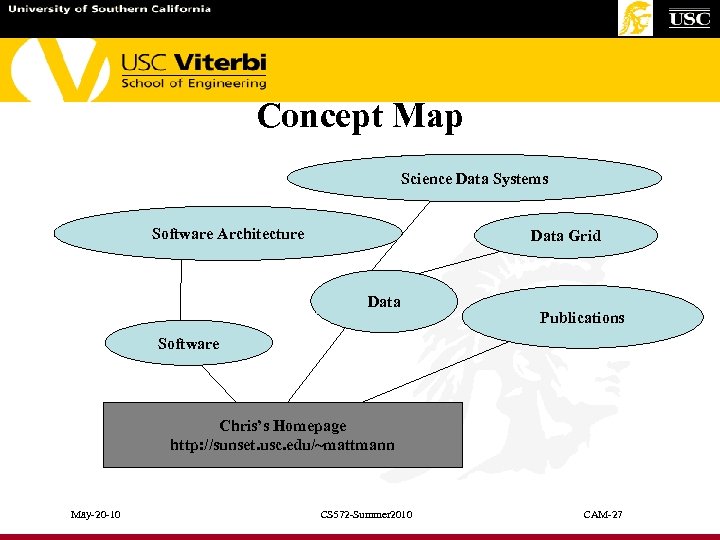

Concept Map
[Figure: concept map for Chris’s Homepage (http://sunset.usc.edu/~mattmann), with node labels: Science Data Systems, Software Architecture, Data Grid, Data, Publications, Software]