482b8c76dda7093c121855f986395bc4.ppt

- Number of slides: 16

The Problem

- Finding information about people in huge text collections or in on-line repositories on the Web is a common activity
- Person names, however, are highly ambiguous: in the US, 90,000 different names are shared by 100 million people
- Cross-document coreference resolution is the task of identifying whether two mentions of the same (or a similar) name in different sources refer to the same individual
- Solving this problem is important not only for better access to information but also in practical applications

SemEval 2007 Web People Search Task

- A search engine user types in a person name as a query
- Instead of ranking the Web pages, an ideal system should organize the returned results into as many clusters as there are different individuals sharing the name
- The system receives a set of documents matching a person name and returns clusters, where each cluster refers to a single individual

SemEval 2007 Web People Search Data

- Training data (100 documents per person name):
  - 10 person names from the European Conference on Digital Libraries (ECDL)
  - 7 person names from Wikipedia
  - 32 person names from a previous study (Gideon&Mann '03)
- Testing: 30 person names; pages returned by Yahoo!
- System output is compared to a gold standard produced by human annotators

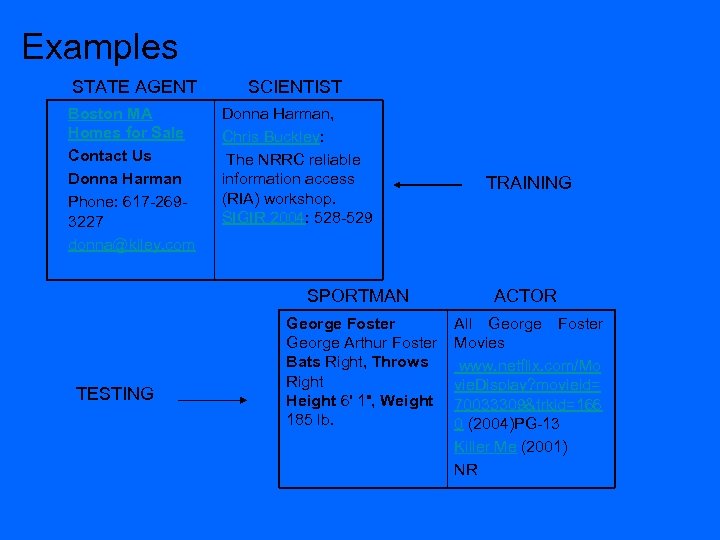

Examples

- Training, "Donna Harman":
  - Estate agent: Boston MA Homes for Sale / Contact Us / Donna Harman Phone: 617-2693227 donna@kiley.com
  - Scientist: Donna Harman, Chris Buckley: The NRRC reliable information access (RIA) workshop. SIGIR 2004: 528-529
- Testing, "George Foster":
  - Sportsman: George Arthur Foster / Bats Right, Throws Right / Height 6' 1", Weight 185 lb.
  - Actor: All George Foster Movies / www.netflix.com/MovieDisplay?movieid=70033309&trkid=1660 / (2004) PG-13 / Killer Me (2001) NR

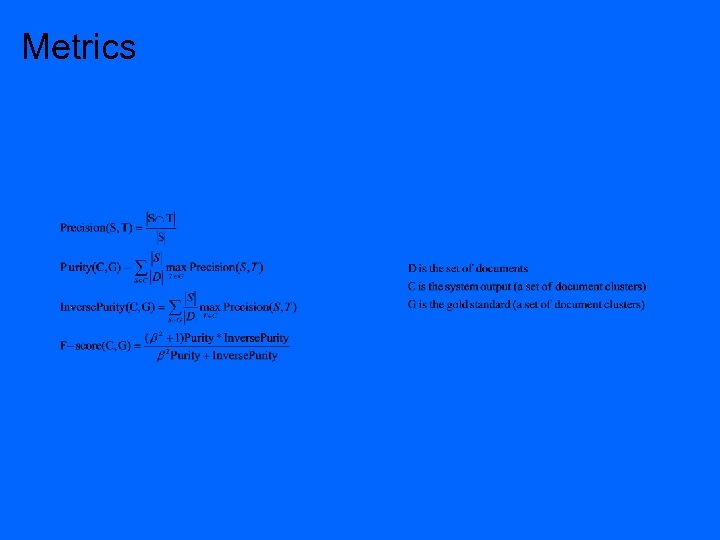

Metrics
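The results below are reported as Purity, Inverse Purity (I-Purity) and F-score. Below is a minimal Python sketch of how these clustering metrics are usually defined for the WePS 2007 task. It assumes that every document belongs to exactly one system cluster and exactly one gold-standard class, and the function names are illustrative only. The F-score is the weighted harmonic mean of purity and inverse purity, and α = 0.5 gives the plain harmonic mean.

```python
from typing import Hashable, List, Set

Cluster = Set[Hashable]  # a set of document identifiers


def _precision(a: Cluster, b: Cluster) -> float:
    """Fraction of the documents in a that also belong to b."""
    return len(a & b) / len(a)


def purity(clusters: List[Cluster], classes: List[Cluster]) -> float:
    """Match each system cluster with the gold class it overlaps most,
    weighting by cluster size (assumes the clusters partition the documents)."""
    n = sum(len(c) for c in clusters)
    return sum(len(c) / n * max(_precision(c, cls) for cls in classes) for c in clusters)


def inverse_purity(clusters: List[Cluster], classes: List[Cluster]) -> float:
    """Match each gold class with the system cluster it overlaps most."""
    return purity(classes, clusters)


def f_measure(clusters: List[Cluster], classes: List[Cluster], alpha: float = 0.5) -> float:
    """Weighted harmonic mean of purity and inverse purity."""
    p = purity(clusters, classes)
    ip = inverse_purity(clusters, classes)
    return 1.0 / (alpha / p + (1.0 - alpha) / ip)
```

For example, comparing gold classes {1, 2, 3} and {4, 5} with system clusters {1, 2} and {3, 4, 5} gives a purity and an inverse purity of 0.8 each, up to floating-point rounding. Putting every document in its own cluster always yields a purity of 1, which is why inverse purity is needed as a counterweight.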

Clustering

Given a set of documents and a threshold:

1. Initially, there are as many clusters as documents
2. All clusters are compared using a similarity metric
3. At each iteration, the two most similar clusters are merged if their similarity is greater than the threshold (otherwise, stop and return the clusters)
4. Go to step 2
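The four steps above describe threshold-based agglomerative clustering. Below is a minimal Python sketch with a hypothetical function name `cluster`. The slides do not say how the cluster similarity sim_C combines document similarities, so the caller passes sim_C in. The `average_link` helper is one common choice, the mean of sim_D over all cross-cluster document pairs, and is assumed here only for illustration.

```python
from itertools import combinations
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def average_link(sim_d: Callable[[T, T], float]) -> Callable[[List[T], List[T]], float]:
    """Hypothetical sim_C: mean of sim_D over all cross-cluster document pairs."""
    def sim_c(a: List[T], b: List[T]) -> float:
        return sum(sim_d(x, y) for x in a for y in b) / (len(a) * len(b))
    return sim_c


def cluster(docs: Sequence[T],
            sim_c: Callable[[List[T], List[T]], float],
            threshold: float) -> List[List[T]]:
    # Step 1: start with one singleton cluster per document.
    clusters: List[List[T]] = [[d] for d in docs]
    while len(clusters) > 1:
        # Step 2: compare every pair of clusters and keep the most similar pair.
        (i, j), best = max(
            (((i, j), sim_c(clusters[i], clusters[j]))
             for i, j in combinations(range(len(clusters)), 2)),
            key=lambda pair: pair[1],
        )
        # Step 3: stop when even the most similar pair is not above the threshold.
        if best <= threshold:
            break
        # Merge cluster j into cluster i; since j > i, deleting j keeps index i valid.
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]
        # Step 4: loop back to step 2.
    return clusters
```

With documents stood in by 1-D points and `sim_d = lambda x, y: -abs(x - y)`, clustering `[1.0, 1.1, 5.0, 5.2]` with a threshold of -1.0 merges the two nearby pairs and then stops. This design keeps the stopping rule independent of the choice of sim_C.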

Document Representation

- Term frequency (tf) of term t in document d: the number of times t occurs in d
- Document frequency of term t in collection c (stored in the IDF tables and written idf below): the number of documents in c containing t
- Bag-of-words approach: terms are words, so a text is represented as (word_1 = w_1, …), where w_i is the weight of the i-th term
- Semantic-based approach: terms are named entities (person, location, organization, date, address), so a text is represented as (ne_1 = w_1, …)
- Terms are extracted in one of two ways:
  - from the full document (full document condition)
  - from personal summaries (summary condition)
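The sketch below shows how the raw term-frequency vectors for the two representations could be built in Python. It makes three assumptions. The bag-of-words condition uses lower-cased `\w+` tokens. For the semantic condition, entities are already extracted as hypothetical (type, string) pairs, such as an NE recogniser would produce. Keying NE terms by their type is also an assumption. The same functions serve both the full document condition and the summary condition, because only the input text or entity list changes.

```python
import re
from collections import Counter
from typing import Iterable, Tuple


def word_tf(text: str) -> Counter:
    """Bag-of-words condition: every lower-cased word token is a term."""
    return Counter(re.findall(r"\w+", text.lower()))


def ne_tf(entities: Iterable[Tuple[str, str]]) -> Counter:
    """Semantic condition: every named entity is a term, keyed by (type, string).

    `entities` is a hypothetical sequence of (NE type, surface string) pairs.
    """
    return Counter(entities)
```

For instance, `word_tf("Donna Harman, Donna Harman and SIGIR")` counts `donna` twice and `sigir` once.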

Examples of terms

- Organization: DARPA; MIT Press; Artificial Intelligence Center; AAAI; Department of Computer Science; etc.
- Person: Douglas E. Appelt; David J. Israel; Jean-Claude Martin; etc.
- Location: Menlo Park; Las Palmas; Clearwater Beach; etc.
- Date: 1995-2007; 15 February 2007; 20:34; etc.
- Address: http://acl.ldc.upenn.edu/J/J87/; Los Angeles Area; ontherecord@foxnews.com; 105 Chamber Street; etc.

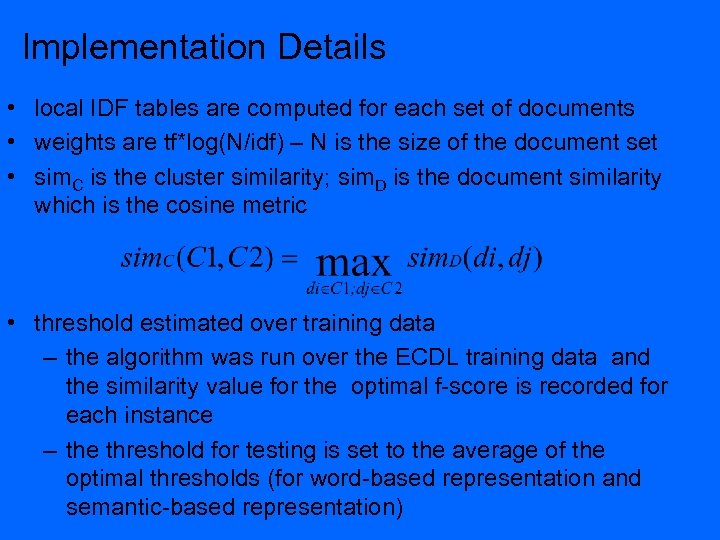

Implementation Details

- Local IDF tables are computed for each set of documents (i.e., separately for the pages returned for each person name)
- Term weights are tf*log(N/idf), where N is the size of the document set
- sim_C is the cluster similarity; sim_D is the document similarity, which is the cosine metric
- The threshold is estimated over the training data:
  - the algorithm was run over the ECDL training data, and the similarity value giving the optimal F-score was recorded for each instance
  - the threshold for testing is set to the average of these optimal thresholds (computed separately for the word-based and the semantic-based representations)
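Below is a minimal Python sketch of these implementation details. All function names are hypothetical. A local document-frequency table (the "idf" count above) is built from the document set of one person name, and terms are weighted by tf*log(N/idf). sim_D is the cosine of the sparse weight vectors. The test threshold is the mean of the per-instance optimal thresholds found on the training data. A term that occurs in every document of the set gets a weight of zero, because log(N/N) = 0.

```python
import math
from collections import Counter
from typing import Dict, Hashable, List


def local_df(tf_vectors: List[Counter]) -> Counter:
    """Local table for one document set: in how many documents each term occurs."""
    df: Counter = Counter()
    for tf in tf_vectors:
        df.update(tf.keys())
    return df


def weigh(tf: Counter, df: Counter, n_docs: int) -> Dict[Hashable, float]:
    """Weight each term by tf * log(N / df), where N is the size of the document set."""
    return {t: f * math.log(n_docs / df[t]) for t, f in tf.items()}


def cosine(u: Dict[Hashable, float], v: Dict[Hashable, float]) -> float:
    """sim_D: cosine of two sparse weight vectors (0.0 if either vector is all zeros)."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def estimate_threshold(optimal_thresholds: List[float]) -> float:
    """Test threshold: mean of the optimal thresholds recorded per training instance."""
    return sum(optimal_thresholds) / len(optimal_thresholds)
```

Because the table is local, a term such as the queried surname, which appears in every page returned for that name, contributes nothing to the similarity.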

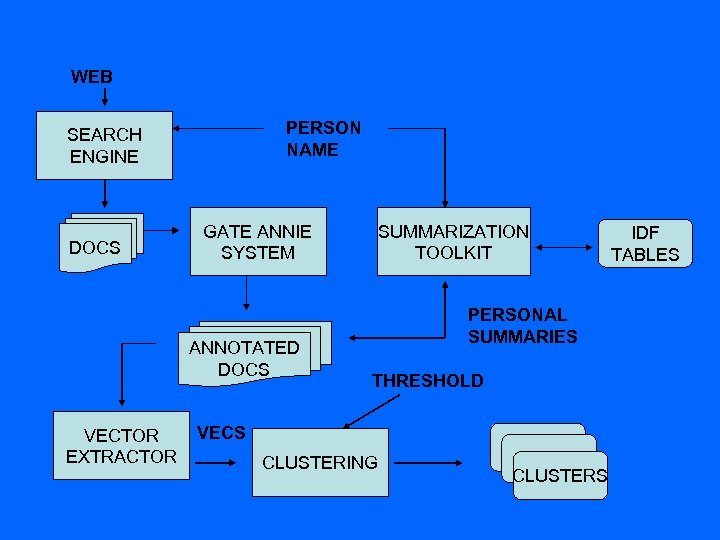

System architecture (diagram): person name → Web search engine → documents → GATE ANNIE system → annotated documents → vector extractor (Summarization Toolkit: personal summaries, IDF tables) → vectors → clustering (with threshold) → clusters

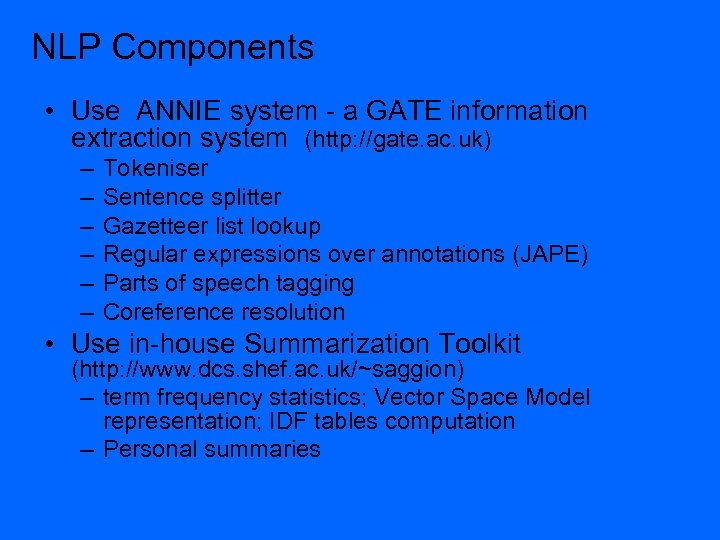

NLP Components

- The ANNIE system, a GATE information extraction system (http://gate.ac.uk), is used for:
  - Tokeniser
  - Sentence splitter
  - Gazetteer list lookup
  - Regular expressions over annotations (JAPE)
  - Part-of-speech tagging
  - Coreference resolution
- The in-house Summarization Toolkit (http://www.dcs.shef.ac.uk/~saggion) is used for:
  - term frequency statistics, Vector Space Model representation, and IDF table computation
  - personal summaries

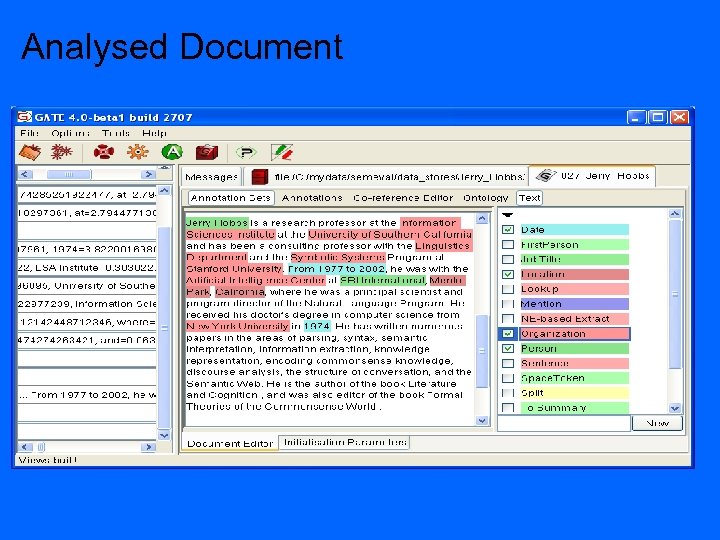

Analysed Document

Personal Summaries

- Coreference chains are identified in each document
- All elements of a coreference chain that contains the target person are marked
- Sentences containing a marked mention of the person are selected for the summary
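The selection step can be sketched in Python as below. The input format is hypothetical. The document is a list of sentences, and each coreference chain is a list of (sentence index, surface string) mentions, such as might be read off a coreference component's output. The rule that a chain refers to the target when any of its mentions contains the target name (case-insensitively) is an assumption, not necessarily the rule used by the toolkit.

```python
from typing import List, Tuple

# Hypothetical mention format: (sentence index, surface string).
Mention = Tuple[int, str]


def personal_summary(sentences: List[str], chains: List[List[Mention]], target: str) -> List[str]:
    """Select the sentences that contain a marked mention of the target person."""
    marked = set()
    for chain in chains:
        # Assumption: a chain refers to the target if any mention contains the target name.
        if any(target.lower() in surface.lower() for _, surface in chain):
            # Mark every element of the chain, including pronouns such as "He".
            marked.update(index for index, _ in chain)
    # Keep the selected sentences in their original document order.
    return [s for i, s in enumerate(sentences) if i in marked]
```

Marking the whole chain is what lets a sentence such as "He throws right." enter the summary even though it never repeats the name.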

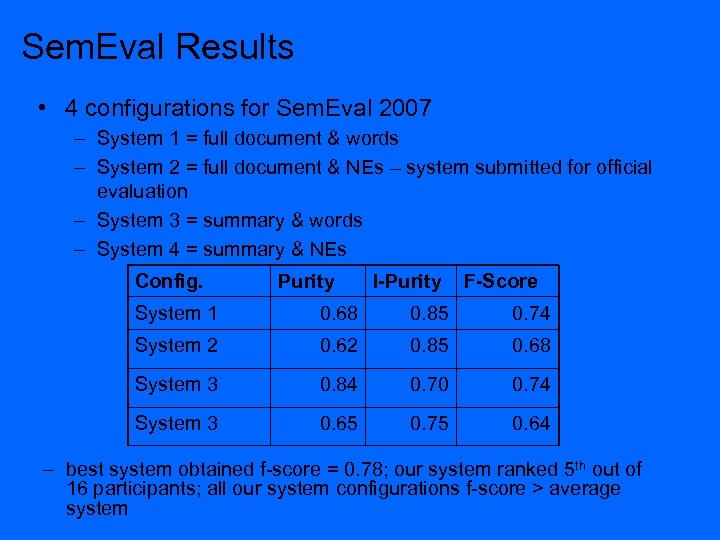

SemEval Results

- 4 configurations were run for SemEval 2007:
  - System 1 = full document & words
  - System 2 = full document & NEs (the system submitted for the official evaluation)
  - System 3 = summary & words
  - System 4 = summary & NEs

| Config.  | Purity | I-Purity | F-Score |
|----------|--------|----------|---------|
| System 1 | 0.68   | 0.85     | 0.74    |
| System 2 | 0.62   | 0.85     | 0.68    |
| System 3 | 0.84   | 0.70     | 0.74    |
| System 4 | 0.65   | 0.75     | 0.64    |

- The best system obtained an F-score of 0.78; our system ranked 5th out of 16 participants; all our configurations obtained an F-score above that of the average system

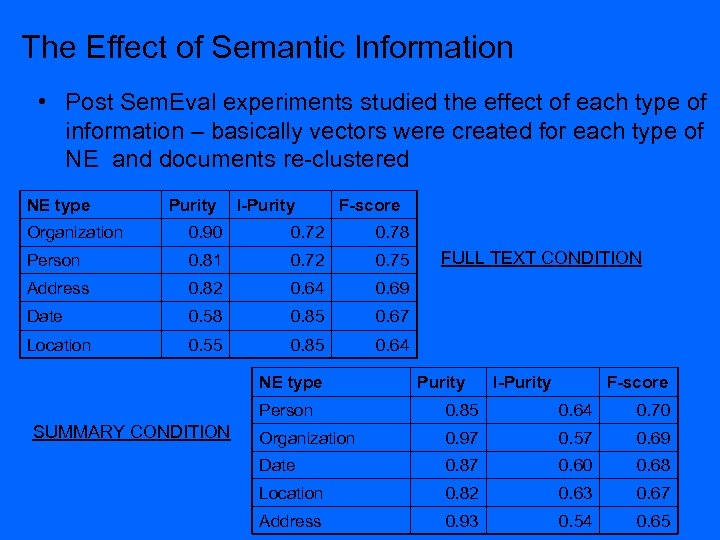

The Effect of Semantic Information

- Post-SemEval experiments studied the effect of each type of information: a separate vector was created for each NE type, and the documents were re-clustered using each type on its own

Full text condition:

| NE type      | Purity | I-Purity | F-score |
|--------------|--------|----------|---------|
| Organization | 0.90   | 0.72     | 0.78    |
| Person       | 0.81   | 0.72     | 0.75    |
| Address      | 0.82   | 0.64     | 0.69    |
| Date         | 0.58   | 0.85     | 0.67    |
| Location     | 0.55   | 0.85     | 0.64    |

Summary condition:

| NE type      | Purity | I-Purity | F-score |
|--------------|--------|----------|---------|
| Person       | 0.85   | 0.64     | 0.70    |
| Organization | 0.97   | 0.57     | 0.69    |
| Date         | 0.87   | 0.60     | 0.68    |
| Location     | 0.82   | 0.63     | 0.67    |
| Address      | 0.93   | 0.54     | 0.65    |
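Creating one vector per NE type can be sketched as follows. The sketch assumes entities arrive as hypothetical (type, string) pairs whose type labels match the five categories above. Each per-type vector would then be weighted and clustered with the same procedure as before. Entities of any other type are simply ignored here, and the `NE_TYPES` tuple is an illustrative assumption.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

# Assumed type labels for the five entity categories studied above.
NE_TYPES = ("Person", "Location", "Organization", "Date", "Address")


def vectors_by_type(entities: Iterable[Tuple[str, str]]) -> Dict[str, Counter]:
    """Split one document's named entities into a separate term-frequency vector per NE type."""
    by_type: Dict[str, Counter] = {t: Counter() for t in NE_TYPES}
    for ne_type, surface in entities:
        # Only the five studied types get a vector; other types are dropped.
        if ne_type in by_type:
            by_type[ne_type][surface] += 1
    return by_type
```

Clustering each collection once per key of the returned dictionary reproduces the one-type-at-a-time setup behind the tables above.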

Conclusions

- We presented an approach to cross-document coreference based on available, robust extraction and summarization technology
- The approach is largely unsupervised; some training data is needed to set up parameters such as the similarity threshold
- The system performed well in the SemEval 2007 Web People Search Task (5th out of 16 participants)
- Special attention should be given to the type of information used to build the vectors in order to achieve optimal performance
