4190d6fa65afe9971809d0141b60e531.ppt

- Number of slides: 17

The Pegasus Workflow Management System

Ewa Deelman, University of Southern California Information Sciences Institute

In collaboration with the Condor Team, University of Wisconsin-Madison

Funded by NSF under the OCI SDCI Program

Ewa Deelman, deelman@isi.edu, www.isi.edu/~deelman, pegasus.isi.edu

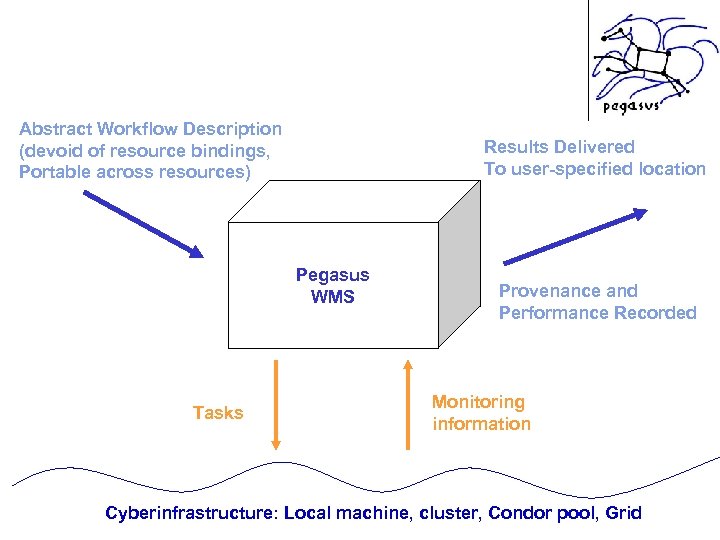

- Abstract workflow description (devoid of resource bindings, portable across resources) is given to Pegasus WMS
- Pegasus WMS sends tasks to the cyberinfrastructure: local machine, cluster, Condor pool, Grid
- Monitoring information is returned to Pegasus WMS
- Provenance and performance are recorded
- Results are delivered to a user-specified location
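
To make "devoid of resource bindings" concrete, here is a minimal Python sketch of an abstract workflow, assuming a hypothetical `AbstractJob` record; it is not Pegasus's actual input format. Each job names only a logical executable and logical input/output files, and dependencies are inferred from the files that jobs share. The `mProject`/`mAdd` names and all file names are illustrative only.

```python
from dataclasses import dataclass, field


@dataclass
class AbstractJob:
    """A task described only by logical names: no site and no physical paths."""
    id: str
    transformation: str                         # logical executable name
    inputs: set = field(default_factory=set)    # logical input file names
    outputs: set = field(default_factory=set)   # logical output file names


def infer_edges(jobs):
    """Derive parent -> child dependencies from shared logical file names."""
    producer = {f: job.id for job in jobs for f in job.outputs}
    edges = set()
    for job in jobs:
        for f in job.inputs:
            if f in producer:           # f is made by another job in the workflow
                edges.add((producer[f], job.id))
    return edges


# Illustrative mosaic-style workflow; every name here is hypothetical.
jobs = [
    AbstractJob("j1", "mProject", {"raw1.fits"}, {"proj1.fits"}),
    AbstractJob("j2", "mProject", {"raw2.fits"}, {"proj2.fits"}),
    AbstractJob("j3", "mAdd", {"proj1.fits", "proj2.fits"}, {"mosaic.fits"}),
]
edges = infer_edges(jobs)
```

Files with no producer in the workflow, such as `raw1.fits` here, are the ones a mapper would later have to locate and stage in.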

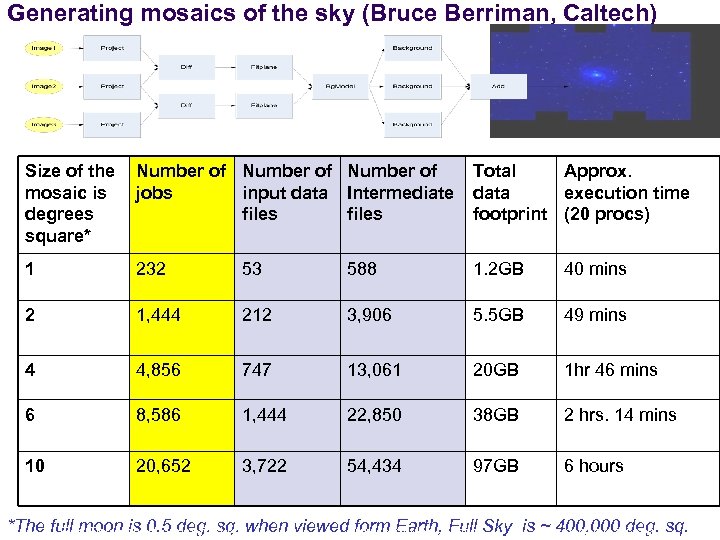

Generating mosaics of the sky (Bruce Berriman, Caltech)

| Size of the mosaic (degrees square*) | Number of jobs | Number of input data files | Number of intermediate files | Total data footprint | Approx. execution time (20 procs) |
|---|---|---|---|---|---|
| 1 | 232 | 53 | 588 | 1.2 GB | 40 mins |
| 2 | 1,444 | 212 | 3,906 | 5.5 GB | 49 mins |
| 4 | 4,856 | 747 | 13,061 | 20 GB | 1 hr 46 mins |
| 6 | 8,586 | 1,444 | 22,850 | 38 GB | 2 hrs 14 mins |
| 10 | 20,652 | 3,722 | 54,434 | 97 GB | 6 hours |

*The full moon is about 0.5 degrees across (roughly 0.2 deg. sq.) when viewed from Earth; the full sky is ~41,000 deg. sq.

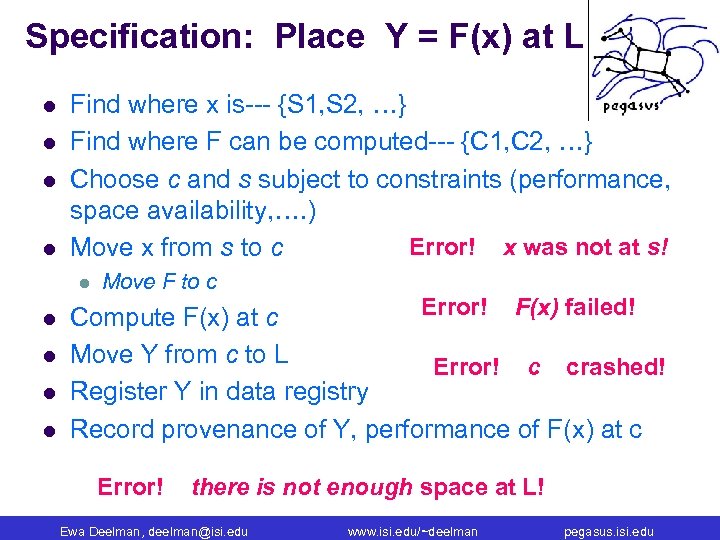

Specification: Place Y = F(x) at L

- Find where x is: {S1, S2, …}
- Find where F can be computed: {C1, C2, …}
- Choose c and s subject to constraints (performance, space availability, …)
- Move x from s to c
- Move F to c
- Compute F(x) at c
- Move Y from c to L
- Register Y in data registry
- Record provenance of Y, performance of F(x) at c

Errors that can occur along the way:

- Error! x was not at s!
- Error! F(x) failed!
- Error! c crashed!
- Error! There is not enough space at L!
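
The steps above, together with their failure points, can be sketched as a loop over candidate (s, c) pairs. This is a minimal illustration assuming hypothetical `Site`, `StepError` and `place` names and a toy storage model (`free_slots`); it only shows why every step needs its own error handling, not how Pegasus performs placement.

```python
from dataclasses import dataclass, field
from typing import Callable, List


class StepError(Exception):
    """One step of the placement failed."""


@dataclass
class Site:
    name: str
    files: set = field(default_factory=set)
    free_slots: int = 1      # hypothetical stand-in for available storage space
    alive: bool = True


def place(x: str, f: Callable[[str], str], L: Site, sources: List[Site],
          compute_sites: List[Site], registry: dict, log: list) -> str:
    """Place Y = F(x) at L, trying candidate (s, c) pairs until one works."""
    for s in sources:                  # where x might be: {S1, S2, ...}
        for c in compute_sites:        # where F can be computed: {C1, C2, ...}
            try:
                if x not in s.files:
                    raise StepError(f"{x} was not at {s.name}")
                if not c.alive:
                    raise StepError(f"{c.name} crashed")
                c.files.add(x)                     # move x from s to c
                c.files.add(f.__name__)            # move F to c
                try:
                    y = f(x)                       # compute F(x) at c
                except Exception as exc:
                    raise StepError(f"F(x) failed at {c.name}") from exc
                if L.free_slots < 1:
                    raise StepError(f"not enough space at {L.name}")
                L.files.add(y)                     # move Y from c to L
                L.free_slots -= 1
                registry[y] = L.name               # register Y in the data registry
                log.append(f"provenance: {y} = {f.__name__}({x}) at {c.name}")
                return y
            except StepError as err:
                log.append(f"error: {err}")        # record failure, try next pair
    raise StepError("every candidate (s, c) pair failed")


def F(v: str) -> str:
    """Toy computation: always produces the file named Y."""
    return "Y"


S1, S2 = Site("S1"), Site("S2", files={"x"})   # x is only at S2
C1, C2 = Site("C1", alive=False), Site("C2")    # C1 has crashed
L = Site("L")
registry, log = {}, []
y = place("x", F, L, [S1, S2], [C1, C2], registry, log)
```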

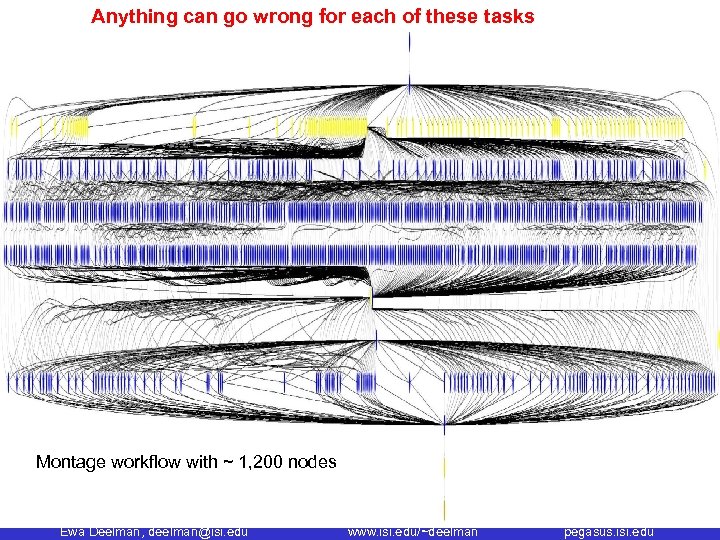

Anything can go wrong for each of these tasks.

Montage workflow with ~1,200 nodes
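
A rough calculation shows why per-task failures matter at this scale. Assuming (hypothetically) that each attempt of a task fails independently with probability p, and that each task is allowed r retries, the probability that all n tasks succeed is (1 - p^(r+1))^n. With p = 0.001 and n = 1,200, the workflow completes without a failure only about 30% of the time; two retries per task raise this above 99.9%. The function name and the value of p are illustrative assumptions.

```python
def p_all_succeed(p_fail: float, n_tasks: int, retries: int = 0) -> float:
    """Probability that all n_tasks eventually succeed.

    Assumes each attempt fails independently with probability p_fail and that
    each task gets `retries` extra attempts after its first one.
    """
    p_task_fails = p_fail ** (retries + 1)   # every attempt of one task fails
    return (1.0 - p_task_fails) ** n_tasks


# ~1,200 tasks, as in the Montage workflow above; p_fail = 0.001 is hypothetical.
print(p_all_succeed(0.001, 1200))             # no retries
print(p_all_succeed(0.001, 1200, retries=2))  # two retries per task
```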

Pegasus Workflow Management System

- Leverages abstraction for workflow description to obtain ease of use, scalability, and portability
- Provides a compiler to map from high-level descriptions to executable workflows
  - Correct mapping
  - Performance-enhanced mapping
- Provides a runtime engine to carry out the instructions
  - In a scalable manner
  - In a reliable manner

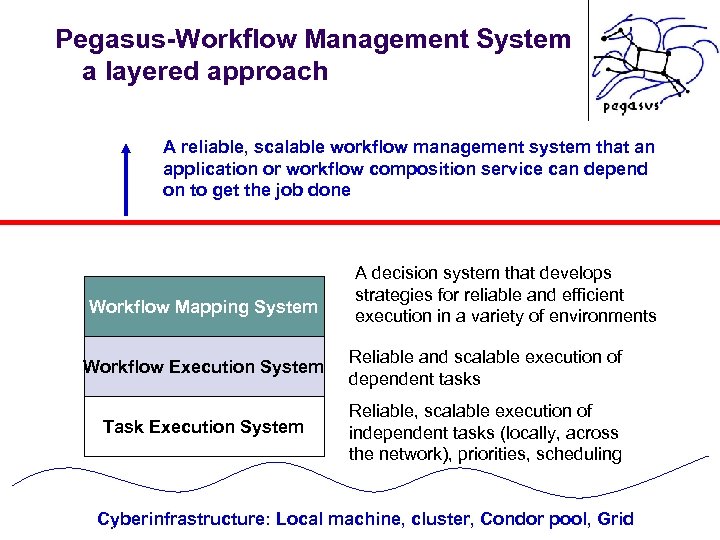

Pegasus Workflow Management System: a layered approach

A reliable, scalable workflow management system that an application or workflow composition service can depend on to get the job done.

- Workflow Mapping System: a decision system that develops strategies for reliable and efficient execution in a variety of environments
- Workflow Execution System: reliable and scalable execution of dependent tasks
- Task Execution System: reliable, scalable execution of independent tasks (locally, across the network), priorities, scheduling
- Cyberinfrastructure: local machine, cluster, Condor pool, Grid

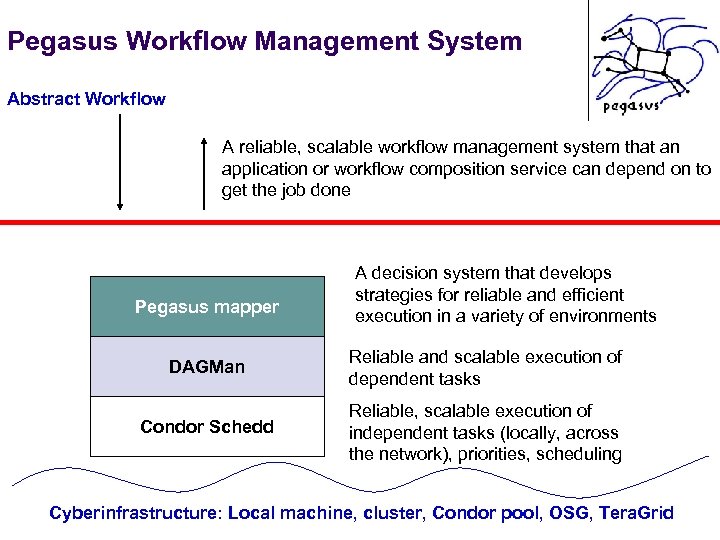

Pegasus Workflow Management System

A reliable, scalable workflow management system that an application or workflow composition service can depend on to get the job done.

- Abstract Workflow
- Pegasus mapper: a decision system that develops strategies for reliable and efficient execution in a variety of environments
- DAGMan: reliable and scalable execution of dependent tasks
- Condor Schedd: reliable, scalable execution of independent tasks (locally, across the network), priorities, scheduling
- Cyberinfrastructure: local machine, cluster, Condor pool, OSG, TeraGrid
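
The hand-off from the Pegasus mapper to DAGMan can be illustrated by rendering an executable workflow as a DAGMan input file. The `JOB`, `PARENT ... CHILD` and `RETRY` keywords belong to DAGMan's DAG-file language; the `write_dag` helper, node names and submit-file names below are hypothetical, and real Pegasus-generated DAGs contain considerably more detail.

```python
def write_dag(jobs: dict, edges: list, retries: int = 2) -> str:
    """Render an executable workflow as DAGMan input-file text.

    jobs:  node name -> Condor submit file for that node
    edges: (parent, child) pairs
    """
    lines = [f"JOB {name} {submit}" for name, submit in jobs.items()]
    lines += [f"RETRY {name} {retries}" for name in jobs]   # per-node retries
    lines += [f"PARENT {p} CHILD {c}" for p, c in edges]    # dependencies
    return "\n".join(lines) + "\n"


# Hypothetical node and submit-file names.
jobs = {
    "stage_in_x": "stage_in_x.sub",
    "compute_F": "compute_F.sub",
    "stage_out_Y": "stage_out_Y.sub",
}
edges = [("stage_in_x", "compute_F"), ("compute_F", "stage_out_Y")]
dag_text = write_dag(jobs, edges)
print(dag_text, end="")
```

Once the referenced Condor submit files exist, such a file is submitted with `condor_submit_dag`; DAGMan then releases each job to the Condor Schedd as soon as its parents have completed.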

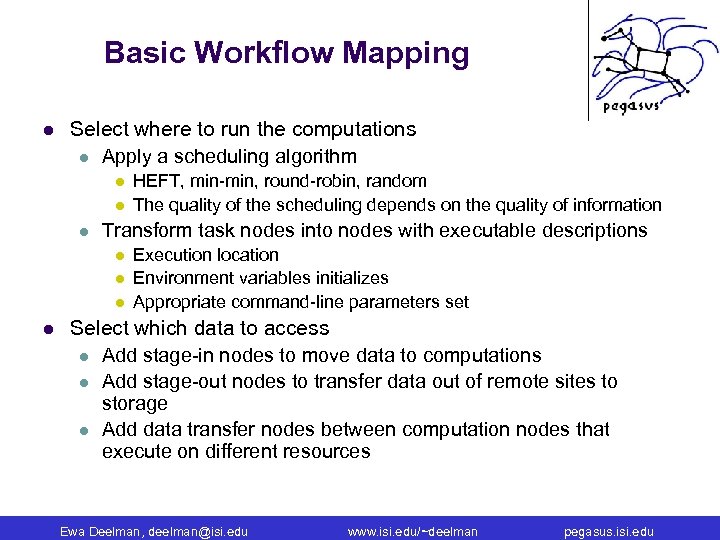

Basic Workflow Mapping

- Select where to run the computations
  - Apply a scheduling algorithm: HEFT, min-min, round-robin, random
  - The quality of the scheduling depends on the quality of information
- Transform task nodes into nodes with executable descriptions
  - Execution location
  - Environment variables initialized
  - Appropriate command-line parameters set
- Select which data to access
  - Add stage-in nodes to move data to computations
  - Add stage-out nodes to transfer data out of remote sites to storage
  - Add data transfer nodes between computation nodes that execute on different resources
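
A minimal sketch of the site-selection and data-movement steps, using round-robin (one of the algorithms listed above) because it needs no performance information. The function name, the tuple format of the added nodes, and the rule that leaf tasks produce the data to stage out are assumptions made for illustration.

```python
from itertools import cycle


def map_workflow(tasks, edges, raw_inputs, sites, storage="storage"):
    """Assign sites round-robin and add data-movement nodes.

    tasks:      task ids in topological order
    edges:      (parent, child) data dependencies
    raw_inputs: task -> input files that must be staged in
    sites:      candidate execution sites
    Returns (site_of, added_nodes).
    """
    next_site = cycle(sites)
    site_of = {t: next(next_site) for t in tasks}   # round-robin scheduling

    added = []
    for t in tasks:                                 # stage-in nodes
        for f in raw_inputs.get(t, []):
            added.append(("stage_in", f, site_of[t]))
    for parent, child in edges:                     # inter-site transfer nodes
        if site_of[parent] != site_of[child]:
            added.append(("transfer", parent, site_of[parent], site_of[child]))
    has_children = {parent for parent, _ in edges}
    for t in tasks:                                 # stage-out of final products
        if t not in has_children:
            added.append(("stage_out", t, site_of[t], storage))
    return site_of, added


site_of, added = map_workflow(
    tasks=["a", "b", "c"],
    edges=[("a", "b"), ("a", "c")],
    raw_inputs={"a": ["x.fits"]},
    sites=["site1", "site2"],
)
```

Swapping in HEFT or min-min would change only how `site_of` is computed, but both need estimates of task runtimes and transfer times, which is why the quality of the schedule depends on the quality of that information.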

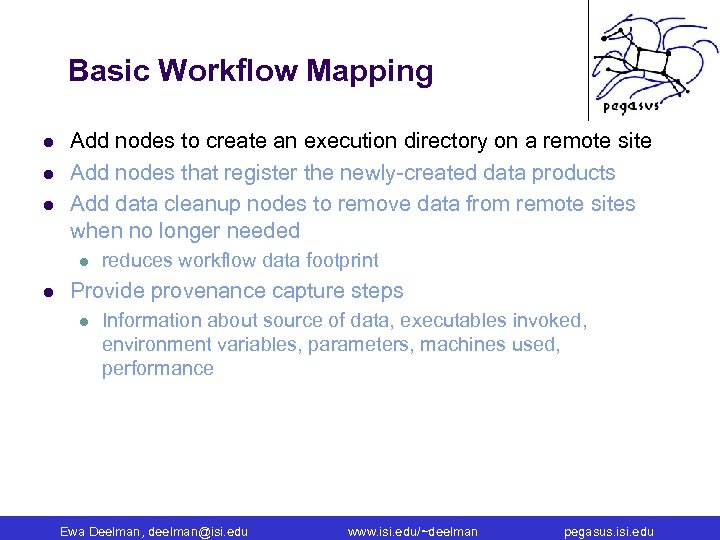

Basic Workflow Mapping

- Add nodes to create an execution directory on a remote site
- Add nodes that register the newly-created data products
- Add data cleanup nodes to remove data from remote sites when no longer needed
  - Reduces workflow data footprint
- Provide provenance capture steps
  - Information about source of data, executables invoked, environment variables, parameters, machines used, performance
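
Cleanup nodes can be placed with a simple rule: a file on a remote site may be deleted once every task that reads or writes it there has finished. The sketch below applies that rule; `add_cleanup_nodes` and all task and file names are hypothetical, and Pegasus's own cleanup algorithm is not reproduced here.

```python
from collections import defaultdict


def add_cleanup_nodes(uses):
    """Place one cleanup node per file on a remote site.

    uses: task -> set of files the task reads or writes on that site
          (stage-out tasks count as users of the files they copy off).
    Returns cleanup node -> set of tasks it must wait for.
    """
    users = defaultdict(set)
    for task, files in uses.items():
        for f in files:
            users[f].add(task)
    # A file may be deleted only after every task that touches it has finished.
    return {f"cleanup_{f}": set(tasks) for f, tasks in users.items()}


# Illustrative task and file names.
uses = {
    "project_1": {"raw1.fits", "proj1.fits"},
    "add": {"proj1.fits", "mosaic.fits"},
    "stage_out_mosaic": {"mosaic.fits"},
}
cleanup = add_cleanup_nodes(uses)
```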

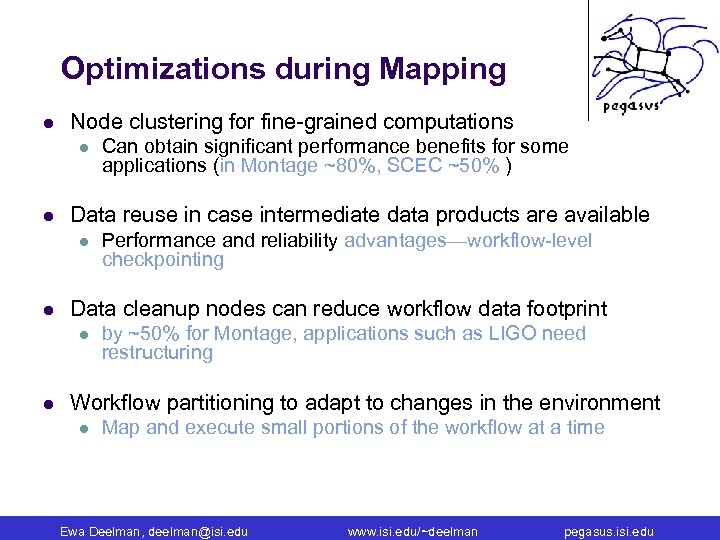

Optimizations during Mapping

- Node clustering for fine-grained computations
  - Can obtain significant performance benefits for some applications (in Montage ~80%, SCEC ~50%)
- Data reuse in case intermediate data products are available
  - Performance and reliability advantages: workflow-level checkpointing
- Data cleanup nodes can reduce workflow data footprint
  - By ~50% for Montage; applications such as LIGO need restructuring
- Workflow partitioning to adapt to changes in the environment
  - Map and execute small portions of the workflow at a time
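
Node clustering for fine-grained computations can be illustrated with horizontal clustering, which groups independent tasks on the same level of the workflow into a single job. This is one common clustering strategy, assumed here for illustration; the function and task names are hypothetical.

```python
from collections import defaultdict


def horizontal_clusters(tasks, edges, size):
    """Group tasks at the same workflow level into clusters of at most `size`.

    tasks must be listed in topological order; edges are (parent, child) pairs.
    """
    parents = defaultdict(list)
    for p, c in edges:
        parents[c].append(p)

    level = {}
    for t in tasks:   # a task's level is one more than its deepest parent
        level[t] = 1 + max((level[p] for p in parents[t]), default=-1)

    by_level = defaultdict(list)
    for t in tasks:
        by_level[level[t]].append(t)

    clusters = []
    for lvl in sorted(by_level):
        group = by_level[lvl]
        for i in range(0, len(group), size):
            clusters.append(group[i:i + size])   # one clustered job per chunk
    return clusters


# Six fine-grained reprojection tasks feeding one co-addition task (illustrative).
tasks = [f"proj{i}" for i in range(6)] + ["add"]
edges = [(f"proj{i}", "add") for i in range(6)]
clusters = horizontal_clusters(tasks, edges, size=3)
```

Seven small jobs become three submitted jobs, so per-job scheduling and queuing overhead is paid three times instead of seven.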

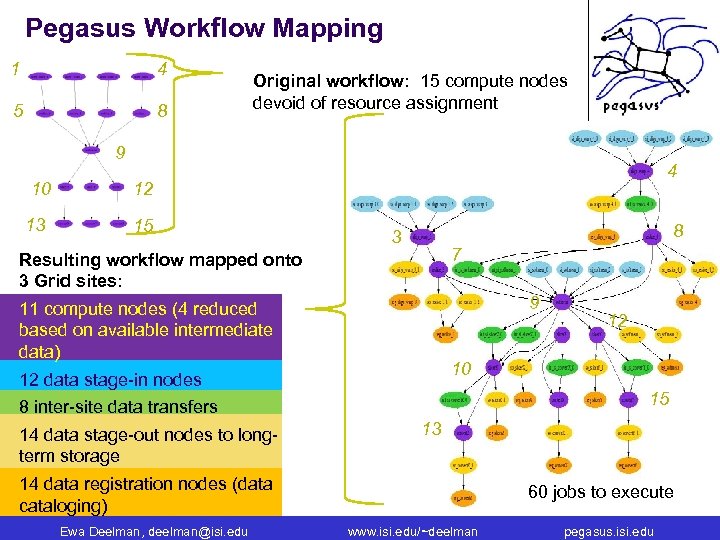

Pegasus Workflow Mapping

- Original workflow: 15 compute nodes devoid of resource assignment
- Resulting workflow mapped onto 3 Grid sites:
  - 11 compute nodes (4 reduced based on available intermediate data)
  - 12 data stage-in nodes
  - 8 inter-site data transfers
  - 14 data stage-out nodes to long-term storage
  - 14 data registration nodes (data cataloging)
- 60 jobs to execute
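
The reduction above (compute nodes removed because intermediate data were already available) can be sketched as a backward walk from the requested outputs: a task is kept only if it produces a file that is still needed and not already available. `reduce_workflow` and the file names are hypothetical; this illustrates the idea, not Pegasus's implementation.

```python
def reduce_workflow(produces, consumes, available, final_outputs):
    """Return the tasks that still have to run, given already-available data.

    produces/consumes: task -> set of logical files written/read
    available:         files already registered (e.g. in a data catalog)
    final_outputs:     files the user asked for
    """
    available = set(available)
    needed = set(final_outputs) - available
    keep = set()
    changed = True
    while changed:                     # walk back from the requested outputs
        changed = False
        for task, outs in produces.items():
            if task not in keep and outs & needed:
                keep.add(task)
                # inputs of a kept task must be produced unless already available
                needed |= consumes.get(task, set()) - available
                changed = True
    return keep


# A three-task chain a -> b -> c (illustrative names).
produces = {"a": {"f1"}, "b": {"f2"}, "c": {"out"}}
consumes = {"b": {"f1"}, "c": {"f2"}}
print(reduce_workflow(produces, consumes, available=set(), final_outputs={"out"}))
print(reduce_workflow(produces, consumes, available={"f2"}, final_outputs={"out"}))
```

When `f2` is already registered, only `c` has to run; `a` and `b` are pruned.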

Reliability Features of Pegasus and DAGMan

- Provides workflow-level checkpointing through data re-use
- Allows for automatic re-tries of
  - task execution
  - overall workflow execution
  - workflow mapping
- Tries alternative data sources for staging data
- Provides a rescue-DAG when all else fails
- Clustering techniques can reduce some of the failures
  - Reduces load on CI services
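
Automatic re-tries and alternative data sources combine naturally in stage-in: each replica is tried a bounded number of times before the next one is used. A sketch, assuming a hypothetical `fetch` callable that raises `OSError` on failure; the `stage_in` function and site names are made up for illustration.

```python
def stage_in(filename, replicas, fetch, max_attempts=2):
    """Fetch `filename`, retrying each replica before moving to the next one."""
    errors = []
    for source in replicas:                         # alternative data sources
        for attempt in range(1, max_attempts + 1):  # automatic re-tries
            try:
                return fetch(source)
            except OSError as exc:
                errors.append(f"{source} (attempt {attempt}): {exc}")
    # All sources exhausted: report failure so the job (and workflow) can react.
    raise RuntimeError(f"could not stage {filename}: {errors}")


calls = []


def flaky_fetch(source):
    """Simulated transfer: siteA is down, siteB works (hypothetical sites)."""
    calls.append(source)
    if source.startswith("siteA"):
        raise OSError("connection refused")
    return f"data from {source}"


data = stage_in("x.fits", ["siteA/x.fits", "siteB/x.fits"], flaky_fetch)
```

If every source fails, the error propagates; in a DAGMan-managed workflow a node that exhausts its retries fails, and DAGMan can then write a rescue DAG so that a later run resumes with only the remaining work.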

OOI Challenges: Real-Time Constraints

- Issues of performance, adaptivity to changing environments, reliability
- Resource provisioning ahead of the execution
  - Currently a semi-automated process used in Southern California Earthquake Center (SCEC) applications
  - Future plans to provide fully automated capabilities
- Possible use of on-demand capabilities
  - Spruce on the TeraGrid
- Performance estimates for workflow executions
  - Needed for efficient resource provisioning (usually difficult to obtain)
- Reliability: adding redundancy to the workflow tasks and the engine
- Adaptivity: can fall out of the resource provisioning/retry process

OOI Challenges: Continuous Operations

- Performance, reliability, multiple users, diverse concerns, tasks repeatable over time
- Support for prioritizing across multiple workflows and users
  - Effort under way
- Fast-tracking of critical workflows
- Periodic, automated invocations of workflows as new data are ingested
  - Performance estimates are needed to pick workflows that would finish by a certain time
  - "Run this workflow every 24 hours"
- Robust workflow management capabilities
  - Support for "launch and forget" (near-term plans)
- Development of debugging tools (beyond looking at logs), part of year 2 SDCI plans
  - Provenance of the mapping/execution process
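
Prioritizing across workflows and users, with fast-tracking of critical workflows, could be modeled as a priority queue. The sketch below uses Python's `heapq`; the `WorkflowQueue` class, its priority scheme (lower number runs first, critical workflows jump to priority 0, ordinary priorities assumed positive) and the workflow names are all hypothetical.

```python
import heapq
import itertools


class WorkflowQueue:
    """Orders workflows from several users by priority (lower runs first)."""

    def __init__(self):
        self._heap = []
        self._order = itertools.count()    # tie-breaker: first come, first served

    def submit(self, name, priority=10, critical=False):
        key = 0 if critical else priority  # critical workflows are fast-tracked
        heapq.heappush(self._heap, (key, next(self._order), name))

    def next_workflow(self):
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)


q = WorkflowQueue()
q.submit("montage_daily", priority=5)
q.submit("ligo_month", priority=10)
q.submit("cybershake_urgent", priority=20, critical=True)
q.submit("gene_alignment", priority=5)
order = [q.next_workflow() for _ in range(len(q))]
```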

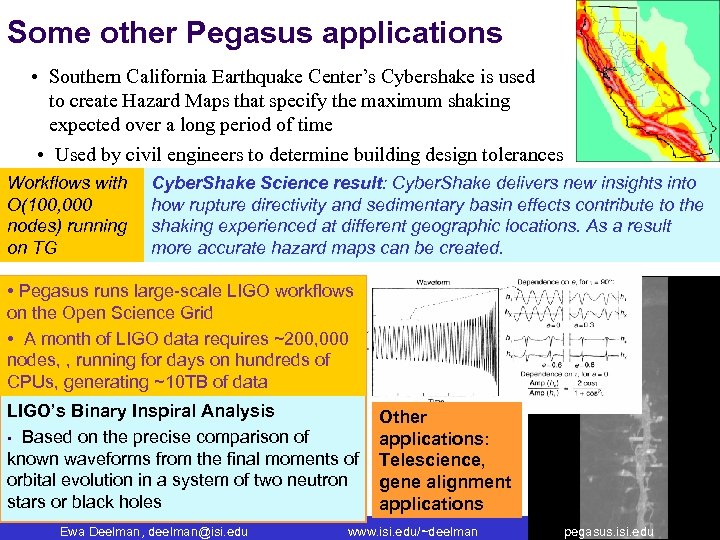

Some other Pegasus applications

- Southern California Earthquake Center's CyberShake is used to create hazard maps that specify the maximum shaking expected over a long period of time
  - Used by civil engineers to determine building design tolerances
  - Workflows with O(100,000) nodes running on the TeraGrid (TG)
  - CyberShake science result: CyberShake delivers new insights into how rupture directivity and sedimentary basin effects contribute to the shaking experienced at different geographic locations. As a result, more accurate hazard maps can be created.
- Pegasus runs large-scale LIGO workflows on the Open Science Grid
  - A month of LIGO data requires ~200,000 nodes, running for days on hundreds of CPUs and generating ~10 TB of data
- LIGO's Binary Inspiral Analysis
  - Based on the precise comparison of known waveforms from the final moments of orbital evolution in a system of two neutron stars or black holes
- Other applications: Telescience, gene alignment applications

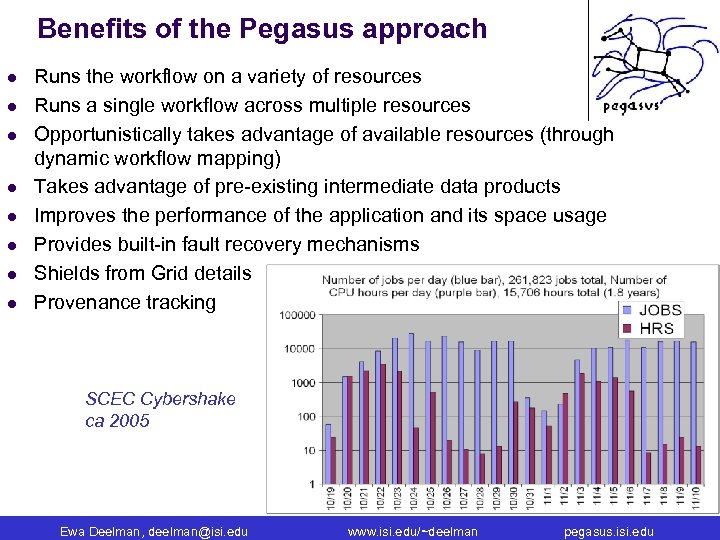

Benefits of the Pegasus approach

- Runs the workflow on a variety of resources
- Runs a single workflow across multiple resources
- Opportunistically takes advantage of available resources (through dynamic workflow mapping)
- Takes advantage of pre-existing intermediate data products
- Improves the performance of the application and its space usage
- Provides built-in fault recovery mechanisms
- Shields the user from Grid details
- Provenance tracking

(Figure: SCEC CyberShake, ca. 2005)
