- Number of slides: 37

The NLP TOOLTORIAL: Tools for Natural Language Processing and Text Mining
Dragomir R. Radev
April 22, 2011

Abuja, Nigeria (CNN) -- Incumbent Goodluck Jonathan is the winner of the presidential election in Nigeria, the chairman of Nigeria's Independent National Electoral Commission declared Monday. "Goodluck E. Jonathan of PDP, having satisfied the requirements of the law and scored the highest number of votes, is hereby declared the winner," Chairman Attahiru Jega said. He said the ruling People's Democratic Party won 22,495,187 of the 39,469,484 votes cast Saturday. That number far outstripped the votes for Muhammadu Buhari, of the Congress for Progressive Change, the main opposition party, which won 12,214,853. To avoid a runoff, Jonathan needed at least a quarter of the vote in two-thirds of the 36 states and the capital. He won that amount in 31 states. Only the PDP signed the results; representatives of the other parties refused to do so. Nigeria's main opposition party, the Congress for Progressive Change, alleged that would-be voters had been intimidated and driven away from polling stations and that ballot boxes were stuffed with votes for Jonathan.

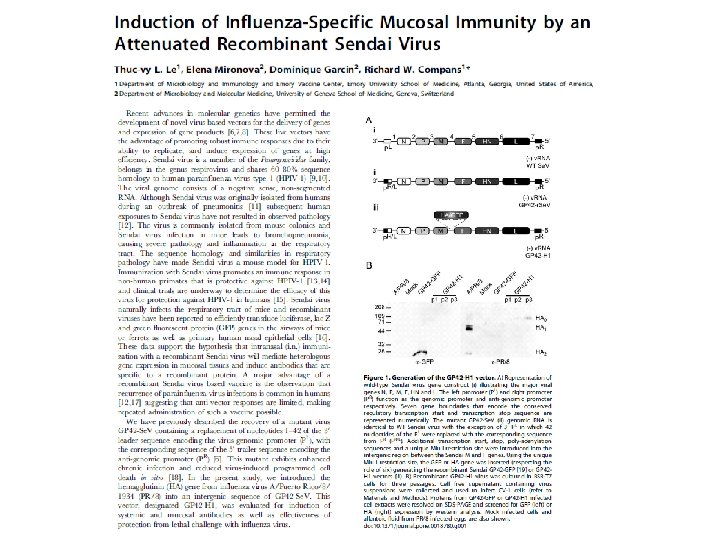

Recent advances in molecular genetics have permitted the development of novel virus-based vectors for the delivery of genes and expression of gene products [6, 7, 8]. These live vectors have the advantage of promoting robust immune responses due to their ability to replicate, and induce expression of genes at high efficiency. Sendai virus is a member of the Paramyxoviridae family, belongs in the genus respirovirus and shares 60–80% sequence homology to human parainfluenza virus type 1 (HPIV-1) [9, 10]. The viral genome consists of a negative sense, non-segmented RNA. Although Sendai virus was originally isolated from humans during an outbreak of pneumonitis [11], subsequent human exposures to Sendai virus have not resulted in observed pathology [12]. The virus is commonly isolated from mouse colonies and Sendai virus infection in mice leads to bronchopneumonia, causing severe pathology and inflammation in the respiratory tract. The sequence homology and similarities in respiratory pathology have made Sendai virus a mouse model for HPIV-1. Immunization with Sendai virus promotes an immune response in non-human primates that is protective against HPIV-1 [13, 14] and clinical trials are underway to determine the efficacy of this virus for protection against HPIV-1 in humans [15]. Sendai virus naturally infects the respiratory tract of mice and recombinant viruses have been reported to efficiently transduce luciferase, lacZ and green fluorescent protein (GFP) genes in the airways of mice or ferrets as well as primary human nasal epithelial cells [16]. These data support the hypothesis that intranasal (i.n.) immunization with a recombinant Sendai virus will mediate heterologous gene expression in mucosal tissues and induce antibodies that are specific to a recombinant protein.
A major advantage of a recombinant Sendai virus based vaccine is the observation that recurrence of parainfluenza virus infections is common in humans [12, 17], suggesting that anti-vector responses are limited, making repeated administration of such a vaccine possible.

Background
• What is NLP?
• Components: tokenization, parsing, semantic analysis, information extraction, machine translation, speech processing, question answering, text summarization, sentiment analysis, relationship extraction, named entity recognition, text classification

Preprocessing
• Text extraction
• Sentence segmentation
• Word normalization
• Stemming
• Part of speech tagging
• Named entity extraction
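
Two of the steps listed here, sentence segmentation and word normalization, can be sketched in a few lines of Python. This is only a rough illustration: the function names `split_sentences` and `normalize` and both regular expressions are assumptions made for this example and are not taken from any tool in this tutorial.

```python
import re


def split_sentences(text):
    """Naive segmentation: break after . ! or ? when the next
    non-space character is an uppercase letter or a double quote."""
    parts = re.split(r'(?<=[.!?])\s+(?=["A-Z])', text)
    return [p.strip() for p in parts if p.strip()]


def normalize(sentence):
    """Lowercase the sentence and keep alphanumeric tokens
    (with an optional apostrophe suffix such as 's)."""
    return re.findall(r"[a-z0-9]+(?:'[a-z]+)?", sentence.lower())


if __name__ == "__main__":
    text = ("He won that amount in 31 states. "
            "Only the PDP signed the results.")
    for sentence in split_sentences(text):
        print(normalize(sentence))
```

A rule this simple also splits after the initial in "Goodluck E. Jonathan", which is the kind of case that trained sentence-boundary detectors are meant to handle.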

Part of speech tagging
Secretariat is expected to race tomorrow

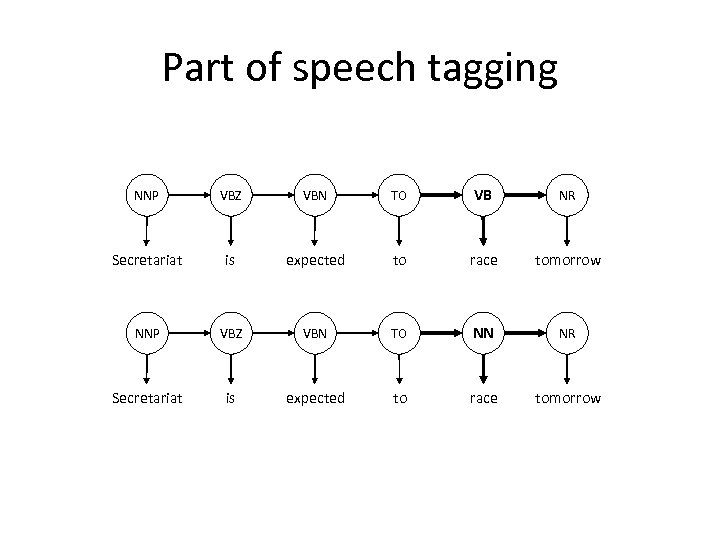

Part of speech tagging
Secretariat/NNP is/VBZ expected/VBN to/TO race/VB tomorrow/NR
Secretariat/NNP is/VBZ expected/VBN to/TO race/NN tomorrow/NR
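
The two taggings differ only in whether "race" is a verb (VB) or a noun (NN). One common way to choose between them is a bigram HMM score: the probability of the tag given the previous tag, times the probability of the word given the tag, times the probability of the next tag given this tag. The sketch below assumes that scoring scheme; the probability values are illustrative numbers chosen for the example, not figures from the slides or from any particular tagger.

```python
# Illustrative probabilities only (assumed for this sketch).
TRANSITION = {
    ("TO", "VB"): 0.83,      # P(VB | TO)
    ("TO", "NN"): 0.00047,   # P(NN | TO)
    ("VB", "NR"): 0.0027,    # P(NR | VB)
    ("NN", "NR"): 0.0012,    # P(NR | NN)
}
EMISSION = {
    ("VB", "race"): 0.00012,  # P(race | VB)
    ("NN", "race"): 0.00057,  # P(race | NN)
}


def score(tag, prev_tag="TO", next_tag="NR", word="race"):
    """Bigram HMM score of assigning `tag` to `word` in this context."""
    return (TRANSITION[(prev_tag, tag)]
            * EMISSION[(tag, word)]
            * TRANSITION[(tag, next_tag)])


best = max(["VB", "NN"], key=score)

if __name__ == "__main__":
    for tag in ("VB", "NN"):
        print(tag, score(tag))
    print("preferred tag for 'race':", best)
```

With these numbers the verb reading wins because "to" is followed by a verb far more often than by a noun, even though "race" on its own is more often a noun.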

Parsing
• Myriam slept.
• Myriam wrote a novel.
• Myriam gave Sally flowers.
• Myriam ate pizza with olives.
• Myriam ate pizza with Sally.
• Myriam ate pizza with a fork.
• Myriam ate pizza with remorse.

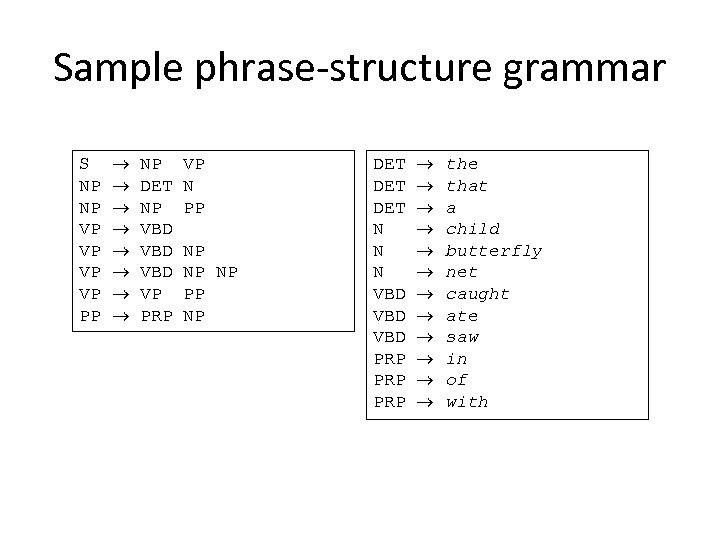

Sample phrase-structure grammar
S → NP VP
NP → DET N
NP → NP PP
VP → VBD NP
VP → VBD NP PP
PP → PRP NP
DET → the | that | a
N → child | butterfly | net
VBD → caught | ate | saw
PRP → in | of | with

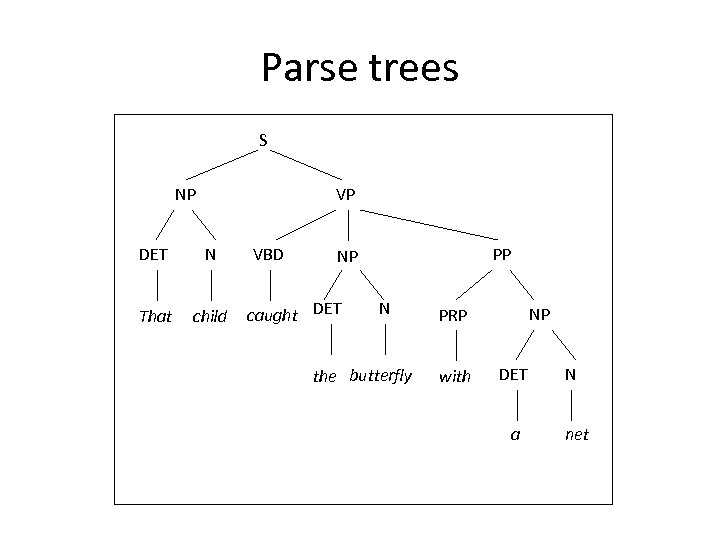

Parse trees
(S (NP (DET That) (N child)) (VP (VBD caught) (NP (DET the) (N butterfly)) (PP (PRP with) (NP (DET a) (N net)))))
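
To make the link between a grammar and its parse trees concrete, here is a small exhaustive parser in Python. It is a sketch under stated assumptions: the rule set in `GRAMMAR` is an assumed reading of the sample grammar (the two VP rules in particular), and the names `GRAMMAR`, `LEXICON`, and `parse` are invented for this example. It enumerates every bracketed tree for a sentence, so a prepositional-phrase attachment ambiguity shows up as more than one result.

```python
from functools import lru_cache

# Assumed rule set for illustration.
GRAMMAR = {
    "S": [("NP", "VP")],
    "NP": [("DET", "N"), ("NP", "PP")],
    "VP": [("VBD", "NP"), ("VBD", "NP", "PP")],
    "PP": [("PRP", "NP")],
}
LEXICON = {
    "DET": {"the", "that", "a"},
    "N": {"child", "butterfly", "net"},
    "VBD": {"caught", "ate", "saw"},
    "PRP": {"in", "of", "with"},
}


def parse(words):
    """Return every bracketed parse of `words` rooted in S."""
    words = tuple(w.lower() for w in words)

    @lru_cache(maxsize=None)
    def trees(sym, i, j):
        # All trees for `sym` covering words[i:j].
        if sym in LEXICON:
            if j - i == 1 and words[i] in LEXICON[sym]:
                return (f"({sym} {words[i]})",)
            return ()
        found = []
        for rhs in GRAMMAR.get(sym, ()):
            for kids in expand(rhs, i, j):
                found.append(f"({sym} {' '.join(kids)})")
        return tuple(found)

    def expand(rhs, i, j):
        # Yield tuples of child trees that cover words[i:j] with `rhs`.
        if len(rhs) == 1:
            for tree in trees(rhs[0], i, j):
                yield (tree,)
            return
        # Each remaining symbol must cover at least one word.
        for k in range(i + 1, j - len(rhs) + 2):
            for left in trees(rhs[0], i, k):
                for rest in expand(rhs[1:], k, j):
                    yield (left,) + rest

    return list(trees("S", 0, len(words)))


if __name__ == "__main__":
    for tree in parse("That child caught the butterfly with a net".split()):
        print(tree)
```

Under these assumed rules the sentence gets two trees: one where "with a net" attaches to the verb phrase and one where it modifies "the butterfly".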

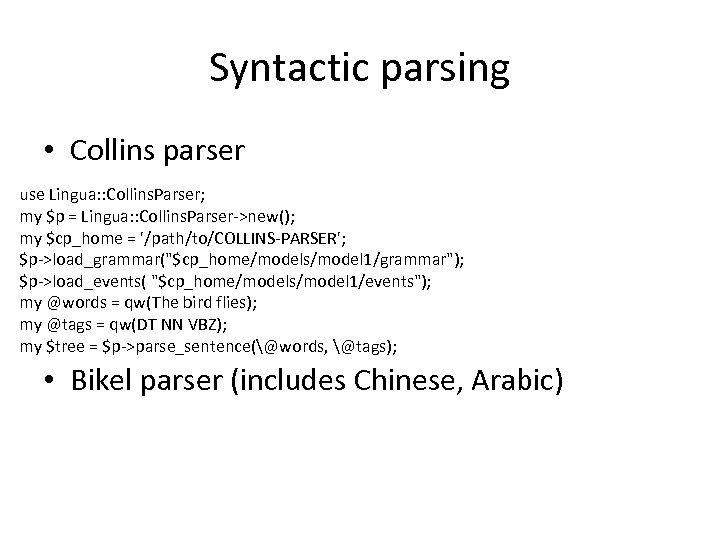

Syntactic parsing
• Collins parser

    use Lingua::CollinsParser;
    my $p = Lingua::CollinsParser->new();

    # Point this at the local Collins parser installation.
    my $cp_home = '/path/to/COLLINS-PARSER';
    $p->load_grammar("$cp_home/models/model1/grammar");
    $p->load_events("$cp_home/models/model1/events");

    # Words and their part-of-speech tags are passed as array references.
    my @words = qw(The bird flies);
    my @tags  = qw(DT NN VBZ);
    my $tree  = $p->parse_sentence(\@words, \@tags);

• Bikel parser (includes Chinese, Arabic)

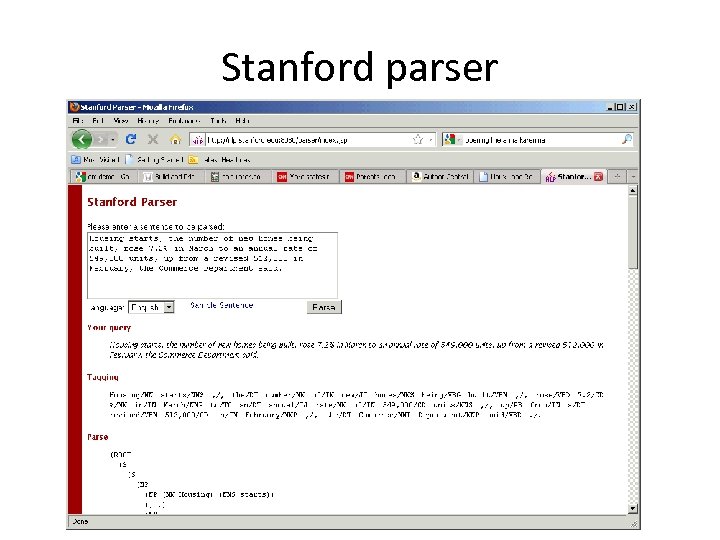

Stanford parser

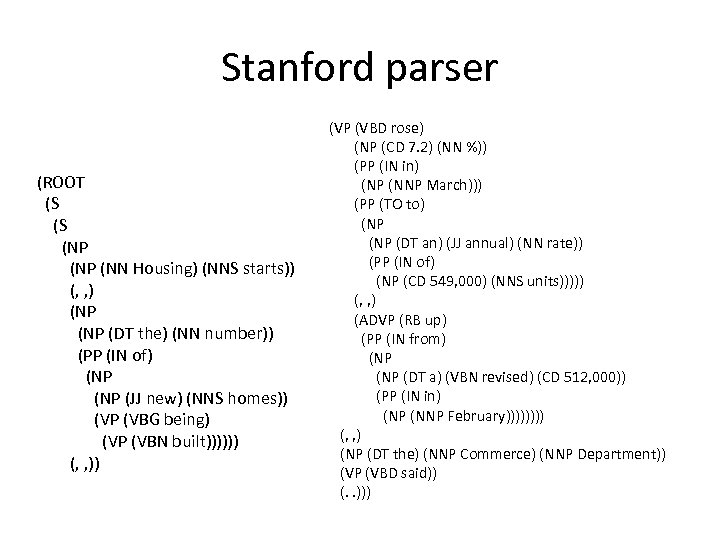

Stanford parser
(ROOT (S (S (NP (NN Housing) (NNS starts)) (, ,) (NP (DT the) (NN number)) (PP (IN of) (NP (JJ new) (NNS homes)) (VP (VBG being) (VP (VBN built)))))) (, ,)) (VP (VBD rose) (NP (CD 7.2) (NN %)) (PP (IN in) (NP (NNP March))) (PP (TO to) (NP (DT an) (JJ annual) (NN rate)) (PP (IN of) (NP (CD 549,000) (NNS units))))) (, ,) (ADVP (RB up) (PP (IN from) (NP (DT a) (VBN revised) (CD 512,000)) (PP (IN in) (NP (NNP February)))) (, ,) (NP (DT the) (NNP Commerce) (NNP Department)) (VP (VBD said)) (. .)))

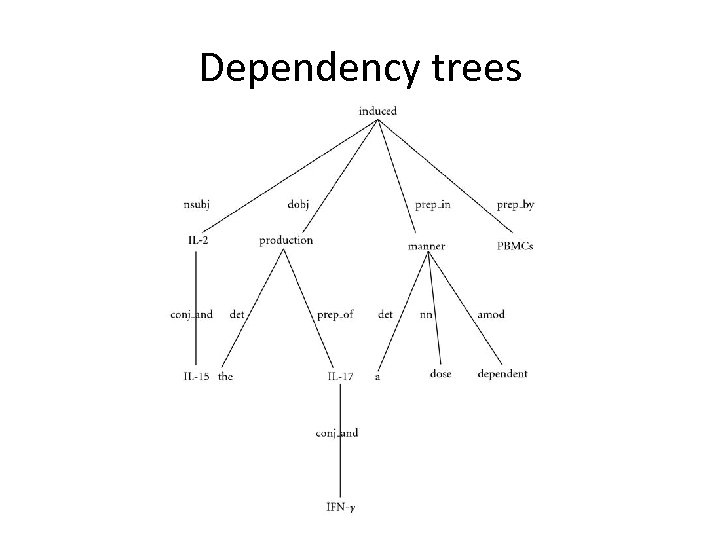

Dependency trees

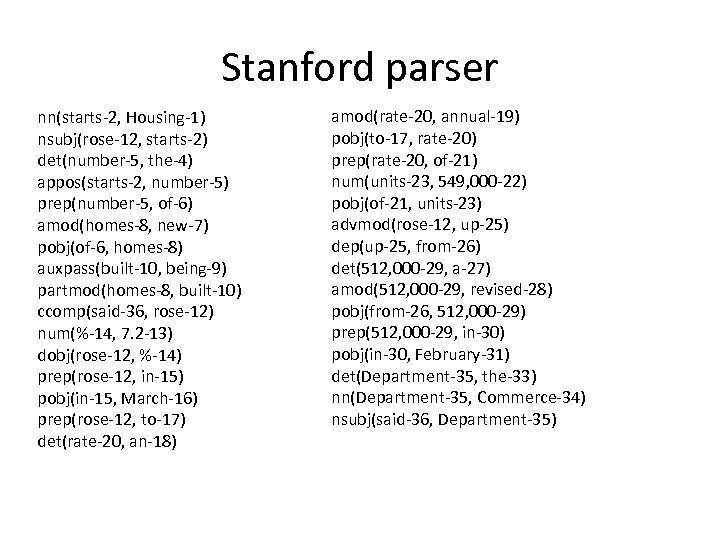

Stanford parser
nn(starts-2, Housing-1)
nsubj(rose-12, starts-2)
det(number-5, the-4)
appos(starts-2, number-5)
prep(number-5, of-6)
amod(homes-8, new-7)
pobj(of-6, homes-8)
auxpass(built-10, being-9)
partmod(homes-8, built-10)
ccomp(said-36, rose-12)
num(%-14, 7.2-13)
dobj(rose-12, %-14)
prep(rose-12, in-15)
pobj(in-15, March-16)
prep(rose-12, to-17)
det(rate-20, an-18)
amod(rate-20, annual-19)
pobj(to-17, rate-20)
prep(rate-20, of-21)
num(units-23, 549,000-22)
pobj(of-21, units-23)
advmod(rose-12, up-25)
dep(up-25, from-26)
det(512,000-29, a-27)
amod(512,000-29, revised-28)
pobj(from-26, 512,000-29)
prep(512,000-29, in-30)
pobj(in-30, February-31)
det(Department-35, the-33)
nn(Department-35, Commerce-34)
nsubj(said-36, Department-35)
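
Typed dependencies in this `relation(governor-index, dependent-index)` notation are easy to post-process. The Python sketch below reads them into tuples and looks for the root, meaning the word that governs others but is never itself a dependent. The regular expression and the function names `read_dependencies` and `find_roots` are assumptions for this example; they are not part of the Stanford parser, and the pattern expects tokens without internal spaces (for example `7.2-13`).

```python
import re

# relation(governor-index, dependent-index)
DEP_PATTERN = re.compile(r"(\w+)\((\S+?)-(\d+), (\S+?)-(\d+)\)")


def read_dependencies(text):
    """Return (relation, governor, gov_index, dependent, dep_index) tuples."""
    return [(rel, gov, int(gi), dep, int(di))
            for rel, gov, gi, dep, di in DEP_PATTERN.findall(text)]


def find_roots(deps):
    """Words that govern something but never appear as a dependent."""
    dependents = {(dep, di) for _, _, _, dep, di in deps}
    governors = {(gov, gi) for _, gov, gi, _, _ in deps}
    return sorted(governors - dependents, key=lambda item: item[1])


sample = ("nsubj(rose-12, starts-2) ccomp(said-36, rose-12) "
          "nsubj(said-36, Department-35) num(%-14, 7.2-13) "
          "dobj(rose-12, %-14)")

if __name__ == "__main__":
    deps = read_dependencies(sample)
    for dep in deps:
        print(dep)
    print("root:", find_roots(deps))
```

On this subset of the relations the only root is "said", which matches the reading that the Commerce Department said that housing starts rose.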

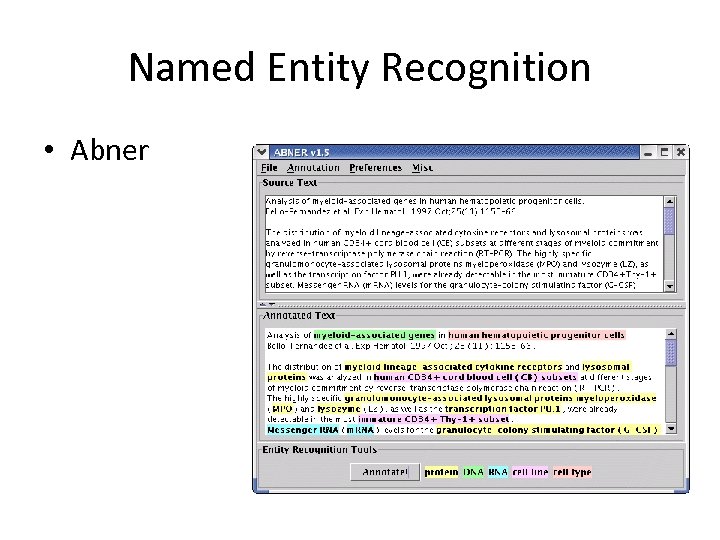

Named Entity Recognition • Abner

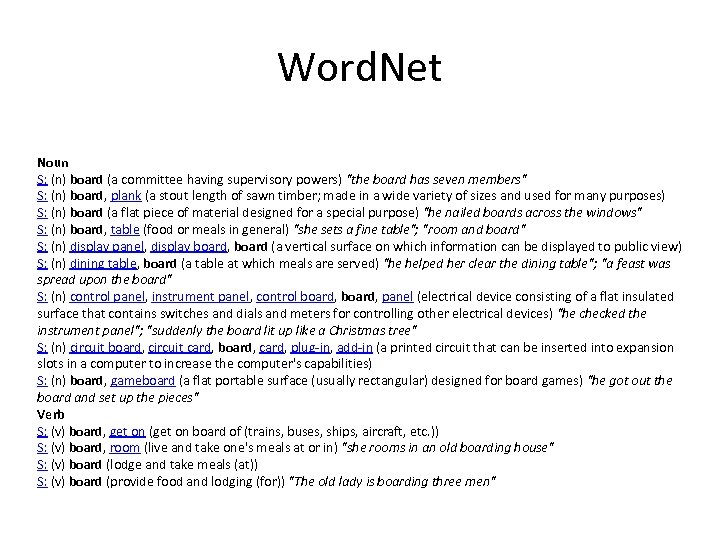

WordNet
Noun
• S: (n) board (a committee having supervisory powers) "the board has seven members"
• S: (n) board, plank (a stout length of sawn timber; made in a wide variety of sizes and used for many purposes)
• S: (n) board (a flat piece of material designed for a special purpose) "he nailed boards across the windows"
• S: (n) board, table (food or meals in general) "she sets a fine table"; "room and board"
• S: (n) display panel, display board, board (a vertical surface on which information can be displayed to public view)
• S: (n) dining table, board (a table at which meals are served) "he helped her clear the dining table"; "a feast was spread upon the board"
• S: (n) control panel, instrument panel, control board, panel (electrical device consisting of a flat insulated surface that contains switches and dials and meters for controlling other electrical devices) "he checked the instrument panel"; "suddenly the board lit up like a Christmas tree"
• S: (n) circuit board, circuit card, board, card, plug-in, add-in (a printed circuit that can be inserted into expansion slots in a computer to increase the computer's capabilities)
• S: (n) board, gameboard (a flat portable surface (usually rectangular) designed for board games) "he got out the board and set up the pieces"
Verb
• S: (v) board, get on (get on board of (trains, buses, ships, aircraft, etc.))
• S: (v) board, room (live and take one's meals at or in) "she rooms in an old boarding house"
• S: (v) board (lodge and take meals (at))
• S: (v) board (provide food and lodging (for)) "The old lady is boarding three men"

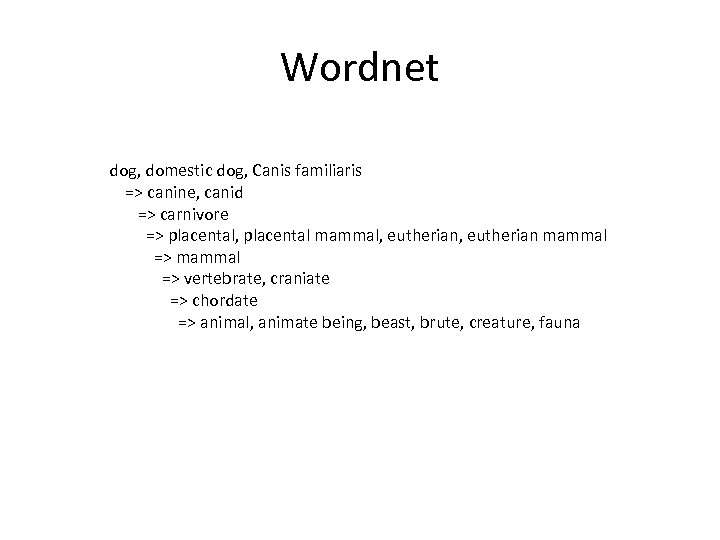

WordNet
dog, domestic dog, Canis familiaris
  => canine, canid
    => carnivore
      => placental, placental mammal, eutherian mammal
        => vertebrate, craniate
          => chordate
            => animal, animate being, beast, brute, creature, fauna

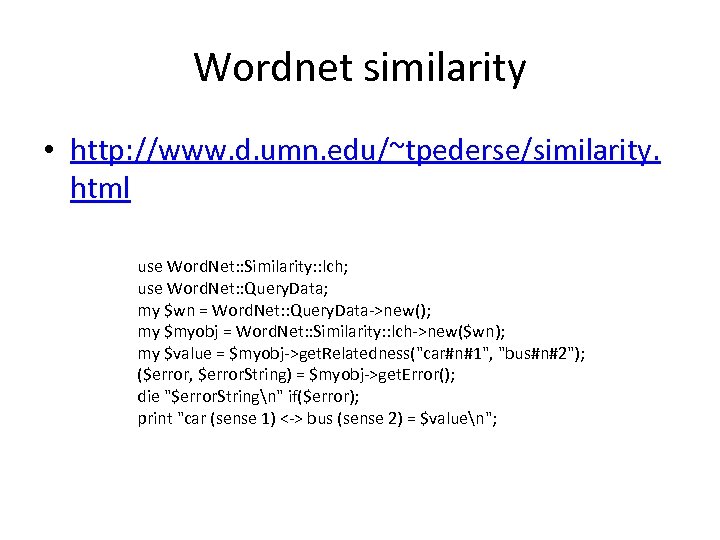

WordNet similarity
• http://www.d.umn.edu/~tpederse/similarity.html

    use WordNet::Similarity::lch;
    use WordNet::QueryData;

    my $wn = WordNet::QueryData->new();
    my $myobj = WordNet::Similarity::lch->new($wn);

    # Relatedness between sense 1 of the noun "car" and sense 2 of the noun "bus".
    my $value = $myobj->getRelatedness("car#n#1", "bus#n#2");

    # Stop with the module's message if it reported an error.
    my ($error, $errorString) = $myobj->getError();
    die "$errorString\n" if ($error);

    print "car (sense 1) <-> bus (sense 2) = $value\n";
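
The `lch` in the module name refers to the Leacock-Chodorow measure, which is commonly defined as -log(p / 2D), where p is the number of nodes on the shortest path between two concepts and D is the maximum depth of the taxonomy. The Python sketch below applies that formula to a toy taxonomy made of the dog hypernym chain from the WordNet slide. The dictionary `HYPERNYM` and the function names are assumptions for this example; real WordNet values will differ because the full hierarchy is much larger.

```python
import math

# Toy taxonomy: each concept maps to its hypernym (parent).
HYPERNYM = {
    "dog": "canine",
    "canine": "carnivore",
    "carnivore": "placental",
    "placental": "vertebrate",
    "vertebrate": "chordate",
    "chordate": "animal",
}


def path_to_root(concept):
    """List of concepts from `concept` up to the top of the taxonomy."""
    path = [concept]
    while path[-1] in HYPERNYM:
        path.append(HYPERNYM[path[-1]])
    return path


def lch_similarity(a, b):
    """Leacock-Chodorow similarity: -log(path_nodes / (2 * max_depth))."""
    path_a, path_b = path_to_root(a), path_to_root(b)
    # Lowest common subsumer: first ancestor of `a` that is also above `b`.
    common = next(c for c in path_a if c in path_b)
    path_nodes = path_a.index(common) + path_b.index(common) + 1
    concepts = set(HYPERNYM) | set(HYPERNYM.values())
    max_depth = max(len(path_to_root(c)) for c in concepts)
    return -math.log(path_nodes / (2 * max_depth))


if __name__ == "__main__":
    print(lch_similarity("dog", "carnivore"))
    print(lch_similarity("dog", "animal"))
```

Concepts that sit closer together in the hierarchy get a higher score, so "dog" is more similar to "carnivore" than to "animal" in this toy example.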

CMU-CAM LM toolkit
• Language modeling
• Extracting n-grams
• Smoothing
• Computing perplexities
• http://www.speech.cs.cmu.edu/SLM/toolkit_documentation.html
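
The three operations named here (n-gram extraction, smoothing, perplexity) fit in a short Python sketch. It assumes a bigram model with add-one (Laplace) smoothing, which is only one of many smoothing schemes and is not claimed to be what the toolkit uses by default. All function names are invented for this example.

```python
import math
from collections import Counter


def train_bigram(tokens):
    """Count bigrams and their left contexts; return a simple model tuple."""
    contexts = Counter(tokens[:-1])
    bigrams = Counter(zip(tokens, tokens[1:]))
    vocab = set(tokens)
    return contexts, bigrams, vocab


def prob(prev_word, word, model):
    """Add-one smoothed estimate of P(word | prev_word)."""
    contexts, bigrams, vocab = model
    return (bigrams[(prev_word, word)] + 1) / (contexts[prev_word] + len(vocab))


def perplexity(tokens, model):
    """Perplexity of a token sequence under the bigram model."""
    pairs = list(zip(tokens, tokens[1:]))
    log_sum = sum(math.log2(prob(a, b, model)) for a, b in pairs)
    return 2 ** (-log_sum / len(pairs))


if __name__ == "__main__":
    train = "myriam ate pizza with olives myriam ate pizza with sally".split()
    model = train_bigram(train)
    print(prob("ate", "pizza", model))
    print(perplexity("myriam ate pizza with sally".split(), model))
```

Lower perplexity means the model finds the test sequence less surprising; unseen bigrams still get a small non-zero probability because of the smoothing.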

Machine translation • Moses

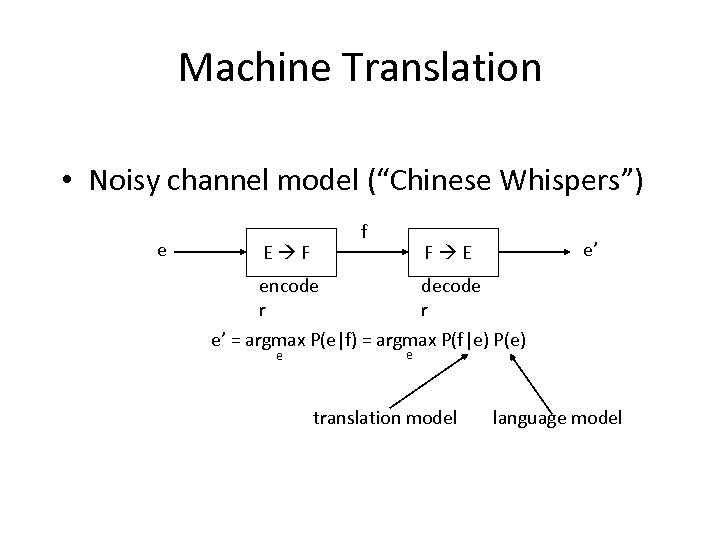

Machine Translation
• Noisy channel model (“Chinese Whispers”)
e → [E → F encoder] → f → [F → E decoder] → e’
$$e' = \arg\max_e P(e \mid f) = \arg\max_e P(f \mid e)\, P(e)$$
P(f | e): translation model
P(e): language model
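
Decoding under the noisy channel model means picking the candidate e that maximizes P(f|e) P(e). The Python sketch below does this over two hand-written English candidates for one French sentence. The probability values are made up for illustration and the names `CANDIDATES` and `decode` are assumptions; a real decoder such as Moses searches a very large space of candidates rather than a fixed list.

```python
import math

# Hypothetical scores for two English candidates:
# "tm" stands for P(f | e), "lm" stands for P(e).
CANDIDATES = {
    "is this your favorite play ?": {"tm": 0.020, "lm": 0.0100},
    "this is your play favorite ?": {"tm": 0.025, "lm": 0.0001},
}


def decode(candidates):
    """Return the candidate maximizing log P(f|e) + log P(e)."""
    return max(
        candidates,
        key=lambda e: math.log(candidates[e]["tm"]) + math.log(candidates[e]["lm"]),
    )


if __name__ == "__main__":
    print(decode(CANDIDATES))
```

The word-for-word candidate has a slightly higher translation-model score here, but the language model penalizes its unnatural word order, so the fluent candidate wins.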

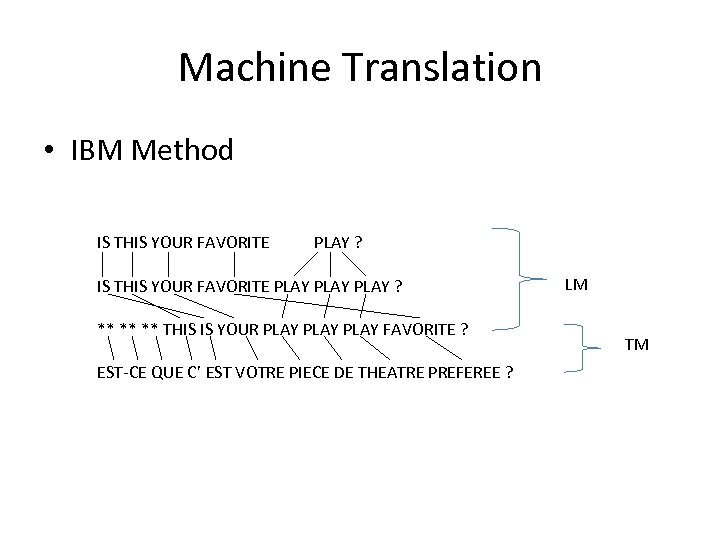

Machine Translation
• IBM Method
IS THIS YOUR FAVORITE PLAY ?
IS THIS YOUR FAVORITE PLAY ?
** ** **
THIS IS YOUR PLAY FAVORITE ?
EST-CE QUE C’EST VOTRE PIECE DE THEATRE PREFEREE ?
LM TM

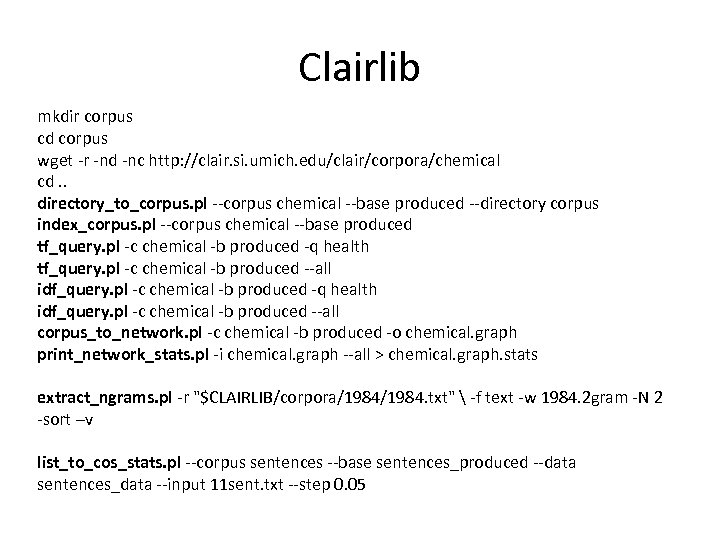

Clairlib

    mkdir corpus
    cd corpus
    wget -r -nd -nc http://clair.si.umich.edu/clair/corpora/chemical
    cd ..
    directory_to_corpus.pl --corpus chemical --base produced --directory corpus
    index_corpus.pl --corpus chemical --base produced
    tf_query.pl -c chemical -b produced -q health
    tf_query.pl -c chemical -b produced --all
    idf_query.pl -c chemical -b produced -q health
    idf_query.pl -c chemical -b produced --all
    corpus_to_network.pl -c chemical -b produced -o chemical.graph
    print_network_stats.pl -i chemical.graph --all > chemical.graph.stats
    extract_ngrams.pl -r "$CLAIRLIB/corpora/1984.txt" -f text -w 1984.2gram -N 2 -sort -v
    list_to_cos_stats.pl --corpus sentences --base sentences_produced --data sentences_data --input 11sent.txt --step 0.05
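
The `tf_query.pl` and `idf_query.pl` commands report term frequency and inverse document frequency. As a rough picture of what those quantities are, the Python sketch below computes them for a three-document toy corpus. It assumes whitespace tokenization and the textbook definition idf = log(N / df); Clairlib's exact tokenization and formula may differ, and the function names and sample documents are invented for this example.

```python
import math
from collections import Counter


def term_frequencies(docs):
    """One Counter of lowercase token counts per document."""
    return [Counter(doc.lower().split()) for doc in docs]


def inverse_document_frequency(term, docs):
    """log(N / df), where df is the number of documents containing `term`."""
    df = sum(1 for tf in term_frequencies(docs) if term in tf)
    return math.log(len(docs) / df) if df else 0.0


if __name__ == "__main__":
    docs = [
        "health risks of chemical exposure",
        "chemical plant safety",
        "public health policy",
    ]
    print(term_frequencies(docs)[0]["health"])
    print(inverse_document_frequency("health", docs))
    print(inverse_document_frequency("plant", docs))
```

A term that appears in fewer documents gets a higher idf, which is why "plant" outweighs "health" in this toy corpus.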

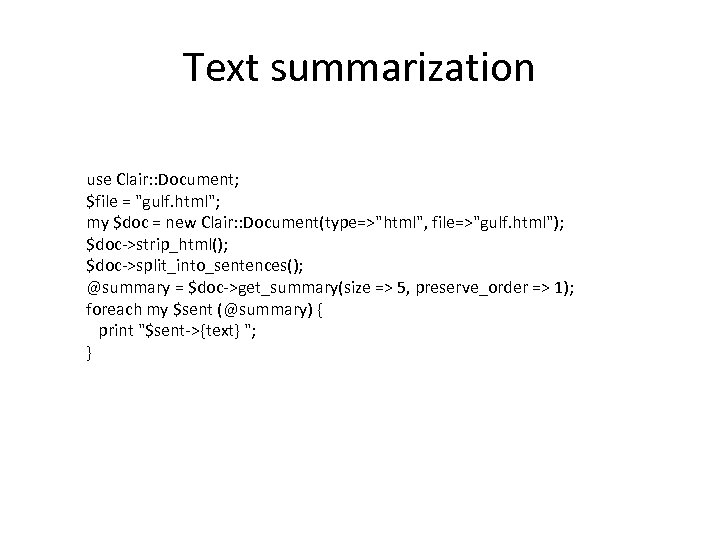

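Text summarization

    use Clair::Document;

    # Load the HTML file as a Clair document.
    my $file = "gulf.html";
    my $doc = new Clair::Document(type => "html", file => $file);

    # Remove markup, then split the text into sentences.
    $doc->strip_html();
    $doc->split_into_sentences();

    # Ask for a five-sentence summary, kept in document order.
    my @summary = $doc->get_summary(size => 5, preserve_order => 1);
    foreach my $sent (@summary) {
        print "$sent->{text} ";
    }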
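
For readers who want to see what an extractive summarizer does internally, here is a minimal Python sketch. It scores each sentence by the average corpus frequency of its words and returns the top `size` sentences, optionally in their original order. This is a generic frequency heuristic chosen for illustration; it does not reproduce whatever scoring `Clair::Document` applies, and the function name `summarize` is an assumption.

```python
import re
from collections import Counter


def summarize(text, size=2, preserve_order=True):
    """Pick the `size` sentences whose words are most frequent in `text`."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    tokenized = [re.findall(r"[a-z]+", s.lower()) for s in sentences]
    freq = Counter(word for words in tokenized for word in words)

    def score(index):
        words = tokenized[index]
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    chosen = sorted(range(len(sentences)), key=score, reverse=True)[:size]
    if preserve_order:
        chosen.sort()
    return [sentences[i] for i in chosen]


if __name__ == "__main__":
    text = "Myriam ate pizza. Myriam ate pizza with olives. Sally slept."
    print(summarize(text, size=2))
```

Averaging by sentence length keeps long sentences from winning just because they contain more words.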

Latent Semantic Indexing (LSI/LSA)
• SenseClusters (Ted Pedersen)

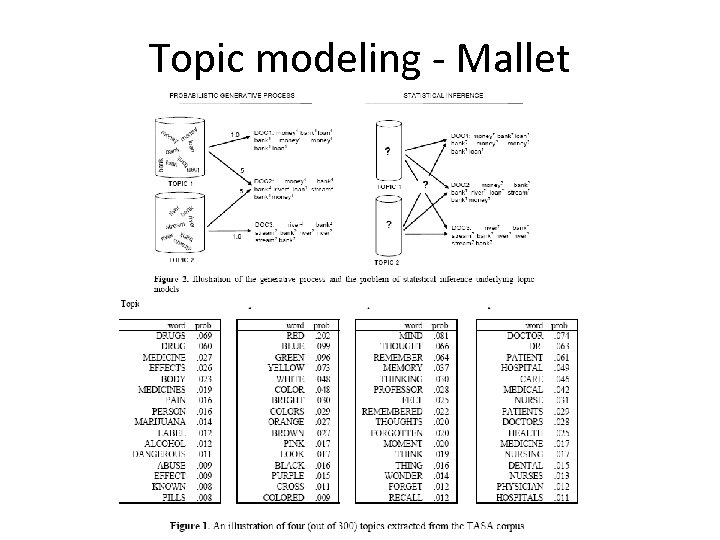

Topic modeling - Mallet

Machine learning
• Clustering and classification
• Weka
  – decision trees, naïve Bayes, k-means, EM, etc.
• For text classification (e.g., spam recognition)
  – svmlight
• Feature extraction
  – using pointwise mutual information, chi-square…
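
Pointwise mutual information, one of the feature-selection scores mentioned here, compares how often a word and a class occur together with how often they would co-occur if they were independent: PMI(x, y) = log2( P(x, y) / (P(x) P(y)) ). The Python sketch below computes it from raw counts. The function name `pmi` and the spam counts in the usage example are made up for illustration.

```python
import math


def pmi(joint_count, x_count, y_count, total):
    """Pointwise mutual information (in bits) from co-occurrence counts.

    joint_count: items where x and y occur together
    x_count, y_count: items containing x, items containing y
    total: number of items overall
    """
    return math.log2((joint_count * total) / (x_count * y_count))


if __name__ == "__main__":
    # Hypothetical corpus: 100 messages, 50 spam, "free" in 40, both in 30.
    print(pmi(joint_count=30, x_count=40, y_count=50, total=100))
```

A positive score means the word is seen with the class more often than chance would predict, which makes it a candidate feature for a classifier; a score of zero means no association.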

Main URLs
• Collins parser:
  – http://people.csail.mit.edu/mcollins/code.html
• Bikel parser:
  – http://www.cis.upenn.edu/~dbikel/software.html
• Moses
  – http://www.statmt.org/
• Clairlib/mead
  – http://www.clairlib.org
• NLTK
  – http://www.nltk.org/
• Abner
  – http://pages.cs.wisc.edu/~bsettles/abner/
• JavaRAP
  – http://aye.comp.nus.edu.sg/~qiu/NLPTools/JavaRAP.html

Main URLs
• Mallet
  – http://mallet.cs.umass.edu
• Svmlight
  – http://svmlight.joachims.org
• Weka
  – http://www.cs.waikato.ac.nz/ml/weka

Additional tools
• Language identification:
  – http://www.let.rug.nl/~vannoord/TextCat/
• Porter stemmer:
  – http://tartarus.org/~martin/PorterStemmer/
• MXTerminator:
  – ftp://ftp.cis.upenn.edu/pub/adwait/jmx/
• PDFBOX:
  – http://pdfbox.apache.org/
• Coreference:
  – http://www.bart-coref.org/
• Stanford NER
  – http://nlp.stanford.edu/software/CRF-NER.shtml
• Citation parsing
  – http://wing.comp.nus.edu.sg/parsCit/
• Lingpipe
  – http://alias-i.com/lingpipe/

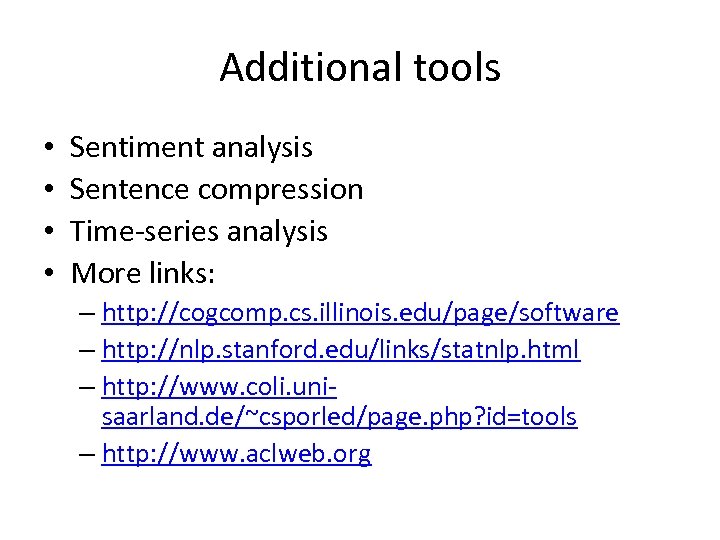

Additional tools
• Sentiment analysis
• Sentence compression
• Time-series analysis
• More links:
  – http://cogcomp.cs.illinois.edu/page/software
  – http://nlp.stanford.edu/links/statnlp.html
  – http://www.coli.uni-saarland.de/~csporled/page.php?id=tools
  – http://www.aclweb.org
