- Number of slides: 22

The Integration of Lexical Knowledge and External Resources for QA
Hui YANG, Tat-Seng Chua
{yangh, chuats}@comp.nus.edu.sg
Pris, School of Computing
National University of Singapore
21/11/2002

Presentation Outline

- Introduction
- Pris QA System Design
- Result and Analysis
- Conclusion
- Future Work

Open Domain QA

- Find answers to open-domain natural language questions by searching a large collection of documents
- Question Processing
  - May involve question re-formulation
  - To find answer type
- Query Expansion
  - To overcome concept mismatch between query & info base
- Search for Candidate Answers
  - Documents, paragraphs, or sentences
- Disambiguation
  - Ranking (or re-ranking) of answers
  - Location of exact answers

Current Research Trends

- Web-based QA
  - Web redundancy
  - Probabilistic algorithm
- Linguistic-based QA
  - part-of-speech tagging
  - syntactic parsing
  - semantic relations
  - named entity extraction
  - dictionaries
  - WordNet, etc.

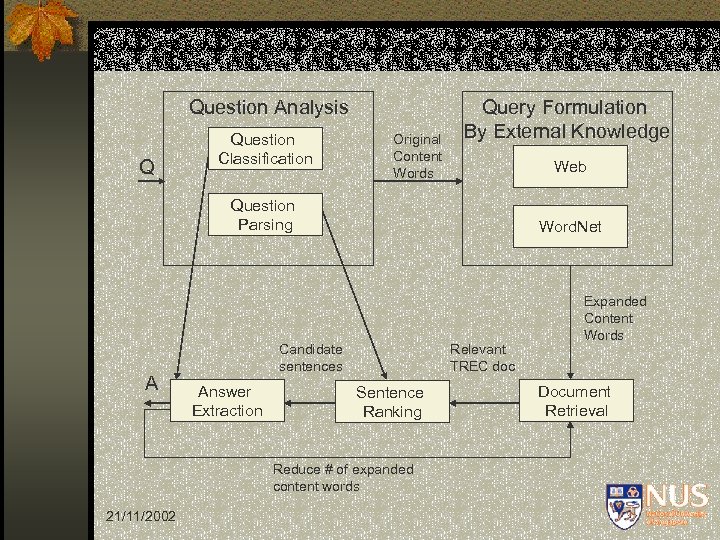

System Overview

- Question Classification
- Question Parsing
- Query Formulation
- Document Retrieval
- Candidate Sentence Retrieval
- Answer Extraction

[Figure: system architecture diagram. Components: Question Analysis (Question Classification, Question Parsing), Query Formulation by External Knowledge (Web, WordNet), Document Retrieval, Sentence Ranking, Answer Extraction. Data labels: Q, original content words, expanded content words, relevant TREC doc, candidate sentences, A. Feedback label: reduce # of expanded content words.]

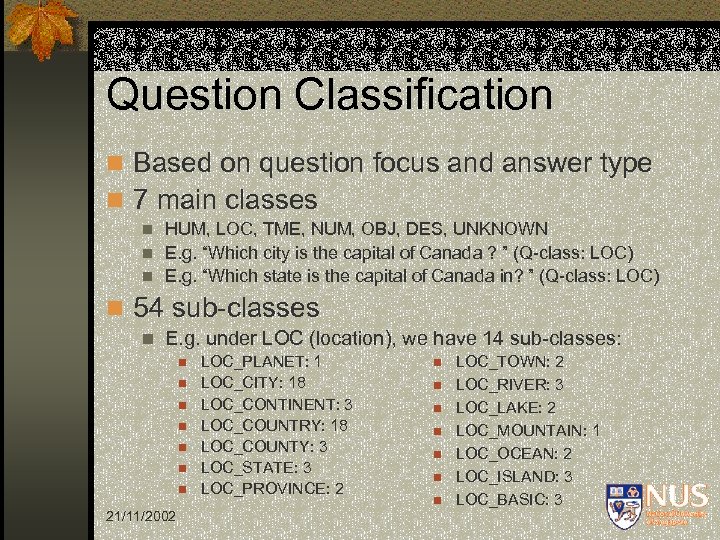

Question Classification

- Based on question focus and answer type
- 7 main classes: HUM, LOC, TME, NUM, OBJ, DES, UNKNOWN
  - E.g. "Which city is the capital of Canada?" (Q-class: LOC)
  - E.g. "Which state is the capital of Canada in?" (Q-class: LOC)
- 54 sub-classes
  - E.g. under LOC (location), we have 14 sub-classes:
    - LOC_PLANET: 1
    - LOC_CITY: 18
    - LOC_CONTINENT: 3
    - LOC_COUNTRY: 18
    - LOC_COUNTY: 3
    - LOC_STATE: 3
    - LOC_PROVINCE: 2
    - LOC_TOWN: 2
    - LOC_RIVER: 3
    - LOC_LAKE: 2
    - LOC_MOUNTAIN: 1
    - LOC_OCEAN: 2
    - LOC_ISLAND: 3
    - LOC_BASIC: 3

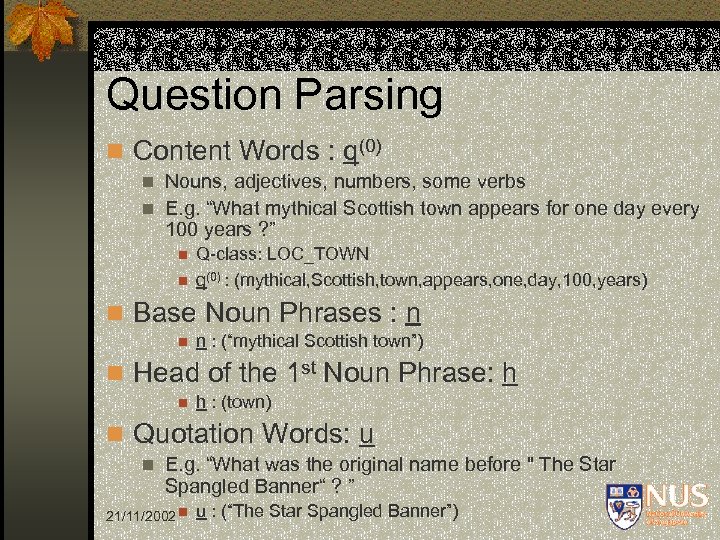

Question Parsing

- Content Words: $q^{(0)}$
  - Nouns, adjectives, numbers, some verbs
  - E.g. "What mythical Scottish town appears for one day every 100 years?"
    - Q-class: LOC_TOWN
    - $q^{(0)}$: (mythical, Scottish, town, appears, one, day, 100, years)
- Base Noun Phrases: $n$
  - $n$: ("mythical Scottish town")
- Head of the 1st Noun Phrase: $h$
  - $h$: (town)
- Quotation Words: $u$
  - E.g. "What was the original name before "The Star Spangled Banner"?"
  - $u$: ("The Star Spangled Banner")
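
A minimal sketch of the parsing step, under stated assumptions: the slides do not say how content words are selected beyond "nouns, adjectives, numbers, some verbs", so a small hand-written stopword list stands in for a part-of-speech tagger. The names `STOPWORDS` and `parse_question` are hypothetical.

```python
import re

# Hypothetical, deliberately tiny stopword list. It replaces the POS-based
# selection of content words, which the slides do not specify in detail.
STOPWORDS = {"what", "which", "who", "is", "was", "the", "a", "an", "of",
             "for", "every", "in", "to", "before"}


def parse_question(question):
    """Return (content_words, quotation_words) for a question string."""
    # Quotation words u: text between straight or curly double quotes.
    quoted = [q.strip() for q in re.findall(r'["“]([^"“”]+)["”]', question)]
    # Content words q(0): alphanumeric tokens that are not stopwords.
    tokens = re.findall(r"[A-Za-z0-9]+", question)
    content = [t for t in tokens if t.lower() not in STOPWORDS]
    return content, quoted


content, quoted = parse_question(
    "What mythical Scottish town appears for one day every 100 years?")
print(content)
print(quoted)
```

On the slide's first example this toy filter keeps the same eight content words listed above. A real system would need a tagger to separate "some verbs" from the rest.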

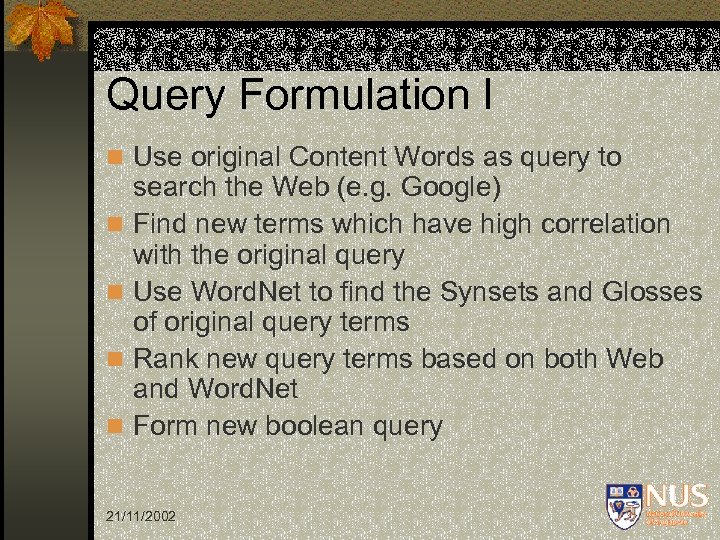

Query Formulation I

- Use original Content Words as query to search the Web (e.g. Google)
- Find new terms which have high correlation with the original query
- Use WordNet to find the Synsets and Glosses of original query terms
- Rank new query terms based on both Web and WordNet
- Form new Boolean query

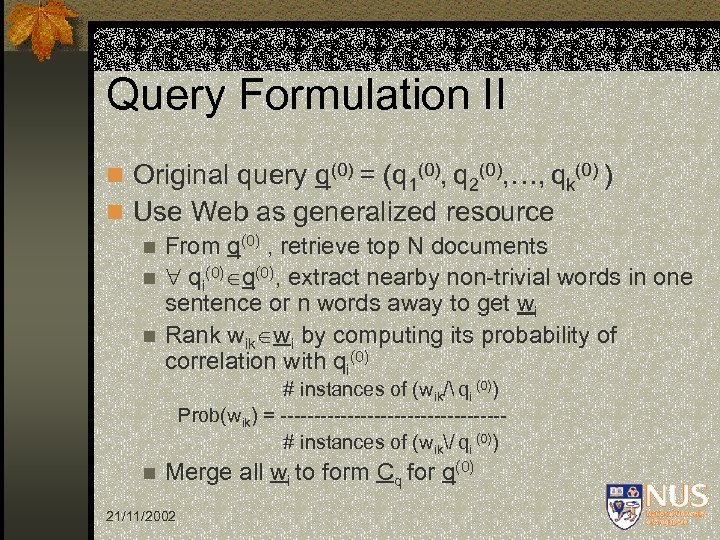

Query Formulation II

- Original query $q^{(0)} = (q_1^{(0)}, q_2^{(0)}, \ldots, q_k^{(0)})$
- Use Web as generalized resource
  - From $q^{(0)}$, retrieve top N documents
  - $\forall q_i^{(0)} \in q^{(0)}$, extract nearby non-trivial words (in the same sentence or within n words) to get $w_i$
  - Rank each $w_{ik} \in w_i$ by computing its probability of correlation with $q_i^{(0)}$:

    $$\mathrm{Prob}(w_{ik}) = \frac{\#\,\text{instances of } (w_{ik} \wedge q_i^{(0)})}{\#\,\text{instances of } (w_{ik} \vee q_i^{(0)})}$$

  - Merge all $w_i$ to form $C_q$ for $q^{(0)}$
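
The correlation ranking can be sketched as follows. This is an illustration under assumptions, not the authors' implementation: an "instance" is taken to be one sentence, the score is read as co-occurrence count divided by the count of sentences containing either word, and the function name `correlation_probs` is hypothetical.

```python
from collections import Counter


def correlation_probs(sentences, query_term):
    """Score each candidate word w by |w AND q| / |w OR q| over sentences.

    `sentences` is a list of token lists, e.g. taken from the top N
    retrieved Web documents.
    """
    q = query_term.lower()
    both = Counter()   # sentences containing w and q together
    alone = Counter()  # sentences containing w (with or without q)
    q_count = 0        # sentences containing q
    for sent in sentences:
        words = {t.lower() for t in sent}
        has_q = q in words
        q_count += has_q
        for w in words - {q}:
            alone[w] += 1
            if has_q:
                both[w] += 1
    # Inclusion-exclusion: |w OR q| = |w| + |q| - |w AND q|
    return {w: both[w] / (alone[w] + q_count - both[w]) for w in both}


# Toy data, invented for illustration only.
toy = [["town", "river"], ["town", "hall"], ["river", "bank"]]
print(correlation_probs(toy, "town"))
```

Words that never co-occur with the query term get no score at all, which matches the idea of extracting only nearby words.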

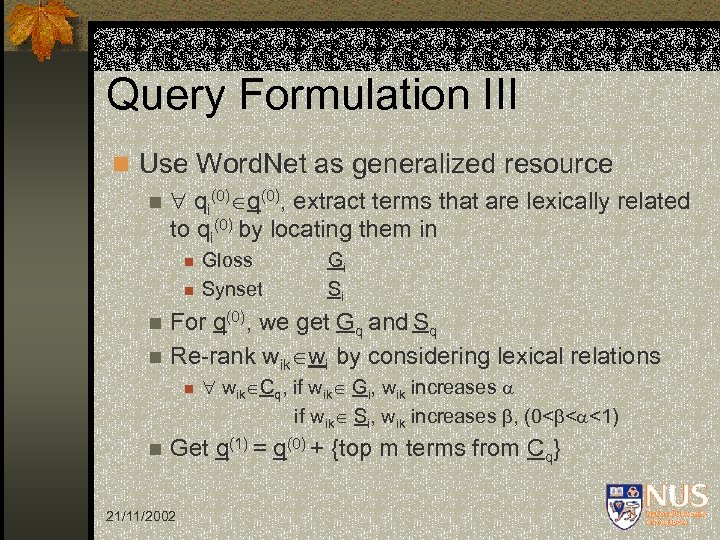

Query Formulation III

- Use WordNet as generalized resource
  - $\forall q_i^{(0)} \in q^{(0)}$, extract terms that are lexically related to $q_i^{(0)}$ by locating them in
    - Gloss $G_i$
    - Synset $S_i$
  - For $q^{(0)}$, we get $G_q$ and $S_q$
- Re-rank $w_i$ by considering lexical relations
  - $\forall w_{ik} \in C_q$:
    - if $w_{ik} \in G_i$, the weight of $w_{ik}$ increases by $\alpha$
    - if $w_{ik} \in S_i$, the weight of $w_{ik}$ increases by $\beta$, $(0 < \alpha < \beta < 1)$
- Get $q^{(1)} = q^{(0)}$ + {top m terms from $C_q$}
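
A rough sketch of the re-ranking and expansion step. Several things here are assumptions: the boost is treated as additive, the values of `alpha`, `beta` and `m` are arbitrary, and the gloss and synset terms are passed in as plain sets rather than looked up in WordNet. The function name `expand_query` is hypothetical.

```python
def expand_query(q0, cq_scores, gloss_terms, synset_terms,
                 alpha=0.2, beta=0.4, m=2):
    """Boost Web-derived terms found in WordNet, then append the top m.

    q0           -- original content words
    cq_scores    -- dict: candidate term -> Web correlation score (C_q)
    gloss_terms  -- set of terms found in glosses (G_q)
    synset_terms -- set of terms found in synsets (S_q)
    """
    assert 0 < alpha < beta < 1
    boosted = {}
    for term, score in cq_scores.items():
        if term in gloss_terms:
            score += alpha   # weaker evidence: term appears in a gloss
        if term in synset_terms:
            score += beta    # stronger evidence: term is a synonym
        boosted[term] = score
    # Highest score first; ties broken alphabetically for determinism.
    ranked = sorted(boosted, key=lambda t: (-boosted[t], t))
    return list(q0) + [t for t in ranked[:m] if t not in q0]


# Toy data, invented for illustration only.
q1 = expand_query(["town"],
                  {"village": 0.3, "hall": 0.5, "mayor": 0.4},
                  gloss_terms={"mayor"}, synset_terms={"village"})
print(q1)
```

With these toy numbers the synonym overtakes a term that had the higher Web score, which is the intended effect of choosing the synset boost larger than the gloss boost.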

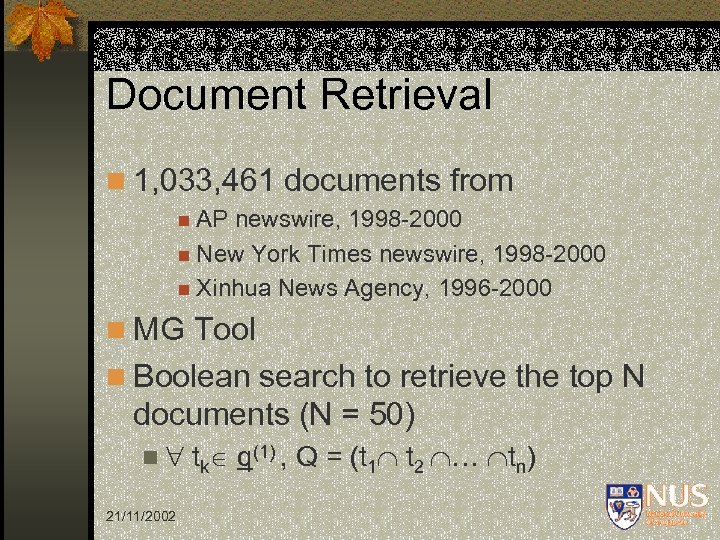

Document Retrieval

- 1,033,461 documents from
  - AP newswire, 1998-2000
  - New York Times newswire, 1998-2000
  - Xinhua News Agency, 1996-2000
- MG Tool
- Boolean search to retrieve the top N documents (N = 50)
  - $\forall t_k \in q^{(1)}$, $Q = (t_1 \wedge t_2 \wedge \ldots \wedge t_n)$
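
To illustrate why a conjunctive Boolean query is strict, here is a toy stand-in for the retrieval step. It does not use or imitate the MG tool's interface; the in-memory `docs` dictionary and the function `boolean_and_search` are hypothetical, and "top N" is simplified to the first N matches in insertion order.

```python
def boolean_and_search(docs, terms, top_n=50):
    """Return ids of up to top_n documents that contain every query term."""
    wanted = {t.lower() for t in terms}
    hits = []
    for doc_id, text in docs.items():
        # A document matches only if all query terms occur in it.
        if wanted <= set(text.lower().split()):
            hits.append(doc_id)
    return hits[:top_n]


# Toy collection, invented for illustration only.
docs = {
    "d1": "ottawa is the capital of canada",
    "d2": "toronto is a city in canada",
}
print(boolean_and_search(docs, ["canada"]))
print(boolean_and_search(docs, ["capital", "canada"]))
```

Every extra term in the conjunction can only shrink the result set, so a heavily expanded query may match nothing at all.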

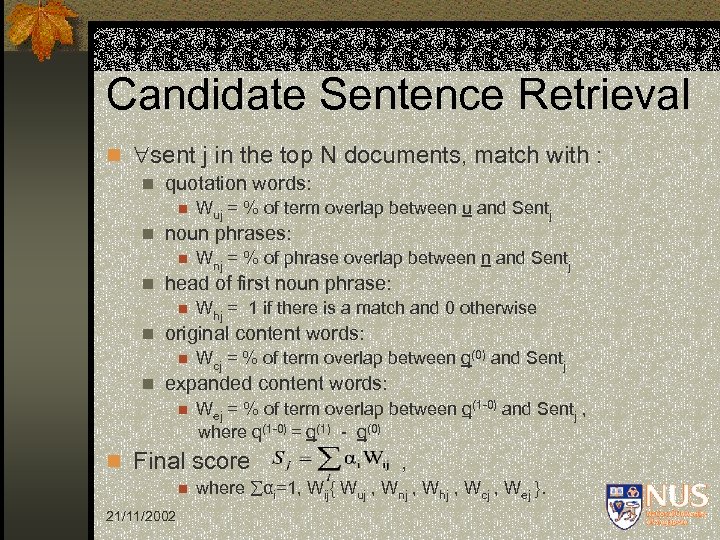

Candidate Sentence Retrieval

- $\forall\, \mathrm{Sent}_j$ in the top N documents, match with:
  - quotation words: $W_{uj}$ = % of term overlap between $u$ and $\mathrm{Sent}_j$
  - noun phrases: $W_{nj}$ = % of phrase overlap between $n$ and $\mathrm{Sent}_j$
  - head of first noun phrase: $W_{hj}$ = 1 if there is a match and 0 otherwise
  - original content words: $W_{cj}$ = % of term overlap between $q^{(0)}$ and $\mathrm{Sent}_j$
  - expanded content words: $W_{ej}$ = % of term overlap between $q^{(1-0)}$ and $\mathrm{Sent}_j$, where $q^{(1-0)} = q^{(1)} - q^{(0)}$
- Final score:

  $$\mathrm{Score}(\mathrm{Sent}_j) = \sum_i \alpha_i W_{ij}$$

  where $\sum_i \alpha_i = 1$ and $W_{ij} \in \{W_{uj}, W_{nj}, W_{hj}, W_{cj}, W_{ej}\}$.
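
The weighted sentence score can be sketched as below. The equal weights in `alphas` are an assumption (the slides only require that they sum to 1), matching is simplified to lowercase whitespace tokens and substring tests, and the helper names `overlap` and `sentence_score` are hypothetical.

```python
def overlap(terms, sentence_tokens):
    """Fraction of `terms` that occur in the sentence (0.0 if no terms)."""
    if not terms:
        return 0.0
    return sum(t.lower() in sentence_tokens for t in terms) / len(terms)


def sentence_score(sentence, u, n, h, q0, q_exp,
                   alphas=(0.2, 0.2, 0.2, 0.2, 0.2)):
    """Weighted sum of the five match features for one sentence.

    u     -- quotation words (list of terms)
    n     -- base noun phrases (list of phrases)
    h     -- head of the first noun phrase (string or None)
    q0    -- original content words
    q_exp -- expanded content words, i.e. q(1) minus q(0)
    """
    assert abs(sum(alphas) - 1.0) < 1e-9
    text = sentence.lower()
    tokens = set(text.split())
    w_u = overlap(u, tokens)
    w_n = sum(p.lower() in text for p in n) / len(n) if n else 0.0
    w_h = 1.0 if h and h.lower() in tokens else 0.0
    w_c = overlap(q0, tokens)
    w_e = overlap(q_exp, tokens)
    features = (w_u, w_n, w_h, w_c, w_e)
    return sum(a * w for a, w in zip(alphas, features))


# Toy sentence, invented for illustration only.
score = sentence_score(
    "the story is set in a mythical scottish town in a musical",
    u=[], n=["mythical Scottish town"], h="town",
    q0=["mythical", "Scottish", "town", "appears"],
    q_exp=["musical", "village"])
print(round(score, 2))
```

Giving the quotation and noun-phrase features larger weights than the expanded content words would be a natural variation, since exact phrase matches are stronger evidence than matches on expansion terms.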

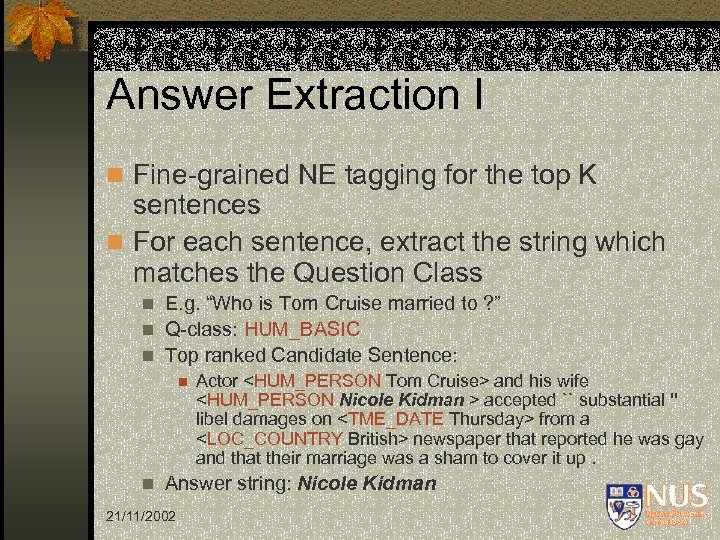

Answer Extraction I

- Fine-grained NE tagging for the top K sentences
- For each sentence, extract the string which matches the Question Class
  - E.g. "Who is Tom Cruise married to?"
    - Q-class: HUM_BASIC
    - Top ranked Candidate Sentence: Actor …

Answer Extraction II

- For some questions, we cannot find any answer
  - Reduce the # of expanded query terms and repeat Document Retrieval, Candidate Sentence Retrieval and Answer Extraction
  - The whole process lasts for N iterations (N = 5)
  - If we still cannot find an exact answer, NIL is considered as the answer
- Increase recall step by step while preserving precision
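
The relaxation loop might look like the sketch below. The slides do not say how many expanded terms are dropped per iteration; dropping the single lowest-ranked term each time is an assumption. The `find_answer` callback is a hypothetical stand-in for the whole retrieve, rank and extract pipeline.

```python
def answer_with_relaxation(q0, expanded, find_answer, max_iters=5):
    """Retry with fewer expanded terms until an answer is found.

    q0          -- original content words (never dropped)
    expanded    -- expanded terms, best-ranked first
    find_answer -- callable(query_terms) -> answer string or None
    """
    terms = list(expanded)
    for _ in range(max_iters):
        answer = find_answer(list(q0) + terms)
        if answer is not None:
            return answer
        if not terms:
            break            # nothing left to relax
        terms = terms[:-1]   # drop the lowest-ranked expanded term
    return "NIL"


# Toy pipeline, invented for illustration: succeeds once the query is
# short enough for the strict Boolean search to match something.
def toy_pipeline(query_terms):
    return "some answer" if len(query_terms) <= 3 else None


print(answer_with_relaxation(["a", "b"], ["x", "y", "z"], toy_pipeline))
print(answer_with_relaxation(["a", "b"], ["x", "y", "z"], lambda q: None))
```

Starting from the most constrained query and loosening it gradually is what lets recall grow while the early, precise matches are still preferred.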

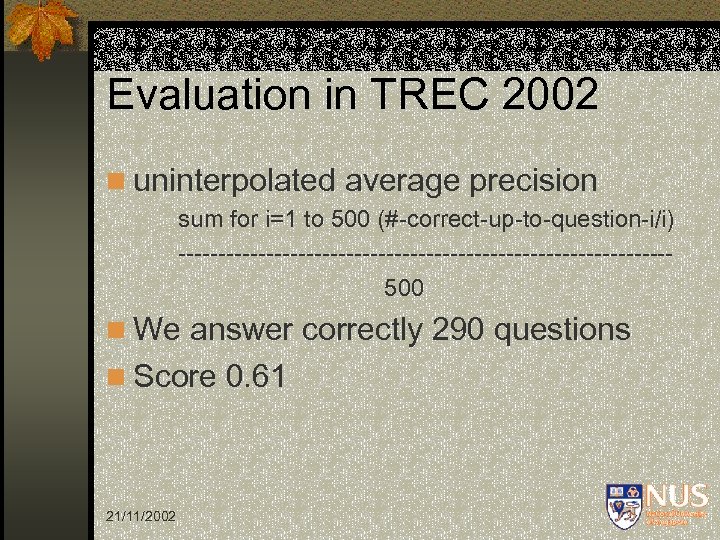

Evaluation in TREC 2002

- Uninterpolated average precision:

  $$\frac{1}{500} \sum_{i=1}^{500} \frac{\#\,\text{correct up to question } i}{i}$$

- We answer 290 questions correctly
- Score: 0.61
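
A short sketch of how this measure behaves, assuming the questions are listed in the order the system ranked them (most confident answer first). The function name `average_precision_score` and the toy judgement lists are hypothetical.

```python
def average_precision_score(judgements):
    """Mean over i of (number correct among the first i answers) / i.

    judgements -- list of booleans, one per question, in ranked order.
    """
    correct = 0
    total = 0.0
    for i, is_correct in enumerate(judgements, start=1):
        correct += is_correct
        total += correct / i
    return total / len(judgements)


# Same number of correct answers, different ordering.
print(average_precision_score([True, False]))
print(average_precision_score([False, True]))
```

The two calls give 0.75 and 0.25: the measure rewards placing correct answers early in the ranking, not just answering many questions correctly.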

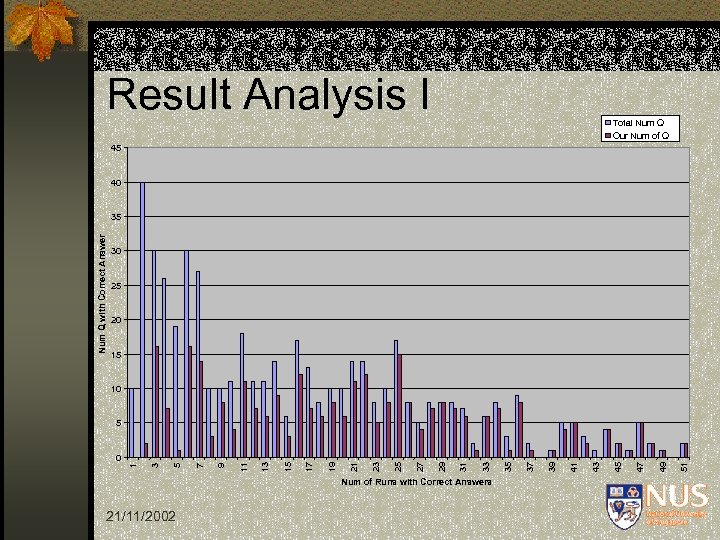

Result Analysis I

[Figure: chart with series "Total Num Q" and "Our Num of Q"; axis labels "Num Q with Correct Answer" and "Num of Runs with Correct Answers".]

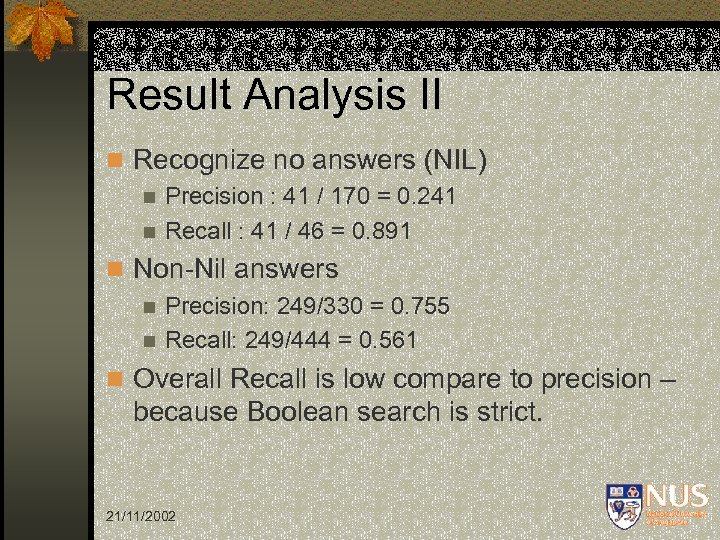

Result Analysis II

- Recognize no answers (NIL)
  - Precision: 41/170 = 0.241
  - Recall: 41/46 = 0.891
- Non-NIL answers
  - Precision: 249/330 = 0.755
  - Recall: 249/444 = 0.561
- Overall recall is low compared to precision, because Boolean search is strict.
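
The figures above can be re-derived with a few lines of arithmetic. The counts are taken directly from the slide; the helper name `precision_recall` is hypothetical.

```python
def precision_recall(correct, returned, relevant):
    """Precision = correct / returned, recall = correct / relevant."""
    return correct / returned, correct / relevant


nil_p, nil_r = precision_recall(correct=41, returned=170, relevant=46)
non_p, non_r = precision_recall(correct=249, returned=330, relevant=444)

print(f"NIL:     precision={nil_p:.3f} recall={nil_r:.3f}")
print(f"non-NIL: precision={non_p:.3f} recall={non_r:.3f}")
print("total correct:", 41 + 249)
```

The two correct counts sum to 290, and the two returned counts sum to 500, one answer per question.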

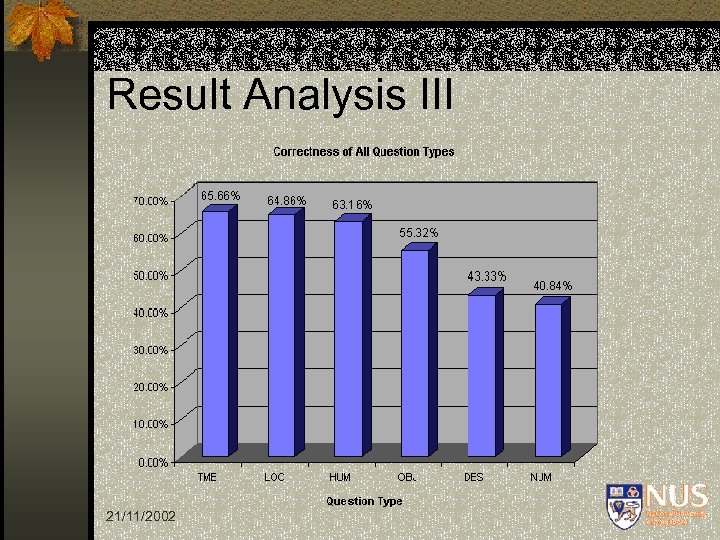

Result Analysis III

Conclusion

- Integration of both Lexical Knowledge and External Resources
- Detailed Question Classification
- Use of Fine-grained Named Entities for Question Answering
- Successive Constraint Relaxation

Future Work

- Refining our term correlation by considering a combination of local context, global context and lexical correlations
- Exploring the structured use of external knowledge using the semantic perceptron net
- Developing template-based answer selection
- Longer-term research plan: interactive QA, analysis and opinion questions

Thank You!
