
- Number of slides: 44

The impact of grid computing on UK research

R Perrott, Queen's University Belfast

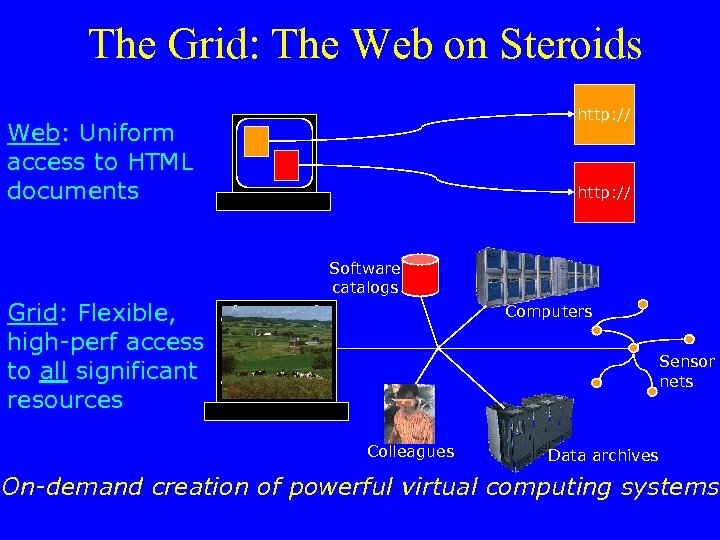

The Grid: The Web on Steroids

- Web: uniform access to HTML documents (http://)
- Grid: flexible, high-performance access to all significant resources: software catalogs, computers, sensor nets, colleagues, data archives
- On-demand creation of powerful virtual computing systems

Why Now?

- The Internet as infrastructure
  - Increasing bandwidth, advanced services
- Advances in storage capacity
  - Terabyte for < $15,000
- Increased availability of compute resources
  - Clusters, supercomputers, etc.
- Advances in application concepts
  - Simulation-based design, advanced scientific instruments, collaborative engineering, ...

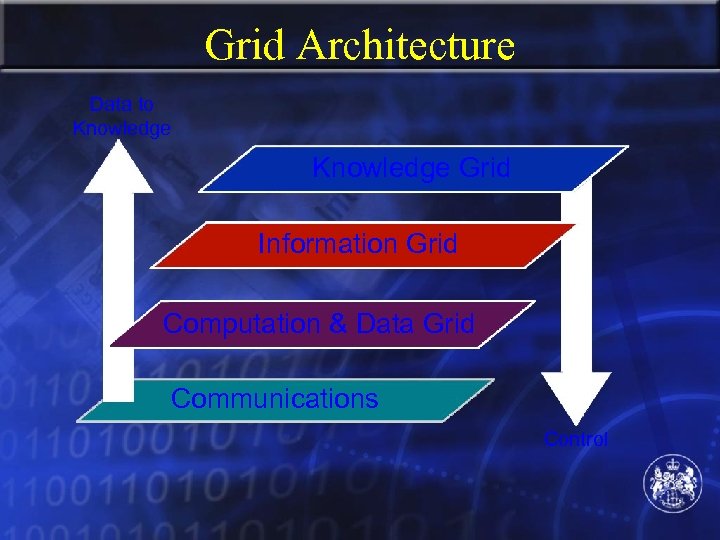

Grids

- Computational grid
  - provides the raw computing power, high-speed bandwidth interconnection and associated data storage
- Information grid
  - allows easily accessible connections to major sources of information and tools for its analysis and visualisation
- Knowledge grid
  - gives added value to the information and also provides intelligent guidance for decision-makers

Grid Architecture

[Diagram labels: Data to Knowledge; Knowledge Grid; Information Grid; Computation & Data Grid; Communications; Control]

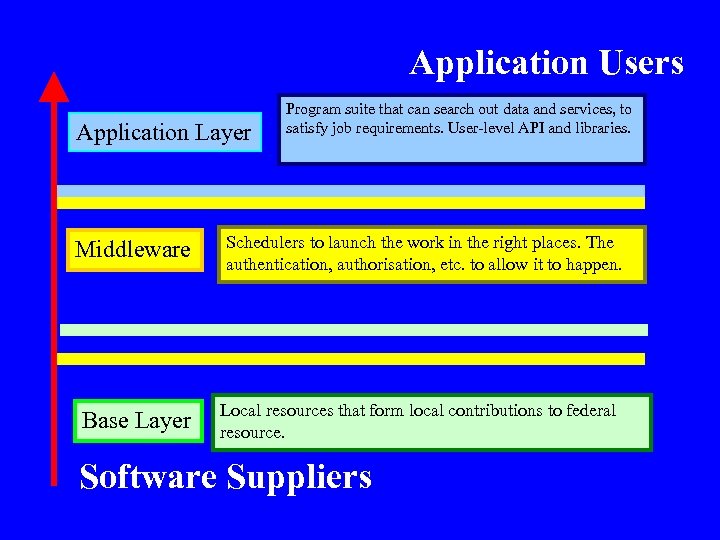

- Application Users
- Application Layer: program suite that can search out data and services to satisfy job requirements.
- Middleware: user-level API and libraries; schedulers to launch the work in the right places; the authentication, authorisation, etc. to allow it to happen.
- Base Layer: local resources that form local contributions to federal resource.
- Software Suppliers

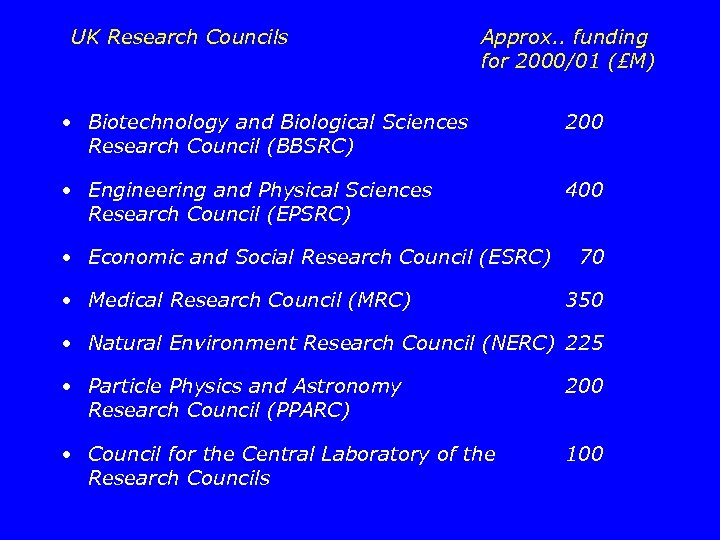

UK Research Councils

Approximate funding for 2000/01 (£M):

| Research council | Funding (£M) |
| --- | --- |
| Biotechnology and Biological Sciences Research Council (BBSRC) | 200 |
| Engineering and Physical Sciences Research Council (EPSRC) | 400 |
| Economic and Social Research Council (ESRC) | 70 |
| Medical Research Council (MRC) | 350 |
| Natural Environment Research Council (NERC) | 225 |
| Particle Physics and Astronomy Research Council (PPARC) | 200 |
| Council for the Central Laboratory of the Research Councils | 100 |

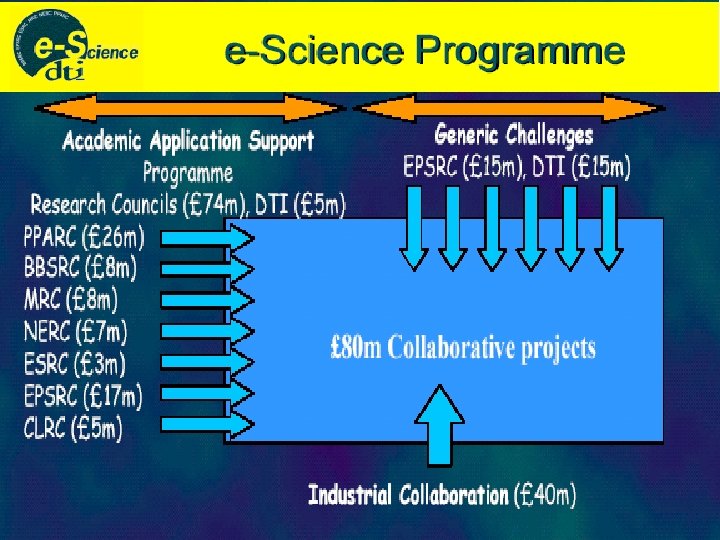

UK Grid Development Plan

- Network of Grid Core Programme e-Science Centres
- Development of Generic Grid Middleware
- Grid Grand Challenge Project
- Support for e-Science Projects
- International Involvement
- Grid Network Team

1. Grid Core Programme Centres

- National e-Science Centre to achieve international visibility
- National Centre will host international e-Science seminars 'similar' to Newton Institute
- Funding 8 Regional e-Science Centres to form coherent UK Grid
- DTI funding requires matching industrial involvement
- Good overlap with Particle Physics and AstroGrid Centres

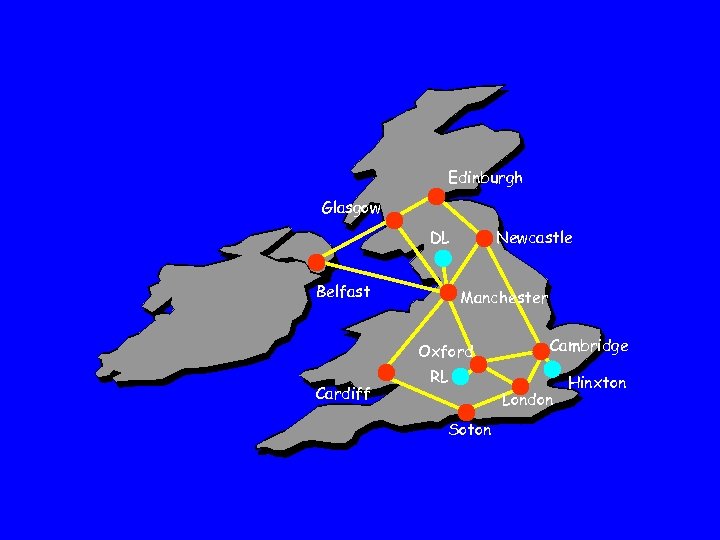

[Map labels: Edinburgh, Glasgow, DL, Belfast, Newcastle, Manchester, Oxford, Cardiff, Cambridge, RL, London, Soton, Hinxton]

Access Grid

- Centres will be Access Grid Nodes
- Access Grid will enable informal and formal group-to-group collaboration
- It enables:
  - Distributed lectures and seminars
  - Virtual meetings
  - Complex distributed grid demos
- Will improve the user experience ("sense of presence"): natural interactions (natural audio, big display)

2. Generic Grid Middleware

- Continuing dialogue with major industrial players
  - IBM, Microsoft, Oracle, Sun, HP, ...
  - IBM Press Announcement August 2001
- Open Call for Proposals from July 2001 plus Centre industrial projects
- Funding Computer Science involvement in EU DataGrid Middleware Work Packages

3. Grid Interdisciplinary Research Centres Project

- 4 IT-centric IRCs funded
  - DIRC: Dependability
  - EQUATOR: HCI
  - AKT: Knowledge Management
  - Medical Informatics
- 'Grand Challenge' in Medical/Healthcare Informatics
  - issues of security, privacy and trust

4. Support for e-Science Projects

- 'Grid Starter Kit' Version 1.0 available for distribution from July 2001
- Set up Grid Support Centre
- Training Courses
- National e-Science Centre Research Seminar Programme

5. International Involvement

- 'GridNet' at National Centre for UK participation in the Global Grid Forum
- Funding CERN and iVDGL 'Grid Fellowships'
- Participation/Leadership in EU Grid Activities
  - New FP5 Grid Projects (DataTag, GRIP, …)
- Establishing links with major US Centres
  - San Diego Supercomputer Center, NCSA

6. Grid Network Team

- Tasked with ensuring adequate end-to-end bandwidth for e-Science Projects
- Identify/fix network bottlenecks
- Identify network requirements of e-Science projects
- Funding traffic engineering project
- Upgrade SuperJANET4 connection to sites

Network Issues

- Upgrading SJ4 backbone from 2.5 Gbps to 10 Gbps
- Installing 2.5 Gbps link to GEANT pan-European network
- Transatlantic bandwidth procurement
  - 2.5 Gbps dedicated fibre
  - Connections to Abilene and ESNet
- EU DataTAG project 2.5 Gbps link from CERN to Chicago
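
To give a feel for what the backbone upgrade means in practice, the following rough sketch computes ideal transfer times for a bulk dataset at the two link speeds quoted above. The 1 TB dataset size and the function name are illustrative assumptions, not figures from the slides, and real throughput would fall below the line rate because of protocol overhead and shared traffic.

```python
def transfer_time_seconds(size_bytes: float, link_gbps: float) -> float:
    """Ideal time to move size_bytes over a link of link_gbps (10^9 bits/s)."""
    bits = size_bytes * 8
    return bits / (link_gbps * 1e9)


DATASET_BYTES = 1e12  # assumed 1 TB dataset (illustrative, not from the slides)

# Compare the old and upgraded backbone rates quoted on the slide.
for gbps in (2.5, 10.0):
    seconds = transfer_time_seconds(DATASET_BYTES, gbps)
    print(f"{gbps:>4} Gbps: {seconds / 60:.1f} minutes")
```

Under these assumptions the upgrade cuts the ideal transfer time by a factor of four, which is simply the ratio of the two line rates.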

Early e-Science Demonstrators

- Funded
  - Dynamic Brain Atlas
  - Biodiversity
  - Chemical Structures
- Under Development/Consideration
  - Grid-Microscopy
  - Robotic Astronomy
  - Collaborative Visualisation
  - Mouse Genes
  - 3D Engineering Prototypes
  - Medical Imaging/VR

Particle Physics and Astronomy Research Council (PPARC)

- GridPP (http://www.gridpp.ac.uk/)
- to develop the Grid technologies required to meet the LHC computing challenge
- collaboration with international grid developments in Europe and the US

Particle Physics and Astronomy Research Council (PPARC)

- ASTROGRID (http://www.astrogrid.ac.uk/)
- a ~£4M project aimed at building a datagrid for UK astronomy, which will form the UK contribution to a global Virtual Observatory

EPSRC Testbeds (1)

- DAME: Distributed Aircraft Maintenance Environment
- RealityGrid: closely couple high performance computing, high throughput experiment and visualization
- GEODISE: Grid Enabled Optimisation and DesIgn Search for Engineering

EPSRC Testbeds (2)

- CombiChem: combinatorial chemistry structure-property mapping
- MyGrid: personalised extensible environments for data-intensive experiments in biology
- Discovery Net: high throughput sensing

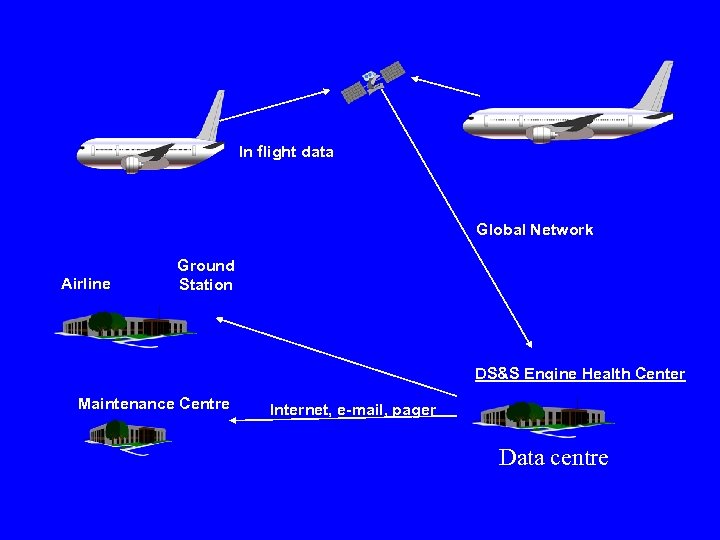

Distributed Aircraft Maintenance Environment

- Jim Austin, University of York
- Peter Dew, Leeds
- Graham Hesketh, Rolls-Royce

[Diagram labels: In-flight data; Global Network; Airline; Ground Station; DS&S Engine Health Center; Maintenance Centre; Internet, e-mail, pager; Data centre]

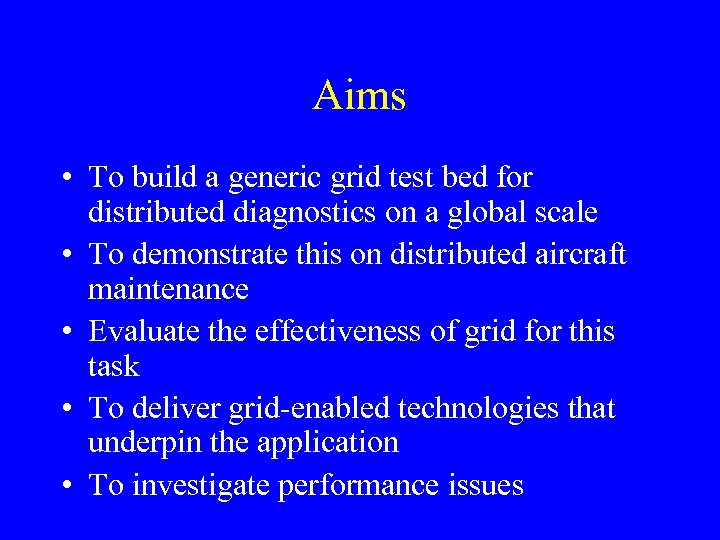

Aims

- To build a generic grid test bed for distributed diagnostics on a global scale
- To demonstrate this on distributed aircraft maintenance
- To evaluate the effectiveness of grid for this task
- To deliver grid-enabled technologies that underpin the application
- To investigate performance issues

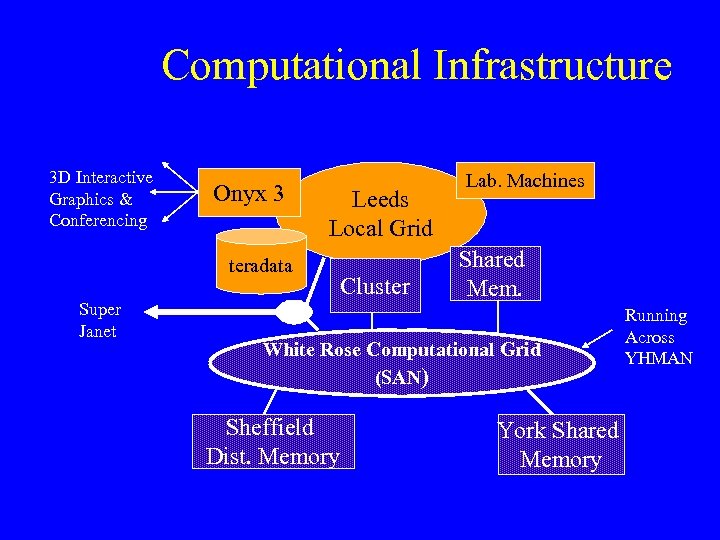

Computational Infrastructure

[Diagram labels: 3D Interactive Graphics & Conferencing; Onyx 3; Leeds Local Grid; teradata; Super Janet; Cluster; Lab. Machines; Shared Mem.; White Rose Computational Grid (SAN); Sheffield: Dist. Memory; York: Shared Memory; Running Across YHMAN]

MyGrid: Personalised extensible environments for data-intensive experiments in biology

- Professor Carole Goble, University of Manchester
- Dr Alan Robinson, EBI

Consortium

- Scientific Team
  - Biologists
  - GSK, AZ, Merck KGaA, Manchester, EBI
- Technical Team
  - Manchester, Southampton, Newcastle, Sheffield, EBI, Nottingham
  - IBM, SUN
  - GeneticXchange
  - Network Inference, Epistemics Ltd

Comparative Functional Genomics

- Vast amounts of data & escalating
- Highly heterogeneous
  - Data types
  - Data forms
  - Community
- Highly complex and inter-related
- Volatile

MyGrid e-Science Objectives

Revolutionise scientific practice in biology

- Straightforward discovery, interoperation, sharing
- Improving quality of both experiments and data
- Individual creativity & collaborative working
- Enabling genomic level bioinformatics

Cottage Industry to an Industrial Scale

On the shoulders of giants

We are not starting from scratch…

- Globus Starter Kit …
- Web Service initiatives …
- Our own environments …
- Integration platforms for bioinformatics …
- Standards e.g. OMG LSR, I3C …
- Experience with Open Source

Specific Outcomes

- E-Scientists
  - Environment built on toolkits for service access, personalisation & community
  - Gene function expression analysis
  - Annotation workbench for the PRINTS pattern database
- Developers
  - MyGrid-in-a-Box developers kit
  - Re-purposing existing integration platforms

Discovery Net

- Yike Guo, John Darlington (Dept. of Computing)
- John Hassard (Depts. of Physics and Bioengineering)
- Bob Spence (Dept. of Electrical Engineering)
- Tony Cass (Department of Biochemistry)
- Sevket Durucan (T. H. Huxley School of Environment)
- Imperial College London

AIM

- To design, develop and implement an infrastructure to support real-time processing, interaction, integration, visualisation and mining of massive amounts of time-critical data generated by high throughput devices.

The Consortium

- Industry Connection: 4 spin-off companies + related companies (AstraZeneca, Pfizer, GSK, Cisco, IBM, HP, Fujitsu, Gene Logic, Applera, Evotec, International Power, Hydro Quebec, BP, British Energy, …)

Industrial Contribution

- Hardware: sensors (photodiode arrays), systems (optics, mechanical systems, DSPs, FPGAs)
- Software: analysis packages, algorithms, data warehousing and mining systems
- Intellectual Property: access to IP portfolio suite at no cost
- Data: raw and processed data from biotechnology, pharmacogenomic, remote sensing (GUSTO installations, satellite data from geo-hazard programmes) and renewable energy data (from remote tidal power systems)

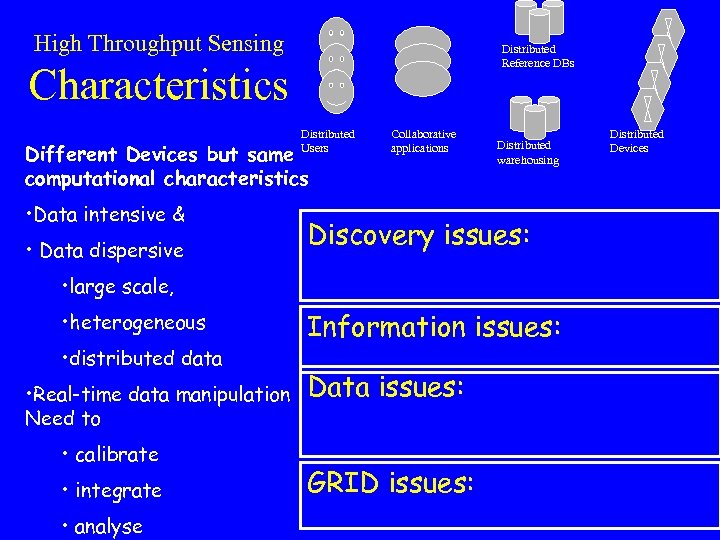

High Throughput Sensing Characteristics

- Different devices but same computational characteristics: data intensive and data dispersive
- Distributed devices, distributed users, distributed reference DBs
- Collaborative applications; distributed warehousing
- Large-scale, heterogeneous, distributed data
- Real-time data manipulation
- Need to calibrate, integrate, analyse

[Diagram headings: Data issues; Information issues; Discovery issues; GRID issues]

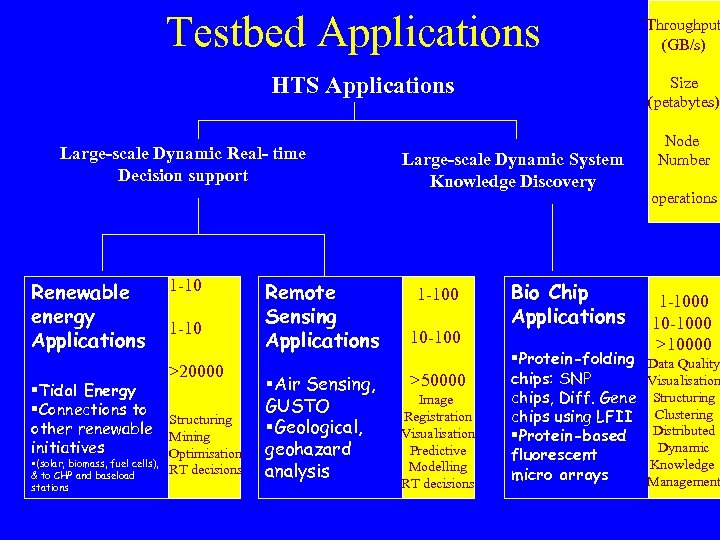

Testbed Applications

HTS applications: large-scale dynamic real-time decision support; large-scale dynamic system knowledge discovery; distributed dynamic knowledge management.

Column headings on the slide: Size (petabytes), Throughput (GB/s), Node Number, operations. The values below are listed in the order they appear on the slide.

- Renewable energy applications: tidal energy; connections to other renewable initiatives (solar, biomass, fuel cells), and to CHP and baseload stations. Values: 1-10, >20000. Operations: structuring, mining, optimisation, RT decisions.
- Remote sensing applications: air sensing (GUSTO); geological, geohazard analysis. Values: 1-100, 10-100, >50000. Operations: image registration, visualisation, predictive modelling, RT decisions.
- Bio chip applications: protein-folding chips (SNP chips, Diff. Gene chips using LFII); protein-based fluorescent micro arrays. Values: 1-1000, 10-1000, >10000. Operations: data quality, visualisation, structuring, clustering.

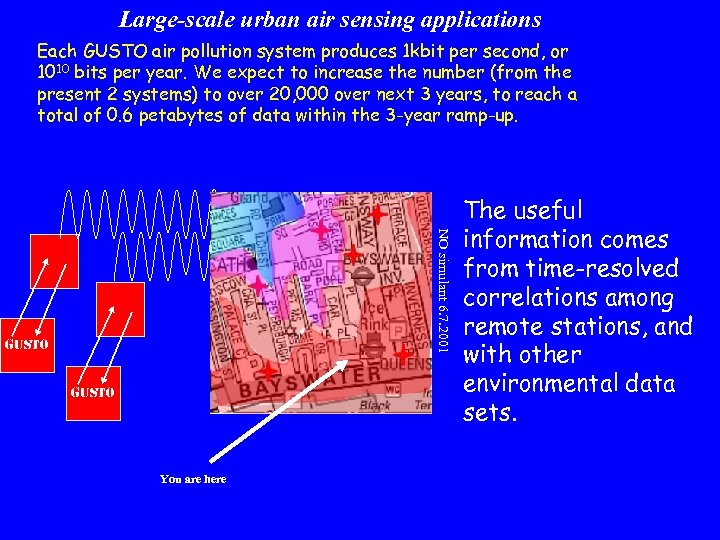

Large-scale urban air sensing applications

- Each GUSTO air pollution system produces 1 kbit per second, or 10^10 bits per year.
- We expect to increase the number (from the present 2 systems) to over 20,000 over the next 3 years, to reach a total of 0.6 petabytes of data within the 3-year ramp-up.
- The useful information comes from time-resolved correlations among remote stations, and with other environmental data sets.

[Figure labels: NO simulant, 6.7.2001; GUSTO; "You are here"]
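
The raw-rate arithmetic behind these figures can be checked with a short sketch. It assumes a constant 1 kbit/s per system with 1 kbit = 1000 bits and a 365-day year; the function names are hypothetical. It reproduces only the per-system and full-deployment yearly volumes and does not attempt to derive the slide's 0.6 petabyte total.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600  # 31,536,000 s, ignoring leap years


def bits_per_year(rate_bits_per_s: float) -> float:
    """Bits produced in one year by a source running at a constant rate."""
    return rate_bits_per_s * SECONDS_PER_YEAR


def fleet_bytes_per_year(n_systems: int, rate_bits_per_s: float) -> float:
    """Bytes produced in one year by n_systems identical sources."""
    return n_systems * bits_per_year(rate_bits_per_s) / 8


per_system = bits_per_year(1_000)             # 1 kbit/s, as quoted on the slide
fleet = fleet_bytes_per_year(20_000, 1_000)   # 20,000 systems, as quoted

print(f"one system: {per_system:.2e} bits/year")
print(f"20,000 systems: {fleet / 1e12:.1f} TB/year")
```

Under these assumptions a single system yields about 3 x 10^10 bits per year, the same order of magnitude as the slide's 10^10, and 20,000 systems yield roughly 79 TB (about 0.63 petabits) per year.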

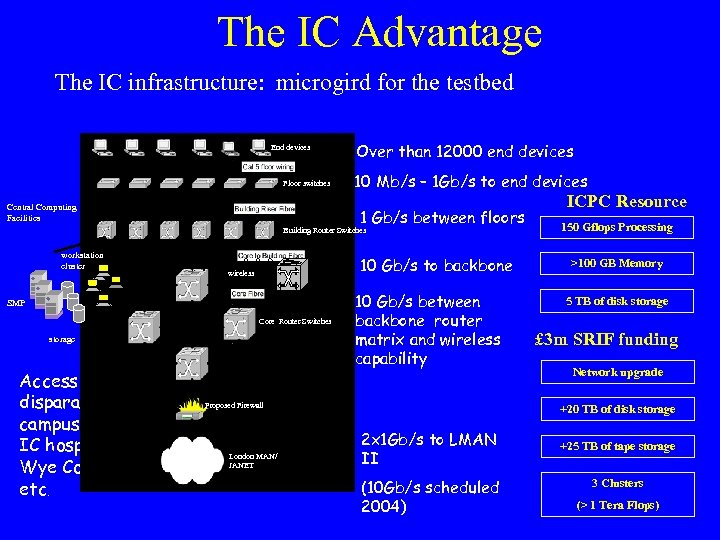

The IC Advantage

The IC infrastructure: microgrid for the testbed

- Network elements: end devices, floor switches, building router switches, core router switches, central computing facilities (workstation cluster, SMP, storage), wireless
- More than 12000 end devices
- 10 Mb/s to 1 Gb/s to end devices
- 1 Gb/s between floors
- 10 Gb/s to backbone
- 10 Gb/s between backbone router matrix and wireless capability
- Access to disparate off-campus sites: IC hospitals, Wye College etc.
- Proposed firewall; London MAN / JANET
- ICPC Resource: 150 Gflops processing; >100 GB memory; 5 TB of disk storage
- £3m SRIF funding: network upgrade; +20 TB of disk storage; 2 x 1 Gb/s to LMAN II (10 Gb/s scheduled 2004); +25 TB of tape storage; 3 clusters (> 1 TeraFlops)

Conclusions

- Good 'buy-in' from scientists and engineers
- Considerable industrial interest
- Reasonable 'buy-in' from good fraction of Computer Science community but not all
- Serious interest in Grids from IBM, HP, Oracle and Sun
- On paper UK now has most visible and focussed e-Science/Grid programme in Europe

Now have to deliver!

US Grid Projects/Proposals

- NASA Information Power Grid
- DOE Science Grid
- NSF National Virtual Observatory
- NSF GriPhyN
- DOE Particle Physics Data Grid
- NSF Distributed Terascale Facility
- DOE ASCI Grid
- DOE Earth Systems Grid
- DARPA CoABS Grid
- NEESGrid
- NSF BIRN
- NSF iVDGL

EU Grid Projects

- DataGrid (CERN, ..)
- EuroGrid (Unicore)
- DataTag (TTT…)
- Astrophysical Virtual Observatory
- GRIP (Globus/Unicore)
- GRIA (Industrial applications)
- GridLab (Cactus Toolkit)
- CrossGrid (Infrastructure Components)
- EGSO (Solar Physics)