9b3f3aad6cdea3319fbf15215f08ad48.ppt

- Number of slides: 28

The Grid approach for the HEP computing problem

Massimo Sgaravatto
INFN Padova
massimo.sgaravatto@pd.infn.it

HEP computing characteristics

- Large numbers of independent events to process
  - trivial parallelism
- Large data sets, mostly read-only
- Modest floating point requirement
  - SPECint performance
- Batch processing for production & selection, interactive for analysis
- Commodity components are just fine for HEP
  - Masses of experience with inexpensive farms
  - Long experience with mass storage systems
- Very large aggregate requirements: computation, data

The LHC Challenge

- Jump in orders of magnitude with respect to previous experiments
- Geographical dispersion of people and of resources
  - Also a political issue
- Scale
  - Petabytes per year of data
  - Thousands of processors
  - Thousands of disks
  - Terabits/second of I/O bandwidth
  - …
- Complexity
- Lifetime (20 years)
- …

World Wide Collaboration: distributed computing & storage capacity

CMS: 1800 physicists, 150 institutes, 32 countries

The scale …

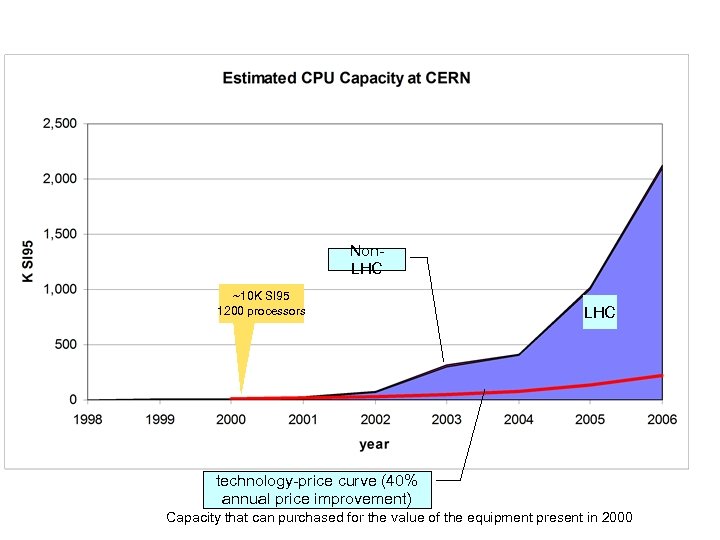

Figure labels: Non-LHC; LHC; ~10K SI95, 1200 processors; technology-price curve (40% annual price improvement); capacity that can be purchased for the value of the equipment present in 2000.

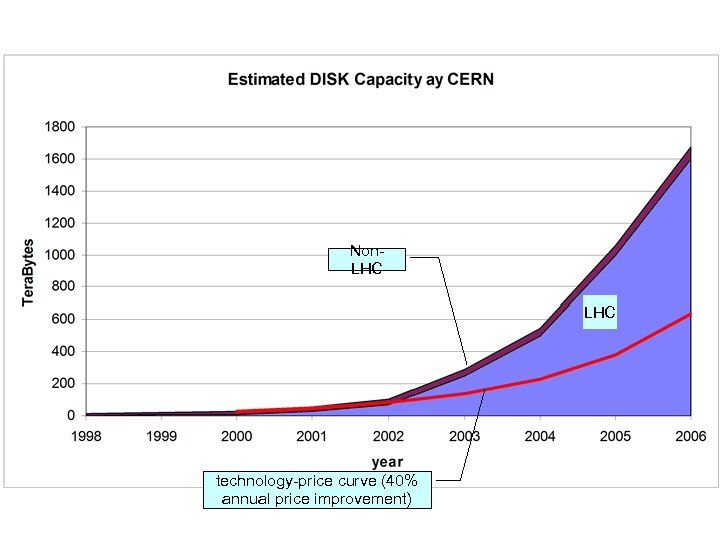

Figure labels: Non-LHC; technology-price curve (40% annual price improvement).

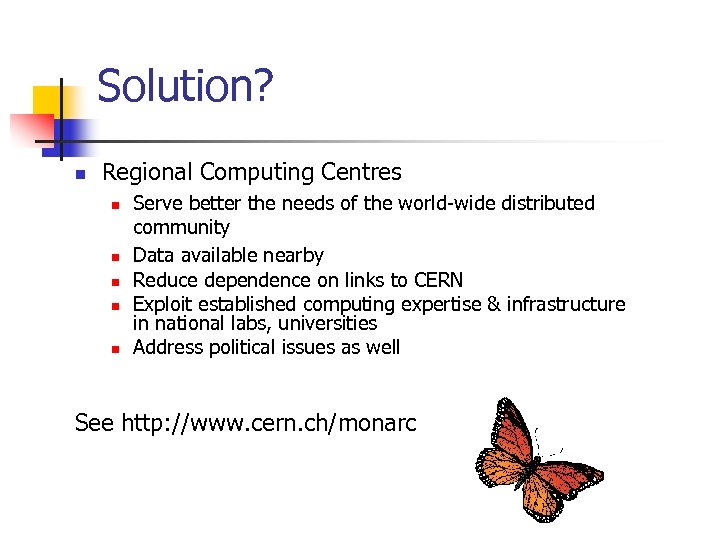
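
The technology-price curve in the figures above can be illustrated with a small calculation. This is only a sketch: it assumes that "40% annual price improvement" means a fixed budget buys 40% more capacity each year, which is one possible reading of the label and is not stated on the slide. The function name is hypothetical.

```python
def capacity_multiplier(years: int, annual_improvement: float = 0.40) -> float:
    """Capacity a fixed budget buys after `years`, relative to year 0.

    Assumption (not from the slide): a 40% price improvement means
    40% more capacity per unit of money every year, compounded.
    """
    return (1.0 + annual_improvement) ** years


# Relative capacity for the same spend, counting from the year 2000.
for years in (1, 3, 5, 7):
    print(f"2000 + {years} years: x{capacity_multiplier(years):.2f}")
```

Under this assumption the same money buys about five times the capacity after five years and about ten times after seven.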

Solution?

- Regional Computing Centres
  - Serve better the needs of the world-wide distributed community
  - Data available nearby
  - Reduce dependence on links to CERN
  - Exploit established computing expertise & infrastructure in national labs, universities
  - Address political issues as well
- See http://www.cern.ch/monarc

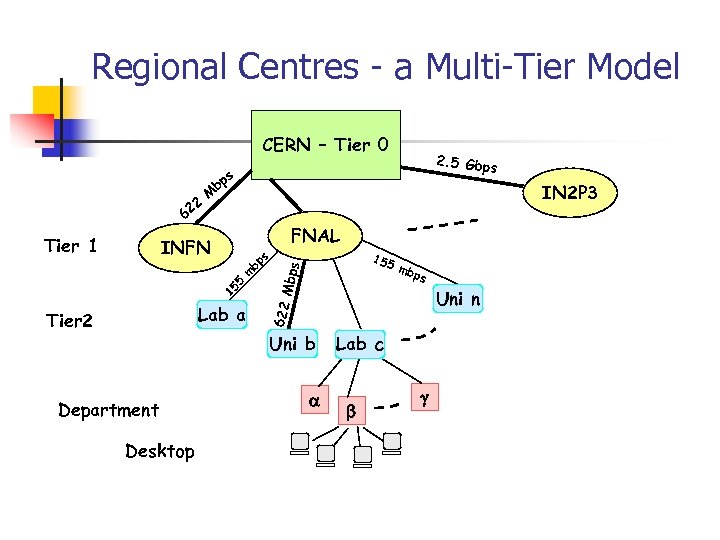

Regional Centres - a Multi-Tier Model

Diagram labels: CERN (Tier 0); Tier 1; Tier 2; sites IN2P3, FNAL, INFN, Lab a, Uni b, Lab c, Uni n; Department; Desktop. Link speeds shown: 2.5 Gbps, 622 Mbps, 155 Mbps.
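
To get a feeling for the link speeds in the diagram, the sketch below estimates how long a terabyte takes on each link and what sustained rate a petabyte per year implies. It assumes decimal units (1 TB = 10^12 bytes), a fully utilised link and no protocol overhead. These assumptions and the function names are illustrative and do not come from the slides.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600

# Link speeds as labelled in the multi-tier diagram, in megabits per second.
LINKS_MBPS = {"155 Mbps": 155, "622 Mbps": 622, "2.5 Gbps": 2500}

TB = 1e12  # bytes, decimal units (assumption)
PB = 1e15  # bytes, decimal units (assumption)


def transfer_hours(data_bytes: float, link_mbps: float) -> float:
    """Hours needed to move `data_bytes` over an ideal, dedicated link."""
    return data_bytes * 8 / (link_mbps * 1e6) / 3600


def sustained_mbps(bytes_per_year: float) -> float:
    """Average rate in Mbps needed to move `bytes_per_year` within one year."""
    return bytes_per_year * 8 / SECONDS_PER_YEAR / 1e6


for label, mbps in LINKS_MBPS.items():
    print(f"1 TB over {label}: {transfer_hours(TB, mbps):.1f} h")

print(f"1 PB/year needs about {sustained_mbps(PB):.0f} Mbps sustained")
```

Even under these ideal conditions, shipping one petabyte per year continuously needs more than a 155 Mbps link can carry, which is one reason for keeping data available near its users.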

Open issues

- Various technical issues to address
  - Resource Discovery
  - Resource Management
    - Distributed scheduling, optimal co-allocation of CPU, data and network resources, uniform interface to different local resource managers, …
  - Data Management
    - Petabyte-scale information volumes, high-speed data movement and replication, replica synchronization, data caching, uniform interface to mass storage management systems, …
  - Automated system management techniques for large computing fabrics
  - Monitoring Services
  - Security
    - Authentication, Authorization, …
  - Scalability, Robustness, Resilience

Are Grids the solution?

What is a Grid?

"Dependable, consistent, pervasive access to resources"

- Enable communities ("virtual organizations") to share geographically distributed resources as they pursue common goals in the absence of central control, omniscience, trust relationships
- Make it easy to use diverse, geographically distributed, locally managed and controlled computing facilities as if they formed a coherent local cluster

What does the Grid do for you?

- You submit your work
- And the Grid
  - "Partitions" your work into convenient execution units based on the available resources, data distribution, … if there is scope for parallelism
  - Finds convenient places for it to be run
  - Organises efficient access to your data
    - Caching, migration, replication
  - Deals with authentication and authorization to the different sites that you will be using
  - Interfaces to local site resource allocation mechanisms, policies
  - Runs your jobs
  - Monitors progress
  - Recovers from problems
  - Tells you when your work is complete
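
The "partitioning" step listed above can be sketched for the simplest case of independent events. This is a toy illustration, not how any particular Grid middleware does it: it assumes the only input is the number of free CPUs per site, and the site names and the function `partition_events` are hypothetical.

```python
from typing import Dict


def partition_events(n_events: int, free_cpus: Dict[str, int]) -> Dict[str, range]:
    """Split event indices 0..n_events-1 across sites in proportion to free CPUs."""
    total = sum(free_cpus.values())
    if total <= 0:
        raise ValueError("no free CPUs available")
    shares: Dict[str, range] = {}
    start = 0
    cpus_so_far = 0
    for site, cpus in free_cpus.items():
        cpus_so_far += cpus
        # Cumulative boundaries avoid rounding gaps; the last site ends at n_events.
        end = n_events * cpus_so_far // total
        shares[site] = range(start, end)
        start = end
    return shares


# Hypothetical sites and CPU counts, for illustration only.
shares = partition_events(1000, {"site-a": 10, "site-b": 30, "site-c": 60})
for site, events in shares.items():
    print(site, events.start, events.stop, len(events))
```

A real scheduler would also weigh where the input data already sits, as the slide notes, so a CPU-proportional split is only a starting point.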

State (HEP-centric view) circa 1.5 years ago

- Globus project
  - Globus toolkit: core services for Grid tools and applications (Authentication, Information service, Resource management, etc.)
  - Good basis to build on but:
    - No higher level services
    - Handling of lots of data not addressed
    - No production quality implementations
- Not possible to do real work with Grids yet …

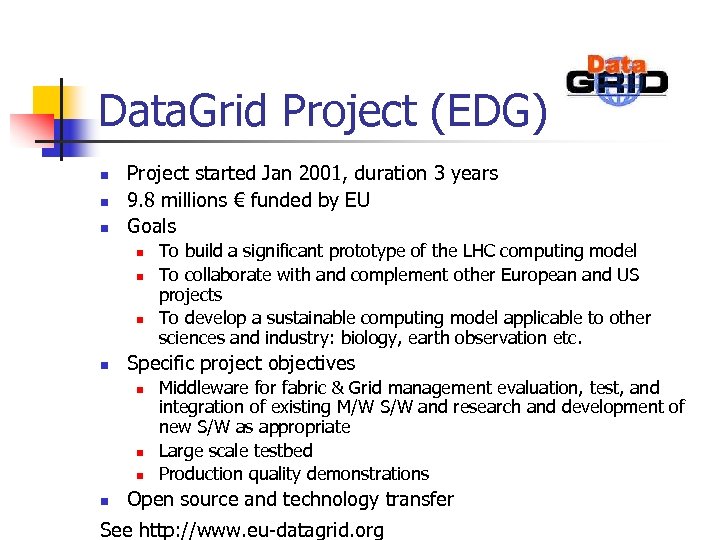

DataGrid Project (EDG)

- Project started Jan 2001, duration 3 years
- 9.8 million € funded by the EU
- Goals
  - To build a significant prototype of the LHC computing model
  - To collaborate with and complement other European and US projects
  - To develop a sustainable computing model applicable to other sciences and industry: biology, earth observation etc.
- Specific project objectives
  - Middleware for fabric & Grid management: evaluation, test, and integration of existing middleware software, and research and development of new software as appropriate
  - Large scale testbed
  - Production quality demonstrations
  - Open source and technology transfer
- See http://www.eu-datagrid.org

Main Partners

- CERN
- CNRS - France
- ESA/ESRIN - Italy
- INFN - Italy
- NIKHEF - The Netherlands
- PPARC - UK

Associated Partners

Research and Academic Institutes

- CESNET (Czech Republic)
- Commissariat à l'énergie atomique (CEA) - France
- Computer and Automation Research Institute, Hungarian Academy of Sciences (MTA SZTAKI)
- Consiglio Nazionale delle Ricerche (Italy)
- Helsinki Institute of Physics - Finland
- Institut de Fisica d'Altes Energies (IFAE) - Spain
- Istituto Trentino di Cultura (IRST) - Italy
- Konrad-Zuse-Zentrum für Informationstechnik Berlin - Germany
- Royal Netherlands Meteorological Institute (KNMI)
- Ruprecht-Karls-Universität Heidelberg - Germany
- Stichting Academisch Rekencentrum Amsterdam (SARA) - Netherlands
- Swedish Natural Science Research Council (NFR) - Sweden

Industry Partners

- Datamat (Italy)
- IBM (UK)
- Compagnie des Signaux (France)

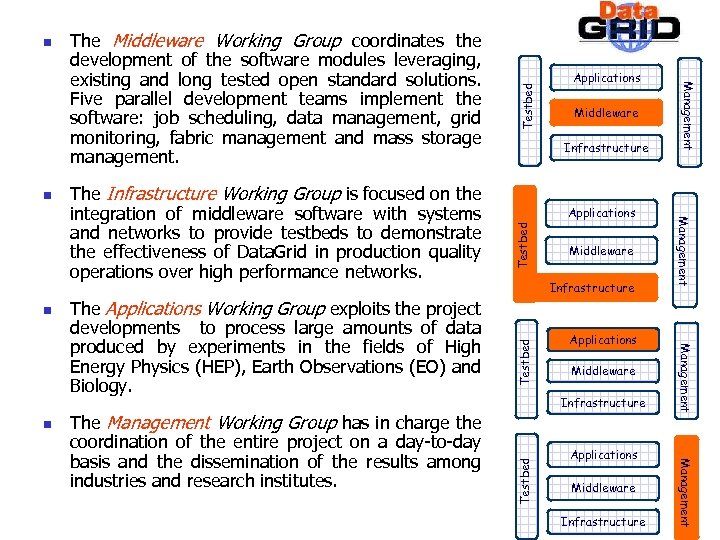

Diagram labels: Management, Applications, Middleware, Infrastructure, Testbed.

- The Management Working Group is in charge of coordinating the entire project on a day-to-day basis and of disseminating the results among industries and research institutes.
- The Applications Working Group exploits the project developments to process large amounts of data produced by experiments in the fields of High Energy Physics (HEP), Earth Observation (EO) and Biology.
- The Infrastructure Working Group focuses on integrating the middleware software with systems and networks, to provide testbeds that demonstrate the effectiveness of DataGrid in production-quality operations over high-performance networks.
- The Middleware Working Group coordinates the development of the software modules, leveraging existing, long-tested open standard solutions. Five parallel development teams implement the software: job scheduling, data management, grid monitoring, fabric management and mass storage management.

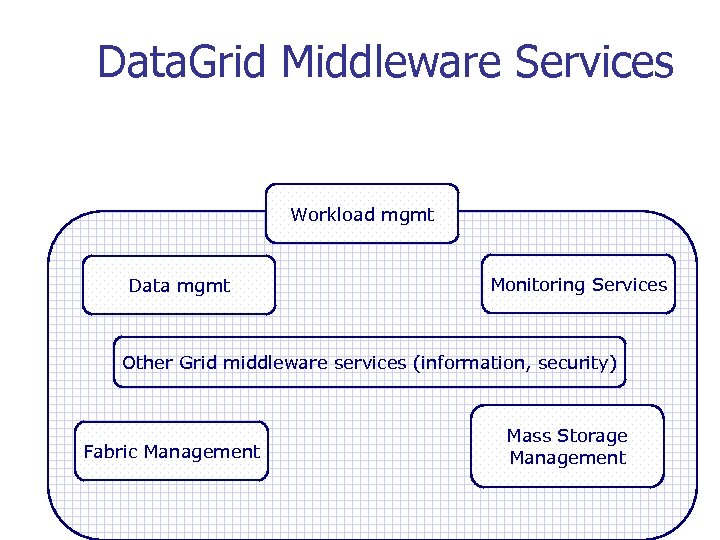

DataGrid Middleware Services

- Workload management
- Data management
- Monitoring Services
- Other Grid middleware services (information, security)
- Fabric Management
- Mass Storage Management

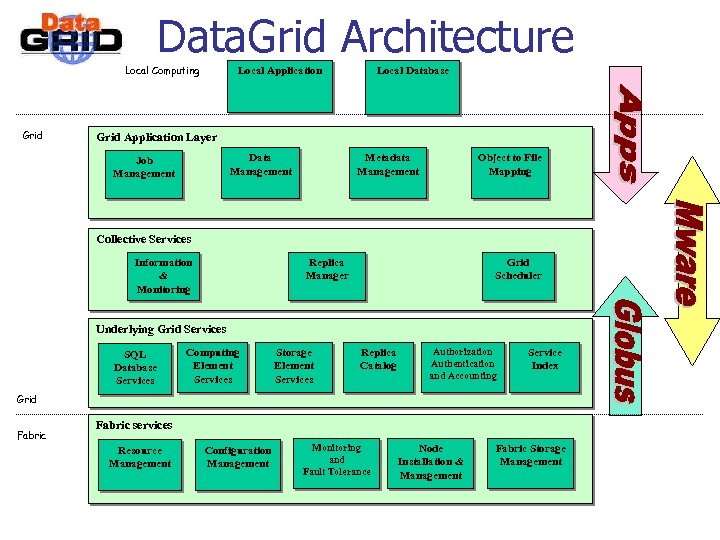

DataGrid Architecture

- Local Computing
  - Local Application
  - Local Database
- Grid
  - Grid Application Layer
    - Data Management
    - Job Management
    - Metadata Management
    - Object to File Mapping
  - Collective Services
    - Information & Monitoring
    - Replica Manager
    - Grid Scheduler
  - Underlying Grid Services
    - SQL Database Services
    - Computing Element Services
    - Storage Element Services
    - Replica Catalog
    - Authorization, Authentication and Accounting
    - Service Index
- Grid Fabric services
  - Resource Management
  - Configuration Management
  - Monitoring and Fault Tolerance
  - Node Installation & Management
  - Fabric Storage Management

DataGrid achievements

- Testbed 1: first release of EDG middleware
  - First workload management system
    - "Super scheduling" component using application data and computing elements requirements
  - File Replication Tools (GDMP), Replica Catalog, SQL Grid Database Service, …
  - Tools for farm installation and configuration
  - …
- Used for real production demos

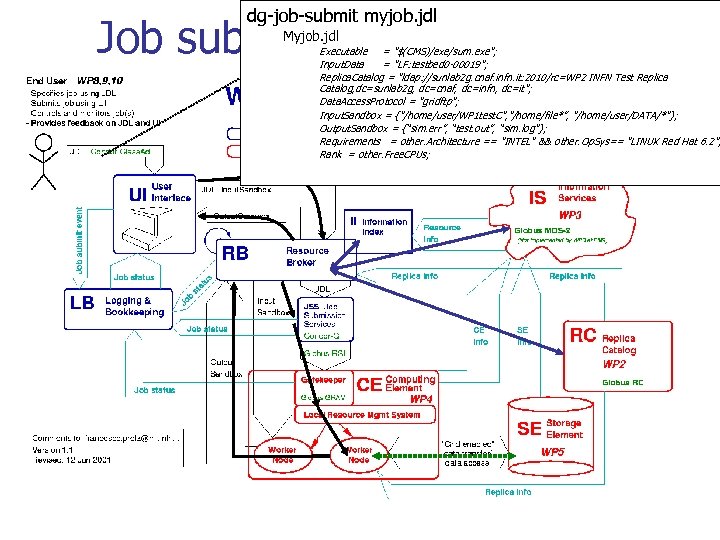

Job submission scenario

    dg-job-submit myjob.jdl

myjob.jdl:

    Executable = "$(CMS)/exe/sum.exe";
    InputData = "LF:testbed0-00019";
    ReplicaCatalog = "ldap://sunlab2g.cnaf.infn.it:2010/rc=WP2 INFN Test Replica Catalog, dc=sunlab2g, dc=cnaf, dc=infn, dc=it";
    DataAccessProtocol = "gridftp";
    InputSandbox = {"/home/user/WP1testC", "/home/file*", "/home/user/DATA/*"};
    OutputSandbox = {"sim.err", "test.out", "sim.log"};
    Requirements = other.Architecture == "INTEL" && other.OpSys == "LINUX Red Hat 6.2";
    Rank = other.FreeCPUs;
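
The `Requirements` and `Rank` attributes in the JDL above express a matchmaking rule: keep only the resources that satisfy the requirements, then prefer the one with the highest rank. The sketch below mimics that rule in Python. It is not the EDG resource broker: the `ComputingElement` class, the resource names and the `match` function are hypothetical, and data location is ignored.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ComputingElement:
    name: str          # hypothetical identifier, for illustration only
    architecture: str  # plays the role of other.Architecture
    op_sys: str        # plays the role of other.OpSys
    free_cpus: int     # plays the role of other.FreeCPUs


def requirements(other: ComputingElement) -> bool:
    """Python rendering of the job's Requirements expression."""
    return other.architecture == "INTEL" and other.op_sys == "LINUX Red Hat 6.2"


def rank(other: ComputingElement) -> int:
    """Python rendering of the job's Rank expression."""
    return other.free_cpus


def match(resources: List[ComputingElement]) -> Optional[ComputingElement]:
    """Return the best-ranked resource that meets the requirements, if any."""
    candidates = [ce for ce in resources if requirements(ce)]
    return max(candidates, key=rank, default=None)


# Made-up resources to exercise the rule.
resources = [
    ComputingElement("ce-a", "INTEL", "LINUX Red Hat 6.2", 4),
    ComputingElement("ce-b", "INTEL", "LINUX Red Hat 6.2", 12),
    ComputingElement("ce-c", "SPARC", "Solaris", 64),
]
best = match(resources)
print(best.name if best else "no matching resource")
```

Here `ce-c` has the most free CPUs but fails the requirements, so the job goes to `ce-b`.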

Other HEP Grid initiatives

- PPDG (US)
- GriPhyN (US)
- DataTag & iVDGL
  - Transatlantic testbeds
- HENP InterGrid Coordination Board
- LHC Computing Grid Project

Grid approach not only for HEP applications …

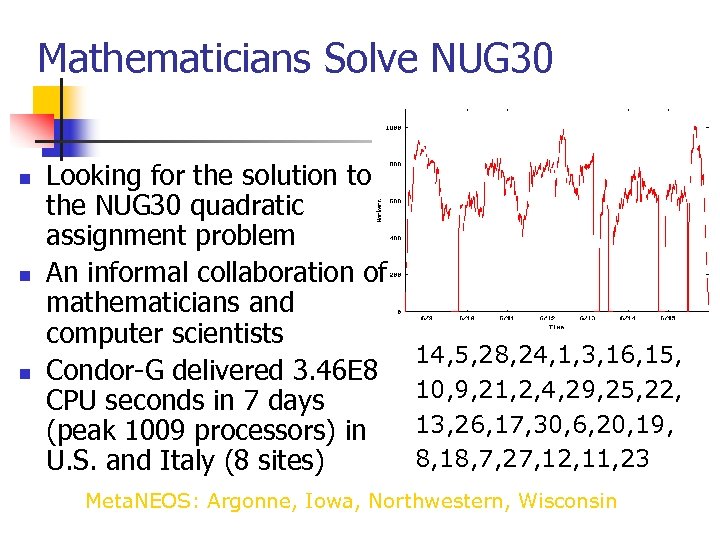

Mathematicians Solve NUG30

- Looking for the solution to the NUG30 quadratic assignment problem
- An informal collaboration of mathematicians and computer scientists
- Condor-G delivered 3.46E8 CPU seconds in 7 days (peak 1009 processors) in U.S. and Italy (8 sites)

14, 5, 28, 24, 1, 3, 16, 15, 10, 9, 21, 2, 4, 29, 25, 22, 13, 26, 17, 30, 6, 20, 19, 8, 18, 7, 27, 12, 11, 23

MetaNEOS: Argonne, Iowa, Northwestern, Wisconsin
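
The figures quoted above allow a quick sanity check: dividing the delivered CPU time by the wall-clock time gives the average number of busy processors. The sketch below does that arithmetic and also checks that the 30 numbers shown on the slide form a permutation of 1..30. Variable names are illustrative, and treating the 7 days as exactly 7 × 24 hours is an assumption.

```python
CPU_SECONDS = 3.46e8     # delivered by Condor-G, from the slide
WALL_DAYS = 7            # from the slide, assumed to be exactly 7 x 24 hours
PEAK_PROCESSORS = 1009   # from the slide

wall_seconds = WALL_DAYS * 24 * 3600
average_processors = CPU_SECONDS / wall_seconds
fraction_of_peak = average_processors / PEAK_PROCESSORS

print(f"average processors in use: {average_processors:.0f}")
print(f"fraction of peak: {fraction_of_peak:.0%}")

# The number sequence shown on the slide.
assignment = [14, 5, 28, 24, 1, 3, 16, 15, 10, 9, 21, 2, 4, 29, 25,
              22, 13, 26, 17, 30, 6, 20, 19, 8, 18, 7, 27, 12, 11, 23]
is_permutation = sorted(assignment) == list(range(1, 31))
print(f"valid permutation of 1..30: {is_permutation}")
```

On average roughly 570 processors were busy, a little over half of the peak.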

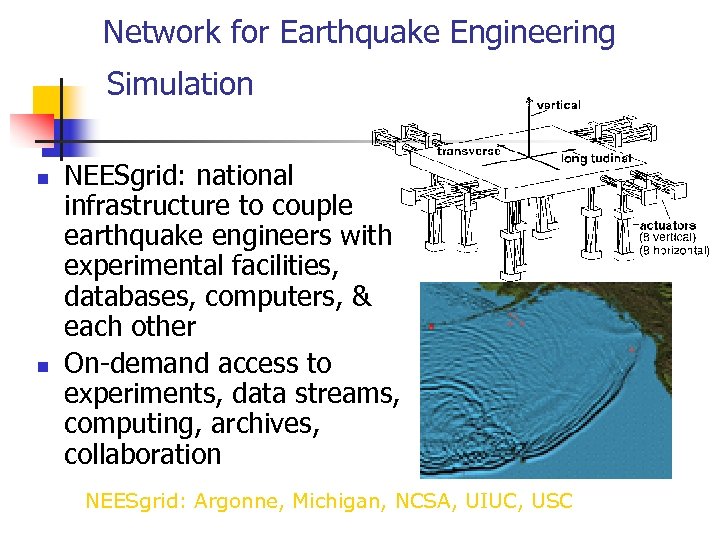

Network for Earthquake Engineering Simulation

- NEESgrid: national infrastructure to couple earthquake engineers with experimental facilities, databases, computers, & each other
- On-demand access to experiments, data streams, computing, archives, collaboration

NEESgrid: Argonne, Michigan, NCSA, UIUC, USC

Global Grid Forum

- Mission
  - To focus on the promotion and development of Grid technologies and applications via the development and documentation of "best practices," implementation guidelines, and standards with an emphasis on "rough consensus and running code"
- An Open Process for Development of Standards
- A Forum for Information Exchange
- A Regular Gathering to Encourage Shared Effort
- See http://www.globalgridforum.org

Summary

- Regional Centers: Multi-Tier model as the envisaged approach for the LHC computing challenge
  - Many issues to be addressed
- The Grid approach
  - Many problems still to be solved …
  - R&D required
  - … but some tools and frameworks already available
    - Being used for real applications
