- Number of slides: 24

The German HEP Community Grid

P.Malzacher@gsi.de for the German HEP Community Grid, 27 March 2007, ISGC 2007, Taipei

Agenda: D-Grid in context; HEP Community Grid; HEP-CG Work Packages; Summary

About 10,000 scientists from 1,000 institutes in more than 100 countries use huge accelerators to investigate fundamental problems of particle physics.

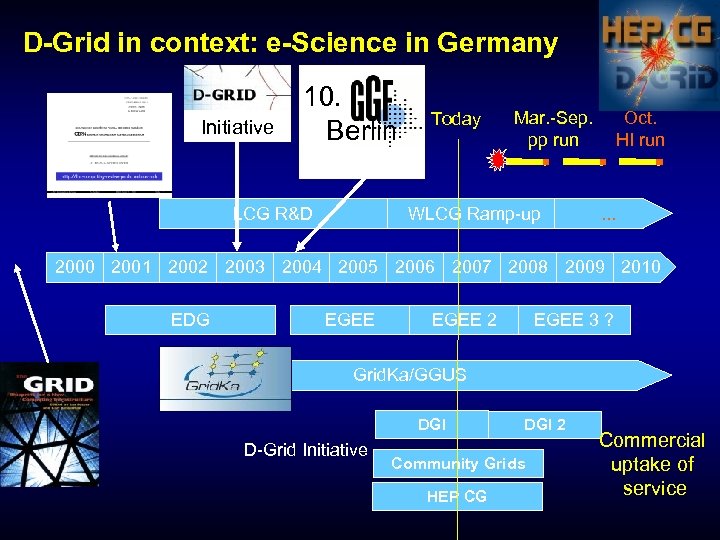

D-Grid in context: e-Science in Germany. [Timeline figure, 2000 to 2010: LCG R&D; WLCG ramp-up with pp run (Mar.-Sep.) and HI run (Oct.); EDG, EGEE 2, EGEE 3 (?); GridKa/GGUS; D-Grid Initiative (DGI, DGI 2) with Community Grids including HEP CG; commercial uptake of services.]

www.d-grid.de

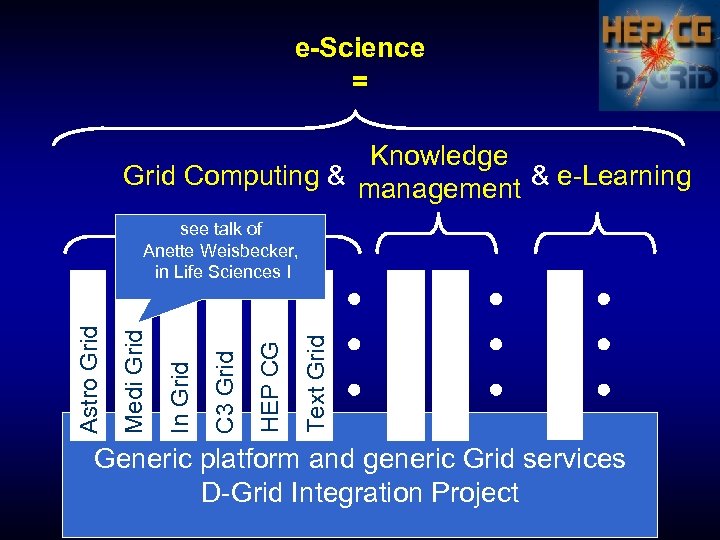

e-Science = Grid Computing & Knowledge Management & e-Learning. Community Grids: C3Grid, TextGrid, HEP CG, InGrid, MediGrid, AstroGrid (see the talk by Anette Weisbecker in Life Sciences I). They are built on a generic platform and generic Grid services provided by the D-Grid Integration Project.

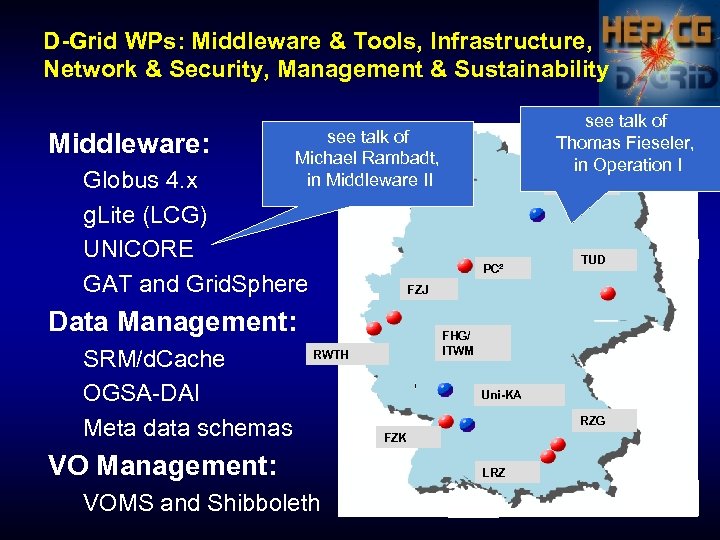

D-Grid WPs: Middleware & Tools, Infrastructure, Network & Security, Management & Sustainability
- Middleware: Globus 4.x, gLite (LCG), UNICORE, GAT and GridSphere (RRZN, PC², TUD, FZJ); see the talk by Michael Rambadt in Middleware II
- Data Management: SRM/dCache, OGSA-DAI, metadata schemas (FHG/ITWM, RWTH); see the talk by Thomas Fieseler in Operation I
- VO Management: VOMS and Shibboleth (Uni-KA, RZG, FZK, LRZ)

LHC groups in Germany:
- ALICE: Darmstadt, Frankfurt, Heidelberg, Münster
- ATLAS: Berlin, Bonn, Dortmund, Dresden, Freiburg, Gießen, Heidelberg, Mainz, Mannheim, München, Siegen, Wuppertal
- CMS: Aachen, Hamburg, Karlsruhe
- LHCb: Heidelberg, Dortmund

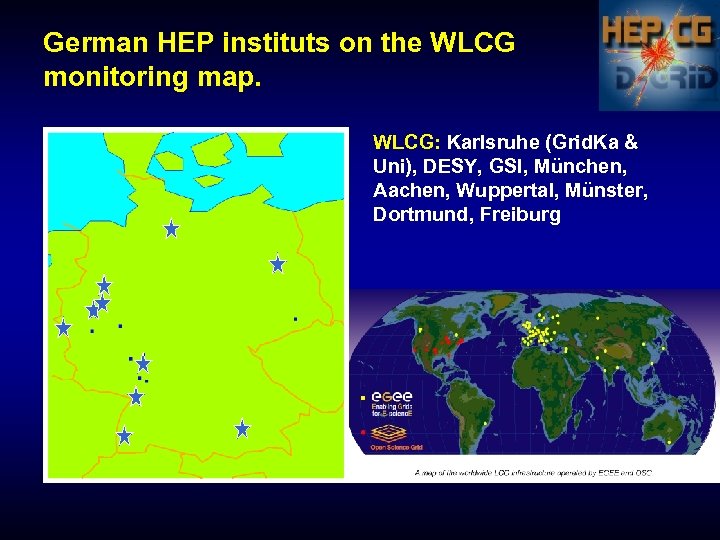

German HEP institutes on the WLCG monitoring map. WLCG sites: Karlsruhe (GridKa & Uni), DESY, GSI, München, Aachen, Wuppertal, Münster, Dortmund, Freiburg

HEP CG partners:
- Project partners: Uni Dortmund, TU Dresden, LMU München, Uni Siegen, Uni Wuppertal, DESY (Hamburg & Zeuthen), GSI
- Via subcontract: Uni Freiburg, Konrad-Zuse-Zentrum Berlin
- Unfunded: Uni Mainz, HU Berlin, MPI f. Physik München, LRZ München, Uni Karlsruhe, MPI Heidelberg, RZ Garching, John von Neumann Institut für Computing, FZ Karlsruhe

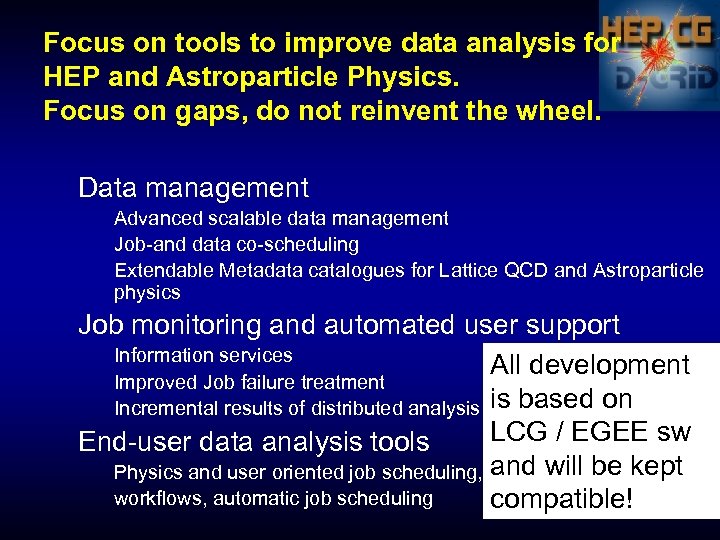

Focus on tools to improve data analysis for HEP and astroparticle physics. Focus on gaps; do not reinvent the wheel.
- Data management: advanced scalable data management; job and data co-scheduling; extendable metadata catalogues for lattice QCD and astroparticle physics
- Job monitoring and automated user support: information services; improved job failure treatment; incremental results of distributed analysis
- End-user data analysis tools: physics- and user-oriented job scheduling, workflows, automatic job scheduling

All development is based on LCG/EGEE software and will be kept compatible!

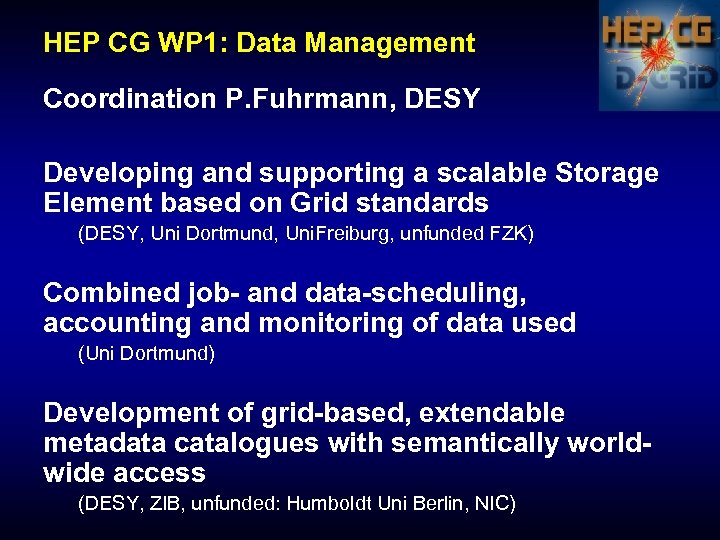

HEP CG WP 1: Data Management (coordination: P. Fuhrmann, DESY)
- Developing and supporting a scalable Storage Element based on Grid standards (DESY, Uni Dortmund, Uni Freiburg, unfunded: FZK)
- Combined job and data scheduling, accounting and monitoring of the data used (Uni Dortmund)
- Development of grid-based, extendable metadata catalogues with semantic worldwide access (DESY, ZIB, unfunded: Humboldt Uni Berlin, NIC)

Scalable Storage Element: dCache.ORG
- Large installations: thousands of pools, PB of disk storage, hundreds of file transfers per second, run by no more than 2 FTEs
- Small installations: only one host, ~10 TB, zero maintenance

The dCache project is funded by DESY, Fermilab, the Open Science Grid and in part by the Nordic Data Grid Facility. HEP CG contributes professional product management: code versioning, packaging, user support and test suites.

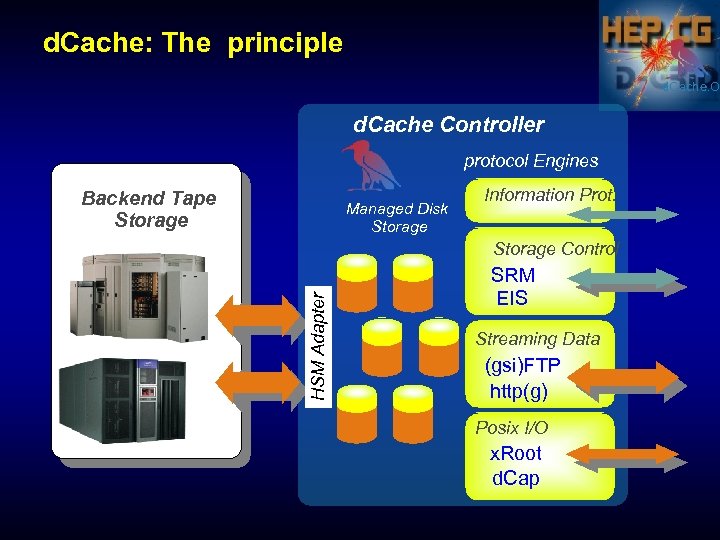

dCache: the principle. [Diagram: the dCache controller with protocol engines in front of managed disk storage and a backend tape storage reached through an HSM adapter; storage control via SRM; information protocol via EIS; streaming data via (gsi)FTP, http(g), POSIX I/O, xRoot and dCap.]

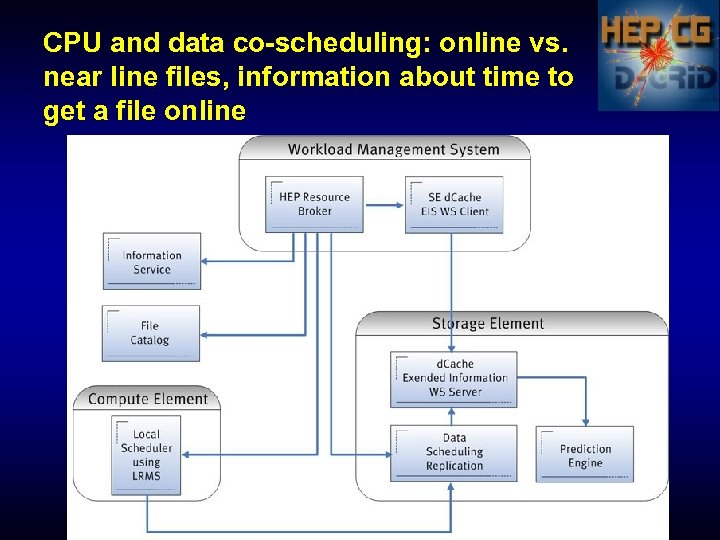

CPU and data co-scheduling: online vs. nearline files; information about the time needed to get a file online.
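
A minimal sketch of how online/nearline information could feed a co-scheduling decision. It assumes, hypothetically, that for each candidate site the scheduler knows the expected queue wait. It also assumes that for each input file the scheduler knows whether the file is online or nearline, plus an estimated staging time. The names `FileReplica`, `Site` and `chooseSite` are illustrative only. They are not part of dCache or of the HEP-CG software.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

// Hypothetical model: a replica is either online (on disk) or nearline
// (on tape), with an estimated time in seconds to bring it online.
struct FileReplica {
    std::string lfn;         // logical file name
    bool online;             // true if already on disk
    double secondsToOnline;  // staging estimate, ignored if online
};

// Hypothetical view of a site that holds replicas of all input files.
struct Site {
    std::string name;
    double queueWaitSeconds;            // expected wait for a free CPU slot
    std::vector<FileReplica> replicas;  // replicas of the job's input files
};

// The data are ready once the slowest nearline file has been staged.
double dataReadySeconds(const Site& site) {
    double ready = 0.0;
    for (const auto& r : site.replicas)
        if (!r.online) ready = std::max(ready, r.secondsToOnline);
    return ready;
}

// Staging and queueing can overlap, so the job starts when both are done.
double estimatedStartSeconds(const Site& site) {
    return std::max(site.queueWaitSeconds, dataReadySeconds(site));
}

// Pick the site with the earliest estimated start (sites must be non-empty).
const Site& chooseSite(const std::vector<Site>& sites) {
    assert(!sites.empty());
    return *std::min_element(sites.begin(), sites.end(),
        [](const Site& a, const Site& b) {
            return estimatedStartSeconds(a) < estimatedStartSeconds(b);
        });
}
```

Staging and queueing overlap, so the estimate takes their maximum rather than their sum. A site with a longer queue but all files already online can therefore win over a site that must first recall a file from tape.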

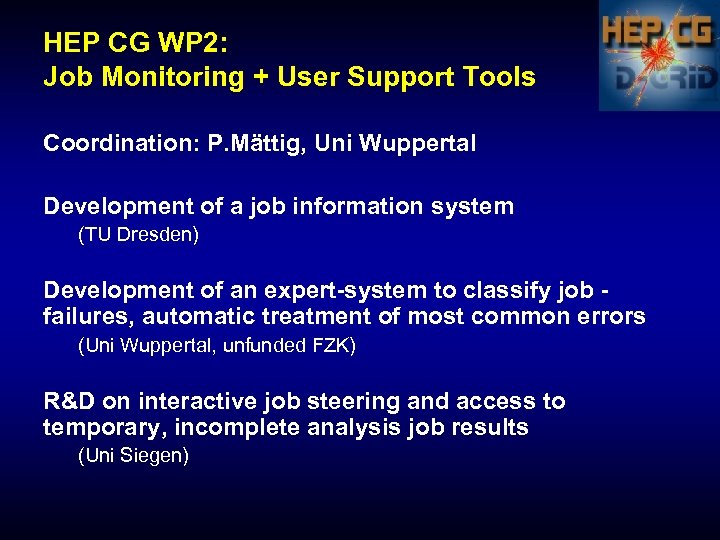

HEP CG WP 2: Job Monitoring + User Support Tools (coordination: P. Mättig, Uni Wuppertal)
- Development of a job information system (TU Dresden)
- Development of an expert system to classify job failures, with automatic treatment of the most common errors (Uni Wuppertal, unfunded: FZK)
- R&D on interactive job steering and access to temporary, incomplete analysis job results (Uni Siegen)

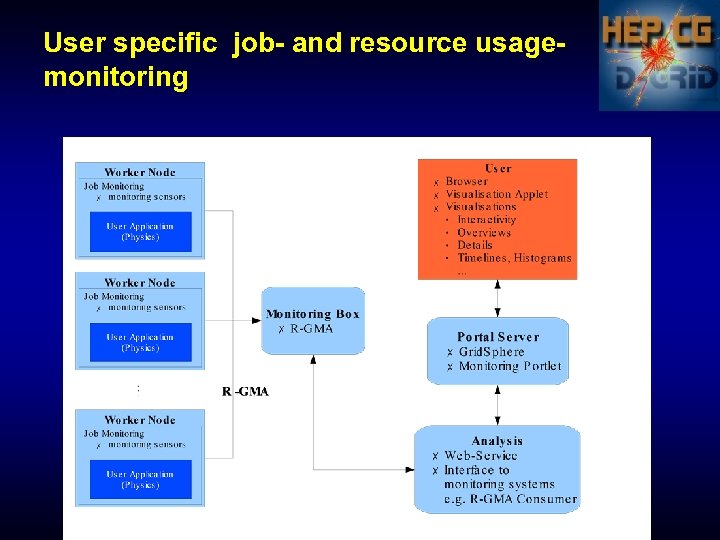

User-specific job and resource usage monitoring

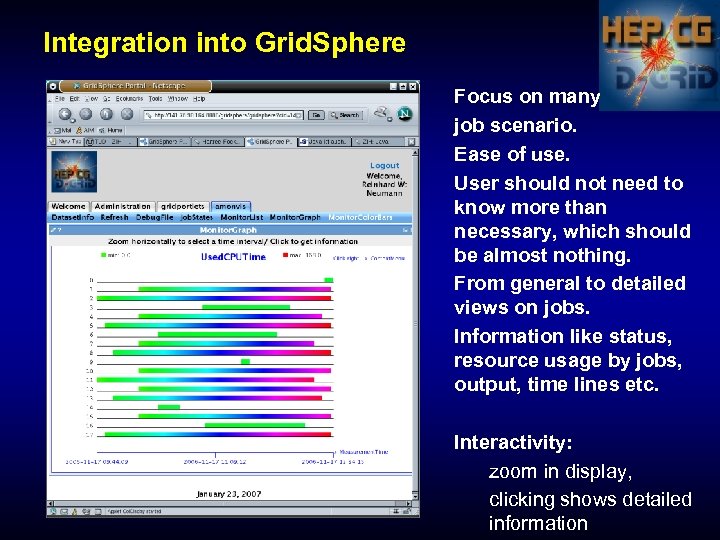

- Integration into GridSphere
- Focus on the many-job scenario
- Ease of use: users should not need to know more than necessary, which should be almost nothing
- From general to detailed views on jobs
- Information such as status, resource usage by jobs, output, timelines, etc.
- Interactivity: zoomable display; clicking shows detailed information
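
The "general to detailed" idea can be illustrated with a sketch over a hypothetical in-memory list of job records. The `JobRecord` fields and the function names are assumptions. They are not the data model of the actual job information system or of GridSphere.

```cpp
#include <map>
#include <string>
#include <vector>

// Hypothetical job record as a monitoring service might collect it.
struct JobRecord {
    std::string user;
    std::string jobId;
    std::string status;  // e.g. "Running", "Done", "Failed"
    double cpuSeconds;   // CPU time consumed so far
};

// General view: number of jobs per status for one user.
std::map<std::string, int> statusSummary(const std::vector<JobRecord>& jobs,
                                         const std::string& user) {
    std::map<std::string, int> counts;
    for (const auto& j : jobs)
        if (j.user == user) ++counts[j.status];
    return counts;
}

// Detailed view: the individual jobs of one user in a given status.
std::vector<JobRecord> jobsInStatus(const std::vector<JobRecord>& jobs,
                                    const std::string& user,
                                    const std::string& status) {
    std::vector<JobRecord> out;
    for (const auto& j : jobs)
        if (j.user == user && j.status == status) out.push_back(j);
    return out;
}

// Resource usage: total CPU time of one user's jobs.
double totalCpuSeconds(const std::vector<JobRecord>& jobs, const std::string& user) {
    double total = 0.0;
    for (const auto& j : jobs)
        if (j.user == user) total += j.cpuSeconds;
    return total;
}
```

A display would start from `statusSummary` and call `jobsInStatus` only when the user clicks on one status. This keeps the default view small even with thousands of jobs.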

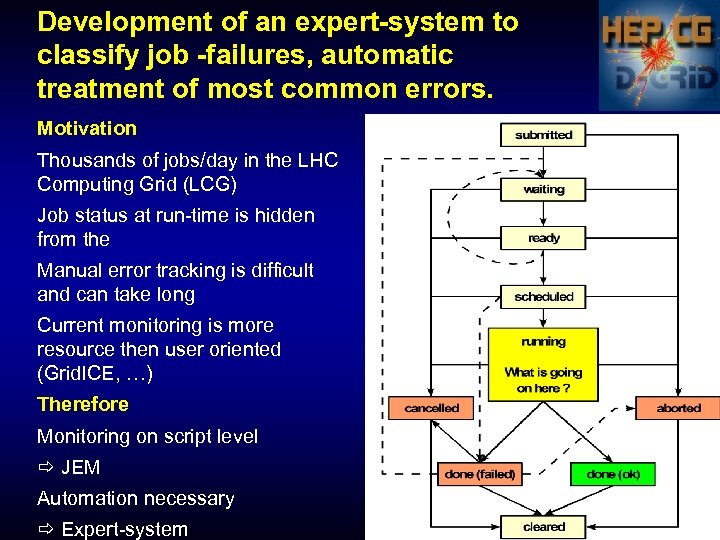

Development of an expert system to classify job failures, with automatic treatment of the most common errors.

Motivation:
- Thousands of jobs per day in the LHC Computing Grid (LCG)
- Job status at run time is hidden from the user
- Manual error tracking is difficult and can take a long time
- Current monitoring is more resource-oriented than user-oriented (GridICE, …)

Therefore:
- Monitoring on script level → JEM
- Automation necessary → expert system
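
The core of such an expert system can be sketched as an ordered list of rules. Each rule maps a symptom in a job's output to a failure class and a proposed treatment. The rules, categories and `Action` values below are invented examples. They are not the rule base used by JEM.

```cpp
#include <string>
#include <vector>

// Hypothetical treatments an automated system might propose.
enum class Action { Resubmit, NotifyUser, NotifySiteAdmin };

// One rule: if `pattern` occurs in the job log, the job belongs to `category`.
struct Rule {
    std::string pattern;
    std::string category;
    Action action;
};

struct Diagnosis {
    std::string category;
    Action action;
};

// The first matching rule wins, so more specific rules must come first.
// Unmatched failures are left to a human instead of being treated automatically.
Diagnosis classify(const std::string& log, const std::vector<Rule>& rules) {
    for (const auto& r : rules)
        if (log.find(r.pattern) != std::string::npos)
            return {r.category, r.action};
    return {"unknown", Action::NotifyUser};
}
```

Simple substring matching stands in here for whatever inference the real system performs. The point is the separation between classifying a failure and choosing its treatment.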

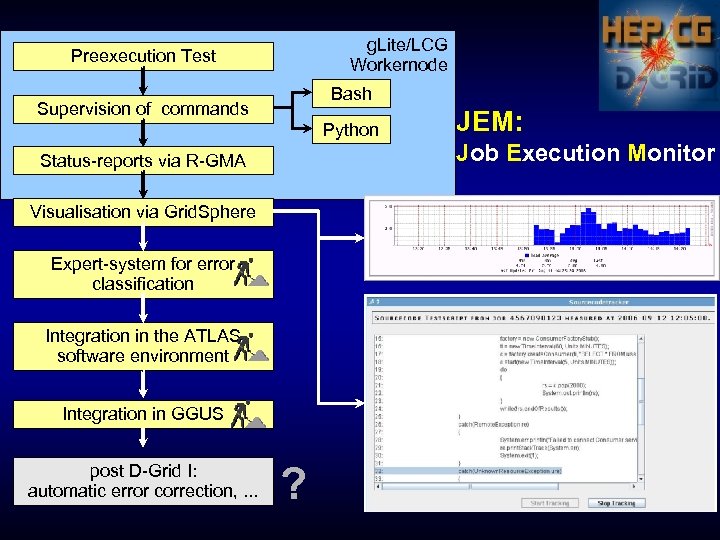

JEM: Job Execution Monitor
- On the gLite/LCG worker node: pre-execution test; supervision of commands (Bash, Python)
- Status reports via R-GMA
- Visualisation via GridSphere
- Expert system for error classification
- Integration into the ATLAS software environment
- Integration into GGUS
- Post D-Grid I: automatic error correction, …?

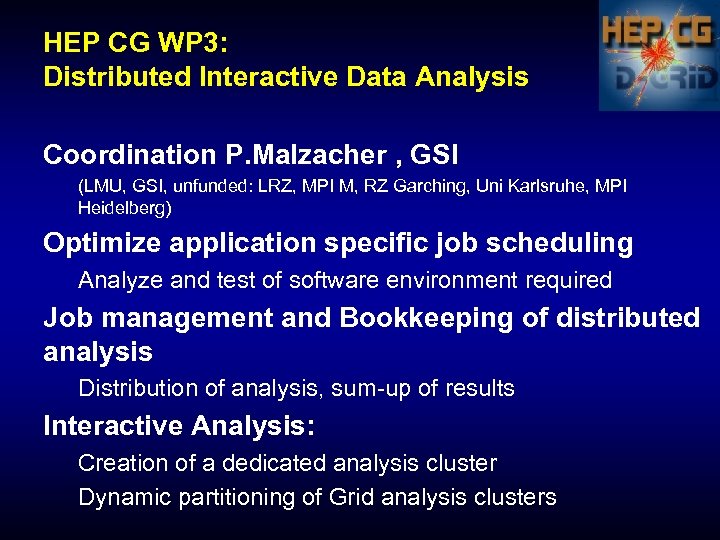

HEP CG WP 3: Distributed Interactive Data Analysis (coordination: P. Malzacher, GSI; partners: LMU, GSI, unfunded: LRZ, MPI M, RZ Garching, Uni Karlsruhe, MPI Heidelberg)
- Optimize application-specific job scheduling
- Analyze and test the required software environment
- Job management and bookkeeping of distributed analysis
- Distribution of the analysis and sum-up of the results
- Interactive analysis: creation of a dedicated analysis cluster; dynamic partitioning of Grid analysis clusters

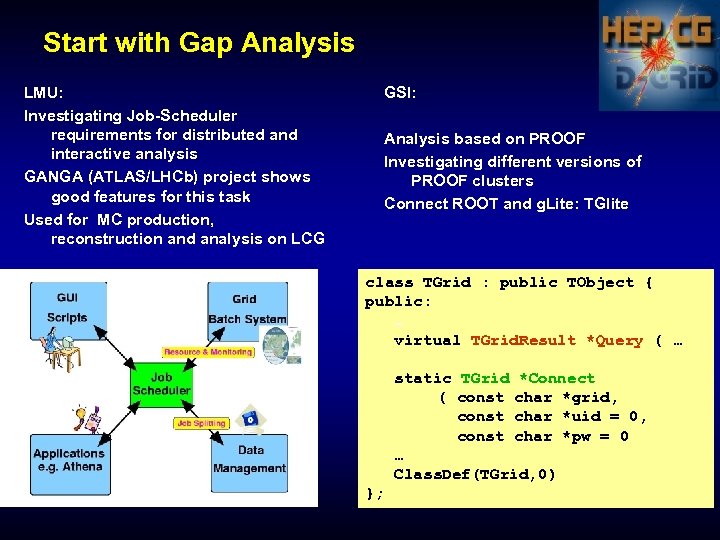

Start with a gap analysis:
- LMU: investigating job-scheduler requirements for distributed and interactive analysis. The GANGA (ATLAS/LHCb) project shows good features for this task; it is used for MC production, reconstruction and analysis on LCG.
- GSI: analysis based on PROOF; investigating different versions of PROOF clusters.

Connect ROOT and gLite: TGLite implements ROOT's TGrid interface (excerpt):

```cpp
class TGrid : public TObject {
public:
   // …
   virtual TGridResult *Query(/* … */);
   // …
   static TGrid *Connect(const char *grid, const char *uid = 0,
                         const char *pw = 0 /* … */);
   // …
   ClassDef(TGrid, 0)
};
```

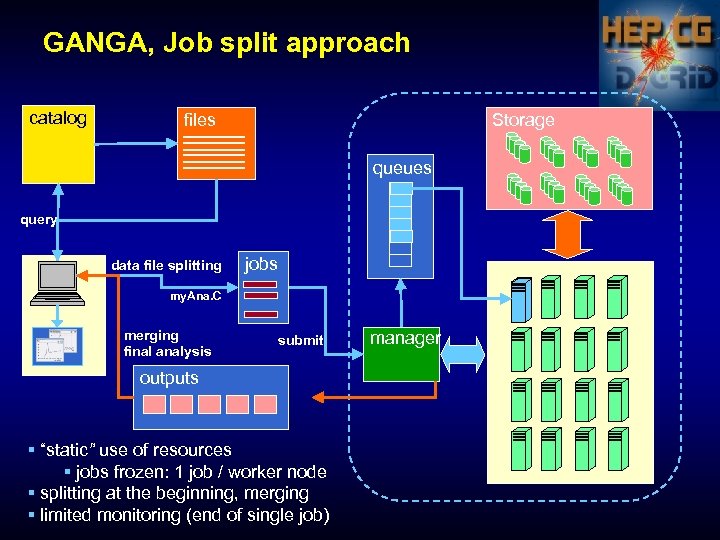

GANGA: the job-split approach. [Diagram: a query to the catalog yields the data files; the files are split into jobs running myAna.C and submitted to the queues by the manager; jobs read from storage; their outputs are merged into the final analysis.]
- "static" use of resources
- jobs frozen: 1 job / worker node
- splitting at the beginning, merging at the end
- limited monitoring (only at the end of a single job)
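
The static split-and-merge pattern can be sketched as follows. `splitFiles` and `mergeCounts` are hypothetical helpers, not GANGA's API (GANGA itself is driven from Python). The merge is reduced here to summing per-job event counts.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical helper: split the input file list into nJobs chunks once,
// at submission time. Chunk sizes differ by at most one file.
std::vector<std::vector<std::string>> splitFiles(const std::vector<std::string>& files,
                                                 std::size_t nJobs) {
    assert(nJobs > 0);
    std::vector<std::vector<std::string>> jobs(nJobs);
    const std::size_t base = files.size() / nJobs;
    const std::size_t extra = files.size() % nJobs;  // first `extra` jobs get one more file
    std::size_t next = 0;
    for (std::size_t j = 0; j < nJobs; ++j) {
        const std::size_t n = base + (j < extra ? 1 : 0);
        for (std::size_t k = 0; k < n; ++k) jobs[j].push_back(files[next++]);
    }
    return jobs;
}

// Hypothetical merge step: it can only run after every job has finished.
// Each job's output is reduced to a single event count.
long mergeCounts(const std::vector<long>& perJobCounts) {
    long total = 0;
    for (long c : perJobCounts) total += c;
    return total;
}
```

Once `splitFiles` has run, the assignment is frozen. If one worker node is slow, its chunk still determines when the merge can start.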

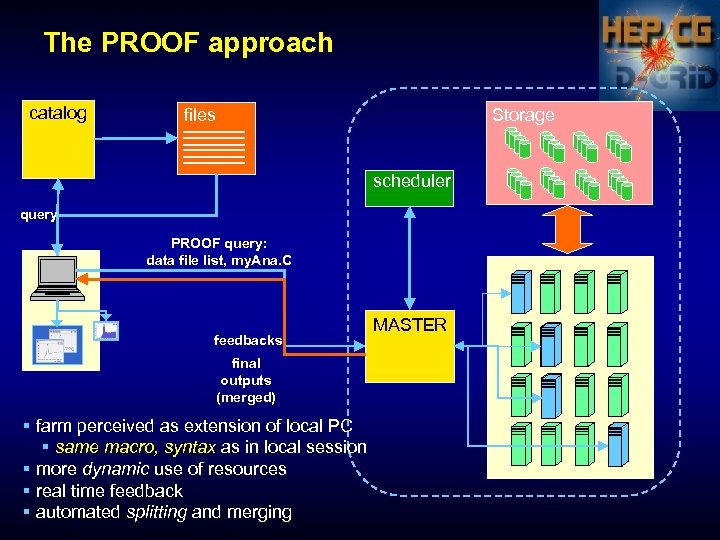

The PROOF approach. [Diagram: the client sends a PROOF query (data file list, myAna.C) to the MASTER, which uses the catalog and scheduler to distribute the work over the files in storage; feedback arrives during processing, and the final outputs are returned merged.]
- farm perceived as an extension of the local PC
- same macro and syntax as in a local session
- more dynamic use of resources
- real-time feedback
- automated splitting and merging
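
Here is a small single-process simulation of a pull model, for contrast with static splitting. Whichever worker becomes idle first takes the next packet of events. This is a sketch under simplifying assumptions (fixed per-worker speeds, no network or file-access costs). It is not PROOF's actual packetizer.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <functional>
#include <queue>
#include <utility>
#include <vector>

struct PullResult {
    std::vector<std::size_t> packetsPerWorker;  // packets each worker pulled
    double makespanSeconds;                     // when the last packet finished
};

// Simulated pull-based distribution: the worker that becomes idle first
// asks the master for the next packet. `speeds` are in events per second.
PullResult simulatePull(std::size_t nEvents, std::size_t packetSize,
                        const std::vector<double>& speeds) {
    assert(packetSize > 0 && !speeds.empty());
    using Slot = std::pair<double, std::size_t>;  // (time worker is idle, worker index)
    std::priority_queue<Slot, std::vector<Slot>, std::greater<Slot>> idle;
    for (std::size_t w = 0; w < speeds.size(); ++w) idle.push({0.0, w});

    PullResult res{std::vector<std::size_t>(speeds.size(), 0), 0.0};
    for (std::size_t next = 0; next < nEvents; next += packetSize) {
        const std::size_t n = std::min(packetSize, nEvents - next);  // last packet may be short
        Slot s = idle.top();  // earliest idle worker pulls the packet
        idle.pop();
        const double done = s.first + static_cast<double>(n) / speeds[s.second];
        ++res.packetsPerWorker[s.second];
        res.makespanSeconds = std::max(res.makespanSeconds, done);
        idle.push({done, s.second});
    }
    return res;
}
```

Consider 1000 events in packets of 100, with workers processing 400 and 100 events per second. The fast worker pulls 8 of the 10 packets and everything finishes after 2 s. A fixed 5/5 split would keep the slow worker busy for 5 s.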

Summary:
• Rather late compared to other national Grid initiatives, a German e-science program is now well under way. It is built on top of three different middleware flavors: UNICORE, Globus 4 and gLite.
• The HEP-CG production environment is based on LCG/EGEE software.
• The HEP-CG focuses on gaps in three work packages: data management, automated user support and interactive analysis.

Challenges for HEP:
• Very heterogeneous disciplines and stakeholders.
• LCG/EGEE is not the basis for many other partners.

More information:
• I showed only a few highlights; for more information see http://www.d-grid.de