- Number of slides: 34

The Gelato Federation: What is it exactly?
Sverre Jarp, March 2003

Gelato is a collaboration
- Goal:
  - Promote Linux on Itanium-based systems
- Sponsor:
  - Hewlett-Packard
  - Others coming
- Members:
  - 13 (right now)
  - Mainly from the High Performance/High Throughput Community
  - Expected to grow rapidly

SJ – Mar 2003

Current members
- North America:
  - NCAR (National Center for Atmospheric Research)
  - NCSA (National Center for Supercomputing Applications)
  - PNNL (Pacific Northwest National Lab)
  - PSC (Pittsburgh Supercomputing Center)
  - University of Illinois at Urbana-Champaign
  - University of Waterloo
- Europe:
  - CERN
  - DIKU (Datalogic Institute, University of Copenhagen)
  - ESIEE (École Supérieure d'Ingénieurs, near Paris)
  - INRIA (Institut National de Recherche en Informatique et en Automatique)
- Far East/Australia:
  - Bioinformatics Institute (Singapore)
  - Tsinghua University (Beijing)
  - University of New South Wales (Sydney)

Center of gravity
- Web portal (http://www.gelato.org)
- Rich content:
  - (Pointers to) Open source IA-64 applications
    - Examples:
      - ROOT (from CERN)
      - OSCAR (Cluster mgmt software from NCSA)
      - OpenImpact compiler (UIUC)
  - News
  - Information, advice, hints
    - Related to IPF, Linux kernel, etc.
  - Member overview
    - Who is who, etc.

Current development focus
- Six “performance” areas:
  - Single system scalability
    - From 2-way to 16-way (HP, Fort Collins)
  - Cluster Scalability and Performance Mgmt
    - Up to 128 nodes: NCSA
  - Parallel File System
    - BII
  - Compilers
    - UIUC
  - Performance tools, management
    - HP Labs

CERN Requirement #1
- Better C++ performance through:
  - Better compilers (Gelato focus)
  - Faster systems (Madison @ 1.5 GHz)
  - Both!

Further Gelato Research and Development
- Linux memory management
  - Superpages
  - TLB sharing between processes
- IA-64 pre-emption support
- Compilers/Debuggers
  - OpenImpact C compiler (UIUC)
  - Open Research Compiler enhancements (Tsinghua)
    - Fortran, C, C++
  - Parallel debugger (Tsinghua)

The “opencluster” and the “openlab”
Sverre Jarp, IT Division, CERN

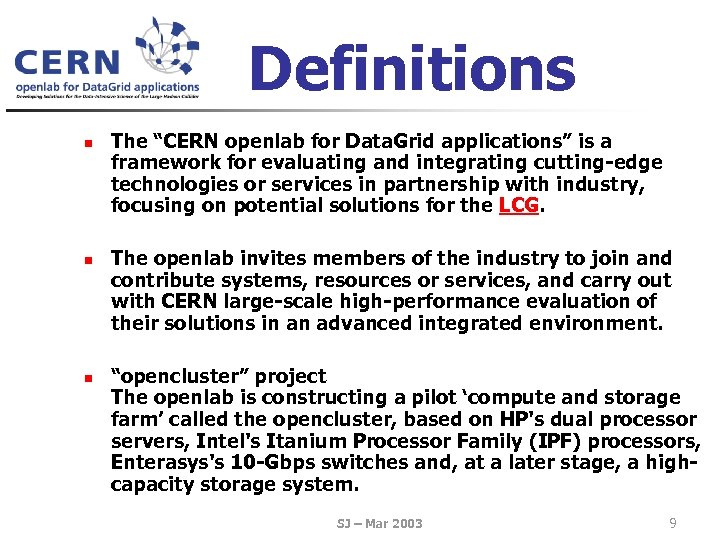

Definitions
- The “CERN openlab for DataGrid applications” is a framework for evaluating and integrating cutting-edge technologies or services in partnership with industry, focusing on potential solutions for the LHC Computing Grid (LCG).
- The openlab invites members of industry to join, to contribute systems, resources or services, and to carry out, together with CERN, large-scale high-performance evaluations of their solutions in an advanced integrated environment.
- “opencluster” project
  - The openlab is constructing a pilot ‘compute and storage farm’ called the opencluster, based on HP's dual-processor servers, Intel's Itanium Processor Family (IPF) processors, Enterasys's 10-Gbps switches and, at a later stage, a high-capacity storage system.

Technology onslaught
- Large amounts of new technology will become available between now and LHC start-up. A few HW examples:
  - Processors
    - SMT (Simultaneous Multi-Threading)
    - CMP (Chip Multiprocessor)
    - Ubiquitous 64-bit computing (even in laptops)
  - Memory
    - DDR II-400 (fast)
    - Servers with 1 TB (large)
  - Interconnect
    - PCI-X 2
    - PCI-Express (serial)
    - InfiniBand
  - Computer architecture
    - Chipsets on steroids
    - Modular computers (cf. ISC 2003 Keynote Presentation “Building Efficient HPC Systems from Catalog Components”, Justin Rattner, Intel Corp., Santa Clara, USA)
  - Disks
    - Serial-ATA
  - Ethernet
    - 10 GbE (NICs and switches)
    - 1 Terabit backplanes
- Not all, but some of this will definitely be used by LHC.

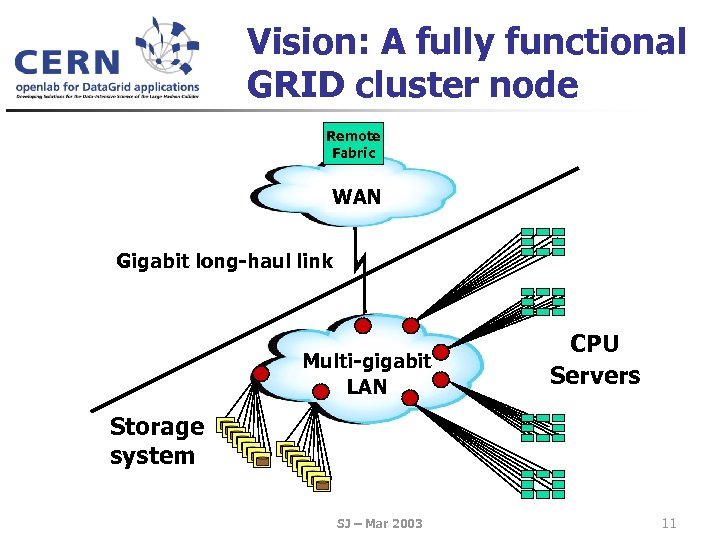

Vision: A fully functional GRID cluster node
[Figure labels: Remote Fabric; WAN; Gigabit long-haul link; Multi-gigabit LAN; CPU Servers; Storage system]

opencluster strategy
- Demonstrate promising technologies
  - LCG and LHC on-line
- Deploy the technologies well beyond the opencluster itself
  - 10 GbE interconnect in the LHC Testbed
  - Storage subsystem as CERN-wide pilot
- Act as a 64-bit Porting Centre
  - CMS and Alice already active; ATLAS is interested
  - CASTOR 64-bit reference platform
- Focal point for vendor collaborations
  - For instance, in the “10 GbE Challenge” everybody must collaborate in order to be successful
- Channel for providing information to vendors
  - Thematic workshops

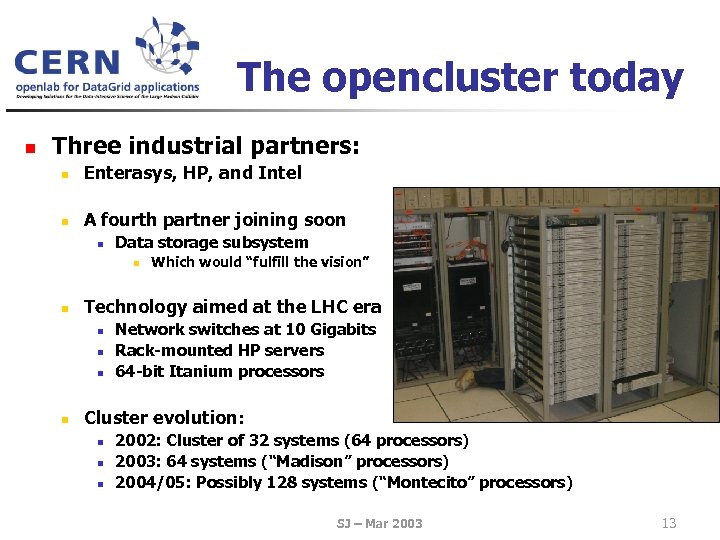

The opencluster today
- Three industrial partners:
  - Enterasys, HP, and Intel
- A fourth partner joining soon:
  - Data storage subsystem
  - Which would “fulfill the vision”
- Technology aimed at the LHC era:
  - Network switches at 10 Gigabits
  - Rack-mounted HP servers
  - 64-bit Itanium processors
- Cluster evolution:
  - 2002: Cluster of 32 systems (64 processors)
  - 2003: 64 systems (“Madison” processors)
  - 2004/05: Possibly 128 systems (“Montecito” processors)

Activity overview
- Over the last few months:
  - Cluster installation, middleware
  - Application porting, compiler installations, benchmarking
  - Initialization of “Challenges”
  - Planned first thematic workshop
- Future:
  - Porting of grid middleware
  - Grid integration and benchmarking
  - Storage partnership
  - Cluster upgrades/expansion
  - New generation network switches

opencluster in detail
- Integration of the cluster:
  - Fully automated network installations
  - 32 nodes + development nodes
  - Red Hat Advanced Workstation 2.1
  - OpenAFS, LSF
  - GNU, Intel, ORC compilers (64-bit)
    - ORC (Open Research Compiler, based on a compiler that originally belonged to SGI)
  - CERN middleware: CASTOR data mgmt
- CERN applications:
  - Porting, benchmarking, performance improvements
  - CLHEP, GEANT4, ROOT, Sixtrack, CERNLIB, etc.
  - Database software (MySQL, Oracle?)
- Many thanks to my colleagues in ADC, FIO and CS

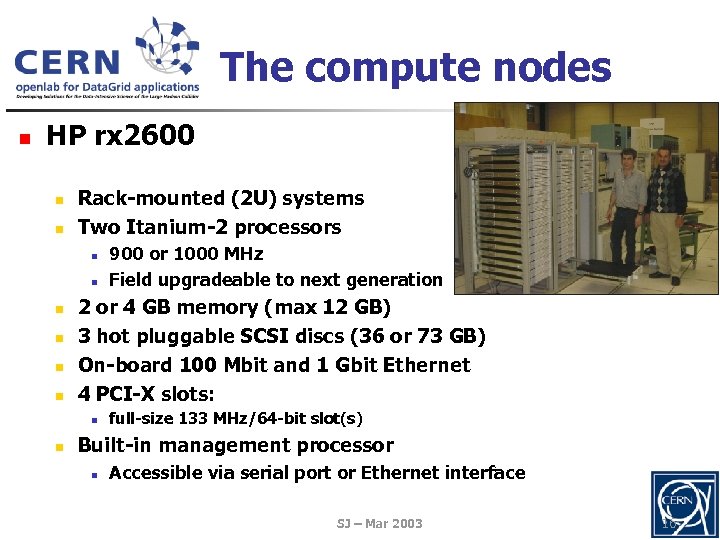

The compute nodes
- HP rx2600:
  - Rack-mounted (2U) systems
  - Two Itanium 2 processors
    - 900 or 1000 MHz
    - Field upgradeable to next generation
  - 2 or 4 GB memory (max 12 GB)
  - 3 hot-pluggable SCSI discs (36 or 73 GB)
  - On-board 100 Mbit and 1 Gbit Ethernet
  - 4 PCI-X slots:
    - Full-size 133 MHz/64-bit slot(s)
  - Built-in management processor
    - Accessible via serial port or Ethernet interface

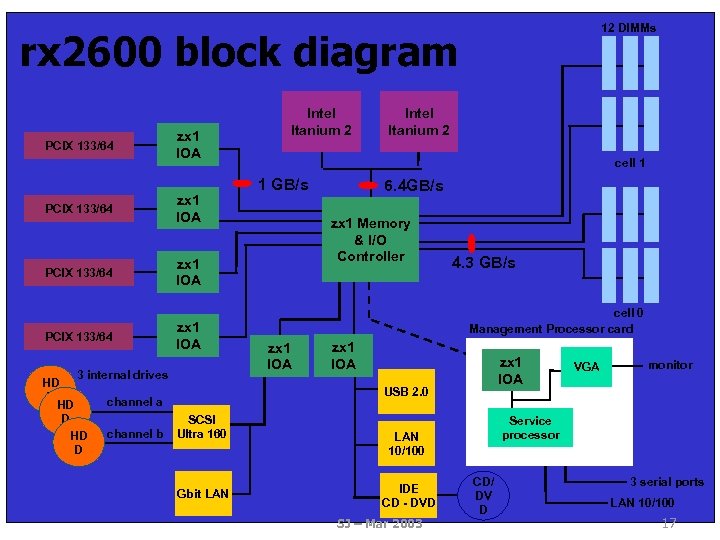

rx2600 block diagram
[Figure: two Intel Itanium 2 processors and the zx1 Memory & I/O Controller with 12 DIMMs (links labeled 6.4 GB/s and 4.3 GB/s); zx1 IOAs with 1 GB/s links to the PCI-X 133/64 slots, SCSI Ultra 160 (3 internal drives), Gbit LAN, USB 2.0, IDE CD/DVD and VGA; management processor card with service-processor LAN 10/100 and 3 serial ports.]
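As a rough cross-check of the 1 GB/s links in the diagram, the theoretical peak of one PCI-X 133/64 slot is its bus width times its clock. A minimal sketch, assuming decimal units, one transfer per clock and a nominal 133 MHz clock (protocol overhead lowers the usable figure in practice):

```python
def pci_x_peak_mb_per_s(bus_width_bits: int, clock_mhz: float) -> float:
    """Theoretical peak PCI-X bandwidth in MB/s (decimal), one transfer per clock."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * clock_mhz  # bytes per transfer * MHz = MB/s


# A 10 Gbit/s Ethernet link carries 10e9 / 8 bytes per second in each direction.
TEN_GBE_MB_PER_S = 10_000 / 8

peak = pci_x_peak_mb_per_s(64, 133)
print(f"PCI-X 133/64 peak: {peak:.0f} MB/s")
print(f"10 GbE line rate:  {TEN_GBE_MB_PER_S:.0f} MB/s")
print(f"slot / link ratio: {peak / TEN_GBE_MB_PER_S:.2f}")
```

On these nominal numbers (about 1064 MB/s against 1250 MB/s), a single PCI-X 133/64 slot cannot carry a full 10 Gbit/s stream in one direction, which fits with “PCI-X slot and overall speed” being listed among the possible bottlenecks of the 10 GbE Challenge.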

Benchmarks
- Comment: Note that 64-bit benchmarks pay a performance penalty for LP64, i.e. 64-bit pointers (and 64-bit longs). To understand the exact cost for our benchmarks, we need to wait for AMD systems that can run either a 32-bit OS or a 64-bit OS natively.
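One visible part of the LP64 penalty is data-structure growth: every pointer (and every long) goes from 4 to 8 bytes, so pointer-heavy objects occupy more memory and cache. A minimal sketch that computes struct sizes under the ILP32 and LP64 data models, assuming natural alignment for every field (real ABIs can differ in details); the three-field node is a hypothetical example, not a CERN data structure:

```python
# Sizes in bytes of C scalar types under two data models.
# ILP32: int, long and pointers are 32-bit; LP64: long and pointers are 64-bit.
DATA_MODELS = {
    "ILP32": {"int": 4, "long": 4, "ptr": 4},
    "LP64": {"int": 4, "long": 8, "ptr": 8},
}


def struct_size(fields, model):
    """Return sizeof(struct) for fields laid out in declaration order.

    Assumes natural alignment (each field aligned to its own size),
    a simplification of real ABIs.
    """
    sizes = DATA_MODELS[model]
    offset = 0
    max_align = 1
    for field in fields:
        size = sizes[field]
        offset = -(-offset // size) * size  # round offset up to the field's alignment
        offset += size
        max_align = max(max_align, size)
    # Tail padding keeps every element of an array of structs aligned.
    return -(-offset // max_align) * max_align


# Hypothetical tree node: two child pointers plus an int payload.
node = ["ptr", "ptr", "int"]
for model in DATA_MODELS:
    print(model, struct_size(node, model))
```

For this node the struct doubles from 12 to 24 bytes, half of it pointers plus padding, so fewer nodes fit in each cache line.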

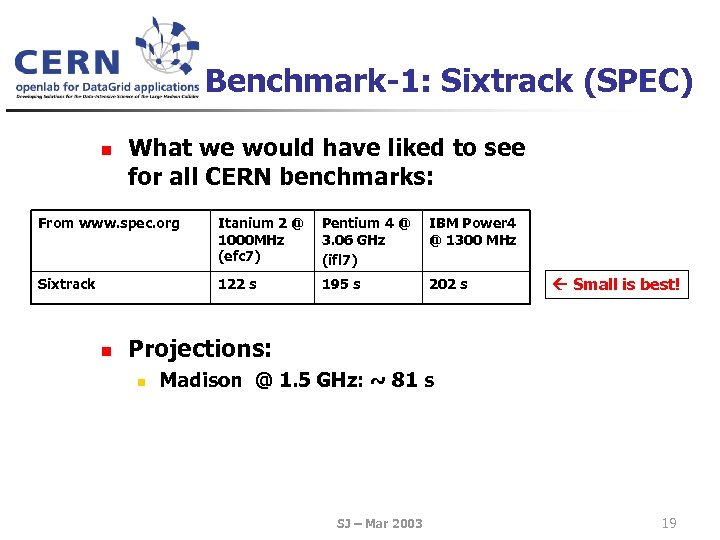

Benchmark-1: Sixtrack (SPEC)
- What we would have liked to see for all CERN benchmarks (results from www.spec.org; small is best!):

| | Itanium 2 @ 1000 MHz (efc 7) | Pentium 4 @ 3.06 GHz (ifl 7) | IBM Power4 @ 1300 MHz |
|---|---|---|---|
| Sixtrack | 122 s | 202 s | 195 s |

- Projections:
  - Madison @ 1.5 GHz: ~81 s
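The Madison projection is consistent with simple frequency scaling of the measured Itanium 2 time. A sketch, assuming run time is inversely proportional to clock frequency (which ignores memory-bound effects and any cache changes in the new processor):

```python
def project_runtime(seconds: float, measured_mhz: float, target_mhz: float) -> float:
    """Scale a measured run time to another clock, assuming time is proportional to 1/frequency."""
    return seconds * measured_mhz / target_mhz


# Measured Sixtrack time on Itanium 2 @ 1000 MHz, taken from the slide (seconds).
itanium2_seconds = 122

madison = project_runtime(itanium2_seconds, 1000, 1500)
print(f"Madison @ 1.5 GHz: ~{madison:.0f} s")
```

122 s × 1000/1500 ≈ 81.3 s, matching the ~81 s on the slide; any gain from Madison's larger cache would come on top of this estimate.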

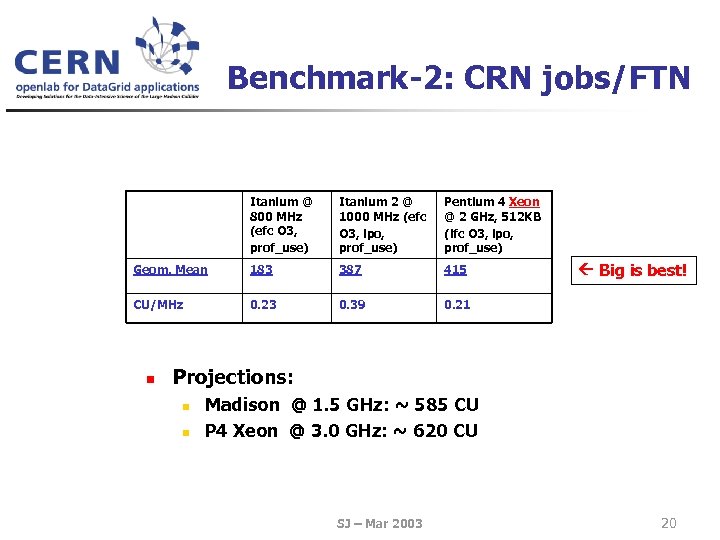

Benchmark-2: CRN jobs/FTN

| | Itanium @ 800 MHz (efc -O3, prof_use) | Itanium 2 @ 1000 MHz (efc -O3, ipo, prof_use) | Pentium 4 Xeon @ 2 GHz, 512 KB (ifc -O3, ipo, prof_use) |
|---|---|---|---|
| Geom. Mean (CU) | 183 | 387 | 415 |
| CU/MHz | 0.23 | 0.39 | 0.21 |

- Big is best!
- Projections:
  - Madison @ 1.5 GHz: ~585 CU
  - P4 Xeon @ 3.0 GHz: ~620 CU
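The CU/MHz row is the geometric-mean score divided by the clock frequency, and the projections multiply that per-MHz figure by the target clock. A sketch under that linear-scaling assumption; with unrounded ratios it gives about 580 CU for Madison (the slide's ~585 CU corresponds to the rounded 0.39 CU/MHz) and about 622 CU for the 3.0 GHz Xeon:

```python
# Geometric-mean CERN units (CU) and clock frequencies (MHz) from the slide.
results = {
    "Itanium @ 800 MHz": (183, 800),
    "Itanium 2 @ 1000 MHz": (387, 1000),
    "Pentium 4 Xeon @ 2 GHz": (415, 2000),
}


def cu_per_mhz(cu: float, mhz: float) -> float:
    """Performance per MHz, the efficiency figure in the CU/MHz row."""
    return cu / mhz


def project_cu(cu: float, mhz: float, target_mhz: float) -> float:
    """Project a score to a new clock, assuming CU scales linearly with frequency."""
    return cu_per_mhz(cu, mhz) * target_mhz


for name, (cu, mhz) in results.items():
    print(f"{name}: {cu_per_mhz(cu, mhz):.2f} CU/MHz")

print(f"Madison @ 1.5 GHz: ~{project_cu(387, 1000, 1500):.1f} CU")
print(f"P4 Xeon @ 3.0 GHz: ~{project_cu(415, 2000, 3000):.1f} CU")
```

The per-MHz view is what makes the comparison interesting: Itanium 2 does almost twice as much work per clock as the Xeon, while the Xeon compensates with a higher frequency.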

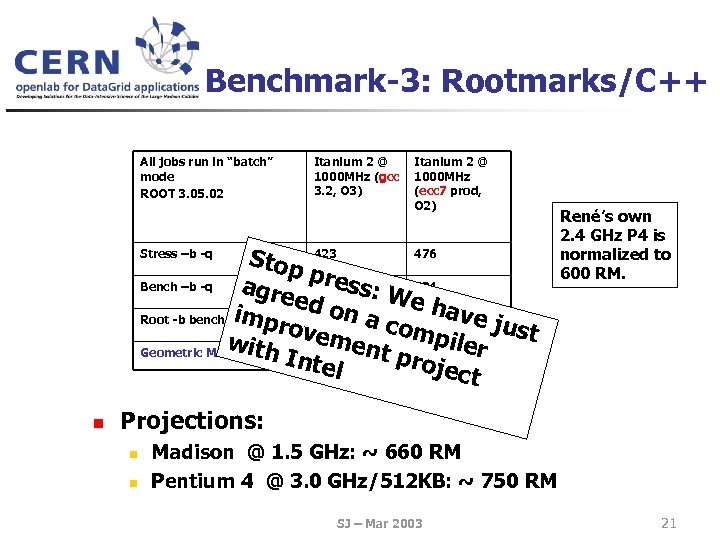

Benchmark-3: Rootmarks/C++
- All jobs run in “batch” mode, ROOT 3.05.02
- Systems compared: Itanium 2 @ 1000 MHz (gcc 3.2, -O3) and Itanium 2 @ 1000 MHz (ecc 7 prod, -O2)
- Tests: Bench -b -q; Root -b benchmarks.C -q; Stress -b -q
- Rootmarks: 476, 423, 449, 534, 344, 325; geometric means 402 and 436
- Stop press: We have just agreed on a compiler improvement project with Intel
- René's own 2.4 GHz P4 is normalized to 600 RM.
- Projections:
  - Madison @ 1.5 GHz: ~660 RM
  - Pentium 4 @ 3.0 GHz/512 KB: ~750 RM
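The Geometric Mean figure aggregates the per-test Rootmarks, and the P4 projection follows from René's normalization (600 RM at 2.4 GHz) scaled linearly to 3.0 GHz. A minimal sketch of both steps, assuming linear clock scaling; the three scores passed to `geometric_mean` are placeholders, not measurements:

```python
import math


def geometric_mean(scores):
    """Geometric mean computed via logarithms to avoid overflow on long lists."""
    return math.exp(sum(math.log(s) for s in scores) / len(scores))


def scale_with_clock(score: float, measured_ghz: float, target_ghz: float) -> float:
    """Project a 'bigger is better' score to another clock, assuming linear scaling."""
    return score * target_ghz / measured_ghz


# P4 reference: 600 RM at 2.4 GHz, projected to 3.0 GHz.
print(f"P4 @ 3.0 GHz: ~{scale_with_clock(600, 2.4, 3.0):.0f} RM")

# Placeholder scores, only to show the aggregation.
print(f"geometric mean: {geometric_mean([100, 200, 400]):.1f}")
```

If the higher Itanium 2 geometric mean (436 RM) is taken as the baseline, the same scaling to 1.5 GHz gives about 654 RM, close to the ~660 RM Madison projection.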

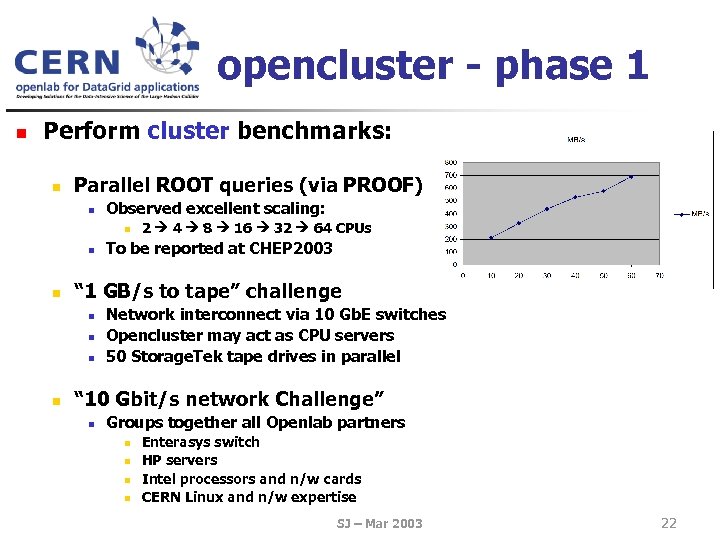

opencluster - phase 1
- Perform cluster benchmarks:
  - Parallel ROOT queries (via PROOF)
    - Observed excellent scaling: 2, 4, 8, 16, 32, 64 CPUs
    - To be reported at CHEP 2003
- “1 GB/s to tape” challenge
  - Network interconnect via 10 GbE switches
  - Opencluster may act as CPU servers
  - 50 StorageTek tape drives in parallel
- “10 Gbit/s network Challenge”
  - Groups together all openlab partners:
    - Enterasys switch
    - HP servers
    - Intel processors and n/w cards
    - CERN Linux and n/w expertise
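For the “1 GB/s to tape” challenge, the aggregate target is spread over 50 drives in parallel, so each drive must sustain about 20 MB/s. A sketch of that arithmetic, assuming decimal units (1 GB = 1000 MB) and a perfectly even split across drives; the 30 MB/s drive rate in the second helper's test is a hypothetical value:

```python
import math


def per_drive_rate(target_mb_per_s: float, drives: int) -> float:
    """Throughput each drive must sustain if the load is split evenly."""
    return target_mb_per_s / drives


def drives_needed(target_mb_per_s: float, drive_mb_per_s: float) -> int:
    """Smallest number of drives whose combined rate meets the target."""
    return math.ceil(target_mb_per_s / drive_mb_per_s)


print(f"{per_drive_rate(1000, 50):.1f} MB/s per StorageTek drive")
```

Real tape streams rarely split perfectly evenly, so in practice each drive needs some headroom above this average.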

10 GbE Challenge

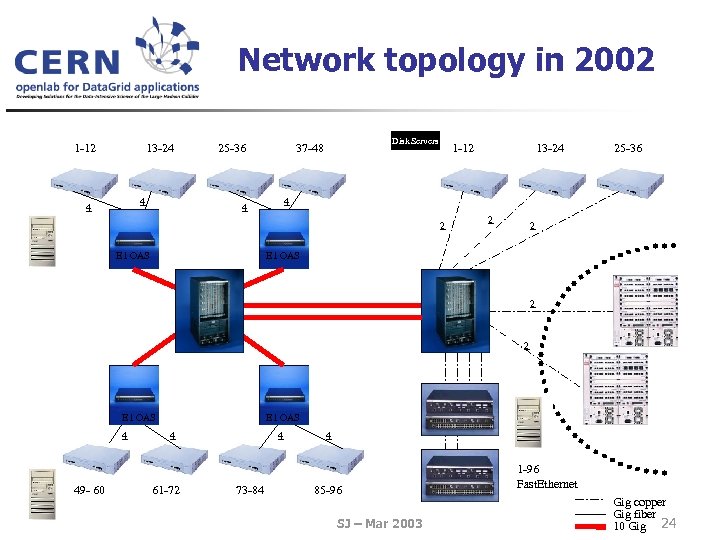

Network topology in 2002
[Figure: E1 OAS switches interconnecting node groups 1-12 through 85-96 and the disk servers; link legend: Fast Ethernet, Gig copper, Gig fiber, 10 Gig.]

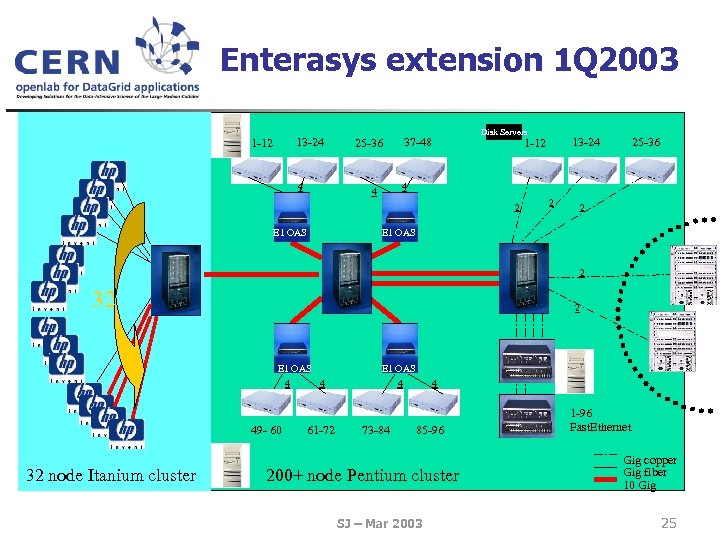

Enterasys extension 1Q 2003
[Figure: E1 OAS switches interconnecting node groups 1-12 through 85-96 and the disk servers, now also connecting the 32-node Itanium cluster and a 200+ node Pentium cluster; link legend: Fast Ethernet, Gig copper, Gig fiber, 10 Gig.]

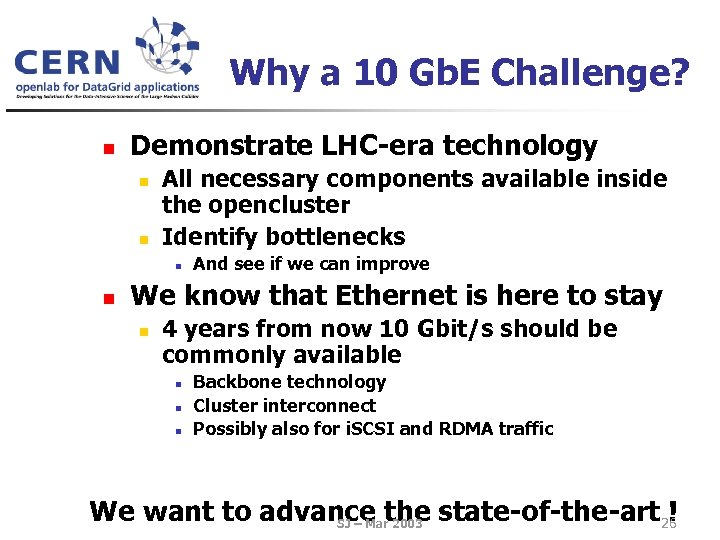

Why a 10 GbE Challenge?
- Demonstrate LHC-era technology
  - All necessary components available inside the opencluster
- Identify bottlenecks
  - And see if we can improve
- We know that Ethernet is here to stay
  - 4 years from now 10 Gbit/s should be commonly available
    - Backbone technology
    - Cluster interconnect
    - Possibly also for iSCSI and RDMA traffic
- We want to advance the state-of-the-art!

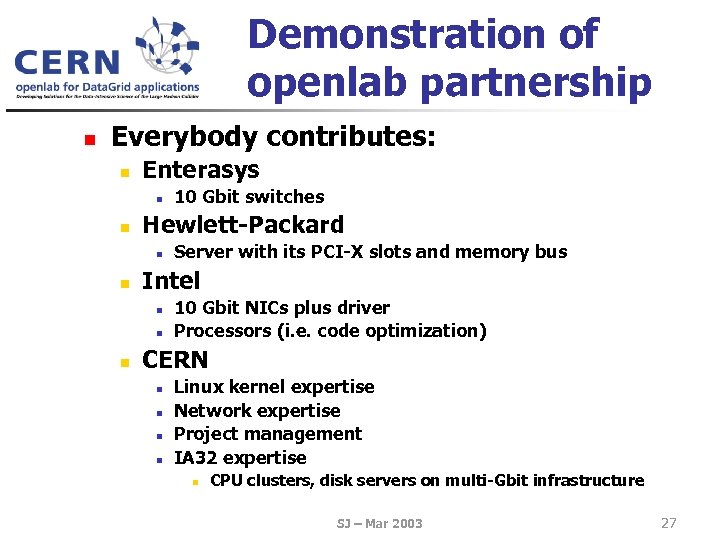

Demonstration of openlab partnership
- Everybody contributes:
  - Enterasys
    - 10 Gbit switches
  - Hewlett-Packard
    - Server with its PCI-X slots and memory bus
  - Intel
    - 10 Gbit NICs plus driver
    - Processors (i.e. code optimization)
  - CERN
    - Linux kernel expertise
    - Network expertise
    - Project management
    - IA32 expertise
      - CPU clusters, disk servers on multi-Gbit infrastructure

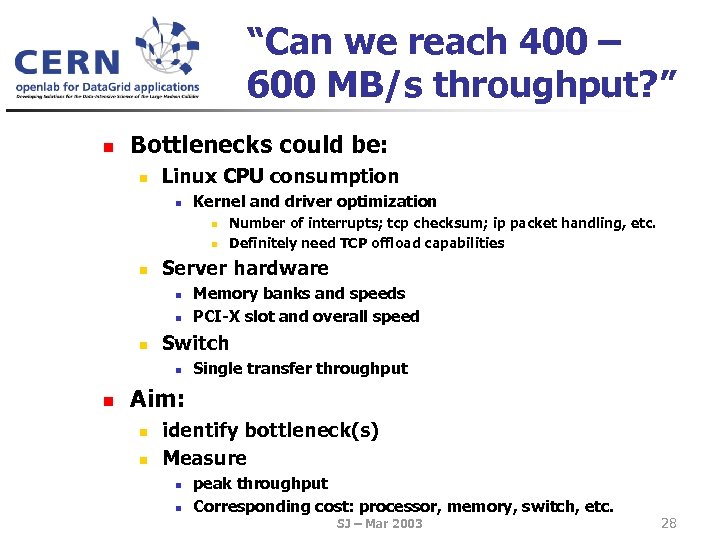

“Can we reach 400–600 MB/s throughput?”
- Bottlenecks could be:
  - Linux CPU consumption
    - Kernel and driver optimization
    - Number of interrupts; TCP checksum; IP packet handling, etc.
    - Definitely need TCP offload capabilities
  - Server hardware
    - Memory banks and speeds
    - PCI-X slot and overall speed
  - Switch
    - Single transfer throughput
- Aim:
  - Identify bottleneck(s)
  - Measure:
    - Peak throughput
    - Corresponding cost: processor, memory, switch, etc.
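To put the 400–600 MB/s target in context: 10 Gbit/s is 1250 MB/s, so the goal is roughly a third to a half of line rate, and with standard 1500-byte packets that already means hundreds of thousands of packets per second, which is why interrupts and per-packet TCP/IP work appear among the suspected bottlenecks. A back-of-the-envelope sketch, assuming decimal units and ignoring header and framing overhead; the 9000-byte jumbo-frame case is an illustrative assumption, not something the slide proposes:

```python
def line_rate_mb_per_s(gbit_per_s: float) -> float:
    """Convert a link speed in Gbit/s to MB/s (decimal units)."""
    return gbit_per_s * 1000 / 8


def packets_per_second(mb_per_s: float, payload_bytes: int) -> float:
    """Packets per second needed to carry a payload rate, ignoring header overhead."""
    return mb_per_s * 1_000_000 / payload_bytes


link = line_rate_mb_per_s(10)  # 10 GbE line rate in MB/s
for target in (400, 600):
    share = target / link
    standard = packets_per_second(target, 1500)
    jumbo = packets_per_second(target, 9000)
    print(f"{target} MB/s = {share:.0%} of line rate; "
          f"{standard:,.0f} pkt/s at 1500 B, {jumbo:,.0f} pkt/s at 9000 B")
```

At 600 MB/s this works out to 400,000 packets per second with 1500-byte packets, a per-packet load that TCP offload is meant to take off the host CPU.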

Gridification

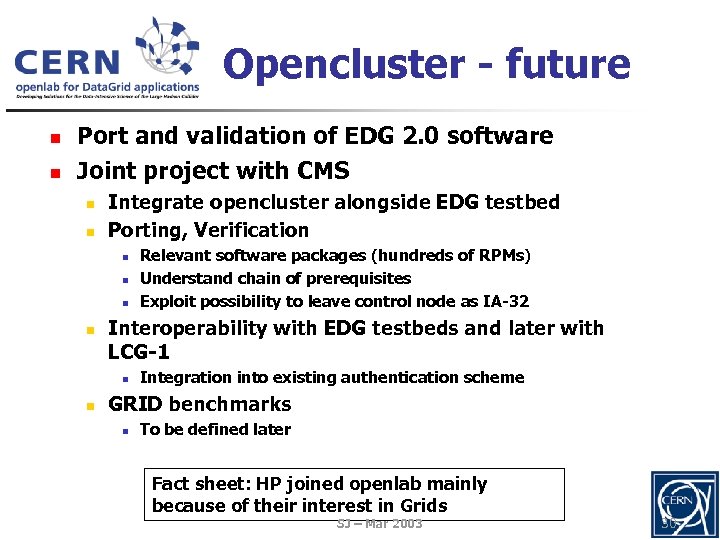

Opencluster - future
- Port and validation of EDG 2.0 software
  - Joint project with CMS
- Integrate opencluster alongside EDG testbed
  - Porting, verification
    - Relevant software packages (hundreds of RPMs)
    - Understand chain of prerequisites
    - Exploit possibility to leave control node as IA-32
  - Interoperability with EDG testbeds and later with LCG-1
    - Integration into existing authentication scheme
- GRID benchmarks
  - To be defined later
- Fact sheet: HP joined openlab mainly because of their interest in Grids

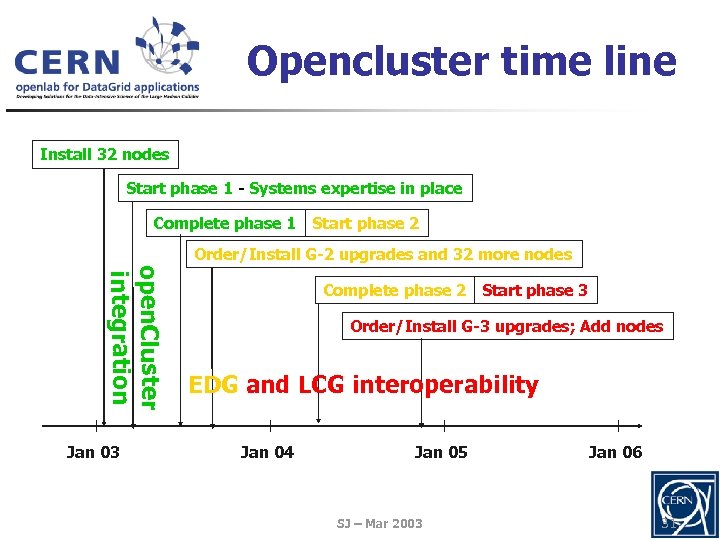

Opencluster time line
[Figure: timeline from Jan 03 to Jan 06 with milestones: Install 32 nodes; Start phase 1 (systems expertise in place); Complete phase 1 / Start phase 2; Order/install G-2 upgrades and 32 more nodes; opencluster integration; Complete phase 2 / Start phase 3; Order/install G-3 upgrades, add nodes; EDG and LCG interoperability.]

Recap: opencluster strategy
- Demonstrate promising IT technologies
  - File system technology to come
- Deploy the technologies well beyond the opencluster itself
- Focal point for vendor collaborations
- Channel for providing information to vendors

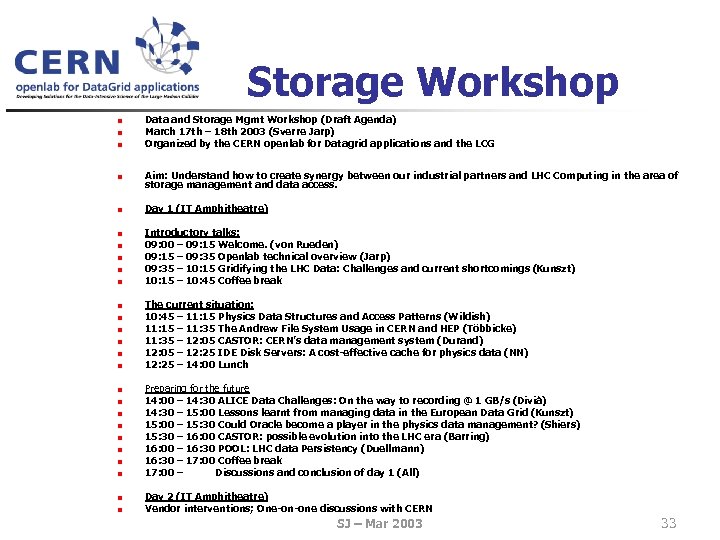

Storage Workshop
- Data and Storage Mgmt Workshop (Draft Agenda), March 17th–18th 2003 (Sverre Jarp)
- Organized by the CERN openlab for DataGrid applications and the LCG
- Aim: Understand how to create synergy between our industrial partners and LHC Computing in the area of storage management and data access.
- Day 1 (IT Amphitheatre)
  - Introductory talks:
    - 09:00–09:15 Welcome (von Rueden)
    - 09:15–09:35 Openlab technical overview (Jarp)
    - 09:35–10:15 Gridifying the LHC Data: Challenges and current shortcomings (Kunszt)
    - 10:15–10:45 Coffee break
  - The current situation:
    - 10:45–11:15 Physics Data Structures and Access Patterns (Wildish)
    - 11:15–11:35 The Andrew File System Usage in CERN and HEP (Többicke)
    - 11:35–12:05 CASTOR: CERN's data management system (Durand)
    - 12:05–12:25 IDE Disk Servers: A cost-effective cache for physics data (NN)
    - 12:25–14:00 Lunch
  - Preparing for the future:
    - 14:00–14:30 ALICE Data Challenges: On the way to recording @ 1 GB/s (Divià)
    - 14:30–15:00 Lessons learnt from managing data in the European Data Grid (Kunszt)
    - 15:00–15:30 Could Oracle become a player in physics data management? (Shiers)
    - 15:30–16:00 CASTOR: possible evolution into the LHC era (Barring)
    - 16:00–16:30 POOL: LHC data Persistency (Duellmann)
    - 16:30–17:00 Coffee break
    - 17:00– Discussions and conclusion of day 1 (All)
- Day 2 (IT Amphitheatre)
  - Vendor interventions; one-on-one discussions with CERN

THANK YOU
