- Number of slides: 28

The Evolution of ESnet: Joint Techs Summary

William E. Johnston, ESnet Manager and Senior Scientist, Lawrence Berkeley National Laboratory (wej@es.net, www.es.net)

What Does ESnet Provide?

- The purpose of ESnet is to support the science missions of the Department of Energy's Office of Science, as well as other parts of DOE (mostly NNSA). To this end, ESnet provides:
  - Comprehensive physical and logical connectivity
    - High-bandwidth access to DOE sites and DOE's primary science collaborators: the Research and Education institutions in the US, Europe, Asia Pacific, and elsewhere
    - Full access to the global Internet for DOE Labs (160,000 routes from 180 peers at 40 peering points)
  - An architecture designed to move huge amounts of data between a small number of sites that are scattered all over the world
  - Full ISP services

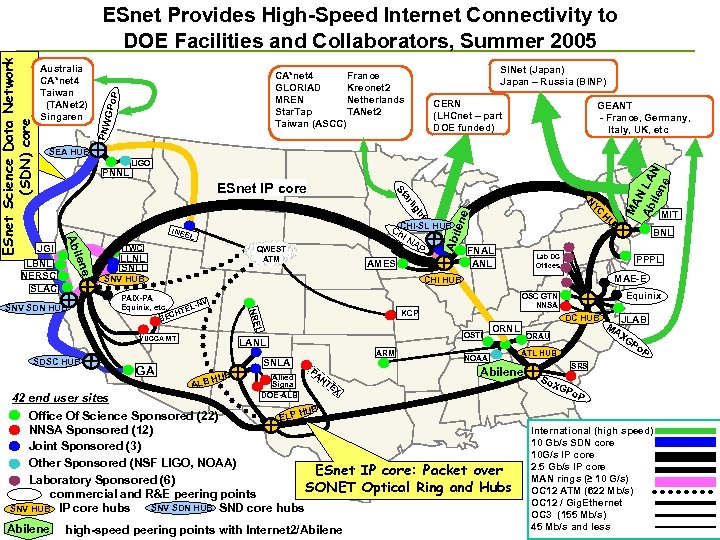

[Map: ESnet Provides High-Speed Internet Connectivity to DOE Facilities and Collaborators, Summer 2005. 42 end-user sites: Office of Science sponsored (22), NNSA sponsored (12), Joint sponsored (3), Laboratory sponsored (6), Other sponsored (NSF LIGO, NOAA). ESnet IP core: Packet over SONET optical ring and hubs, with commercial and R&E peering points, including high-speed peering points with Internet2/Abilene. International peers include SINet (Japan), Japan-Russia (BINP), CA*net4, GLORIAD, Kreonet2, MREN, Netherlands, StarTap, TANet2 (Taiwan), Taiwan (ASCC), Australia, Singaren, CERN (LHCnet, part DOE funded), and GEANT (France, Germany, Italy, UK, etc.). Link legend: International (high speed); 10 Gb/s SDN core; 10 Gb/s IP core; 2.5 Gb/s IP core; MAN rings (≥ 10 Gb/s); OC12 ATM (622 Mb/s); OC12 / Gigabit Ethernet; OC3 (155 Mb/s); 45 Mb/s and less.]

DOE Office of Science Drivers for Networking

- The large-scale science that is the mission of the Office of Science is dependent on networks for:
  - Sharing of massive amounts of data
  - Supporting thousands of collaborators world-wide
  - Distributed data processing
  - Distributed simulation, visualization, and computational steering
  - Distributed data management
- These issues were explored in two Office of Science workshops that formulated networking requirements to meet the needs of the science programs (see refs.)

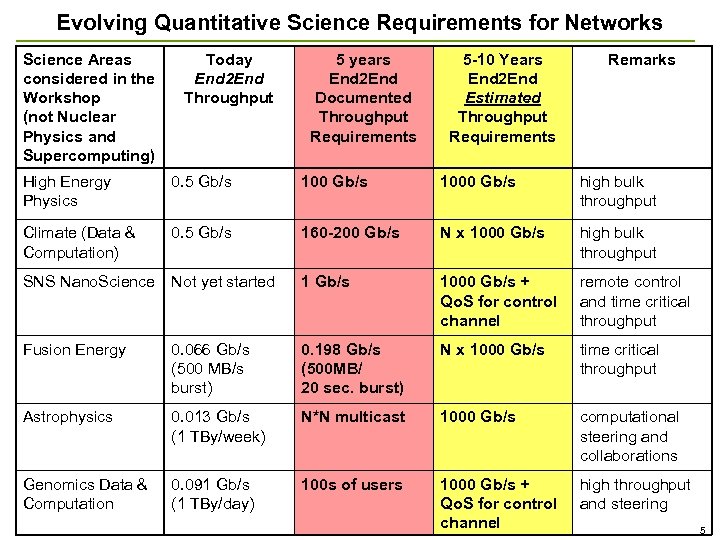

Evolving Quantitative Science Requirements for Networks

Science areas considered in the workshop (not Nuclear Physics and Supercomputing):

| Science Area | Today: End-to-End Throughput | 5 Years: End-to-End Documented Throughput Requirements | 5-10 Years: End-to-End Estimated Throughput Requirements | Remarks |
|---|---|---|---|---|
| High Energy Physics | 0.5 Gb/s | | 1000 Gb/s | high bulk throughput |
| Climate (Data & Computation) | 0.5 Gb/s | 160-200 Gb/s | N × 1000 Gb/s | high bulk throughput |
| SNS NanoScience | Not yet started | 1 Gb/s | 1000 Gb/s + QoS for control channel | remote control and time critical throughput |
| Fusion Energy | 0.066 Gb/s (500 MB/s burst) | 0.198 Gb/s (500 MB/20 sec. burst) | N × 1000 Gb/s | time critical throughput |
| Astrophysics | 0.013 Gb/s (1 TBy/week) | N*N multicast | 1000 Gb/s | computational steering and collaborations |
| Genomics Data & Computation | 0.091 Gb/s (1 TBy/day) | 100s of users | 1000 Gb/s + QoS for control channel | high throughput and steering |
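
The "Today" column pairs average rates with data volumes per period. A small conversion sketch, assuming decimal units (1 TBy = 10^12 bytes, 1 Gb/s = 10^9 bit/s) and a 30-day month, shows how such rates can be derived:

```python
# Convert a data volume moved once per period into an average line rate.
# Assumptions: decimal SI units and a 30-day month; binary units
# (1 TBy = 2**40 bytes) would give figures about 10% higher.
SECONDS = {
    "day": 86_400,
    "week": 7 * 86_400,
    "month": 30 * 86_400,
}


def average_gbps(terabytes: float, period: str) -> float:
    """Average rate in Gb/s needed to move `terabytes` TBy every `period`."""
    bits = terabytes * 10**12 * 8
    return bits / SECONDS[period] / 10**9


examples = [
    ("Astrophysics, 1 TBy/week", 1, "week"),
    ("Genomics, 1 TBy/day", 1, "day"),
    ("ESnet total, ~530 TBy/month", 530, "month"),
]
for label, tb, period in examples:
    print(f"{label}: {average_gbps(tb, period):.3f} Gb/s")
```

Under these assumptions 1 TBy/week comes out at about 0.013 Gb/s, matching the Astrophysics entry, and 1 TBy/day at about 0.093 Gb/s, close to the 0.091 Gb/s listed for Genomics; the table does not state which unit convention it used. The roughly 530 TBy/month ESnet was carrying in 2005 averages about 1.6 Gb/s.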

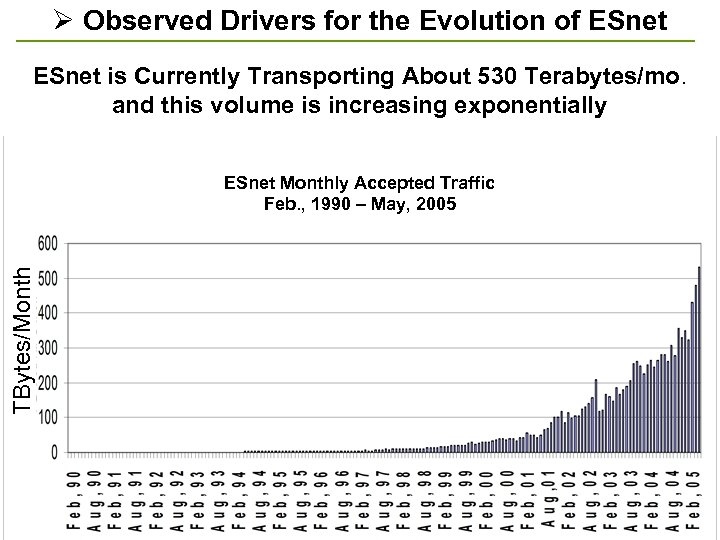

Observed Drivers for the Evolution of ESnet

ESnet is currently transporting about 530 terabytes/month, and this volume is increasing exponentially.

[Chart: ESnet Monthly Accepted Traffic, Feb. 1990 to May 2005 (TBytes/Month)]

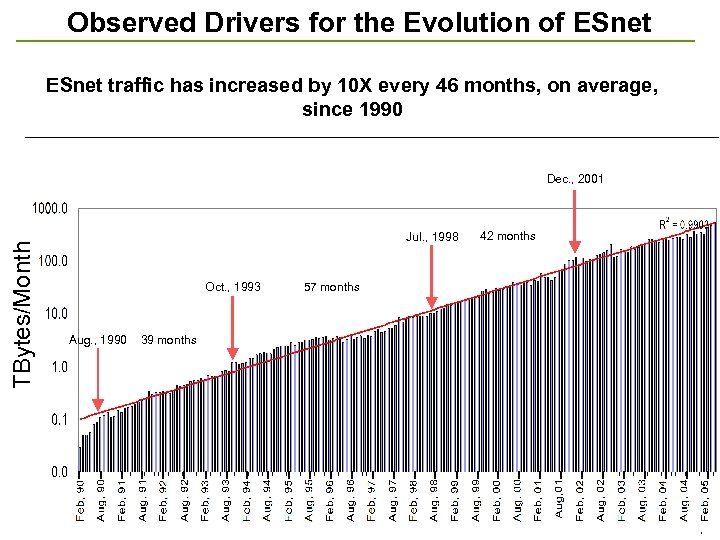

Observed Drivers for the Evolution of ESnet

ESnet traffic has increased by 10X every 46 months, on average, since 1990.

[Chart: ESnet monthly traffic (TBytes/Month), with successive 10X milestones at Aug. 1990, Oct. 1993, Jul. 1998, and Dec. 2001, separated by 39, 57, and 42 months respectively]
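
The 46-month figure is the mean of the three intervals on the chart. A sketch of that arithmetic, plus the naive extrapolation it implies if the exponential trend simply continues (an assumption, not a forecast made in the slides):

```python
# Months between successive 10x traffic milestones, read from the chart:
# Aug. 1990 -> Oct. 1993 -> Jul. 1998 -> Dec. 2001.
intervals_months = [39, 57, 42]

mean_interval = sum(intervals_months) / len(intervals_months)  # 46.0

# Spreading a 10x increase evenly over `mean_interval` months gives the
# equivalent monthly and annual growth factors.
monthly_factor = 10 ** (1 / mean_interval)
annual_factor = monthly_factor ** 12


def project(tb_per_month: float, months_ahead: float) -> float:
    """Extrapolate monthly traffic, assuming the same exponential trend."""
    return tb_per_month * 10 ** (months_ahead / mean_interval)


print(f"mean 10x interval: {mean_interval:.0f} months")
print(f"equivalent annual growth: x{annual_factor:.2f}")
print(f"530 TBy/mo, 46 months later: {project(530, 46):.0f} TBy/mo")
```

A 10X step every 46 months is equivalent to traffic growing by roughly 1.8X per year. The slides expect LHC data to add large science flows on top of this trend, so a plain extrapolation is best read as a floor.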

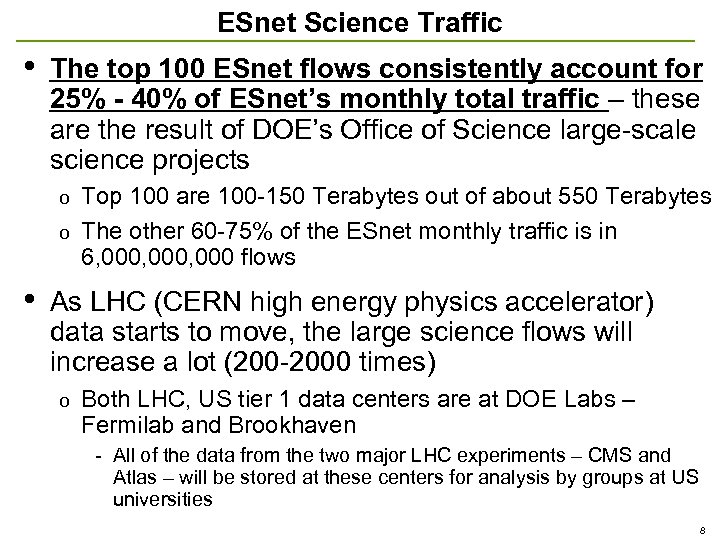

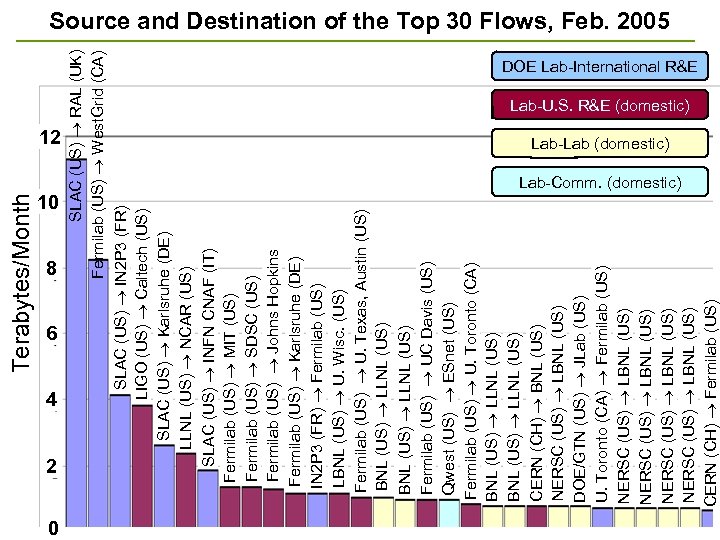

ESnet Science Traffic

- The top 100 ESnet flows consistently account for 25%-40% of ESnet's monthly total traffic; these are the result of DOE Office of Science large-scale science projects
  - The top 100 flows carry 100-150 Terabytes out of about 550 Terabytes
  - The other 60-75% of the ESnet monthly traffic is in 6,000,000 flows
- As LHC (CERN high energy physics accelerator) data starts to move, the large science flows will increase substantially (200-2000 times)
  - Both LHC US Tier 1 data centers are at DOE Labs: Fermilab and Brookhaven
    - All of the data from the two major LHC experiments, CMS and ATLAS, will be stored at these centers for analysis by groups at US universities
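
Measuring a "top 100 flows" share reduces to a top-N sum over per-flow byte counts. A minimal sketch, assuming per-flow totals have already been extracted from flow records (how ESnet collects them is not described here) and using made-up numbers:

```python
import heapq


def top_n_share(flow_bytes: list[float], n: int = 100) -> float:
    """Fraction of all traffic carried by the n largest flows."""
    total = sum(flow_bytes)
    if total == 0:
        return 0.0
    # nlargest avoids fully sorting millions of small flows.
    return sum(heapq.nlargest(n, flow_bytes)) / total


# Hypothetical month (TBy per flow): three large science flows
# plus ten thousand small ones.
flows = [40.0, 30.0, 20.0] + [0.001] * 10_000
print(f"top 3 share: {top_n_share(flows, n=3):.0%}")
```

With real data the list would hold millions of entries, which is why a bounded heap (`heapq.nlargest`) is preferable to sorting the whole list.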

[Chart: Source and Destination of the Top 30 Flows, Feb. 2005 (Terabytes/Month, 0 to 12). Flow categories: DOE Lab-International R&E; Lab-U.S. R&E (domestic); Lab-Lab (domestic); Lab-Comm. (domestic).]

DOE Science Requirements for Networking

1) Network bandwidth must increase substantially, not just in the backbone but all the way to the sites and the attached computing and storage systems.
2) A highly reliable network is critical for science: when large-scale experiments depend on the network for success, the network must not fail.
3) There must be network services that can guarantee various forms of quality of service (e.g., bandwidth guarantees) and provide traffic isolation.
4) A production, extremely reliable IP network with Internet services must support the process of science.

Strategy for the Evolution of ESnet

A three-part strategy for the evolution of ESnet:

1) Metropolitan Area Network (MAN) rings to provide
   - dual site connectivity for reliability
   - much higher site-to-core bandwidth
   - support for both production IP and circuit-based traffic
2) A Science Data Network (SDN) core for
   - provisioned, guaranteed-bandwidth circuits to support large, high-speed science data flows
   - very high total bandwidth
   - multiply connecting MAN rings for protection against hub failure
   - an alternate path for production IP traffic
3) A high-reliability IP core (e.g., the current ESnet core) to address
   - general science requirements
   - Lab operational requirements
   - backup for the SDN core
   - a vehicle for science services

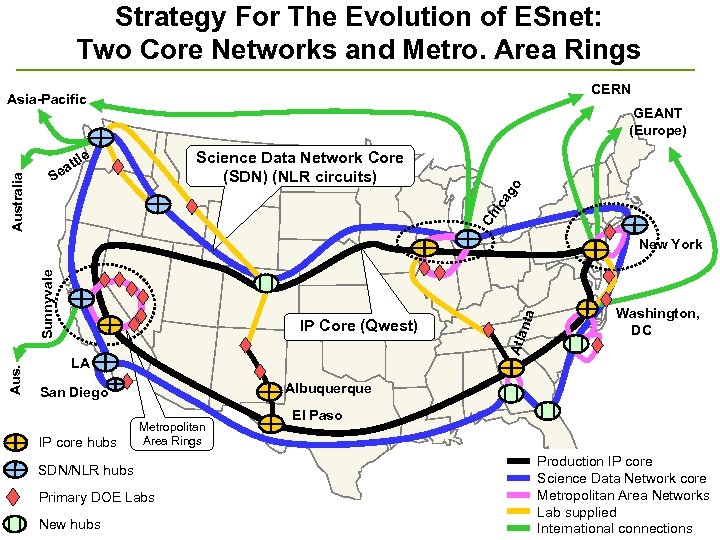

Strategy for the Evolution of ESnet: Two Core Networks and Metro Area Rings

[Map: Production IP core (Qwest) and Science Data Network core (SDN, NLR circuits) spanning Seattle, Sunnyvale, LA, San Diego, El Paso, Albuquerque, Chicago, Atlanta, New York, and Washington, DC, with Metropolitan Area Rings around primary DOE Labs and international connections to CERN, GEANT (Europe), Asia-Pacific, and Australia. Legend: SDN/NLR hubs, IP core hubs, primary DOE Labs, new hubs; production IP core, Science Data Network core, Metropolitan Area Networks, Lab-supplied and international connections.]

First Two Steps in the Evolution of ESnet

1) The SF Bay Area MAN will provide to the five OSC Bay Area sites
   - Very high-speed site access: 20 Gb/s
   - Fully redundant site access
2) The first two segments of the second national 10 Gb/s core, the Science Data Network, will be San Diego to Sunnyvale and Sunnyvale to Seattle

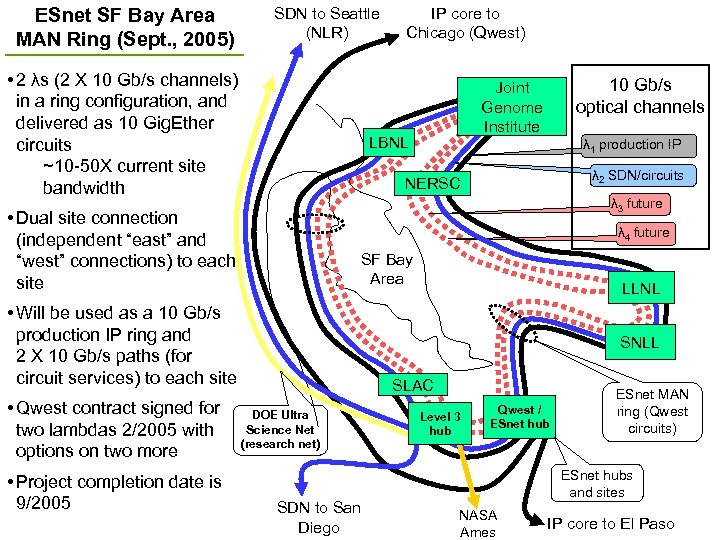

ESnet SF Bay Area MAN Ring (Sept. 2005)

- 2 λs (2 × 10 Gb/s channels) in a ring configuration, delivered as 10 Gigabit Ethernet circuits: ~10-50× current site bandwidth
- Will be used as a 10 Gb/s production IP ring and 2 × 10 Gb/s paths (for circuit services) to each site
- Dual site connection (independent "east" and "west" connections) to each site
- Qwest contract signed for two lambdas 2/2005, with options on two more
- Project completion date is 9/2005

[Diagram: ESnet MAN ring (Qwest circuits) of 10 Gb/s optical channels: λ1 production IP; λ2 SDN/circuits; λ3 and λ4 future. Nodes: NERSC, LBNL, Joint Genome Institute, SLAC, LLNL, SNLL, NASA Ames, the Qwest/ESnet hub, and the Level 3 hub. External links: SDN to Seattle (NLR), SDN to San Diego, IP core to Chicago (Qwest), IP core to El Paso, and DOE Ultra Science Net (research net).]
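
Dual "east" and "west" connections mean that no single link cut should isolate a site. A small sketch that checks this property for a ring; the node names come from the diagram, but the ring order is a hypothetical choice:

```python
from collections import deque

# Node names are from the slide; the ORDER around the ring is hypothetical.
RING = ["Qwest/ESnet hub", "NERSC", "LBNL", "JGI", "SLAC",
        "NASA Ames", "SNLL", "LLNL", "Level 3 hub"]


def ring_links(nodes: list[str]) -> set[frozenset]:
    """Link each node to the next one, closing the ring at the end."""
    return {frozenset((nodes[i], nodes[(i + 1) % len(nodes)]))
            for i in range(len(nodes))}


def is_connected(nodes: list[str], links: set[frozenset]) -> bool:
    """Breadth-first search from the first node over the given links."""
    adjacency = {node: set() for node in nodes}
    for link in links:
        a, b = tuple(link)
        adjacency[a].add(b)
        adjacency[b].add(a)
    seen = {nodes[0]}
    queue = deque([nodes[0]])
    while queue:
        for neighbour in adjacency[queue.popleft()]:
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    return len(seen) == len(nodes)


def survives_single_link_cut(nodes: list[str], links: set[frozenset]) -> bool:
    """True if removing any one link still leaves every node reachable."""
    return all(is_connected(nodes, links - {cut}) for cut in links)


ring = ring_links(RING)
chain = ring - {frozenset((RING[-1], RING[0]))}  # opened ring: a linear chain
print(survives_single_link_cut(RING, ring))   # True
print(survives_single_link_cut(RING, chain))  # False
```

The opened ring fails the check, which is the single point of failure that dual site connections remove. Protection against a hub failure, which the strategy assigns to the SDN core, would need a node-removal variant of the same test.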

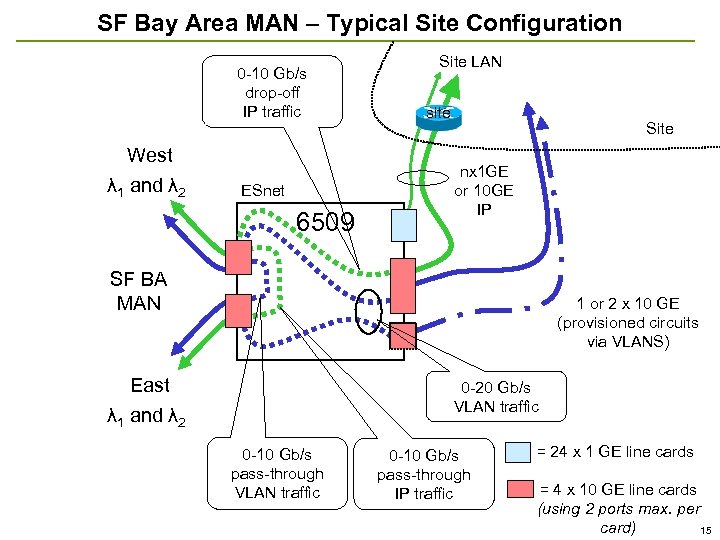

SF Bay Area MAN: Typical Site Configuration

[Diagram: an ESnet 6509 at each site connects to the SF Bay Area MAN via West λ1 and λ2 and East λ1 and λ2, and to the site LAN via n × 1 GE or 10 GE (IP) and 1 or 2 × 10 GE (provisioned circuits via VLANs). Traffic per site: 0-10 Gb/s drop-off IP traffic, 0-10 Gb/s pass-through IP traffic, 0-20 Gb/s VLAN traffic. Line cards: 24 × 1 GE line cards; 4 × 10 GE line cards (using 2 ports max. per card).]

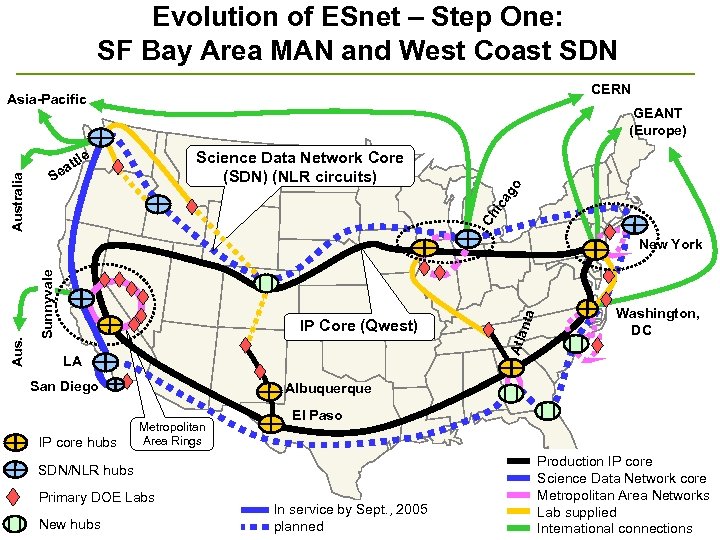

Evolution of ESnet, Step One: SF Bay Area MAN and West Coast SDN

[Map: production IP core (Qwest), Science Data Network core (SDN, NLR circuits), Metropolitan Area Rings, SDN/NLR hubs, IP core hubs, primary DOE Labs, new hubs, and international connections to CERN, GEANT (Europe), Asia-Pacific, and Australia, distinguishing segments in service by Sept. 2005 from those planned.]

Evolution: Next Steps

- ORNL 10G circuit to Chicago
- Chicago MAN
  - IWire partnership
- Long Island MAN
  - Try to achieve some diversity in NYC by including a hub at 60 Hudson as well as 32 AoA
- More SDN segments
- Jefferson Lab via MATP and VORTEX

New Network Services

- New network services are also critical for ESnet to meet the needs of large-scale science
- The most important new network service is dynamically provisioned virtual circuits that provide
  - Traffic isolation: this will enable the use of high-performance, non-standard transport mechanisms that cannot co-exist with commodity TCP-based transport (see, e.g., Tom Dunigan's compendium http://www.csm.ornl.gov/~dunigan/netperf/netlinks.html)
  - Guaranteed bandwidth: currently the only way we have to address deadline scheduling, e.g., where fixed amounts of data have to reach sites on a fixed schedule so that processing does not fall so far behind that it can never catch up; this is very important for experiment data analysis
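
Deadline scheduling becomes simple arithmetic once the data volume and time window are known. A sketch, assuming decimal units and a hypothetical `efficiency` factor for protocol overhead, of the minimum rate a guaranteed-bandwidth circuit would need, and of how a backlog grows when the available rate is too low:

```python
SECONDS_PER_DAY = 86_400


def required_gbps(data_bytes: float, window_s: float,
                  efficiency: float = 1.0) -> float:
    """Smallest guaranteed rate (Gb/s) that moves data_bytes within window_s.

    `efficiency` is a hypothetical allowance for overhead: 0.8 means only
    80% of the reserved rate is assumed to carry payload.
    """
    if window_s <= 0 or not 0 < efficiency <= 1:
        raise ValueError("window_s must be > 0 and efficiency in (0, 1]")
    return data_bytes * 8 / window_s / efficiency / 1e9


def backlog_tb(days: int, produced_tb_per_day: float, link_gbps: float) -> float:
    """Undelivered data (TBy) after `days` if production outpaces the link."""
    delivered_tb_per_day = link_gbps * 1e9 * SECONDS_PER_DAY / 8 / 1e12
    return max(0.0, days * (produced_tb_per_day - delivered_tb_per_day))


# Hypothetical experiment producing 10 TBy per day.
print(f"{required_gbps(10e12, SECONDS_PER_DAY):.3f} Gb/s needed")
print(f"{backlog_tb(30, 10, 0.5):.0f} TBy behind after 30 days at 0.5 Gb/s")
```

With these hypothetical numbers, 10 TBy per day needs a little under 1 Gb/s sustained; at 0.5 Gb/s the shortfall grows by about 4.6 TBy every day and is never recovered, which is the "can never catch up" case.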

OSCARS: Guaranteed Bandwidth Service

- Testing OSCARS Label Switched Paths (MPLS-based virtual circuits)
  - (update in the panel discussion)
- A collaboration with the other major science R&E networks to ensure compatible services (so that virtual services can be set up end-to-end across ESnet, Abilene, and GEANT)
  - Code is being jointly developed with Internet2's Bandwidth Reservation for User Work (BRUW) project, part of the Abilene HOPI (Hybrid Optical-Packet Infrastructure) project
  - Close cooperation with the GEANT virtual circuit project ("lightpaths", Joint Research Activity 3 project)
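
A guaranteed-bandwidth service needs some form of admission control so that reservations never oversubscribe a link. The sketch below is a hypothetical single-link calendar, not OSCARS or BRUW code, and it ignores path computation and MPLS signalling entirely:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reservation:
    start: int    # seconds from an arbitrary epoch
    end: int      # exclusive
    gbps: float


class LinkCalendar:
    """Hypothetical bandwidth book-keeping for one link (not OSCARS code)."""

    def __init__(self, capacity_gbps: float) -> None:
        self.capacity_gbps = capacity_gbps
        self.reservations: list[Reservation] = []

    def _load_at(self, t: int) -> float:
        return sum(r.gbps for r in self.reservations if r.start <= t < r.end)

    def peak_load(self, start: int, end: int) -> float:
        # Booked load is piecewise constant and only rises where a
        # reservation begins, so the window start plus every reservation
        # start inside the window covers all candidate peaks.
        points = {start}
        points.update(r.start for r in self.reservations if start < r.start < end)
        return max(self._load_at(t) for t in points)

    def reserve(self, start: int, end: int, gbps: float) -> bool:
        """Admit the request only if the link never exceeds capacity."""
        if end <= start or gbps <= 0:
            raise ValueError("need end > start and gbps > 0")
        if self.peak_load(start, end) + gbps > self.capacity_gbps:
            return False
        self.reservations.append(Reservation(start, end, gbps))
        return True


link = LinkCalendar(capacity_gbps=10)
print(link.reserve(0, 3600, 6))     # True
print(link.reserve(1800, 5400, 5))  # False: 6 + 5 > 10 during the overlap
print(link.reserve(3600, 7200, 5))  # True: the first reservation ends at 3600
```

An end-to-end service would run a check like this on every link of the chosen path, and across ESnet, Abilene, and GEANT that requires the compatible reservation interfaces the collaboration above is working toward.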

Federated Trust Services

- Remote, multi-institutional identity authentication is critical for distributed, collaborative science in order to permit sharing of computing and data resources and other Grid services
- Managing cross-site trust agreements among many organizations is crucial for authentication in collaborative environments
  - ESnet assists in negotiating and managing the cross-site, cross-organization, and international trust relationships to provide policies that are tailored to collaborative science
- The form of the ESnet trust services is driven entirely by the requirements of, and direct input from, the science community

ESnet Public Key Infrastructure

- ESnet provides Public Key Infrastructure and X.509 identity certificates that are the basis of secure, cross-site authentication of people and Grid systems
- These services (www.doegrids.org) provide
  - Several Certification Authorities (CAs) with different uses and policies that issue certificates after validating requests against policy
- This service was the basis of the first routine sharing of HEP computing resources between the US and Europe

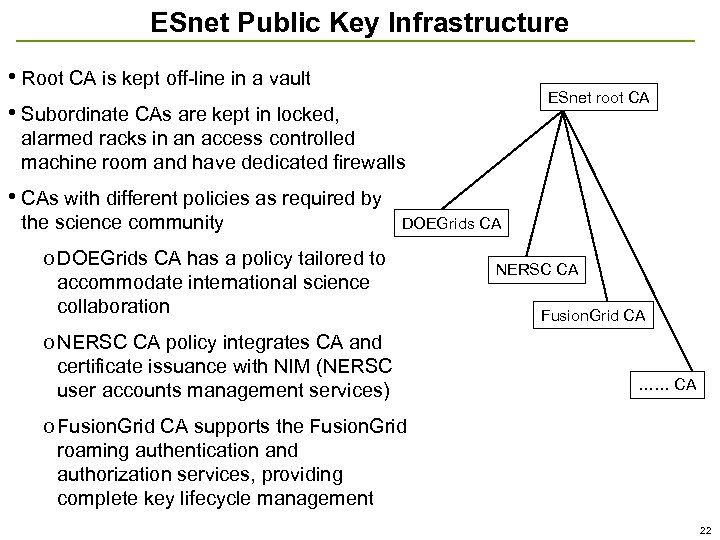

ESnet Public Key Infrastructure

- Root CA is kept off-line in a vault
- Subordinate CAs are kept in locked, alarmed racks in an access-controlled machine room and have dedicated firewalls
- CAs with different policies as required by the science community
  - DOEGrids CA has a policy tailored to accommodate international science collaboration
  - NERSC CA policy integrates CA and certificate issuance with NIM (NERSC user accounts management services)
  - FusionGrid CA supports the FusionGrid roaming authentication and authorization services, providing complete key lifecycle management

[Diagram: ESnet root CA above the subordinate CAs: DOEGrids CA, NERSC CA, FusionGrid CA, …… CA]
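
Keeping the root off-line is workable because relying parties only need the root's certificate as a trust anchor; day-to-day issuance happens at the subordinate CAs. A simplified sketch of the issuer-chain walk a relying party performs, with hypothetical names. Real X.509 path validation (RFC 5280) also verifies signatures, validity periods, key usage, and revocation, none of which is modelled here:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Cert:
    subject: str
    issuer: str
    is_ca: bool  # stands in for the X.509 basicConstraints CA flag


def build_chain(leaf: Cert, intermediates: dict[str, Cert],
                trust_anchors: set[str], max_depth: int = 4) -> Optional[list[Cert]]:
    """Follow issuer names from `leaf` up to a trusted root name.

    Returns the chain (leaf first) or None if it cannot be completed.
    Signatures, validity dates, and revocation are NOT checked here.
    """
    chain = [leaf]
    current = leaf
    for _ in range(max_depth):
        if current.issuer in trust_anchors:
            return chain
        parent = intermediates.get(current.issuer)
        if parent is None or not parent.is_ca:
            return None
        chain.append(parent)
        current = parent
    return None


# Hypothetical hierarchy mirroring the diagram: root -> subordinate CAs.
ROOT = "ESnet Root CA"
doegrids = Cert("DOEGrids CA", ROOT, is_ca=True)
nersc = Cert("NERSC CA", ROOT, is_ca=True)
pool = {c.subject: c for c in (doegrids, nersc)}
user = Cert("CN=Example User", "DOEGrids CA", is_ca=False)

chain = build_chain(user, pool, {ROOT})
print([c.subject for c in chain])  # ['CN=Example User', 'DOEGrids CA']
```

Because each subordinate CA can carry its own policy while chaining to the same root, a relying party that trusts one root can accept certificates issued under several different policies.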

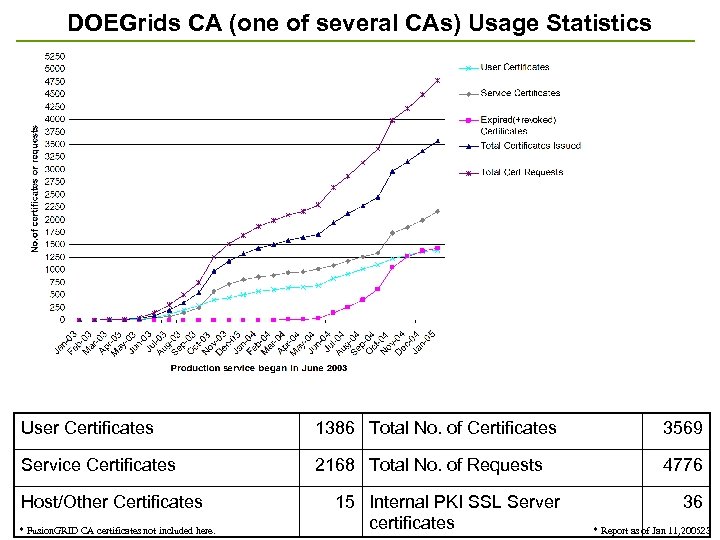

DOEGrids CA (one of several CAs) Usage Statistics

| Item | Count |
|---|---|
| User Certificates | 1386 |
| Service Certificates | 2168 |
| Host/Other Certificates | 15 |
| Total No. of Certificates | 3569 |
| Total No. of Requests | 4776 |
| Internal PKI SSL Server certificates | 36 |

Notes: FusionGRID CA certificates are not included here. Report as of Jan 11, 2005.

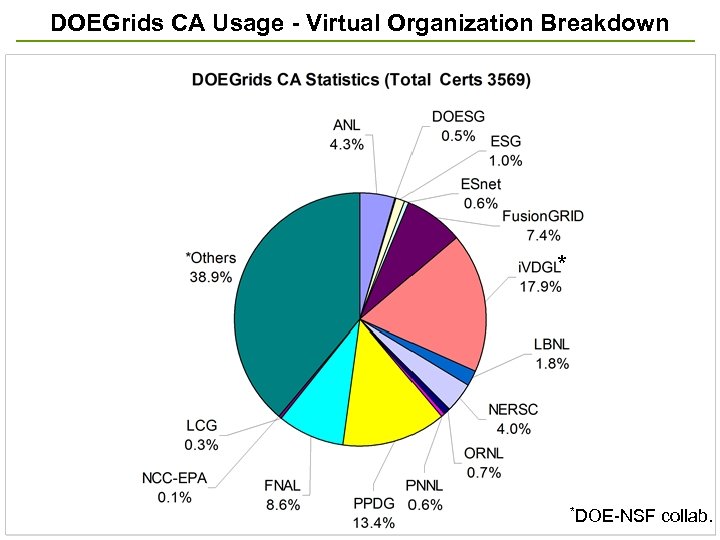

DOEGrids CA Usage: Virtual Organization Breakdown

[Chart: DOEGrids CA certificates by virtual organization; * marks a DOE-NSF collaboration.]

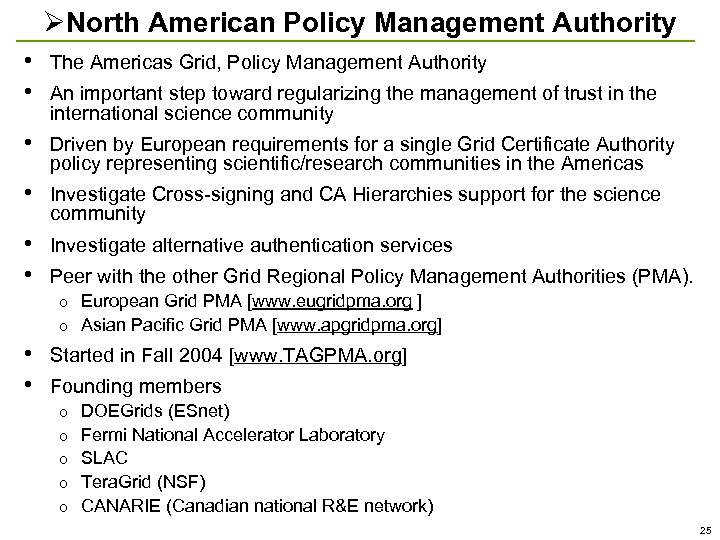

North American Policy Management Authority

- The Americas Grid Policy Management Authority
- Driven by European requirements for a single Grid Certificate Authority policy representing scientific/research communities in the Americas
- Investigate cross-signing and CA hierarchies support for the science community
- Investigate alternative authentication services
- Peer with the other Grid Regional Policy Management Authorities (PMAs):
  - European Grid PMA [www.eugridpma.org]
  - Asian Pacific Grid PMA [www.apgridpma.org]
- An important step toward regularizing the management of trust in the international science community
- Started in Fall 2004 [www.TAGPMA.org]
- Founding members:
  - DOEGrids (ESnet)
  - Fermi National Accelerator Laboratory
  - SLAC
  - TeraGrid (NSF)
  - CANARIE (Canadian national R&E network)

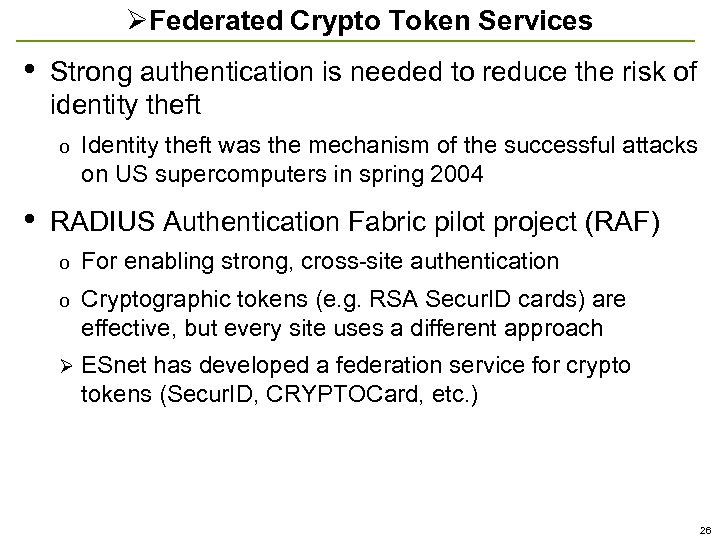

Federated Crypto Token Services

- Strong authentication is needed to reduce the risk of identity theft
  - Identity theft was the mechanism of the successful attacks on US supercomputers in spring 2004
- RADIUS Authentication Fabric pilot project (RAF)
  - For enabling strong, cross-site authentication
  - Cryptographic tokens (e.g., RSA SecurID cards) are effective, but every site uses a different approach
- ESnet has developed a federation service for crypto tokens (SecurID, CRYPTOCard, etc.)
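
The tokens named above use vendor-specific algorithms. As a stand-in, this sketch uses the open HOTP algorithm from RFC 4226 to show what a home-site one-time-password check involves; the `HomeSiteOTPService` class and its look-ahead window are hypothetical:

```python
import hashlib
import hmac
import struct


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """RFC 4226 HOTP: HMAC-SHA1 over an 8-byte big-endian counter."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


class HomeSiteOTPService:
    """Hypothetical home-site verifier holding each user's secret and counter."""

    def __init__(self, look_ahead: int = 10) -> None:
        self.look_ahead = look_ahead  # tolerate unused button presses
        self.users: dict[str, tuple[bytes, int]] = {}

    def enroll(self, user: str, secret: bytes, counter: int = 0) -> None:
        self.users[user] = (secret, counter)

    def verify(self, user: str, otp: str) -> bool:
        if user not in self.users:
            return False
        secret, counter = self.users[user]
        for c in range(counter, counter + self.look_ahead):
            if hmac.compare_digest(hotp(secret, c), otp):
                self.users[user] = (secret, c + 1)  # never accept c again
                return True
        return False


service = HomeSiteOTPService()
service.enroll("alice", b"12345678901234567890")  # RFC 4226 test secret
print(service.verify("alice", hotp(b"12345678901234567890", 0)))  # True
```

Only the home site holds the secret and counter; in a federated setup, a remote site never sees either and only learns whether the home site accepted the code.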

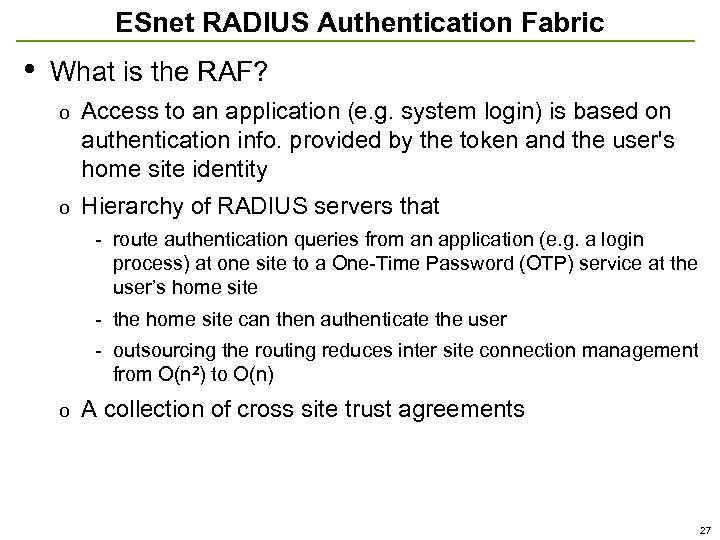

ESnet RADIUS Authentication Fabric

- What is the RAF?
  - Access to an application (e.g., system login) is based on authentication information provided by the token and the user's home site identity
  - A hierarchy of RADIUS servers that
    - route authentication queries from an application (e.g., a login process) at one site to a One-Time Password (OTP) service at the user's home site
    - let the home site then authenticate the user
    - reduce inter-site connection management from O(n²) to O(n) by outsourcing the routing
  - A collection of cross-site trust agreements
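
The routing half of the RAF can be sketched as realm-based forwarding: the identity names a home-site realm, and a central hub forwards the check to that site's verifier. This sketch flattens the RADIUS hierarchy into a single in-process hub, assumes a hypothetical "user@realm" identity format, and omits the RADIUS protocol itself; it also counts the agreements needed with and without a hub:

```python
from typing import Callable

# (username, one-time password) -> accepted?
Verifier = Callable[[str, str], bool]


class AuthFabricHub:
    """Hypothetical central router: forwards each request to the home site
    named by the realm in 'user@realm'. Only the routing is modelled."""

    def __init__(self) -> None:
        self._home_sites: dict[str, Verifier] = {}

    def register(self, realm: str, verifier: Verifier) -> None:
        self._home_sites[realm.lower()] = verifier

    def authenticate(self, identity: str, otp: str) -> bool:
        user, sep, realm = identity.rpartition("@")
        verifier = self._home_sites.get(realm.lower()) if sep else None
        return verifier(user, otp) if verifier else False


def pairwise_agreements(n: int) -> int:
    """Links needed if every site trusts every other site directly: O(n^2)."""
    return n * (n - 1) // 2


def hub_agreements(n: int) -> int:
    """Links needed if every site peers only with the fabric: O(n)."""
    return n


hub = AuthFabricHub()
hub.register("home-lab.example", lambda user, otp: otp == "123456")  # toy check
print(hub.authenticate("alice@home-lab.example", "123456"))  # True
print(pairwise_agreements(40), hub_agreements(40))           # 780 40
```

With 40 sites, a full mesh needs 780 pairwise agreements, while the hub model needs one per site.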

References: DOE Network-Related Planning Workshops

1) High Performance Network Planning Workshop, August 2002. http://www.doecollaboratory.org/meetings/hpnpw
2) DOE Science Networking Roadmap Meeting, June 2003. http://www.es.net/hypertext/welcome/pr/Roadmap/index.html
3) DOE Workshop on Ultra High-Speed Transport Protocols and Network Provisioning for Large-Scale Science Applications, April 2003. http://www.csm.ornl.gov/ghpn/wk2003
4) Science Case for Large Scale Simulation, June 2003. http://www.pnl.gov/scales/
5) Workshop on the Road Map for the Revitalization of High End Computing, June 2003. http://www.cra.org/Activities/workshops/nitrd; public report: http://www.sc.doe.gov/ascr/20040510_hecrtf.pdf
6) ASCR Strategic Planning Workshop, July 2003. http://www.fp-mcs.anl.gov/ascr-july03spw
7) Planning Workshops: Office of Science Data-Management Strategy, March & May 2004. http://www-conf.slac.stanford.edu/dmw2004
