- Number of slides: 45

The European X-Ray Laser Project XFEL (X-Ray Free-Electron Laser)

Work Package 76: DAQ and control (PBS)

- Christopher Youngman, Work Package 76, DESY/XFEL, Hamburg
- christopher.youngman@desy.de
- XDAC, 17-18 April 2008

Outline

- Scope of WP 76
- DAQ and control workshop, 10-11 March
  - Review
  - Issues and decisions
- Workshop follow-up
  - Network
  - Infrastructure
  - Intermediate layer: possible 2D pixel readout architecture
  - Computing TDR
  - DOOCS
- Conclusions

WP 76 scope

- WP 76 = "DAQ and control"
- Provide data acquisition (DAQ) systems and control software for the experiment detectors:
  - (run) control, (slow) control (HV, LV, …), readout hardware, data storage and management, online data analysis (monitoring)
- Provide control of Photon Beamline Systems (PBS) instruments:
  - control, monitoring, readout hardware

DAQ and control workshop for photon beam systems

- Held at DESY on 10-11 March
- 47 registered participants: ALBA, DESY (20), Daresbury (4), ESRF (3), ITEP, JINR, NIKHEF (2), PSI (3), RAL (4), SLAC (2), SPring-8 (2), Bologna, Heidelberg, Konstanz, Slovakia, …
- Agenda and slides: https://indico.desy.de/conferenceDisplay.py?confId=762
- The workshop was a Pre-XFEL project partially funded by the European Commission under the 7th Framework Programme.

Workshop aims

- Review ALL areas of WP 76 = 8 sections, 20 talks and 529 slides:
  - Machine parameters and timing
  - Photon beam line instruments and detectors
  - Control systems
  - Archiving and data processing
  - DAQ and control at other labs
  - Infrastructure requirements
  - Perspectives for data rejection and size reductions
  - 2D pixel detectors
- Aims:
  - meet other groups, exchange ideas, etc.
  - produce a list of work and milestones required = any fires
  - clarify, if possible, work with other WPs
  - identify regions of in-kind contribution
  - are sufficient manpower and other resources available?
  - …

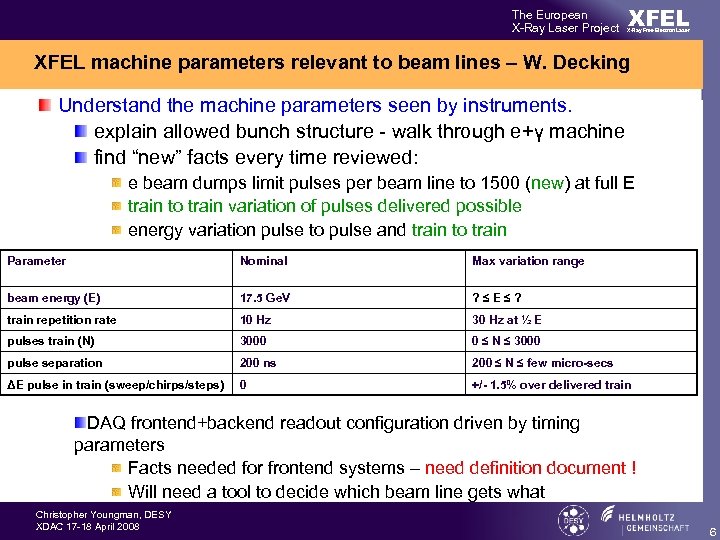

XFEL machine parameters relevant to beam lines (W. Decking)

- Understand the machine parameters seen by the instruments.
- Explain the allowed bunch structure: walk through the e+γ machine.
- "New" facts are found every time this is reviewed:
  - electron beam dumps limit pulses per beam line to 1500 at full E (new)
  - train-to-train variation of the pulses delivered
  - possible energy variation pulse to pulse and train to train

| Parameter | Nominal | Max variation range |
| --- | --- | --- |
| beam energy (E) | 17.5 GeV | ? ≤ E ≤ ? |
| train repetition rate | 10 Hz | 30 Hz at ½ E |
| pulses per train (N) | 3000 | 0 ≤ N ≤ 3000 |
| pulse separation | 200 ns | 200 ns ≤ separation ≤ few µs |
| ΔE of pulses in train (sweep/chirps/steps) | 0 | +/- 1.5% over delivered train |

- DAQ frontend + backend readout configuration is driven by the timing parameters.
- These facts are needed for the frontend systems: a definition document is needed!
- A tool will be needed to decide which beam line gets what.
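The nominal values on this slide fix how long a pulse train lasts and how much time is left between trains, which is the window a frontend has for readout. A minimal Python sketch of that arithmetic follows. It assumes the train occupies N pulse slots of one separation each and that the rest of the repetition period is gap; the class and property names are illustrative and do not come from the talk.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainTiming:
    """Nominal bunch-train parameters quoted on the slide."""
    repetition_rate_hz: float = 10.0    # trains per second
    pulses_per_train: int = 3000        # N
    pulse_separation_s: float = 200e-9  # 200 ns

    @property
    def train_length_s(self) -> float:
        # Assumption: the train spans N pulse slots of one separation each.
        return self.pulses_per_train * self.pulse_separation_s

    @property
    def train_gap_s(self) -> float:
        # Time left in each repetition period once the train has passed.
        return 1.0 / self.repetition_rate_hz - self.train_length_s

    @property
    def intra_train_rate_hz(self) -> float:
        # Pulse rate inside a train, i.e. what a pulse-resolving frontend sees.
        return 1.0 / self.pulse_separation_s


nominal = TrainTiming()
print(f"train length: {nominal.train_length_s * 1e6:.0f} us")
print(f"train gap:    {nominal.train_gap_s * 1e3:.1f} ms")
print(f"pulse rate:   {nominal.intra_train_rate_hz / 1e6:.0f} MHz")
```

Under these assumptions a nominal train lasts about 600 µs and leaves roughly 99.4 ms of gap, so the frontend must buffer at the 5 MHz pulse rate and ship data out during the gap.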

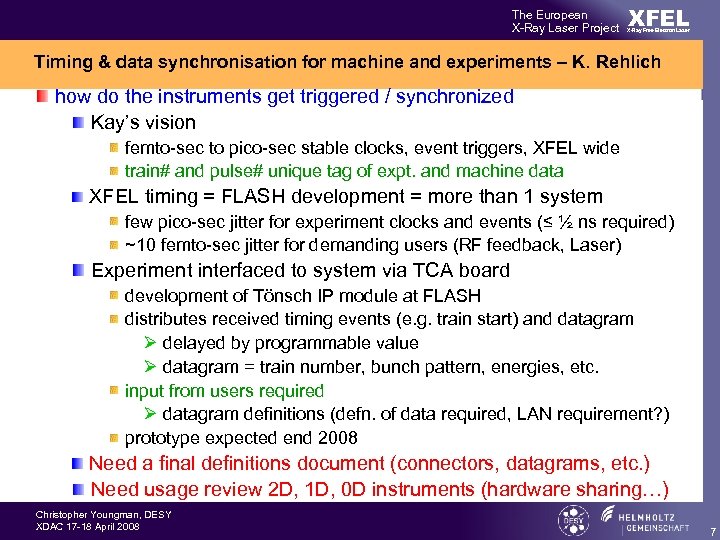

Timing & data synchronisation for machine and experiments (K. Rehlich)

- How do the instruments get triggered / synchronised?
- Kay's vision: femtosecond to picosecond stable clocks, event triggers, XFEL-wide train# and pulse# as unique tag of experiment and machine data.
- XFEL timing = FLASH development = more than 1 system:
  - few-picosecond jitter for experiment clocks and events (≤ ½ ns required)
  - ~10 femtosecond jitter for demanding users (RF feedback, laser)
- Experiment interfaced to the system via a TCA board:
  - development of the Tönsch IP module at FLASH
  - distributes received timing events (e.g. train start) and datagram
    - delayed by programmable value
    - datagram = train number, bunch pattern, energies, etc.
  - input from users required
    - datagram definitions (definition of data required, LAN requirement?)
  - prototype expected end 2008
- Need a final definitions document (connectors, datagrams, etc.)
- Need a usage review for 2D, 1D and 0D instruments (hardware sharing…)
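To make the datagram idea concrete, here is a small Python sketch of how a per-train datagram carrying a train number, an energy and a bunch pattern could be serialised. The wire layout, field widths and names are entirely hypothetical: the slide states that the real datagram definition still has to be written with input from users.

```python
import struct
from dataclasses import dataclass

# Hypothetical layout (NOT the FLASH/XFEL definition): big-endian
# train number (u64), beam energy in MeV (f32), slot count (u16),
# followed by a bunch-pattern bitmap with one bit per pulse slot.
_HEADER = struct.Struct(">QfH")


@dataclass(frozen=True)
class TimingDatagram:
    train_number: int
    beam_energy_mev: float
    bunch_pattern: tuple  # one bool per pulse slot, True = filled

    def pack(self) -> bytes:
        n = len(self.bunch_pattern)
        bitmap = bytearray((n + 7) // 8)
        for slot, filled in enumerate(self.bunch_pattern):
            if filled:
                bitmap[slot // 8] |= 1 << (slot % 8)
        return _HEADER.pack(self.train_number, self.beam_energy_mev, n) + bytes(bitmap)

    @classmethod
    def unpack(cls, payload: bytes) -> "TimingDatagram":
        train, energy, n = _HEADER.unpack_from(payload)
        bitmap = payload[_HEADER.size:]
        pattern = tuple(bool((bitmap[s // 8] >> (s % 8)) & 1) for s in range(n))
        return cls(train, energy, pattern)


# Every second slot filled in a 3000-slot train at 17.5 GeV.
datagram = TimingDatagram(42, 17500.0, tuple(i % 2 == 0 for i in range(3000)))
wire = datagram.pack()
print(len(wire), TimingDatagram.unpack(wire) == datagram)
```

Tagging every detector frame with the train number from such a datagram is what would allow experiment and machine data to be matched up later.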

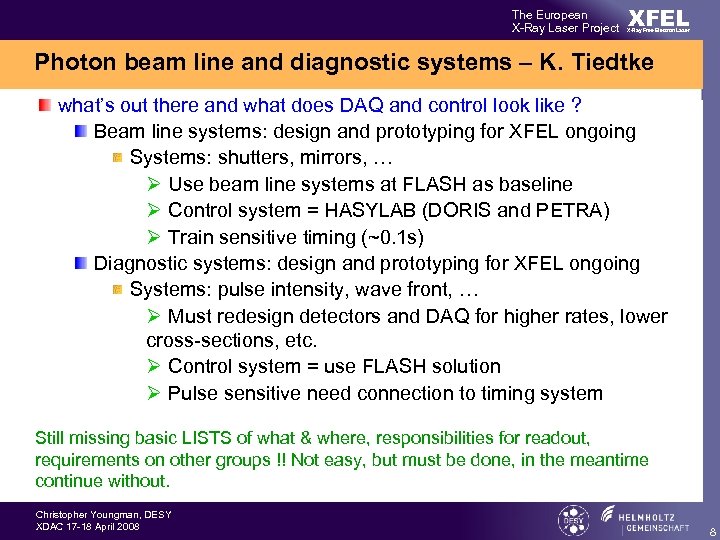

Photon beam line and diagnostic systems (K. Tiedtke)

- What's out there, and what do DAQ and control look like?
- Beam line systems: design and prototyping for XFEL ongoing
  - Systems: shutters, mirrors, …
  - Use beam line systems at FLASH as baseline
  - Control system = HASYLAB (DORIS and PETRA)
  - Train-sensitive timing (~0.1 s)
- Diagnostic systems: design and prototyping for XFEL ongoing
  - Systems: pulse intensity, wave front, …
  - Must redesign detectors and DAQ for higher rates, lower cross-sections, etc.
  - Control system = use FLASH solution
  - Pulse sensitive: need connection to timing system
- Still missing: basic LISTS of what & where, responsibilities for readout, requirements on other groups! Not easy, but must be done; in the meantime continue without.

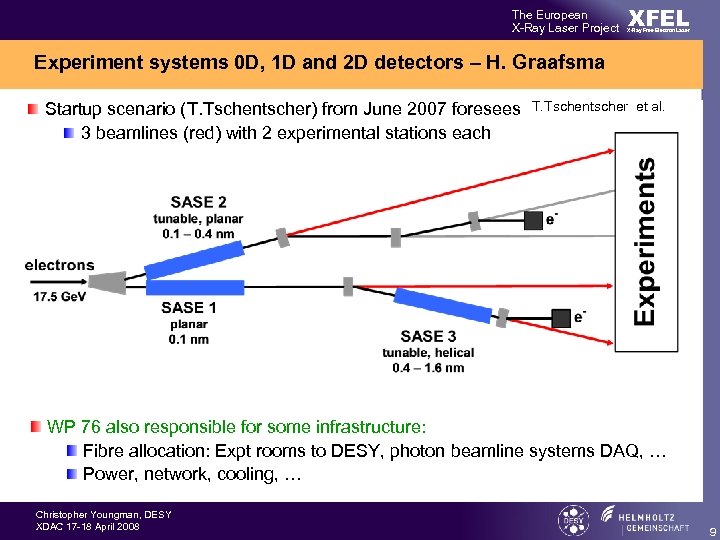

Experiment systems 0D, 1D and 2D detectors (H. Graafsma)

- Startup scenario (T. Tschentscher et al.) from June 2007 foresees 3 beamlines (red) with 2 experimental stations each.
- WP 76 is also responsible for some infrastructure:
  - Fibre allocation: experiment rooms to DESY, photon beamline systems DAQ, …
  - Power, network, cooling, …

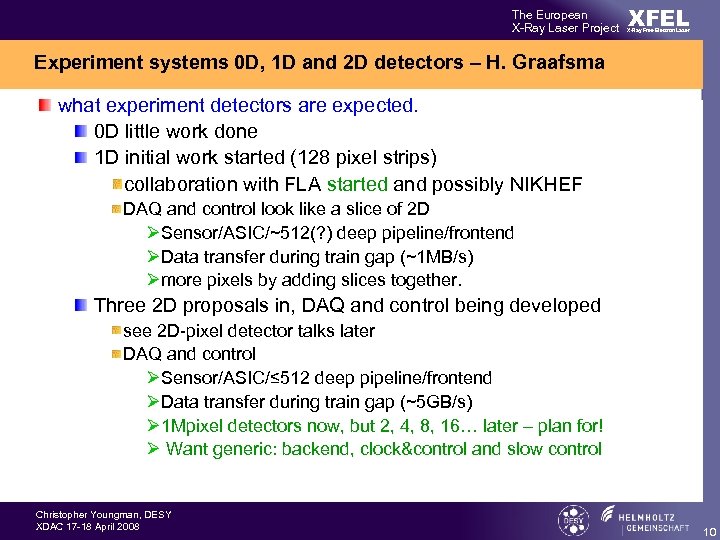

Experiment systems 0D, 1D and 2D detectors (H. Graafsma)

- What experiment detectors are expected?
- 0D: little work done
- 1D: initial work started (128 pixel strips)
  - collaboration with FLA started, and possibly NIKHEF
  - DAQ and control look like a slice of 2D:
    - Sensor / ASIC / ~512(?) deep pipeline / frontend
    - Data transfer during train gap (~1 MB/s)
    - more pixels by adding slices together
- 2D: three proposals in, DAQ and control being developed (see 2D pixel detector talks later)
  - DAQ and control:
    - Sensor / ASIC / ≤ 512 deep pipeline / frontend
    - Data transfer during train gap (~5 GB/s)
    - 1 Mpixel detectors now, but 2, 4, 8, 16… later: plan for it!
    - Want generic backend, clock & control and slow control
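The quoted ~1 MB/s and ~5 GB/s figures can be checked with a back-of-the-envelope calculation. The Python sketch below assumes 2 bytes per stored sample, 10 trains per second, a 1D pipeline filled to its ~512 depth, and 250 stored frames per train for 2D detectors (the frame count used in the storage scenario later in this deck). The function name and these choices are assumptions for illustration, not values fixed by the talk.

```python
def readout_rate_bytes_per_s(channels: int, frames_per_train: int,
                             bytes_per_sample: int = 2,
                             trains_per_s: float = 10.0) -> float:
    """Average rate needed to drain one train's worth of data per train period."""
    return channels * frames_per_train * bytes_per_sample * trains_per_s


# 1D strip detector: 128 channels, pipeline filled to its ~512 depth.
rate_1d = readout_rate_bytes_per_s(channels=128, frames_per_train=512)
print(f"1D, 128 channels: {rate_1d / 1e6:.1f} MB/s")

# 2D detectors: same formula, growing pixel counts.
for mpixel in (1, 2, 4, 8, 16):
    rate_2d = readout_rate_bytes_per_s(channels=mpixel * 1_000_000,
                                       frames_per_train=250)
    print(f"2D, {mpixel:2d} Mpixel: {rate_2d / 1e9:.0f} GB/s")
```

With these assumptions the 1D detector lands near 1 MB/s and a 1 Mpixel 2D detector at 5 GB/s; the rate scales linearly with pixel count, which is why the slide asks to plan for larger detectors from the start.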

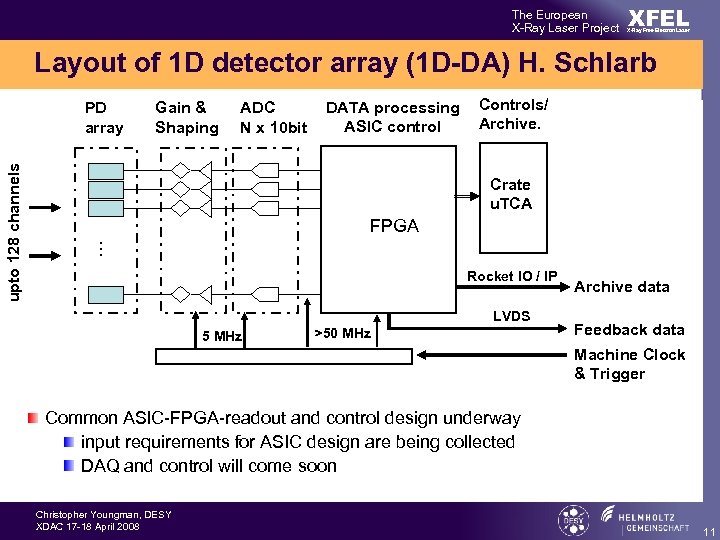

Layout of 1D detector array (1D-DA) (H. Schlarb)

[Block diagram: PD array (up to 128 channels); ASIC with gain & shaping and ADC (N x 10 bit); FPGA for data processing and ASIC control; µTCA crate; controls/archive. Links labelled LVDS and RocketIO / IP, rates 5 MHz and >50 MHz; outputs: archive data and feedback data; input: machine clock & trigger.]

- Common ASIC-FPGA readout and control design underway
- Input requirements for ASIC design are being collected
- DAQ and control will come soon

Other boundary conditions (H. Graafsma)

- We need to modify the experiment: add, remove (auxiliary) detectors within a day → flexibility
- We want to be able to use different (new) detectors → flexibility and standardization
- We want to be able to use larger detectors in the future (4, 9, 25, … Mpixels) → modular approach
- We need to CONTROL the experiment (see SR talks). This means "move and count": (part of) the data needs to be visible "immediately"
- We need to store other data (machine and experiment) with the images
- We want to store only "useful" images (fast veto)
- We will have single module prototypes by 2010 (LCLS; Petra, …)
- Heinz's wish list!

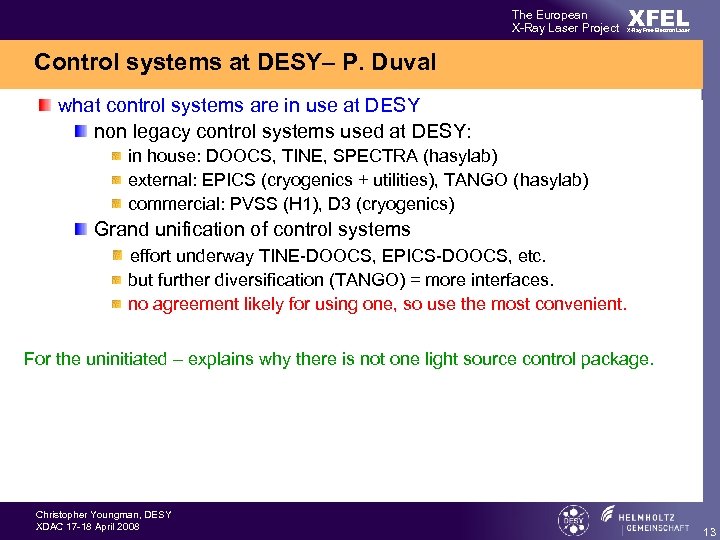

Control systems at DESY (P. Duval)

- What control systems are in use at DESY?
- Non-legacy control systems used at DESY:
  - in house: DOOCS, TINE, SPECTRA (HASYLAB)
  - external: EPICS (cryogenics + utilities), TANGO (HASYLAB)
  - commercial: PVSS (H1), D3 (cryogenics)
- Grand unification of control systems effort underway:
  - TINE-DOOCS, EPICS-DOOCS, etc.
  - but further diversification (TANGO) = more interfaces
  - no agreement likely on using one system, so use the most convenient
- For the uninitiated: explains why there is not one light source control package.

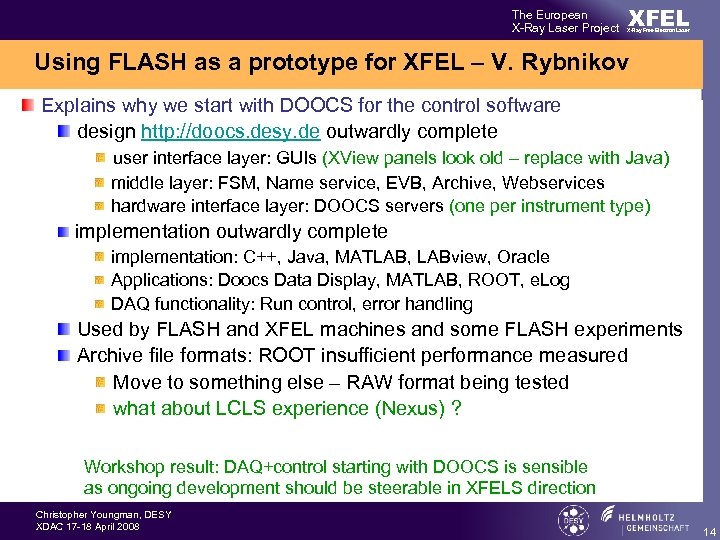

Using FLASH as a prototype for XFEL (V. Rybnikov)

- Explains why we start with DOOCS for the control software design (http://doocs.desy.de)
- Outwardly complete:
  - user interface layer: GUIs (XView panels look old; replace with Java)
  - middle layer: FSM, name service, EVB, archive, web services
  - hardware interface layer: DOOCS servers (one per instrument type)
- Implementation outwardly complete:
  - implementation: C++, Java, MATLAB, LabVIEW, Oracle
  - applications: DOOCS Data Display, MATLAB, ROOT, eLog
  - DAQ functionality: run control, error handling
- Used by FLASH and XFEL machines and some FLASH experiments
- Archive file formats:
  - ROOT: insufficient performance measured
  - move to something else: RAW format being tested
  - what about LCLS experience (NeXus)?
- Workshop result: starting DAQ + control with DOOCS is sensible, as ongoing development should be steerable in XFEL's direction.

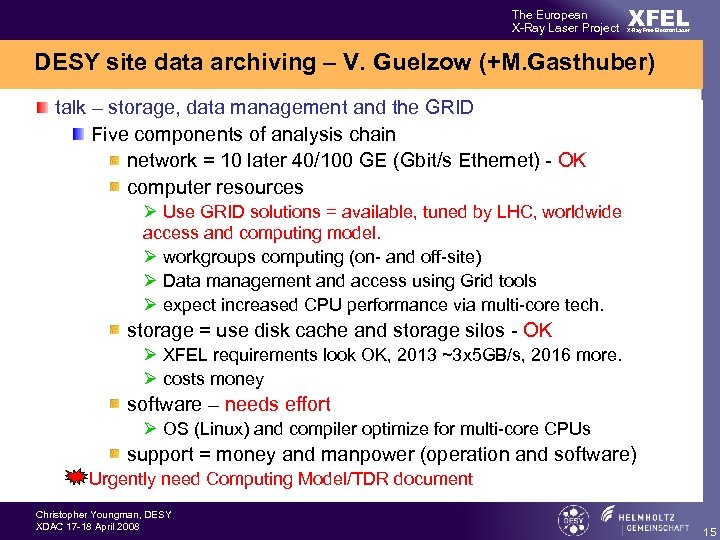

DESY site data archiving (V. Guelzow, M. Gasthuber)

- Talk: storage, data management and the GRID
- Five components of analysis chain:
  - network = 10, later 40/100 GE (Gbit/s Ethernet): OK
  - computer resources
    - use GRID solutions = available, tuned by LHC, worldwide access and computing model
    - workgroup computing (on- and off-site)
    - data management and access using Grid tools
    - expect increased CPU performance via multi-core technology
  - storage = use disk cache and storage silos: OK
    - XFEL requirements look OK: 2013 ~3 x 5 GB/s, 2016 more
    - costs money
  - software: needs effort
    - OS (Linux) and compiler optimised for multi-core CPUs
  - support = money and manpower (operation and software)
- Urgently need a Computing Model/TDR document

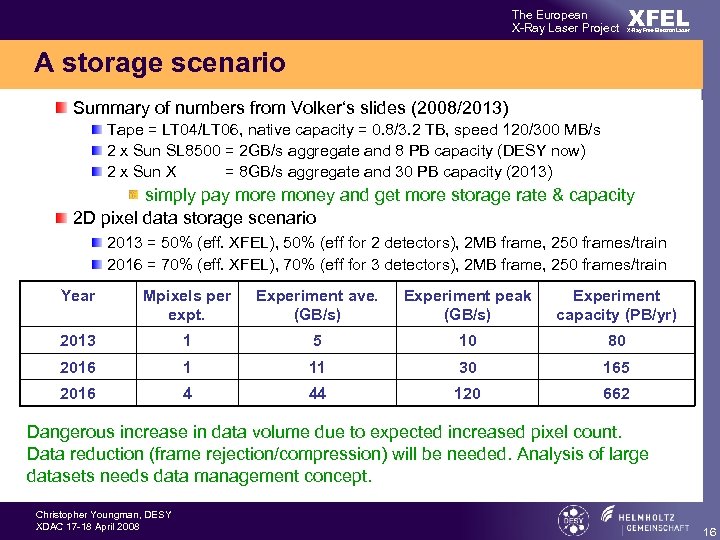

A storage scenario

- Summary of numbers from Volker's slides (2008/2013):
  - Tape = LTO-4/LTO-6, native capacity = 0.8/3.2 TB, speed 120/300 MB/s
  - 2 x Sun SL8500 = 2 GB/s aggregate and 8 PB capacity (DESY now)
  - 2 x Sun X = 8 GB/s aggregate and 30 PB capacity (2013)
  - simply pay more money and get more storage rate & capacity
- 2D pixel data storage scenario:
  - 2013 = 50% (eff. XFEL), 50% (eff. for 2 detectors), 2 MB frame, 250 frames/train
  - 2016 = 70% (eff. XFEL), 70% (eff. for 3 detectors), 2 MB frame, 250 frames/train

| Year | Mpixels per expt. | Experiment ave. (GB/s) | Experiment peak (GB/s) | Experiment capacity (PB/yr) |
| --- | --- | --- | --- | --- |
| 2013 | 1 | 5 | 10 | 80 |
| 2016 | 1 | 11 | 30 | 165 |
| 2016 | 4 | 44 | 120 | 662 |

- Dangerous increase in data volume due to the expected increase in pixel count.
- Data reduction (frame rejection/compression) will be needed.
- Analysis of large datasets needs a data management concept.
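The slide does not state how the table entries follow from the scenario parameters. The Python sketch below is one possible reading, offered only as an assumption: peak rate = per-detector rate times number of detectors, average rate = peak times detector efficiency, and yearly volume = average rate times XFEL efficiency times seconds per year, with 10 trains per second. This reading reproduces the 2013 row to within rounding; it does not reproduce every 2016 entry, so those rows evidently include assumptions that are not shown on the slide.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600  # 31,536,000 s


def storage_scenario(frame_mb: float, frames_per_train: int, trains_per_s: float,
                     detectors: int, detector_eff: float, xfel_eff: float) -> dict:
    """Assumed reading of the scenario; the formulas are not given on the slide."""
    per_detector_gb_s = frame_mb * frames_per_train * trains_per_s / 1000.0
    peak_gb_s = per_detector_gb_s * detectors  # all detectors taking data at once
    ave_gb_s = peak_gb_s * detector_eff        # detectors run only part of the time
    # Yearly volume: average rate, scaled by the fraction of time beam is delivered.
    capacity_pb_yr = ave_gb_s * xfel_eff * SECONDS_PER_YEAR / 1e6
    return {"peak_gb_s": peak_gb_s, "ave_gb_s": ave_gb_s,
            "capacity_pb_yr": capacity_pb_yr}


scenario_2013 = storage_scenario(frame_mb=2, frames_per_train=250, trains_per_s=10,
                                 detectors=2, detector_eff=0.5, xfel_eff=0.5)
for key, value in scenario_2013.items():
    print(f"{key}: {value:.1f}")
```

Whatever the exact formula, the yearly volume grows linearly with frame size, so moving from 1 to 4 Mpixel detectors quadruples the storage requirement, as the last two table rows show.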

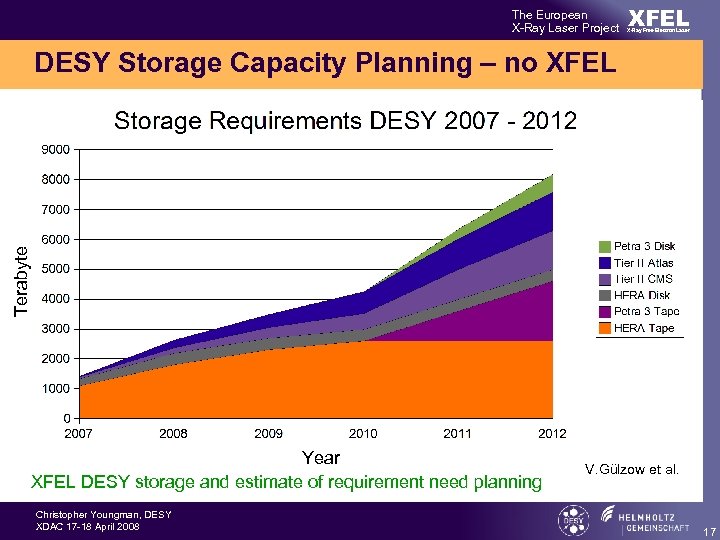

DESY Storage Capacity Planning (no XFEL)

[Chart: DESY storage capacity in terabytes versus year, with an XFEL marker. Source: V. Gülzow et al.]

- DESY storage and estimate of requirement need planning

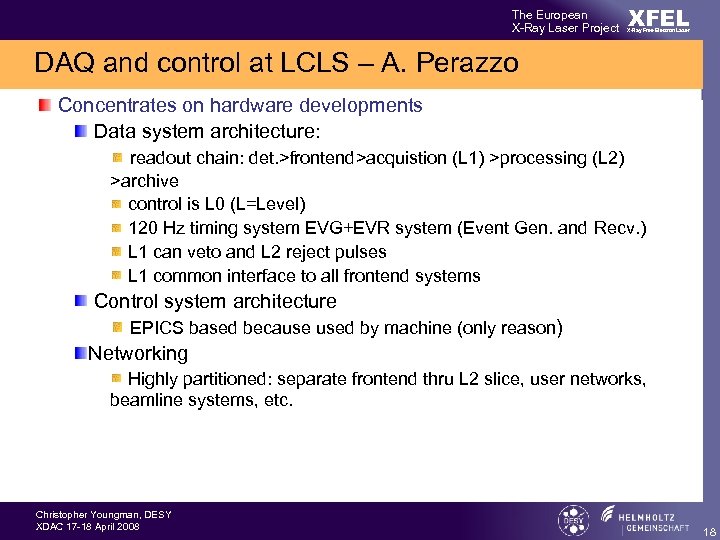

DAQ and control at LCLS (A. Perazzo)

- Concentrates on hardware developments
- Data system architecture:
  - readout chain: detector > frontend > acquisition (L1) > processing (L2) > archive
  - control is L0 (L = Level)
  - 120 Hz timing system: EVG + EVR system (Event Generator and Receiver)
  - L1 can veto and L2 can reject pulses
  - L1 is the common interface to all frontend systems
- Control system architecture:
  - EPICS based because it is used by the machine (only reason)
- Networking:
  - highly partitioned: separate frontend through L2 slice, user networks, beamline systems, etc.
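The two-stage filtering in this readout chain (L1 veto, then L2 reject, then archive) can be pictured as a chain of generators. The Python sketch below is purely illustrative: the `Pulse` fields, the veto flag and the L2 selection function are hypothetical stand-ins and do not describe the actual LCLS implementation.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator, List


@dataclass(frozen=True)
class Pulse:
    pulse_id: int
    veto_signal: bool  # hypothetical fast hardware flag available at L1
    payload: bytes     # detector data for this pulse


def l1_acquire(pulses: Iterable[Pulse]) -> Iterator[Pulse]:
    """L1: drop vetoed pulses before their data is moved any further."""
    return (p for p in pulses if not p.veto_signal)


def l2_process(pulses: Iterable[Pulse],
               keep: Callable[[Pulse], bool]) -> Iterator[Pulse]:
    """L2: software decision that may inspect the full payload."""
    return (p for p in pulses if keep(p))


def archive(pulses: Iterable[Pulse]) -> List[int]:
    """Stand-in for the archive stage: record which pulse ids were stored."""
    return [p.pulse_id for p in pulses]


# Six pulses: every third one is vetoed at L1, empty payloads are rejected at L2.
frontend = [Pulse(i, veto_signal=(i % 3 == 0), payload=b"x" * (i % 2))
            for i in range(6)]
stored = archive(l2_process(l1_acquire(frontend), keep=lambda p: len(p.payload) > 0))
print(stored)
```

The design point is that the cheap hardware decision runs first, so the more expensive L2 analysis only sees pulses that survived the veto.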

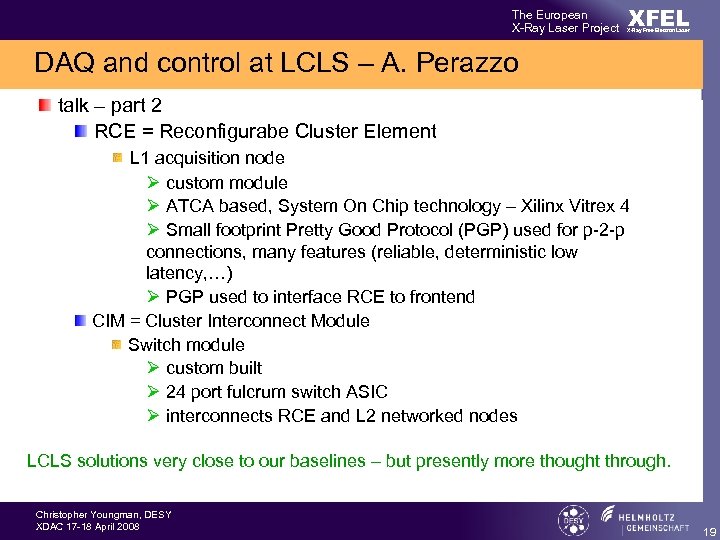

DAQ and control at LCLS (A. Perazzo), part 2

- RCE = Reconfigurable Cluster Element
  - L1 acquisition node
    - custom module
    - ATCA based, System-on-Chip technology: Xilinx Virtex-4
    - small footprint
  - Pretty Good Protocol (PGP) used for point-to-point connections, many features (reliable, deterministic low latency, …)
    - PGP used to interface RCE to frontend
- CIM = Cluster Interconnect Module
  - Switch module
    - custom built
    - 24-port Fulcrum switch ASIC
    - interconnects RCE and L2 networked nodes
- LCLS solutions are very close to our baselines, but presently more thought through.

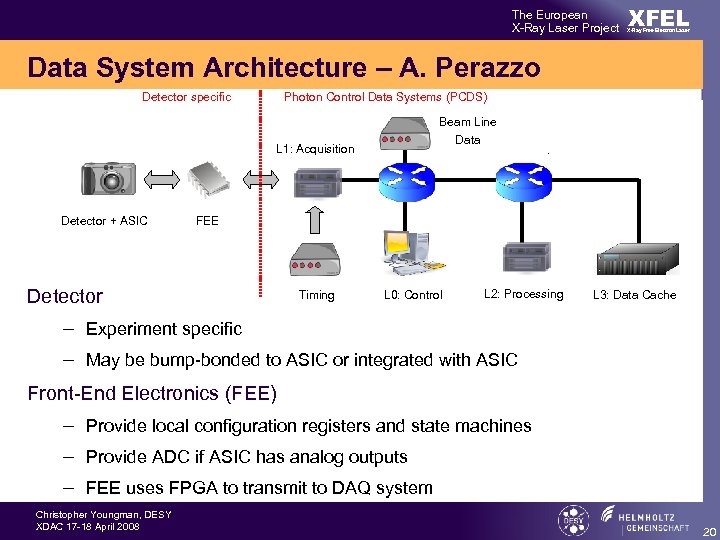

Data System Architecture (A. Perazzo)

[Block diagram: detector-specific part (detector + ASIC, FEE) feeding the Photon Control Data Systems (PCDS) part (L0: Control, L1: Acquisition, L2: Processing, L3: Data Cache), with beam line data and timing inputs.]

- Detector
  - Experiment specific
  - May be bump-bonded to ASIC or integrated with ASIC
- Front-End Electronics (FEE)
  - Provide local configuration registers and state machines
  - Provide ADC if ASIC has analog outputs
  - FEE uses FPGA to transmit to DAQ system

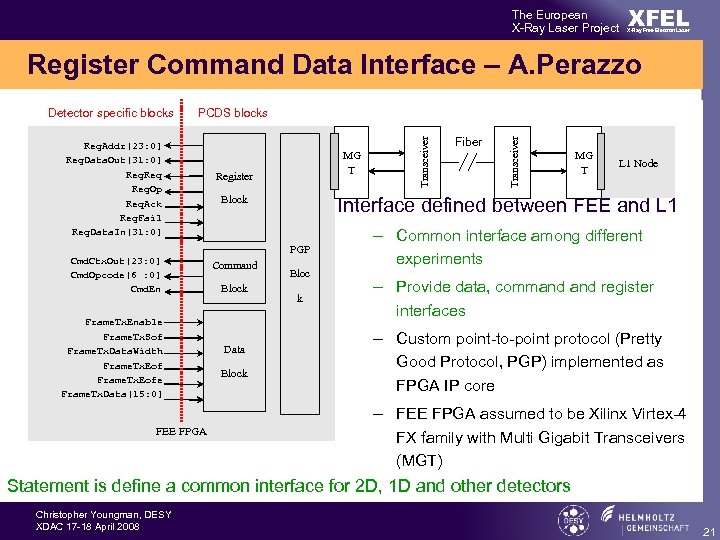

Register Command Data Interface (A. Perazzo)

[Block diagram: FEE FPGA containing detector-specific blocks and PCDS blocks (register block, command block, data block), connected through MGT and fibre transceivers using PGP to the L1 node.]

- Interface defined between FEE and L1
- Interface signals shown in the diagram:
  - Register: RegAddr[23:0], RegDataOut[31:0], RegReq, RegOp, ReqAck, RegFail, RegDataIn[31:0]
  - Command: CmdCtxOut[23:0], CmdOpcode[6:0], CmdEn
  - Data: FrameTxEnable, FrameTxSof, FrameTxDataWidth, FrameTxEofe, FrameTxData[15:0]
- Common interface among different experiments
- Provide data, command and register interfaces
- Custom point-to-point protocol (Pretty Good Protocol, PGP) implemented as FPGA IP core
- FEE FPGA assumed to be Xilinx Virtex-4 FX family with Multi Gigabit Transceivers (MGT)
- Statement: define a common interface for 2D, 1D and other detectors

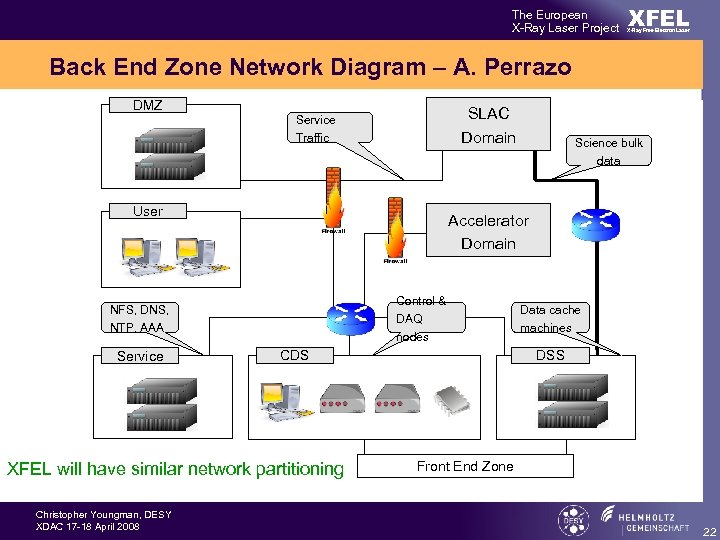

Back End Zone Network Diagram (A. Perazzo)

[Network diagram labels: DMZ, SLAC domain, accelerator domain, user, front end zone, control & DAQ nodes, data cache machines, services (NFS, DNS, NTP, AAA), CDS, DSS; traffic classes: service traffic and science bulk data.]

- XFEL will have similar network partitioning

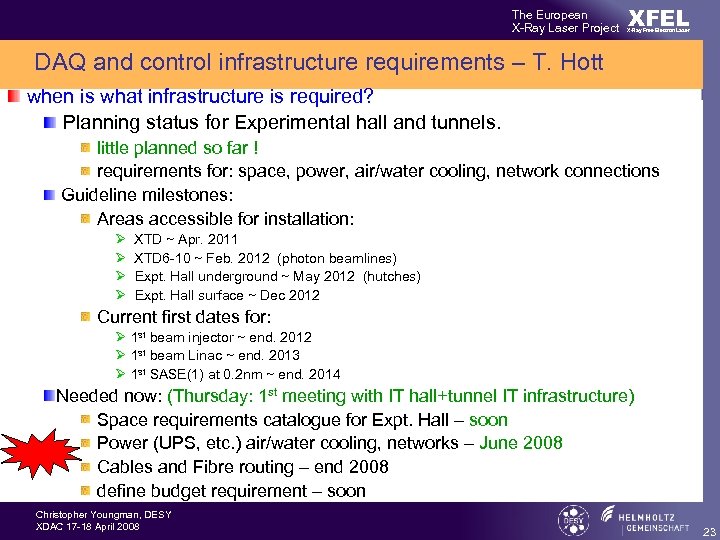

DAQ and control infrastructure requirements (T. Hott)

- When is what infrastructure required?
- Planning status for experimental hall and tunnels: little planned so far!
  - requirements for: space, power, air/water cooling, network connections
- Guideline milestones:
  - Areas accessible for installation:
    - XTD ~ Apr. 2011
    - XTD 6-10 ~ Feb. 2012 (photon beamlines)
    - Expt. hall underground ~ May 2012 (hutches)
    - Expt. hall surface ~ Dec. 2012
  - Current first dates for:
    - 1st beam injector ~ end 2012
    - 1st beam linac ~ end 2013
    - 1st SASE(1) at 0.2 nm ~ end 2014
- Needed now (Thursday: 1st meeting with IT on hall + tunnel IT infrastructure):
  - Space requirements catalogue for expt. hall: soon
  - Power (UPS, etc.), air/water cooling, networks: June 2008
  - Cables and fibre routing: end 2008
  - Define budget requirement: soon

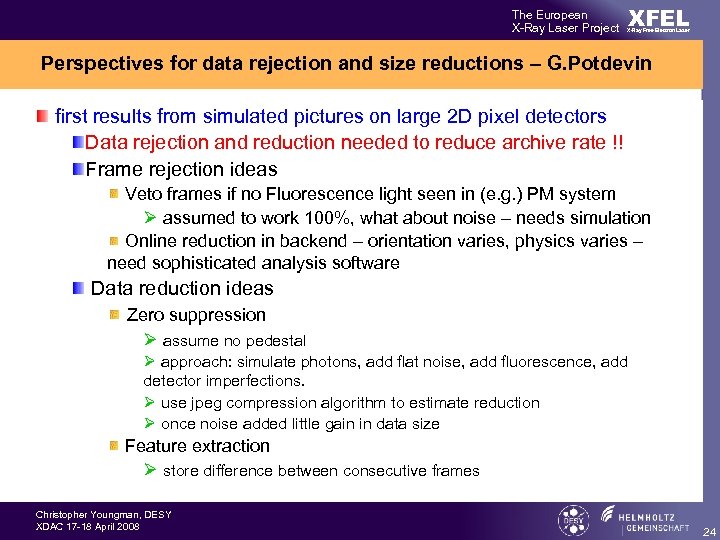

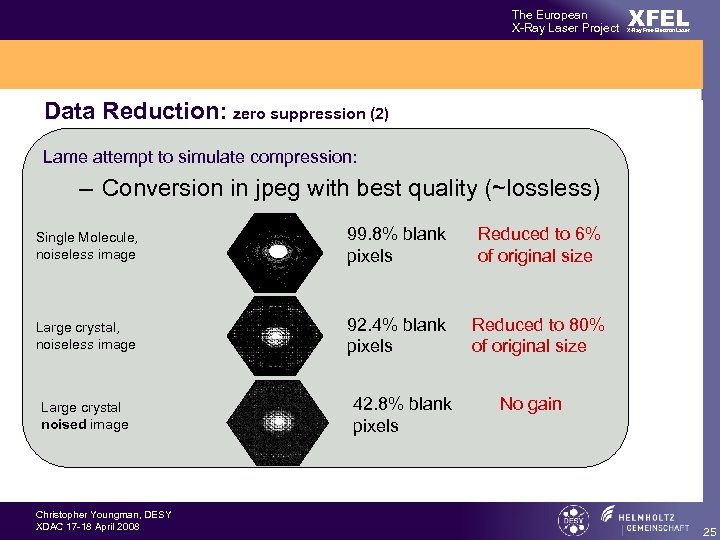

Perspectives for data rejection and size reductions (G. Potdevin)

- First results from simulated pictures on large 2D pixel detectors
- Data rejection and reduction are needed to reduce the archive rate!
- Frame rejection ideas:
  - Veto frames if no fluorescence light is seen in (e.g.) the PM system
    - assumed to work 100%; what about noise? Needs simulation
  - Online reduction in backend: orientation varies, physics varies, so sophisticated analysis software is needed
- Data reduction ideas:
  - Zero suppression
    - assume no pedestal
    - approach: simulate photons, add flat noise, add fluorescence, add detector imperfections
    - use JPEG compression algorithm to estimate reduction
    - once noise is added, little gain in data size
  - Feature extraction
    - store difference between consecutive frames
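The effect of noise on zero suppression can be illustrated with a toy example. The talk estimated the reduction with JPEG compression; the Python sketch below instead uses an explicit (index, value) encoding, which is a different and simpler technique chosen only for illustration. The frame size, photon count, noise range and byte widths are all assumptions.

```python
import random


def zero_suppress(frame, threshold=0):
    """Keep only (index, value) pairs for pixels above the threshold."""
    return [(i, v) for i, v in enumerate(frame) if v > threshold]


def restore(pairs, n_pixels):
    """Rebuild the full frame from the suppressed representation."""
    frame = [0] * n_pixels
    for i, v in pairs:
        frame[i] = v
    return frame


def suppressed_fraction(frame, threshold=0, value_bytes=2, index_bytes=4):
    """Size of the suppressed frame relative to the raw frame."""
    hits = len(zero_suppress(frame, threshold))
    return hits * (value_bytes + index_bytes) / (len(frame) * value_bytes)


rng = random.Random(0)
n_pixels = 10_000
clean = [0] * n_pixels
for i in rng.sample(range(n_pixels), 20):  # 0.2% of pixels see a photon
    clean[i] = 100
noisy = [v + rng.randint(0, 3) for v in clean]  # flat noise, no pedestal

print(f"clean frame: {suppressed_fraction(clean):.3f} of raw size")
print(f"noisy frame: {suppressed_fraction(noisy):.3f} of raw size")
```

In this toy model the sparse frame shrinks to well under 1% of its raw size, while the noisy frame grows, because nearly every pixel now needs an index as well as a value. Raising the threshold above the noise level would restore the gain at the cost of losing weak signals.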

Data Reduction: zero suppression (2)

Lame attempt to simulate compression: conversion to JPEG with best quality (~lossless)

- Single molecule, noiseless image: 99.8% blank pixels, reduced to 6% of original size
- Large crystal, noiseless image: 92.4% blank pixels, reduced to 80% of original size
- Large crystal, noised image: 42.8% blank pixels, no gain
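
The effect reported on this slide can be reproduced qualitatively with a small sketch. Everything in it is an assumption made for illustration: the frames are synthetic random data rather than the simulated diffraction images, `zlib` stands in for the JPEG conversion used in the talk, and the helper names `make_frame` and `compressed_fraction` are hypothetical. Only the 99.8% blank fraction is taken from the slide.

```python
import random
import zlib


def make_frame(n_pixels, blank_fraction, noise_amplitude, seed):
    """Build a flat list of pixel values; a given fraction of pixels is blank (0)."""
    rng = random.Random(seed)
    frame = []
    for _ in range(n_pixels):
        value = 0 if rng.random() < blank_fraction else rng.randint(1, 4095)
        if noise_amplitude:
            # Flat noise added to every pixel, blank or not (illustrative model).
            value += rng.randint(0, noise_amplitude)
        frame.append(value)
    return frame


def compressed_fraction(frame):
    """Compressed size divided by raw size, storing 2 bytes per pixel."""
    raw = b"".join(v.to_bytes(2, "little") for v in frame)
    return len(zlib.compress(raw, 9)) / len(raw)


sparse = compressed_fraction(make_frame(65536, 0.998, 0, seed=1))
noisy = compressed_fraction(make_frame(65536, 0.998, 15, seed=1))
print(f"noiseless: {sparse:.3f} of raw size, with flat noise: {noisy:.3f} of raw size")
```

The noiseless frame compresses far better than the noisy one, which is the same trend as on the slide; the absolute numbers depend entirely on the assumed noise model and compressor.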

Conclusion – G. Potdevin

- Data rejection with veto
  - the proportion of rejected frames is unknown
- Data reduction possible with zero suppression
  - we don't know what the data will look like
  - we don't know how strong the background will be
  - but preliminary simulations tend to show that not much can be gained in this direction
- Early days: more work needed, initial results not encouraging
  - large set of images for different experiments
  - improve background simulation
  - cross-check results with experiment

XFEL 2D pixel detector status

Review as a single block: HPAD, LPD, LSDD (now DEPFET)

Similarities:

- initially 1 Mpixel detector, later 2, 4, 8, 16, 25, …
- similar geometrical tile design
  - sensor > ASIC > frontend > backend readout
  - modular design, e.g. 32 modules = full detector
- similar ASIC
  - 50 MHz ADC digitizes data into pipeline
- similar readout
  - pipeline processing/readout during inter-train gap
- similar control requirements

XFEL 2D pixel detector status

Differences:

- pixel sizes (LPD 500 x 500 μm, HPAD & LSDD 200 x 200 μm)
- gain handling:
  - HPAD: switch dynamically
  - LPD: 3 gains, pick best
  - DEPFET: specific
- layout:
  - HPAD: sensor & ASIC & frontend on detector head
  - LPD: sensor & ASIC on detector head, frontend O(10) cm away
  - LSDD: sensor & ASIC on detector head, frontend O(10) m away
- …

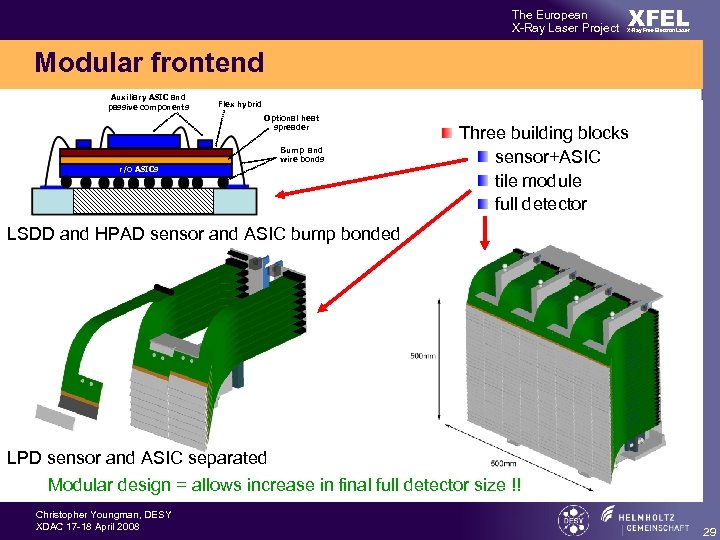

Modular frontend

[Figure labels: auxiliary ASIC and passive components, flex hybrid, optional heat spreader, bump and wire bonds, r/o ASICs, sensitive DEPFET array]

- Three building blocks: sensor+ASIC tile, module, full detector
  - LSDD and HPAD: sensor and ASIC bump bonded
  - LPD: sensor and ASIC separated
- Modular design allows an increase in the final full detector size!

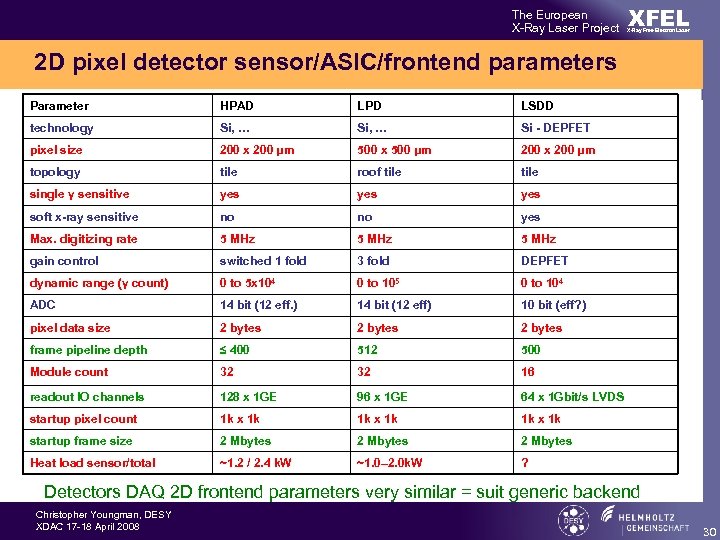

2D pixel detector sensor/ASIC/frontend parameters

Values are listed in column order HPAD, LPD, LSDD.

- technology: Si, … Si - DEPFET
- pixel size: 200 x 200 μm; 500 x 500 μm; 200 x 200 μm
- topology: tile roof tile
- single γ sensitive: yes yes
- soft x-ray sensitive: no; no; yes
- max. digitizing rate: 5 MHz
- gain control: switched 1 fold 3 fold DEPFET
- dynamic range (γ count): 0 to 5 x 10^4; 0 to 10^5; 0 to 10^4
- ADC: 14 bit (12 eff.); 14 bit (12 eff.); 10 bit (eff?)
- pixel data size: 2 bytes
- frame pipeline depth: ≤ 400; 512; 500
- module count: 32; 32; 16
- readout IO channels: 128 x 1 GE; 96 x 1 GE; 64 x 1 Gbit/s LVDS
- startup pixel count: 1k x 1k
- startup frame size: 2 Mbytes
- heat load sensor/total: ~1.2 / 2.4 kW; ~1.0–2.0 kW; ?

2D frontend parameters are very similar, so they suit a generic backend.
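
The table values combine into a rough per-train data volume. The sketch below is only arithmetic on the slide's numbers under stated assumptions: "1k x 1k" is read as 1024 x 1024 pixels, every pipeline slot is filled, and the links run at their raw line rate with no protocol overhead. The function names are hypothetical.

```python
def train_volume_bytes(pixels, bytes_per_pixel, frames_per_train):
    """Raw data produced by one bunch train."""
    return pixels * bytes_per_pixel * frames_per_train


def wire_time_ms(volume_bytes, n_links, link_bits_per_s):
    """Time to move one train over n parallel links at raw line rate."""
    return volume_bytes * 8 / (n_links * link_bits_per_s) * 1e3


# HPAD column: 1k x 1k pixels, 2 bytes per pixel, pipeline depth <= 400,
# 128 x 1 GE readout channels.
hpad_train = train_volume_bytes(1024 * 1024, 2, 400)
print(f"HPAD train volume: {hpad_train / 1e6:.1f} MB")
print(f"HPAD wire time:    {wire_time_ms(hpad_train, 128, 1e9):.1f} ms")
```

The LPD and LSDD columns can be plugged in the same way; real wire times will be longer once headers and link efficiency are included.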

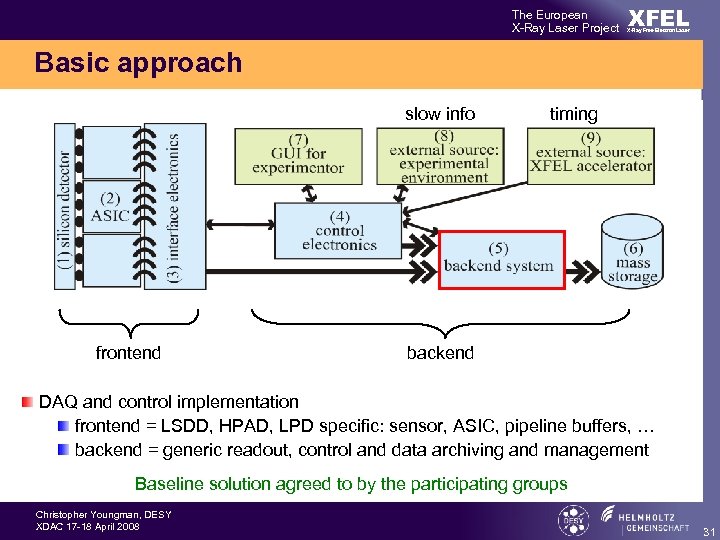

Basic approach

[Diagram labels: slow info, frontend, timing, backend]

DAQ and control implementation:

- frontend = LSDD, HPAD, LPD specific: sensor, ASIC, pipeline buffers, …
- backend = generic readout, control, and data archiving and management

Baseline solution agreed to by the participating groups.

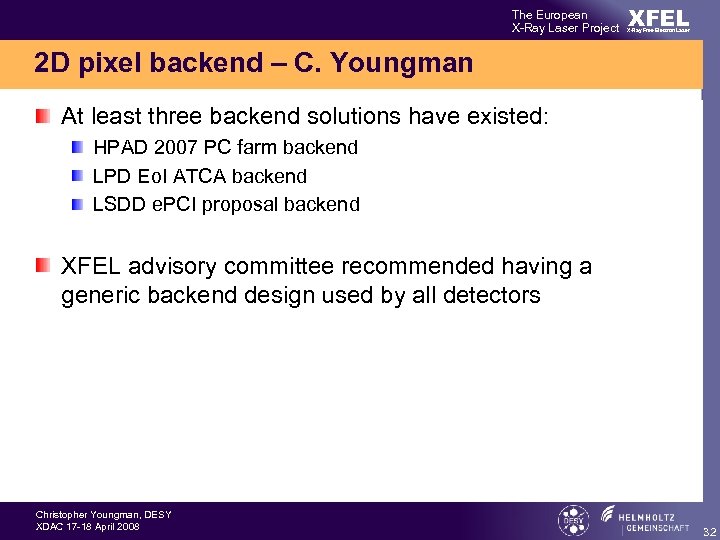

2D pixel backend – C. Youngman

- At least three backend solutions have existed:
  - HPAD: 2007 PC farm backend
  - LPD: EoI ATCA backend
  - LSDD: ePCI proposal backend
- The XFEL advisory committee recommended having a generic backend design used by all detectors

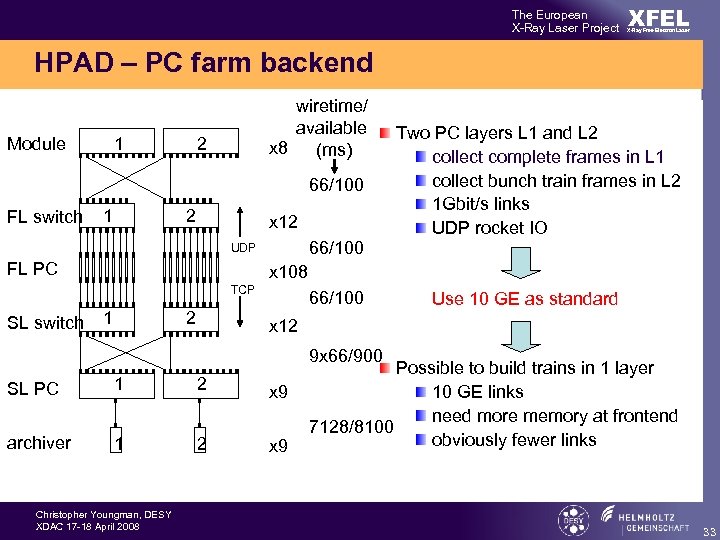

HPAD – PC farm backend

[Diagram: Module x8, FL switch x12, FL PC x108, SL switch, SL PC x9, archiver x9; links labelled UDP and TCP; wiretime/available (ms) labels: 66/100, 9 x 66/900, 7128/8100]

- Two PC layers, L1 and L2
  - collect complete frames in L1
  - collect bunch train frames in L2
- 1 Gbit/s links, UDP, rocket IO
- Use 10 GE as standard
  - possible to build trains in 1 layer
  - 10 GE links need more memory at frontend
  - obviously fewer links

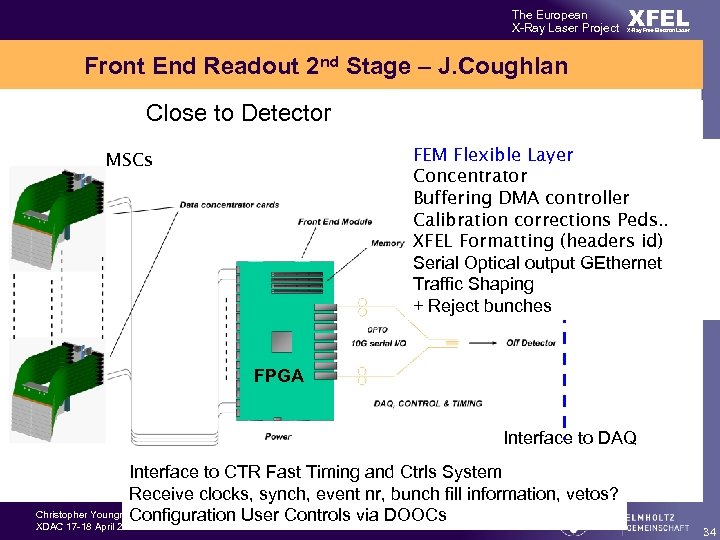

Front End Readout 2nd Stage – J. Coughlan

[Diagram labels: close to detector, FEM, flexible layer, concentrator, FPGA, MSCs, interface to DAQ, interface to CTR]

- Buffering
- DMA controller
- Calibration corrections, peds, ...
- XFEL formatting (headers, id)
- Serial optical output, GEthernet
- Traffic shaping + reject bunches
- Fast timing and controls system: receive clocks, synch, event nr, bunch fill information, vetoes?
- Configuration
- User controls via DOOCS

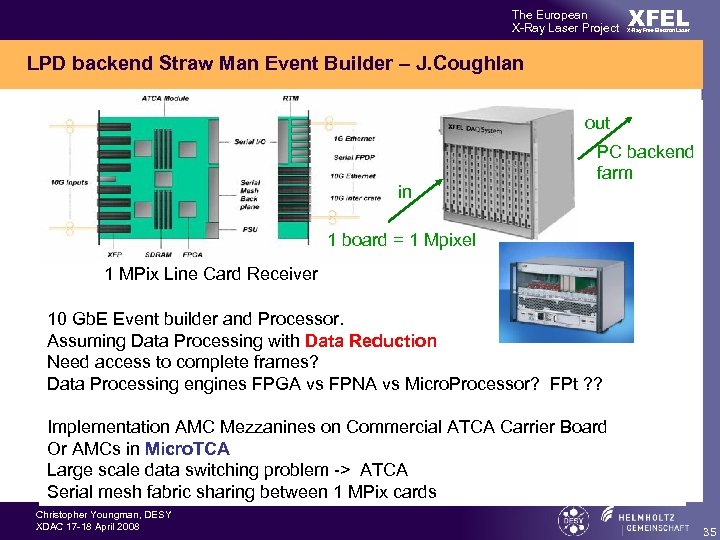

LPD backend Straw Man Event Builder – J. Coughlan

[Diagram labels: in, out, PC backend farm, 1 board = 1 Mpixel]

- 1 MPix line card
  - receiver 10 GbE
  - event builder and processor
- Assuming data processing with data reduction
  - need access to complete frames?
- Data processing engines
  - FPGA vs FPNA vs microprocessor? FPt ??
- Implementation
  - AMC mezzanines on commercial ATCA carrier board
  - or AMCs in MicroTCA
- Large scale data switching problem -> ATCA
  - serial mesh fabric sharing between 1 MPix cards

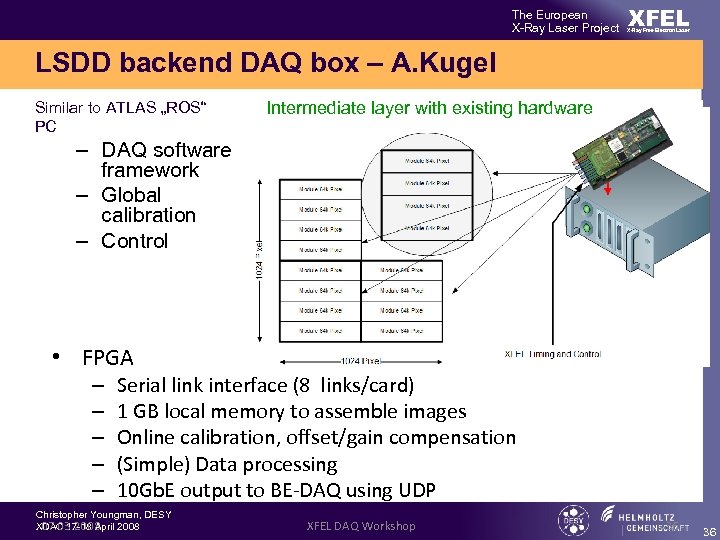

LSDD backend DAQ box – A. Kugel

(XFEL DAQ Workshop slide, dated 07.03.2008)

- Intermediate layer with existing hardware, similar to the ATLAS "ROS"
- PC
  - DAQ software framework
  - global calibration
  - control
- FPGA
  - serial link interface (8 links/card)
  - 1 GB local memory to assemble images
  - online calibration, offset/gain compensation
  - (simple) data processing
  - 10 GbE output to BE-DAQ using UDP

How to progress on backend

- Need agreement on a generic solution for all detectors
  - agree on protocol from frontend
  - scalability issues for >1 Mpixel detectors
  - intermediate layer between frontend and backend PC farm
- Subgroup formed to look at other intermediate layer solutions:
  - M. French, J. Coughlan, T. Nicholls, R. Halsall, P. Goettlicher, M. Zimmer, A. Kugel, J. Visschers, M. v. Beuzekom, C. Youngman
- Produce an on-paper design of an ATCA intermediate layer to investigate feasibility. Assume:
  - 10 GE inputs
  - 1 board per 1 Mpixel input
  - build frames and ordered trains
  - send result to backend PC farm
- LPD had looked at other solutions: iWARP, …
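
What "build frames and ordered trains" could mean in software terms is sketched below. This is an illustration only, not the ATCA design: the class `TrainBuilder`, its method names and the fragment fields (`train_id`, `frame_id`, `module_id`) are all hypothetical, and a real implementation would live in FPGA logic with bounded buffers.

```python
from collections import defaultdict


class TrainBuilder:
    """Collect per-module fragments, assemble frames, emit them in frame order."""

    def __init__(self, n_modules):
        self.n_modules = n_modules
        # train_id -> frame_id -> module_id -> payload bytes
        self._pending = defaultdict(lambda: defaultdict(dict))

    def add_fragment(self, train_id, frame_id, module_id, payload):
        """Store one module's data for one frame; arrival order does not matter."""
        self._pending[train_id][frame_id][module_id] = payload

    def build_train(self, train_id):
        """Return the train's complete frames, ordered by frame id, and free its buffers."""
        frames = self._pending.pop(train_id, {})
        train = []
        for frame_id in sorted(frames):
            modules = frames[frame_id]
            if len(modules) == self.n_modules:  # incomplete frames are dropped
                data = b"".join(modules[m] for m in sorted(modules))
                train.append((frame_id, data))
        return train


builder = TrainBuilder(n_modules=2)
builder.add_fragment(7, 1, 1, b"d")  # fragments arrive out of order
builder.add_fragment(7, 0, 0, b"a")
builder.add_fragment(7, 1, 0, b"c")
builder.add_fragment(7, 0, 1, b"b")
builder.add_fragment(7, 2, 0, b"e")  # frame 2 never completes
result = builder.build_train(7)
print(result)
```

Dropping incomplete frames is one possible policy; flagging them and passing them on would be an equally valid choice for the paper design.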

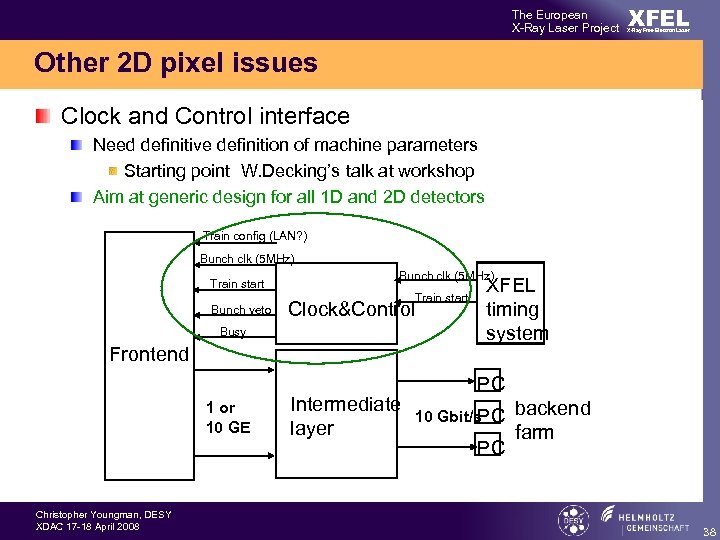

Other 2D pixel issues

- Clock and control interface
  - need definitive definition of machine parameters
  - starting point: W. Decking's talk at the workshop
  - aim at a generic design for all 1D and 2D detectors

[Diagram labels: XFEL timing system, Clock&Control, frontend, intermediate layer, PC backend farm; signals: train config (LAN?), bunch clk (5 MHz), train start, bunch veto, busy; links: 1 or 10 GE, 10 Gbit/s]
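
The signals named in the diagram suggest how a frontend might decide which bunches to store. The following is a minimal sketch under assumptions: only the 5 MHz bunch clock comes from the slide, while the function names, the veto representation as a set of bunch indices, and the rule "stop when the pipeline is full" are hypothetical.

```python
BUNCH_CLOCK_HZ = 5_000_000  # bunch clock frequency quoted on the slide


def bunch_spacing_ns(clock_hz=BUNCH_CLOCK_HZ):
    """Time between consecutive bunches in nanoseconds."""
    return 1e9 / clock_hz


def select_bunches(n_bunches, vetoed, pipeline_depth):
    """Indices of bunches to store: not vetoed, and at most pipeline_depth of them."""
    kept = [b for b in range(n_bunches) if b not in vetoed]
    return kept[:pipeline_depth]


print(bunch_spacing_ns(), "ns between bunches")
print(select_bunches(n_bunches=6, vetoed={1, 3}, pipeline_depth=3))
```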

Workshop issues and decisions

- prepare a machine operation parameters and timing document
- use DOOCS software for control of experiments
  - use existing software to control beam line and diagnostics systems
- standardize on 10 GE links
- set up a working group for generic large 2D pixel backend systems
  - decide on a solution
- generic clock and control TCA interface to XFEL timing system
  - make sure of the expt. requirements (what information is required needs discussion)
- computing TDR is needed (participate in it)
  - what storage and computing resources are required?
  - use central DESY-IT data storage systems to archive the data?
  - GRID-based data management, access and job submission?
- get foothold space allocated in tunnels and XHEXP
  - network infrastructure
  - computing hardware

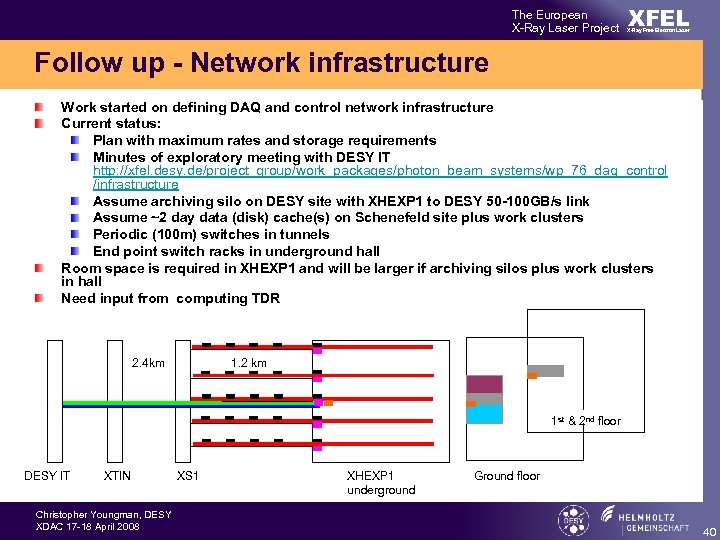

Follow up - Network infrastructure

- Work started on defining DAQ and control network infrastructure
- Current status:
  - plan with maximum rates and storage requirements
  - minutes of exploratory meeting with DESY IT
  - http://xfel.desy.de/project_group/work_packages/photon_beam_systems/wp_76_daq_control/infrastructure
- Assume archiving silo on DESY site with XHEXP1 to DESY 50-100 GB/s link
- Assume ~2 day data (disk) cache(s) on Schenefeld site plus work clusters
- Periodic (100 m) switches in tunnels
- End point switch racks in underground hall
- Room space is required in XHEXP1 and will be larger if archiving silos plus work clusters are in the hall
- Need input from computing TDR

[Diagram labels: DESY IT, XTIN, XS1, XHEXP1 underground, ground floor, 1st & 2nd floor; distances 1.2 km and 2.4 km]
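
An upper bound on the size of a ~2 day cache follows directly from the link figures. The sketch below takes the slide's 50-100 GB/s at face value and assumes, purely for illustration, that data arrives continuously at that rate for the whole period; the real duty cycle and any reduction before the cache are not known here. `cache_size_pb` is a hypothetical helper.

```python
SECONDS_PER_DAY = 86_400


def cache_size_pb(rate_gb_per_s, days):
    """Cache volume in petabytes for a sustained input rate in GB/s."""
    return rate_gb_per_s * 1e9 * days * SECONDS_PER_DAY / 1e15


for rate in (50, 100):
    print(f"{rate} GB/s for 2 days -> {cache_size_pb(rate, 2):.2f} PB")
```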

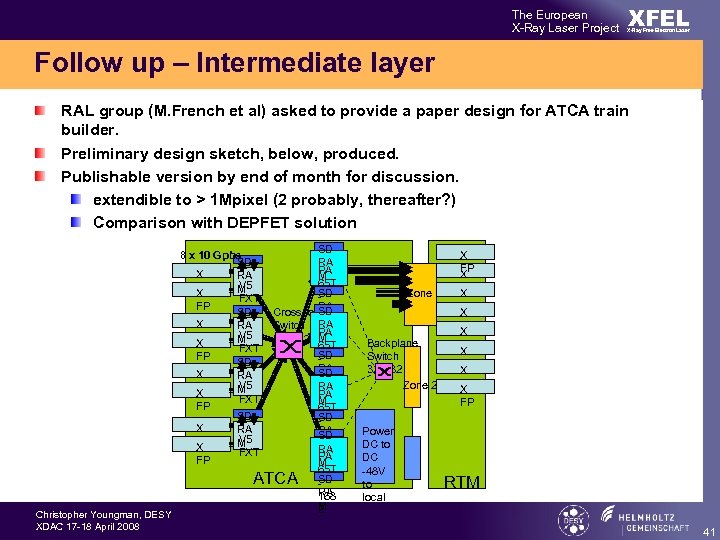

Follow up – Intermediate layer

- RAL group (M. French et al.) asked to provide a paper design for an ATCA train builder
- Preliminary design sketch, below, produced
- Publishable version by end of month for discussion
  - extendible to > 1 Mpixel (2 probably, thereafter?)
- Comparison with DEPFET solution

[Diagram: ATCA board sketch; legible labels: crosspoint switch 32 x 32, 8 x 10 Gbps, V5 FXT, Zone 3, backplane switch 32 x 32, Zone 2, power DC to DC (-48 V to local), RTM]

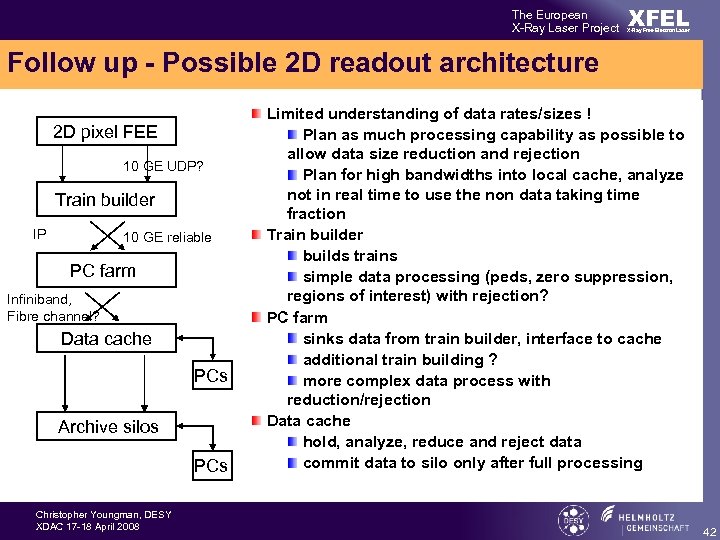

Follow up - Possible 2D readout architecture

[Diagram: 2D pixel FEE, 10 GE (UDP?), train builder, IP 10 GE (reliable), PC farm, Infiniband or Fibre Channel?, data cache PCs, archive silo PCs]

- Limited understanding of data rates/sizes!
  - plan as much processing capability as possible to allow data size reduction and rejection
  - plan for high bandwidths into local cache; analyze not in real time, to use the non-data-taking time fraction
- Train builder
  - builds trains
  - simple data processing (peds, zero suppression, regions of interest), with rejection?
- PC farm
  - sinks data from train builder, interface to cache
  - additional train building?
  - more complex data processing with reduction/rejection
- Data cache
  - hold, analyze, reduce and reject data
  - commit data to silo only after full processing
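
The "simple data processing" named for the train builder (pedestals, zero suppression, regions of interest) can be illustrated on a single frame. This is a plain-Python sketch under assumptions, not the planned firmware: the function name `process_frame`, the threshold rule and the end-exclusive ROI tuple are hypothetical choices.

```python
def process_frame(frame, pedestals, threshold, roi):
    """Pedestal-subtract, crop to a region of interest, and zero-suppress one frame.

    frame, pedestals: lists of rows of pixel values with identical shape.
    roi: (row0, row1, col0, col1), end-exclusive.
    Returns a sparse list of (row, col, value) for pixels above threshold.
    """
    row0, row1, col0, col1 = roi
    hits = []
    for r in range(row0, row1):
        for c in range(col0, col1):
            value = frame[r][c] - pedestals[r][c]  # pedestal subtraction
            if value > threshold:                  # zero suppression
                hits.append((r, c, value))
    return hits


frame = [[10, 11, 10],
         [10, 50, 10],
         [90, 10, 10]]
pedestals = [[10] * 3 for _ in range(3)]
hits = process_frame(frame, pedestals, threshold=5, roi=(0, 2, 0, 3))
print(hits)  # the bright pixel in row 2 lies outside the ROI
```

Whether such a sparse output is smaller than the raw frame depends on the occupancy, which ties back to the open question of how noisy the real data will be.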

Follow up – Computing TDR

- The DAQ and control needs an understanding of what comes later
  - we need a computing TDR
  - WP 76 is already making assumptions about DESY site silos, etc.
- What is the DESY-IT role?
- Do we need a group working on the TDR?
  - WP 76 has to be involved
- We need to tie in the various FP7/GRID calls and external offers
  - how are external requests to be handled: put them in the group?
- Who does this?

Follow up – DOOCS

- Sergey Esenov joined WP 76 in March
- To get experience of DOOCS:
  - set up some simple test systems
  - run control system to control PC farm, etc.
  - gather and report experience of DOOCS usage
  - work in progress …
- Options if DOOCS is inadequate for our needs:
  - get the DOOCS people to improve their system, e.g. improved code management and builds
  - write our own code and layer it under DOOCS

Conclusions

- Important issues
  - finalize the 2D detector intermediate layer
  - aim at a single 1D and 2D control signal handling scheme
  - participate in a computing TDR
  - DOOCS feedback
- Acknowledgements
  - thanks to all who contributed
