ccedbe80d0d5bca03cfaf5ceeb73b7f4.ppt

- Number of slides: 40

The Energy Sciences Network
BESAC, August 2004
Mary Anne Scott, Program Manager, Advanced Scientific Computing Research, Office of Science, Department of Energy
William E. Johnston, ESnet Dept. Head and Senior Scientist
R. P. Singh, Federal Project Manager
Michael S. Collins, Stan Kluz, Joseph Burrescia, and James V. Gagliardi, ESnet Leads
Gizella Kapus, Resource Manager
and the ESnet Team, Lawrence Berkeley National Laboratory

What is ESnet?
• Mission: Provide interoperable, effective, and reliable communications infrastructure and leading-edge network services that support the missions of the Department of Energy, especially the Office of Science
• Vision: Provide seamless and ubiquitous access, via shared collaborative information and computational environments, to the facilities, data, and colleagues needed to accomplish their goals
• Role: A component of the Office of Science infrastructure critical to the success of its research programs (program funded through ASCR/MICS; managed and operated by ESnet staff at LBNL)

Why is ESnet important?
• Enables thousands of DOE, university, and industry scientists and collaborators worldwide to make effective use of unique DOE research facilities and computing resources, independent of time and geographic location
  o Direct connections to all major DOE sites
  o Access to the global Internet (managing 150,000 routes at 10 commercial peering points)
  o User demand has grown by a factor of more than 10,000 since its inception in the mid-1980s: a 100 percent increase every year since 1990
• Capabilities not available through commercial networks
  - Architected to move huge amounts of data between a small number of sites
  - High-bandwidth peering to provide access to US, European, Asia-Pacific, and other research and education networks
Objective: Support scientific research by providing seamless and ubiquitous access to the facilities, data, and colleagues

How is ESnet Managed?
• A community endeavor
  o Strategic guidance from the OSC programs
    - Energy Sciences Network Steering Committee (ESSC); BES is represented by Nestor Zaluzec, ANL, and Jeff Nichols, ORNL
  o Network operation is a shared activity with the community
    - ESnet Site Coordinators Committee
    - Ensures the right operational "sociology" for success
• Complex and specialized, both in network engineering and in network management, in order to provide its services to the laboratories in an integrated support environment
• Extremely reliable in several dimensions
→ Taken together, these points make ESnet a unique facility supporting DOE science that is quite different from a commercial ISP or university network

…What Now?
VISION: A scalable, secure, integrated network environment for ultra-scale distributed science is being developed to make it possible to combine resources and expertise to address complex questions that no single institution could manage alone.
• Network strategy
  o Production network: base TCP/IP services; 99.9+% reliable
  o High-impact network: increments of 10 Gb/s; switched lambdas (and other solutions); 99% reliable
  o Research network: interfaces with production, high-impact, and other research networks; starts with electronic switching and advances toward optical switching; very flexible [UltraScience Net]
• Revisit governance model
  o SC-wide coordination
  o Advisory Committee involvement

Where do you come in?
• Early identification of requirements
  o Evolving programs
  o New facilities
• Participation in management activities
• Interaction with BES representatives on ESSC
• Next ESSC meeting on Oct 13-15 in the DC area

What Does ESnet Provide?
• A network connecting DOE Labs and their collaborators that is critical to the future process of science
• An architecture tailored to accommodate DOE's large-scale science
  o move huge amounts of data between a small number of sites
• High-bandwidth access to DOE's primary science collaborators: research and education institutions in the US, Europe, Asia-Pacific, and elsewhere
• Full access to the global Internet for DOE Labs
• Grid middleware and collaboration services supporting collaborative science
  o trust, persistence, and science-oriented policy
• Comprehensive user support, including "owning" all trouble tickets involving ESnet users (including problems at the far end of an ESnet connection) until they are resolved; 24 x 7 coverage

What is ESnet Today?
• Essentially all of the national data traffic supporting US science is carried by two networks: ESnet and Internet2/Abilene (which plays a similar role for the university community)

How Do Networks Work?
• Accessing a service, Grid or otherwise, such as a Web server, FTP server, etc., from a client computer and client application (e.g., a Web browser) involves
  o Target host names
  o Host addresses
  o Service identification
  o Routing

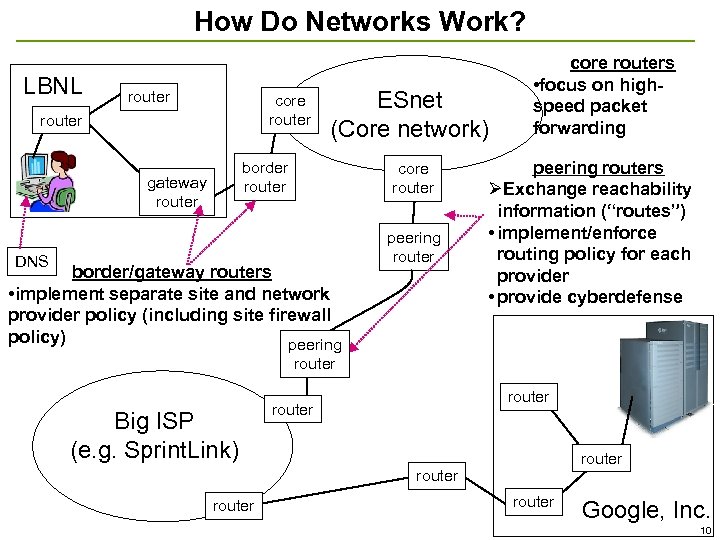

How Do Networks Work?
(Diagram: traffic from LBNL passes through the site's gateway and border routers into the ESnet core network, across core routers to an ESnet peering router, then into a big ISP (e.g., SprintLink) and on to a destination such as Google, Inc.; a DNS server is also shown.)
• Border/gateway routers implement separate site and network provider policy (including site firewall policy)
• Core routers focus on high-speed packet forwarding
• Peering routers
  o exchange reachability information ("routes")
  o implement/enforce routing policy for each provider
  o provide cyberdefense

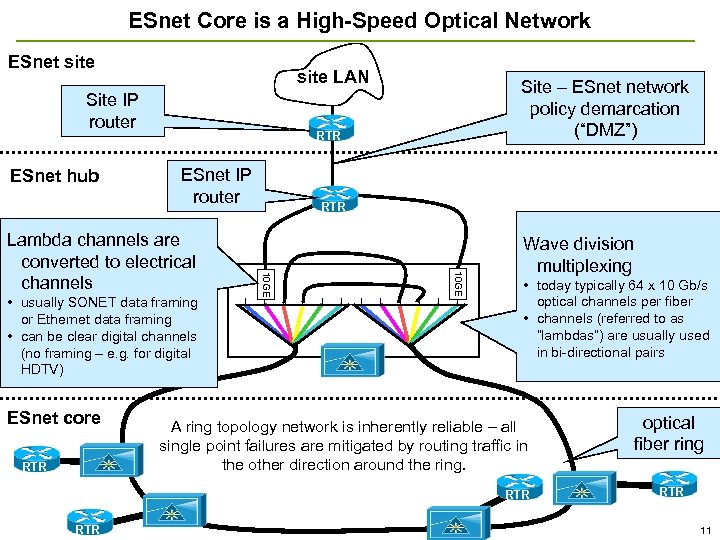

ESnet Core is a High-Speed Optical Network
(Diagram: an ESnet site LAN connects through the site IP router to an ESnet hub router over 10 GE; the site-ESnet boundary is the network policy demarcation ("DMZ"); ESnet hub routers are joined by an optical fiber ring that forms the ESnet core.)
• Wave division multiplexing
  o today typically 64 x 10 Gb/s optical channels per fiber
  o channels (referred to as "lambdas") are usually used in bi-directional pairs
• Lambda channels are converted to electrical channels
  o usually SONET data framing or Ethernet data framing
  o can be clear digital channels (no framing, e.g., for digital HDTV)
• A ring topology network is inherently reliable: all single point failures are mitigated by routing traffic in the other direction around the ring.
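The ring's failure behavior can be illustrated with a small graph search. This is a toy model, not ESnet's routing protocol: it assumes a hypothetical hub ordering (SNV, CHI, AOA, DC, ATL, ELP) built from hub names used on these slides, treats each fiber span as one undirected link, and finds a path with breadth-first search. Cutting any single span still leaves a path the other way around the ring; cutting two spans partitions it.

```python
from collections import deque

# Hypothetical ring order, built from hub names used on these slides.
HUBS = ["SNV", "CHI", "AOA", "DC", "ATL", "ELP"]


def ring_links(nodes):
    """Undirected links joining each hub to the next, wrapping around to close the ring."""
    return {frozenset((nodes[i], nodes[(i + 1) % len(nodes)])) for i in range(len(nodes))}


def find_path(nodes, links, src, dst):
    """Breadth-first search over the surviving links; returns a hop list or None."""
    neighbors = {n: [] for n in nodes}
    for link in links:
        a, b = tuple(link)
        neighbors[a].append(b)
        neighbors[b].append(a)
    previous = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:  # walk back from dst to src
                path.append(node)
                node = previous[node]
            return path[::-1]
        for nxt in neighbors[node]:
            if nxt not in previous:
                previous[nxt] = node
                queue.append(nxt)
    return None


links = ring_links(HUBS)
print(find_path(HUBS, links, "SNV", "CHI"))      # ['SNV', 'CHI']
one_cut = links - {frozenset(("SNV", "CHI"))}
print(find_path(HUBS, one_cut, "SNV", "CHI"))    # the long way around the ring
two_cuts = one_cut - {frozenset(("AOA", "DC"))}
print(find_path(HUBS, two_cuts, "SNV", "AOA"))   # None: the ring is partitioned
```

The same reasoning explains why a site attached by a single tail circuit gains nothing from the ring: its one link is itself a single point of failure.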

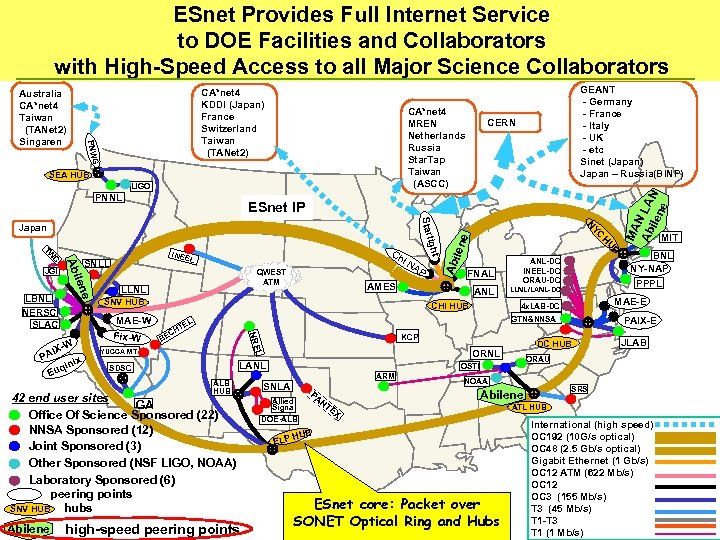

ESnet Provides Full Internet Service to DOE Facilities and Collaborators with High-Speed Access to All Major Science Collaborators
(Map: the ESnet core, a Packet over SONET optical ring with hubs including SEA, SNV, ALB, ELP, ATL, DC, NYC, and CHI, connecting 42 end-user sites: Office of Science sponsored (22), NNSA sponsored (12), joint sponsored (3), other sponsored (NSF LIGO, NOAA), and laboratory sponsored (6). Peering points include MAE-W, FIX-W, PAIX-W, PAIX-E, MAE-E, NY-NAP, Equinix, MAN LAN, and StarLight, with high-speed peering to Abilene and to international networks including CA*net4, GEANT (Germany, France, Italy, UK, etc.), SInet and KDDI (Japan), Japan-Russia (BINP), CERN, Netherlands, Russia, Taiwan (ASCC, TANet2), Singaren, and Australia.)
Link speeds shown: international (high speed); OC192 (10 Gb/s optical); OC48 (2.5 Gb/s optical); Gigabit Ethernet (1 Gb/s); OC12 ATM (622 Mb/s); OC12; OC3 (155 Mb/s); T3 (45 Mb/s); T1-T3; T1 (1.5 Mb/s).

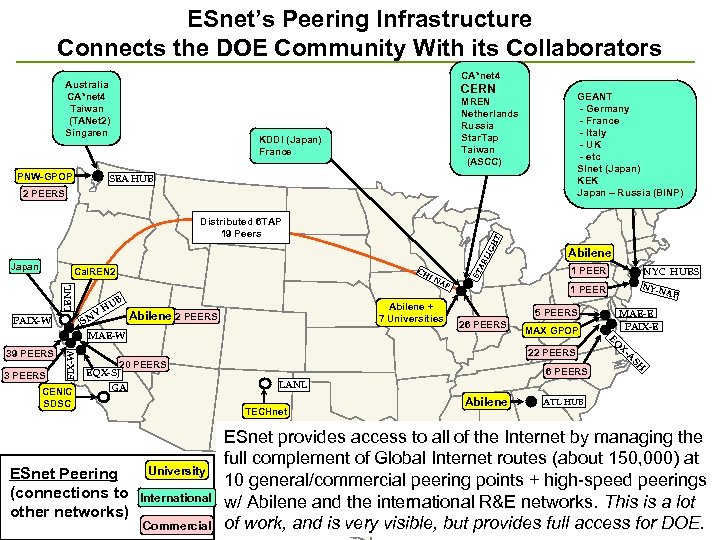

ESnet's Peering Infrastructure Connects the DOE Community With its Collaborators
(Map: ESnet hubs and peering points, e.g., MAE-W, FIX-W, PAIX-W, EQX-SJ, MAE-E, PAIX-E, EQX-ASH, the NYC hubs, MAX GPOP, PNW-GPOP, CENIC/CalREN2, and StarLight/StarTap, annotated with the number of peers at each (from 1 to 39), plus connections to international R&E networks (CA*net4, GEANT, SInet and KEK (Japan), KDDI, Japan-Russia (BINP), CERN, MREN, Netherlands, Russia, Taiwan (ASCC, TANet2), Singaren, Australia), commercial networks, and universities via Abilene.)
ESnet provides access to all of the Internet by managing the full complement of global Internet routes (about 150,000) at 10 general/commercial peering points, plus high-speed peerings with Abilene and the international R&E networks. This is a lot of work and is very visible, but it provides full access for DOE.

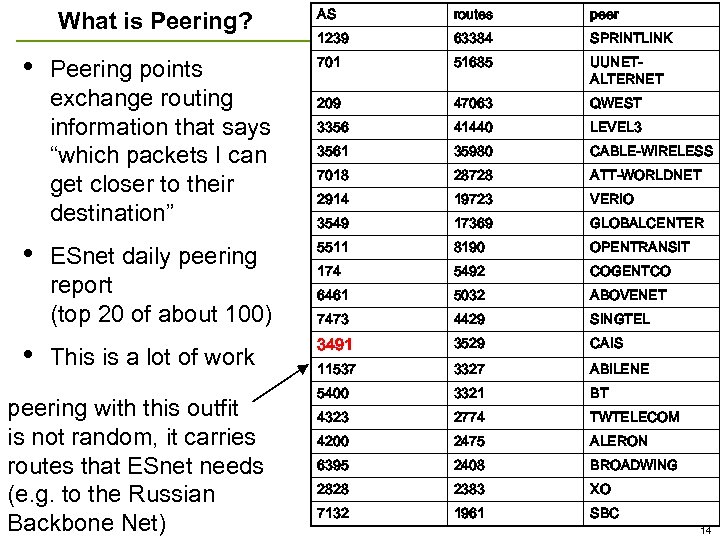

What is Peering?
• Peering points exchange routing information that says "which packets I can get closer to their destination"
• This is a lot of work
• Peering with CAIS (AS 3491) is not random: it carries routes that ESnet needs (e.g., to the Russian Backbone Network)

ESnet daily peering report (top 20 of about 100 peers)

| AS | Routes | Peer |
|---|---|---|
| 1239 | 63384 | SPRINTLINK |
| 701 | 51685 | UUNET-ALTERNET |
| 209 | 47063 | QWEST |
| 3356 | 41440 | LEVEL 3 |
| 3561 | 35980 | CABLE-WIRELESS |
| 7018 | 28728 | ATT-WORLDNET |
| 2914 | 19723 | VERIO |
| 3549 | 17369 | GLOBALCENTER |
| 5511 | 8190 | OPENTRANSIT |
| 174 | 5492 | COGENTCO |
| 6461 | 5032 | ABOVENET |
| 7473 | 4429 | SINGTEL |
| 3491 | 3529 | CAIS |
| 11537 | 3327 | ABILENE |
| 5400 | 3321 | BT |
| 4323 | 2774 | TWTELECOM |
| 4200 | 2475 | ALERON |
| 6395 | 2408 | BROADWING |
| 2828 | 2383 | XO |
| 7132 | 1961 | SBC |
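Why carrying the full route table matters can be sketched in a few lines. This is a deliberately simplified model, not the BGP decision process as run on ESnet's routers: the routes are hypothetical (prefix, AS path) pairs, the most specific matching prefix wins, ties are broken by the shorter AS path, and there is no default route, so a destination that no peer announced is unreachable. The AS numbers come from the peering report and traceroute on these slides; the prefixes and paths are illustrative assumptions.

```python
import ipaddress

# Hypothetical routes learned from peers: (announced prefix, AS path toward the origin).
ROUTES = [
    (ipaddress.ip_network("194.226.160.0/24"), [3491, 5568, 5387]),        # via CAIS
    (ipaddress.ip_network("194.226.160.0/24"), [1239, 3356, 5568, 5387]),  # via SprintLink
    (ipaddress.ip_network("194.226.0.0/16"), [701, 5568]),                 # via UUNET
]


def best_route(destination: str, routes=ROUTES):
    """Longest matching prefix wins; among equal prefixes, the shortest AS path wins."""
    addr = ipaddress.ip_address(destination)
    candidates = [(net, path) for net, path in routes if addr in net]
    if not candidates:
        return None  # nobody announced a covering prefix, and there is no default route
    return max(candidates, key=lambda route: (route[0].prefixlen, -len(route[1])))


net, path = best_route("194.226.160.10")
print(net, path)                      # 194.226.160.0/24 [3491, 5568, 5387]
print(best_route("198.51.100.7"))     # None
```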

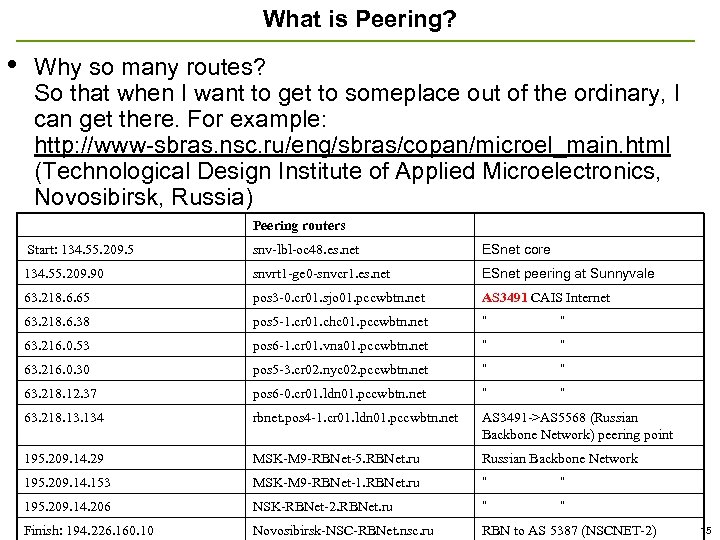

What is Peering?
• Why so many routes? So that when I want to get to someplace out of the ordinary, I can get there. For example:
http://www-sbras.nsc.ru/eng/sbras/copan/microel_main.html (Technological Design Institute of Applied Microelectronics, Novosibirsk, Russia)

| Hop address | Router name | Network / notes |
|---|---|---|
| Start: 134.55.209.5 | snv-lbl-oc48.es.net | ESnet core |
| 134.55.209.90 | snvrt1-ge0-snvcr1.es.net | ESnet peering at Sunnyvale |
| 63.218.6.65 | pos3-0.cr01.sjo01.pccwbtn.net | AS 3491 CAIS Internet |
| 63.218.6.38 | pos5-1.cr01.chc01.pccwbtn.net | " |
| 63.216.0.53 | pos6-1.cr01.vna01.pccwbtn.net | " |
| 63.216.0.30 | pos5-3.cr02.nyc02.pccwbtn.net | " |
| 63.218.12.37 | pos6-0.cr01.ldn01.pccwbtn.net | " |
| 63.218.134 | rbnet.pos4-1.cr01.ldn01.pccwbtn.net | AS 3491 -> AS 5568 (Russian Backbone Network) peering point |
| 195.209.14.29 | MSK-M9-RBNet-5.RBNet.ru | Russian Backbone Network |
| 195.209.14.153 | MSK-M9-RBNet-1.RBNet.ru | " |
| 195.209.14.206 | NSK-RBNet-2.RBNet.ru | " |
| Finish: 194.226.160.10 | Novosibirsk-NSC-RBNet.nsc.ru | RBNet to AS 5387 (NSCNET-2) |
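The traceroute above crosses three network boundaries. Collapsing consecutive hops that belong to the same network gives the AS-level path, which is the level at which peering relationships are arranged. The hop-to-network labels below are transcribed from the slide's annotations; labeling the final hop as AS 5387 is an assumption based on the "RBNet to AS 5387" note.

```python
from itertools import groupby

# (router name, network) pairs transcribed from the traceroute; the final hop's
# AS 5387 label is an assumption based on the slide's "RBNet to AS 5387" note.
HOPS = [
    ("snv-lbl-oc48.es.net", "ESnet"),
    ("snvrt1-ge0-snvcr1.es.net", "ESnet"),
    ("pos3-0.cr01.sjo01.pccwbtn.net", "AS 3491 (CAIS)"),
    ("pos5-1.cr01.chc01.pccwbtn.net", "AS 3491 (CAIS)"),
    ("pos6-1.cr01.vna01.pccwbtn.net", "AS 3491 (CAIS)"),
    ("pos5-3.cr02.nyc02.pccwbtn.net", "AS 3491 (CAIS)"),
    ("pos6-0.cr01.ldn01.pccwbtn.net", "AS 3491 (CAIS)"),
    ("rbnet.pos4-1.cr01.ldn01.pccwbtn.net", "AS 3491 (CAIS)"),
    ("MSK-M9-RBNet-5.RBNet.ru", "AS 5568 (RBNet)"),
    ("MSK-M9-RBNet-1.RBNet.ru", "AS 5568 (RBNet)"),
    ("NSK-RBNet-2.RBNet.ru", "AS 5568 (RBNet)"),
    ("Novosibirsk-NSC-RBNet.nsc.ru", "AS 5387 (NSCNET-2)"),
]


def network_path(hops):
    """Collapse runs of consecutive hops in the same network into (network, hop count) pairs."""
    return [(network, len(list(run))) for network, run in groupby(hops, key=lambda hop: hop[1])]


for network, count in network_path(HOPS):
    print(f"{network}: {count} hop(s)")
```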

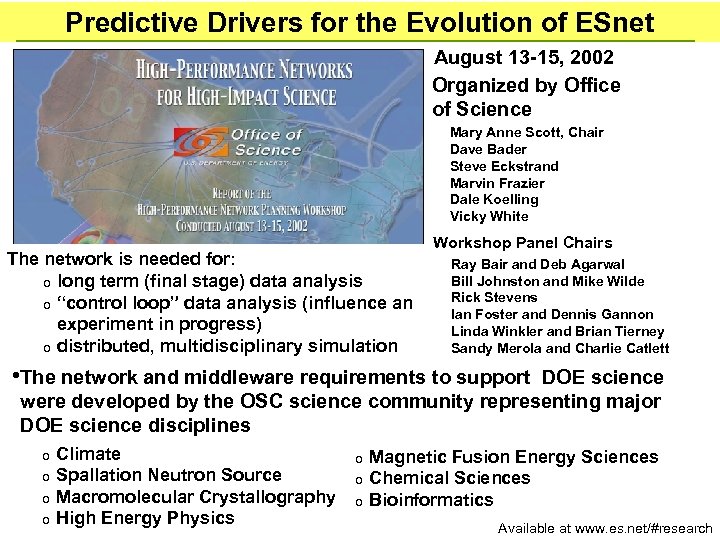

Predictive Drivers for the Evolution of ESnet
Workshop of August 13-15, 2002, organized by the Office of Science
• Mary Anne Scott, Chair; Dave Bader, Steve Eckstrand, Marvin Frazier, Dale Koelling, Vicky White
• Workshop panel chairs: Ray Bair and Deb Agarwal; Bill Johnston and Mike Wilde; Rick Stevens; Ian Foster and Dennis Gannon; Linda Winkler and Brian Tierney; Sandy Merola and Charlie Catlett
• The network and middleware requirements to support DOE science were developed by the OSC science community representing major DOE science disciplines:
  o Climate
  o Spallation Neutron Source
  o Macromolecular Crystallography
  o High Energy Physics
  o Magnetic Fusion Energy Sciences
  o Chemical Sciences
  o Bioinformatics
• The network is needed for:
  o long term (final stage) data analysis
  o "control loop" data analysis (influence an experiment in progress)
  o distributed, multidisciplinary simulation
Available at www.es.net/#research

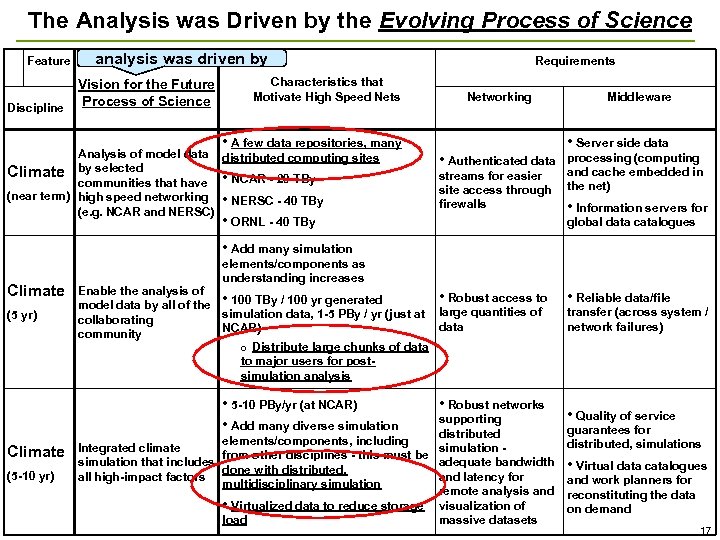

The Analysis was Driven by the Evolving Process of Science
(Table excerpt for the Climate discipline; columns: vision for the future process of science, characteristics that motivate high-speed nets, and networking and middleware requirements.)
• Climate (near term)
  o Vision: analysis of model data by selected communities that have high-speed networking (e.g., NCAR and NERSC)
  o Characteristics: a few data repositories, many distributed computing sites; NCAR: 20 TBy, NERSC: 40 TBy, ORNL: 40 TBy
  o Requirements: authenticated data streams for easier site access through firewalls; server-side data processing (computing and cache embedded in the net); information servers for global data catalogues
• Climate (5 yr)
  o Vision: enable the analysis of model data by all of the collaborating community
  o Characteristics: add many simulation elements/components as understanding increases; 100 TBy / 100 yr generated simulation data, 1-5 PBy/yr (just at NCAR); distribute large chunks of data to major users for post-simulation analysis
  o Requirements: robust access to large quantities of data; reliable data/file transfer (across system/network failures)
• Climate (5-10 yr)
  o Vision: integrated climate simulation that includes all high-impact factors
  o Characteristics: 5-10 PBy/yr (at NCAR); add many diverse simulation elements/components, including from other disciplines; this must be done with distributed, multidisciplinary simulation
  o Requirements: robust networks supporting distributed simulation, with adequate bandwidth and latency for remote analysis and visualization of massive datasets; quality of service guarantees for distributed simulations; virtualized data to reduce storage load; virtual data catalogues and work planners for reconstituting the data on demand

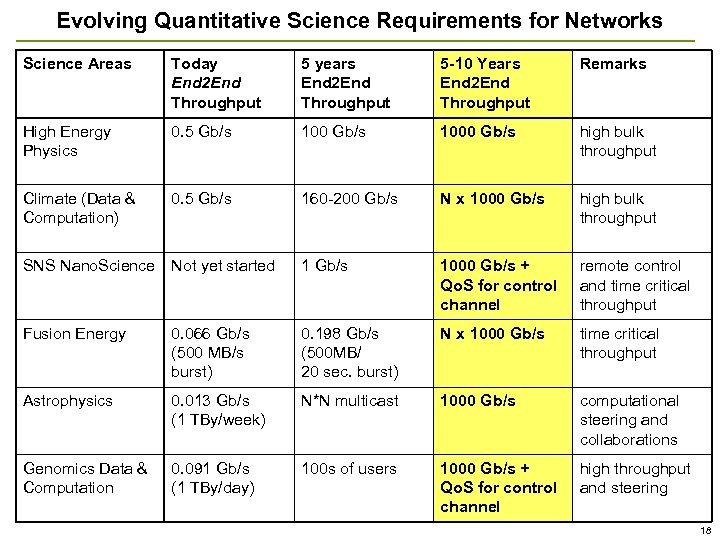

Evolving Quantitative Science Requirements for Networks

| Science area | Today: end-to-end throughput | 5 years: end-to-end throughput | 5-10 years: end-to-end throughput | Remarks |
|---|---|---|---|---|
| High Energy Physics | 0.5 Gb/s | | 1000 Gb/s | high bulk throughput |
| Climate (data & computation) | 0.5 Gb/s | 160-200 Gb/s | N x 1000 Gb/s | high bulk throughput |
| SNS NanoScience | not yet started | 1 Gb/s | 1000 Gb/s + QoS for control channel | remote control and time-critical throughput |
| Fusion Energy | 0.066 Gb/s (500 MB/s burst) | 0.198 Gb/s (500 MB/20 sec. burst) | N x 1000 Gb/s | time-critical throughput |
| Astrophysics | 0.013 Gb/s (1 TBy/week) | N*N multicast | 1000 Gb/s | computational steering and collaborations |
| Genomics data & computation | 0.091 Gb/s (1 TBy/day) | 100s of users | 1000 Gb/s + QoS for control channel | high throughput and steering |
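The "today" figures in this table are sustained averages of bulk transfers. A minimal conversion, assuming decimal terabytes (10^12 bytes) and a perfectly sustained rate with no protocol overhead, reproduces the Astrophysics entry (1 TBy/week, about 0.013 Gb/s) and gives about 0.093 Gb/s for 1 TBy/day, close to the table's 0.091 Gb/s for Genomics.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def tby_to_gbps(terabytes: float, seconds: float) -> float:
    """Sustained rate in Gb/s needed to move `terabytes` (decimal TB = 1e12 bytes) in `seconds`."""
    return terabytes * 1e12 * 8 / seconds / 1e9


print(f"{tby_to_gbps(1, SECONDS_PER_DAY):.4f} Gb/s")         # 1 TBy/day: 0.0926
print(f"{tby_to_gbps(1, 7 * SECONDS_PER_DAY):.4f} Gb/s")     # 1 TBy/week: 0.0132
print(f"{tby_to_gbps(250, 30 * SECONDS_PER_DAY):.2f} Gb/s")  # 250 TBy per 30-day month: 0.77
```

The last line applies the same conversion to ESnet's reported total of about 250 TBy/month, which averages to well under 1 Gb/s; peaks and individual large flows are much higher than such averages suggest.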

Observed Drivers for ESnet Evolution
• Are we seeing the predictions of two years ago come true?
• Yes!

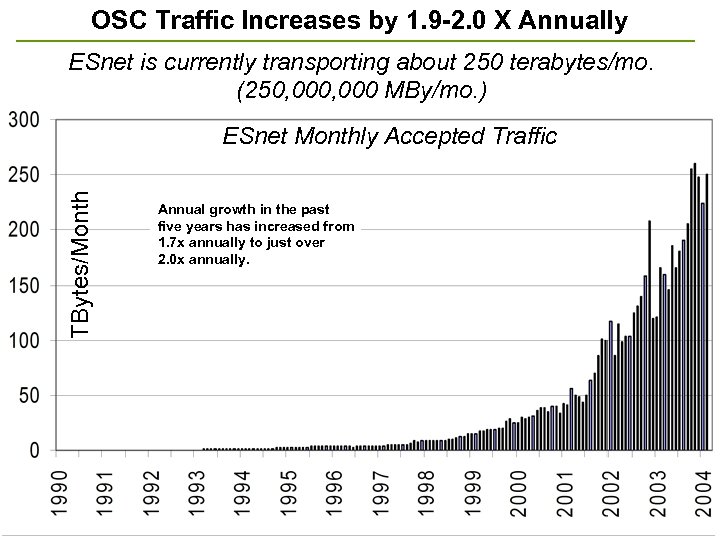

OSC Traffic Increases by 1.9-2.0X Annually
ESnet is currently transporting about 250 terabytes/mo. (250,000 GBy/mo.)
(Chart: ESnet Monthly Accepted Traffic, TBytes/Month.)
Annual growth in the past five years has increased from 1.7x annually to just over 2.0x annually.
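Annual growth factors of this size compound quickly. The sketch below projects the current ~250 TBy/month forward, assuming the 1.9x and 2.0x annual factors from this slide simply hold constant (an illustrative assumption, not a forecast from the source), and reports the implied doubling time.

```python
import math


def project_monthly_volume(start_tby: float, annual_factor: float, years: int) -> list:
    """Monthly volume now and after each whole year of constant compound growth."""
    return [start_tby * annual_factor ** year for year in range(years + 1)]


def doubling_time_years(annual_factor: float) -> float:
    """Years needed to double at a constant annual growth factor."""
    return math.log(2) / math.log(annual_factor)


for factor in (1.9, 2.0):
    volumes = project_monthly_volume(250, factor, 5)
    print(f"{factor}x/yr: year-5 volume ~{volumes[-1]:,.0f} TBy/month, "
          f"doubling every {doubling_time_years(factor):.2f} years")
```

At 2.0x per year the monthly volume reaches 8,000 TBy after five years; at 1.9x it reaches roughly 6,190 TBy.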

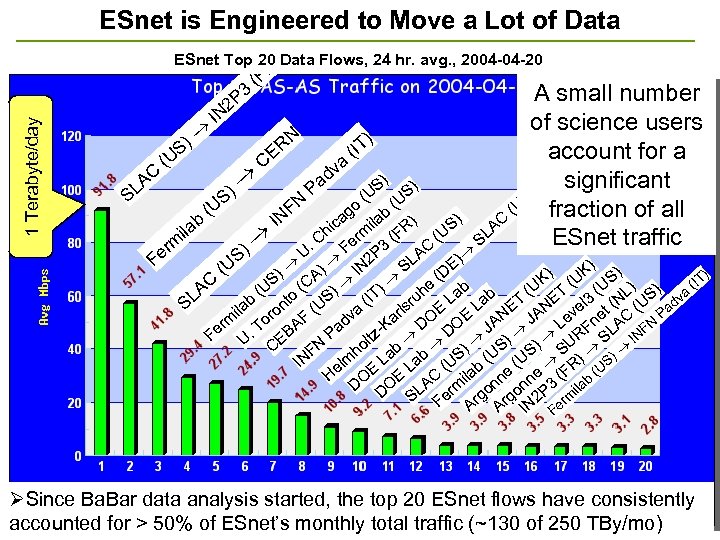

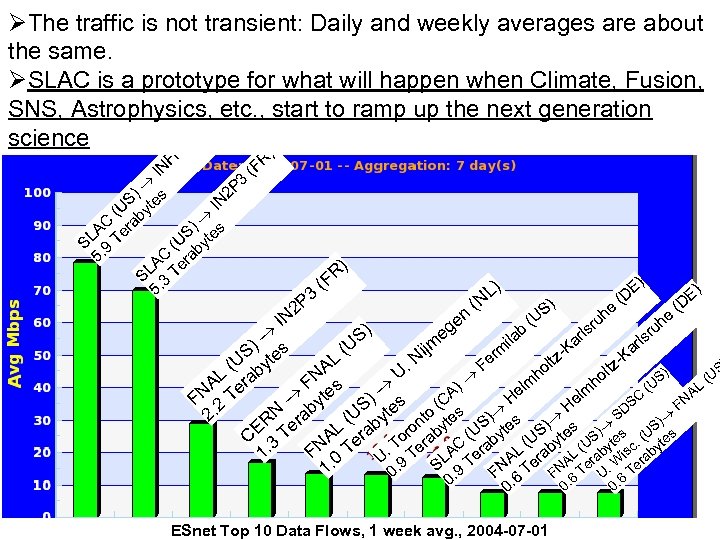

ESnet is Engineered to Move a Lot of Data
(Chart: ESnet Top 20 Data Flows, 24 hr. avg., 2004-04-20, with a 1 Terabyte/day scale marker; the largest flows involve SLAC, Fermilab, IN2P3 (FR), INFN Padova (IT), Karlsruhe (DE), and other US and international sites.)
• A small number of science users account for a significant fraction of all ESnet traffic
→ Since BaBar data analysis started, the top 20 ESnet flows have consistently accounted for > 50% of ESnet's monthly total traffic (~130 of 250 TBy/mo)

→ The traffic is not transient: daily and weekly averages are about the same.
→ SLAC is a prototype for what will happen when Climate, Fusion, SNS, Astrophysics, etc., start to ramp up the next generation of science.
(Chart: ESnet Top 10 Data Flows, 1 week avg., 2004-07-01; flows involve SLAC, Fermilab, IN2P3 (FR), INFN Padova (IT), Karlsruhe (DE), and other sites, measured in terabytes per week.)

ESnet is a Critical Element of Large-Scale Science
• ESnet is a critical part of the large-scale science infrastructure of high energy physics experiments, climate modeling, magnetic fusion experiments, astrophysics data analysis, etc.
• As other large-scale facilities, such as SNS, turn on, this will be true across DOE

Science Mission Critical Infrastructure
• ESnet is a visible and critical piece of general DOE science infrastructure
  o if ESnet fails, tens of thousands of DOE and university users know it within minutes if not seconds
• Requires high reliability and high operational security in
  o network operations, and
  o ESnet infrastructure support: the systems that support the operation and management of the network and services
    - Secure and redundant mail and Web systems are central to the operation and security of ESnet: trouble tickets are handled by email, engineering communication is by email, and the engineering database interface is via the Web
    - Secure network access to hub equipment
    - Backup secure telephony access to all routers
    - 24 x 7 help desk (joint with NERSC) and 24 x 7 on-call network engineers

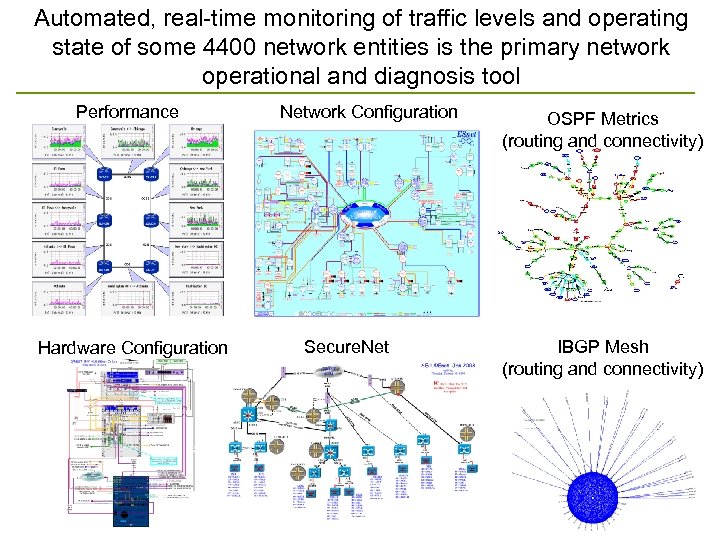

Automated, real-time monitoring of traffic levels and operating state of some 4400 network entities is the primary network operational and diagnosis tool.
(Monitoring displays: Performance; Hardware Configuration; Network Configuration; SecureNet; OSPF Metrics (routing and connectivity); IBGP Mesh (routing and connectivity).)
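The slide does not say how traffic levels are collected. A common approach, assumed here, is to poll per-interface byte counters (for example the SNMP IF-MIB counters ifInOctets, 32-bit, and ifHCInOctets, 64-bit) and turn two samples into a rate. The sketch shows the one subtle step, handling a counter that wrapped between polls, and why 32-bit counters are impractical on 10 Gb/s links.

```python
COUNTER32_MODULUS = 2 ** 32  # 32-bit octet counters (e.g. ifInOctets) wrap here
COUNTER64_MODULUS = 2 ** 64  # 64-bit octet counters (e.g. ifHCInOctets)


def octet_rate_bps(prev: int, curr: int, interval_s: float,
                   modulus: int = COUNTER32_MODULUS) -> float:
    """Average bits per second between two byte-counter samples, tolerating one wrap."""
    if interval_s <= 0:
        raise ValueError("polling interval must be positive")
    delta_octets = (curr - prev) % modulus  # modular difference is correct after one wrap
    return delta_octets * 8 / interval_s


def wrap_time_s(link_bps: float, modulus: int = COUNTER32_MODULUS) -> float:
    """Seconds for an octet counter to wrap when the link runs at full rate."""
    return modulus * 8 / link_bps


print(octet_rate_bps(1_000, 751_000, 60))      # 100000.0 b/s
print(octet_rate_bps(2**32 - 500, 1_500, 1))   # counter wrapped: 16000.0 b/s
print(f"{wrap_time_s(10e9):.1f} s")            # 32-bit wrap time at 10 Gb/s: 3.4 s
```

Because a 32-bit counter can wrap more than once between polls on a fully loaded 10 Gb/s link, the 64-bit modulus is the practical choice for backbone interfaces.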

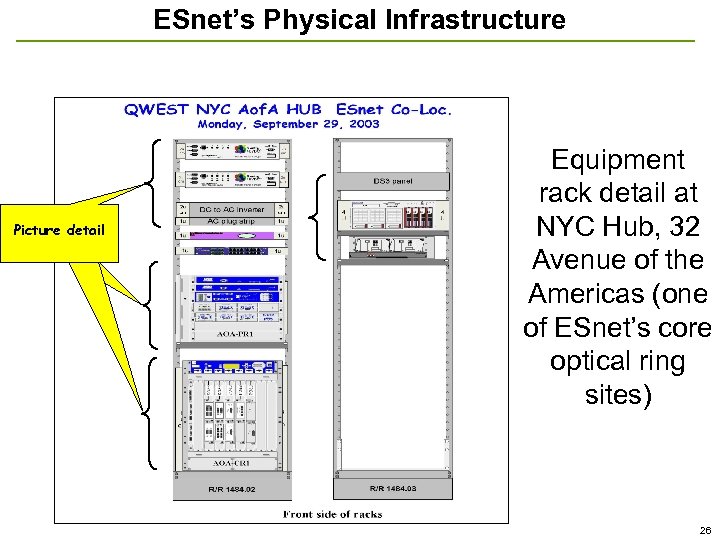

ESnet's Physical Infrastructure
(Photo and detail: equipment rack at the NYC hub, 32 Avenue of the Americas, one of ESnet's core optical ring sites.)

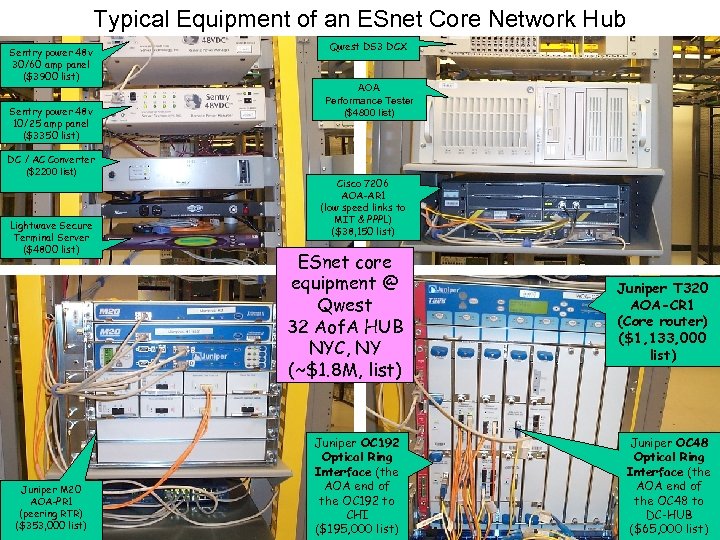

Typical Equipment of an ESnet Core Network Hub
ESnet core equipment @ Qwest 32 AofA hub, NYC, NY (~$1.8M, list):
• Sentry power 48v 30/60 amp panel ($3,900 list)
• Sentry power 48v 10/25 amp panel ($3,350 list)
• DC/AC converter ($2,200 list)
• Lightwave Secure Terminal Server ($4,800 list)
• Juniper M20 AOA-PR1 (peering router) ($353,000 list)
• Qwest DS3 DCX
• AOA Performance Tester ($4,800 list)
• Cisco 7206 AOA-AR1 (low speed links to MIT & PPPL) ($38,150 list)
• Juniper OC192 optical ring interface (the AOA end of the OC192 to CHI) ($195,000 list)
• Juniper T320 AOA-CR1 (core router) ($1,133,000 list)
• Juniper OC48 optical ring interface (the AOA end of the OC48 to DC-HUB) ($65,000 list)
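Summing the itemized list prices is a quick consistency check on the slide's "~$1.8M" figure and shows where the cost is concentrated. The prices are transcribed from the slide; the Qwest DS3 DCX has no listed price and is left out.

```python
# List prices (USD) transcribed from the slide; the Qwest DS3 DCX has no listed price.
LIST_PRICES_USD = {
    "Sentry power 48v 30/60 amp panel": 3_900,
    "Sentry power 48v 10/25 amp panel": 3_350,
    "DC/AC converter": 2_200,
    "Lightwave Secure Terminal Server": 4_800,
    "Juniper M20 AOA-PR1 (peering router)": 353_000,
    "AOA Performance Tester": 4_800,
    "Cisco 7206 AOA-AR1": 38_150,
    "Juniper OC192 optical ring interface": 195_000,
    "Juniper T320 AOA-CR1 (core router)": 1_133_000,
    "Juniper OC48 optical ring interface": 65_000,
}

total = sum(LIST_PRICES_USD.values())
router_share = (LIST_PRICES_USD["Juniper T320 AOA-CR1 (core router)"]
                + LIST_PRICES_USD["Juniper M20 AOA-PR1 (peering router)"]) / total

print(f"Total list price: ${total:,}")                  # $1,803,200
print(f"Core + peering router chassis: {router_share:.0%}")  # 82%
```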

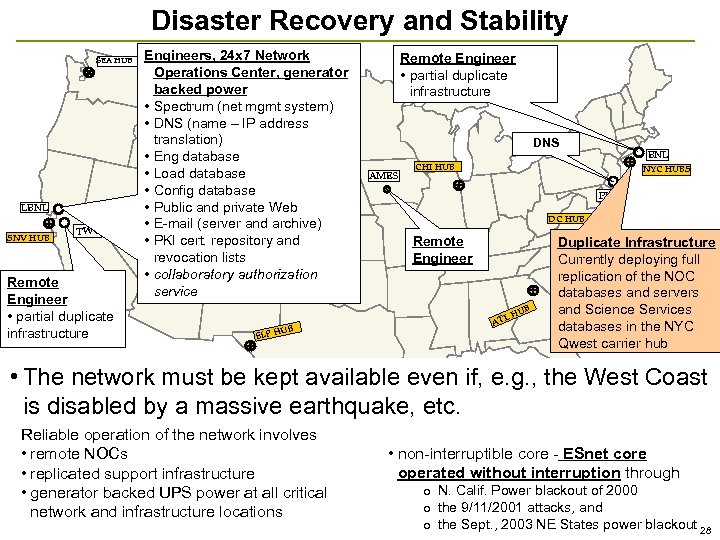

Disaster Recovery and Stability
(Map: the LBNL NOC, ESnet hubs (SEA, SNV, ALB, ELP, ATL, CHI, NYC, DC), and sites such as AMES, BNL, PPPL, and TWC, showing remote engineers, partial duplicate infrastructure, and duplicate infrastructure at the NYC hubs.)
• LBNL: engineers, 24 x 7 Network Operations Center, generator-backed power
  o Spectrum (network management system)
  o DNS (name to IP address translation)
  o Engineering database, load database, and configuration database
  o Public and private Web
  o E-mail (server and archive)
  o PKI certificate repository and revocation lists
  o Collaboratory authorization service
• Remote engineers, two of them with partial duplicate infrastructure
• Duplicate infrastructure: currently deploying full replication of the NOC databases and servers and Science Services databases in the NYC Qwest carrier hub
• The network must be kept available even if, e.g., the West Coast is disabled by a massive earthquake
• Reliable operation of the network involves
  o remote NOCs
  o replicated support infrastructure
  o generator-backed UPS power at all critical network and infrastructure locations
  o a non-interruptible core: the ESnet core operated without interruption through
    - the N. California power blackout of 2000,
    - the 9/11/2001 attacks, and
    - the Aug. 2003 Northeast States power blackout

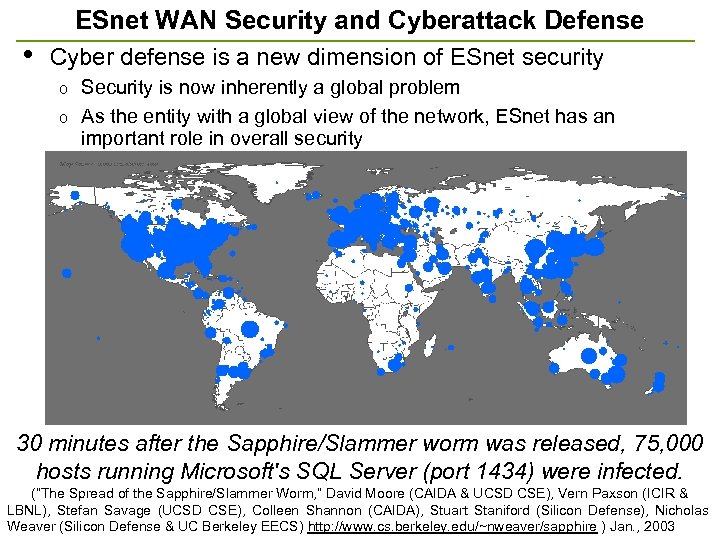

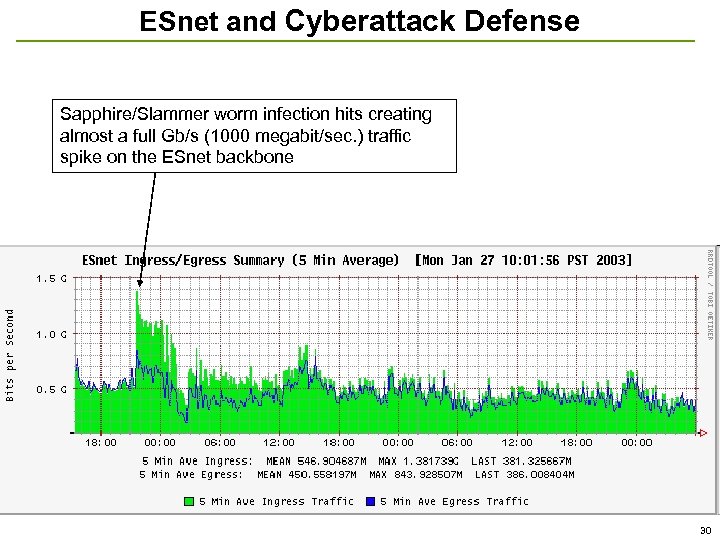

ESnet WAN Security and Cyberattack Defense
• Cyber defense is a new dimension of ESnet security
  o Security is now inherently a global problem
  o As the entity with a global view of the network, ESnet has an important role in overall security
• 30 minutes after the Sapphire/Slammer worm was released in January 2003, 75,000 hosts running Microsoft's SQL Server (UDP port 1434) were infected. ("The Spread of the Sapphire/Slammer Worm," David Moore (CAIDA & UCSD CSE), Vern Paxson (ICIR & LBNL), Stefan Savage (UCSD CSE), Colleen Shannon (CAIDA), Stuart Staniford (Silicon Defense), Nicholas Weaver (Silicon Defense & UC Berkeley EECS), http://www.cs.berkeley.edu/~nweaver/sapphire)

ESnet and Cyberattack Defense
The Sapphire/Slammer worm infection hits, creating a traffic spike of almost a full Gb/s (1000 megabit/sec.) on the ESnet backbone.

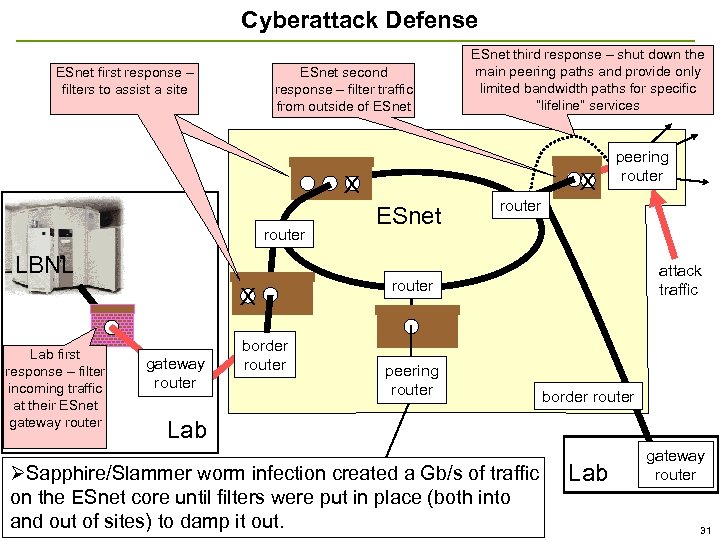

Cyberattack Defense
(Diagram: attack traffic enters through ESnet peering routers, crosses the ESnet core, and reaches a Lab's gateway and border routers; filter points are marked X at each stage.)
• Lab first response: filter incoming traffic at their ESnet gateway router
• ESnet first response: filters to assist a site
• ESnet second response: filter traffic from outside of ESnet
• ESnet third response: shut down the main peering paths and provide only limited bandwidth paths for specific "lifeline" services
→ The Sapphire/Slammer worm infection created about 1 Gb/s of traffic on the ESnet core until filters were put in place (both into and out of sites) to damp it out.
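The filtering responses can be modeled as predicates applied to packets. This is a toy sketch, not router configuration: the `Packet` type, the rule functions, and the sample traffic are hypothetical, and real filters run in router hardware. The first rule drops UDP traffic to port 1434, the port Sapphire/Slammer targeted; the second mimics the "lifeline" response by dropping everything except an explicit list of services.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:  # hypothetical, minimal packet header
    src: str
    dst: str
    protocol: str  # "tcp" or "udp"
    dst_port: int


def block_slammer(pkt: Packet) -> bool:
    """Drop rule: UDP traffic to port 1434 (the SQL Server port the worm targeted)."""
    return pkt.protocol == "udp" and pkt.dst_port == 1434


def lifeline_only(allowed):
    """Third-response style rule: drop everything except listed (protocol, port) services."""
    return lambda pkt: (pkt.protocol, pkt.dst_port) not in allowed


def apply_filter(packets, should_drop):
    """Split packets into (forwarded, dropped) lists according to a drop predicate."""
    forwarded, dropped = [], []
    for pkt in packets:
        (dropped if should_drop(pkt) else forwarded).append(pkt)
    return forwarded, dropped


# Illustrative traffic using documentation address ranges (RFC 5737).
traffic = [
    Packet("198.51.100.7", "192.0.2.10", "udp", 1434),  # worm probe
    Packet("198.51.100.8", "192.0.2.11", "tcp", 80),    # Web request
    Packet("198.51.100.9", "192.0.2.12", "udp", 53),    # DNS query
]
fwd, drop = apply_filter(traffic, block_slammer)
print(len(fwd), len(drop))  # 2 1
fwd, drop = apply_filter(traffic, lifeline_only({("udp", 53)}))
print(len(fwd), len(drop))  # 1 2
```

The targeted rule preserves normal traffic, while the lifeline rule trades most connectivity for protection, which is why it is the last resort in the escalation above.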

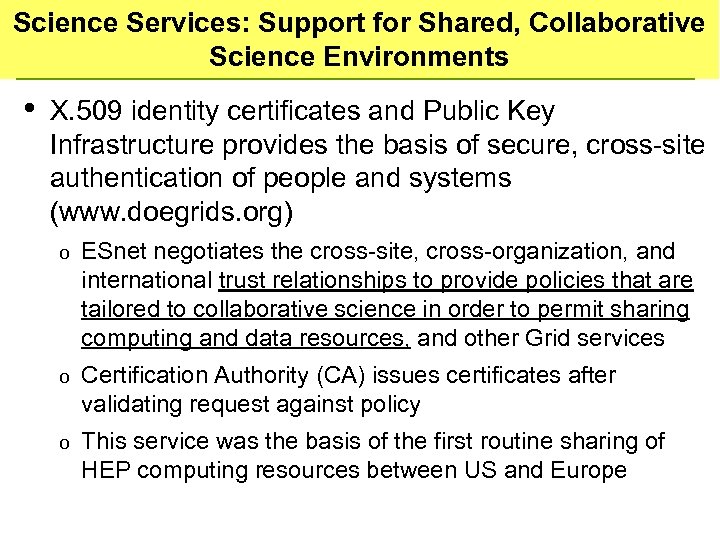

Science Services: Support for Shared, Collaborative Science Environments
• X.509 identity certificates and Public Key Infrastructure provide the basis of secure, cross-site authentication of people and systems (www.doegrids.org)
  o ESnet negotiates the cross-site, cross-organization, and international trust relationships to provide policies that are tailored to collaborative science, in order to permit sharing of computing and data resources and other Grid services
  o The Certification Authority (CA) issues certificates after validating each request against policy
  o This service was the basis of the first routine sharing of HEP computing resources between the US and Europe
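The slide says the CA issues certificates only after validating each request against policy. The check below is a toy model of that gatekeeping step only: it does not create or sign X.509 certificates, and the request fields, the organization list, and the registration-agent approval flag are all hypothetical, not the DOEGrids policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CertRequest:  # hypothetical request fields
    common_name: str
    organization: str
    ra_approved: bool  # hypothetical: a registration agent has verified the requester


# Hypothetical policy: organizations this CA has agreed to certify.
POLICY_ORGANIZATIONS = frozenset({"LBNL", "ANL", "FNAL", "SLAC"})


def policy_violations(req: CertRequest) -> list:
    """Return the reasons a request fails policy; an empty list means it may be issued."""
    problems = []
    if not req.common_name.strip():
        problems.append("missing common name")
    if req.organization not in POLICY_ORGANIZATIONS:
        problems.append(f"organization {req.organization!r} is not covered by this CA's policy")
    if not req.ra_approved:
        problems.append("identity not verified by a registration agent")
    return problems


ok = CertRequest("Jane Scientist", "LBNL", ra_approved=True)
bad = CertRequest("Anonymous", "ExampleCorp", ra_approved=False)
print(policy_violations(ok))   # []
print(policy_violations(bad))  # two violations
```

Returning every violation rather than stopping at the first one mirrors how a human reviewer would explain a rejected request.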

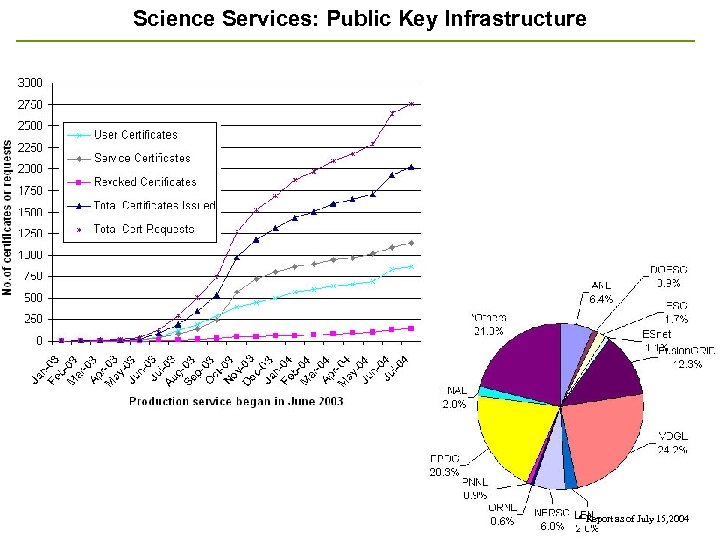

Science Services: Public Key Infrastructure
(Report as of July 15, 2004.)

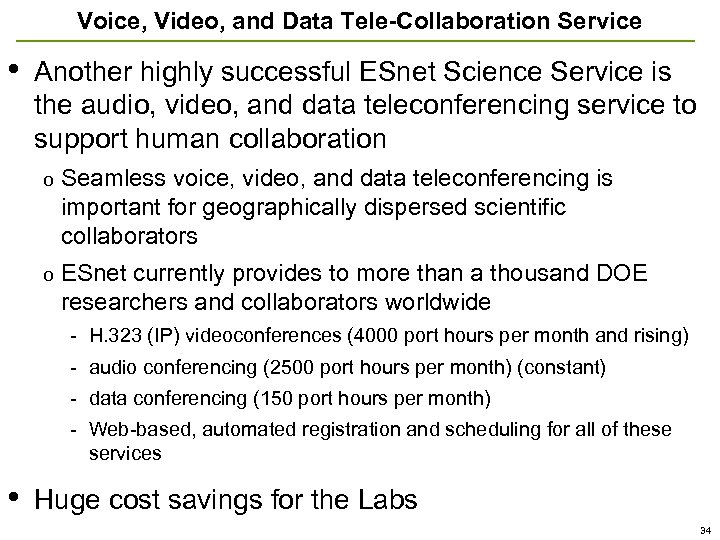

Voice, Video, and Data Tele-Collaboration Service
• Another highly successful ESnet Science Service is the audio, video, and data teleconferencing service to support human collaboration
  o Seamless voice, video, and data teleconferencing is important for geographically dispersed scientific collaborators
  o ESnet currently provides, to more than a thousand DOE researchers and collaborators worldwide:
    - H.323 (IP) videoconferences (4000 port hours per month and rising)
    - audio conferencing (2500 port hours per month, constant)
    - data conferencing (150 port hours per month)
    - Web-based, automated registration and scheduling for all of these services
• Huge cost savings for the Labs

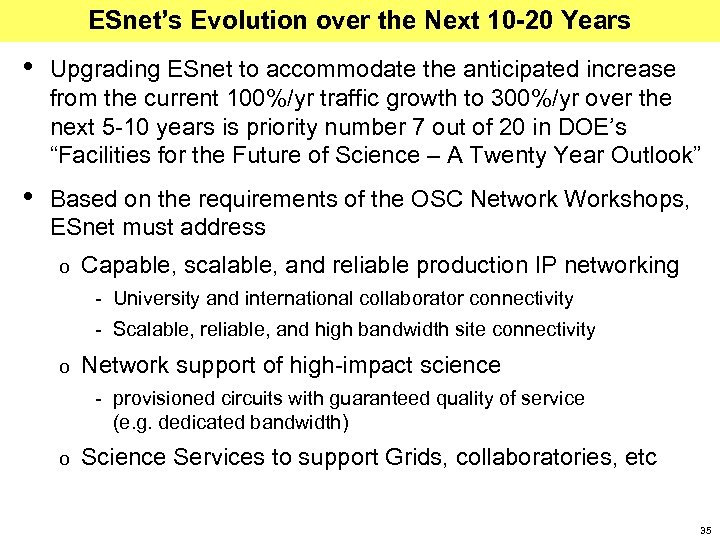

ESnet's Evolution over the Next 10-20 Years
• Upgrading ESnet to accommodate the anticipated increase from the current 100%/yr traffic growth to 300%/yr over the next 5-10 years is priority number 7 out of 20 in DOE's "Facilities for the Future of Science: A Twenty Year Outlook"
• Based on the requirements of the OSC Network Workshops, ESnet must address
  o Capable, scalable, and reliable production IP networking
    - University and international collaborator connectivity
    - Scalable, reliable, and high-bandwidth site connectivity
  o Network support of high-impact science
    - provisioned circuits with guaranteed quality of service (e.g., dedicated bandwidth)
  o Science Services to support Grids, collaboratories, etc.

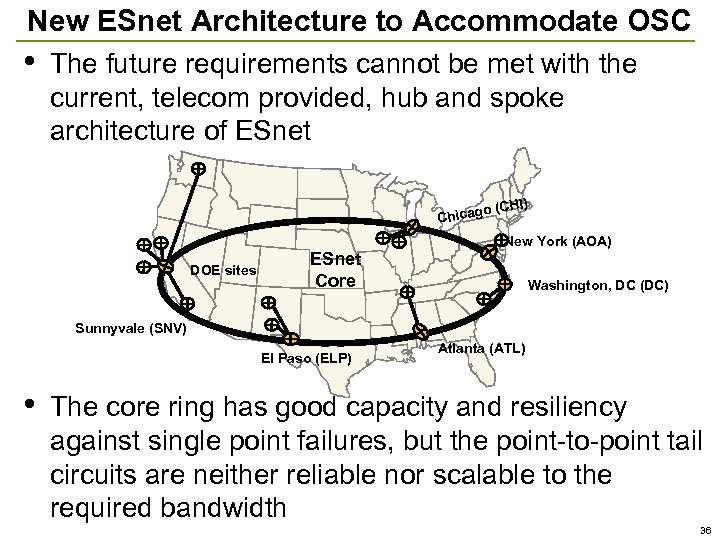

New ESnet Architecture to Accommodate OSC
• The future requirements cannot be met with the current, telecom-provided, hub-and-spoke architecture of ESnet
(Diagram: the ESnet core ring through Sunnyvale (SNV), Chicago (CHI), New York (AOA), Washington, DC (DC), Atlanta (ATL), and El Paso (ELP), with DOE sites attached by point-to-point tail circuits.)
• The core ring has good capacity and resiliency against single point failures, but the point-to-point tail circuits are neither reliable nor scalable to the required bandwidth

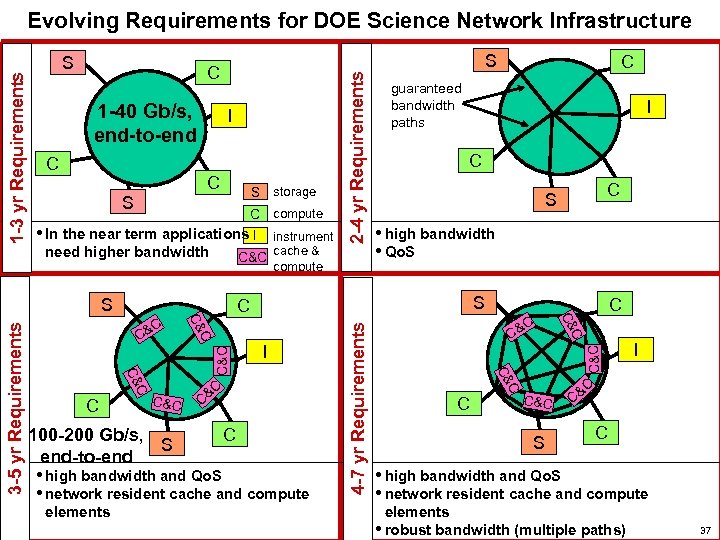

Evolving Requirements for DOE Science Network Infrastructure
(Diagram: successive stages connecting storage (S), compute (C), instrument (I), and cache & compute (C&C) elements.)
• 1-3 yr requirements: in the near term, applications need higher bandwidth
• 2-4 yr requirements: high bandwidth and QoS
• 3-5 yr requirements: high bandwidth and QoS; network resident cache and compute elements
• 4-7 yr requirements: high bandwidth and QoS; network resident cache and compute elements; robust bandwidth (multiple paths)
• End-to-end bandwidth annotations: 1-40 Gb/s; 100-200 Gb/s; guaranteed bandwidth paths

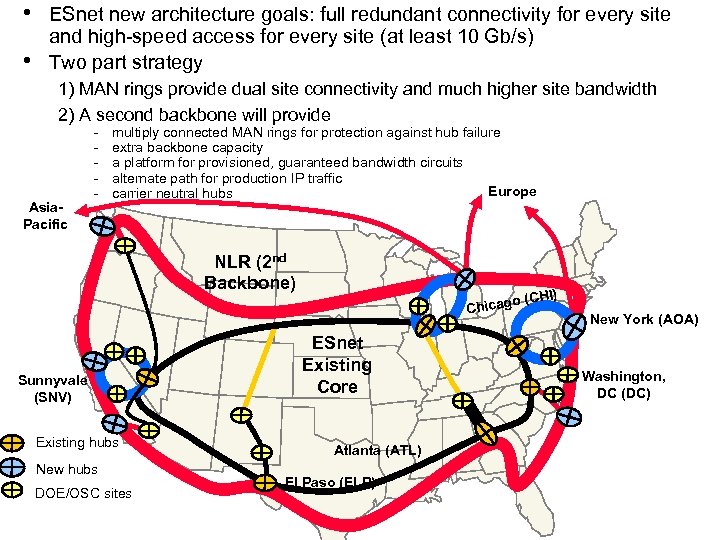

ESnet new architecture goals: full redundant connectivity for every site and high-speed access for every site (at least 10 Gb/s)
• Two-part strategy
  1) MAN rings provide dual site connectivity and much higher site bandwidth
  2) A second backbone will provide
    - multiply connected MAN rings for protection against hub failure
    - extra backbone capacity
    - a platform for provisioned, guaranteed bandwidth circuits
    - an alternate path for production IP traffic
(Diagram: the existing ESnet core through Sunnyvale (SNV), Chicago (CHI), New York (AOA), Washington, DC (DC), Atlanta (ATL), and El Paso (ELP); a second backbone on NLR; existing hubs, new hubs, and carrier-neutral hubs; DOE/OSC sites; and connections to Asia-Pacific and Europe.)

Conclusions
• ESnet is an infrastructure that is critical to DOE's science mission
• Focused on the Office of Science Labs, but serves many other parts of DOE
• ESnet is working hard to meet the current and future networking needs of DOE mission science in several ways:
  o Evolving a new high-speed, high-reliability, leveraged architecture
  o Championing several new initiatives that will keep ESnet's contributions relevant to the needs of our community

Reference: Planning Workshops
• High Performance Network Planning Workshop, August 2002: http://www.doecollaboratory.org/meetings/hpnpw
• DOE Workshop on Ultra High-Speed Transport Protocols and Network Provisioning for Large-Scale Science Applications, April 2003: http://www.csm.ornl.gov/ghpn/wk2003
• Science Case for Large Scale Simulation, June 2003: http://www.pnl.gov/scales/
• DOE Science Networking Roadmap Meeting, June 2003: http://www.es.net/hypertext/welcome/pr/Roadmap/index.html
• Workshop on the Road Map for the Revitalization of High End Computing, June 2003: http://www.cra.org/Activities/workshops/nitrd; http://www.sc.doe.gov/ascr/20040510_hecrtf.pdf (public report)
• ASCR Strategic Planning Workshop, July 2003: http://www.fp-mcs.anl.gov/ascr-july03spw
• Planning Workshops, Office of Science Data-Management Strategy, March & May 2004: http://www-conf.slac.stanford.edu/dmw2004 (report coming soon)
