74581a1c2ee14424a05e92e82e9d90bd.ppt

- Number of slides: 27

The EN dataset

Simon Good and Claire Bartholomew

© Crown copyright Met Office

What is the EN dataset?

- Climate dataset of temperature and salinity profiles
- Data are quality controlled using a suite of automatic checks
- Monthly objective analyses are created from the data (and used in quality control)
- The EN name originates from the European projects that funded the initial versions
- The current publicly available version of the EN dataset is EN3 (v2a) (see www.metoffice.gov.uk/hadobs/en3)
- A new version is being prepared (EN4; Good et al. 2013, submitted)
- This presentation mostly covers the new version (EN4)

The EN dataset

Table of Contents

- Data sources
- Quality control procedures
- Data format and dissemination
- Data users
- Performance of quality control

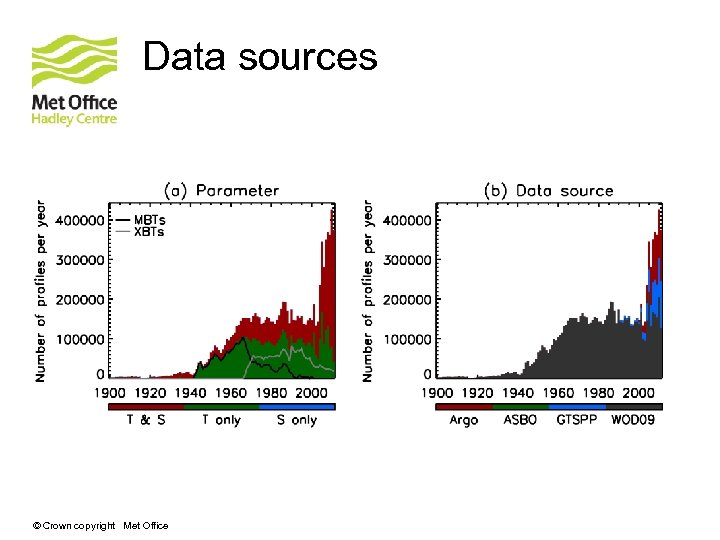

Data sources

Data sources

- We use data from:
  - World Ocean Database 2009 (WOD09)
  - Global Temperature-Salinity Profile Program (GTSPP)
  - Argo
  - Arctic Synoptic Basinwide Observations project
- The main data source is WOD09
- Monthly updates are performed using data from GTSPP and Argo
- Data from any profiling instrument are used

Data sources

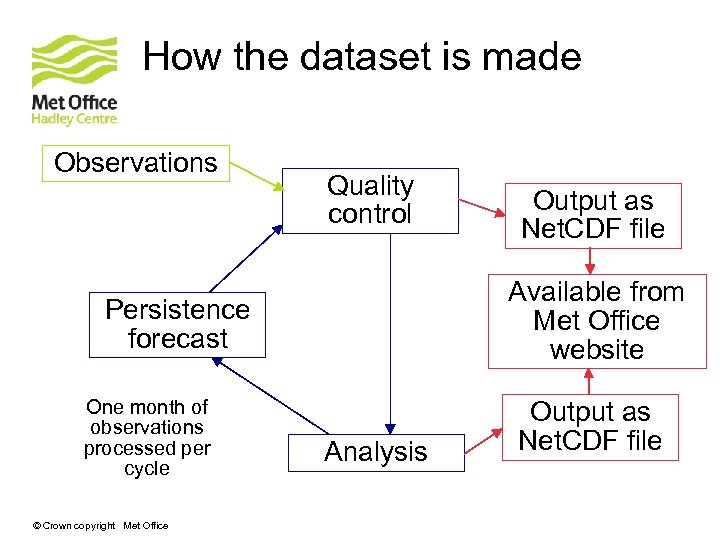

Data processing and quality control

How the dataset is made

- One month of observations is processed per cycle
- Diagram labels: Observations; Persistence forecast; Quality control (output as NetCDF file); Analysis (output as NetCDF file)
- Available from the Met Office website

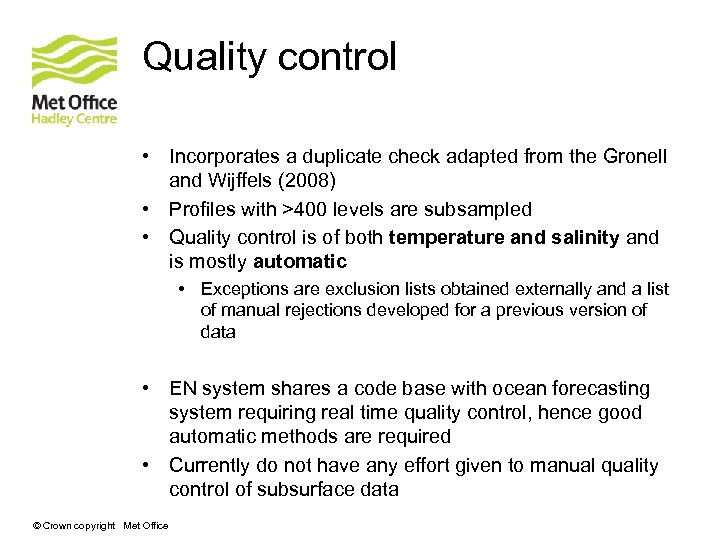

Quality control

- Incorporates a duplicate check adapted from Gronell and Wijffels (2008)
- Profiles with >400 levels are subsampled
- Quality control covers both temperature and salinity and is mostly automatic
- The exceptions are exclusion lists obtained externally and a list of manual rejections developed for a previous version of the data
- The EN system shares a code base with an ocean forecasting system that requires real-time quality control, hence good automatic methods are required
- No effort is currently devoted to manual quality control of subsurface data

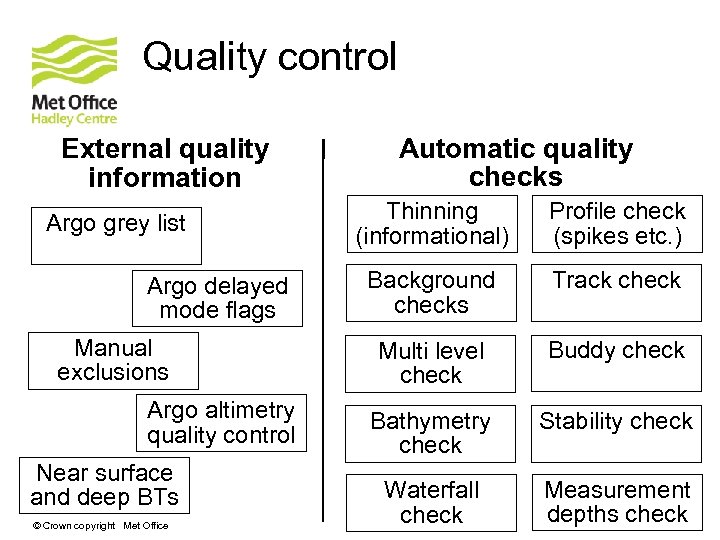

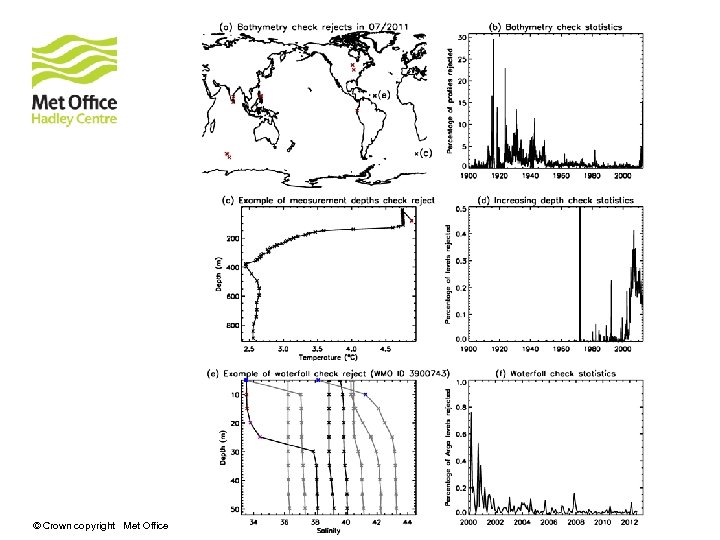

Quality control

External quality information:

- Argo grey list
- Argo delayed mode flags
- Manual exclusions
- Argo altimetry quality control
- Near surface and deep BTs

Automatic quality checks:

- Thinning (informational)
- Profile check (spikes etc.)
- Background checks
- Track check
- Multi level check
- Buddy check
- Bathymetry check
- Stability check
- Waterfall check
- Measurement depths check

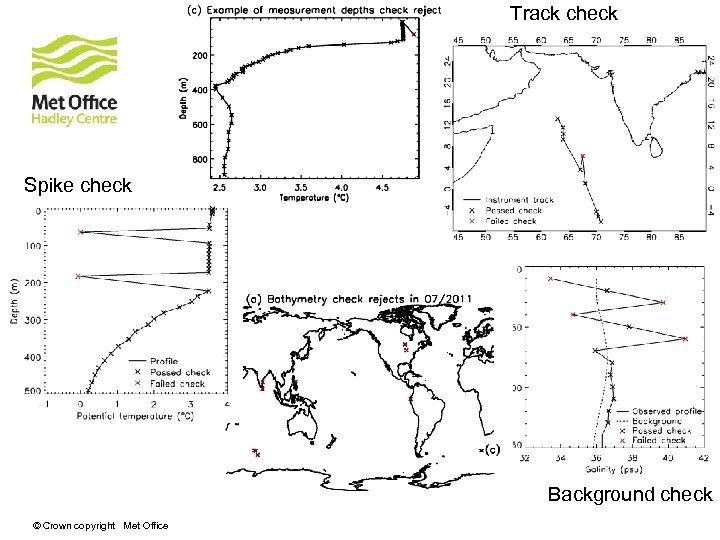

Panel titles: Track check, Spike check, Background check

Quality control code

- Written in Fortran
- Owing to the way the data are processed and stored, it can require a lot of memory if there are a lot of profiles and/or levels
- Profiles with more than 400 levels are thinned to 400 levels
- A month of data takes from a few seconds to ~25 minutes to run (depending on data quantity) on a desktop machine
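The thinning step could be sketched as follows. The slides do not say how the retained levels are chosen, so this minimal Python sketch assumes evenly spaced subsampling that always keeps the first and last level; `thin_profile` and `MAX_LEVELS` are illustrative names and are not taken from the EN Fortran code.

```python
MAX_LEVELS = 400  # threshold quoted on the slide


def thin_profile(depths, values, max_levels=MAX_LEVELS):
    """Return (depths, values, thinned) with at most max_levels levels.

    Assumption: evenly spaced index subsampling (requires max_levels >= 2).
    The real EN thinning scheme may select levels differently.
    """
    n = len(depths)
    if n != len(values):
        raise ValueError("depths and values must have the same length")
    if n <= max_levels:
        # Nothing to do: the profile is already small enough.
        return list(depths), list(values), False
    # max_levels evenly spaced indices running from 0 to n - 1 inclusive.
    idx = [round(i * (n - 1) / (max_levels - 1)) for i in range(max_levels)]
    return [depths[i] for i in idx], [values[i] for i in idx], True
```

The third return value mirrors the idea of an informational thinning flag: it records that levels were dropped without marking the profile as rejected.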

Data availability and use

- Each month of data is added around the middle of the following month
- Data are provided on the Met Office website (current version at www.metoffice.gov.uk/hadobs/en3)
- Uses are varied:
  - Gridded products
  - Monitoring ocean conditions
  - Time series of ocean heat content
  - Initialising seasonal/decadal predictions
  - Ocean reanalysis
  - Comparisons to climate model data

Comparison between results from the EN system and the QuOTA dataset

Comparison between QuOTA and EN processing

- For profiles with between 2 and 400 temperature levels
- Results are preliminary: we are still working on understanding the quality control flags and the impact that the differences have
- We count the number of profiles with any levels rejected
- Level-by-level comparison is difficult
- EN system = automated system
- CSIRO system = semi-automated system
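The profile-level counting described above can be sketched in Python. This is only an illustration under stated assumptions: the per-level flags are taken to be booleans (True = rejected) held in dictionaries keyed by a shared profile identifier, and the names `any_rejected` and `compare_systems` are hypothetical rather than part of either system.

```python
from collections import Counter


def any_rejected(level_flags):
    """True if at least one level in the profile is flagged as rejected."""
    return any(level_flags)


def compare_systems(en_flags, csiro_flags):
    """Cross-tabulate profile-level outcomes for profiles present in both inputs.

    en_flags, csiro_flags: dict mapping profile id -> list of per-level
    booleans (True = rejected). The two lists for a profile may differ in
    length, which is one reason a level-by-level comparison is hard.
    """
    table = Counter()
    for pid in en_flags.keys() & csiro_flags.keys():
        key = (any_rejected(en_flags[pid]), any_rejected(csiro_flags[pid]))
        table[key] += 1
    return {
        "rejected_by_both": table[(True, True)],
        "rejected_by_en_only": table[(True, False)],
        "rejected_by_csiro_only": table[(False, True)],
        "passed_by_both": table[(False, False)],
    }
```

Reducing each profile to a single "any level rejected" outcome sidesteps the need to match individual levels between the two systems.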

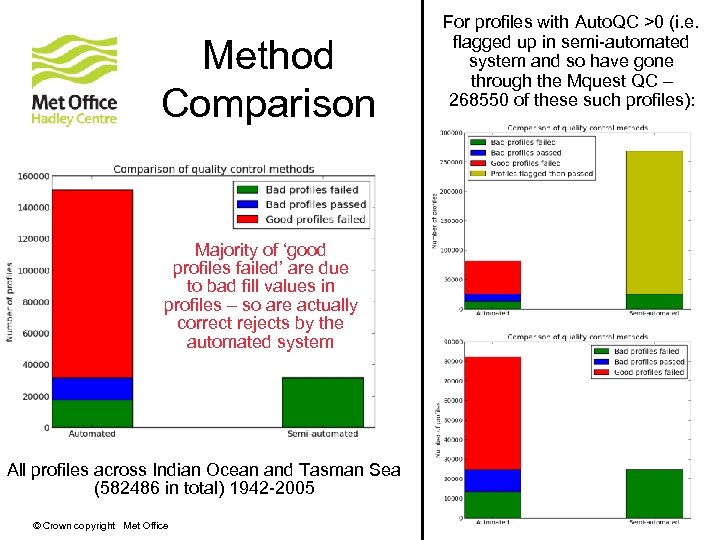

Method Comparison

- All profiles across the Indian Ocean and Tasman Sea, 1942-2005 (582486 in total)
- The majority of ‘good profiles failed’ are due to bad fill values in the profiles, so they are actually correct rejects by the automated system
- For profiles with AutoQC > 0 (i.e. flagged in the semi-automated system and so passed through the Mquest QC; 268550 such profiles):

Proportion of failed levels

- For all profiles that have one or more temperature levels rejected, the average proportion of levels rejected per profile is:
  - for the automated (MO) system: 0.025
  - for the semi-automated (CSIRO) system: 0.169
- However, when looking over all profiles, the difference between the two systems is much smaller:
  - for the automated (MO) system: 0.0056
  - for the semi-automated (CSIRO) system: 0.0065
- The semi-automated system has more profiles with all levels passed, which lowers its average when looking at all profiles

This analysis is done over 4 months of all profiles (not just ones with AutoQC > 0).
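The relationship between the two sets of averages can be made explicit with a small calculation. It assumes that the all-profile figure is the mean of per-profile proportions, with profiles that have no rejected levels contributing zero; under that assumption the all-profile mean equals the mean over rejected profiles multiplied by the fraction of profiles with any reject. The implied fractions below are derived from the quoted numbers and are not reported in the slides; `implied_fraction_with_rejects` is an illustrative name.

```python
def implied_fraction_with_rejects(mean_over_all, mean_over_rejected):
    """Fraction of profiles with at least one rejected level.

    Assumes mean_over_all = fraction_with_rejects * mean_over_rejected,
    i.e. profiles without rejects contribute a proportion of zero.
    """
    return mean_over_all / mean_over_rejected


# Averages quoted on the slide.
mo = implied_fraction_with_rejects(0.0056, 0.025)
csiro = implied_fraction_with_rejects(0.0065, 0.169)
print(f"MO: {mo:.3f}, CSIRO: {csiro:.3f}")  # prints "MO: 0.224, CSIRO: 0.038"
```

If the assumption holds, the automated system flags at least one level in roughly a fifth of profiles but rejects few levels in each, while the semi-automated system flags far fewer profiles and rejects a larger share of levels in those it does flag.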

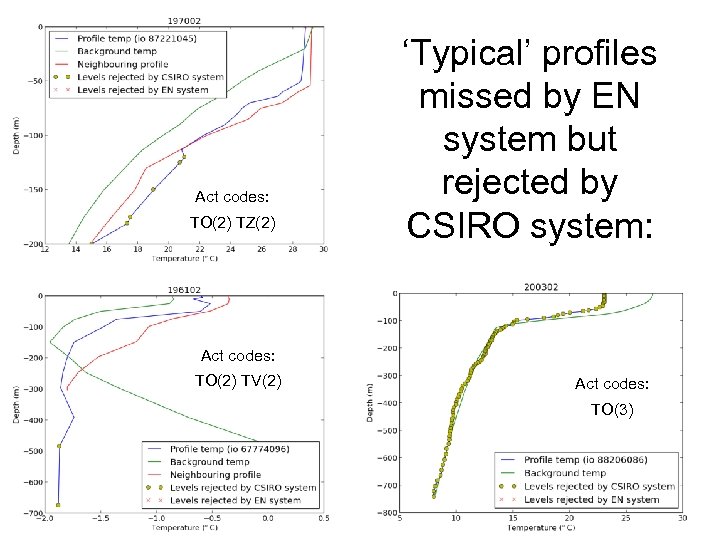

‘Typical’ profiles missed by EN system but rejected by CSIRO system:

- Act codes: TO(2) TZ(2)
- Act codes: TO(2) TV(2)
- Act codes: TO(3)

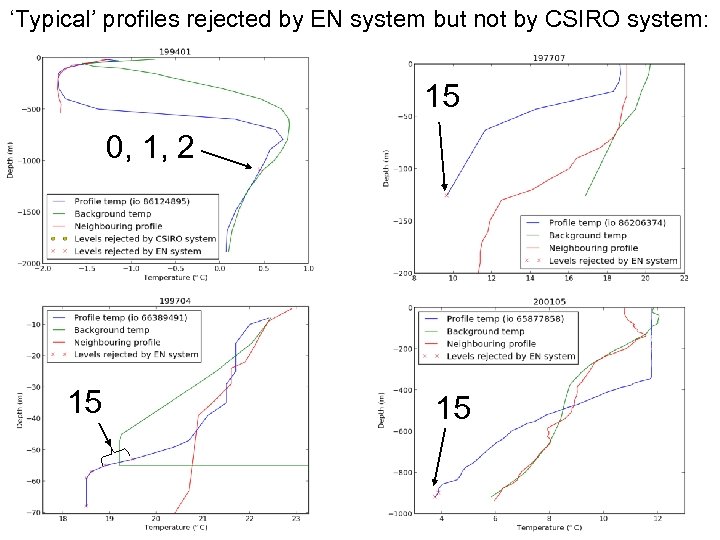

‘Typical’ profiles rejected by EN system but not by CSIRO system:

Panel labels: 15; 0, 1, 2; 15; 15

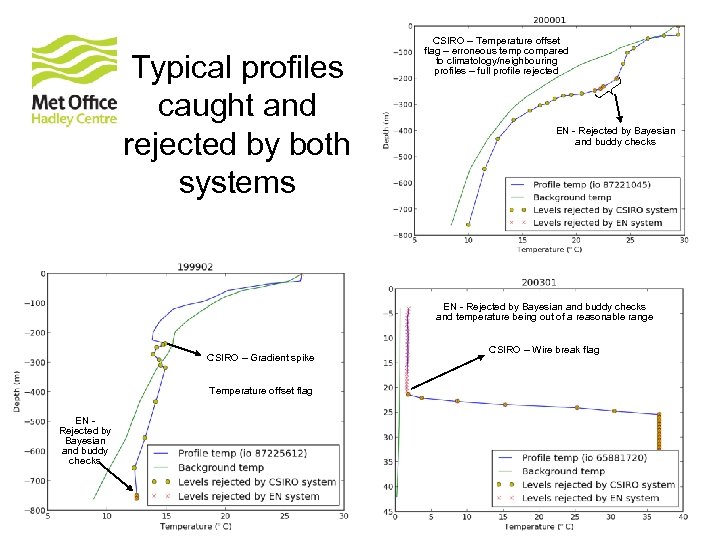

Typical profiles caught and rejected by both systems

- CSIRO: Temperature offset flag (erroneous temperature compared to climatology/neighbouring profiles; full profile rejected)
- EN: Rejected by Bayesian and buddy checks and by temperature being out of a reasonable range
- CSIRO: Gradient spike, Temperature offset flag
- EN: Rejected by Bayesian and buddy checks
- CSIRO: Wire break flag

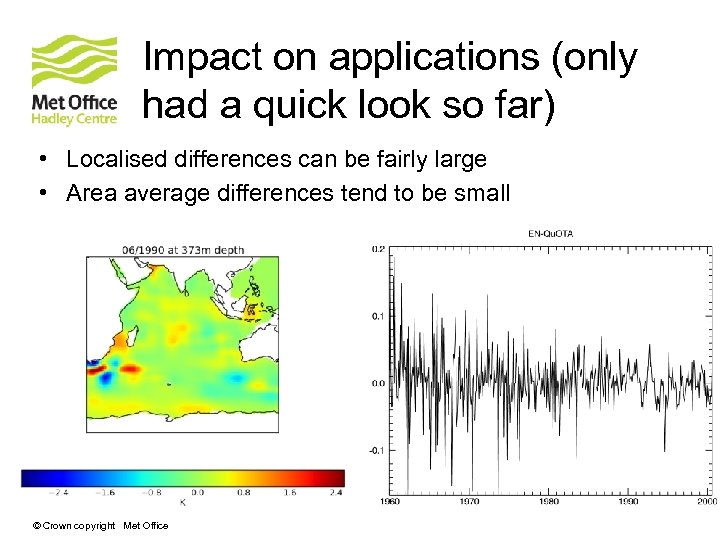

Impact on applications (only had a quick look so far)

- Localised differences can be fairly large
- Area average differences tend to be small

Questions and answers

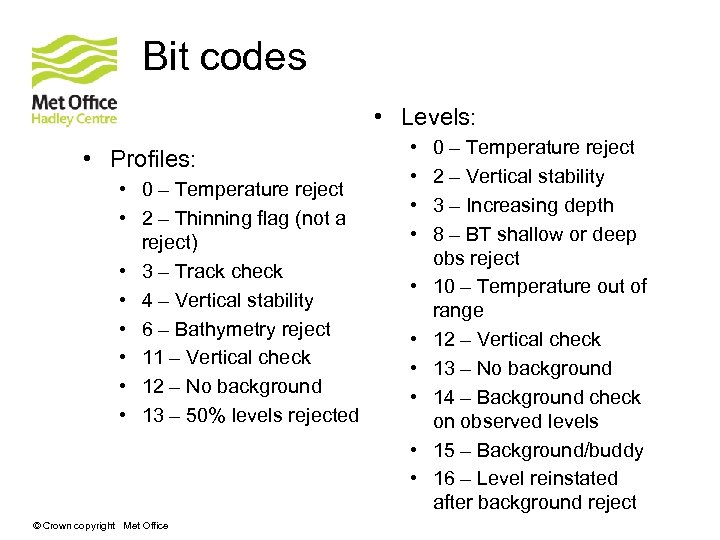

Bit codes

Profiles:

- 0 – Temperature reject
- 2 – Thinning flag (not a reject)
- 3 – Track check
- 4 – Vertical stability
- 6 – Bathymetry reject
- 11 – Vertical check
- 12 – No background
- 13 – 50% levels rejected

Levels:

- 0 – Temperature reject
- 2 – Vertical stability
- 3 – Increasing depth
- 8 – BT shallow or deep obs reject
- 10 – Temperature out of range
- 12 – Vertical check
- 13 – No background
- 14 – Background check on observed levels
- 15 – Background/buddy
- 16 – Level reinstated after background reject

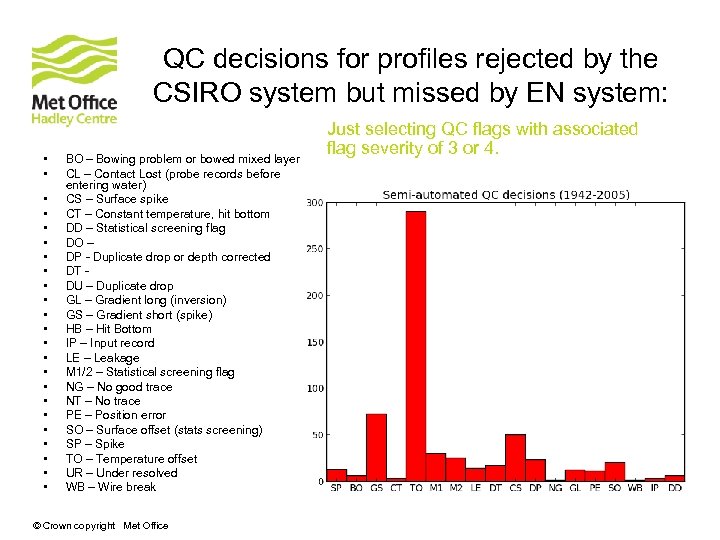

QC decisions for profiles rejected by the CSIRO system but missed by EN system:

- BO – Bowing problem or bowed mixed layer
- CL – Contact Lost (probe records before entering water)
- CS – Surface spike
- CT – Constant temperature, hit bottom
- DD – Statistical screening flag
- DO –
- DP – Duplicate drop or depth corrected
- DT
- DU – Duplicate drop
- GL – Gradient long (inversion)
- GS – Gradient short (spike)
- HB – Hit Bottom
- IP – Input record
- LE – Leakage
- M1/2 – Statistical screening flag
- NG – No good trace
- NT – No trace
- PE – Position error
- SO – Surface offset (stats screening)
- SP – Spike
- TO – Temperature offset
- UR – Under resolved
- WB – Wire break

Only QC flags with an associated flag severity of 3 or 4 are selected.

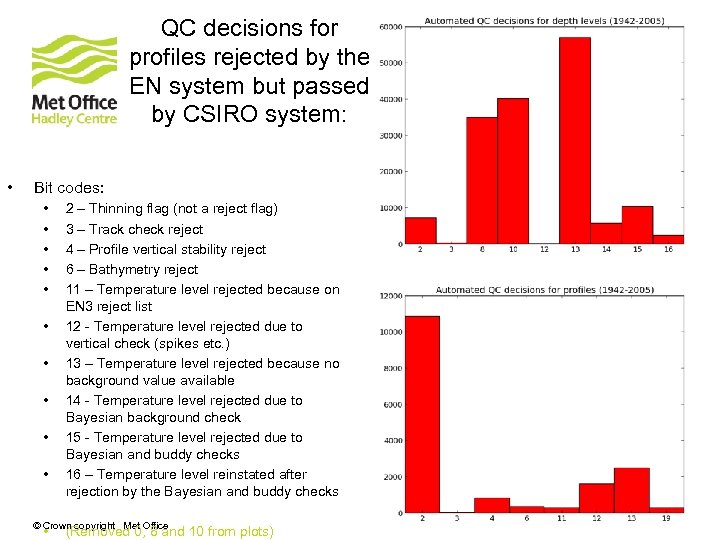

QC decisions for profiles rejected by the EN system but passed by CSIRO system:

Bit codes:

- 2 – Thinning flag (not a reject flag)
- 3 – Track check reject
- 4 – Profile vertical stability reject
- 6 – Bathymetry reject
- 11 – Temperature level rejected because on EN3 reject list
- 12 – Temperature level rejected due to vertical check (spikes etc.)
- 13 – Temperature level rejected because no background value available
- 14 – Temperature level rejected due to Bayesian background check
- 15 – Temperature level rejected due to Bayesian and buddy checks
- 16 – Temperature level reinstated after rejection by the Bayesian and buddy checks

(Removed 0, 8 and 10 from plots)
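The bit codes listed on this slide could be decoded from a stored flag value along the following lines. This is a minimal Python sketch that assumes each flag is held as a non-negative integer in which bit n being set means code n applies; the slides call these "bit codes" but do not describe the storage format. `EN_BIT_CODES` and `decode_flags` are illustrative names, and the table simply copies the slide, which mixes profile-level and level-level codes.

```python
# Bit number -> meaning, copied from the slide.
EN_BIT_CODES = {
    2: "Thinning flag (not a reject flag)",
    3: "Track check reject",
    4: "Profile vertical stability reject",
    6: "Bathymetry reject",
    11: "Temperature level rejected because on EN3 reject list",
    12: "Temperature level rejected due to vertical check (spikes etc.)",
    13: "Temperature level rejected because no background value available",
    14: "Temperature level rejected due to Bayesian background check",
    15: "Temperature level rejected due to Bayesian and buddy checks",
    16: "Temperature level reinstated after rejection by the Bayesian and buddy checks",
}


def decode_flags(flag_value):
    """Return [(bit, meaning), ...] for every known bit set in flag_value.

    Assumption: flag_value is a non-negative integer bitmask.
    Bits without an entry in EN_BIT_CODES are ignored.
    """
    return [
        (bit, meaning)
        for bit, meaning in sorted(EN_BIT_CODES.items())
        if (flag_value >> bit) & 1
    ]
```

For example, `decode_flags((1 << 3) | (1 << 15))` returns the track check and the Bayesian and buddy check entries, in bit order.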

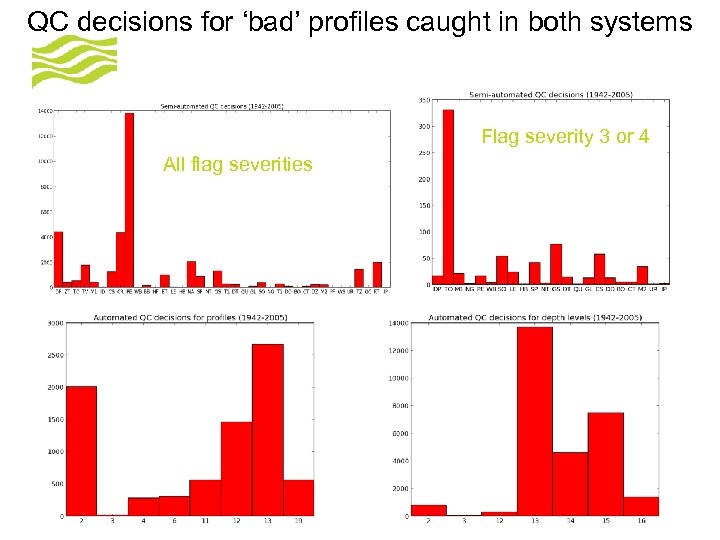

QC decisions for ‘bad’ profiles caught in both systems

Panel titles: Flag severity 3 or 4; All flag severities
