- Number of slides: 31

The CTA Computing Grid Project

Cecile Barbier, Nukri Komin, Sabine Elles, Giovanni Lamanna

LAPP, CNRS/IN2P3, Annecy-le-Vieux

Cecile Barbier - Nukri Komin, EGI User Forum, Vilnius, 11.4.2011

CTA-CG

- CTA Computing Grid
- LAPP, Annecy
  - Giovanni Lamanna, Nukri Komin
  - Cecile Barbier, Sabine Elles
- LUPM, Montpellier
  - Georges Vasileiadis
  - Claudia Lavalley, Luisa Arrabito
- Goal: bring CTA onto the Grid

CTA-CG

- Aim
  - provide a working environment, tools and services for all tasks assigned to the Data Management and Processing Centre
    - simulation
    - data processing
    - storage
    - offline analysis
    - user interface
- Test
  - Grid computing
  - software around Grid computing
- estimate computing needs and requirements
  - requests at Lyon, close contact with DESY Zeuthen

Outline

- CTA and its Data Management and Processing Centre
- current activities:
  - massive Monte Carlo simulations
  - preparation of a meta data base
- short-term plan
  - bring the user onto the Grid and to the data
- ideas for future data management and analysis pipeline
- Note: CTA is in its preparatory phase
  - what is shown here is mostly work in progress and ideas

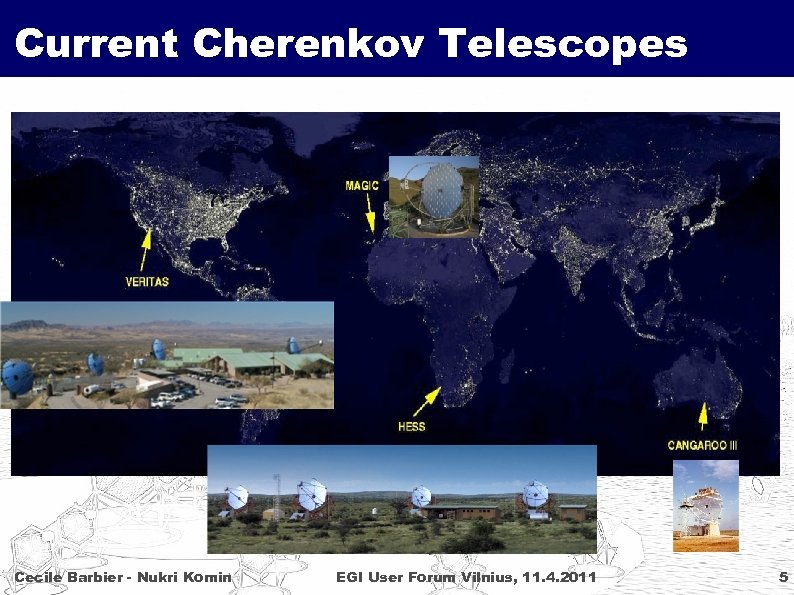

Current Cherenkov Telescopes

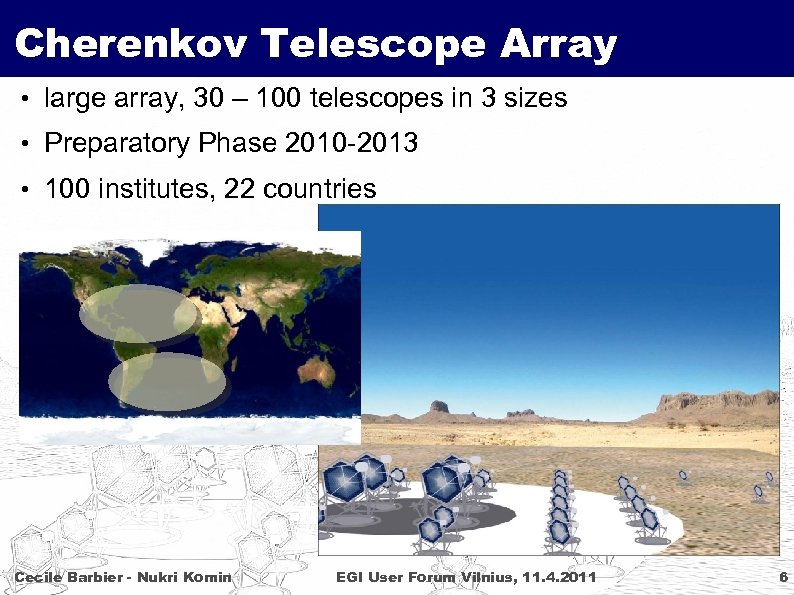

Cherenkov Telescope Array

- large array, 30–100 telescopes in 3 sizes
- Preparatory Phase 2010–2013
- 100 institutes, 22 countries

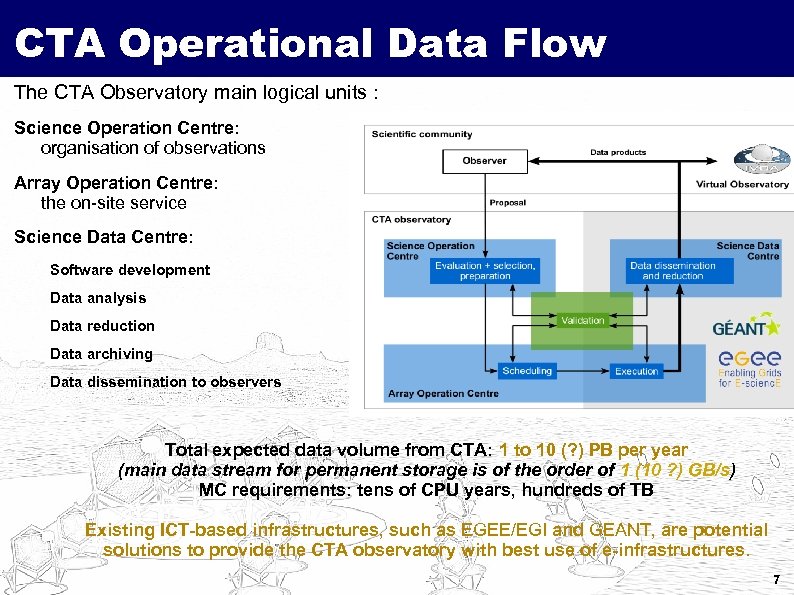

CTA Operational Data Flow

The CTA Observatory main logical units:

- Science Operation Centre: organisation of observations
- Array Operation Centre: the on-site service
- Science Data Centre:
  - software development
  - data analysis
  - data reduction
  - data archiving
  - data dissemination to observers

Total expected data volume from CTA: 1 to 10 (?) PB per year (the main data stream for permanent storage is of the order of 1 (10?) GB/s).

MC requirements: tens of CPU years, hundreds of TB.

Existing ICT-based infrastructures, such as EGEE/EGI and GEANT, are potential solutions to provide the CTA observatory with the best use of e-infrastructures.

CTA Virtual Grid Organisation

- Benefits of the EGEE/EGI Grid
  - institutes can easily provide computing power
    - minimal manpower needed, sites usually already support LHC
  - can be managed centrally (e.g. for massive simulations)
  - distributed but transparent for all users (compare HESS)
- CTA Virtual Organisation: vo.cta.in2p3.fr
  - French name, but open to everyone (renaming almost impossible)
  - VO manager: G. Lamanna @ LAPP

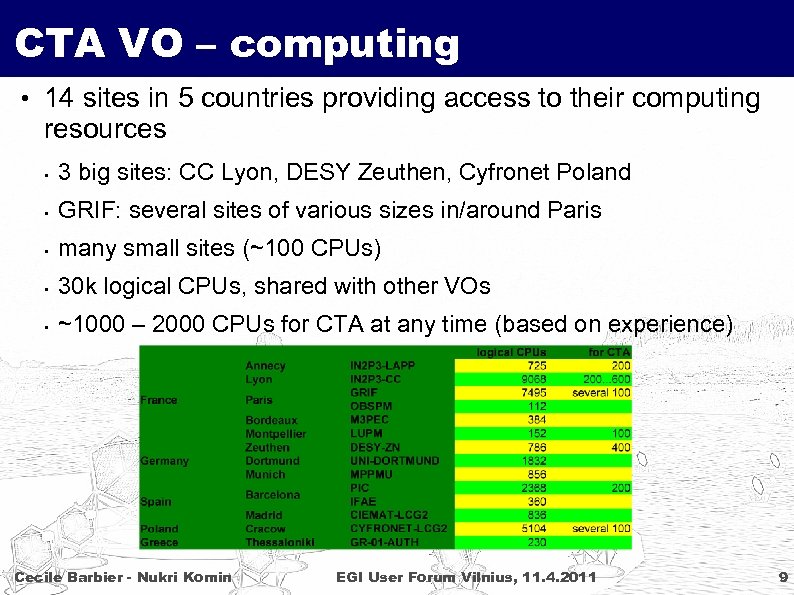

CTA VO – computing

- 14 sites in 5 countries providing access to their computing resources
  - 3 big sites: CC Lyon, DESY Zeuthen, Cyfronet Poland
  - GRIF: several sites of various sizes in/around Paris
  - many small sites (~100 CPUs)
- 30k logical CPUs, shared with other VOs
- ~1000–2000 CPUs for CTA at any time (based on experience)

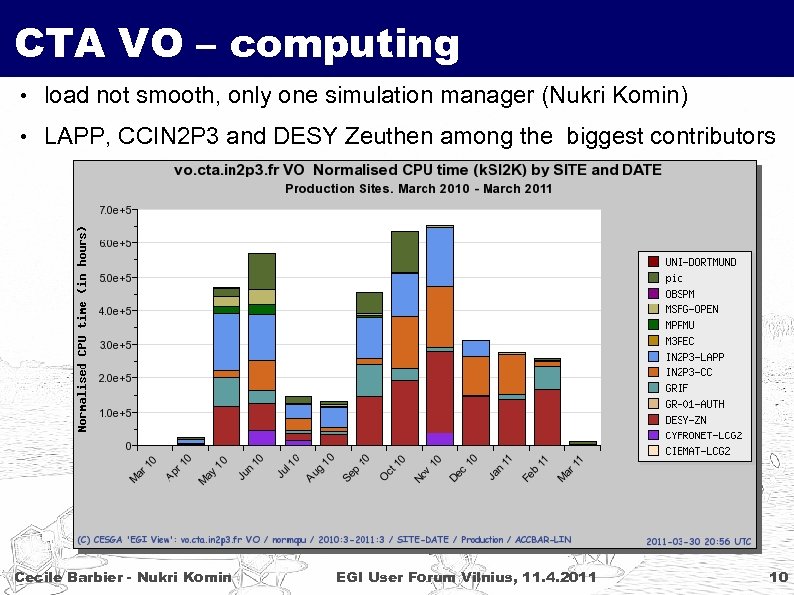

CTA VO – computing

- load not smooth, only one simulation manager (Nukri Komin)
- LAPP, CCIN2P3 and DESY Zeuthen among the biggest contributors

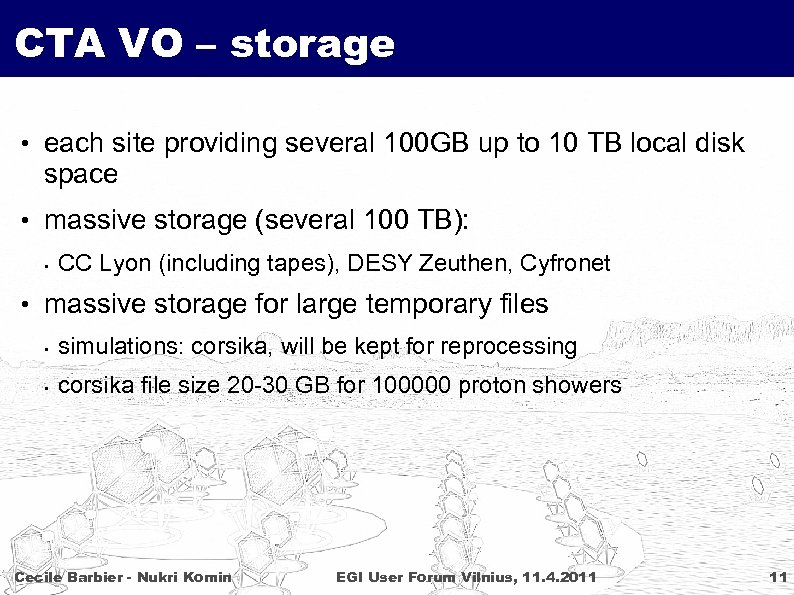

CTA VO – storage

- each site provides several 100 GB up to 10 TB of local disk space
- massive storage (several 100 TB):
  - CC Lyon (including tapes), DESY Zeuthen, Cyfronet
- massive storage for large temporary files
  - simulations: corsika files, will be kept for reprocessing
  - corsika file size 20–30 GB for 100000 proton showers

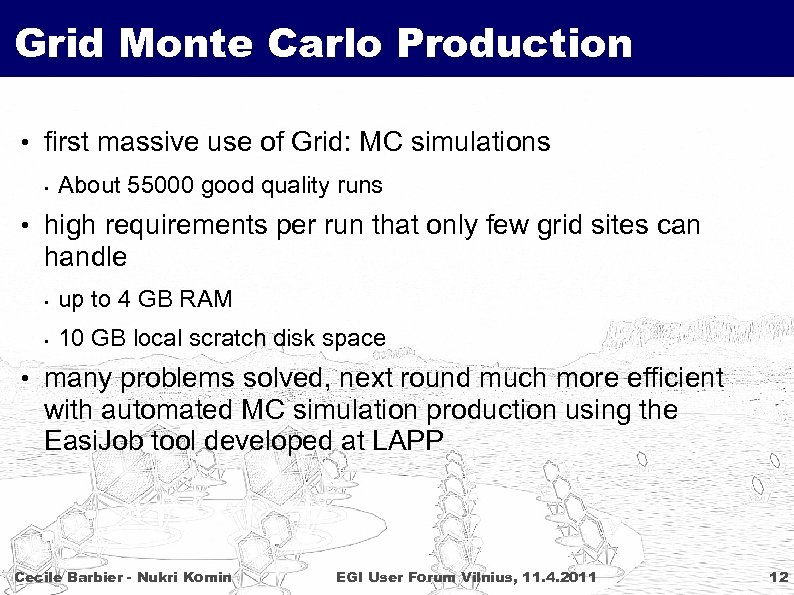

Grid Monte Carlo Production

- first massive use of the Grid: MC simulations
- about 55000 good-quality runs
- high requirements per run that only a few grid sites can handle
  - up to 4 GB RAM
  - 10 GB local scratch disk space
- many problems solved, next round much more efficient with automated MC simulation production using the EasiJob tool developed at LAPP

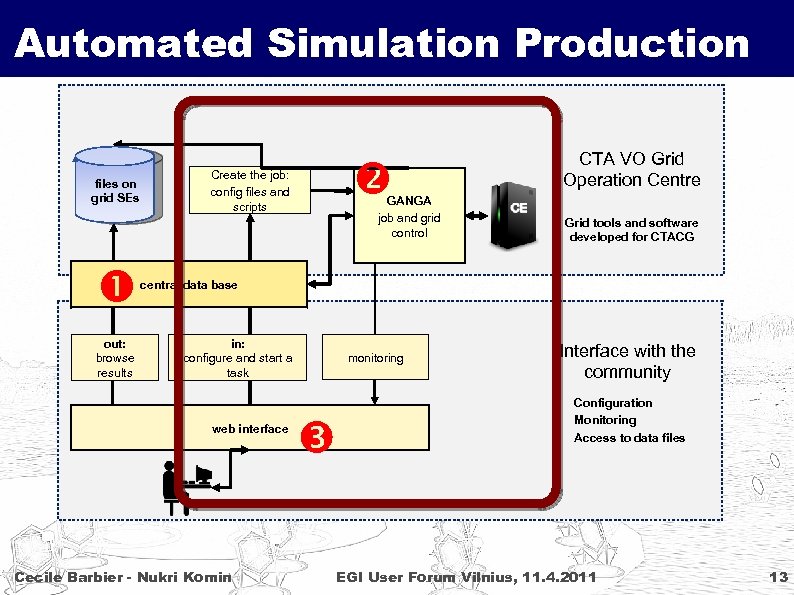

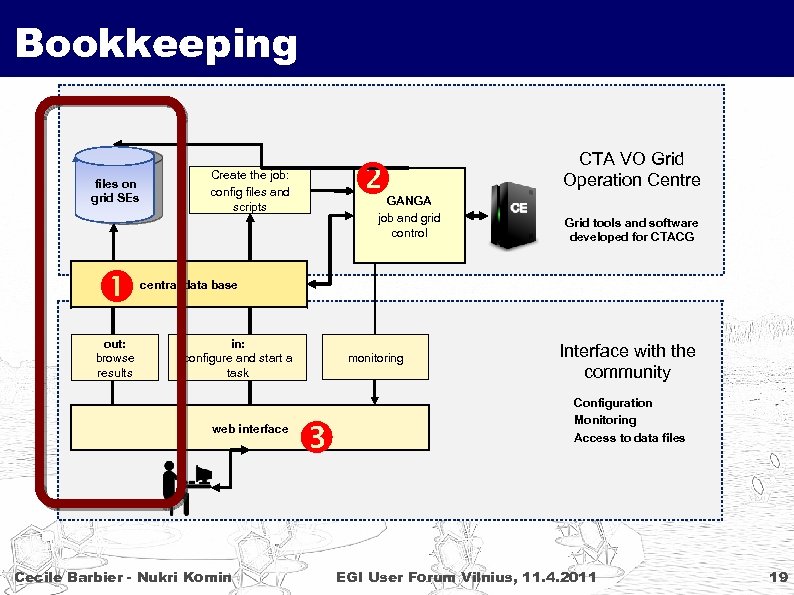

Automated Simulation Production

[Diagram: CTA VO Grid Operation Centre, Grid tools and software developed for CTA-CG. Labelled elements:]

- web interface: interface with the community (configuration, monitoring, access to data files)
  - in: configure and start a task
  - out: browse results
- central data base
- create the job: config files and scripts
- GANGA: job and grid control
- monitoring
- files on grid SEs

Automated Simulation Production

- EasiJob – Easy Integrated Job Submission
  - developed by S. Elles within the MUST framework
    - MUST = Mid-Range Data Storage and Computing Centre widely open to Grid Infrastructure, at LAPP Annecy and Savoie University
  - more general than CTA
    - can be used for any software and any experiment
- based on GANGA (Gaudi/Athena and Grid Alliance)
  - http://ganga.web.cern.ch/ganga/
  - Grid front-end in Python
  - developed for ATLAS and LHCb, used by many other experiments
  - task configuration, job submission and monitoring, file bookkeeping

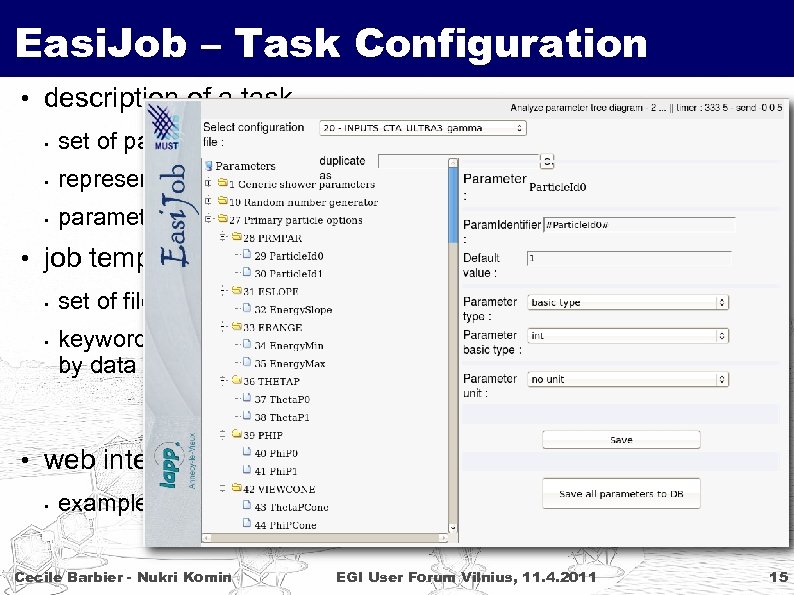

EasiJob – Task Configuration

- description of a task
  - set of parameters, with default values; define whether each one is browsable
  - representation in the data base
  - parameter keyword (#key1)
- job template
  - set of files (input sandbox)
  - keywords will be replaced by data base values
- web interface
- example: corsika
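The keyword replacement in job templates can be illustrated with a short Python sketch. This is not EasiJob's code: the `#name` keyword syntax is only inferred from the `#key`-style example on the slide, and `render_template`, the template text and the parameter names are hypothetical.

```python
import re


def render_template(template: str, values: dict) -> str:
    """Replace every #keyword in the template by its value.

    Raises KeyError if the template uses a keyword with no value,
    so that an incomplete task configuration is caught before submission.
    """
    def substitute(match):
        key = match.group(1)
        if key not in values:
            raise KeyError(f"no value for keyword #{key}")
        return str(values[key])

    return re.sub(r"#(\w+)", substitute, template)


# Hypothetical fragment of a template file from the input sandbox.
TEMPLATE = "NSHOW #nshow\nPRMPAR #primary\n"

# Default values stored with the task (assumed), one overridden for this job.
defaults = {"nshow": 100000, "primary": 14}
job_values = {**defaults, "nshow": 5000}

print(render_template(TEMPLATE, job_values))
```

Keeping the defaults and the per-job overrides in separate dictionaries mirrors the slide's split between a task description and the values stored in the data base.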

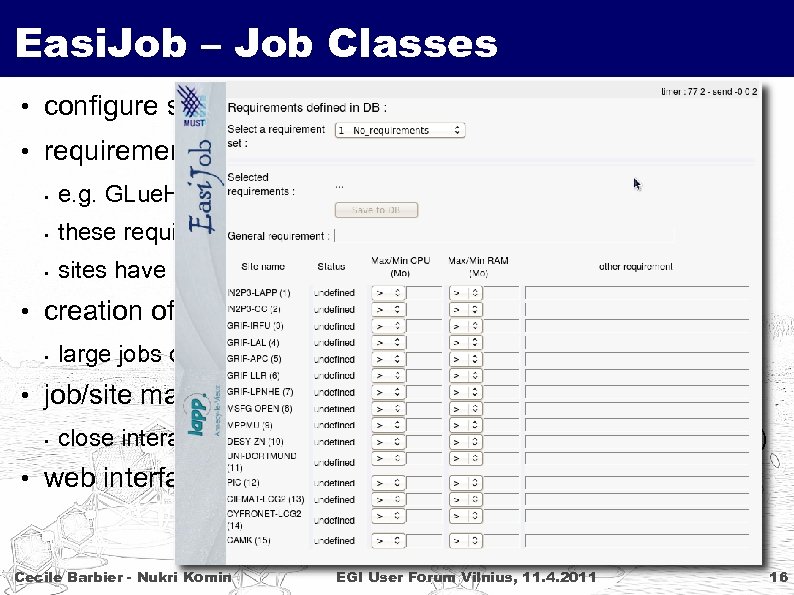

EasiJob – Job Classes

- configure site classes
  - requirements are based on published parameters
    - e.g. GlueHostMainMemoryRAMSize > 2000
  - these requirements are interpreted differently at each site
  - sites have different storage capacities
- creation of job classes
  - large jobs only on a subset of sites
- job/site matching currently semi-manual
  - close interaction with local admins (in particular Lyon and Zeuthen)
- web interface
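A minimal sketch of how job classes might be matched to sites, assuming a hand-maintained table of site capabilities (the slide notes that published values are interpreted differently at each site). The class names, site names, thresholds and both functions are hypothetical. Only the `GlueHostMainMemoryRAMSize` attribute comes from the slide, and the `other.` prefix is the usual JDL way of referring to a resource attribute.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JobClass:
    name: str
    min_ram_mb: int       # memory needed per job
    min_scratch_gb: int   # local scratch space needed per job


@dataclass(frozen=True)
class Site:
    name: str
    ram_mb: int       # value confirmed with the site admins (assumed workflow)
    scratch_gb: int


def jdl_requirements(job_class: JobClass) -> str:
    """Build the JDL Requirements line for the memory constraint."""
    return f"Requirements = other.GlueHostMainMemoryRAMSize > {job_class.min_ram_mb};"


def eligible_sites(job_class: JobClass, sites: list) -> list:
    """Return the names of the sites that can run jobs of this class."""
    return [
        s.name
        for s in sites
        if s.ram_mb > job_class.min_ram_mb and s.scratch_gb >= job_class.min_scratch_gb
    ]


# Illustrative classes and sites; none of these values are from the talk.
SMALL = JobClass("small", min_ram_mb=2000, min_scratch_gb=2)
LARGE = JobClass("large", min_ram_mb=4000, min_scratch_gb=10)
SITES = [Site("site-a", 8000, 50), Site("site-b", 3000, 5), Site("site-c", 6000, 20)]

print(jdl_requirements(SMALL))
print(eligible_sites(LARGE, SITES))
```

With this layout, restricting large jobs to a subset of sites is a matter of editing the site table, which fits the semi-manual matching described on the slide.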

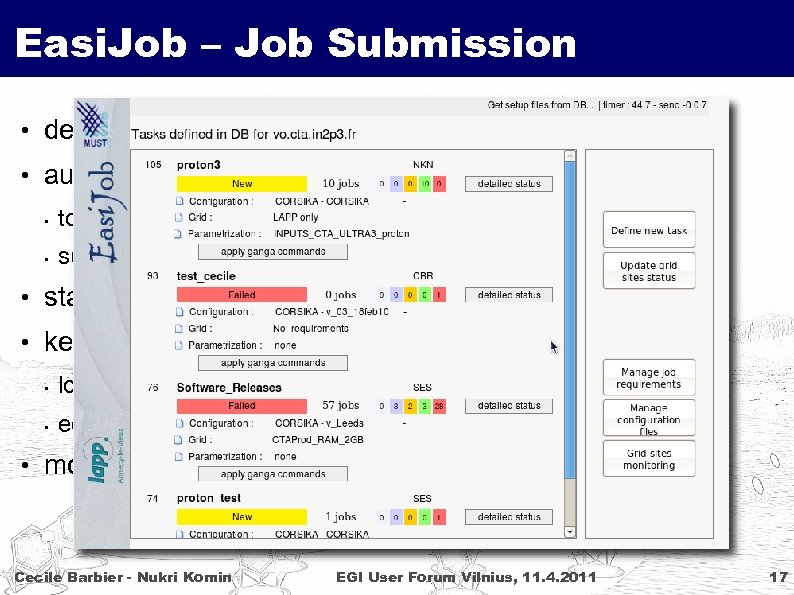

EasiJob – Job Submission

- define the number of jobs for a task
- automated job submission
  - to the site with the fewest waiting jobs
  - submission is paused when too many jobs are pending
- status monitoring and re-submission of failed jobs
- keeps track of produced files
  - logical file name (LFN) on the Grid
  - echo statement in the execution script
- monitoring on a web page
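The submission policy can be sketched as follows. This is an illustration under stated assumptions, not the actual EasiJob logic: "too many pending jobs" is modelled here as a cap on the total number of waiting jobs over all sites, and the function names, site names and numbers are hypothetical.

```python
def choose_site(waiting_jobs: dict, max_pending: int):
    """Return the site with the fewest waiting jobs, or None to pause.

    waiting_jobs maps a site name to its number of waiting jobs.
    """
    if sum(waiting_jobs.values()) >= max_pending:
        return None  # too many pending jobs: pause submission
    return min(waiting_jobs, key=waiting_jobs.get)


def submit_round(n_jobs: int, waiting_jobs: dict, max_pending: int) -> list:
    """Place up to n_jobs one by one and return the chosen sites in order."""
    waiting = dict(waiting_jobs)  # work on a copy, keep the input unchanged
    chosen = []
    for _ in range(n_jobs):
        site = choose_site(waiting, max_pending)
        if site is None:
            break  # remaining jobs wait for the next round
        waiting[site] += 1
        chosen.append(site)
    return chosen


# Hypothetical queue snapshot.
snapshot = {"site-a": 3, "site-b": 1, "site-c": 2}
print(submit_round(5, snapshot, max_pending=9))
```

In this example only three of the five requested jobs are placed before the cap is reached; the rest would be submitted in a later round, once monitoring reports that jobs have started or finished.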

EasiJob – Status

- deployed in Annecy, will be used for the next simulations
- configuration and job submission not open to the public
  - want to avoid massive nonsense productions
  - user certificates need to be installed manually
- idea: provide “software as a service”

Bookkeeping

[Diagram repeated from the Automated Simulation Production overview: web interface, central data base, job creation, GANGA job and grid control, monitoring, files on grid SEs.]

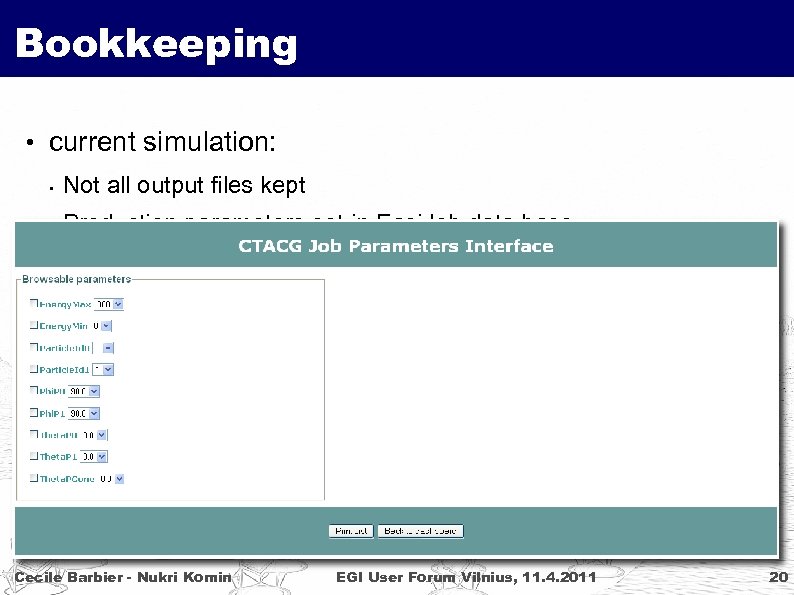

Bookkeeping

- current simulation:
  - not all output files are kept
  - production parameters are set in the EasiJob data base
- automatically generated web interface [C. Barbier]
  - shows only parameters defined as browsable
  - proposes only values which were produced
  - returns a list of LFNs (logical file names)
- starting point for a more powerful meta data base
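The behaviour of the browse interface can be mimicked with a small in-memory example. The record layout, the parameter names, the LFN paths and both functions are hypothetical; the sketch only shows the three rules listed above: expose browsable parameters only, propose only values that were actually produced, and return LFNs.

```python
# Hypothetical bookkeeping records: one entry per produced file.
PRODUCED = [
    {"lfn": "/grid/vo.cta.in2p3.fr/example/proton_run0001",
     "params": {"primary": "proton", "zenith": 20, "seed": 1}},
    {"lfn": "/grid/vo.cta.in2p3.fr/example/proton_run0002",
     "params": {"primary": "proton", "zenith": 20, "seed": 2}},
    {"lfn": "/grid/vo.cta.in2p3.fr/example/gamma_run0001",
     "params": {"primary": "gamma", "zenith": 20, "seed": 1}},
]

# Parameters flagged as browsable in the task description (assumed).
BROWSABLE = {"primary", "zenith"}


def proposed_values(records, browsable):
    """For each browsable parameter, list only the values that were produced."""
    values = {name: set() for name in browsable}
    for rec in records:
        for name in browsable:
            if name in rec["params"]:
                values[name].add(rec["params"][name])
    return {name: sorted(vals) for name, vals in values.items()}


def find_lfns(records, browsable, **query):
    """Return the LFNs of all files matching the query on browsable parameters."""
    unknown = set(query) - set(browsable)
    if unknown:
        raise ValueError(f"not browsable: {sorted(unknown)}")
    return [
        rec["lfn"]
        for rec in records
        if all(rec["params"].get(k) == v for k, v in query.items())
    ]


print(proposed_values(PRODUCED, BROWSABLE))
print(find_lfns(PRODUCED, BROWSABLE, primary="proton"))
```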

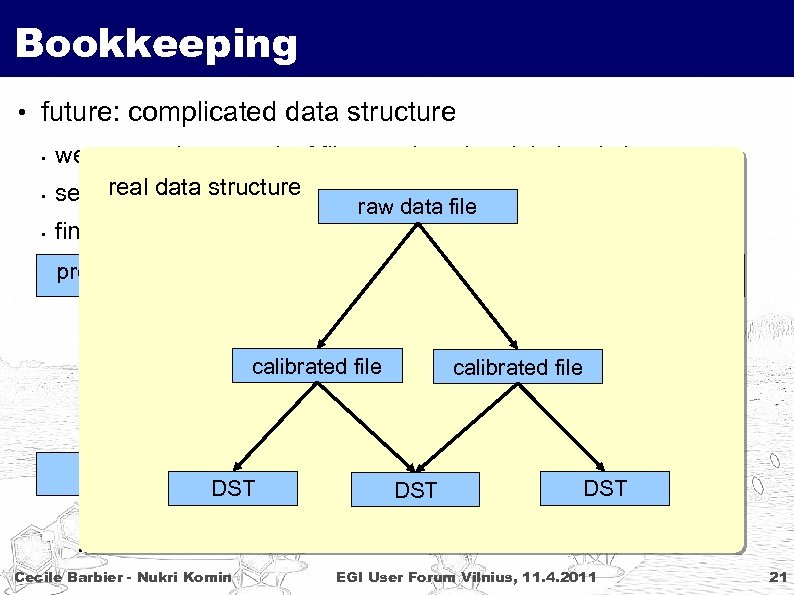

Bookkeeping

- future: complicated data structure
  - we want to keep track of the files produced and their relations
  - search for files using the production parameters
  - find information on files, even if the files have been removed

[Diagram: real data structure. Chains of files linked by production steps: raw data file, production 1, calibrated file, production 2, DST file, ...]
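One way to keep such relations is to store, for each file, its production parameters and a link to the file it was derived from, and to flag removed files rather than delete their records. The sketch below is purely illustrative: the `Catalogue` class, its methods and the example names are hypothetical and do not describe the planned meta data base.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FileRecord:
    lfn: str
    level: str                    # e.g. "raw", "calibrated", "DST"
    production: dict              # parameters of the step that produced the file
    parent: Optional[str] = None  # LFN of the input file, if any
    on_storage: bool = True       # False once the file itself has been removed


class Catalogue:
    def __init__(self):
        self._records = {}

    def register(self, lfn, level, production, parent=None):
        if parent is not None and parent not in self._records:
            raise KeyError(f"unknown parent file: {parent}")
        self._records[lfn] = FileRecord(lfn, level, production, parent)

    def mark_removed(self, lfn):
        """Keep the metadata, only note that the file is gone."""
        self._records[lfn].on_storage = False

    def ancestry(self, lfn):
        """Return the records from this file back to the original data."""
        chain = []
        current = lfn
        while current is not None:
            record = self._records[current]
            chain.append(record)
            current = record.parent
        return chain


cat = Catalogue()
cat.register("raw_0001", "raw", {"step": "acquisition"})
cat.register("cal_0001", "calibrated", {"step": "production 1"}, parent="raw_0001")
cat.register("dst_0001", "DST", {"step": "production 2"}, parent="cal_0001")
cat.mark_removed("raw_0001")

for rec in cat.ancestry("dst_0001"):
    print(rec.level, rec.production, "on storage" if rec.on_storage else "removed")
```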

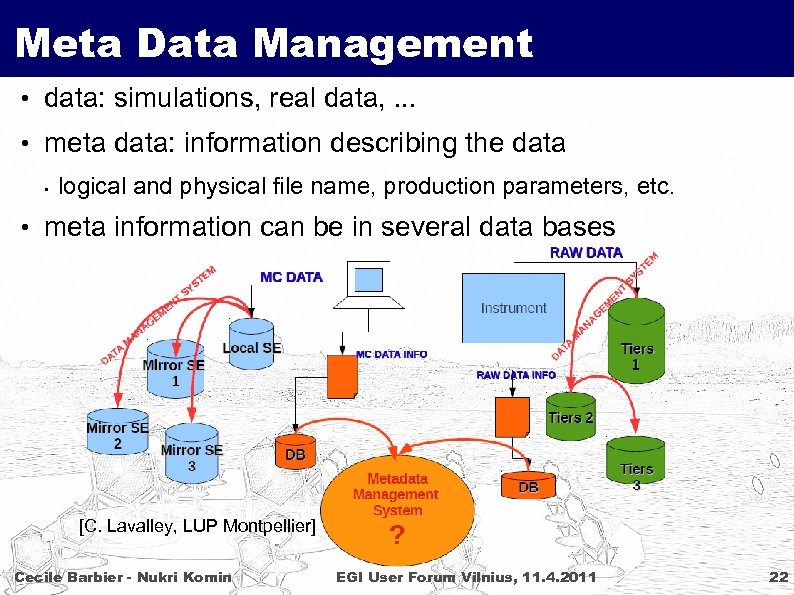

Meta Data Management

- data: simulations, real data, ...
- meta data: information describing the data
  - logical and physical file name, production parameters, etc.
- meta information can be in several data bases

[C. Lavalley, LUPM Montpellier]

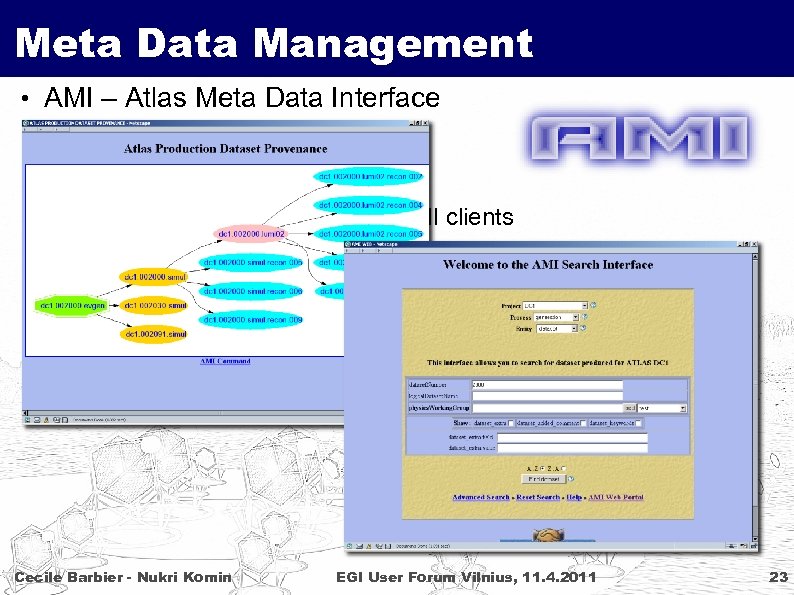

Meta Data Management

- AMI – ATLAS Meta Data Interface
  - developed at LPSC Grenoble
  - can interrogate other data bases
  - information can be pushed with AMI clients
    - web, Python, C++, Java clients
  - manages access rights: username/password, certificate, ...
- we will deploy AMI for CTA (with LUPM and LPSC)
  - for simulation bookkeeping and file search
  - to be tested for future use in CTA

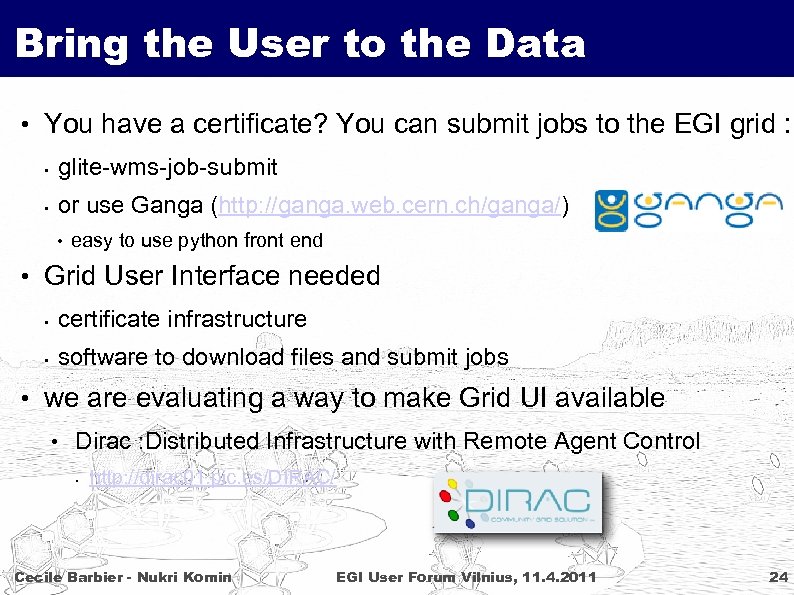

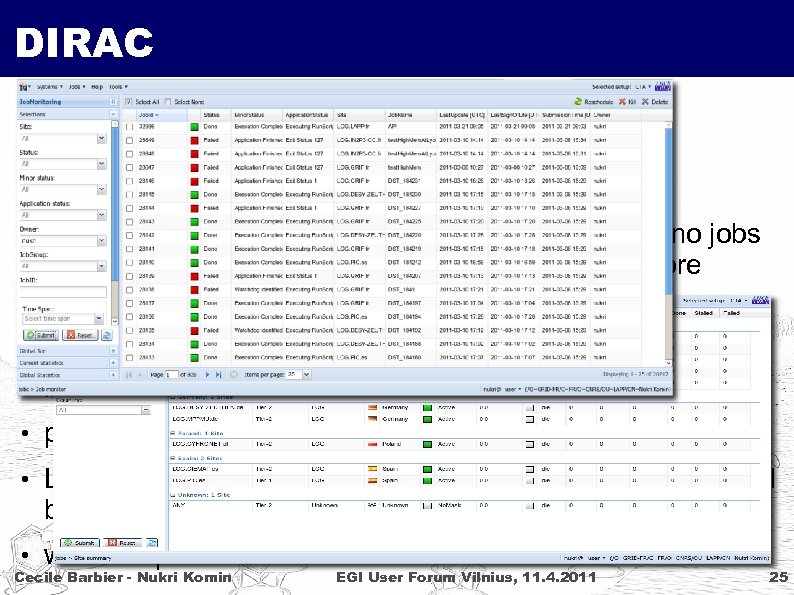

Bring the User to the Data

- You have a certificate? You can submit jobs to the EGI grid:
  - glite-wms-job-submit
  - or use Ganga (http://ganga.web.cern.ch/ganga/)
    - easy-to-use Python front end
- Grid User Interface needed
  - certificate infrastructure
  - software to download files and submit jobs
- we are evaluating a way to make the Grid UI available
  - DIRAC: Distributed Infrastructure with Remote Agent Control
  - http://dirac01.pic.es/DIRAC/

DIRAC

- initially developed for LHCb, now a generic version
- very easy to install
- Grid (and beyond) front-end
- workload management with pilot jobs: pull mode, no jobs lost due to Grid problems, shorter waiting time before execution
- integrated Data Management System
- integrated software management
- Python and web interfaces for job submission
- LAPP, LUPM and PIC-IFAE Barcelona for setup/testing, will be open to the collaboration soon
- we don't plan to use it for simulations

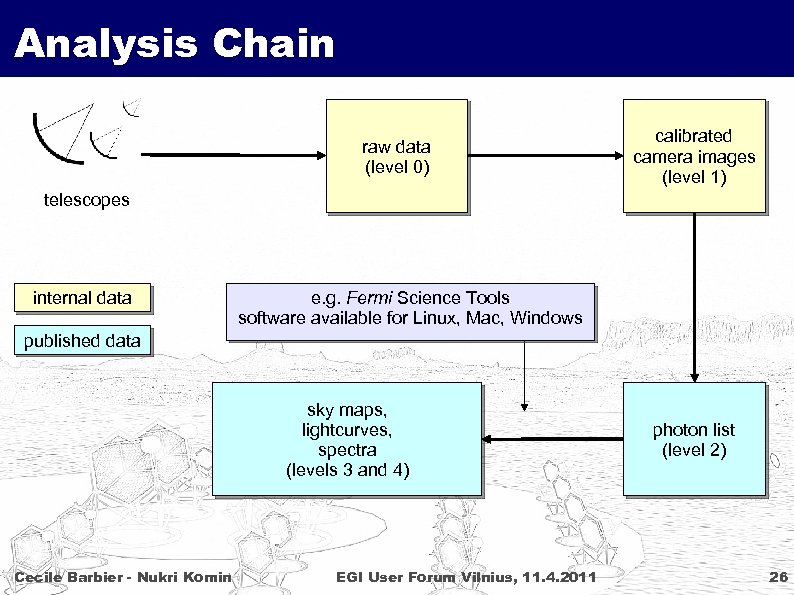

Analysis Chain

[Diagram: data levels from the telescopes to published results]

- raw data (level 0)
- calibrated camera images (level 1)
- photon list (level 2)
- sky maps, lightcurves, spectra (levels 3 and 4)

Other labels in the diagram: telescopes; internal data; published data; e.g. Fermi Science Tools, software available for Linux, Mac, Windows.

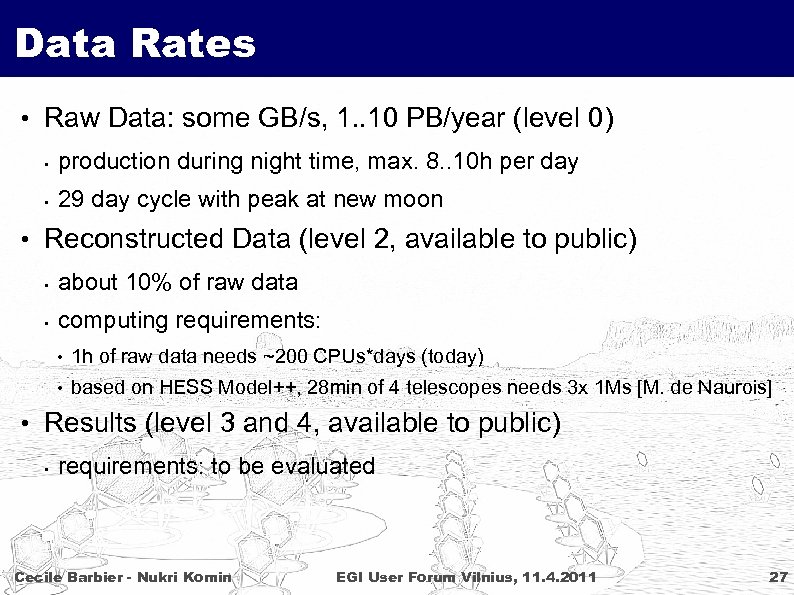

Data Rates

- Raw data (level 0): some GB/s, 1 to 10 PB/year
  - production during night time, max. 8 to 10 h per day
  - 29-day cycle with peak at new moon
- Reconstructed data (level 2, available to the public)
  - about 10% of raw data
  - computing requirements:
    - 1 h of raw data needs ~200 CPU days (today)
    - based on HESS Model++, 28 min of 4 telescopes needs 3 × 1 Ms [M. de Naurois]
- Results (levels 3 and 4, available to the public)
  - requirements: to be evaluated
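The figures above can be put in relation with some simple arithmetic. The sketch uses only the numbers quoted on the slide. The decimal units (1 TB = 1000 GB), the choice of 1 GB/s as the example rate, and the function and variable names are assumptions made for illustration. How the HESS figure was scaled up to ~200 CPU days per hour of CTA data is not stated in the talk and is not reproduced here.

```python
SECONDS_PER_HOUR = 3600
SECONDS_PER_DAY = 86400


def nightly_volume_tb(rate_gb_per_s: float, hours: float) -> float:
    """Raw data volume of one observing night, in TB (1 TB = 1000 GB)."""
    return rate_gb_per_s * hours * SECONDS_PER_HOUR / 1000


# Raw data at 1 GB/s for the shortest and longest quoted nights.
night_short_tb = nightly_volume_tb(1, 8)
night_long_tb = nightly_volume_tb(1, 10)

# Level 2 data is about 10% of the raw data: 1 to 10 PB/year of raw data.
level2_pb_per_year = [0.1 * raw for raw in (1, 10)]

# HESS Model++: 3 x 1 Ms of CPU time for 28 min of 4-telescope data.
hess_cpu_days = 3 * 1e6 / SECONDS_PER_DAY
hess_cpu_days_per_hour = hess_cpu_days * 60 / 28

# CTA estimate from the slide: ~200 CPU days per hour of raw data.
# CPU days needed for one night of 8 to 10 h of data.
cta_cpu_days_per_night = [200 * hours for hours in (8, 10)]

print(f"raw data per night: {night_short_tb:.1f} to {night_long_tb:.1f} TB")
print(f"level 2 per year: {level2_pb_per_year[0]:.1f} to {level2_pb_per_year[1]:.1f} PB")
print(f"HESS: {hess_cpu_days:.1f} CPU days per 28 min, {hess_cpu_days_per_hour:.1f} per hour")
print(f"CTA: {cta_cpu_days_per_night[0]} to {cta_cpu_days_per_night[1]} CPU days per night")
```

Read this way, processing one night of data within the following 24 hours would take roughly 1600 to 2000 CPUs running in parallel, under the slide's ~200 CPU days per hour estimate.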

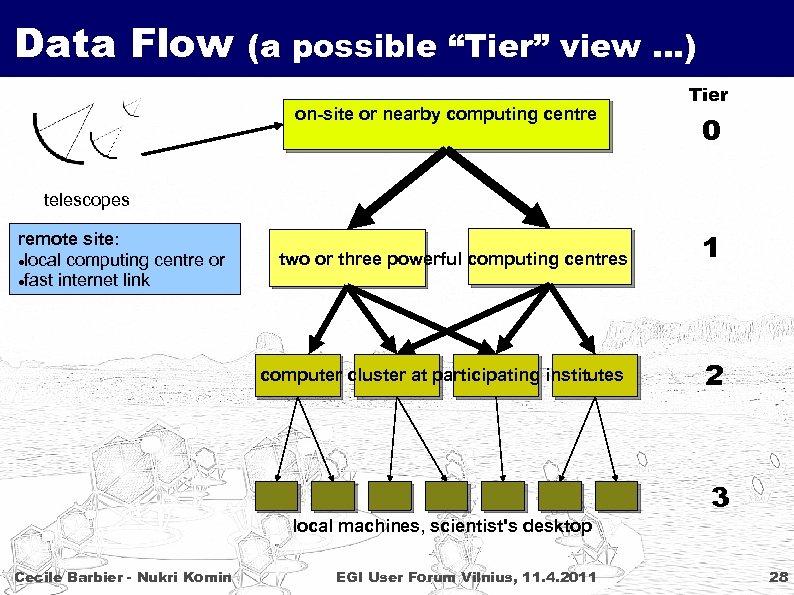

Data Flow (a possible “Tier” view …)

[Diagram: data flowing from the telescopes through a chain of tiers]

- Tier 0: on-site or nearby computing centre (remote site: local computing centre or fast internet link)
- Tier 1: two or three powerful computing centres
- Tier 2: computer clusters at participating institutes
- Tier 3: local machines, scientist's desktop

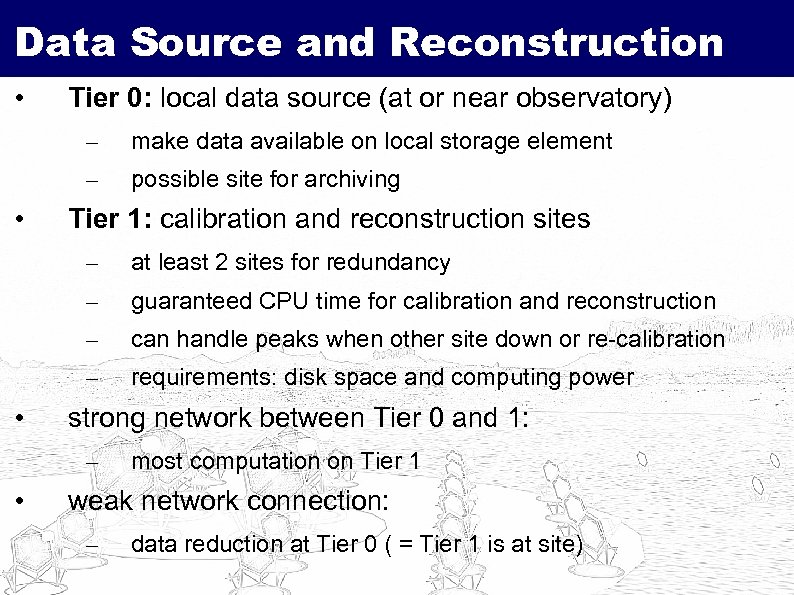

Data Source and Reconstruction

- Tier 0: local data source (at or near the observatory)
  - make data available on a local storage element
  - possible site for archiving
- Tier 1: calibration and reconstruction sites
  - at least 2 sites for redundancy
  - guaranteed CPU time for calibration and reconstruction
  - can handle peaks when another site is down or during re-calibration
  - requirements: disk space and computing power
- strong network between Tier 0 and 1:
  - most computation on Tier 1
- weak network connection:
  - data reduction at Tier 0 (= Tier 1 is at site)

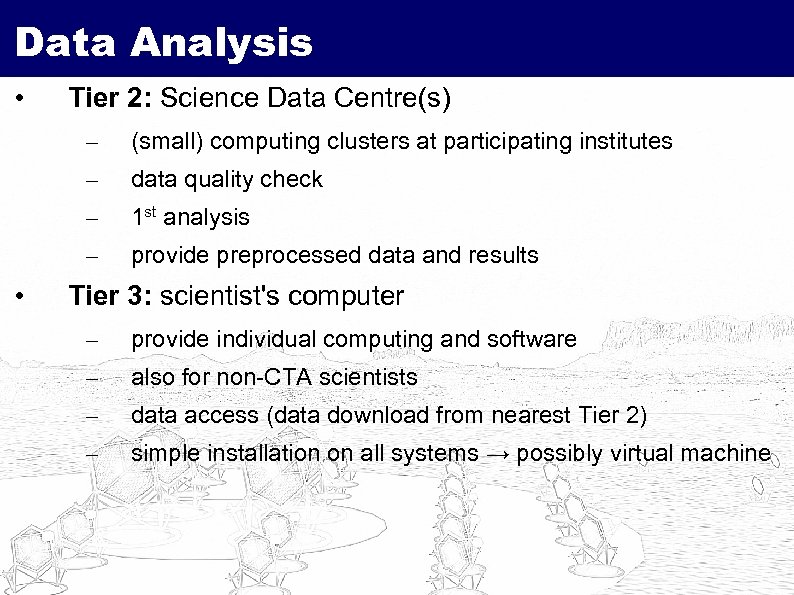

Data Analysis

- Tier 2: Science Data Centre(s)
  - (small) computing clusters at participating institutes
  - data quality check
  - 1st analysis
  - provide preprocessed data and results
- Tier 3: scientist's computer
  - provide individual computing and software
  - also for non-CTA scientists
  - data access (data download from nearest Tier 2)
  - simple installation on all systems → possibly a virtual machine

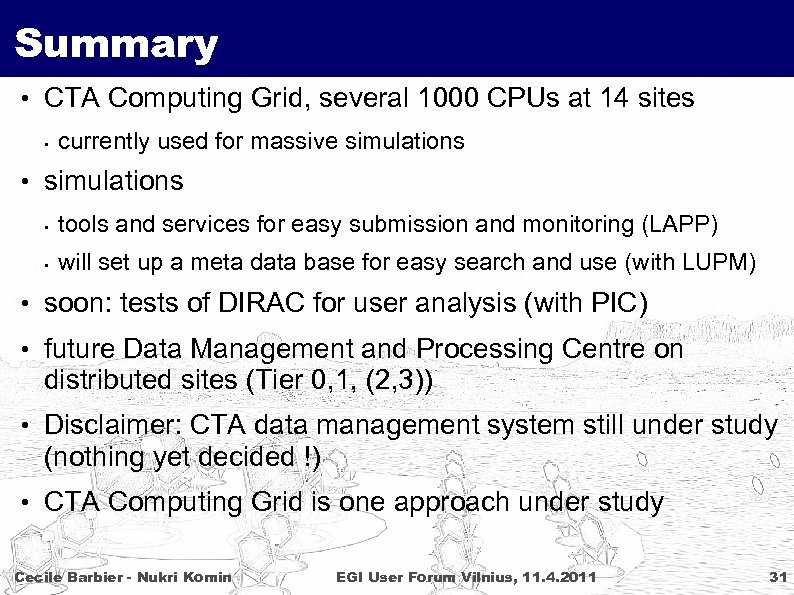

Summary

- CTA Computing Grid, several 1000 CPUs at 14 sites
- currently used for massive simulations
- simulations
  - tools and services for easy submission and monitoring (LAPP)
  - will set up a meta data base for easy search and use (with LUPM)
- soon: tests of DIRAC for user analysis (with PIC)
- future Data Management and Processing Centre on distributed sites (Tier 0, 1, (2, 3))
- Disclaimer: CTA data management system still under study (nothing decided yet!)
  - CTA Computing Grid is one approach under study
