- Number of slides: 31

The 3rd FKPPL Workshop @ KIAS
FKPPL VO: Status and Perspectives of Grid Infrastructure
March 8-9, 2011
Soonwook Hwang, KISTI

Introduction to FKPPL
§ FKPPL (France-Korea Particle Physics Laboratory)
  § International Associated Laboratory between French and Korean laboratories
  § Promotes joint cooperative activities (research projects) under a scientific research program in the areas of:
    § Particle physics: LHC, ILC
    § e-Science: bioinformatics, Grid computing, Geant4

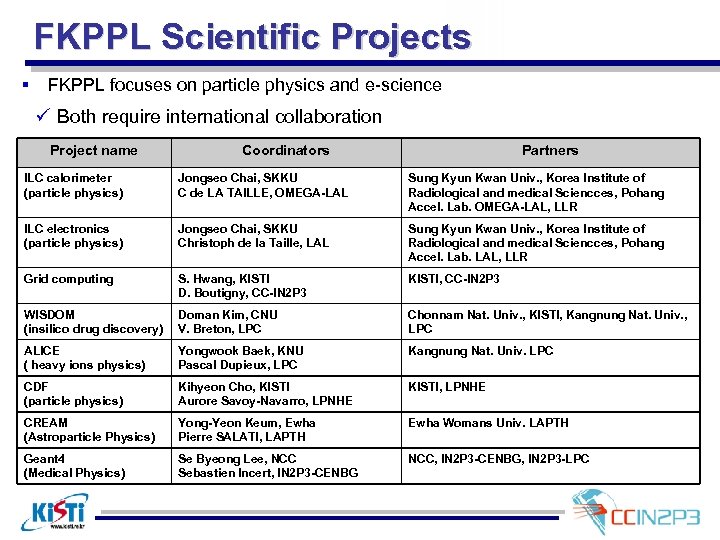

FKPPL Scientific Projects
§ FKPPL focuses on particle physics and e-science, both of which require international collaboration

| Project | Coordinators | Partners |
|---|---|---|
| ILC calorimeter (particle physics) | Jongseo Chai, SKKU; C. de La Taille, OMEGA-LAL | Sungkyunkwan Univ., Korea Institute of Radiological and Medical Sciences, Pohang Accelerator Lab.; OMEGA-LAL, LLR |
| ILC electronics (particle physics) | Jongseo Chai, SKKU; Christophe de La Taille, LAL | Sungkyunkwan Univ., Korea Institute of Radiological and Medical Sciences, Pohang Accelerator Lab.; LAL, LLR |
| Grid computing | S. Hwang, KISTI; D. Boutigny, CC-IN2P3 | KISTI, CC-IN2P3 |
| WISDOM (in silico drug discovery) | Doman Kim, CNU; V. Breton, LPC | Chonnam Nat. Univ., KISTI, Kangnung Nat. Univ.; LPC |
| ALICE (heavy-ion physics) | Yongwook Baek, KNU; Pascal Dupieux, LPC | Kangnung Nat. Univ.; LPC |
| CDF (particle physics) | Kihyeon Cho, KISTI; Aurore Savoy-Navarro, LPNHE | KISTI; LPNHE |
| CREAM (astroparticle physics) | Yong-Yeon Keum, Ewha; Pierre Salati, LAPTH | Ewha Womans Univ.; LAPTH |
| Geant4 (medical physics) | Se Byeong Lee, NCC; Sébastien Incerti, IN2P3-CENBG | NCC; IN2P3-CENBG, IN2P3-LPC |

Grid Computing @ FKPPL
§ Participating organizations: CC-IN2P3 and KISTI
§ Group leaders
  § Dominique Boutigny, Director of CC-IN2P3, France
  § Soonwook Hwang, KISTI, Korea
§ 2010 budget
  § France: ~6,000 Euro, mainly travel costs, funded by CNRS
  § Korea: 20,000 Won for travel costs and for organizing grid workshops and training, funded by KRCF under the framework of the CNRS-KRCFST Joint Programme
§ Common interests
  § Joint work on Grid computing
  § Collaboration on ALICE computing: CC-IN2P3 (Tier 1) and KISTI (Tier 2)
  § Joint operation and maintenance of a production grid infrastructure

Objective
§ Background
  § Collaborative work on Grid computing between KISTI in Korea and CC-IN2P3 in France
§ Objective
  § Provide the computing facilities and user support needed to foster scientific applications established under the FKPPL collaboration and beyond
  § Promote the adoption of grid technology and grid awareness in Korea and France by providing scientists and researchers with a production Grid infrastructure and the technical support they need

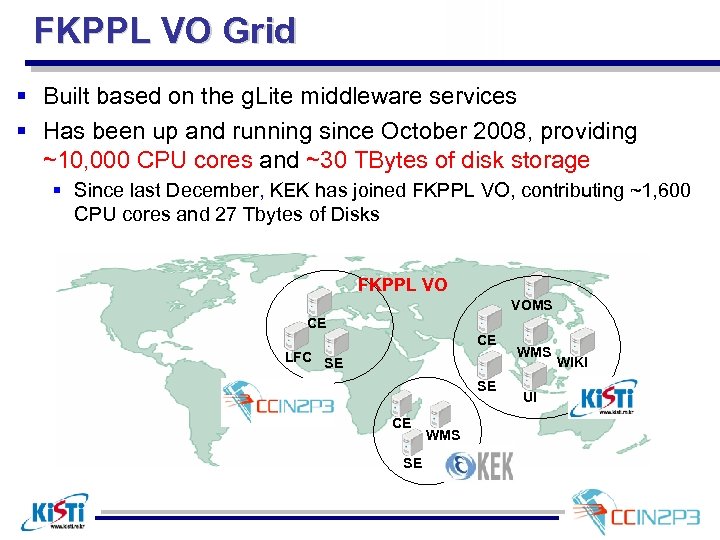

FKPPL VO Grid
§ Built on gLite middleware services
§ Up and running since October 2008, providing ~10,000 CPU cores and ~30 TB of disk storage
§ Since December 2010, KEK has been part of the FKPPL VO, contributing ~1,600 CPU cores and ~27 TB of disk
[Diagram: FKPPL VO services (VOMS, WMS, LFC, UI, Wiki) and the CE/SE pairs contributed by each site]

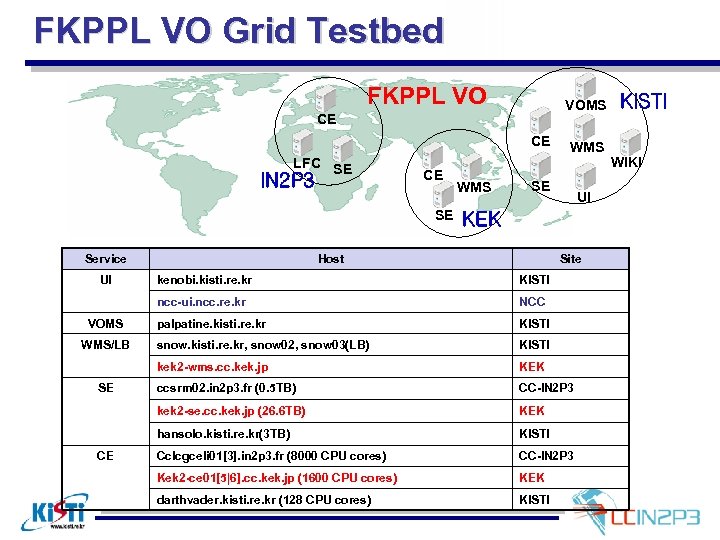

FKPPL VO Grid Testbed

| Service | Host | Site |
|---|---|---|
| VOMS | kenobi.kisti.re.kr | KISTI |
| WMS/LB | snow.kisti.re.kr, snow02, snow03 (LB) | KISTI |
| WMS/LB | kek2-wms.cc.kek.jp | KEK |
| UI | ncc-ui.ncc.re.kr | NCC |
| (label not recoverable) | palpatine.kisti.re.kr | KISTI |
| SE | ccsrm02.in2p3.fr (0.5 TB) | CC-IN2P3 |
| SE | hansolo.kisti.re.kr (3 TB) | KISTI |
| SE | kek2-se.cc.kek.jp (26.6 TB) | KEK |
| CE | cclcgceli01[3].in2p3.fr (8,000 CPU cores) | CC-IN2P3 |
| CE | kek2-ce01[5\|6].cc.kek.jp (1,600 CPU cores) | KEK |
| CE | darthvader.kisti.re.kr (128 CPU cores) | KISTI |
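As a quick consistency check, the per-host figures listed for the testbed can be summed and compared with the ~10,000 CPU cores and ~30 TB quoted for the FKPPL VO. The sketch simply hard-codes those figures; the dictionary layout and variable names are illustrative only.

```python
# Per-site contributions as listed for the FKPPL VO testbed (March 2011).
cpu_cores = {
    "CC-IN2P3": 8000,  # cclcgceli01[3].in2p3.fr
    "KEK": 1600,       # kek2-ce01[5|6].cc.kek.jp
    "KISTI": 128,      # darthvader.kisti.re.kr
}
disk_tb = {
    "CC-IN2P3": 0.5,   # ccsrm02.in2p3.fr
    "KISTI": 3.0,      # hansolo.kisti.re.kr
    "KEK": 26.6,       # kek2-se.cc.kek.jp
}

total_cores = sum(cpu_cores.values())
total_disk_tb = round(sum(disk_tb.values()), 1)  # round away float noise

print(f"CPU cores: {total_cores}")    # 9728, i.e. ~10,000
print(f"Disk (TB): {total_disk_tb}")  # 30.1, i.e. ~30 TB
```

Both totals agree with the rounded figures on the FKPPL VO Grid slide.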

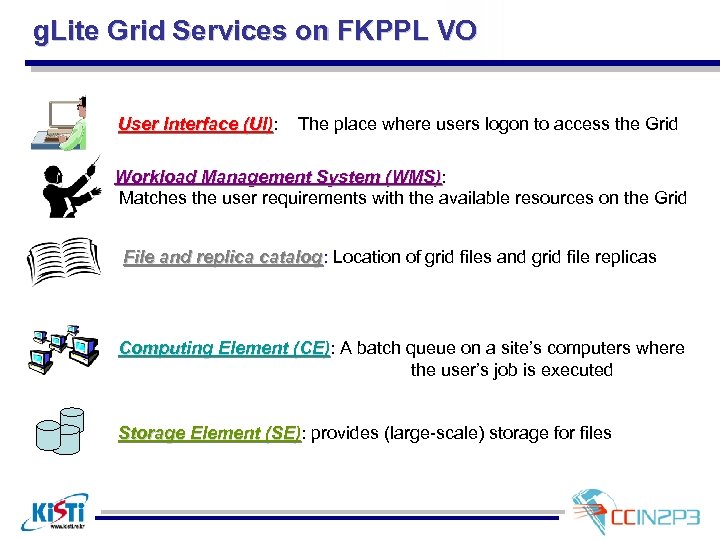

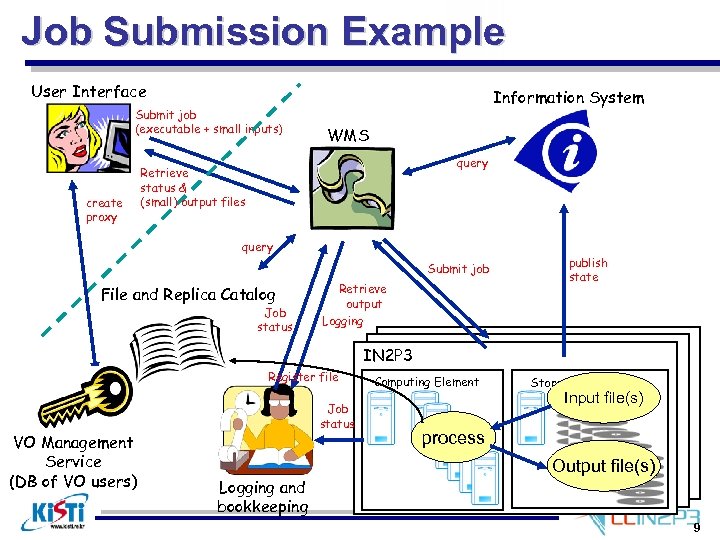

gLite Grid Services on FKPPL VO
§ User Interface (UI): the place where users log on to access the Grid
§ Workload Management System (WMS): matches the user's requirements with the available resources on the Grid
§ File and Replica Catalog (LFC): records the locations of grid files and their replicas
§ Computing Element (CE): a batch queue on a site's computers where the user's job is executed
§ Storage Element (SE): provides (large-scale) storage for files
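The WMS matchmaking step can be pictured as filtering the CE attributes published in the information system against the job's requirements, then ranking the survivors. The sketch below is a simplified illustration, not the gLite WMS implementation: the `ComputingElement` fields, the hypothetical CE names and values, and the rank-by-free-slots rule are assumptions chosen for clarity (real gLite jobs express this through JDL `Requirements` and `Rank` expressions).

```python
from dataclasses import dataclass

@dataclass
class ComputingElement:
    name: str
    max_cpu_minutes: int   # queue limit published by the site
    free_slots: int        # currently idle job slots
    supported_vos: tuple   # VOs allowed to submit to this queue

def match(ces, vo, cpu_minutes_needed):
    """Return CEs that satisfy the job, best-ranked (most free slots) first."""
    eligible = [
        ce for ce in ces
        if vo in ce.supported_vos and ce.max_cpu_minutes >= cpu_minutes_needed
    ]
    return sorted(eligible, key=lambda ce: ce.free_slots, reverse=True)

# Hypothetical snapshot of the information system.
ces = [
    ComputingElement("ce-a/long", 1429, 120, ("fkppl",)),
    ComputingElement("ce-b/fkppl", 2880, 40, ("fkppl",)),
    ComputingElement("ce-c/short", 5, 500, ("fkppl",)),
]

best = match(ces, "fkppl", cpu_minutes_needed=600)
print([ce.name for ce in best])  # ['ce-a/long', 'ce-b/fkppl']
```

The short queue is excluded despite having the most free slots, because it cannot satisfy the job's CPU-time requirement.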

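Job Submission Example
[Diagram: job submission workflow on the FKPPL VO]
§ On the User Interface, the user creates a proxy via the VO Management Service (database of VO users)
§ The user submits the job (executable + small inputs) to the WMS
§ The WMS queries the Information System and the File and Replica Catalog, then submits the job to a Computing Element (e.g., at IN2P3)
§ The job processes input file(s) from a Storage Element and writes output file(s), which are registered in the File and Replica Catalog
§ Job status is published to Logging and Bookkeeping; the user queries the job status and retrieves the (small) output files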
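On a gLite UI, the job submitted to the WMS is described in a JDL (Job Description Language) file. The helper below only builds the JDL text; the script, input and output file names and the VO placeholder are hypothetical, while the attributes used (`Executable`, `Arguments`, `StdOutput`, `StdError`, `InputSandbox`, `OutputSandbox`, `VirtualOrganisation`) are standard gLite JDL attributes. Actual submission would then use the usual gLite UI commands (`voms-proxy-init` to create the proxy, `glite-wms-job-submit` to submit), which are not reproduced here.

```python
def jdl_list(items):
    """Format a Python list as a JDL string list: {"a", "b"}."""
    return "{" + ", ".join(f'"{item}"' for item in items) + "}"

def make_jdl(executable, arguments, input_sandbox, output_sandbox, vo):
    """Build a minimal gLite JDL job description as text."""
    lines = [
        f'Executable = "{executable}";',
        f'Arguments = "{arguments}";',
        'StdOutput = "stdout.log";',
        'StdError = "stderr.log";',
        f"InputSandbox = {jdl_list(input_sandbox)};",
        # stdout/stderr must be listed to be retrievable with the output
        f"OutputSandbox = {jdl_list(['stdout.log', 'stderr.log', *output_sandbox])};",
        f'VirtualOrganisation = "{vo}";',
    ]
    return "[\n  " + "\n  ".join(lines) + "\n]"

# Hypothetical job: script, input and output names are placeholders.
jdl = make_jdl(
    executable="run_sim.sh",
    arguments="config.mac",
    input_sandbox=["run_sim.sh", "config.mac"],
    output_sandbox=["result.root"],
    vo="<your-vo>",
)
print(jdl)
```

The "small inputs" and "small output files" in the workflow correspond to the input and output sandboxes; large files would instead go through a Storage Element.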

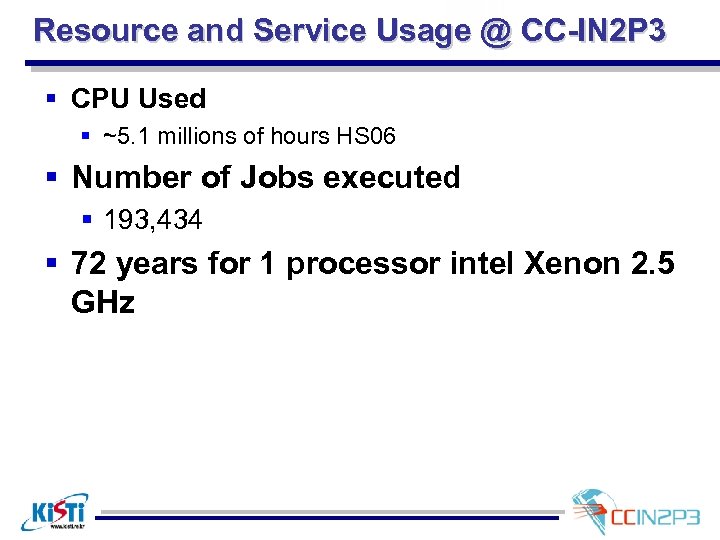

Resource and Service Usage @ CC-IN2P3
§ CPU used: ~5.1 million HS06 hours
§ Number of jobs executed: 193,434
§ Equivalent to 72 years on a single Intel Xeon 2.5 GHz processor
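The two CPU figures can be related with a back-of-the-envelope calculation: 72 single-processor years is about 630,720 hours, so ~5.1 million HS06 hours corresponds to roughly 8 HS06 per processor. This sketch assumes 365-day years and uses the rounded slide figures; the per-processor HS06 rating it prints is derived, not a quoted benchmark result.

```python
HOURS_PER_YEAR = 24 * 365          # assumption: 365-day years

hs06_hours = 5.1e6                 # ~5.1 million HS06 hours (slide figure)
processor_years = 72               # single Intel Xeon 2.5 GHz equivalent
jobs = 193_434

processor_hours = processor_years * HOURS_PER_YEAR
implied_hs06_per_core = hs06_hours / processor_hours
avg_hs06_hours_per_job = hs06_hours / jobs

print(f"{processor_hours:,} processor-hours")              # 630,720 processor-hours
print(f"~{implied_hs06_per_core:.1f} HS06 per processor")  # ~8.1 HS06 per processor
print(f"~{avg_hs06_hours_per_job:.1f} HS06-hours per job") # ~26.4 HS06-hours per job
```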

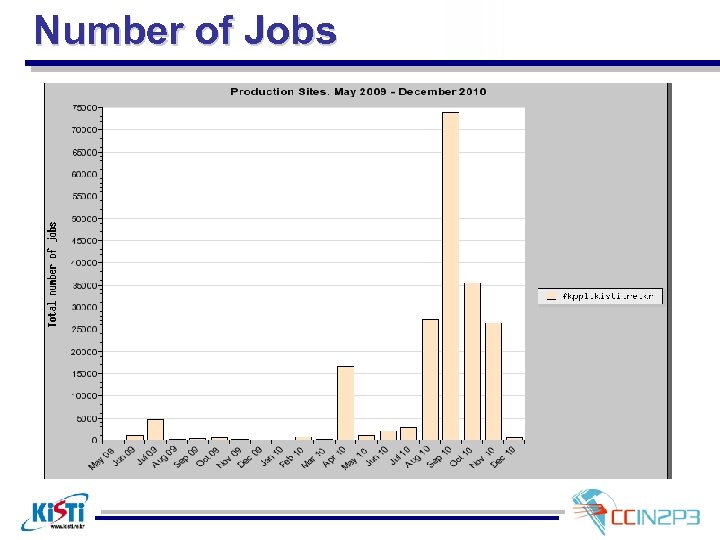

Number of Jobs

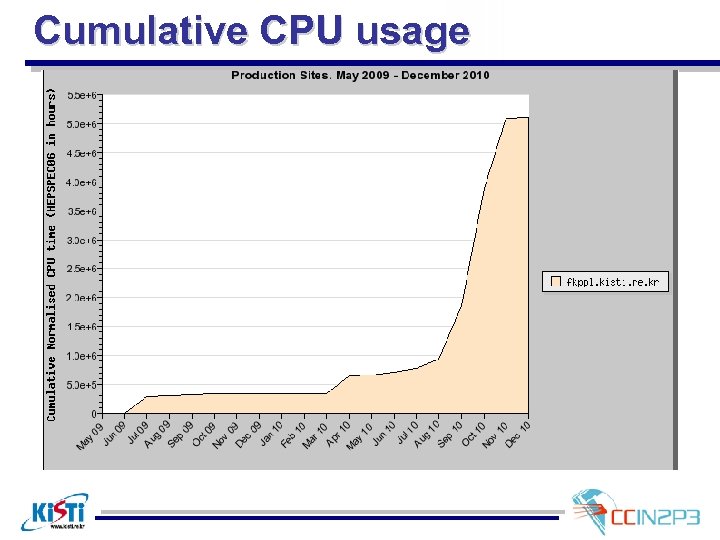

Cumulative CPU usage

User Support
§ FKPPL VO wiki site: http://esgtech.springnote.com/pages/4262263
§ User accounts on the UI: 104 user accounts have been created
§ FKPPL VO membership registration: 70 users have registered as FKPPL VO members

Grid Training (1/2)
§ In February 2010, we organized the Geant4 and Grid Tutorial 2010 for the Korean medical physics community
  § Co-hosted by KISTI and NCC
  § About 34 participants from major hospitals in Korea
  § About 20 new users joined the FKPPL VO

Grid Training (2/2)
§ "2010 Summer Training Course on Geant4, GATE and Grid Computing", held in Seoul in July 2010
  § Co-hosted by KISTI and NCC
  § About 50 participants from about 20 institutes in Korea

Application Porting Support on FKPPL VO
§ Deployment of Geant4 applications
  § Used extensively by the National Cancer Center in Korea to carry out compute-intensive simulations for cancer treatment planning
  § In collaboration with the National Cancer Center in Korea
§ Deployment of two-color QCD (Quantum ChromoDynamics) simulations in theoretical physics
  § Several hundred to several thousand QCD jobs must be run on the Grid, each taking about 10 days
  § In collaboration with Prof. Seyong Kim of Sejong University

User Community Support
§ Sejong University: porting of two-color QCD (Quantum ChromoDynamics) simulations to the Grid and large-scale execution on it
§ National Cancer Center: porting of Geant4 simulations to the Grid for treatment planning
§ KAIST: FKPPL VO used as a testbed for a grid and distributed computing course in the Computer Science Department
§ East-West Neo Medical Center, Kyung Hee University: porting of Geant4 simulations to the Grid
§ Ewha Womans University: porting of GATE applications to the Grid

Deployment of QCD Simulations on the FKPPL VO

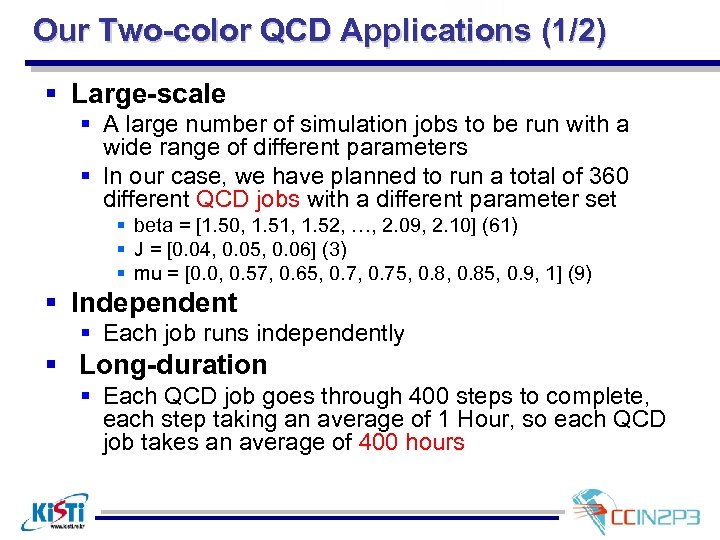

Our Two-color QCD Applications (1/2)
§ Large-scale
  § A large number of simulation jobs must be run over a wide range of parameters
  § In our case, we planned to run a total of 360 QCD jobs, each with a different parameter set
    § beta = [1.50, 1.51, 1.52, …, 2.09, 2.10] (61)
    § J = [0.04, 0.05, 0.06] (3)
    § mu = [0.0, 0.57, 0.65, 0.75, 0.85, 0.9, 1] (9)
§ Independent
  § Each job runs independently
§ Long-duration
  § Each QCD job goes through 400 steps to complete, with each step taking 1 hour on average, so each QCD job takes about 400 hours on average
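The parameter scan can be enumerated as a Cartesian product of the three parameter lists, one independent job per combination. In this sketch the beta grid is generated from its stated range and step, while the mu list contains only the seven values shown on the slide (which states nine); with nine mu values the product would be 61 × 3 × 9 = 1,647, the number of runs reported for the production campaign. The function and variable names are illustrative.

```python
from itertools import product

# beta = 1.50, 1.51, ..., 2.10 (61 values); round() removes float drift.
betas = [round(1.50 + 0.01 * i, 2) for i in range(61)]
js = [0.04, 0.05, 0.06]
# Only the mu values visible on the slide; the slide states 9 in total.
mus = [0.0, 0.57, 0.65, 0.75, 0.85, 0.9, 1.0]

def parameter_sets(betas, js, mus):
    """Yield one independent job configuration per (beta, J, mu) combination."""
    for beta, j, mu in product(betas, js, mus):
        yield {"beta": beta, "J": j, "mu": mu}

jobs = list(parameter_sets(betas, js, mus))
print(len(betas), betas[0], betas[-1])  # 61 1.5 2.1
print(len(jobs))                        # 1281 = 61 * 3 * 7
print(jobs[0])                          # {'beta': 1.5, 'J': 0.04, 'mu': 0.0}
```

Because every configuration is independent, each dictionary can be turned into its own grid job with no coordination between them.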

Our Two-color QCD Applications (2/2)
§ Need a computing facility to run a large number of jobs
  § The FKPPL VO provides enough computing resources to run all 360 QCD jobs concurrently
§ Need a grid tool to manage such a large number of jobs on the Grid without having to know the details of the underlying Grid
  § Ganga seems appropriate as a tool for managing such a large number of jobs on the Grid

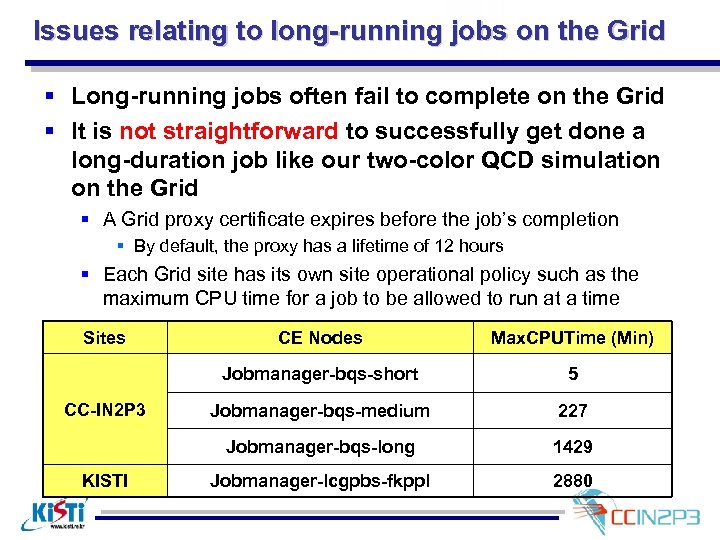

Issues relating to long-running jobs on the Grid
§ Long-running jobs often fail to complete on the Grid
  § It is not straightforward to successfully complete a long-duration job like our two-color QCD simulation on the Grid
§ A Grid proxy certificate expires before the job completes
  § By default, the proxy has a lifetime of 12 hours
§ Each Grid site has its own operational policy, such as the maximum CPU time a job is allowed to use in a single run

| Site | CE nodes | Max. CPU time (min) |
|---|---|---|
| CC-IN2P3 | jobmanager-bqs-short | 5 |
| CC-IN2P3 | jobmanager-bqs-medium | 227 |
| CC-IN2P3 | jobmanager-bqs-long | 1429 |
| KISTI | jobmanager-lcgpbs-fkppl | 2880 |
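Combining the 1-hour average step with the per-queue Max. CPU time limits listed above shows why a 400-step job cannot finish in a single run, and roughly how many submissions each queue would need. A minimal sketch, assuming every step costs exactly the 1-hour average in CPU time and that only whole steps count; real step times vary and wall-clock limits are ignored.

```python
import math

STEPS = 400              # steps per two-color QCD job
STEP_MINUTES = 60        # average duration of one step
PROXY_HOURS = 12         # default proxy lifetime

# Max. CPU time (minutes) per queue, as listed for each site.
queues = {
    ("CC-IN2P3", "jobmanager-bqs-short"): 5,
    ("CC-IN2P3", "jobmanager-bqs-medium"): 227,
    ("CC-IN2P3", "jobmanager-bqs-long"): 1429,
    ("KISTI", "jobmanager-lcgpbs-fkppl"): 2880,
}

def runs_needed(max_cpu_minutes):
    """Number of submissions needed to finish all steps, or None if impossible."""
    steps_per_run = max_cpu_minutes // STEP_MINUTES   # whole steps that fit
    if steps_per_run == 0:
        return None                                   # not even one step fits
    return math.ceil(STEPS / steps_per_run)

for (site, queue), limit in queues.items():
    print(site, queue, runs_needed(limit))  # None, 134, 18, 9 in table order

total_hours = STEPS * STEP_MINUTES / 60
print("proxy lifetimes per job:", math.ceil(total_hours / PROXY_HOURS))  # 34
```

Even the most generous queue needs about nine submissions per job, and the job outlives roughly 34 default proxy lifetimes, which motivates application-level checkpointing.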

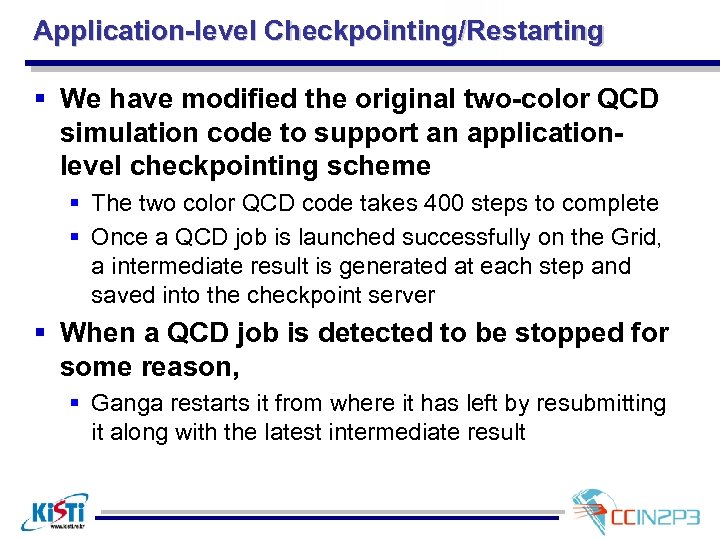

Application-level Checkpointing/Restarting
§ We modified the original two-color QCD simulation code to support an application-level checkpointing scheme
  § The two-color QCD code takes 400 steps to complete
  § Once a QCD job has been launched successfully on the Grid, an intermediate result is generated at each step and saved to the checkpoint server
§ When a QCD job is detected to have stopped for any reason,
  § Ganga restarts it from where it left off by resubmitting it along with the latest intermediate result
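The checkpoint/restart scheme can be sketched as a step loop that saves its state after every step and, on start-up, resumes from the latest saved state. Here the checkpoint server is stood in for by an in-memory dictionary and the physics update is a placeholder; `CheckpointStore`, `advance`, `run_job` and the simulated failure are hypothetical names, not the actual QCD code or the Ganga API.

```python
class CheckpointStore:
    """In-memory stand-in for the checkpoint server (hypothetical)."""
    def __init__(self):
        self._latest = {}

    def save(self, job_id, step, state):
        self._latest[job_id] = (step, state)

    def load(self, job_id):
        # Fresh jobs start at step 0 with an initial state.
        return self._latest.get(job_id, (0, 0.0))

def advance(state):
    """Placeholder for one simulation step (e.g. one hour of QCD updates)."""
    return state + 1.0

def run_job(job_id, store, total_steps, fail_at=None):
    """Run from the latest checkpoint; return True if all steps completed."""
    step, state = store.load(job_id)
    while step < total_steps:
        if step == fail_at:
            return False                 # simulate proxy expiry or queue kill
        state = advance(state)
        step += 1
        store.save(job_id, step, state)  # intermediate result after each step
    return True

store = CheckpointStore()
print(run_job("qcd-001", store, total_steps=400, fail_at=150))  # False
print(store.load("qcd-001")[0])                                 # 150
print(run_job("qcd-001", store, total_steps=400))               # True
print(store.load("qcd-001"))                                    # (400, 400.0)
```

The second call plays the role of the resubmission: it loses no completed work because it starts from step 150 rather than step 0.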

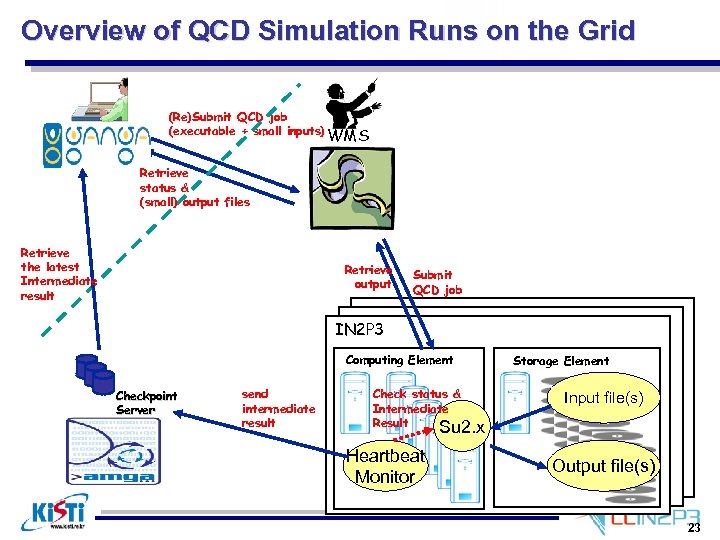

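Overview of QCD Simulation Runs on the Grid
[Diagram: QCD simulation workflow with checkpointing]
§ The QCD job (executable + small inputs) is (re)submitted to the WMS, which forwards it to a Computing Element (IN2P3)
§ The running job sends its intermediate result to the Checkpoint Server
§ The Heartbeat Monitor (su2.x) checks the job status and the intermediate results
§ On resubmission, the latest intermediate result is retrieved from the Checkpoint Server
§ Input and output files are read from and written to the Storage Element; status and (small) output files are retrieved through the WMS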
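The heartbeat monitor's role can be sketched as periodically comparing each job's last reported intermediate result with a timeout and flagging silent, unfinished jobs for resubmission from their latest step. The timeout value, the `JobRecord` layout and the `stalled_jobs` helper are assumptions for illustration; the slides do not specify how the monitor decides that a job has stopped.

```python
from dataclasses import dataclass

@dataclass
class JobRecord:
    job_id: str
    last_heartbeat: float   # time of last intermediate result (seconds)
    last_step: int          # latest step stored on the checkpoint server
    total_steps: int = 400

def stalled_jobs(records, now, timeout):
    """Return unfinished jobs whose last heartbeat is older than `timeout`."""
    return [
        r for r in records
        if r.last_step < r.total_steps and now - r.last_heartbeat > timeout
    ]

TIMEOUT = 2 * 3600   # assumption: 2 h of silence, about two missed 1-hour steps
now = 100_000.0
records = [
    JobRecord("qcd-001", last_heartbeat=now - 600, last_step=120),   # healthy
    JobRecord("qcd-002", last_heartbeat=now - 9000, last_step=87),   # silent
    JobRecord("qcd-003", last_heartbeat=now - 9000, last_step=400),  # finished
]

for r in stalled_jobs(records, now, TIMEOUT):
    # In the workflow above this would trigger a resubmission that
    # restarts the job from r.last_step.
    print(f"resubmit {r.job_id} from step {r.last_step}")  # resubmit qcd-002 from step 87
```

Excluding finished jobs matters: a completed run also stops sending heartbeats, and must not be resubmitted.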

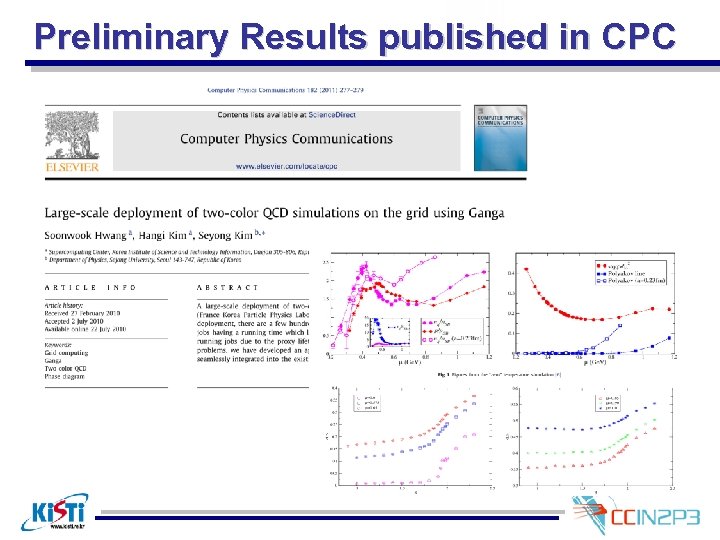

Preliminary Results published in CPC

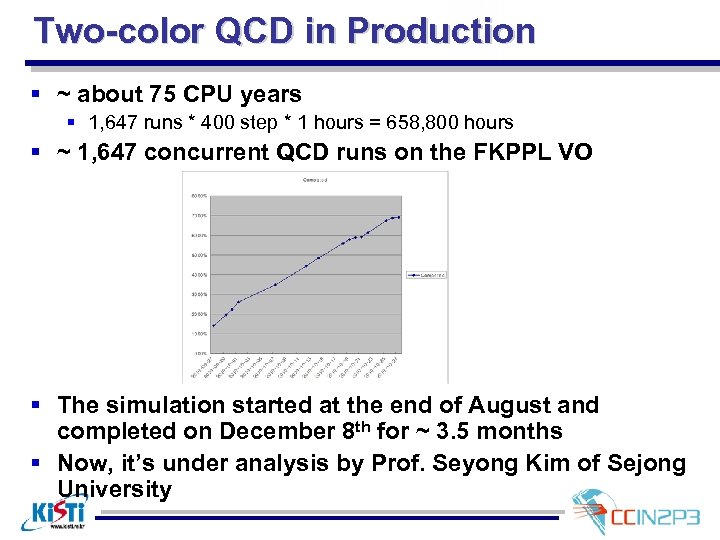

Two-color QCD in Production
§ About 75 CPU years
  § 1,647 runs × 400 steps × 1 hour = 658,800 hours
§ ~1,647 concurrent QCD runs on the FKPPL VO
§ The simulation started at the end of August and completed on December 8, taking ~3.5 months
§ The results are now being analyzed by Prof. Seyong Kim of Sejong University
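The CPU-year figure follows directly from the run count, which matches the parameter counts stated earlier (61 beta × 3 J × 9 mu values), times 400 steps of about 1 hour each. The check below assumes 365-day years.

```python
HOURS_PER_YEAR = 24 * 365                 # assumption: 365-day years

runs = 61 * 3 * 9                         # beta x J x mu parameter sets
steps_per_run = 400
hours_per_step = 1

cpu_hours = runs * steps_per_run * hours_per_step
cpu_years = cpu_hours / HOURS_PER_YEAR

print(runs)                # 1647
print(f"{cpu_hours:,}")    # 658,800
print(f"{cpu_years:.1f}")  # 75.2
```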

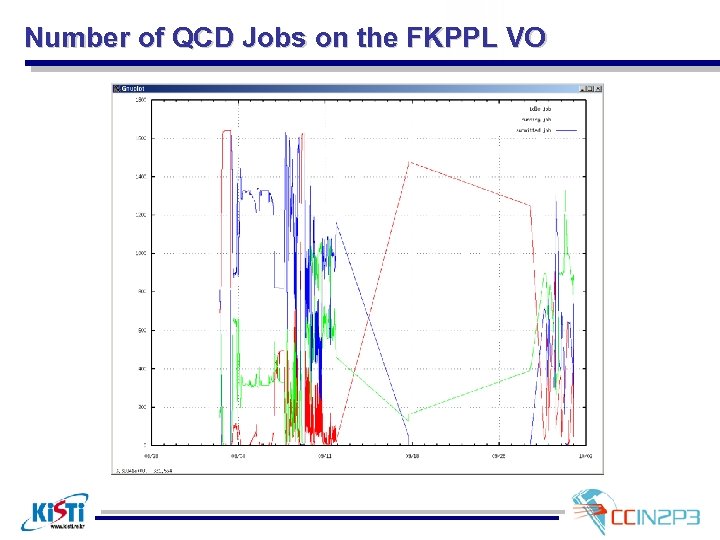

Number of QCD Jobs on the FKPPL VO

Towards France-Asia VO

FJKPPL Workshop on Grid Computing
§ The FJPPL/FKPPL joint workshop on Grid computing was held at KEK on December 20-22
  § Hosted by KEK
§ CC-IN2P3, KEK and KISTI agreed to move forward towards a France-Asia VO

Perspectives for France-Asia VO
§ Computing infrastructure
  § Based on gLite middleware
  § Computing centers offering resources: CC-IN2P3, KEK, KISTI; possibly IHEP in China and IOIT in Vietnam
§ Data infrastructure
  § iRODS (Integrated Rule-Oriented Data System)
  § Both KEK and CC-IN2P3 have some expertise in operating and managing the iRODS service and might be able to provide it in the future
§ Applications
  § It is important to have applications that produce scientific results
  § Current applications include:
    § In silico docking applications
    § QCD simulations
    § Geant4/GATE applications

Perspectives for France-Asia VO
§ User communities
  § So far, our user communities come mainly from Korea
  § We might be able to attract communities from Japan and Vietnam
    § Geant4 communities (Japan)
    § In silico drug discovery communities (Vietnam)
    § ?? (China)
§ High-level tools/services
  § It is important to provide users with easy-to-use high-level tools
  § Tools on which we have some expertise: WISDOM, Ganga, JSAGA, DIRAC, ??
§ Training
  § To promote awareness of the France-Asia VO, it is important to organize tutorials on gLite middleware, high-level tools and applications

Thank you for your attention
