- Number of slides: 64

Text to speech to text: a third orality? Lawrie Hunter, Kochi University of Technology, http://www.core.kochi-tech.ac.jp/hunter

Current state: Fragmentation of knowledge as a result of the ongoing creation of research niches. A voracious, yet protective and covetous, knowledge industry.

Current state: Isn’t CALL just a subset of User Experience (UX)?

Hunter (2006)*: Learners are evolving. URGENT: just-in-time learner sociology. URGENT: near-instant learner profiling. Upgrade: Learner => USER. User Experience (UX) practice: UZANTO’s MindCanvas: user profiling for a large target group in a matter of hours; RUMM: rapid user mental modelling; GEMS: game emulation. This may be very fruitfully adapted to the foundational explorations leading to CALL decision-making. *The expanding palette: Emergent CALL paradigms (invited virtual presentation), Antwerp CALL 2006. http://www.core.kochi-tech.ac.jp/hunter/professional/CALLparadigms/index.html

Now text-to-speech and speech-to-text (T2S2T) software have become truly usable in a very practical sense. This blurs the line between speech and text in a very immediate way. http://www.nextuptech.com/ http://www.nuance.com/naturallyspeaking/

Usable T2S2T: No more typing. No more reading. No more hands. Composition by speaking... ooh! Information acquisition by listening... ahh! If we do this, we will be in a new orality.

What?? Audio is lame: VIDEO is the game. We are in the YouTube era. Get a Second Life!

T2S will be fully usable in 2 (or x) years; we must assume that future and shift our place of work there.

QUESTION: For second language learning systems development, is audio going out?

TODAY: A search for principles governing the use of voice in CALL

Investigation of voice and cognition

Walter Ong (1982), Orality and Literacy: The Technologizing of the Word: PRIMARY ORAL cultures (no system of writing) think differently from CHIROGRAPHIC (writing-based) cultures.

Walter Ong (1982), Orality and Literacy: The Technologizing of the Word: “Electronic media (e.g. telephone, radio and television) brought about a second orality” [paraphrase]. “Both primary and secondary oralities afford a strong sense of membership in a group” [paraphrase]. BUT secondary orality is “essentially a more deliberate and self-conscious orality, based permanently on the use of writing and print,” and produces much larger groups.

Kathleen Welch* rejects claims that Ong posits a mutually exclusive, competitive, reductive orality-literacy divide. Welch argues that Ong emphasizes a mingling of these types of consciousness and the tenacity of established forms as new ones appear. *Welch, K. (1999) Electric Rhetoric: Classical Rhetoric, Oralism, and a New Literacy. MIT Press. p. 59

Welch argues that TV's ubiquity has resulted in a new, electronic literacy. We shall not go there today.

Workable T2S2T promises to change the nature of cognitive load constraints in text production and decoding, and hence in language learning tasks.

Workable T2S2T: There is now S2T (Dragon Voice) for Indian English*, British English... but not yet for Japanese English. (Ever?) So the tech is there for computers to decode human speech better than humans can...? *http://labnol.blogspot.com/2007/01/dragon-naturallyspeaking-9-speech.html

HOWEVER, we don’t know much about how orality works. Perhaps that is because orality is so ingrained in us.

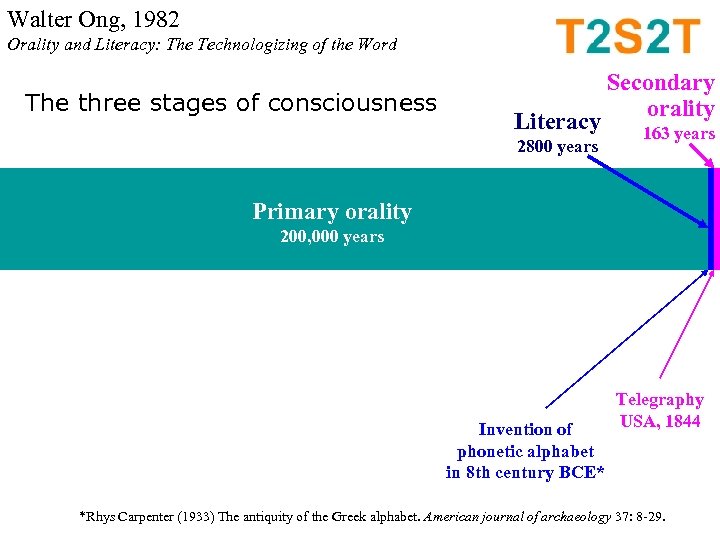

Walter Ong (1982), Orality and Literacy: The Technologizing of the Word. The three stages of consciousness:
- Primary orality: 200,000 years
- Literacy: 2800 years (since the invention of the phonetic alphabet in the 8th century BCE*)
- Secondary orality: 163 years (since telegraphy, USA, 1844)

*Rhys Carpenter (1933) The antiquity of the Greek alphabet. American Journal of Archaeology 37: 8–29.
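The spans on this slide can be checked with simple arithmetic. The sketch below is not from Ong; it assumes a reference year of 2007 (the year of this talk) and takes 800 BCE as a representative date for the 8th-century BCE alphabet. Because the calendar has no year 0, a span running from a BCE year into the CE era is one year shorter than the naive sum.

```python
# Rough check of the timeline spans on the slide.
# Assumptions (not from the source): reference year 2007, and 800 BCE as a
# representative date for the 8th-century BCE phonetic alphabet.
REFERENCE_YEAR_CE = 2007         # assumed year of the talk
ALPHABET_YEAR_BCE = 800          # assumed representative date
TELEGRAPHY_YEAR_CE = 1844        # from the slide: telegraphy, USA, 1844
PRIMARY_ORALITY_YEARS = 200_000  # from the slide


def years_from_bce(year_bce: int, reference_ce: int) -> int:
    """Elapsed years from a BCE year to a CE year (the calendar has no year 0)."""
    return year_bce + reference_ce - 1


def years_between_ce(start_ce: int, reference_ce: int) -> int:
    """Elapsed years between two CE years."""
    return reference_ce - start_ce


literacy_years = years_from_bce(ALPHABET_YEAR_BCE, REFERENCE_YEAR_CE)
secondary_orality_years = years_between_ce(TELEGRAPHY_YEAR_CE, REFERENCE_YEAR_CE)
literacy_share = 100 * literacy_years / PRIMARY_ORALITY_YEARS

print(f"Literacy: about {literacy_years} years")
print(f"Secondary orality: {secondary_orality_years} years")
print(f"Literacy as a share of primary orality: {literacy_share:.1f}%")
```

Under these assumptions the script reports about 2806 years of literacy (rounded to 2800 on the slide) and 163 years of secondary orality; literacy then covers roughly 1.4% of the 200,000-year span of primary orality.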

WIRED FOR SPEECH*: Orality has been part of human life for a long time. After 200,000 years of evolution, “...humans have become voice-activated, with brains that are wired to equate voices with people and to act quickly on that information.” *Nass, C. & Brave, S. (2005) Wired for speech. MIT Press.

Writing: ‘a secondary modelling system’ (Lotman, J., trans. R. Vroon (1977) The structure of the artistic text. Michigan Slavic Studies, 7). Writing can never exist without orality (p. 8). Speeches that were studied as rhetoric could only be studied if they were transcribed. Ong, W. (1982) Orality and literacy: The technologizing of the word. 1997 reprint: Routledge.

Writing: ‘a secondary modelling system’ (Lotman, J., trans. R. Vroon (1977) The structure of the artistic text. Michigan Slavic Studies, 7). “...to this day no concepts have yet been formed for effectively, let alone gracefully, conceiving of oral art as such without reference, conscious or unconscious, to writing.” (p. 10) Ong, W. (1982) Orality and literacy: The technologizing of the word. 1997 reprint: Routledge.

Psychodynamics of orality: “...you know what you can recall.” Ong, W. (1982) Orality and literacy: The technologizing of the word. 1997 reprint: Routledge.

Psychodynamics of orality: Pythagoras and the acousmatics. The term acousmatic dates back to Pythagoras, who is believed to have tutored his students from behind a screen so as not to let his presence distract them from the content of his lectures. (wikipedia.org, May 20, 2007: edited from Chion, M. (1994). Audio-Vision: Sound on Screen. Columbia University Press.)

Psychodynamics of orality: Pythagoras and the acousmatics. In cinema, acousmatic sound is sound one hears without seeing an originating cause: an invisible sound source. Radio, phonograph and telephone, all of which transmit sounds without showing the source cause, are acousmatic media. (wikipedia.org, May 20, 2007: edited from Chion, M. (1994). Audio-Vision: Sound on Screen. Columbia University Press.)

Psychodynamics of orality: Acousmatic sound is ubiquitous in CALL. Aren’t there situations where acousmatic sound is appropriate, and situations where it is not?

Orality and writing production. Kellogg: sentence production demands verbal working memory. “Orthographic as well as phonological representations must be activated for written spelling.” (Bonin, Fayol, & Gombert, 1997) “Verbal WM is necessary to maintain representations during grammatical, phonological, and orthographic encoding.” (Levy & Marek, 1999; Chenoweth & Hayes, 2001; Kellogg, Olive, & Piolat, 2006) Kellogg, R. (2006) Training writing skills: A cognitive developmental perspective. EARLI SIG Writing 2006, Antwerp. http://webhost.ua.ac.be/sigwriting2006/Kellogg_SigWriting2006.pdf

Audio sources in life: John Thackara* tells of Ivan Illich’s finding that in the 1930s, 9 out of 10 words a man heard by age 20 were spoken directly to him; in the 1970s, 9 out of 10 words a man heard by age 20 were spoken through a loudspeaker. Illich (1982): “Computers are doing to communication what fences did to pastures and what cars did to streets.” *Book: In the Bubble; blog: http://www.doorsofperception.com/

We are innately orate. Human beings “can quickly distinguish one person’s voice from another” (p. 3):
- even in the womb we can distinguish our mother’s voice from that of another.*
- a few days after birth, newborns prefer their mother’s voice to that of others, and can distinguish one unfamiliar voice from another.*
- by 8 months of age we can attend to one voice even when another is speaking at the same time.

*We know these things from differing heartbeat responses. Nass, C. & Brave, S. (2005) Wired for speech. MIT Press.

Humans: experts at extracting social information from speech. Word choice carries social information. UX work makes choices such as where to place blame: 1. “Speak up.” 2. “I’m sorry, I didn’t catch that.” 3. “We seem to have a bad connection. Could you please repeat that?” Nass, C. & Brave, S. (2005) Wired for speech. MIT Press.

Humans: experts at extracting social information from speech. Word choice carries social information. UX work makes choices such as voice quality. Booming deep voice: “Could I possibly ask you if you wouldn’t mind doing a tiny favor?” High-pitched, soft voice: “Pick up that shovel and start digging!” Nass, C. & Brave, S. (2005) Wired for speech. MIT Press.

Humans: automatically react socially to ‘voice’. “...the conscious knowledge that speech can have a non-human origin is not enough for the brain to overcome the historically appropriate activation of social relationships by voice [even when voice quality is low and speech understanding is poor].” Nass, C. & Brave, S. (2005) Wired for speech. MIT Press.

Interiority of sound: “...in an oral noetic economy, mnemonic serviceability is sine qua non...” (p. 70). In other words, oral information must be arranged in a certain way [a visual way] if it is to be remembered. Ong, W. (1982) Orality and literacy: The technologizing of the word. 1997 reprint: Routledge.

Incorporating interiority The eye cannot perceive interiority, only surfaces. Taste and smell are not much help in registering interiority/exteriority. Touch can detect interiority but in the process damages it. Hearing can register interiority without violating it. Sight isolates, sound incorporates. Ong, W. (1982) Orality and literacy: The technologizing of the word. 1997 reprint: Routledge.

Incorporating interiority

Oral memory: In primary oral cultures, there is a need for an aide-mémoire:
- heavily rhythmic speech
- balanced patterns
- epithetic expressions
- formulary expressions
- standard thematic settings

Ong, W. (1982) Orality and literacy: The technologizing of the word. 1997 reprint: Routledge. p. 33

Oral memory: In primary oral cultures, thought and expression are additive rather than subordinative. Ong, W. (1982) Orality and literacy: The technologizing of the word. 1997 reprint: Routledge. p. 37 ff.

Tentative observations based on the exploratory hands-on experience of second language users: Innisfree 1, Innisfree 2, Innisfree 3, Coney Island 1, Coney Island 2, Coney Island 3. PhD technical writing class, KUT, May 24, 2007

Tentative observations based on the exploratory hands-on experience of second language users. PhD technical writing class, KUT, May 24, 2007

Tentative observations based on the exploratory hands-on experience of second language users. Self-reported estimates of comprehension of samples. PhD technical writing class, KUT, May 24, 2007

How might language learning support systems be influenced by the new T2S2T technological reality?

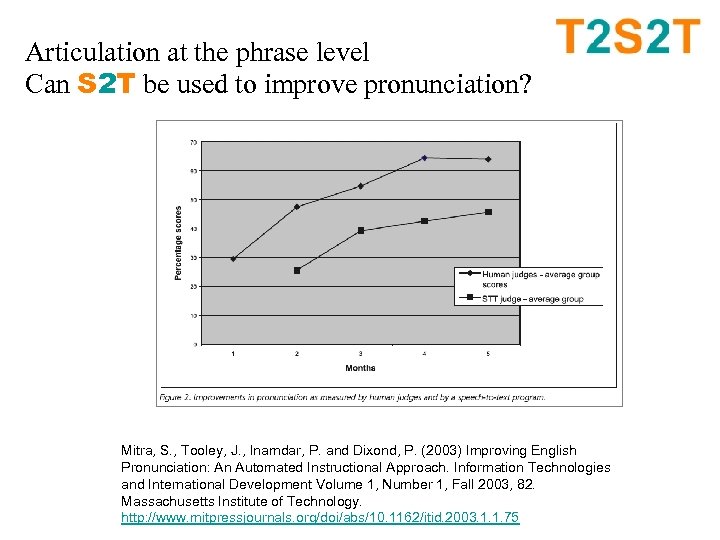

Articulation at the phrase level. In the learner’s awareness, S2T software foregrounds articulation; T2S foregrounds intonation, blending, and pausing.

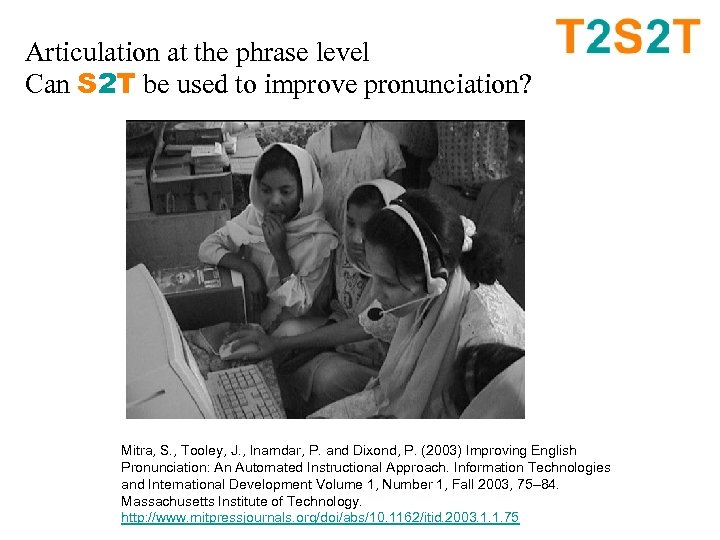

Articulation at the phrase level: Can S2T be used to improve pronunciation? Mitra, S., Tooley, J., Inamdar, P. and Dixon, P. (2003) Improving English Pronunciation: An Automated Instructional Approach. Information Technologies and International Development, Volume 1, Number 1, Fall 2003, 75–84. Massachusetts Institute of Technology. http://www.mitpressjournals.org/doi/abs/10.1162/itid.2003.1.1.75

Articulation at the phrase level: Can S2T be used to improve pronunciation? Mitra, S., Tooley, J., Inamdar, P. and Dixon, P. (2003) Improving English Pronunciation: An Automated Instructional Approach. Information Technologies and International Development, Volume 1, Number 1, Fall 2003, p. 82. Massachusetts Institute of Technology. http://www.mitpressjournals.org/doi/abs/10.1162/itid.2003.1.1.75
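One way to make the question above concrete: if an S2T engine’s transcript of a learner reading a target phrase is available as plain text, the target words that the engine did not reproduce can be flagged for articulation practice. The sketch below is a hypothetical helper (`flag_mismatched_words`), not the method of Mitra et al.; it uses only Python’s standard `difflib` and `re` modules and assumes no particular recognizer or API. A flagged word may reflect recognizer error rather than learner pronunciation, so the output is a prompt for attention, not a verdict.

```python
import difflib
import re


def words(text: str) -> list[str]:
    """Lower-case word tokens, ignoring punctuation."""
    return re.findall(r"[a-z']+", text.lower())


def flag_mismatched_words(target: str, recognized: str) -> list[str]:
    """Return target words that the S2T transcript did not reproduce.

    `recognized` is assumed to be the plain-text output of some S2T engine;
    which engine produced it is outside the scope of this sketch.
    """
    target_words = words(target)
    recognized_words = words(recognized)
    matcher = difflib.SequenceMatcher(a=target_words, b=recognized_words, autojunk=False)
    flagged: list[str] = []
    for tag, i1, i2, _j1, _j2 in matcher.get_opcodes():
        # "replace" and "delete" spans cover target words missing from the transcript.
        if tag in ("replace", "delete"):
            flagged.extend(target_words[i1:i2])
    return flagged


if __name__ == "__main__":
    target = "I will arise and go now"
    heard = "I will arrive and go now"  # hypothetical transcript
    print(flag_mismatched_words(target, heard))
```

Here the sketch prints `['arise']`, because the transcript’s “arrive” replaced the target “arise”; comparing at the word level keeps the feedback at the phrase scale this slide is concerned with.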

The looming prospect of a text-reduced world: Specificity as a foreign language. E.g., University X web site: Japanese interview => English web site

T2S2T brings richness to materials design. T2S2T should imply that there will be a broad, instantaneous choice of interface with data. Aside from tangible choices of medium, other parameters demand attention:
- input density: number of communication objects per signal
- input complexity: degree of text reduction
- visual field richness: number of simultaneous signals
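The three parameters listed above can be recorded per screen of materials. The sketch below is one possible encoding, not a published scheme: the `Signal` type, its field names, and the choice to express text reduction as a 0-to-1 ratio of presented words to source words are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Signal:
    """One signal in a screen of materials (hypothetical structure)."""
    channel: str                # illustrative label, e.g. "narration" or "caption"
    communication_objects: int  # distinct items the signal carries
    source_words: int           # words in the unreduced source text
    presented_words: int        # words actually presented after reduction


def input_density(signal: Signal) -> int:
    """Input density: number of communication objects per signal."""
    return signal.communication_objects


def text_reduction(signal: Signal) -> float:
    """Input complexity as degree of text reduction: 0.0 = full text, 1.0 = no text."""
    if signal.source_words == 0:
        return 0.0
    return 1.0 - signal.presented_words / signal.source_words


def visual_field_richness(signals: list[Signal]) -> int:
    """Visual field richness: number of simultaneous signals."""
    return len(signals)


# Hypothetical screen: unreduced narration plus a heavily reduced caption.
screen = [
    Signal("narration", communication_objects=3, source_words=40, presented_words=40),
    Signal("caption", communication_objects=5, source_words=40, presented_words=10),
]

print("visual field richness:", visual_field_richness(screen))
for s in screen:
    print(s.channel, "density:", input_density(s), "reduction:", text_reduction(s))
```

Treating the narration as unreduced (0.0) and the caption as heavily reduced (0.75) is just one way to make “degree of text reduction” comparable across channels; a designer could equally count key phrases instead of words.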

Sometimes signals are:
1. complementary, e.g. Chang’s* sound track supplies one of many possible intonations for a hypertext
2. conflicting, e.g. a phone user in a movie theater
3. mutually irrelevant, e.g. Muzak vs. supermarket sale signs
4. channel competing, e.g. PowerPoint text and speech; mosquito buzz vs. TV images
5. internal-external conflicting, e.g. on-screen text back-checking during S2T writing

*http://www.yhchang.com

A cubist look at text and attention: Chang, Young-Hae. NIPPON.html. Here, is text so reduced as to be iconic? How is this parallel to sound objects? *http://www.yhchang.com

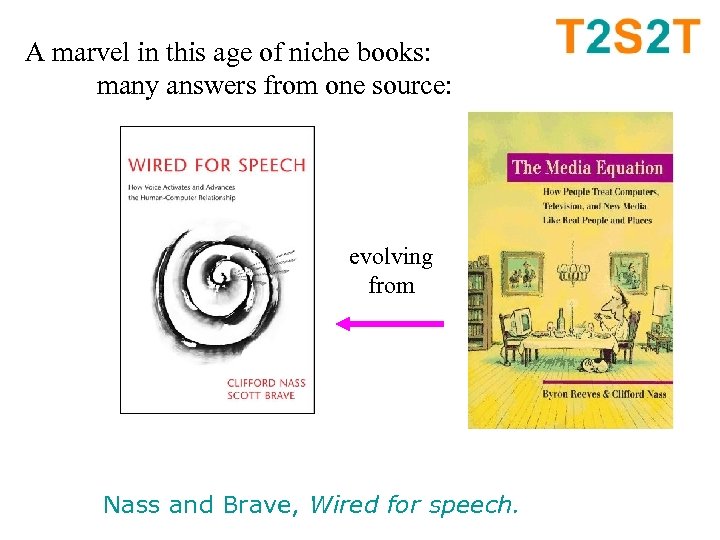

A marvel in this age of niche books: many answers from one source. Evolving from Nass and Brave, Wired for speech.

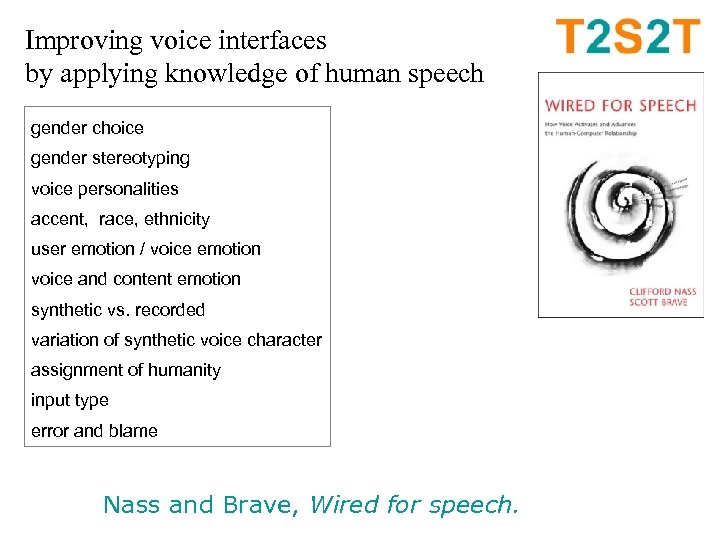

Improving voice interfaces by applying knowledge of human speech:
- gender choice
- gender stereotyping
- voice personalities
- accent, race, ethnicity
- user emotion / voice emotion
- voice and content emotion
- synthetic vs. recorded
- variation of synthetic voice character
- assignment of humanity
- input type
- error and blame

Emotion can direct users towards or away from an aspect of an interface. Emotion affects cognition, e.g. in vehicle driving support software. Finding: people find it easier and more natural to attend to voice emotions consistent with their own present emotions (p. 77).

Nass and Brave, Wired for speech.

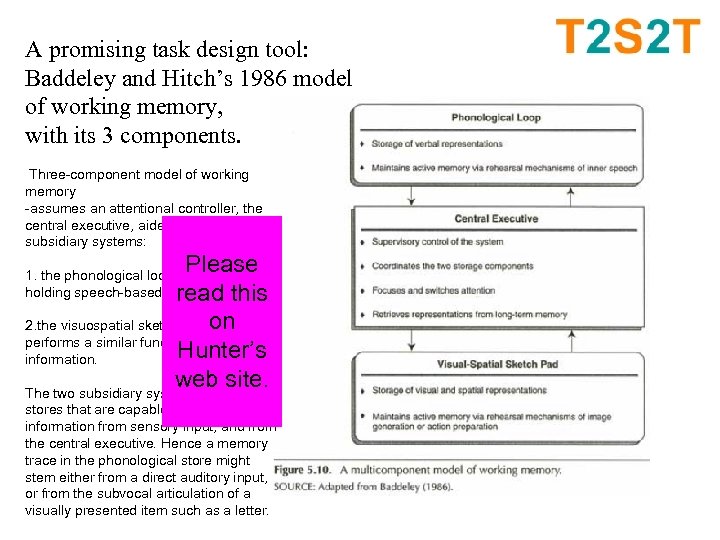

A promising task design tool: Baddeley and Hitch’s 1986 model of working memory, with its 3 components. The three-component model of working memory assumes an attentional controller, the central executive, aided by two subsidiary systems:
1. the phonological loop, capable of holding speech-based information, and
2. the visuospatial sketchpad, which performs a similar function for visual information.

The two subsidiary systems form active stores that are capable of combining information from sensory input and from the central executive. Hence a memory trace in the phonological store might stem either from a direct auditory input, or from the subvocal articulation of a visually presented item such as a letter. (Please read this on Hunter’s web site.)

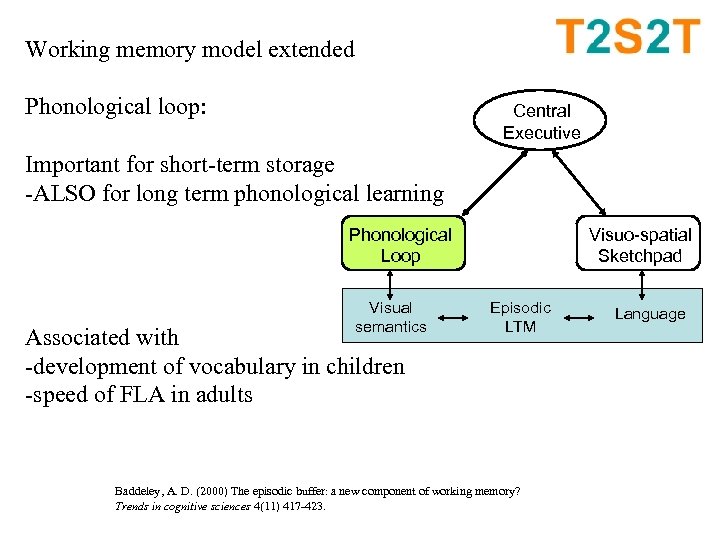

Working memory model extended

Phonological loop:
- important for short-term storage
- ALSO for long-term phonological learning
- associated with the development of vocabulary in children and the speed of FLA in adults

[Diagram: Central Executive; Phonological Loop, Visuo-spatial Sketchpad; Visual semantics, Episodic LTM, Language]

Baddeley, A. D. (2000). The episodic buffer: a new component of working memory? Trends in Cognitive Sciences, 4(11), 417-423.

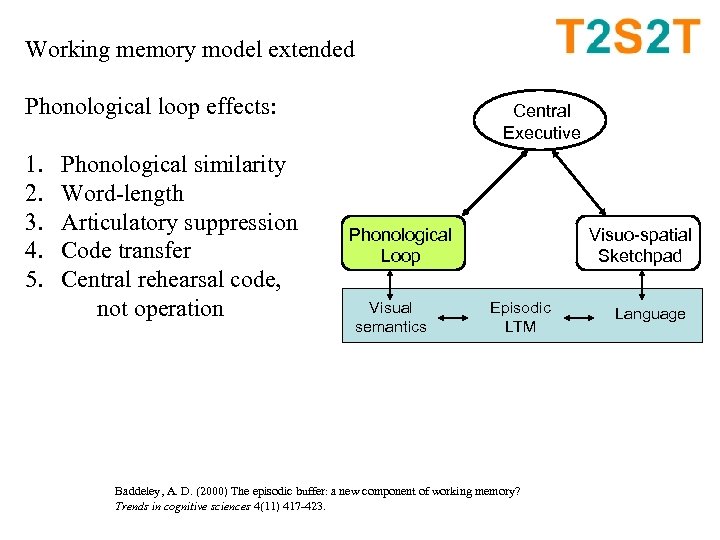

Working memory model extended

Phonological loop effects:
1. Phonological similarity
2. Word-length
3. Articulatory suppression
4. Code transfer
5. Central rehearsal code, not operation

[Diagram: Central Executive; Phonological Loop, Visuo-spatial Sketchpad; Visual semantics, Episodic LTM, Language]

Baddeley, A. D. (2000). The episodic buffer: a new component of working memory? Trends in Cognitive Sciences, 4(11), 417-423.
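Two of the effects listed here, word-length and articulatory suppression (which blocks code transfer of visually presented items into the loop), can be illustrated with a toy calculation. This is a hypothetical sketch, not a model from the source: it assumes the loop keeps only as many items as can be rehearsed within a fixed time window, and the 2000 ms window and the per-word articulation times are illustrative assumptions, not measured values. Suppression is modelled only for visual presentation, and phonological similarity is not modelled at all.

```python
def loop_span(durations_ms, presentation="auditory", suppressed=False, window_ms=2000):
    """Toy count of items the phonological loop can keep refreshed.

    Assumption (hypothetical): the loop holds only as many items as can be
    rehearsed within window_ms. Suppression is modelled only for visual input.
    """
    if presentation == "visual" and suppressed:
        # Articulatory suppression (repeating an irrelevant word aloud) blocks
        # subvocal recoding, so visually presented items never reach the loop.
        return 0
    total = 0
    count = 0
    for d in durations_ms:
        if total + d > window_ms:
            break  # word-length effect: longer items exhaust the window sooner
        total += d
        count += 1
    return count


short_words = [300] * 10  # hypothetical articulation times in milliseconds
long_words = [800] * 10
print(loop_span(short_words))  # 6
print(loop_span(long_words))   # 2
print(loop_span(short_words, presentation="visual", suppressed=True))  # 0
```

Integer milliseconds are used so the window comparison is exact; the numbers themselves only show the direction of each effect, not its real size.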

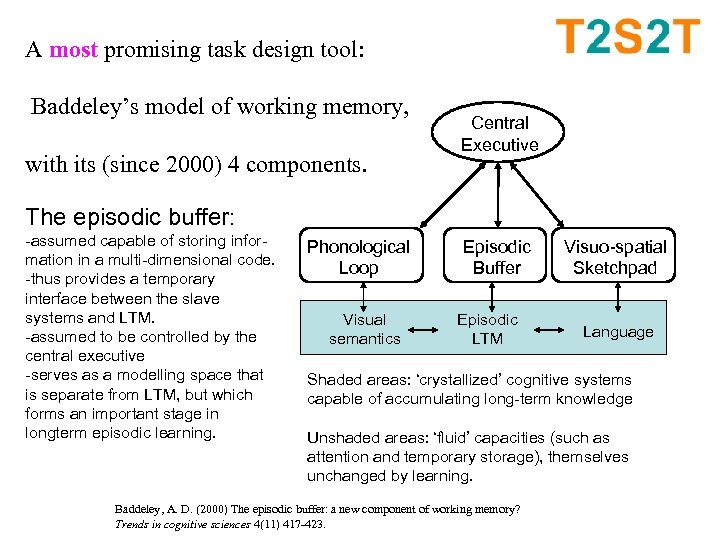

A most promising task design tool: Baddeley’s model of working memory, with its 4 components (since 2000).

The episodic buffer:
- is assumed capable of storing information in a multi-dimensional code
- thus provides a temporary interface between the slave systems and LTM
- is assumed to be controlled by the central executive
- serves as a modelling space that is separate from LTM, but which forms an important stage in long-term episodic learning

[Diagram: Central Executive; Phonological Loop, Episodic Buffer, Visuo-spatial Sketchpad; Visual semantics, Episodic LTM, Language]

Shaded areas: ‘crystallized’ cognitive systems capable of accumulating long-term knowledge. Unshaded areas: ‘fluid’ capacities (such as attention and temporary storage), themselves unchanged by learning.

Baddeley, A. D. (2000). The episodic buffer: a new component of working memory? Trends in Cognitive Sciences, 4(11), 417-423.
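The episodic buffer's role as a temporary, multi-dimensional interface between the slave systems and LTM can be sketched as follows. This is a hypothetical illustration, not Baddeley's specification: the field names (`sound`, `image`, `meaning`), the capacity of 4 episodes, and the `consolidate` step are assumptions chosen to mirror the slide's bullet points, and `bind()` simply stands in for control by the central executive.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Episode:
    # One bound chunk in a multi-dimensional code (hypothetical fields).
    sound: Optional[str] = None    # from the phonological loop
    image: Optional[str] = None    # from the visuo-spatial sketchpad
    meaning: Optional[str] = None  # retrieved from long-term memory


class EpisodicBuffer:
    """Temporary, limited-capacity store; bind() stands in for executive control."""

    def __init__(self, capacity: int = 4):
        # The capacity value is an assumption for illustration only.
        self.episodes = deque(maxlen=capacity)  # oldest episode drops out when full

    def bind(self, sound=None, image=None, meaning=None) -> Episode:
        episode = Episode(sound, image, meaning)
        self.episodes.append(episode)
        return episode


class EpisodicLTM:
    """Long-term store that accumulates what passes through the buffer."""

    def __init__(self):
        self.memories = []

    def consolidate(self, buffer: EpisodicBuffer) -> None:
        # Episodes are copied into LTM; the buffer's capacity itself does not change.
        self.memories.extend(buffer.episodes)


buffer = EpisodicBuffer(capacity=2)
buffer.bind(sound="neko", image="cat.png", meaning="cat")
buffer.bind(sound="inu", meaning="dog")
buffer.bind(image="tree.png")  # pushes out the oldest episode
ltm = EpisodicLTM()
ltm.consolidate(buffer)
```

The split between the fixed-size `deque` and the growing `memories` list loosely mirrors the slide's distinction between ‘fluid’ capacities and ‘crystallized’ long-term systems.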

Current state: Isn’t CALL just a subset of User Experience (UX)?

Thank you for your kind attention. Don’t hesitate to write to me. Lawrie Hunter, Kochi University of Technology, http://www.core.kochi-tech.ac.jp/hunter
