- Number of slides: 192

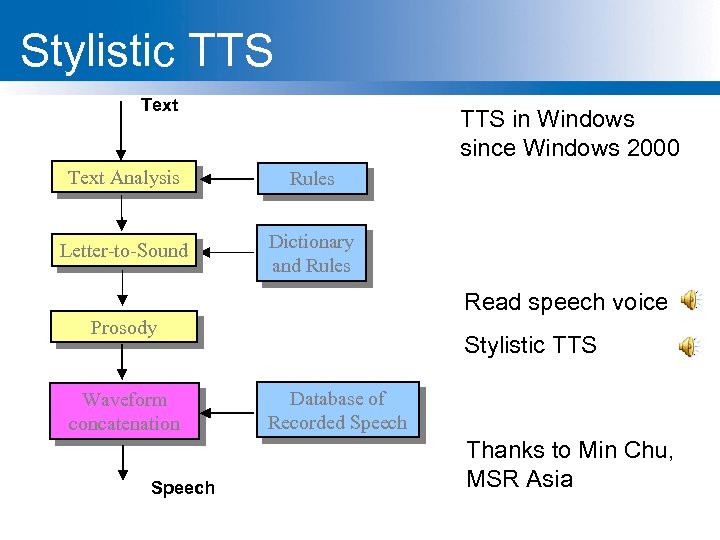

Text to Speech Systems (TTS) EE 516 Spring 2009 Alex Acero

Acknowledgments
- Thanks to Dan Jurafsky for a lot of slides
- Also thanks to Alan Black, Jennifer Venditti, Richard Sproat

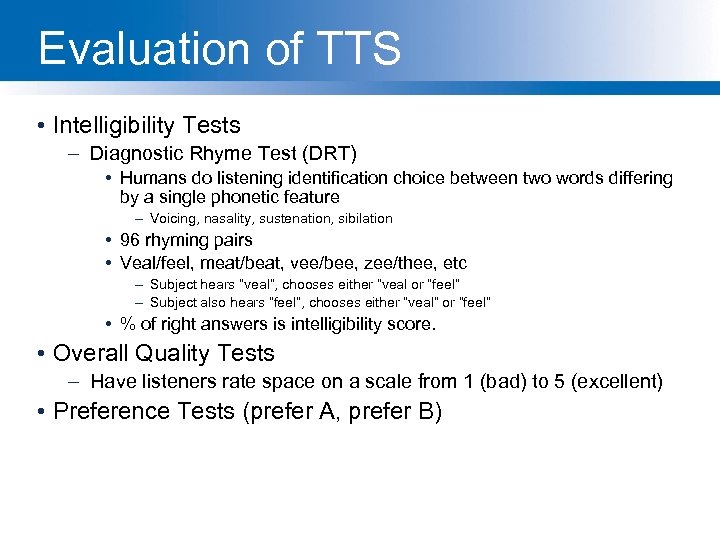

Outline
- History of TTS
- Architecture
- Text Processing
- Letter-to-Sound Rules
- Prosody
- Waveform Generation
- Evaluation

Dave Barry on TTS: “And computers are getting smarter all the time; scientists tell us that soon they will be able to talk with us. (By "they", I mean computers; I doubt scientists will ever be able to talk to us.)”

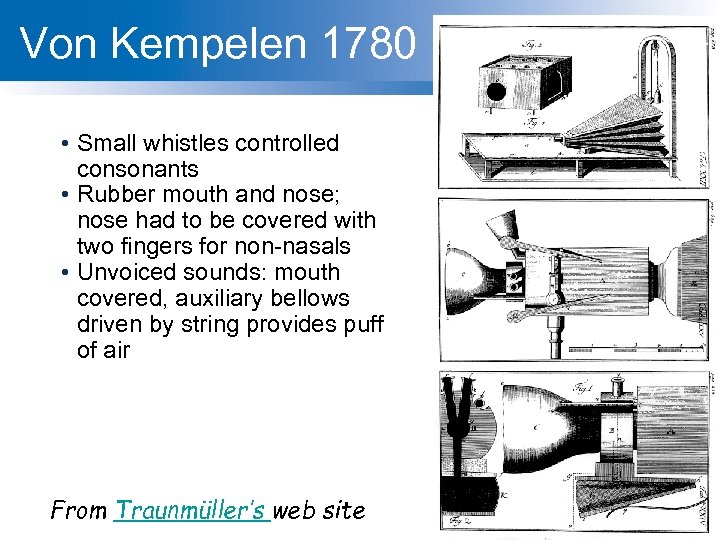

Von Kempelen 1780
- Small whistles controlled consonants
- Rubber mouth and nose; nose had to be covered with two fingers for non-nasals
- Unvoiced sounds: mouth covered, auxiliary bellows driven by string provides puff of air

From Traunmüller’s web site

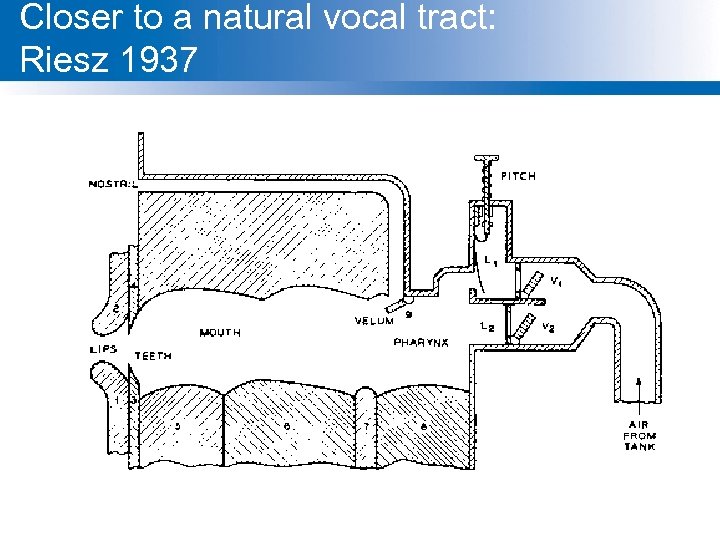

Closer to a natural vocal tract: Riesz 1937

The 1936 UK Speaking Clock (from http://web.ukonline.co.uk/freshwater/clocks/spkgclock.htm)

The UK Speaking Clock
- July 24, 1936
- Photographic storage on 4 glass disks
  - 2 disks for minutes, 1 for hour, 1 for seconds
  - Other words in the sentence distributed across the 4 disks, so all 4 used at once
- Voice of “Miss J. Cain”

A technician adjusts the amplifiers of the first speaking clock (from http://web.ukonline.co.uk/freshwater/clocks/spkgclock.htm)

Homer Dudley’s VODER 1939
- Synthesizing speech by electrical means
- 1939 World’s Fair
- Manually controlled through a complex keyboard
- Operator training was a problem
- 1939 vocoder

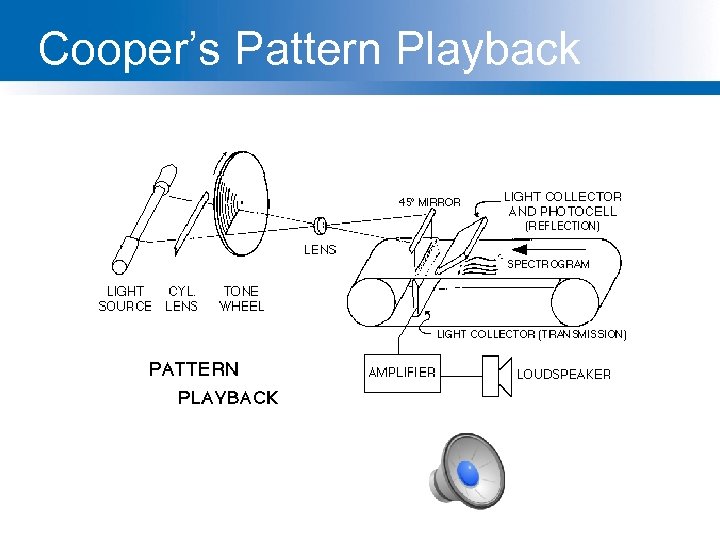

Cooper’s Pattern Playback

Dennis Klatt’s history of TTS (1987)
- More history at http://www.festvox.org/history/klatt.html (Dennis Klatt)

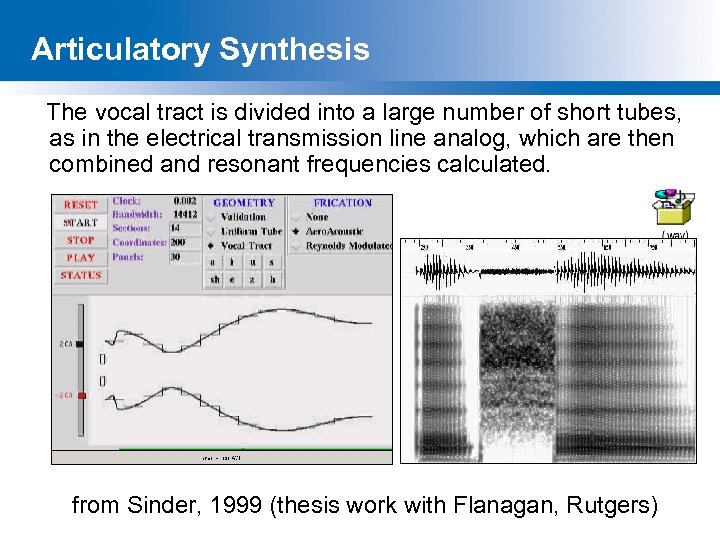

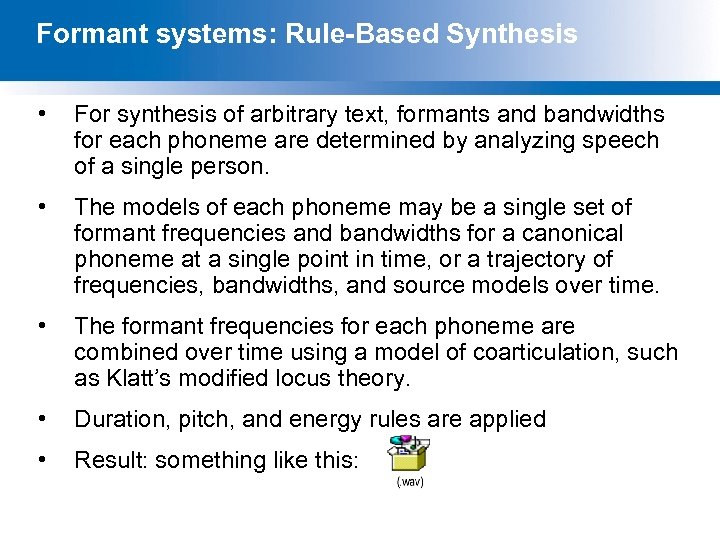

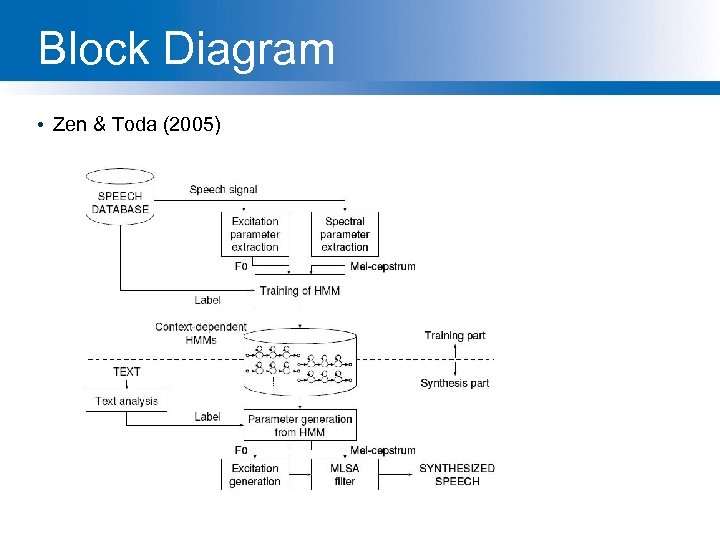

Types of Modern Synthesis
- Articulatory Synthesis:
  - Model movements of articulators and acoustics of the vocal tract
- Formant Synthesis:
  - Start with acoustics, create rules/filters to create each formant
- Concatenative Synthesis:
  - Use databases of stored speech to assemble new utterances
- HMM-Based Synthesis:
  - Run an HMM in generation mode
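
To make "create rules/filters to create each formant" concrete, here is a minimal sketch of cascade formant synthesis, assuming second-order digital resonators in the form Klatt used, y[n] = a·x[n] + b·y[n-1] + c·y[n-2], driven by a crude impulse-train voice source. The function names, the 10 kHz sample rate, and the formant and bandwidth values for /a/ are illustrative assumptions, not parameters of MITalk or DECtalk.

```python
import math


def resonator_coeffs(freq_hz, bw_hz, fs_hz):
    """Coefficients of y[n] = a*x[n] + b*y[n-1] + c*y[n-2] for one formant."""
    t = 1.0 / fs_hz
    c = -math.exp(-2.0 * math.pi * bw_hz * t)
    b = 2.0 * math.exp(-math.pi * bw_hz * t) * math.cos(2.0 * math.pi * freq_hz * t)
    a = 1.0 - b - c  # normalizes the gain at 0 Hz to exactly 1
    return a, b, c


def resonate(signal, freq_hz, bw_hz, fs_hz):
    """Filter a signal through one formant resonator."""
    a, b, c = resonator_coeffs(freq_hz, bw_hz, fs_hz)
    y1 = y2 = 0.0  # y[n-1], y[n-2]
    out = []
    for x in signal:
        y = a * x + b * y1 + c * y2
        out.append(y)
        y1, y2 = y, y1
    return out


def impulse_train(f0_hz, fs_hz, n_samples):
    """Crude voiced source: one impulse per pitch period."""
    period = int(round(fs_hz / f0_hz))
    return [1.0 if n % period == 0 else 0.0 for n in range(n_samples)]


def synthesize_vowel(formants, f0_hz=100.0, fs_hz=10000, duration_s=0.1):
    """Cascade synthesis: pass the source through one resonator per formant."""
    signal = impulse_train(f0_hz, fs_hz, int(round(fs_hz * duration_s)))
    for freq_hz, bw_hz in formants:
        signal = resonate(signal, freq_hz, bw_hz, fs_hz)
    return signal


# Approximate formants for /a/ with assumed bandwidths (illustrative only).
AA_FORMANTS = [(730, 90), (1090, 110), (2440, 170)]
samples = synthesize_vowel(AA_FORMANTS)
```

A cascade is the simplest arrangement; a real formant synthesizer adds a better glottal source, a noise source for unvoiced sounds, and rules that move the formant targets over time.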

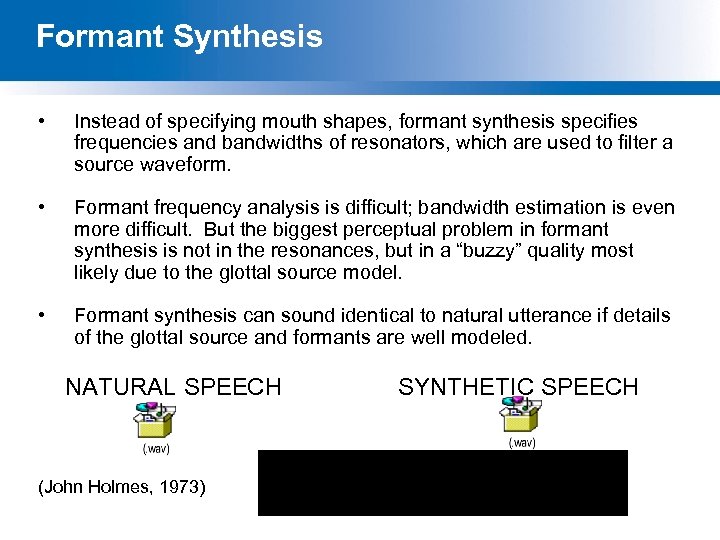

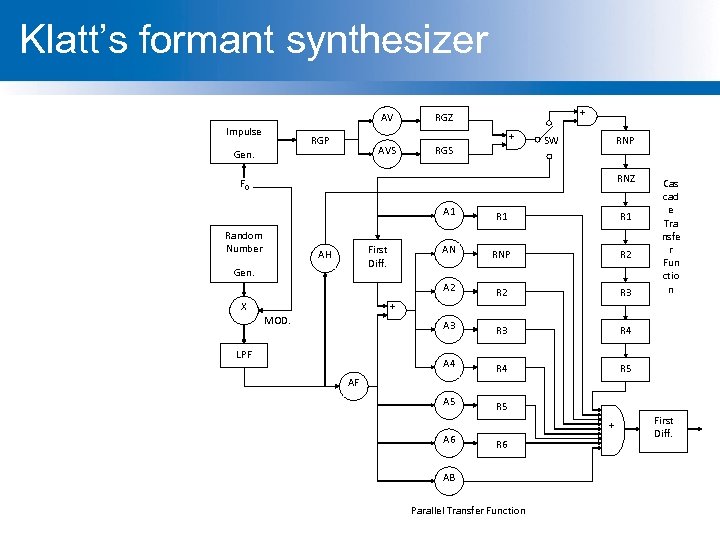

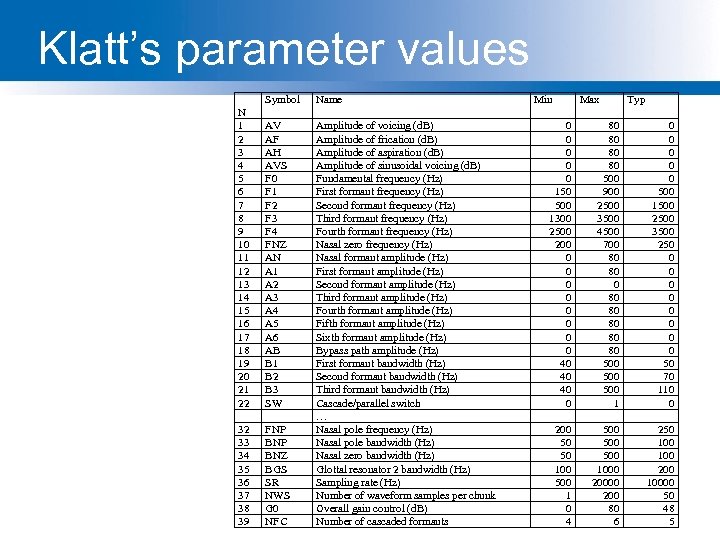

Formant Synthesis
- Formant synthesizers were the most common commercial systems while computers were relatively underpowered.
- 1979 MITalk (Allen, Hunnicutt, Klatt)
- 1983 DECtalk system
- The voice of Stephen Hawking

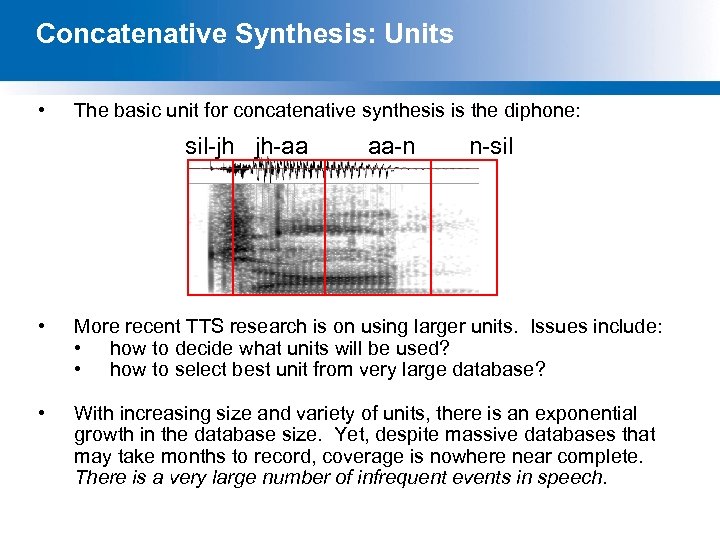

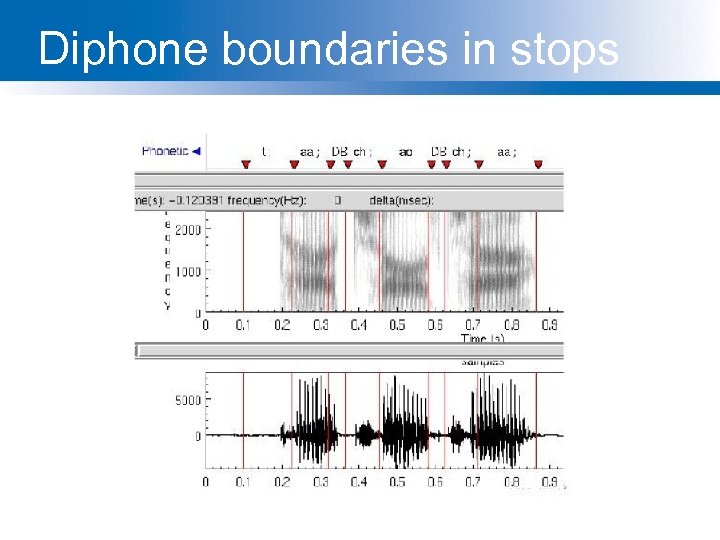

Concatenative Synthesis
- All current commercial systems.
- Diphone Synthesis
  - Units are diphones: middle of one phone to middle of the next.
  - Why? The middle of a phone is steady state.
  - Record 1 speaker saying each diphone
- Unit Selection Synthesis
  - Larger units
  - Record 10 hours or more, so there are multiple copies of each unit
  - Use search to find the best sequence of units
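
A diphone synthesizer must look up one recorded unit per adjacent pair of phones. This minimal sketch maps a phone string to the diphones it needs; the silence symbol `pau` and the ARPAbet-like phone names are assumptions for illustration.

```python
def to_diphones(phones, silence="pau"):
    """List the diphone units needed for a phone sequence.

    Each diphone runs from the middle of one phone to the middle of the
    next, so the utterance is padded with silence at both ends.
    """
    padded = [silence, *phones, silence]
    return [f"{a}-{b}" for a, b in zip(padded, padded[1:])]


def inventory_size(phone_set):
    """Upper bound on diphones to record: every ordered pair of phones."""
    return len(phone_set) ** 2
```

For example, `to_diphones(["k", "ae", "t"])` gives `pau-k`, `k-ae`, `ae-t`, `t-pau`. The squared count is only an upper bound; a real inventory can omit pairs that never occur in the language.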

TTS Demos (all are Unit-Selection)
- AT&T:
  - http://www.research.att.com/~ttsweb/tts/demo.php
- Microsoft:
  - http://research.microsoft.com/en-us/groups/speech/tts.aspx
- Festival:
  - http://www-2.cs.cmu.edu/~awb/festival_demos/index.html
- Cepstral:
  - http://www.cepstral.com/cgi-bin/demos/general
- IBM:
  - http://www-306.ibm.com/software/pervasive/tech/demos/tts.shtml

Text Normalization
- Analysis of raw text into pronounceable words
- Sample problems:
  - He stole $100 million from the bank
  - It's 13 St. Andrews St.
  - The home page is http://ee.washington.edu
  - yes, see you the following tues, that's 11/12/01
- Steps
  - Identify tokens in text
  - Chunk tokens into reasonably sized sections
  - Map tokens to words
  - Identify types for words

Grapheme to Phoneme
- How to pronounce a word? Look in the dictionary! But:
  - Unknown words and names will be missing
  - Turkish, German, and other hard languages
    - uygarlaStIramadIklarImIzdanmISsInIzcasIna (S = ş, I = dotless ı)
    - “(behaving) as if you are among those whom we could not civilize”
    - uygar +laS +tIr +ama +dIk +lar +ImIz +dan +mIS +sInIz +casIna
    - civilized +bec +caus +NegAble +ppart +pl +p1pl +abl +past +2pl +AsIf
- So we need Letter-to-Sound rules
- Also homograph disambiguation (wind, live, read)
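
The "dictionary first, letter-to-sound rules as fallback" strategy can be sketched as follows. Both the two-word lexicon and the greedy longest-match letter rules are invented toy data in an ARPAbet-like notation; real LTS rules are context dependent and usually learned from a large lexicon.

```python
# Toy lexicon (assumed entries, ARPAbet-like phones).
LEXICON = {
    "cat": ["k", "ae", "t"],
    "phone": ["f", "ow", "n"],
}

# Toy letter-to-sound rules, tried longest match first (invented for illustration).
LTS_RULES = {
    "sh": ["sh"], "ch": ["ch"], "ph": ["f"], "th": ["th"], "ck": ["k"],
    "a": ["ae"], "b": ["b"], "c": ["k"], "d": ["d"], "e": ["eh"], "f": ["f"],
    "g": ["g"], "h": ["hh"], "i": ["ih"], "j": ["jh"], "k": ["k"], "l": ["l"],
    "m": ["m"], "n": ["n"], "o": ["aa"], "p": ["p"], "q": ["k"], "r": ["r"],
    "s": ["s"], "t": ["t"], "u": ["ah"], "v": ["v"], "w": ["w"],
    "x": ["k", "s"], "y": ["y"], "z": ["z"],
}
MAX_RULE_LEN = max(len(k) for k in LTS_RULES)


def letter_to_sound(word):
    """Greedy longest-match application of the toy rules."""
    phones, i = [], 0
    while i < len(word):
        for length in range(min(MAX_RULE_LEN, len(word) - i), 0, -1):
            chunk = word[i:i + length]
            if chunk in LTS_RULES:
                phones.extend(LTS_RULES[chunk])
                i += length
                break
        else:
            i += 1  # no rule for this character (e.g. an apostrophe): skip it
    return phones


def pronounce(word):
    """Look the word up in the lexicon; fall back to letter-to-sound rules."""
    word = word.lower()
    if word in LEXICON:
        return LEXICON[word]
    return letter_to_sound(word)
```

`letter_to_sound("phone")` returns `f aa n eh`, which shows why the lexicon is consulted before the rules.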

Prosody: from words+phones to boundaries, accent, F0, duration
- Prosodic phrasing
  - Need to break utterances into phrases
  - Punctuation is useful, but not sufficient
- Accents:
  - Prediction of accents: which syllables should be accented
  - Realization of F0 contour: given accents/tones, generate the F0 contour
- Duration:
  - Predicting the duration of each phone
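
One classic way to predict phone duration is Klatt's rule-based model: DUR = MINDUR + (INHDUR - MINDUR) × PRCNT / 100, where each phone has an inherent and a minimum duration and every rule that fires (phrase-final lengthening, unstressed shortening, ...) multiplies PRCNT by its own percentage. This sketch implements only that formula; the 130 ms / 60 ms durations and the 140% and 50% rule values are invented for illustration.

```python
def klatt_duration(inherent_ms, minimum_ms, percents):
    """Klatt-style duration: MINDUR + (INHDUR - MINDUR) * PRCNT / 100.

    `percents` holds the percentage of every rule that fired; they combine
    multiplicatively into PRCNT. Only the part above the minimum is scaled.
    """
    factor = 1.0
    for p in percents:
        factor *= p / 100.0
    return minimum_ms + (inherent_ms - minimum_ms) * factor


# Hypothetical phone: inherent 130 ms, minimum 60 ms.
phrase_final = klatt_duration(130, 60, [140])           # about 158 ms
final_and_unstressed = klatt_duration(130, 60, [140, 50])  # about 109 ms
```

Scaling only the compressible part above the minimum keeps stacked shortening rules from driving a phone toward zero length.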

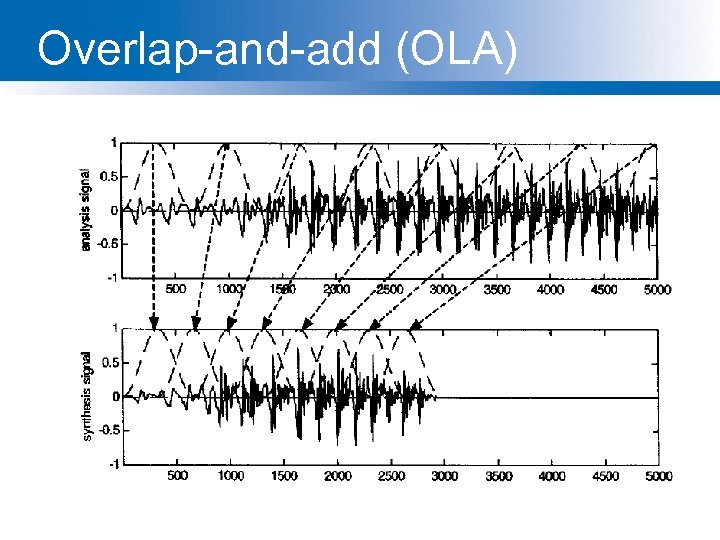

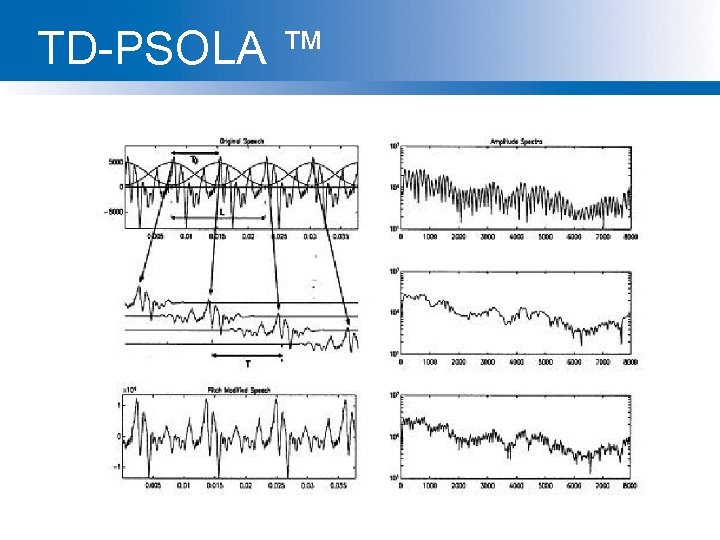

Waveform synthesis: from segments, F0, duration to waveform
- Collecting diphones:
  - need to record diphones in correct contexts
    - l sounds different in onset than coda, t is flapped sometimes, etc.
  - need a quiet recording room, maybe an EGG (electroglottograph), etc.
  - then need to label them very exactly
- Unit selection: how to pick the right unit? Search
- Joining the units
  - dumb (just stick 'em together)
  - PSOLA (Pitch-Synchronous Overlap and Add)
  - MBROLA (Multi-Band Resynthesis Overlap and Add)
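
"How to pick the right unit? Search" is typically a Viterbi (dynamic-programming) search that minimizes the sum of target costs (how well a unit fits its position) and join costs (how well neighbouring units connect). A minimal sketch, assuming the caller supplies both cost functions; the pitch-only costs in the usage example are a hypothetical stand-in for real spectral and prosodic distances.

```python
from typing import Callable, List, Sequence, TypeVar

Unit = TypeVar("Unit")


def select_units(
    candidates: Sequence[Sequence[Unit]],
    target_cost: Callable[[int, Unit], float],
    join_cost: Callable[[Unit, Unit], float],
) -> List[Unit]:
    """Viterbi search: choose one candidate per position, minimizing
    sum(target costs) + sum(join costs between consecutive units)."""
    if not candidates:
        return []
    # cost[j]: cheapest path ending in candidate j at the current position
    cost = [target_cost(0, u) for u in candidates[0]]
    backptrs: List[List[int]] = []
    for i in range(1, len(candidates)):
        prev_units = candidates[i - 1]
        new_cost, ptrs = [], []
        for u in candidates[i]:
            # best predecessor for this candidate
            j_best = min(range(len(prev_units)),
                         key=lambda j: cost[j] + join_cost(prev_units[j], u))
            new_cost.append(cost[j_best] + join_cost(prev_units[j_best], u)
                            + target_cost(i, u))
            ptrs.append(j_best)
        cost = new_cost
        backptrs.append(ptrs)
    # trace back from the cheapest final candidate
    j = min(range(len(cost)), key=cost.__getitem__)
    path = [j]
    for ptrs in reversed(backptrs):
        j = ptrs[j]
        path.append(j)
    path.reverse()
    return [candidates[i][j] for i, j in enumerate(path)]


# Hypothetical units: (name, pitch in Hz); the targets are desired pitches.
targets = [100, 110, 120]
cands = [[("a1", 100), ("a2", 130)],
         [("b1", 110), ("b2", 90)],
         [("c1", 120), ("c2", 150)]]
best = select_units(cands,
                    lambda i, u: abs(u[1] - targets[i]),
                    lambda a, b: abs(a[1] - b[1]))
```

The search costs O(N·K²) for N positions and K candidates each, which is why real systems prune the candidate lists.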

Festival
- http://festvox.org/festival/
- Open source speech synthesis system
- Multiplatform (Windows/Unix)
- Designed for development and runtime use
  - Used in many academic systems (and some commercial)
  - Hundreds of thousands of users
  - Multilingual
    - No built-in language
    - Designed to allow addition of new languages
  - Additional tools for rapid voice development
    - Statistical learning tools
    - Scripts for building models

Festival as software
- C/C++ code with Scheme scripting language
- General replaceable modules:
  - Lexicons, LTS, duration, intonation, phrasing, POS tagging, tokenizing, diphone/unit selection, signal processing
- General tools
  - Intonation analysis (F0, Tilt), signal processing, CART building, N-gram, SCFG, WFST

CMU FestVox project
- Festival is an engine; how do you make voices?
- Festvox: building synthetic voices:
  - Tools, scripts, documentation
  - Discussion and examples for building voices
  - Example voice databases
  - Step-by-step walkthroughs of processes
- Support for English and other languages
- Support for different waveform synthesis methods
  - Diphone
  - Unit selection
  - Limited domain

Text Processing
- He stole $100 million from the bank
- It’s 13 St. Andrews St.
- The home page is http://ee.washington.edu
- Yes, see you the following tues, that’s 11/12/01
- IV: four, fourth, I.V.
- IRA: I.R.A. or Ira
- 1750: seventeen fifty (date, address) or one thousand seven… (dollars)

Steps
- Identify tokens in text
- Chunk tokens
- Identify types of tokens
- Convert tokens to words

Step 1: identify tokens and chunk
- Whitespace can be viewed as separators
- Punctuation can be separated from the raw tokens
- Festival converts text into
  - an ordered list of tokens
  - each with features:
    - its own preceding whitespace
    - its own succeeding punctuation
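
A tokenizer in this spirit splits on whitespace and keeps, for each token, the whitespace before it and the punctuation stuck to it. This is a minimal sketch, not Festival's code; the `Token` field names and the punctuation set are assumptions.

```python
import re
from dataclasses import dataclass

PUNCTUATION = "\"'`.,:;!?()[]{}"  # assumed set of strippable punctuation


@dataclass
class Token:
    name: str        # token text with surrounding punctuation removed
    whitespace: str  # whitespace that preceded the token
    prepunc: str     # punctuation attached to the front, e.g. '(' or '"'
    punc: str        # punctuation attached to the end, e.g. '.' or ','


def tokenize(text):
    tokens = []
    for match in re.finditer(r"(\s*)(\S+)", text):
        whitespace, raw = match.groups()
        body = raw.rstrip(PUNCTUATION)
        name = body.lstrip(PUNCTUATION)
        if not name:  # token made only of punctuation, e.g. "..."
            tokens.append(Token(raw, whitespace, "", ""))
            continue
        tokens.append(Token(name, whitespace,
                            body[:len(body) - len(name)], raw[len(body):]))
    return tokens
```

Because the preceding whitespace is kept as a feature, later rules can still inspect spacing (for example, two spaces after a period) even though the text has been split.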

End-of-utterance detection
- Relatively simple if the utterance ends in ? or !
- But what about the ambiguity of “.”?
- Ambiguous between end-of-utterance and end-of-abbreviation
  - My place on Forest Ave. is around the corner.
  - I live at 360 Forest Ave.
  - (Not “I live at 360 Forest Ave..”)
- How to solve this period-disambiguation task?

Some rules used
- A dot with one or two letters is an abbrev
- A dot with 3 cap letters is an abbrev
- An abbrev followed by 2 spaces and a capital letter is an end-of-utterance
- Non-abbrevs followed by a capitalized word are breaks
- This fails for
  - Cog. Sci. Newsletter
  - Lots of cases at end of line
  - Badly spaced/capitalized sentences
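
The four rules can be coded directly. In this sketch "a dot with one or two letters" is read as a 1–2 letter word before the dot and "a dot with 3 cap letters" as a 3-capital word before the dot; both readings, and the rule that a period at the very end of the text is a break, are assumptions.

```python
import re
from typing import Optional


def is_abbrev(word: str) -> bool:
    """Rules 1-2: `word` includes its trailing dot, e.g. 'St.'."""
    if not word.endswith("."):
        return False
    stem = word[:-1]
    return bool(re.fullmatch(r"[A-Za-z]{1,2}", stem)
                or re.fullmatch(r"[A-Z]{3}", stem))


def is_end_of_utterance(word: str, gap: str, next_word: Optional[str]) -> bool:
    if word.endswith(("?", "!")):
        return True
    if not word.endswith("."):
        return False
    if next_word is None:  # period at the end of the text
        return True
    next_is_cap = next_word[:1].isupper()
    if is_abbrev(word):
        # Rule 3: abbrev + two (or more) spaces + capital letter
        return len(gap) >= 2 and next_is_cap
    # Rule 4: non-abbrev followed by a capitalized word
    return next_is_cap


def sentence_breaks(text):
    """Indices of the whitespace-separated words that end an utterance."""
    pairs = re.findall(r"(\S+)(\s*)", text)  # (word, following whitespace)
    return [i for i, (w, gap) in enumerate(pairs)
            if is_end_of_utterance(w, gap,
                                   pairs[i + 1][0] if i + 1 < len(pairs) else None)]
```

On "My place on Forest Ave. is around the corner. I live at 360 Forest Ave." this finds breaks only after "corner." and the final "Ave.". On "Cog. Sci. Newsletter" it wrongly breaks after both "Cog." and "Sci.", which is exactly the failure listed above.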

More sophisticated decision tree features
- Prob(word with “.” occurs at end of sentence)
- Prob(word after “.” occurs at beginning of sentence)
- Length of word with “.”
- Length of word after “.”
- Case of word with “.”: Upper, Lower, Cap, Number
- Case of word after “.”: Upper, Lower, Cap, Number
- Punctuation after “.” (if any)
- Abbreviation class of word with “.” (month name, unit-of-measure, title, address name, etc.)
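
These features can be computed per candidate period and handed to a decision-tree learner. A minimal sketch, assuming the two probability tables and the abbreviation-class table were estimated from a corpus beforehand; the feature names, the extra "Other" case class, and the example numbers are hypothetical.

```python
def case_class(word):
    """Upper, Lower, Cap, Number (plus an assumed 'Other' for anything else)."""
    if word.isdigit():
        return "Number"
    if word.isupper():
        return "Upper"
    if word.islower():
        return "Lower"
    if word[:1].isupper():
        return "Cap"
    return "Other"


def period_features(token, next_token, p_end, p_begin, abbrev_class):
    """Features for the last '.' in `token`; next_token may be None."""
    dot = token.rfind(".")
    if dot < 0:
        raise ValueError("token contains no period")
    word, punct_after = token[:dot], token[dot + 1:]
    nxt = next_token or ""
    return {
        "p_end": p_end.get(word.lower(), 0.0),      # P(word ends a sentence)
        "p_begin": p_begin.get(nxt.lower(), 0.0),   # P(next word starts one)
        "len_word": len(word),
        "len_next": len(nxt),
        "case_word": case_class(word),
        "case_next": case_class(nxt),
        "punct_after": punct_after or None,
        "abbrev_class": abbrev_class.get(word.lower(), "none"),
    }


# Made-up corpus statistics, for illustration only.
f = period_features("Ave.", "is", {"ave": 0.3}, {"is": 0.01}, {"ave": "address"})
```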

CART
- Breiman, Friedman, Olshen, Stone. 1984. Classification and Regression Trees. Chapman & Hall, New York.
- Description/Use:
  - Binary tree of decisions; terminal nodes determine the prediction (“20 questions”)
  - If the dependent variable is categorical, “classification tree”
  - If continuous, “regression tree”

Learning DTs
- DTs are rarely built by hand
- Hand-building is only possible for very simple features and domains
- Lots of algorithms for DT induction
- I’ll give quick intuition here

CART Estimation
- Creating a binary decision tree for classification or regression involves 3 steps:
  - Splitting Rules: Which split to take at a node?
  - Stopping Rules: When to declare a node terminal?
  - Node Assignment: Which class/value to assign to a terminal node?

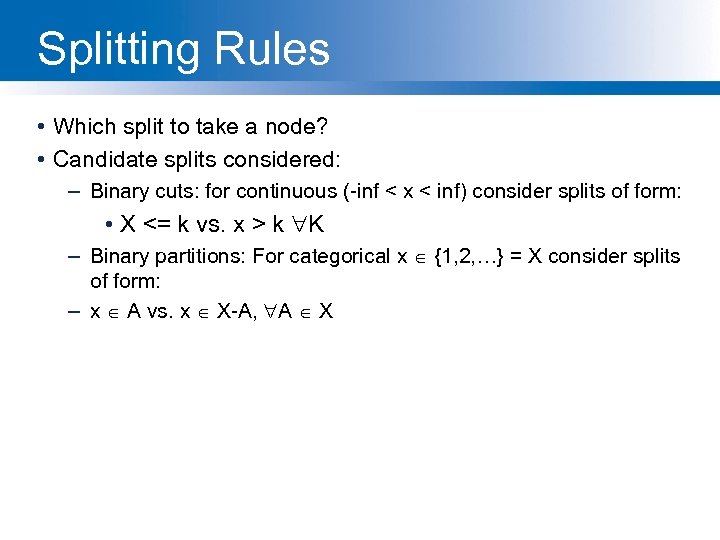

Splitting Rules
- Which split to take at a node?
- Candidate splits considered:
  - Binary cuts: for continuous $x$ ($-\infty < x < \infty$) consider splits of the form:
    - $x \le k$ vs. $x > k$, for all $k$
  - Binary partitions: for categorical $x \in \{1, 2, \ldots\} = X$ consider splits of the form:
    - $x \in A$ vs. $x \in X - A$, $A \subset X$

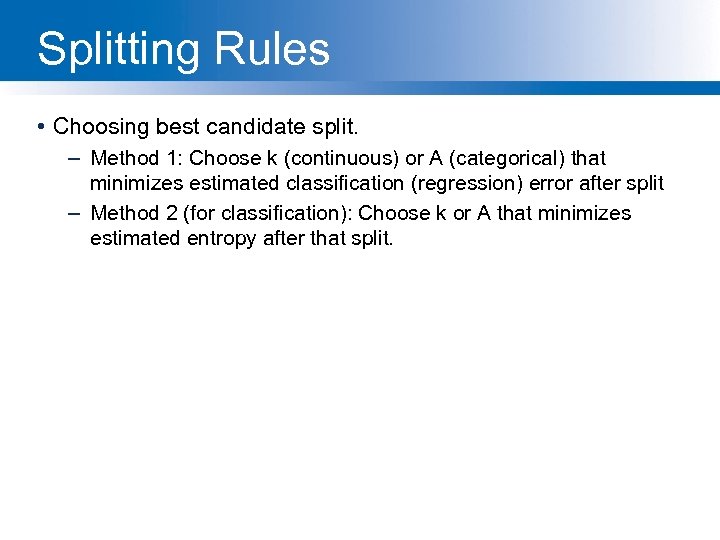

Splitting Rules
- Choosing the best candidate split:
  - Method 1: Choose k (continuous) or A (categorical) that minimizes estimated classification (regression) error after the split
  - Method 2 (for classification): Choose k or A that minimizes estimated entropy after the split
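
Method 2 can be sketched by trying every candidate split and keeping the one with the lowest size-weighted entropy: thresholds between distinct sorted values for a continuous feature, and every subset A of the observed values for a categorical one. This is a brute-force illustration, not CART's full procedure (no surrogates, no pruning); the example data are made up.

```python
import math
from collections import Counter
from itertools import combinations


def entropy(labels):
    """Entropy in bits of the class distribution in `labels`."""
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())


def split_entropy(left, right):
    """Size-weighted entropy after a binary split."""
    n = len(left) + len(right)
    return (len(left) * entropy(left) + len(right) * entropy(right)) / n


def best_threshold(xs, ys):
    """Continuous x: best cut 'x <= k' vs 'x > k'. Returns (k, entropy)."""
    pairs = sorted(zip(xs, ys))
    best = (None, math.inf)
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # cannot cut between equal values
        k = pairs[i - 1][0]
        h = split_entropy([y for _, y in pairs[:i]], [y for _, y in pairs[i:]])
        if h < best[1]:
            best = (k, h)
    return best


def best_partition(xs, ys):
    """Categorical x: best split 'x in A' vs 'x in X - A'. Returns (A, entropy)."""
    values = sorted(set(xs))
    best = (None, math.inf)
    for size in range(1, len(values)):
        for subset in combinations(values, size):
            a = set(subset)
            left = [y for x, y in zip(xs, ys) if x in a]
            right = [y for x, y in zip(xs, ys) if x not in a]
            h = split_entropy(left, right)
            if h < best[1]:
                best = (a, h)
    return best
```

For example, with word lengths `[2, 2, 3, 5, 6, 7]` labelled `no, no, no, yes, yes, yes`, `best_threshold` returns `(3, 0.0)`. The categorical search visits each partition twice (A and its complement) and grows exponentially with the number of categories. Method 1 would replace `entropy` with the misclassification rate.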

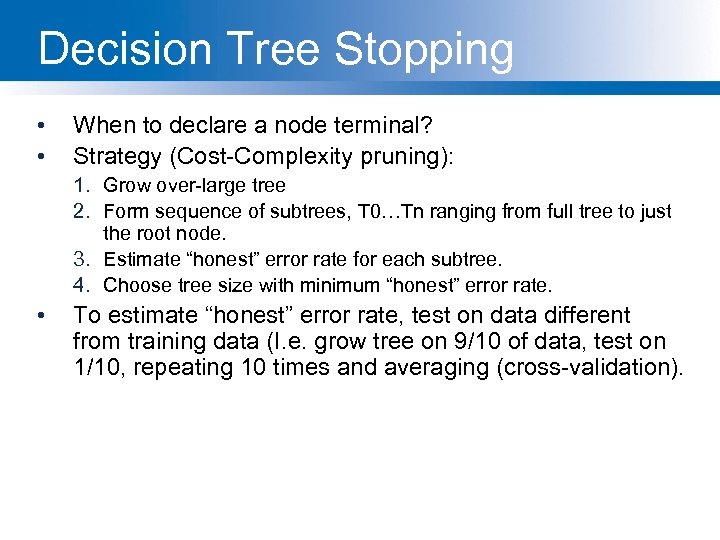

Decision Tree Stopping
- When to declare a node terminal?
- Strategy (Cost-Complexity pruning):
  1. Grow an over-large tree
  2. Form a sequence of subtrees, T0…Tn, ranging from the full tree to just the root node
  3. Estimate the “honest” error rate for each subtree
  4. Choose the tree size with the minimum “honest” error rate
- To estimate the “honest” error rate, test on data different from the training data (i.e., grow the tree on 9/10 of the data, test on 1/10, repeat 10 times and average: cross-validation)

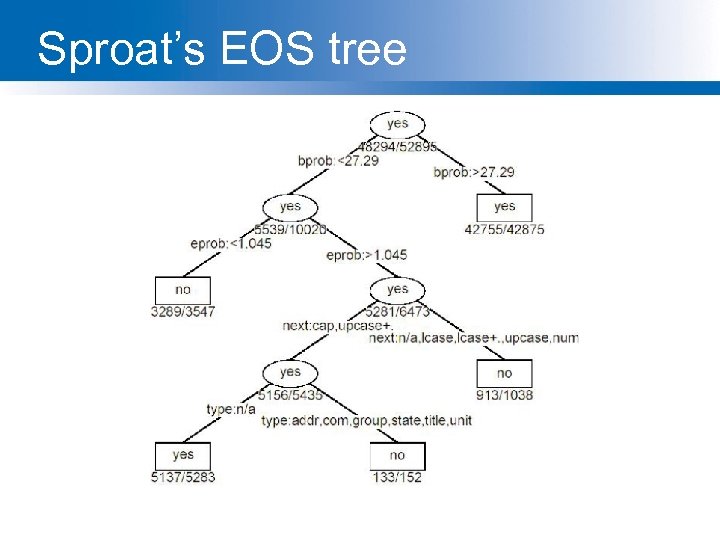

Sproat’s EOS tree

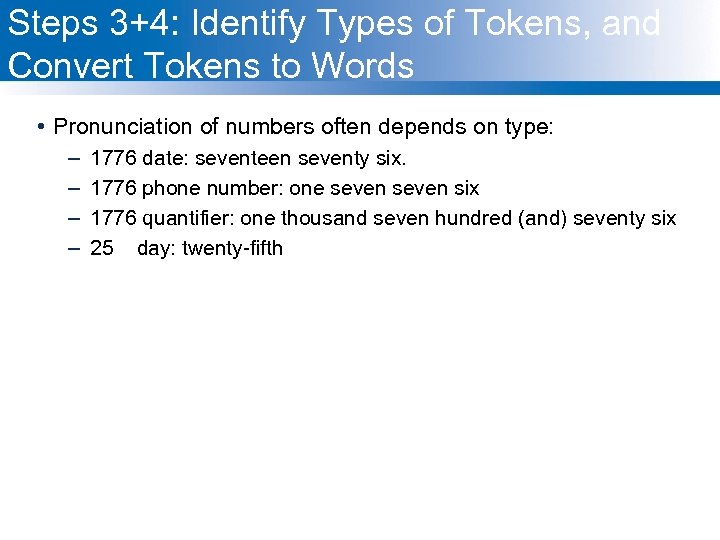

Steps 3+4: Identify Types of Tokens, and Convert Tokens to Words
- Pronunciation of numbers often depends on type:
  - 1776 date: seventeen seventy six
  - 1776 phone number: one seven seven six
  - 1776 quantifier: one thousand seven hundred (and) seventy six
  - 25 day: twenty-fifth
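
Once the type is known, each number reading is deterministic. This minimal sketch covers cardinal, ordinal, year-as-digit-pairs, and digit-string readings, using the type tags NUM, NORD, NYER and NDIG from the Sproat et al. taxonomy; the exact word choices (no "and", "oh" for a zero tens digit, space-separated words) are assumptions.

```python
ONES = ("zero one two three four five six seven eight nine ten eleven twelve "
        "thirteen fourteen fifteen sixteen seventeen eighteen nineteen").split()
TENS = "_ _ twenty thirty forty fifty sixty seventy eighty ninety".split()
SCALES = ((10**9, "billion"), (10**6, "million"), (1000, "thousand"))
IRREGULAR_ORDINALS = {"one": "first", "two": "second", "three": "third",
                      "five": "fifth", "eight": "eighth", "nine": "ninth",
                      "twelve": "twelfth"}


def cardinal(n: int) -> str:
    if n < 0:
        raise ValueError("negative numbers not handled in this sketch")
    if n < 20:
        return ONES[n]
    if n < 100:
        tens, rest = divmod(n, 10)
        return TENS[tens] + (" " + ONES[rest] if rest else "")
    if n < 1000:
        hundreds, rest = divmod(n, 100)
        return ONES[hundreds] + " hundred" + (" " + cardinal(rest) if rest else "")
    for value, name in SCALES:
        if n >= value:
            high, rest = divmod(n, value)
            return cardinal(high) + " " + name + (" " + cardinal(rest) if rest else "")
    raise AssertionError("unreachable")


def ordinal(n: int) -> str:
    words = cardinal(n).split()
    last = words[-1]
    if last in IRREGULAR_ORDINALS:
        words[-1] = IRREGULAR_ORDINALS[last]
    elif last.endswith("y"):
        words[-1] = last[:-1] + "ieth"  # twenty -> twentieth
    else:
        words[-1] = last + "th"
    return " ".join(words)


def year(n: int) -> str:
    """Read a 4-digit year as two pairs of digits."""
    if not 1000 <= n <= 9999:
        return cardinal(n)
    high, low = divmod(n, 100)
    if high % 10 == 0 and low < 10:
        return cardinal(n)                            # 2000, 2005
    if low == 0:
        return cardinal(high) + " hundred"            # 1900
    if low < 10:
        return cardinal(high) + " oh " + cardinal(low)  # 1905
    return cardinal(high) + " " + cardinal(low)       # 1776


def read_number(token: str, nsw_type: str) -> str:
    n = int(token.replace(",", ""))
    if nsw_type == "NUM":
        return cardinal(n)
    if nsw_type == "NORD":
        return ordinal(n)
    if nsw_type == "NYER":
        return year(n)
    if nsw_type == "NDIG":
        return " ".join(ONES[int(d)] for d in token if d.isdigit())
    raise ValueError(f"unsupported type: {nsw_type}")
```

With these rules, "1776" reads as "seventeen seventy six" (NYER), "one seven seven six" (NDIG), or "one thousand seven hundred seventy six" (NUM), and "25" as NORD gives "twenty fifth".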

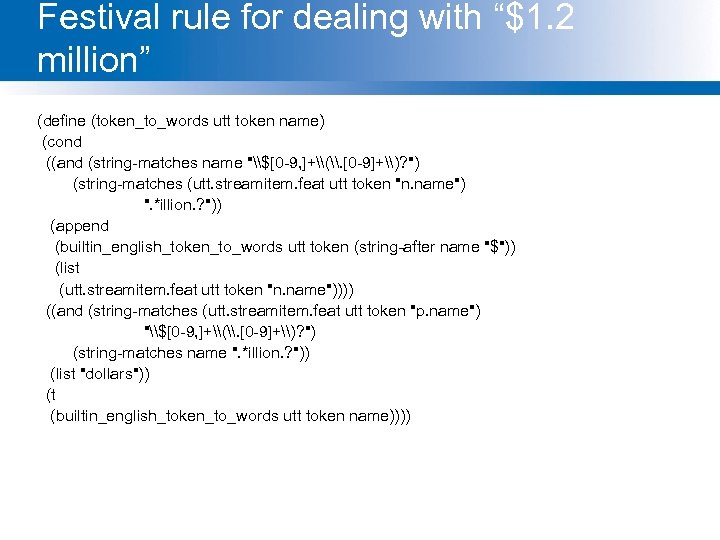

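Festival rule for dealing with “$1.2 million”:

```scheme
;; Read "$1.2 million" as "one point two million dollars".
(define (token_to_words utt token name)
  (cond
   ;; This token is a dollar amount and the next token ends in "illion":
   ;; say the number (without the "$"), then the next word ("million", ...).
   ((and (string-matches name "\\$[0-9,]+\\(\\.[0-9]+\\)?")
         (string-matches (utt.streamitem.feat utt token "n.name") ".*illion.?"))
    (append
     (builtin_english_token_to_words utt token (string-after name "$"))
     (list (utt.streamitem.feat utt token "n.name"))))
   ;; This token is the "...illion" word after a dollar amount; it was
   ;; already spoken above, so say "dollars" in its place.
   ((and (string-matches (utt.streamitem.feat utt token "p.name")
                         "\\$[0-9,]+\\(\\.[0-9]+\\)?")
         (string-matches name ".*illion.?"))
    (list "dollars"))
   ;; Otherwise fall back to the built-in English rules.
   (t
    (builtin_english_token_to_words utt token name))))
```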

Rule-based versus machine learning
- As always, we can do things either way, or more often by a combination
- Rule-based:
  - Simple
  - Quick
  - Can be more robust
- Machine Learning:
  - Works for complex problems where rules are hard to write
  - Higher accuracy in general
  - But worse generalization to very different test sets
- Real TTS and NLP systems:
  - Often use aspects of both

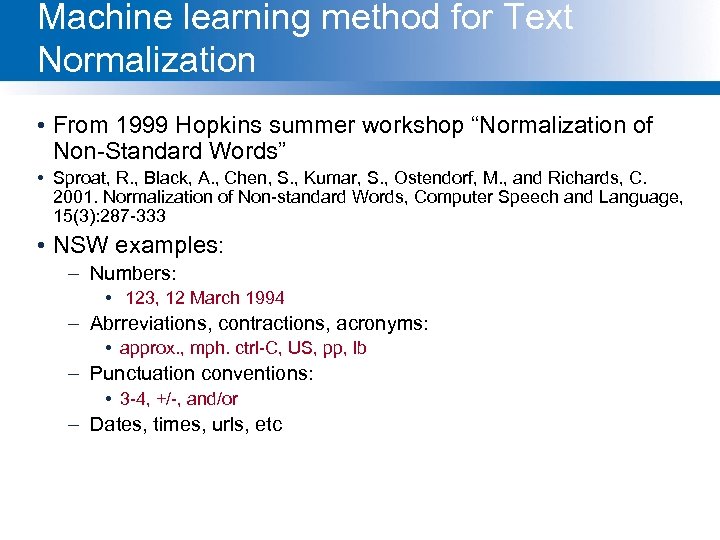

Machine learning method for Text Normalization
- From the 1999 Johns Hopkins summer workshop “Normalization of Non-Standard Words”
- Sproat, R., Black, A., Chen, S., Kumar, S., Ostendorf, M., and Richards, C. 2001. Normalization of Non-standard Words. Computer Speech and Language, 15(3): 287-333.
- NSW examples:
  - Numbers:
    - 123, 12 March 1994
  - Abbreviations, contractions, acronyms:
    - approx., mph, ctrl-C, US, pp, lb
  - Punctuation conventions:
    - 3-4, +/-, and/or
  - Dates, times, URLs, etc.

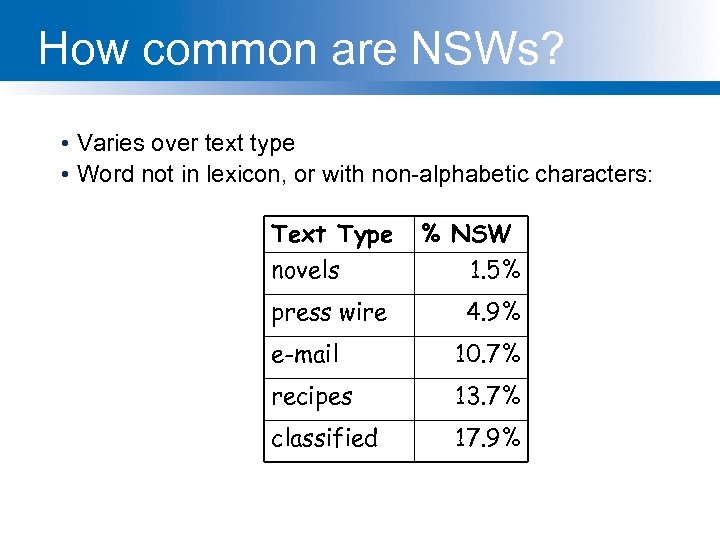

How common are NSWs?
- Varies over text type
- Word not in lexicon, or with non-alphabetic characters:

| Text type | % NSW |
|---|---|
| novels | 1.5% |
| press wire | 4.9% |
| e-mail | 10.7% |
| recipes | 13.7% |
| classified | 17.9% |

How hard are NSWs?
- Identification:
  - Some homographs: “Wed”, “PA”
  - False positives: OOV
- Realization:
  - Simple rule: money, $2.34
  - Type identification + rules: numbers
  - Text-type-specific knowledge (in classified ads, BR for bedroom)
- Ambiguity (acceptable multiple answers)
  - “D.C.” as letters or full words
  - “MB” as “meg” or “megabyte”
  - 250

Step 1: Splitter
- Letter/number conjunctions (WinNT, SunOS, PC110)
- Hand-written rules in two parts:
  - Part I: group things not to be split (numbers, etc.; including commas in numbers, slashes in dates)
  - Part II: apply rules:
    - At transitions from lower to upper case
    - After the penultimate upper-case char in transitions from upper to lower
    - At transitions from digits to alpha
    - At punctuation
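
The Part II rules can be applied in one left-to-right pass. In this sketch Part I is simplified to a single assumed pattern (digit groups joined by commas, periods, or slashes), and punctuation is kept as its own piece rather than discarded; both choices are assumptions, not the workshop's exact rules.

```python
import re

# Part I (simplified, assumed): keep numbers such as "1,000", "0.6", "11/12/01" whole.
_KEEP_WHOLE = re.compile(r"\d+(?:[.,/]\d+)*")


def split_token(token: str) -> list:
    if _KEEP_WHOLE.fullmatch(token):
        return [token]
    pieces = []
    current = ""
    for i, ch in enumerate(token):
        if not ch.isalnum():  # rule 4: split at punctuation
            if current:
                pieces.append(current)
                current = ""
            pieces.append(ch)
            continue
        prev = token[i - 1] if i > 0 else ""
        nxt = token[i + 1] if i + 1 < len(token) else ""
        split_here = (
            (prev.islower() and ch.isupper())                         # rule 1: lower -> upper
            or (prev.isupper() and ch.isupper() and nxt.islower())    # rule 2: "XMLParser"
            or (prev.isdigit() and ch.isalpha())                      # rule 3: digit -> alpha
        )
        if split_here and current:
            pieces.append(current)
            current = ""
        current += ch
    if current:
        pieces.append(current)
    return pieces
```

"WinNT" becomes `Win NT` and "SunOS" becomes `Sun OS`. "PC110" stays whole, because none of the listed rules fires at a letter-to-digit transition; that matches its later treatment as an identifier (NIDE).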

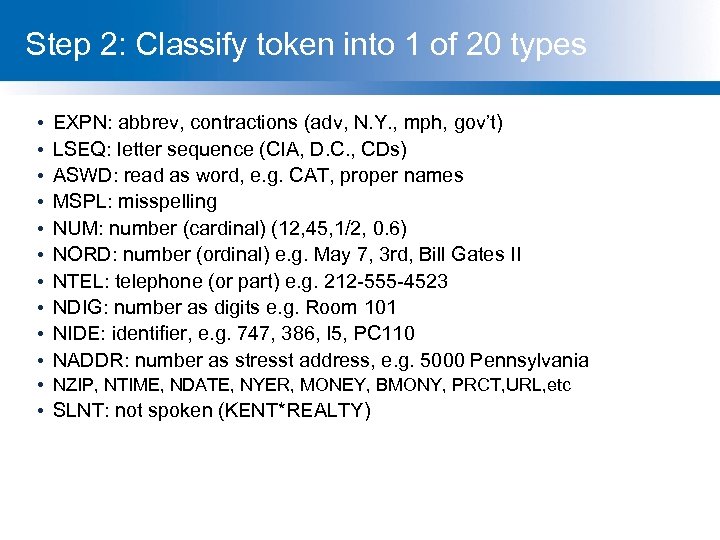

Step 2: Classify token into 1 of 20 types
- EXPN: abbrev, contractions (adv, N.Y., mph, gov’t)
- LSEQ: letter sequence (CIA, D.C., CDs)
- ASWD: read as word, e.g. CAT, proper names
- MSPL: misspelling
- NUM: number (cardinal) (12, 45, 1/2, 0.6)
- NORD: number (ordinal), e.g. May 7, 3rd, Bill Gates II
- NTEL: telephone (or part), e.g. 212-555-4523
- NDIG: number as digits, e.g. Room 101
- NIDE: identifier, e.g. 747, 386, I5, PC110
- NADDR: number as street address, e.g. 5000 Pennsylvania
- NZIP, NTIME, NDATE, NYER, MONEY, BMONY, PRCT, URL, etc.
- SLNT: not spoken (KENT*REALTY)

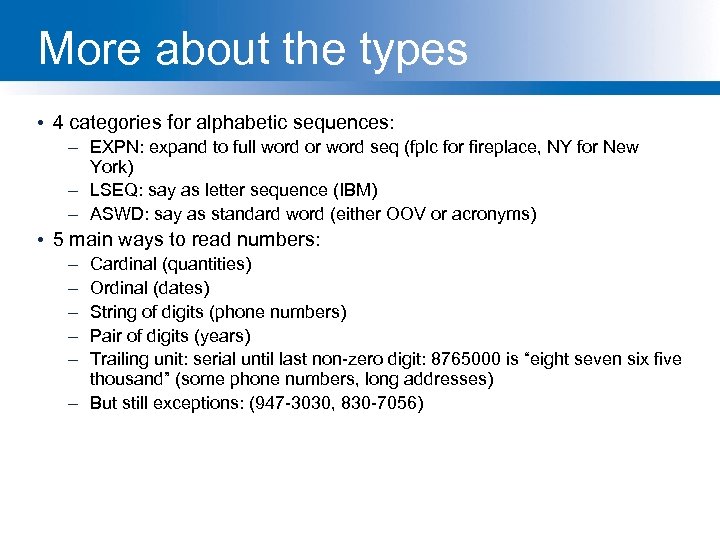

More about the types
- 4 categories for alphabetic sequences:
  - EXPN: expand to full word or word sequence (fplc for fireplace, NY for New York)
  - LSEQ: say as letter sequence (IBM)
  - ASWD: say as standard word (either OOV or acronyms)
- 5 main ways to read numbers:
  - Cardinal (quantities)
  - Ordinal (dates)
  - String of digits (phone numbers)
  - Pair of digits (years)
  - Trailing unit: serial until last non-zero digit: 8765000 is “eight seven six five thousand” (some phone numbers, long addresses)
  - But still exceptions: (947-3030, 830-7056)

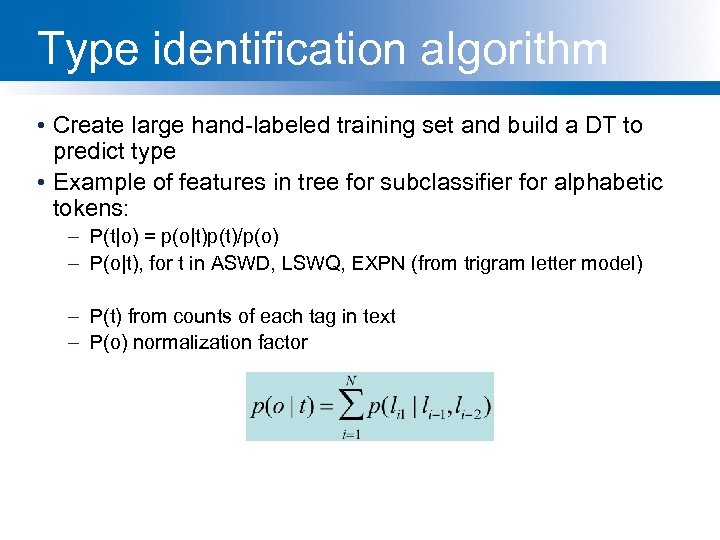

Type identification algorithm
- Create a large hand-labeled training set and build a DT to predict type
- Example of features in the tree for the subclassifier for alphabetic tokens:
  - P(t|o) = p(o|t)p(t)/p(o)
  - P(o|t), for t in ASWD, LSEQ, EXPN (from a letter trigram model)
  - P(t) from counts of each tag in text
  - P(o) normalization factor
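
The Bayes-rule feature can be sketched with one letter-trigram model per type: P(o|t) from that model, P(t) from tag counts, and P(o) as the sum of P(o|t)P(t) over types. Add-one smoothing, the assumed alphabet size of 27, the padding symbols, and the tiny training lists are all illustrative choices, not the workshop's setup.

```python
import math
from collections import Counter


class LetterTrigramModel:
    """Add-one smoothed letter trigram model P(o | t) for one token type t."""
    VOCAB_SIZE = 27  # assumed: 26 letters + end-of-word marker

    def __init__(self, words):
        self.trigrams = Counter()
        self.histories = Counter()
        for w in words:
            for hist, tri in self._ngrams(w):
                self.histories[hist] += 1
                self.trigrams[tri] += 1

    @staticmethod
    def _ngrams(word):
        padded = "##" + word.lower() + "$"  # '#' = start padding, '$' = end
        for i in range(2, len(padded)):
            yield padded[i - 2:i], padded[i - 2:i + 1]

    def log_prob(self, word):
        return sum(math.log((self.trigrams[tri] + 1)
                            / (self.histories[hist] + self.VOCAB_SIZE))
                   for hist, tri in self._ngrams(word))


def train(labeled):
    """labeled: list of (token, type). Returns per-type models and priors P(t)."""
    by_type = {}
    for token, t in labeled:
        by_type.setdefault(t, []).append(token)
    models = {t: LetterTrigramModel(ws) for t, ws in by_type.items()}
    priors = {t: len(ws) / len(labeled) for t, ws in by_type.items()}
    return models, priors


def posterior(token, models, priors):
    """P(t | o) = P(o | t) P(t) / P(o), with P(o) = sum_t P(o | t) P(t)."""
    log_joint = {t: models[t].log_prob(token) + math.log(priors[t]) for t in models}
    top = max(log_joint.values())
    unnorm = {t: math.exp(v - top) for t, v in log_joint.items()}  # for stability
    z = sum(unnorm.values())
    return {t: v / z for t, v in unnorm.items()}


# Tiny made-up training set.
data = ([(w, "ASWD") for w in ["cat", "dog", "table", "chair", "window", "garden"]]
        + [(w, "LSEQ") for w in ["ibm", "cia", "fbi", "usa", "nbc", "cnn"]]
        + [(w, "EXPN") for w in ["fplc", "mph", "govt", "approx", "kit", "bdrm"]])
models, priors = train(data)
```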

Type identification algorithm
- Hand-written context-dependent rules:
  - List of lexical items (Act, Advantage, amendment) after which Roman numerals are read as cardinals, not ordinals
- Classifier accuracy:
  - 98.1% in news data
  - 91.8% in email
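
The Roman-numeral context rule can be sketched as: convert the numeral to an integer, then read it as a cardinal if the previous word is on the list and as an ordinal otherwise. The sketch handles only 1–99 and assumes the classifier has already decided the token is a Roman numeral (a bare "I" or "V" is usually an ordinary word).

```python
ROMAN_VALUES = {"I": 1, "V": 5, "X": 10, "L": 50, "C": 100, "D": 500, "M": 1000}
ONES = ("zero one two three four five six seven eight nine ten eleven twelve "
        "thirteen fourteen fifteen sixteen seventeen eighteen nineteen").split()
TENS = "_ _ twenty thirty forty fifty sixty seventy eighty ninety".split()
IRREGULAR_ORDINALS = {"one": "first", "two": "second", "three": "third",
                      "five": "fifth", "eight": "eighth", "nine": "ninth",
                      "twelve": "twelfth"}
CARDINAL_CONTEXTS = {"act", "advantage", "amendment"}  # from the list above


def roman_to_int(numeral):
    total = 0
    for i, ch in enumerate(numeral):
        value = ROMAN_VALUES[ch]
        if i + 1 < len(numeral) and ROMAN_VALUES[numeral[i + 1]] > value:
            total -= value  # subtractive notation: IV, IX, XL, ...
        else:
            total += value
    return total


def cardinal(n):
    if not 0 < n < 100:
        raise ValueError("this sketch handles 1-99 only")
    if n < 20:
        return ONES[n]
    tens, rest = divmod(n, 10)
    return TENS[tens] + (" " + ONES[rest] if rest else "")


def ordinal(n):
    words = cardinal(n).split()
    last = words[-1]
    if last in IRREGULAR_ORDINALS:
        words[-1] = IRREGULAR_ORDINALS[last]
    elif last.endswith("y"):
        words[-1] = last[:-1] + "ieth"
    else:
        words[-1] = last + "th"
    return " ".join(words)


def read_roman(numeral, previous_word):
    n = roman_to_int(numeral.upper())
    if previous_word.lower() in CARDINAL_CONTEXTS:
        return cardinal(n)  # "Act IV" -> "four"
    return ordinal(n)       # "Henry VIII" -> "eighth"
```

A full system might also insert "the" before the ordinal ("Henry the eighth"); that step is omitted here.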

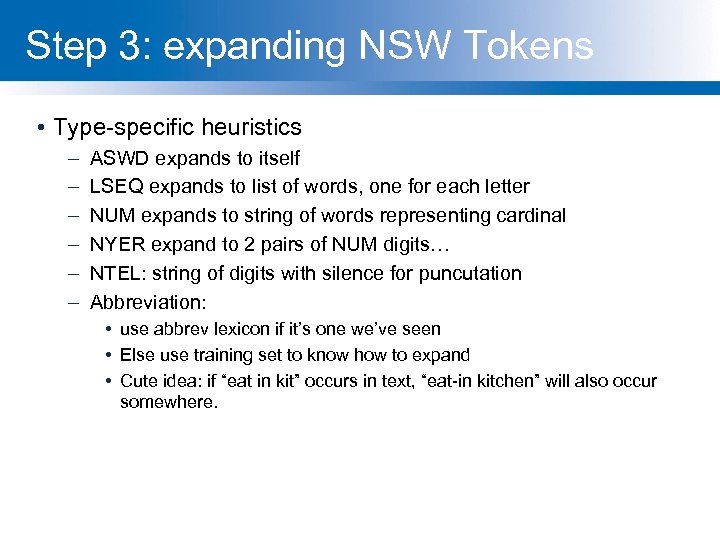

Step 3: expanding NSW Tokens
- Type-specific heuristics
  - ASWD expands to itself
  - LSEQ expands to a list of words, one for each letter
  - NUM expands to a string of words representing the cardinal
  - NYER expands to 2 pairs of NUM digits…
  - NTEL: string of digits with silence for punctuation
  - Abbreviation:
    - use the abbrev lexicon if it’s one we’ve seen
    - Else use the training set to know how to expand
    - Cute idea: if “eat in kit” occurs in text, “eat-in kitchen” will also occur somewhere.
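
Several of these heuristics are one-liners once the token type is known. This sketch covers ASWD, LSEQ, NTEL and lexicon-based EXPN; the `<sil>` pause marker is hypothetical, the lexicon holds only examples from these slides, and an unseen abbreviation is simply left unexpanded rather than learned from training data.

```python
DIGIT_WORDS = "zero one two three four five six seven eight nine".split()
SILENCE = "<sil>"  # hypothetical marker for a short pause

# Tiny abbreviation lexicon (entries taken from the examples in these slides).
ABBREV_LEXICON = {
    "mph": ["miles", "per", "hour"],
    "govt": ["government"],
    "fplc": ["fireplace"],
    "ny": ["new", "york"],
}


def expand_aswd(token):
    return [token.lower()]  # read as an ordinary word


def expand_lseq(token):
    return [ch.lower() for ch in token if ch.isalnum()]  # one word per letter


def expand_ntel(token):
    words = []
    for ch in token:
        if ch.isdigit():
            words.append(DIGIT_WORDS[int(ch)])
        elif words and words[-1] != SILENCE:
            words.append(SILENCE)  # punctuation such as "-" becomes a pause
    return words


def expand_expn(token):
    key = "".join(ch for ch in token.lower() if ch.isalpha())  # "N.Y." -> "ny"
    return ABBREV_LEXICON.get(key, [token])  # unseen: left unexpanded


EXPANDERS = {"ASWD": expand_aswd, "LSEQ": expand_lseq,
             "NTEL": expand_ntel, "EXPN": expand_expn}


def expand(token, nsw_type):
    return EXPANDERS[nsw_type](token)
```

For example, `expand("212-555-4523", "NTEL")` yields the ten digit words with a pause at each hyphen, and `expand("N.Y.", "EXPN")` yields `new york`.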

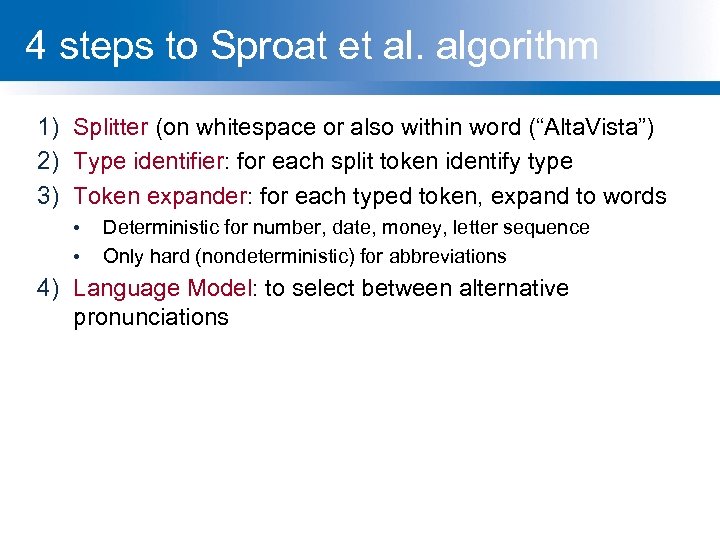

4 steps to Sproat et al. algorithm
1) Splitter (on whitespace or also within a word: “AltaVista”)
2) Type identifier: for each split token, identify the type
3) Token expander: for each typed token, expand to words
   - Deterministic for number, date, money, letter sequence
   - Only hard (nondeterministic) for abbreviations
4) Language Model: to select between alternative pronunciations

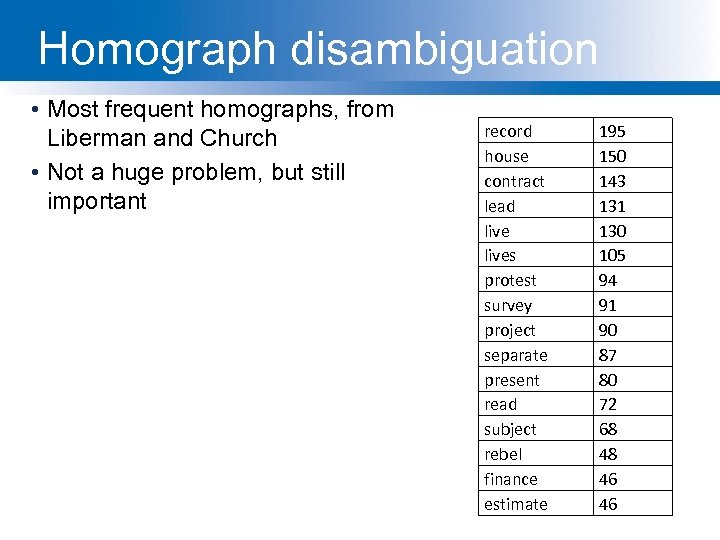

Homograph disambiguation
- Most frequent homographs, from Liberman and Church
- Not a huge problem, but still important
  - Words: record, house, contract, lead, lives, protest, survey, project, separate, present, read, subject, rebel, finance, estimate
  - Counts: 195, 150, 143, 131, 130, 105, 94, 91, 90, 87, 80, 72, 68, 48, 46, 46

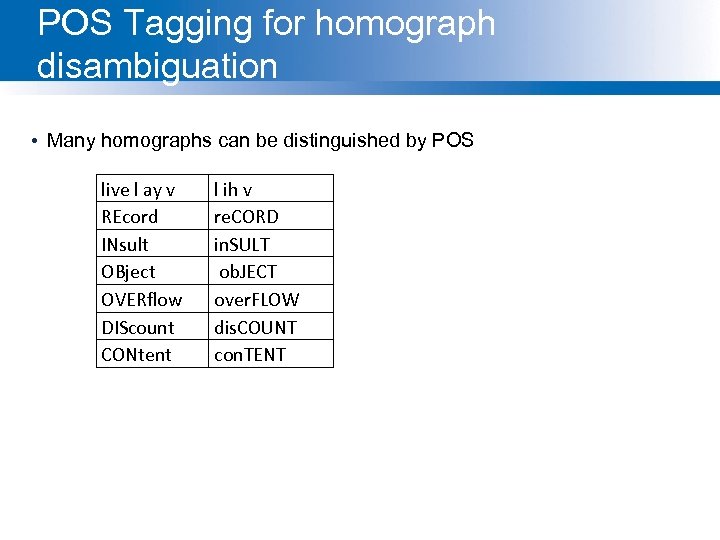

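POS Tagging for homograph disambiguation
- Many homographs can be distinguished by POS

| Word | Pronunciation A | Pronunciation B |
|---|---|---|
| live | l ay v | l ih v |
| record | REcord | reCORD |
| insult | INsult | inSULT |
| object | OBject | obJECT |
| overflow | OVERflow | overFLOW |
| discount | DIScount | disCOUNT |
| content | CONtent | conTENT |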
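
Once a tagger has assigned POS tags, disambiguation reduces to a lookup keyed by word and tag. A minimal sketch using a few of the pairs above; the coarse tags (N, V, ADJ) attached to each reading and the first-reading fallback are assumptions for illustration.

```python
# Readings keyed by coarse POS tag; stress shown by capitals, as in the table.
HOMOGRAPHS = {
    "record": {"N": "REcord", "V": "reCORD"},
    "insult": {"N": "INsult", "V": "inSULT"},
    "object": {"N": "OBject", "V": "obJECT"},
    "live": {"ADJ": "l ay v", "V": "l ih v"},
}


def pronounce_homograph(word, pos):
    """Return the reading for (word, pos), or None if word is not a known homograph."""
    readings = HOMOGRAPHS.get(word.lower())
    if readings is None:
        return None  # not a homograph: use the normal lexicon / LTS path
    if pos in readings:
        return readings[pos]
    return next(iter(readings.values()))  # unexpected tag: first listed reading


# "I record the record": tags from a (hypothetical) POS tagger.
tagged = [("I", "PRO"), ("record", "V"), ("the", "DET"), ("record", "N")]
readings = [pronounce_homograph(w, t) for w, t in tagged]
```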

Part of speech tagging
- 8 (ish) traditional parts of speech
  - This idea has been around for over 2000 years (Dionysius Thrax of Alexandria, c. 100 B.C.)
  - Called: parts-of-speech, lexical category, word classes, morphological classes, lexical tags, POS
  - We’ll use POS most frequently

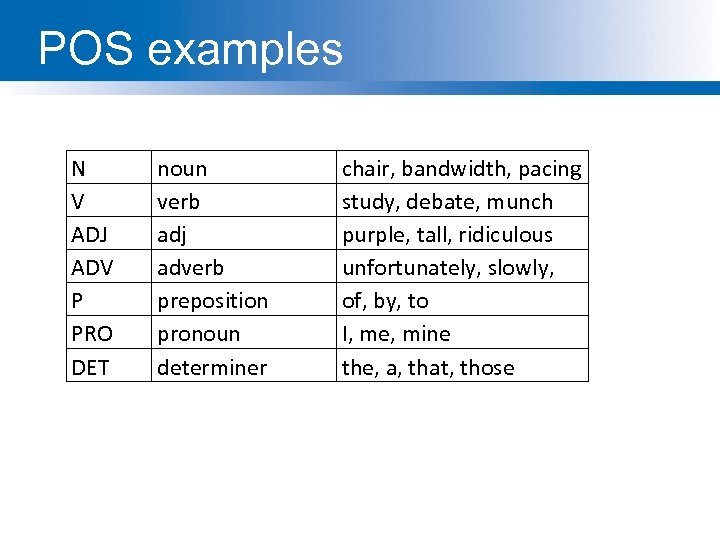

POS examples • N (noun): chair, bandwidth, pacing • V (verb): study, debate, munch • ADJ (adjective): purple, tall, ridiculous • ADV (adverb): unfortunately, slowly • P (preposition): of, by, to • PRO (pronoun): I, me, mine • DET (determiner): the, a, that, those


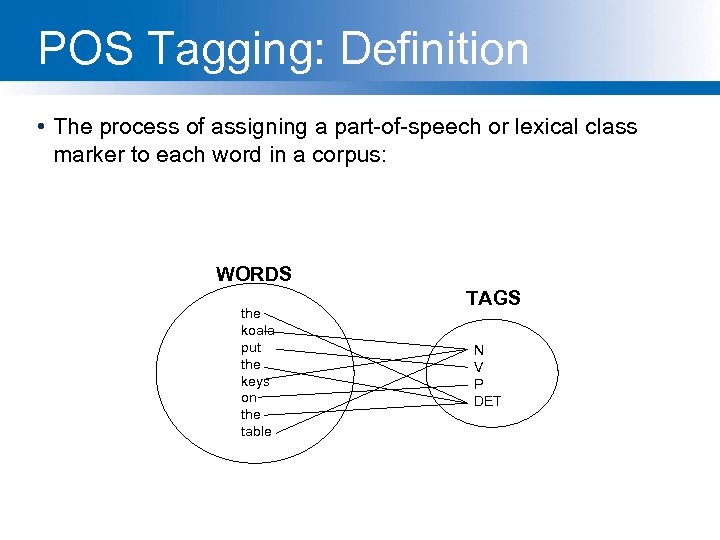

POS Tagging: Definition • The process of assigning a part-of-speech or lexical class marker to each word in a corpus: WORDS: the, koala, put, the, keys, on, the, table → TAGS: N, V, P, DET


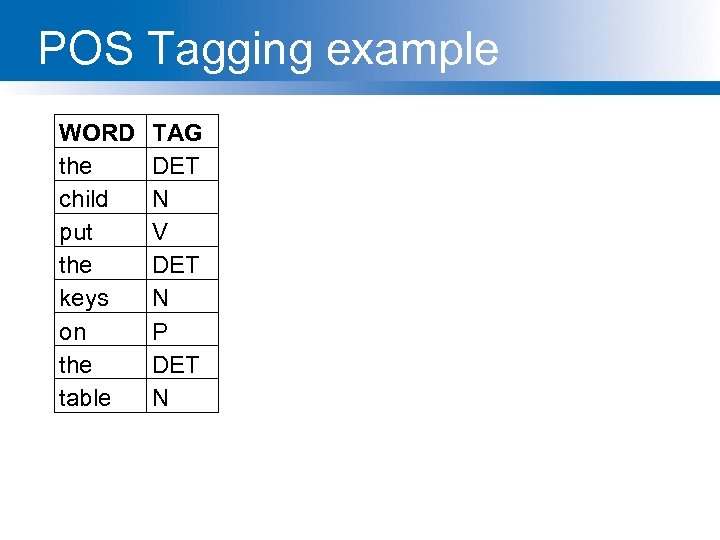

POS Tagging example: the/DET child/N put/V the/DET keys/N on/P the/DET table/N


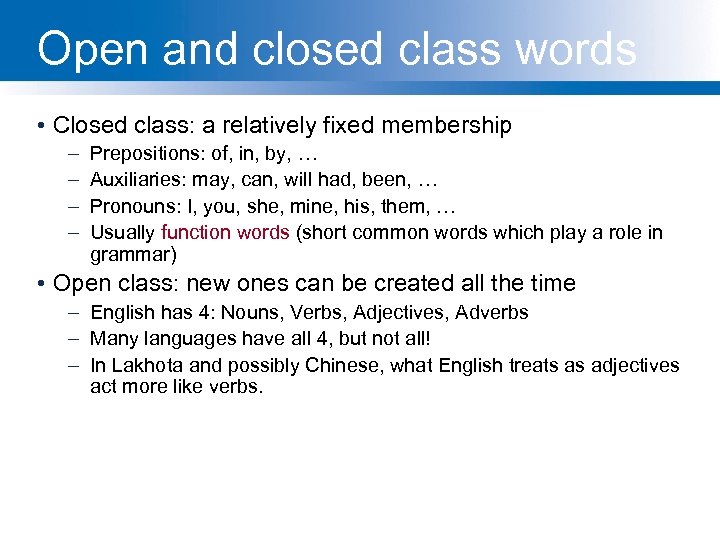

Open and closed class words • Closed class: a relatively fixed membership – Prepositions: of, in, by, … – Auxiliaries: may, can, will, had, been, … – Pronouns: I, you, she, mine, his, them, … – Usually function words (short common words which play a role in grammar) • Open class: new ones can be created all the time – English has 4: Nouns, Verbs, Adjectives, Adverbs – Many languages have all 4, but not all! – In Lakhota and possibly Chinese, what English treats as adjectives act more like verbs.


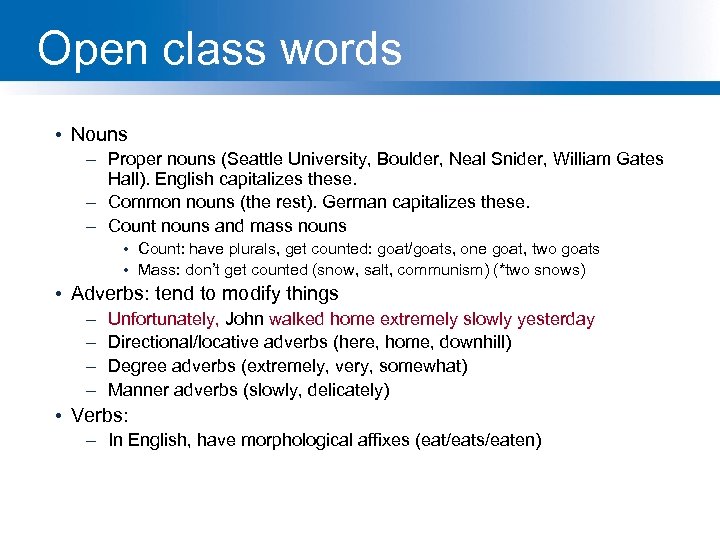

Open class words • Nouns – Proper nouns (Seattle University, Boulder, Neal Snider, William Gates Hall). English capitalizes these. – Common nouns (the rest). German capitalizes these. – Count nouns and mass nouns • Count: have plurals, get counted: goat/goats, one goat, two goats • Mass: don’t get counted (snow, salt, communism) (*two snows) • Adverbs: tend to modify things – Unfortunately, John walked home extremely slowly yesterday – Directional/locative adverbs (here, home, downhill) – Degree adverbs (extremely, very, somewhat) – Manner adverbs (slowly, delicately) • Verbs: – In English, have morphological affixes (eat/eats/eaten)


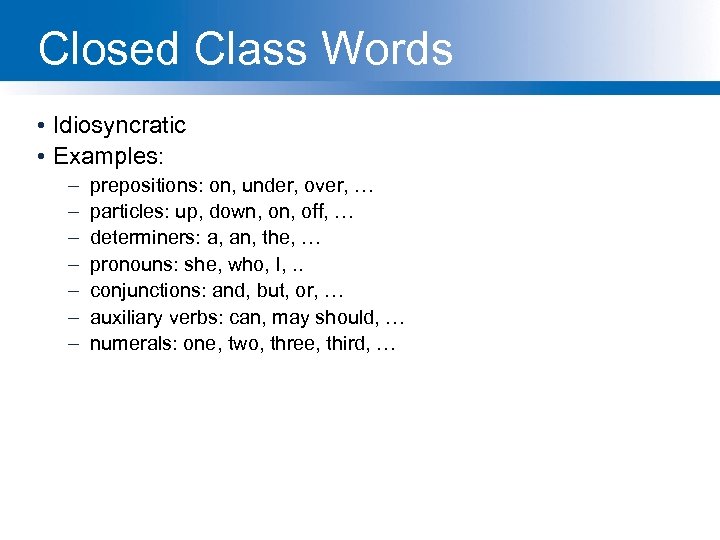

Closed Class Words • Idiosyncratic • Examples: – prepositions: on, under, over, … – particles: up, down, off, … – determiners: a, an, the, … – pronouns: she, who, I, … – conjunctions: and, but, or, … – auxiliary verbs: can, may, should, … – numerals: one, two, three, third, …


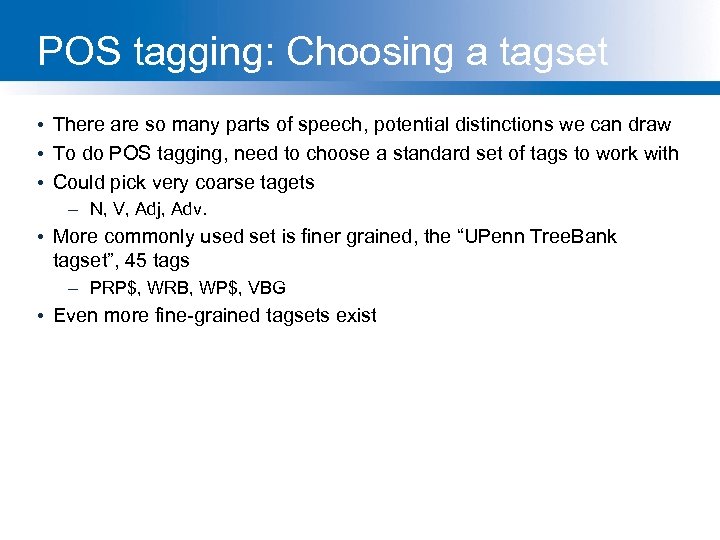

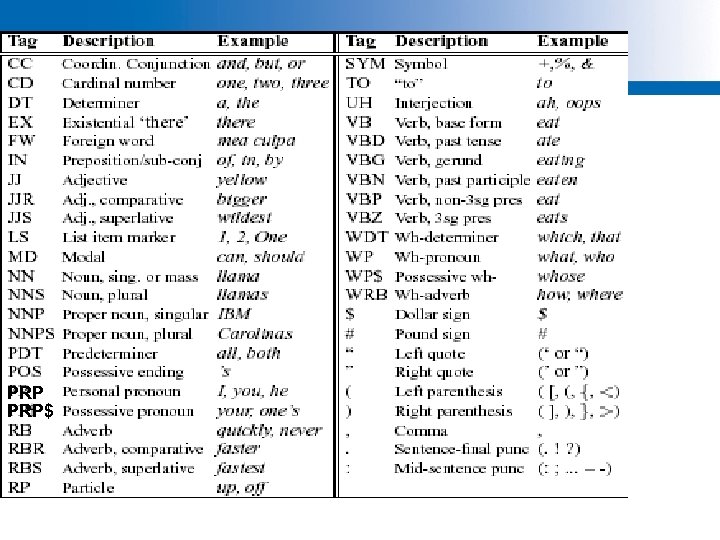

POS tagging: Choosing a tagset • There are so many parts of speech, so many potential distinctions we can draw • To do POS tagging, we need to choose a standard set of tags to work with • Could pick very coarse tagsets – N, V, Adj, Adv. • A more commonly used set is finer grained: the “UPenn Treebank tagset”, 45 tags – PRP$, WRB, WP$, VBG • Even more fine-grained tagsets exist


(Table: the UPenn Treebank tagset, e.g. PRP, PRP$)

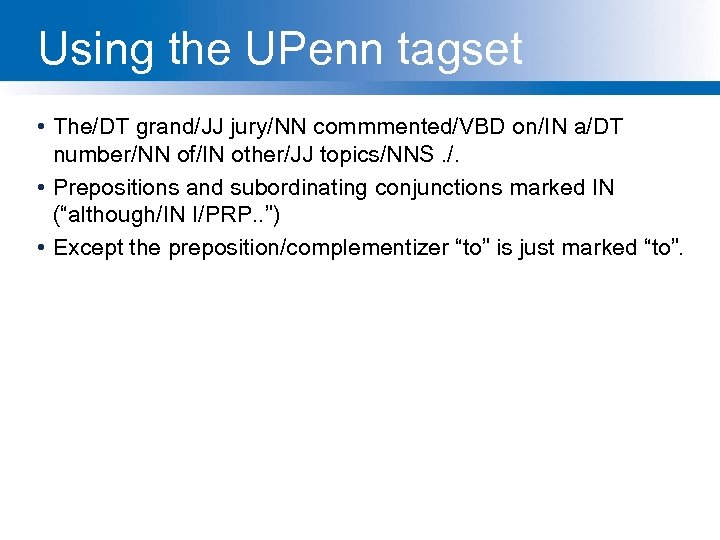

Using the UPenn tagset • The/DT grand/JJ jury/NN commented/VBD on/IN a/DT number/NN of/IN other/JJ topics/NNS ./. • Prepositions and subordinating conjunctions are marked IN (“although/IN I/PRP …”) • Except the preposition/complementizer “to”, which is just marked TO.
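The word/TAG notation above is easy to read mechanically. A minimal sketch, assuming whitespace-separated tokens with the tag after the last slash, which is the convention of the example sentence.

```python
def parse_tagged(text):
    """Turn 'word/TAG word/TAG ...' into (word, tag) pairs.

    Splitting on the last slash keeps tokens like './.' and '1/2/CD' intact.
    """
    pairs = []
    for token in text.split():
        word, slash, tag = token.rpartition("/")
        if not slash or not word:
            raise ValueError(f"not a word/TAG token: {token!r}")
        pairs.append((word, tag))
    return pairs


sentence = ("The/DT grand/JJ jury/NN commented/VBD on/IN a/DT number/NN "
            "of/IN other/JJ topics/NNS ./.")
print(parse_tagged(sentence)[-2:])  # [('topics', 'NNS'), ('.', '.')]
```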


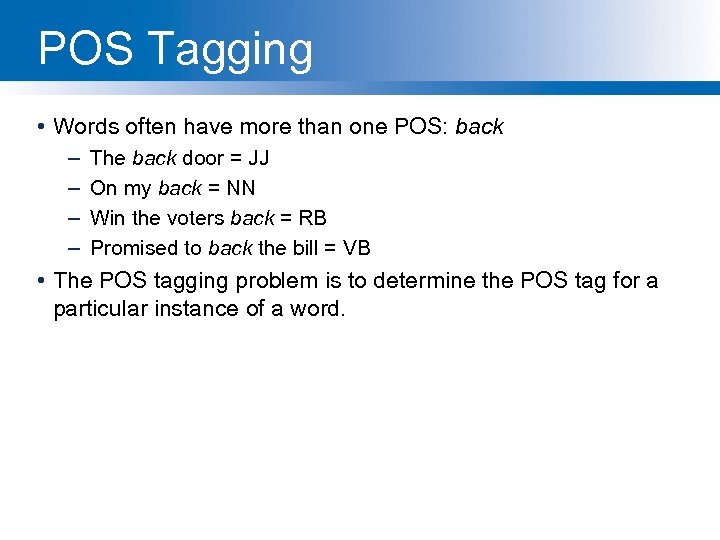

POS Tagging • Words often have more than one POS, e.g. back: – The back door = JJ – On my back = NN – Win the voters back = RB – Promised to back the bill = VB • The POS tagging problem is to determine the POS tag for a particular instance of a word.


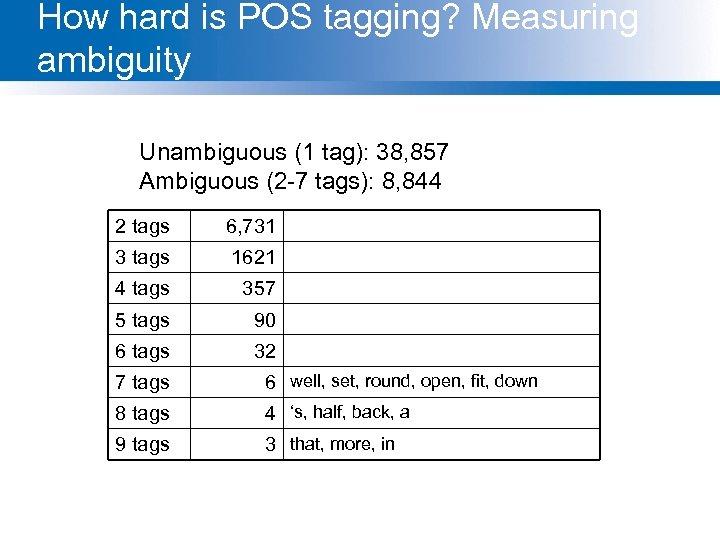

How hard is POS tagging? Measuring ambiguity • Unambiguous (1 tag): 38,857 • Ambiguous (2-9 tags): 8,844 – 2 tags: 6,731 – 3 tags: 1,621 – 4 tags: 357 – 5 tags: 90 – 6 tags: 32 – 7 tags: 6 (well, set, round, open, fit, down) – 8 tags: 4 ('s, half, back, a) – 9 tags: 3 (that, more, in)
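A quick check of the arithmetic: the per-row counts add up to the ambiguous total of 8,844 only when the 8- and 9-tag words are included. Assuming, as the example words suggest, that these are counts of distinct words rather than running tokens, about 18.5% of them are ambiguous.

```python
# Word counts by number of possible tags, copied from the slide.
unambiguous = 38857
counts_by_tags = {2: 6731, 3: 1621, 4: 357, 5: 90, 6: 32, 7: 6, 8: 4, 9: 3}

ambiguous = sum(counts_by_tags.values())  # every word with 2 or more tags
total = unambiguous + ambiguous
share = 100 * ambiguous / total

print(f"ambiguous={ambiguous}, total={total}, share={share:.1f}%")
# ambiguous=8844, total=47701, share=18.5%
```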


3 methods for POS tagging 1. Rule-based tagging – (ENGTWOL) 2. Stochastic (=Probabilistic) tagging – HMM (Hidden Markov Model) tagging 3. Transformation-based tagging – Brill tagger


Rule-based tagging • Start with a dictionary • Assign all possible tags to words from the dictionary • Write rules by hand to selectively remove tags • Leaving the correct tag for each word


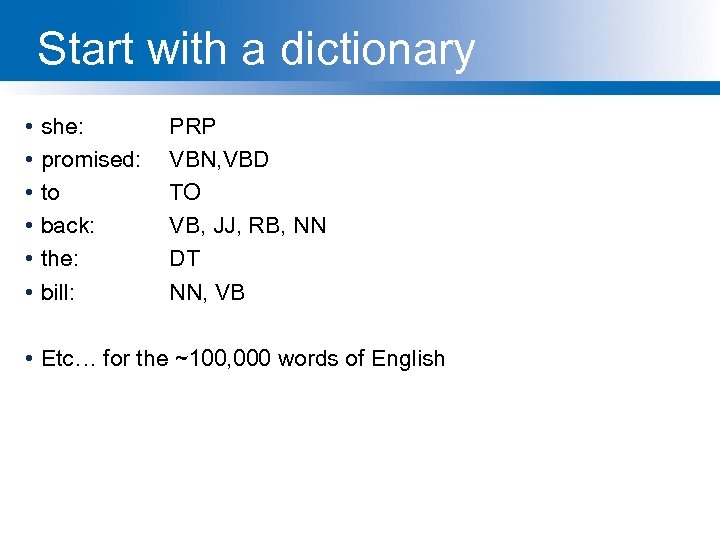

Start with a dictionary • she: PRP • promised: VBN, VBD • to: TO • back: VB, JJ, RB, NN • the: DT • bill: NN, VB • Etc. for the ~100,000 words of English


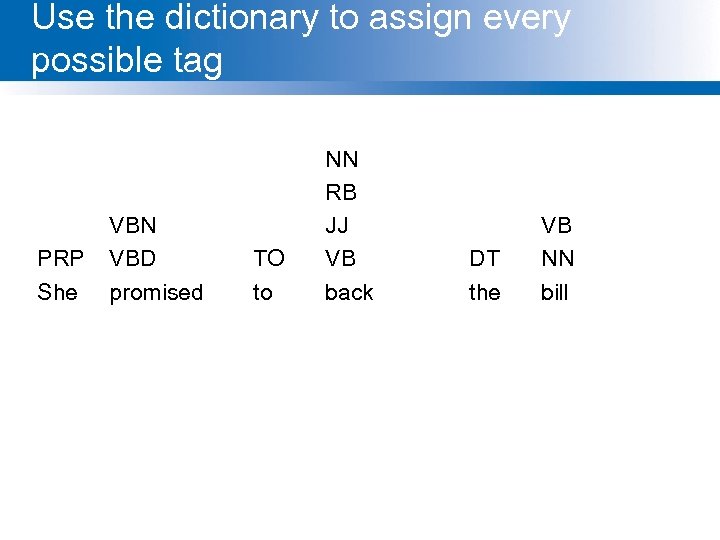

Use the dictionary to assign every possible tag: She/PRP promised/{VBN, VBD} to/TO back/{NN, RB, JJ, VB} the/DT bill/{VB, NN}


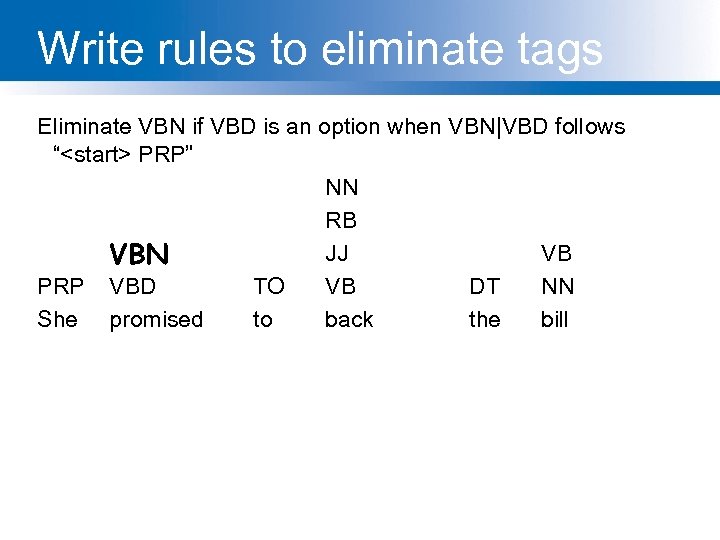

Write rules to eliminate tags Eliminate VBN if VBD is an option when VBN|VBD follows “
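The dictionary-then-eliminate procedure can be sketched in a few lines of Python. The slide's context for the VBN rule is cut off, so this sketch assumes (hypothetically) that it fires after an unambiguous PRP; the other two rules are invented for illustration so that every word of “She promised to back the bill” ends up with one tag.

```python
# Dictionary from the slide: every tag each word can take.
LEXICON = {
    "she": {"PRP"},
    "promised": {"VBN", "VBD"},
    "to": {"TO"},
    "back": {"VB", "JJ", "RB", "NN"},
    "the": {"DT"},
    "bill": {"NN", "VB"},
}


def assign_all_tags(words):
    """Step 1: every word gets all tags the dictionary allows (copied, so rules can edit them)."""
    return [set(LEXICON[w.lower()]) for w in words]


def apply_rules(tags):
    """Step 2: hand-written elimination rules, applied left to right in place."""
    for i in range(1, len(tags)):
        prev = tags[i - 1]
        # Slide rule: eliminate VBN if VBD is an option.
        # ASSUMPTION: the slide's context is cut off; we apply it after an unambiguous PRP.
        if prev == {"PRP"} and {"VBN", "VBD"} <= tags[i]:
            tags[i].discard("VBN")
        # Hypothetical rule: after infinitival TO, keep only the base-form verb.
        if prev == {"TO"} and "VB" in tags[i]:
            tags[i] &= {"VB"}
        # Hypothetical rule: after a determiner, drop verb readings if others remain.
        if prev == {"DT"} and tags[i] - {"VB", "VBD", "VBN"}:
            tags[i] -= {"VB", "VBD", "VBN"}
    return tags


words = "She promised to back the bill".split()
print(list(zip(words, apply_rules(assign_all_tags(words)))))
```

Real rule-based taggers such as ENGTWOL use far more rules with richer contexts; the point here is only the shape of the process.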


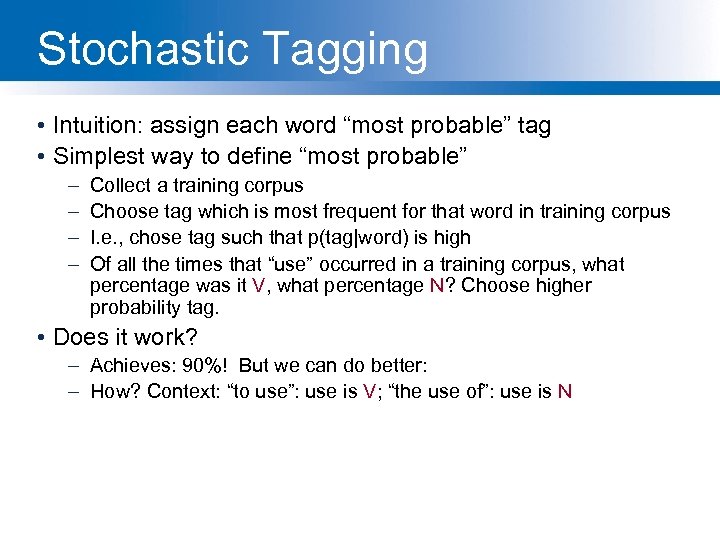

Stochastic Tagging • Intuition: assign each word its “most probable” tag • Simplest way to define “most probable” – Collect a training corpus – Choose the tag which is most frequent for that word in the training corpus – I.e., choose the tag such that p(tag|word) is highest – Of all the times that “use” occurred in a training corpus, what percentage was it V, what percentage N? Choose the higher-probability tag. • Does it work? – Achieves: 90%! But we can do better: – How? Context: “to use”: use is V; “the use of”: use is N
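The most-frequent-tag baseline is easy to write down. A minimal sketch, assuming a tiny hypothetical training corpus and a default tag of NN for unseen words; it reproduces the “to use” failure the slide mentions, because the tag is chosen without looking at context.

```python
from collections import Counter, defaultdict


def train_most_frequent(tagged_sentences):
    """For each word, keep the tag it carries most often in the training corpus."""
    counts = defaultdict(Counter)
    for sentence in tagged_sentences:
        for word, tag in sentence:
            counts[word.lower()][tag] += 1
    return {word: c.most_common(1)[0][0] for word, c in counts.items()}


def tag_baseline(words, model, default="NN"):
    """Tag each word independently of context; unseen words get `default`."""
    return [model.get(w.lower(), default) for w in words]


# Tiny hypothetical training corpus in which "use" is usually a noun.
corpus = [
    [("the", "DT"), ("use", "NN"), ("of", "IN"), ("force", "NN")],
    [("the", "DT"), ("use", "NN"), ("is", "VBZ"), ("rare", "JJ")],
    [("we", "PRP"), ("want", "VBP"), ("to", "TO"), ("use", "VB"), ("it", "PRP")],
]
model = train_most_frequent(corpus)
print(tag_baseline(["to", "use", "it"], model))  # ['TO', 'NN', 'PRP']: "use" is wrong here
```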


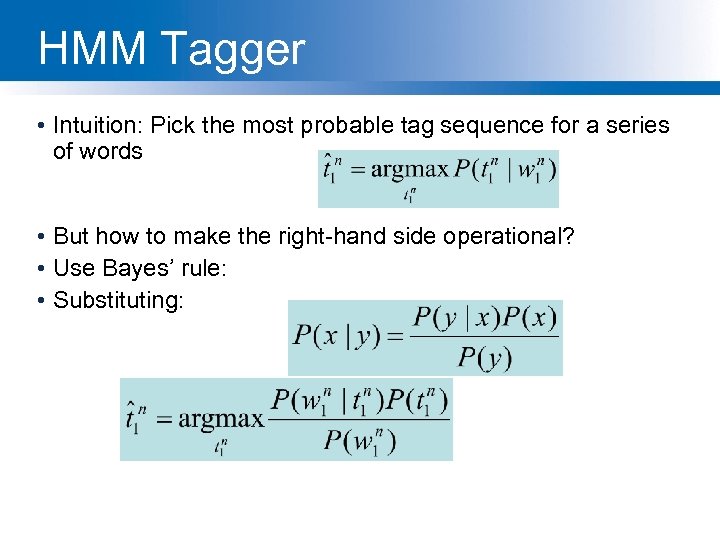

HMM Tagger • Intuition: Pick the most probable tag sequence for a series of words • But how to make the right-hand side operational? • Use Bayes’ rule: • Substituting:


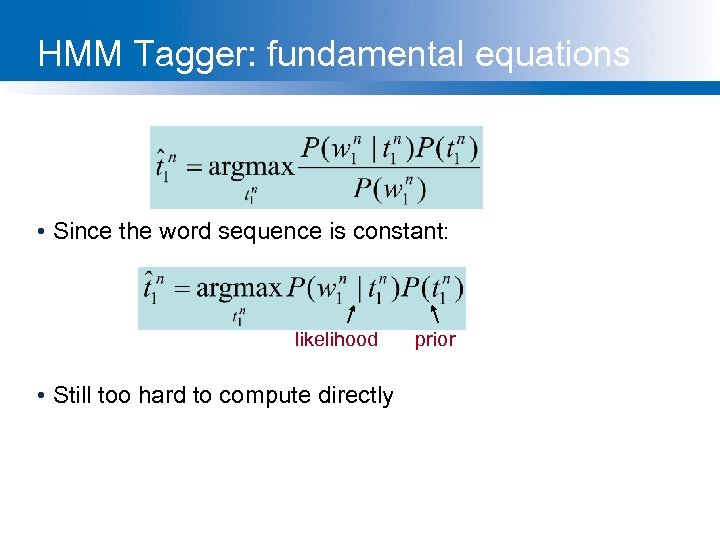

HMM Tagger: fundamental equations • Since the word sequence is constant, the denominator P(words) can be dropped, leaving the product of a likelihood P(words|tags) and a prior P(tags) • Still too hard to compute directly


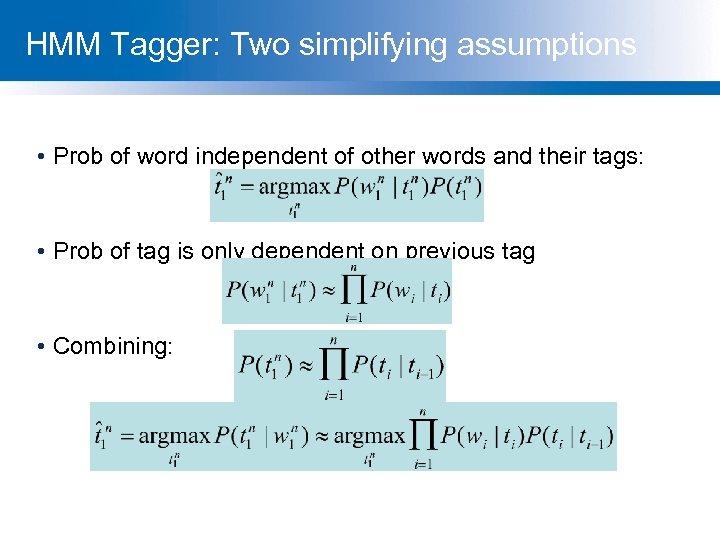

HMM Tagger: Two simplifying assumptions • The probability of a word depends only on its own tag, independent of other words and their tags • The probability of a tag depends only on the previous tag • Combining:
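The equations these HMM slides build up to, written out in the standard form of the derivation. Here $w_1^n$ are the words, $t_1^n$ the tags, and $t_0$ is taken to be a sentence-start tag (an assumption needed for the first transition):

$$
\begin{aligned}
\hat{t}_1^n &= \operatorname*{argmax}_{t_1^n} P(t_1^n \mid w_1^n)
  = \operatorname*{argmax}_{t_1^n} \frac{P(w_1^n \mid t_1^n)\, P(t_1^n)}{P(w_1^n)} \\
&= \operatorname*{argmax}_{t_1^n}
  \underbrace{P(w_1^n \mid t_1^n)}_{\text{likelihood}}\;
  \underbrace{P(t_1^n)}_{\text{prior}} \\
P(w_1^n \mid t_1^n) &\approx \prod_{i=1}^{n} P(w_i \mid t_i), \qquad
P(t_1^n) \approx \prod_{i=1}^{n} P(t_i \mid t_{i-1}) \\
\hat{t}_1^n &\approx \operatorname*{argmax}_{t_1^n} \prod_{i=1}^{n} P(w_i \mid t_i)\, P(t_i \mid t_{i-1})
\end{aligned}
$$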


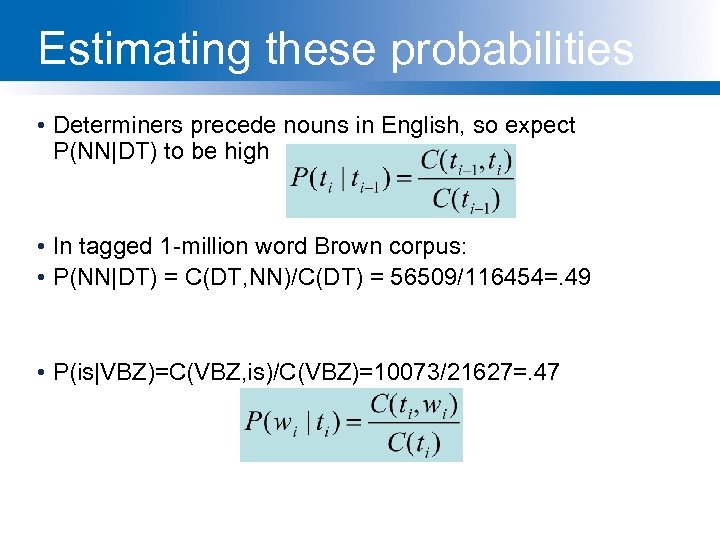

Estimating these probabilities • Determiners precede nouns in English, so expect P(NN|DT) to be high • In the tagged 1-million-word Brown corpus: • P(NN|DT) = C(DT, NN)/C(DT) = 56509/116454 = .49 • P(is|VBZ) = C(VBZ, is)/C(VBZ) = 10073/21627 = .47
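Both kinds of probability are relative frequencies, so they can be estimated by counting. A minimal sketch, assuming a pseudo-tag `<s>` before each sentence; here the transition denominator counts a tag only when something follows it, so each row of transition probabilities sums to 1 (the slide's C(DT) counts every DT). The two-sentence corpus is hypothetical.

```python
from collections import Counter


def estimate_hmm(tagged_sentences, start="<s>"):
    """Maximum-likelihood HMM estimates from a tagged corpus.

    transition[(prev, tag)] = C(prev, tag) / C(prev as a predecessor)
    emission[(tag, word)]   = C(tag, word) / C(tag)
    A pseudo-tag `start` precedes each sentence (an assumption of this sketch).
    """
    prev_counts, bigrams, tag_counts, emissions = Counter(), Counter(), Counter(), Counter()
    for sentence in tagged_sentences:
        prev = start
        for word, tag in sentence:
            prev_counts[prev] += 1
            bigrams[(prev, tag)] += 1
            tag_counts[tag] += 1
            emissions[(tag, word.lower())] += 1
            prev = tag
    transition = {pair: n / prev_counts[pair[0]] for pair, n in bigrams.items()}
    emission = {pair: n / tag_counts[pair[0]] for pair, n in emissions.items()}
    return transition, emission


# The slide's Brown-corpus ratios, rounded as on the slide:
print(round(56509 / 116454, 2), round(10073 / 21627, 2))  # 0.49 0.47

transition, emission = estimate_hmm([
    [("the", "DT"), ("cat", "NN")],
    [("a", "DT"), ("big", "JJ"), ("dog", "NN")],
])
print(transition[("DT", "NN")], emission[("DT", "the")])  # 0.5 0.5
```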


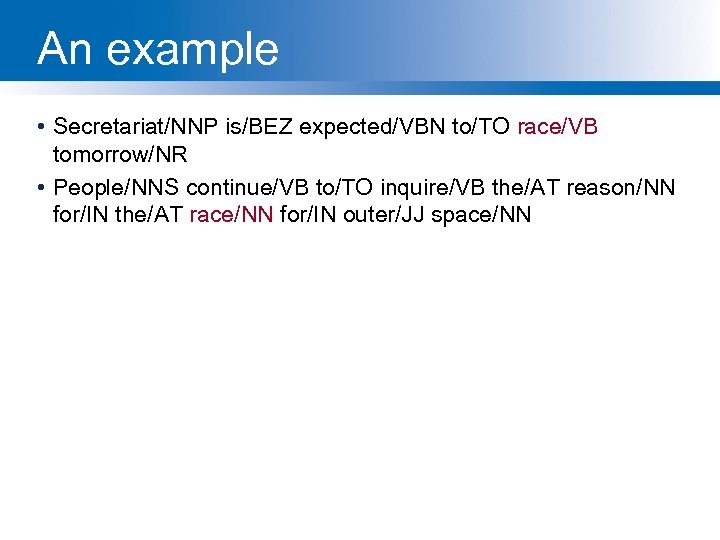

An example • Secretariat/NNP is/BEZ expected/VBN to/TO race/VB tomorrow/NR • People/NNS continue/VB to/TO inquire/VB the/AT reason/NN for/IN the/AT race/NN for/IN outer/JJ space/NN


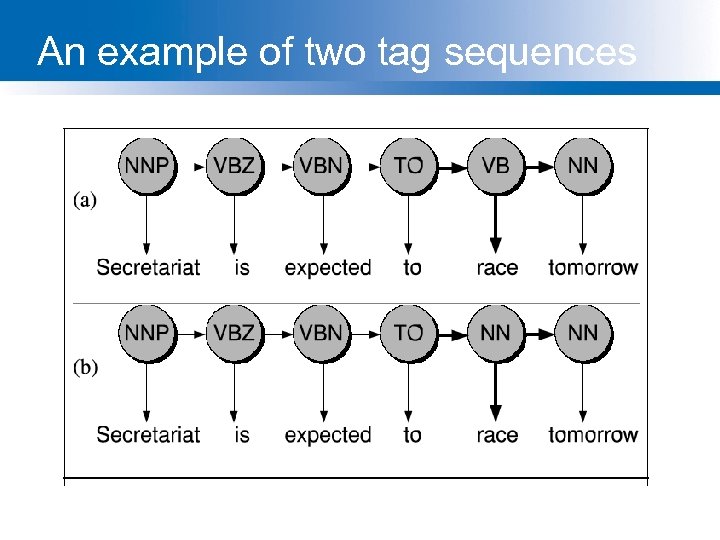

An example of two tag sequences


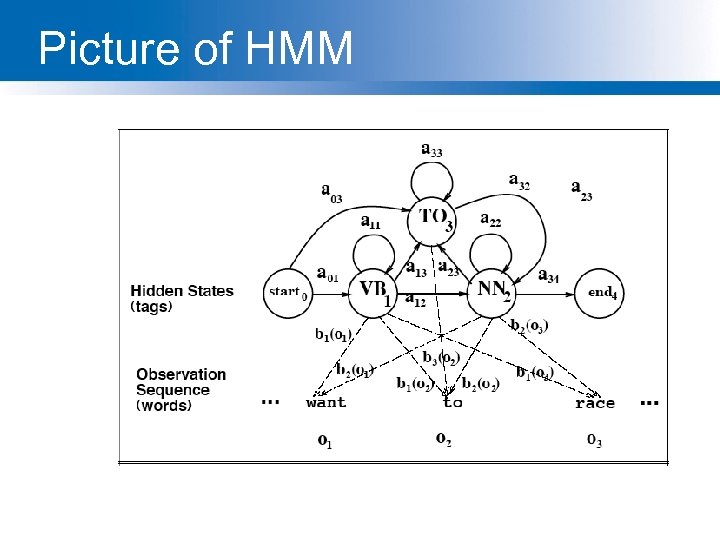

Picture of HMM


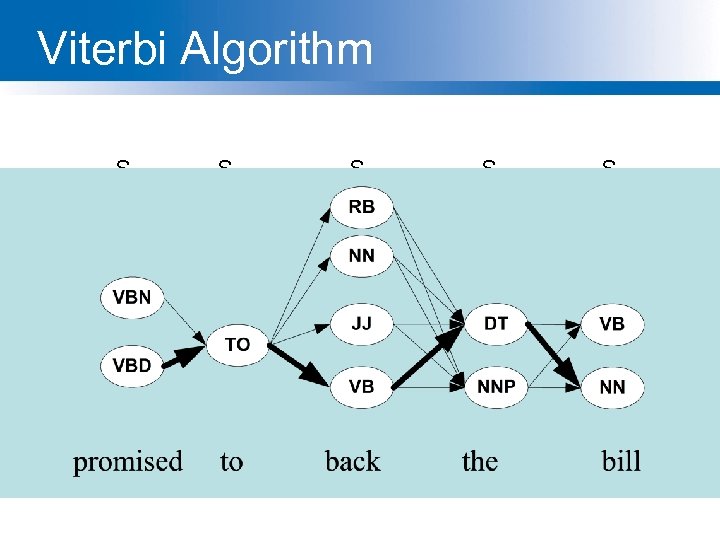

Viterbi Algorithm (figure: states S1, S2, S3, S4, S5)
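A compact Viterbi decoder for the bigram HMM tagger, working in log space to avoid underflow. The transition and emission numbers below are invented for illustration (not corpus estimates); they mimic the “Secretariat is expected to race tomorrow” example, where the VB reading of “race” should win.

```python
import math


def viterbi(words, tags, transition, emission, start="<s>"):
    """Most probable tag sequence under a bigram HMM, computed in log space.

    transition[(prev_tag, tag)] and emission[(tag, word)] are probabilities;
    missing entries count as probability 0.
    """
    def logp(table, key):
        p = table.get(key, 0.0)
        return math.log(p) if p > 0 else -math.inf

    # best[i][t]: log-prob of the best path that ends in tag t at word i
    best = [{t: logp(transition, (start, t)) + logp(emission, (t, words[0])) for t in tags}]
    back = [{}]
    for i in range(1, len(words)):
        best.append({})
        back.append({})
        for t in tags:
            prev, score = max(((p, best[i - 1][p] + logp(transition, (p, t))) for p in tags),
                              key=lambda pair: pair[1])
            best[i][t] = score + logp(emission, (t, words[i]))
            back[i][t] = prev
    # Follow back-pointers from the best final tag.
    path = [max(best[-1], key=best[-1].get)]
    for i in range(len(words) - 1, 0, -1):
        path.append(back[i][path[-1]])
    return path[::-1]


# Toy probabilities, invented for illustration (not corpus estimates).
TAGS = ["TO", "VB", "NN", "NR"]
TRANSITION = {("<s>", "TO"): 1.0, ("TO", "VB"): 0.8, ("TO", "NN"): 0.01,
              ("VB", "NR"): 0.01, ("NN", "NR"): 0.005}
EMISSION = {("TO", "to"): 1.0, ("VB", "race"): 0.0001, ("NN", "race"): 0.0005,
            ("NR", "tomorrow"): 0.5}

print(viterbi(["to", "race", "tomorrow"], TAGS, TRANSITION, EMISSION))  # ['TO', 'VB', 'NR']
```

Even though P(race|NN) is larger than P(race|VB) here, the strong TO → VB transition makes the verb reading win, which is exactly the contextual effect the baseline tagger misses.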


Evaluation • The result is compared with a manually coded “Gold Standard” – Typically accuracy reaches 96-97% – This may be compared with the result for a baseline tagger (one that uses no context) • Important: 100% is impossible even for human annotators
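Tagging accuracy is the fraction of tokens whose tag matches the gold standard. A minimal sketch; the gold tags are UPenn tags for “the child put the keys on the table”, and the single VBN error is invented for illustration.

```python
def accuracy(predicted, gold):
    """Fraction of tokens whose predicted tag equals the gold-standard tag."""
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold tag sequences differ in length")
    if not gold:
        return 0.0
    correct = sum(p == g for p, g in zip(predicted, gold))
    return correct / len(gold)


gold = ["DT", "NN", "VBD", "DT", "NNS", "IN", "DT", "NN"]
predicted = ["DT", "NN", "VBN", "DT", "NNS", "IN", "DT", "NN"]  # one error on "put"
print(accuracy(predicted, gold))  # 0.875
```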


Outline • History of TTS • Architecture • Text Processing • Letter-to-Sound Rules • Prosody • Waveform Generation • Evaluation


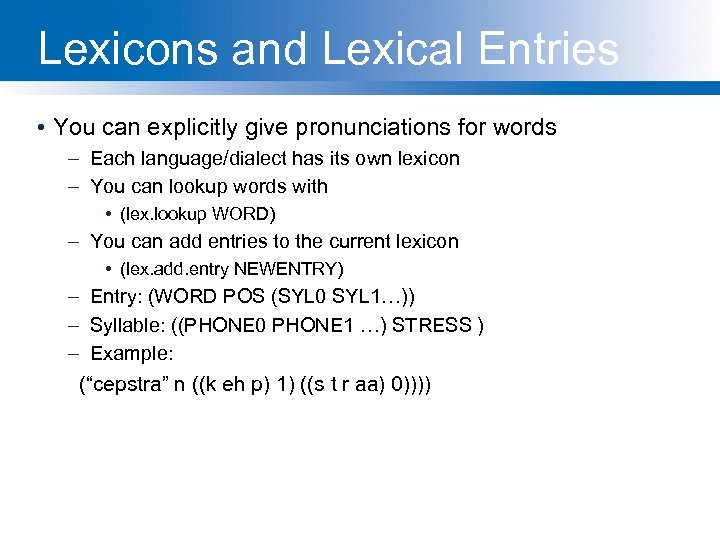

Lexicons and Lexical Entries • You can explicitly give pronunciations for words – Each language/dialect has its own lexicon – You can look up words with • (lex.lookup WORD) – You can add entries to the current lexicon • (lex.add.entry NEWENTRY) – Entry: (WORD POS (SYL0 SYL1 …)) – Syllable: ((PHONE0 PHONE1 …) STRESS) – Example: ("cepstra" n (((k eh p) 1) ((s t r aa) 0)))
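To make the entry format concrete, a small Python reader that turns an entry written as (WORD POS (SYL0 SYL1 …)) into (word, POS, syllables). This only mirrors the data layout; it is not Festival code (Festival reads entries with its own Scheme interpreter). The example assumes the balanced form of the “cepstra” entry, with one list wrapping both syllables.

```python
import re


def parse_sexpr(text):
    """Parse one s-expression into nested lists of strings (double quotes stripped)."""
    tokens = re.findall(r'\(|\)|"[^"]*"|[^\s()]+', text)
    pos = 0

    def read():
        nonlocal pos
        token = tokens[pos]
        pos += 1
        if token != "(":
            return token.strip('"')
        items = []
        while tokens[pos] != ")":
            items.append(read())
        pos += 1  # consume the closing ")"
        return items

    tree = read()
    if pos != len(tokens):
        raise ValueError("trailing input after the expression")
    return tree


def parse_entry(text):
    """(WORD POS (SYL0 SYL1 ...)) -> (word, pos, [(phones, stress), ...])."""
    word, pos, syllables = parse_sexpr(text)
    return word, pos, [(phones, int(stress)) for phones, stress in syllables]


print(parse_entry('("cepstra" n (((k eh p) 1) ((s t r aa) 0)))'))
# ('cepstra', 'n', [(['k', 'eh', 'p'], 1), (['s', 't', 'r', 'aa'], 0)])
```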


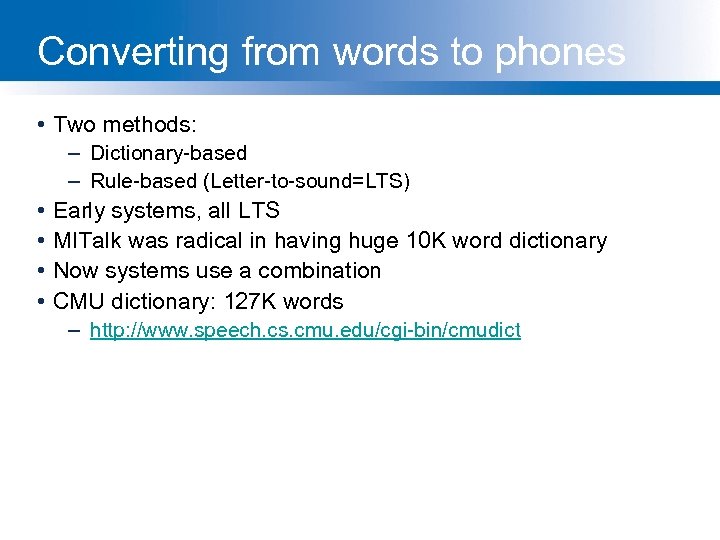

Converting from words to phones • Two methods: – Dictionary-based – Rule-based (Letter-to-sound = LTS) • Early systems were all LTS • MITalk was radical in having a huge 10K-word dictionary • Now systems use a combination • CMU dictionary: 127K words – http://www.speech.cs.cmu.edu/cgi-bin/cmudict


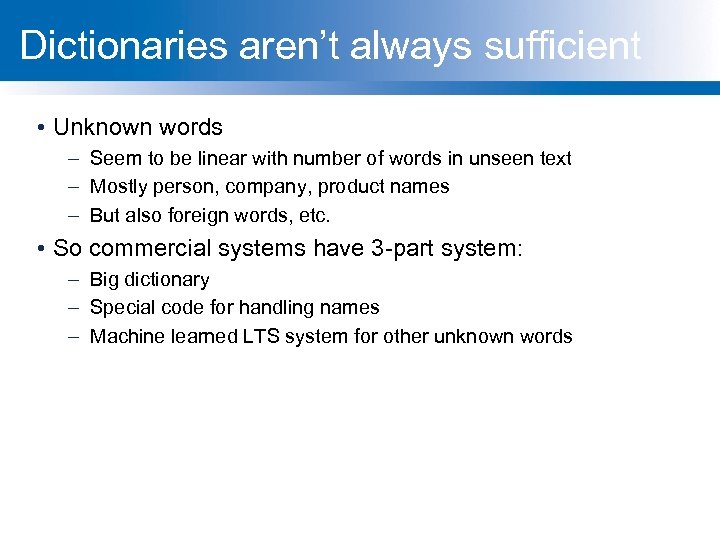

Dictionaries aren’t always sufficient • Unknown words – Their number seems to grow linearly with the number of words in unseen text – Mostly person, company, product names – But also foreign words, etc. • So commercial systems have a 3-part system: – Big dictionary – Special code for handling names – Machine-learned LTS system for other unknown words
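The 3-part design is a fallback chain: try the dictionary, then the name handler, then LTS, which always produces an answer. A minimal sketch; `make_pronouncer` and all three components are hypothetical stand-ins (the “LTS” here just spells the word out).

```python
def make_pronouncer(dictionary, name_lookup, lts):
    """Chain the three parts listed on the slide.

    dictionary: dict from lowercase word to phone string
    name_lookup: function(word) -> phone string, or None if it cannot handle the word
    lts: function(word) -> phone string (the fallback always answers)
    """
    def pronounce(word):
        key = word.lower()
        if key in dictionary:  # 1) big dictionary
            return dictionary[key]
        phones = name_lookup(word)  # 2) special-purpose name handling
        if phones is not None:
            return phones
        return lts(word)  # 3) machine-learned LTS for everything else
    return pronounce


# Hypothetical stand-ins for real components.
toy_dictionary = {"cat": "k ae t"}
toy_names = {"jimenez": "hh ih m eh n eh z"}
pronounce = make_pronouncer(
    toy_dictionary,
    lambda w: toy_names.get(w.lower()),
    lambda w: " ".join(w.lower()),  # placeholder "LTS": one letter per phone
)
print(pronounce("Cat"), "|", pronounce("Jimenez"), "|", pronounce("blick"))
```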


Letter-to-Sound Rules • Festival LTS rules: • (LEFTCONTEXT [ ITEMS ] RIGHTCONTEXT = NEWITEMS ) • Example: – ( # [ c h ] C = k ) – ( # [ c h ] = ch ) • # denotes beginning of word • C means all consonants • Rules apply in order – “christmas” pronounced with [k] – But a word with ch followed by a non-consonant is pronounced [ch] • E.g., “choice”
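A simplified Python re-implementation of ordered context rules, assuming only two context symbols: `#` for the word boundary and `C` for any consonant letter. It is not Festival's rule interpreter; the third “ch” rule and the letter-copy fallback rules are hypothetical placeholders so that every letter produces something.

```python
VOWELS = set("aeiou")


def symbol_matches(symbol, char):
    """'#' matches the word boundary, 'C' any consonant letter, anything else itself."""
    if symbol == "#":
        return char == "#"
    if symbol == "C":
        return char.isalpha() and char not in VOWELS
    return symbol == char


def context_matches(pattern, chars):
    """True if the context string has exactly one matching character per pattern symbol."""
    return len(chars) == len(pattern) and all(
        symbol_matches(s, c) for s, c in zip(pattern, chars)
    )


def apply_lts(word, rules):
    """Rewrite a word left to right; at each position the first matching rule wins."""
    padded = "#" + word.lower() + "#"
    phones, i = [], 1  # position 0 is the leading boundary
    while i < len(padded) - 1:
        for left, items, right, output in rules:  # rules apply in order
            n = len(items)
            start = i - len(left)
            if (padded[i:i + n] == items
                    and start >= 0
                    and context_matches(left, padded[start:i])
                    and context_matches(right, padded[i + n:i + n + len(right)])):
                phones.extend(output)
                i += n
                break
        else:
            raise ValueError(f"no rule matches {padded[i]!r} in {word!r}")
    return phones


RULES = [
    ("#", "ch", "C", ["k"]),   # ( # [ c h ] C = k )
    ("#", "ch", "", ["ch"]),   # ( # [ c h ] = ch )
    ("", "ch", "", ["ch"]),    # hypothetical: word-internal ch
] + [("", c, "", [c]) for c in "abcdefghijklmnopqrstuvwxyz"]  # toy fallback: copy letter

print(apply_lts("christmas", RULES))  # ['k', 'r', 'i', 's', 't', 'm', 'a', 's']
print(apply_lts("choice", RULES))     # ['ch', 'o', 'i', 'c', 'e']
```

Because the more specific rule is listed first, “christmas” gets [k] while “choice” falls through to the general ch rule, which is the ordering behavior the slide describes.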


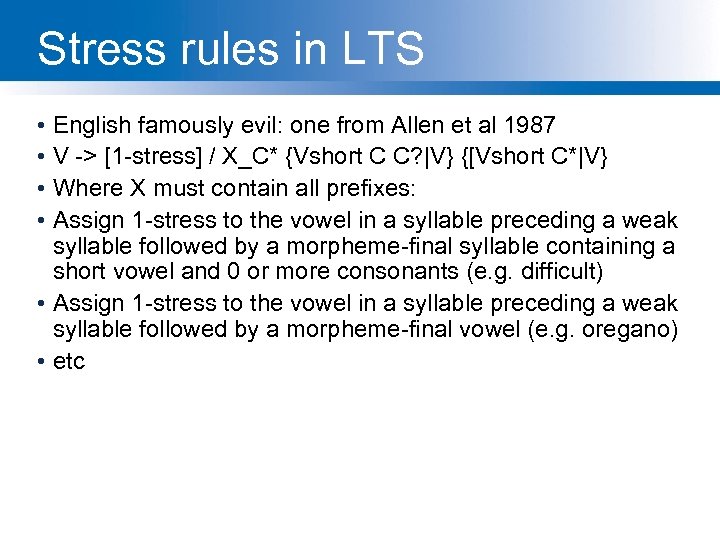

Stress rules in LTS • English famously evil: one from Allen et al. 1987 • $V \rightarrow [1\text{-stress}] \;/\; X\,\_\,C^{*}\,\{V_{\text{short}}\,C\,C? \mid V\}\,\{V_{\text{short}}\,C^{*} \mid V\}$ • Where X must contain all prefixes: • Assign 1-stress to the vowel in a syllable preceding a weak syllable followed by a morpheme-final syllable containing a short vowel and 0 or more consonants (e.g. difficult) • Assign 1-stress to the vowel in a syllable preceding a weak syllable followed by a morpheme-final vowel (e.g. oregano) • etc.


Modern method: Learning LTS rules automatically • Induce LTS from a dictionary of the language • Black et al. 1998 • Applied to English, German, French • Two steps: alignment and (CART-based) rule induction


Alignment • Letters: c h e c k e d • Phones: ch _ eh _ k _ t • Black et al. Method 1: – First scatter epsilons in all possible ways to cause letters and phones to align – Then collect stats for P(letter|phone) and select the best alignments to generate new stats – This is iterated a number of times until it settles (5-6 iterations) – This is the EM (expectation maximization) algorithm


Alignment • Black et al. method 2 • Hand-specify which letters can be rendered as which phones – C goes to k/ch/s/sh – W goes to w/v/f, etc. • Once the mapping table is created, find all valid alignments, find p(letter|phone), score all alignments, take the best
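Method 2 can be sketched as a small dynamic program: each letter emits either one phone its table entry permits, or epsilon (`_`), and the best-scoring valid alignment is kept. This sketch assumes one phone per letter at most (no multi-phone letters such as x → k s), and the mapping table and probabilities are hypothetical values chosen so that “checked” aligns as on the earlier slide. Re-counting pairs over the best alignments and re-estimating the table would give the iterative loop.

```python
import math
from functools import lru_cache


def best_alignment(letters, phones, allowed, prob):
    """Best letter-to-phone alignment (a sketch of method 2).

    Each letter produces either one phone that allowed[letter] permits, or
    the epsilon symbol '_'. prob[(letter, phone_or_epsilon)] holds the current
    probability estimates. Returns (log score, list with one symbol per letter);
    the score is -inf and the list empty if no valid alignment exists.
    """
    letters, phones = tuple(letters), tuple(phones)

    @lru_cache(maxsize=None)
    def solve(i, j):
        # Best completion after consuming i letters and j phones.
        if i == len(letters):
            return (0.0, ()) if j == len(phones) else (-math.inf, ())
        letter = letters[i]
        options = ["_"]
        if j < len(phones) and phones[j] in allowed.get(letter, set()):
            options.append(phones[j])
        best = (-math.inf, ())
        for out in options:
            p = prob.get((letter, out), 0.0)
            if p <= 0.0:
                continue
            rest_score, rest = solve(i + 1, j + (out != "_"))
            score = math.log(p) + rest_score
            if score > best[0]:
                best = (score, (out,) + rest)
        return best

    score, path = solve(0, 0)
    return score, list(path)


# Hypothetical hand-specified table and probability estimates.
ALLOWED = {"c": {"k", "ch", "s", "sh"}, "h": {"hh"}, "e": {"eh", "iy"},
           "k": {"k"}, "d": {"d", "t"}}
PROB = {("c", "ch"): 0.3, ("c", "k"): 0.3, ("c", "_"): 0.3,
        ("h", "hh"): 0.4, ("h", "_"): 0.6,
        ("e", "eh"): 0.5, ("e", "_"): 0.5,
        ("k", "k"): 0.9, ("k", "_"): 0.1,
        ("d", "d"): 0.5, ("d", "t"): 0.5}

score, path = best_alignment("checked", ["ch", "eh", "k", "t"], ALLOWED, PROB)
print(path)  # ['ch', '_', 'eh', '_', 'k', '_', 't']
```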


Alignment • Some alignments will turn out to be really bad. • These are just the cases where pronunciation doesn’t match letters: – Dept d ih p aa r t m ah n t – CMU s iy eh m y uw – Lieutenant l eh f t eh n ax n t (British) • Also foreign words • These can just be removed from alignment training


Building CART trees • Build a CART tree for each letter in the alphabet (26 plus accented) using a context of ±3 letters • # # # c h e c -> ch • c h e c k e d -> _ • This produces 92-96% correct LETTER accuracy (58-75% word accuracy) for English
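The training examples for these trees are just letter windows paired with the aligned phone. A minimal sketch that reproduces the two “checked” examples, assuming `#` padding at both word edges; any CART implementation could then be trained per center letter on these (window, target) pairs.

```python
def letter_windows(letters, aligned, width=3, pad="#"):
    """One CART training example per letter: (window of 2*width+1 letters, target)."""
    if len(letters) != len(aligned):
        raise ValueError("need exactly one aligned phone (or '_') per letter")
    padded = [pad] * width + list(letters) + [pad] * width
    return [(" ".join(padded[i:i + 2 * width + 1]), target)
            for i, target in enumerate(aligned)]


for window, target in letter_windows("checked", ["ch", "_", "eh", "_", "k", "_", "t"]):
    print(window, "->", target)
# first line: # # # c h e c -> ch
```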


Improvements • Take names out of the training data • And acronyms • Detect both of these separately • And build special-purpose tools to do LTS for names and acronyms


Names • Big problem area is names • Names are common – 20% of tokens in typical newswire text will be names – 1987 Donnelly list (72 million households) contains about 1.5 million names – Personal names: McArthur, D’Angelo, Jimenez, Rajan, Raghavan, Sondhi, Xu, Hsu, Zhang, Chang, Nguyen – Company/Brand names: Infinit, Kmart, Cytyc, Medamicus, Inforte, Aaon, Idexx Labs, Bebe


Names • Methods: – Can do morphology (Walters -> Walter, Lucasville) – Can write stress-shifting rules (Jordan -> Jordanian) – Rhyme analogy: Plotsky by analogy with Trotsky (replace tr with pl) – Liberman and Church: for the 250K most common names, got 212K (85%) from these modified-dictionary methods, used LTS for the rest. – Can do automatic country detection (from letter trigrams) and then do country-specific rules
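Country detection from letter trigrams can be sketched as naive Bayes over trigrams with add-one smoothing and equal priors. The origin labels and the three tiny name lists below are hypothetical and far too small for real use; they only show the mechanics.

```python
import math
from collections import Counter


def trigrams(name):
    """Letter trigrams with boundary padding: 'xu' -> ['##x', '#xu', 'xu#']."""
    padded = "##" + name.lower() + "#"
    return [padded[i:i + 3] for i in range(len(padded) - 2)]


def train(names_by_origin):
    """Count trigrams per origin; the vocabulary size is used for add-one smoothing."""
    models = {origin: Counter(t for name in names for t in trigrams(name))
              for origin, names in names_by_origin.items()}
    vocab_size = len(set().union(*models.values())) + 1  # +1 for unseen trigrams
    return models, vocab_size


def classify(name, models, vocab_size):
    """Pick the origin whose smoothed trigram model gives the name the highest log-probability."""
    def log_prob(counts):
        total = sum(counts.values())
        return sum(math.log((counts[t] + 1) / (total + vocab_size)) for t in trigrams(name))
    return max(models, key=lambda origin: log_prob(models[origin]))


# Tiny hypothetical training lists, for illustration only.
MODELS, V = train({
    "scottish_irish": ["mcarthur", "mcdonald", "mckenzie"],
    "italian": ["dangelo", "rossi", "esposito"],
    "vietnamese": ["nguyen", "tran", "pham", "huynh"],
})
print(classify("McAllister", MODELS, V), classify("Nguyet", MODELS, V))
```

The predicted origin would then select which country-specific pronunciation rules to apply.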


Outline • History of TTS • Architecture • Text Processing • Letter-to-Sound Rules • Prosody • Waveform Generation • Evaluation


Defining Intonation • Ladd (1996) “Intonational phonology” • “The use of suprasegmental phonetic features to convey sentence-level pragmatic meanings” • Suprasegmental = above and beyond the segment/phone: – F0 – Intensity (energy) – Duration • I.e., meanings that apply to phrases or utterances as a whole, not lexical stress, not lexical tone.


Three aspects of prosody • Prominence: some syllables/words are more prominent than others • Structure/boundaries: sentences have prosodic structure – Some words group naturally together – Others have a noticeable break or disjuncture between them • Tune: the intonational melody of an utterance.


Prosodic Prominence: Pitch Accents A: What types of foods are a good source of vitamins? B1: Legumes are a good source of VITAMINS. B2: LEGUMES are a good source of vitamins. • Prominent syllables are: • Louder • Longer • Have higher F0 and/or sharper changes in F0 (higher F0 velocity) Slides from Jennifer Venditti


Prosodic Boundaries • French [bread and cheese] • [French bread] and [cheese]


Prosodic Tunes • Legumes are a good source of vitamins. • Are legumes a good source of vitamins?


TOPIC #1 Thinking about F0


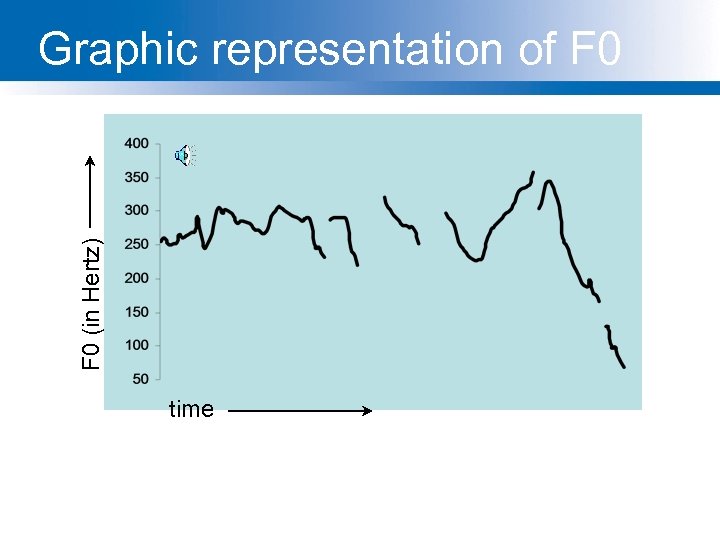

Graphic representation of F0 (figure: F0 in Hertz over time for “legumes are a good source of VITAMINS”)


The ‘ripples’ (figure: F0 contour of “legumes are a good source of VITAMINS” with [s] and [t] marked) F0 is not defined for consonants without vocal fold vibration.


The ‘ripples’ (figure: F0 contour of “legumes are a good source of VITAMINS” with [g], [z], [g], [v] marked) …and F0 can be perturbed by consonants with an extreme constriction in the vocal tract.


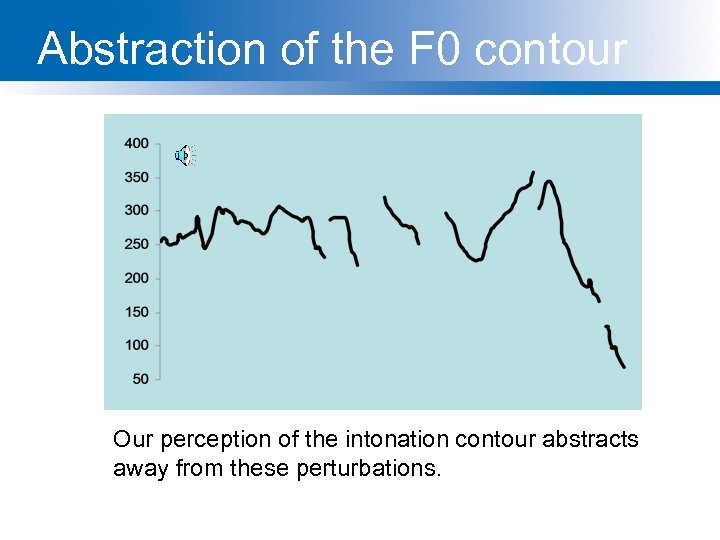

Abstraction of the F0 contour (figure: legumes are a good source of VITAMINS) Our perception of the intonation contour abstracts away from these perturbations.


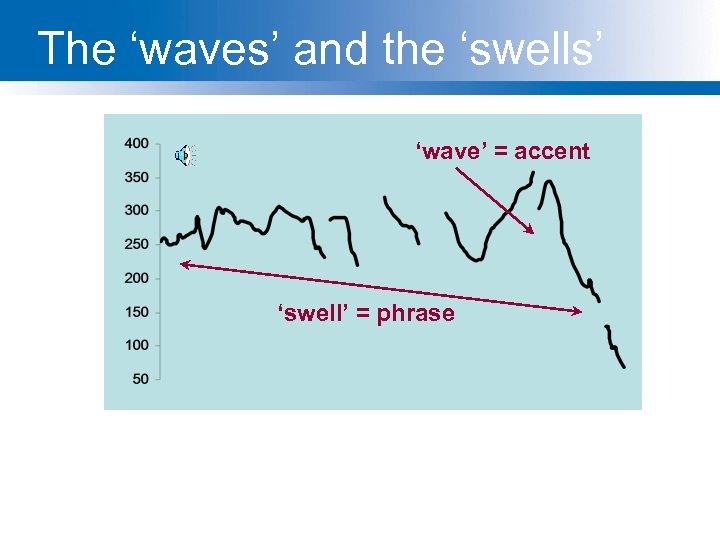

The ‘waves’ and the ‘swells’ (figure: legumes are a good source of VITAMINS) • ‘wave’ = accent • ‘swell’ = phrase


TOPIC #2 Accent Placement and Intonational Tunes


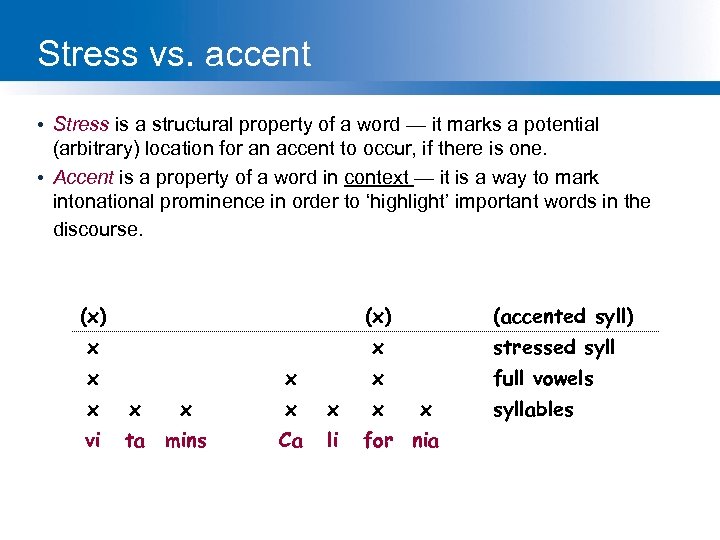

Stress vs. accent • Stress is a structural property of a word: it marks a potential (arbitrary) location for an accent to occur, if there is one. • Accent is a property of a word in context: it is a way to mark intonational prominence in order to ‘highlight’ important words in the discourse. (Figure: metrical grids for vi-ta-mins and Ca-li-for-nia, with rows for accented syllable, stressed syllables, full vowels, and syllables.)


Stress vs. accent (2) • The speaker decides to make the word vitamin more prominent by accenting it. • Lexical stress tells us that this prominence will appear on the first syllable, hence VItamin.


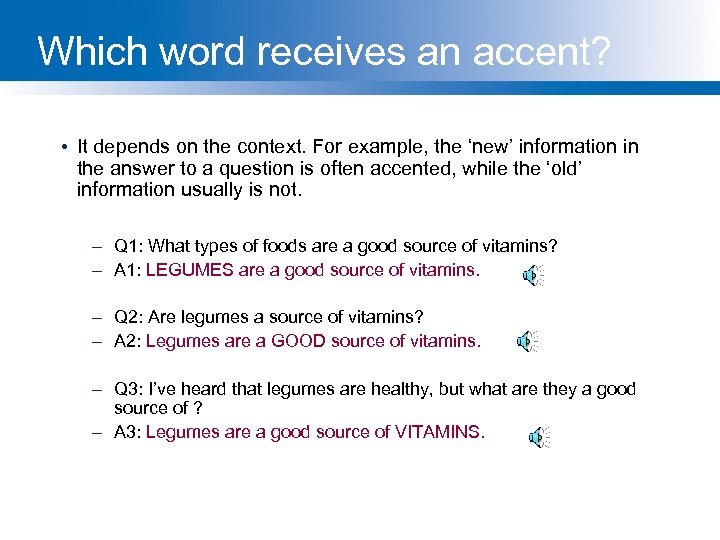

Which word receives an accent? • It depends on the context. For example, the ‘new’ information in the answer to a question is often accented, while the ‘old’ information usually is not. – Q1: What types of foods are a good source of vitamins? – A1: LEGUMES are a good source of vitamins. – Q2: Are legumes a source of vitamins? – A2: Legumes are a GOOD source of vitamins. – Q3: I’ve heard that legumes are healthy, but what are they a good source of? – A3: Legumes are a good source of VITAMINS.


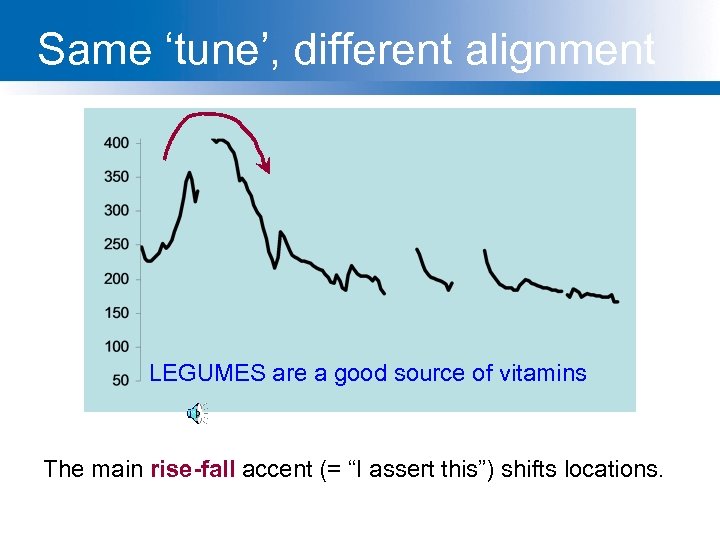

Same ‘tune’, different alignment LEGUMES are a good source of vitamins The main rise-fall accent (= “I assert this”) shifts locations.


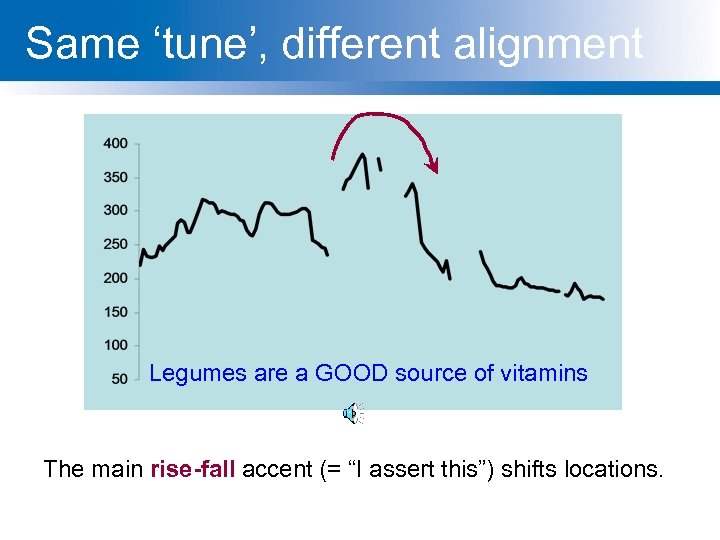

Same ‘tune’, different alignment Legumes are a GOOD source of vitamins The main rise-fall accent (= “I assert this”) shifts locations.


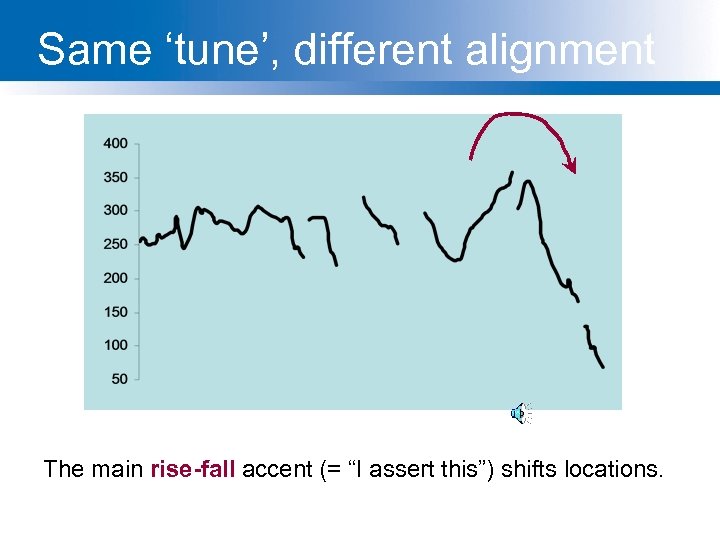

Same ‘tune’, different alignment legumes are a good source of VITAMINS The main rise-fall accent (= “I assert this”) shifts locations.


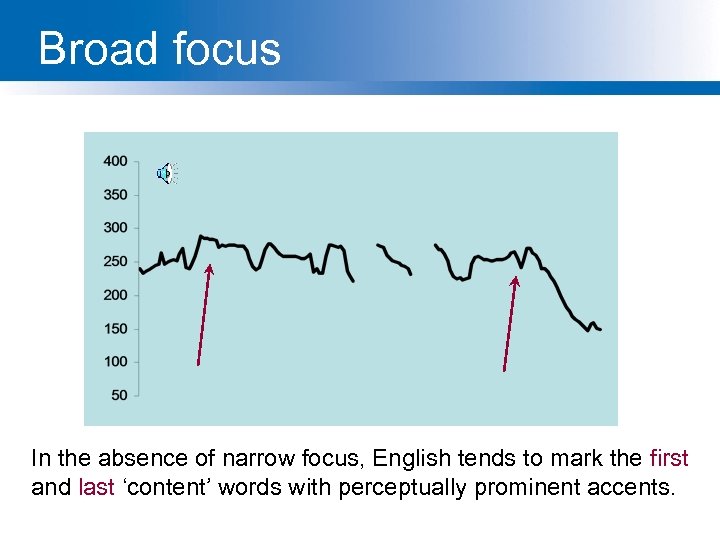

Broad focus legumes are a good source of vitamins In the absence of narrow focus, English tends to mark the first and last ‘content’ words with perceptually prominent accents.


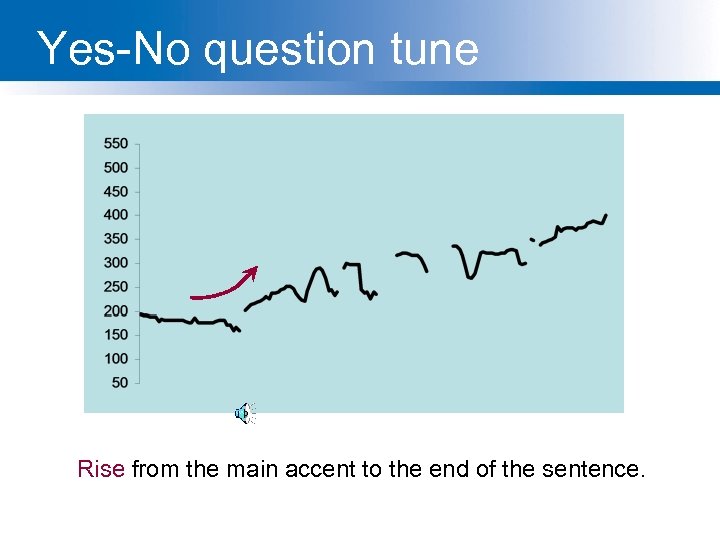

Yes-No question tune are LEGUMES a good source of vitamins Rise from the main accent to the end of the sentence.


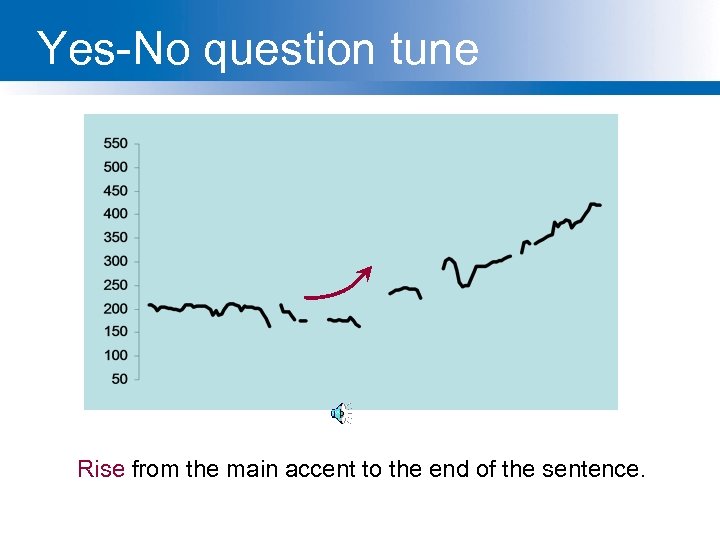

Yes-No question tune are legumes a GOOD source of vitamins Rise from the main accent to the end of the sentence.


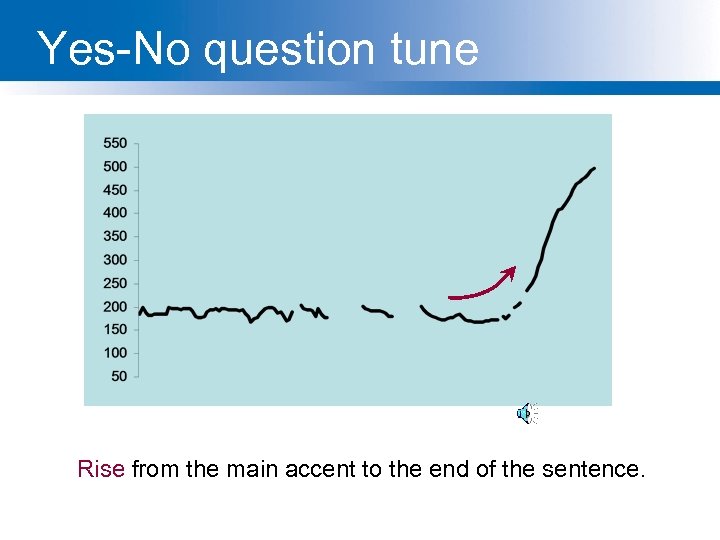

Yes-No question tune are legumes a good source of VITAMINS Rise from the main accent to the end of the sentence.


WH-questions [I know that many natural foods are healthy, but...] WHAT are a good source of vitamins • WH-questions typically have falling contours, like statements.


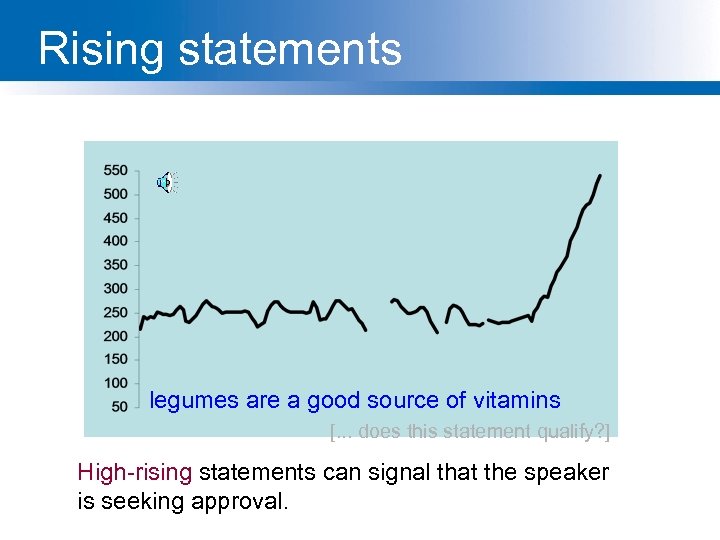

Rising statements legumes are a good source of vitamins [...does this statement qualify?] High-rising statements can signal that the speaker is seeking approval.


‘Surprise-redundancy’ tune [How many times do I have to tell you...] legumes are a good source of vitamins • Low beginning followed by a gradual rise to a high at the end.


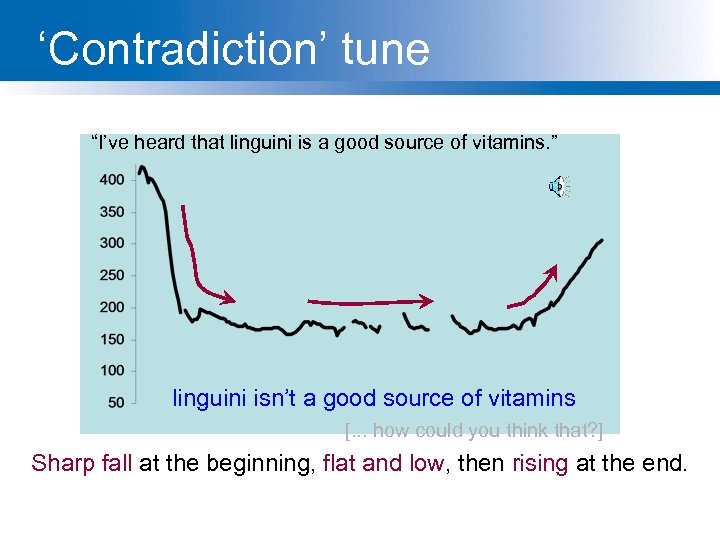

‘Contradiction’ tune “I’ve heard that linguini is a good source of vitamins.” linguini isn’t a good source of vitamins [...how could you think that?] Sharp fall at the beginning, flat and low, then rising at the end.


TOPIC #3 Intonational phrasing and disambiguation


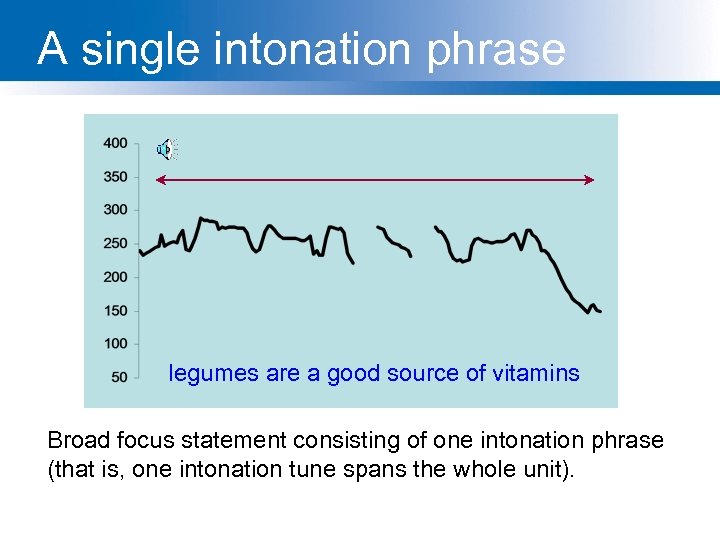

A single intonation phrase legumes are a good source of vitamins Broad focus statement consisting of one intonation phrase (that is, one intonation tune spans the whole unit).


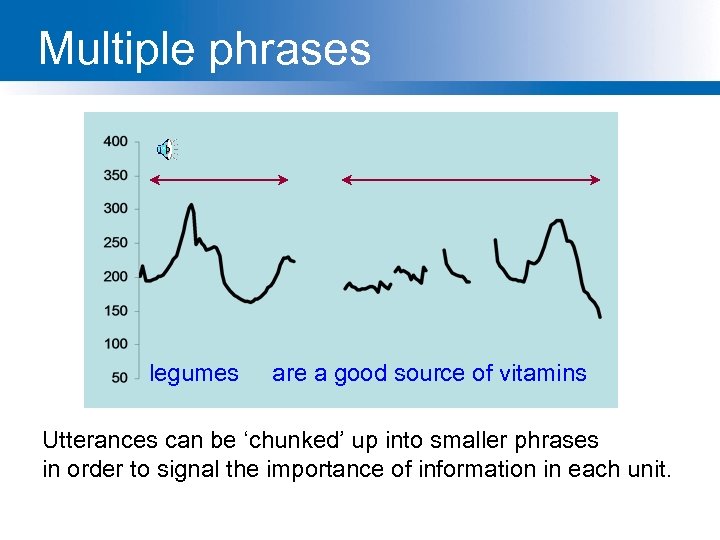

Multiple phrases legumes are a good source of vitamins Utterances can be ‘chunked’ up into smaller phrases in order to signal the importance of information in each unit.

Phrasing can disambiguate • Global ambiguity (% marks an intonation phrase boundary): Sally saw % the man with the binoculars. Sally saw the man % with the binoculars.

Phrasing can disambiguate • Temporary ambiguity: When Madonna sings the song...

Phrasing can disambiguate • Temporary ambiguity: When Madonna sings the song is a hit.

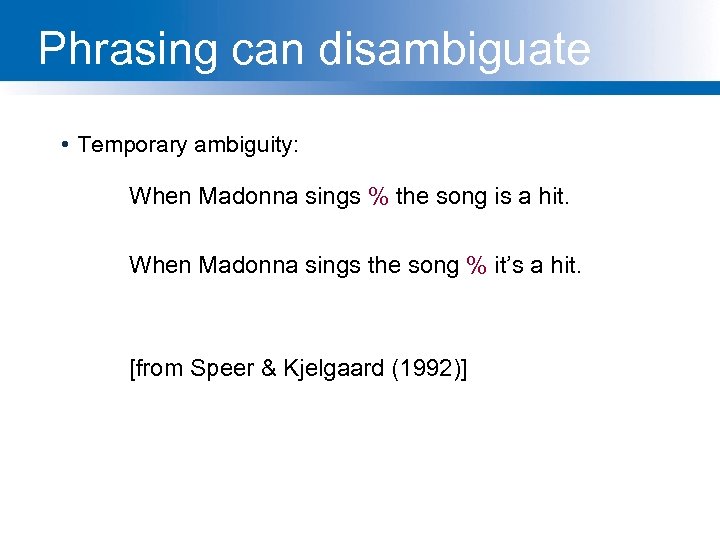

Phrasing can disambiguate • Temporary ambiguity: When Madonna sings % the song is a hit. When Madonna sings the song % it’s a hit. [from Speer & Kjelgaard (1992)]

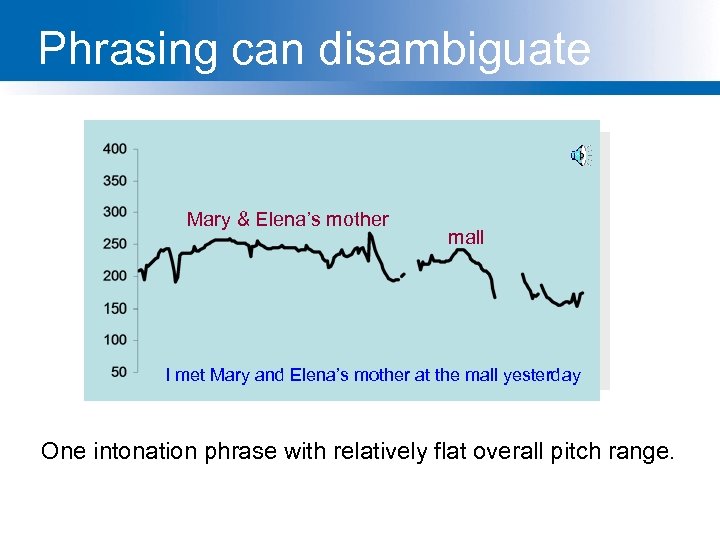

Phrasing can disambiguate • I met Mary and Elena’s mother at the mall yesterday. • One intonation phrase with relatively flat overall pitch range (figure: pitch track with “Mary & Elena’s mother” and “mall” labeled).

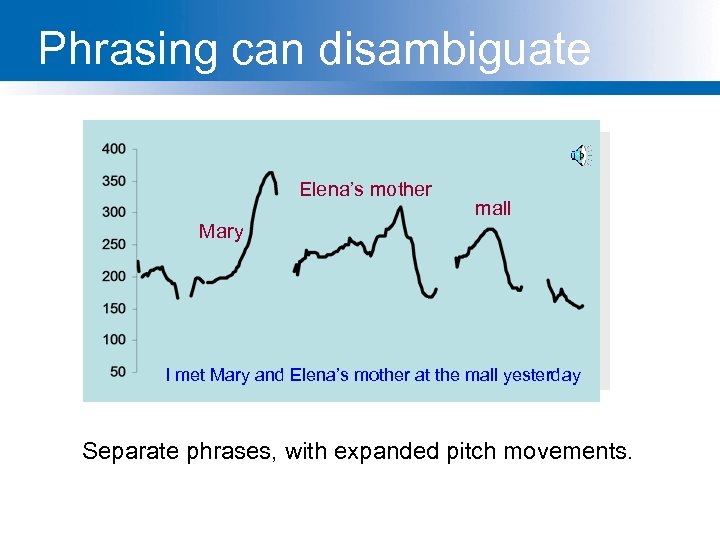

Phrasing can disambiguate • I met Mary and Elena’s mother at the mall yesterday. • Separate phrases, with expanded pitch movements (figure: pitch track with “Mary”, “Elena’s mother”, and “mall” labeled).

TOPIC #4 The ToBI Intonational Transcription Theory

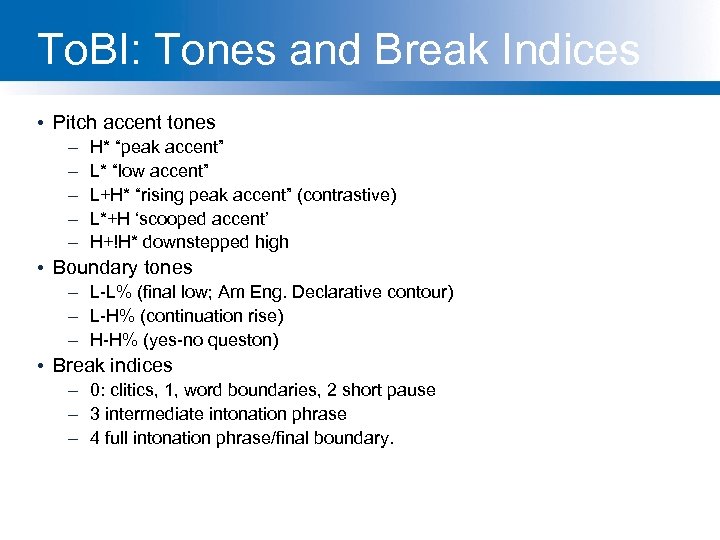

ToBI: Tones and Break Indices • Pitch accent tones – H* “peak accent” – L* “low accent” – L+H* “rising peak accent” (contrastive) – L*+H “scooped accent” – H+!H* downstepped high • Boundary tones – L-L% (final low; American English declarative contour) – L-H% (continuation rise) – H-H% (yes-no question) • Break indices – 0: clitics; 1: word boundaries; 2: short pause – 3: intermediate intonation phrase – 4: full intonation phrase/final boundary

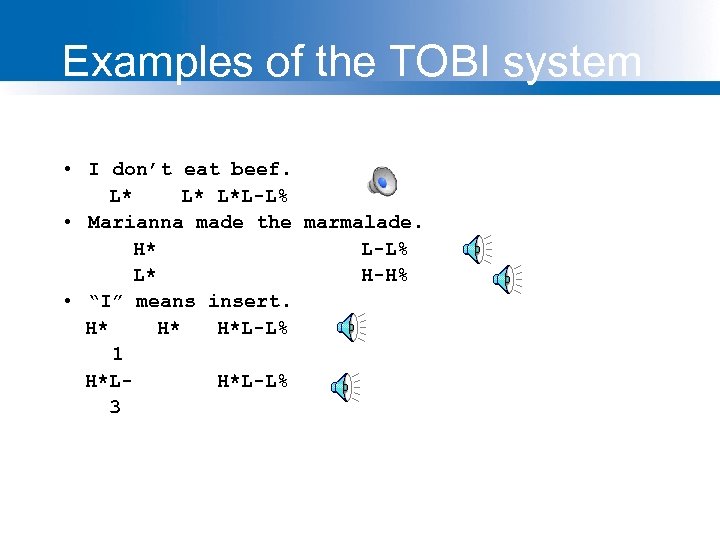

Examples of the ToBI system • I don’t eat beef. L* L* L* L-L% • Marianna made the marmalade. H* L-L% (statement) vs. L* H-H% (yes-no question) • “I” means insert. H* H* H* L-L% (break index 1) vs. H* L- H* L-L% (break index 3)
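
To make the label inventory concrete, here is a minimal Python sketch that splits a ToBI tone string, such as those in the examples above, into labelled tokens. It only knows the subset of labels listed on the ToBI slide; the function name `parse_tones` and the category names are our own. A combined label such as L-L% is split into a phrase accent (L-) followed by a boundary tone (L%), as ToBI treats it.

```python
import re

# Tone inventory from the ToBI slide (a subset of ToBI, for illustration only).
PITCH_ACCENTS = {"H*", "L*", "L+H*", "L*+H", "H+!H*"}
PHRASE_ACCENTS = {"L-", "H-"}

# Bitonal accents come before single tones in the alternation so that,
# e.g., "L*+H" is read as one accent rather than "L*" plus leftovers.
TONE_RE = re.compile(r"H\+!H\*|L\+H\*|L\*\+H|H\*|L\*|[LH]-|[LH]%")


def parse_tones(tier):
    """Split a tone string like 'L* L* L*L-L%' into (label, category) pairs."""
    tokens = []
    for chunk in tier.split():
        pos = 0
        while pos < len(chunk):
            m = TONE_RE.match(chunk, pos)
            if m is None:
                raise ValueError(f"unrecognized tone label at {chunk[pos:]!r}")
            label = m.group(0)
            if label in PITCH_ACCENTS:
                category = "pitch_accent"
            elif label in PHRASE_ACCENTS:
                category = "phrase_accent"
            else:
                category = "boundary_tone"  # L% or H%
            tokens.append((label, category))
            pos = m.end()
    return tokens
```

For example, `parse_tones("L* H-H%")` separates the low accent from the H- phrase accent and the H% boundary tone of the question reading.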

ToBI • http://www.ling.ohio-state.edu/~tobi/ • ToBI for American English – http://www.ling.ohio-state.edu/~tobi/ame_tobi/ • Silverman, K., Beckman, M., Pitrelli, J., Ostendorf, M., Wightman, C., Price, P., Pierrehumbert, J., and Hirschberg, J. (1992). ToBI: a standard for labelling English prosody. In Proceedings of ICSLP 92, volume 2, pages 867-870. • Pitrelli, J. F., Beckman, M. E., and Hirschberg, J. (1994). Evaluation of prosodic transcription labeling reliability in the ToBI framework. In Proceedings of ICSLP 94, volume 1, pages 123-126. • Pierrehumbert, J., and Hirschberg, J. (1990). The meaning of intonation contours in the interpretation of discourse. In P. R. Cohen, J. Morgan, and M. E. Pollack, eds., Plans and Intentions in Communication and Discourse, pages 271-311. MIT Press. • Beckman and Elam. Guidelines for ToBI Labelling. Web.

TOPIC #5 PRODUCING INTONATION IN TTS

Intonation in TTS: 1) Accent: decide which words are accented, which syllable has the accent, and what sort of accent 2) Boundaries: decide where intonational boundaries are 3) Duration: specify the length of each segment 4) F0: generate the F0 contour from these

TOPIC #5a Predicting pitch accent

Factors in accent prediction • Contrast – Legumes are a poor source of VITAMINS – No, legumes are a GOOD source of vitamins – I think JOHN and MARY should go – No, I think JOHN AND MARY should go

But it’s more than just contrast • List intonation: • I went and saw ANNA, LENNY, MARY, and NORA.

In fact, accents are common! • A Broadcast News example from Hirschberg (1993) • SUN MICROSYSTEMS INC, the UPSTART COMPANY that HELPED LAUNCH the DESKTOP COMPUTER industry TREND TOWARD HIGH powered WORKSTATIONS, was UNVEILING an ENTIRE OVERHAUL of its PRODUCT LINE TODAY. SOME of the new MACHINES, PRICED from FIVE THOUSAND NINE hundred NINETY five DOLLARS to seventy THREE thousand nine HUNDRED dollars, BOAST SOPHISTICATED new graphics and DIGITAL SOUND TECHNOLOGIES, HIGHER SPEEDS AND a CIRCUIT board that allows FULL motion VIDEO on a COMPUTER SCREEN.

Factors in accent prediction • Part of speech: – Content words are usually accented – Function words are rarely accented • of, for, in, on, that, the, a, an, no, to, and, but, or, will, may, would, can, her, is, their, its, our, there, am, are, was, were, etc.
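
The part-of-speech factor alone already gives a usable baseline. The sketch below is a minimal illustration, assuming whitespace tokenization and using the slide's function-word list as a stand-in for a real POS tagger; `predict_accents` and `render` are hypothetical helper names.

```python
# Function words listed on the slide; every other word is treated as a content word.
FUNCTION_WORDS = {
    "of", "for", "in", "on", "that", "the", "a", "an", "no", "to", "and", "but",
    "or", "will", "may", "would", "can", "her", "is", "their", "its", "our",
    "there", "am", "are", "was", "were",
}


def predict_accents(words):
    """Return True (accented) for content words, False for function words."""
    return [w.lower().strip(".,;:!?") not in FUNCTION_WORDS for w in words]


def render(words):
    """Show predicted accents in the slides' CAPITALS convention."""
    flags = predict_accents(words)
    return " ".join(w.upper() if acc else w for w, acc in zip(words, flags))


print(render("Legumes are a good source of vitamins".split()))
```

On the Hirschberg broadcast-news sentence above, this baseline would also accent words such as "industry" and "powered" that the transcription leaves unaccented, which is why the richer features on the following slides are needed.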

Factors in accent prediction • Word order: preposed items are accented more frequently – TODAY we will BEGIN to LOOK at FROG anatomy. – We will BEGIN to LOOK at FROG anatomy today.

Factors in accent prediction • Information status: new versus old information • Old information is deaccented: There are LAWYERS, and there are GOOD lawyers
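
Information status can be approximated very crudely by remembering which content words have already been mentioned. This sketch assumes that exact (lowercased) string repetition stands in for givenness; real systems would use lemmas or coreference. The function-word list here is a small illustrative one, and `accent_with_givenness` is a hypothetical name.

```python
# A tiny, illustrative function-word list (not exhaustive).
FUNCTION_WORDS = {"there", "are", "is", "and", "a", "an", "the", "of", "to"}


def accent_with_givenness(words):
    """Accent new content words; deaccent function words and repeated (given) words."""
    seen = set()
    accents = []
    for w in words:
        key = w.lower().strip(".,;:!?")
        if key in FUNCTION_WORDS or key in seen:
            accents.append(False)  # function word, or old information
        else:
            accents.append(True)   # new information
            seen.add(key)
    return accents
```

On the lawyers example it accents the first "lawyers" and "good", and deaccents the second "lawyers".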

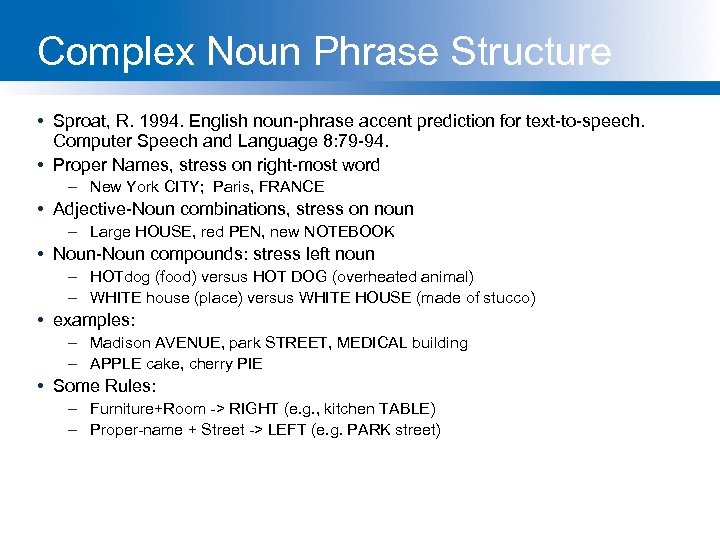

Complex Noun Phrase Structure • Sproat, R. 1994. English noun-phrase accent prediction for text-to-speech. Computer Speech and Language 8: 79-94. • Proper names: stress on right-most word – New York CITY; Paris, FRANCE • Adjective-noun combinations: stress on noun – large HOUSE, red PEN, new NOTEBOOK • Noun-noun compounds: stress on left noun – HOTdog (food) versus HOT DOG (overheated animal) – WHITE house (place) versus WHITE HOUSE (made of stucco) • Examples: – Madison AVENUE, PARK street, MEDICAL building – APPLE cake, cherry PIE • Some rules: – Room+Furniture -> RIGHT (e.g., kitchen TABLE) – Proper-name + Street -> LEFT (e.g., PARK street)
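
The rules on this slide can be read as a small decision procedure for two-word noun phrases. The sketch below is an illustration under stated assumptions, not Sproat's actual system: the semantic-class lexicon (`SEMANTIC_CLASS`), the rule tables, the coarse tags `NOUN`/`ADJ`/`PROPN`, and the order in which rules are tried are all hypothetical choices.

```python
# Hypothetical mini-lexicon of semantic classes; a real system needs a far larger one.
SEMANTIC_CLASS = {"kitchen": "room", "bedroom": "room",
                  "table": "furniture", "chair": "furniture"}

# Head-word rules: streets take left stress, avenues right stress.
HEAD_RULES = {"street": "LEFT", "avenue": "RIGHT"}

# (left class, right class) rules.
CLASS_RULES = {("room", "furniture"): "RIGHT"}


def np_stress(left, right, left_tag="NOUN"):
    """Return which word of a two-word NP gets main stress: 'LEFT' or 'RIGHT'.

    left_tag is a coarse tag for the left word: 'NOUN', 'ADJ' or 'PROPN'.
    """
    head = right.lower()
    if head in HEAD_RULES:        # PARK street, Madison AVENUE
        return HEAD_RULES[head]
    if left_tag == "ADJ":         # large HOUSE, red PEN
        return "RIGHT"
    pair = (SEMANTIC_CLASS.get(left.lower()), SEMANTIC_CLASS.get(head))
    if pair in CLASS_RULES:       # kitchen TABLE
        return CLASS_RULES[pair]
    if left_tag == "PROPN":       # New York CITY
        return "RIGHT"
    return "LEFT"                 # default noun-noun compound: HOTdog
```

Lexically idiosyncratic pairs such as APPLE cake vs. cherry PIE fall through to the default here, which illustrates why rule sets like this need a large lexicon behind them.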

Other features • POS of previous word • POS of next word • Stress of current, previous, next syllable • Unigram probability of word • Bigram probability of word • Position of word in sentence
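
As an illustration of how these context features might be assembled for a classifier, here is a minimal sketch. It assumes sentences are already POS-tagged as `(word, pos)` pairs, estimates probabilities by maximum likelihood from a toy corpus, and omits the syllable-stress features because they need a pronunciation lexicon. `count_ngrams` and `accent_features` are hypothetical names.

```python
from collections import Counter


def count_ngrams(sentences):
    """Unigram and bigram counts over whitespace-tokenized, lowercased sentences."""
    unigram, bigram = Counter(), Counter()
    for sent in sentences:
        toks = sent.lower().split()
        unigram.update(toks)
        bigram.update(zip(toks, toks[1:]))
    return unigram, bigram, sum(unigram.values())


def accent_features(tagged, i, unigram, bigram, total):
    """Feature dict for word i of a POS-tagged sentence [(word, pos), ...]."""
    word, pos = tagged[i]
    prev_word, prev_pos = tagged[i - 1] if i > 0 else ("<s>", "<s>")
    next_pos = tagged[i + 1][1] if i + 1 < len(tagged) else "</s>"
    w, p = word.lower(), prev_word.lower()
    return {
        "pos": pos,
        "prev_pos": prev_pos,
        "next_pos": next_pos,
        "unigram_prob": unigram[w] / total if total else 0.0,
        # P(word | previous word); 0.0 when the previous word is unseen
        "bigram_prob": bigram[(p, w)] / unigram[p] if unigram[p] else 0.0,
        # relative position: 0.0 for the first word, 1.0 for the last
        "position": i / (len(tagged) - 1) if len(tagged) > 1 else 0.0,
    }
```

Each dictionary would become one training row, labelled with the hand-annotated accent for that word.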

State of the art • Hand-label large training sets • Use CART, SVM, CRF, etc. to predict accent • Lots of rich features from context • Classic lit: – Hirschberg, Julia. 1993. Pitch accent in context: predicting intonational prominence from text. Artificial Intelligence 63, 305-340.

TOPIC #5b Predicting boundaries

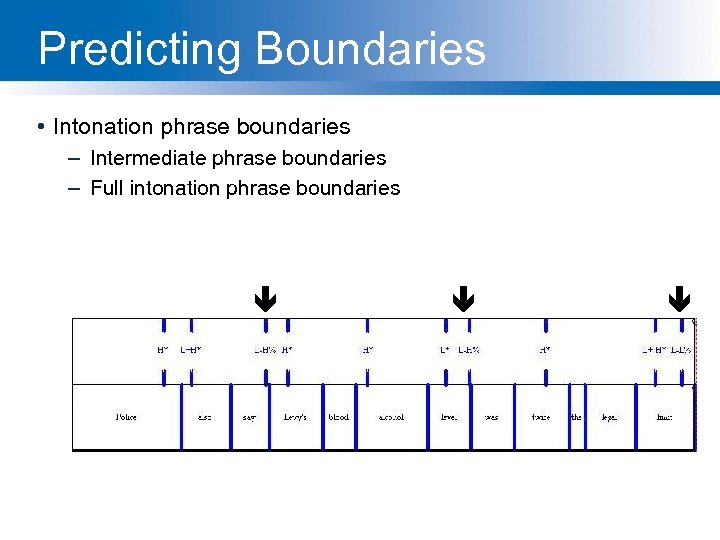

Predicting Boundaries • Intonation phrase boundaries – Intermediate phrase boundaries – Full intonation phrase boundaries

More examples • From Ostendorf and Veilleux (1994), “Hierarchical Stochastic Model for Automatic Prediction of Prosodic Boundary Location”, Computational Linguistics 20(1) • Computer phone calls, || which do everything | from selling magazine subscriptions || to reminding people about meetings || have become the telephone equivalent | of junk mail. || • Doctor Norman Rosenblatt, || dean of the college | of criminal justice at Northeastern University, || agrees. || • For WBUR, || I’m Margo Melnicove.
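
To train a boundary predictor, annotated text like the examples above has to be turned into one label per word juncture. This sketch assumes that | marks a minor break and || a major one, and maps them onto ToBI break indices 3 and 4 (our assumption, not Ostendorf and Veilleux's own encoding); ordinary word boundaries get 1 and the utterance end is forced to 4. `parse_breaks` is a hypothetical name.

```python
# Assumed mapping from the slide's break marks to ToBI break indices.
BREAK_MARKS = {"|": 3, "||": 4}


def parse_breaks(annotated):
    """Turn 'For WBUR, || I'm ...' into [(word, break_index_after_word), ...]."""
    pairs = []
    for tok in annotated.split():
        if tok in BREAK_MARKS:
            if pairs:
                word, idx = pairs[-1]
                pairs[-1] = (word, max(idx, BREAK_MARKS[tok]))
        else:
            pairs.append((tok, 1))  # plain word boundary
    if pairs:
        pairs[-1] = (pairs[-1][0], 4)  # end of utterance = full phrase boundary
    return pairs
```

The resulting (word, index) pairs can be joined with per-word features, as in the CART of the next slide, to learn where boundaries fall.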

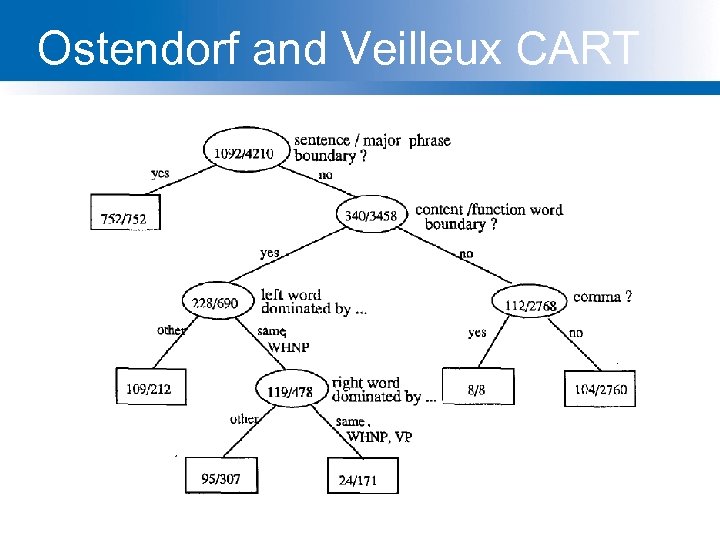

Ostendorf and Veilleux CART

TOPIC #5c Predicting duration

Duration • Simplest: fixed size for all phones (100 ms) • Next simplest: average duration for that phone (from training data). Samples from Switchboard (SWBD), in ms:

| Vowel | ms | Consonant | ms |
|---|---|---|---|
| aa | 118 | b | 68 |
| ax | 59 | d | 68 |
| ay | 138 | dh | 44 |
| eh | 87 | f | 90 |
| ih | 77 | g | 66 |

• Next simplest: add in phrase-final and initial lengthening plus stress • Better: average duration for each triphone
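
The first three models on this slide can be sketched together: look up the phone's mean duration, fall back to the fixed 100 ms if the phone is missing, then apply multiplicative adjustments for stress and phrase position. The means below are the Switchboard samples from the table; the lengthening factors are hypothetical values chosen only for illustration, not estimates from data.

```python
# Mean phone durations in ms: the Switchboard sample from the table above.
MEAN_MS = {"aa": 118, "ax": 59, "ay": 138, "eh": 87, "ih": 77,
           "b": 68, "d": 68, "dh": 44, "f": 90, "g": 66}

# Hypothetical multipliers, for illustration only.
STRESS_FACTOR = 1.2
PHRASE_FINAL_FACTOR = 1.4
PHRASE_INITIAL_FACTOR = 1.1
FIXED_MS = 100.0  # the "simplest" model: one duration for every phone


def phone_duration(phone, stressed=False, phrase_final=False, phrase_initial=False):
    """Average duration for the phone, then multiplicative adjustments."""
    dur = float(MEAN_MS.get(phone, FIXED_MS))
    if stressed:
        dur *= STRESS_FACTOR
    if phrase_final:
        dur *= PHRASE_FINAL_FACTOR
    if phrase_initial:
        dur *= PHRASE_INITIAL_FACTOR
    return dur
```

The triphone model would simply key `MEAN_MS` on (left, phone, right) instead of the phone alone, at the cost of needing much more training data.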

Duration in Festival (2) • Klatt duration rules. Modify duration based on: – position in clause – syllable position in word – syllable type – lexical stress – left+right context phone – prepausal lengthening • Festival: 2 options – Klatt rules – use a labeled training set with Klatt features to train a CART
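
In Klatt's rule system each rule that fires contributes a percentage PRCNT, and only the part of a segment's inherent duration above its minimum is scaled: DUR = MINDUR + (INHDUR - MINDUR) × PRCNT / 100, with the percentages of several rules multiplied together. The sketch below implements just that combination step; the inherent/minimum durations and percentages in the usage example are hypothetical, not Klatt's published values.

```python
import math


def klatt_duration(inherent_ms, minimum_ms, percents):
    """Klatt-style duration: scale only the compressible part above the minimum.

    percents: one PRCNT value per rule that fires (100 = no change);
    their effects multiply.
    """
    factor = math.prod(p / 100.0 for p in percents)
    return minimum_ms + (inherent_ms - minimum_ms) * factor


# Hypothetical vowel: inherent 230 ms, minimum 80 ms, one lengthening rule at 140%.
print(klatt_duration(230, 80, [140]))
```

Because shortening rules only eat into the compressible part, a segment can never be pushed below its minimum duration, however many rules apply.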

Duration: state of the art • Lots of fancy models of duration prediction: – Using Z-scores and other clever normalizations – Sum-of-products model – New features like word predictability • Words with higher bigram probability are shorter
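
The z-score normalization mentioned above lets one model (for example a CART) predict how much longer or shorter than usual a phone is, independent of the phone's own typical length. A minimal sketch, assuming per-phone means and sample standard deviations estimated from training durations (at least two observations per phone); the function names are hypothetical.

```python
import statistics


def phone_stats(durations_by_phone):
    """Mean and sample standard deviation (ms) of observed durations per phone."""
    return {ph: (statistics.mean(ds), statistics.stdev(ds))
            for ph, ds in durations_by_phone.items()}


def to_zscore(duration_ms, mean, std):
    """Training target: how many standard deviations from this phone's mean."""
    return (duration_ms - mean) / std


def from_zscore(z, mean, std):
    """Map a predicted z-score back to milliseconds for this phone."""
    return mean + z * std
```

At synthesis time the model outputs a z-score from context features, and `from_zscore` converts it into a duration using the statistics of the phone being synthesized.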

TOPIC #5d F0 Generation

F0 Generation • Generation in Festival – F0 generation by rule – F0 generation by linear regression • Some constraints – F0 is constrained by accents and boundaries – F0 declines gradually over an utterance (“declination”)
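
Declination is often modelled as a baseline that falls across the utterance, on top of which accent and boundary targets are placed. This minimal sketch assumes a straight-line fall; the 130 Hz and 90 Hz endpoints are hypothetical defaults, and `declination_f0` is a hypothetical name.

```python
def declination_f0(t, utt_start, utt_end, f0_start=130.0, f0_end=90.0):
    """Baseline F0 (Hz) at time t (s), falling linearly across the utterance."""
    if utt_end <= utt_start:
        raise ValueError("utterance must have positive duration")
    frac = (t - utt_start) / (utt_end - utt_start)
    frac = min(max(frac, 0.0), 1.0)  # hold the endpoint values outside the utterance
    return f0_start + (f0_end - f0_start) * frac
```

An accent realized late in the utterance then starts from a lower baseline than the same accent early on, which is one way to get the gradual downward trend described above.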

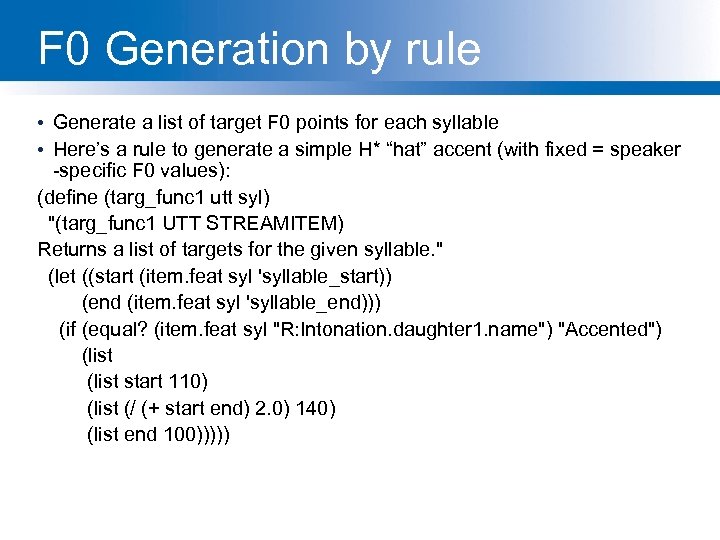

F0 Generation by rule • Generate a list of target F0 points for each syllable • Here’s a rule to generate a simple H* “hat” accent (with fixed, speaker-specific F0 values):

```scheme
;; Festival target function: returns a list of (time F0) targets for SYL.
(define (targ_func1 utt syl)
  "(targ_func1 UTT STREAMITEM)
Returns a list of targets for the given syllable."
  (let ((start (item.feat syl 'syllable_start))
        (end (item.feat syl 'syllable_end)))
    ;; Only syllables linked to an "Accented" intonation event get targets.
    (if (equal? (item.feat syl "R:Intonation.daughter1.name") "Accented")
        (list
         (list start 110)                   ; 110 Hz at syllable start
         (list (/ (+ start end) 2.0) 140)   ; 140 Hz peak at the midpoint
         (list end 100)))))                 ; 100 Hz at syllable end
```
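
For readers without Festival, the same hat rule can be mimicked in Python. This sketch assumes a plain `Syllable` record (start, end, accented flag) in place of Festival's utterance items, and joins the targets with piecewise-linear interpolation; that interpolation step is our assumption and not a description of how Festival itself renders targets into a contour.

```python
from dataclasses import dataclass


@dataclass
class Syllable:
    start: float    # seconds
    end: float      # seconds
    accented: bool


def hat_targets(syl):
    """Three (time, Hz) targets for an accented syllable, none otherwise."""
    if not syl.accented:
        return []
    mid = (syl.start + syl.end) / 2.0
    return [(syl.start, 110.0), (mid, 140.0), (syl.end, 100.0)]


def f0_at(t, targets):
    """Piecewise-linear interpolation between time-sorted targets; flat outside them."""
    if not targets:
        raise ValueError("no targets")
    if t <= targets[0][0]:
        return targets[0][1]
    for (t0, f0), (t1, f1) in zip(targets, targets[1:]):
        if t <= t1:
            return f0 + (f1 - f0) * (t - t0) / (t1 - t0)
    return targets[-1][1]
```

For a 200 ms accented syllable starting at 0, the contour rises from 110 Hz to the 140 Hz peak at 100 ms and falls to 100 Hz at the end.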

F0 generation by regression • Supervised machine learning again • We predict: value of F0 at 3 places in each syllable • Predictor features: – accent of current word, next word, previous word – boundaries – syllable type, phonetic information – stress information • Need training sets with pitch accents labeled
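
Predicting F0 at three points per syllable is a multi-output linear regression. The sketch below fits all three outputs at once by ordinary least squares with NumPy, assuming the accent, boundary, syllable and stress features have already been encoded as numbers (the encoding is left open here); `add_bias`, `fit_f0_regression` and `predict_f0` are hypothetical names.

```python
import numpy as np


def add_bias(X):
    """Append a constant-1 column so the regression learns an intercept."""
    return np.hstack([X, np.ones((X.shape[0], 1))])


def fit_f0_regression(X, Y):
    """Least-squares weights W such that add_bias(X) @ W approximates Y.

    X: (n_syllables, n_features) numeric predictor features.
    Y: (n_syllables, 3) observed F0 in Hz at syllable start, middle and end.
    """
    W, _residuals, _rank, _sv = np.linalg.lstsq(add_bias(X), Y, rcond=None)
    return W


def predict_f0(W, X):
    """Predicted (start, middle, end) F0 for each syllable."""
    return add_bias(X) @ W
```

Fitting the three outputs jointly shares the same features across positions while giving each position its own weights, which is what lets a rising or falling shape within the syllable be learned.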

Outline • History of TTS • Architecture • Text Processing • Letter-to-Sound Rules • Prosody • Waveform Generation • Evaluation

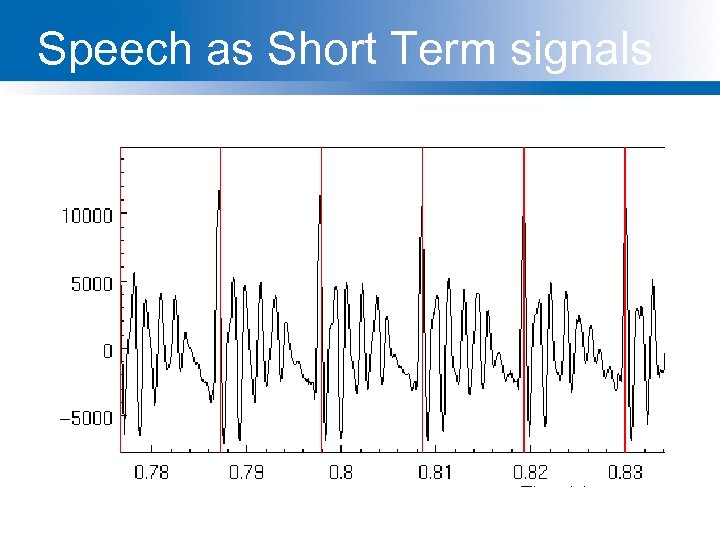

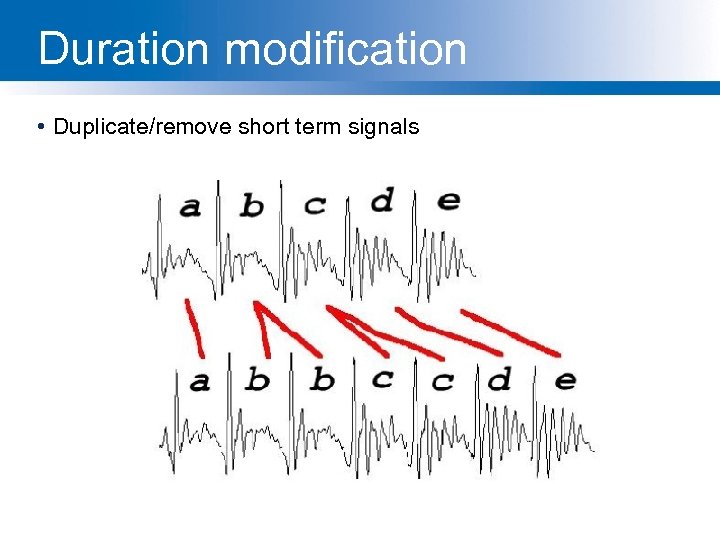

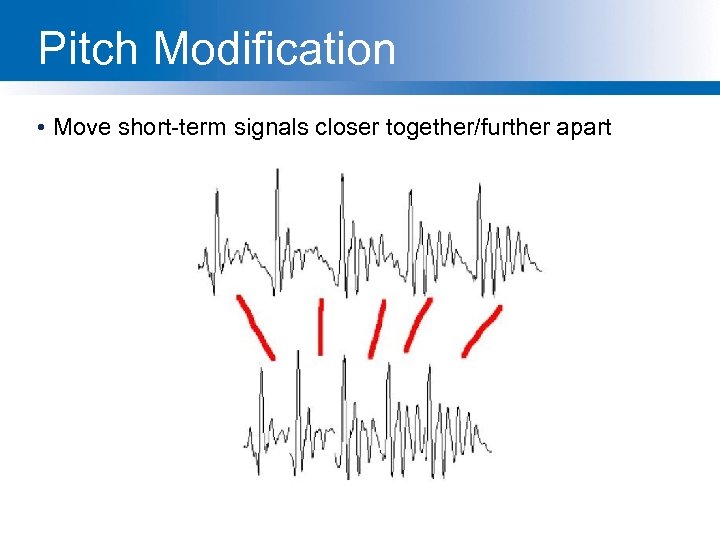
