c1f9d9904cd12519c3addfcef908720b.ppt

- Number of slides: 21

Testing -- CS 446

Gio Wiederhold, Fall 1999

"Testing only proves the presence of errors, not their absence" [Edsger Dijkstra]

Objective?

- Assure that the program executes?
- Assure that it provides results?
- Assure that the results look good?
- Assure that the results are correct with respect to
  - expectations?
  - specifications?
  - customer expectations?
  - interfaces to other programs?

IMPORTANCE?

- Fraction of development cost -- significant?
- Objectives -- relevant?
- Setting to do testing -- simple / complex?

IMPORTANCE?

- Fraction of development cost = 40%
  - more for real-time programs -- why?
  - prior to delivery; % in maintenance?
- Objectives -- conflicting relevance
  - show program correctness -- confidence
  - find errors to debug -- actual approach
- Setting to do testing -- complex requirements
  - Fixed environment -- reproducible / observable
  - Inputs -- all values / extreme + branch values / timings
  - Outputs -- values / performance metrics
    - customer expectations / analytical predictions / past values

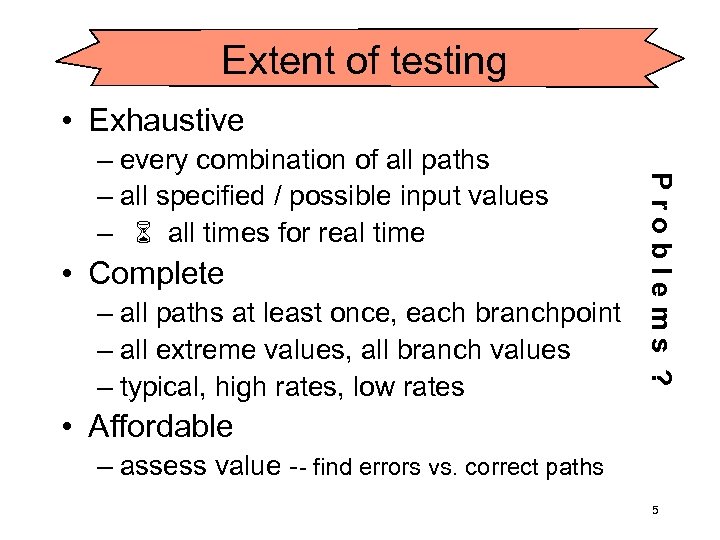

Extent of testing

- Exhaustive -- problems?
  - every combination of all paths
  - all specified / possible input values
  - all times for real time
- Complete
  - all paths at least once, each branch point
  - all extreme values, all branch values
  - typical, high rates, low rates
- Affordable
  - assess value -- find errors vs. correct paths

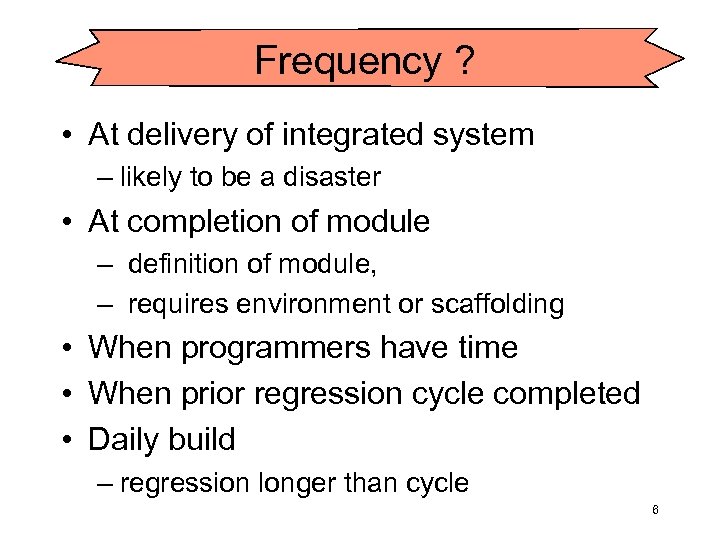
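
The gap between "exhaustive" and "complete" testing can be made concrete with a count. The sketch below assumes a hypothetical routine with `n_branches` independent two-way branch points; the function names are illustrative and not part of the lecture. Complete testing has 2n branch outcomes to cover, while every combination of paths grows as 2^n.

```python
from itertools import product


def branch_outcomes(n_branches: int) -> int:
    """Outcomes to cover when each two-way branch is taken both ways once."""
    return 2 * n_branches


def path_combinations(n_branches: int) -> int:
    """Distinct paths when every branch outcome combines with every other."""
    return len(list(product((True, False), repeat=n_branches)))


if __name__ == "__main__":
    for n in (3, 10, 16):
        print(n, branch_outcomes(n), path_combinations(n))
```

The enumeration is done explicitly with `itertools.product` to show what an exhaustive test generator would have to iterate over, before input values and timings are even considered.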

Frequency?

- At delivery of integrated system
  - likely to be a disaster
- At completion of module
  - definition of module,
  - requires environment or scaffolding
- When programmers have time
- When prior regression cycle completed
- Daily build
  - regression longer than cycle

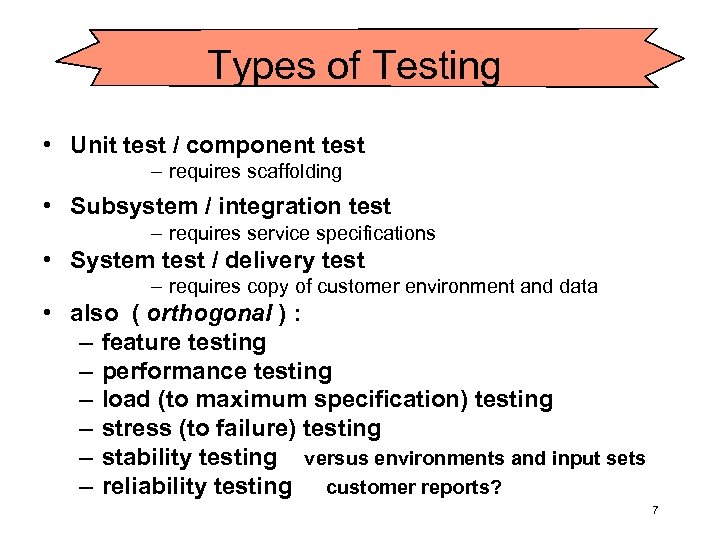

Types of Testing

- Unit test / component test
  - requires scaffolding
- Subsystem / integration test
  - requires service specifications
- System test / delivery test
  - requires copy of customer environment and data
- also (orthogonal):
  - feature testing
  - performance testing
  - load (to maximum specification) testing
  - stress (to failure) testing
  - stability testing versus environments and input sets
  - reliability testing -- customer reports?

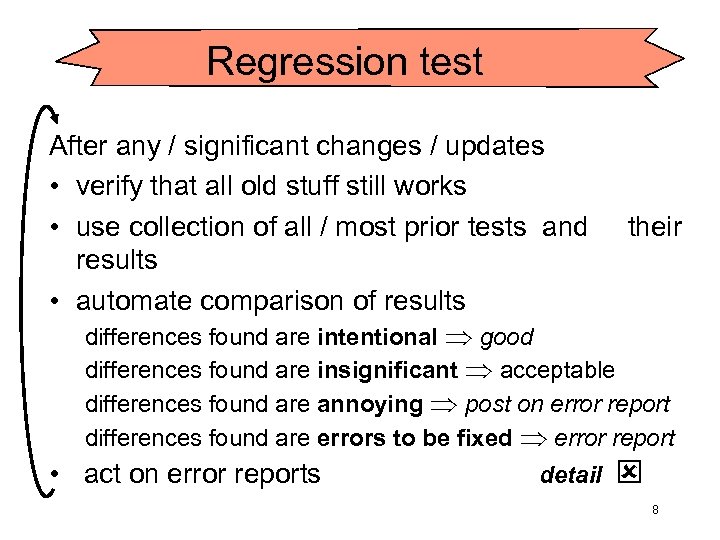
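
A unit test "requires scaffolding" because the unit usually depends on parts that are unfinished or unavailable in the test setting. The following is a minimal sketch using Python's `unittest`; `total_price`, `StubTaxService`, and the rates are hypothetical names and values chosen for illustration only.

```python
import unittest


def total_price(net: float, region: str, tax_service) -> float:
    """Unit under test: depends on an external tax-rate service."""
    rate = tax_service.rate_for(region)
    return round(net * (1.0 + rate), 2)


class StubTaxService:
    """Scaffolding: stands in for the real service with fixed answers."""

    def __init__(self, rates):
        self.rates = rates
        self.calls = []  # records how the unit used the service

    def rate_for(self, region: str) -> float:
        self.calls.append(region)
        return self.rates[region]


class TotalPriceTest(unittest.TestCase):
    def test_applies_regional_rate(self):
        stub = StubTaxService({"CA": 0.25})
        self.assertEqual(total_price(100.0, "CA", stub), 125.0)
        self.assertEqual(stub.calls, ["CA"])


if __name__ == "__main__":
    unittest.main()
```

Passing the service in as a parameter is one design choice that keeps the scaffolding small; an integration test would later replace the stub with the real service and its specification.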

Regression test

After any / significant changes / updates

- verify that all old stuff still works
- use collection of all / most prior tests and their results
- automate comparison of results
  - differences found are intentional ⇒ good
  - differences found are insignificant ⇒ acceptable
  - differences found are annoying ⇒ post on error report
  - differences found are errors to be fixed ⇒ error report
- act on error reports (detail follows)

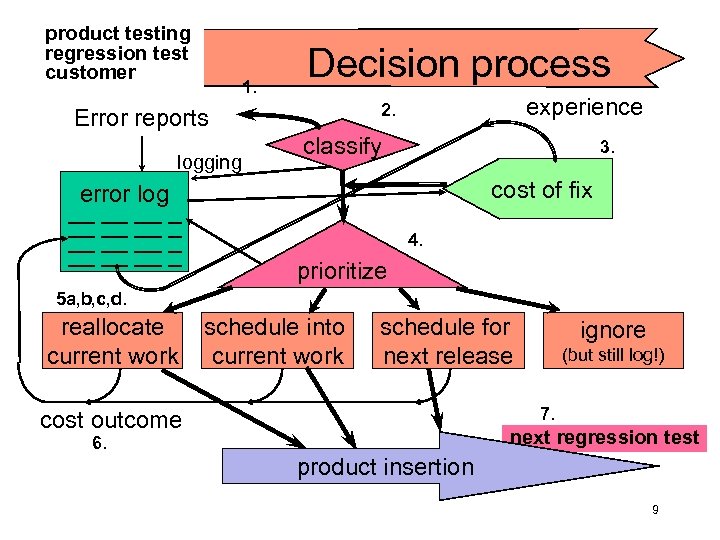
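
The "automate comparison of results" step can be sketched as a comparison of stored results against the current run. This is an assumed, simplified layout (one result value per named test, held in a dictionary), not a description of any particular tool; deciding whether a difference is intentional, insignificant, annoying, or an error remains a human judgment.

```python
def compare_runs(baseline: dict, current: dict) -> dict:
    """Return {test_name: (old_result, new_result)} for every difference.

    A test missing from either run is reported with None on that side.
    """
    differences = {}
    for name in sorted(set(baseline) | set(current)):
        old = baseline.get(name)
        new = current.get(name)
        if old != new:
            differences[name] = (old, new)
    return differences


if __name__ == "__main__":
    # Illustrative results only; a real suite would load these from files.
    baseline = {"login": "ok", "report_total": "1024.50", "export": "ok"}
    current = {"login": "ok", "report_total": "1024.5", "export": "timeout"}
    for name, (old, new) in compare_runs(baseline, current).items():
        print(f"{name}: was {old!r}, now {new!r} -> classify and log")
```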

Decision process

Sources of error reports: product testing, regression test, customer experience.

1. Logging in the error log
2. Classify error reports
3. Cost of fix
4. Prioritize
5. Schedule
   - a. reallocate current work
   - b. schedule into current work
   - c. schedule for next release
   - d. ignore (but still log!)
6. Product insertion (cost outcome)
7. Next regression test

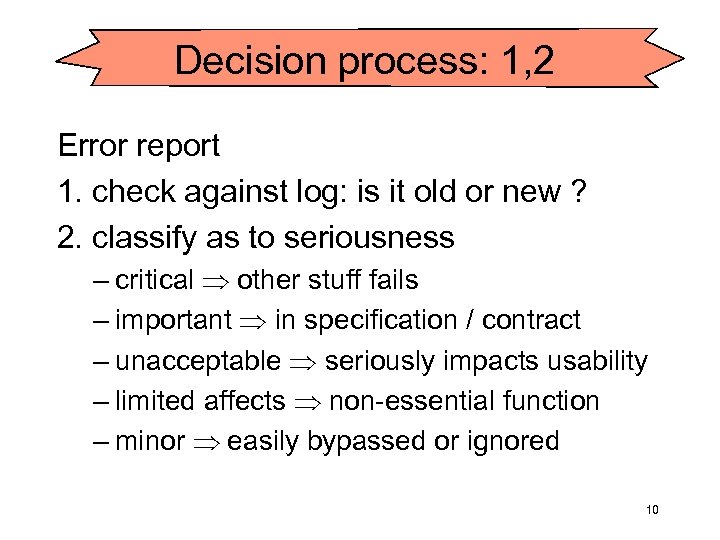

Decision process: 1, 2

Error report

1. check against log: is it old or new?
2. classify as to seriousness
   - critical ⇒ other stuff fails
   - important ⇒ in specification / contract
   - unacceptable ⇒ seriously impacts usability
   - limited effect ⇒ non-essential function
   - minor ⇒ easily bypassed or ignored

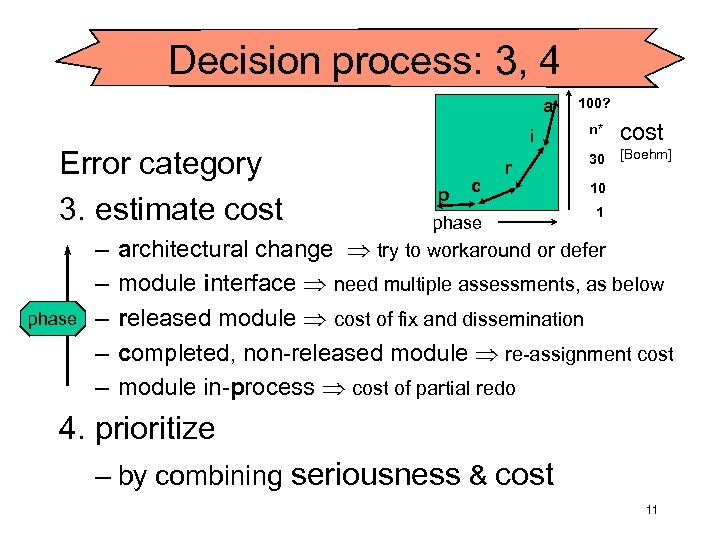

Decision process: 3, 4 a

Error category

3. estimate cost
   - architectural change ⇒ try to work around or defer
   - module interface ⇒ need multiple assessments, as below
   - released module ⇒ cost of fix and dissemination
   - completed, non-released module ⇒ re-assignment cost
   - module in-process ⇒ cost of partial redo
4. prioritize
   - by combining seriousness & cost

[Chart, after Boehm: cost of a fix (n × cost) by phase (i, p, c, r), with axis values 1, 10, 30, 100?]

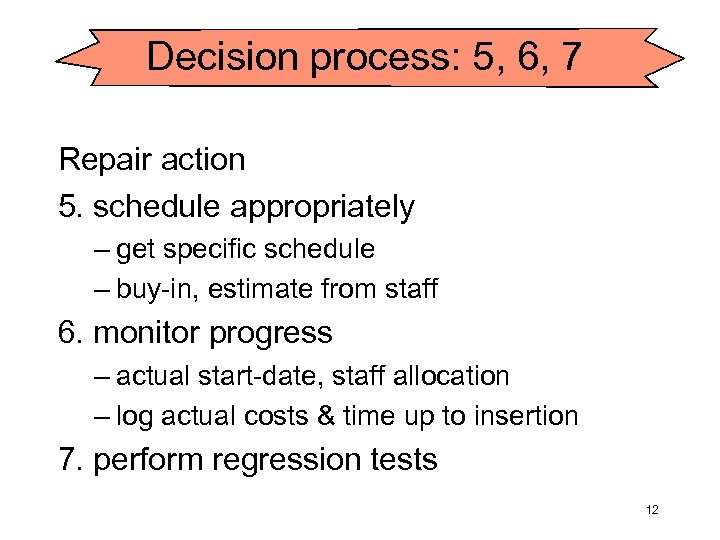
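
The slides ask for priorities "by combining seriousness & cost" but give no formula. One possible scheme, shown purely as an assumption, divides a weight for the seriousness class by the estimated cost of the fix. The weights, the `ErrorReport` fields, and the half-day floor on cost are all hypothetical.

```python
from dataclasses import dataclass

# Assumed weights for the five seriousness classes; the deck only orders them.
SERIOUSNESS_WEIGHT = {
    "critical": 16,
    "important": 8,
    "unacceptable": 4,
    "limited": 2,
    "minor": 1,
}


@dataclass
class ErrorReport:
    title: str
    seriousness: str   # one of the keys of SERIOUSNESS_WEIGHT
    cost_days: float   # estimated cost of the fix, in staff days


def priority(report: ErrorReport) -> float:
    """Higher is more urgent: seriousness weight per estimated staff day."""
    return SERIOUSNESS_WEIGHT[report.seriousness] / max(report.cost_days, 0.5)


def prioritize(reports):
    """Sort reports from most to least urgent."""
    return sorted(reports, key=priority, reverse=True)


if __name__ == "__main__":
    queue = [
        ErrorReport("typo in help text", "minor", 1),
        ErrorReport("crash on startup", "critical", 4),
        ErrorReport("contract field missing", "important", 1),
    ]
    for report in prioritize(queue):
        print(f"{priority(report):5.1f}  {report.title}")
```

A ratio favors cheap fixes of serious errors; a team might equally decide that critical errors always come first regardless of cost, which this sketch does not model.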

Decision process: 5, 6, 7

Repair action

5. schedule appropriately
   - get specific schedule
   - buy-in, estimate from staff
6. monitor progress
   - actual start-date, staff allocation
   - log actual costs & time up to insertion
7. perform regression tests

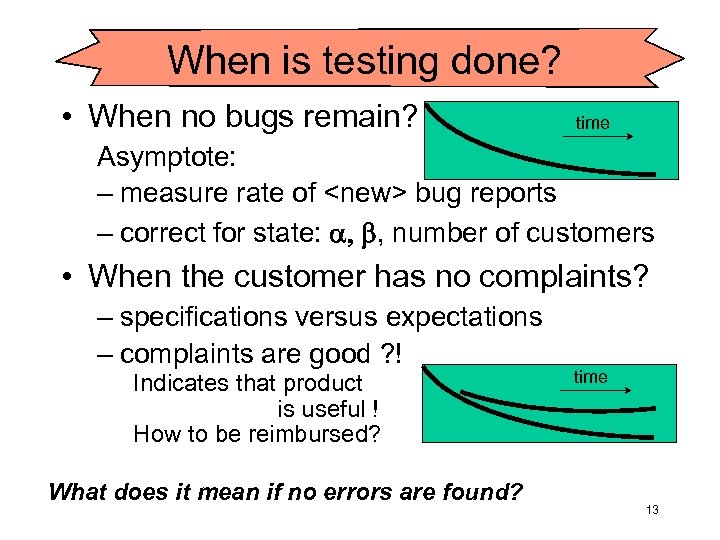

When is testing done?

- When no bugs remain?
- Asymptote (plotted over time):
  - measure rate of

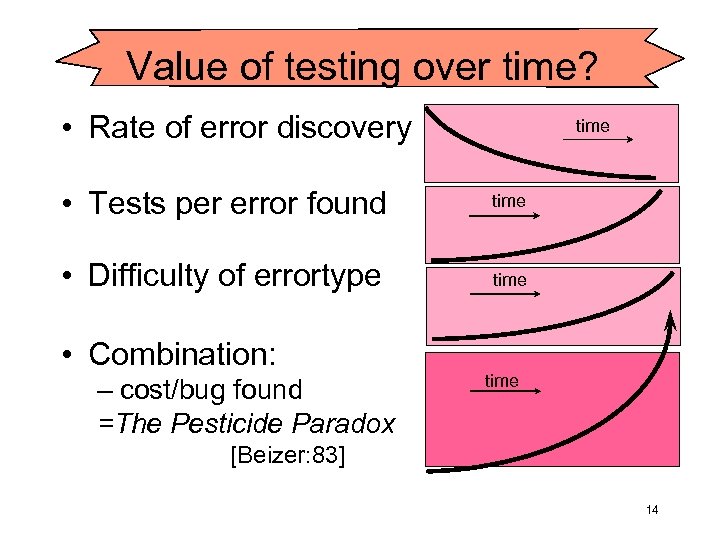

Value of testing over time?

Each quantity is plotted against time:

- Rate of error discovery
- Tests per error found
- Difficulty of error type
- Combination:
  - cost / bug found = The Pesticide Paradox [Beizer: 83]

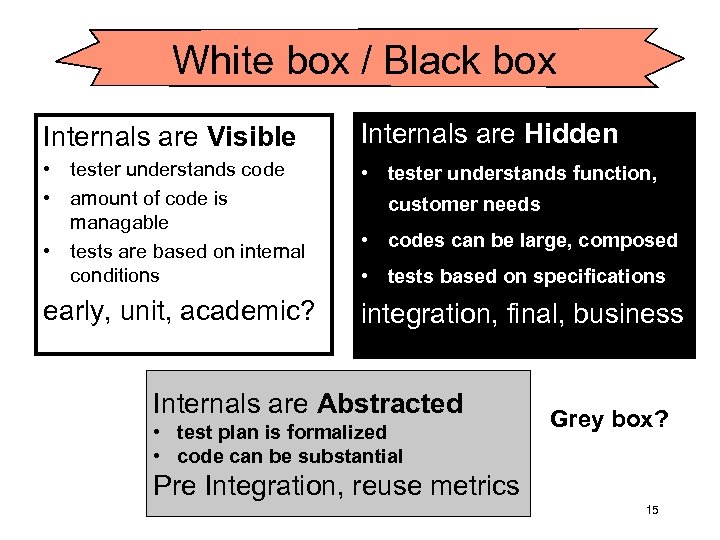

White box / Black box

White box: internals are visible (early, unit, academic?)

- tester understands code
- amount of code is manageable
- tests are based on internal conditions

Black box: internals are hidden (integration, final, business)

- tester understands function, customer needs
- code can be large, composed
- tests based on specifications

Grey box? Internals are abstracted (pre-integration, reuse metrics)

- test plan is formalized
- code can be substantial

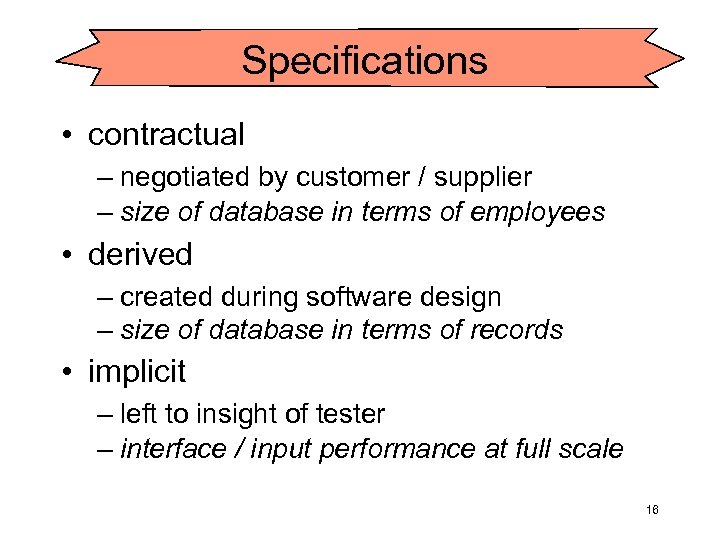
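
The difference between the two views shows up in how test cases are chosen. In this sketch `shipping_fee` and its specification are hypothetical: the black-box tests come from the stated specification alone, while the white-box tests are picked by reading the code, one per branch and boundary.

```python
import math


def shipping_fee(weight_kg: float) -> float:
    """Hypothetical spec: free up to 1 kg, then 2.0 per started kg above it."""
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    if weight_kg <= 1.0:
        return 0.0
    return 2.0 * math.ceil(weight_kg - 1.0)


def black_box_tests():
    """Derived from the specification alone."""
    assert shipping_fee(0.5) == 0.0
    assert shipping_fee(3.0) == 4.0


def white_box_tests():
    """Derived from the code: one test per branch and per boundary."""
    try:
        shipping_fee(0)
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError")
    assert shipping_fee(1.0) == 0.0  # boundary of the free tier
    assert shipping_fee(2.0) == 2.0  # extra weight is a whole number
    assert shipping_fee(2.5) == 4.0  # extra weight is rounded up


if __name__ == "__main__":
    black_box_tests()
    white_box_tests()
    print("all tests passed")
```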

Specifications

- contractual
  - negotiated by customer / supplier
  - size of database in terms of employees
- derived
  - created during software design
  - size of database in terms of records
- implicit
  - left to insight of tester
  - interface / input performance at full scale

![Implicit specifications](https://present5.com/presentation/c1f9d9904cd12519c3addfcef908720b/image-17.jpg)

Implicit specifications

Example: Dialog design advice [Shneiderman]

| Advice | Don't |
|---|---|
| 1. Strive for consistency | FTP login - user |
| 2. Enable shortcuts for experts | deep menu trees |
| 3. Offer informative feedback | illegal operation |
| 4. Design dialogs to yield closure | FTP bin mode |
| 5. Offer simple error handling | editable form |
| 6. Permit easy reversal of actions | log, ctrl Z |
| 7. Support internal locus of control | no ptr to home page |
| 8. Reduce short-term memory load | > 7 ± 2 items |

Assignment? List violations from your experience

Bug types

- assumptions
  - implicit expectations
- conflicts
  - features vs usability vs speed -- balance
- algorithm
  - publications often don't state limits
  - only report good aspect -- concurrency control
- overflow
  - arrays, files, record types -- language dependent
- leaks in memory allocation
  - language / executable dependent, tools available

Testability

What makes programs hard to test?

- Boundary values hard to enter
- Coverage hard to assess
- Design for testability
  - internal breakpoints for inputs at branches
  - reports -- voluminous -- analysis tools
  - switches to bypass healthy areas (WB / BB)
  - temporary / permanent breakpoints / switches
- Path to report mapping
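
One way to read "internal breakpoints for inputs at branches" is a hook that lets a test supply the value a branch depends on. The sketch below is an assumption about how that might look in Python: `is_after_cutoff` and its `now` parameter are hypothetical, and the clock is used as an example of a boundary value that is hard to enter from outside.

```python
from datetime import datetime, time


def is_after_cutoff(now=None, cutoff: time = time(17, 0)) -> bool:
    """Return True when an order arrives at or after the daily cutoff.

    `now` is a test hook: production callers omit it and get the real clock,
    while tests pass an exact boundary value that is otherwise hard to enter.
    """
    current = now if now is not None else datetime.now()
    return current.time() >= cutoff


if __name__ == "__main__":
    print(is_after_cutoff(datetime(1999, 10, 1, 16, 59, 59)))
    print(is_after_cutoff(datetime(1999, 10, 1, 17, 0, 0)))
```

This hook is permanent rather than temporary; it stays in the delivered code, which is the trade-off the last sub-item on the slide points to.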

Alternatives

- Verification
  - objective: versus formal specification
  - only performed after specifications are fixed
- Validation
  - subjective: versus customer intent
  - implicit: expectations from the state-of-the-art
  - can be performed throughout
- Replication of coding and comparison
  - used where no realistic tests can be made
  - costly separation of staff,
  - still specification dependence, questionable
- Code review
  - 'Static testing'
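
"Replication of coding and comparison" can be sketched as running two separately written versions of the same function over shared inputs and reporting where they disagree. The integer square root is only a stand-in example, and `disagreements` is a hypothetical helper. In practice the two versions would come from separated staff; here the second version defers to `math.isqrt` (Python 3.8+).

```python
import math


def isqrt_newton(n: int) -> int:
    """First version: integer square root by Newton's iteration."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n == 0:
        return 0
    x = n
    while True:
        y = (x + n // x) // 2
        if y >= x:
            return x
        x = y


def isqrt_library(n: int) -> int:
    """Second version: defers to the standard library."""
    return math.isqrt(n)


def disagreements(impl_a, impl_b, inputs):
    """Return the inputs on which the two versions give different results."""
    return [value for value in inputs if impl_a(value) != impl_b(value)]


if __name__ == "__main__":
    print(disagreements(isqrt_newton, isqrt_library, range(10_000)))
```

Agreement only shows that both versions read the specification the same way, which is the "specification dependence" caveat on the slide.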

Conclusion

- Planning for testing is worthwhile
  - explicit cost item, relate to quality objective
  - distinct, but related to debugging
- Logging is crucial
  - input to next test, further development
- Requires knowledge of code, function
  - experience with programs, customer needs
  - can qualified testers be obtained, retained?
- Testing receives insufficient attention
