- Number of slides: 19

Tense and Implicit Role Reference

Joel Tetreault, University of Rochester, Department of Computer Science

Implicit Role Reference

- Verb phrases have certain required roles: NPs that are expected
- For example, “take”:
  - Something to take (theme)
  - A place to take it from (from-loc)
  - A place to take it to (to-loc)
  - Something to do the taking (agent)
  - Possibly a tool to do the taking (instrument)
- Very little work has been done (Poesio, 1994; Asher and Lascarides, 1998)
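
The role list for “take” can be pictured as a small frame that is compared against what an utterance actually says. The sketch below is illustrative only: the `ROLE_FRAMES` table and the `implicit_roles` helper are hypothetical names, not part of the TRIPS lexicon or the system described in these slides.

```python
# Hypothetical role frame for "take", using the roles listed on the slide.
ROLE_FRAMES = {
    "take": ["theme", "from-loc", "to-loc", "agent", "instrument"],
}


def implicit_roles(verb, explicit):
    """Return the roles of `verb` that the utterance does not fill explicitly."""
    return [role for role in ROLE_FRAMES[verb] if role not in explicit]


# "take it to Broxburn": only the theme and the to-loc are stated.
missing = implicit_roles("take", {"theme": "it", "to-loc": "Broxburn"})
print(missing)  # ['from-loc', 'agent', 'instrument']
```

Every role left in `missing` is a candidate for implicit role resolution.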

Goal

- Resolving IRRs is important to NLP
- Investigate how implicit roles work
- Develop an algorithm for resolving them
- Evaluate the algorithm to obtain empirical results
- Use temporal information and discourse relations to improve results

Outline

- Implicit Roles
- Annotation
- Algorithm
- Results
- Discussion

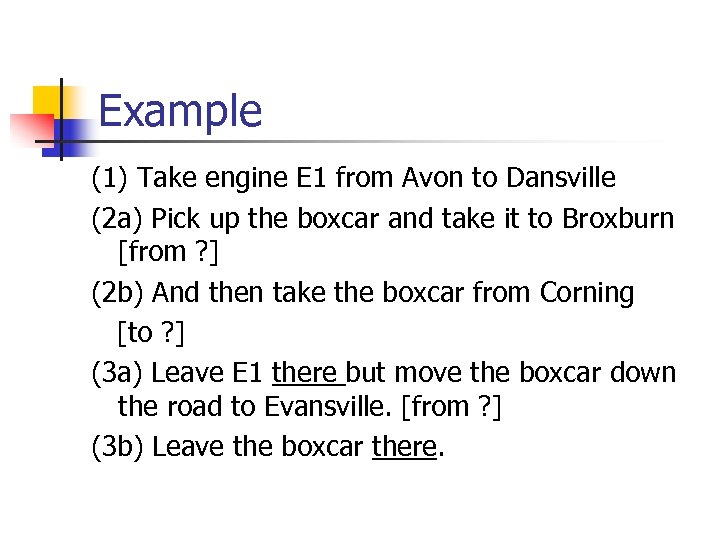

Example

- (1) Take engine E1 from Avon to Dansville
- (2a) Pick up the boxcar and take it to Broxburn [from ?]
- (2b) And then take the boxcar from Corning [to ?]
- (3a) Leave E1 there but move the boxcar down the road to Evansville. [from ?]
- (3b) Leave the boxcar there.

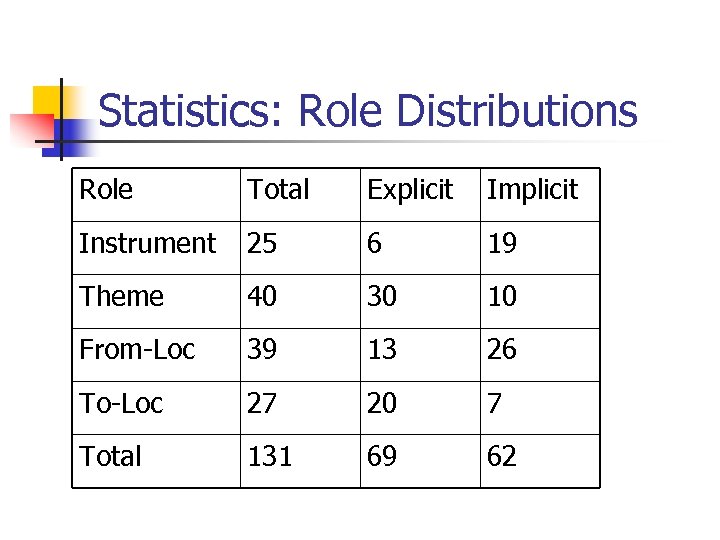

Statistics: Role Distributions

| Role | Total | Explicit | Implicit |
|---|---|---|---|
| Instrument | 25 | 6 | 19 |
| Theme | 40 | 30 | 10 |
| From-Loc | 39 | 13 | 26 |
| To-Loc | 27 | 20 | 7 |
| Total | 131 | 69 | 62 |
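
The counts above can be turned into the share of each role that is left implicit. This is a small arithmetic sketch; the dictionary layout and the `implicit_share` helper are illustrative choices, and the numbers are copied from the role distribution on this slide.

```python
# role: (explicit, implicit), copied from the role distribution counts
ROLE_COUNTS = {
    "Instrument": (6, 19),
    "Theme": (30, 10),
    "From-Loc": (13, 26),
    "To-Loc": (20, 7),
}


def implicit_share(explicit, implicit):
    """Fraction of a role's occurrences that are implicit."""
    return implicit / (explicit + implicit)


total_explicit = sum(e for e, _ in ROLE_COUNTS.values())
total_implicit = sum(i for _, i in ROLE_COUNTS.values())

for role, (explicit, implicit) in ROLE_COUNTS.items():
    print(f"{role}: {implicit_share(explicit, implicit):.0%} implicit")
print(f"All roles: {implicit_share(total_explicit, total_implicit):.0%} implicit")
```

On these counts, instruments and source locations are left implicit far more often than themes and destinations.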

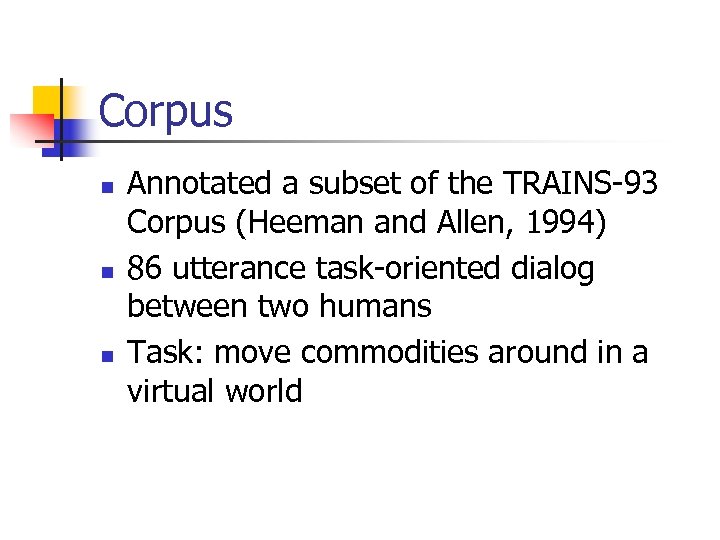

Corpus

- Annotated a subset of the TRAINS-93 Corpus (Heeman and Allen, 1994)
- 86-utterance task-oriented dialog between two humans
- Task: move commodities around in a virtual world

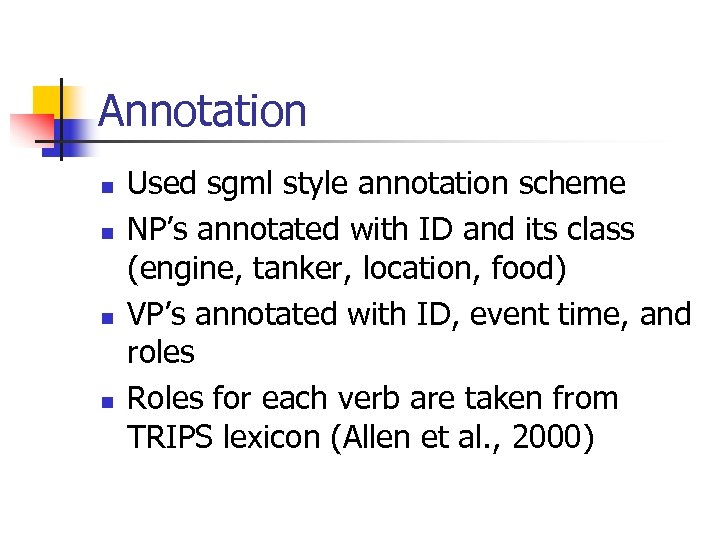

Annotation

- Used an SGML-style annotation scheme
- NPs annotated with an ID and a class (engine, tanker, location, food)
- VPs annotated with an ID, event time, and roles
- Roles for each verb are taken from the TRIPS lexicon (Allen et al., 2000)

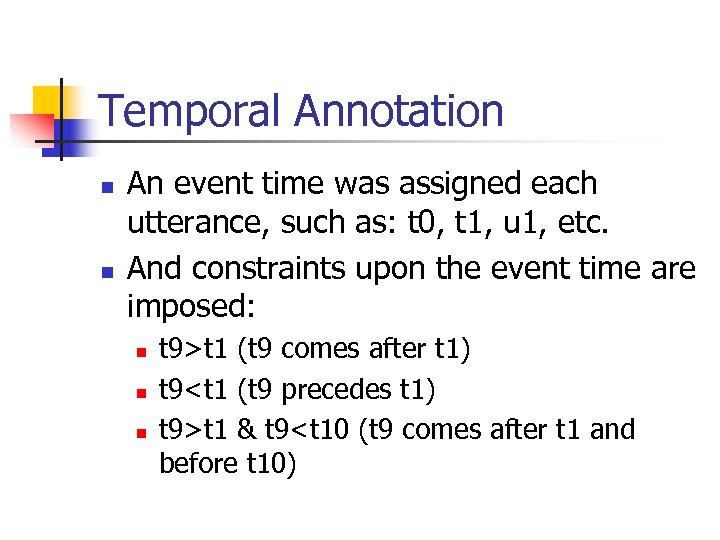

Temporal Annotation

- An event time was assigned to each utterance, such as t0, t1, u1, etc.
- Constraints are imposed on the event times:
  - t9 > t1 (t9 comes after t1)
  - t9

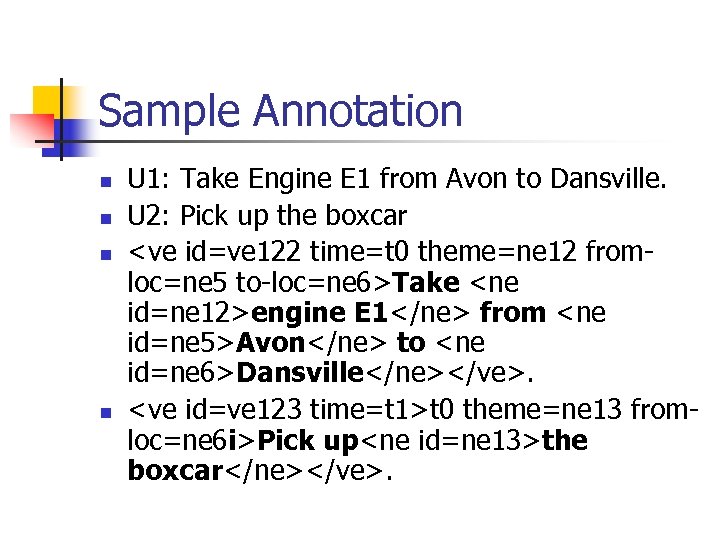

Sample Annotation

- U1: Take Engine E1 from Avon to Dansville.
- U2: Pick up the boxcar

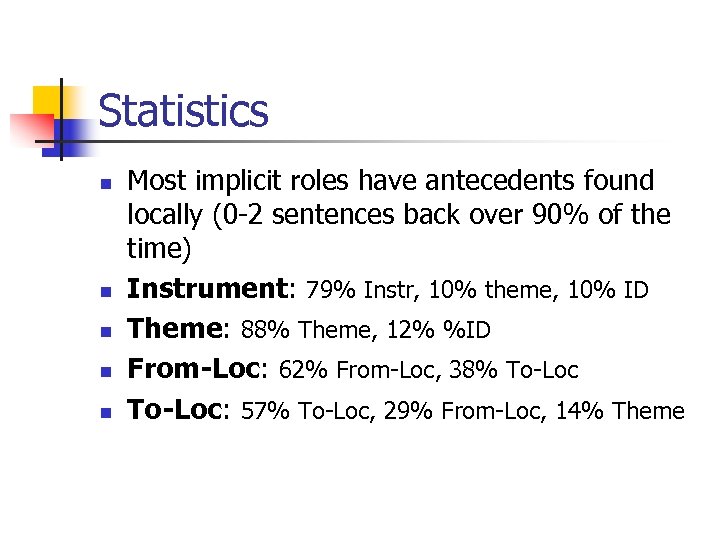

Statistics

- Most implicit roles have antecedents found locally (0-2 sentences back over 90% of the time)
- Instrument: 79% Instr, 10% Theme, 10% ID
- Theme: 88% Theme, 12% ID
- From-Loc: 62% From-Loc, 38% To-Loc
- To-Loc: 57% To-Loc, 29% From-Loc, 14% Theme

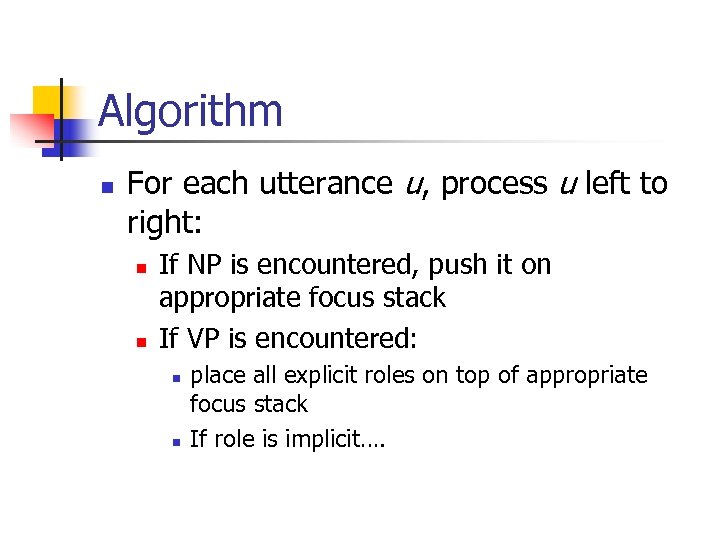

Algorithm

- For each utterance u, process u left to right:
  - If an NP is encountered, push it onto the appropriate focus stack
  - If a VP is encountered:
    - Place all explicit roles on top of the appropriate focus stack
    - If a role is implicit…
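
The bookkeeping for explicit roles might look like the following minimal sketch. It assumes one focus stack per role name, stored as a Python list whose last element is the top of the stack; the `update_focus` helper is hypothetical, and the branch for implicit roles is deliberately left out because this slide does not spell it out.

```python
from collections import defaultdict


def update_focus(focus, explicit_roles):
    """Push each explicitly filled role of a VP onto that role's focus stack."""
    for role, entity in explicit_roles.items():
        focus[role].append(entity)  # end of the list is the top of the stack
    return focus


# One stack per role; roles that were never filled simply have an empty stack.
focus = defaultdict(list)

# U1: Take engine E1 from Avon to Dansville
update_focus(focus, {"theme": "engine E1", "from-loc": "Avon", "to-loc": "Dansville"})
# U2: Also take the boxcar (from-loc and to-loc are implicit, so nothing is pushed)
update_focus(focus, {"theme": "the boxcar"})

print(dict(focus))
```

After both utterances, the theme stack holds two entities while the location stacks still hold only what U1 stated, which is what a later search for an implicit location would consult.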

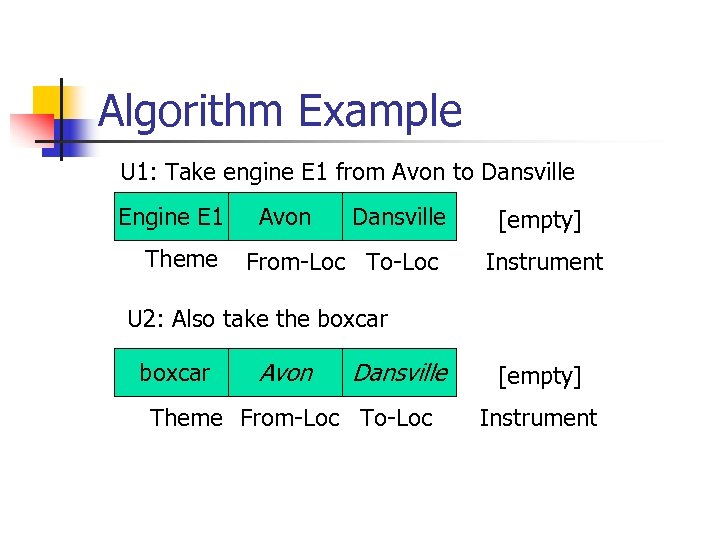

Algorithm Example

| Utterance | Theme | From-Loc | To-Loc | Instrument |
|---|---|---|---|---|
| U1: Take engine E1 from Avon to Dansville | Engine E1 | Avon | Dansville | [empty] |
| U2: Also take the boxcar | | Avon | Dansville | [empty] |

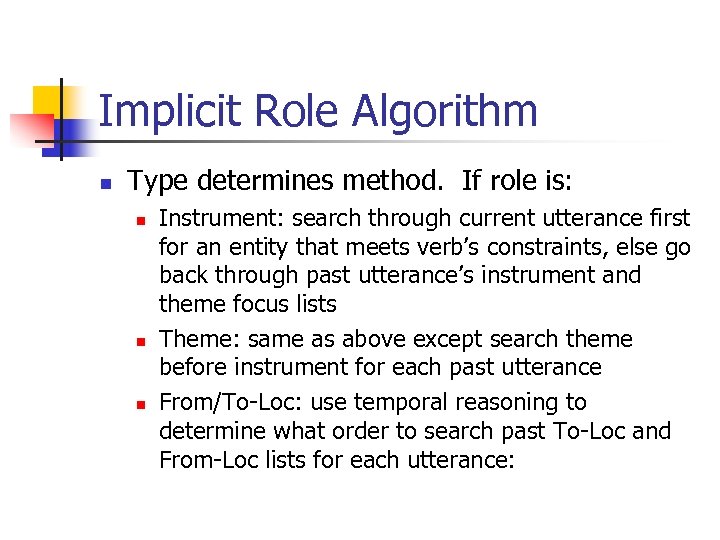

Implicit Role Algorithm

- Type determines method. If the role is:
  - Instrument: search the current utterance first for an entity that meets the verb’s constraints; otherwise go back through each past utterance’s instrument and theme focus lists
  - Theme: same as above, except search theme before instrument for each past utterance
  - From/To-Loc: use temporal reasoning to determine the order in which to search the past To-Loc and From-Loc lists for each utterance
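
The instrument and theme cases can be sketched as a search over the current utterance followed by past focus lists. Several details here are assumptions rather than the original LISP system: past utterances are visited most recent first, each list is scanned most recent entity first, and the verb's constraints are reduced to a caller-supplied predicate `ok`. The location roles are omitted because their search order depends on temporal reasoning.

```python
# Which past focus lists to consult, in order, for each implicit role type.
SEARCH_ORDER = {
    "instrument": ["instrument", "theme"],
    "theme": ["theme", "instrument"],
}


def resolve(role, current_entities, history, ok):
    """Return the first candidate accepted by `ok`, or None if there is none.

    current_entities: entities mentioned in the current utterance
    history: one dict of focus lists per past utterance, oldest first
    ok: hypothetical predicate standing in for the verb's constraints
    """
    for entity in current_entities:
        if ok(entity):
            return entity
    for focus in reversed(history):  # assumption: most recent utterance first
        for list_name in SEARCH_ORDER[role]:
            for entity in reversed(focus.get(list_name, [])):
                if ok(entity):
                    return entity
    return None


history = [{"theme": ["engine E1"], "from-loc": ["Avon"], "to-loc": ["Dansville"]}]


def is_engine(entity):
    return entity.startswith("engine")


antecedent = resolve("instrument", ["the boxcar"], history, is_engine)
print(antecedent)  # engine E1
```

In this example the instrument list is empty, so the search falls through to the theme list of the previous utterance and finds the engine there.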

Temporal Algorithm

- For two utterances uk and uj, with k > j, determine rel(uk, uj):
  - If time(uk) > time(uj), then rel(uk, uj) = narrative
  - Else rel(uk, uj) = parallel
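
The rule translates almost directly into code. The sketch below assumes event times can be compared as plain integers, which simplifies the symbolic event times and constraints used in the annotation.

```python
def rel(time_k, time_j):
    """Discourse relation between a later utterance u_k and an earlier u_j.

    time_k and time_j are event times; comparable integers are an assumption.
    """
    return "narrative" if time_k > time_j else "parallel"


print(rel(9, 1))  # narrative: the later utterance also describes a later event
print(rel(1, 1))  # parallel: the event time does not advance
```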

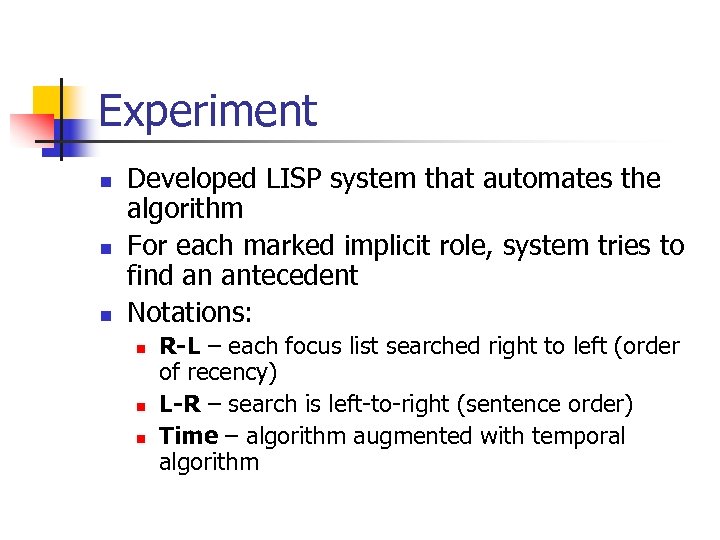

Experiment

- Developed a LISP system that automates the algorithm
- For each marked implicit role, the system tries to find an antecedent
- Notation:
  - R-L: each focus list is searched right to left (order of recency)
  - L-R: search is left to right (sentence order)
  - Time: algorithm augmented with the temporal algorithm

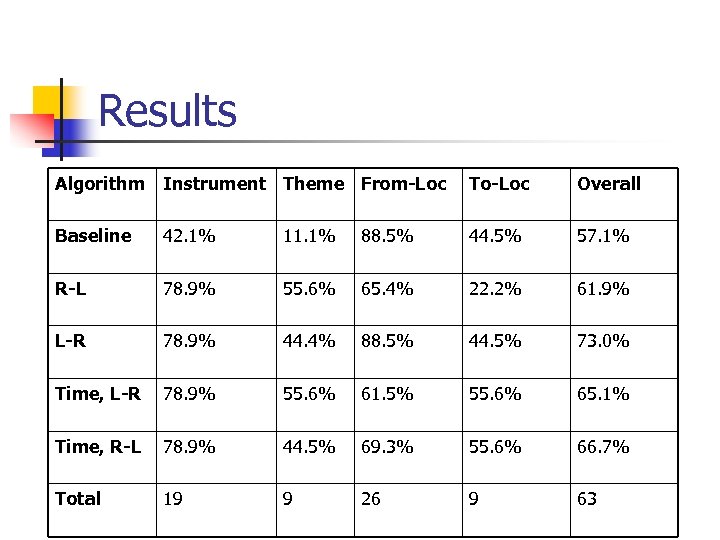

Results

| Algorithm | Instrument | Theme | From-Loc | To-Loc | Overall |
|---|---|---|---|---|---|
| Baseline | 42.1% | 11.1% | 88.5% | 44.5% | 57.1% |
| R-L | 78.9% | 55.6% | 65.4% | 22.2% | 61.9% |
| L-R | 78.9% | 44.4% | 88.5% | 44.5% | 73.0% |
| Time, L-R | 78.9% | 55.6% | 61.5% | 55.6% | 65.1% |
| Time, R-L | 78.9% | 44.5% | 69.3% | 55.6% | 66.7% |
| Total | 19 | 9 | 26 | 9 | 63 |
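
One way to read the Overall column is as the number of correctly resolved roles divided by the 63 implicit roles, rather than a plain mean of the four percentages. The sketch below checks that reading against the reported figures; recovering per-role counts by rounding is an assumption, since the slide gives only percentages.

```python
# Number of implicit roles per type, from the "Total" row.
TOTALS = {"Instrument": 19, "Theme": 9, "From-Loc": 26, "To-Loc": 9}

# Per-role accuracy (percent) in the same column order as TOTALS.
ACCURACY = {
    "Baseline": [42.1, 11.1, 88.5, 44.5],
    "R-L": [78.9, 55.6, 65.4, 22.2],
    "L-R": [78.9, 44.4, 88.5, 44.5],
    "Time, L-R": [78.9, 55.6, 61.5, 55.6],
    "Time, R-L": [78.9, 44.5, 69.3, 55.6],
}


def overall(per_role_pct):
    """Overall accuracy (percent) from per-role percentages and role counts."""
    # Assumption: each percentage corresponds to a whole number of correct roles.
    correct = sum(round(p / 100 * n) for p, n in zip(per_role_pct, TOTALS.values()))
    return 100 * correct / sum(TOTALS.values())


for name, pcts in ACCURACY.items():
    print(f"{name}: {overall(pcts):.1f}%")
```

Under this reading, the From-Loc column dominates the overall score because it accounts for 26 of the 63 roles.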

Discussion

- From-Loc: the naive version is better
- To-Loc: any strategy is better than naive
- Top pronoun resolution algorithms perform at around 70-80% accuracy
- Problems:
  - Corpus size: hard to draw concrete conclusions or find trends
  - Annotation scheme is basic
  - Need to handle ‘return verbs’ properly
  - Augment the system to identify whether implicit roles should be resolved or not (ignore general cases)

Current Work

- Building a larger corpus that can be annotated automatically using the TRIPS parser
- The domain is much more varied and has different types of verbs