- Number of slides: 72

Techniques for automated localization and correction of design errors

Jaan Raik, Tallinn University of Technology

Design error debug

“There has never been an unexpectedly short debugging period in the history of computers.” (Steven Levy)

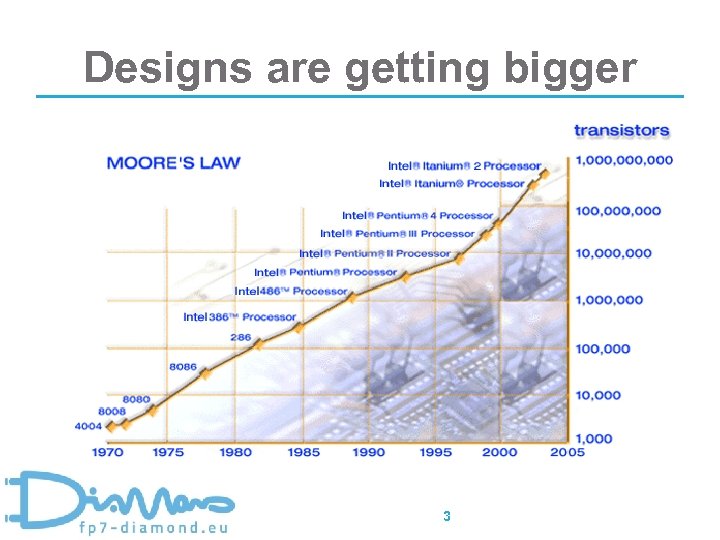

Designs are getting bigger

Designs are getting costlier

- Cost per function decreases by 25-30% annually
- The market for ICs grows by 15 percent annually
- But the cost of chip design keeps on growing.
- In 1981, development of a leading-edge CPU cost 1 M$
- …today it costs more than 300 M$!
- Why do the costs increase?

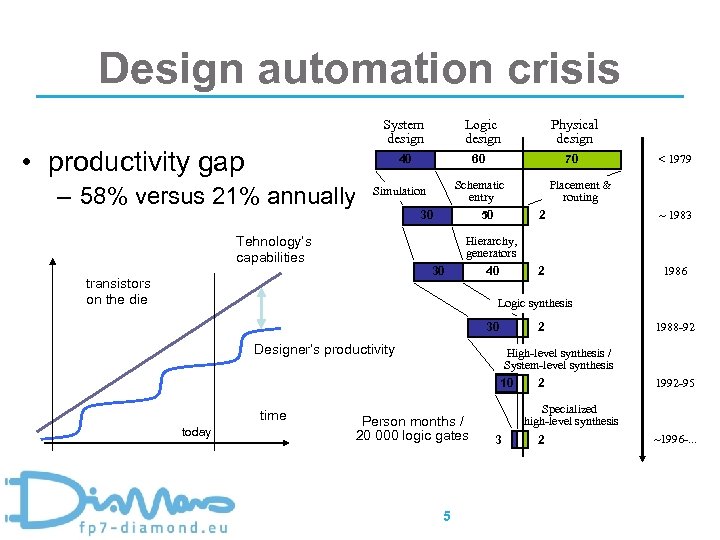

Design automation crisis

- Productivity gap: technology’s capabilities (transistors on the die) grow 40-58% annually versus 21% for designer’s productivity
- Design levels: system design, logic design, physical design
- Figure: design effort in person months per 20 000 logic gates over time, with the milestones schematic entry, simulation, placement & routing, hierarchy and generators, logic synthesis, high-level / system-level synthesis, and specialized high-level synthesis (time marks: < 1979, ~1983, 1986, 1988-92, 1992-95, ~1996-...)

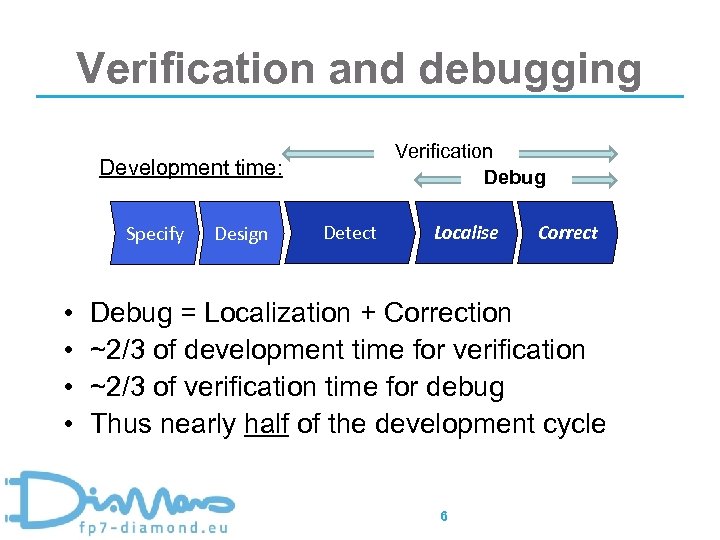

Verification and debugging

- Development time: Specify, Design, Detect, Localise, Correct (diagram labels: Verification, Debug)
- Debug = Localization + Correction
- ~2/3 of development time is spent on verification
- ~2/3 of verification time is spent on debug
- Thus debug takes nearly half of the development cycle
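
The "nearly half" estimate is simply the product of the two fractions quoted on the slide, assuming both are rough averages over the whole development cycle:

$$\frac{2}{3}\cdot\frac{2}{3}=\frac{4}{9}\approx 0.44$$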

Bugs are getting „smarter“

CREDES Summer School, June 2-3, 2011, Tallinn, Estonia

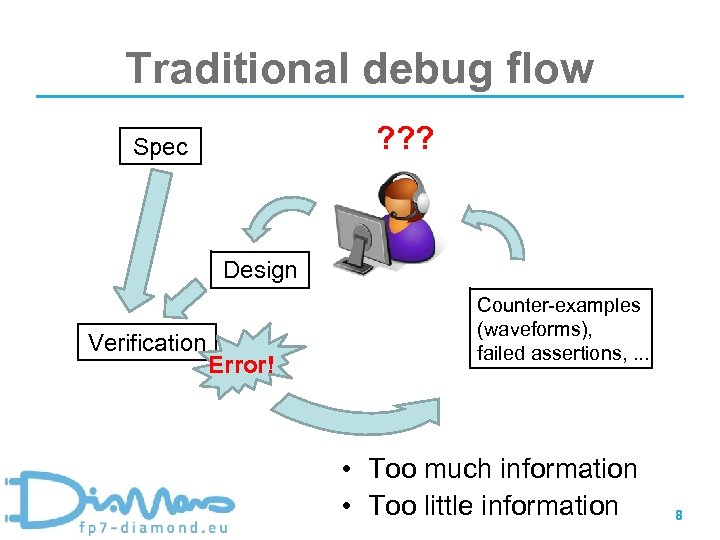

Traditional debug flow

Diagram labels: Spec, Design, Verification, Error!, counter-examples (waveforms), failed assertions, ..., ???

- Too much information
- Too little information

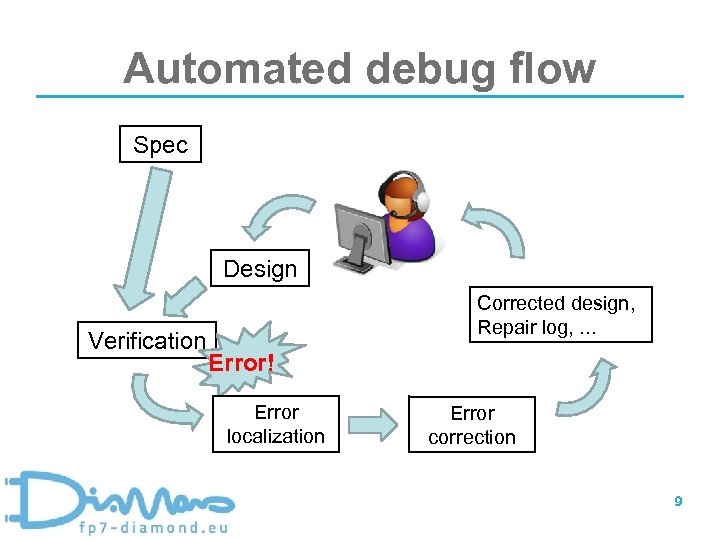

Automated debug flow

Diagram labels: Spec, Design, Verification, Error!, Error localization, Error correction, Corrected design, Repair log, ...

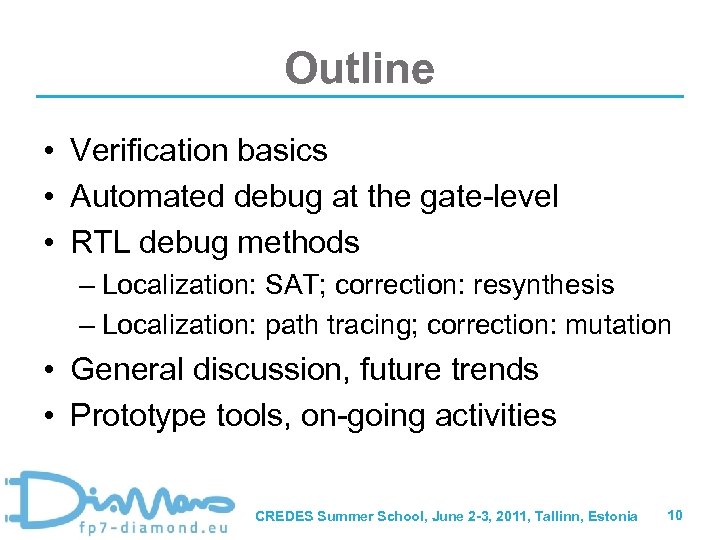

Outline

- Verification basics
- Automated debug at the gate-level
- RTL debug methods
  - Localization: SAT; correction: resynthesis
  - Localization: path tracing; correction: mutation
- General discussion, future trends
- Prototype tools, on-going activities

Verification

“To err is human - and to blame it on a computer is even more so.” (Robert Orben)

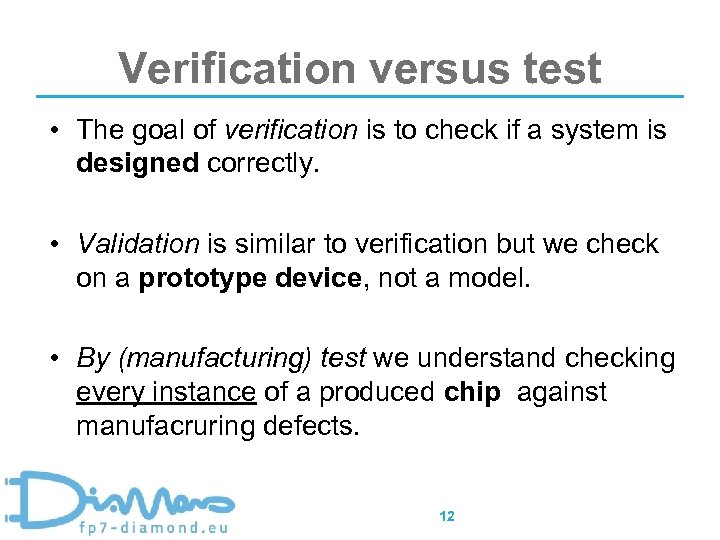

Verification versus test

- The goal of verification is to check if a system is designed correctly.
- Validation is similar to verification, but we check on a prototype device, not a model.
- (Manufacturing) test means checking every instance of a produced chip for manufacturing defects.

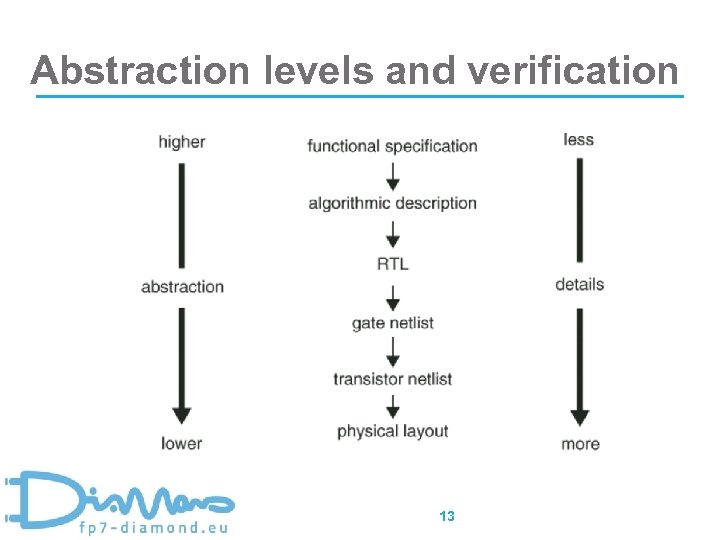

Abstraction levels and verification

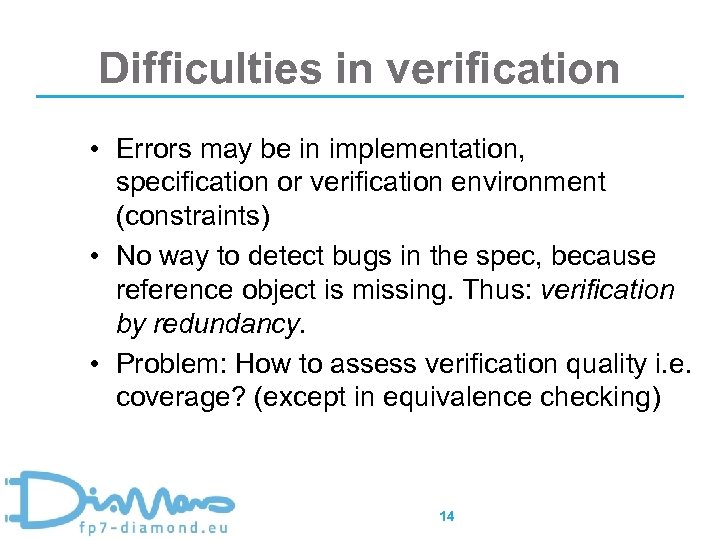

Difficulties in verification

- Errors may be in the implementation, the specification or the verification environment (constraints)
- There is no way to detect bugs in the spec, because a reference object is missing. Thus: verification by redundancy.
- Problem: how to assess verification quality, i.e. coverage? (except in equivalence checking)

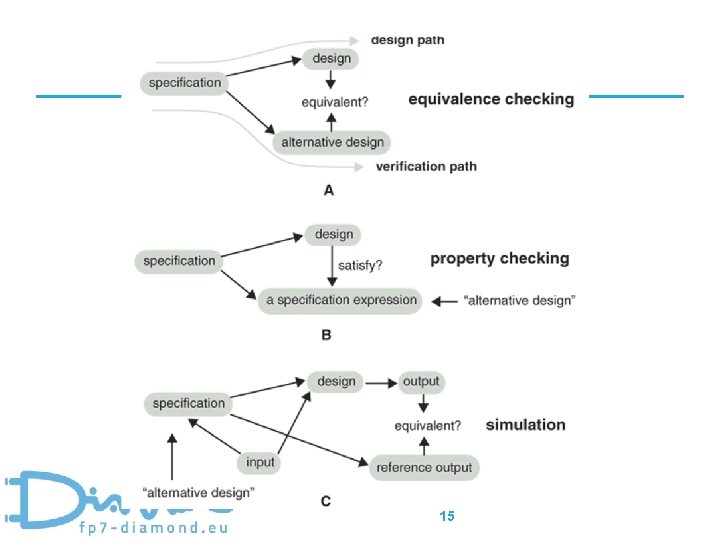

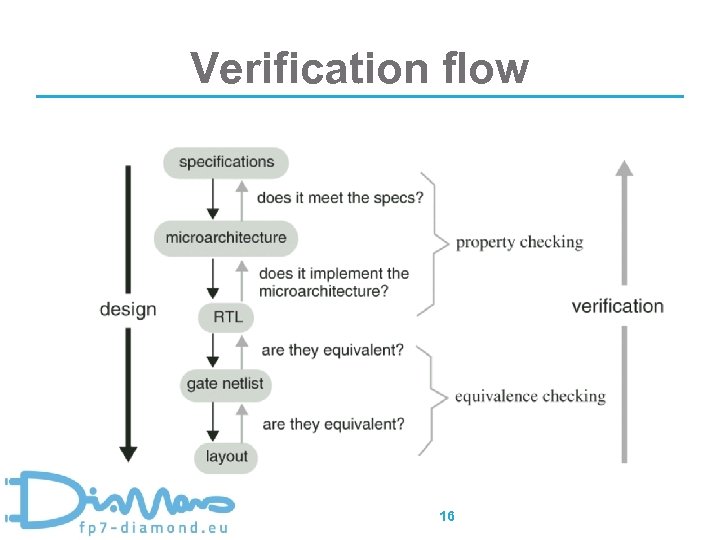

Verification flow

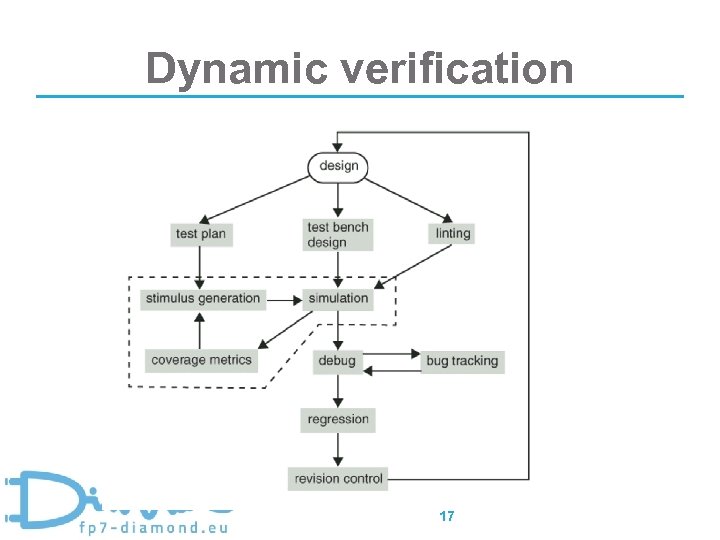

Dynamic verification

Dynamic verification

- Based on simulation
- Code coverage
- Assertions, functional coverage

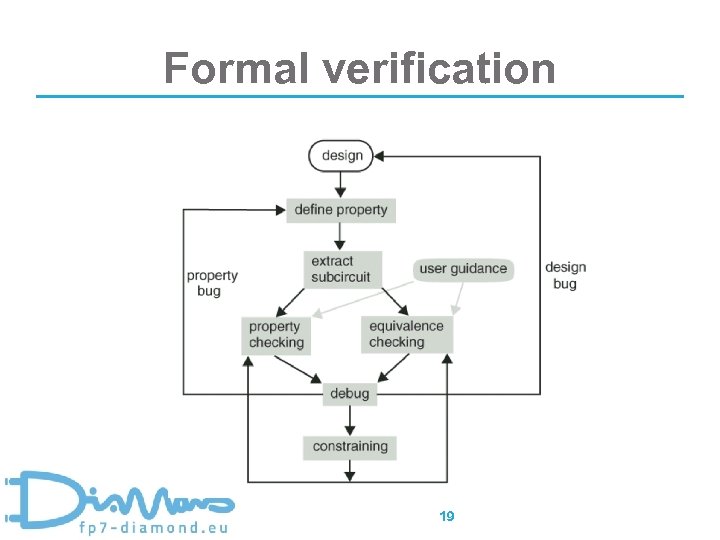

Formal verification

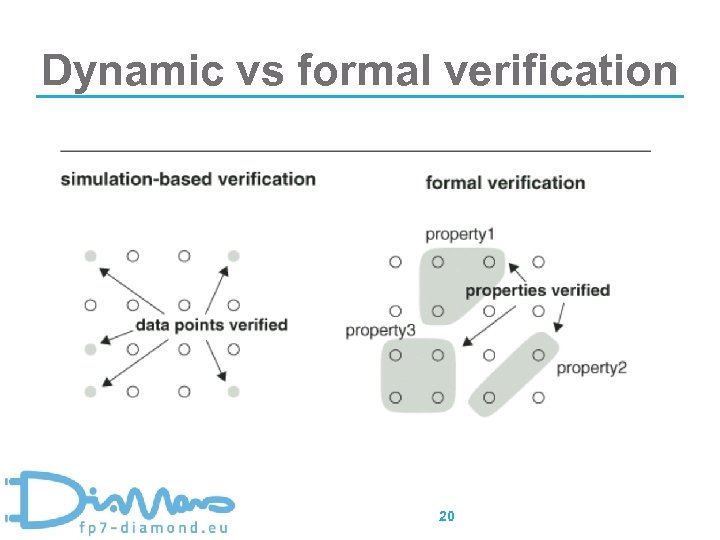

Dynamic vs formal verification

Automated debug techniques

“Logic is a poor model of cause and effect.” (Gregory Bateson)

Debugging design errors

- Concept of design error:
  - Mostly modeled in implementation, sometimes in specification
- Main applications:
  - Checking the synthesis tools
  - Engineering change, incremental synthesis
  - Debugging

Debugging design errors

What leads to debugging?

- Design behavior doesn’t match expected behavior

When does this occur?

- During simulation of design
- Formal tools (property/equivalence check)
- Checkers identify the mismatch

Design error diagnosis

- Classification of methods:
  - Structure-based/specification-based
  - Explicit/implicit fault model (model-free)
  - Single/multiple error assumption
  - Simulation-based/symbolic

Debugging combinational logic

- Thoroughly studied in the 1990s
- Many works by Aas, Abadir, Wahba & Borrione, others
- Also studied at TUT (Ubar & Jutman)
  - Used structural BDDs for error localization

Explicit error model (Abadir)

- Functional errors of gate elements
  - gate substitution
  - extra gate
  - missing gate
  - extra inverter
  - missing inverter
- Connection errors of signal lines
  - extra connection
  - missing connection
  - wrong connection

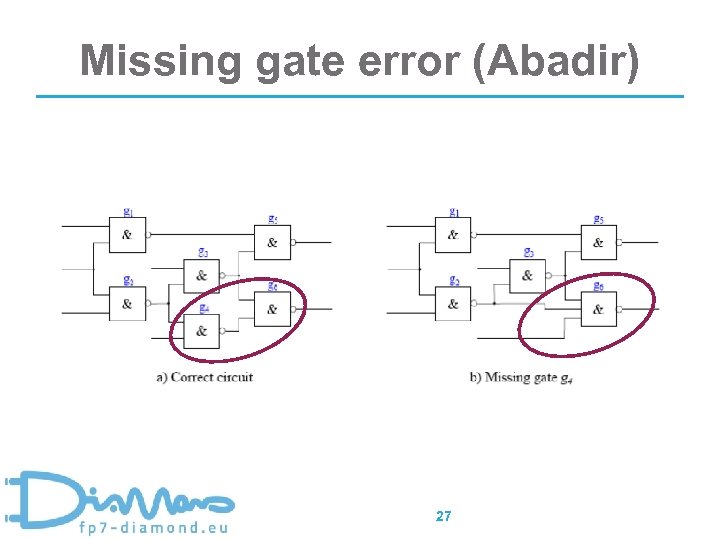

Missing gate error (Abadir)

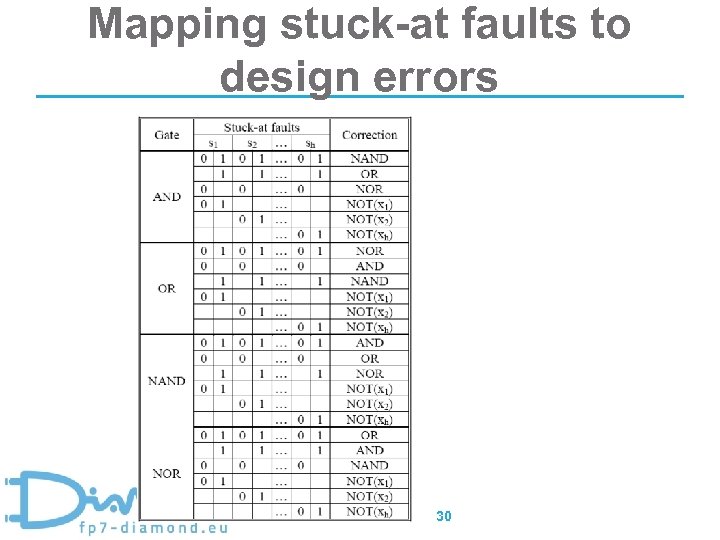

Mapping stuck-at faults to design errors

- Abadir: a complete stuck-at (s-a) test detects all single gate replacements (AND, OR, NAND, NOR), extra gates (simple case), missing gates (simple case) and extra wires.

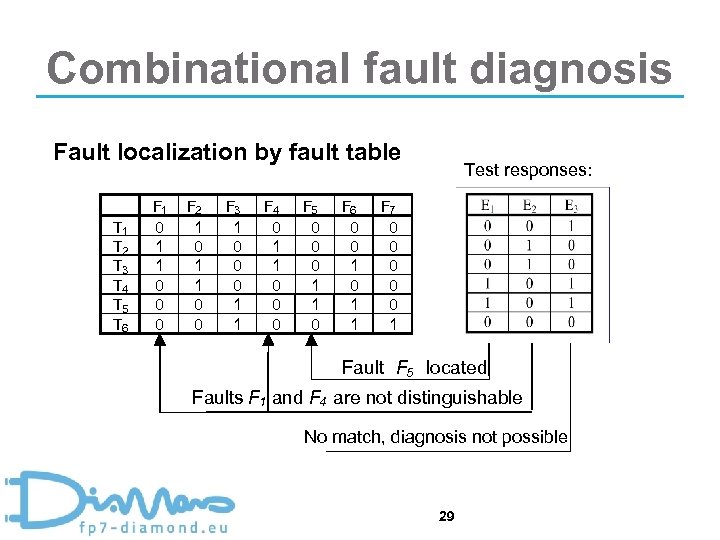

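Combinational fault diagnosis

Fault localization by fault table

| Fault | T1 | T2 | T3 | T4 | T5 | T6 |
|---|---|---|---|---|---|---|
| F1 | 0 | 1 | 1 | 0 | 0 | 0 |
| F2 | 1 | 0 | 1 | 1 | 0 | 0 |
| F3 | 1 | 0 | 0 | 0 | 1 | 1 |
| F4 | 0 | 1 | 1 | 0 | 0 | 0 |
| F5 | 0 | 0 | 0 | 1 | 1 | 0 |
| F6 | 0 | 0 | 1 | 1 | … | … |
| F7 | 0 | 0 | 0 | 1 | … | … |

Test responses:

- Fault F5 located
- Faults F1 and F4 are not distinguishable
- No match, diagnosis not possible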
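
The three diagnosis outcomes can be reproduced with a simple dictionary lookup. The sketch below is illustrative only: it uses the rows F1 to F5 as they appear on the slide (F6 and F7 are incomplete in the source and left out), assumes that an entry of 1 means "the test detects the fault", and the observed responses are hypothetical examples chosen to trigger each outcome.

```python
# Fault table rows F1..F5 as read from the slide (columns are tests T1..T6).
# Rows F6 and F7 are incomplete in the source and therefore omitted.
FAULT_TABLE = {
    "F1": (0, 1, 1, 0, 0, 0),
    "F2": (1, 0, 1, 1, 0, 0),
    "F3": (1, 0, 0, 0, 1, 1),
    "F4": (0, 1, 1, 0, 0, 0),
    "F5": (0, 0, 0, 1, 1, 0),
}


def diagnose(response):
    """Return all faults whose table row equals the observed test response."""
    return [fault for fault, row in FAULT_TABLE.items() if row == tuple(response)]


# Hypothetical observed responses, one per outcome listed on the slide.
print(diagnose([0, 0, 0, 1, 1, 0]))  # one match: the fault is located
print(diagnose([0, 1, 1, 0, 0, 0]))  # two matches: faults are not distinguishable
print(diagnose([1, 1, 1, 1, 1, 1]))  # no match: diagnosis not possible
```

An exact-match lookup is the simplest possible policy; it fails as soon as the actual defect is not in the table, which is the third outcome on the slide.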

Mapping stuck-at faults to design errors

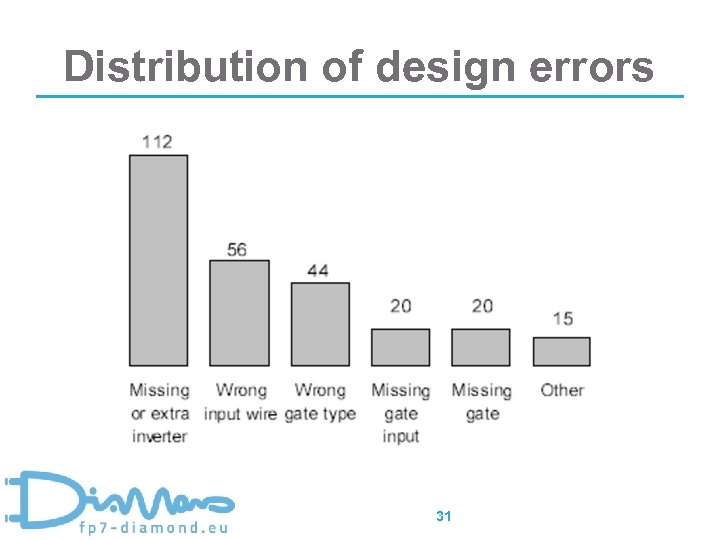

Distribution of design errors

Explicit model: disadvantages

- High number of errors to model
- Some errors still not modeled

Implicit design error models

- Do not rely on structure
- Circuit under verification as a black box
- I/O pin fault models

Design error correction

- Classification:
  - Error matching approach
  - Resynthesis approach

Design error correction

- Happens in a loop:
  - An error is detected and localized
  - Correction step is applied
  - Corrected design must be reverified
  - ...
- Until the design passes verification

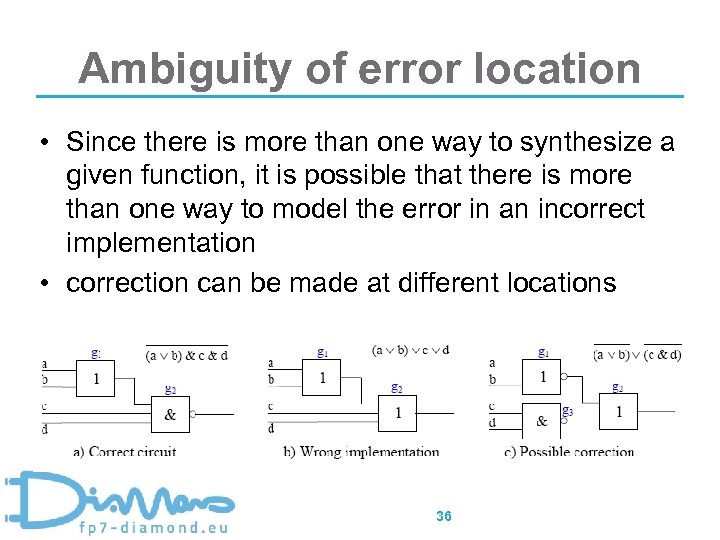

Ambiguity of error location

- Since there is more than one way to synthesize a given function, there may be more than one way to model the error in an incorrect implementation
- The correction can therefore be made at different locations

Crash course on SAT

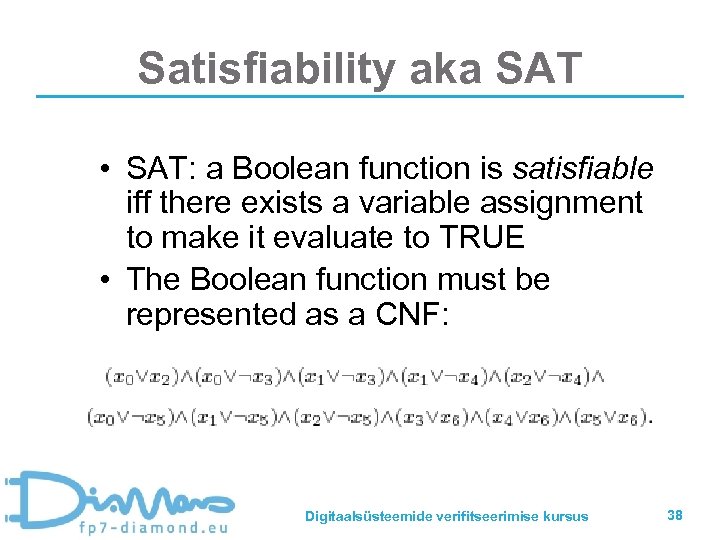

Satisfiability aka SAT

- SAT: a Boolean function is satisfiable iff there exists a variable assignment that makes it evaluate to TRUE
- The Boolean function must be represented as a CNF:

Course on verification of digital systems

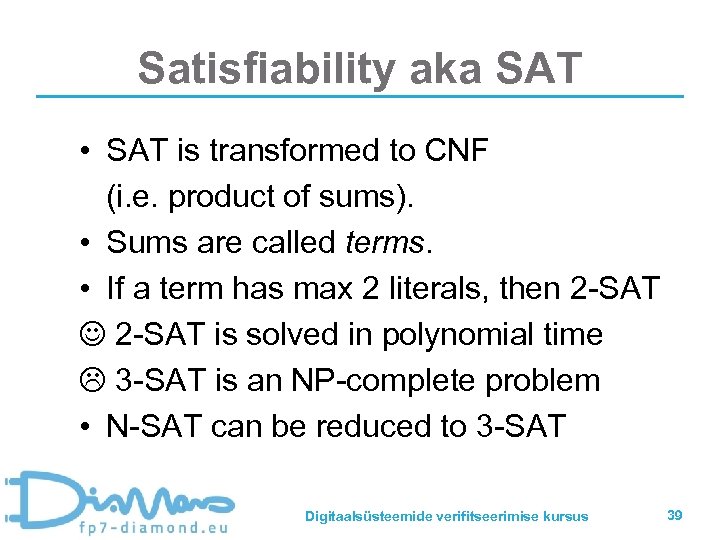

Satisfiability aka SAT

- The SAT instance is transformed to CNF (i.e. product of sums).
- The sums are called terms (clauses).
- If every term has at most 2 literals (2-SAT), the problem is solved in polynomial time.
- 3-SAT is an NP-complete problem.
- N-SAT can be reduced to 3-SAT.
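
The reduction from long clauses to 3-SAT can be sketched with the textbook clause-splitting trick: a clause with more than three literals is chained together with fresh auxiliary variables. The slide does not spell out the construction, so the code below is an assumption about what is meant; literals use DIMACS-style signed integers and the function name `split_clause` is hypothetical.

```python
def split_clause(clause, next_var):
    """Split one clause into equisatisfiable clauses of at most 3 literals.

    `clause` is a list of non-zero ints (negative = negated literal) and
    `next_var` is the first unused variable index for auxiliary variables.
    Returns the new clauses and the next unused variable index.
    """
    clause = list(clause)
    result = []
    while len(clause) > 3:
        aux = next_var
        next_var += 1
        # Keep two literals, link the rest through the auxiliary variable.
        result.append([clause[0], clause[1], aux])
        clause = [-aux] + clause[2:]
    result.append(clause)
    return result, next_var


clauses, unused = split_clause([1, 2, 3, 4, 5], next_var=6)
print(clauses)  # [[1, 2, 6], [-6, 3, 7], [-7, 4, 5]]
```

The result is equisatisfiable rather than equivalent: an assignment of the original variables satisfies the long clause exactly when some choice of the auxiliary variables satisfies all short clauses.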

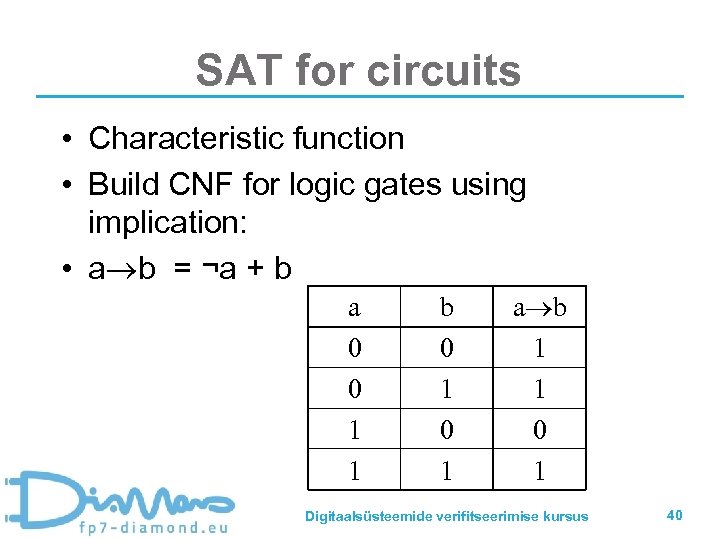

SAT for circuits

- Characteristic function
- Build CNF for logic gates using implication:
- a → b = ¬a + b

| a | b | a → b |
|---|---|---|
| 0 | 0 | 1 |
| 0 | 1 | 1 |
| 1 | 0 | 0 |
| 1 | 1 | 1 |

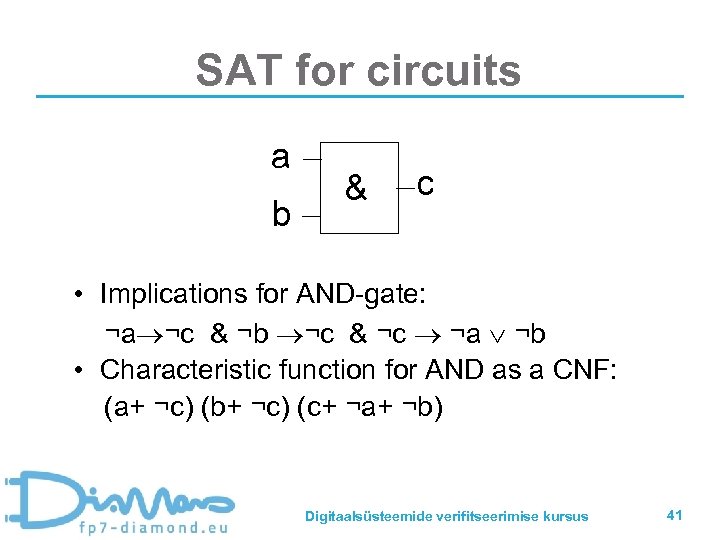

SAT for circuits

AND gate with inputs a, b and output c (c = a & b)

- Implications for AND-gate: (¬a → ¬c) & (¬b → ¬c) & (¬c → ¬a + ¬b)
- Characteristic function for AND as a CNF: (a + ¬c)(b + ¬c)(c + ¬a + ¬b)
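
That the three clauses really capture the AND gate can be checked by exhaustive enumeration. A minimal sketch (the function names are illustrative, not from the slides): the CNF must evaluate to true exactly for those assignments in which c equals a AND b.

```python
from itertools import product


def and_gate_cnf(a, b, c):
    """Characteristic function of c = a AND b, written as the slide's CNF."""
    return (a or not c) and (b or not c) and (c or not a or not b)


def check_and_gate():
    """True iff the CNF holds exactly for the assignments with c == (a and b)."""
    return all(
        and_gate_cnf(a, b, c) == (c == (a and b))
        for a, b, c in product([False, True], repeat=3)
    )


print(check_and_gate())  # True
```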

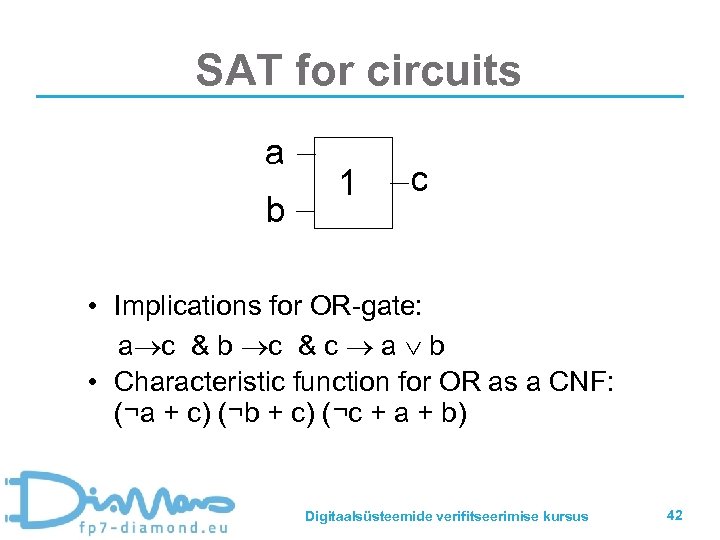

SAT for circuits

OR gate with inputs a, b and output c (c = a + b)

- Implications for OR-gate: (a → c) & (b → c) & (c → a + b)
- Characteristic function for OR as a CNF: (¬a + c)(¬b + c)(¬c + a + b)

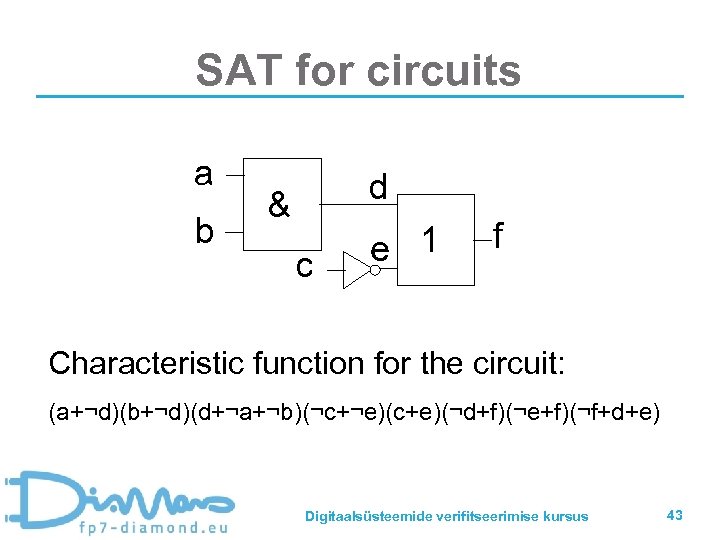

SAT for circuits

Circuit: d = a & b, e = ¬c, f = d + e

Characteristic function for the circuit: (a+¬d)(b+¬d)(d+¬a+¬b)(¬c+¬e)(c+e)(¬d+f)(¬e+f)(¬f+d+e)
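
To see the characteristic function at work, the sketch below enumerates its satisfying assignments by brute force (a real flow would hand the clauses to a SAT solver instead). The clause list is copied from the slide; the encoding of literals as `(name, polarity)` pairs and the helper `models` are assumptions made for this illustration.

```python
from itertools import product

# Clauses of the slide's characteristic function; a literal is (name, polarity).
CLAUSES = [
    [("a", 1), ("d", 0)], [("b", 1), ("d", 0)], [("d", 1), ("a", 0), ("b", 0)],  # d = a AND b
    [("c", 0), ("e", 0)], [("c", 1), ("e", 1)],                                  # e = NOT c
    [("d", 0), ("f", 1)], [("e", 0), ("f", 1)], [("f", 0), ("d", 1), ("e", 1)],  # f = d OR e
]
VARS = ["a", "b", "c", "d", "e", "f"]


def models(extra=()):
    """Enumerate assignments satisfying all clauses plus optional (name, value) constraints."""
    found = []
    for bits in product([0, 1], repeat=len(VARS)):
        asg = dict(zip(VARS, bits))
        clauses_ok = all(any(asg[v] == pol for v, pol in cl) for cl in CLAUSES)
        extra_ok = all(asg[v] == val for v, val in extra)
        if clauses_ok and extra_ok:
            found.append(asg)
    return found


print(len(models()))            # one model per input combination
print(models([("f", 0)]))       # input patterns that drive the output f to 0
```

Adding a unit constraint such as f = 0 turns the characteristic function into a query: every model that remains is an input pattern (with consistent internal values) producing that output.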

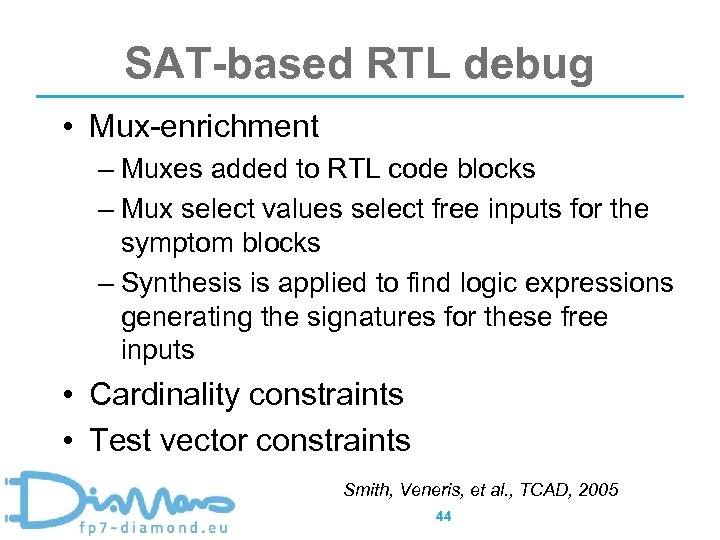

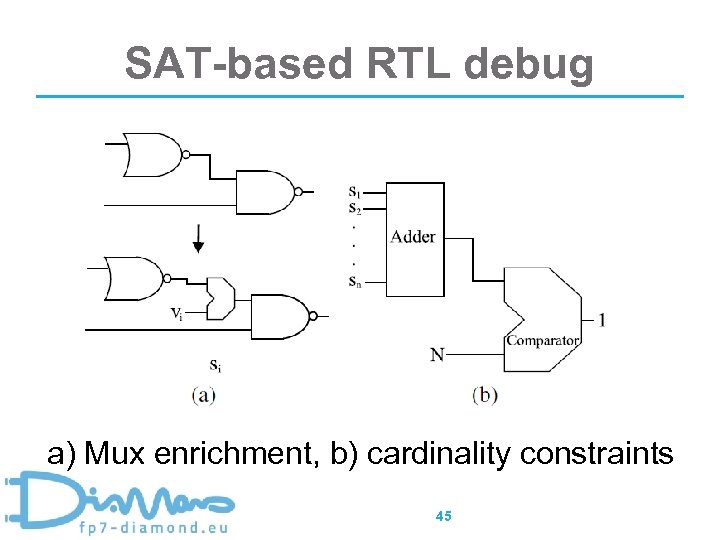

SAT-based RTL debug

- Mux-enrichment
  - Muxes added to RTL code blocks
  - Mux select values select free inputs for the symptom blocks
  - Synthesis is applied to find logic expressions generating the signatures for these free inputs
- Cardinality constraints
- Test vector constraints

Smith, Veneris, et al., TCAD, 2005

SAT-based RTL debug

Figure: a) mux enrichment, b) cardinality constraints

SAT-based RTL debug

- SAT provides the locations of signals where errors can be corrected
- Multiple errors are considered!
- The solver also provides the partial truth table of the fix
- Correction by resynthesis
- This is also a disadvantage:
  - Why should we want to replace a bug with a more difficult one?
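
The idea behind mux enrichment can be illustrated without a SAT solver on a toy circuit. In the sketch below, each gate output can be "freed" (as if a mux selected a free input instead of the gate), and a signal is a suspect if, for every test vector, some free value makes the output match the specification. Everything here is an assumption for illustration: the circuit, the injected bug, the test vectors, the single-error (cardinality 1) restriction, and the use of brute-force enumeration in place of the SAT formulation of Smith, Veneris, et al.

```python
# Toy implementation: each entry is (output signal, function, input signals),
# listed in topological order. The first gate is buggy (OR instead of AND).
GATES = [
    ("d", lambda a, b: a | b, ("a", "b")),
    ("e", lambda c: 1 - c, ("c",)),
    ("f", lambda d, e: d | e, ("d", "e")),
]


def spec(a, b, c):
    """Reference behaviour of the primary output f (assumed example)."""
    return (a & b) | (1 - c)


def simulate(inputs, freed=None, free_value=0):
    """Simulate the circuit; the freed signal takes `free_value` instead of its gate output."""
    values = dict(inputs)
    for out, fn, ins in GATES:
        values[out] = free_value if out == freed else fn(*(values[i] for i in ins))
    return values["f"]


def suspects(vectors):
    """Signals where a single correction can fix all vectors (cardinality 1)."""
    return [
        out for out, _, _ in GATES
        if all(any(simulate(v, out, x) == spec(**v) for x in (0, 1)) for v in vectors)
    ]


def partial_truth_table(vectors, signal):
    """For each vector, the values of the freed signal that repair the output."""
    return [
        (tuple(v.values()), [x for x in (0, 1) if simulate(v, signal, x) == spec(**v)])
        for v in vectors
    ]


VECTORS = [
    {"a": 1, "b": 0, "c": 1},
    {"a": 1, "b": 1, "c": 1},
    {"a": 0, "b": 0, "c": 0},
    {"a": 0, "b": 1, "c": 1},
]
print(suspects(VECTORS))                  # ['d', 'f']
print(partial_truth_table(VECTORS, "d"))
```

The partial truth table for `d` only constrains the fix on the given vectors, which is exactly why a resynthesized correction may hold for the applied stimuli and still be wrong elsewhere.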

Path tracing for localization

- One of the first debug methods
- Backtracing mismatched outputs (sometimes also matched outputs)
- Dynamic slicing → critical path tracing (RTL)
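
Backtracing from a mismatched output can be sketched as a graph traversal over fan-in edges. Note the simplification: the code below computes the static structural cone of an output, whereas dynamic slicing and critical path tracing additionally use simulated values to prune signals that did not influence the output for the given stimulus. The netlist and the name `backtrace_cone` are hypothetical.

```python
def backtrace_cone(fanin, output):
    """Collect every signal that can influence `output` by walking fan-in edges."""
    cone, stack = set(), [output]
    while stack:
        signal = stack.pop()
        if signal not in cone:
            cone.add(signal)
            stack.extend(fanin.get(signal, ()))
    return cone


# Hypothetical netlist: signal -> signals it is computed from.
FANIN = {"f": ["d", "e"], "d": ["a", "b"], "e": ["c"], "g": ["c"]}
print(sorted(backtrace_cone(FANIN, "f")))  # ['a', 'b', 'c', 'd', 'e', 'f']
```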

Mutation-based correction

- Locate error suspects by backtracing
- Correct by mutating the faulty block (replace by a different function from a preset library)
- An error-matching approach
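
A minimal sketch of the correction step, assuming the suspect block has already been located: each function from a preset library is tried in place of the faulty block, and a candidate is accepted if the design then passes all tests. The library contents, the example design and its specification are hypothetical.

```python
from itertools import product

# Preset library of replacement functions for a two-input block (hypothetical).
LIBRARY = {
    "and": lambda x, y: x & y,
    "or": lambda x, y: x | y,
    "xor": lambda x, y: x ^ y,
    "nand": lambda x, y: 1 - (x & y),
    "nor": lambda x, y: 1 - (x | y),
}


def spec(a, b, c):
    """Reference behaviour the design is verified against (assumed example)."""
    return (a & b) | (1 - c)


def design(a, b, c, block):
    """Implementation in which the suspected block computes the internal signal d."""
    d = block(a, b)
    return d | (1 - c)


def repair(current="or"):
    """Return the library functions that make the design pass all test vectors."""
    tests = list(product([0, 1], repeat=3))
    return [
        name for name, fn in LIBRARY.items()
        if name != current and all(design(*t, fn) == spec(*t) for t in tests)
    ]


print(repair())  # ['and']
```

Because the repair is a named library function rather than a resynthesized truth table, the suggested fix stays readable for the designer.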

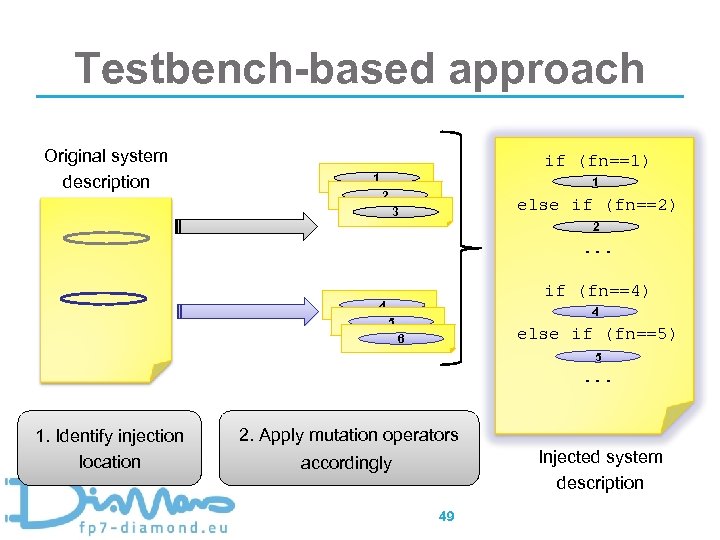

Testbench-based approach

Original system description → injected system description

Code fragments in the figure: if (fn==1) … else if (fn==2) … and if (fn==4) … else if (fn==5) …

1. Identify injection location
2. Apply mutation operators accordingly

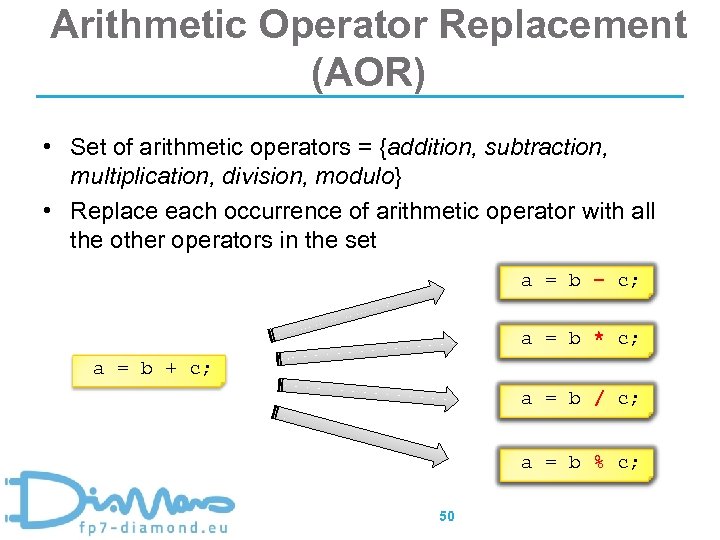

Arithmetic Operator Replacement (AOR)

- Set of arithmetic operators = {addition, subtraction, multiplication, division, modulo}
- Replace each occurrence of an arithmetic operator with all the other operators in the set

Example statements:

- a = b - c;
- a = b * c;
- a = b + c;
- a = b / c;
- a = b % c;
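
The AOR rule translates almost directly into code. The sketch below works on the statement text and is only an approximation of what a real mutation tool does: it assumes C-like operator symbols and does not distinguish unary minus, comments or string literals. The function name `aor_mutants` is hypothetical.

```python
import re

ARITHMETIC = ["+", "-", "*", "/", "%"]


def aor_mutants(stmt):
    """Replace each arithmetic operator occurrence with every other operator in the set."""
    mutants = []
    for match in re.finditer(r"[+\-*/%]", stmt):
        for op in ARITHMETIC:
            if op != match.group():
                mutants.append(stmt[:match.start()] + op + stmt[match.end():])
    return mutants


print(aor_mutants("a = b - c;"))
# ['a = b + c;', 'a = b * c;', 'a = b / c;', 'a = b % c;']
```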

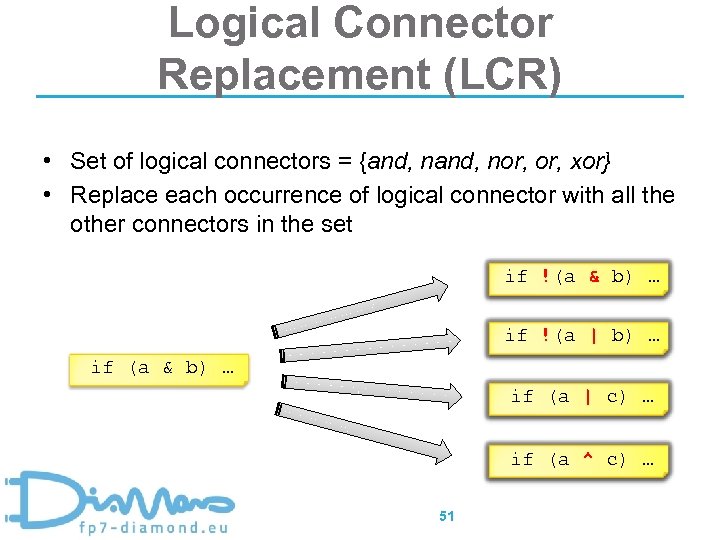

Logical Connector Replacement (LCR)

- Set of logical connectors = {and, nor, xor}
- Replace each occurrence of a logical connector with all the other connectors in the set

Example statements:

- if !(a & b) …
- if !(a | b) …
- if (a & b) …
- if (a | c) …
- if (a ^ c) …

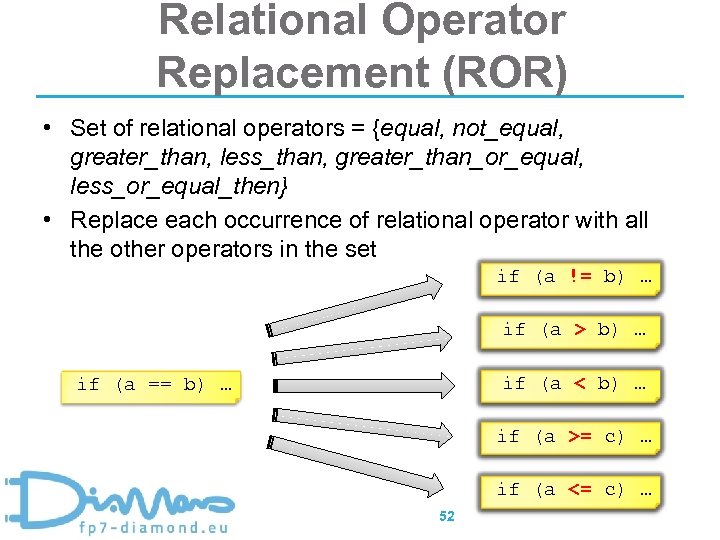

Relational Operator Replacement (ROR)

- Set of relational operators = {equal, not_equal, greater_than, less_than, greater_than_or_equal, less_than_or_equal}
- Replace each occurrence of a relational operator with all the other operators in the set

Example statements:

- if (a != b) …
- if (a > b) …
- if (a < b) …
- if (a == b) …
- if (a >= c) …
- if (a <= c) …
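
Relational operators need slightly more care than arithmetic ones because some symbols are prefixes of others (`>` versus `>=`). A minimal text-based sketch, assuming C-like syntax; the two-character operators are listed first so that the regular expression matches them before their one-character prefixes. The name `ror_mutants` is hypothetical.

```python
import re

# Two-character operators first, so ">=" is not matched as ">" followed by "=".
RELATIONAL = ["==", "!=", ">=", "<=", ">", "<"]
PATTERN = re.compile("|".join(re.escape(op) for op in RELATIONAL))


def ror_mutants(stmt):
    """Replace each relational operator occurrence with every other operator in the set."""
    mutants = []
    for match in PATTERN.finditer(stmt):
        for op in RELATIONAL:
            if op != match.group():
                mutants.append(stmt[:match.start()] + op + stmt[match.end():])
    return mutants


print(ror_mutants("if (a != b)"))
# ['if (a == b)', 'if (a >= b)', 'if (a <= b)', 'if (a > b)', 'if (a < b)']
```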

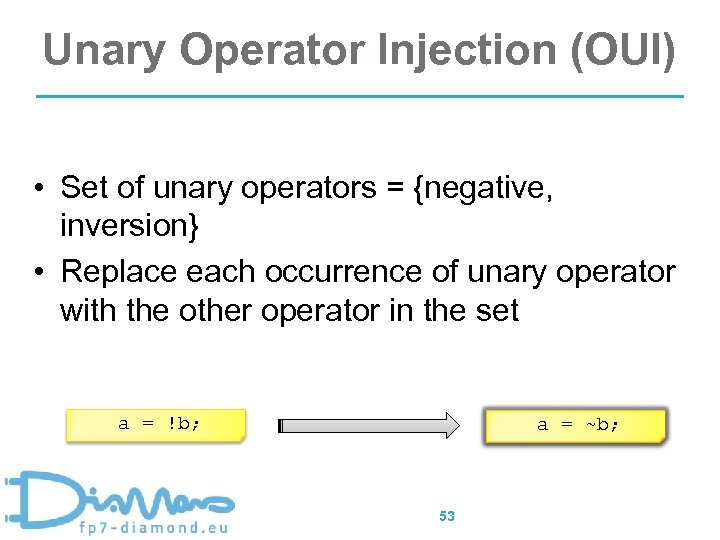

Unary Operator Injection (UOI)

- Set of unary operators = {negative, inversion}
- Replace each occurrence of a unary operator with the other operator in the set

Example statements:

- a = !b;
- a = ~b;

More mutation examples

- Constant value mutation
- Replacing signals with other signals
- Mutating control constructs
- ...

Approaches for SW & HW

- Vidroha Debroy and W. Eric Wong, Using Mutation to Automatically Suggest Fixes for Faulty Programs, Software Testing, Verification and Validation Conf., June 2010.
- Raik, J.; Repinski, U.; et al. High-level design error diagnosis using backtrace on decision diagrams. 28th Norchip Conference, 15-16 November 2010.

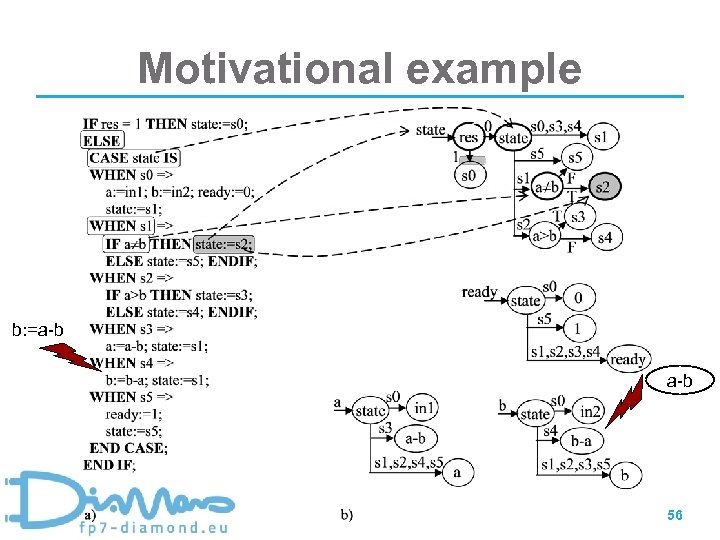

Motivational example

b := a - b

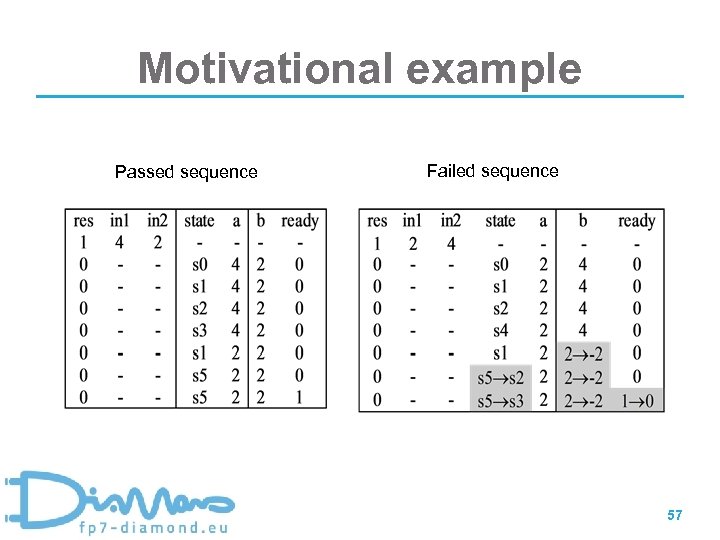

Motivational example

Figure panels: passed sequence, failed sequence

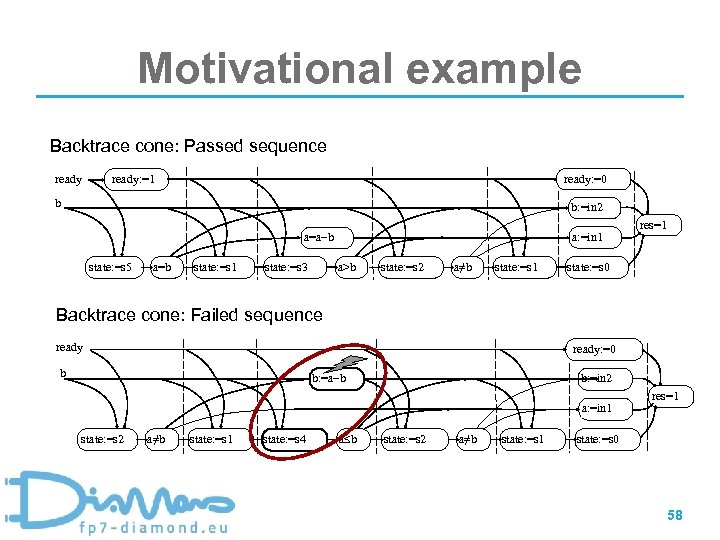

Motivational example

Figure: backtrace cones of the passed sequence and of the failed sequence, drawn over the design's assignments (such as ready:=0, ready:=1, a:=in1, b:=in2), state transitions (states s0 to s5) and conditions (a=b, a>b, a≠b, res=1)

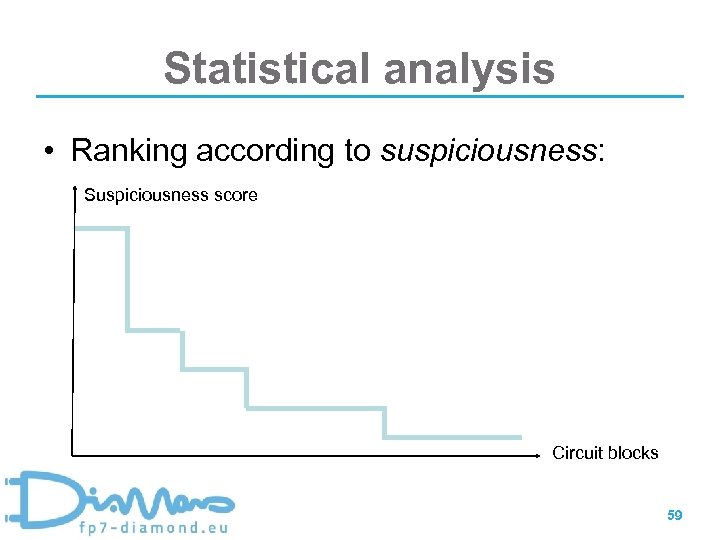

Statistical analysis

- Ranking according to suspiciousness

Chart: suspiciousness score per circuit block

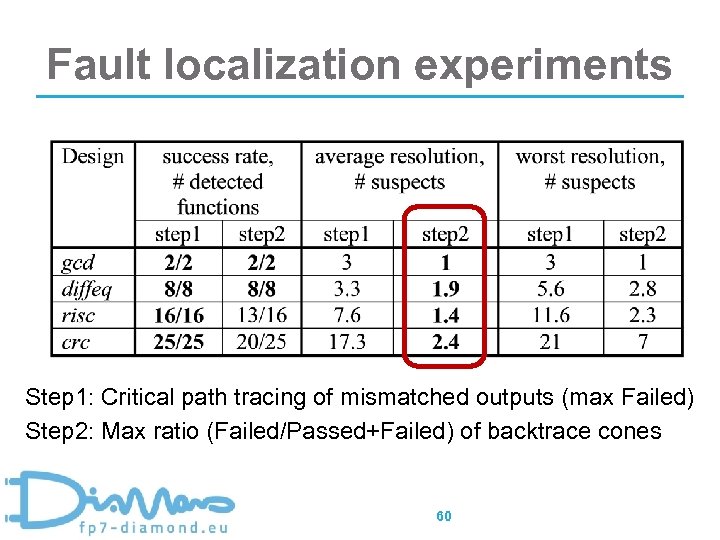

Fault localization experiments

- Step 1: Critical path tracing of mismatched outputs (max Failed)
- Step 2: Max ratio Failed/(Passed+Failed) of backtrace cones
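
One possible reading of the two steps is a ranking with two keys: blocks that appear in more failed backtrace cones come first, and ties are broken by the ratio Failed/(Passed+Failed). The sketch below implements that reading; the interpretation, the block names and the cone contents are assumptions, not data from the experiments.

```python
from collections import Counter


def rank_blocks(passed_cones, failed_cones):
    """Rank blocks by failed-cone count, then by Failed / (Passed + Failed)."""
    passed = Counter(b for cone in passed_cones for b in set(cone))
    failed = Counter(b for cone in failed_cones for b in set(cone))
    scored = [
        (block, failed[block], failed[block] / (passed[block] + failed[block]))
        for block in failed
    ]
    # Step 1 first (more failed cones), Step 2 breaks ties (higher ratio).
    return sorted(scored, key=lambda s: (-s[1], -s[2], s[0]))


# Hypothetical backtrace cones, each a list of block names.
PASSED = [["B2", "B3"], ["B2"]]
FAILED = [["B1", "B2", "B3"], ["B1", "B2"]]
for block, n_failed, ratio in rank_blocks(PASSED, FAILED):
    print(block, n_failed, round(ratio, 2))
```

In this example B1 and B2 both appear in every failed cone, but only B1 is absent from all passed cones, so the ratio singles it out as the top suspect.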

Advantages & open questions

- Mutation-based repair is readable
- Helps keep the user in the loop
- Provides a „global“ repair, for all stimuli
- How does this backtracing-based method perform in the case of multiple errors?
- What would be a good fault model for high-level design errors?

Future trends

- The quality of localization and correction depends on the input stimuli
- Thus, diagnostic test generation is needed
- Readable, small corrections are preferred:
  - A correction normally holds only with respect to the given input vectors (e.g. resynthesis)
  - Why should we replace an easily detectable bug with a more difficult one?!

Idea: HLDD-based correction

- A canonical form of high-level decision diagrams (HLDD) using characteristic polynomials
- It allows a fast probabilistic proof of equivalence of two different designs.
- Idea: extend it towards correction

Prototype tools, activities

FP7 Project DIAMOND

- Start January 2010, duration 3 years
- Total budget 3.8 M€
  - EU contribution 2.9 M€
- Effort 462.5 PM

The IBM logo is a registered trademark of International Business Machines Corporation (IBM) in the United States and other countries.

DIAMOND Kick-off, Tallinn, February 2-3, 2010

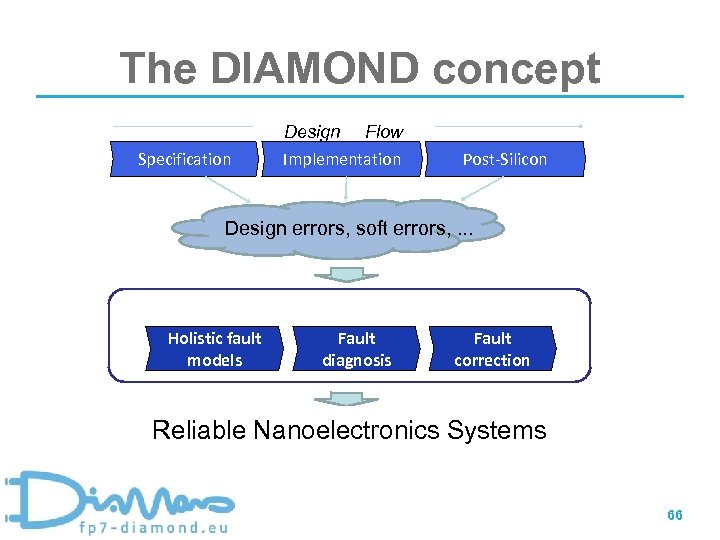

The DIAMOND concept

Diagram labels: Design Flow (Specification, Implementation, Post-Silicon); design errors, soft errors, ...; holistic fault models; fault diagnosis; fault correction; Reliable Nanoelectronics Systems

FORENSIC

- FoREnSiC: Formal Repair Engine for Simple C
- For debugging system-level HW
- Idea by TUG, UNIB and TUT at DATE’10
- Front-end converting simple C descriptions to flowchart model completed
- 1st release expected by the end of 2011

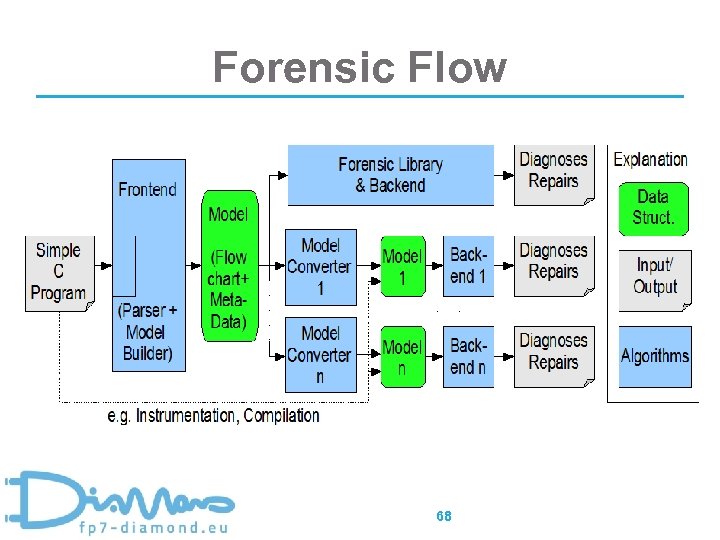

Forensic Flow

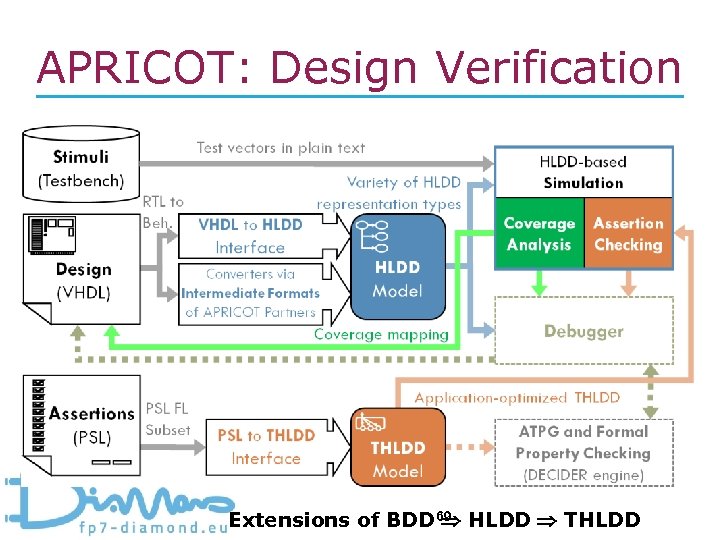

APRICOT: Design Verification

Extensions of BDD: HLDD, THLDD

APriCoT Verification System

- Assertion/Property checkIng, Code coverage & Test generation
- The tools run on a uniform design model based on high-level decision diagrams.
- The functionality currently includes
  - test generation,
  - code coverage analysis,
  - assertion-checking,
  - mutation analysis and
  - design error localization

ZamiaCAD: IDE for HW Design

- ZamiaCAD is an Eclipse-based development environment for hardware designs
- Design entry
- Analysis
- Navigation
- Simulation
- Scalable!
- Co-operation with IBM Germany, R. Dorsch

To probe further...

Functional Design Errors in Digital Circuits: Diagnosis, Correction and Repair. K. H. Chang, I. L. Markov, V. Bertacco... Publisher: Springer. Pub Date: 2009
