
- Number of slides: 39

t-tests, ANOVAs & Regression and their application to the statistical analysis of neuroimaging

Carles Falcon & Suz Prejawa


OVERVIEW

- Part 1
  - Basics, populations and samples
  - t-tests
  - ANOVA
  - Beware!
  - Summary
- Part 2


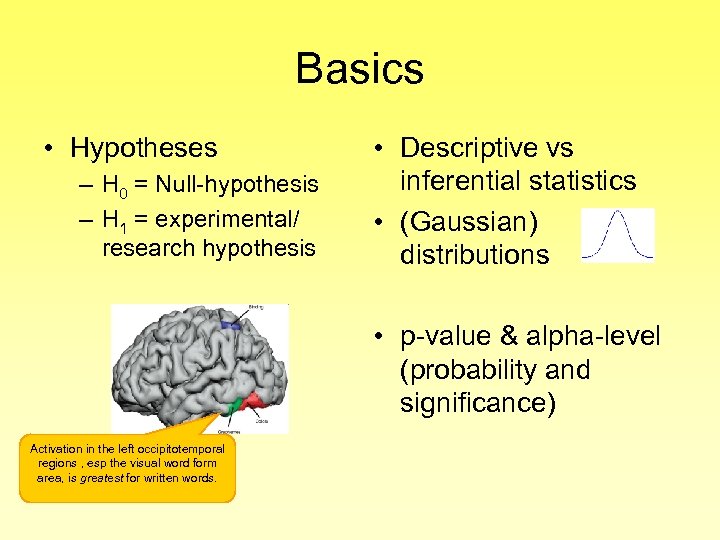

Basics

- Hypotheses
  - H0 = null hypothesis
  - H1 = experimental/research hypothesis
- Descriptive vs inferential statistics
- (Gaussian) distributions
- p-value & alpha level (probability and significance)

Example: activation in the left occipitotemporal regions, especially the visual word form area (VWFA), is greatest for written words.


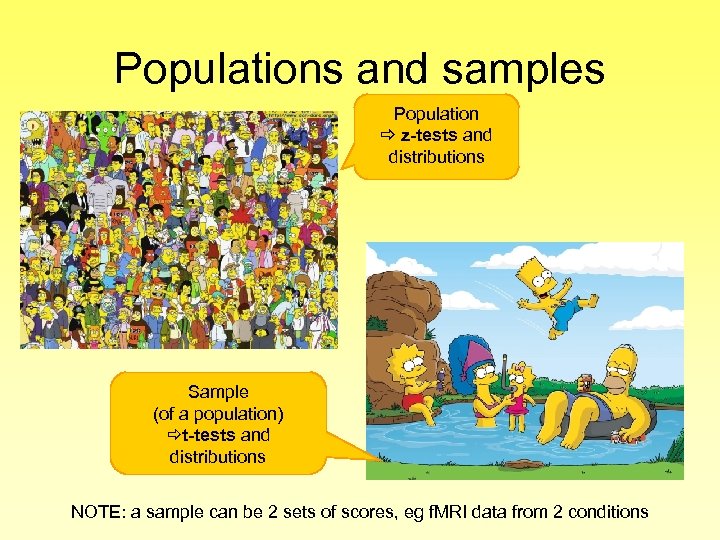

Populations and samples

- Population: z-tests and distributions
- Sample (of a population): t-tests and distributions

NOTE: a sample can be 2 sets of scores, e.g. fMRI data from 2 conditions.


Comparison between samples

Are these groups different?


Comparison between conditions (fMRI)

- Reading aloud (script) vs "reading" finger spelling (sign)
- Reading aloud vs picture naming


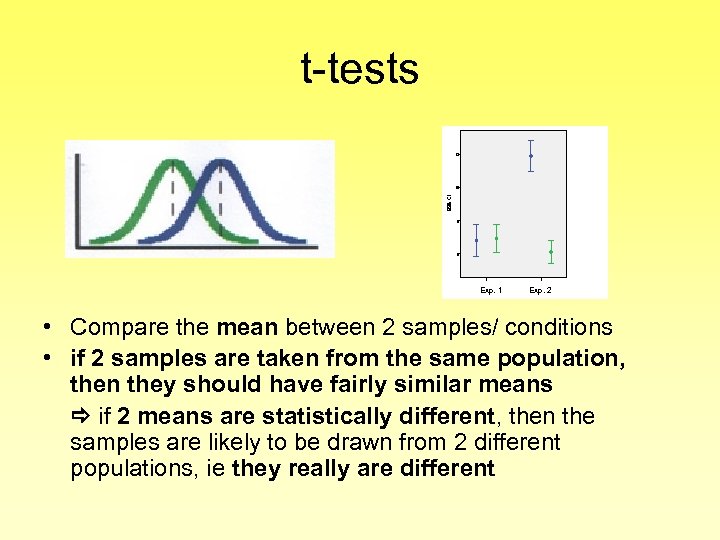

t-tests

[Figure: 95% CI plots for Exp. 1 and Exp. 2; labels: left hemisphere, right hemisphere, lesion site]

- Compare the means of 2 samples/conditions
- If 2 samples are taken from the same population, they should have fairly similar means
- If 2 means are statistically different, the samples are likely to be drawn from 2 different populations, i.e. they really are different


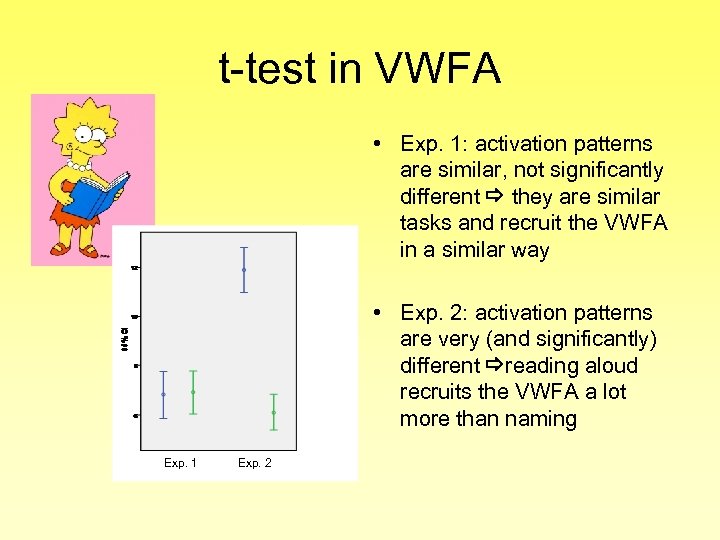

t-test in VWFA

- Exp. 1: activation patterns are similar, not significantly different, so these are similar tasks that recruit the VWFA in a similar way
- Exp. 2: activation patterns are very (and significantly) different, so reading aloud recruits the VWFA a lot more than naming


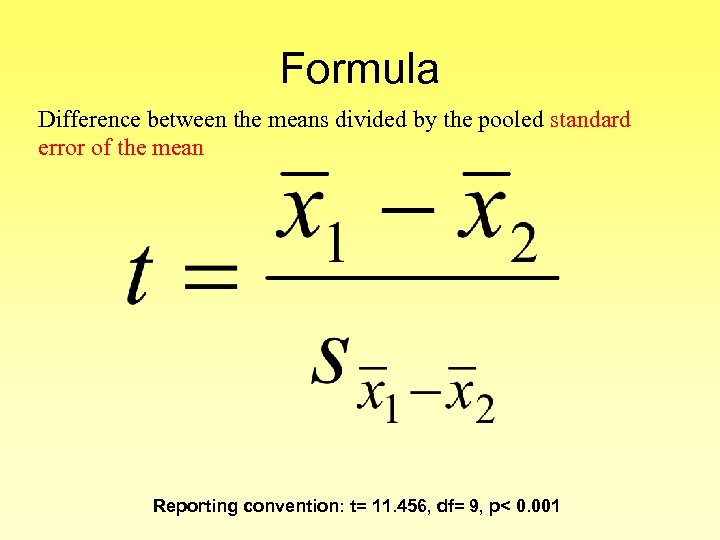

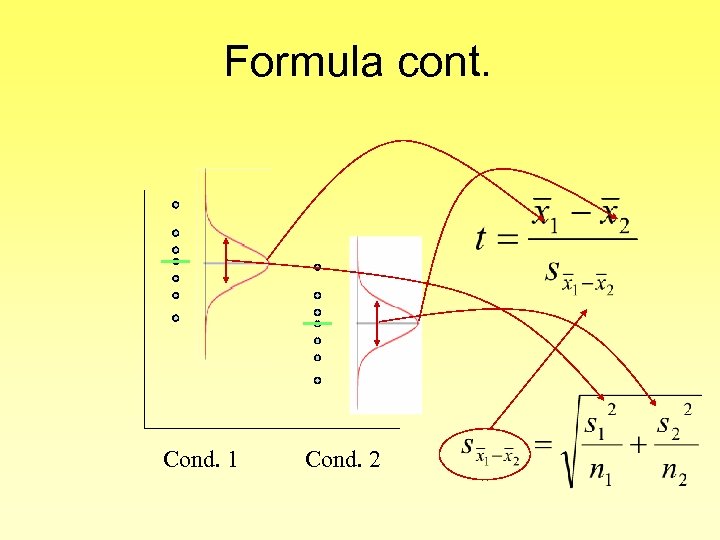

Formula

Difference between the means divided by the pooled standard error of the mean:

$$t = \frac{\bar{x}_1 - \bar{x}_2}{SE_{\text{pooled}}}$$

Reporting convention: t = 11.456, df = 9, p < 0.001
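
The formula can be sketched in a few lines of Python for the independent-samples case. This is a minimal illustration, assuming equal-variance pooling; the function name `pooled_t` and the data values are made up for the example and are not from the slides.

```python
import math
from statistics import mean, variance

def pooled_t(sample_1, sample_2):
    """Independent-samples t statistic with a pooled variance estimate."""
    n1, n2 = len(sample_1), len(sample_2)
    # Pooled variance: average of the two sample variances, weighted by df
    pooled_var = ((n1 - 1) * variance(sample_1)
                  + (n2 - 1) * variance(sample_2)) / (n1 + n2 - 2)
    # Standard error of the difference between the two means
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    t = (mean(sample_1) - mean(sample_2)) / se
    return t, n1 + n2 - 2  # t statistic and degrees of freedom

# Made-up values standing in for activation in two conditions
cond_1 = [2.0, 4.0, 6.0, 8.0]
cond_2 = [1.0, 2.0, 3.0, 4.0]
t_value, df = pooled_t(cond_1, cond_2)
print(f"t = {t_value:.3f}, df = {df}")
```

The p-value would then be read from a t distribution with `df` degrees of freedom, which a statistics package normally does for you.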


Formula cont. Cond. 1 Cond. 2


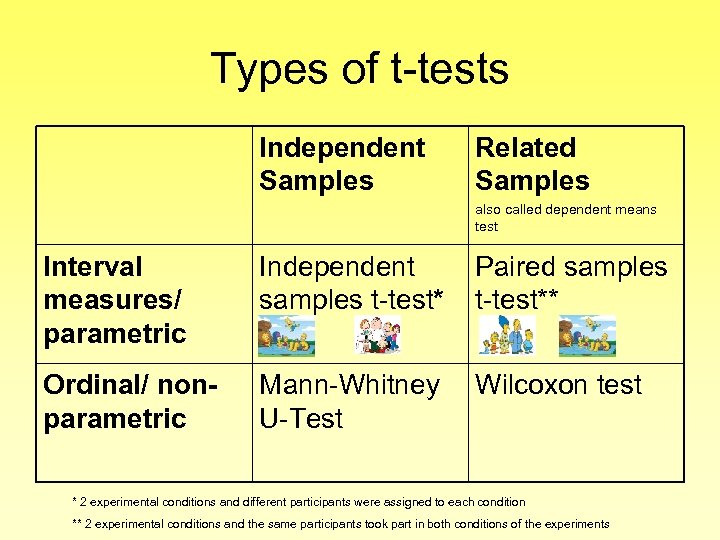

Types of t-tests

| | Independent samples | Related samples (also called dependent means test) |
|---|---|---|
| Interval measures / parametric | Independent samples t-test\* | Paired samples t-test\*\* |
| Ordinal / nonparametric | Mann-Whitney U-test | Wilcoxon test |

\* 2 experimental conditions, and different participants were assigned to each condition

\*\* 2 experimental conditions, and the same participants took part in both conditions of the experiment
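
For the related-samples case, a paired t-test can be sketched as a one-sample t-test on the within-participant differences. The function name `paired_t` and the numbers below are illustrative assumptions, not data from the slides.

```python
import math
from statistics import mean, stdev

def paired_t(cond_1, cond_2):
    """Paired-samples t statistic: a one-sample t-test on the differences."""
    if len(cond_1) != len(cond_2):
        raise ValueError("paired samples need one score per participant in each condition")
    diffs = [a - b for a, b in zip(cond_1, cond_2)]
    n = len(diffs)
    # Standard error of the mean difference (sample SD uses the n - 1 divisor)
    se = stdev(diffs) / math.sqrt(n)
    return mean(diffs) / se, n - 1  # t statistic and degrees of freedom

# Made-up scores: the same four participants in both conditions
reading = [5.0, 7.0, 6.0, 8.0]
naming = [4.0, 5.0, 3.0, 4.0]
t_paired, df_paired = paired_t(reading, naming)
print(f"t = {t_paired:.3f}, df = {df_paired}")
```

Because each participant acts as their own control, between-participant variability drops out of the standard error, which is why the paired test is the one to use when the same people take part in both conditions.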


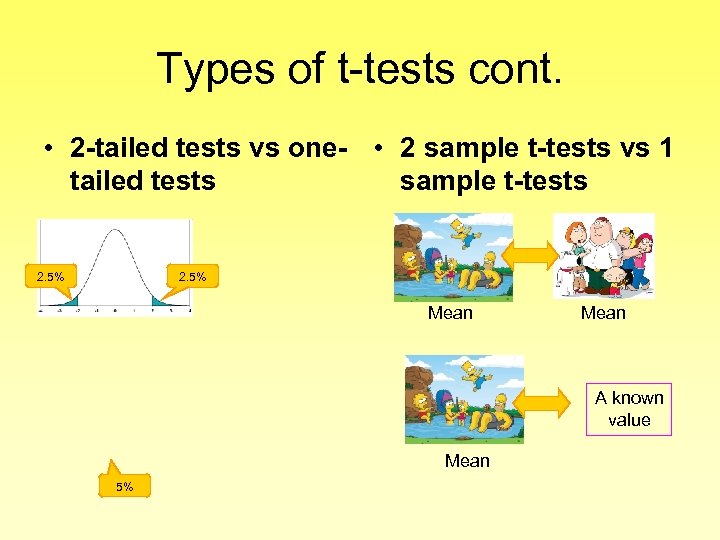

Types of t-tests cont.

- 2-tailed tests vs one-tailed tests
- 2-sample t-tests vs 1-sample t-tests

[Figure labels: 2.5%, 5%, Mean, A known value]


Comparison of more than 2 samples

Tell me the difference between these groups…

Thank God I have ANOVA


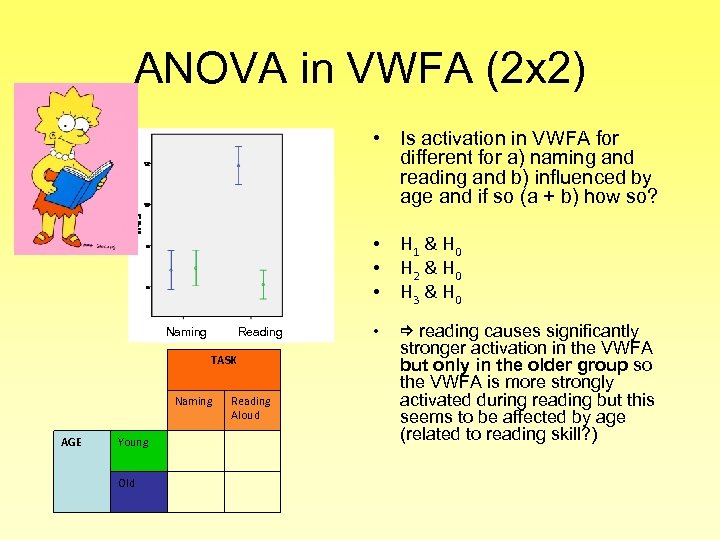

ANOVA in VWFA (2 x 2)

- Is activation in the VWFA a) different for naming and reading, b) influenced by age, and if so (a + b), how?
  - H1 & H0
  - H2 & H0
  - H3 & H0

[Figure: 2 x 2 design, TASK (naming, reading aloud) by AGE (young, old)]

- Reading causes significantly stronger activation in the VWFA, but only in the older group. So the VWFA is more strongly activated during reading, but this seems to be affected by age (related to reading skill?)


ANOVA

- ANalysis Of VAriance (ANOVA)
  - Still compares the differences in means between groups, but it uses the variance of the data to "decide" if the means are different
- Terminology (factors and levels)
- F-statistic
  - Magnitude of the difference between the different conditions
  - The p-value associated with F is the probability that differences between groups at least this large could occur by chance if the null hypothesis is correct
  - Need for post-hoc testing (ANOVA can tell you if there is an effect but not where)

Reporting convention: F = 65.58, df = 4, 45, p < .001
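
How F "uses the variance" can be sketched for the simplest case, a one-way ANOVA with independent groups. The function name `one_way_anova` and the data are illustrative assumptions; factorial designs such as the 2 x 2 example need additional terms for each factor and the interaction.

```python
from statistics import mean

def one_way_anova(groups):
    """F statistic for a one-way, independent-groups ANOVA."""
    all_values = [v for g in groups for v in g]
    grand_mean = mean(all_values)
    k, n_total = len(groups), len(all_values)
    # Between-group variability: how far each group mean lies from the grand mean
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group variability: spread of scores around their own group mean
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    df_between, df_within = k - 1, n_total - k
    # F is the ratio of the two mean squares
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within

# Made-up scores for three groups
groups = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [5.0, 6.0, 7.0]]
f_value, df_b, df_w = one_way_anova(groups)
print(f"F = {f_value:.2f}, df = {df_b}, {df_w}")
```

In a one-way design, the two df values in the reporting convention are the number of groups minus 1 and the number of observations minus the number of groups.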


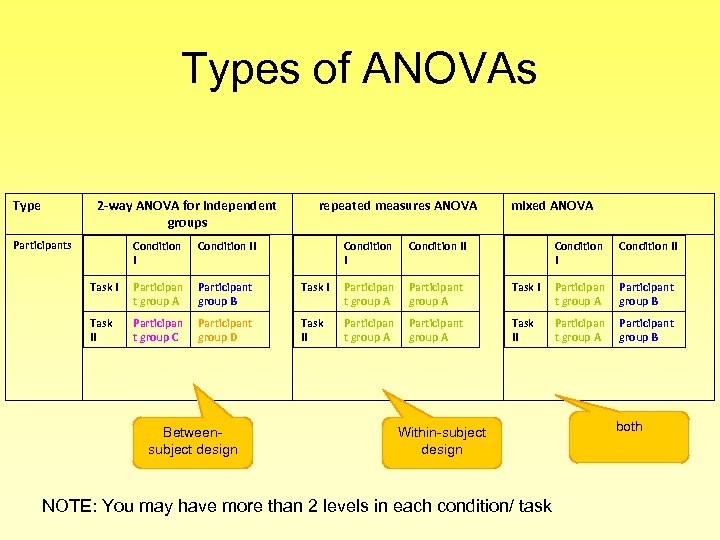

Types of ANOVAs

| Type | Task | Condition I | Condition II | Design |
|---|---|---|---|---|
| 2-way ANOVA for independent groups | Task I | Participant group A | Participant group B | Between-subject design |
| | Task II | Participant group C | Participant group D | |
| Repeated measures ANOVA | Task I | Participant group A | Participant group A | Within-subject design |
| | Task II | Participant group A | Participant group A | |
| Mixed ANOVA | Task I | Participant group A | Participant group B | Both |
| | Task II | Participant group A | Participant group B | |

NOTE: You may have more than 2 levels in each condition/task.


BEWARE!

- Errors
  - Type I: false positives
  - Type II: false negatives
- Multiple comparison problem, especially prominent in fMRI
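
The size of the multiple comparison problem can be sketched with a short calculation. It assumes independent tests, which is a simplification (neighbouring voxels are correlated), and it uses the Bonferroni correction as one simple remedy that the slides do not name; the function names are made up for the example.

```python
def familywise_error_rate(alpha, n_tests):
    """Probability of at least one false positive across n independent tests."""
    return 1 - (1 - alpha) ** n_tests

def bonferroni_threshold(alpha, n_tests):
    """Per-test alpha that keeps the family-wise error rate at or below alpha."""
    return alpha / n_tests

alpha = 0.05
for n_tests in (1, 10, 100):
    fwer = familywise_error_rate(alpha, n_tests)
    threshold = bonferroni_threshold(alpha, n_tests)
    print(f"{n_tests:>4} tests: FWER = {fwer:.3f}, Bonferroni threshold = {threshold:.5f}")
```

With one test per voxel, the number of tests runs into the tens of thousands, so an uncorrected alpha of 0.05 all but guarantees false positives somewhere in the brain.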


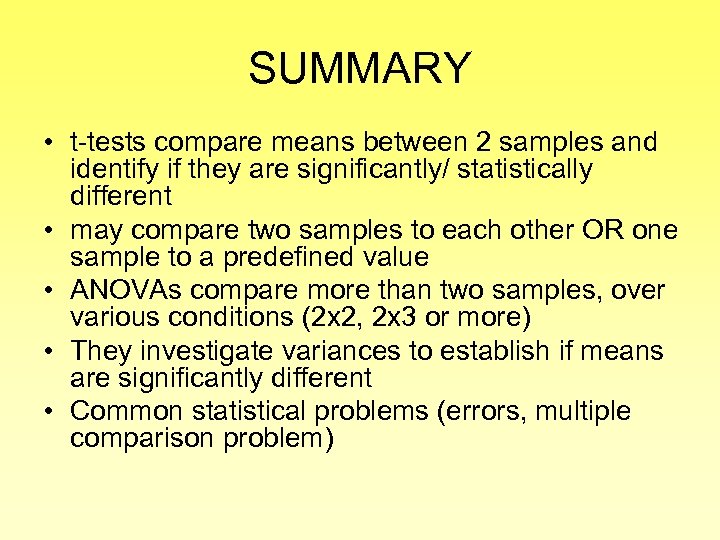

SUMMARY

- t-tests compare means between 2 samples and identify whether they are significantly (statistically) different
- They may compare two samples to each other OR one sample to a predefined value
- ANOVAs compare more than two samples, over various conditions (2 x 2, 2 x 3 or more)
- They investigate variances to establish whether means are significantly different
- Common statistical problems (errors, multiple comparison problem)


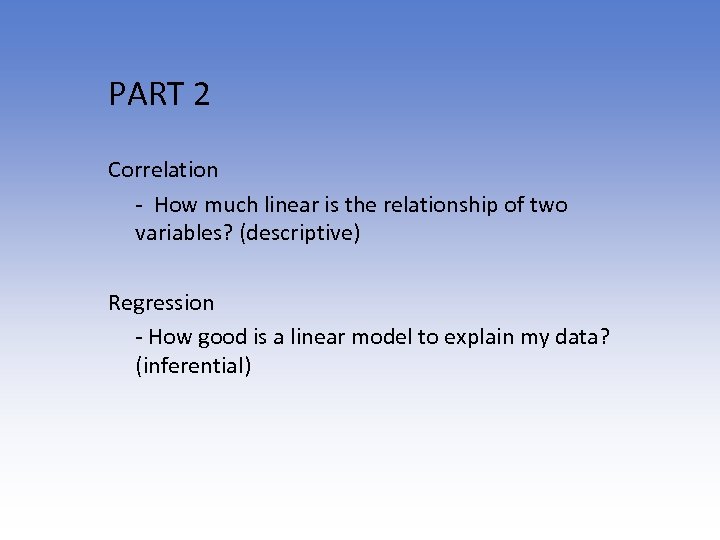

PART 2

- Correlation: how linear is the relationship between two variables? (descriptive)
- Regression: how well does a linear model explain my data? (inferential)


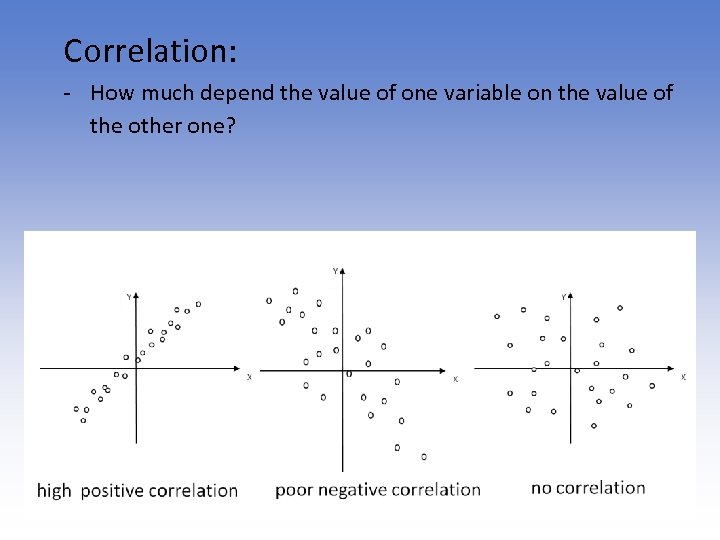

Correlation:

- How much does the value of one variable depend on the value of the other?


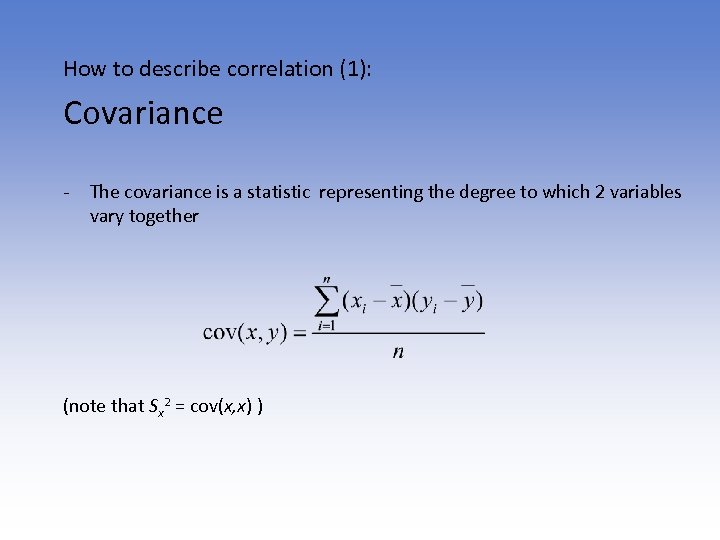

How to describe correlation (1): Covariance

- The covariance is a statistic representing the degree to which 2 variables vary together (note that $S_x^2 = \mathrm{cov}(x, x)$)


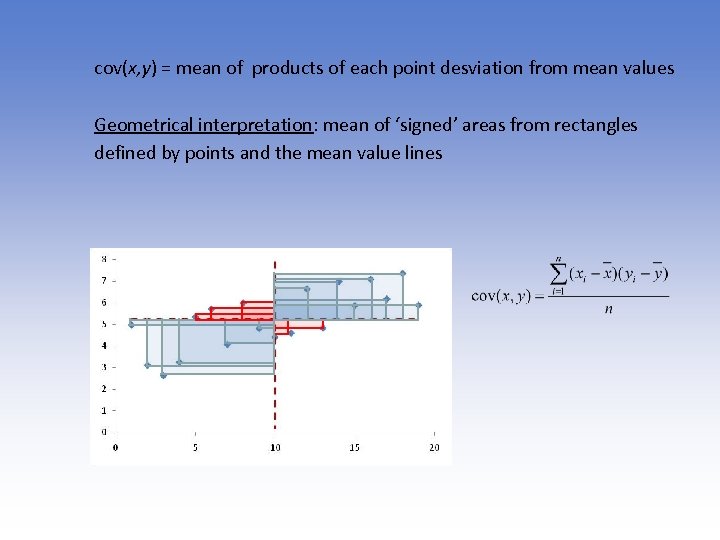

cov(x, y) = mean of the products of each point's deviations from the mean values

Geometrical interpretation: mean of the 'signed' areas of the rectangles defined by the points and the mean-value lines
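
The "mean of products of deviations" definition can be sketched directly. The divisor n is an assumption taken from the word "mean"; many packages divide by n - 1 instead. The function name `covariance` and the data are made up for the example.

```python
def covariance(x, y):
    """Mean of the products of each point's deviations from the two means."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    # Each product is the signed area of the rectangle between a point
    # and the two mean-value lines
    return sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y)) / n

x = [1.0, 2.0, 3.0, 4.0]
y = [2.0, 4.0, 6.0, 8.0]
print(covariance(x, y))        # positive: x and y rise together
print(covariance(x, y[::-1]))  # negative: y falls as x rises
print(covariance(x, x))        # the variance of x
```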


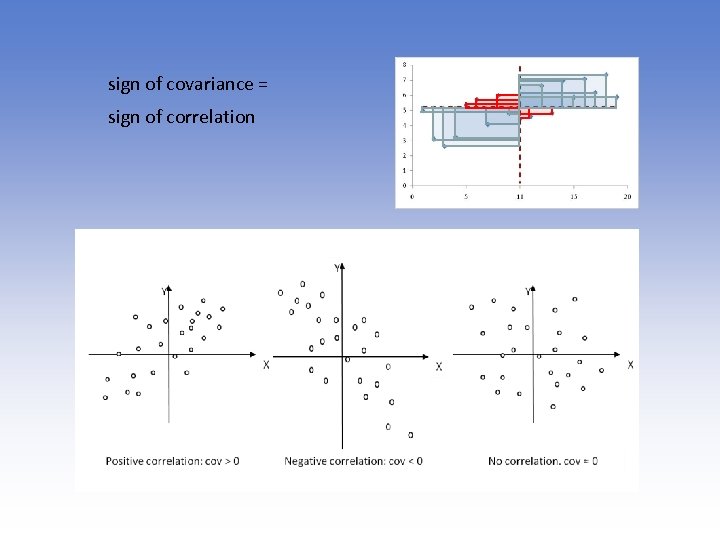

sign of covariance = sign of correlation


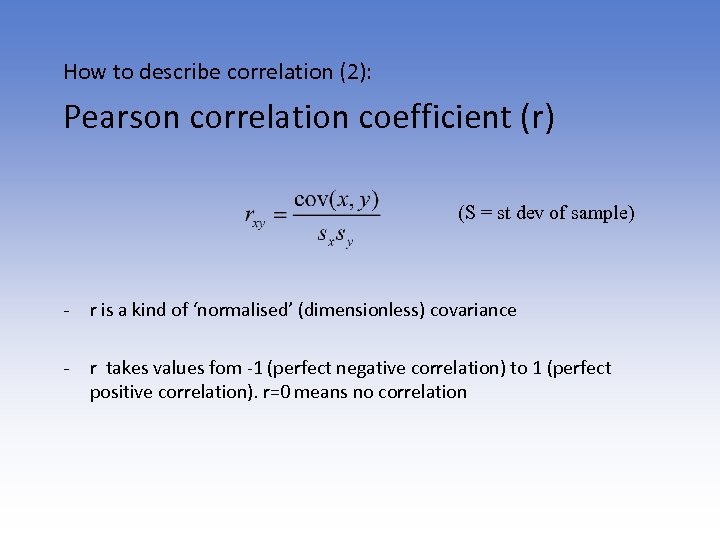

How to describe correlation (2): Pearson correlation coefficient (r)

$$r = \frac{\mathrm{cov}(x, y)}{S_x S_y}$$

(S = standard deviation of the sample)

- r is a kind of 'normalised' (dimensionless) covariance
- r takes values from -1 (perfect negative correlation) to 1 (perfect positive correlation); r = 0 means no linear correlation
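
As a sketch of the 'normalised covariance' idea, r can be computed from sums of squared deviations. The divisor (n or n - 1) cancels as long as the covariance and both standard deviations use the same one. The function name `pearson_r` and the data are illustrative assumptions.

```python
import math

def pearson_r(x, y):
    """Pearson correlation: covariance divided by the product of the SDs."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    s_xy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    s_xx = sum((xi - mean_x) ** 2 for xi in x)
    s_yy = sum((yi - mean_y) ** 2 for yi in y)
    # The common divisor of cov, S_x and S_y cancels, so raw sums suffice
    return s_xy / math.sqrt(s_xx * s_yy)

x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 1.0, 4.0, 3.0, 5.0]
r = pearson_r(x, y)
print(f"r = {r:.2f}")
```

Rescaling either variable (for example, changing its units) changes the covariance but leaves r unchanged, which is what "dimensionless" means here.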


Pearson correlation coefficient (r)

Problems:

- It is sensitive to outliers
- r is an estimate from the sample, but does it represent the population parameter?


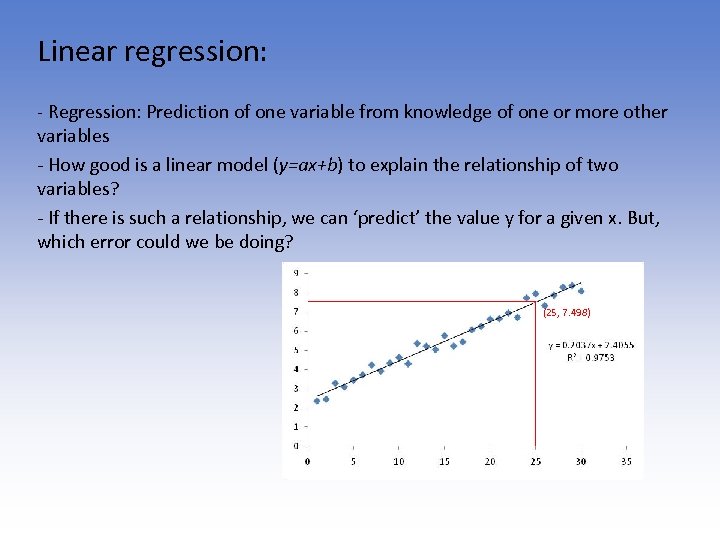

Linear regression:

- Regression: prediction of one variable from knowledge of one or more other variables
- How good is a linear model (y = ax + b) at explaining the relationship between two variables?
- If there is such a relationship, we can 'predict' the value of y for a given x. But how large an error might we be making?

[Figure label: point (25, 7.498)]


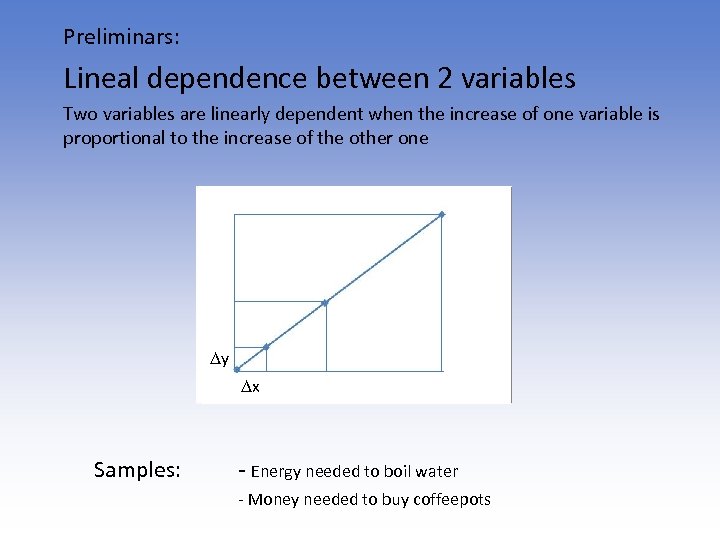

Preliminaries: linear dependence between 2 variables

Two variables are linearly dependent when the increase in one variable is proportional to the increase in the other.

Examples:

- Energy needed to boil water
- Money needed to buy coffeepots


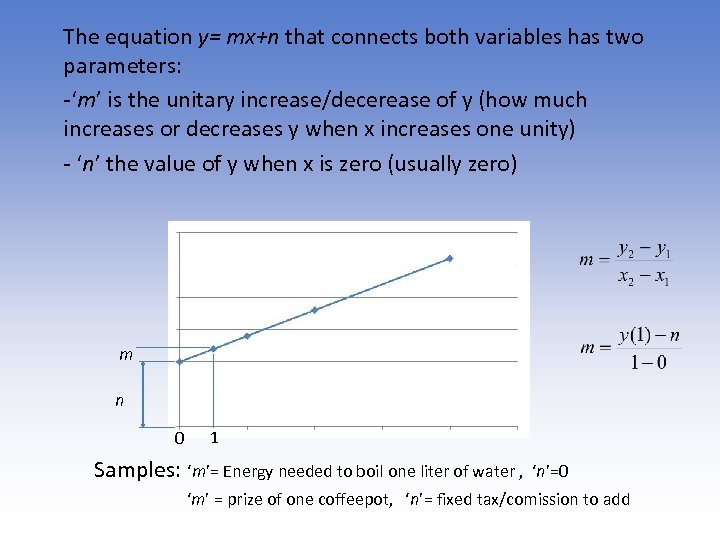

The equation y = mx + n that connects both variables has two parameters:

- 'm' is the change in y per unit of x (how much y increases or decreases when x increases by one unit)
- 'n' is the value of y when x is zero (often zero)

Examples:

- 'm' = energy needed to boil one litre of water, 'n' = 0
- 'm' = price of one coffeepot, 'n' = fixed tax/commission to add


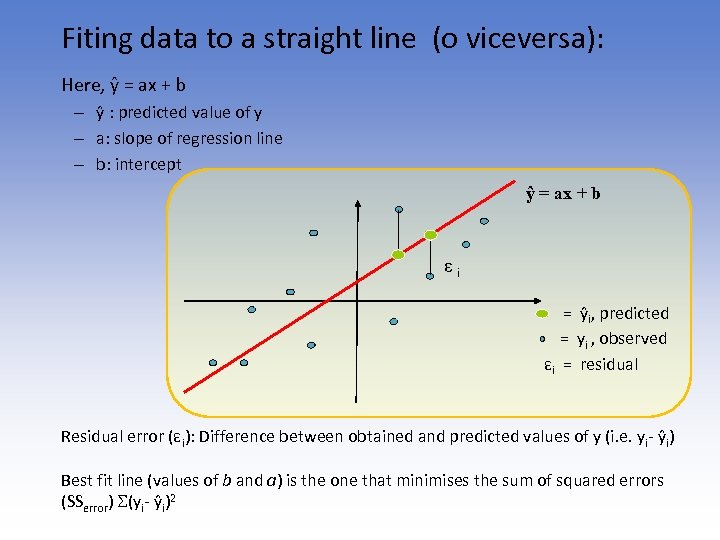

Fitting data to a straight line (or vice versa):

Here, $\hat{y} = ax + b$

- $\hat{y}$: predicted value of y
- a: slope of the regression line
- b: intercept

[Figure labels: $\hat{y}_i$ = predicted, $y_i$ = observed, $\varepsilon_i$ = residual]

Residual error ($\varepsilon_i$): difference between the obtained and predicted values of y, i.e. $\varepsilon_i = y_i - \hat{y}_i$

The best-fit line (values of a and b) is the one that minimises the sum of squared errors: $SS_{error} = \sum_i (y_i - \hat{y}_i)^2$


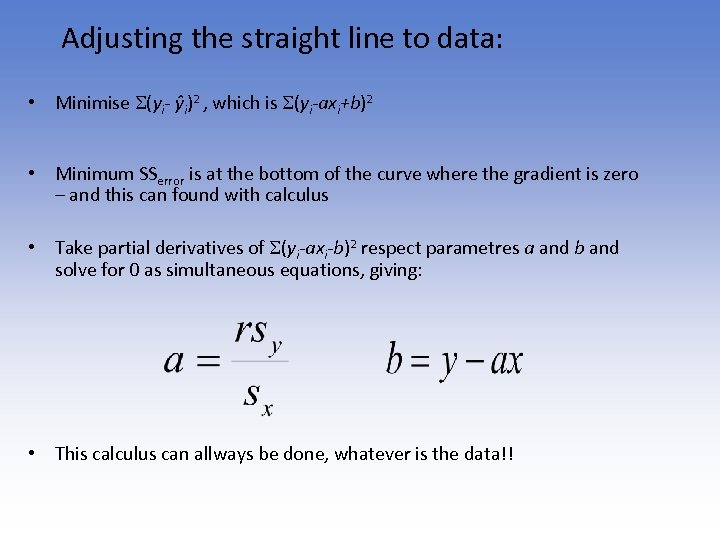

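Adjusting the straight line to data:

- Minimise $\sum_i (y_i - \hat{y}_i)^2$, which is $\sum_i (y_i - a x_i - b)^2$
- The minimum $SS_{error}$ is at the bottom of the curve, where the gradient is zero, and this can be found with calculus
- Take the partial derivatives of $\sum_i (y_i - a x_i - b)^2$ with respect to the parameters a and b, set them to 0 and solve as simultaneous equations, giving:

$$a = \frac{\mathrm{cov}(x, y)}{S_x^2} = r\,\frac{S_y}{S_x}, \qquad b = \bar{y} - a\,\bar{x}$$

- This calculation can always be done, whatever the data (as long as the x values are not all equal)!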
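
The closed-form least-squares solution can be sketched as follows. The function names `fit_line` and `ss_error` and the data are made up for the example; the last two lines check numerically that nudging the fitted slope only increases the squared error.

```python
def fit_line(x, y):
    """Least-squares slope a and intercept b for y_hat = a*x + b."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    s_xy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    s_xx = sum((xi - mean_x) ** 2 for xi in x)
    a = s_xy / s_xx          # slope: cov(x, y) / var(x); fails if x is constant
    b = mean_y - a * mean_x  # the line passes through the point of means
    return a, b

def ss_error(x, y, a, b):
    """Sum of squared residuals for the line y_hat = a*x + b."""
    return sum((yi - (a * xi + b)) ** 2 for xi, yi in zip(x, y))

x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 1.0, 4.0, 3.0, 5.0]
a, b = fit_line(x, y)
print(f"y_hat = {a:.2f} x + {b:.2f}")
print(ss_error(x, y, a, b) < ss_error(x, y, a + 0.1, b))
print(ss_error(x, y, a, b) < ss_error(x, y, a - 0.1, b))
```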


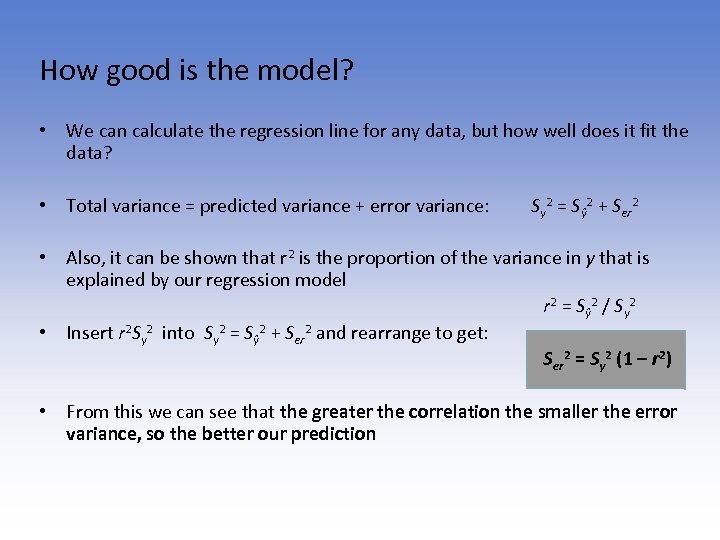

How good is the model?

- We can calculate the regression line for any data, but how well does it fit the data?
- Total variance = predicted variance + error variance: $S_y^2 = S_{\hat{y}}^2 + S_{er}^2$
- Also, it can be shown that $r^2$ is the proportion of the variance in y that is explained by our regression model: $r^2 = S_{\hat{y}}^2 / S_y^2$
- Insert $S_{\hat{y}}^2 = r^2 S_y^2$ into $S_y^2 = S_{\hat{y}}^2 + S_{er}^2$ and rearrange to get: $S_{er}^2 = S_y^2 (1 - r^2)$
- From this we can see that the greater the correlation, the smaller the error variance, and so the better our prediction
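
The variance decomposition can be checked numerically on a small made-up data set. This is a sketch: the helper names are hypothetical, and the variances use the divisor n (any consistent divisor gives the same ratios).

```python
def variance(values):
    """Variance with divisor n."""
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values) / len(values)

def fit_line(x, y):
    """Least-squares slope and intercept."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    a = (sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
         / sum((xi - mean_x) ** 2 for xi in x))
    return a, mean_y - a * mean_x

x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 1.0, 4.0, 3.0, 5.0]
a, b = fit_line(x, y)
y_hat = [a * xi + b for xi in x]                    # predicted values
residuals = [yi - yh for yi, yh in zip(y, y_hat)]   # errors

s_y2 = variance(y)           # total variance
s_yhat2 = variance(y_hat)    # predicted (explained) variance
s_er2 = variance(residuals)  # error variance
r2 = s_yhat2 / s_y2          # proportion of variance explained

print(f"{s_y2:.2f} = {s_yhat2:.2f} + {s_er2:.2f}, r^2 = {r2:.2f}")
```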


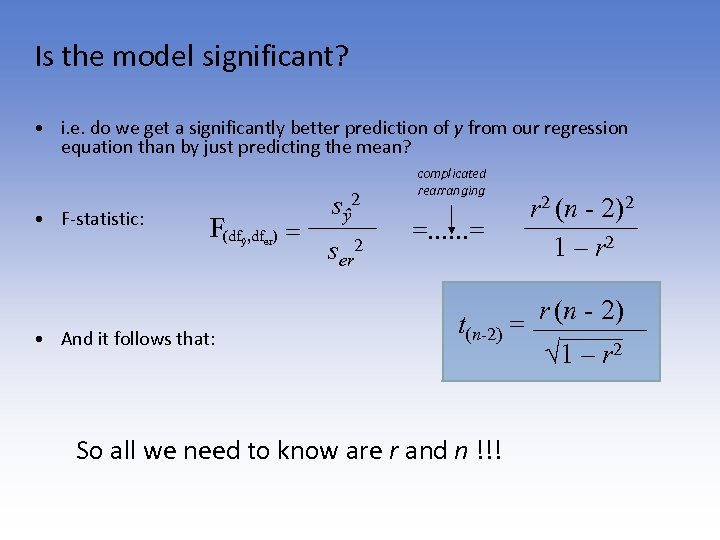

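Is the model significant?

- i.e. do we get a significantly better prediction of y from our regression equation than by just predicting the mean?
- F-statistic (a ratio of mean squares, with $df_{\hat{y}} = 1$ and $df_{er} = n - 2$ for a single predictor):

$$F(df_{\hat{y}}, df_{er}) = \frac{s_{\hat{y}}^2}{s_{er}^2} = \dots \text{(complicated rearranging)} \dots = \frac{r^2 (n - 2)}{1 - r^2}$$

- And it follows that:

$$t(n - 2) = \frac{r \sqrt{n - 2}}{\sqrt{1 - r^2}}$$

So all we need to know are r and n!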
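
Since only r and n are needed, the test statistics can be sketched in a few lines for the single-predictor case, using the standard results F = r²(n - 2)/(1 - r²) and t = r√(n - 2)/√(1 - r²). The function name and the example values are assumptions.

```python
import math

def regression_t_and_f(r, n):
    """t (df = n - 2) and F (df = 1, n - 2) for a single-predictor regression."""
    if n < 3 or abs(r) >= 1:
        raise ValueError("need n >= 3 and |r| < 1")
    t = r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)
    f = r ** 2 * (n - 2) / (1 - r ** 2)
    return t, f

# Example values: r = 0.8 from n = 5 data points
t_value, f_value = regression_t_and_f(0.8, 5)
print(f"t({5 - 2}) = {t_value:.3f}, F(1, {5 - 2}) = {f_value:.3f}")
```

With one predictor the two tests are equivalent, because F equals t squared.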


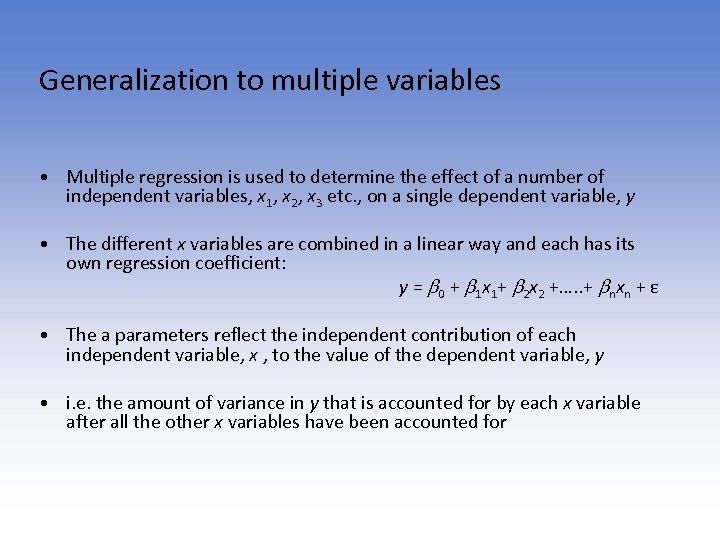

Generalization to multiple variables

- Multiple regression is used to determine the effect of a number of independent variables, $x_1, x_2, x_3$ etc., on a single dependent variable, y
- The different x variables are combined in a linear way and each has its own regression coefficient: $y = b_0 + b_1 x_1 + b_2 x_2 + \dots + b_n x_n + \varepsilon$
- The b parameters reflect the independent contribution of each independent variable, x, to the value of the dependent variable, y
- i.e. the amount of variance in y that is accounted for by each x variable after all the other x variables have been accounted for


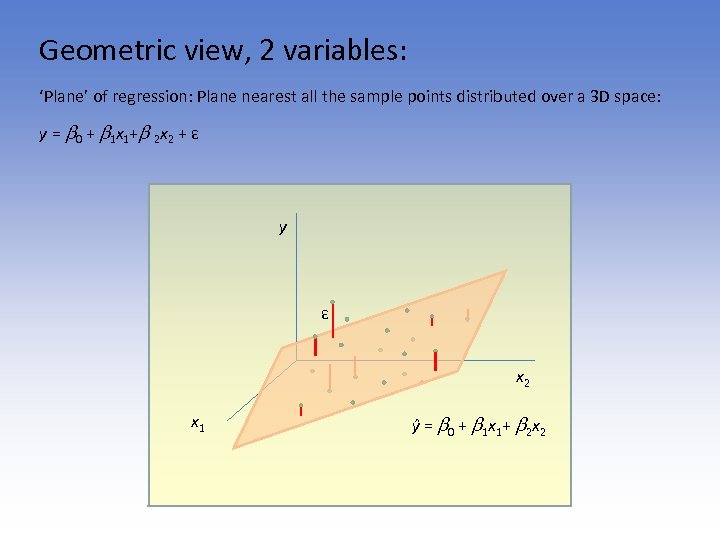

Geometric view, 2 variables:

'Plane' of regression: the plane nearest to all the sample points distributed over a 3D space (axes $x_1$, $x_2$, y)

- Model: $y = b_0 + b_1 x_1 + b_2 x_2 + \varepsilon$
- Fitted plane: $\hat{y} = b_0 + b_1 x_1 + b_2 x_2$
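
Finding the regression plane can be sketched with the normal equations and a small Gauss-Jordan solver, using only the standard library. The function name `fit_plane` and the data are hypothetical, and in practice a linear-algebra library would be used instead.

```python
def fit_plane(x1, x2, y):
    """Least-squares fit of y = b0 + b1*x1 + b2*x2 via the normal equations."""
    # Design matrix rows: a constant 1 for the intercept, then the two regressors
    rows = [[1.0, u, v] for u, v in zip(x1, x2)]
    # Augmented system [X^T X | X^T y]
    m = [[sum(row[i] * row[j] for row in rows) for j in range(3)]
         + [sum(row[i] * yi for row, yi in zip(rows, y))]
         for i in range(3)]
    # Gauss-Jordan elimination with partial pivoting
    # (divides by zero if the regressors are perfectly collinear)
    for col in range(3):
        pivot = max(range(col, 3), key=lambda k: abs(m[k][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for k in range(3):
            if k != col:
                factor = m[k][col] / m[col][col]
                m[k] = [p - factor * q for p, q in zip(m[k], m[col])]
    return [m[i][3] / m[i][i] for i in range(3)]

# Made-up, noise-free data generated from y = 1 + 2*x1 + 3*x2
x1 = [0.0, 1.0, 0.0, 1.0, 2.0]
x2 = [0.0, 0.0, 1.0, 1.0, 1.0]
y = [1.0, 3.0, 4.0, 6.0, 8.0]
b0, b1, b2 = fit_plane(x1, x2, y)
print(f"y_hat = {b0:.2f} + {b1:.2f} x1 + {b2:.2f} x2")
```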


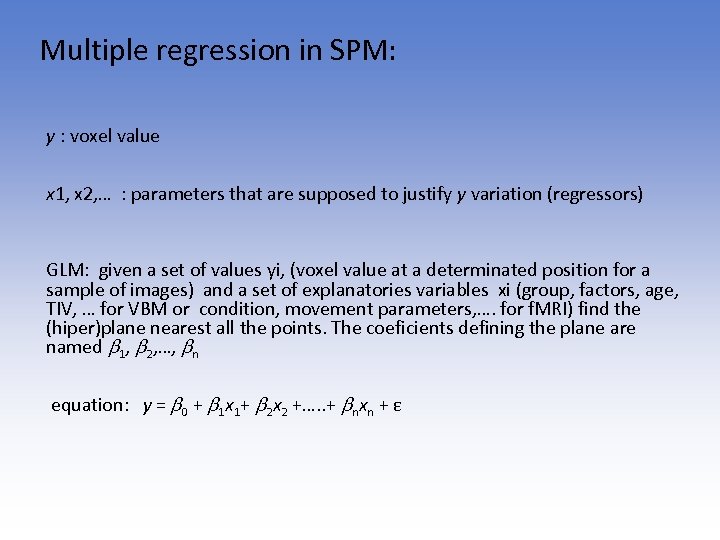

Multiple regression in SPM:

- y: voxel value
- $x_1, x_2, \dots$: parameters that are supposed to explain the variation in y (regressors)

GLM: given a set of values $y_i$ (the voxel value at a given position across a sample of images) and a set of explanatory variables $x_i$ (group, factors, age, TIV, ... for VBM; or condition, movement parameters, ... for fMRI), find the (hyper)plane nearest to all the points. The coefficients defining the plane are named $b_1, b_2, \dots, b_n$.

Equation: $y = b_0 + b_1 x_1 + b_2 x_2 + \dots + b_n x_n + \varepsilon$


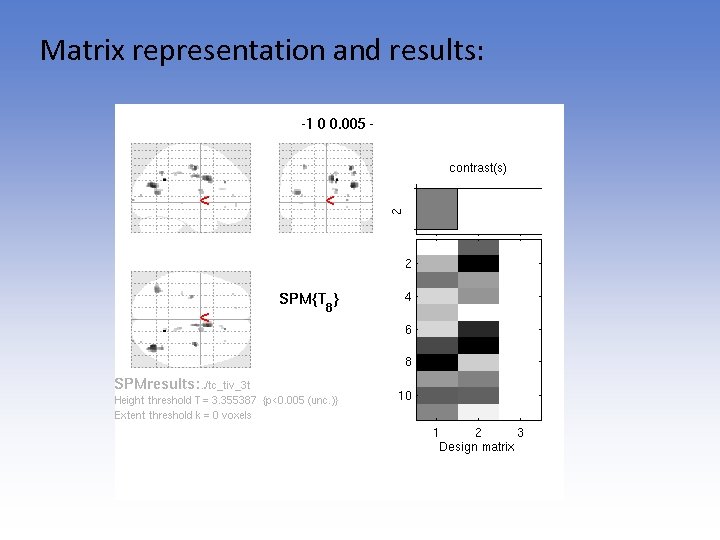

Matrix representation and results:
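
In matrix form, the multiple regression model is commonly written as below. This is the standard textbook formulation rather than a transcription of the slide: the design matrix $X$ (one row per image, a first column of ones for $b_0$, then one column per regressor) and the vector names are assumptions.

$$\mathbf{y} = X\mathbf{b} + \boldsymbol{\varepsilon}, \qquad \hat{\mathbf{b}} = (X^{T} X)^{-1} X^{T} \mathbf{y}, \qquad \hat{\mathbf{y}} = X\hat{\mathbf{b}}$$

The estimate $\hat{\mathbf{b}}$ minimises the sum of squared residuals $\lVert \mathbf{y} - X\mathbf{b} \rVert^2$, and it exists in this form when the columns of $X$ are linearly independent.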


Last remarks:

- Correlated doesn't mean related. E.g. any two variables that increase or decrease over time will show a nice correlation: CO2 air concentration in Antarctica and lodging rental cost in London. Beware in longitudinal studies!
- A relationship between two variables doesn't imply causality (e.g. leaves on the forest floor and hours of sun)
- Cov(x, y) = 0 doesn't mean that x and y are independent (it rules out a linear relationship, but the relationship could be quadratic, ...)
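
The last remark can be illustrated with a tiny made-up example in which y is completely determined by x, yet the covariance is zero. The function name `covariance` and the data are assumptions for the sketch.

```python
def covariance(x, y):
    """Mean of the products of deviations from the means (divisor n)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    return sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y)) / n

x = [-2.0, -1.0, 0.0, 1.0, 2.0]
y = [xi ** 2 for xi in x]  # y depends entirely on x, but not linearly

cov_xy = covariance(x, y)
print(cov_xy)  # positive and negative products cancel out
```

Because the x values are symmetric around zero, the contributions from the left and right halves of the parabola cancel, so a linear measure sees no relationship at all.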


Questions ? Please don’t!

