- Number of slides: 20

System and Application Performance at Extreme Scale
Adolfy Hoisie, with: Kevin Barker, Kei Davis, Eitan Frachtenberg, Song Jiang, Greg Johnson, Darren Kerbyson, Mike Lang, Scott Pakin, Fabrizio Petrini, Jose-Carlos Sancho
Performance and Architecture Lab (PAL), Computer and Computational Sciences Division, Los Alamos National Laboratory
www.c3.lanl.gov/par_arch
http://lacsi.rice.edu/review/2004/slides/Hoisie_LACSI_review.ppt

A Success Story: Performance Modeling • This work was enabled by LACSI; no funding support was available from any other source when we started. • Pioneered novel and practical methodologies in the field of performance – nothing similar exists in predictive modeling for entire apps and large-scale systems. • From the science of performance to practical applications of the methodologies. • Our approach to performance analysis is now applied throughout ASCI and other government-sponsored programs. • With the quantitative tools developed, PAL is now working towards solutions in system architecture and system software. Models are used throughout the process. • Disseminated this work widely (journals and conferences, invited speakers, tutorials, JASONs, ASCI PIs) to the benefit of the Laboratory and of ASCI.

Outline • An experiment-ahead: Petaflop architecture • Performance analysis and modeling • Modeling methodology • Applications of modeling

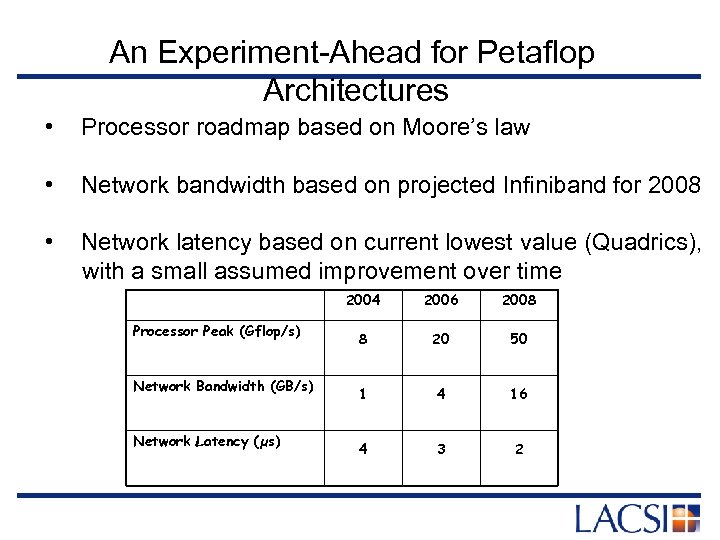

An Experiment-Ahead for Petaflop Architectures • Processor roadmap based on Moore’s law • Network bandwidth based on projected InfiniBand for 2008 • Network latency based on current lowest value (Quadrics), with a small assumed improvement over time

| | 2004 | 2006 | 2008 |
|---|---|---|---|
| Processor Peak (Gflop/s) | 8 | 20 | 50 |
| Network Bandwidth (GB/s) | 1 | 4 | 16 |
| Network Latency (µs) | 4 | 3 | 2 |

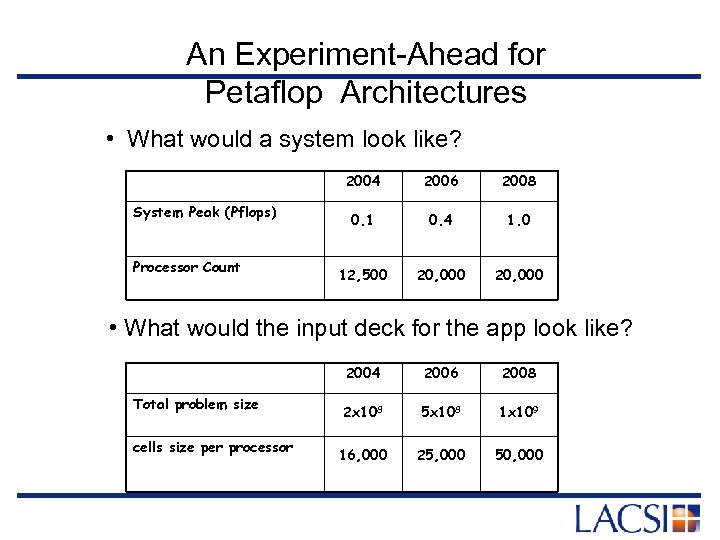

An Experiment-Ahead for Petaflop Architectures • What would a system look like?

| | 2004 | 2006 | 2008 |
|---|---|---|---|
| System Peak (Pflop/s) | 0.1 | 0.4 | 1.0 |
| Processor Count | 12,500 | 20,000 | 20,000 |

• What would the input deck for the app look like?

| | 2004 | 2006 | 2008 |
|---|---|---|---|
| Total problem size (cells) | 2 × 10^8 | 5 × 10^8 | 1 × 10^9 |
| Size per processor (cells) | 16,000 | 25,000 | 50,000 |
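The sizing figures on this slide and the previous one are mutually consistent, which a few lines of arithmetic can confirm. The sketch below assumes (this is not stated on the slides) that the processor count is simply the system peak divided by the per-processor peak, and that the cells are spread evenly over the processors. The function and constant names are illustrative only.

```python
# Figures copied from the slides (2004, 2006, 2008).
YEARS = (2004, 2006, 2008)
PROC_PEAK_GFLOPS = (8, 20, 50)         # per-processor peak, Gflop/s
SYSTEM_PEAK_PFLOPS = (0.1, 0.4, 1.0)   # system peak, Pflop/s
TOTAL_CELLS = (2e8, 5e8, 1e9)          # total problem size, cells


def processor_count(system_pflops, proc_gflops):
    """Processors needed to reach the system peak (1 Pflop/s = 1e6 Gflop/s)."""
    return round(system_pflops * 1e6 / proc_gflops)


def cells_per_processor(total_cells, n_procs):
    """Cells per processor, assuming an even distribution."""
    return round(total_cells / n_procs)


def sizing_table():
    """Return one (year, processor count, cells per processor) row per year."""
    rows = []
    for year, sys_pf, proc_gf, cells in zip(
        YEARS, SYSTEM_PEAK_PFLOPS, PROC_PEAK_GFLOPS, TOTAL_CELLS
    ):
        n_procs = processor_count(sys_pf, proc_gf)
        rows.append((year, n_procs, cells_per_processor(cells, n_procs)))
    return rows


if __name__ == "__main__":
    for row in sizing_table():
        print(row)
```

Under these assumptions the derived per-processor sizes match the 16,000, 25,000 and 50,000 cells listed on the slide.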

An Experiment-Ahead for Petaflop Architectures • Use expected system characteristics and problem sizes • Use PAL’s performance models • Provide expected performance improvements over time • Assume we will get the same % of peak on a single CPU (~14% Sn, ~8% Hydro)

Performance improvements relative to ASCI Q • Workload assuming 80% Sn transport and 20% Hydro
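The slide does not say how the 80/20 mix is combined into one improvement figure. One plausible reading, sketched below, treats the percentages as each code's share of baseline (ASCI Q) runtime, so the overall improvement is a time-weighted combination of the per-code speedups. The function name and the speedup values in the usage example are hypothetical, not results from the talk.

```python
# Workload mix from the slide: shares of baseline runtime (an assumption).
WEIGHTS = {"sn_transport": 0.8, "hydro": 0.2}


def workload_improvement(speedups, weights=WEIGHTS):
    """Overall speedup for a mixed workload.

    `weights` gives each code's share of baseline runtime (must sum to 1);
    `speedups` gives each code's speedup relative to the baseline system.
    """
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    # New runtime as a fraction of the baseline runtime.
    new_time = sum(weights[name] / speedups[name] for name in weights)
    return 1.0 / new_time


if __name__ == "__main__":
    # Hypothetical per-code speedups, for illustration only.
    print(workload_improvement({"sn_transport": 4.0, "hydro": 1.0}))
```

With this weighting, a code that does not speed up caps the overall gain, in the same way as the serial fraction in Amdahl's law.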

Performance Modeling Process
• Basic approach:
  $T_{run} = T_{computation} + T_{communication} - T_{overlap}$
  $T_{run} = f(T_{1\text{-}CPU}, \text{Scalability})$
• We are not using first principles to model single-processor computation time.
  - Rely on measurements or simulation for $T_{1\text{-}CPU}$
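The basic approach can be sketched in a few lines. Only the top-level relation (run time = computation + communication - overlap) comes from the slide. The per-message cost of latency plus size over bandwidth is a common simplification assumed here for illustration, and all function names are hypothetical rather than PAL's actual models.

```python
def comm_time(n_messages, message_bytes, latency_s, bandwidth_bytes_per_s):
    """Assumed linear cost per message: latency + size / bandwidth."""
    return n_messages * (latency_s + message_bytes / bandwidth_bytes_per_s)


def run_time(t_computation, t_communication, t_overlap=0.0):
    """T_run = T_computation + T_communication - T_overlap."""
    if not 0.0 <= t_overlap <= min(t_computation, t_communication):
        raise ValueError("overlap must be between 0 and the smaller component")
    return t_computation + t_communication - t_overlap


if __name__ == "__main__":
    # Network parameters from the 2004 column of the roadmap slide:
    # 4 microseconds latency, 1 GB/s bandwidth.
    # Message count, message size, compute time and overlap are made up.
    t_comm = comm_time(1000, 8000, 4e-6, 1e9)
    print(run_time(t_computation=1.0, t_communication=t_comm, t_overlap=0.002))
```

In line with the slide, the computation term is passed in as a measured or simulated value rather than derived from first principles.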

Performance Modeling Process • Simplified View of the Process: —Distill the design space by careful inspection of the code —Parameterize the key application characteristics —Parameterize the machine performance characteristics —Measure using microbenchmarks —Combine empirical data with analytical model —Iterate
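The "measure using microbenchmarks" and "combine empirical data with analytical model" steps might look like the following sketch. It assumes a linear message-cost model (time = latency + size / bandwidth) and fits its two parameters to ping-pong style timings by ordinary least squares. The model form, the function names and the synthetic timings are assumptions for illustration, not the procedure used in the talk.

```python
def fit_latency_bandwidth(sizes_bytes, times_s):
    """Least-squares fit of time = latency + size / bandwidth.

    Returns (latency in seconds, bandwidth in bytes per second).
    """
    n = len(sizes_bytes)
    if n < 2 or n != len(times_s):
        raise ValueError("need at least two (size, time) pairs")
    mean_x = sum(sizes_bytes) / n
    mean_y = sum(times_s) / n
    sxx = sum((x - mean_x) ** 2 for x in sizes_bytes)
    if sxx == 0:
        raise ValueError("message sizes must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(sizes_bytes, times_s))
    slope = sxy / sxx                      # seconds per byte
    latency = mean_y - slope * mean_x      # intercept at zero bytes
    return latency, 1.0 / slope


def predict_message_time(size_bytes, latency_s, bandwidth_bytes_per_s):
    """Analytical model evaluated with the fitted machine parameters."""
    return latency_s + size_bytes / bandwidth_bytes_per_s


if __name__ == "__main__":
    # Synthetic "measurements" generated from known parameters
    # (4 microseconds, 1 GB/s), standing in for real microbenchmark output.
    sizes = [0, 1024, 4096, 16384, 65536]
    times = [4e-6 + s / 1e9 for s in sizes]
    lat, bw = fit_latency_bandwidth(sizes, times)
    print(lat, bw, predict_message_time(1_000_000, lat, bw))
```

Real measurements are noisy and often show different regimes for short and long messages, so a single straight-line fit is only a starting point for the "iterate" step.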

Performance Analysis
• Measuring performance is of limited use:
  - tied to the current implementation of the code
  - tied to currently available architectures
  - impossible to distinguish between real performance and machine idiosyncrasies
• Design space is multidimensional
  - runtime = f(microprocessor performance, memory hierarchy, network characteristics, compiler/language, etc.)
• Performance characterization
  - typically done based on cross-sections of the design space

Application Coverage • Apps representative of the tri-Lab workload (LANL, LLNL, SNL) • Apps from OS workload: Community Climate Codes (POP, CICE, etc.) • Apps in the DARPA HPCS suite (HYCOM, LBMHD, RFCTH, etc.)

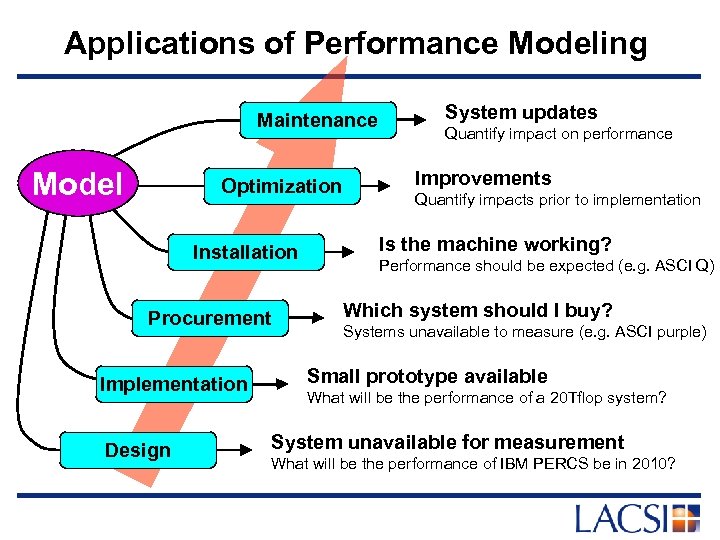

Applications of Performance Modeling
Model use by stage:
• Design: system unavailable for measurement. What will the performance of IBM PERCS be in 2010?
• Implementation: small prototype available. What will be the performance of a 20 Tflop system?
• Procurement: which system should I buy? Systems unavailable to measure (e.g. ASCI Purple).
• Installation: is the machine working? Performance should be as expected (e.g. ASCI Q).
• Optimization: improvements. Quantify impacts prior to implementation.
• Maintenance: system updates. Quantify impact on performance.

Application Optimization: Data Decomposition in SAGE

Experience: Q Installation • Model provides expected performance • Installation performed in stages [Figure panels: Late 2001; Early 2002 (upgraded PCI)] -> Model used to validate measurements!

Performance on 1024 nodes
• Performance consistent across QA and QB
  - Measured time 2x greater than model (4096 PEs)
• There is a difference: why?
[Figure annotation: lower is better]
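Using the model to validate measurements amounts to comparing each measured time against the predicted one and flagging large deviations, such as the factor of two reported here. A minimal sketch follows. The 10% tolerance and the function name are assumptions chosen for illustration, not values from the talk.

```python
def check_against_model(measured_s, predicted_s, tolerance=0.10):
    """Compare a measured run time with the model's prediction.

    Returns (ratio, within_tolerance), where ratio = measured / predicted.
    The default 10% tolerance is an arbitrary illustrative threshold.
    """
    if predicted_s <= 0:
        raise ValueError("predicted time must be positive")
    ratio = measured_s / predicted_s
    return ratio, abs(ratio - 1.0) <= tolerance


if __name__ == "__main__":
    # Illustrative values only: a measurement twice as slow as predicted.
    ratio, ok = check_against_model(measured_s=2.0, predicted_s=1.0)
    print(f"measured/model = {ratio:.2f}, within tolerance: {ok}")
```

A ratio well above 1 suggests a problem with the machine or its configuration rather than with the application, which is the question the slide poses.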

Resulting SAGE Performance

Comparison between the Earth Simulator and ASCI Q • Numbers in the table indicate the peak Tflop rating of an Alpha ES 46 system that would achieve the same performance as the Earth Simulator • Currently: SAGE on NEC SX-6 achieved 5% on first run (Sweep3D expected to be less). This may improve over time.

Comparison between BG/L and ASCI Q: Sweep3D Performance

Where we go from here • Application of modeling to system architecture design • Further increase the application coverage • Development of new techniques for performance analysis and modeling • Tool-models development

Conclusions • Shown a few of the accomplishments and results from this research that LACSI funded. • Spans the gamut from method development to practical applications, from research to development. • Greatest possible return on investment, scientifically and in $.
