e85905e39de39d096cddfada9a6c7dfa.ppt

- Number of slides: 27

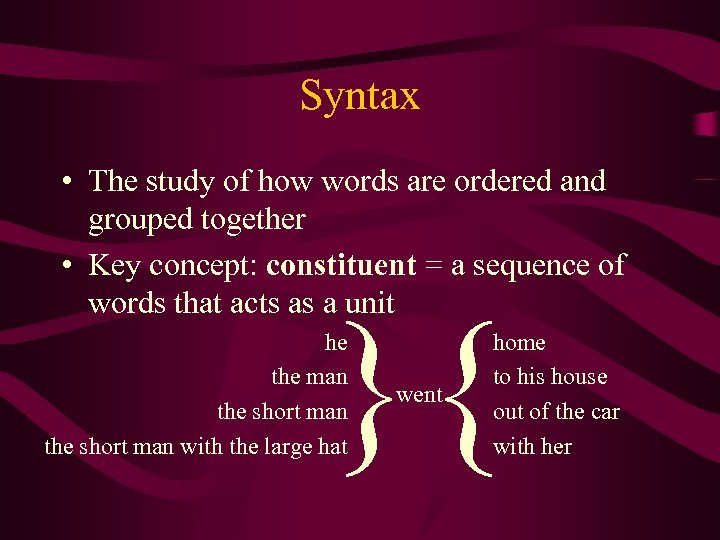

Syntax
- The study of how words are ordered and grouped together
- Key concept: constituent = a sequence of words that acts as a unit
  - Examples: {he, the man, the short man with the large hat}; {went home, to his house, out of the car, with her}

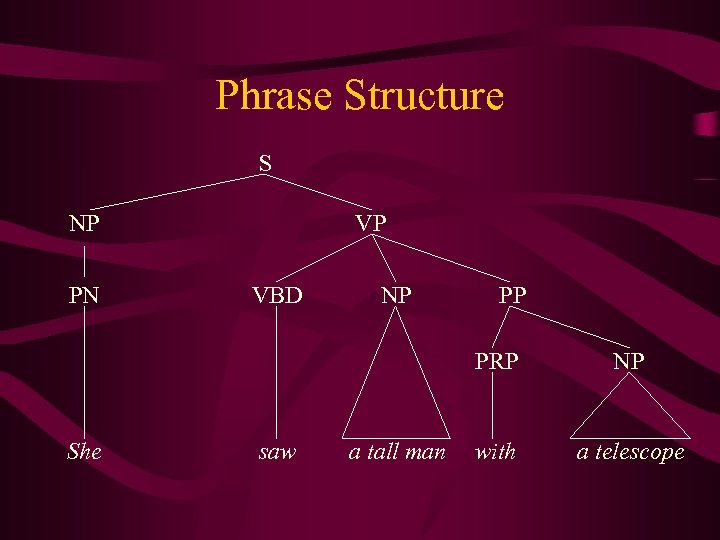

Phrase Structure
- Parse tree for "She saw a tall man with a telescope": `(S (NP (PN She)) (VP (VBD saw) (NP a tall man) (PP (PRP with) (NP a telescope))))`

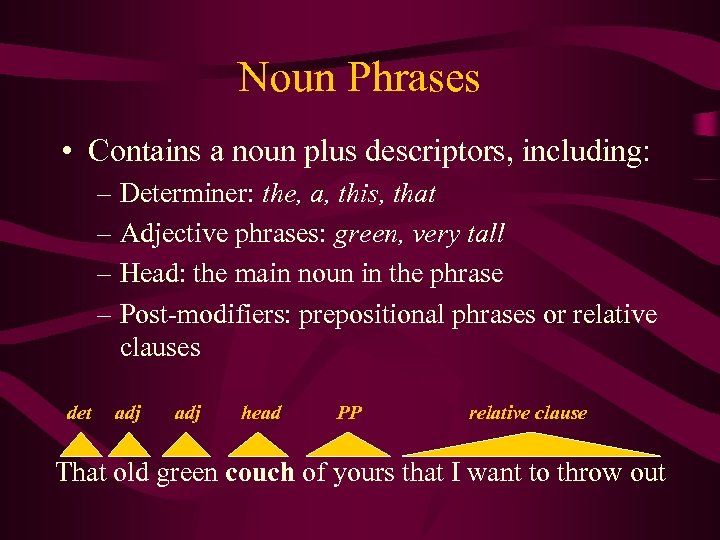

Noun Phrases
- Contains a noun plus descriptors, including:
  - Determiner: the, a, this, that
  - Adjective phrases: green, very tall
  - Head: the main noun in the phrase
  - Post-modifiers: prepositional phrases or relative clauses
- Example: That (det) old green (adj) couch (head) of yours (PP) that I want to throw out (relative clause)

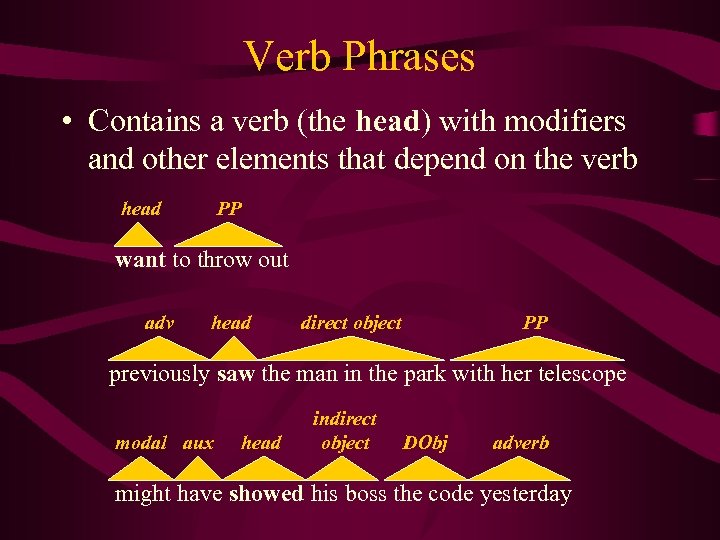

Verb Phrases
- Contains a verb (the head) with modifiers and other elements that depend on the verb
- Examples:
  - want to throw out (labelled: head, PP)
  - previously (adv) saw (head) the man (direct object) in the park with her telescope (PP)
  - might (modal) have (aux) shown (head) his boss (indirect object) the code (DObj) yesterday (adverb)

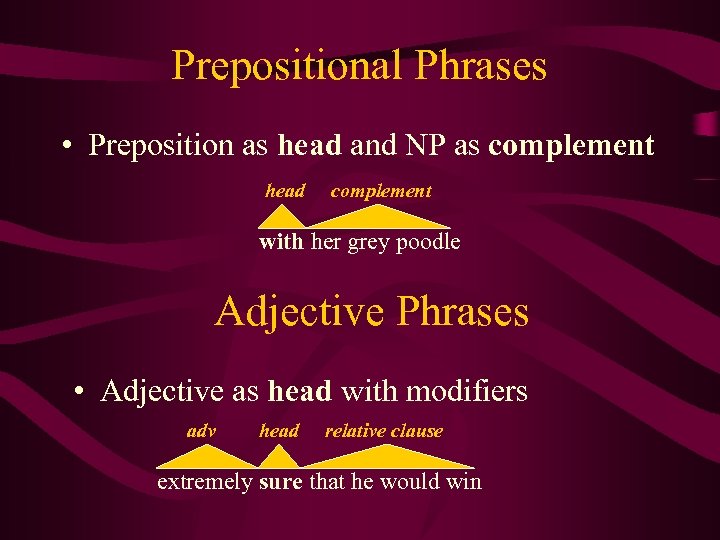

Prepositional Phrases
- Preposition as head and NP as complement: with (head) her grey poodle (complement)

Adjective Phrases
- Adjective as head with modifiers: extremely (adv) sure (head) that he would win (relative clause)

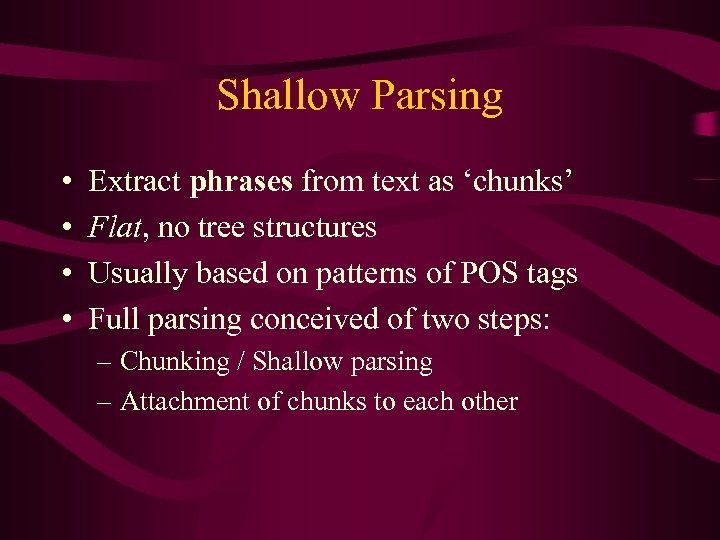

Shallow Parsing
- Extract phrases from text as 'chunks'
- Flat, no tree structures
- Usually based on patterns of POS tags
- Full parsing conceived of as two steps:
  - Chunking / shallow parsing
  - Attachment of chunks to each other

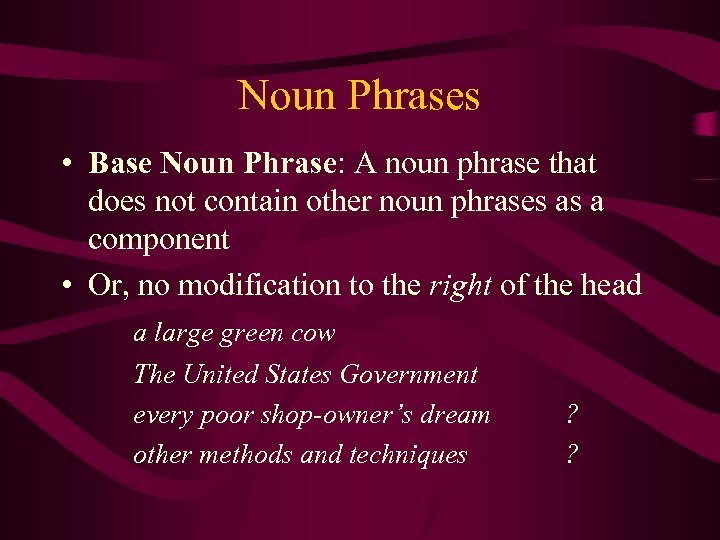

Noun Phrases
- Base noun phrase: a noun phrase that does not contain other noun phrases as a component
- Or: no modification to the right of the head
- Examples: a large green cow; The United States Government; every poor shop-owner's dream (?); other methods and techniques (?)

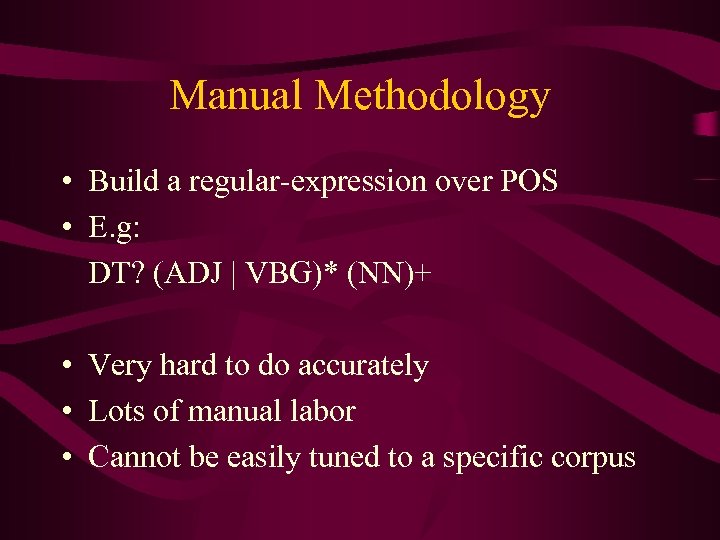

Manual Methodology
- Build a regular expression over POS tags
- E.g.: `DT? (ADJ | VBG)* (NN)+`
- Very hard to do accurately
- Lots of manual labor
- Cannot be easily tuned to a specific corpus
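The example pattern can be run directly with Python's `re` module once the POS tags are joined into one string. This is a minimal sketch, assuming each tag is followed by a single space and that any tag beginning with `NN` (such as `NN1` or `NN0`) counts as a noun; `chunk_nps` is a hypothetical helper name.

```python
import re

# The slide's pattern DT? (ADJ | VBG)* (NN)+ over a string of POS tags, each tag
# followed by one space. Assumption: any tag starting with "NN" is a noun.
# (?<![^ ]) forces every match to begin at a tag boundary.
NP_PATTERN = re.compile(r"(?<![^ ])(?:DT )?(?:(?:ADJ|VBG) )*(?:NN\w* )+")


def chunk_nps(tags):
    """Return NP chunks as (start, end) token spans, end exclusive."""
    text = "".join(tag + " " for tag in tags)
    # Map the character offset where each token starts to its token index.
    offset_to_index = {}
    offset = 0
    for index, tag in enumerate(tags):
        offset_to_index[offset] = index
        offset += len(tag) + 1
    offset_to_index[offset] = len(tags)
    return [(offset_to_index[m.start()], offset_to_index[m.end()])
            for m in NP_PATTERN.finditer(text)]


print(chunk_nps(["DT", "ADJ", "NN1", "VBD", "PRP", "VBG", "NN0"]))  # the tall man ran with blinding speed
print(chunk_nps(["PRP", "NN1", "DT", "NN1", "VBD", "ADJ"]))         # On Tuesday the company went bankrupt
```

The first call finds [the tall man] and [blinding speed]; the second returns the adjacent NPs [Tuesday] and [the company] as separate spans. The pattern still misses NPs headed by tags it does not list, such as `PN`, unless someone adds them by hand.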

![Chunk Tags](https://present5.com/presentation/e85905e39de39d096cddfada9a6c7dfa/image-9.jpg)

Chunk Tags
- Represent NPs by tags: [the tall man] ran with [blinding speed]

| the | tall | man | ran | with | blinding | speed |
|---|---|---|---|---|---|---|
| DT | ADJ | NN1 | VBD | PRP | VBG | NN0 |
| I | I | I | O | O | I | I |

- Need a B tag for adjacent NPs: On [Tuesday] [the company] went bankrupt

| On | Tuesday | the | company | went | bankrupt |
|---|---|---|---|---|---|
| O | I | B | I | O | O |
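The tagging scheme is a reversible encoding between bracketed NPs and one tag per word. A minimal sketch, assuming NPs are given as token spans `(start, end)` with an exclusive end (a convention introduced here, not on the slide); the function names are hypothetical.

```python
def spans_to_tags(n_tokens, spans):
    """Encode NP spans (start, end exclusive) with the slide's I/O/B chunk tags.

    I = inside an NP, O = outside; B marks the first word of an NP that starts
    right where the previous NP ended, so adjacent NPs stay distinguishable.
    """
    tags = ["O"] * n_tokens
    previous_end = None
    for start, end in sorted(spans):
        for i in range(start, end):
            tags[i] = "I"
        if start == previous_end:
            tags[start] = "B"
        previous_end = end
    return tags


def tags_to_spans(tags):
    """Decode I/O/B chunk tags back into NP spans."""
    spans, start = [], None
    for i, tag in enumerate(tags):
        if start is not None and tag in ("O", "B"):
            spans.append((start, i))  # an O or a B closes the open NP
            start = None
        if start is None and tag in ("I", "B"):
            start = i                 # an I or a B opens a new NP
    if start is not None:
        spans.append((start, len(tags)))
    return spans


print(spans_to_tags(7, [(0, 3), (5, 7)]))  # the tall man ran with blinding speed
print(spans_to_tags(6, [(1, 2), (2, 4)]))  # On Tuesday the company went bankrupt
```

The encoder emits B only where it is needed, as on the slide. The decoder also accepts the variant that tags every NP-initial word B.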

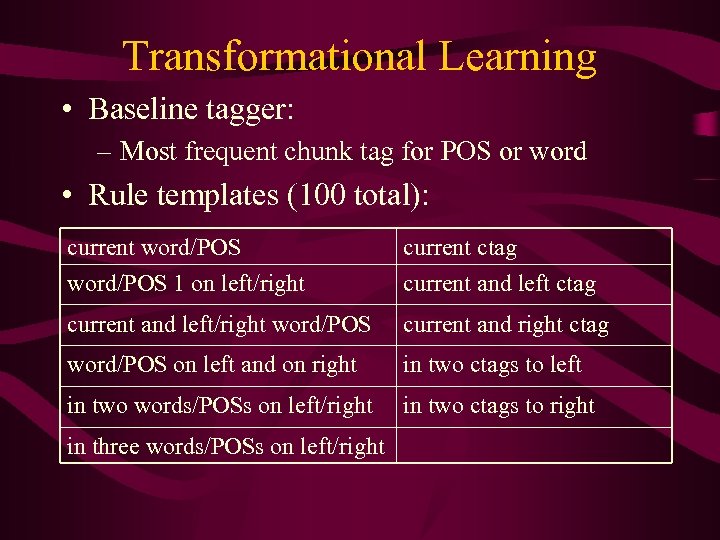

Transformational Learning
- Baseline tagger:
  - Most frequent chunk tag for the POS or word
- Rule templates (100 total), conditioning on:
  - current word/POS
  - word/POS 1 on left/right
  - current ctag (chunk tag)
  - current and left/right word/POS
  - current and right ctag
  - word/POS on left and on right
  - in two ctags to left
  - in two words/POSs on left/right
  - in two ctags to right
  - in three words/POSs on left/right
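The learning loop can be sketched in a few dozen lines. This is a simplified, hypothetical version of transformation-based learning, not the learner behind these slides: it uses three templates instead of 100, looks only at POS tags and chunk tags (no words), lets a rule retag any matching position regardless of its current tag, and applies each rule to all positions at once using the tags from before the pass. The toy corpus is the two sentences from the chunk-tag slide; the POS tags of the second sentence are assumed, since the slide does not give them.

```python
from collections import Counter, defaultdict

# Hypothetical mini template set (the slide's learner uses 100 templates).
# Each template lists (kind, offset) pairs: "P" = POS tag, "T" = chunk tag.
TEMPLATES = [
    (("P", 0),),
    (("T", -1), ("P", 0)),
    (("P", 0), ("T", 1)),
]


def context(template, i, pos, tags):
    """Feature values of a template at position i (None beyond the sentence)."""
    values = []
    for kind, offset in template:
        seq = pos if kind == "P" else tags
        j = i + offset
        values.append(seq[j] if 0 <= j < len(seq) else None)
    return tuple(values)


def apply_rule(rule, pos, tags):
    """Retag every matching position; all matches use the tags from before the pass."""
    template, values, new_tag = rule
    return [new_tag if context(template, i, pos, tags) == values else tag
            for i, tag in enumerate(tags)]


def train_baseline(corpus):
    """Baseline tagger: the most frequent chunk tag for each POS tag."""
    counts = defaultdict(Counter)
    for pos, gold in corpus:
        for p, t in zip(pos, gold):
            counts[p][t] += 1
    return {p: c.most_common(1)[0][0] for p, c in counts.items()}


def learn_rules(corpus, max_rules=10):
    baseline = train_baseline(corpus)
    current = [[baseline[p] for p in pos] for pos, _ in corpus]
    rules = []
    for _ in range(max_rules):
        # Instantiate every template at every position the tagger gets wrong.
        candidates = set()
        for (pos, gold), tags in zip(corpus, current):
            for i, (tag, correct) in enumerate(zip(tags, gold)):
                if tag != correct:
                    for tpl in TEMPLATES:
                        candidates.add((tpl, context(tpl, i, pos, tags), correct))
        best, best_gain = None, 0
        for rule in sorted(candidates, key=repr):  # fixed order makes ties deterministic
            gain = 0  # errors fixed minus errors introduced
            for (pos, gold), tags in zip(corpus, current):
                new = apply_rule(rule, pos, tags)
                gain += sum((n == g) - (t == g) for n, t, g in zip(new, tags, gold))
            if gain > best_gain:
                best, best_gain = rule, gain
        if best is None:
            break  # no candidate improves the training data
        rules.append(best)
        current = [apply_rule(best, pos, tags) for (pos, _), tags in zip(corpus, current)]
    return baseline, rules


def chunk(baseline, rules, pos):
    tags = [baseline.get(p, "O") for p in pos]  # assumption: unseen POS -> "O"
    for rule in rules:
        tags = apply_rule(rule, pos, tags)
    return tags


# The two sentences from the chunk-tag slide (POS of the second one assumed).
CORPUS = [
    (["DT", "ADJ", "NN1", "VBD", "PRP", "VBG", "NN0"], ["I", "I", "I", "O", "O", "I", "I"]),
    (["PRP", "NN1", "DT", "NN1", "VBD", "ADJ"], ["O", "I", "B", "I", "O", "O"]),
]
baseline, rules = learn_rules(CORPUS)
for rule in rules:
    print(rule)
```

Each iteration proposes rules only at positions the current tagger gets wrong and keeps the one with the largest net gain; learning stops when no candidate has a positive gain.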

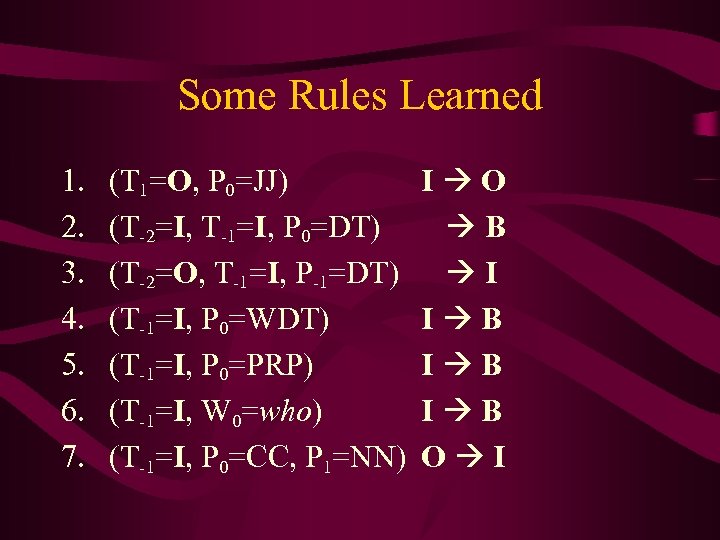

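Some Rules Learned
1. (T1=O, P0=JJ)
2. (T-2=I, T-1=I, P0=DT)
3. (T-2=O, T-1=I, P-1=DT)
4. (T-1=I, P0=WDT)
5. (T-1=I, P0=PRP)
6. (T-1=I, W0=who)
7. (T-1=I, P0=CC, P1=NN)

Notation: T = chunk tag, P = POS tag, W = word; the index is the position relative to the current word. Chunk-tag changes listed with these rules: I O B I I B I B O I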

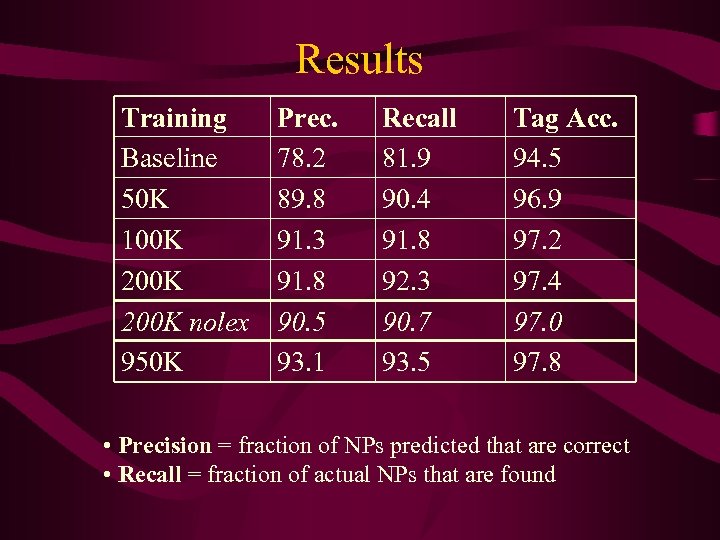

Results

| Training | Prec. | Recall | Tag Acc. |
|---|---|---|---|
| Baseline | 78.2 | 81.9 | 94.5 |
| 50K | 89.8 | 90.4 | 96.9 |
| 100K | 91.3 | 91.8 | 97.2 |
| 200K | 91.8 | 92.3 | 97.4 |
| nolex | 90.5 | 90.7 | 97.0 |
| 950K | 93.1 | 93.5 | 97.8 |

- Precision = fraction of predicted NPs that are correct
- Recall = fraction of actual NPs that are found
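Precision and recall are computed over whole NPs, while the last column counts individual chunk tags. A minimal sketch, assuming NPs are compared as exact `(start, end)` token spans and that tag accuracy is the fraction of words with the correct I/O/B tag; the function names are hypothetical.

```python
def chunk_precision_recall(predicted, gold):
    """Precision and recall over NP spans; a predicted NP counts only on an exact match."""
    predicted, gold = set(predicted), set(gold)
    correct = len(predicted & gold)
    precision = correct / len(predicted) if predicted else 0.0
    recall = correct / len(gold) if gold else 0.0
    return precision, recall


def tag_accuracy(predicted_tags, gold_tags):
    """Fraction of words whose I/O/B chunk tag is correct."""
    return sum(p == g for p, g in zip(predicted_tags, gold_tags)) / len(gold_tags)


gold = [(0, 3), (5, 7)]       # [the tall man] ran with [blinding speed]
predicted = [(1, 3), (5, 7)]  # a chunker that left "the" outside the first NP
print(chunk_precision_recall(predicted, gold))
print(tag_accuracy(list("OIIOOII"), list("IIIOOII")))
```

A single wrong tag on "the" costs one of the two NPs in both precision and recall (0.5 each) but only one word in seven for tag accuracy. This is consistent with the tag-accuracy column in the table being higher than precision and recall.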

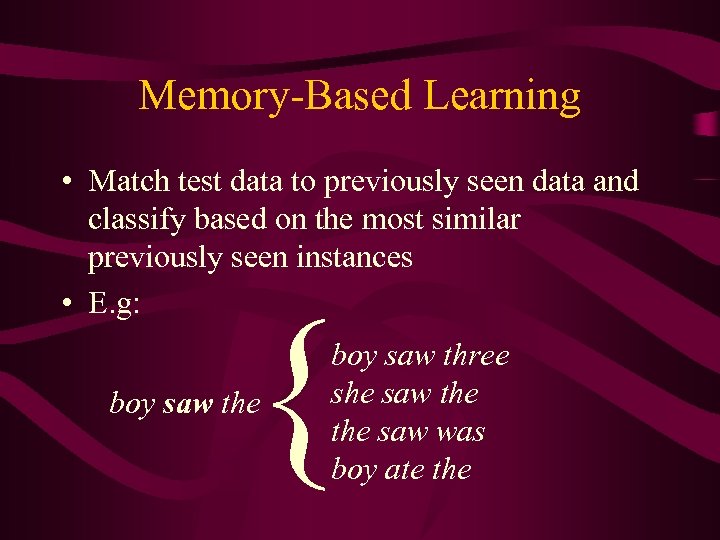

Memory-Based Learning
- Match test data to previously seen data and classify based on the most similar previously seen instances
- E.g.: { boy saw the boy saw three she saw the saw was boy ate the

k-Nearest Neighbor (kNN)
- Find the k most similar training examples
- Let them 'vote' on the correct class for the test example
  - Weight neighbors by distance from the test example
- Main problem: defining 'similar'
  - Shallow parsing: overlap of words and POS
  - Use feature weighting...
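A minimal sketch of kNN with a weighted overlap distance and distance-weighted voting. The memory, its labels and the `1 / (1 + distance)` vote weight are illustrative assumptions, not taken from the slides; the windows are loosely modelled on the words in the memory-based learning example.

```python
from collections import defaultdict


def overlap_distance(x, y, weights):
    """Weighted overlap: add a feature's weight whenever the two values differ."""
    return sum(w for a, b, w in zip(x, y, weights) if a != b)


def knn_classify(memory, instance, k=3, weights=None):
    """Label `instance` by a distance-weighted vote of its k nearest stored examples."""
    if weights is None:
        weights = [1.0] * len(instance)
    ranked = sorted(memory, key=lambda ex: overlap_distance(ex[0], instance, weights))
    votes = defaultdict(float)
    for features, label in ranked[:k]:
        distance = overlap_distance(features, instance, weights)
        votes[label] += 1.0 / (1.0 + distance)  # nearer neighbours count more
    return max(votes, key=votes.get)


# Hypothetical memory: (previous word, word, next word) windows labelled with the
# chunk tag of the middle word.
MEMORY = [
    (("boy", "saw", "the"), "O"),
    (("boy", "saw", "three"), "O"),
    (("she", "saw", "the"), "O"),
    (("the", "saw", "was"), "I"),
    (("boy", "ate", "the"), "O"),
]

query = ("a", "saw", "was")
print(knn_classify(MEMORY, query, k=1))
print(knn_classify(MEMORY, query, k=3))
print(knn_classify(MEMORY, query, k=3, weights=[1, 1, 3]))
```

With k = 3 and equal weights, the closest neighbour (which matches the last two words) is outvoted by two farther ones, giving O. Giving the right-hand word a larger weight flips the decision back to I, which is the motivation for feature weighting.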

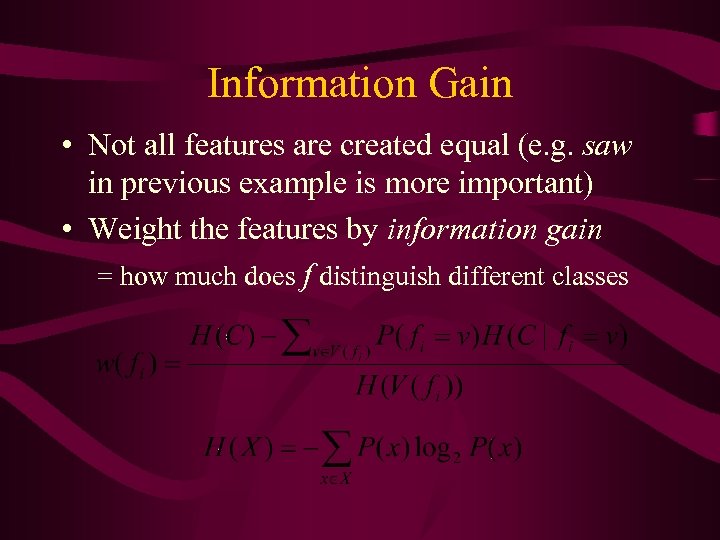

Information Gain
- Not all features are created equal (e.g., saw in the previous example is more important)
- Weight each feature f by its information gain: how well f distinguishes between the classes
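Information gain compares the class entropy before and after splitting the data on a feature: IG(f) = H(C) − Σ_v P(f = v) · H(C | f = v), where H(C) = −Σ_c P(c) log2 P(c). A minimal sketch on a hypothetical toy set whose class depends only on the second feature:

```python
import math
from collections import Counter, defaultdict


def entropy(labels):
    """Shannon entropy (bits) of a list of class labels."""
    total = len(labels)
    return -sum(c / total * math.log2(c / total) for c in Counter(labels).values())


def information_gain(examples, index):
    """H(C) minus the expected entropy of C after splitting on feature `index`."""
    labels = [label for _, label in examples]
    by_value = defaultdict(list)
    for features, label in examples:
        by_value[features[index]].append(label)
    remainder = sum(len(group) / len(examples) * entropy(group)
                    for group in by_value.values())
    return entropy(labels) - remainder


# Hypothetical (previous word, word) examples whose class depends only on the word.
EXAMPLES = [
    (("boy", "saw"), "O"),
    (("she", "saw"), "O"),
    (("boy", "dog"), "I"),
    (("she", "dog"), "I"),
]
weights = [information_gain(EXAMPLES, i) for i in range(2)]
print(weights)
```

The informative feature gets weight 1.0 and the uninformative one 0.0. Values like these can serve as per-feature weights in a weighted overlap distance (the IB1-IG scheme).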

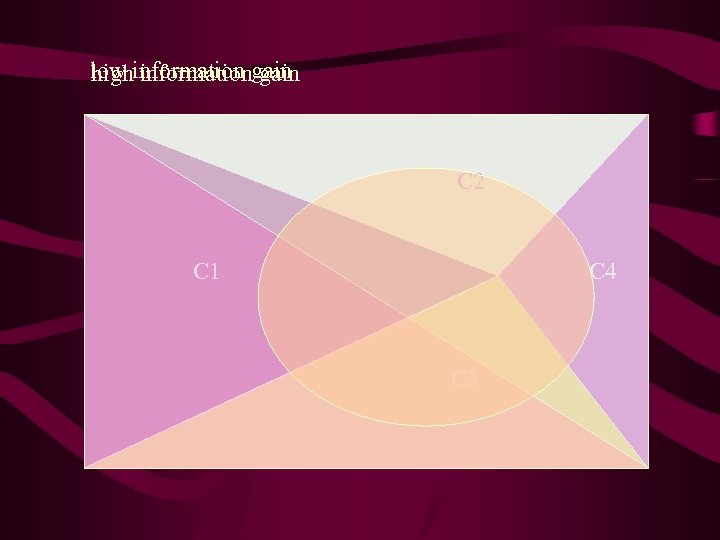

Figure: low information gain vs. high information gain (classes C1, C2, C3, C4)

Base Verb Phrase
- Verb phrase not including NPs or PPs
- Example: [NP Pierre Vinken NP] , [NP 61 years NP] old , [VP will soon be joining VP] [NP the board NP] as [NP a nonexecutive director NP] .

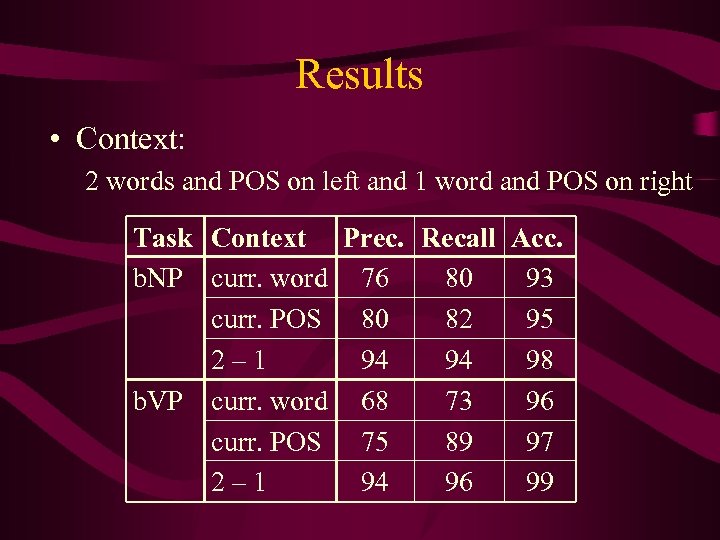

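Results
- Context: 2 words and POS on the left and 1 word and POS on the right (rows "2–1")

| Task | Context | Prec. | Recall | Acc. |
|---|---|---|---|---|
| bNP | curr. word | 76 | 80 | 93 |
| bNP | curr. POS | 80 | 82 | 95 |
| bNP | 2–1 | 94 | 94 | 98 |
| bVP | curr. word | 68 | 73 | 96 |
| bVP | curr. POS | 75 | 89 | 97 |
| bVP | 2–1 | 94 | 96 | 99 |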

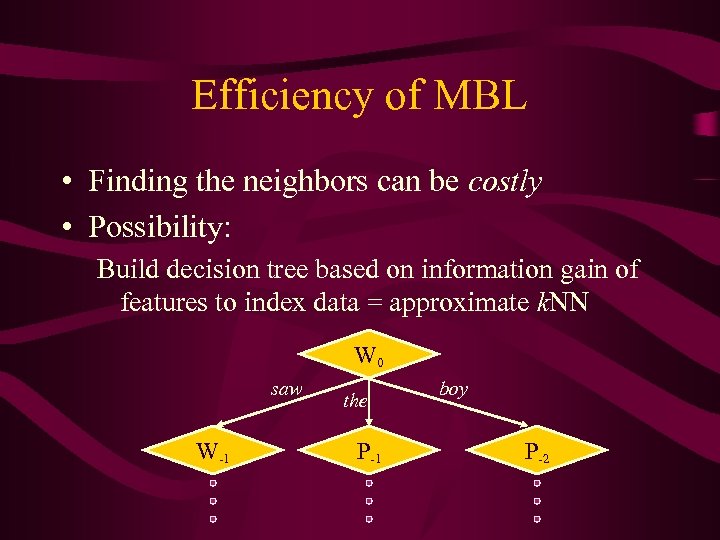

Efficiency of MBL
- Finding the neighbors can be costly
- Possibility: build a decision tree based on the information gain of features to index the data = approximate kNN
- Figure: decision tree testing W0, W-1, P-1 and P-2 in turn (branch values shown include saw, the, boy)
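An information-gain-ordered decision tree in the spirit of IGTree can be sketched as a trie: features are tested in a fixed order, and each node remembers the majority class of the training examples that reach it. The memory and the feature order below are hypothetical; in practice the order would come from the features' information gain.

```python
from collections import Counter


def build_tree(examples, order):
    """Trie over features in `order` (highest information gain first).

    Each node stores the majority class of the examples that reach it; a branch
    stops growing once its examples all share one class.
    """
    labels = [label for _, label in examples]
    node = {"default": Counter(labels).most_common(1)[0][0], "children": {}}
    if not order or len(set(labels)) == 1:
        return node
    feature, rest = order[0], order[1:]
    groups = {}
    for features, label in examples:
        groups.setdefault(features[feature], []).append((features, label))
    for value, subset in groups.items():
        node["children"][value] = build_tree(subset, rest)
    return node


def classify(tree, instance, order):
    """Follow matching branches; at an unseen value, return that node's majority class."""
    node = tree
    for feature in order:
        child = node["children"].get(instance[feature])
        if child is None:
            break
        node = child
    return node["default"]


# Hypothetical (W-1, W0, W1) windows labelled with the chunk tag of W0.
MEMORY = [
    (("boy", "saw", "the"), "O"),
    (("boy", "saw", "three"), "O"),
    (("she", "saw", "the"), "O"),
    (("the", "saw", "was"), "I"),
    (("boy", "ate", "the"), "O"),
]
ORDER = [1, 2, 0]  # hypothetical ranking: W0, then W1, then W-1
tree = build_tree(MEMORY, ORDER)
print(classify(tree, ("a", "saw", "was"), ORDER))
print(classify(tree, ("a", "sat", "on"), ORDER))
```

Classification costs one dictionary lookup per feature instead of a comparison with every stored example. It only approximates kNN because it stops at the first mismatching feature instead of measuring the distance to all neighbours.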

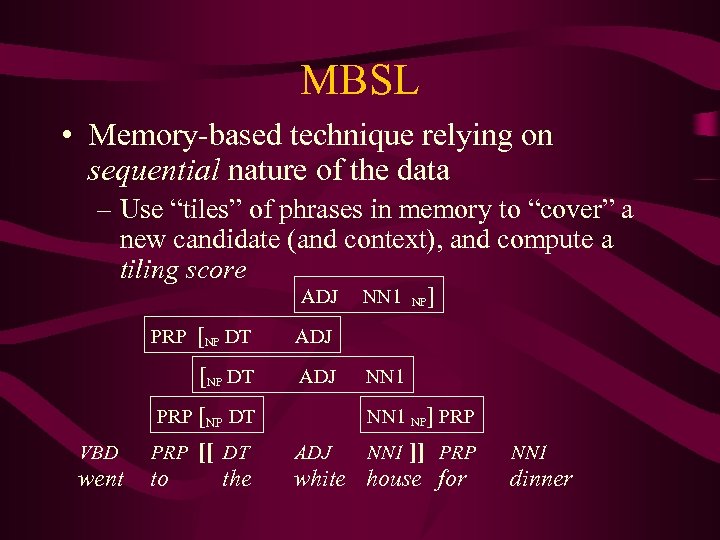

MBSL (Memory-Based Sequence Learning)
- Memory-based technique relying on the sequential nature of the data
  - Use “tiles” of phrases in memory to “cover” a new candidate (and its context), and compute a tiling score
- Figure: tiles from memory covering the candidate VBD PRP [[ DT ADJ NN1 ]] PRP NN1 ("went to [[ the white house ]] for dinner")

![Tile Evidence](https://present5.com/presentation/e85905e39de39d096cddfada9a6c7dfa/image-21.jpg)

Tile Evidence
- Memory:
  - [NP DT NN1 NP] VBD [NP DT NN1 NP] [NP NN2 NP] .
  - [NP ADJ NN2 NP] AUX VBG PRP [NP DT ADJ NN1 NP] .
- Some tiles: [NP DT NN1 NP], NN1 NP] VBD, ... (evidence counts on the slide: pos=3, pos=2, pos=1, pos=3, pos=1; neg=0, neg=1, neg=0)
- Score tile t by ft(t) = pos / total, where total = pos + neg; only keep tiles whose score passes a threshold
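Tile scoring can be sketched over the two-sentence memory above. The counting rule here is an assumption: a tile's positive evidence is the number of times it occurs in memory with exactly its brackets, and its negative evidence is the number of times its bare tag sequence occurs without that bracketing. It is not guaranteed to reproduce the counts shown on the slide, and the 0.6 threshold is an arbitrary example.

```python
# Memory from the slide: bracketed POS sequences, one list per sentence.
MEMORY = [
    ["[NP", "DT", "NN1", "NP]", "VBD", "[NP", "DT", "NN1", "NP]", "[NP", "NN2", "NP]", "."],
    ["[NP", "ADJ", "NN2", "NP]", "AUX", "VBG", "PRP", "[NP", "DT", "ADJ", "NN1", "NP]", "."],
]
BRACKETS = {"[NP", "NP]"}


def count(sequence, pattern):
    """Number of contiguous occurrences of `pattern` in `sequence`."""
    n = len(pattern)
    return sum(sequence[i:i + n] == pattern for i in range(len(sequence) - n + 1))


def strip_brackets(tokens):
    return [t for t in tokens if t not in BRACKETS]


def evidence(tile):
    """(pos, neg) for a tile that contains at least one POS tag.

    Assumption: pos = occurrences of the bracketed tile in memory;
    neg = occurrences of its bare tag sequence that lack that bracketing.
    """
    pos = sum(count(sentence, tile) for sentence in MEMORY)
    bare = strip_brackets(tile)
    total = sum(count(strip_brackets(sentence), bare) for sentence in MEMORY)
    return pos, total - pos


def tile_score(tile):
    """ft(t) = pos / (pos + neg); 0.0 if the tile never occurs."""
    pos, neg = evidence(tile)
    return pos / (pos + neg) if pos + neg else 0.0


def keep_tiles(tiles, threshold):
    """Keep only tiles whose score passes the threshold."""
    return [tile for tile in tiles if tile_score(tile) > threshold]


tiles = [["[NP", "DT", "NN1", "NP]"], ["NN1", "NP]", "VBD"], ["[NP", "NN2"]]
for tile in tiles:
    print(" ".join(tile), evidence(tile), tile_score(tile))
print(keep_tiles(tiles, threshold=0.6))
```

For instance, `[NP NN2` has one positive occurrence (in the first sentence) and one negative one (the NN2 inside `[NP ADJ NN2 NP]`), so its score of 0.5 falls below the example threshold.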

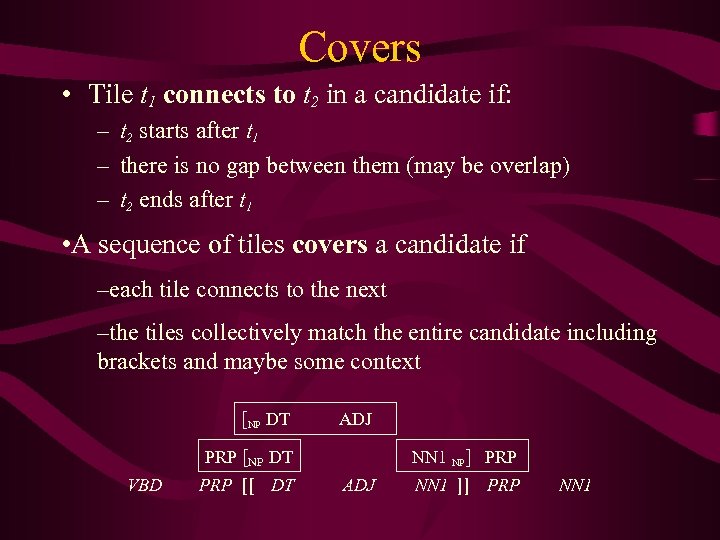

Covers
- Tile t1 connects to t2 in a candidate if:
  - t2 starts after t1
  - there is no gap between them (they may overlap)
  - t2 ends after t1
- A sequence of tiles covers a candidate if:
  - each tile connects to the next
  - the tiles collectively match the entire candidate, including the brackets and possibly some context
- Figure: tiles covering the candidate VBD PRP [[ DT ADJ NN1 ]] PRP NN1
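The connection test and the cover condition translate directly into interval comparisons. A minimal sketch, assuming each tile is represented by the token span `[start, end)` where it matches the candidate; `Tile`, `connects` and `is_cover` are hypothetical names.

```python
from typing import NamedTuple


class Tile(NamedTuple):
    """Where a tile matched the candidate: token indices [start, end)."""
    start: int
    end: int


def connects(t1, t2):
    """t2 starts after t1, leaves no gap (overlap allowed), and ends after t1."""
    return t1.start < t2.start <= t1.end < t2.end


def is_cover(tiles, open_at, close_at):
    """True if consecutive tiles connect and together span the bracketed candidate.

    open_at is the index of "[[", close_at the index just past "]]".
    """
    if not tiles:
        return False
    chained = all(connects(a, b) for a, b in zip(tiles, tiles[1:]))
    return chained and tiles[0].start <= open_at and tiles[-1].end >= close_at


# Candidate: VBD PRP [[ DT ADJ NN1 ]] PRP NN1  ("[[" at index 2, "]]" at index 6)
tiles = [Tile(1, 4), Tile(3, 6), Tile(5, 8)]  # PRP [[ DT / DT ADJ NN1 / NN1 ]] PRP
print(is_cover(tiles, open_at=2, close_at=7))
print(connects(Tile(1, 3), Tile(4, 7)))  # index 3 is not matched, so there is a gap
```

The chained inequality `t1.start < t2.start <= t1.end < t2.end` encodes the three conditions on the slide in order; `t2.start == t1.end` is the case where the tiles touch without overlapping.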

![Cover Graph](https://present5.com/presentation/e85905e39de39d096cddfada9a6c7dfa/image-23.jpg)

Cover Graph
- Figure: cover graph for the candidate VBD PRP [[ DT ADJ NN1 ]] PRP NN1; tiles are the nodes, linked between a START and an END node, so each START-to-END path is a cover

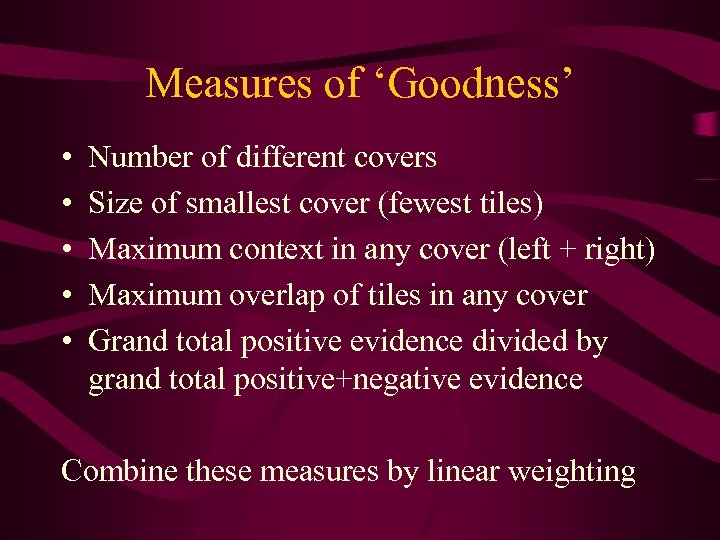

Measures of ‘Goodness’
- Number of different covers
- Size of smallest cover (fewest tiles)
- Maximum context in any cover (left + right)
- Maximum overlap of tiles in any cover
- Grand total positive evidence divided by grand total positive + negative evidence

Combine these measures by linear weighting

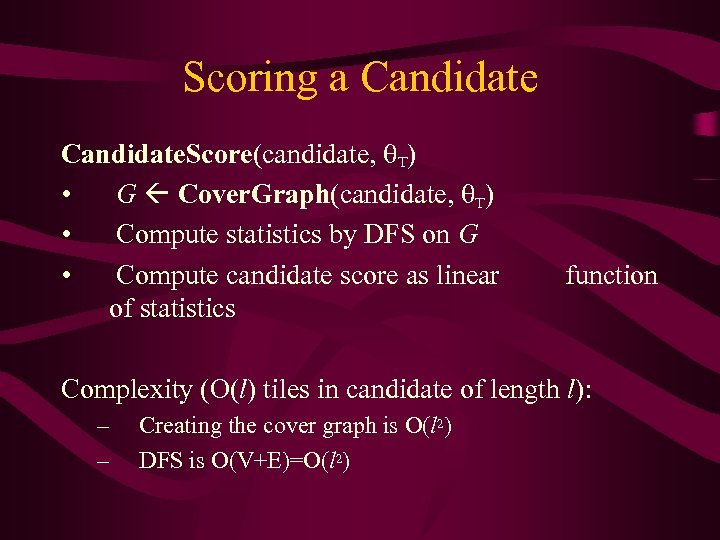

Scoring a Candidate
- CandidateScore(candidate, T):
  - G ← CoverGraph(candidate, T)
  - Compute statistics by DFS on G
  - Compute the candidate score as a linear function of the statistics
- Complexity (O(l) tiles in a candidate of length l):
  - Creating the cover graph is O(l²)
  - DFS is O(V+E) = O(l²)
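Because every connection moves strictly to the right, the cover graph is acyclic, so a memoised DFS can count START-to-END paths without enumerating them. This sketch computes two of the goodness measures (number of covers and size of the smallest cover) and combines them linearly. The weights are hypothetical, chosen on the assumption that covers with fewer, longer tiles are stronger evidence; tiles are token spans `[start, end)` as in a hypothetical `Tile` type.

```python
from functools import lru_cache
from typing import NamedTuple


class Tile(NamedTuple):
    start: int  # first candidate token the tile matches
    end: int    # one past the last matched token


def connects(t1, t2):
    return t1.start < t2.start <= t1.end < t2.end


def cover_graph(tiles, open_at, close_at):
    """Adjacency lists; every path from START to END is a cover."""
    graph = {"START": [t for t in tiles if t.start <= open_at]}
    for t in tiles:
        graph[t] = [u for u in tiles if connects(t, u)]
        if t.end >= close_at:
            graph[t].append("END")
    return graph


def cover_statistics(graph):
    """(number of covers, fewest tiles in a cover) via memoised DFS."""
    @lru_cache(maxsize=None)
    def visit(node):
        # Returns (paths from node to END, fewest tiles after node on such a path).
        if node == "END":
            return 1, 0
        paths, fewest = 0, float("inf")
        for successor in graph[node]:
            p, f = visit(successor)
            paths += p
            fewest = min(fewest, f + (successor != "END"))
        return paths, fewest
    return visit("START")


# Hypothetical weights for the linear combination of statistics.
W_COVERS, W_SMALLEST = 1.0, -0.5


def candidate_score(tiles, open_at, close_at):
    covers, smallest = cover_statistics(cover_graph(tiles, open_at, close_at))
    if covers == 0:
        return 0.0
    return W_COVERS * covers + W_SMALLEST * smallest


# Candidate VBD PRP [[ DT ADJ NN1 ]] PRP NN1: "[[" at index 2, "]]" ends at 7.
tiles = [Tile(1, 4), Tile(3, 6), Tile(5, 8), Tile(1, 7)]
print(cover_statistics(cover_graph(tiles, 2, 7)))
print(candidate_score(tiles, 2, 7))
```

With memoisation each node and edge is processed once, in line with the O(V+E) bound above. The example tiles admit three covers, the smallest being the single tile spanning tokens 1 to 6.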

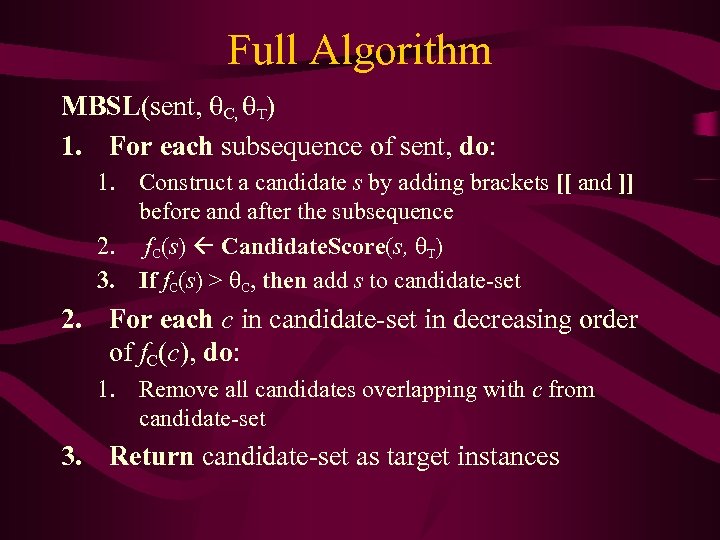

Full Algorithm: MBSL(sent, C, T)
1. For each subsequence of sent:
   1. Construct a candidate s by adding brackets [[ and ]] before and after the subsequence
   2. `f_C(s)` ← CandidateScore(s, T)
   3. If `f_C(s)` > C, add s to candidate-set
2. For each c in candidate-set, in decreasing order of `f_C(c)`:
   1. Remove all other candidates that overlap with c from candidate-set
3. Return candidate-set as the target instances
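Step 2 is a greedy selection: processing candidates in decreasing score order and deleting the ones that overlap is the same as keeping each candidate unless a higher-scoring candidate that was already kept overlaps it. A minimal sketch, with a hypothetical `score(i, j)` callback standing in for CandidateScore and `threshold` playing the role of C:

```python
def mbsl(sentence_length, score, threshold):
    """Greedy MBSL selection over all subsequences of a sentence.

    score(i, j) is a hypothetical stand-in for CandidateScore on the candidate
    that brackets tokens i..j-1 with [[ and ]].
    """
    candidates = []
    for i in range(sentence_length):
        for j in range(i + 1, sentence_length + 1):
            s = score(i, j)
            if s > threshold:
                candidates.append((s, i, j))
    # Highest score first; a candidate survives only if no survivor overlaps it.
    candidates.sort(key=lambda c: c[0], reverse=True)
    kept = []
    for s, i, j in candidates:
        if all(j <= a or b <= i for _, a, b in kept):
            kept.append((s, i, j))
    return sorted((i, j) for _, i, j in kept)


# Hypothetical scores for a 7-token sentence; unlisted spans score 0.
SCORES = {(0, 3): 0.9, (2, 4): 0.8, (5, 7): 0.7, (4, 6): 0.65}
print(mbsl(7, lambda i, j: SCORES.get((i, j), 0.0), threshold=0.6))
```

Here (2, 4) and (4, 6) both pass the threshold but are dropped because they overlap higher-scoring candidates, leaving [(0, 3), (5, 7)].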

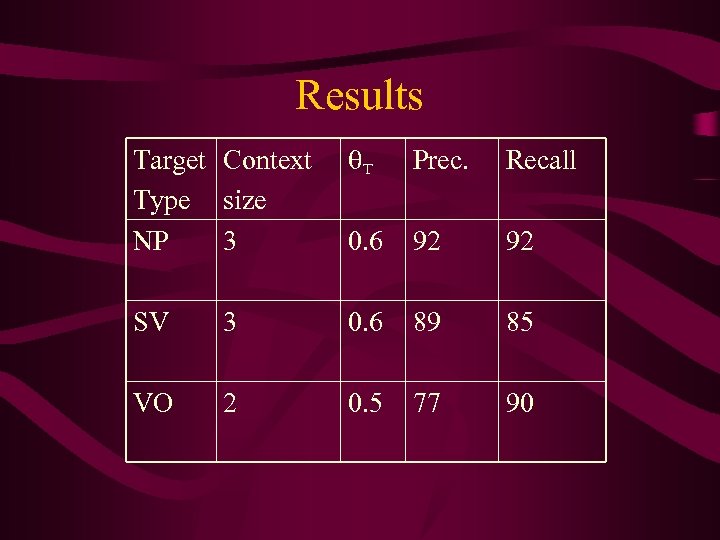

Results

| Target type | Context size | T | Prec. | Recall |
|---|---|---|---|---|
| NP | 3 | 0.6 | 92 | 92 |
| SV | 3 | 0.6 | 89 | 85 |
| VO | 2 | 0.5 | 77 | 90 |
