37148d2b37db3b7615d539cc0d1d0152.ppt

- Number of slides: 37

Switching to on-line evaluations for courses at UC Berkeley

- Daphne Ogle, Lead Design, UC Berkeley
- Aaron Zeckoski, Lead Developer, Unicon

June 10-15, 2012. Growing Community; Growing Possibilities

Speakers

- Daphne Ogle
  - Berkeley Design Lead
  - OAE since 2010, Fluid Project, Sakai since 2004
- Aaron Zeckoski
  - Unicon Development Lead
  - Evaluation System original lead (2006), Evals software since 2001, Sakai since 2004

2012 Jasig Sakai Conference

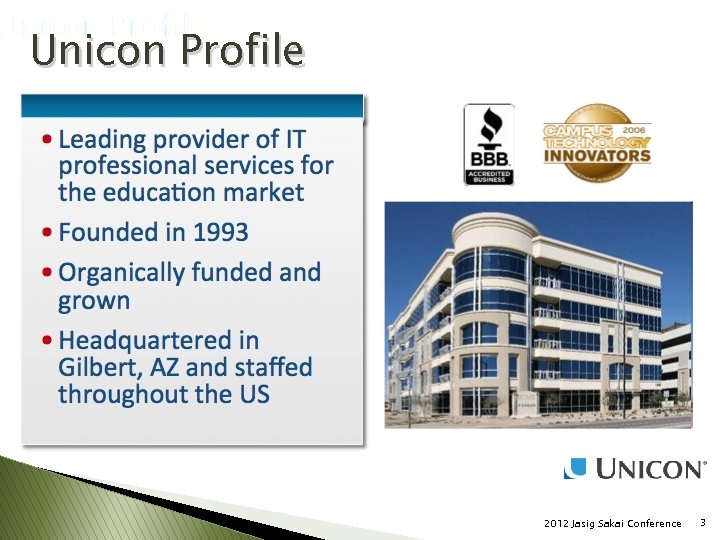

Unicon Profile

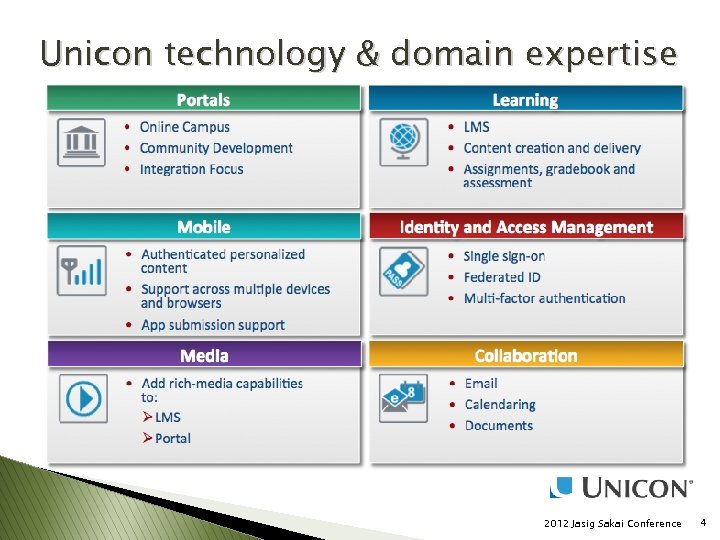

Unicon technology & domain expertise

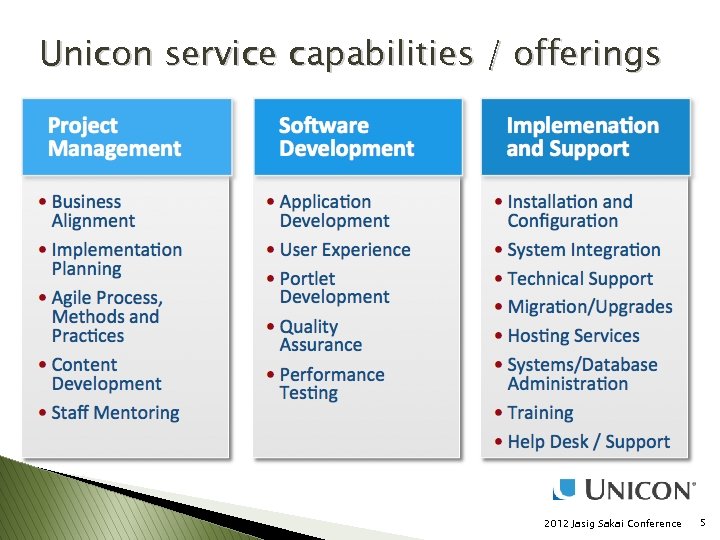

Unicon service capabilities / offerings

Today’s topics

- Intro to UC Berkeley on-line course evaluation project
- Demo: Updates to CLE Evaluation System for Spring pilot
- Future work
- What we learned
- Questions & Answers

Introduction to online evaluations project at UC Berkeley: Overall project initiative

Online evaluations for courses

- New shared questions
  - campus-wide
  - instructional format-based (14 formats)
  - piloted first
- On-line
  - pilot CLE Evaluation System
  - development to meet Berkeley needs & overall system improvement
  - Berkeley & Unicon collaboration

Goals of initiative

- Rapid access to useful information to help faculty improve their courses and their teaching effectiveness
- Improvements to the quality & integrity of data that the campus uses to understand and recognize teaching contributions
- 21st century tools for staff to perform their jobs efficiently and free up time for direct service to students and faculty
- High quality information for students about courses and instructors at Berkeley

Cynthia Schrager, Assistant Vice Provost

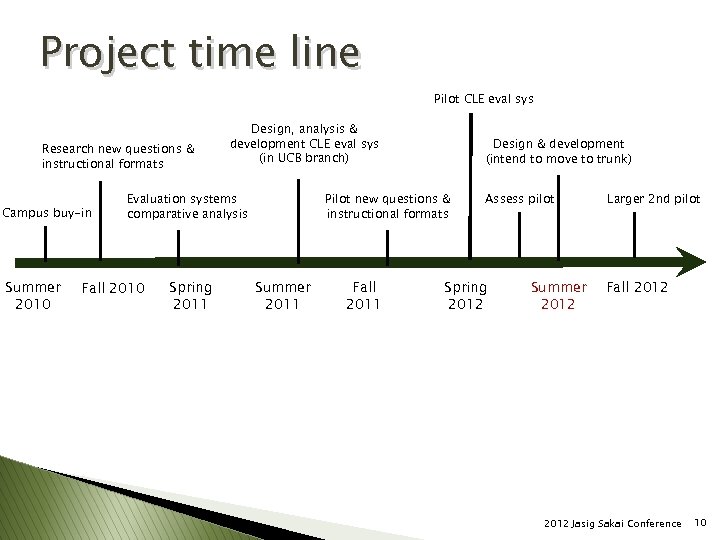

Project time line

- Terms shown: Summer 2010, Fall 2010, Spring 2011, Summer 2011, Fall 2011, Spring 2012, Summer 2012, Fall 2012
- Activities shown (in extracted order):
  - Pilot CLE eval sys
  - Research new questions & instructional formats
  - Campus buy-in
  - Design, analysis & development of CLE eval sys (in UCB branch)
  - Evaluation systems comparative analysis
  - Design & development (intend to move to trunk)
  - Pilot new questions & instructional formats
  - Assess pilot
  - Larger 2nd pilot

Introduction to online evaluations project at UC Berkeley: Spring Pilot

Goals of spring pilot

- Establish baseline response rate
- Assess CLE evaluation system
  - technical long-term sustainability
  - user experience
- Build knowledge of CLE evaluation system
- Build knowledge of infrastructure needs

Spring pilot success criteria

- Establish baseline response rate
- Collect data on function & experience
- User experience meets or exceeds previous
- Transparency across campus
- Elicit higher quality response
- Gain understanding to inform Fall design & development effort

Spring pilot makeup

- 8 departments
- 61 courses (primary & secondary)
- 2300+ enrollments

Demo: Spring pilot

Spring pilot development

- Significant time understanding system
- Overall model & process to follow
  - “uber” template
  - self-service
- Focus on student & instructor experience
- System areas of focus
  - Dashboard
  - Evaluation presentation
  - Administration page
  - Notifications

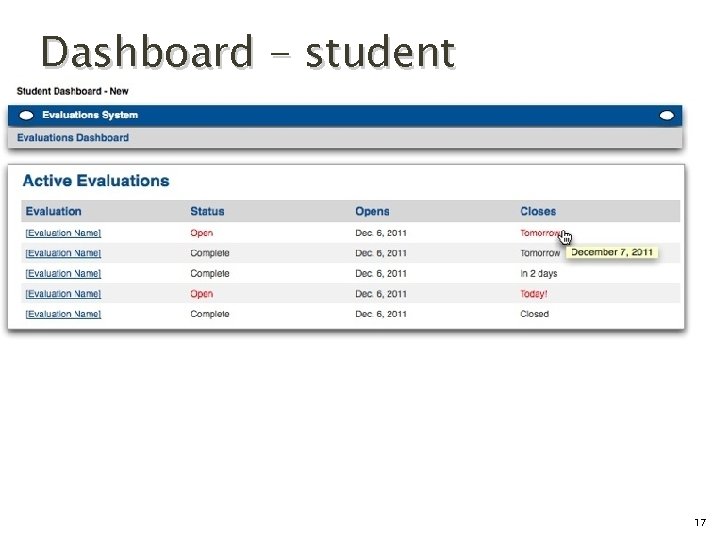

Dashboard - student

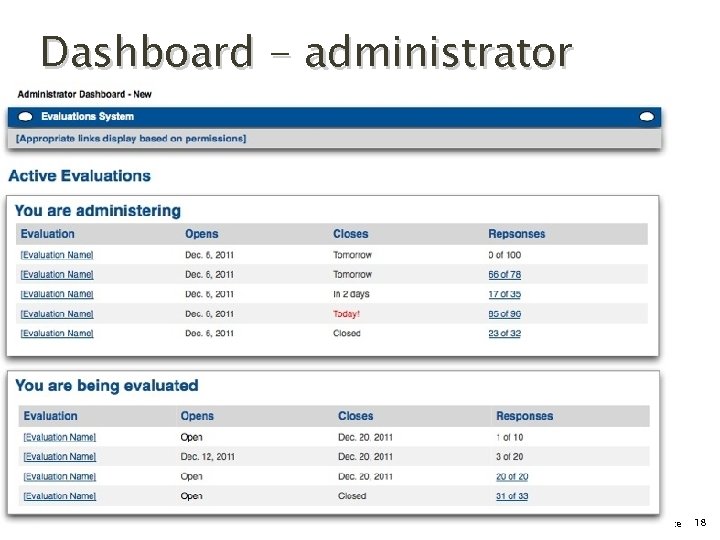

Dashboard - administrator

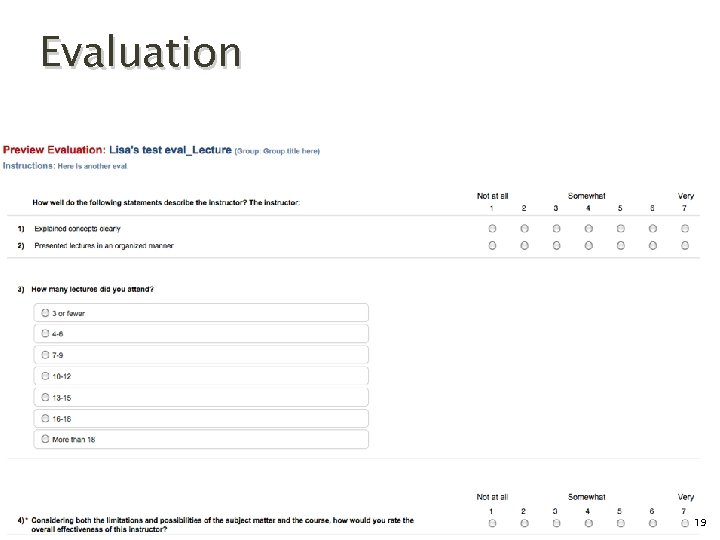

Evaluation

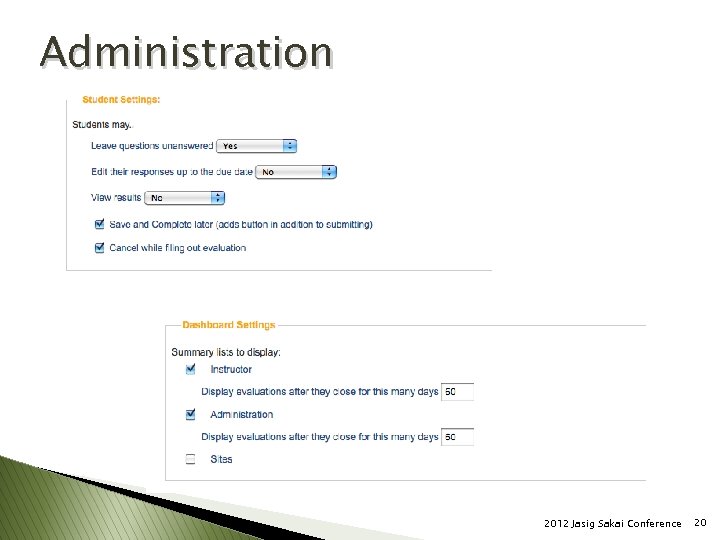

Administration

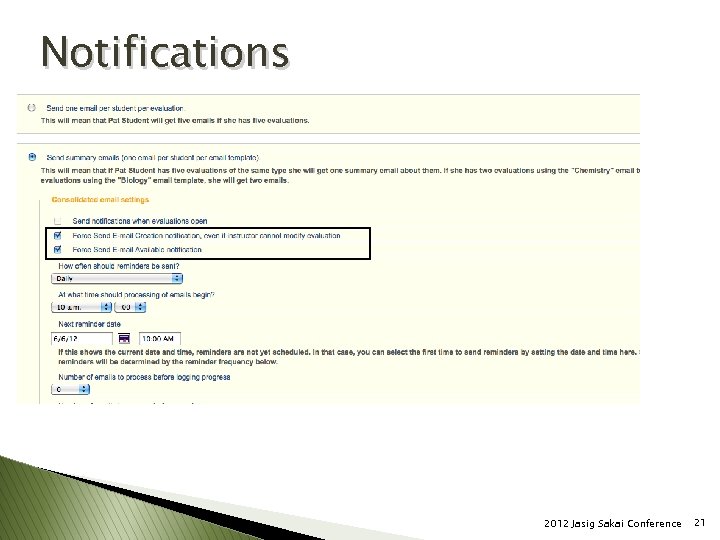

Notifications

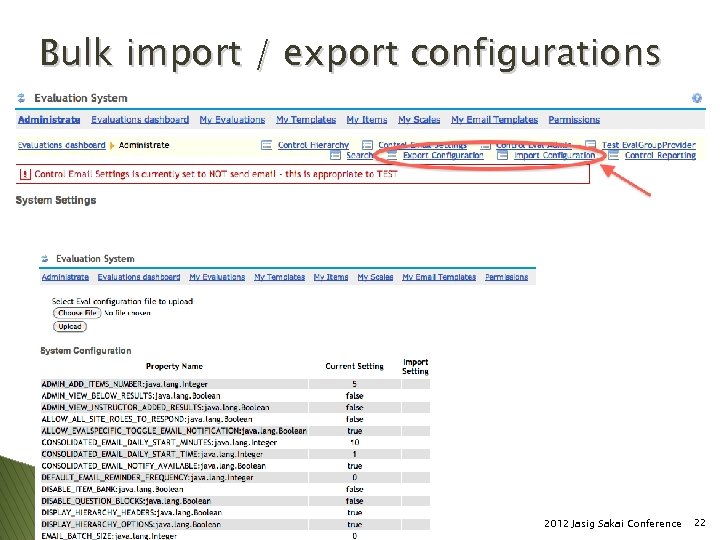

Bulk import / export configurations

Live Demo: http://berkeley-eval.unicon.net/portal

Lessons learned: Food for thought

Lessons learned

- Configurability + flexibility = Complexity
  - documentation not always up to date
  - allow significant time to “play around”
- Unfinished functionality
  - email notifications
  - options in evaluation creation
- Global tool
  - acts differently than other tools
  - where will it be exposed?

Lessons learned - cont’d

- Allow a lot of time for QA
  - see 1st bullet: lots of variables
  - QA’ing up to the last minute
- Clean data required
  - setup if not using group provider
  - site roles NOT always evaluation roles
- Communicate!
  - be transparent
  - remind users it’s a pilot

Lessons learned - cont’d

- Learn from other implementors
  - document models, workflows & implications
  - community project?
- Culture & legal requirements vary across campus
  - viewing respondents
  - roles, permissions, viewing each other’s evaluations

Future plans: Evaluation system at Berkeley

What’s next (1 of 2)?

- Feedback sessions with users on Spring pilot
- Needs analysis around results
- Move our work back into trunk
- Configuration option to hide respondents page
- UX improvements
  - results page
  - multiple instructors (Berkeley-centric)
  - add respondent link to dashboard
  - make display options clear in context
  - configuration page (information architecture)

What else is next?

- Investigation
  - support for instructional formats (Berkeley-centric)
  - give department admins access to results
  - Evalsys tool added to My Workspace automatically
  - group provider & SIS integration
  - partial OAE integration

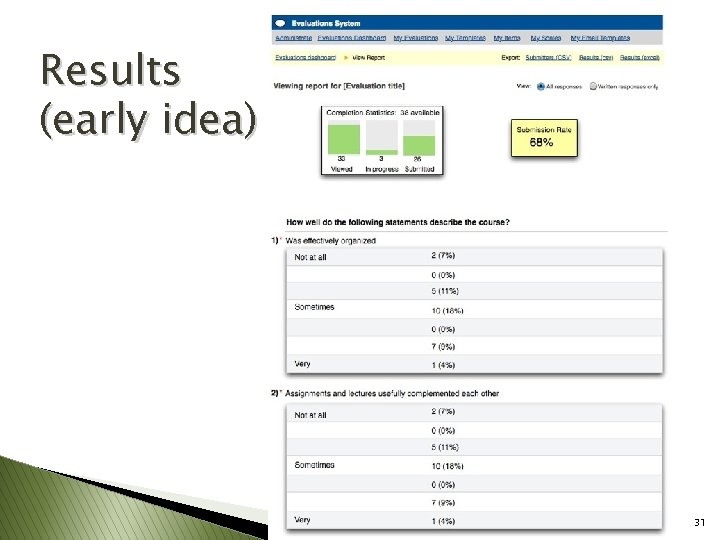

Results (early idea)

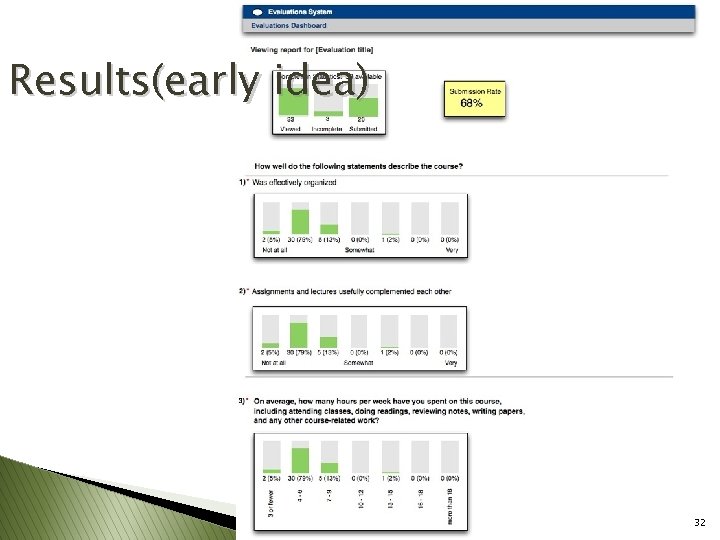

Results (early idea)

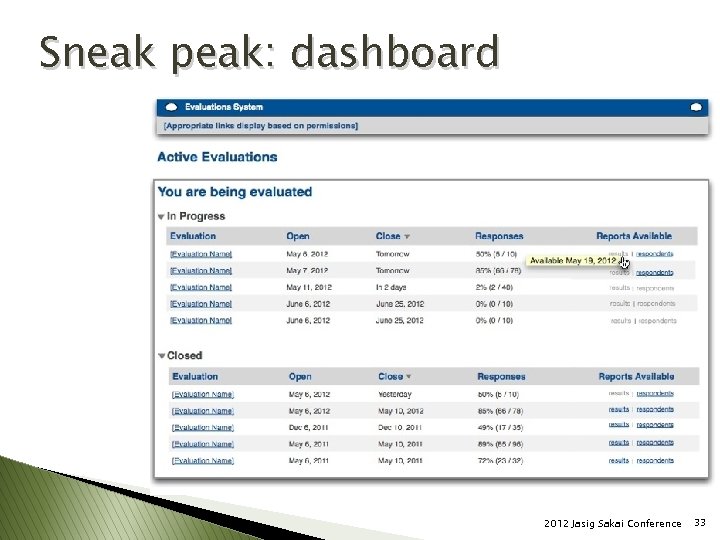

Sneak peek: dashboard

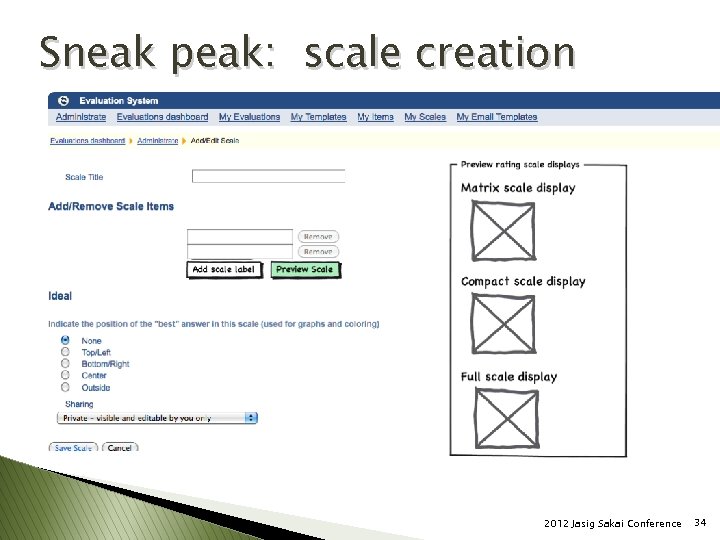

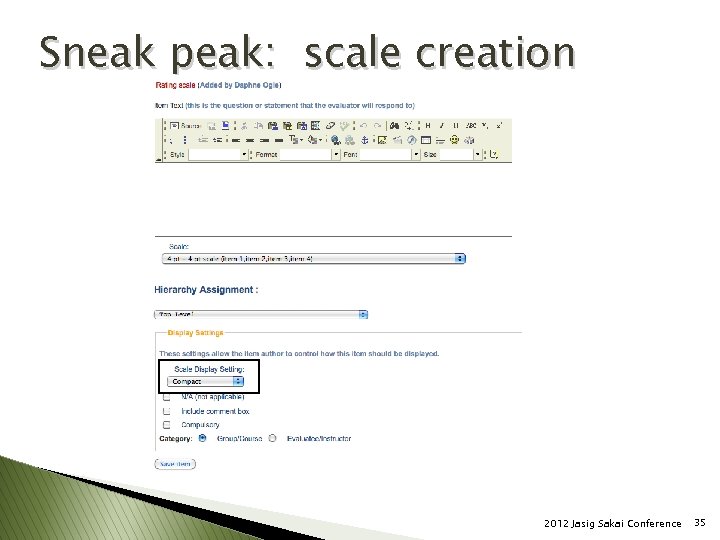

Sneak peek: scale creation

Sneak peek: scale creation

Resources

- Berkeley Confluence
  - https://confluence.media.berkeley.edu/confluence/display/EVAL/Home
- Sakai Confluence
  - https://confluence.sakaiproject.org/display/EVALSYS/Home
- Sakai Jira
  - https://jira.sakaiproject.org/browse/EVALSYS

Thank you!

- Questions, comments, discussion...