2c8414750fddfdd0deff5fd3b8c8013a.ppt

- Number of slides: 56

Supercomputing for the Masses: Killer-Apps, Parallel Mappings, Scalability and Application Lifespan
Rob Farber, Senior Scientist (PNNL) -> Visiting Scientist (ICHEC)

Killer Apps occur when personal vision matches technical capability to fulfill a market demand
• Graphics processors and games: killer apps that created a huge market

Technical capability
• Market forces evolved GPUs into massively parallel GPGPUs (General Purpose GPUs).
• 250+ million (1/4 billion) CUDA-enabled GPUs says it all!
• CUDA: put supercomputing in the hands of the masses.
  o December 1996: ASCI Red, the first teraflop supercomputer
  o Today: kids buy GPUs with flop rates comparable to systems available to scientists with supercomputer access in the mid to late 1990s.
    • GeForce 480: 1.35 TF/s peak 32-bit
    • Newegg.com: $299
Remember that Finnish kid who wrote some software to understand operating systems?
Inexpensive commodity hardware enables:
• New thinking
• A large educated base of developers

A perfect storm of opportunities and technology
(Summary of Farber, Scientific Computing, “Realizing the Benefits of Affordable Teraflop-capable Hardware”)
• Multi-threaded software is a must-have because manufacturers were forced to move to multi-core CPUs
  o The failure of Dennard’s scaling laws meant processor manufacturers had to add cores to increase performance and entice customers
• Multi-core is disruptive to single-threaded legacy apps
  o Businesses and research efforts will not benefit from new hardware unless they invest in multi-threaded software
  o Lack of investment risks stagnation and losing to the competition
• Competition is fierce, the new technology is readily available and it is inexpensive!
  o Which software and models? Look to those that are:
    • Widely adopted and have withstood the test of time
    • Look at CUDA and the CUDA model

CUDA is not the only game in town (but will be a focus of this talk)
• Android/iPhone: mobile is huge
  o 5 weeks, 3.5M downloads, over $100K net
  o (2008) (2009) Augmented Reality
The technical capability is there … you supply the vision!
[Image caption: Jen-Hsun with RTT at 2009 GTC]

CUDA is a game changer!
• CUDA enables orders of magnitude faster apps:
  o 10x can make computational workflows more interactive (even poorly performing GPU apps are useful).
  o 100x is disruptive and has the potential to fundamentally affect scientific research by removing time-to-discovery barriers.
  o 1000x and greater achieved through the use of the NVIDIA SFU (Special Function Units) or multiple GPUs … Whooo Hoooo!
• In a few slides: examine CUDA + Graphics = Wow!

CUDA was adopted amazingly fast!
• February 2007: The initial CUDA SDK was made public.
• Now: CUDA-based GPU Computing is part of the curriculum at over 360 universities.
  o Including MIT, Harvard, Cambridge, Oxford, the Indian Institutes of Technology, National Taiwan University, and the Chinese Academy of Sciences.

The numbers have changed!
http://e-ditionsbyfry.com/Olive/ODE/SCISupp/Default.aspx?href=SCI/2010/11/03&pageno=06&view=document

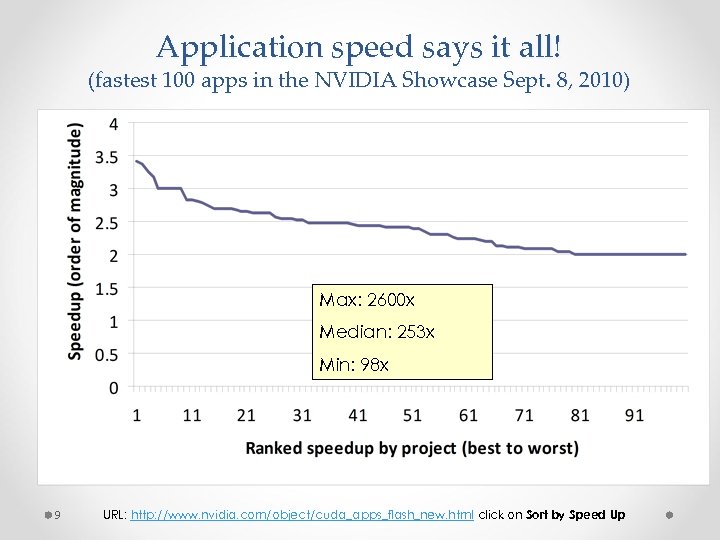

Application speed says it all! (fastest 100 apps in the NVIDIA Showcase, Sept. 8, 2010)
• Max: 2600x
• Median: 253x
• Min: 98x
URL: http://www.nvidia.com/object/cuda_apps_flash_new.html (click on "Sort by Speed Up")

Orders of magnitude increased performance in an extraordinary number of fields
• Spanning a wide range of computational, data-driven, and real-time applications:
  o Computational finance
  o Medical
  o Quantum chemistry simulations
  o Molecular modeling and electrostatic potentials
  o Diffusion
  o Fluid flow
  o Systems of differential equations
  o Data-driven problems such as microscopy
• Many can be considered killer apps in their field.

An example: the Metropolis algorithm (300x-1000x)
• Among the ten algorithms that have had the greatest influence on the development and practice of science and engineering in the 20th century (Beichl, Sullivan, 2000).
• Plays a significant role in statistics, econometrics, physics and computing science.
  o For some applications, MCMC simulation is the only known general approach for providing a solution within a reasonable time (Diaconis, Saloff-Coste, 1995).
• CUDA version reported to be 300x to 1000x faster (Alerstam, Svensson, Engels, 2008).

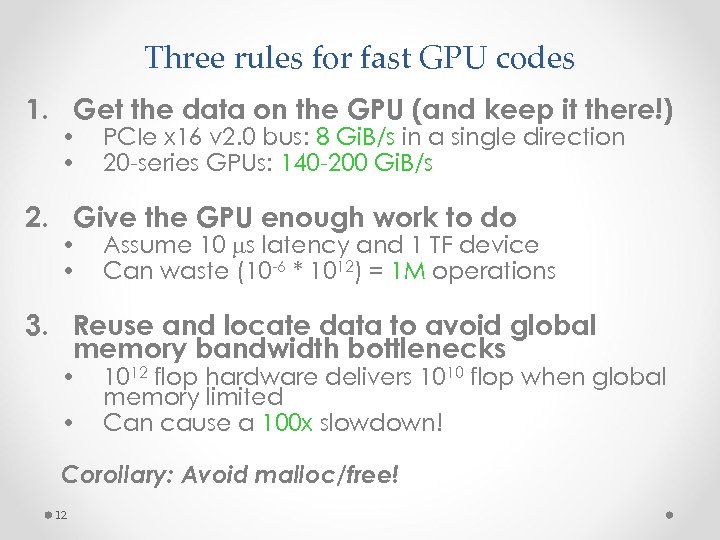

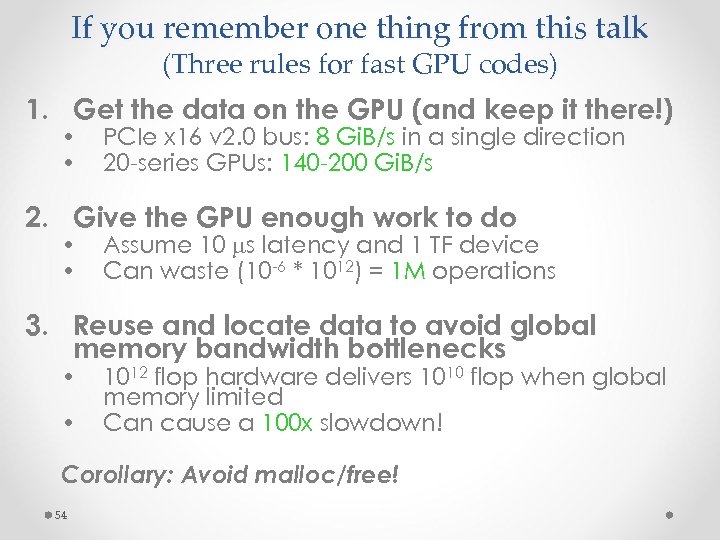

Three rules for fast GPU codes
1. Get the data on the GPU (and keep it there!)
  • PCIe x16 v2.0 bus: 8 GiB/s in a single direction
  • 20-series GPUs: 140-200 GiB/s
2. Give the GPU enough work to do
  • Assume 10 μs latency and 1 TF device
  • Can waste ($10^{-6} \times 10^{12}$) = 1M operations
3. Reuse and locate data to avoid global memory bandwidth bottlenecks
  • $10^{12}$ flop hardware delivers $10^{10}$ flop when global memory limited
  • Can cause a 100x slowdown!
Corollary: Avoid malloc/free!

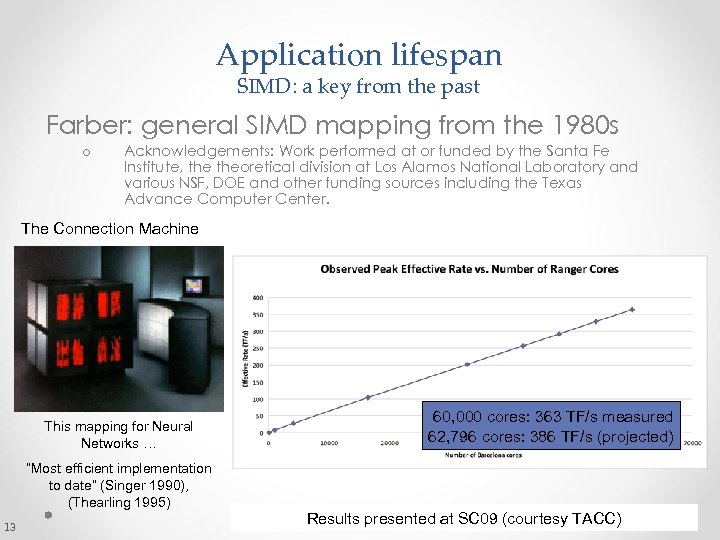

Application lifespan
SIMD: a key from the past
• Farber: general SIMD mapping from the 1980s
  o The Connection Machine: this mapping for Neural Networks … “Most efficient implementation to date” (Singer 1990), (Thearling 1995)
• Results presented at SC09 (courtesy TACC)
  o 60,000 cores: 363 TF/s measured
  o 62,796 cores: 386 TF/s (projected)
Acknowledgements: Work performed at or funded by the Santa Fe Institute, the Theoretical Division at Los Alamos National Laboratory, and various NSF, DOE and other funding sources including the Texas Advanced Computing Center.

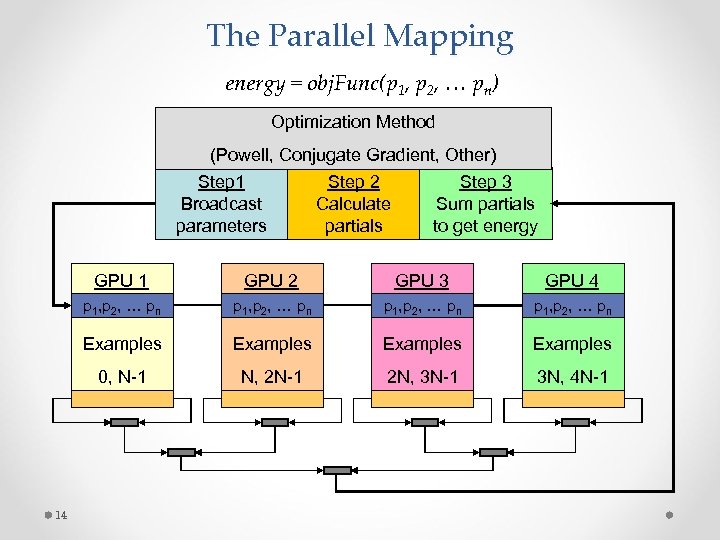

The Parallel Mapping
energy = objFunc(p1, p2, … pn)
Optimization Method (Powell, Conjugate Gradient, Other)
• Step 1: Broadcast parameters p1, p2, … pn
• Step 2: Calculate partials
• Step 3: Sum partials to get energy
Examples assigned to each device:
• GPU 1: 0, N-1
• GPU 2: N, 2N-1
• GPU 3: 2N, 3N-1
• GPU 4: 3N, 4N-1
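
The three steps above can be sketched in plain C++. This is a minimal illustration under stated assumptions, not the code from the talk: the workers are simulated by a sequential loop instead of separate GPUs, and the objective (squared error of a straight-line fit) and the names `Example`, `partialEnergy` and `objFunc` as used here are hypothetical stand-ins.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical training example for a line fit: y ~ p[0] + p[1] * x.
struct Example { double x, y; };

// Step 2: one worker computes its partial sum over examples [begin, end).
double partialEnergy(const std::vector<double>& p,
                     const std::vector<Example>& ex,
                     std::size_t begin, std::size_t end) {
    double sum = 0.0;
    for (std::size_t i = begin; i < end; ++i) {
        const double err = p[0] + p[1] * ex[i].x - ex[i].y;
        sum += err * err;
    }
    return sum;
}

// Steps 1 and 3: every worker sees the same parameters p (the "broadcast"),
// and the partial sums are added to give the energy. nWorkers must be > 0.
double objFunc(const std::vector<double>& p,
               const std::vector<Example>& ex,
               std::size_t nWorkers) {
    const std::size_t chunk = (ex.size() + nWorkers - 1) / nWorkers;
    double energy = 0.0;
    for (std::size_t w = 0; w < nWorkers; ++w) {
        // Worker w owns examples [w*chunk, (w+1)*chunk), clipped to the data size.
        const std::size_t begin = std::min(w * chunk, ex.size());
        const std::size_t end = std::min(begin + chunk, ex.size());
        energy += partialEnergy(p, ex, begin, end);
    }
    return energy;
}
```

Because only the parameters travel to the workers and only one partial sum comes back from each, the communication cost is independent of the number of examples each worker holds, which is what lets the mapping scale.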

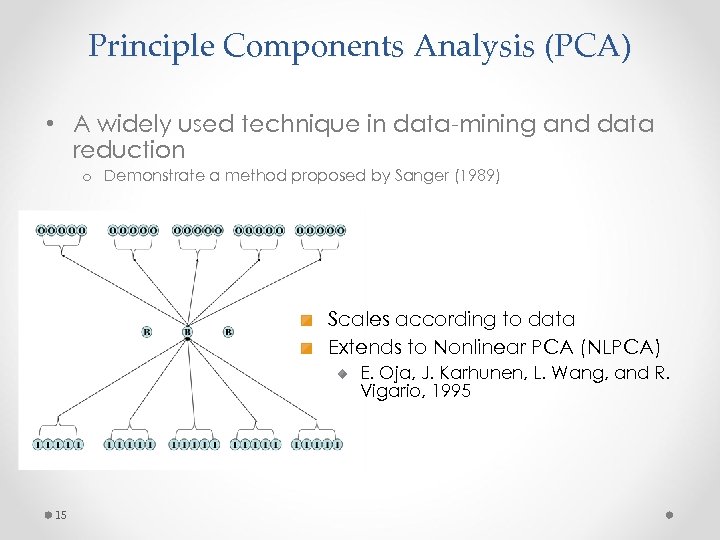

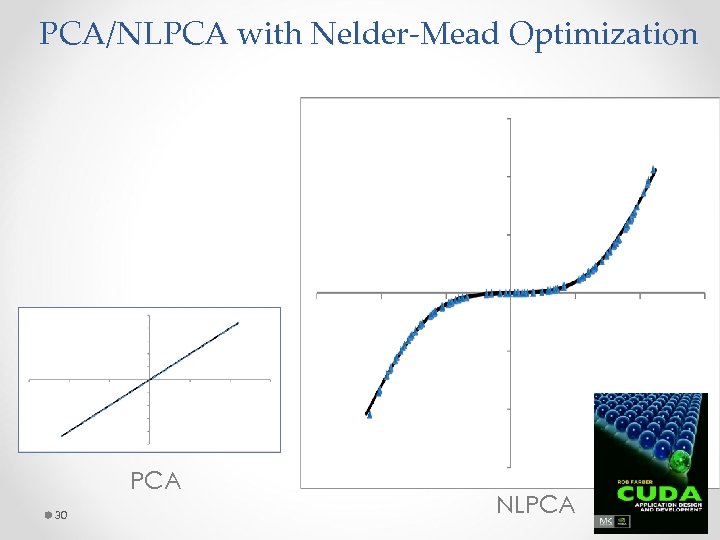

Principal Components Analysis (PCA)
• A widely used technique in data-mining and data reduction
  o Demonstrate a method proposed by Sanger (1989)
• Scales according to data
• Extends to Nonlinear PCA (NLPCA)
  o E. Oja, J. Karhunen, L. Wang, and R. Vigario, 1995

This is a general mapping (think of your own applications!)
  o Optimization
  o Locally Weighted Linear Regression (LWLR)
  o Neural Networks
  o Naive Bayes (NB)
  o Gaussian Discriminative Analysis (GDA)
  o k-means
  o Logistic Regression (LR)
  o Independent Component Analysis (ICA)
  o Expectation Maximization (EM)
  o Support Vector Machine (SVM)
  o Others: (MDS, Ordinal MDS, etcetera)

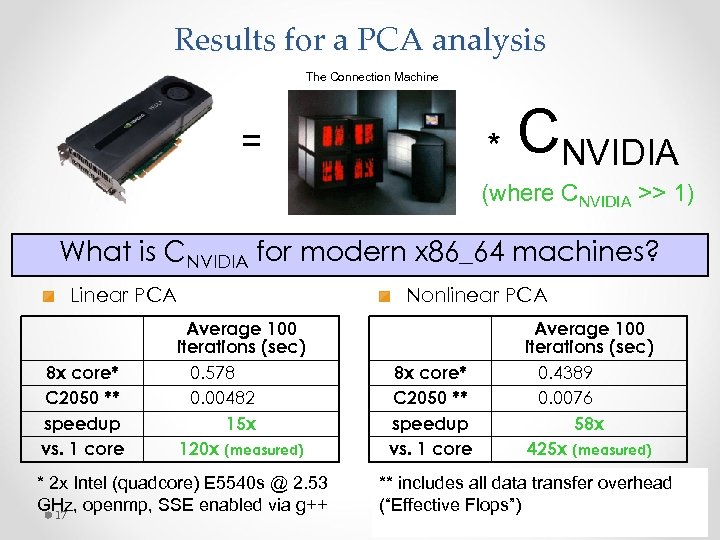

Results for a PCA analysis
The Connection Machine = * C_NVIDIA (where C_NVIDIA >> 1)
What is C_NVIDIA for modern x86_64 machines?
Tables: Nonlinear PCA, Linear PCA (columns: 8x core*, C2050**, speedup vs. 1 core)
• Average 100 iterations (sec): 0.578, 0.00482; speedups 15x, 120x (measured)
• Average 100 iterations (sec): 0.4389, 0.0076; speedups 58x, 425x (measured)
* 2x Intel (quadcore) E5540s @ 2.53 GHz, openmp, SSE enabled via g++
** includes all data transfer overhead (“Effective Flops”)

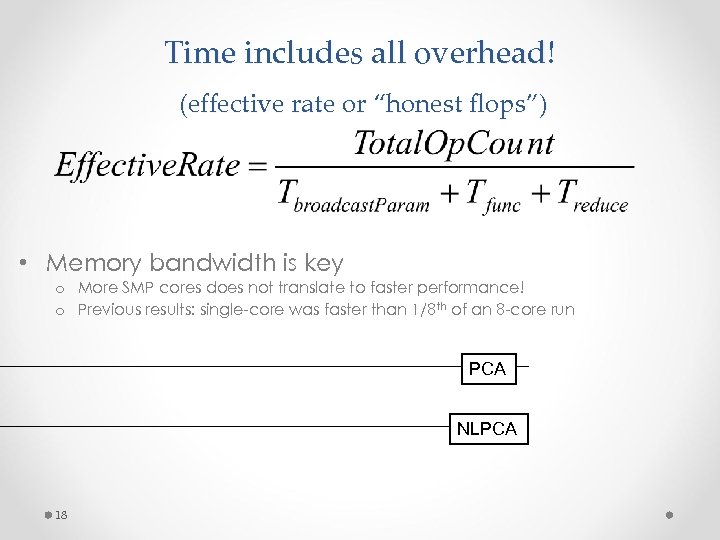

Time includes all overhead! (effective rate or “honest flops”)
• Memory bandwidth is key
  o More SMP cores do not translate to faster performance!
  o Previous results: single-core was faster than 1/8th of an 8-core run
[Panels: PCA, NLPCA]

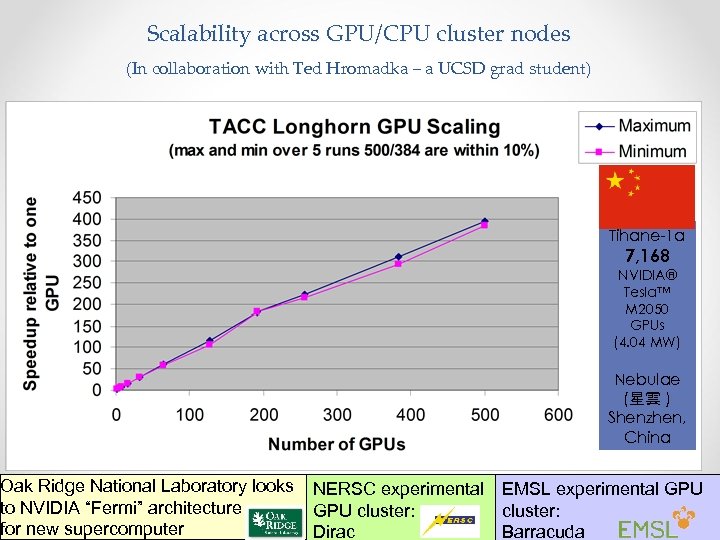

Scalability across GPU/CPU cluster nodes
(In collaboration with Ted Hromadka, a UCSD grad student)
• Tianhe-1A: 7,168 NVIDIA® Tesla™ M2050 GPUs (4.04 MW)
• Nebulae (星雲), Shenzhen, China
• Oak Ridge National Laboratory looks to NVIDIA “Fermi” architecture for new supercomputer
• NERSC experimental GPU cluster: Dirac
• EMSL experimental GPU cluster: Barracuda

Looking into my crystal ball
I predict long life for GPGPU applications
• Efficient CUDA codes will stay around
  o SIMD/SPMD/MIMD mappings translate well to new architectures
  o CUDA is an excellent way to create these codes
  o Previous SIMD example is still solving important problems
Will these applications always be written in CUDA?
Data-parallel extensions are hot!

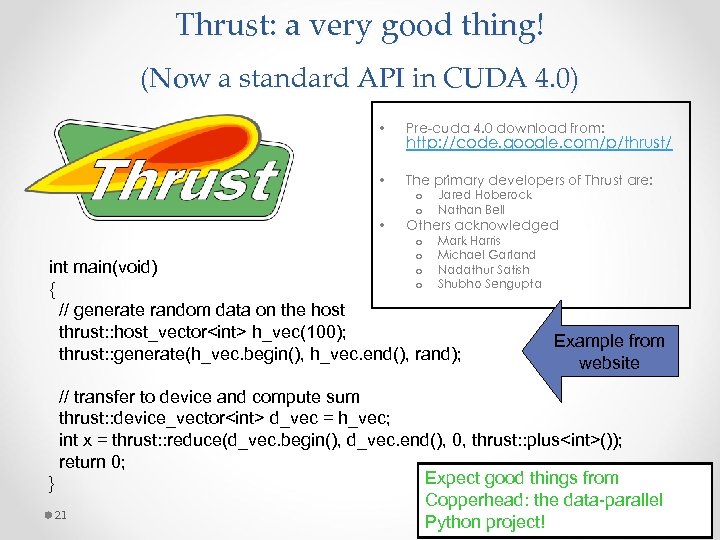

Thrust: a very good thing! (Now a standard API in CUDA 4.0)
• Pre-CUDA 4.0 download from: http://code.google.com/p/thrust/
• The primary developers of Thrust are:
  o Jared Hoberock
  o Nathan Bell
• Others acknowledged:
  o Mark Harris
  o Michael Garland
  o Nadathur Satish
  o Shubho Sengupta
Example from website:

    #include <thrust/host_vector.h>
    #include <thrust/device_vector.h>
    #include <thrust/generate.h>
    #include <thrust/reduce.h>
    #include <thrust/functional.h>
    #include <cstdlib>

    int main(void)
    {
      // generate random data on the host
      thrust::host_vector<int> h_vec(100);
      thrust::generate(h_vec.begin(), h_vec.end(), rand);

      // transfer to device and compute sum
      thrust::device_vector<int> d_vec = h_vec;
      int x = thrust::reduce(d_vec.begin(), d_vec.end(), 0, thrust::plus<int>());
      return 0;
    }

Expect good things from Copperhead: the data-parallel Python project!

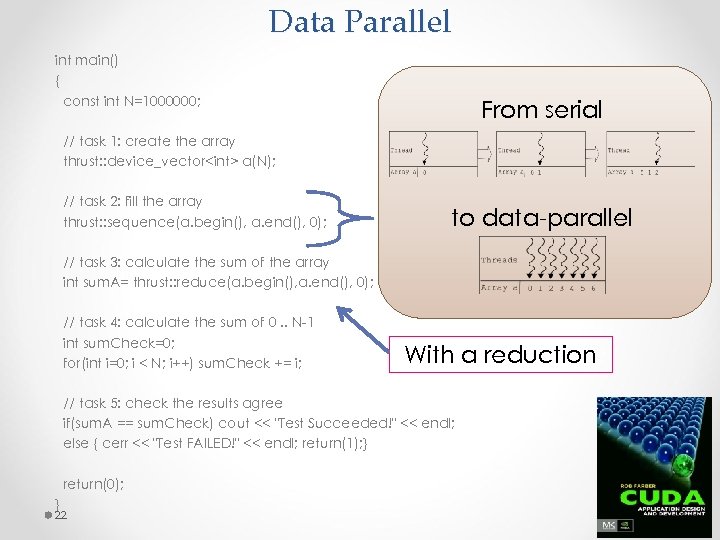

Data Parallel
From serial to data-parallel, with a reduction

    #include <iostream>
    #include <thrust/device_vector.h>
    #include <thrust/sequence.h>
    #include <thrust/reduce.h>
    using namespace std;

    int main()
    {
      const int N=1000000;

      // task 1: create the array
      thrust::device_vector<int> a(N);

      // task 2: fill the array
      thrust::sequence(a.begin(), a.end(), 0);

      // task 3: calculate the sum of the array
      // (64-bit accumulator: the sum of 0..N-1 exceeds the range of a 32-bit int)
      long long sumA = thrust::reduce(a.begin(), a.end(), 0LL);

      // task 4: calculate the sum of 0..N-1
      long long sumCheck=0;
      for(int i=0; i < N; i++) sumCheck += i;

      // task 5: check the results agree
      if(sumA == sumCheck) cout << "Test Succeeded!" << endl;
      else { cerr << "Test FAILED!" << endl; return(1); }

      return(0);
    }
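
For readers without a GPU at hand, the same fill-then-reduce pattern can be tried with the C++ standard library. The sketch below is a host-only analogue, not the Thrust code from the slide; the function names are hypothetical. It uses a 64-bit accumulator because the sum of 0..N-1 for N = 1,000,000 is 499,999,500,000, which does not fit in a 32-bit `int`.

```cpp
#include <numeric>
#include <vector>

// Fill a vector with 0..n-1 (the role thrust::sequence plays on the device)
// and reduce it with a 64-bit accumulator (the role of thrust::reduce).
long long sumSequence(int n) {
    std::vector<int> a(n);
    std::iota(a.begin(), a.end(), 0);
    return std::accumulate(a.begin(), a.end(), 0LL);
}

// Closed form for 0 + 1 + ... + (n-1), used as an independent check.
long long closedFormSum(long long n) {
    return n * (n - 1) / 2;
}
```

Comparing `sumSequence(1000000)` against `closedFormSum(1000000)` plays the role of task 5 on the slide.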

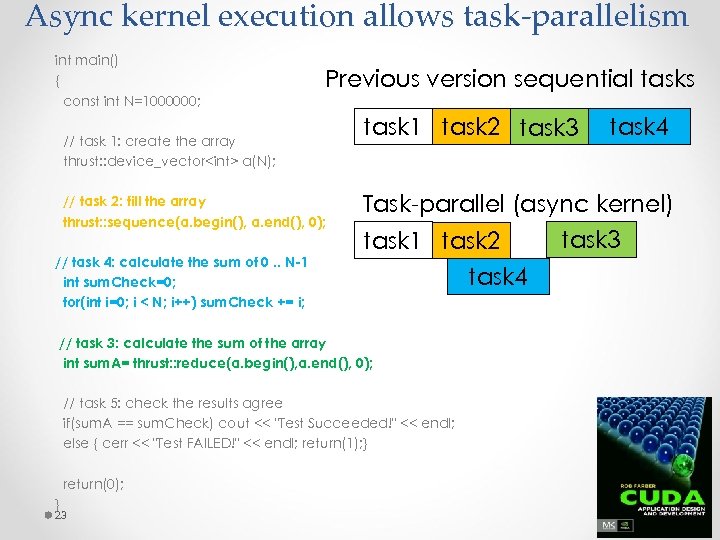

Async kernel execution allows task-parallelism
• Previous version, sequential tasks: task 1, task 2, task 3, task 4
• Task-parallel (async kernel): task 1, task 2, task 4, task 3

    #include <iostream>
    #include <thrust/device_vector.h>
    #include <thrust/sequence.h>
    #include <thrust/reduce.h>
    using namespace std;

    int main()
    {
      const int N=1000000;

      // task 1: create the array
      thrust::device_vector<int> a(N);

      // task 2: fill the array
      thrust::sequence(a.begin(), a.end(), 0);

      // task 4: calculate the sum of 0..N-1
      // (host work placed here so it can overlap with asynchronous GPU work)
      long long sumCheck=0;
      for(int i=0; i < N; i++) sumCheck += i;

      // task 3: calculate the sum of the array
      // (64-bit accumulator: the sum of 0..N-1 exceeds the range of a 32-bit int)
      long long sumA = thrust::reduce(a.begin(), a.end(), 0LL);

      // task 5: check the results agree
      if(sumA == sumCheck) cout << "Test Succeeded!" << endl;
      else { cerr << "Test FAILED!" << endl; return(1); }

      return(0);
    }

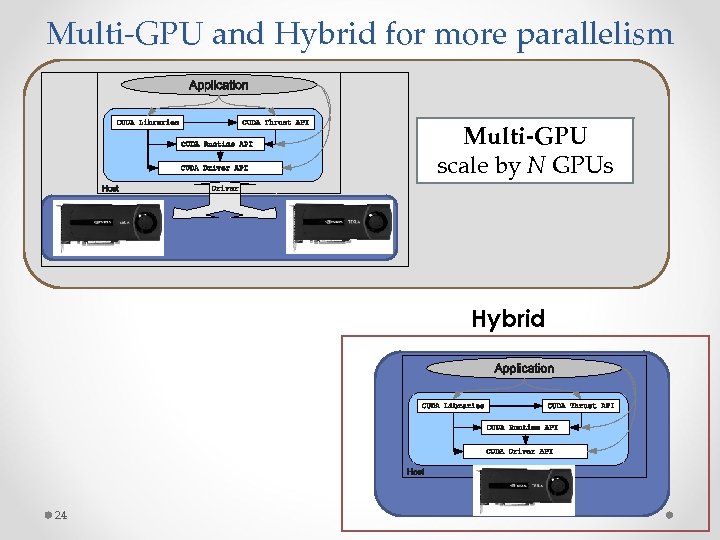

Multi-GPU and Hybrid for more parallelism
• Multi-GPU: scale by N GPUs
• Hybrid

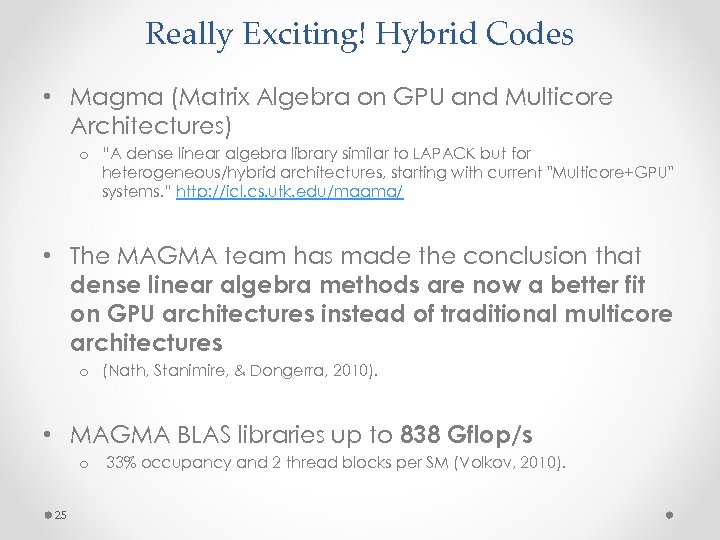

Really Exciting! Hybrid Codes
• MAGMA (Matrix Algebra on GPU and Multicore Architectures)
  o “A dense linear algebra library similar to LAPACK but for heterogeneous/hybrid architectures, starting with current "Multicore+GPU" systems.” http://icl.cs.utk.edu/magma/
• The MAGMA team has concluded that dense linear algebra methods are now a better fit for GPU architectures than for traditional multicore architectures
  o (Nath, Tomov, & Dongarra, 2010).
• MAGMA BLAS libraries up to 838 Gflop/s
  o 33% occupancy and 2 thread blocks per SM (Volkov, 2010).

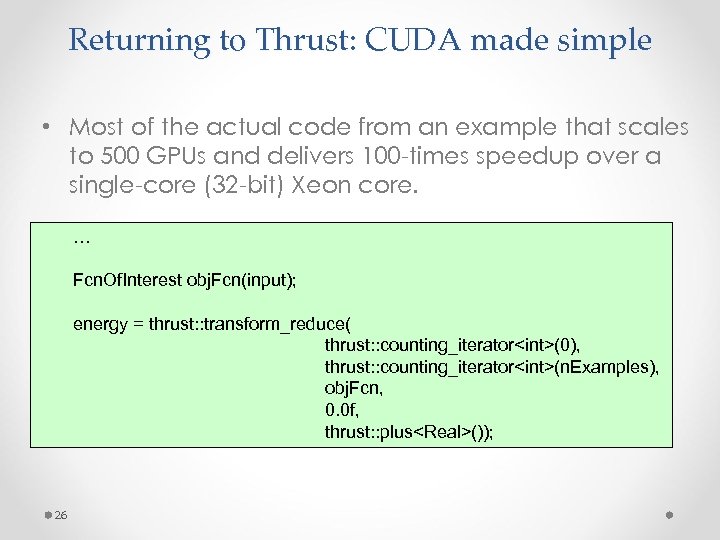

Returning to Thrust: CUDA made simple
• Most of the actual code from an example that scales to 500 GPUs and delivers a 100-times speedup over a single (32-bit) Xeon core.

    …
    FcnOfInterest objFcn(input);
    // the initial value has type Real so the sum accumulates in Real
    energy = thrust::transform_reduce(
                 thrust::counting_iterator<int>(0),
                 thrust::counting_iterator<int>(nExamples),
                 objFcn,
                 Real(0),
                 thrust::plus<Real>());

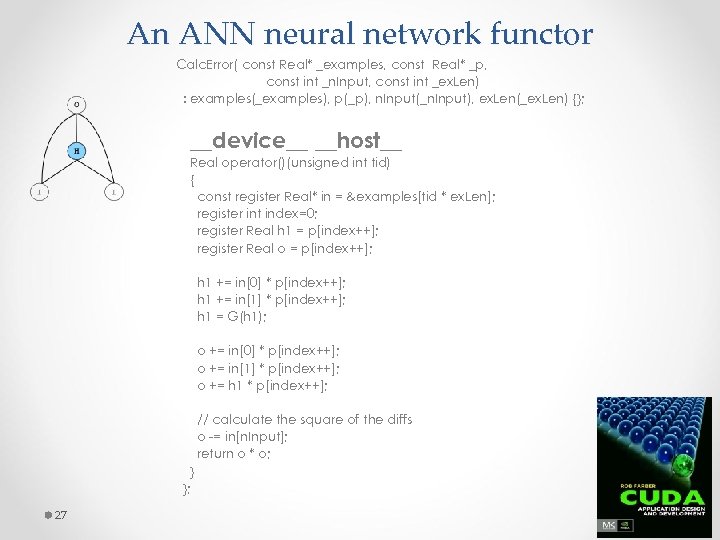

An ANN (neural network) functor

    // Returns the squared error of a small network (two inputs, one hidden
    // neuron h1, one output o) for example number tid.
    // Real and the activation function G() are defined elsewhere.
    // The struct header and member declarations were not on the slide.
    struct CalcError {
      const Real* examples;
      const Real* p;
      const int nInput;
      const int exLen;

      CalcError( const Real* _examples, const Real* _p,
                 const int _nInput, const int _exLen)
        : examples(_examples), p(_p), nInput(_nInput), exLen(_exLen) {};

      __device__ __host__
      Real operator()(unsigned int tid)
      {
        const Real* in = &examples[tid * exLen];
        int index=0;
        Real h1 = p[index++];
        Real o = p[index++];

        h1 += in[0] * p[index++];
        h1 += in[1] * p[index++];
        h1 = G(h1);

        o += in[0] * p[index++];
        o += in[1] * p[index++];
        o += h1 * p[index++];

        // calculate the square of the diffs
        o -= in[nInput];
        return o * o;
      }
    };

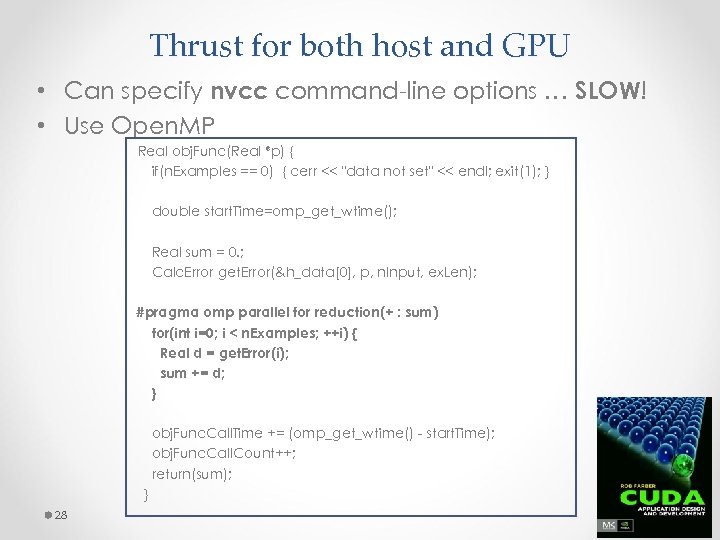

Thrust for both host and GPU
• Can specify nvcc command-line options … SLOW!
• Use OpenMP

    Real objFunc(Real *p)
    {
      if(nExamples == 0) { cerr << "data not set" << endl; exit(1); }

      double startTime=omp_get_wtime();
      Real sum = 0.;
      CalcError getError(&h_data[0], p, nInput, exLen);

    #pragma omp parallel for reduction(+ : sum)
      for(int i=0; i < nExamples; ++i) {
        Real d = getError(i);
        sum += d;
      }

      objFuncCallTime += (omp_get_wtime() - startTime);
      objFuncCallCount++;
      return(sum);
    }

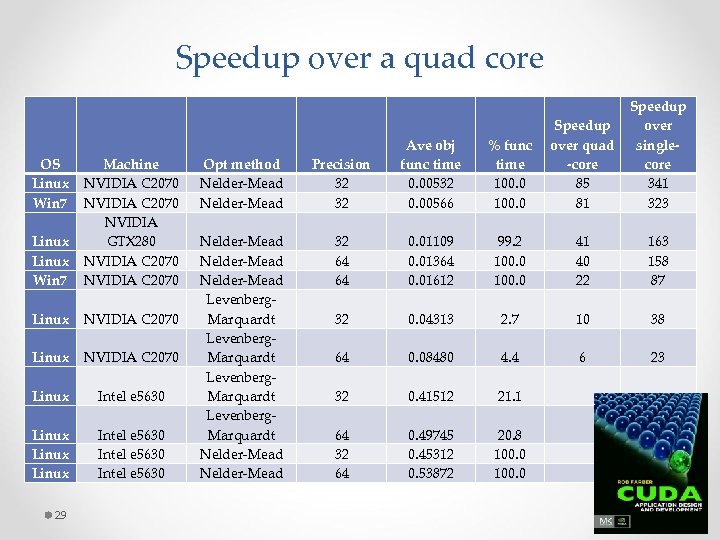

Speedup over a quad core OS Linux Win7 Machine NVIDIA C2070 NVIDIA GTX280 NVIDIA C2070 Linux Intel e5630 Linux Intel e5630 % func time 100.0 Speedup over quad-core 85 81 Speedup over single-core 341 323 Opt method Nelder-Mead Precision 32 32 Ave obj func time 0.00532 0.00566 Nelder-Mead Levenberg-Marquardt Nelder-Mead 32 64 64 0.01109 0.01364 0.01612 99.2 100.0 41 40 22 163 158 87 32 0.04313 2.7 10 38 64 0.08480 4.4 6 23 32 0.41512 21.1 64 32 64 0.49745 0.45312 0.53872 20.8 100.0

PCA/NLPCA with Nelder-Mead Optimization
[Panels: PCA, NLPCA]

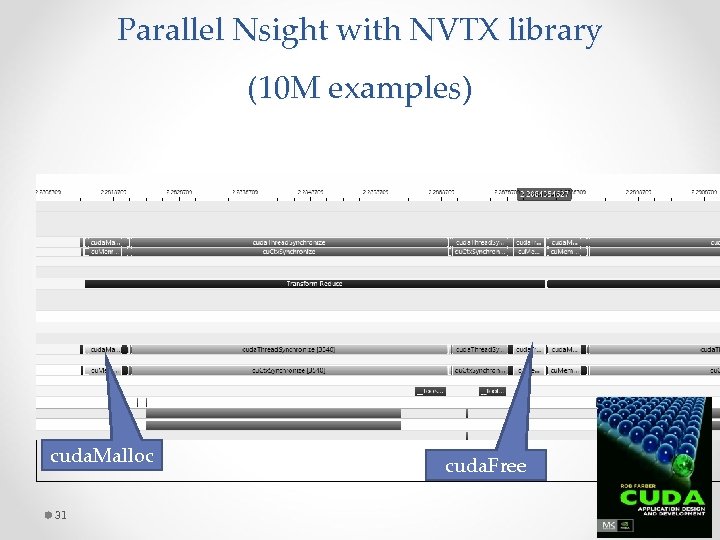

Parallel Nsight with NVTX library (10M examples)
[Timeline annotations: cudaMalloc, cudaFree]

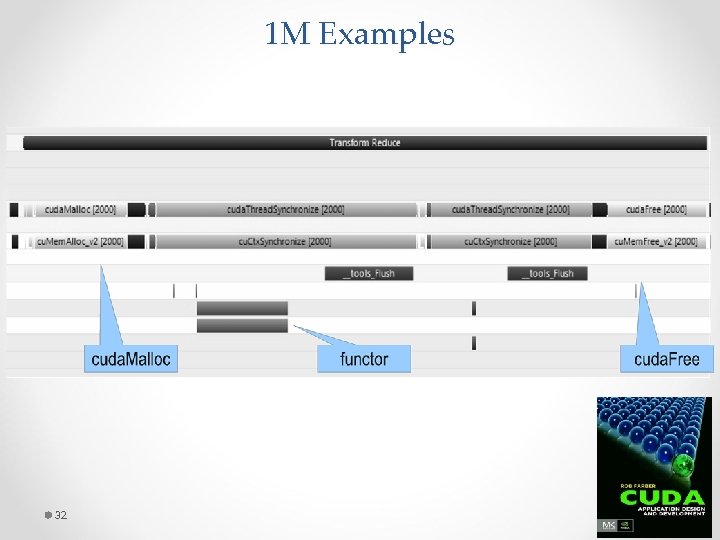

1M examples

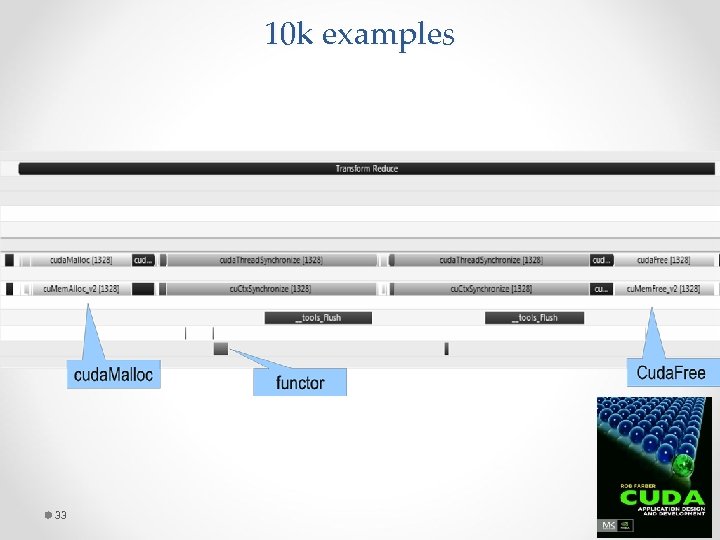

10k examples

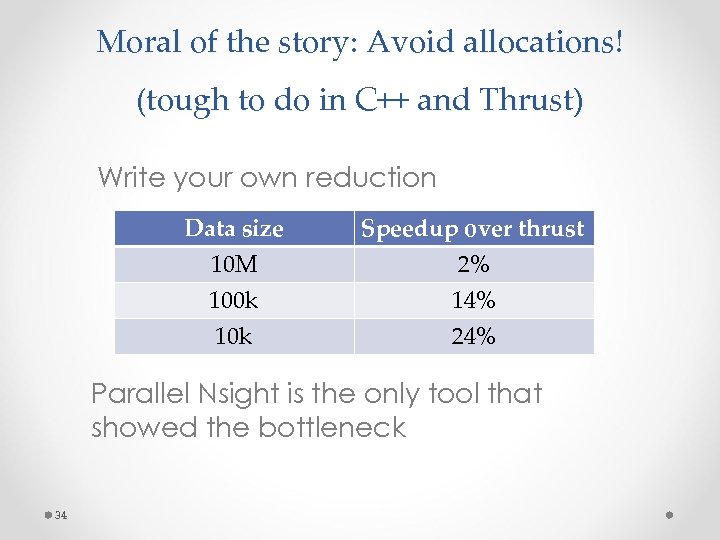

Moral of the story: Avoid allocations! (tough to do in C++ and Thrust)
• Write your own reduction

| Data size | Speedup over thrust |
|---|---|
| 10M | 2% |
| 100k | 14% |
| 10k | 24% |

• Parallel Nsight is the only tool that showed the bottleneck
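
One way to read "avoid allocations" is to keep scratch storage alive between calls instead of creating it inside every reduction. The sketch below illustrates that idea on the host only; it is not the hand-written CUDA reduction from the talk, and the class name `BlockReducer` and its `growCount` counter are hypothetical.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Two-stage sum that reuses its partial-sum buffer across calls, so repeated
// reductions of same-sized data do not allocate again.
class BlockReducer {
public:
    // blockSize must be > 0.
    double sum(const std::vector<double>& data, std::size_t blockSize) {
        const std::size_t nBlocks = (data.size() + blockSize - 1) / blockSize;
        if (partials_.size() < nBlocks) {   // grow only when the buffer is too small
            partials_.resize(nBlocks);
            ++growCount_;
        }
        // Stage 1: one partial sum per block.
        for (std::size_t b = 0; b < nBlocks; ++b) {
            const std::size_t begin = b * blockSize;
            const std::size_t end = std::min(begin + blockSize, data.size());
            double s = 0.0;
            for (std::size_t i = begin; i < end; ++i) s += data[i];
            partials_[b] = s;
        }
        // Stage 2: combine the partial sums.
        double total = 0.0;
        for (std::size_t b = 0; b < nBlocks; ++b) total += partials_[b];
        return total;
    }

    // Number of times the scratch buffer had to grow.
    std::size_t growCount() const { return growCount_; }

private:
    std::vector<double> partials_;
    std::size_t growCount_ = 0;
};
```

An optimizer calls the objective function many times on data of the same size, so the buffer grows on the first call only. The table above suggests why this matters most for small data sizes, where the fixed cost of allocation is a larger share of each call.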

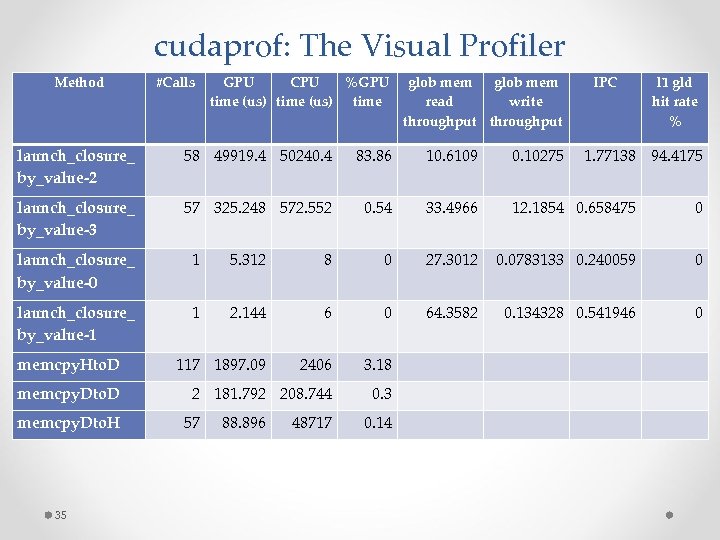

cudaprof: The Visual Profiler

| Method | #Calls | GPU time (us) | CPU time (us) | %GPU time | glob mem read throughput | glob mem write throughput | IPC | l1 gld hit rate % |
|---|---|---|---|---|---|---|---|---|
| launch_closure_by_value-2 | 58 | 49919.4 | 50240.4 | 83.86 | 10.6109 | 0.10275 | 1.77138 | 94.4175 |
| launch_closure_by_value-3 | 57 | 325.248 | 572.552 | 0.54 | 33.4966 | 12.1854 | 0.658475 | 0 |
| launch_closure_by_value-0 | 1 | 5.312 | 8 | 0 | 27.3012 | 0.0783133 | 0.240059 | 0 |
| launch_closure_by_value-1 | 1 | 2.144 | 6 | 0 | 64.3582 | 0.134328 | 0.541946 | 0 |

Memory transfer rows (memcpyHtoD, memcpyDtoH), listed as #Calls, GPU time (us), CPU time (us), %GPU time:
• 117, 1897.09, 2406, 3.18
• 2, 181.792, 208.744, 0.3
• 57, 88.896, 48717, 0.14

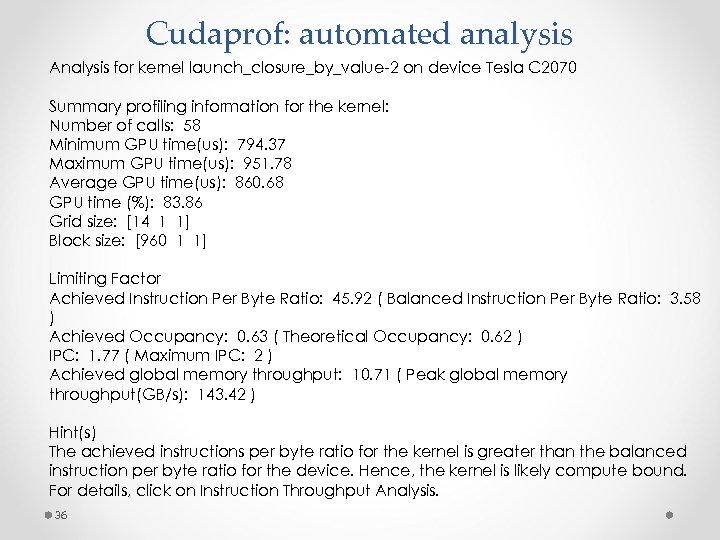

Cudaprof: automated analysis
Analysis for kernel launch_closure_by_value-2 on device Tesla C2070
Summary profiling information for the kernel:
• Number of calls: 58
• Minimum GPU time(us): 794.37
• Maximum GPU time(us): 951.78
• Average GPU time(us): 860.68
• GPU time (%): 83.86
• Grid size: [14 1 1]
• Block size: [960 1 1]
Limiting Factor
• Achieved Instruction Per Byte Ratio: 45.92 ( Balanced Instruction Per Byte Ratio: 3.58 )
• Achieved Occupancy: 0.63 ( Theoretical Occupancy: 0.62 )
• IPC: 1.77 ( Maximum IPC: 2 )
• Achieved global memory throughput: 10.71 ( Peak global memory throughput(GB/s): 143.42 )
Hint(s)
• The achieved instructions per byte ratio for the kernel is greater than the balanced instruction per byte ratio for the device. Hence, the kernel is likely compute bound. For details, click on Instruction Throughput Analysis.

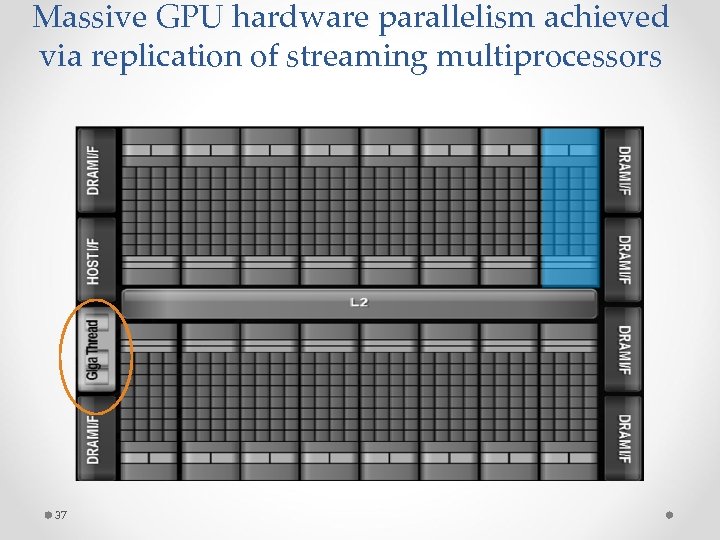

Massive GPU hardware parallelism achieved via replication of streaming multiprocessors

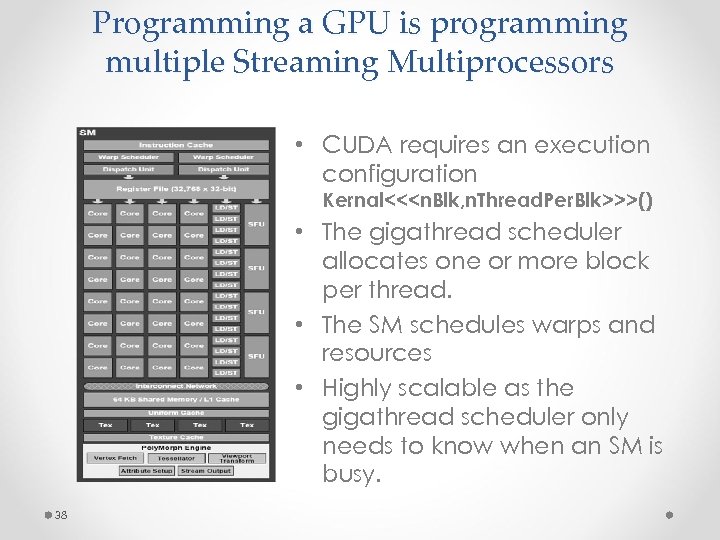

Programming a GPU is programming multiple Streaming Multiprocessors
• CUDA requires an execution configuration: Kernel<<<nBlk, nThreadPerBlk>>>()
• The gigathread scheduler assigns one or more blocks to each SM.
• The SM schedules warps and resources
• Highly scalable as the gigathread scheduler only needs to know when an SM is busy.
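
Choosing the execution configuration is mostly integer arithmetic. The host-only sketch below shows the usual round-up calculation for `nBlk` given a problem size and `nThreadPerBlk`. The struct and function names are hypothetical, and the warp size of 32 is an assumption that holds for the GPUs discussed in this talk.

```cpp
// Hypothetical holder for the two values placed inside <<< >>>.
struct LaunchConfig {
    unsigned int nBlk;
    unsigned int nThreadPerBlk;
};

// Enough blocks to cover nElements with one thread per element (rounds up).
// nThreadPerBlk must be > 0; nElements + nThreadPerBlk must not overflow.
LaunchConfig makeLaunchConfig(unsigned int nElements, unsigned int nThreadPerBlk) {
    return { (nElements + nThreadPerBlk - 1) / nThreadPerBlk, nThreadPerBlk };
}

// Number of warps an SM must schedule for one block (warp size assumed to be 32).
unsigned int warpsPerBlock(unsigned int nThreadPerBlk, unsigned int warpSize = 32) {
    return (nThreadPerBlk + warpSize - 1) / warpSize;
}
```

For one million elements and 256 threads per block this gives 3907 blocks; because the last block is only partly used, a kernel launched this way needs a bounds check on its thread index.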

Thread level parallelism (TLP)
• Provide as many warps (groups of threads) as possible.
• Warps execute in SIMD fashion
• SM scheduler detects when a SIMD instruction is ready to run (no dependency)
• Lots of warps implies a good chance that a SIMD instruction will be ready to run … hides latency!

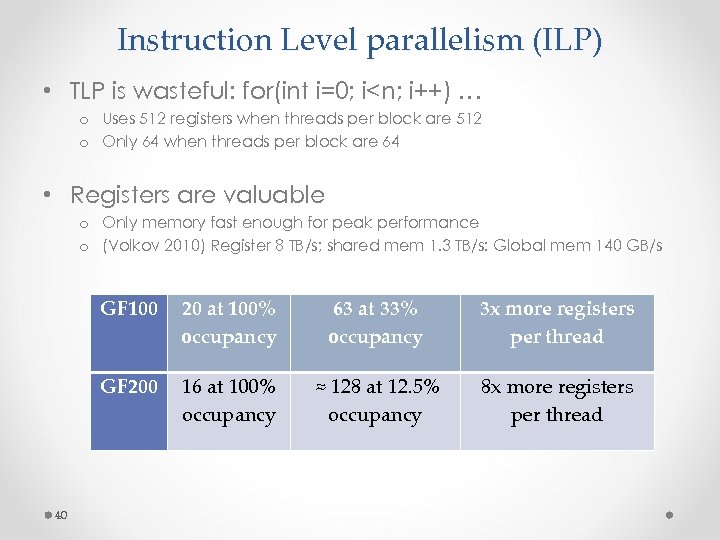

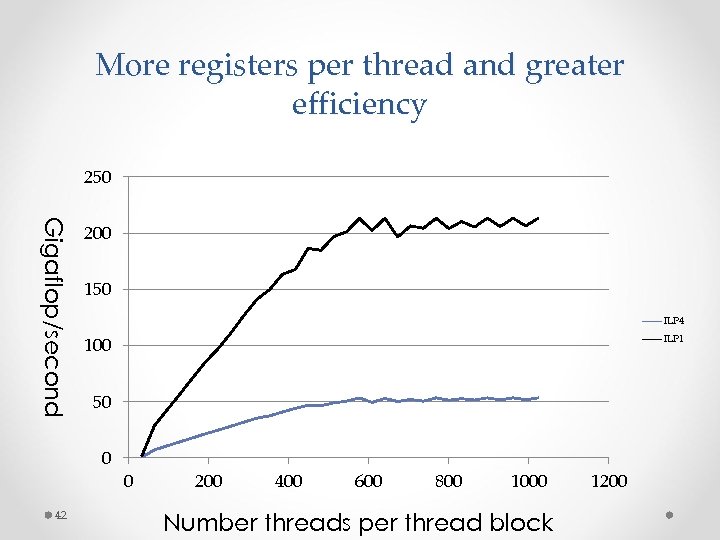

Instruction Level parallelism (ILP)
• TLP is wasteful: for(int i=0; i<n; i++) …
  o Uses 512 registers when threads per block are 512
  o Only 64 when threads per block are 64
• Registers are valuable
  o Only memory fast enough for peak performance
  o (Volkov 2010) Register 8 TB/s; shared mem 1.3 TB/s; global mem 140 GB/s

Registers per thread:

| GPU | At 100% occupancy | At lower occupancy | Gain |
|---|---|---|---|
| GF100 | 20 | 63 at 33% occupancy | 3x more registers per thread |
| GT200 | 16 | ≈ 128 at 12.5% occupancy | 8x more registers per thread |

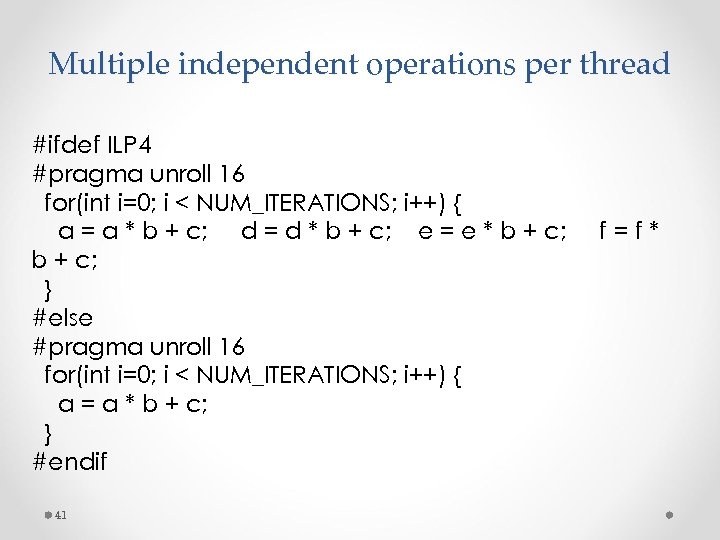

Multiple independent operations per thread

    #ifdef ILP4
      // four independent multiply-add chains per thread
    #pragma unroll 16
      for(int i=0; i < NUM_ITERATIONS; i++) {
        a = a * b + c;
        d = d * b + c;
        e = e * b + c;
        f = f * b + c;
      }
    #else
      // a single dependent chain per thread
    #pragma unroll 16
      for(int i=0; i < NUM_ITERATIONS; i++) {
        a = a * b + c;
      }
    #endif
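
The structure of the ILP variant can be tried on the host as well. The sketch below is plain C++ rather than the CUDA kernel from the slide, and the function names are hypothetical. It only demonstrates the dependency pattern: in `fmaChains` no statement inside the loop body depends on another statement of the same iteration, so the hardware is free to overlap them, whereas `fmaChain` has to wait for each result before starting the next multiply-add.

```cpp
#include <array>

// One dependent chain: every multiply-add needs the previous result.
float fmaChain(float a, float b, float c, int iterations) {
    for (int i = 0; i < iterations; ++i) {
        a = a * b + c;
    }
    return a;
}

// Four independent chains: within one iteration the four updates do not
// depend on each other, which is the instruction-level parallelism.
std::array<float, 4> fmaChains(std::array<float, 4> v, float b, float c, int iterations) {
    for (int i = 0; i < iterations; ++i) {
        v[0] = v[0] * b + c;
        v[1] = v[1] * b + c;
        v[2] = v[2] * b + c;
        v[3] = v[3] * b + c;
    }
    return v;
}
```

Each chain of `fmaChains` produces the same value `fmaChain` would produce from the same starting point, so the extra parallelism changes only how the work is scheduled, not the results.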

More registers per thread and greater efficiency
[Plot: Gigaflop/second versus number of threads per thread block; series: ILP4, ILP1]

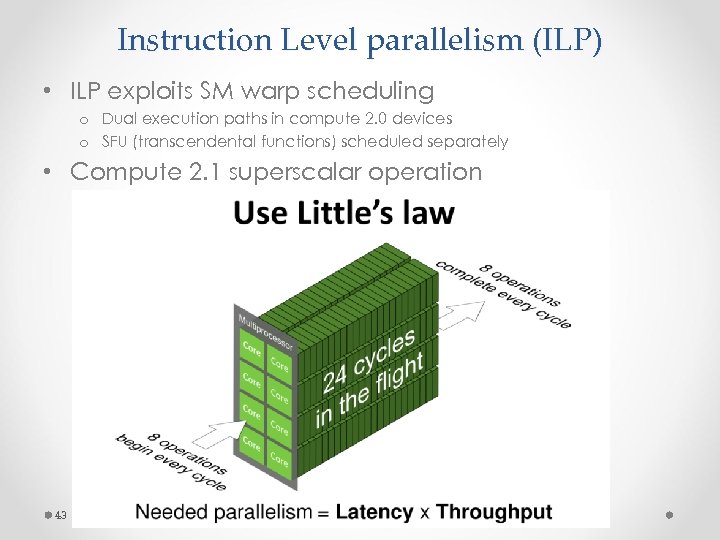

Instruction Level parallelism (ILP)
• ILP exploits SM warp scheduling
  o Dual execution paths in compute 2.0 devices
  o SFU (transcendental functions) scheduled separately
• Compute 2.1 superscalar operation

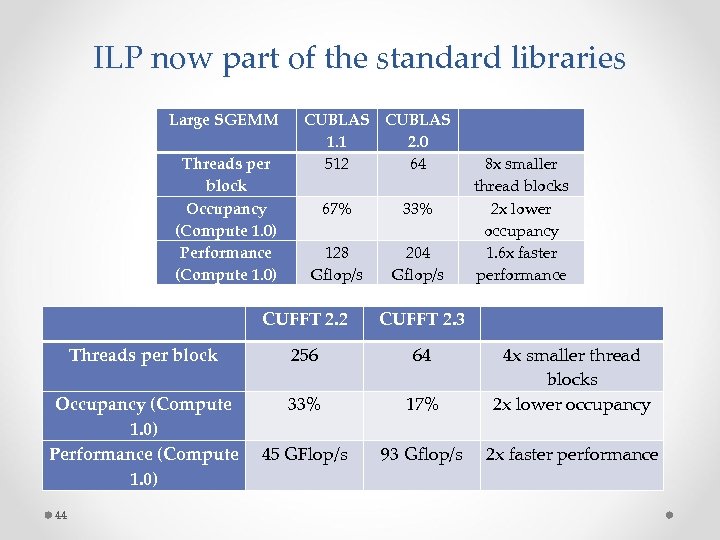

ILP now part of the standard libraries

Large SGEMM:

| | CUBLAS 1.1 | CUBLAS 2.0 | Change |
|---|---|---|---|
| Threads per block | 512 | 64 | 8x smaller thread blocks |
| Occupancy (Compute 1.0) | 67% | 33% | 2x lower occupancy |
| Performance (Compute 1.0) | 128 Gflop/s | 204 Gflop/s | 1.6x faster performance |

| | CUFFT 2.2 | CUFFT 2.3 | Change |
|---|---|---|---|
| Threads per block | 256 | 64 | 4x smaller thread blocks |
| Occupancy (Compute 1.0) | 33% | 17% | 2x lower occupancy |
| Performance (Compute 1.0) | 45 Gflop/s | 93 Gflop/s | 2x faster performance |

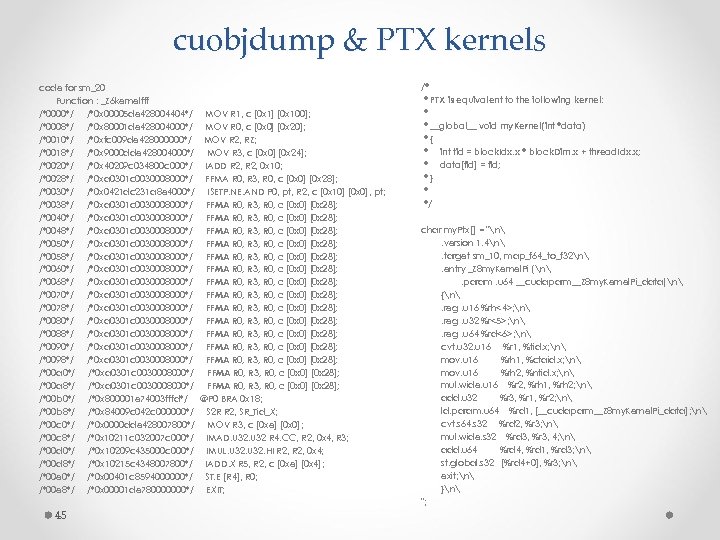

cuobjdump & PTX kernels

    code for sm_20
        Function : _Z6kernelfff
    /*0000*/ /*0x00005de428004404*/ MOV R1, c [0x1] [0x100];
    /*0008*/ /*0x80001de428004000*/ MOV R0, c [0x0] [0x20];
    /*0010*/ /*0xfc009de428000000*/ MOV R2, RZ;
    /*0018*/ /*0x9000dde428004000*/ MOV R3, c [0x0] [0x24];
    /*0020*/ /*0x40209c034800c000*/ IADD R2, 0x10;
    /*0028*/ /*0xa0301c0030008000*/ FFMA R0, R3, R0, c [0x0] [0x28];
    /*0030*/ /*0x0421dc231a8e4000*/ ISETP.NE.AND P0, pt, R2, c [0x10] [0x0], pt;
    /*0038*/ /*0xa0301c0030008000*/ FFMA R0, R3, R0, c [0x0] [0x28];
    /*0040*/ /*0xa0301c0030008000*/ FFMA R0, R3, R0, c [0x0] [0x28];
    /*0048*/ /*0xa0301c0030008000*/ FFMA R0, R3, R0, c [0x0] [0x28];
    /*0050*/ /*0xa0301c0030008000*/ FFMA R0, R3, R0, c [0x0] [0x28];
    /*0058*/ /*0xa0301c0030008000*/ FFMA R0, R3, R0, c [0x0] [0x28];
    /*0060*/ /*0xa0301c0030008000*/ FFMA R0, R3, R0, c [0x0] [0x28];
    /*0068*/ /*0xa0301c0030008000*/ FFMA R0, R3, R0, c [0x0] [0x28];
    /*0070*/ /*0xa0301c0030008000*/ FFMA R0, R3, R0, c [0x0] [0x28];
    /*0078*/ /*0xa0301c0030008000*/ FFMA R0, R3, R0, c [0x0] [0x28];
    /*0080*/ /*0xa0301c0030008000*/ FFMA R0, R3, R0, c [0x0] [0x28];
    /*0088*/ /*0xa0301c0030008000*/ FFMA R0, R3, R0, c [0x0] [0x28];
    /*0090*/ /*0xa0301c0030008000*/ FFMA R0, R3, R0, c [0x0] [0x28];
    /*0098*/ /*0xa0301c0030008000*/ FFMA R0, R3, R0, c [0x0] [0x28];
    /*00a0*/ /*0xa0301c0030008000*/ FFMA R0, R3, R0, c [0x0] [0x28];
    /*00a8*/ /*0xa0301c0030008000*/ FFMA R0, R3, R0, c [0x0] [0x28];
    /*00b0*/ /*0x800001e74003fffd*/ @P0 BRA 0x18;
    /*00b8*/ /*0x84009c042c000000*/ S2R R2, SR_Tid_X;
    /*00c0*/ /*0x0000dde428007800*/ MOV R3, c [0xe] [0x0];
    /*00c8*/ /*0x10211c032007c000*/ IMAD.U32 R4.CC, R2, 0x4, R3;
    /*00d0*/ /*0x10209c435000c000*/ IMUL.U32.HI R2, 0x4;
    /*00d8*/ /*0x10215c4348007800*/ IADD.X R5, R2, c [0xe] [0x4];
    /*00e0*/ /*0x00401c8594000000*/ ST.E [R4], R0;
    /*00e8*/ /*0x00001de780000000*/ EXIT;

    /*
     * PTX is equivalent to the following kernel:
     *
     * __global__ void myKernel(int *data)
     * {
     *   int tid = blockIdx.x * blockDim.x + threadIdx.x;
     *   data[tid] = tid;
     * }
     *
     */
    char myPtx[] = "\n\
    .version 1.4\n\
    .target sm_10, map_f64_to_f32\n\
    .entry _Z8myKernelPi (\n\
    .param .u64 __cudaparm__Z8myKernelPi_data)\n\
    {\n\
    .reg .u16 %rh<4>;\n\
    .reg .u32 %r<5>;\n\
    .reg .u64 %rd<6>;\n\
    cvt.u32.u16 %r1, %tid.x;\n\
    mov.u16 %rh1, %ctaid.x;\n\
    mov.u16 %rh2, %ntid.x;\n\
    mul.wide.u16 %r2, %rh1, %rh2;\n\
    add.u32 %r3, %r1, %r2;\n\
    ld.param.u64 %rd1, [__cudaparm__Z8myKernelPi_data];\n\
    cvt.s64.s32 %rd2, %r3;\n\
    mul.wide.s32 %rd3, %r3, 4;\n\
    add.u64 %rd4, %rd1, %rd3;\n\
    st.global.s32 [%rd4+0], %r3;\n\
    exit;\n\
    }\n\
    ";

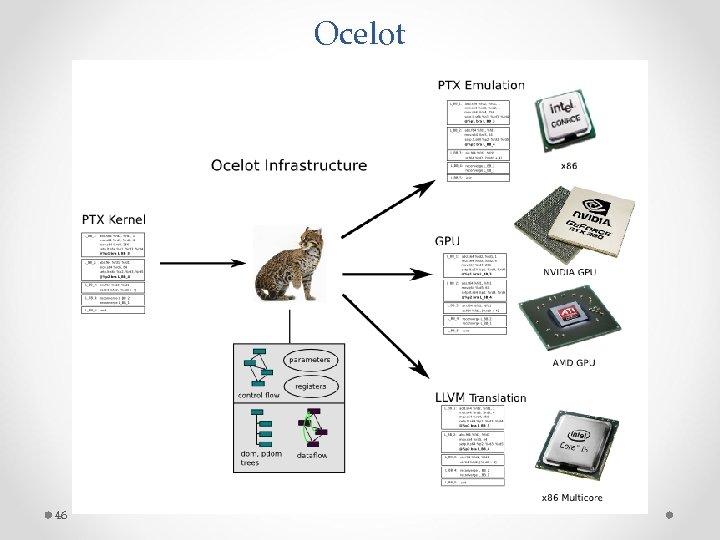

Ocelot

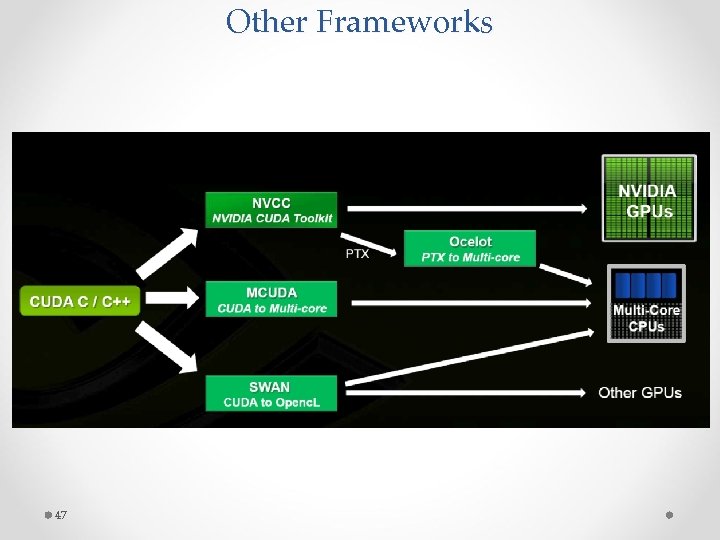

Other Frameworks

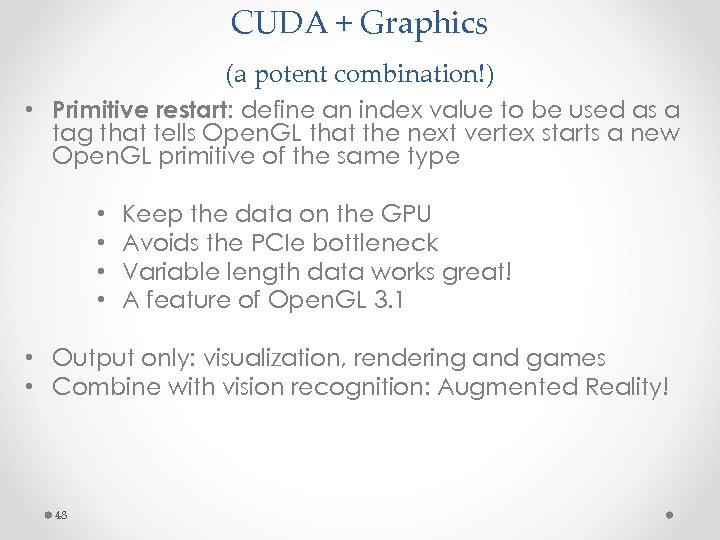

CUDA + Graphics (a potent combination!)
• Primitive restart: define an index value to be used as a tag that tells OpenGL that the next vertex starts a new OpenGL primitive of the same type
  o Keep the data on the GPU
  o Avoids the PCIe bottleneck
  o Variable length data works great!
  o A feature of OpenGL 3.1
• Output only: visualization, rendering and games
• Combine with vision recognition: Augmented Reality!

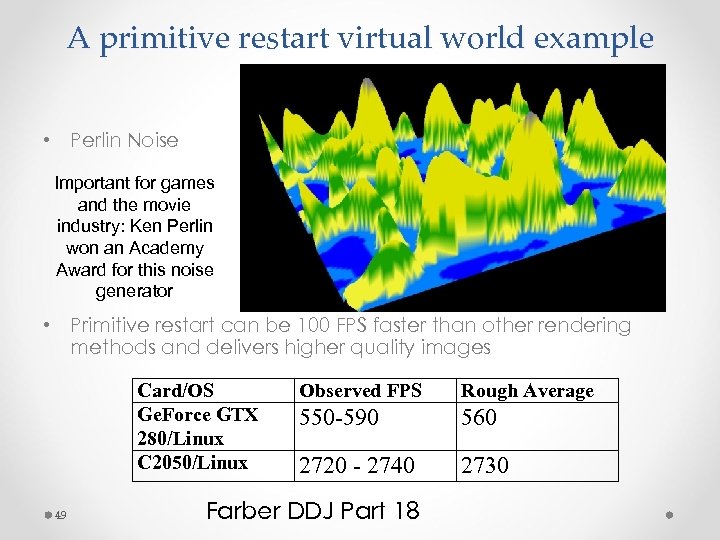

A primitive restart virtual world example (Farber DDJ Part 18)
• Perlin Noise
  o Important for games and the movie industry: Ken Perlin won an Academy Award for this noise generator
• Primitive restart can be 100 FPS faster than other rendering methods and delivers higher quality images

| Card/OS | Observed FPS | Rough Average |
|---|---|---|
| GeForce GTX 280/Linux | 550-590 | 560 |
| C2050/Linux | 2720-2740 | 2730 |

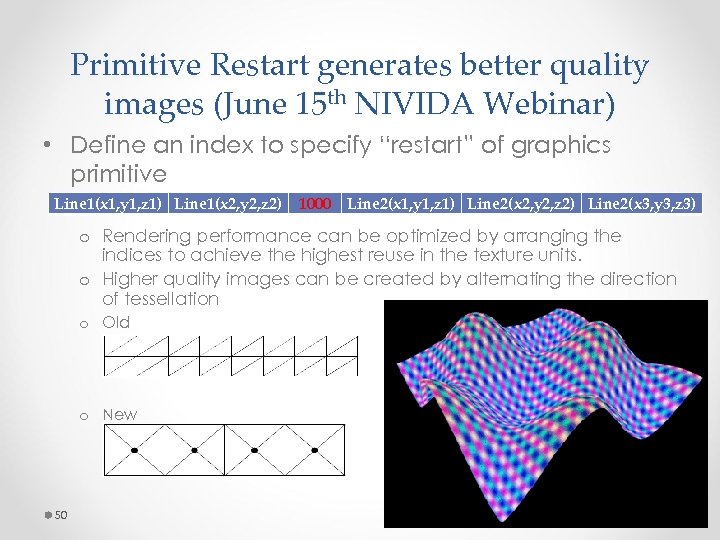

Primitive Restart generates better quality images (June 15th NVIDIA Webinar)
• Define an index to specify “restart” of graphics primitive
  o Line1(x1, y1, z1)
  o Line1(x2, y2, z2)
  o 1000
  o Line2(x1, y1, z1)
  o Line2(x2, y2, z2)
  o Line2(x3, y3, z3)
• Rendering performance can be optimized by arranging the indices to achieve the highest reuse in the texture units.
• Higher quality images can be created by alternating the direction of tessellation
[Panels: Old, New]
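
The index layout on this slide can be generated with a few lines of host code. The sketch below builds one index list for several variable-length strips, inserting a restart value between them. It is an illustration only: the function name is hypothetical, vertices are assumed to be stored consecutively in a single vertex buffer, and the restart value (1000 here, following the slide) must be one that is never used as a real vertex index. In OpenGL 3.1 the chosen value is registered with `glPrimitiveRestartIndex`.

```cpp
#include <cstddef>
#include <vector>

// Build a single index list for several strips of the given lengths.
// Vertices are numbered consecutively; restartIndex separates the strips.
std::vector<unsigned int> buildRestartIndices(const std::vector<std::size_t>& stripLengths,
                                              unsigned int restartIndex) {
    std::vector<unsigned int> indices;
    unsigned int nextVertex = 0;
    for (std::size_t s = 0; s < stripLengths.size(); ++s) {
        if (s > 0) indices.push_back(restartIndex);   // tag: next vertex starts a new primitive
        for (std::size_t v = 0; v < stripLengths[s]; ++v) {
            indices.push_back(nextVertex++);
        }
    }
    return indices;
}
```

A two-vertex line followed by a three-vertex line, as on the slide, yields the indices 0, 1, 1000, 2, 3, 4, so both primitives can be drawn with one call while the data stays on the GPU.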

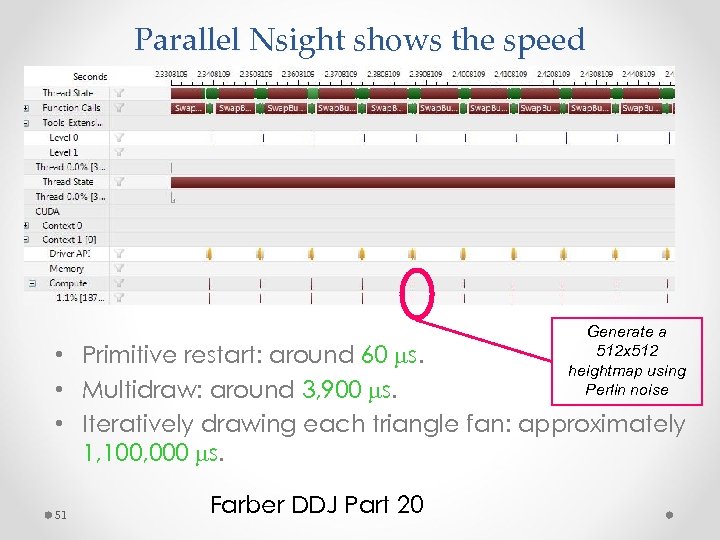

Parallel Nsight shows the speed (Farber DDJ Part 20)
Generate a 512x512 heightmap using Perlin noise
• Primitive restart: around 60 ms.
• Multidraw: around 3,900 ms.
• Iteratively drawing each triangle fan: approximately 1,100,000 ms.

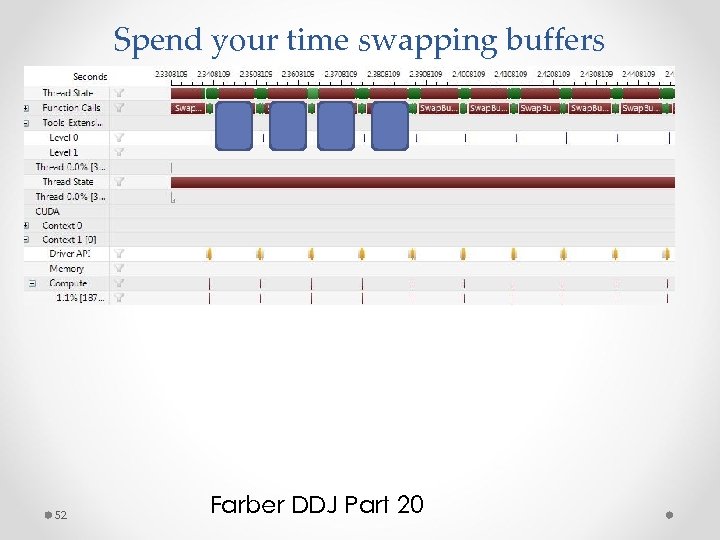

Spend your time swapping buffers (Farber DDJ Part 20)

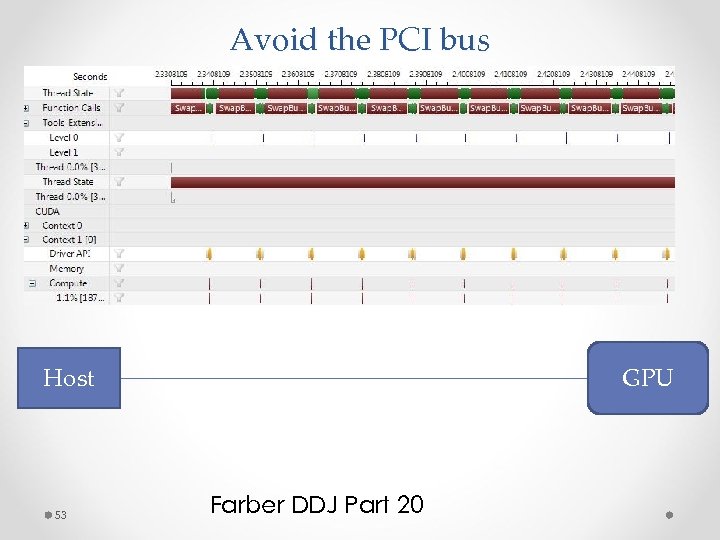

Avoid the PCI bus (Farber DDJ Part 20)
[Diagram labels: Data, Host, GPU]

If you remember one thing from this talk (Three rules for fast GPU codes)
1. Get the data on the GPU (and keep it there!)
  • PCIe x16 v2.0 bus: 8 GiB/s in a single direction
  • 20-series GPUs: 140-200 GiB/s
2. Give the GPU enough work to do
  • Assume 10 μs latency and 1 TF device
  • Can waste ($10^{-6} \times 10^{12}$) = 1M operations
3. Reuse and locate data to avoid global memory bandwidth bottlenecks
  • $10^{12}$ flop hardware delivers $10^{10}$ flop when global memory limited
  • Can cause a 100x slowdown!
Corollary: Avoid malloc/free!

Predicting future killer apps?
Humility: five years ago I would not have believed:
• Adding four PCIe devices will give my workstation roughly the same peak flop rate as the largest PNNL supercomputer
• It is now possible to get the full 3D wiring diagram for the entire brain of a cat or mouse (Harvard Connectome Project)

Killer apps: when personal vision meets technical capability
• The Connectome project: A Galilean first opportunity for scientists to examine the detailed schematic diagram that nature uses for vision and cognition.
• SC09: computers can simulate an entire cat brain
  o “The cat is out of the bag: cortical simulations with $10^{9}$ neurons, $10^{13}$ synapses”, Ananthanarayanan, Esser, Simon, and Modha (2009).
• My prediction: combining detailed brain models with sufficient computational capability will be a killer app.
  o People studied birds and (eventually) created supersonic aircraft
  o With nature’s wiring diagram for vision & language, (eventually) …?
WHAT IS YOUR VISION?
