35142df4f607da01930c62d2a2cdc73e.ppt

- Number of slides: 39

Super Belle with FKPPL VO & AMGA Data Handling
Kihyeon Cho & Soonwook Hwang (KISTI)

Contents § KISTI Supercomputing Center § FKPPL VO Farm § Super Belle Data Handling § Summary

FKPPL VO Grid Testbed

Goal § Background § Collaborative work between KISTI and CC-IN2P3 in the area of Grid computing under the framework of FKPPL § Objective § (short-term) to provide a Grid testbed to the e-Science summer school participants, keeping their interest in Grid computing and e-Science alive by allowing them to submit jobs and access data on the Grid § (long-term) to support the other FKPPL projects by providing a production-level Grid testbed for the development and deployment of their applications on the Grid § Target Users § FKPPL members § 2008 Seoul e-Science summer school participants

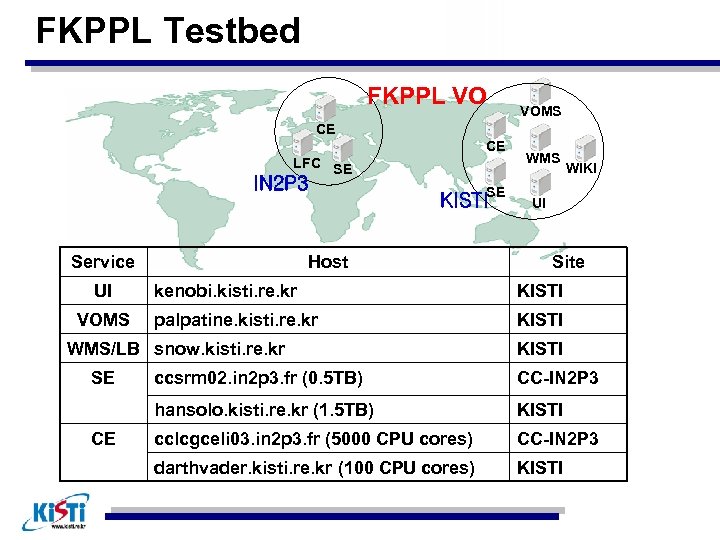

FKPPL Testbed (diagram) § FKPPL VO services: VOMS, WMS/LB, CE, SE, LFC, UI, Wiki § KISTI hosts: kenobi.kisti.re.kr, palpatine.kisti.re.kr (VOMS), snow.kisti.re.kr (WMS/LB), hansolo.kisti.re.kr (SE, 1.5 TB), darthvader.kisti.re.kr (CE, 100 CPU cores) § CC-IN2P3 hosts: ccsrm02.in2p3.fr (SE, 0.5 TB), cclcgceli03.in2p3.fr (CE, 5000 CPU cores), plus the LFC service

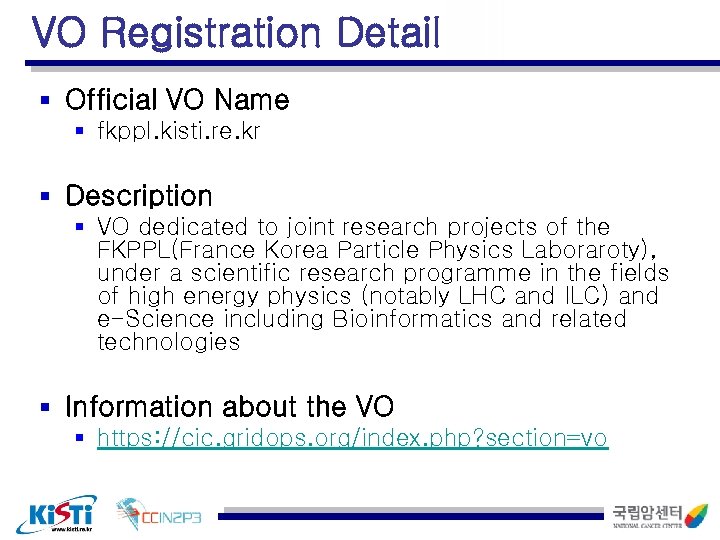

VO Registration Detail § Official VO Name § fkppl.kisti.re.kr § Description § VO dedicated to joint research projects of the FKPPL (France Korea Particle Physics Laboratory), under a scientific research programme in the fields of high energy physics (notably LHC and ILC) and e-Science, including bioinformatics and related technologies § Information about the VO § https://cic.gridops.org/index.php?section=vo

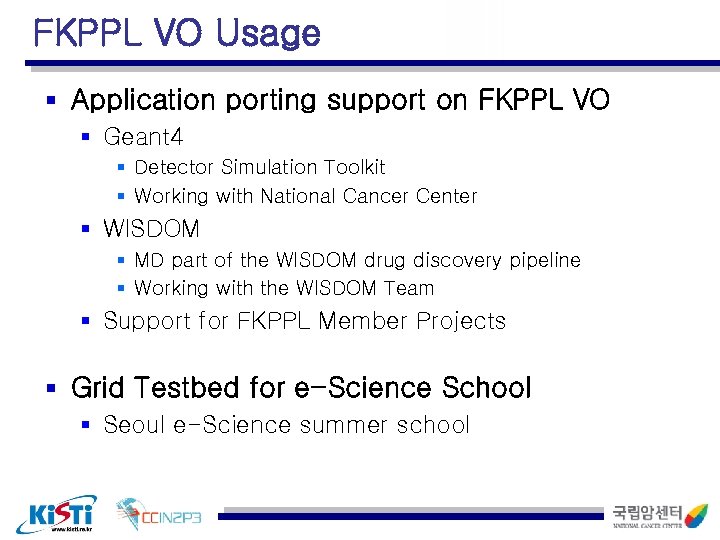

FKPPL VO Usage § Application porting support on FKPPL VO § Geant4 § Detector simulation toolkit § Working with the National Cancer Center § WISDOM § MD part of the WISDOM drug discovery pipeline § Working with the WISDOM team § Support for FKPPL member projects § Grid testbed for e-Science school § Seoul e-Science summer school

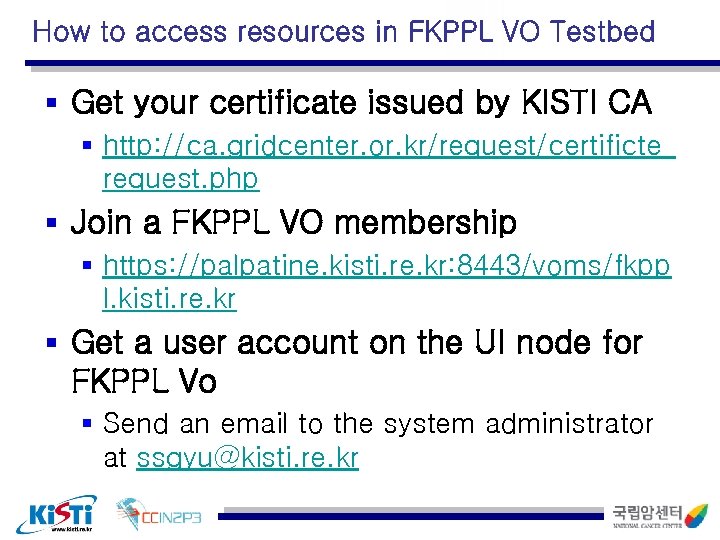

How to access resources in the FKPPL VO Testbed § Get your certificate issued by the KISTI CA § http://ca.gridcenter.or.kr/request/certificte_request.php § Join the FKPPL VO § https://palpatine.kisti.re.kr:8443/voms/fkppl.kisti.re.kr § Get a user account on the UI node for the FKPPL VO § Send an email to the system administrator at ssgyu@kisti.re.kr
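Once these steps are done, work on the UI node typically starts by creating a VOMS proxy and then submitting a job through the WMS. The sketch below only builds the command lines, assuming the standard gLite/VOMS clients (`voms-proxy-init`, `glite-wms-job-submit`) are installed on the UI; the helper names and the `hello.jdl` file are hypothetical.

```python
import shlex

# Hypothetical helpers: they only build argument lists, nothing is executed.
VO_NAME = "fkppl.kisti.re.kr"


def proxy_command(vo: str = VO_NAME) -> list[str]:
    """Command that creates a VOMS proxy carrying the given VO's attributes."""
    return ["voms-proxy-init", "-voms", vo]


def submit_command(jdl_path: str) -> list[str]:
    """Command that submits a JDL-described job; -a asks for automatic delegation."""
    return ["glite-wms-job-submit", "-a", jdl_path]


if __name__ == "__main__":
    print(shlex.join(proxy_command()))              # voms-proxy-init -voms fkppl.kisti.re.kr
    print(shlex.join(submit_command("hello.jdl")))  # glite-wms-job-submit -a hello.jdl
```

To actually run them on the UI node, the lists can be passed to `subprocess.run(cmd, check=True)`.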

User Support § FKPPL VO Wiki site § http://anakin.kisti.re.kr/mediawiki/index.php/FKPPL_VO § User accounts on the UI machine § 17 user accounts have been created § FKPPL VO registration § 4 users have registered so far

Contact Information § Soonwook Hwang (KISTI), Dominique Boutigny (CC-IN2P3) § Responsible persons § hwang@kisti.re.kr, boutigny@in2p3.fr § Sunil Ahn (KISTI), Yonny Cardenas (CC-IN2P3) § Technical contact persons § siahn@kisti.re.kr, cardenas@cc.in2p3.fr § Namgyu Kim § Site administrator § ssgyu@kisti.re.kr § Sehoon Lee § User support § sehooi@kisti.re.kr

Configuration § KISTI site: VOMS, WMS, CE+WN*, UI, Wiki § * Infrastructure installation in progress (a cluster with 128 cores has been purchased) § CC-IN2P3 site: CE+WN, SE, LFC § Yonny CARDENAS, Monday, December 2, 2008

Configuration § VO registration procedure § VO name: fkppl.kisti.re.kr § VO manager: Sunil Ahn § Status: Active

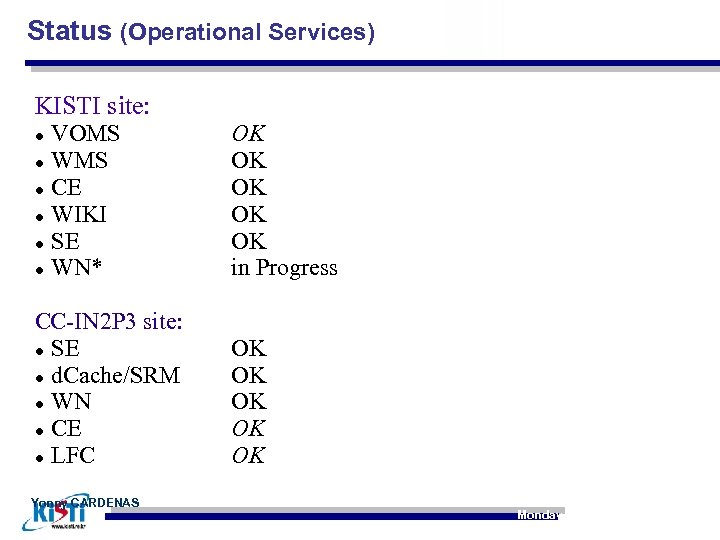

Status (Operational Services) § KISTI site: VOMS, WMS, CE, Wiki, SE, WN* § CC-IN2P3 site: SE (dCache/SRM), WN, CE, LFC § Reported status: OK, OK, OK, in progress, OK, OK, OK

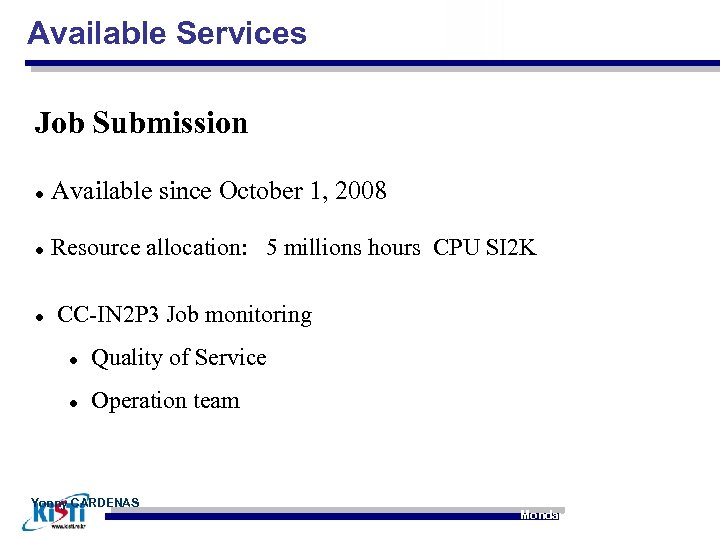

Available Services: Job Submission § Available since October 1, 2008 § Resource allocation: 5 million CPU hours (SI2K) at CC-IN2P3 § Job monitoring § Quality of service § Operation team

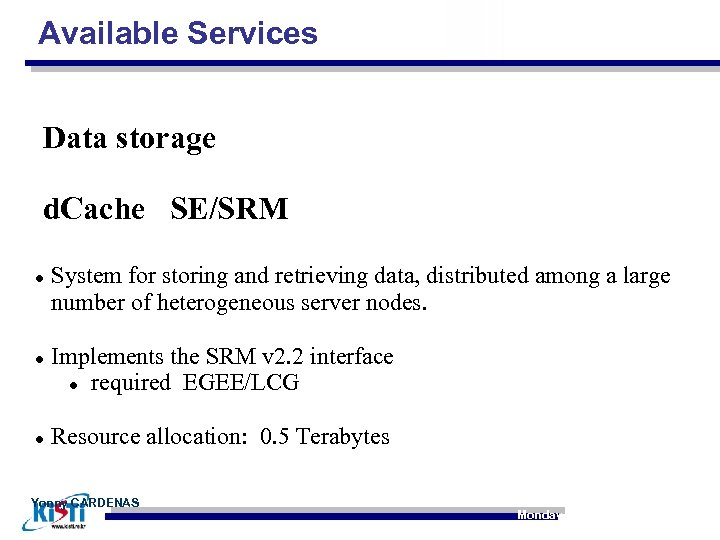

Available Services: Data Storage (dCache SE/SRM) § A system for storing and retrieving data distributed among a large number of heterogeneous server nodes § Implements the SRM v2.2 interface required by EGEE/LCG § Resource allocation: 0.5 TB

Available Services: Data Storage (AFS, Andrew File System) § Network file system for personal and group files, experiment software and system tools (compilers, libraries, ...) § Indirect use (by jobs) § Resource allocation: 2 GB

Available Services: Data Storage (LFC, LCG File Catalog) § Maintains mappings between logical file names (LFNs) and SRM file identifiers § Supports references to SRM files in several storage elements
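To make the LFN-to-replica mapping concrete, here is a toy in-memory model, not the LFC API: one logical file name maps to a list of storage URLs (SURLs) on different storage elements. The class, its methods and the example paths are hypothetical; only the host names come from the testbed description.

```python
from collections import defaultdict


class FileCatalog:
    """Toy logical-file-name (LFN) -> replica catalog; hypothetical, not the LFC API."""

    def __init__(self) -> None:
        # One LFN can point to copies held on several storage elements.
        self._replicas: dict[str, list[str]] = defaultdict(list)

    def register_replica(self, lfn: str, surl: str) -> None:
        """Record that a copy of `lfn` exists at storage URL `surl`."""
        if surl not in self._replicas[lfn]:
            self._replicas[lfn].append(surl)

    def replicas(self, lfn: str) -> list[str]:
        """Return all known copies of `lfn` (empty list if unknown)."""
        return list(self._replicas.get(lfn, []))


catalog = FileCatalog()
lfn = "/grid/fkppl.kisti.re.kr/demo/run001.root"  # hypothetical LFN
catalog.register_replica(lfn, "srm://ccsrm02.in2p3.fr/fkppl/demo/run001.root")
catalog.register_replica(lfn, "srm://hansolo.kisti.re.kr/fkppl/demo/run001.root")
print(catalog.replicas(lfn))
```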

Utilisation: Job Submission § October: 34 jobs, 150 CPU hours (SI2K) § November: 1690 jobs, 48250 CPU hours (SI2K)

Utilisation: Data Storage § 7193 files using 60 GB § 440 GB available
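As a quick sanity check on the utilisation figures, the sketch below derives per-job averages and the share of the allocation used so far. It assumes the 5 million SI2K-hour allocation from the job-submission slide and the monthly figures are in the same units, and it uses decimal units (1 GB = 1000 MB); the 500 GB total matches the 0.5 TB dCache allocation.

```python
# Figures copied from the job-submission, resource-allocation and storage slides.
ALLOCATION_SI2K_HOURS = 5_000_000
JOBS = {"October": (34, 150), "November": (1690, 48250)}  # month -> (jobs, SI2K CPU hours)
USED_GB, AVAILABLE_GB, N_FILES = 60, 440, 7193


def avg_hours_per_job(n_jobs: int, hours: float) -> float:
    """Mean SI2K CPU hours consumed per job."""
    return hours / n_jobs


total_hours = sum(hours for _, hours in JOBS.values())
for month, (n_jobs, hours) in JOBS.items():
    print(f"{month}: {avg_hours_per_job(n_jobs, hours):.2f} SI2K CPU hours/job")
print(f"Allocation used: {100 * total_hours / ALLOCATION_SI2K_HOURS:.3f}%")  # 0.968%
print(f"Storage capacity: {USED_GB + AVAILABLE_GB} GB")                      # 500 GB
print(f"Mean file size: {1000 * USED_GB / N_FILES:.2f} MB")                  # 8.34 MB
```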

User Support § FKPPL VO Wiki site § http://anakin.kisti.re.kr/mediawiki/index.php/FKPPL_VO § User accounts on the UI § 20 user accounts have been created § FKPPL VO membership registration § 7 users have registered for FKPPL VO membership

FKPPL VO Usage § Deployment of Geant4 applications on the FKPPL VO § Detector simulation toolkit § Working with Jungwook Shin at the National Cancer Center § Grid interoperability testbed

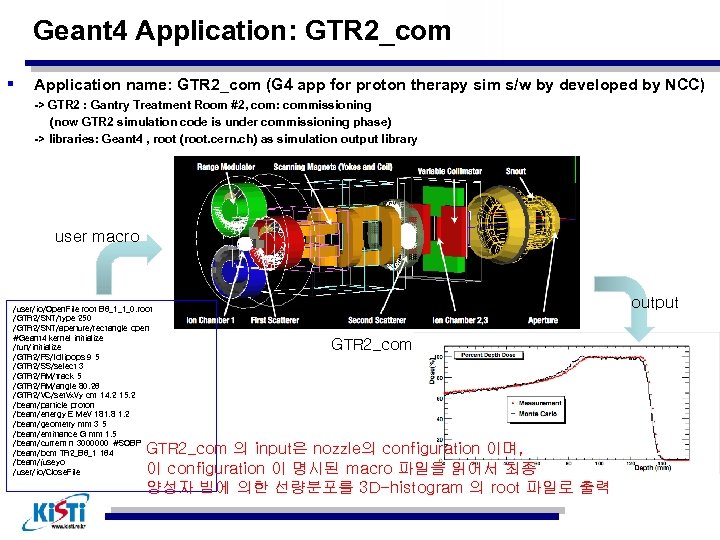

Geant4 Application: GTR2_com § Application name: GTR2_com (a Geant4 application for proton therapy simulation, developed by NCC) § GTR2: Gantry Treatment Room #2; com: commissioning (the GTR2 simulation code is currently in its commissioning phase) § Libraries: Geant4, and ROOT (root.cern.ch) as the simulation output library § User macro:

```
/user/io/OpenFile root B6_1_1_0.root
/GTR2/SNT/type 250
/GTR2/SNT/aperture/rectangle open
# Geant4 kernel initialize
/run/initialize
/GTR2/FS/lollipops 9 5
/GTR2/SS/select 3
/GTR2/RM/track 5
/GTR2/RM/angle 80.26
/GTR2/VC/setVxVy cm 14.2 15.2
/beam/particle proton
/beam/energy E MeV 181.8 1.2
/beam/geometry mm 3 5
/beam/emittance G mm 1.5
/beam/current n 3000000
# SOBP
/beam/bcm TR2_B6_1 164
/beam/juseyo
/user/io/CloseFile
```

§ Output: the input of GTR2_com is the nozzle configuration. The application reads a macro file specifying this configuration and writes the dose distribution produced by the final proton beam as a 3D histogram in a ROOT file.
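The slides report 1000 GTR2_com jobs submitted to the FKPPL VO. One way to prepare such a batch, sketched here under assumptions, is to generate one macro per job that differs only in its output ROOT file name. The function names, the `_jobNNNN` naming scheme and the shortened `BASE_MACRO` excerpt are hypothetical; a real production run would presumably also need a distinct random seed per job, which this sketch does not handle.

```python
from pathlib import Path

# Shortened, hypothetical excerpt of the GTR2_com user macro.
BASE_MACRO = """\
/user/io/OpenFile root B6_1_1_0.root
/run/initialize
/beam/particle proton
/beam/juseyo
/user/io/CloseFile
"""


def macro_for_job(base_macro: str, job_index: int) -> str:
    """Copy `base_macro`, renaming the ROOT output file to <stem>_jobNNNN.root."""
    out_lines = []
    for line in base_macro.splitlines():
        parts = line.split()
        if parts[:1] == ["/user/io/OpenFile"] and len(parts) == 3:
            stem = parts[2].removesuffix(".root")
            parts[2] = f"{stem}_job{job_index:04d}.root"
            line = " ".join(parts)
        out_lines.append(line)
    return "\n".join(out_lines) + "\n"


def write_job_macros(base_macro: str, n_jobs: int, out_dir: Path) -> list[Path]:
    """Write one macro file per job into `out_dir` and return their paths."""
    out_dir.mkdir(parents=True, exist_ok=True)
    paths = []
    for i in range(n_jobs):
        path = out_dir / f"GTR2_com_{i:04d}.mac"
        path.write_text(macro_for_job(base_macro, i))
        paths.append(path)
    return paths


if __name__ == "__main__":
    print(macro_for_job(BASE_MACRO, 7).splitlines()[0])
    # /user/io/OpenFile root B6_1_1_0_job0007.root
```

Calling `write_job_macros(BASE_MACRO, 1000, Path("macros"))` would produce 1000 input files, one to ship with each job.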

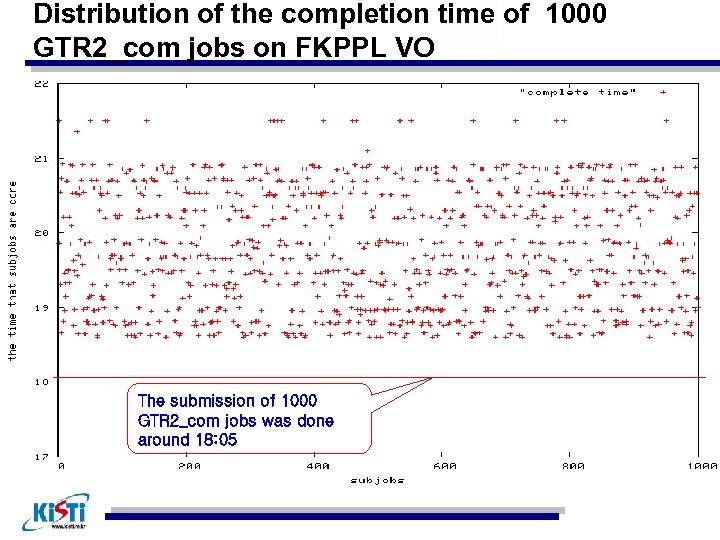

Distribution of the completion time of 1000 GTR2_com jobs on the FKPPL VO § The 1000 GTR2_com jobs were submitted at around 18:05

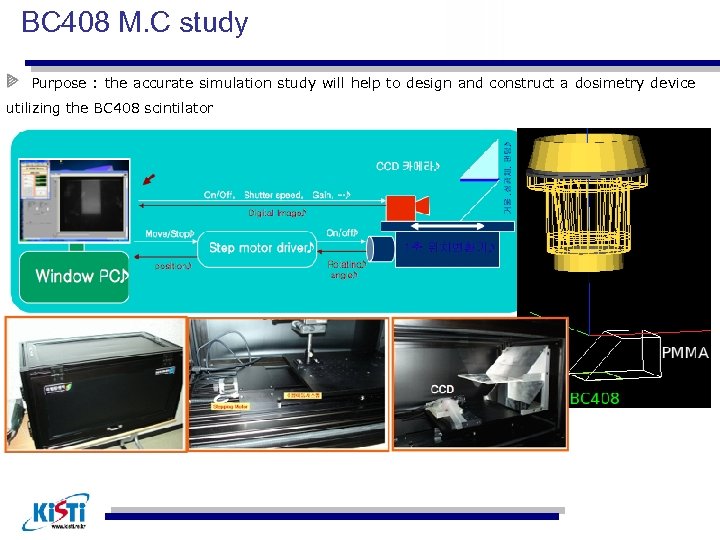

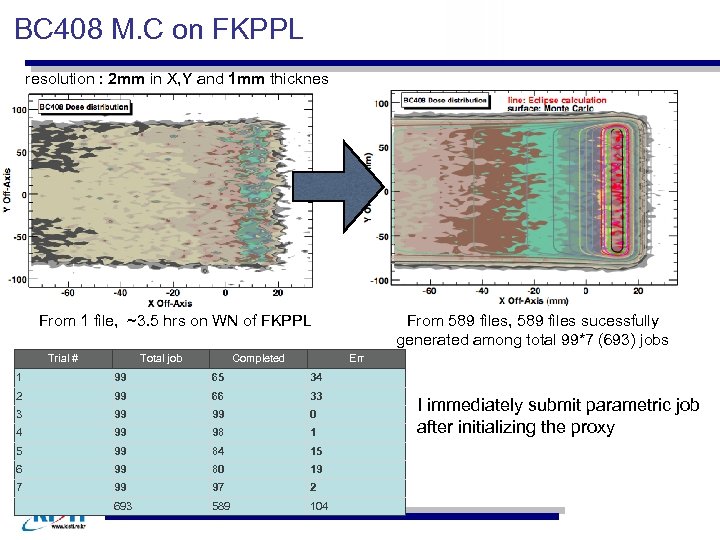

BC408 MC Study § Purpose: an accurate simulation study will help in designing and constructing a dosimetry device that uses the BC408 scintillator

BC408 MC on FKPPL § Resolution: 2 mm in X and Y, 1 mm in thickness § Each file takes ~3.5 hours on an FKPPL worker node § 589 of the 693 (99 × 7) jobs completed successfully, generating 589 files § I submitted the parametric jobs immediately after initializing the proxy

| Trial # | Total jobs | Completed | Errors |
|---|---|---|---|
| 1 | 99 | 65 | 34 |
| 2 | 99 | 66 | 33 |
| 3 | 99 | 99 | 0 |
| 4 | 99 | 98 | 1 |
| 5 | 99 | 84 | 15 |
| 6 | 99 | 80 | 19 |
| 7 | 99 | 97 | 2 |
| Total | 693 | 589 | 104 |
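The totals in the table can be cross-checked, and per-trial success rates derived, with a few lines of Python. The numbers are copied from the table; the CPU-time figure simply multiplies the quoted ~3.5 hours per file by the number of completed files, so it is a rough estimate only.

```python
# (total jobs, completed jobs) per trial, copied from the BC408 table.
TRIALS = [(99, 65), (99, 66), (99, 99), (99, 98), (99, 84), (99, 80), (99, 97)]
HOURS_PER_FILE = 3.5  # approximate, as quoted on the slide

total = sum(t for t, _ in TRIALS)
completed = sum(c for _, c in TRIALS)
errors = total - completed

for trial, (t, c) in enumerate(TRIALS, start=1):
    print(f"trial {trial}: {c}/{t} completed ({100 * c / t:.1f}%), {t - c} errors")
print(f"overall: {completed}/{total} completed ({100 * completed / total:.1f}%), {errors} errors")
# overall: 589/693 completed (85.0%), 104 errors
print(f"approx. CPU time for completed files: {HOURS_PER_FILE * completed:.1f} hours")
```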

Super Belle Data Handling

Super Belle Computing § Conveners: T. Hara, T. Kuhr § Distributed Computing (Martin Seviour) § Data Handling (Kihyeon Cho) § Database (vacant)

Super Belle Data Handling § Data handling depends on the distributed computing model § Cloud computing? § Grid farm? § Data handling suggestions (2/17) § SAM (Sequential Access through Metadata): CDF, by Thomas Kuhr § AMGA: KISTI, by Soonwook Hwang

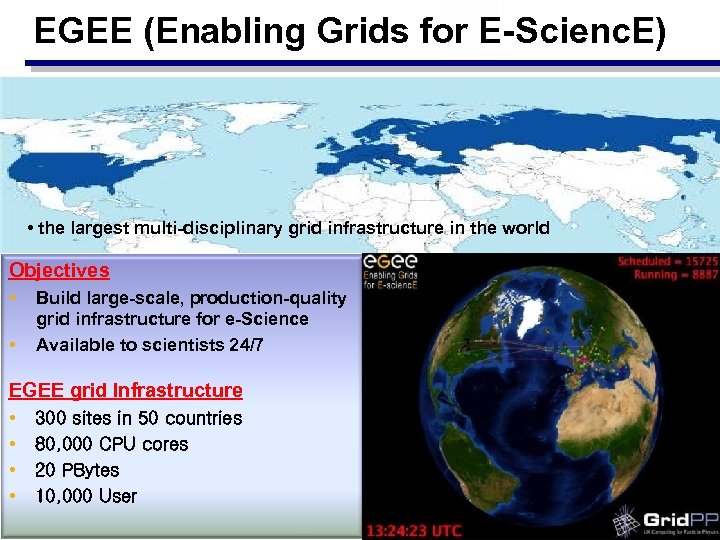

EGEE (Enabling Grids for E-sciencE) • The largest multi-disciplinary grid infrastructure in the world • Objectives: build a large-scale, production-quality grid infrastructure for e-Science, available to scientists 24/7 • EGEE grid infrastructure: 300 sites in 50 countries, 80,000 CPU cores, 20 PB of storage, 10,000 users

Overview of AMGA (1/2) § Metadata is data about data § AMGA provides: § Access to metadata for files stored on the Grid § Simplified, general access to relational data stored in database systems § 2004: the ARDA project evaluated existing metadata services from HEP experiments § AMI (ATLAS), RefDB (CMS), AliEn Metadata Catalogue (ALICE) § Similar goals, similar concepts § Each designed for a particular application domain § Reuse outside the intended domain was difficult § Several technical limitations: large answers, scalability, speed, lack of flexibility § ARDA proposed an interface for metadata access on the Grid § Based on the requirements of the LHC experiments § But generic, not bound to a particular application domain § Designed jointly with the gLite/EGEE team

Overview of AMGA (2/2) § What is AMGA (ARDA Metadata Grid Application)? § Began as a prototype to evaluate the metadata interface § Evaluated by the community from the beginning § Matured quickly thanks to user feedback § Now part of the gLite middleware (EGEE's gLite 3.1) § Requirements from the HEP community § Millions of files, 6000+ users, 200+ computing centres § Mainly (read-only) file metadata § Main concerns: scalability, performance, fault tolerance, support for hierarchical collections § Requirements from the biomed community § Smaller scale than HEP § Main concern: security § ARDA: A Realisation of Distributed Analysis for LHC

Metadata user requirements § I want to: § store some information about files § in a structured way § query a system about that information § keep information about jobs § I want my jobs to have read/write access to that information § have easy access to structured data using my grid proxy certificate § NOT use a database

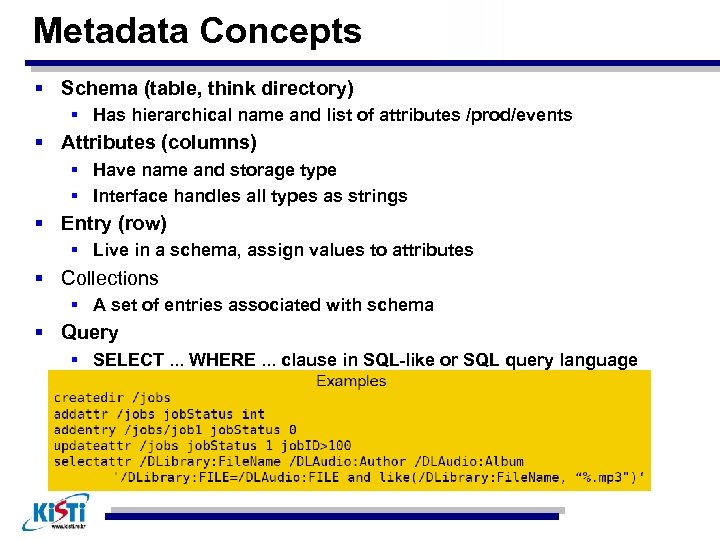

Metadata Concepts § Schema (table; think directory) § Has a hierarchical name and a list of attributes, e.g. /prod/events § Attributes (columns) § Have a name and a storage type § The interface handles all types as strings § Entry (row) § Lives in a schema and assigns values to attributes § Collections § A set of entries associated with a schema § Query § SELECT ... WHERE ... clause in an SQL-like or SQL query language
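The concepts above can be mimicked with a small in-memory model: a schema with a hierarchical path and typed attributes, entries whose values are stored as strings, and a SELECT ... WHERE style filter. This is a hypothetical illustration of the data model, not the AMGA client API; the `run`/`energy` attributes are invented, and collections are not modelled.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Schema:
    """Toy AMGA-style schema (think directory); hypothetical, not the AMGA API."""
    path: str                    # hierarchical name, e.g. /prod/events
    attributes: dict[str, str]   # attribute name -> declared storage type
    entries: dict[str, dict[str, str]] = field(default_factory=dict)

    def add_entry(self, name: str, **values: object) -> None:
        """Create an entry, rejecting attributes the schema does not declare."""
        unknown = set(values) - set(self.attributes)
        if unknown:
            raise KeyError(f"unknown attributes: {sorted(unknown)}")
        # Like the interface described above, keep every value as a string.
        self.entries[name] = {k: str(v) for k, v in values.items()}

    def select(self, attrs: list[str],
               where: Callable[[dict[str, str]], bool]) -> list[tuple[str, ...]]:
        """Rough analogue of SELECT attrs WHERE <predicate>."""
        return [
            (name, *(row.get(a, "") for a in attrs))
            for name, row in self.entries.items()
            if where(row)
        ]


events = Schema("/prod/events", {"run": "int", "energy": "float"})
events.add_entry("evt1", run=1, energy=10.5)
events.add_entry("evt2", run=2, energy=3.2)
# Values come back as strings, so the predicate converts explicitly.
high = events.select(["run"], lambda row: float(row["energy"]) > 5)
print(high)  # [('evt1', '1')]
```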

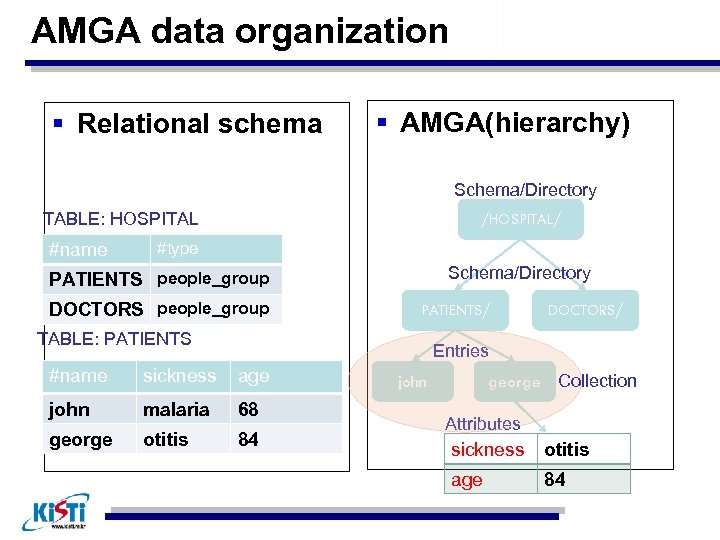

AMGA data organization (figure) § Relational schema: table HOSPITAL with columns #name and #type, holding PATIENTS and DOCTORS (type people_group); table PATIENTS with attributes name, sickness, age (john, malaria, 68; george, otitis, 84) § AMGA hierarchy: schema/directory /HOSPITAL/ with subdirectories PATIENTS/ and DOCTORS/; the entries john and george form a collection, each carrying the attributes sickness and age (e.g. george: otitis, 84)

Importing existing data § Suppose that you already have the data § A reasonable question would be: § Can I use my existing database data? § The answer is YES § Importing data into AMGA § Pretty simple § Connect a database to AMGA § Execute the import command § import table directory § Ready to go!
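The import step can be pictured as copying each row of an existing table into entries under an AMGA-style directory. The sketch below does that with Python's built-in `sqlite3`, assuming the first column names the entry and storing every value as a string; `import_table` is a hypothetical helper, not AMGA's `import` implementation. The example data reuses the PATIENTS table from the data organization slide.

```python
import sqlite3


def import_table(conn: sqlite3.Connection, table: str,
                 directory: str) -> dict[str, dict[str, str]]:
    """Hypothetical 'import table directory': one entry per row under `directory`.

    The first column is used as the entry name; all other values are stored
    as strings. `table` is interpolated into SQL, so it must be trusted.
    """
    cur = conn.execute(f'SELECT * FROM "{table}"')
    columns = [desc[0] for desc in cur.description]
    prefix = directory.rstrip("/") + "/"
    entries: dict[str, dict[str, str]] = {}
    for name, *values in cur:
        entries[prefix + str(name)] = {col: str(v) for col, v in zip(columns[1:], values)}
    return entries


# Stand-in for an existing database, using the PATIENTS example data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE patients (name TEXT, sickness TEXT, age INTEGER)")
conn.executemany("INSERT INTO patients VALUES (?, ?, ?)",
                 [("john", "malaria", 68), ("george", "otitis", 84)])
entries = import_table(conn, "patients", "/HOSPITAL/PATIENTS")
print(entries["/HOSPITAL/PATIENTS/george"])  # {'sickness': 'otitis', 'age': '84'}
```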

AMGA Use Cases

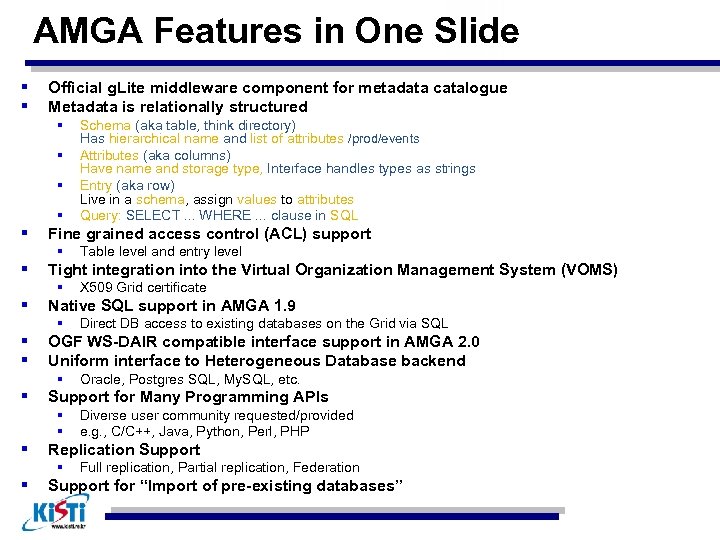

AMGA Features in One Slide § Official gLite middleware component for the metadata catalogue § Metadata is relationally structured § Schema (aka table; think directory): has a hierarchical name and a list of attributes, e.g. /prod/events § Attributes (aka columns): have a name and a storage type; the interface handles types as strings § Entry (aka row): lives in a schema and assigns values to attributes § Query: SELECT ... WHERE ... clause in SQL § Fine-grained access control (ACL) support: table level and entry level § Tight integration with the Virtual Organization Management System (VOMS) and X509 Grid certificates § Support for many programming APIs, requested/provided by a diverse user community: C/C++, Java, Python, Perl, PHP § Uniform interface to heterogeneous database backends: Oracle, PostgreSQL, MySQL, etc. § Native SQL support in AMGA 1.9: direct DB access to existing databases on the Grid via SQL § OGF WS-DAIR compatible interface support in AMGA 2.0 § Replication support: full replication, partial replication, federation § Support for “import of pre-existing databases”
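Table-level and entry-level ACLs imply a lookup order when deciding access. The following sketch assumes, purely for illustration, that an entry-level ACL, when present, overrides the table-level ACL of its parent directory; this precedence rule, the group names and the permission strings are hypothetical, and in a real deployment the caller's groups would presumably come from the VOMS attributes in the user's proxy.

```python
# Hypothetical ACLs: path -> {group: permissions}, with "r" = read, "w" = write.
TABLE_ACL = {"/HOSPITAL/PATIENTS": {"doctors": "rw", "nurses": "r"}}
ENTRY_ACL = {"/HOSPITAL/PATIENTS/george": {"doctors": "r"}}


def allowed(entry_path: str, group: str, perm: str) -> bool:
    """Check `perm` for `group` on an entry; an entry ACL (if any) wins over its table's ACL."""
    if entry_path in ENTRY_ACL:
        acl = ENTRY_ACL[entry_path]
    else:
        table_path = entry_path.rsplit("/", 1)[0]
        acl = TABLE_ACL.get(table_path, {})
    return perm in acl.get(group, "")


print(allowed("/HOSPITAL/PATIENTS/john", "doctors", "w"))    # True  (table-level ACL)
print(allowed("/HOSPITAL/PATIENTS/george", "doctors", "w"))  # False (entry-level ACL)
```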

AMGA Website: http://amga.web.cern.ch/amga

To do § Prototype of AMGA § Using the Belle platform, we will use AMGA (Namkyu Kim and Dr. Junghyun Kim) § Belle platform => flag § Using the Super Belle platform (Grid or cloud computing), we will use AMGA
