4250363c7e1930173549ec490999419b.ppt

- Number of slides: 24

Sun’s weak points in UE10000

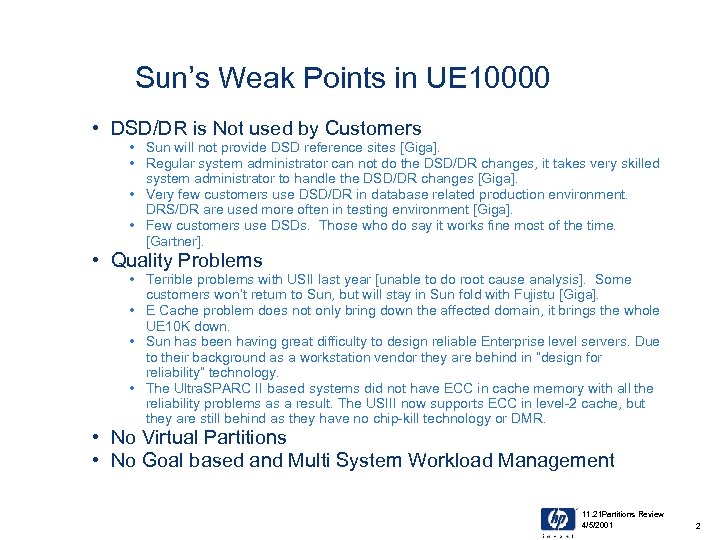

Sun’s Weak Points in UE10000

- DSD/DR is not used by customers
  - Sun will not provide DSD reference sites [Giga].
  - A regular system administrator cannot make DSD/DR changes; it takes a very skilled system administrator to handle them [Giga].
  - Very few customers use DSD/DR in database-related production environments. DSD/DR is used more often in testing environments [Giga].
  - Few customers use DSDs. Those who do say it works fine most of the time [Gartner].
- Quality problems
  - Terrible problems with the USII last year [unable to do root-cause analysis]. Some customers won’t return to Sun, but will stay in the Sun fold with Fujitsu [Giga].
  - An E-cache problem does not bring down only the affected domain; it brings the whole UE10K down.
  - Sun has had great difficulty designing reliable enterprise-level servers. Because of its background as a workstation vendor, Sun is behind in “design for reliability” technology.
  - The UltraSPARC II based systems did not have ECC in cache memory, with all the resulting reliability problems. The USIII now supports ECC in the level-2 cache, but Sun is still behind, as it has no chip-kill technology or DMR.
- No virtual partitions
- No goal-based and multi-system workload management

11.21 Partitions Review 4/5/2001

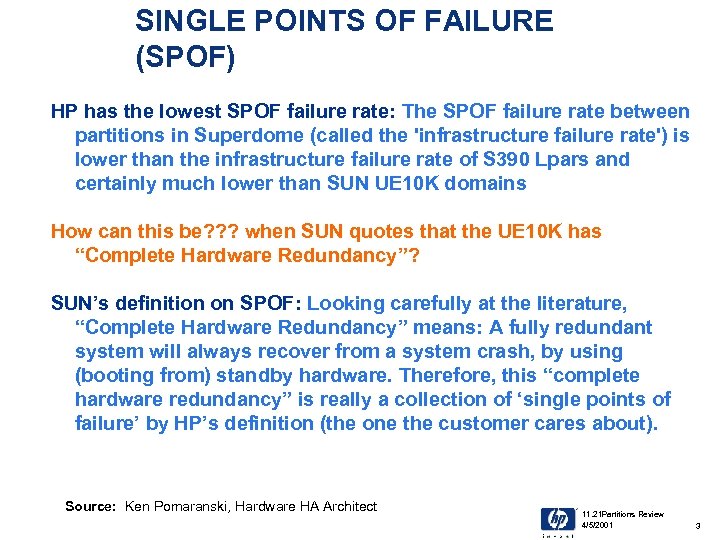

Single Points of Failure (SPOF)

HP has the lowest SPOF failure rate: the SPOF failure rate between partitions in Superdome (called the “infrastructure failure rate”) is lower than the infrastructure failure rate of S/390 LPARs, and much lower than that of Sun UE10K domains.

How can this be, when Sun claims that the UE10K has “Complete Hardware Redundancy”?

Sun’s definition of SPOF: looking carefully at the literature, “Complete Hardware Redundancy” means that a fully redundant system will always recover from a system crash by using (booting from) standby hardware. The crash still happens. Therefore, this “complete hardware redundancy” is really a collection of single points of failure by HP’s definition (the one the customer cares about).

Source: Ken Pomaranski, Hardware HA Architect
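
To make the SPOF argument concrete: under HP’s definition, any component whose failure crashes the system counts as a single point of failure, even if the system then recovers by booting from standby hardware, because the crash itself is an outage. Such components behave like a series system. Assuming independent, constant failure rates (the standard exponential model), their failure rates simply add. This is a minimal Python sketch; the component count and MTBF figures are hypothetical and are not measured data for either vendor.

```python
# Hypothetical sketch: components whose failure crashes the system behave like
# a series system, so (assuming independent, constant failure rates) their
# individual failure rates add up to the rate of system outages.

def outage_rate(failure_rates_per_hour):
    """Sum of per-component failure rates (failures per hour)."""
    return sum(failure_rates_per_hour)


def mtbf_hours(rate_per_hour):
    """Mean time between outages for a constant failure rate."""
    return 1.0 / rate_per_hour


# Illustrative numbers only: 10 crash-causing components, each with a
# 100,000-hour MTBF, give one outage every ~10,000 hours on average.
rates = [1 / 100_000] * 10
print(f"outage MTBF: {mtbf_hours(outage_rate(rates)):,.0f} hours")
```

Booting from standby hardware shortens recovery after each crash, but it does not remove any term from the sum. Only redundancy that masks a failure without an outage does that.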

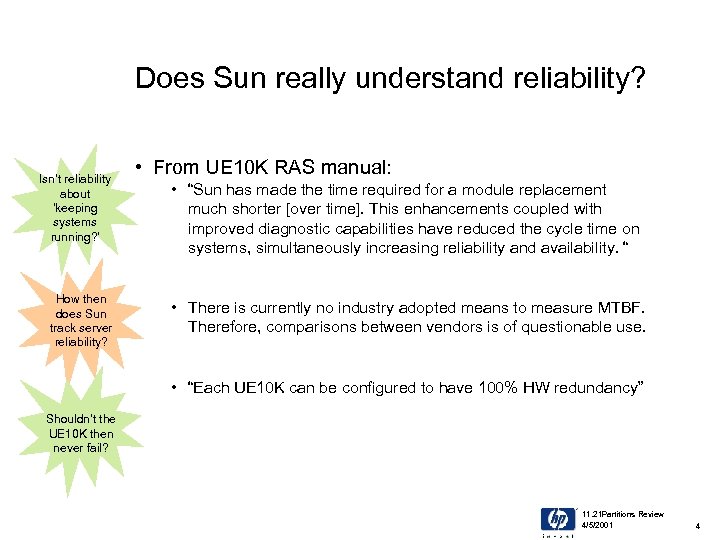

Does Sun really understand reliability?

Isn’t reliability about keeping systems running? How, then, does Sun track server reliability?

- From the UE10K RAS manual:
  - “Sun has made the time required for a module replacement much shorter [over time]. This enhancements coupled with improved diagnostic capabilities have reduced the cycle time on systems, simultaneously increasing reliability and availability.”
  - There is currently no industry-adopted means to measure MTBF. Therefore, comparisons between vendors are of questionable use.
  - “Each UE10K can be configured to have 100% HW redundancy.”

Shouldn’t the UE10K then never fail?
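
The RAS-manual quote credits shorter module replacement with increasing both reliability and availability. Under the standard steady-state formula A = MTBF / (MTBF + MTTR), a shorter repair time (MTTR) raises availability while leaving MTBF, the usual measure of reliability, unchanged. A minimal Python sketch; the MTBF and MTTR values are hypothetical and chosen only for illustration.

```python
# Steady-state availability: A = MTBF / (MTBF + MTTR).
# MTBF reflects reliability (how often the system fails);
# MTTR reflects how long each repair takes.

def availability(mtbf_hours: float, mttr_hours: float) -> float:
    """Fraction of time the system is up."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


# Hypothetical figures for illustration only: the failure rate (MTBF) stays
# the same, and only the module replacement time (MTTR) gets shorter.
MTBF = 5_000.0
for mttr in (4.0, 1.0):
    print(f"MTBF={MTBF:.0f} h, MTTR={mttr:.0f} h -> availability {availability(MTBF, mttr):.5f}")
```

Availability rises from about 0.9992 to about 0.9998, yet the system fails just as often. Only the outages get shorter.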

Sun’s Customers Understand!

- Topping their list of complaints are the frequency of server crashes caused by the problem [memory], fixes that don’t work and Sun’s tendency to initially blame the problem on other factors before acknowledging it, often only under a nondisclosure agreement. – Computer World, 9/04/2000
- “They treated the whole thing like a cover-up,” said one user at a large utility in the Western U.S. who asked not to be named. – Computer World, 9/04/2000
- “The long-standing nature of the problem and Sun’s handling of the issue raise troubling questions about the quality of Sun’s hardware and support.” – Gartner Group
- Engineers have long known that memory chips can be disrupted by radiation and other environmental factors. That is why Hewlett-Packard and IBM use error-correcting code, or ECC, which detects cache errors and restores bits that were changed by mistake. – Forbes, 11/13/2000
- Sun servers lack ECC protection. “Frankly, we just missed it. It’s something we regret at this point,” Shoemaker [Sun executive VP] says. – Forbes, 11/13/2000

What else have they “missed”?
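
The Forbes passage describes ECC as detecting cache errors and restoring bits that were changed by mistake. The Python sketch below uses the textbook Hamming(7,4) single-error-correcting code to show the idea. It is an illustration only, not HP’s, IBM’s or Sun’s implementation; production cache and memory ECC typically uses wider codes, such as SEC-DED over 64-bit words.

```python
# Hamming(7,4): a textbook single-error-correcting code.
# Bit positions are 1..7; parity bits sit at positions 1, 2 and 4.

def encode(d):
    """Encode 4 data bits [d1, d2, d3, d4] into a 7-bit codeword."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4          # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4          # parity over positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4          # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def decode(c):
    """Correct up to one flipped bit and return the 4 data bits."""
    c = list(c)                                # do not modify the caller's list
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]
    syndrome = s1 + 2 * s2 + 4 * s4            # 1-based position of the bad bit, 0 if none
    if syndrome:
        c[syndrome - 1] ^= 1                   # restore the bit that was flipped
    return [c[2], c[4], c[5], c[6]]


data = [1, 0, 1, 1]
word = encode(data)
word[5] ^= 1                   # simulate one bit flipped, e.g. by a particle strike
assert decode(word) == data    # the single-bit error is corrected
```

Each parity bit covers a different subset of positions, so the pattern of failed checks (the syndrome) spells out the position of a single flipped bit in binary.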

Sun’s UE10K Dynamic Reconfiguration Weaknesses

Sun’s UE10K implementation of DR is not as dynamic as Sun would have you believe. It’s a marketing tale!

- Hot-swapping I/O requires that CPU and memory also be brought down.
- Any DR activity requires that the database be shut down, making applications unavailable during the process.
- DR cannot be used together with memory interleaving across system boards, and giving up interleaving reduces maximum performance. Sun customers have to choose between good system performance and DR functionality; they cannot get both at the same time!
- DR is not supported in combination with SunCluster fail-over. Because the system halts during a DR operation, SunCluster considers the system to be failing and starts a fail-over procedure to another system. Sun customers have to choose between a true multi-system, high-availability solution and the use of DR; they cannot get both at the same time!
- DR conflicts with Intimate Shared Memory (ISM), which demanding applications use. To improve performance, most memory-intensive applications, such as databases, use the ISM capability of the E10000. Most applications using ISM do not allow dynamic addition or removal of their shared memory allocation. Running memory-intensive applications with ISM (like large databases) while making the most efficient use of partitions therefore rules out DR.
- Deactivating or moving a system board with full memory can take 15 minutes (to back up and rearrange memory contents). All activity in the affected partition(s) has to be paused during that time! (To compensate, Sun introduced TurboDR boards with just CPUs and no memory...)

Source: John Wiltschut, BSTO Marketing

Why Sun is being defensive: Superdome vs. E10000

Sun blames HP and IBM for copying the E10000

The truth is:

- Superdome is more original than the E10000 has ever been: the E10K is an exact copy of the Cray CS6400.
- Sun is just playing catch-up, with the E10000’s inferior performance, reliability and functionality.
- The E10000 is an end-of-line product based on old technology, without future expansion capabilities.
- Superdome is built as an advanced architecture based on the latest technology, with very strong growth potential.
- Sun has never developed a high-end server by itself.

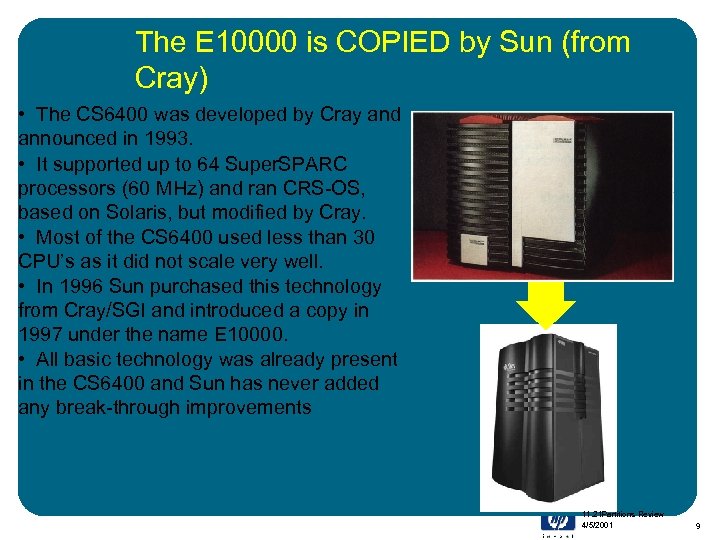

The E10000 is COPIED by Sun (from Cray)

- The CS6400 was developed by Cray and announced in 1993.
- It supported up to 64 SuperSPARC processors (60 MHz) and ran CRS-OS, which was based on Solaris but modified by Cray.
- Most CS6400 systems used fewer than 30 CPUs, as the design did not scale very well.
- In 1996 Sun purchased this technology from Cray/SGI and introduced a copy in 1997 under the name E10000.
- All the basic technology was already present in the CS6400, and Sun has never added any breakthrough improvements.

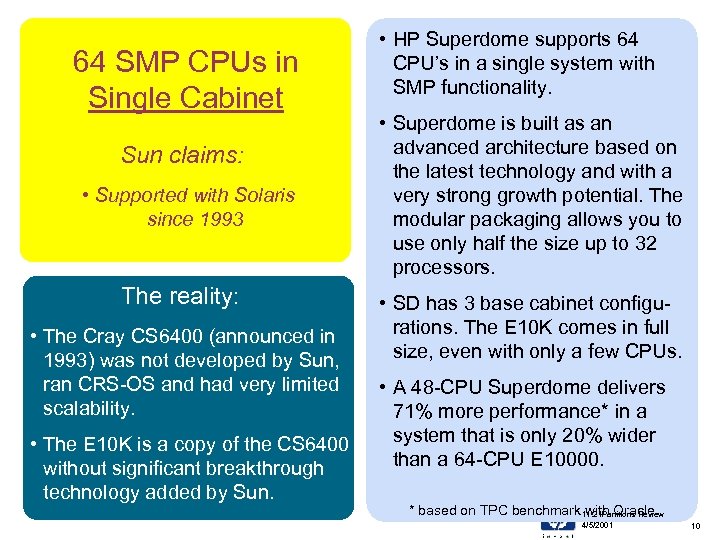

64 SMP CPUs in Single Cabinet

Sun claims:

- Supported with Solaris since 1993

The reality:

- The Cray CS6400 (announced in 1993) was not developed by Sun, ran CRS-OS and had very limited scalability.
- The E10K is a copy of the CS6400 without significant breakthrough technology added by Sun.
- HP Superdome supports 64 CPUs in a single system with SMP functionality.
- Superdome is built as an advanced architecture based on the latest technology, with very strong growth potential. The modular packaging allows you to use a half-size system for up to 32 processors.
- Superdome has 3 base cabinet configurations. The E10K comes in full size, even with only a few CPUs.
- A 48-CPU Superdome delivers 71% more performance (*) in a system that is only 20% wider than a 64-CPU E10000.

(*) Based on TPC benchmark with Oracle.
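
The two percentages in the last bullet can be combined into per-width and per-CPU ratios. The Python sketch below is only arithmetic on the slide’s own figures (71% more performance, 20% wider, 48 vs. 64 CPUs). It inherits every assumption behind the original benchmark comparison and adds no new data.

```python
# Ratios derived only from the slide's own figures (TPC benchmark):
# a 48-CPU Superdome vs. a 64-CPU E10000.
PERF_RATIO = 1.71       # "71% more performance"
WIDTH_RATIO = 1.20      # "only 20% wider"
SUPERDOME_CPUS = 48
E10K_CPUS = 64

# Throughput per unit of cabinet width, relative to the E10000.
perf_per_width = PERF_RATIO / WIDTH_RATIO
# Throughput per CPU, relative to the E10000:
# (1.71 * P / 48) / (P / 64) = 1.71 * 64 / 48
perf_per_cpu = PERF_RATIO * E10K_CPUS / SUPERDOME_CPUS

print(f"performance per unit of width: {perf_per_width:.2f}x")
print(f"performance per CPU: {perf_per_cpu:.2f}x")
```

On these figures, Superdome delivers roughly 1.4x the performance per unit of width and roughly 2.3x the performance per CPU.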

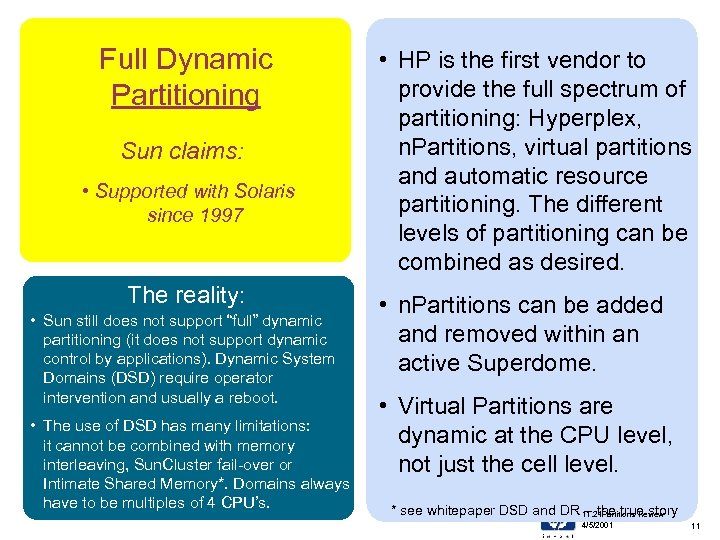

Full Dynamic Partitioning

Sun claims:

- Supported with Solaris since 1997

The reality:

- Sun still does not support “full” dynamic partitioning (it does not support dynamic control by applications). Dynamic System Domains (DSDs) require operator intervention and usually a reboot.
- The use of DSDs has many limitations: they cannot be combined with memory interleaving, SunCluster fail-over or Intimate Shared Memory (*). Domains always have to be multiples of 4 CPUs.
- HP is the first vendor to provide the full spectrum of partitioning: Hyperplex, nPartitions, virtual partitions and automatic resource partitioning. The different levels of partitioning can be combined as desired.
- nPartitions can be added and removed within an active Superdome.
- Virtual partitions are dynamic at the CPU level, not just the cell level.

(*) See the whitepaper “DSD and DR”.
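
The slide notes that DSDs always come in multiples of 4 CPUs, while virtual partitions are dynamic at the CPU level. This small Python sketch shows what that granularity means when sizing a partition: a CPU request is rounded up to the next allocation unit. The 4-CPU unit comes from the slide. The per-CPU unit for virtual partitions and the example request sizes are simplifying assumptions for illustration.

```python
# Hypothetical illustration of partition sizing granularity.
# Per the slide, DSDs come in multiples of 4 CPUs; the per-CPU unit used
# for virtual partitions here is a simplifying assumption.
DSD_UNIT = 4
VPAR_UNIT = 1


def cpus_allocated(cpus_needed: int, unit: int) -> int:
    """Round a CPU request up to the next multiple of the allocation unit."""
    if unit <= 0:
        raise ValueError("allocation unit must be positive")
    return -(-cpus_needed // unit) * unit   # integer ceiling division


for need in (5, 9, 13):
    dsd = cpus_allocated(need, DSD_UNIT)
    vpar = cpus_allocated(need, VPAR_UNIT)
    print(f"need {need:2d} CPUs: DSD gets {dsd:2d} ({dsd - need} spare), "
          f"per-CPU partition gets {vpar:2d}")
```

With 4-CPU granularity, a workload that needs one CPU more than a board boundary ties up three extra CPUs until the domain is reconfigured.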

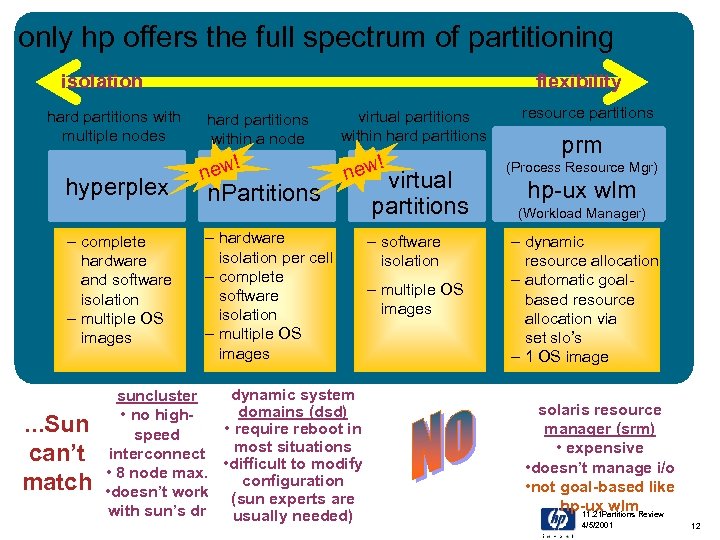

Only HP offers the full spectrum of partitioning. Sun can’t match it.

| Partitioning level | HP | Sun |
|---|---|---|
| Hard partitions with multiple nodes | Hyperplex: complete hardware and software isolation; multiple OS images | SunCluster: no high-speed interconnect; 8-node maximum; doesn’t work with Sun’s DR |
| Hard partitions within a node | nPartitions (new): hardware isolation per cell; complete software isolation; multiple OS images | Dynamic System Domains (DSD): require a reboot in most situations; configuration is difficult to modify (Sun experts are usually needed) |
| Virtual partitions within hard partitions | Virtual partitions (new): software isolation; multiple OS images | (none) |
| Resource partitions | PRM (Process Resource Manager) and HP-UX WLM (Workload Manager): dynamic resource allocation; automatic goal-based resource allocation via set SLOs; 1 OS image | Solaris Resource Manager (SRM): expensive; doesn’t manage I/O; not goal-based like HP-UX WLM |

Diagram axes: isolation and flexibility.

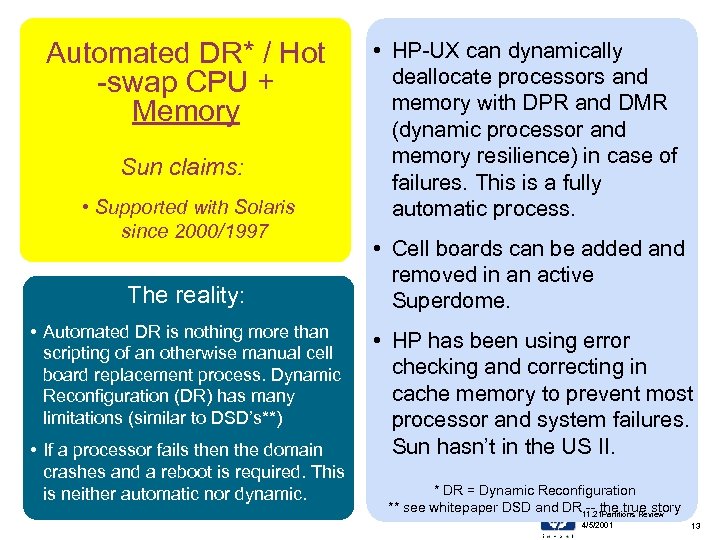

Automated DR (*) / Hot-swap CPU + Memory

Sun claims:

- Supported with Solaris since 2000/1997

The reality:

- Automated DR is nothing more than scripting of an otherwise manual cell board replacement process. Dynamic Reconfiguration (DR) has many limitations (similar to those of DSDs (**)).
- If a processor fails, the domain crashes and a reboot is required. This is neither automatic nor dynamic.
- HP-UX can dynamically deallocate processors and memory with DPR and DMR (Dynamic Processor Resilience and Dynamic Memory Resilience) in case of failures. This is a fully automatic process.
- Cell boards can be added and removed in an active Superdome.
- HP has been using error checking and correcting in cache memory to prevent most processor and system failures. Sun has not done so in the USII.

(*) DR = Dynamic Reconfiguration

(**) See the whitepaper “DSD and DR”.

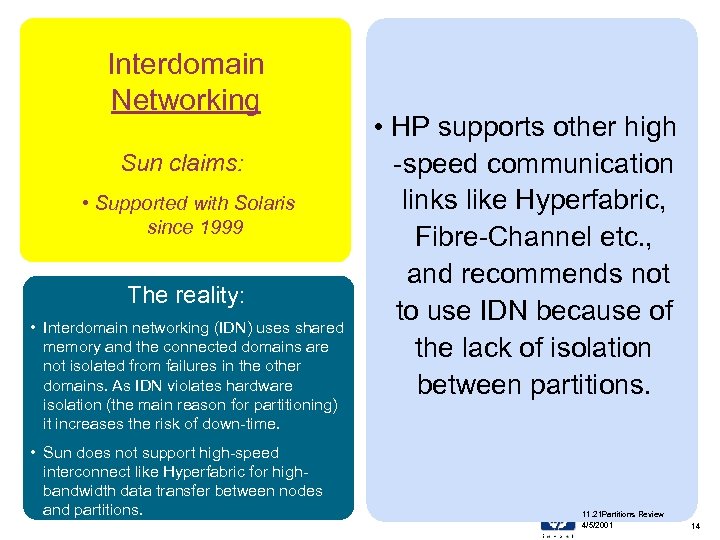

Interdomain Networking

Sun claims:

- Supported with Solaris since 1999

The reality:

- Interdomain networking (IDN) uses shared memory, so the connected domains are not isolated from failures in the other domains. Because IDN violates hardware isolation (the main reason for partitioning), it increases the risk of downtime.
- Sun does not support a high-speed interconnect like Hyperfabric for high-bandwidth data transfer between nodes and partitions.
- HP supports other high-speed communication links, such as Hyperfabric and Fibre Channel, and recommends not using IDN because of the lack of isolation between partitions.

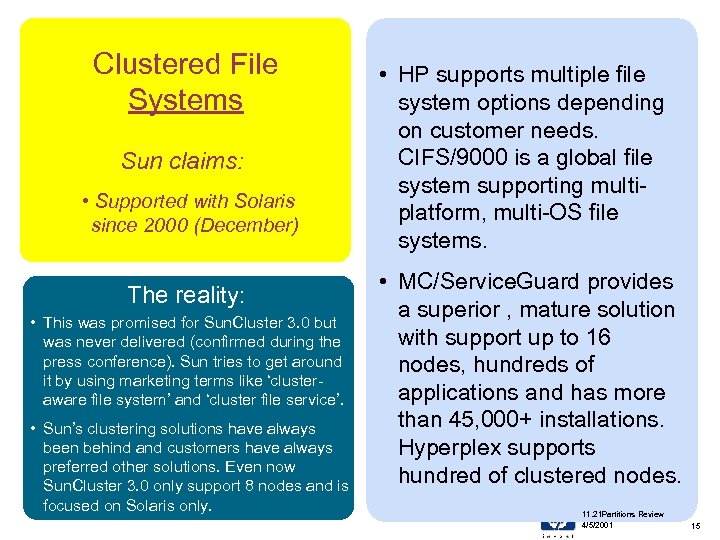

Clustered File Systems

Sun claims:

- Supported with Solaris since 2000 (December)

The reality:

- This was promised for SunCluster 3.0 but was never delivered (confirmed during the press conference). Sun tries to get around this by using marketing terms like “cluster-aware file system” and “cluster file service”.
- Sun’s clustering solutions have always been behind, and customers have always preferred other solutions. Even now, SunCluster 3.0 supports only 8 nodes and is focused on Solaris only.
- HP supports multiple file system options depending on customer needs. CIFS/9000 is a global file system supporting multi-platform, multi-OS file systems.
- MC/ServiceGuard provides a superior, mature solution with support for up to 16 nodes and hundreds of applications, and has more than 45,000 installations. Hyperplex supports hundreds of clustered nodes.

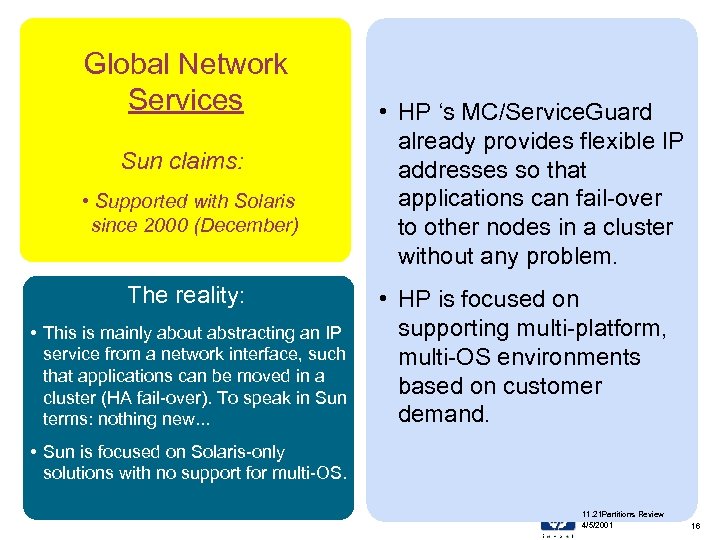

Global Network Services

Sun claims:

- Supported with Solaris since 2000 (December)

The reality:

- This is mainly about abstracting an IP service from a network interface, so that applications can be moved within a cluster (HA fail-over). To speak in Sun terms: nothing new...
- HP’s MC/ServiceGuard already provides flexible IP addresses, so that applications can fail over to other nodes in a cluster without any problem.
- HP is focused on supporting multi-platform, multi-OS environments, based on customer demand.
- Sun is focused on Solaris-only solutions with no multi-OS support.

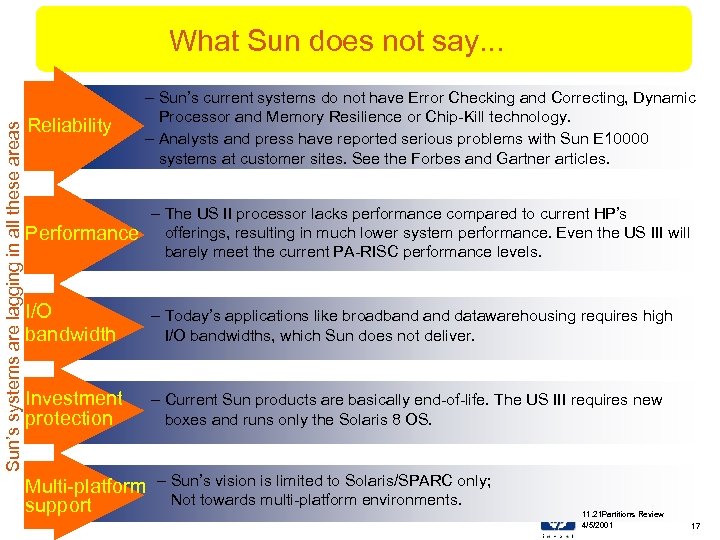

What Sun does not say...

Sun’s systems are lagging in all these areas:

- Reliability
  - Sun’s current systems do not have Error Checking and Correcting, Dynamic Processor and Memory Resilience or Chip-Kill technology.
  - Analysts and press have reported serious problems with Sun E10000 systems at customer sites. See the Forbes and Gartner articles.
- Performance
  - The USII processor lacks performance compared to HP’s current offerings, resulting in much lower system performance. Even the USIII will barely match current PA-RISC performance levels.
- I/O bandwidth
  - Today’s applications, like broadband and data warehousing, require high I/O bandwidth, which Sun does not deliver.
- Investment protection
  - Current Sun products are basically end-of-life. The USIII requires new boxes and runs only the Solaris 8 OS.
- Multi-platform support
  - Sun’s vision is limited to Solaris/SPARC only, not to multi-platform environments.

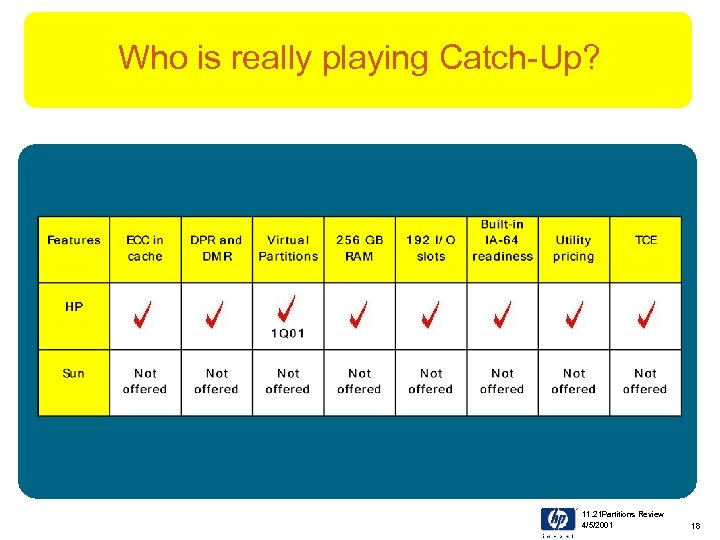

Who is really playing catch-up?

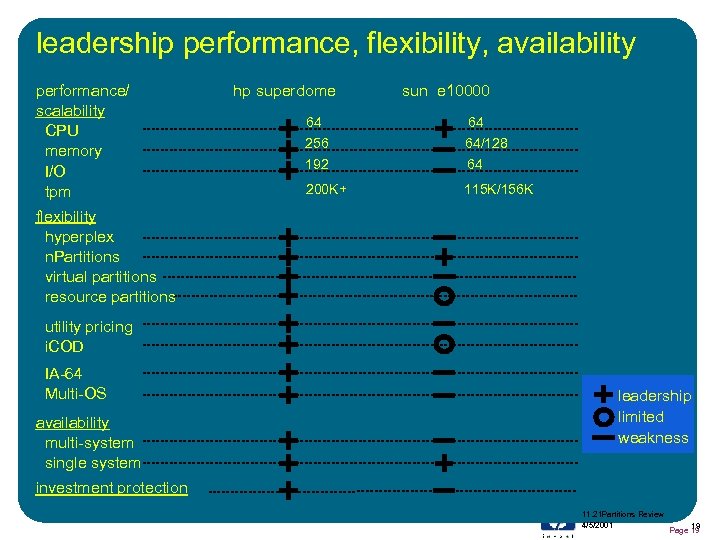

Leadership: performance, flexibility, availability

| Performance/scalability | HP Superdome | Sun E10000 |
|---|---|---|
| CPU | 64 | 64 |
| Memory | 256 | 64/128 |
| I/O | 192 | 64 |
| tpm | 200K+ | 115K/156K |

The remaining rows compare flexibility (Hyperplex, nPartitions, virtual partitions, resource partitions, utility pricing, iCOD, IA-64, multi-OS), availability (multi-system, single system) and investment protection, each rated as leadership, limited or weakness.

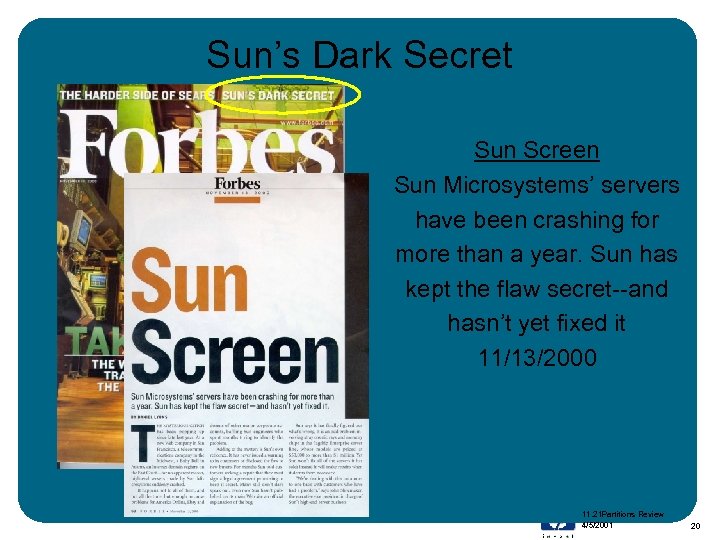

Sun’s Dark Secret

“Sun Screen” (11/13/2000): Sun Microsystems’ servers have been crashing for more than a year. Sun has kept the flaw secret--and hasn’t yet fixed it.

Sun and HP Reliability Comparisons

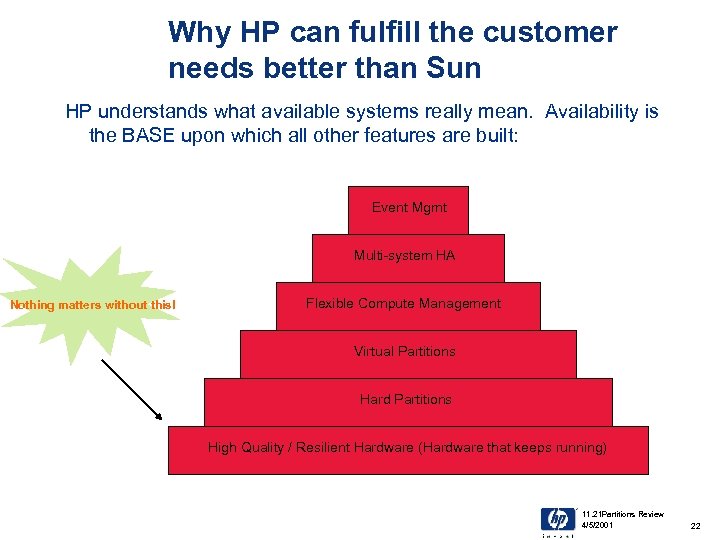

Why HP can fulfill customer needs better than Sun

HP understands what available systems really mean. Availability is the BASE upon which all other features are built. Layers, from top to base:

- Event Mgmt
- Multi-system HA
- Flexible Compute Management
- Virtual Partitions
- Hard Partitions
- High Quality / Resilient Hardware (hardware that keeps running): nothing matters without this!

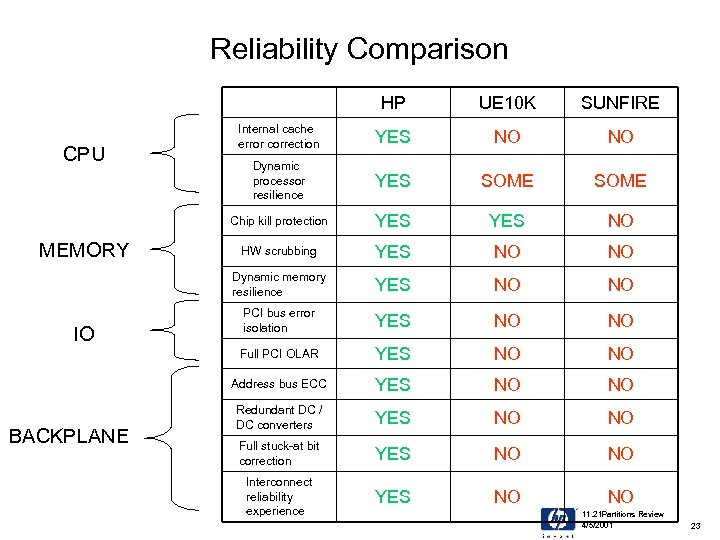

Reliability Comparison HP NO NO Dynamic processor resilience YES SOME YES NO HW scrubbing YES NO NO PCI bus error isolation YES NO NO Address bus ECC BACKPLANE YES Full PCI OLAR IO Internal cache error correction Dynamic memory resilience MEMORY SUNFIRE Chip kill protection CPU UE10K YES NO NO Redundant DC/DC converters YES NO NO Full stuck-at bit correction YES NO NO Interconnect reliability experience YES NO NO

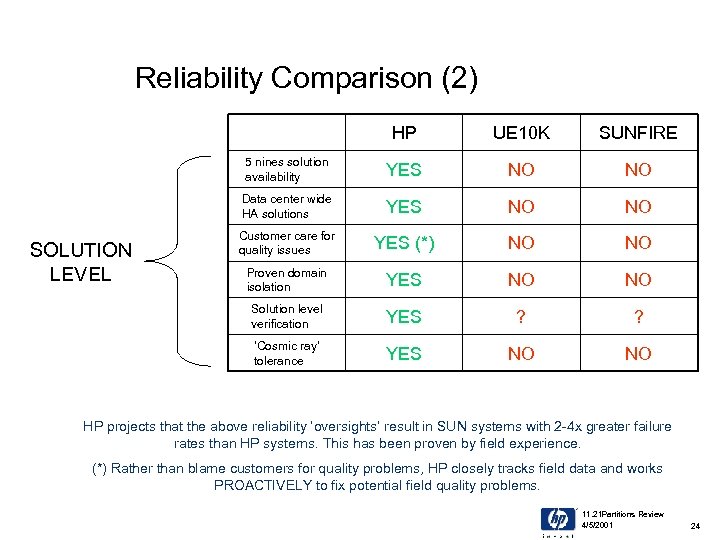

Reliability Comparison (2)

| Solution level | HP | UE10K | SunFire |
|---|---|---|---|
| 5 nines solution availability | YES | NO | NO |
| Data-center-wide HA solutions | YES | NO | NO |
| Customer care for quality issues | YES (*) | NO | NO |
| Proven domain isolation | YES | NO | NO |
| Solution level verification | YES | ? | ? |
| “Cosmic ray” tolerance | YES | NO | NO |

HP projects that the above reliability “oversights” result in Sun systems with 2-4x greater failure rates than HP systems. This has been proven by field experience.

(*) Rather than blame customers for quality problems, HP closely tracks field data and works PROACTIVELY to fix potential field quality problems.
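
The first row refers to “5 nines” solution availability, that is, 99.999% uptime. This short Python sketch converts availability targets into expected downtime per year. The 365-day year is an assumption of the sketch.

```python
# Downtime per year implied by an availability target.
MINUTES_PER_YEAR = 365 * 24 * 60   # 525,600 minutes; ignores leap years


def downtime_minutes_per_year(availability: float) -> float:
    """Expected minutes of downtime per year at a given availability."""
    return (1.0 - availability) * MINUTES_PER_YEAR


for label, a in [("three nines", 0.999), ("four nines", 0.9999), ("five nines", 0.99999)]:
    print(f"{label:<11} {a:.3%}: {downtime_minutes_per_year(a):6.1f} minutes/year")
```

Five nines leaves about 5.3 minutes of downtime per year. When unavailability is small, it is approximately the failure rate times the repair time. A 2-4x higher failure rate at the same repair time therefore means roughly 2-4x more downtime.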