
- Number of slides: 96

Structured P2P Overlays


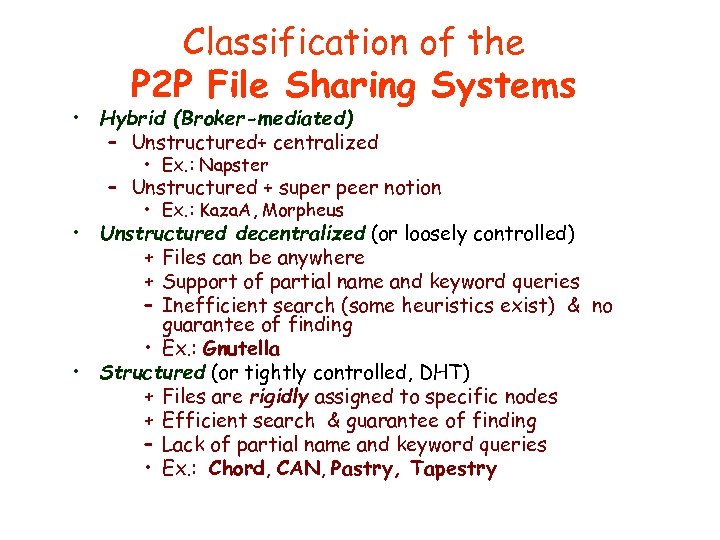

Classification of the P2P File Sharing Systems

- Hybrid (broker-mediated)
  - Unstructured + centralized, e.g., Napster
  - Unstructured + super-peer notion, e.g., KaZaA, Morpheus
- Unstructured decentralized (or loosely controlled), e.g., Gnutella
  - (+) Files can be anywhere
  - (+) Supports partial-name and keyword queries
  - (-) Inefficient search (some heuristics exist) and no guarantee of finding a file
- Structured (or tightly controlled, DHT), e.g., Chord, CAN, Pastry, Tapestry
  - (+) Files are rigidly assigned to specific nodes
  - (+) Efficient search and a guarantee of finding a file
  - (-) No support for partial-name and keyword queries


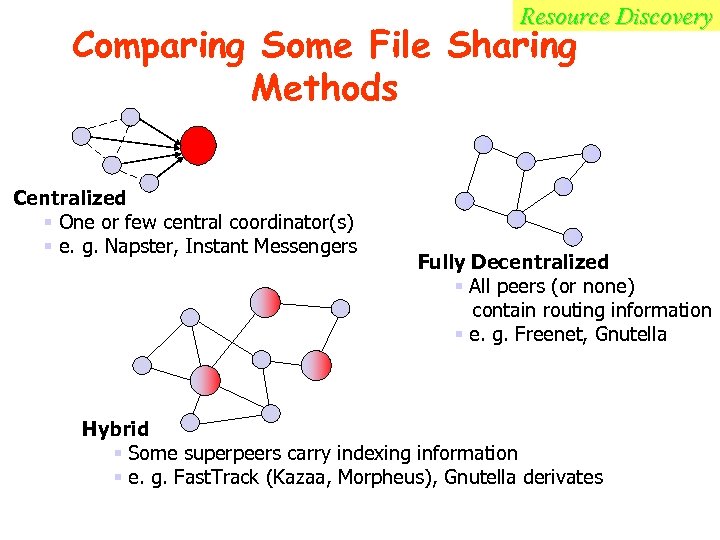

Resource Discovery: Comparing Some File Sharing Methods

- Centralized
  - One or a few central coordinators
  - e.g., Napster, instant messengers
- Fully decentralized
  - All peers (or none) contain routing information
  - e.g., Freenet, Gnutella
- Hybrid
  - Some superpeers carry indexing information
  - e.g., FastTrack (Kazaa, Morpheus), Gnutella derivatives


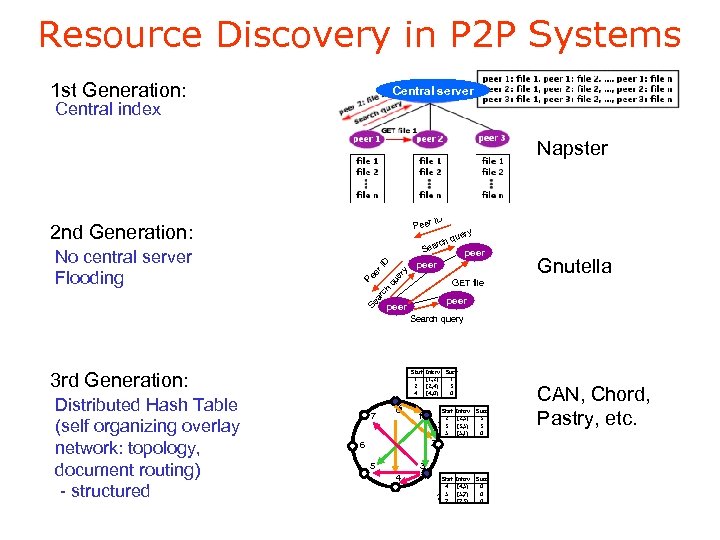

Resource Discovery in P2P Systems

- 1st generation: central server with a central index (Napster)
- 2nd generation: no central server; search queries are flooded between peers (Gnutella)
- 3rd generation: distributed hash table, a self-organizing structured overlay network (topology, document routing), e.g., CAN, Chord, Pastry

Figure: finger tables on an identifier ring 0 to 7 with nodes 0, 1 and 3:

| Node | Start | Interval | Successor |
|---|---|---|---|
| 0 | 1 | [1, 2) | 1 |
| 0 | 2 | [2, 4) | 3 |
| 0 | 4 | [4, 0) | 0 |
| 1 | 2 | [2, 3) | 3 |
| 1 | 3 | [3, 5) | 3 |
| 1 | 5 | [5, 1) | 0 |
| 3 | 4 | [4, 5) | 0 |
| 3 | 5 | [5, 7) | 0 |
| 3 | 7 | [7, 3) | 0 |


Challenges

- Duplicated messages, caused by loops and flooding
- Missing some content, caused by loops and the TTL limit
- Search is file-oriented
- Why?
  - The network is unstructured
  - The search is too specific


Structured P2P

- Second-generation P2P overlay networks
- Self-organizing
- Load-balanced
- Fault-tolerant
- Scalable guarantees on the number of hops needed to answer a query
  - This is the major difference from unstructured P2P systems
- Based on a distributed hash table interface


Distributed Hash Tables (DHT)

- A distributed version of the hash table data structure
- Stores (key, value) pairs
  - The key is like a file name
  - The value can be the file contents
- Goal: efficiently insert, look up and delete (key, value) pairs
- Each peer stores a subset of the (key, value) pairs in the system
- Core operation: find the node responsible for a key
  - Map the key to a node
  - Efficiently route the insert/lookup/delete request to that node
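The core operation can be illustrated with a single-process toy. This is a minimal sketch, not the code of any particular system: it assumes SHA-1 hashes truncated to a hypothetical 16-bit identifier space, assigns each key to the first peer whose identifier is equal to or greater than the key's (the successor rule Chord uses), and replaces network routing with a direct table lookup. `ToyDHT`, `key_id` and `responsible_node` are illustrative names.

```python
import hashlib
from bisect import bisect_left

M = 16  # hypothetical identifier length in bits (real systems use far more)


def key_id(key: str) -> int:
    """Hash a key (e.g. a file name) or peer name onto the m-bit identifier space."""
    digest = hashlib.sha1(key.encode("utf-8")).digest()
    return int.from_bytes(digest, "big") % (2 ** M)


class ToyDHT:
    """All peers simulated in one process; each peer keeps its own dict of pairs."""

    def __init__(self, node_names):
        # Peers take identifiers from the same space as the keys.
        self.nodes = sorted((key_id(name), name) for name in node_names)
        self.store = {name: {} for name in node_names}

    def responsible_node(self, key: str) -> str:
        """Map a key to the first peer whose id >= the key id, wrapping past the top."""
        ids = [node_id for node_id, _ in self.nodes]
        i = bisect_left(ids, key_id(key)) % len(self.nodes)
        return self.nodes[i][1]

    def put(self, key, value):
        self.store[self.responsible_node(key)][key] = value

    def get(self, key):
        return self.store[self.responsible_node(key)].get(key)

    def delete(self, key):
        self.store[self.responsible_node(key)].pop(key, None)
```

Because peers and keys share one identifier space, every peer can compute the same key-to-node mapping without consulting a central index; only the peer returned by `responsible_node` holds the pair.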


DHT Applications

- Many services can be built on top of a DHT interface:
  - File sharing
  - Archival storage
  - Databases
  - Naming, service discovery
  - Chat service
  - Rendezvous-based communication
  - Publish/subscribe


DHT Desirable Properties

- Keys are mapped evenly to all nodes in the network
- Each node maintains information about only a few other nodes
- Messages can be routed to a node efficiently
- Node arrivals and departures affect only a few nodes


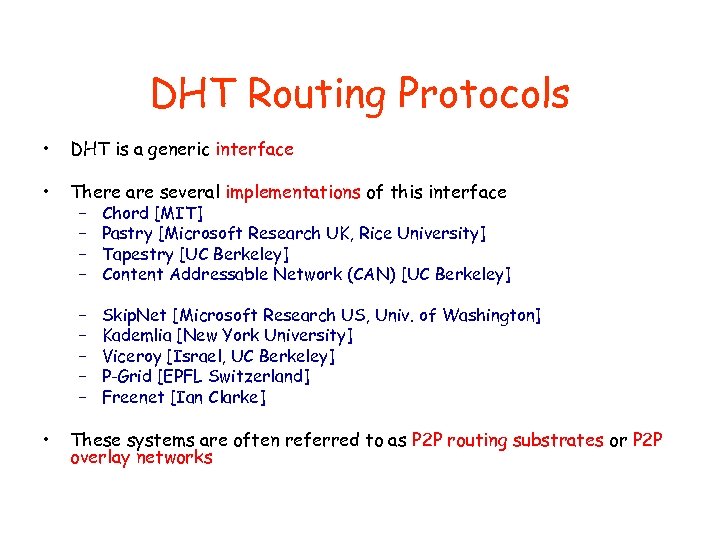

DHT Routing Protocols

- DHT is a generic interface
- There are several implementations of this interface:
  - Chord [MIT]
  - Pastry [Microsoft Research UK, Rice University]
  - Tapestry [UC Berkeley]
  - Content Addressable Network (CAN) [UC Berkeley]
  - SkipNet [Microsoft Research US, Univ. of Washington]
  - Kademlia [New York University]
  - Viceroy [Israel, UC Berkeley]
  - P-Grid [EPFL Switzerland]
  - Freenet [Ian Clarke]
- These systems are often referred to as P2P routing substrates or P2P overlay networks


Structured Overlays

- Properties
  - Topology is tightly controlled
    - Well-defined rules determine which other nodes a node connects to
  - Files are placed at precisely specified locations
    - A hash function maps file names to nodes
  - Scalable routing based on file attributes


Second-Generation P2P Systems

- They guarantee a definite answer to a query in a bounded number of network hops.
- They form a self-organizing overlay network.
- They provide a load-balanced, fault-tolerant distributed hash table, in which items can be inserted and looked up in a bounded number of forwarding hops.


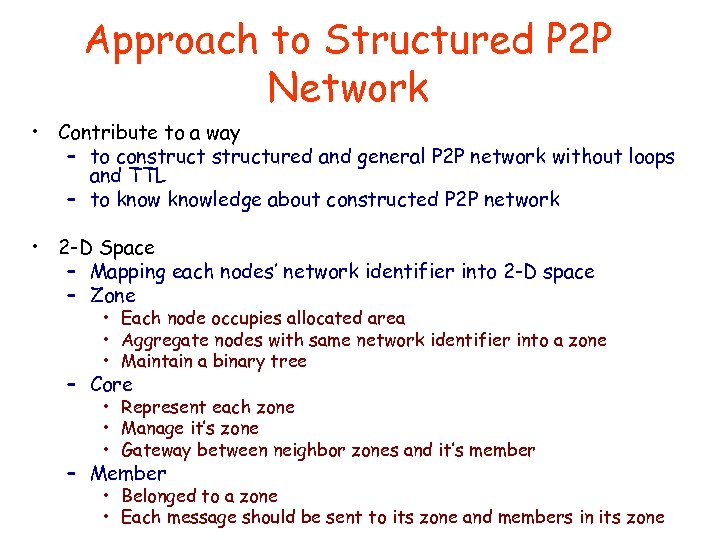

Approach to a Structured P2P Network

- Contribution
  - A way to construct a structured, general-purpose P2P network without loops and TTL
  - Knowledge about the constructed P2P network
- 2-D space
  - Each node's network identifier is mapped into a 2-D space
- Zone
  - Each node occupies an allocated area
  - Nodes with the same network identifier are aggregated into one zone
  - Each zone maintains a binary tree
- Core
  - Represents its zone
  - Manages its zone
  - Acts as the gateway between neighboring zones and its members
- Member
  - Belongs to a zone
  - Each message should be sent to its zone and to the members in that zone


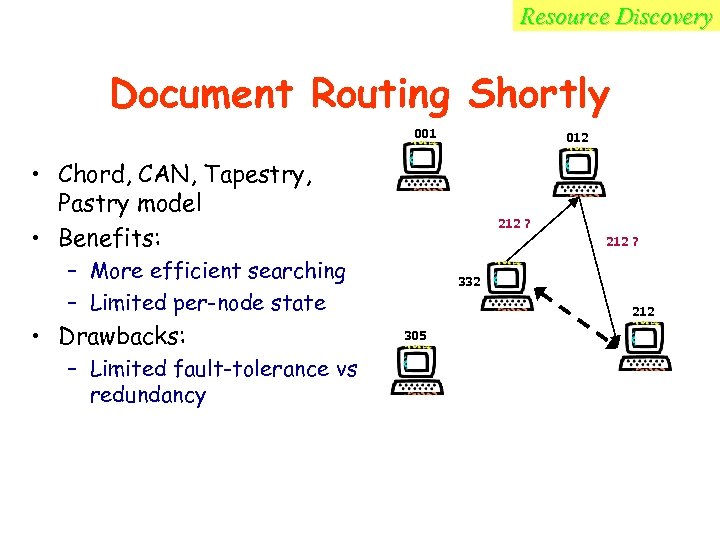

Resource Discovery: Document Routing in Brief

- Chord, CAN, Tapestry, Pastry model
- Benefits:
  - More efficient searching
  - Limited per-node state
- Drawbacks:
  - Limited fault tolerance vs. redundancy


Scalability of P2P Systems

- Peer-to-peer (P2P) file sharing systems are now among the most popular Internet applications and have become a major source of Internet traffic
- Thus, it is extremely important that these systems be scalable
- Unfortunately, the initial P2P designs have significant scaling problems:
  - Napster has a centralized directory service
  - Gnutella employs a flooding-based search mechanism that is not suitable for large systems


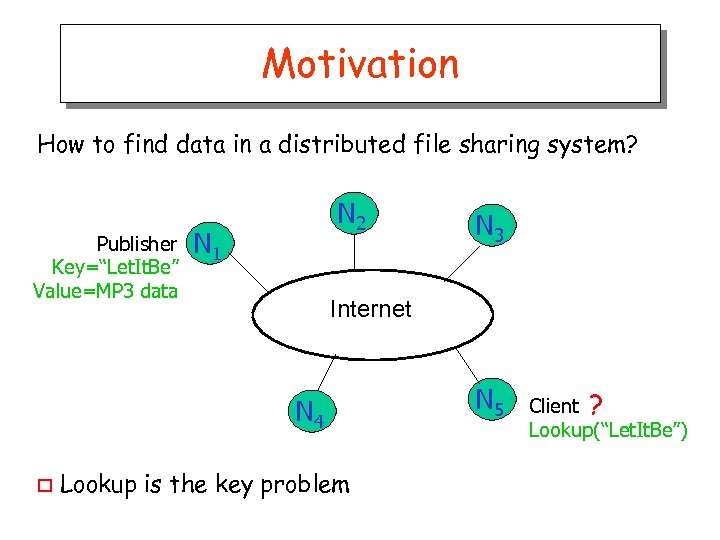

Motivation

How do we find data in a distributed file sharing system? Lookup is the key problem.

Figure: a publisher stores Key="LetItBe", Value=MP3 data at one of the nodes N1 to N5 connected over the Internet; a client issues Lookup("LetItBe") and must find the node that holds it.


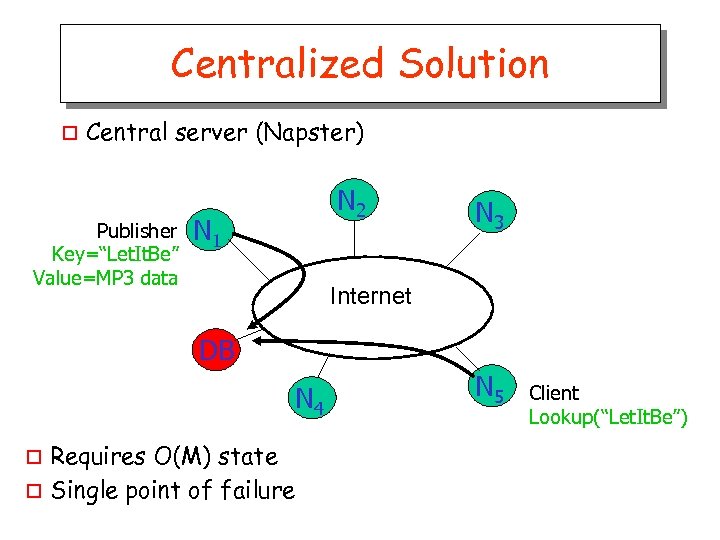

Centralized Solution

- Central server (Napster)
- Requires O(M) state
- Single point of failure

Figure: the publisher registers Key="LetItBe", Value=MP3 data with a central database (DB); the client sends Lookup("LetItBe") to that server.


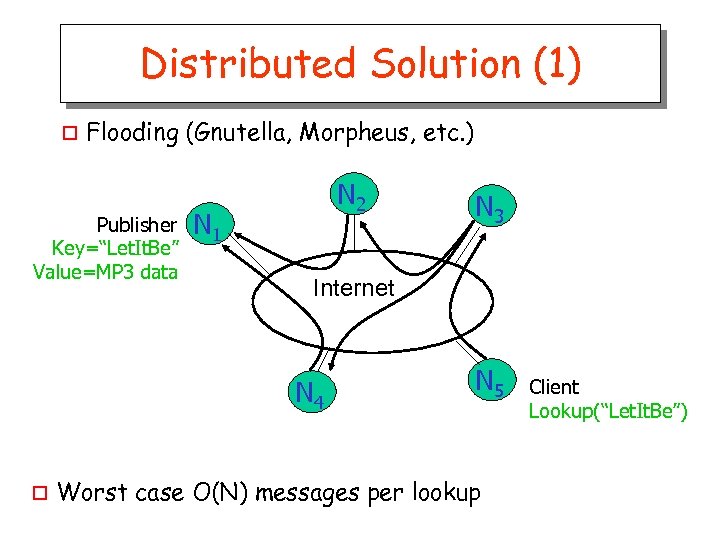

Distributed Solution (1)

- Flooding (Gnutella, Morpheus, etc.)
- Worst case: O(N) messages per lookup

Figure: the client's Lookup("LetItBe") is flooded from node to node (N1 to N5) until it reaches the node holding the publisher's key.
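Flooding, together with the duplicate messages and TTL cut-offs listed under Challenges, can be reproduced on a small graph. This sketch assumes an undirected overlay given as an adjacency dict; `flood`, `EXAMPLE_OVERLAY` and the parameter names are hypothetical. Each peer forwards the query to every neighbour except the one it came from, so the message count grows with the number of links rather than with the length of any single path.

```python
from collections import deque

# Hypothetical five-peer overlay containing cycles (N1-N2-N3 and N2-N3-N5-N4).
EXAMPLE_OVERLAY = {
    "N1": ["N2", "N3"],
    "N2": ["N1", "N3", "N4"],
    "N3": ["N1", "N2", "N5"],
    "N4": ["N2", "N5"],
    "N5": ["N3", "N4"],
}


def flood(graph, source, has_item, ttl):
    """Breadth-first flooding with a TTL and duplicate suppression.

    Returns (peers reached that hold the item, messages sent, duplicate deliveries).
    """
    seen = {source}
    hits = [source] if has_item(source) else []
    messages = duplicates = 0
    queue = deque([(source, None, ttl)])
    while queue:
        peer, sender, remaining = queue.popleft()
        if remaining == 0:
            continue  # TTL exhausted: this peer does not forward the query
        for neighbour in graph[peer]:
            if neighbour == sender:
                continue  # do not echo the query back to where it came from
            messages += 1
            if neighbour in seen:
                duplicates += 1  # a loop delivered the same query again
                continue
            seen.add(neighbour)
            if has_item(neighbour):
                hits.append(neighbour)
            queue.append((neighbour, peer, remaining - 1))
    return hits, messages, duplicates
```

With the item at N4, a query from N1 with TTL 3 finds it after 8 messages, 4 of them duplicates; with TTL 1 the same query sends only 2 messages and misses N4 entirely.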


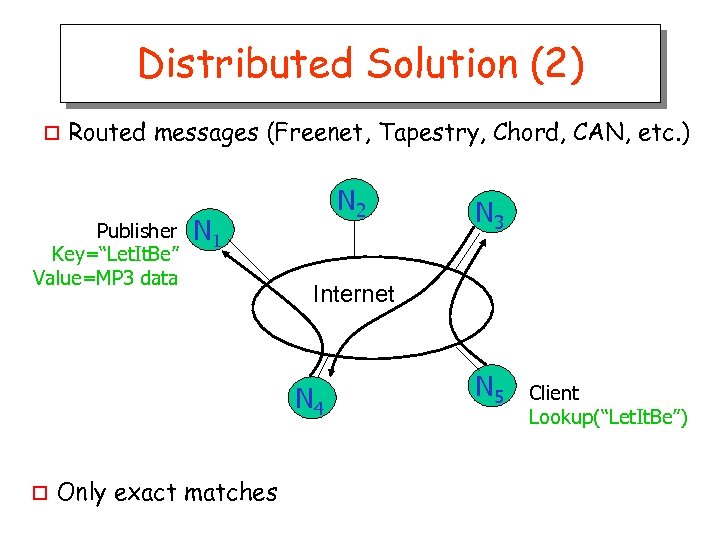

Distributed Solution (2)

- Routed messages (Freenet, Tapestry, Chord, CAN, etc.)
- Only exact matches

Figure: the client's Lookup("LetItBe") is routed hop by hop toward the node that holds the publisher's key.


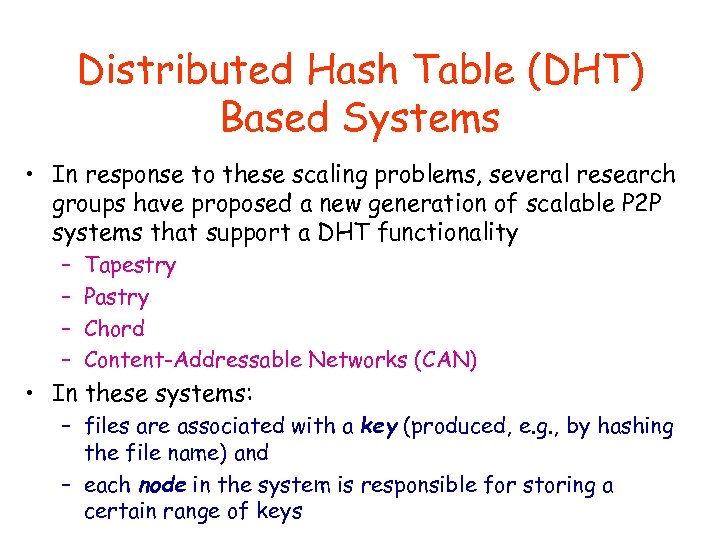

Distributed Hash Table (DHT) Based Systems

- In response to these scaling problems, several research groups have proposed a new generation of scalable P2P systems that support DHT functionality:
  - Tapestry
  - Pastry
  - Chord
  - Content-Addressable Networks (CAN)
- In these systems:
  - files are associated with a key (produced, e.g., by hashing the file name), and
  - each node in the system is responsible for storing a certain range of keys


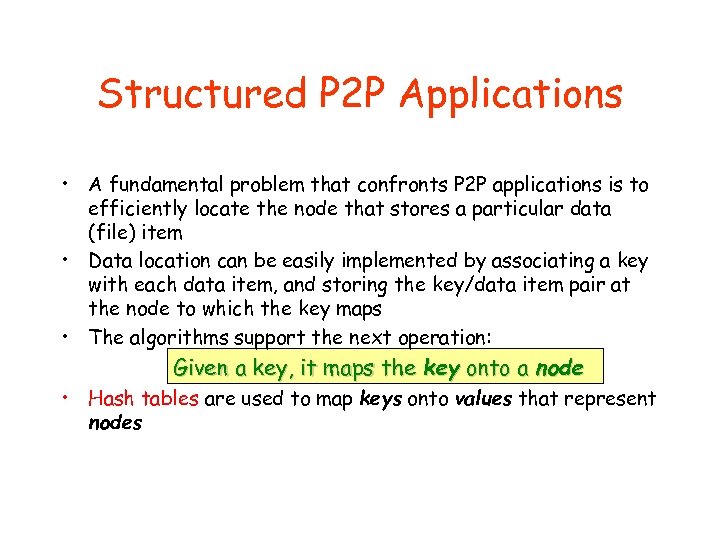

Structured P2P Applications

- A fundamental problem confronting P2P applications is to efficiently locate the node that stores a particular data (file) item
- Data location can be implemented easily by associating a key with each data item and storing the key/data-item pair at the node to which the key maps
- The algorithms support the following operation: given a key, map the key onto a node
- Hash tables are used to map keys onto values that represent nodes


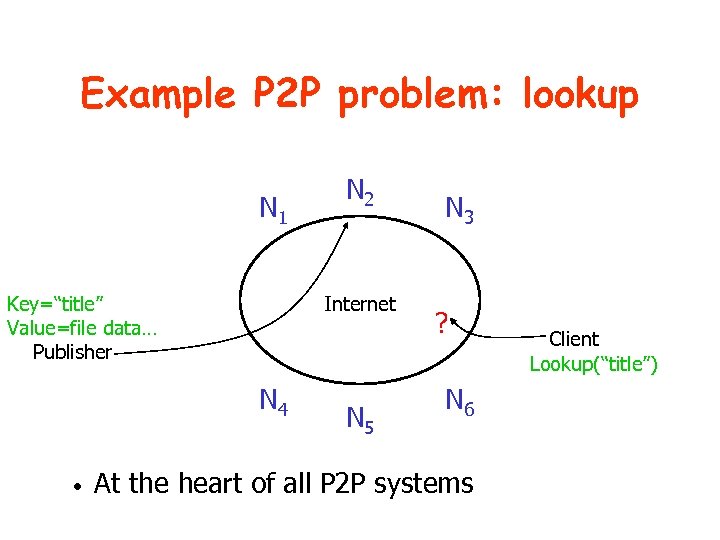

Example P2P Problem: Lookup

- At the heart of all P2P systems

Figure: a publisher stores Key="title", Value=file data at one of the nodes N1 to N6 on the Internet; a client issues Lookup("title") and must find that node.


Structured P2P Applications

- P2P routing protocols like Chord, Pastry, CAN, and Tapestry induce a connected overlay network across the Internet, with a rich structure that enables efficient key lookups
- Such protocols have two parts:
  - Looking up a file item in a specially constructed overlay structure
  - A protocol that allows a node to join or leave the network, properly rearranging the ideal overlay to account for its presence or absence


Looking Up

- It is the basic operation in these DHT systems
- lookup(key) returns the identity (e.g., the IP address) of the node storing the object with that key
- This operation allows nodes to put and get files based on their key, thereby supporting the hash-table-like interface


Document Routing

- The core of these DHT systems is the routing algorithm
- The DHT nodes form an overlay network, with each node having several other nodes as neighbors
- When a lookup(key) is issued, the lookup is routed through the overlay network to the node responsible for that key
- The scalability of these DHT algorithms is tied directly to the efficiency of their routing algorithms


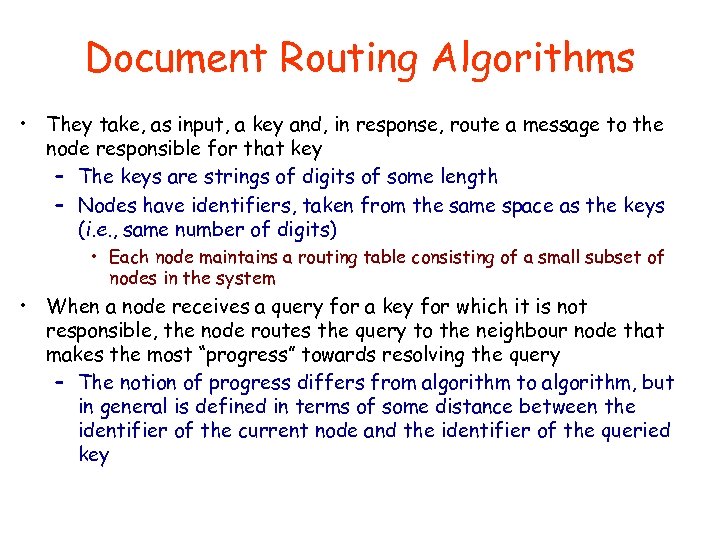

Document Routing Algorithms

- They take a key as input and, in response, route a message to the node responsible for that key
  - The keys are strings of digits of some length
  - Nodes have identifiers taken from the same space as the keys (i.e., with the same number of digits)
- Each node maintains a routing table consisting of a small subset of the nodes in the system
- When a node receives a query for a key for which it is not responsible, it routes the query to the neighbor that makes the most "progress" towards resolving the query
  - The notion of progress differs from algorithm to algorithm, but in general it is defined in terms of some distance between the identifier of the current node and the identifier of the queried key
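The forwarding rule above can be written once with the distance left as a parameter. This is a sketch, not any one protocol: `greedy_route`, `EXAMPLE_TABLE` and its contents are hypothetical, and the example uses plain numeric difference as the progress metric (Chord instead measures clockwise distance on a ring, Pastry shared-prefix length, CAN Cartesian distance). A node stops forwarding when no neighbor is strictly closer, and treats itself as responsible.

```python
def greedy_route(start, key, neighbours, distance):
    """Forward a query hop by hop to the neighbour that makes the most progress.

    neighbours: dict mapping node -> list of nodes in its routing table.
    distance:   function(node_id, key) -> number; defines "progress".
    Returns the list of nodes visited; the last one is taken as responsible.
    """
    path = [start]
    current = start
    while True:
        best = min(neighbours[current], key=lambda n: distance(n, key), default=None)
        # Stop when no neighbour is strictly closer to the key than we are.
        if best is None or distance(best, key) >= distance(current, key):
            return path
        current = best
        path.append(current)


# Hypothetical routing tables: each node knows only a couple of others.
EXAMPLE_TABLE = {
    3: [12, 33],
    12: [3, 20],
    20: [12, 33],
    33: [20, 41, 3],
    41: [33, 50],
    50: [41],
}
```

With `distance=lambda n, k: abs(n - k)`, a query for key 45 issued at node 3 travels 3 → 33 → 41 and stops at 41, the node numerically closest to 45. Because every hop strictly reduces the distance, the loop cannot revisit a node.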


Content-Addressable Network (CAN)

- A typical document routing method
- A virtual Cartesian coordinate space is used
- The entire space is partitioned among all the nodes
  - Every node "owns" a zone in the overall space
- Abstraction
  - Data can be stored at "points" in the space
  - Messages can be routed from one "point" to another
- Point = the node that owns the enclosing zone


Basic Concept of CAN

- Data stored in the CAN is addressed by name (i.e., key), not by location (i.e., IP address)
- Task of the routing: how to find where a file is placed?


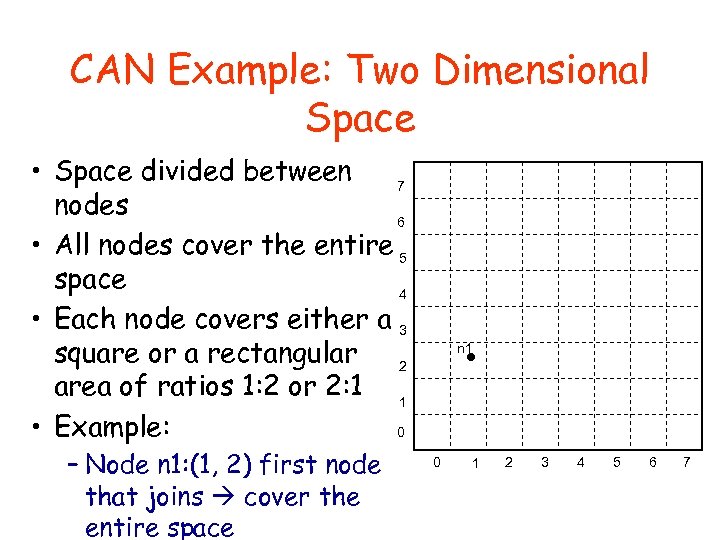

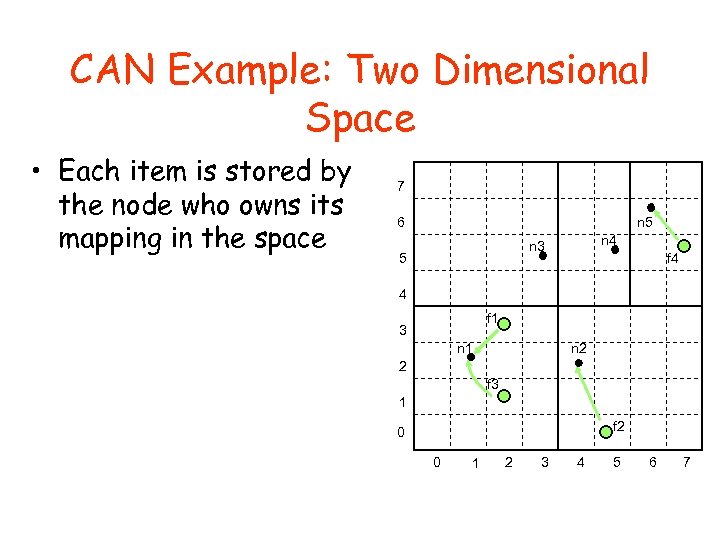

CAN Example: Two-Dimensional Space

- The space is divided among the nodes
- Together, the nodes cover the entire space
- Each node covers either a square or a rectangular area with a ratio of 1:2 or 2:1
- Example:
  - Node n1 at (1, 2) is the first node to join and covers the entire space

Figure: 8 x 8 coordinate grid, x and y from 0 to 7.


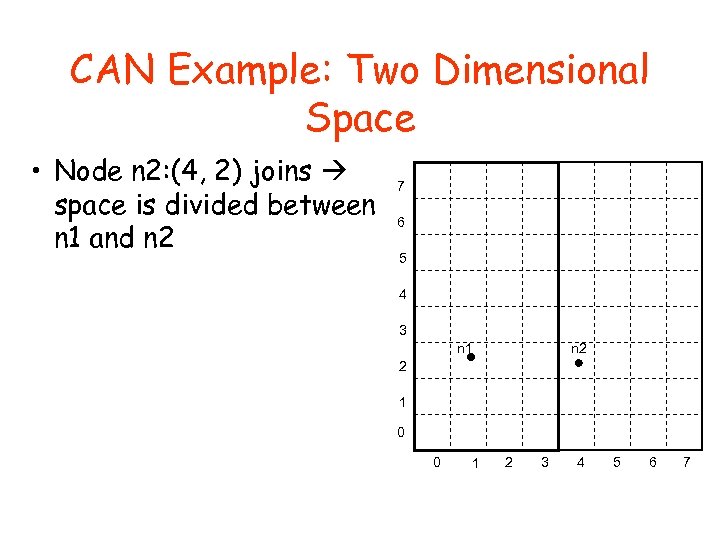

CAN Example: Two-Dimensional Space

- Node n2 at (4, 2) joins; the space is divided between n1 and n2


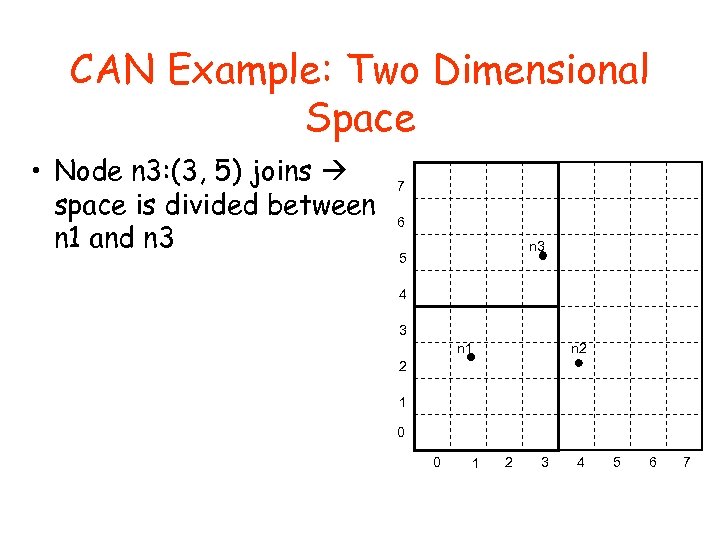

CAN Example: Two-Dimensional Space

- Node n3 at (3, 5) joins; the space is divided between n1 and n3


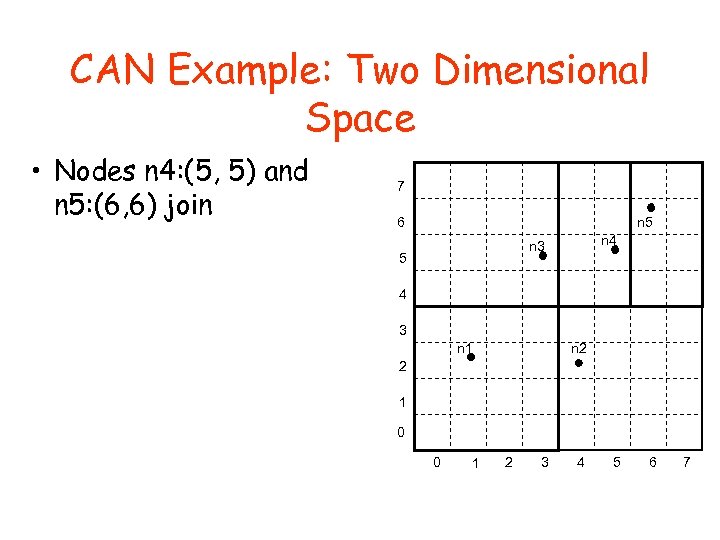

CAN Example: Two-Dimensional Space

- Nodes n4 at (5, 5) and n5 at (6, 6) join


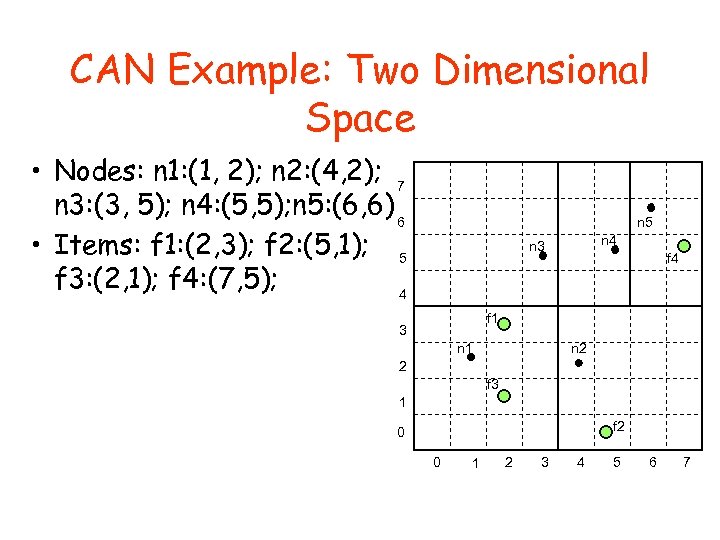

CAN Example: Two-Dimensional Space

- Nodes: n1: (1, 2); n2: (4, 2); n3: (3, 5); n4: (5, 5); n5: (6, 6)
- Items: f1: (2, 3); f2: (5, 1); f3: (2, 1); f4: (7, 5)


CAN Example: Two-Dimensional Space

- Each item is stored by the node that owns the zone its coordinates map to
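The join sequence and item placement in this example can be replayed in code. A minimal sketch, assuming the owner of the zone containing the newcomer's point splits that zone in half along its longer side (x on ties) and hands over the half containing the point; this split rule is an assumption, chosen because it keeps every zone a square or a 1:2 / 2:1 rectangle as the slides state. `Zone` and `Can2D` are hypothetical names, and the space size is assumed to be a power of two.

```python
from dataclasses import dataclass


@dataclass
class Zone:
    owner: str
    lo: tuple  # (x_min, y_min), inclusive
    hi: tuple  # (x_max, y_max), exclusive

    def contains(self, p):
        return all(l <= c < h for l, c, h in zip(self.lo, p, self.hi))


class Can2D:
    def __init__(self, size, first_node):
        # The first node to join owns the whole size x size space.
        self.zones = [Zone(first_node, (0, 0), (size, size))]

    def owner(self, p):
        """The node whose zone encloses point p stores the item mapped to p."""
        return next(z.owner for z in self.zones if z.contains(p))

    def join(self, node, p):
        """Split the zone containing p in half; the new node takes the half with p."""
        zone = next(z for z in self.zones if z.contains(p))
        width = zone.hi[0] - zone.lo[0]
        height = zone.hi[1] - zone.lo[1]
        dim = 0 if width >= height else 1  # cut across the longer side, x on ties
        mid = (zone.lo[dim] + zone.hi[dim]) // 2
        cut_hi = list(zone.hi)
        cut_hi[dim] = mid
        cut_lo = list(zone.lo)
        cut_lo[dim] = mid
        upper = Zone(node, tuple(cut_lo), zone.hi)
        lower = Zone(node, zone.lo, tuple(cut_hi))
        new_zone, kept_zone = (upper, lower) if p[dim] >= mid else (lower, upper)
        kept_zone.owner = zone.owner  # the previous owner keeps the other half
        self.zones.remove(zone)
        self.zones += [kept_zone, new_zone]
```

Replaying the joins of n2 to n5 under this rule yields n1: [0,4) x [0,4), n2: [4,8) x [0,4), n3: [0,4) x [4,8), n4: [4,6) x [4,8) and n5: [6,8) x [4,8), so f1 and f3 are stored by n1, f2 by n2 and f4 by n5.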


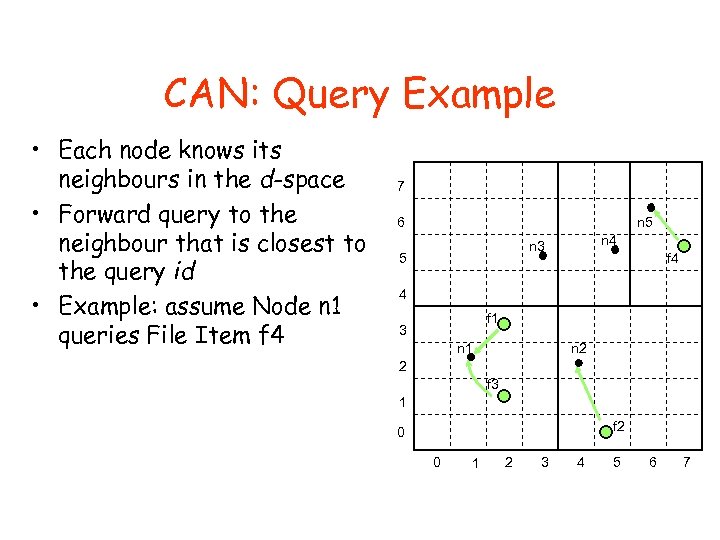

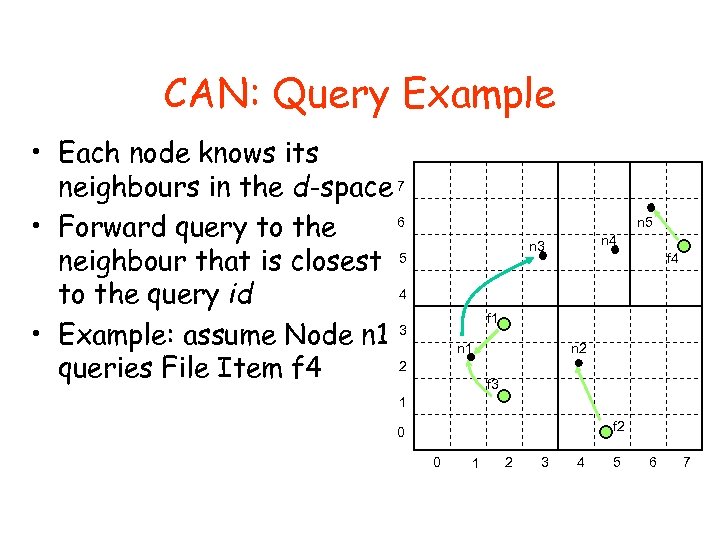

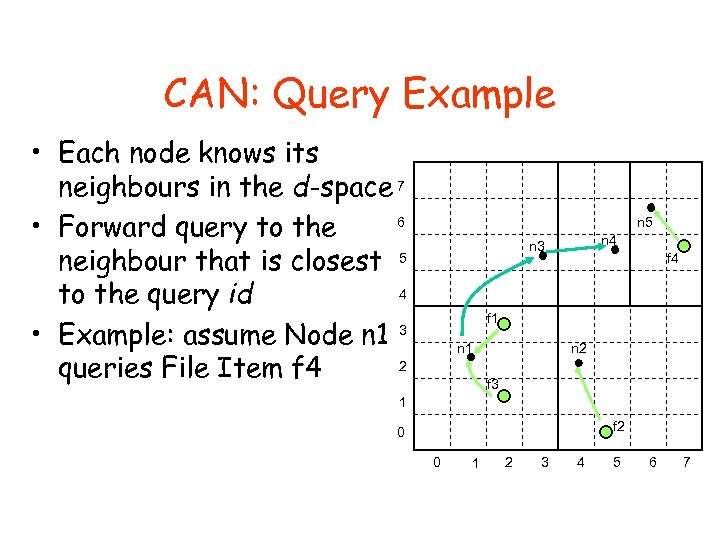

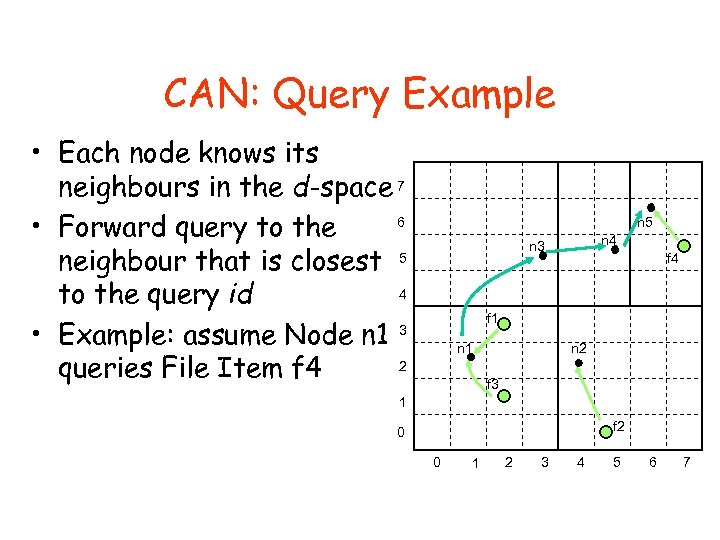

CAN: Query Example

- Each node knows its neighbors in the d-dimensional space
- Forward the query to the neighbor that is closest to the query ID
- Example: assume node n1 queries file item f4
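Greedy forwarding can be sketched on the zone layout that the join sequence produces when each split halves the longer side (an assumed rule). Two zones are treated as neighbors when they share an edge segment (touching only at a corner does not count), and each hop goes to the neighbor whose zone lies nearest to the target point. `ZONES`, `can_route` and the helper names are hypothetical, and the path drawn in the slide animation may differ.

```python
import math

# Assumed zone layout (x_min, y_min, x_max, y_max), half-open on the max side.
ZONES = {
    "n1": (0, 0, 4, 4),
    "n2": (4, 0, 8, 4),
    "n3": (0, 4, 4, 8),
    "n4": (4, 4, 6, 8),
    "n5": (6, 4, 8, 8),
}


def contains(zone, p):
    x0, y0, x1, y1 = zone
    return x0 <= p[0] < x1 and y0 <= p[1] < y1


def are_neighbours(a, b):
    """Neighbours abut along one axis and overlap (not just touch) along the other."""
    ax0, ay0, ax1, ay1 = a
    bx0, by0, bx1, by1 = b
    x_overlap = ax0 < bx1 and bx0 < ax1
    y_overlap = ay0 < by1 and by0 < ay1
    x_abut = ax1 == bx0 or bx1 == ax0
    y_abut = ay1 == by0 or by1 == ay0
    return (x_abut and y_overlap) or (y_abut and x_overlap)


def distance_to_zone(zone, p):
    """Euclidean distance from p to the nearest point of the zone's rectangle."""
    x0, y0, x1, y1 = zone
    dx = max(x0 - p[0], 0, p[0] - x1)
    dy = max(y0 - p[1], 0, p[1] - y1)
    return math.hypot(dx, dy)


def can_route(source, target):
    """Hop to the neighbour nearest the target until the current zone contains it."""
    path = [source]
    while not contains(ZONES[path[-1]], target):
        here = ZONES[path[-1]]
        nbrs = [n for n, z in ZONES.items() if z != here and are_neighbours(here, z)]
        best = min(nbrs, key=lambda n: distance_to_zone(ZONES[n], target))
        if distance_to_zone(ZONES[best], target) >= distance_to_zone(here, target):
            raise RuntimeError("greedy forwarding made no progress")
        path.append(best)
    return path
```

In this sketch, n1 querying f4 at (7, 5) is forwarded n1 → n2 → n5; each node needs to know only the zones adjacent to its own, which is why the routing state stays O(d).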


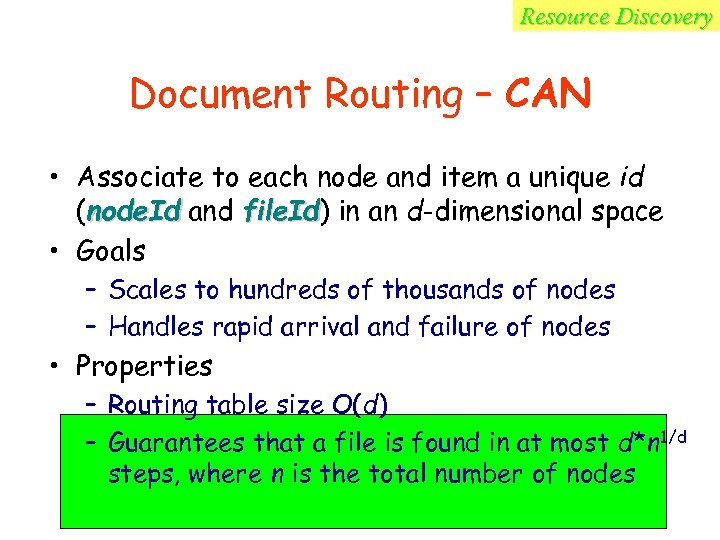

Resource Discovery: Document Routing in CAN

- Associate with each node and each item a unique ID (nodeId and fileId) in a d-dimensional space
- Goals
  - Scale to hundreds of thousands of nodes
  - Handle rapid arrival and failure of nodes
- Properties
  - Routing table size O(d)
  - Guarantees that a file is found in at most d·n^(1/d) steps, where n is the total number of nodes


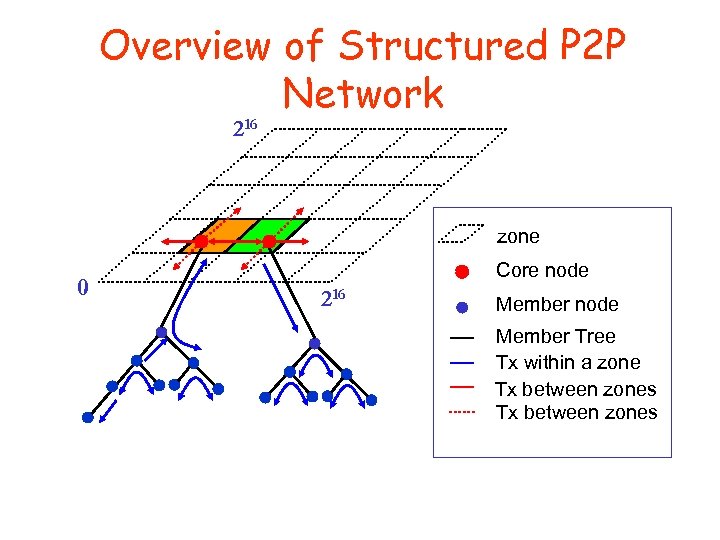

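Overview of Structured P2P Network

Figure: a 2-D space with both axes running from 0 to 2^16, divided into zones; each zone has a core node and member nodes connected by a member tree. Legend: transmission within a zone; transmission between zones.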


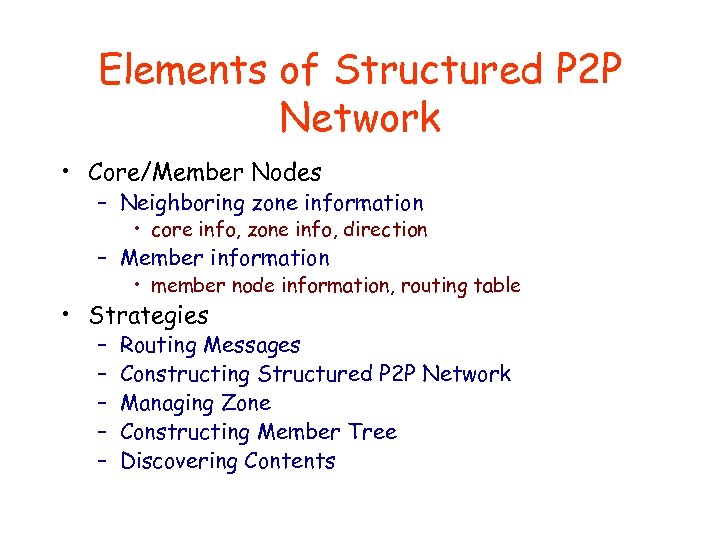

Elements of Structured P2P Network

- Core/member nodes
  - Neighboring zone information
    - Core info, zone info, direction
  - Member information
    - Member node information, routing table
- Strategies
  - Routing messages
  - Constructing the structured P2P network
  - Managing zones
  - Constructing the member tree
  - Discovering contents


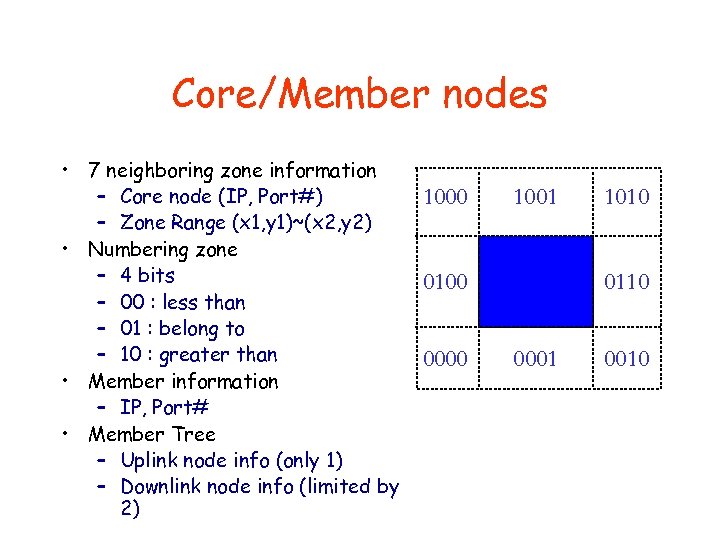

Core/Member Nodes

- Information on 7 neighboring zones
  - Core node (IP, port number)
  - Zone range (x1, y1) ~ (x2, y2)
- Zone numbering (direction code)
  - 4 bits, 2 per coordinate
  - 00: less than
  - 01: belongs to
  - 10: greater than
- Member information
  - IP, port number
- Member tree
  - Uplink node info (only 1)
  - Downlink node info (at most 2)

Figure: neighboring zones labeled with direction codes 1000, 1001, 0110, 0100, 0000, 1010, 0001, 0010.
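The 4-bit zone numbering can be computed as two 2-bit axis codes. This sketch assumes the X code occupies the high two bits and that zone ranges are inclusive at both ends; neither detail is stated on the slide, and `axis_code` and `direction_code` are hypothetical names.

```python
def axis_code(value, low, high):
    """2-bit code on one axis: 00 less than, 01 belongs to, 10 greater than."""
    if value < low:
        return 0b00
    if value <= high:
        return 0b01
    return 0b10


def direction_code(point, zone):
    """4-bit direction of a point relative to zone ((x1, y1), (x2, y2)).

    Assumption: X code in the high two bits, Y code in the low two bits.
    """
    (x1, y1), (x2, y2) = zone
    code = (axis_code(point[0], x1, x2) << 2) | axis_code(point[1], y1, y2)
    return format(code, "04b")
```

Probing one point below, inside and above the zone on each axis produces nine codes: 0101 for the zone itself and exactly the eight codes shown in the figure for the surrounding zones.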


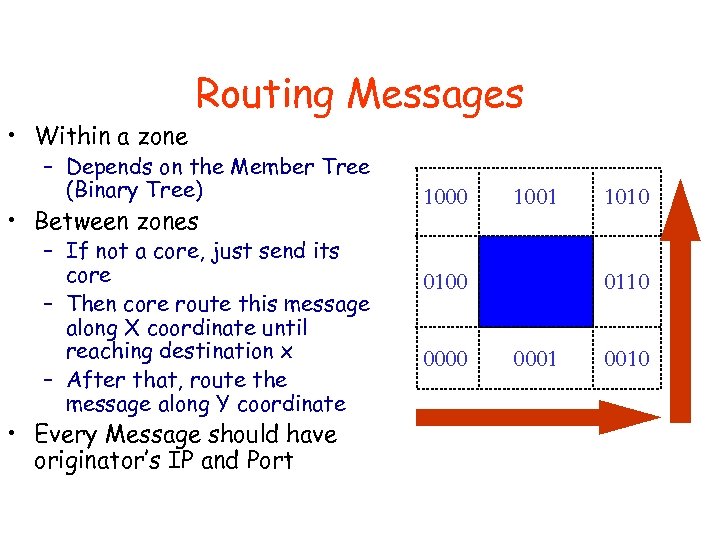

Routing Messages

- Within a zone
  - Depends on the member tree (a binary tree)
- Between zones
  - A node that is not a core just sends the message to its core
  - The core then routes the message along the X coordinate until it reaches the destination x
  - After that, the message is routed along the Y coordinate
- Every message should carry the originator's IP and port

Figure: zones labeled with direction codes 1000, 1001, 0110, 0100, 0000, 1010, 0001, 0010.
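The core-to-core part of this rule is dimension-order routing: finish the X leg, then the Y leg. The sketch below assumes, for simplicity, that zones form a regular grid addressed by (column, row); real zones are uneven, so `xy_route` and the grid model are hypothetical. Each hop reduces the remaining X or Y distance by one, so a message can never loop, which is the property the approach relies on to avoid TTLs.

```python
def xy_route(src, dst):
    """Dimension-order routing over a grid of zones: X first, then Y.

    src, dst: (column, row) zone indices (a regular grid is an assumption).
    Returns the zones visited, including both endpoints.
    """
    x, y = src
    path = [(x, y)]
    step = 1 if dst[0] > x else -1
    while x != dst[0]:  # route along the X coordinate until x matches
        x += step
        path.append((x, y))
    step = 1 if dst[1] > y else -1
    while y != dst[1]:  # then route along the Y coordinate
        y += step
        path.append((x, y))
    return path
```

For example, `xy_route((0, 0), (2, 1))` visits (0, 0), (1, 0), (2, 0) and finally (2, 1).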


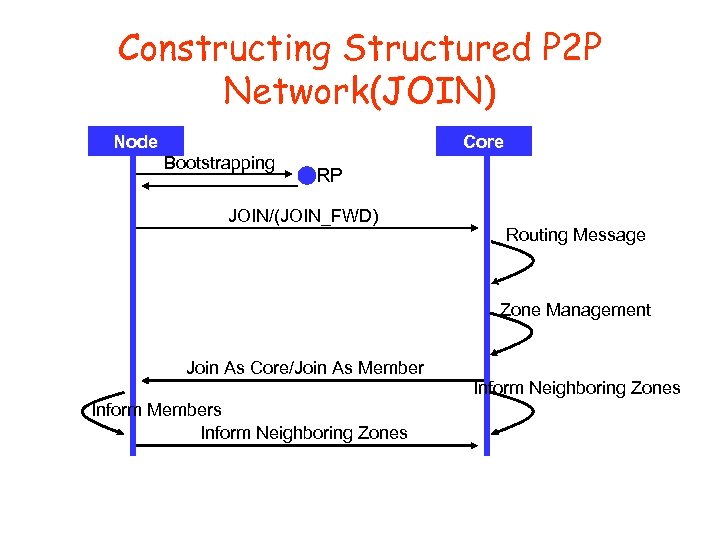

Constructing Structured P2P Network (JOIN)

Flowchart: node bootstrapping (core, RP) → JOIN / JOIN_FWD routing message → zone management (Join As Core / Join As Member) → inform members, inform neighboring zones.


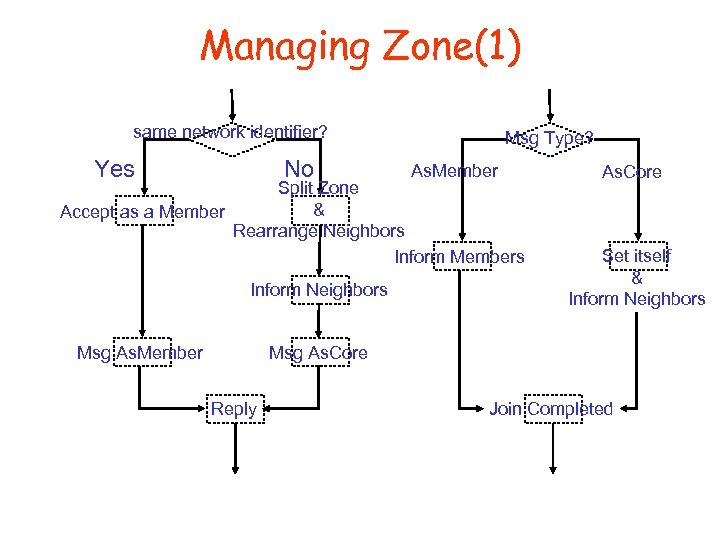

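Managing Zone (1)

Flowchart: on a join request, check whether the new node has the same network identifier.
- Yes: accept it as a member and inform the members; reply with an AsMember message
- No: split the zone, rearrange neighbors and inform neighbors; reply with an AsCore message

On the joining node, depending on the reply's message type:
- AsMember: the join is completed
- AsCore: set itself as core and inform neighbors; the join is completed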


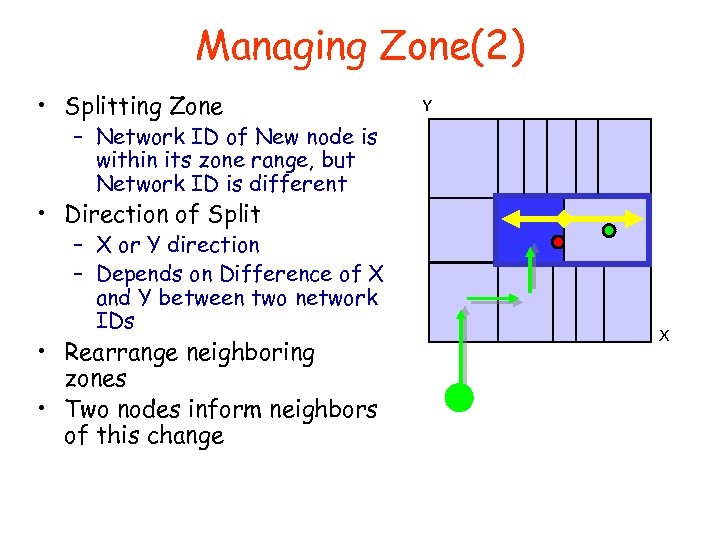

Managing Zone (2)

- Splitting a zone
  - Happens when the new node's network ID lies within the zone's range but differs from the existing node's network ID
- Direction of the split
  - X or Y direction
  - Depends on the differences in X and in Y between the two network IDs
- Rearrange neighboring zones
- Both nodes inform their neighbors of this change

Figure: a zone split along the X or Y axis.
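The slide says only that the split direction depends on the X and Y differences. One plausible reading is sketched below, assuming the zone is cut across the axis on which the two network IDs differ more, at the midpoint between them, so each node ends up in its own half. `split_zone` and this rule are hypothetical, not the authors' stated method.

```python
def split_zone(zone, old_id, new_id):
    """Split zone ((x1, y1), (x2, y2)), inclusive bounds, between two 2-D IDs.

    Assumed rule: cut across the axis with the larger ID difference, at the
    midpoint, so the two IDs fall into different halves.
    Returns (zone_of_old_node, zone_of_new_node).
    """
    (x1, y1), (x2, y2) = zone
    dx = abs(old_id[0] - new_id[0])
    dy = abs(old_id[1] - new_id[1])
    axis = 0 if dx >= dy else 1  # IDs differ, so this axis has a nonzero gap
    cut = (old_id[axis] + new_id[axis]) // 2  # smaller ID <= cut < larger ID
    low = [[x1, y1], [x2, y2]]
    high = [[x1, y1], [x2, y2]]
    low[1][axis] = cut  # low half ends at the cut (inclusive)
    high[0][axis] = cut + 1  # high half starts just after it
    low_zone = tuple(map(tuple, low))
    high_zone = tuple(map(tuple, high))
    if old_id[axis] <= cut:
        return low_zone, high_zone
    return high_zone, low_zone
```

For IDs (100, 200) and (900, 300) the X gap dominates, so the zone is cut at x = 500; for (10, 900) and (20, 100) the cut is at y = 500 instead.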


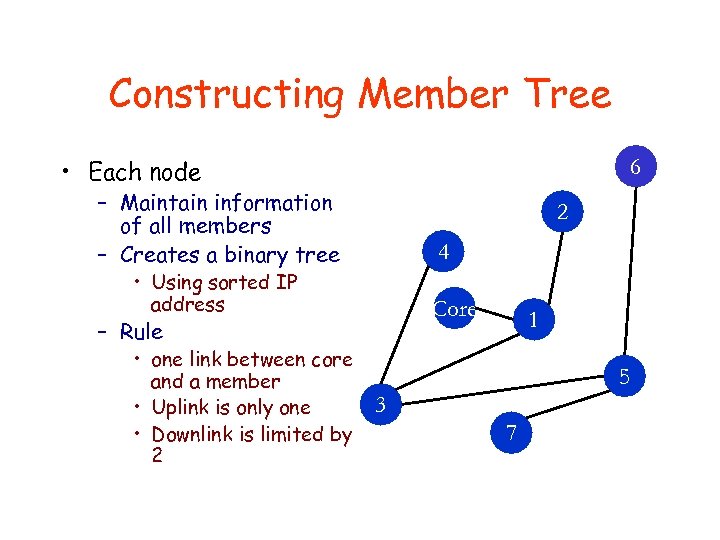

Constructing Member Tree

- Each node
  - Maintains information on all members
  - Creates a binary tree using sorted IP addresses
- Rules
  - One link between the core and a member
  - Only one uplink
  - At most two downlinks

Figure: the core linked to a binary tree of members numbered 1 to 7.
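One way to satisfy these rules is sketched below, assuming (the slide does not say) that the tree is a balanced binary search tree over the members' IP addresses in numeric order: the middle address becomes the root and is the single member linked to the core, and each half recursively forms a subtree. `build_member_tree` is a hypothetical name; sorting with `ipaddress.ip_address` avoids the string-order trap where "10.0.0.10" sorts before "10.0.0.9".

```python
import ipaddress


def build_member_tree(member_ips):
    """Balanced binary tree over members sorted numerically by IP address.

    Returns {ip: (uplink, [downlinks])}; the root's uplink is "core".
    Every member has exactly one uplink and at most two downlinks.
    """
    ordered = sorted(member_ips, key=ipaddress.ip_address)
    tree = {}

    def attach(lo, hi, parent):
        if lo >= hi:
            return None
        mid = (lo + hi) // 2
        ip = ordered[mid]
        tree[ip] = (parent, [])
        for child in (attach(lo, mid, ip), attach(mid + 1, hi, ip)):
            if child is not None:
                tree[ip][1].append(child)
        return ip

    attach(0, len(ordered), "core")
    return tree
```

Since every member can compute the same tree from the same sorted list, the members agree on their uplinks and downlinks without further negotiation.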


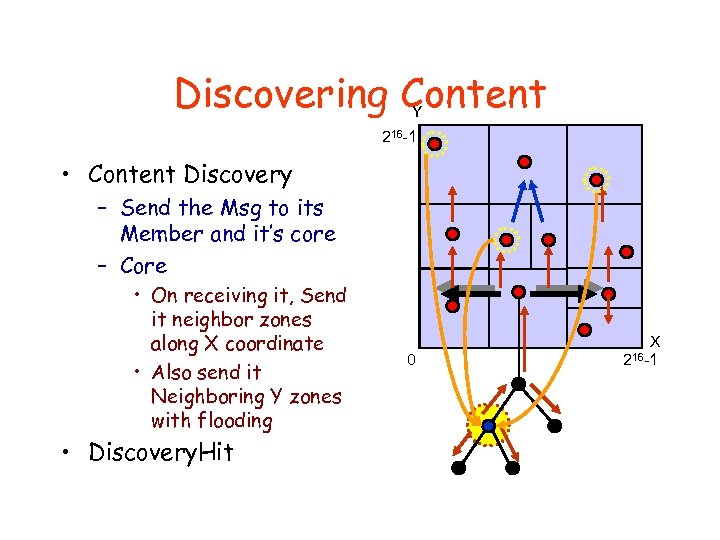

Discovering Content

- Content discovery
  - A node sends the message to its members and to its core
  - The core, on receiving it:
    - sends it to neighboring zones along the X coordinate
    - also sends it to the neighboring Y zones by flooding
- DiscoveryHit

Figure: 2-D space with X and Y running from 0 to 2^16 - 1.


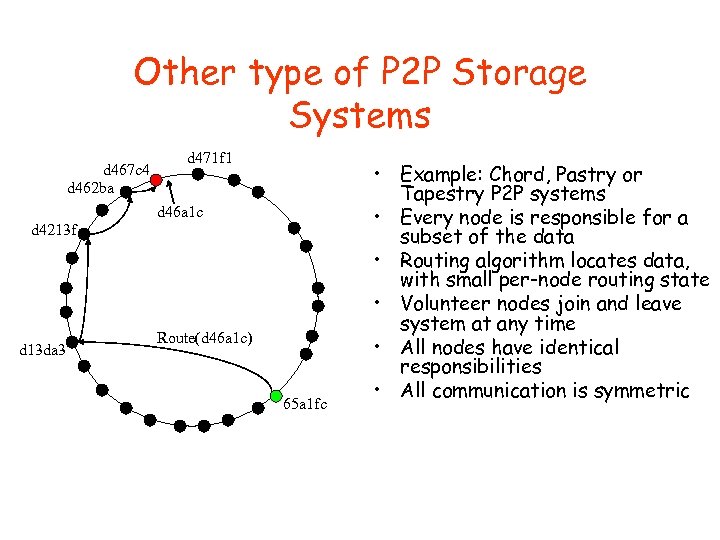

Other Types of P2P Storage Systems

- Examples: Chord, Pastry, or Tapestry P2P systems
- Every node is responsible for a subset of the data
- A routing algorithm locates data, with small per-node routing state
- Volunteer nodes join and leave the system at any time
- All nodes have identical responsibilities
- All communication is symmetric

Figure: Route(d46a1c) issued at node 65a1fc; other nodes shown: d13da3, d4213f, d462ba, d467c4, d471f1.
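The Route(d46a1c) example can be illustrated with a simplified prefix-routing rule in the style of Pastry and Tapestry: forward to a known node that shares a longer hexadecimal prefix with the key, prefer the numerically closer node when prefixes tie, and stop when no known node is better. The routing tables below are hypothetical and use only node IDs from the figure; real Pastry also keeps a leaf set and a routing table organized by prefix length and next digit.

```python
def shared_prefix_len(a, b):
    """Number of leading hex digits that a and b have in common."""
    n = 0
    while n < min(len(a), len(b)) and a[n] == b[n]:
        n += 1
    return n


def prefix_route(start, key, routing_table):
    """Greedy prefix routing; routing_table maps node -> list of known nodes."""
    def score(node):
        # Higher is better: longer shared prefix first, then numeric closeness.
        return (shared_prefix_len(node, key), -abs(int(node, 16) - int(key, 16)))

    path = [start]
    while True:
        current = path[-1]
        best = max(routing_table.get(current, []), key=score, default=current)
        if score(best) <= score(current):
            return path  # no known node is closer to the key
        path.append(best)


# Hypothetical routing state built only from the IDs in the figure.
EXAMPLE_TABLE = {
    "65a1fc": ["d13da3"],
    "d13da3": ["65a1fc", "d4213f"],
    "d4213f": ["d13da3", "d462ba"],
    "d462ba": ["d4213f", "d467c4", "d471f1"],
    "d467c4": ["d462ba", "d471f1"],
}
```

With these tables the message goes 65a1fc → d13da3 → d4213f → d462ba → d467c4, matching one more digit of d46a1c at each of the first three hops and then settling on the numerically closest node.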


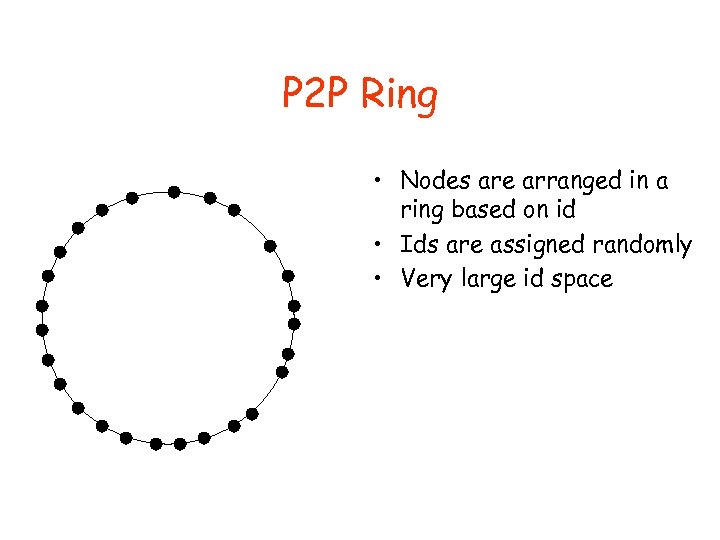

P2P Ring

- Nodes are arranged in a ring based on their IDs
- IDs are assigned randomly
- Very large ID space


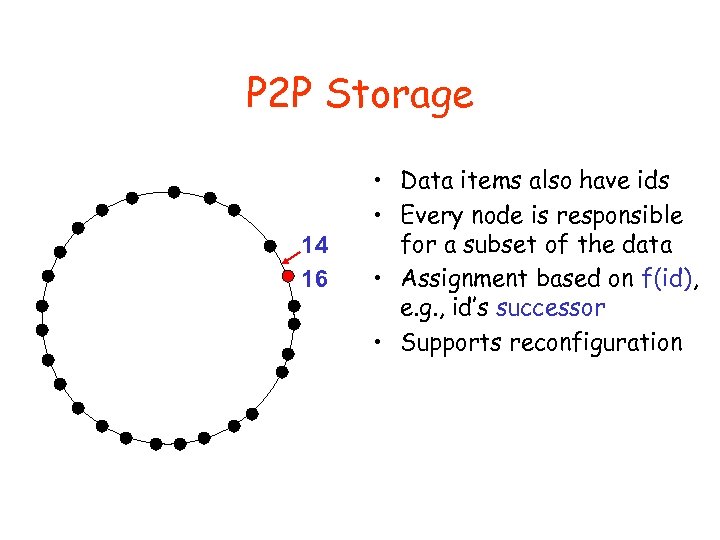

P2P Storage

- Data items also have IDs
- Every node is responsible for a subset of the data
- Assignment is based on f(id), e.g., the ID's successor
- Supports reconfiguration
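Successor-based assignment, and why it "supports reconfiguration", can be checked directly: when a node joins or leaves, only the items in one arc of the ring change owner. This sketch assumes a 7-bit ID space (0 to 127) and reuses the node IDs of the Chord ring example in these slides (N5 to N110); `successor` and `assignment` are illustrative names.

```python
from bisect import bisect_left


def successor(node_ids, item_id):
    """First node ID >= item_id on the ring, wrapping to the smallest ID."""
    ring = sorted(node_ids)
    i = bisect_left(ring, item_id)
    return ring[i % len(ring)]


def assignment(node_ids, item_ids):
    """Map every item ID to the node responsible for it."""
    return {item: successor(node_ids, item) for item in item_ids}


NODES = [5, 10, 20, 32, 60, 80, 99, 110]
ITEMS = range(128)  # every ID in the assumed 7-bit space
```

If node 40 joins, only items 33 to 40 move, all of them from node 60 to node 40; if node 60 leaves instead, only items 33 to 60 move, to node 80. Every other node keeps exactly the items it had.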


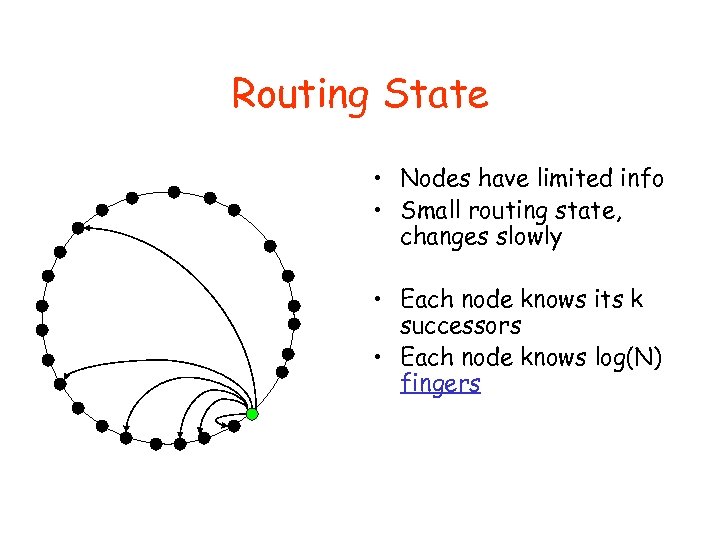

Routing State

- Nodes have limited information
- Small routing state that changes slowly
- Each node knows its k successors
- Each node knows log(N) fingers


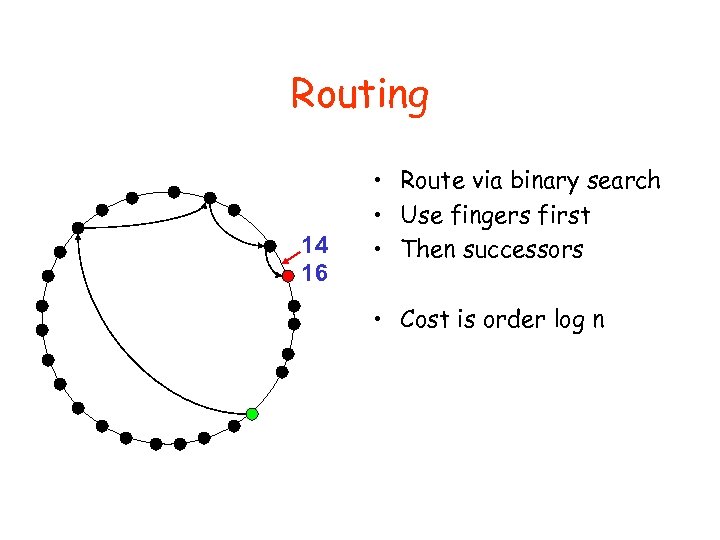

Routing

- Route via binary search
- Use fingers first
- Then successors
- Cost is of order log N


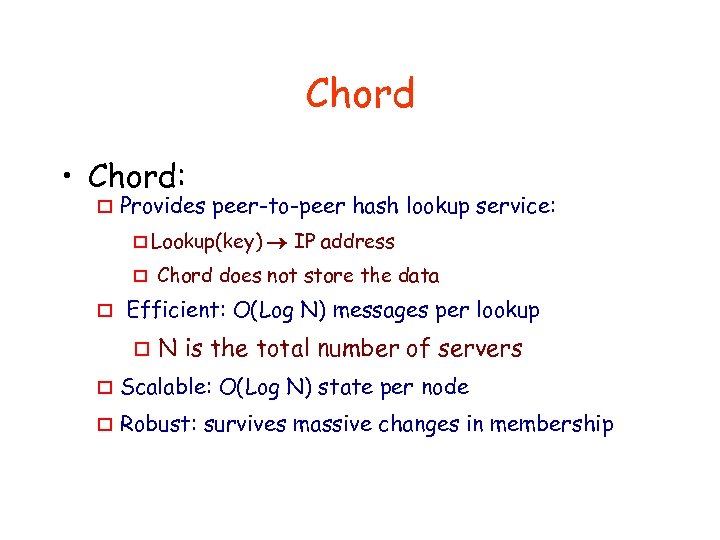

Chord

- Provides a peer-to-peer hash lookup service: Lookup(key) → IP address
- Chord does not store the data
- Efficient: O(log N) messages per lookup
  - N is the total number of servers
- Scalable: O(log N) state per node
- Robust: survives massive changes in membership


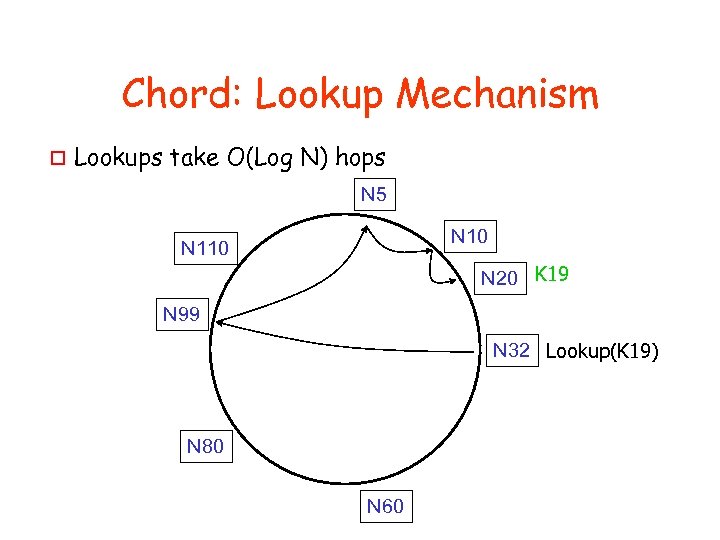

Chord: Lookup Mechanism • Lookups take O(log N) hops (Figure: Lookup(K19) on a ring with nodes N5, N10, N20, N32, N60, N80, N99, and N110.)


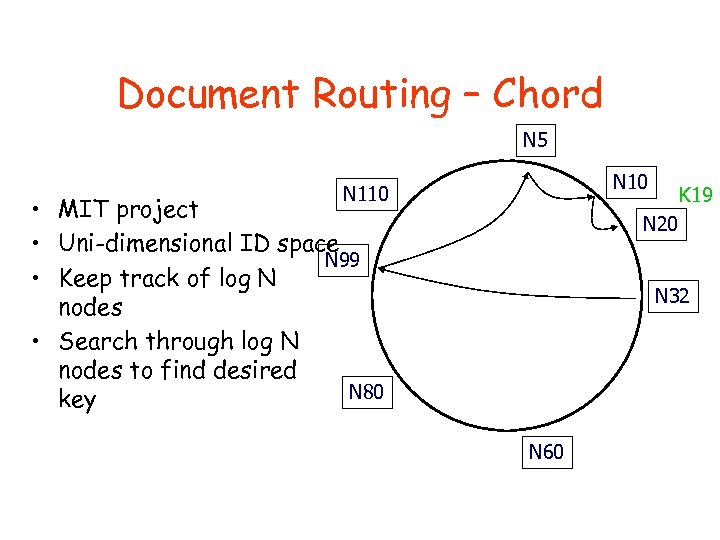

Document Routing – Chord • MIT project • One-dimensional ID space • Each node keeps track of log N nodes • A search passes through log N nodes to find the desired key (Figure: ring with nodes N5, N10, N20, N32, N60, N80, N99, N110 and key K19.)


Routing Challenges • Define a useful key nearness metric • Keep the hop count small • Keep the routing tables the “right size” • Stay robust despite rapid changes in membership • The authors claim that Chord emphasizes efficiency and simplicity


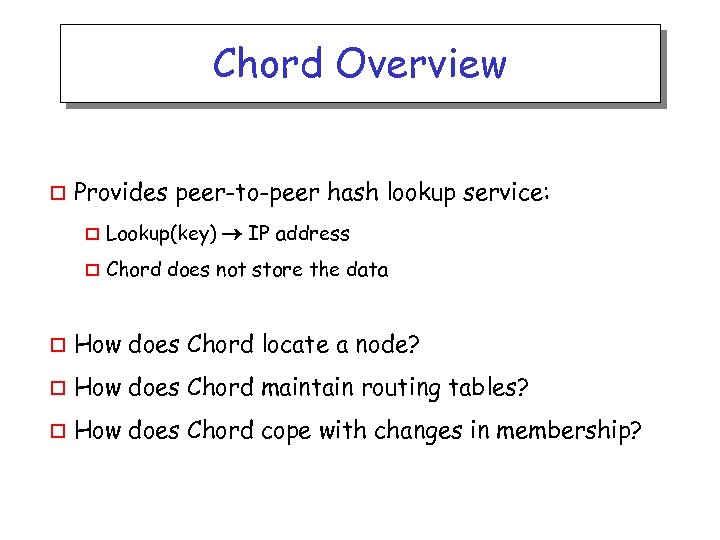

Chord Overview • Provides a peer-to-peer hash lookup service: Lookup(key) → IP address • Chord does not store the data • How does Chord locate a node? • How does Chord maintain routing tables? • How does Chord cope with changes in membership?


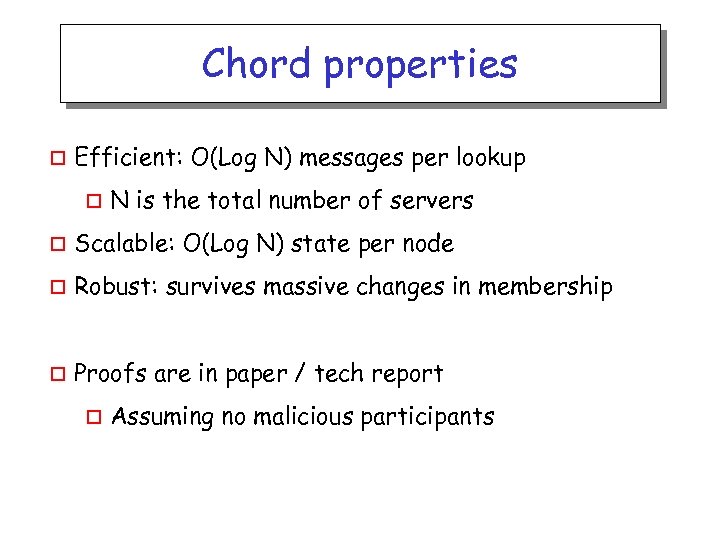

Chord Properties • Efficient: O(log N) messages per lookup, where N is the total number of servers • Scalable: O(log N) state per node • Robust: survives massive changes in membership • Proofs are in the paper / tech report, assuming no malicious participants


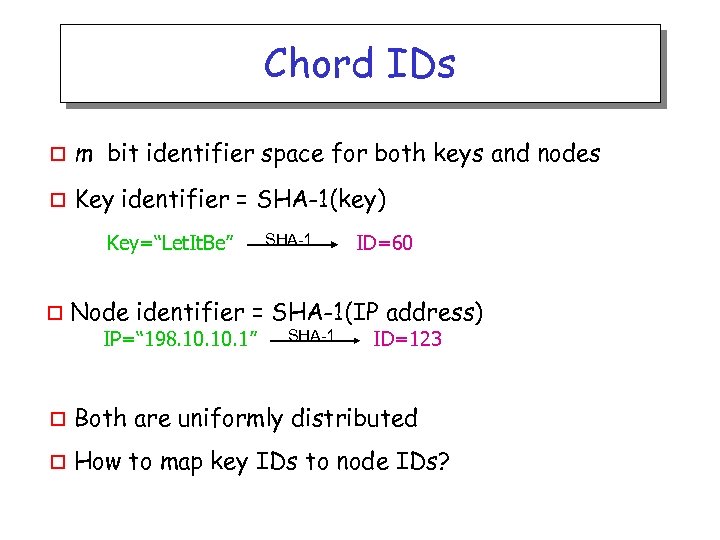

Chord IDs • An m-bit identifier space is used for both keys and nodes • Key identifier = SHA-1(key), e.g., Key = “LetItBe” → SHA-1 → ID = 60 • Node identifier = SHA-1(IP address), e.g., IP = “198.10.1” → SHA-1 → ID = 123 • Both are uniformly distributed • How do we map key IDs to node IDs?
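The two hashing rules can be sketched in Python as follows. This is a minimal sketch, assuming that an m-bit ID is obtained by reducing the 160-bit SHA-1 digest modulo 2^m; the slide does not say how the digest is shortened, and the printed values will not reproduce the illustrative IDs 60 and 123. The helper `chord_id` and the address 192.0.2.1 are hypothetical.

```python
import hashlib

def chord_id(name: str, m: int) -> int:
    """Map a key or an IP-address string to an m-bit Chord identifier.

    Assumption: the SHA-1 digest is read as a big-endian integer and reduced
    modulo 2**m (i.e. only its low m bits are kept).
    """
    digest = hashlib.sha1(name.encode("utf-8")).digest()
    return int.from_bytes(digest, "big") % (2 ** m)

# Keys and nodes are hashed into the same identifier space.
key_id = chord_id("LetItBe", m=7)        # a key name
node_id = chord_id("192.0.2.1", m=7)     # hypothetical node IP address
print(key_id, node_id)                   # both in the range 0..127
```

Because keys and nodes go through the same function, they share one circular space, which is what lets the successor rule on the next slide assign keys to nodes.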


Consistent Hashing [Karger 97] • Circular 7-bit ID space • A key is stored at its successor: the first node whose ID is equal to or follows the key's ID (Figure: nodes N32, N90, and N123, where N123 is the node for IP “198.10.1”, and keys K5, K20, K60 (“LetItBe”), and K101.)
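The successor rule is a sorted-ring search. The sketch below is illustrative only: the function name `successor` is hypothetical, and it uses the node and key IDs from the figure in a 7-bit space.

```python
from bisect import bisect_left

def successor(node_ids: list[int], ident: int, m: int) -> int:
    """First node whose ID is equal to or follows `ident` clockwise on a 2**m ring."""
    ring = sorted(node_ids)
    i = bisect_left(ring, ident % 2 ** m)          # index of the first node ID >= ident
    return ring[i] if i < len(ring) else ring[0]   # past the largest ID: wrap to the smallest

nodes = [32, 90, 123]                              # node IDs from the figure (7-bit space)
for k in (5, 20, 60, 101):
    print(f"K{k} -> N{successor(nodes, k, m=7)}")
# K5 -> N32
# K20 -> N32
# K60 -> N90
# K101 -> N123
```

A key past the largest node ID (for example K124) wraps around to N32, which is what makes the ID space circular.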


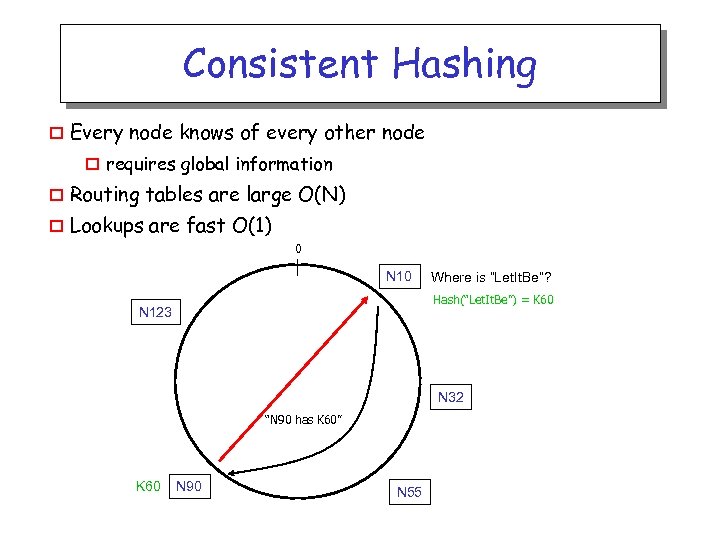

Consistent Hashing • Every node knows of every other node, which requires global information • Routing tables are large: O(N) • Lookups are fast: O(1) (Figure: “Where is ‘LetItBe’?” Hash(“LetItBe”) = K60; the answer “N90 has K60” comes back directly, on a ring with nodes N10, N32, N55, N90, and N123.)


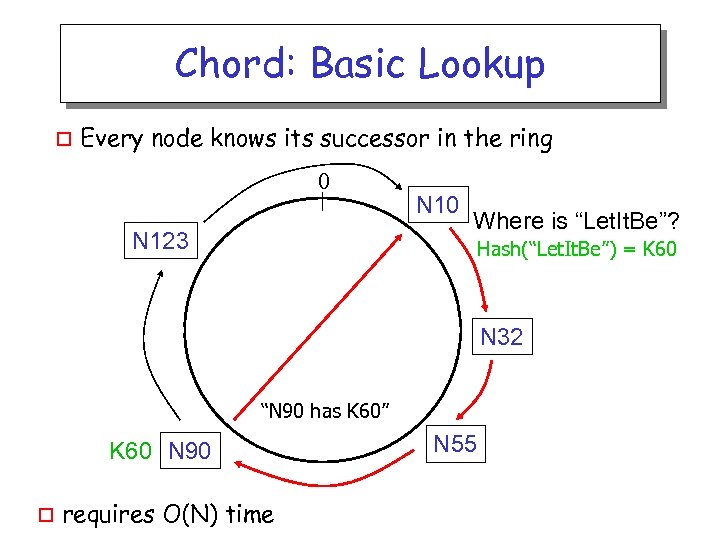

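Chord: Basic Lookup • Every node knows only its successor in the ring • A lookup therefore requires O(N) time (Figure: “Where is ‘LetItBe’?” Hash(“LetItBe”) = K60; the query follows successor pointers around the ring N10, N32, N55, N90, N123 until the answer “N90 has K60” is returned.)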
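A successor-only lookup can be simulated as below. This is a single-process sketch with hypothetical helper names. It assumes the query starts at N10, because the figure's starting node is not recoverable, and it counts one message per forward.

```python
def in_half_open(x: int, a: int, b: int) -> bool:
    """True if x lies in the ring interval (a, b]."""
    if a < b:
        return a < x <= b
    return x > a or x <= b          # interval wraps past 0 (a == b: whole ring)

def linear_lookup(ring: list[int], start: int, key: int) -> tuple[int, int]:
    """Follow successor pointers only; return (owner of key, forwards needed)."""
    ring = sorted(ring)
    succ = {n: ring[(i + 1) % len(ring)] for i, n in enumerate(ring)}
    node, messages = start, 0
    while not in_half_open(key, node, succ[node]):
        node = succ[node]           # pass the query one step clockwise
        messages += 1
    return succ[node], messages

print(linear_lookup([10, 32, 55, 90, 123], start=10, key=60))   # (90, 2)
```

In the worst case the query visits every node once, which is the O(N) cost stated on the slide.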


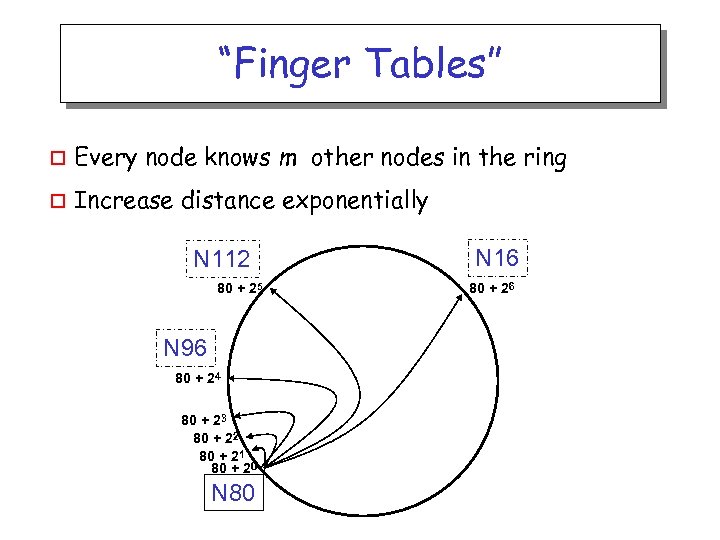

“Finger Tables” • Every node knows m other nodes in the ring • The distance increases exponentially (Figure: fingers of N80 at 80 + 2^0 through 80 + 2^6 on a ring with nodes N16, N80, N96, and N112.)


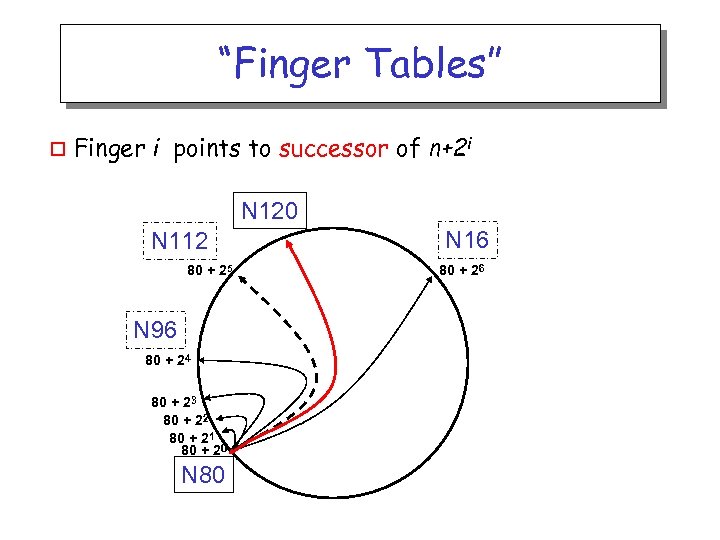

“Finger Tables” • Finger i points to the successor of n + 2^i (Figure: fingers of N80 at 80 + 2^0 through 80 + 2^6 on a ring with nodes N16, N80, N96, N112, and N120.)
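The finger rule can be checked against the figure. This sketch assumes a 7-bit ID space; only modulo 128 does the last finger, 80 + 2^6 = 144, wrap around to 16. The function names are hypothetical. The slide indexes fingers from 0 (n + 2^i), whereas the later table slides index from 1 (n + 2^(i-1)); both describe the same entries.

```python
from bisect import bisect_left

def successor(ring: list[int], ident: int) -> int:
    """First node ID >= ident on the sorted ring, wrapping to the smallest ID."""
    i = bisect_left(ring, ident)
    return ring[i] if i < len(ring) else ring[0]

def finger_table(n: int, node_ids: list[int], m: int) -> list[int]:
    """Finger i (i = 0 .. m-1) is successor((n + 2**i) mod 2**m)."""
    ring = sorted(node_ids)
    return [successor(ring, (n + 2 ** i) % 2 ** m) for i in range(m)]

print(finger_table(80, [16, 80, 96, 112, 120], m=7))
# [96, 96, 96, 96, 96, 112, 16]
```

The five short fingers all land on N96. Only about log N of the m entries are distinct, which is why the routing state stays small.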


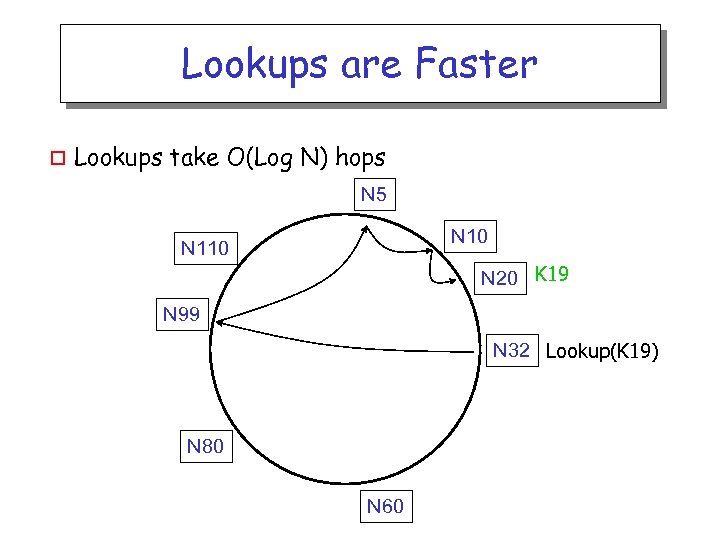

Lookups are Faster • Lookups take O(log N) hops (Figure: Lookup(K19) on a ring with nodes N5, N10, N20, N32, N60, N80, N99, and N110.)


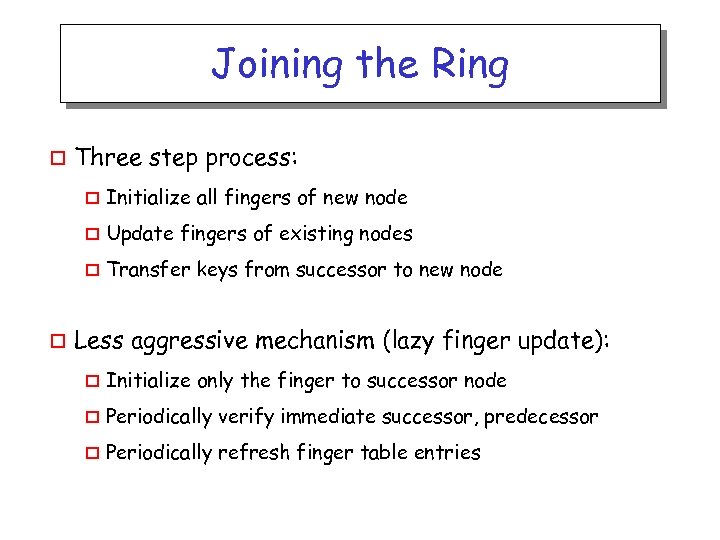

Joining the Ring • Three-step process: 1. Initialize all fingers of the new node 2. Update the fingers of existing nodes 3. Transfer keys from the successor to the new node • Less aggressive mechanism (lazy finger update): • Initialize only the finger to the successor node • Periodically verify the immediate successor and predecessor • Periodically refresh finger table entries
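The lazy mechanism can be sketched with the stabilize/notify pair described in the Chord paper. This single-process model is a simplification, and `Node`, `join`, `stabilize`, and `notify` are names chosen for the sketch. It assumes reliable in-memory calls, finds successors by walking successor pointers, and leaves out the periodic finger refresh.

```python
from __future__ import annotations
from typing import Optional

def in_open(x: int, a: int, b: int) -> bool:
    """x lies strictly inside the ring interval (a, b); a == b means 'all but a'."""
    return a < x < b if a < b else (x > a or x < b)

def in_half_open(x: int, a: int, b: int) -> bool:
    """x lies in the ring interval (a, b]; a == b means the whole ring."""
    return a < x <= b if a < b else (x > a or x <= b)

class Node:
    def __init__(self, ident: int) -> None:
        self.id = ident
        self.successor: Node = self            # a lone node is its own successor
        self.predecessor: Optional[Node] = None

    def find_successor(self, key: int) -> Node:
        # Successor-pointer walk only; fingers are omitted to keep the sketch short.
        node = self
        while not in_half_open(key, node.id, node.successor.id):
            node = node.successor
        return node.successor

    def join(self, known: Node) -> None:
        # Lazy join: initialise only the successor pointer.
        self.predecessor = None
        self.successor = known.find_successor(self.id)

    def stabilize(self) -> None:
        # Periodic check: adopt the successor's predecessor if it sits between us.
        x = self.successor.predecessor
        if x is not None and in_open(x.id, self.id, self.successor.id):
            self.successor = x
        self.successor.notify(self)

    def notify(self, candidate: Node) -> None:
        # `candidate` believes it might be our predecessor.
        if self.predecessor is None or in_open(candidate.id, self.predecessor.id, self.id):
            self.predecessor = candidate

first = Node(5)
nodes = [first] + [Node(i) for i in (20, 36, 80)]
for n in nodes[1:]:
    n.join(first)                # every newcomer only knows N5 at first
for _ in range(4):               # a few rounds of periodic stabilization
    for n in nodes:
        n.stabilize()
print([(n.id, n.successor.id) for n in nodes])
# [(5, 20), (20, 36), (36, 80), (80, 5)]
```

Right after joining, all three newcomers point at N5. The successor and predecessor pointers only become correct through repeated stabilization, which is the convergence behaviour the Weakness slide says was left unanalysed.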


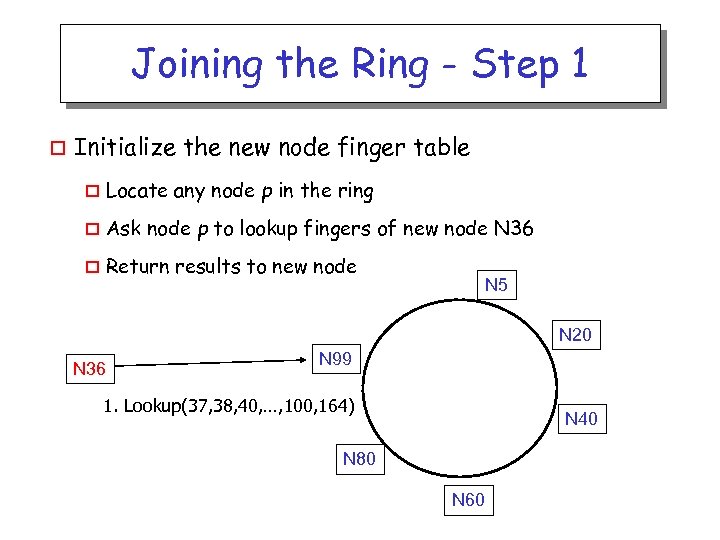

Joining the Ring - Step 1 • Initialize the new node's finger table • Locate any node p in the ring • Ask node p to look up the fingers of the new node N36 • Return the results to the new node (Figure: ring with nodes N5, N20, N36, N40, N60, N80, and N99; step 1: Lookup(37, 38, 40, …, 100, 164).)
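The lookup targets in the figure are the finger starts n + 2^(i-1). The final value 164 = 36 + 2^7 implies an 8-bit ID space, which this sketch assumes. The ring before N36 joins and the helper names are likewise assumptions; the code computes each answer directly instead of sending real lookups through p.

```python
from bisect import bisect_left

def finger_starts(n: int, m: int) -> list[int]:
    """Start of each finger interval: (n + 2**(i-1)) mod 2**m for i = 1..m."""
    return [(n + 2 ** (i - 1)) % 2 ** m for i in range(1, m + 1)]

def successor(ring: list[int], ident: int) -> int:
    """First node ID >= ident on the sorted ring, wrapping to the smallest ID."""
    i = bisect_left(ring, ident)
    return ring[i] if i < len(ring) else ring[0]

existing = sorted([5, 20, 40, 60, 80, 99])     # ring before N36 joins (assumed)
starts = finger_starts(36, m=8)
fingers = [successor(existing, s) for s in starts]   # what node p would answer
print(starts)    # [37, 38, 40, 44, 52, 68, 100, 164]
print(fingers)   # [40, 40, 40, 60, 60, 80, 5, 5]
```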


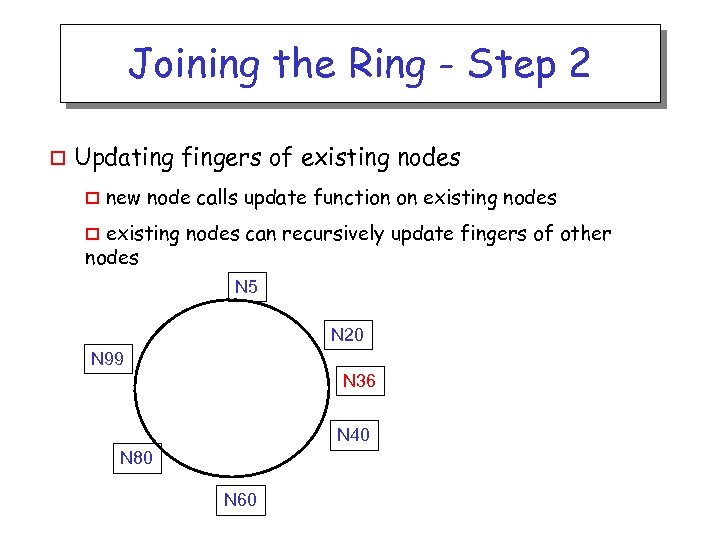

Joining the Ring - Step 2 • Update the fingers of existing nodes • The new node calls an update function on existing nodes • Existing nodes can recursively update the fingers of other nodes (Figure: ring with nodes N5, N20, N36, N40, N60, N80, and N99.)
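To see which existing entries step 2 has to touch, the brute-force check below recomputes every finger table before and after N36 joins. It is illustrative only and assumes the same 8-bit space and ring as step 1; `fingers_to_update` is a hypothetical helper. The real protocol sends update messages instead of recomputing every table. A join can only make a finger point at the new node, so every changed entry now points to N36.

```python
from bisect import bisect_left

def successor(ring: list[int], ident: int) -> int:
    """First node ID >= ident on the sorted ring, wrapping to the smallest ID."""
    i = bisect_left(ring, ident)
    return ring[i] if i < len(ring) else ring[0]

def fingers(n: int, ring: list[int], m: int) -> list[int]:
    """Finger i (1-based) of node n is successor((n + 2**(i-1)) mod 2**m)."""
    return [successor(ring, (n + 2 ** (i - 1)) % 2 ** m) for i in range(1, m + 1)]

def fingers_to_update(existing: list[int], new: int, m: int) -> dict[int, list[int]]:
    """For each existing node, the 1-based finger indices that must now point to `new`."""
    before = sorted(existing)
    after = sorted(existing + [new])
    changes = {}
    for p in before:
        old, cur = fingers(p, before, m), fingers(p, after, m)
        changed = [i + 1 for i in range(m) if old[i] != cur[i]]
        if changed:
            changes[p] = changed
    return changes

print(fingers_to_update([5, 20, 40, 60, 80, 99], new=36, m=8))
# {5: [5], 20: [1, 2, 3, 4, 5]}
```

Only the two nodes that precede N36 have entries to fix, which is why an update that starts from the new node and walks backwards to predecessors is enough.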


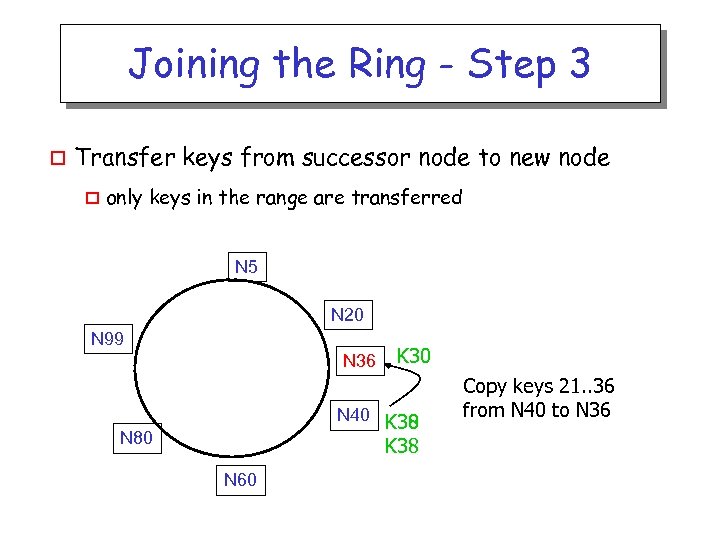

Joining the Ring - Step 3 • Transfer keys from the successor node to the new node • Only keys in the new node's range are transferred (Figure: N40 holds K30 and K38; K30 moves to N36 while K38 stays at N40. Copy keys 21..36 from N40 to N36.)
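The range rule for step 3 fits in a few lines. In this sketch, `keys_to_transfer` is a hypothetical helper that takes the successor's key set together with the new node's predecessor and ID. It returns exactly the keys in (predecessor, new], which is the “keys 21..36” range from the figure.

```python
def in_half_open(x: int, a: int, b: int) -> bool:
    """x lies in the ring interval (a, b]."""
    return a < x <= b if a < b else (x > a or x <= b)

def keys_to_transfer(successor_keys: set[int], pred: int, new: int) -> list[int]:
    """Keys the new node takes over from its successor: exactly those in (pred, new]."""
    return sorted(k for k in successor_keys if in_half_open(k, pred, new))

n40_keys = {30, 38}                                    # keys held by N40 in the figure
print(keys_to_transfer(n40_keys, pred=20, new=36))     # [30]
```

The wrap-around case works the same way. For example, a node joining at ID 5 after predecessor 100 takes over keys above 100 as well as keys up to 5.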


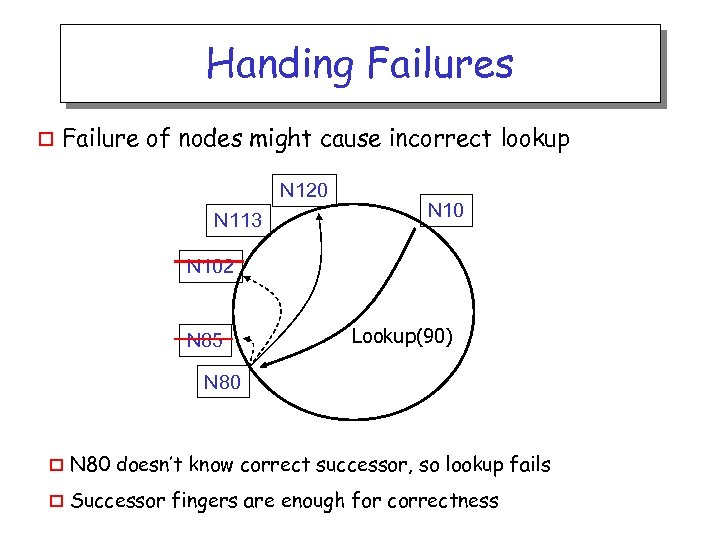

Handling Failures • Failure of nodes might cause an incorrect lookup • N80 doesn't know its correct successor, so the lookup fails • Successor fingers are enough for correctness (Figure: Lookup(90) on a ring with nodes N80, N85, N102, N113, and N120.)


Handling Failures • Use a successor list: each node knows its r immediate successors • After a failure, a node will know its first live successor • Correct successors guarantee correct lookups • The guarantee holds with some probability • r can be chosen to make the probability of lookup failure arbitrarily small
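Both ideas on this slide can be sketched in a few lines. The failover scenario is hypothetical: N80's list is taken to be [N85, N102, N113] with N85 down. The choice of r assumes that nodes fail independently with probability p, so all r successors are down at once with probability p^r. That independence model is an assumption of this sketch, and both function names are illustrative.

```python
from typing import Callable, Optional

def first_live_successor(successor_list: list[int],
                         is_alive: Callable[[int], bool]) -> Optional[int]:
    """Return the first reachable node in the r-entry successor list, or None."""
    for node in successor_list:
        if is_alive(node):
            return node
    return None

def min_list_length(p_fail: float, target: float) -> int:
    """Smallest r with p_fail**r <= target.

    Assumes independent failures, so all r successors fail together
    with probability p_fail**r.
    """
    if not 0 < p_fail < 1:
        raise ValueError("p_fail must be in (0, 1)")
    r = 1
    while p_fail ** r > target:
        r += 1
    return r

# Hypothetical scenario: N80's successor list is [N85, N102, N113] and N85 is down.
print(first_live_successor([85, 102, 113], lambda n: n != 85))   # 102
print(min_list_length(0.5, 1e-6))                                # 20
```

Even if half the nodes fail at once, a list of 20 successors leaves roughly a one-in-a-million chance that every entry is dead.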


Evaluation Overview • Quick lookup in large systems • Low variation in lookup costs • Robust despite massive failure • Experiments confirm the theoretical results


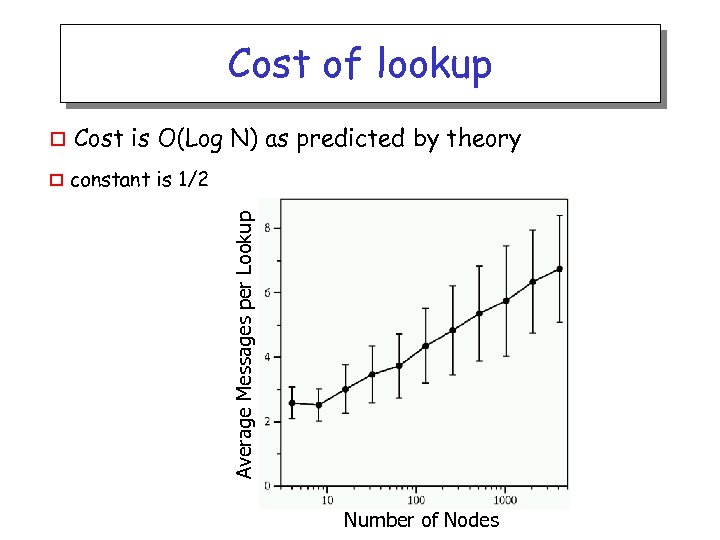

Cost of lookup • Cost is O(log N), as predicted by theory • The constant is 1/2 (Figure: average messages per lookup vs. number of nodes.)


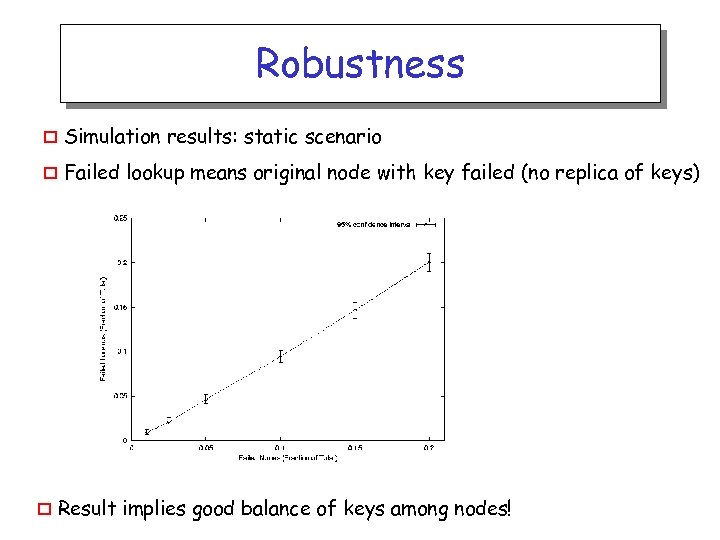

Robustness • Simulation results: static scenario • A failed lookup means that the original node holding the key failed (keys have no replicas) • The result implies a good balance of keys among nodes!


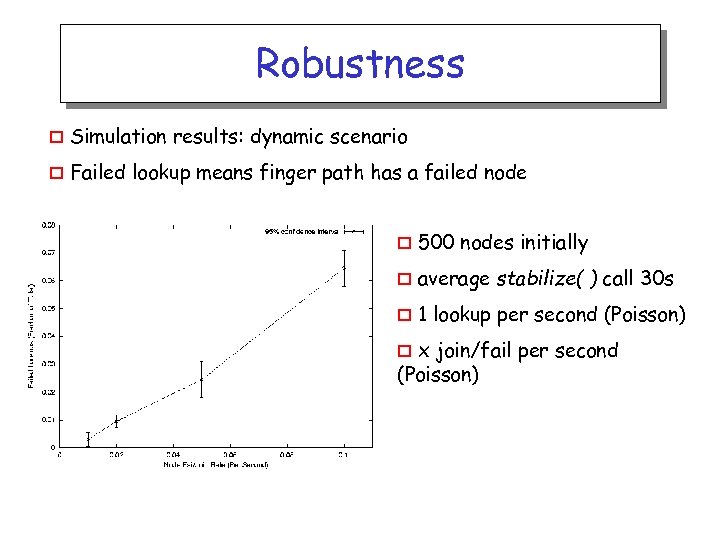

Robustness • Simulation results: dynamic scenario • A failed lookup means the finger path contains a failed node • 500 nodes initially • stabilize() is called every 30 s on average • 1 lookup per second (Poisson) • x joins/failures per second (Poisson)


Strengths • Based on theoretical work (consistent hashing) • Proven performance in many different aspects, through “with high probability” proofs • Robust (is it?)


Weaknesses • NOT that simple (compared to CAN) • Member joining is complicated: • the aggressive mechanism requires too many messages and updates • there is no analysis of convergence for the lazy finger mechanism • The key management mechanism is mixed between layers: • the upper layer does insertion and handles node failures • Chord transfers keys when a node joins (there is no leave mechanism!) • The routing table grows with the number of members in the group • Worst-case lookup can be slow


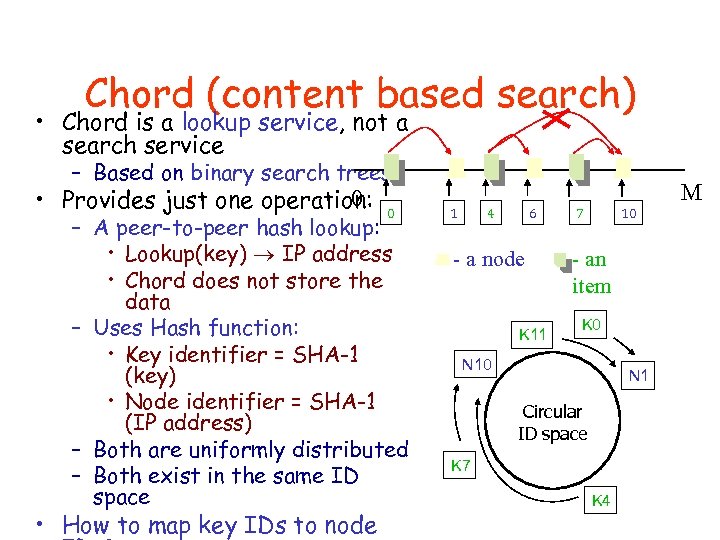

Chord (content based search) • Chord is a lookup service, not a search service – Based on binary search trees • Provides just one operation: – A peer-to-peer hash lookup: Lookup(key) → IP address • Chord does not store the data – Uses a hash function: • Key identifier = SHA-1(key) • Node identifier = SHA-1(IP address) – Both are uniformly distributed – Both exist in the same ID space • How do we map key IDs to node IDs? (Figure: circular ID space; the legend distinguishes nodes from items.)


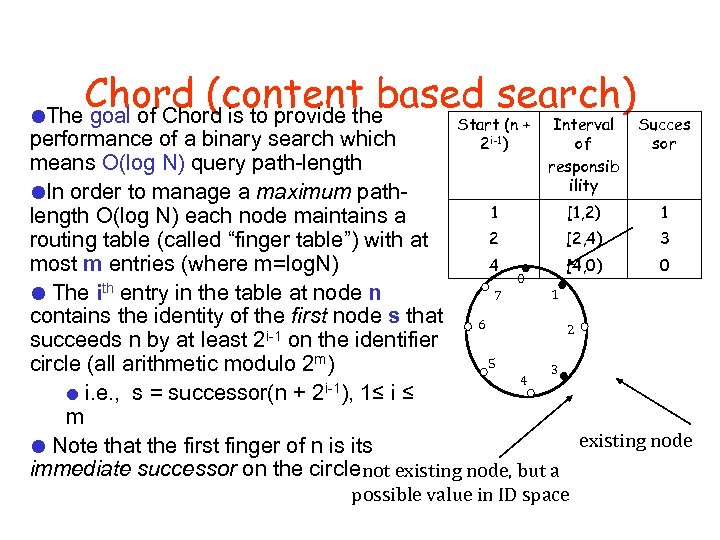

Chord (content based search) The goal of Chord is to provide the performance of a binary search, i.e., an O(log N) query path length. To keep the maximum path length at O(log N), each node maintains a routing table, called a “finger table”, with at most m entries, where m is the number of bits in the identifier space. The i-th entry in the table at node n contains the identity of the first node s that succeeds n by at least 2^(i-1) on the identifier circle (all arithmetic modulo 2^m), i.e., s = successor(n + 2^(i-1)), 1 ≤ i ≤ m. Note that the first finger of n is its immediate successor on the circle.

(Figure: 3-bit identifier circle 0–7 with existing nodes 0, 1, and 3; the other points are possible values in the ID space where no node exists.)

Finger table of node 0:

| Start (n + 2^(i-1)) | Interval of responsibility | Successor |
|---|---|---|
| 1 | [1, 2) | 1 |
| 2 | [2, 4) | 3 |
| 4 | [4, 0) | 0 |


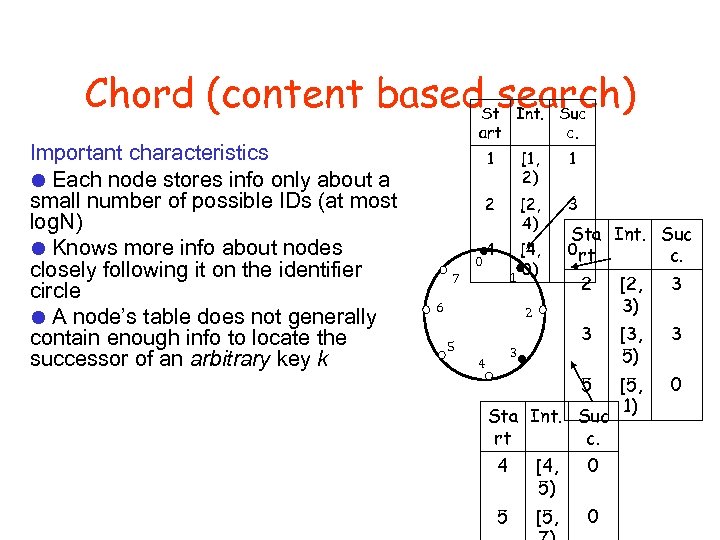

Chord (content based search) Important characteristics • Each node stores information about only a small number of possible IDs (at most log N) • A node knows more about the nodes closely following it on the identifier circle than about nodes farther away • A node's table generally does not contain enough information to locate the successor of an arbitrary key k

Node 0:

| Start | Interval | Successor |
|---|---|---|
| 1 | [1, 2) | 1 |
| 2 | [2, 4) | 3 |
| 4 | [4, 0) | 0 |

Node 1:

| Start | Interval | Successor |
|---|---|---|
| 2 | [2, 3) | 3 |
| 3 | [3, 5) | 3 |
| 5 | [5, 1) | 0 |

Node 3:

| Start | Interval | Successor |
|---|---|---|
| 4 | [4, 5) | 0 |
| 5 | [5, … | … |


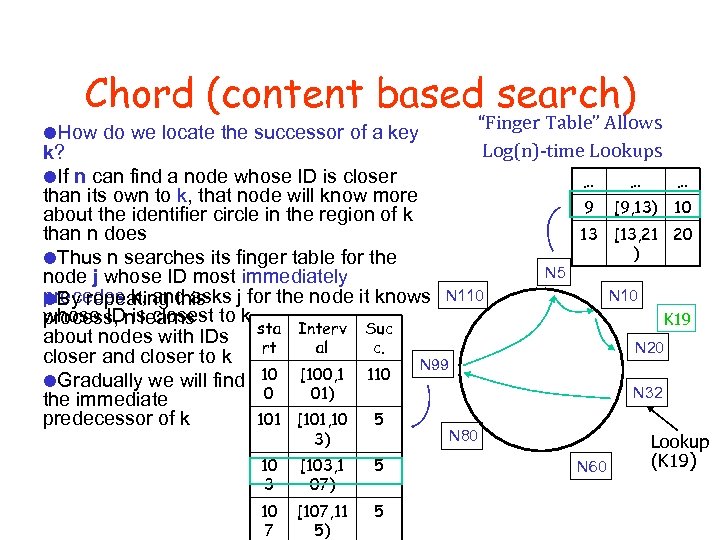

Chord (content based search) How do we locate the successor of a key k? • The finger table allows O(log N)-time lookups • If n can find a node whose ID is closer than its own to k, that node will know more about the identifier circle in the region of k than n does • Thus n searches its finger table for the node j whose ID most immediately precedes k, and asks j for the node it knows whose ID is closest to k • By repeating this process, n learns about nodes with IDs closer and closer to k • Gradually we find the immediate predecessor of k (Figure: Lookup(K19) on a ring with nodes N5, N10, N20, N32, N60, N80, N99, and N110, showing partial finger tables of N99 and N5, e.g., N5: start 9, interval [9, 13), successor N10; start 13, interval [13, 21), successor N20.)
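The closest-preceding-finger loop can be simulated on the figure's ring. This is a sketch under stated assumptions: a 7-bit ID space, a query that starts at N80 (the figure's starting node is not recoverable), and finger tables computed from a global view of the ring instead of through remote calls. All names are hypothetical.

```python
from bisect import bisect_left

M = 7                                               # assumed 7-bit ID space (IDs 0..127)
RING = sorted([5, 10, 20, 32, 60, 80, 99, 110])     # nodes from the figure

def in_open(x: int, a: int, b: int) -> bool:
    """x in the ring interval (a, b)."""
    return a < x < b if a < b else (x > a or x < b)

def in_half_open(x: int, a: int, b: int) -> bool:
    """x in the ring interval (a, b]."""
    return a < x <= b if a < b else (x > a or x <= b)

def successor(ident: int) -> int:
    i = bisect_left(RING, ident % 2 ** M)
    return RING[i] if i < len(RING) else RING[0]

def fingers(n: int) -> list[int]:
    # finger i = successor(n + 2**(i-1)), i = 1..M (stored 0-based in the list)
    return [successor(n + 2 ** (i - 1)) for i in range(1, M + 1)]

def closest_preceding_finger(n: int, key: int) -> int:
    for f in reversed(fingers(n)):                  # try the farthest finger first
        if in_open(f, n, key):
            return f
    return n

def find_successor(n: int, key: int) -> tuple[int, list[int]]:
    """Return (owner of key, nodes the query visited)."""
    path = [n]
    while not in_half_open(key, n, successor(n + 1)):   # successor(n + 1) = n's successor
        n = closest_preceding_finger(n, key)
        path.append(n)
    return successor(n + 1), path

print(find_successor(80, 19))   # (20, [80, 5, 10])
```

From N80 the farthest finger that still precedes K19 is N5, then N10, whose immediate successor N20 owns the key. The loop always makes progress, because a node's first finger is its own successor, and that successor precedes the key whenever the loop continues.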


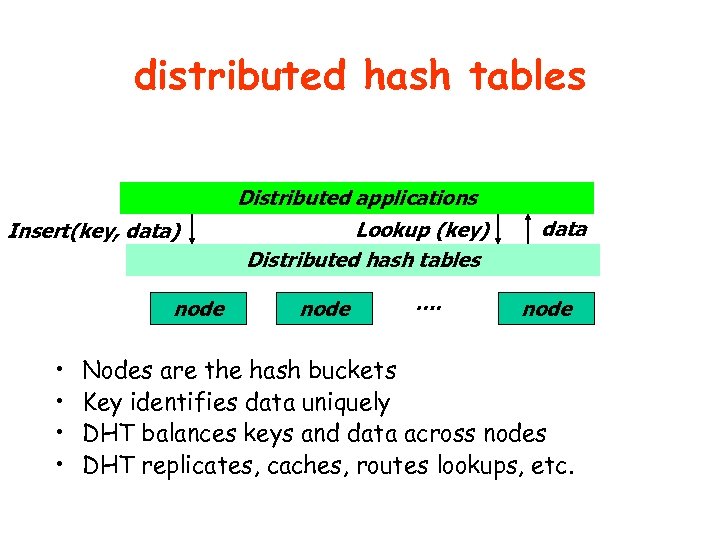

Distributed Hash Tables • Distributed applications call Insert(key, data) and Lookup(key) on the distributed hash table, which spreads the data over many nodes • Nodes are the hash buckets • A key identifies data uniquely • The DHT balances keys and data across nodes • The DHT replicates, caches, routes lookups, etc.
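The Insert/Lookup interface, with nodes acting as hash buckets, can be modelled in one process. `ToyDHT`, its 32-bit ring, and the node names are hypothetical. The sketch has no networking, replication, or caching; it only shows how a key is hashed to its successor node's bucket.

```python
import hashlib
from bisect import bisect_left
from typing import Any, Optional

class ToyDHT:
    """Single-process model of a DHT in which every node is one hash bucket.

    Hypothetical class for illustration: no networking, replication, or caching.
    """

    def __init__(self, node_names: list[str], m: int = 32) -> None:
        self.m = m
        self.ids = sorted({self._hash(name) for name in node_names})
        self.buckets: dict[int, dict[str, Any]] = {i: {} for i in self.ids}

    def _hash(self, s: str) -> int:
        digest = hashlib.sha1(s.encode("utf-8")).digest()
        return int.from_bytes(digest, "big") % 2 ** self.m

    def _owner(self, key: str) -> int:
        # The key's bucket belongs to the successor node of the key's hash.
        i = bisect_left(self.ids, self._hash(key))
        return self.ids[i] if i < len(self.ids) else self.ids[0]

    def insert(self, key: str, data: Any) -> None:
        self.buckets[self._owner(key)][key] = data

    def lookup(self, key: str) -> Optional[Any]:
        return self.buckets[self._owner(key)].get(key)

dht = ToyDHT([f"10.0.0.{i}" for i in range(1, 9)])   # eight hypothetical nodes
dht.insert("LetItBe", "song data")
print(dht.lookup("LetItBe"))      # song data
print(dht.lookup("Yesterday"))    # None (never inserted)
```

The caller never chooses a node; the key alone determines the bucket. This is the property that lets the application stay unaware of where data lives.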


Why DHT? • Demand pulls – Growing need for security and robustness – Large-scale distributed apps are difficult to build – Many applications use location-independent data • Technology pushes – Bigger, faster, and better: every PC can be a server – Scalable lookup algorithms are available – Trustworthy systems from untrusted components


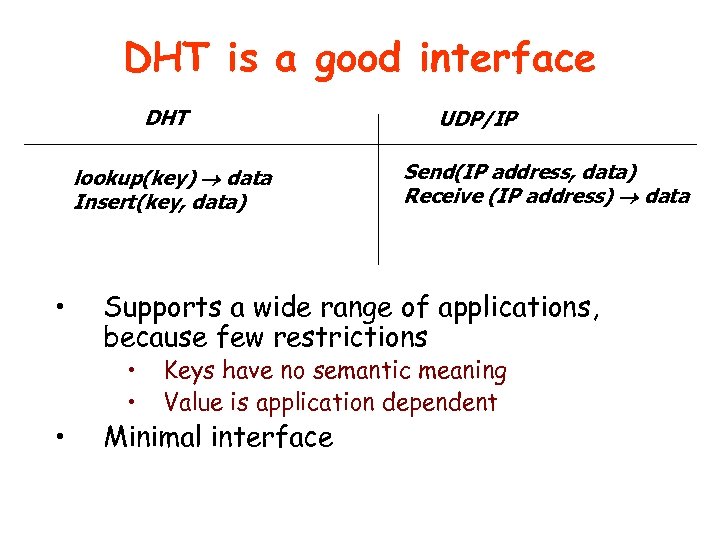

DHT is a good interface • DHT layer: lookup(key) → data, Insert(key, data) • UDP/IP layer: Send(IP address, data), Receive(IP address) → data • Supports a wide range of applications because it imposes few restrictions: • Keys have no semantic meaning • The value is application-dependent • Minimal interface


DHT is a good shared infrastructure • Applications inherit some security and robustness from the DHT – The DHT replicates data – It is resistant to malicious participants • Low-cost deployment – Self-organizing across administrative domains – Can be shared among applications • Large scale supports Internet-scale workloads


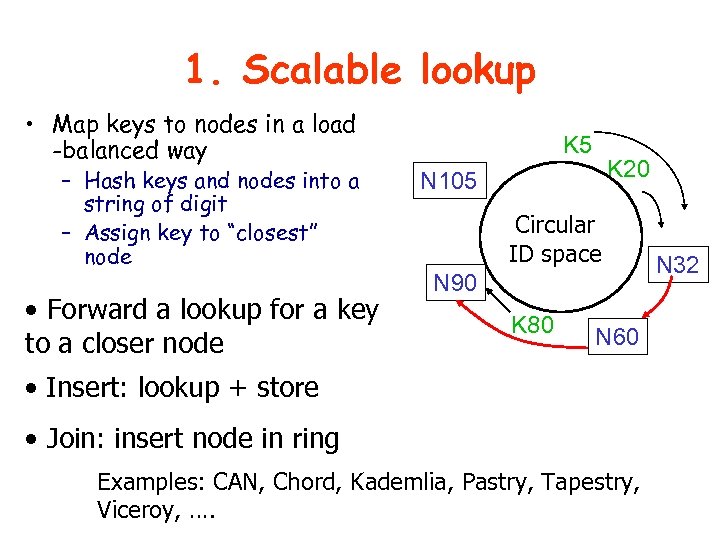

1. Scalable lookup • Map keys to nodes in a load-balanced way – Hash keys and nodes into a string of digits – Assign each key to the “closest” node • Forward a lookup for a key to a closer node • Insert: lookup + store • Join: insert the node in the ring • Examples: CAN, Chord, Kademlia, Pastry, Tapestry, Viceroy, … (Figure: circular ID space with nodes N32, N60, N90, and N105 and keys K5, K20, and K80.)


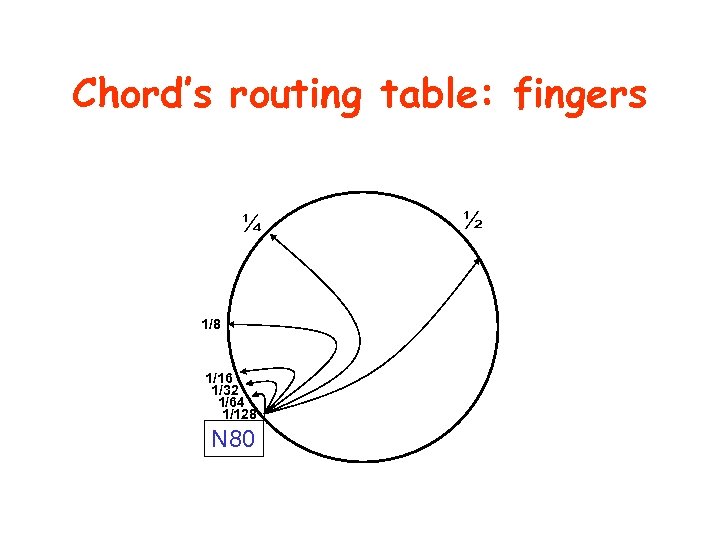

Chord’s routing table: fingers (Figure: from N80, the fingers reach ½, ¼, 1/8, 1/16, 1/32, 1/64, and 1/128 of the way around the ring.)


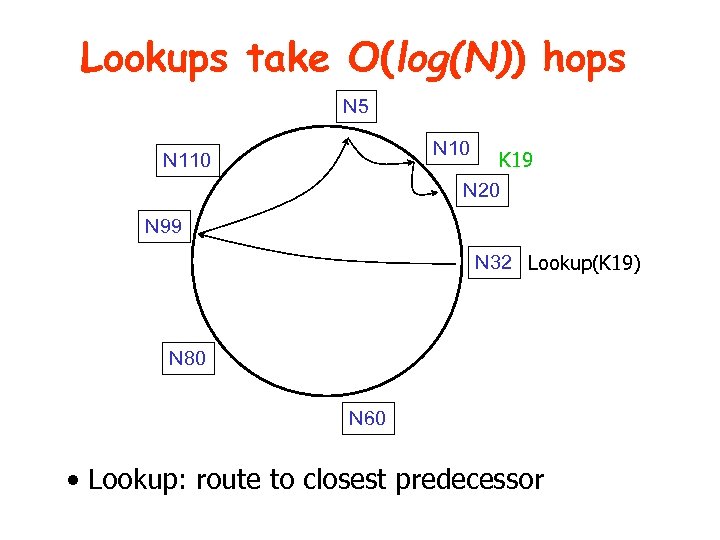

Lookups take O(log(N)) hops • Lookup: route to the closest predecessor (Figure: Lookup(K19) on a ring with nodes N5, N10, N20, N32, N60, N80, N99, and N110.)


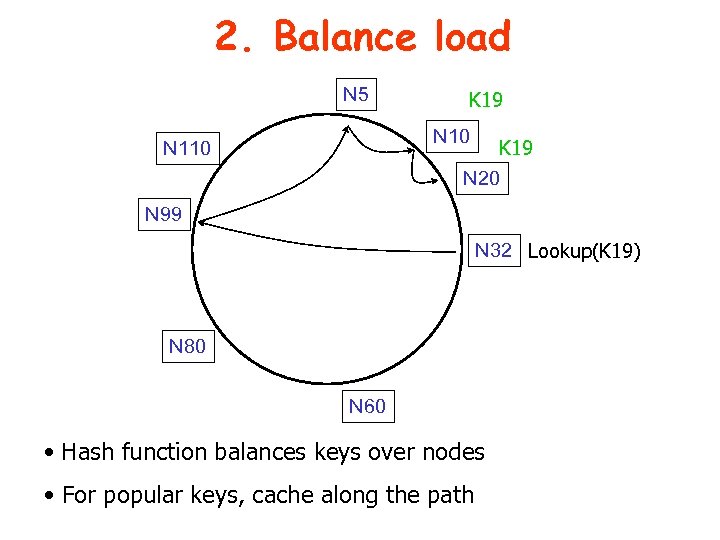

2. Balance load • The hash function balances keys over nodes • For popular keys, cache along the path (Figure: Lookup(K19) on a ring with nodes N5, N10, N20, N32, N60, N80, N99, and N110; K19 appears at more than one node along the path.)


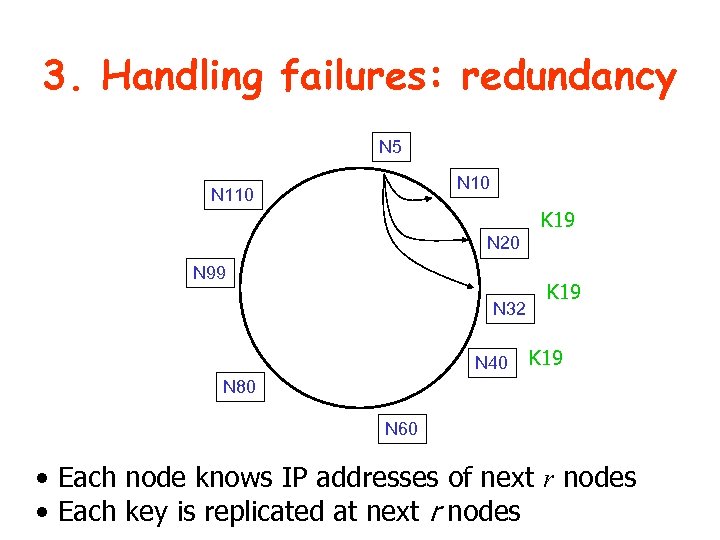

3. Handling failures: redundancy • Each node knows the IP addresses of the next r nodes • Each key is replicated at the next r nodes (Figure: ring with nodes N5, N10, N20, N32, N40, N60, N80, N99, and N110; K19 is stored at more than one node.)
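Replica placement follows directly from the successor rule. This sketch reads “replicated at the next r nodes” as r copies in total: the key's successor plus the nodes that follow it. That reading, the helper name `replica_nodes`, and r = 3 are all assumptions. The ring is taken from the figure.

```python
from bisect import bisect_left

def replica_nodes(node_ids: list[int], key: int, r: int) -> list[int]:
    """Successor of `key` followed by the next nodes clockwise: r copies in total."""
    ring = sorted(node_ids)
    start = bisect_left(ring, key) % len(ring)   # index of successor(key), wrapping to 0
    return [ring[(start + j) % len(ring)] for j in range(min(r, len(ring)))]

ring = [5, 10, 20, 32, 40, 60, 80, 99, 110]       # nodes from the figure
print(replica_nodes(ring, key=19, r=3))            # [20, 32, 40]
```

Because the replicas sit on the nodes that already appear in the primary's successor list, a lookup that fails over to the first live successor also lands on a node holding a copy.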


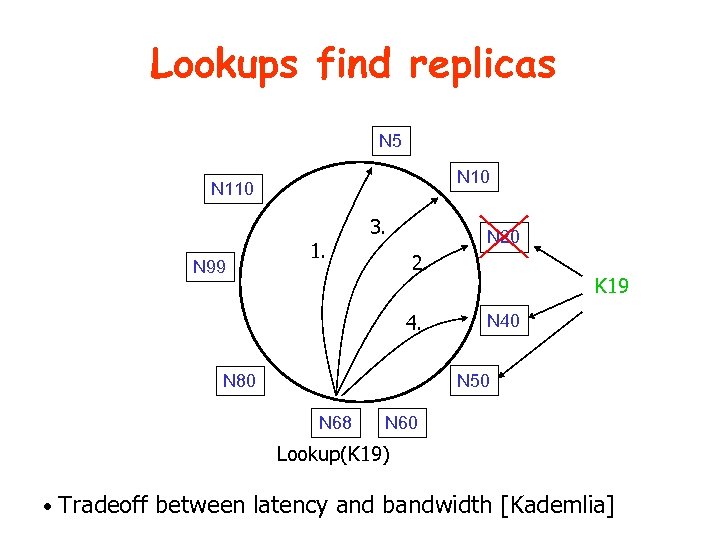

Lookups find replicas • Tradeoff between latency and bandwidth [Kademlia] (Figure: Lookup(K19) in four numbered steps on a ring with nodes N5, N10, N20, N40, N50, N60, N68, N80, N99, and N110.)


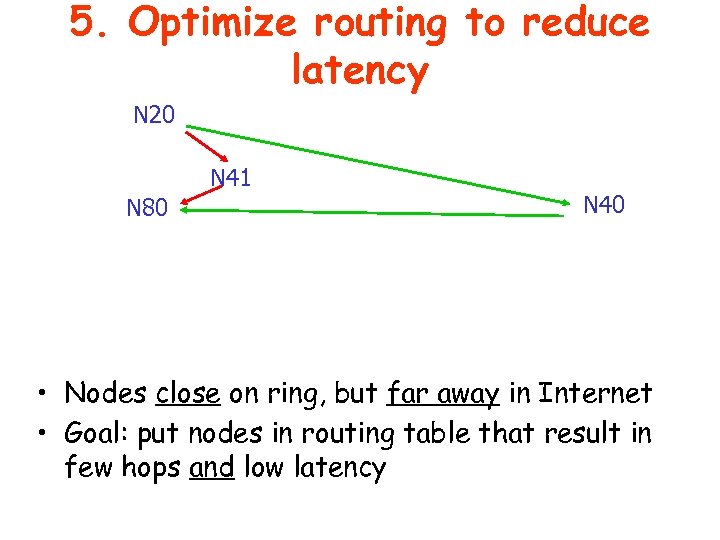

5. Optimize routing to reduce latency • Nodes can be close on the ring but far apart in the Internet • Goal: put nodes in the routing table that result in few hops and low latency (Figure: nodes N20, N40, N41, and N80.)


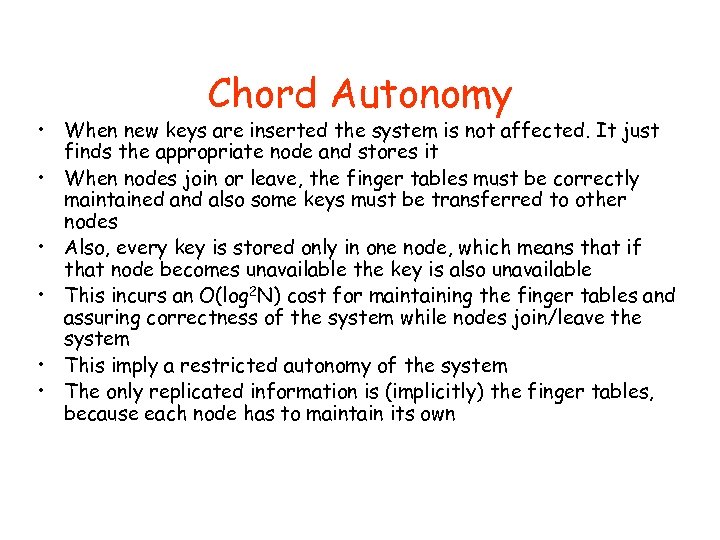

Chord Autonomy • When new keys are inserted, the system is not affected: it just finds the appropriate node and stores the key there • When nodes join or leave, the finger tables must be correctly maintained, and some keys must be transferred to other nodes • Also, every key is stored on only one node, which means that if that node becomes unavailable, the key is also unavailable • Maintaining the finger tables and assuring the correctness of the system while nodes join and leave costs O(log² N) • This implies a restricted autonomy of the system • The only replicated information is (implicitly) the finger tables, because each node has to maintain its own


Distributed Hash Tables • A large shared memory implemented by P2P nodes • Addresses are logical, not physical • This implies that applications can select them as desired • They are typically a hash of other information • The system looks them up


Drawbacks of DHTs • Structured solution – Given a filename, find its location – Tightly controlled topology & file placement • Can DHTs do file sharing? – Probably, but with lots of extra work: • Caching • Keyword searching – Poorly suited for keyword searches – Transient clients cause overhead – Can find rare files, but that may not matter • General evaluation of structured P2P systems – Great at finding rare files, but most queries are for popular files
