- Number of slides: 13

Storage Task Force: intermediate pre-report

History

- GridKa Technical Advisory Board needs storage numbers: assemble a team of experts (04/05).
- At HEPiX 05/05:
  - many sites indicate similar question(s)
  - What hardware is needed for which profile?
  - HEPiX community, GDB chair and LCG project leader agree on a task force.
- First (telephone) meeting on 17/6/05
  - Since then: 1 face-to-face meeting, several phone conferences, numerous emails

Mandate

- Examine the current LHC experiment computing models.
- Attempt to determine the data volumes, access patterns and required data security for the various classes of data, as a function of Tier and of time.
- Consider the current storage technologies, their prices in various geographical regions and their suitability for various classes of data storage.
- Attempt to map the required storage capacities to suitable technologies.
- Formulate a plan to implement the required storage in a timely fashion.
- Report to GDB and/or HEPiX.

Members

Roger Jones (convener, ATLAS)

- Tech experts
  - Luca d’Agnello (CNAF)
  - Martin Gasthuber (DESY)
  - Andrei Maslennikov (CASPUR)
  - Helge Meinhard (CERN)
  - Andrew Sansum (RAL)
  - Jos van Wezel (FZK)
- Experiment experts
  - Peter Malzacher (Alice)
  - Vincenso Vagnoni (LHCb)
  - nn (CMS)
- Report due in the 2nd week of October at HEPiX (SLAC)

Methods used

- Define hardware solutions
  - storage block with certain capacities and capabilities
- Perform simple trend analysis
  - costs, densities, CPU vs. IO throughput
- Follow storage/data path in computing models
  - ATLAS, CMS (and Alice) used at the moment
  - assume input rate is fixed (scaling?)
  - estimate inter-T1 data flow
  - estimate data flow to T2
  - attempt to estimate file and array/tape contentions
- Define storage classes
  - based on storage use in models
  - reliability, throughput (=> cost function)
- Using the CERN tender for DAS storage as the basis for an example set of requirements

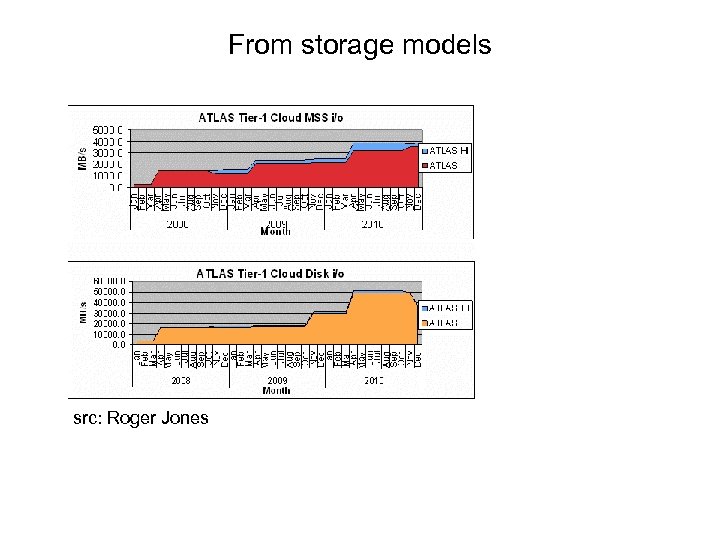

From storage models (source: Roger Jones)

Trying to deliver

- Type of storage fitted to the specific requirements
  - access patterns
  - applications involved
  - data classes
- Amount of storage at time t0 to t+4 years
  - what is needed when
  - growth of data sets is ??
- (Non-exhaustive) list of hardware
  - via web site (fed with recent talks)
  - experiences
  - needs maintenance
- Determination list for disk storage at T2 (and T1)
  - IO rates via connections (Ethernet, SCSI, InfiniBand)
  - Availability types (RAID)
  - Management, maintenance and replacement costs

For T1 only

- Tape access and throughput specs
  - involves disk storage for caching
  - relates to the amount of disk (cache/data ratio)
- CMS and ATLAS seem to have different tape access predictions.
- Tape contention for
  - raw data
  - reconstruction
    - ATLAS: 1500 MB/s in 2008, 4000 MB/s in 2010 (all T1s)
  - T2 access
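
The throughput figures above can be turned into a rough drive count. The following is a minimal sketch, not the task force's method: it treats the ATLAS numbers as aggregate tape rates over all T1s, and the per-drive rate and the efficiency factor are hypothetical placeholders that each site would replace with its own measurements.

```python
import math

# Aggregate ATLAS rates over all T1s, in MB/s, as quoted on the slide.
ATLAS_RATES_MB_S = {2008: 1500.0, 2010: 4000.0}

# Hypothetical placeholders, not taken from the slides.
ASSUMED_DRIVE_MB_S = 100.0   # nominal streaming rate of one tape drive
ASSUMED_EFFICIENCY = 0.5     # fraction of nominal rate reached under contention


def tape_drives_needed(aggregate_mb_s: float, drive_mb_s: float, efficiency: float) -> int:
    """Return the number of drives needed to sustain an aggregate rate."""
    if drive_mb_s <= 0 or not 0 < efficiency <= 1:
        raise ValueError("drive rate must be positive and efficiency in (0, 1]")
    return math.ceil(aggregate_mb_s / (drive_mb_s * efficiency))


for year, rate in sorted(ATLAS_RATES_MB_S.items()):
    drives = tape_drives_needed(rate, ASSUMED_DRIVE_MB_S, ASSUMED_EFFICIENCY)
    print(f"{year}: {rate:.0f} MB/s -> {drives} drives (assumed inputs)")
```

Lowering the efficiency factor is a simple way to model contention between raw data, reconstruction and T2 access on the same drives.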

Conclusions

- Disk size is the leading factor. IO throughput is of minor importance (observed: 1 MB/s per CPU), but rates at production are not known.
- The DAS storage model is cheaper, but there is less experience with it.
- Cache-to-data ratio is not known.
- Probably need a yearly assessment of hardware (especially important for those that buy infrequently).
- Experiment models differ on several points: more analysis needed.
- Disk server scaling is difficult because the network-to-disk ratio is not useful.
- Analysis access pattern is not well known but will hit databases most
  - should be revisited in a year.
- Non-POSIX access requires more CPU.
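
The first conclusion can be illustrated by sizing a disk pool twice, once by capacity and once by throughput. This is only a sketch under stated assumptions: the 1 MB/s per CPU figure is the observed value from the slide, while the pool size, CPU count and per-server capacity and throughput are hypothetical inputs.

```python
import math

OBSERVED_MB_S_PER_CPU = 1.0  # observed IO rate per CPU, from the slide


def servers_needed(capacity_tb: float, n_cpus: int,
                   server_tb: float, server_mb_s: float) -> tuple[int, int]:
    """Return (servers needed for capacity, servers needed for throughput)."""
    if server_tb <= 0 or server_mb_s <= 0:
        raise ValueError("per-server capacity and throughput must be positive")
    by_capacity = math.ceil(capacity_tb / server_tb)
    by_throughput = math.ceil(n_cpus * OBSERVED_MB_S_PER_CPU / server_mb_s)
    return by_capacity, by_throughput


# Hypothetical site: 300 TB of disk, 1000 CPUs, servers of 5 TB and 100 MB/s each.
by_capacity, by_throughput = servers_needed(300.0, 1000, 5.0, 100.0)
print(f"servers for capacity: {by_capacity}, for throughput: {by_throughput}")
print("leading factor:", "disk size" if by_capacity >= by_throughput else "IO throughput")
```

With inputs of this kind the capacity requirement dominates, but the result flips if production rates per CPU turn out much higher than the observed value, which is why the slide flags them as unknown.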

What is in it for GridKa

- Alignment of expert views on hardware
  - realization of very different local circumstances
  - survey of estimated costs returned similar numbers
    - SAN vs. DAS/storage in a box: factor 1.5 more expensive
    - Tape: 620 euro/TB
- Better understanding of experiment requirements
  - in-house workshop with experts may be worthwhile
  - did not lead to altered or reduced storage requirements
- DAS/storage in a box will not work for all
  - Large centers buy both. There are success and failure stories.
- T2 is probably different
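
The survey numbers can be combined into a simple cost comparison. The sketch below reads "factor 1.5" as SAN costing 1.5 times as much as DAS for the same capacity, which is an interpretation of the slide; the DAS price per TB and the capacity are hypothetical inputs, since the slides give no absolute disk price.

```python
SAN_OVER_DAS_FACTOR = 1.5  # from the cost survey on the slide
TAPE_EUR_PER_TB = 620.0    # from the cost survey on the slide


def storage_costs(capacity_tb: float, das_eur_per_tb: float) -> dict[str, float]:
    """Return the purchase cost in euro of one capacity on DAS, SAN and tape."""
    if capacity_tb < 0 or das_eur_per_tb < 0:
        raise ValueError("capacity and price must be non-negative")
    das = capacity_tb * das_eur_per_tb
    return {
        "das": das,
        "san": das * SAN_OVER_DAS_FACTOR,
        "tape": capacity_tb * TAPE_EUR_PER_TB,
    }


# Hypothetical example: 100 TB at an assumed DAS price of 2000 euro/TB.
for technology, cost in storage_costs(100.0, 2000.0).items():
    print(f"{technology}: {cost:.0f} euro")
```

Purchase price is only part of the picture: maintenance, rack space and power are not included here.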

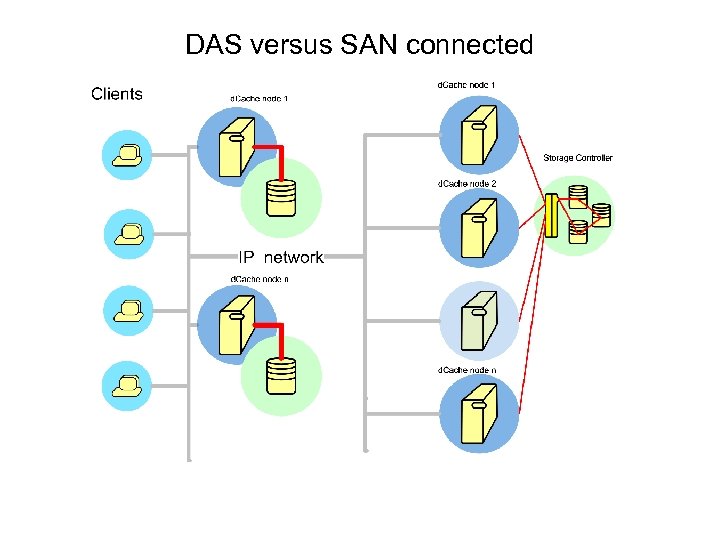

DAS versus SAN connected

GridKa storage preview

- Next year: expansion with 325 TB
  - Use expansion as disk cache to tape via dCache
  - Re-use reliable storage from existing SAN pool
- On-line disk of LHC experiments will move to cache pool
- Storage in a box (DAS/NAS)
  - Cons:
    - hidden costs? Maintenance is difficult to estimate: failure rate is multiplied by recovery/service time
    - not adaptable to different architectures/uses
    - needs more rack space, power (est. 20%)
  - Pro:
    - price
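
The maintenance remark (failure rate multiplied by recovery/service time) can be written out as a small calculation. This is a sketch only: the number of boxes, the failure rate and the recovery time are hypothetical values, and the model assumes independent failures with a fixed recovery time.

```python
HOURS_PER_YEAR = 24 * 365


def expected_downtime_hours(n_boxes: int, failures_per_box_year: float,
                            recovery_hours: float) -> float:
    """Expected box-hours of unavailability per year across the whole pool."""
    return n_boxes * failures_per_box_year * recovery_hours


def unavailable_fraction(failures_per_box_year: float, recovery_hours: float) -> float:
    """Expected fraction of the year during which a single box is unavailable."""
    return failures_per_box_year * recovery_hours / HOURS_PER_YEAR


# Hypothetical pool: 40 boxes, 0.25 failures per box per year, 48 h to recover.
downtime = expected_downtime_hours(40, 0.25, 48.0)
fraction = unavailable_fraction(0.25, 48.0)
print(f"expected downtime: {downtime:.0f} box-hours per year")
print(f"per-box unavailability: {fraction:.4%}")
```

Because the two factors multiply, halving the service time is as effective as halving the failure rate, which is one reason service contracts matter as much as hardware quality.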
