- Number of slides: 49

Storage management and caching in PAST, a large-scale, persistent peer-to-peer storage utility

Gabi Kliot, Computer Science Department, Technion. Topics in Reliable Distributed Computing, 21/11/2004. Partially borrowed from Peter Druschel's presentation.

Outline
- Introduction
- Pastry overview
- PAST overview
- Storage management
- Caching
- Experimental results
- Conclusion

Sources
- "Storage management and caching in PAST, a large-scale, persistent peer-to-peer storage utility", Antony Rowstron (Microsoft Research) and Peter Druschel (Rice University)
- "Pastry: Scalable, decentralized object location and routing for large-scale peer-to-peer systems", Antony Rowstron (Microsoft Research) and Peter Druschel (Rice University)

PASTRY

Pastry
- Generic p2p location and routing substrate (DHT)
  - Self-organizing overlay network (joins, departures, locality repair)
  - Consistent hashing
  - Lookup/insert of an object in < $\log_{2^b} N$ routing steps (expected)
  - O(log N) per-node state
  - Network locality heuristics
- Scalable, fault resilient, self-organizing, locality aware, secure

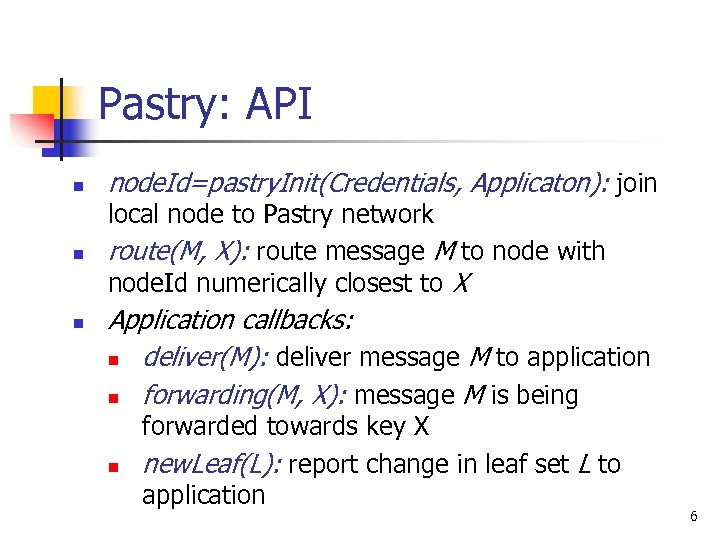

Pastry: API
- nodeId = pastryInit(Credentials, Application): join the local node to the Pastry network
- route(M, X): route message M to the node whose nodeId is numerically closest to X
- Application callbacks:
  - deliver(M): deliver message M to the application
  - forwarding(M, X): message M is being forwarded towards key X
  - newLeaf(L): report a change in leaf set L to the application
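
A minimal single-process sketch of how an application plugs into the API above. The classes `Application`, `ToyOverlay` and `Recorder` are hypothetical; `ToyOverlay.route` delivers in one hop to the numerically closest node (no prefix routing, no ring wraparound), so it only illustrates the callback contract, not Pastry itself.

```python
from abc import ABC, abstractmethod


class Application(ABC):
    """Callbacks an application registers with Pastry (names follow the slide)."""

    @abstractmethod
    def deliver(self, msg): ...

    @abstractmethod
    def forwarding(self, msg, key): ...

    @abstractmethod
    def new_leaf(self, leaf_set): ...


class ToyOverlay:
    """Hypothetical stand-in overlay: route() makes a single hop to the closest node,
    so forwarding() is never invoked here and ring wraparound is ignored."""

    def __init__(self):
        self.nodes = {}  # nodeId -> Application

    def pastry_init(self, node_id: int, app: Application) -> int:
        self.nodes[node_id] = app
        for other in self.nodes.values():
            other.new_leaf(sorted(self.nodes))  # tiny ring: every node sees every node
        return node_id

    def route(self, msg, key: int) -> int:
        # Deliver at the node whose nodeId is numerically closest to key.
        target = min(self.nodes, key=lambda nid: abs(nid - key))
        self.nodes[target].deliver(msg)
        return target


class Recorder(Application):
    """Example application that records what it receives."""

    def __init__(self):
        self.inbox, self.leaves = [], []

    def deliver(self, msg):
        self.inbox.append(msg)

    def forwarding(self, msg, key):
        pass

    def new_leaf(self, leaf_set):
        self.leaves = leaf_set
```

With nodes 10 and 100 joined, `route("hello", 90)` is delivered to node 100, the numerically closer one.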

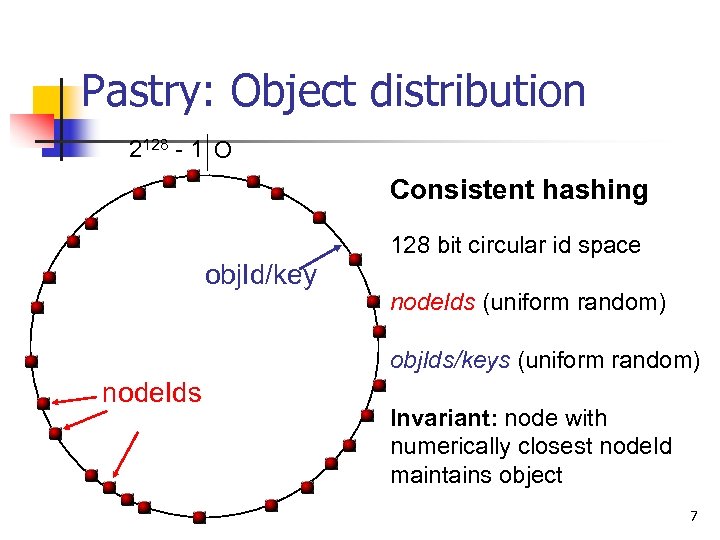

Pastry: Object distribution
- Consistent hashing over a 128-bit circular id space, from 0 to $2^{128} - 1$
- nodeIds (uniform random)
- objIds/keys (uniform random)
- Invariant: the node with the numerically closest nodeId maintains the object
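
The invariant can be sketched as follows, assuming ids are derived by hashing (the helper `make_id`, which keeps the top 128 bits of a SHA-1 digest, is a hypothetical choice) and "numerically closest" is measured around the circular id space.

```python
import hashlib

ID_BITS = 128
RING = 2 ** ID_BITS


def make_id(data: bytes) -> int:
    """Hypothetical helper: uniform 128-bit id from the top 128 of SHA-1's 160 bits."""
    return int.from_bytes(hashlib.sha1(data).digest(), "big") >> (160 - ID_BITS)


def ring_distance(a: int, b: int) -> int:
    """Distance on the circular id space 0 .. 2**128 - 1."""
    d = abs(a - b) % RING
    return min(d, RING - d)


def responsible_node(key: int, node_ids: list) -> int:
    """The node with the numerically closest nodeId maintains the object."""
    return min(node_ids, key=lambda n: ring_distance(n, key))
```

Because the space is circular, key $2^{128} - 1$ is maintained by node 0 (distance 1), not by a node in the middle of the range.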

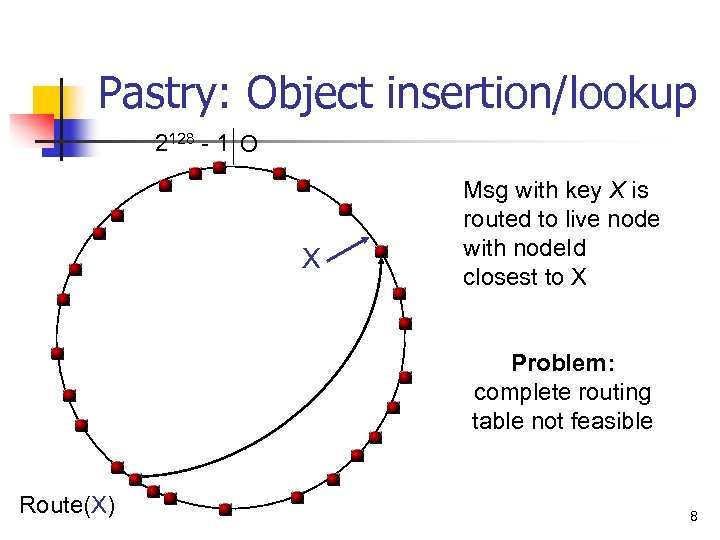

Pastry: Object insertion/lookup
- Route(X): a message with key X is routed to the live node whose nodeId is numerically closest to X
- Problem: a complete routing table is not feasible

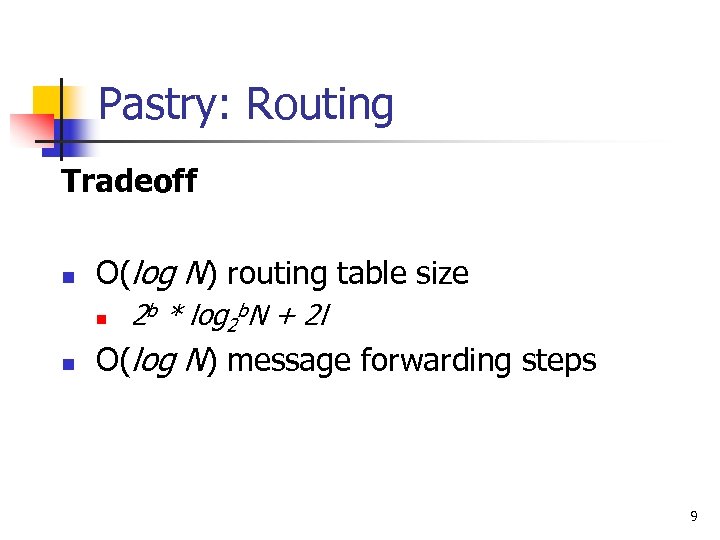

Pastry: Routing tradeoff
- O(log N) routing table size: $2^b \cdot \log_{2^b} N + 2l$ entries
- O(log N) message forwarding steps
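
A quick evaluation of the slide's formula, with illustrative parameters b = 4 and l = 8 (assumed values), shows that per-node state grows only logarithmically: going from $10^6$ to $10^9$ nodes adds about 40 entries.

```python
import math


def routing_state(n_nodes: int, b: int, l: int) -> float:
    """Entries per node from the slide: 2**b * log_{2**b}(N) + 2l."""
    return 2 ** b * math.log(n_nodes, 2 ** b) + 2 * l


small = routing_state(10 ** 6, b=4, l=8)   # about 95.7 entries
large = routing_state(10 ** 9, b=4, l=8)   # about 135.6 entries
```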

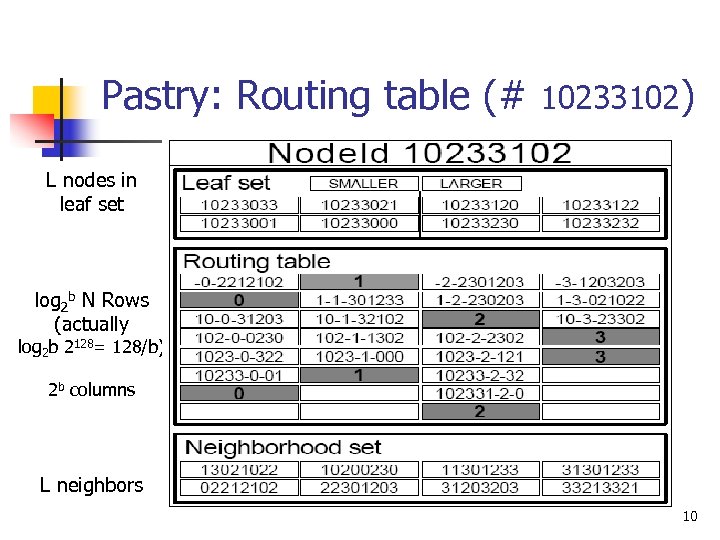

Pastry: Routing table of node 10233102 (base-4 digits, i.e. b = 2)
- $\log_{2^b} N$ rows (strictly, at most $\log_{2^b} 2^{128} = 128/b$)
- $2^b$ columns
- L nodes in the leaf set
- L neighbors

Pastry: Leaf sets

Each node maintains the IP addresses of the nodes with the L numerically closest larger nodeIds and the L numerically closest smaller nodeIds. The leaf set is used for:
- routing efficiency/robustness
- fault detection (keep-alive)
- application-specific local coordination

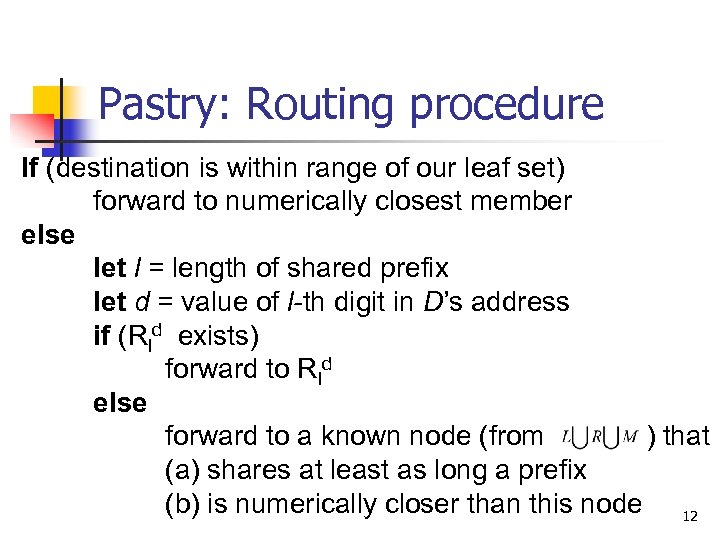

Pastry: Routing procedure (message with destination key D)
- If D is within the range of our leaf set, forward to the numerically closest member
- Else:
  - let l = length of the prefix shared by D and our nodeId
  - let d = value of the l-th digit of D
  - if the routing table entry $R_l^d$ exists, forward to $R_l^d$
  - else forward to a known node that (a) shares a prefix with D at least as long as ours, and (b) is numerically closer to D than this node
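
The three cases above can be sketched in Python, assuming hex-digit nodeIds (b = 4), a leaf set given as a list of ids and a routing table held in a dict keyed by `(row, digit)`; these data layouts are illustrative, and ring wraparound is ignored.

```python
def shared_prefix_len(a: str, b: str) -> int:
    """Number of leading hex digits two ids have in common."""
    n = 0
    while n < min(len(a), len(b)) and a[n] == b[n]:
        n += 1
    return n


def next_hop(self_id: str, key: str, leaf_set: list, table: dict) -> str:
    """Return the nodeId to forward to; returning self_id means deliver locally."""

    def num(x: str) -> int:
        return int(x, 16)

    def dist(x: str) -> int:
        # Plain numeric distance; ring wraparound is ignored in this sketch.
        return abs(num(x) - num(key))

    members = leaf_set + [self_id]
    # Case 1: key within the leaf-set range -> numerically closest member.
    if leaf_set and min(map(num, members)) <= num(key) <= max(map(num, members)):
        return min(members, key=dist)
    # Case 2: routing table entry R[l][d], l = shared prefix length, d = l-th digit of key.
    l = shared_prefix_len(self_id, key)
    if l == len(key):
        return self_id  # key equals our own nodeId
    d = key[l]
    if (l, d) in table:
        return table[(l, d)]
    # Case 3 (rare): any known node with a prefix at least as long that is numerically closer.
    known = set(leaf_set) | set(table.values())
    better = [n for n in known
              if shared_prefix_len(n, key) >= l and dist(n) < dist(self_id)]
    return min(better, key=dist) if better else self_id
```

For example, node 65a1fc shares no prefix with key d46a1c, so it uses its row-0 entry for digit `d`; node d467c4, whose leaf set spans the key, delivers locally because it is the numerically closest member.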

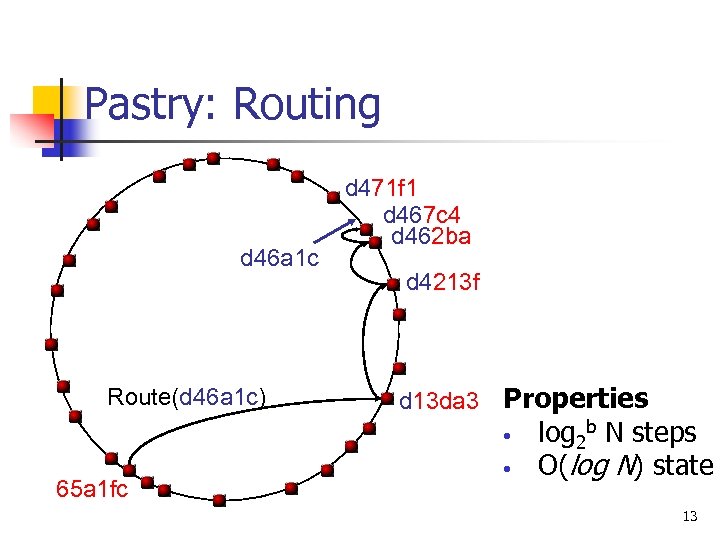

Pastry: Routing
- Example: Route(d46a1c) issued at node 65a1fc; nodes shown: d13da3, d4213f, d462ba, d467c4, d471f1
- Properties:
  - $\log_{2^b} N$ steps
  - O(log N) state
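
A small check of the example, assuming the message visits the nodes in the order 65a1fc, d13da3, d4213f, d462ba, d467c4: each hop shares at least as long a prefix with the key as the previous one, and among the nodes near the key, d467c4 is numerically closest, so it is where the message is delivered.

```python
def shared_prefix_len(a: str, b: str) -> int:
    """Number of leading hex digits two ids have in common."""
    n = 0
    while n < min(len(a), len(b)) and a[n] == b[n]:
        n += 1
    return n


key = "d46a1c"
path = ["65a1fc", "d13da3", "d4213f", "d462ba", "d467c4"]  # assumed hop order
prefixes = [shared_prefix_len(node, key) for node in path]
closest = min(["d462ba", "d467c4", "d471f1"],
              key=lambda node: abs(int(node, 16) - int(key, 16)))
```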

Pastry: Routing
- Integrity of the overlay: guaranteed unless L/2 nodes with adjacent nodeIds fail simultaneously
- Number of routing hops:
  - No failures: < $\log_{2^b} N$ expected, $128/b + 1$ maximum
  - During failure recovery: O(N) in the worst case, much better in the average case
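
Plugging numbers into the two failure-free bounds, with an illustrative network of $10^6$ nodes: with b = 4 a lookup is expected to take under 5 hops and never more than 33.

```python
import math


def hop_bounds(n_nodes: int, b: int, id_bits: int = 128):
    """Expected (< log_{2^b} N) and maximum (id_bits/b + 1) hops without failures."""
    return math.log(n_nodes, 2 ** b), id_bits // b + 1


expected, worst = hop_bounds(10 ** 6, b=4)   # about 4.98 and 33
```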

Pastry: Locality properties
- Assumption: a scalar proximity metric
  - e.g. ping/RTT delay, number of IP hops
  - traceroute, subnet masks
  - a node can probe its distance to any other node
- Proximity invariant: each routing table entry refers to a node close to the local node (in the proximity space), among all nodes with the appropriate nodeId prefix.
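
The proximity invariant amounts to a selection rule for each table slot. A minimal sketch, where `proximity` is a hypothetical callable returning, for example, a measured RTT:

```python
def choose_entry(candidates, prefix, proximity):
    """Among nodes whose nodeId starts with `prefix`, keep the one closest in proximity space."""
    eligible = [n for n in candidates if n.startswith(prefix)]
    return min(eligible, key=proximity) if eligible else None


rtt_ms = {"d13da3": 80.0, "d2aa01": 12.5, "e0ff00": 1.0}  # illustrative RTTs
entry = choose_entry(list(rtt_ms), "d", lambda n: rtt_ms[n])  # "d2aa01"
```

Node e0ff00 is nearest overall but has the wrong prefix, so the slot for prefix `d` gets d2aa01.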

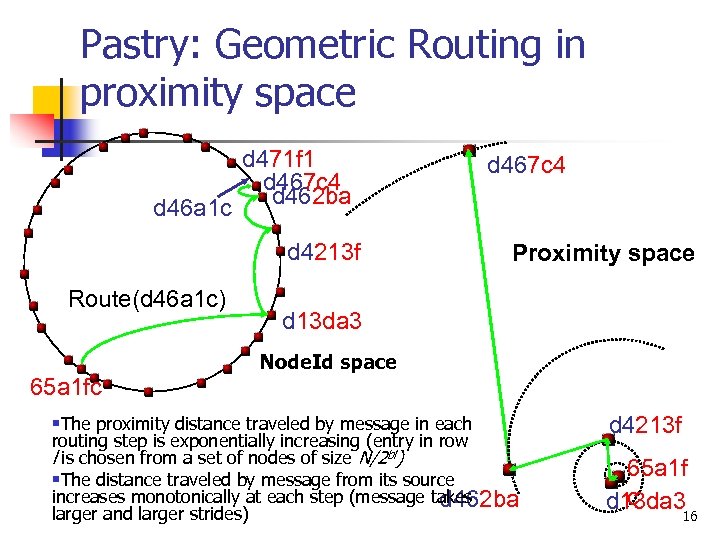

Pastry: Geometric routing in proximity space

[Figure: Route(d46a1c) from 65a1fc via d13da3, d4213f, d462ba to d467c4 (d471f1 nearby), shown in the nodeId space and in the proximity space]
- The proximity distance traveled by the message in each routing step increases exponentially (the entry in row l is chosen from a set of nodes of size $N/2^{bl}$)
- The distance traveled by the message from its source increases monotonically at each step (the message takes larger and larger strides)
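
The set sizes behind the first bullet, for an illustrative $N = 10^6$ and b = 4: each row draws its entry from a pool 16 times smaller than the previous row, so the nearest eligible node tends to be farther away and the strides grow.

```python
def candidates_per_row(n_nodes: int, b: int, rows: int) -> list:
    """Expected number of nodes eligible for a row-l entry: N / 2**(b*l)."""
    return [n_nodes / 2 ** (b * l) for l in range(rows)]


sizes = candidates_per_row(10 ** 6, b=4, rows=4)
# [1000000.0, 62500.0, 3906.25, 244.140625]
```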

Pastry: Locality properties
- Each routing step is local, but there is no guarantee of a globally shortest path
- Nevertheless, simulations show:
  - the expected distance traveled by a message in the proximity space is within a small constant of the minimum
  - among the k nodes with nodeIds closest to the key, the message is likely to reach the node closest to the source node first

Pastry: Self-organization

Initializing and maintaining routing tables and leaf sets upon:
- node addition
- node departure (failure)

The goal is to keep every routing table entry referring to a nearby node, among all live nodes with the appropriate prefix.

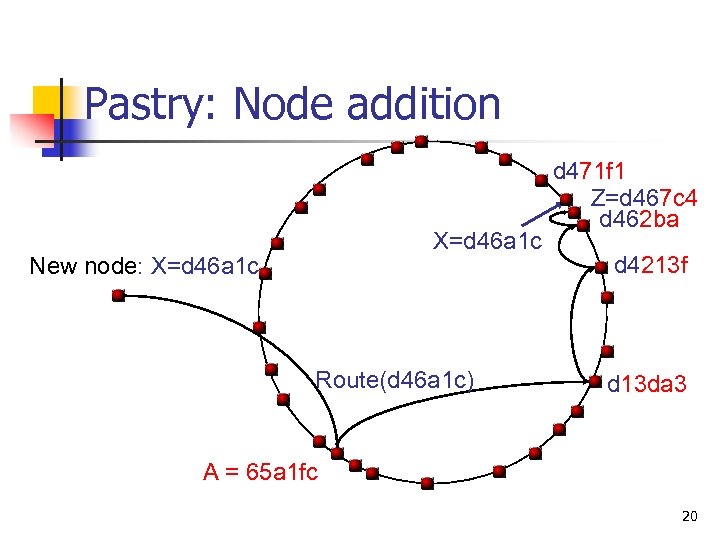

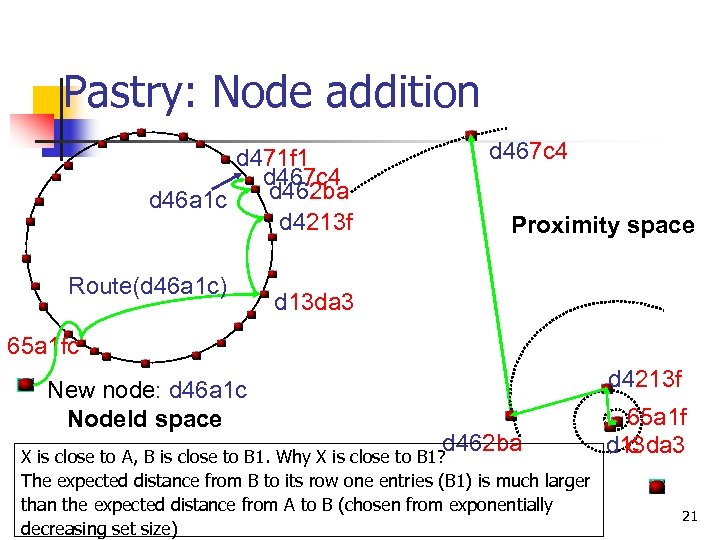

Pastry: Node addition
- New node X contacts a nearby node A
- A routes a "join" message with key X, which arrives at Z, the node numerically closest to X
- X obtains its leaf set from Z, and the i-th row of its routing table from the i-th node on the route from A to Z
- X informs any nodes that need to be aware of its arrival
- X also improves the locality of its table by requesting neighborhood sets from all nodes it knows
- In practice: an optimistic approach
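
The state-gathering step can be sketched as below. Routing tables are modeled as plain lists of rows and the function name `build_join_state` is hypothetical; the notification and locality-improvement steps are omitted.

```python
def build_join_state(path_tables: list, z_leaf_set: list):
    """Assemble a joining node X's initial state.

    path_tables: routing tables (lists of rows) of the nodes the join message
    visited, in order A, B1, B2, ..., Z. Row i of X's table is copied from the
    i-th node's row i, since that node shares at least an i-digit prefix with X.
    X's leaf set starts as a copy of Z's leaf set.
    """
    rows = [table[i] for i, table in enumerate(path_tables) if i < len(table)]
    return rows, list(z_leaf_set)


a = [["a-row0"], ["a-row1"]]
b1 = [["b-row0"], ["b-row1"], ["b-row2"]]
z = [["z-row0"], ["z-row1"], ["z-row2"]]
rows, leaves = build_join_state([a, b1, z], ["z-leaf-1", "z-leaf-2"])
```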

Pastry: Node addition (example)
- New node X = d46a1c contacts nearby node A = 65a1fc
- A issues Route(d46a1c); the join message passes through d13da3, d4213f and d462ba and arrives at Z = d467c4 (d471f1 is also near the key)

Pastry: Node addition (proximity space)

[Figure: join route for new node d46a1c over nodes 65a1fc, d13da3, d4213f, d462ba, d467c4 and d471f1, shown in the nodeId space and in the proximity space]
- X is close to A, and B is close to B1. Why is X close to B1?
- The expected distance from B to its row-one entries (B1) is much larger than the expected distance from A to B, because entries are chosen from exponentially decreasing set sizes

Node departure (failure)
- Leaf set repair (eager, all the time):
  - leaf set members exchange keep-alive messages
  - request the leaf set from the furthest live node in the set
- Routing table repair (lazy, upon failure):
  - get the table from peers in the same row; if none is found, from higher rows
- Neighborhood set repair (eager)
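
A sketch of the eager leaf set repair, assuming integer nodeIds, l entries kept on each side and no ring wraparound; `furthest_leaf_set` stands for the set returned by the furthest live node on the failed node's side.

```python
def repair_leaf_set(self_id: int, leaf_set: list, failed: int,
                    furthest_leaf_set: list, l: int) -> list:
    """Drop the failed node and refill from the furthest live node's leaf set,
    keeping up to l numerically closest nodes on each side of self_id."""
    pool = (set(leaf_set) | set(furthest_leaf_set)) - {failed, self_id}
    smaller = sorted((n for n in pool if n < self_id), reverse=True)[:l]
    larger = sorted(n for n in pool if n > self_id)[:l]
    return sorted(smaller + larger)


# Node 50 (l = 2) loses 70; its furthest live larger neighbor, 60, knows about 80.
repaired = repair_leaf_set(50, [30, 40, 60, 70], failed=70,
                           furthest_leaf_set=[40, 50, 70, 80], l=2)
```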

Pastry: Security
- Secure nodeId assignment
- Randomized routing: pick a random node among all potential next hops
- Byzantine fault-tolerant leaf set membership protocol

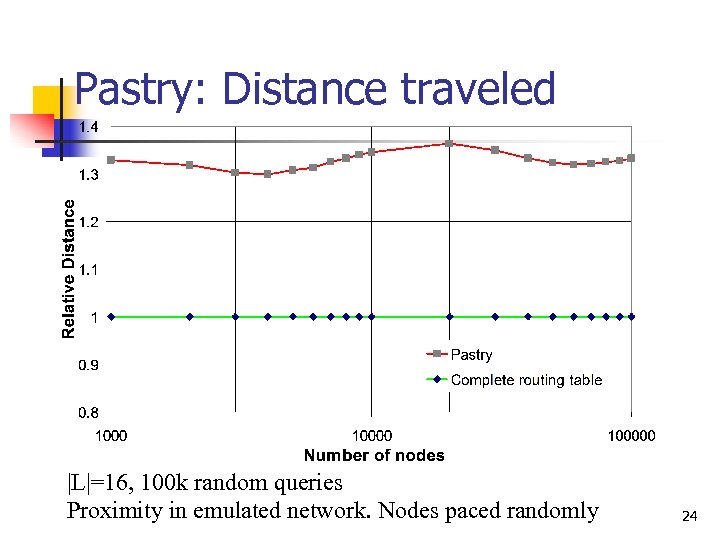

Pastry: Distance traveled. |L| = 16, 100k random queries; proximity in an emulated network; nodes placed randomly.

Pastry: Summary
- Generic p2p overlay network
- Scalable, fault resilient, self-organizing, secure
- O(log N) routing steps (expected)
- O(log N) routing table size
- Network locality properties

PAST

INTRODUCTION
- PAST system
  - Internet-based, peer-to-peer global storage utility
  - Characteristics:
    - strong persistence, high availability (by using k replicas)
    - scalability (due to efficient Pastry routing)
    - short insert and query paths
    - query load balancing and latency reduction (due to wide dispersion, Pastry locality and caching)
    - security
  - Composed of nodes connected to the Internet; each node has a 128-bit nodeId
  - Uses Pastry as an efficient routing scheme
  - No support for mutable files, searching or directory lookup

INTRODUCTION
- Function of nodes:
  - store replicas of files
  - initiate and route client requests to insert or retrieve files in PAST
- File-related properties:
  - inserted files have a quasi-unique fileId
  - each file is replicated across multiple nodes
  - to retrieve a file, a client must know its fileId and, if necessary, its decryption key
  - fileId: 160 bits, computed as the SHA-1 hash of the file name, the owner's public key and a random salt
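
A sketch of the fileId computation with Python's `hashlib`. The field order and plain concatenation are assumptions (a real encoding would length-prefix each field), as is deriving the routing key from the 128 most significant bits of the fileId.

```python
import hashlib
import os


def compute_file_id(name: str, owner_public_key: bytes, salt: bytes) -> int:
    """160-bit fileId = SHA-1 over (file name, owner's public key, salt), as an integer.

    Plain concatenation is a simplification; fields are not length-prefixed here.
    """
    h = hashlib.sha1()
    h.update(name.encode("utf-8"))
    h.update(owner_public_key)
    h.update(salt)
    return int.from_bytes(h.digest(), "big")


salt = os.urandom(8)                   # fresh random salt for each insert
file_id = compute_file_id("report.pdf", b"owner-public-key-bytes", salt)
routing_key = file_id >> (160 - 128)   # assumption: route on the top 128 bits
```

Changing only the salt yields an unrelated fileId, which is what makes re-salting usable for file diversion.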

PAST Operation
- Insert: fileId = Insert(name, owner-credentials, k, file)
  1. fileId is computed (hash of the file name, public key, etc.)
  2. The request message reaches one of the k nodes closest to fileId
  3. That node accepts a replica of the file and forwards the message to the k-1 other closest nodes in its leaf set
  4. Once k nodes have accepted, an 'ack' message with a store receipt is passed to the client
- Lookup: file = Lookup(fileId)
- Reclaim: Reclaim(fileId, owner-credentials)
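
A centralized sketch of Insert and Lookup over an in-memory list of nodes. `StorageNode`, the tuple used as a store receipt and the all-or-nothing space check are illustrative assumptions; in PAST the request is routed through Pastry and receipts are signed.

```python
from dataclasses import dataclass, field


@dataclass
class StorageNode:
    node_id: int
    free: int                                   # remaining storage, in bytes
    files: dict = field(default_factory=dict)   # fileId -> contents


def insert(nodes, file_id, data: bytes, k: int):
    """Place replicas on the k nodes numerically closest to file_id.

    Returns one placeholder store receipt per replica, or None if any of the k
    nodes lacks space (in PAST, replica or file diversion would take over here).
    """
    closest = sorted(nodes, key=lambda n: abs(n.node_id - file_id))[:k]
    if len(closest) < k or any(len(data) > n.free for n in closest):
        return None
    receipts = []
    for n in closest:
        n.files[file_id] = data
        n.free -= len(data)
        receipts.append(("store-receipt", n.node_id, file_id))  # unsigned stand-in
    return receipts


def lookup(nodes, file_id):
    """Return the file from the closest node that holds a replica, if any."""
    for n in sorted(nodes, key=lambda n: abs(n.node_id - file_id)):
        if file_id in n.files:
            return n.files[file_id]
    return None
```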

STORAGE MANAGEMENT: why?
- Responsibilities:
  - replicas of a file must be maintained by the k nodes with nodeIds closest to its fileId
  - free storage space must be balanced among the nodes in PAST
- Conflict: some of the k nodes may have insufficient storage while neighboring nodes have sufficient storage
- Causes of load imbalance, three differences in:
  - the number of files assigned to each node
  - the size of each inserted file
  - the storage capacity of each node
- Resolution: replica diversion, file diversion

STORAGE MANAGEMENT: Replica diversion
- Goal: balance the remaining free storage space among the nodes in a leaf set
- Diversion steps of node A (which received an insertion request but has insufficient space):
  1. choose a node B among the nodes in the leaf set, excluding the k closest, such that B does not already hold a diverted replica of the file
  2. ask B to store a copy
  3. enter a file entry in A's table with a pointer to B
  4. send a store receipt as usual
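
The four diversion steps, sketched over in-memory nodes. The `Node` class is hypothetical, and picking the candidate with the most free space is an assumed tie-breaking rule, not something the slide specifies.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    node_id: int
    free: int
    files: dict = field(default_factory=dict)


def divert_replica(a: Node, leaf_set: list, k_closest_ids: set, file_id,
                   data: bytes, file_table: dict):
    """Node A cannot store a replica itself, so it diverts the copy to a leaf-set node B."""
    candidates = [b for b in leaf_set
                  if b.node_id not in k_closest_ids   # step 1: not one of the k closest
                  and file_id not in b.files          # ... and no diverted copy already
                  and b.free >= len(data)]
    if not candidates:
        return None                                   # no diversion target available
    b = max(candidates, key=lambda n: n.free)         # assumption: prefer most free space
    b.files[file_id] = data                           # step 2: B stores the copy
    b.free -= len(data)
    file_table[file_id] = b.node_id                   # step 3: A keeps a pointer to B
    return ("store-receipt", a.node_id, file_id)      # step 4: A issues the receipt
```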

STORAGE MANAGEMENT: Replica diversion
- Policy for accepting a replica at a node:
  - the node rejects the file if file_size / remaining_storage > t
  - the threshold t is t_pri for primary replicas and t_div for diverted replicas
  - avoids unnecessary diversion while the node still has space
  - prefers diverting large files, which minimizes the number of diversions
  - prefers accepting primary replicas over diverted replicas
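
The acceptance test as a function. The threshold values are taken from the experimental settings reported later in these slides (t_pri = 0.1, t_div = 0.05); treating a node with no free space as always rejecting is an added guard.

```python
T_PRI = 0.1    # threshold for primary replicas
T_DIV = 0.05   # stricter threshold for diverted replicas


def accepts(file_size: float, free_space: float, diverted: bool = False) -> bool:
    """Reject when file_size / free_space exceeds the threshold for this replica type."""
    if free_space <= 0:
        return False
    t = T_DIV if diverted else T_PRI
    return file_size / free_space <= t
```

An 8 MB file is accepted as a primary replica by a node with 100 MB free but rejected there as a diverted replica; a 20 MB file is rejected by that node yet accepted by a node with 1,000 MB free, which is how large files get pushed towards emptier nodes.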

STORAGE MANAGEMENT: File diversion
- Goal: balance the remaining free storage space among the nodes in the PAST network
- Triggered when all k nodes and their leaf sets have insufficient space
- The client node generates a new fileId using a different salt value
- Retry limit: 3 times
- On the fourth failure, the file is made smaller by fragmenting it
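
A sketch of the client-side retry loop. The three callables (`try_insert`, `make_file_id`, `fragment`) are hypothetical hooks standing in for a full insert attempt, fileId derivation from a salt, and the fragmentation fallback.

```python
import os

MAX_RETRIES = 3


def insert_with_file_diversion(try_insert, make_file_id, fragment):
    """Try the original fileId, then re-salt up to MAX_RETRIES times;
    after the fourth failed attempt, fall back to fragmenting the file."""
    for _ in range(1 + MAX_RETRIES):
        file_id = make_file_id(os.urandom(8))  # new random salt -> new fileId
        if try_insert(file_id):
            return file_id
    return fragment()
```

Each new salt maps the file to a different region of the id space, so a retry lands on a different set of k nodes and leaf sets.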

STORAGE MANAGEMENT: Node strategy to maintain k replicas
- In Pastry, neighboring nodes exchange keep-alive messages
- If a period T passes without a keep-alive, the leaf set nodes:
  - remove the failed node from the leaf set
  - include the live node with the next closest nodeId
- File strategy when nodes join and leave leaf sets:
  - if the failed node is one of the k nodes for certain files (primary or diverted replica holder), the replicas it held are re-created
  - to cope with diverter failure, diversion pointers are replicated
  - optimization: instead of requesting all its replicas, a joining node may install a pointer to the previous replica holder in its file table (as in replica diversion), followed by gradual migration

STORAGE MANAGEMENT: Fragmenting and file encoding
- Reed-Solomon encoding can be used to increase availability
- Fragmentation:
  - improves the evenness of disk utilization
  - improves bandwidth through parallel download
  - but increases latency, since several nodes must be contacted for retrieval

CACHING
- Goal: minimize client access latency, maximize query throughput, balance query load
- Create and maintain additional copies of highly popular files in the "unused" disk space of nodes
- Files are cached during successful insertion and lookup, on all nodes along the route
- GreedyDual-Size (GD-S) replacement policy:
  - a value $H_f = \mathrm{cost}(f)/\mathrm{size}(f)$ is assigned to each cached file
  - the file with the lowest $H_f$ is replaced
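
A sketch of GreedyDual-Size replacement for a node's cache. Beyond the slide's $H_f = \mathrm{cost}(f)/\mathrm{size}(f)$ rule, it includes the aging ("inflation") term of the standard GD-S algorithm, which is an assumption here, and uses a default cost of 1 as an illustrative choice, so small files get higher H values and stay longer.

```python
class GreedyDualSizeCache:
    """Sketch of GreedyDual-Size: evict the cached file with the lowest H value."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.used = 0
        self.inflation = 0.0   # aging term: set to H of the last evicted file
        self.entries = {}      # file_id -> (H value, size)

    def _h(self, size: int, cost: float) -> float:
        return self.inflation + cost / size

    def access(self, file_id, size: int, cost: float = 1.0) -> list:
        """Cache (or refresh) a file on insert/lookup; return the ids evicted to make room."""
        if file_id in self.entries:
            _, cached_size = self.entries[file_id]
            self.entries[file_id] = (self._h(cached_size, cost), cached_size)
            return []
        if size > self.capacity:
            return []  # too large to cache at all
        evicted = []
        while self.used + size > self.capacity:
            victim = min(self.entries, key=lambda f: self.entries[f][0])
            h_victim, victim_size = self.entries.pop(victim)
            self.inflation = h_victim
            self.used -= victim_size
            evicted.append(victim)
        self.entries[file_id] = (self._h(size, cost), size)
        self.used += size
        return evicted
```

In a 100-unit cache holding "a" (size 50, H = 0.02) and "b" (size 10, H = 0.1), caching "c" (size 45) evicts the large file "a".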

Security in PAST
- Smartcards: private/public key scheme
  - ensure the integrity of nodeId/fileId assignment
- Against malicious nodes:
  - store receipts prevent fewer than k replicas from being created
  - file certificates verify the authenticity of file content
  - file privacy through client-side encryption
  - routing table entries are signed
  - the routing scheme is randomized to prevent DoS
- Still, a malicious node cannot be completely prevented from suppressing valid entries
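
How a file certificate lets a client check content authenticity, reduced to the hash comparison. The certificate fields here are illustrative, and the owner's smartcard signature over the certificate is omitted entirely, so this shows only the integrity check, not authentication.

```python
import hashlib


def make_certificate(file_id: int, content: bytes, k: int) -> dict:
    """Hypothetical certificate body (unsigned here): fileId, content hash, replication factor."""
    return {"file_id": file_id, "content_hash": hashlib.sha1(content).hexdigest(), "k": k}


def verify_content(cert: dict, content: bytes) -> bool:
    """Accept retrieved content only if it hashes to the value in the certificate."""
    return hashlib.sha1(content).hexdigest() == cert["content_hash"]
```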

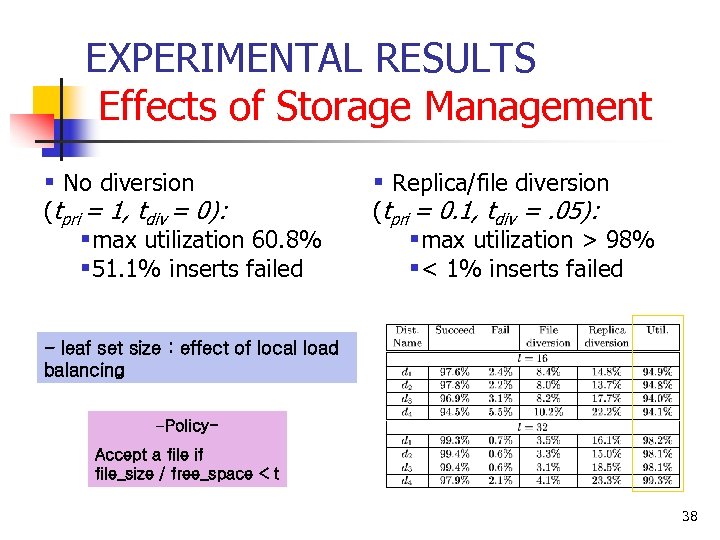

EXPERIMENTAL RESULTS: Effects of storage management
- No diversion (t_pri = 1, t_div = 0):
  - max utilization 60.8%
  - 51.1% of inserts failed
- Replica/file diversion (t_pri = 0.1, t_div = 0.05):
  - max utilization > 98%
  - < 1% of inserts failed
- Leaf set size: effect of local load balancing
- Policy: accept a file if file_size / free_space < t

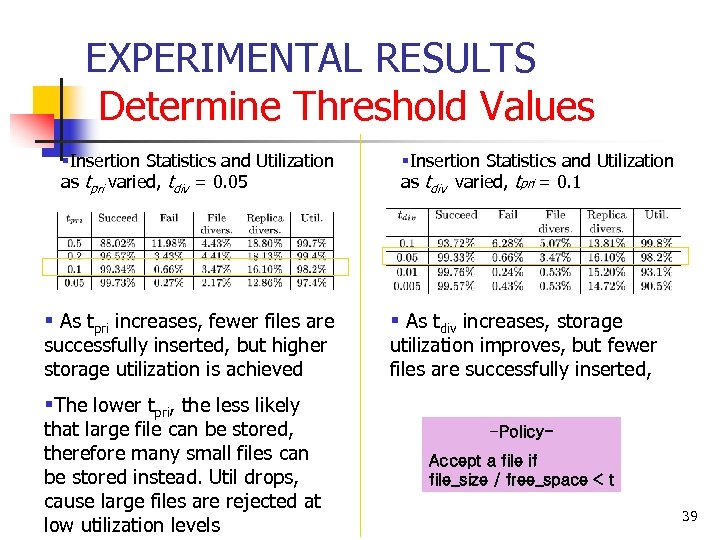

EXPERIMENTAL RESULTS: Determining threshold values
- Insertion statistics and utilization as t_pri varies, with t_div = 0.05:
  - as t_pri increases, fewer files are successfully inserted, but higher storage utilization is achieved
- Insertion statistics and utilization as t_div varies, with t_pri = 0.1:
  - as t_div increases, storage utilization improves, but fewer files are successfully inserted
- The lower t_pri, the less likely it is that a large file can be stored, so many small files can be stored instead. Utilization drops because large files are rejected at low utilization levels.
- Policy: accept a file if file_size / free_space < t

EXPERIMENTAL RESULTS: Impact of file and replica diversion
- File diversions are negligible for storage utilization below 83%
- The number of replica diversions is small even at high utilization: at 80% utilization, less than 10% of replicas are diverted
- => The overhead imposed by replica and file diversions is small as long as utilization is below 95%

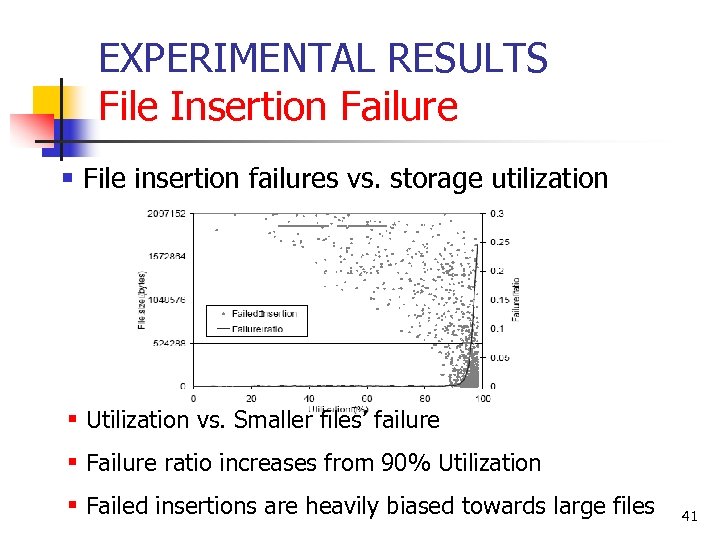

EXPERIMENTAL RESULTS: File insertion failure
- File insertion failures vs. storage utilization
- Utilization vs. failures of smaller files
- The failure ratio increases from 90% utilization
- Failed insertions are heavily biased towards large files

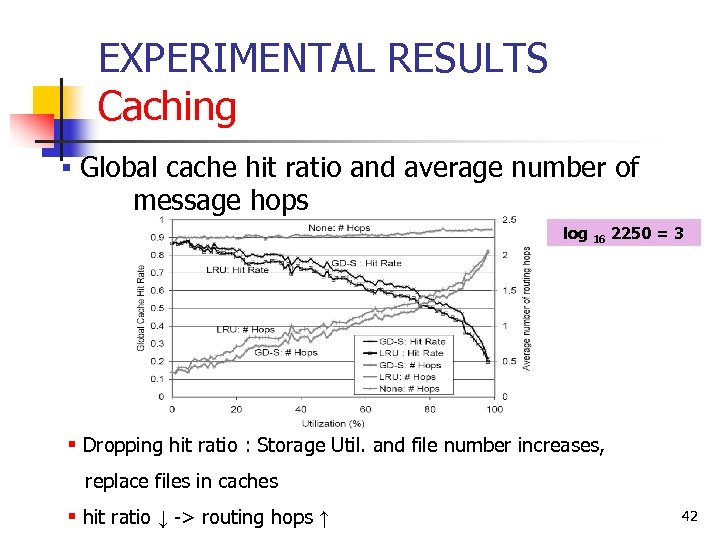

EXPERIMENTAL RESULTS: Caching
- Global cache hit ratio and average number of message hops; reference value $\log_{16} 2250 \approx 2.78$, i.e. about 3 hops
- Dropping hit ratio: as storage utilization and the number of files increase, files in caches are replaced
- Hit ratio ↓ → routing hops ↑
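
Evaluating the reference value on the slide: with 2250 nodes and b = 4, $\log_{16} 2250$ is about 2.78, which rounds up to 3 hops.

```python
import math

hops = math.log(2250, 16)       # about 2.78
rounded_up = math.ceil(hops)    # 3
```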

CONCLUSION
- Design and evaluation of PAST
  - storage management, caching
  - nodes and files are assigned uniformly distributed IDs
  - replicas of a file are stored at the k nodes closest to its fileId
- Experimental results:
  - storage utilization of 98% is achieved
  - low file insertion failure ratio at high storage utilization
  - effective caching achieves load balancing

Weaknesses
- Does not support mutable files (read only)
- No searching or directory lookup
- A local fault in a segment of the network may leave a functioning node unable to contact the outside world, since its routing table is mainly local
- No direct support for anonymity or confidentiality
- Breaking a large node apart: is it good or bad?
- The simulation is too sterile
- No experimental comparison of PAST to other systems

Comparison to other systems

Comparison
- Pastry compared to Freenet and Gnutella:
  - guaranteed answer in a bounded number of steps, while retaining the scalability of Freenet and the self-organization of Freenet and Gnutella
- Pastry compared to Chord:
  - Chord makes no explicit effort to achieve good network locality
- PAST compared to OceanStore:
  - PAST has no support for mutable files, searching or directory lookup
  - more sophisticated storage semantics could be built on top of PAST
- Pastry (and Tapestry) are similar to Plaxton:
  - routing based on prefixes, a generalization of hypercube routing
  - Plaxton is not self-organizing; one node is associated with each file, thus a single point of failure

Comparison
- PAST compared to FarSite:
  - FarSite has traditional file system semantics and a distributed directory service to locate content
  - every node maintains a partial list of live nodes, from which it chooses nodes to store replicas
  - the LAN assumptions of FarSite may not hold in a wide-area environment
- PAST compared to CFS:
  - CFS is built on top of Chord
  - file sharing medium, block oriented, read only
  - each block is stored on multiple nodes with adjacent Chord nodeIds; popular blocks are cached
    - increased file retrieval overhead
    - parallel block retrieval is good for large files
  - CFS assumes an abundance of free disk space
    - relies on hosting multiple logical nodes, with separate ids, in one physical Chord node in order to accommodate nodes with large storage capacity => increased query overhead

Comparison
- PAST compared to LAND:
  - expected constant number of outgoing links at each node
  - constant number of pointers to each object
  - constant bound on distortion (stretch): accumulated route cost divided by distance cost
    - the choice of links enforces a distance upper bound on each stage of the route
  - LAND uses a two-tier architecture: super-nodes

The END