- Number of slides: 16

STORAGE @ L’POOL
HEPSYSMAN, July 09
Steve Jones, sjones@hep.ph.liv.ac.uk

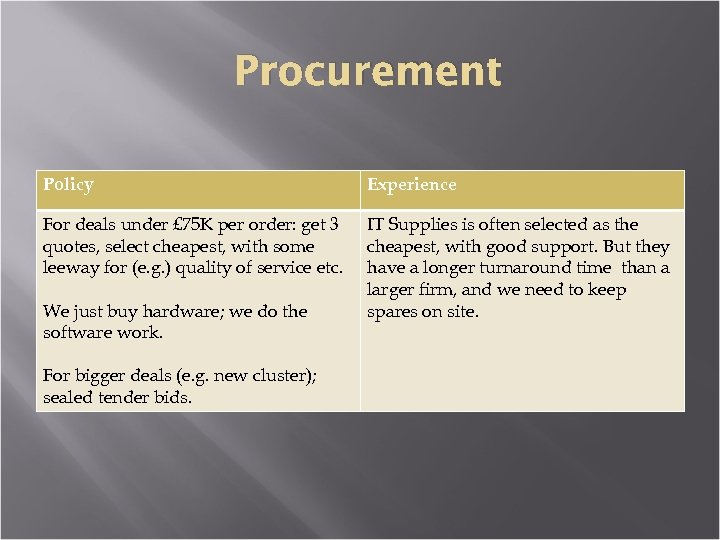

Procurement policy and experience

- For deals under £75K per order: get 3 quotes and select the cheapest, with some leeway for factors such as quality of service.
- IT Supplies is often selected as the cheapest, with good support. However, it has a longer turnaround time than a larger firm, so we need to keep spares on site.
- We just buy hardware; we do the software work ourselves.
- For bigger deals (e.g. a new cluster): sealed tender bids.

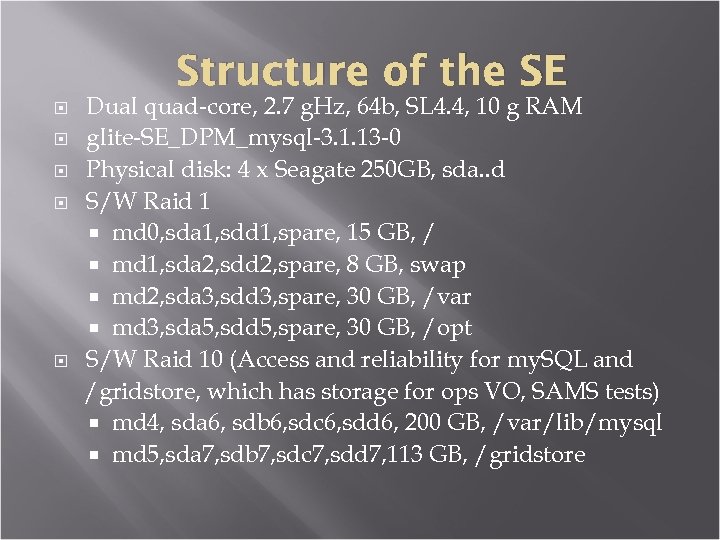

Structure of the SE

- Dual quad-core, 2.7 GHz, 64-bit, SL 4.4, 10 GB RAM
- glite-SE_DPM_mysql-3.1.13-0
- Physical disks: 4 x Seagate 250 GB, sda to sdd

S/W RAID 1:

| Array | Members | Size | Mount point |
|---|---|---|---|
| md0 | sda1, sdd1, spare | 15 GB | / |
| md1 | sda2, sdd2, spare | 8 GB | swap |
| md2 | sda3, sdd3, spare | 30 GB | /var |
| md3 | sda5, sdd5, spare | 30 GB | /opt |

S/W RAID 10 (access speed and reliability for MySQL and /gridstore, which holds storage for the ops VO and SAM tests):

| Array | Members | Size | Mount point |
|---|---|---|---|
| md4 | sda6, sdb6, sdc6, sdd6 | 200 GB | /var/lib/mysql |
| md5 | sda7, sdb7, sdc7, sdd7 | 113 GB | /gridstore |
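
The RAID 10 array sizes above follow from the usual mirroring rule: each block is stored twice, so an array of equal members offers half their combined space. The minimal Python sketch below works backwards from the slide's array sizes to the per-disk partition size each array needs. It assumes equal-sized members and two copies of every block; the slide does not state the actual md layout. The helper names are hypothetical.

```python
def raid_usable(level, n_members, member_gb):
    """Usable capacity (GB) of a software RAID array built from equal-sized members."""
    if level == 1:
        # every member holds a full copy; hot spares add no capacity
        return member_gb
    if level == 10:
        # two copies of every block, so half of the raw space is usable
        if n_members < 2 or n_members % 2:
            raise ValueError("this sketch assumes an even number of RAID 10 members")
        return n_members * member_gb / 2
    raise ValueError(f"unsupported level: {level}")


def raid10_member_gb(usable_gb, n_members):
    """Invert raid_usable for RAID 10: partition size needed on each member disk."""
    return usable_gb * 2 / n_members


# md4 (/var/lib/mysql) and md5 (/gridstore) each span sda..sdd (4 members)
md4_member = raid10_member_gb(200, 4)
md5_member = raid10_member_gb(113, 4)
print(md4_member, md5_member)  # 100.0 56.5
```

Under these assumptions each of the four disks gives about 100 GB to md4 and 56.5 GB to md5. Only sda and sdd also carry the RAID 1 system partitions.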

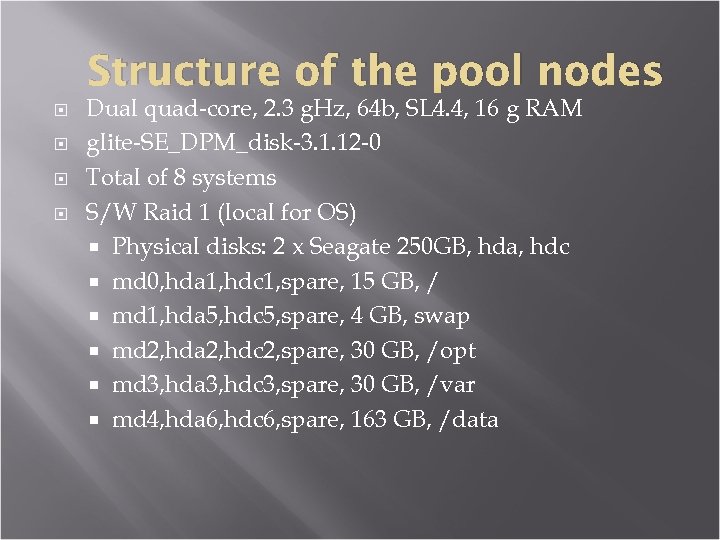

Structure of the pool nodes

- Dual quad-core, 2.3 GHz, 64-bit, SL 4.4, 16 GB RAM
- glite-SE_DPM_disk-3.1.12-0
- Total of 8 systems

S/W RAID 1 (local, for the OS). Physical disks: 2 x Seagate 250 GB, hda and hdc.

| Array | Members | Size | Mount point |
|---|---|---|---|
| md0 | hda1, hdc1, spare | 15 GB | / |
| md1 | hda5, hdc5, spare | 4 GB | swap |
| md2 | hda2, hdc2, spare | 30 GB | /opt |
| md3 | hda3, hdc3, spare | 30 GB | /var |
| md4 | hda6, hdc6, spare | 163 GB | /data |

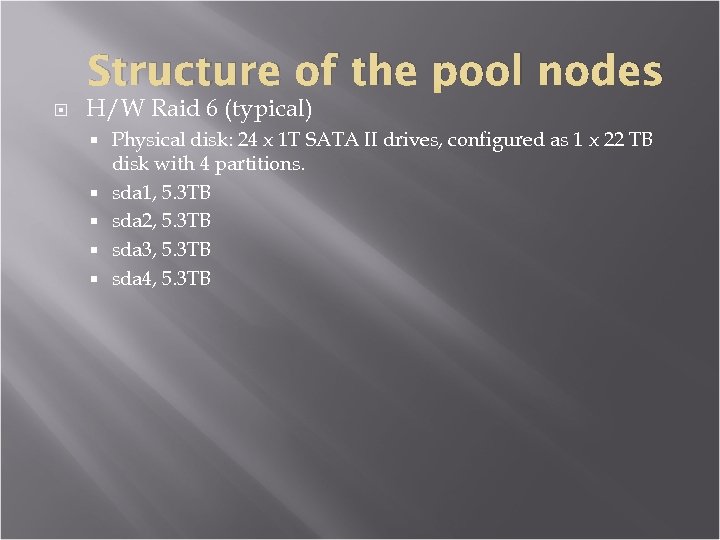

Structure of the pool nodes: H/W RAID 6 (typical)

- Physical disks: 24 x 1 TB SATA II drives, configured as 1 x 22 TB disk with 4 partitions.
- Partitions: sda1, sda2, sda3, sda4, 5.3 TB each.
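
The 22 TB figure is standard RAID 6 arithmetic. Two drives' worth of space holds parity, so usable space is (n - 2) x drive size, and the array survives any two drive failures. The short Python sketch below shows this. It uses nominal drive sizes, and the function names are hypothetical.

```python
def raid6_usable_tb(n_drives, drive_tb):
    """Usable capacity of a RAID 6 set: two drives' worth of space holds parity."""
    if n_drives < 4:
        raise ValueError("RAID 6 needs at least 4 drives")
    return (n_drives - 2) * drive_tb


def raid6_survives(failed_drives):
    """RAID 6 keeps data available with up to two failed drives."""
    return failed_drives <= 2


print(raid6_usable_tb(24, 1))                 # 22
print(raid6_survives(2), raid6_survives(3))   # True False
```

The slide's four 5.3 TB partitions total 21.2 TB, a little under the nominal 22 TB. The sketch does not model filesystem overhead or unit conversions.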

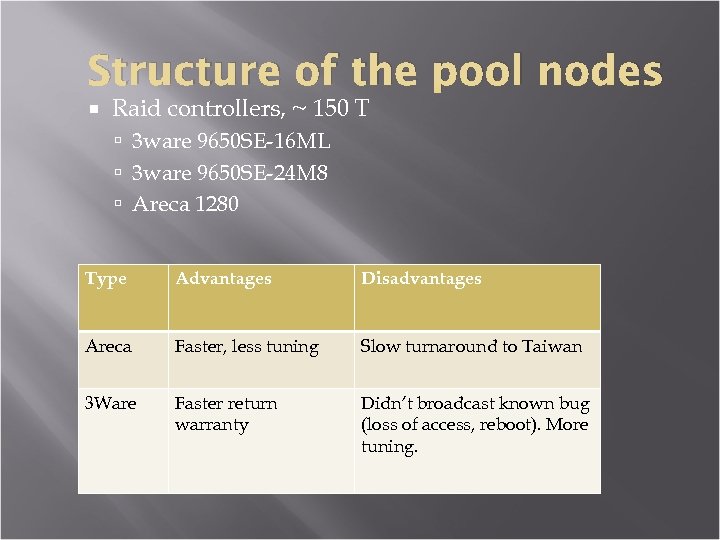

Structure of the pool nodes: RAID controllers, ~150 TB

- 3ware 9650SE-16ML
- 3ware 9650SE-24M8
- Areca 1280

| Type | Advantages | Disadvantages |
|---|---|---|
| Areca | Faster, less tuning | Slow turnaround (returns to Taiwan) |
| 3ware | Faster warranty returns | Didn’t publicize a known bug (loss of access, reboot); more tuning |

The network

- 1 Gbps Intel NICs, 4 per system: two on the motherboard plus 2 extra.
- Each system has 2 network presences:
  - One on the HEP network, routable to the Internet (138.253…), using 1 NIC.
  - Another for internal traffic (192.168.178…). This link is busy, so it uses 3 NICs bonded (1 MAC) to a Force 10 switch (LACP). Bonding is useful when there are 100s of connections, since a single connection can’t be split across two NICs. The internal network is isolated and local to the grid, which allows jumbo frames.
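
Why bonding only pays off with many connections can be seen from how a bond picks a link. It hashes each flow's addresses and ports, so every packet of one connection takes the same NIC. The Python sketch below uses a simplified hash in the spirit of the Linux bonding driver's layer3+4 policy. Real kernels mix the bits differently, the slide does not say which policy was used, and the Force 10 switch hashes return traffic on its own (not modelled). The addresses are hypothetical, and port 5001 is assumed to be the RFIO server port.

```python
import ipaddress
from collections import Counter


def layer34_link(src_ip, src_port, dst_ip, dst_port, n_links):
    """Pick one bonded link for a TCP flow from its addresses and ports (simplified hash)."""
    sip = int(ipaddress.IPv4Address(src_ip))
    dip = int(ipaddress.IPv4Address(dst_ip))
    return ((src_port ^ dst_port) ^ ((sip ^ dip) & 0xFFFF)) % n_links


# Hypothetical internal addresses; 5001 is an assumed RFIO server port.
SERVER, PORT, LINKS = "10.0.0.20", 5001, 3

# One connection: every packet hashes to the same link, so a single
# transfer is capped at one NIC's 1 Gbps.
one_flow = {layer34_link("10.0.0.5", 40000, SERVER, PORT, LINKS) for _ in range(10)}

# Hundreds of connections: distinct source ports spread over all three links.
many = Counter(layer34_link("10.0.0.5", p, SERVER, PORT, LINKS) for p in range(40000, 40300))
print(len(one_flow), sorted(many))  # 1 [0, 1, 2]
```

The design point matches the slide: three bonded NICs add capacity only in aggregate, which suits pool nodes serving hundreds of simultaneous rfio/gridftp connections.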

Each system has a software firewall to stop unwanted traffic (no ssh!). It allows incoming rfio and gridftp, plus srm (head node only).
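
The firewall policy can be written down as a small per-role allow list. The Python sketch below assumes a default-deny stance, which the slide implies but does not state. The role names are hypothetical. It models services by name rather than by port, because the slide gives no port numbers.

```python
# Hypothetical role names; per the slide, srm is open only on the head node.
ALLOWED_INBOUND = {
    "head": {"rfio", "gridftp", "srm"},
    "pool": {"rfio", "gridftp"},
}


def inbound_allowed(role: str, service: str) -> bool:
    """Default-deny: only services listed for the node's role are accepted (no ssh)."""
    return service in ALLOWED_INBOUND.get(role, set())


print(inbound_allowed("head", "srm"), inbound_allowed("pool", "srm"), inbound_allowed("pool", "ssh"))
# True False False
```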

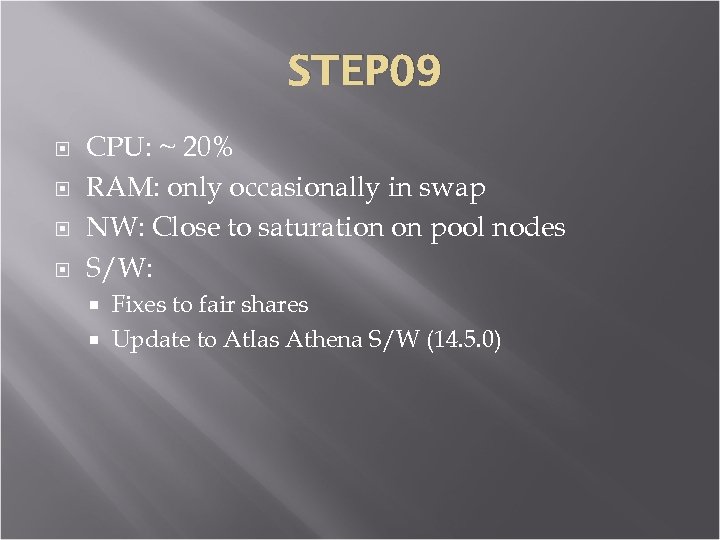

STEP 09

- CPU: ~20%
- RAM: only occasionally in swap
- NW: close to saturation on pool nodes
- S/W: fixes to fair shares; update to Atlas Athena S/W (14.5.0)

STEP 09 problems: RFIO buffer sizes

- We started with 128 MB RFIO buffers, to load plenty of data per read.
- Many connections to the pool nodes exhausted RAM, which slowed things down. With no RAM left for cache, the disks got hammered.
- Fix: reduce the buffer size to 64 MB.
- Note: big buffers help CPU efficiency, but the strategy fails if RAM is exhausted and the node is forced into swap, or when bandwidth is saturated. The right size depends on the number of jobs/connections.
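
The RAM exhaustion follows from simple arithmetic: buffer memory grows as connections x buffer size. The Python sketch below assumes each open connection holds one full buffer in the RAM of a 16 GB pool node. It ignores the OS and the page cache, which the slide notes was squeezed. The count of 200 connections is a hypothetical example, not a STEP 09 measurement.

```python
def buffers_that_fit(ram_gb, buffer_mb, reserved_gb=0):
    """How many per-connection buffers fit in RAM before the node must swap."""
    return (ram_gb - reserved_gb) * 1024 // buffer_mb


def ram_needed_gb(connections, buffer_mb):
    """RAM taken by buffers alone for a given number of open connections."""
    return connections * buffer_mb / 1024


# 16 GB matches the pool-node spec; reserved_gb=0 leaves nothing for OS or cache.
print(buffers_that_fit(16, 128), buffers_that_fit(16, 64))  # 128 256
print(ram_needed_gb(200, 128), ram_needed_gb(200, 64))      # 25.0 12.5
```

Halving the buffer to 64 MB doubles the number of connections a node can hold in RAM. The cost is more, smaller reads, which is the CPU-efficiency trade-off noted above.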

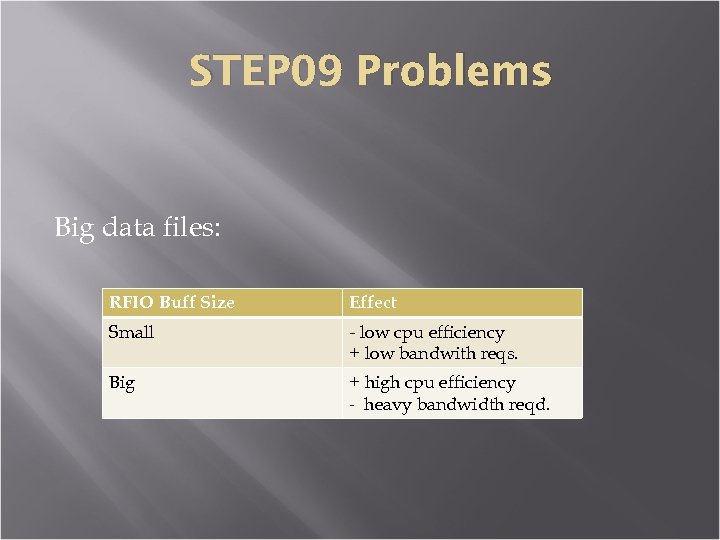

STEP 09 problems: big data files

| RFIO buffer size | Effect |
|---|---|
| Small | - low CPU efficiency; + low bandwidth requirements |
| Big | + high CPU efficiency; - heavy bandwidth required |

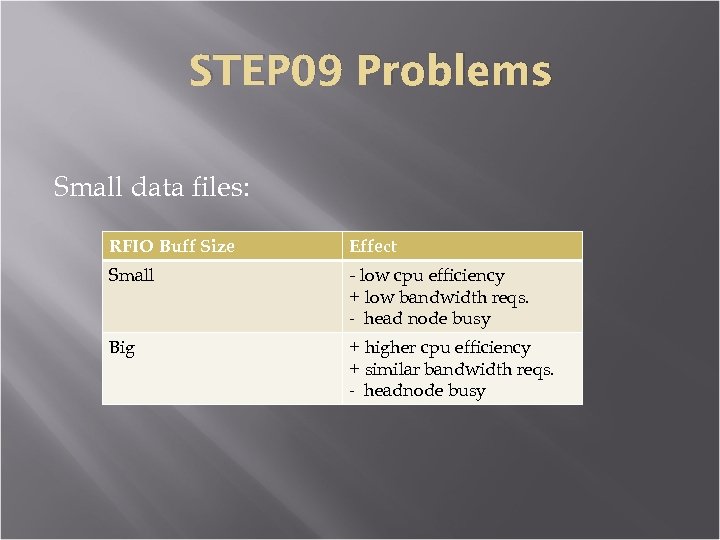

STEP 09 problems: small data files

| RFIO buffer size | Effect |
|---|---|
| Small | - low CPU efficiency; + low bandwidth requirements; - head node busy |
| Big | + higher CPU efficiency; + similar bandwidth requirements; - head node busy |

STEP 09: We are ready for real data, we hope, as long as things remain as they are… Improvements will continue, e.g. four more pool nodes of similar spec.

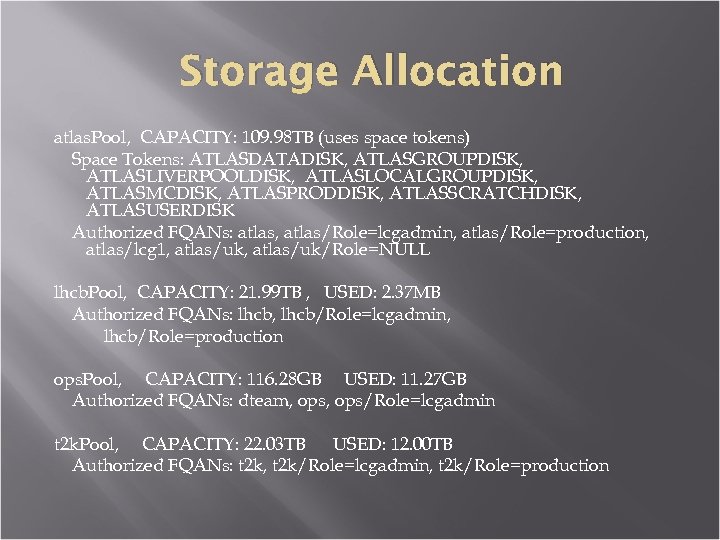

Storage Allocation

| Pool | Capacity | Used | Authorized FQANs |
|---|---|---|---|
| atlasPool | 109.98 TB (uses space tokens) | not shown | atlas, atlas/Role=lcgadmin, atlas/Role=production, atlas/lcg1, atlas/uk/Role=NULL |
| lhcbPool | 21.99 TB | 2.37 MB | lhcb, lhcb/Role=lcgadmin, lhcb/Role=production |
| opsPool | 116.28 GB | 11.27 GB | dteam, ops/Role=lcgadmin |
| t2kPool | 22.03 TB | 12.00 TB | t2k, t2k/Role=lcgadmin, t2k/Role=production |

Space tokens (atlasPool): ATLASDATADISK, ATLASGROUPDISK, ATLASLIVERPOOLDISK, ATLASLOCALGROUPDISK, ATLASMCDISK, ATLASPRODDISK, ATLASSCRATCHDISK, ATLASUSERDISK.
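
The allocation figures can be cross-checked with a few lines of Python, which compute per-pool usage and the total across pools. The sketch assumes the listed units share a 1024 base (1 TB = 1024 GB, 1 GB = 1024 MB); the slide does not say which base is used. Percentages within a pool do not depend on that choice.

```python
# Capacities and usage from the slide, in GB; the 1024 unit base is an assumption.
TB, MB = 1024.0, 1 / 1024.0

pools = {
    "atlasPool": (109.98 * TB, None),   # usage not shown on the slide
    "lhcbPool": (21.99 * TB, 2.37 * MB),
    "opsPool": (116.28, 11.27),
    "t2kPool": (22.03 * TB, 12.00 * TB),
}


def percent_used(capacity_gb, used_gb):
    """Percentage of a pool in use, or None when usage is unknown."""
    return None if used_gb is None else round(100 * used_gb / capacity_gb, 1)


for name, (cap, used) in pools.items():
    print(name, percent_used(cap, used))

total_tb = sum(cap for cap, _ in pools.values()) / TB
print(round(total_tb, 1))  # 154.1
```

With these figures t2kPool is about 54.5% full and opsPool about 9.7%, while lhcbPool is essentially empty. The pools total roughly 154 TB, in line with the ~150 TB quoted for the RAID controllers.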

Our questions: Atlas data access is (apparently) random, which enormously reduces CPU efficiency by hammering the cache. Must this be so? Could it be sorted into some sequence?
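
Why sequential reads matter can be shown with a toy model: an LRU block cache that reads ahead a few blocks on every miss. The Python sketch below is not a model of RFIO or the Linux page cache. The file size, cache size and read-ahead depth are hypothetical. It simply compares the same number of reads issued in order and in random order.

```python
import random
from collections import OrderedDict


def hit_ratio(accesses, n_blocks, cache_blocks, readahead):
    """Fraction of block reads served from an LRU cache that reads ahead on a miss."""
    cache = OrderedDict()
    hits = 0
    for block in accesses:
        if block in cache:
            hits += 1
            cache.move_to_end(block)  # mark as most recently used
            continue
        # Miss: fetch this block and the following ones, as read-ahead would.
        for b in range(block, min(block + readahead, n_blocks)):
            cache[b] = True
            cache.move_to_end(b)
            if len(cache) > cache_blocks:
                cache.popitem(last=False)  # evict the least recently used block
    return hits / len(accesses)


N, CACHE, AHEAD = 1000, 64, 8  # hypothetical sizes, in blocks
sequential = list(range(N))
rng = random.Random(0)
scattered = [rng.randrange(N) for _ in range(N)]

print(hit_ratio(sequential, N, CACHE, AHEAD))  # 0.875
print(hit_ratio(scattered, N, CACHE, AHEAD))   # much lower than the sequential case
```

In order, every read-ahead block is used, so only one read in eight misses. In random order, most read-ahead data is evicted unused. That is the effect the question asks about sorting away.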

Thanks to Rob and John at L’pool. I hope it helps.