b377e2a8d31d0f457fad05decf42cfa2.ppt

- Number of slides: 16

Status of the GRID in the Czech Republic
NEC'2007, 11 September 2007
Milos Lokajicek, Institute of Physics AS CR, Prague

Outline
• CZ pre-grid activities
• Grid status
  – Networking
  – Distributed Tier 2 center and its performance
• Conclusions

CZ pre-grid activities
• Synergy of the Czech pre-grid activities in the 1990s
  – Particle physics
  – MetaCentrum Project
  – CESNET
• Particle physics active collaboration
  – DELPHI experiment at LEP at CERN
  – H1 experiment at HERA at DESY
  – Several smaller experiments
  – Collaboration groups
    • Institutes of the Academy of Sciences of the Czech Republic
    • Charles University in Prague
    • Czech Technical University in Prague

CZ pre-grid activities
• MetaCentrum Project
  – Collaboration of the supercomputing centers of the Czech universities
  – Creates a single computing environment for academic users
  – Single user login, same user interfaces and application interfaces independent of HW or OS
  – Single queuing system
  – OpenAFS, Kerberized system, same authentication credentials
  – Originally Masaryk University in Brno, West Bohemia University in Plzen and Charles University in Prague
  – Now a project of CESNET with contributions from the universities
  – Resources at 4 sites
    • 1000 CPUs, mainly IA-32/64 architecture
    • Tens of TB of disk space, tape archives in Plzen and Brno
  – Partner to the international EGEE and EGI_DS (European Grid Initiative Design Study) projects and the national MediGRID project

CZ pre-grid activities
• CESNET, the Czech scientific research network provider
• 1992: first IP line to Austria
• 1993: country-wide backbone network connecting 8 cities
• Member/stakeholder of all important European network activities
• Today's legal structure: association of legal bodies created by the Academy of Sciences of the Czech Republic and Czech universities
• Connects all Czech universities with a high-bandwidth network
• Current charge of CESNET
  – Research network, novel applications over the network, grid activities

Grid activities
• Preparation of the LHC project
  – MONARC project at CERN, widely distributed hierarchical model
  – CHEP conference in Padova, 2000
    • Reached agreement in the HEP community on grid
  – EDG (European DataGRID) from 2001
    • And other parallel grid projects
    • Similar situation in the USA
  – Czech Republic (CESNET and Institute of Physics AS CR) took part from the beginning
    • Testbed and HEP application, Logging and Bookkeeping of the workload management package
  – EGEE, EGEEII, … EGEEIII, EGI Design Study, CESNET as partner
    • Preparation of a local NGI (National Grid Initiative), a tough task
  – LCG, WLCG: participation via the Institute of Physics AS CR
  – Distributed TIER 2 center in Prague

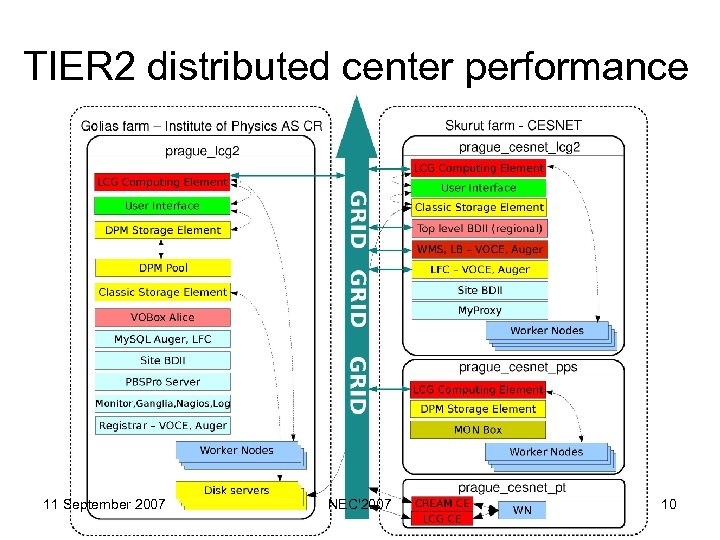

Grid Status
• Distributed TIER 2 center in Prague
  – Golias farm, Institute of Physics AS CR
  – Skurut farm, CESNET
• EGEEII
  – Pre/Production services
  – Reengineering: logging and bookkeeping, job provenance
  – VOCE, the Central Europe Federation VO
    • Proposed by CESNET
    • Running its main services
    • All-purpose research VO for CE
  – AUGER VO support

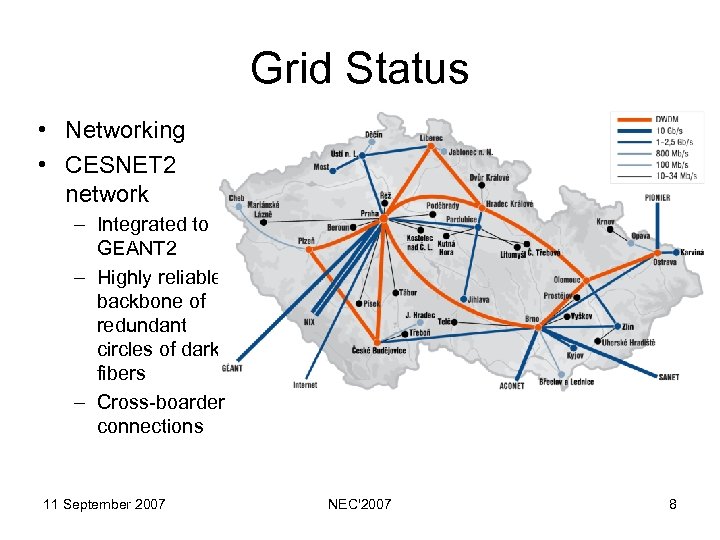

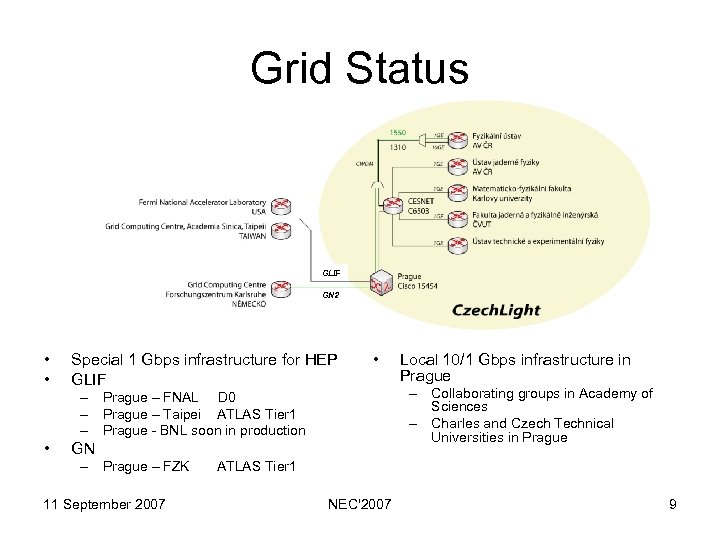

Grid Status
• Networking
• CESNET2 network
  – Integrated into GEANT2
  – Highly reliable backbone of redundant rings of dark fibers
  – Cross-border connections

Grid Status
• Special 1 Gbps infrastructure for HEP
  – GLIF
    • Prague – FNAL (D0)
    • Prague – Taipei (ATLAS Tier 1)
    • Prague – BNL, soon in production
  – GN2
    • Prague – FZK
• Local 10/1 Gbps infrastructure in Prague
  – Collaborating groups in the Academy of Sciences
  – Charles University and Czech Technical University in Prague
Diagram labels: GLIF, GN2, ATLAS Tier 1

TIER 2 distributed center performance

TIER 2 distributed center performance
• End 2007 capacities

| Farm | Computing capacity (kSI2k) | Number of cores | Disk capacity (TB) |
|---|---|---|---|
| GOLIAS | 670 | 458 | 60 |
| SKURUT | 30 | 38 | Shared from Golias |

• Main usage
  – D0 experiment simulations and reconstruction: 50%
  – ATLAS, ALICE, STAR, VOCE, Auger computing, solid state physics
  – Support for VOs: VOCE, Auger
• Preproduction and Preview testbeds

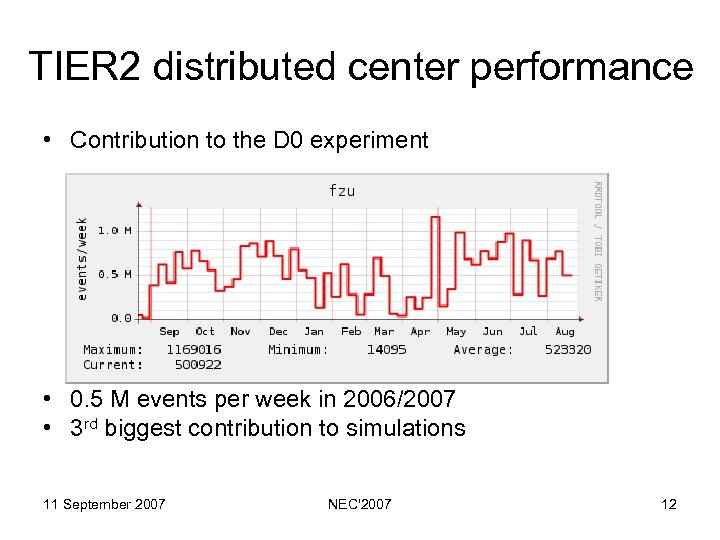

TIER 2 distributed center performance
• Contribution to the D0 experiment
• 0.5 M events per week in 2006/2007
• 3rd biggest contribution to simulations

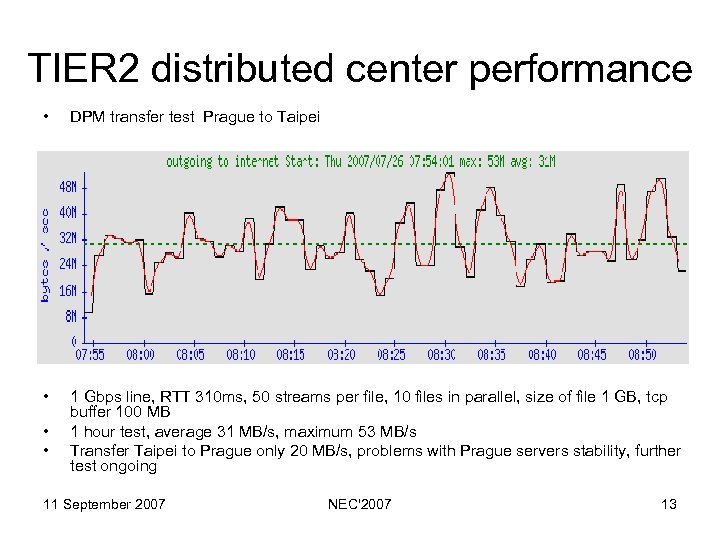

TIER 2 distributed center performance
• DPM transfer test, Prague to Taipei
• 1 Gbps line, RTT 310 ms, 50 streams per file, 10 files in parallel, file size 1 GB, TCP buffer 100 MB
• 1-hour test: average 31 MB/s, maximum 53 MB/s
• Transfer Taipei to Prague only 20 MB/s; problems with the stability of the Prague servers, further tests ongoing
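As a rough aid to reading these figures, the sketch below computes the bandwidth-delay product of the Prague to Taipei path and the share of the nominal line rate that the measured rates represent. The function names are illustrative only, and the conversion 1 MB = 10^6 bytes is an assumption; the slide does not say which convention was used.

```python
def bandwidth_delay_product_mb(line_gbps: float, rtt_ms: float) -> float:
    """Data that must be in flight to fill the line, in MB.

    Assumes 1 MB = 10**6 bytes (not stated on the slide).
    """
    bits_in_flight = line_gbps * 1e9 * (rtt_ms / 1000.0)
    return bits_in_flight / 8 / 1e6


def line_utilization(rate_mb_s: float, line_gbps: float) -> float:
    """Fraction of the nominal line rate used by a transfer at rate_mb_s."""
    return rate_mb_s * 8e6 / (line_gbps * 1e9)


if __name__ == "__main__":
    # Figures quoted on the slide: 1 Gbps line, RTT 310 ms.
    bdp = bandwidth_delay_product_mb(line_gbps=1.0, rtt_ms=310.0)
    print(f"Bandwidth-delay product: {bdp:.2f} MB")

    # Measured rates quoted on the slide, in MB/s.
    for label, rate in [("average", 31), ("maximum", 53), ("Taipei to Prague", 20)]:
        print(f"{label}: {line_utilization(rate, 1.0):.1%} of the nominal line rate")
```

Under these assumptions the product comes to a little under 39 MB, which is below the 100 MB TCP buffer quoted in the test, and the 31 MB/s average corresponds to roughly a quarter of the nominal 1 Gbps.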

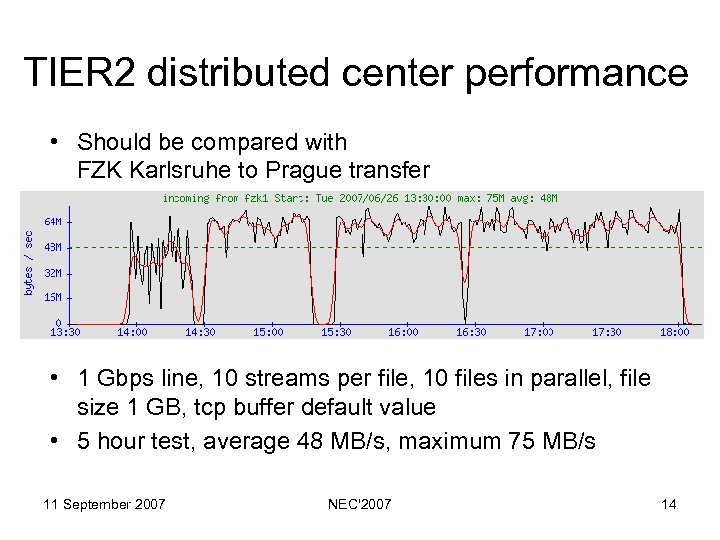

TIER 2 distributed center performance
• Should be compared with the FZK Karlsruhe to Prague transfer
• 1 Gbps line, 10 streams per file, 10 files in parallel, file size 1 GB, TCP buffer at default value
• 5-hour test: average 48 MB/s, maximum 75 MB/s
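To put the two tests side by side, the following sketch estimates the data volume each test moved and the ratio of the average rates. It assumes that each quoted average holds over the full test duration and that 1 GB = 1000 MB; the class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TransferTest:
    route: str
    hours: float           # test duration quoted on the slides
    avg_mb_s: float        # average rate quoted on the slides
    max_mb_s: float        # maximum rate quoted on the slides
    streams_per_file: int

    def volume_gb(self) -> float:
        """Estimated volume moved, assuming the average holds for the whole test."""
        return self.avg_mb_s * self.hours * 3600 / 1000  # 1 GB = 1000 MB (assumption)


TAIPEI = TransferTest("Prague -> Taipei", hours=1, avg_mb_s=31, max_mb_s=53, streams_per_file=50)
FZK = TransferTest("FZK Karlsruhe -> Prague", hours=5, avg_mb_s=48, max_mb_s=75, streams_per_file=10)

if __name__ == "__main__":
    for test in (TAIPEI, FZK):
        print(f"{test.route}: about {test.volume_gb():.1f} GB moved, "
              f"{test.streams_per_file} streams per file")
    print(f"Ratio of average rates (FZK / Taipei): {FZK.avg_mb_s / TAIPEI.avg_mb_s:.2f}")
```

The comparison is only indicative: the two tests differ in duration, stream count and TCP buffer settings, and the FZK test does not quote an RTT.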

TIER 2 distributed center performance
• Monitoring: key for reliable service
  – Both standard grid monitoring and local HW, SW and services monitoring
• Local monitoring (talk by Tomas Kouba)
  – Nagios, with various plugins and workarounds
  – Interface for dynamic configuration of Nagios according to our HW and SW database
  – Reduction of Nagios warnings
    • Critical Nagios warnings: distributed immediately
    • Other warnings: summarized in a purpose-built digest and distributed only 3 times per day
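The warning-reduction policy above can be sketched as a small router: critical alerts go out at once, everything else is held back for a digest. This is not the site's actual implementation and uses no Nagios API. The `Alert` and `AlertRouter` names, the severity strings and the `send` callback are all hypothetical, and the three daily flushes are assumed to be triggered by an external scheduler.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Alert:
    host: str
    service: str
    severity: str  # "CRITICAL" or any other level, e.g. "WARNING" (hypothetical labels)
    message: str


@dataclass
class AlertRouter:
    # send(subject, body) delivers one notification; supplied by the caller.
    send: Callable[[str, str], None]
    pending: List[Alert] = field(default_factory=list)

    def handle(self, alert: Alert) -> None:
        """Forward critical alerts immediately; queue all others for the digest."""
        if alert.severity == "CRITICAL":
            self.send(f"CRITICAL {alert.host}/{alert.service}", alert.message)
        else:
            self.pending.append(alert)

    def flush_digest(self) -> None:
        """Send one summary of the queued alerts; meant to be called 3 times per day."""
        if not self.pending:
            return  # nothing queued, so no digest is sent
        lines = [f"{a.severity} {a.host}/{a.service}: {a.message}" for a in self.pending]
        self.send(f"Digest: {len(lines)} warnings", "\n".join(lines))
        self.pending.clear()


if __name__ == "__main__":
    router = AlertRouter(send=lambda subject, body: print(subject, body, sep="\n", end="\n\n"))
    router.handle(Alert("node01", "disk", "WARNING", "disk usage high"))
    router.handle(Alert("node02", "batch", "CRITICAL", "batch server not responding"))
    router.handle(Alert("node03", "load", "WARNING", "load above threshold"))
    router.flush_digest()
```

Keeping delivery behind a callback leaves the choice of channel (mail, pager, chat) outside the routing logic, so the digest schedule can change without touching the classification.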

Conclusions
• Czech Republic: partner of EU grid projects since 2001
• Activities in both grid deployment and reengineering
• Medium-sized distributed TIER 2 center fully integrated into the EGEE/LCG environment
  – Delivery of highly visible computing services to experiments and projects
  – Data transfer services over special lines
  – Support of the VOs VOCE and Auger
  – Active contribution to reengineering: logging and bookkeeping and, recently, job provenance
• All this is supported by the excellent quality of the CESNET2 network and special E2E lines, both local and international
• Taking part in the preparation of the EGEEIII and EGI projects
• Big effort will be needed to create the Czech NGI
