- Number of slides: 57

Statistics Quality Management in Korea
Kyungsoon Choi, Statistics Quality Management Division

Quality Is a Lousy Idea, If It’s Only an Idea

CONTENTS
1. Introduction
2. Statistics Quality Management in Korea
3. Rotational Assessment Program
4. Self Assessment Program
5. Conclusion

1. Introduction
- Who are we?
- Definitions

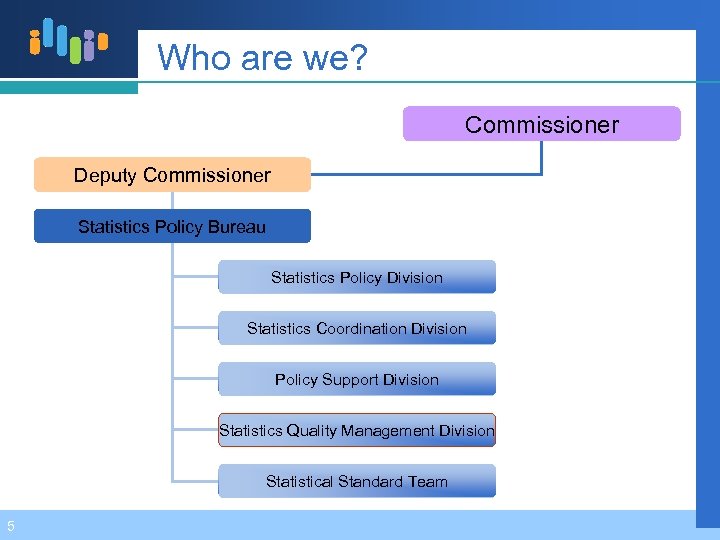

Who are we?
- Commissioner
  - Deputy Commissioner
    - Statistics Policy Bureau
      - Statistics Policy Division
      - Statistics Coordination Division
      - Policy Support Division
      - Statistics Quality Management Division
      - Statistical Standard Team

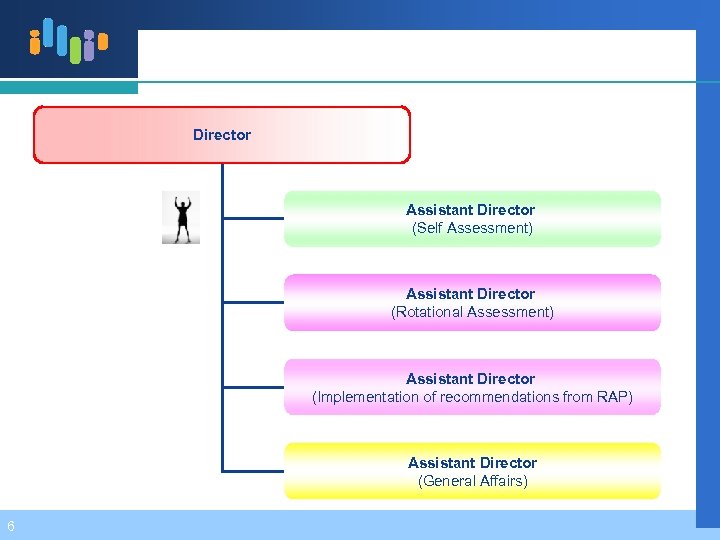

- Director
  - Assistant Director (Self Assessment)
  - Assistant Director (Rotational Assessment)
  - Assistant Director (Implementation of recommendations from the RAP)
  - Assistant Director (General Affairs)

Definitions
- Statistics?
- Quality?
- Statistics Quality Management?

Statistics?
- Numerical information produced by statistical institutes for formulating and evaluating policies and for analyzing economic and/or social phenomena (Statistics Act)
- Information for making the right decision under uncertainty

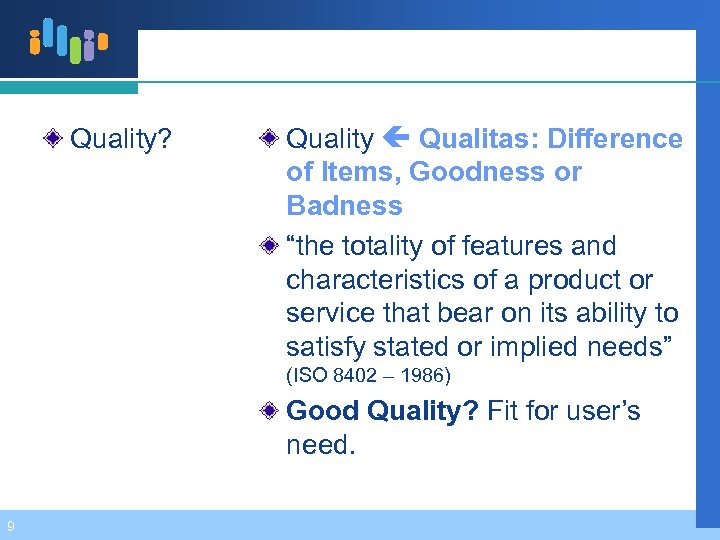

Quality?
- Quality, from the Latin qualitas: the difference between items; their goodness or badness
- “The totality of features and characteristics of a product or service that bear on its ability to satisfy stated or implied needs” (ISO 8402, 1986)
- Good quality? Fitness for the user’s needs.

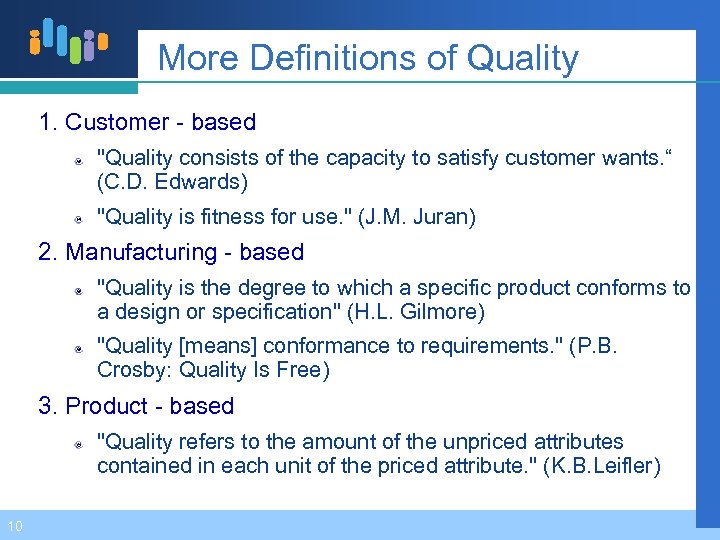

More Definitions of Quality
1. Customer-based
   - "Quality consists of the capacity to satisfy customer wants." (C. D. Edwards)
   - "Quality is fitness for use." (J. M. Juran)
2. Manufacturing-based
   - "Quality is the degree to which a specific product conforms to a design or specification." (H. L. Gilmore)
   - "Quality [means] conformance to requirements." (P. B. Crosby, Quality Is Free)
3. Product-based
   - "Quality refers to the amount of the unpriced attributes contained in each unit of the priced attribute." (K. B. Leffler)

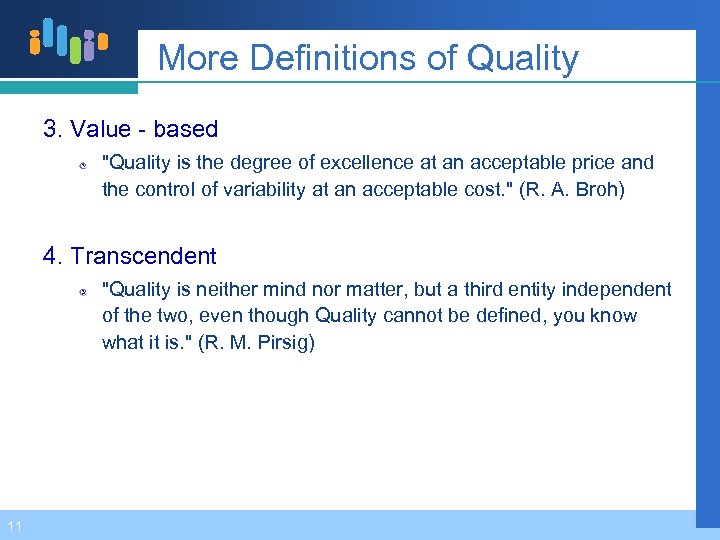

More Definitions of Quality
4. Value-based
   - "Quality is the degree of excellence at an acceptable price and the control of variability at an acceptable cost." (R. A. Broh)
5. Transcendent
   - "Quality is neither mind nor matter, but a third entity independent of the two, even though Quality cannot be defined, you know what it is." (R. M. Pirsig)

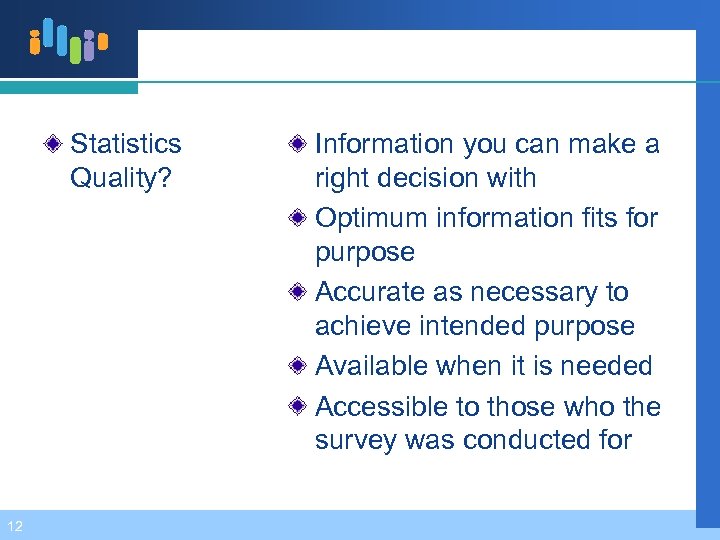

Statistics Quality?
- Information you can make the right decision with
- Optimum information that is fit for purpose:
  - as accurate as necessary to achieve the intended purpose
  - available when it is needed
  - accessible to those for whom the survey was conducted

Management?
- The act of getting people together to accomplish desired goals efficiently

Quality Management?
- The act of getting people together to accomplish the desired quality efficiently
- A set of coordinated activities to direct and control an organization in order to continually improve the effectiveness and efficiency of its performance

Statistics Quality Management?
- The act of getting people together to accomplish the desired quality of statistics efficiently
- Activities to ensure continuous improvement in the quality of statistics

2. Statistics Quality Management in Korea
- Purpose of the SQM
- History of the SQM in Korea
- Definition and dimensions of statistics quality
- Quality Assessment Program

Purpose of the SQM
- To produce accurate output
- To ensure fitness for use
- To support continuous quality improvement

History of the SQM in Korea
- IMF crisis (1997)
- A sector in the planning division (1999)
  - Introduction of the quality assessment system

- A team under the Commissioner (2002)
  - 3-year plan (2002-2005) to review 53 KNSO (Korea National Statistical Office) statistics
- A division in the Statistics Policy Bureau (2005)
  - 3-year plan (2006-2008) to review 457 approved statistics: 107 in 2006, 180 in 2007, and 170 in 2008
  - Extended to a 5-year plan running until 2010 because the number of approved statistics increased

Definition and Dimensions
- Fitness for use
- A multi-dimensional concept, defined with reference to six dimensions:
  1. Relevance
  2. Accuracy
  3. Timeliness and punctuality
  4. Accessibility and clarity
  5. Comparability
  6. Coherence

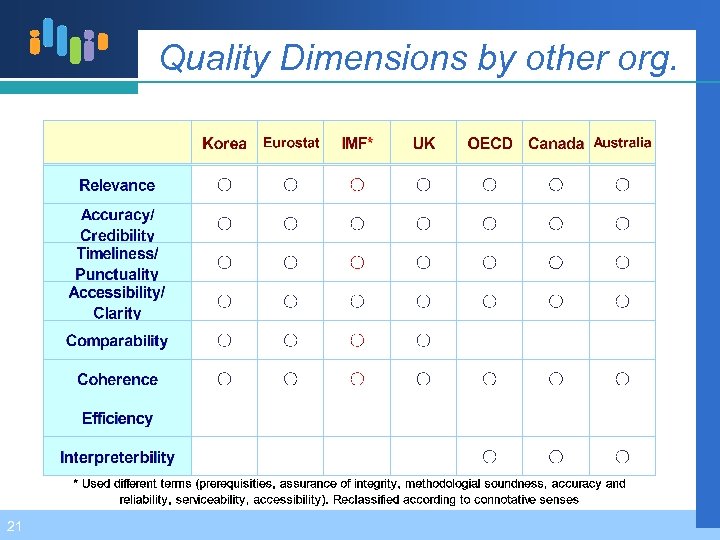

Quality Dimensions Used by Other Organizations

Six Dimensions
1. Relevance: the value contributed by the data to users; whether the data meet user needs
2. Accuracy: the degree to which the data correctly estimate the ‘true’ value
3. Timeliness/Punctuality: the length of time between the event and the availability of the data / adherence to the publication schedule

Six Dimensions
4. Accessibility/Clarity: suitability of the media form / the information environment, availability of metadata
5. Comparability: over time and space (whether the statistics follow national and/or international standards)
6. Coherence: within and across datasets (whether the data can be successfully combined with other statistical information)

1. Relevance
- The degree to which the statistics meet current and potential users’ needs
  - Whether all the statistics needed are produced
  - The extent to which the concepts used reflect user needs

How to Measure Relevance (1)
- Describe the extent to which the statistics are useful to, and used by, the broadest array of users:
  - Compile information about the users (who they are, how many there are, how important each one is)
  - Compile information on their needs
  - Assess how far these needs are met
- → Overall evaluation of the level of relevance of the statistical product and the main reasons for any lack of relevance

How to Measure Relevance (2)
- User consultation
- Auxiliary means: publication sales, number of questions received, complaints, etc.
- Assessment of completeness
  - Rate of non-available statistics = number of non-available statistics / number of statistics needed
  - A usefulness-weighted ratio of non-availability
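
The completeness measures above reduce to simple ratios. A minimal sketch, assuming the list of needed statistics and their usefulness weights come from a user consultation; the statistic names and weights are hypothetical, and the weighted form (total weight of missing statistics divided by total weight of all needed statistics) is one plausible reading of the usefulness-weighted ratio rather than a definition taken from the source.

```python
# Completeness measures for relevance (a sketch; names and weights are hypothetical).

def non_availability_rate(needed: list[str], available: set[str]) -> float:
    """Rate of non-available statistics = # not available / # needed."""
    missing = [s for s in needed if s not in available]
    return len(missing) / len(needed)


def weighted_non_availability_rate(usefulness: dict[str, float], available: set[str]) -> float:
    """Assumed weighted variant: each needed statistic counts with its usefulness weight."""
    total = sum(usefulness.values())
    missing = sum(w for s, w in usefulness.items() if s not in available)
    return missing / total


# Hypothetical user needs, with usefulness weights from a user consultation.
usefulness = {
    "consumer prices": 5.0,
    "regional GDP": 3.0,
    "household debt by age": 1.0,
    "monthly job vacancies": 1.0,
}
available = {"consumer prices", "regional GDP"}

print(non_availability_rate(list(usefulness), available))     # 0.5
print(weighted_non_availability_rate(usefulness, available))  # 0.2
```

Half of the needed statistics are missing here, but because they carry little assumed usefulness the weighted rate is only 0.2; a missing high-usefulness statistic would push the weighted rate above the unweighted one.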

2. Accuracy
- Closeness between the estimated value and the true value
- Variability and bias
  - Variability: changes from trial to trial due to random effects
  - Bias: distance from the true value due to systematic effects
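
The split of accuracy into variability and bias can be made concrete with repeated estimates of a known true value. A minimal sketch, assuming hypothetical trial estimates and using the standard mean squared error (MSE) decomposition, MSE = bias² + variance, as the summary of overall accuracy:

```python
import statistics


def accuracy_components(estimates: list[float], true_value: float) -> dict[str, float]:
    """Split the error of repeated estimates into bias, variance and mean squared error."""
    mean_estimate = statistics.fmean(estimates)
    bias = mean_estimate - true_value                              # systematic effect
    variance = statistics.pvariance(estimates, mu=mean_estimate)   # trial-to-trial random effect
    mse = statistics.fmean((e - true_value) ** 2 for e in estimates)
    return {"bias": bias, "variance": variance, "mse": mse}


# Hypothetical estimates of the same quantity from four repeated trials.
result = accuracy_components([9.0, 11.0, 10.0, 12.0], true_value=10.0)
print(result)  # {'bias': 0.5, 'variance': 1.25, 'mse': 1.5}
# MSE = bias**2 + variance: 0.25 + 1.25 = 1.5
```

A precise but inaccurate estimator shows up as a small variance with a large bias; both terms feed into the MSE.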

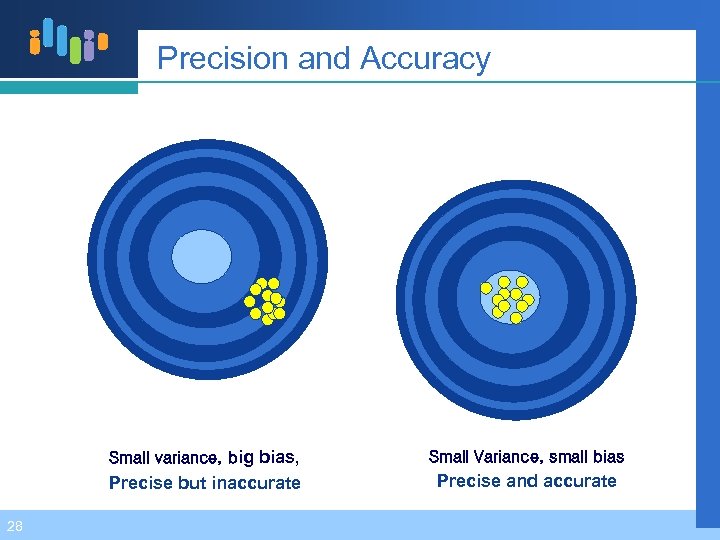

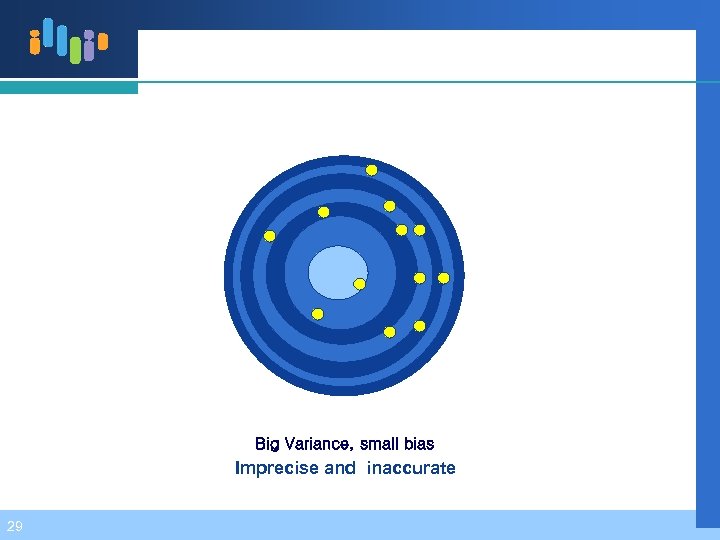

Precision and Accuracy
- Small variance, big bias: precise but inaccurate
- Small variance, small bias: precise and accurate

- Big variance, small bias: imprecise and inaccurate

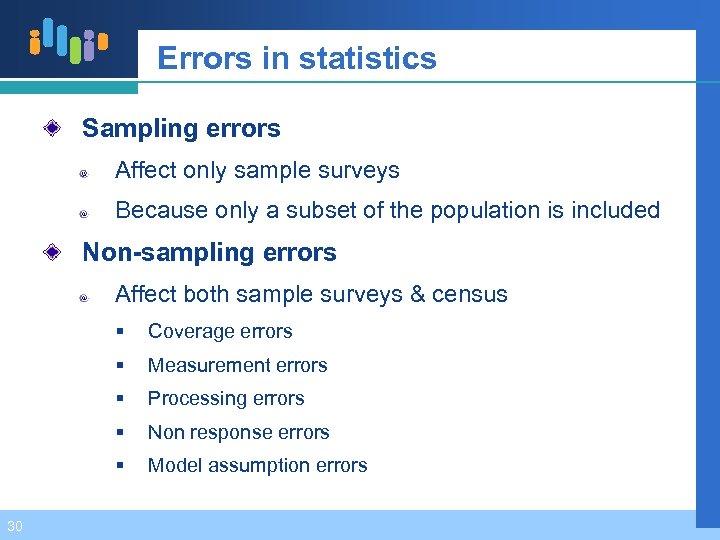

Errors in Statistics
- Sampling errors: affect only sample surveys, because only a subset of the population is included
- Non-sampling errors: affect both sample surveys and censuses
  - Coverage errors
  - Measurement errors
  - Processing errors
  - Non-response errors
  - Model assumption errors
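
For a sample survey, sampling error is usually summarized by the standard error of an estimate. A minimal sketch, assuming simple random sampling without replacement and hypothetical data; surveys with stratified or clustered designs need design-based variance estimators instead. The finite population correction (1 - n/N) falls to zero when the whole population is enumerated, which is why a census has no sampling error, although the non-sampling errors listed above still apply.

```python
import math
import statistics


def srs_standard_error(sample: list[float], population_size: int) -> float:
    """Standard error of the sample mean under simple random sampling without replacement.

    SE = sqrt((1 - n/N) * s^2 / n), where s^2 is the sample variance.
    """
    n = len(sample)
    if n > population_size:
        raise ValueError("sample cannot be larger than the population")
    fpc = 1 - n / population_size      # finite population correction
    s2 = statistics.variance(sample)   # sample variance (n - 1 denominator)
    return math.sqrt(fpc * s2 / n)


values = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]      # hypothetical survey responses
print(srs_standard_error(values, population_size=80))  # 10% sample: about 0.717
print(srs_standard_error(values, population_size=8))   # full enumeration ("census"): 0.0
```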

3. Timeliness & Punctuality
- Timeliness = date the output becomes available - date the event or phenomenon occurred
- Punctuality = actual release date - target date
  - Target date: the date announced in the official release calendar, set by regulation, or agreed in advance
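
Both measures are date differences. A minimal sketch using Python's `datetime.date`, assuming the end of the reference period is taken as the date of the event; the dates are hypothetical:

```python
from datetime import date


def timeliness_days(event_date: date, available_date: date) -> int:
    """Timeliness: date the output is available minus date of the event."""
    return (available_date - event_date).days


def punctuality_days(release_date: date, target_date: date) -> int:
    """Punctuality: actual release date minus target date (> 0 late, < 0 early)."""
    return (release_date - target_date).days


# Hypothetical monthly release; the reference month ends 31 March 2008.
reference_end = date(2008, 3, 31)
target = date(2008, 4, 28)     # date announced in the release calendar
released = date(2008, 4, 30)   # date the figures actually came out

print(timeliness_days(reference_end, released))  # 30
print(punctuality_days(released, target))        # 2 (two days late)
```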

How to Measure Timeliness & Punctuality
- Timeliness
  - Average production time
  - Maximum production time (the worst recorded case)
- Punctuality & timeliness are related to frequency
  - Production time / periodicity
  - Actual production time / standard (if one is set)
  - Actual production time - target time
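
The indicators above can be computed from a record of past releases. A minimal sketch, assuming production time is measured in days from the event to the release; the release history, the 30-day approximation of a monthly periodicity, and the standard and target values are all hypothetical, and dividing the average (rather than each release) by the periodicity or standard is one of several reasonable choices.

```python
import statistics
from typing import Optional


def timeliness_indicators(
    production_days: list[int],
    periodicity_days: int,
    standard_days: Optional[int] = None,
    target_days: Optional[int] = None,
) -> dict:
    """Summarise production times (days from event to release) over past releases."""
    average = statistics.fmean(production_days)
    result = {
        "average": average,
        "maximum": max(production_days),  # worst recorded case
        "average_over_periodicity": average / periodicity_days,
    }
    if standard_days is not None:
        result["average_over_standard"] = average / standard_days
    if target_days is not None:
        # Positive values mean the release took longer than targeted.
        result["minus_target"] = [d - target_days for d in production_days]
    return result


# Hypothetical monthly survey (periodicity approximated as 30 days), last four releases.
indicators = timeliness_indicators([28, 30, 35, 29], periodicity_days=30,
                                   standard_days=32, target_days=30)
print(indicators["average"], indicators["maximum"])  # 30.5 35
print(indicators["minus_target"])                    # [-2, 0, 5, -1]
```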

4. Accessibility & Clarity
- Outputs that are simple and easy for users to access:
  - simple & user-friendly
  - in an expected form
  - within an acceptable time period
- With the appropriate user information & assistance, allowing optimum use of the outputs

Accessibility
- Physical conditions under which users can access outputs:
  - distribution channels
  - ordering procedures
  - time required for delivery
  - pricing policy
  - marketing conditions (copyright, etc.)
  - availability of micro or macro data
  - media (paper, CD-ROM, Internet, etc.)

Clarity
- Appropriate metadata provided:
  - textual information, explanations, documentation, etc.
  - graphs, maps, and other illustrations
  - information on data quality
  - assistance offered
- Measuring clarity is more difficult
  - Examine qualitatively whether the metadata provided are complete
  - Requires information from both producers and users
    - Producers: a description of the accompanying information
    - Users: the adequacy and appropriateness of that information for future use

5. Comparability
- The extent to which differences between statistics can be attributed to differences between the true values of the statistical characteristics
- In practice, it aims to measure the impact of differences in other aspects (concepts, definitions, classifications, etc.) on the comparison of statistics between geographical areas, between non-geographical domains, or over time

What Affects Comparability
- Concepts
  - Statistical characteristics
  - Statistical measure (indicator)
  - Statistical unit
  - Target population
  - Frame population
  - Reference period & frequency
  - Geographical coverage (for comparability over time)
  - Standards, classifications
  - Definitions
- Measurement (methodology)
  - Sample design
  - Data collection
  - Data processing
  - Estimation

Geographical Comparability
- The degree of comparability between similar surveys in different geographical entities
- What to report:
  - Descriptions of all concepts and methods that can affect comparability
  - Difference scores, comparison matrices, multidimensional scaling maps, and scoring methods to quantify metadata differences (for comparisons between regions)
  - Comments on the discrepancies that appear in mirror statistics
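
One way to build the difference scores and comparison matrix mentioned above is to count metadata mismatches between each pair of regions. A minimal sketch, assuming equal weights for every attribute and hypothetical regions, attributes, and values; a real scoring method would more likely weight attributes by their expected impact on comparability.

```python
from itertools import combinations

# Hypothetical metadata for the "same" survey run in three regions.
metadata = {
    "Region A": {"unit": "household", "frequency": "monthly", "classification": "rev. 9", "mode": "interview"},
    "Region B": {"unit": "household", "frequency": "monthly", "classification": "rev. 8", "mode": "interview"},
    "Region C": {"unit": "person", "frequency": "quarterly", "classification": "rev. 9", "mode": "web"},
}


def difference_score(m1: dict, m2: dict) -> float:
    """Share of metadata attributes on which two surveys differ (0 = identical, 1 = all differ)."""
    keys = m1.keys() | m2.keys()
    return sum(m1.get(k) != m2.get(k) for k in keys) / len(keys)


def comparison_matrix(meta: dict) -> dict:
    """Symmetric matrix of pairwise difference scores; the diagonal is 0."""
    regions = list(meta)
    matrix = {r: {s: 0.0 for s in regions} for r in regions}
    for r, s in combinations(regions, 2):
        matrix[r][s] = matrix[s][r] = difference_score(meta[r], meta[s])
    return matrix


matrix = comparison_matrix(metadata)
print(matrix["Region A"]["Region B"])  # 0.25
print(matrix["Region A"]["Region C"])  # 0.75
print(matrix["Region B"]["Region C"])  # 1.0
```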

Comparability over Time
- The degree of comparability among repeated surveys
- What to report:
  - The reference period of the survey in which the break occurred
    - Whether the reported difference results from a one-off policy or from a policy adopted for the future
  - A description of the differences in concepts and methods of measurement before and after the break
    - Changes in classification, statistical methodology, statistical population, methods of data manipulation, etc.
  - The magnitude of the effect of the changes, in quantitative terms
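
To express the magnitude of a break quantitatively, one common approach (not described in the source) is to run the old and new methods in parallel for a few periods and link the series by the average ratio between them. A minimal sketch, assuming the change causes a multiplicative level shift; the function names and index values are hypothetical.

```python
import statistics


def break_factor(old_overlap: list[float], new_overlap: list[float]) -> float:
    """Average new/old ratio over the periods measured with both methods."""
    return statistics.fmean(n / o for o, n in zip(old_overlap, new_overlap))


def link_old_series(old_only: list[float], factor: float) -> list[float]:
    """Rescale pre-break values to the level of the new method (ratio linking)."""
    return [v * factor for v in old_only]


# Hypothetical index values: both methods were run in parallel for two periods.
old_overlap = [100.0, 102.0]
new_overlap = [104.0, 106.08]

factor = break_factor(old_overlap, new_overlap)
print(f"level effect of the change: {(factor - 1) * 100:.1f}%")     # 4.0%
print([round(v, 2) for v in link_old_series([95.0, 98.0], factor)])  # [98.8, 101.92]
```

If the shift is additive rather than proportional, the same idea works with average differences instead of ratios.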

6. Coherence
- The extent to which statistics originating from other sources are compatible with the data produced, and how well they can be used together
- It is easier to show cases of incoherence than to prove coherence

How to Measure Coherence
- By comparison with National Accounts (NA) or Balance of Payments (BoP) statistics
  - The methodology (primary data sources and adjustments, divergences in the concepts used) should be described to advise users
- By comparison between provisional and final statistics
  - Normally based on the same concepts and methods, but provisional statistics are produced with less information and processing time
- By comparison among statistics with different frequencies
  - Compare estimates of annual averages, totals, or annual growth rates
- By comparison with other statistics in the same socioeconomic domain
  - Different types of statistics measure the same phenomenon, but with different approaches
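
Two of these comparisons lend themselves to simple indicators: revisions between provisional and final figures, and the gap between the annual figure implied by higher-frequency data and the annual statistic itself. A minimal sketch; the function names, the sum-versus-mean rule, and the data are illustrative assumptions.

```python
import statistics


def revision_summary(provisional: list[float], final: list[float]) -> dict[str, float]:
    """Mean revision (direction of systematic change) and mean absolute revision (size)."""
    revisions = [f - p for p, f in zip(provisional, final)]
    return {
        "mean_revision": statistics.fmean(revisions),
        "mean_absolute_revision": statistics.fmean(abs(r) for r in revisions),
    }


def annual_discrepancy(quarterly: list[float], annual: float, how: str = "sum") -> float:
    """Annual figure implied by quarterly data minus the separately compiled annual figure.

    Use how="sum" for flows (totals) and how="mean" for stocks or rates (annual averages).
    """
    if how == "sum":
        implied = sum(quarterly)
    elif how == "mean":
        implied = statistics.fmean(quarterly)
    else:
        raise ValueError("how must be 'sum' or 'mean'")
    return implied - annual


# Hypothetical quarterly growth rates (%): provisional vs final releases.
summary = revision_summary([2.1, 1.8, 2.5], [2.3, 1.7, 2.5])
# Hypothetical quarterly totals vs the separately compiled annual total.
gap = annual_discrepancy([250.0, 260.0, 270.0, 280.0], annual=1065.0)
print(summary)
print(gap)  # -5.0
```

A mean revision far from zero suggests the provisional figures are systematically biased; the mean absolute revision shows how large revisions typically are, regardless of direction.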

Coherence & Comparability
- Basis for deciding whether two sets of statistics are coherent or comparable
  - Coherence
    - Based on inconsistencies between the actual data
    - Compares statistics based on the same or largely similar populations
  - Comparability
    - Based on metadata
    - Compares statistics based on (usually) unrelated populations

Quality Assessment Program
- Background
  - High-quality statistics are one of the essential infrastructures of national governance.
  - Distorted statistics lead to distorted policies.
  - An information-oriented society starts from accurate statistics.
  - It has become necessary to build a statistical database.

Legal Basis: Statistics Act (2007)
- All approved statistics are subject to quality assessment
  - Rotational Assessment Program (RAP): every 5 years, by experts (clause 9)
  - Ad-hoc Assessment Program (AAP): when reasonable doubts arise about the quality of certain statistics, by experts (clause 10)
  - Self Assessment Program (SAP): every year, on all statistics except those being reviewed under the rotational or ad-hoc assessment (clause 11)
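
The scheduling rule can be expressed as a small function. A minimal sketch, assuming a single statistic on a fixed five-year rotation and that an ad-hoc assessment takes precedence in a year when doubts arise; both the precedence rule and the function `assessment_program` are hypothetical, not taken from the Statistics Act.

```python
def assessment_program(year: int, first_rap_year: int, doubt_raised: bool = False) -> str:
    """Which assessment one approved statistic receives in a given year (hypothetical rule).

    - AAP when reasonable doubts about its quality have been raised (clause 10)
    - RAP once every 5 years on a rolling cycle (clause 9)
    - SAP in every other year (clause 11)
    """
    if doubt_raised:
        return "AAP"
    if (year - first_rap_year) % 5 == 0:
        return "RAP"
    return "SAP"


# A statistic first reviewed under the RAP in 2006 (hypothetical).
print([assessment_program(y, 2006) for y in range(2006, 2012)])
# ['RAP', 'SAP', 'SAP', 'SAP', 'SAP', 'RAP']
print(assessment_program(2008, 2006, doubt_raised=True))  # AAP
```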

3. Rotational Assessment Program
- What is the RAP?
- Cycle of the RAP
- Procedure of the RAP

What is the RAP?
- A thorough quality assessment of all approved statistics
- By external experts
  - The assessment team of external experts is recruited through open competition
- Over a five-year rolling period
- Using effective quality assessment tools (ISO 9001 certified)

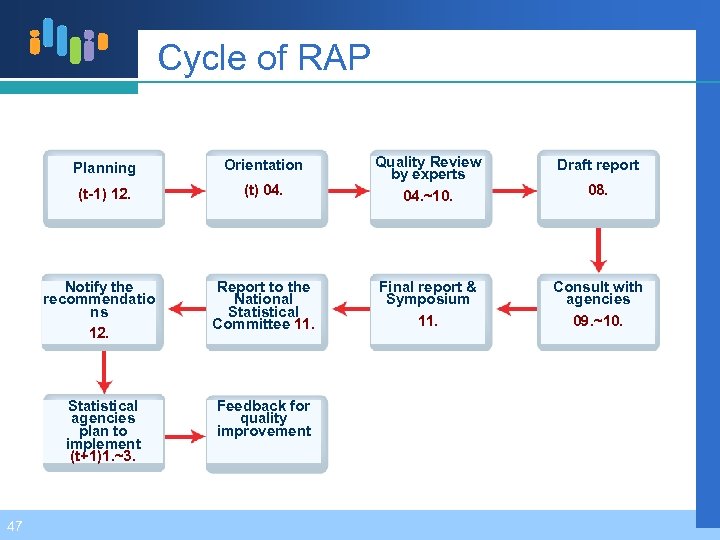

Cycle of the RAP
- Planning (year t-1): Dec.
- Draft report (year t): Apr.
- Quality review by experts: Apr.-Oct.
- Orientation: Aug.
- Consult with agencies: Sep.-Oct.
- Final report & symposium: Nov.
- Report to the National Statistical Committee: Nov.
- Notify the recommendations: Dec.
- Statistical agencies plan to implement (year t+1): Jan.-Mar.
- Feedback for quality improvement

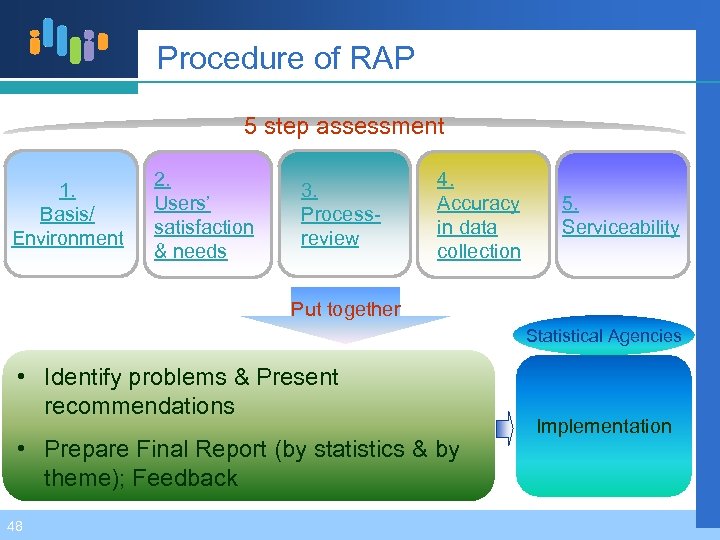

Procedure of the RAP
- 5-step assessment:
  1. Basis/environment
  2. Users’ satisfaction & needs
  3. Process review
  4. Accuracy in data collection
  5. Serviceability
- Put together:
  - Identify problems & present recommendations
  - Prepare the final report (by statistics & by theme)
- Feedback to statistical agencies → implementation

4. Self Assessment Program
- What is the SAP?
- Objectives of the SAP
- Structure of the SAP

What is the SAP?
- A self-assessment of all approved statistics
- By survey managers
- On an annual basis
- Using a checklist derived from DESAP (the Eurostat self-assessment checklist for survey managers)

Objectives of the SAP
- Help statistical agencies understand the importance of statistics and review the quality of their own products
- Gradually shift emphasis to the SAP, saving the administrative cost of carrying out a large-scale RAP every year

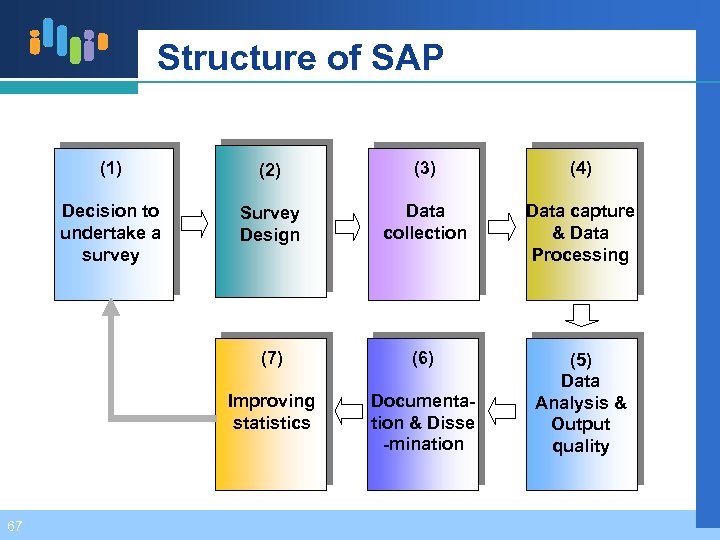

Structure of the SAP
(1) Decision to undertake a survey
(2) Survey design
(3) Data collection
(4) Data capture & data processing
(5) Data analysis & output quality
(6) Documentation & dissemination
(7) Improving statistics

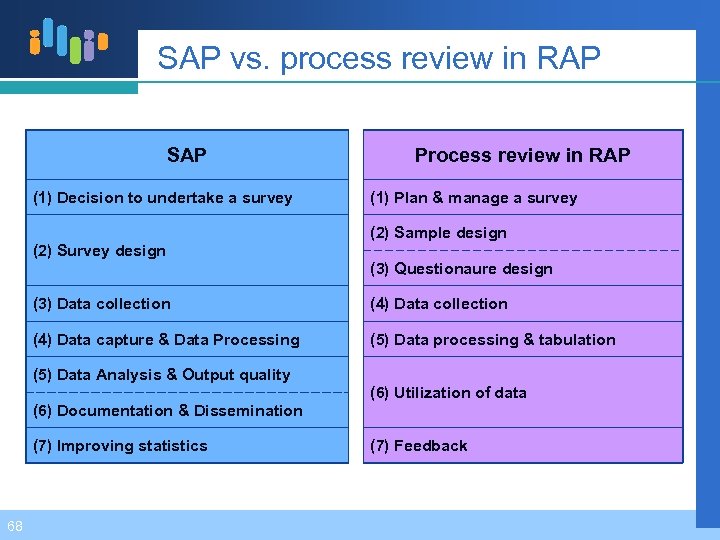

SAP vs. Process Review in RAP
- SAP: (1) Decision to undertake a survey; (2) Survey design; (3) Data collection; (4) Data capture & data processing; (5) Data analysis & output quality; (6) Documentation & dissemination; (7) Improving statistics
- Process review in RAP: (1) Plan & manage a survey; (2) Sample design; (3) Questionnaire design; (5) Data processing & tabulation; (6) Utilization of data; (7) Feedback

5. Conclusion
- Lessons learned
- Future plans

Lessons Learned
- See the wood first, but don’t miss the trees either
  - Draw a big picture at the planning stage
- Let the external experts HELP you
- Utilize the self assessment program (combine it with the external assessment program)

Future Plans
- To categorize the approved statistics
  - Define the scope of national and/or official statistics
  - Differentiate the intensity of assessment
- To improve the assessment tools
- To draw up a new strategy for better quality management
  - Embed a Quality Assurance Framework in the statistics production procedure
