b0e45a1467c3711cd8f135ec291748c0.ppt

- Number of slides: 39

Statistics New Zealand’s Case Study: "Creating a New Business Model for a National Statistical Office of the 21st Century"
Craig Mitchell, Gary Dunnet, Matjaz Jug

Overview
• Introduction: organization, programme, strategy
• The Statistical Metadata Systems and the Statistical Cycle: description of the metainformation systems, overview of the process model, description of different metadata groups
• Statistical Metadata in each phase of the Statistical Cycle: metadata produced & used
• Systems and Design issues: IT architecture, tools, standards
• Organizational and cultural issues: user groups
• Lessons learned

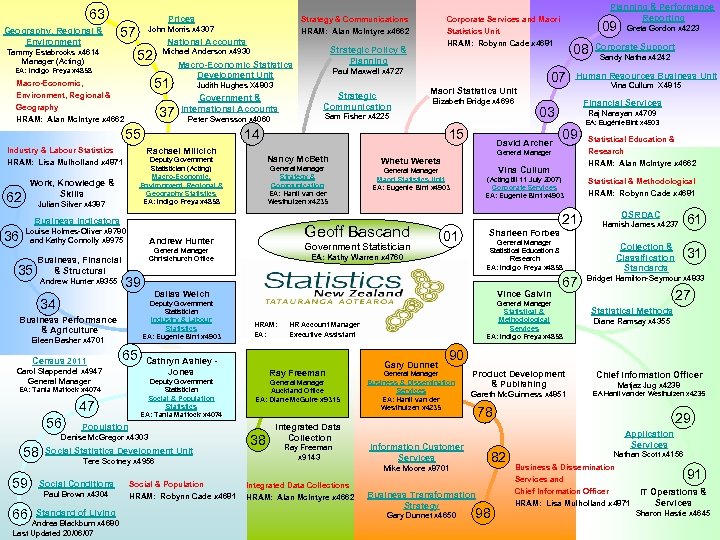

Organisation chart: Statistics New Zealand business units, general managers and executive assistants (last updated 20/06/07), including the Business Transformation Strategy unit (Gary Dunnet, Matjaz Jug).

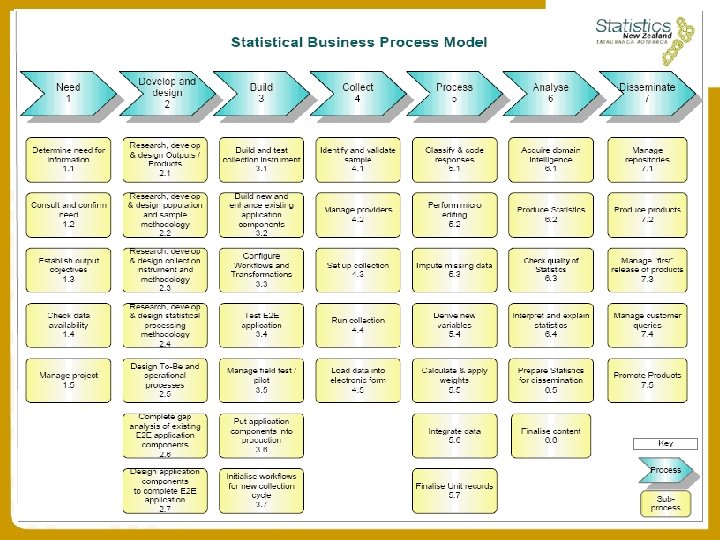

Business Model Transformation Strategy
1. A number of standard, generic end-to-end processes for the collection, analysis and dissemination of statistical data and information
  – including statistical methods
  – covering the business process life-cycle
  – to enable statisticians to focus on data quality and implement best-practice methods, with greater coordination and effective resource utilisation
2. A disciplined approach to data and metadata management, using a standard information life-cycle
3. An agreed enterprise-wide technical architecture

BmTS & Metadata
The Business Model Transformation Strategy (BmTS) is designing a metadata management strategy that ensures metadata:
• fits into a metadata framework that can adequately describe all of Statistics New Zealand's data and, under the Official Statistics Strategy (OSS), the data of other agencies
• documents all the stages of the statistical life cycle, from conception to archiving and destruction
• is centrally accessible
• is automatically populated during the business process, wherever possible
• is used to drive the business process
• is easily accessible by all potential users
• is populated and maintained by data creators
• is managed centrally

A - Existing Metadata Issues
• metadata is not kept up to date
• metadata maintenance is considered a low priority
• metadata is not held in a consistent way
• relevant information is unavailable
• there is confusion about what metadata needs to be stored
• the existing metadata infrastructure is under-utilised
• the metadata needs of advanced data users are not being met
• it is difficult to find information unless you have some expertise or already know it exists
• classifications and terminology are used inconsistently
• in some instances there is little information about data: where it came from, the processes it has undergone, or even the question to which it relates

B - Target Metadata Principles
• metadata is centrally accessible
• metadata structure should be strongly linked to data
• metadata is shared between data sets
• content structure conforms to standards
• metadata is managed end-to-end in the data life cycle
• there is a registration process (workflow) associated with each metadata element
• capture metadata at source, automatically
• ensure the cost to producers is justified by the benefit to users
• metadata is considered active
• metadata is managed at as high a level as possible
• metadata is readily available and usable in the context of clients' information needs (internal or external)
• track the use of some types of metadata (e.g. classifications)
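
The principles call for a registration process (workflow) for each metadata element but do not name its states. As a minimal sketch, assuming hypothetical states (draft, submitted, registered, retired) and hypothetical allowed transitions, such a workflow can be enforced as a small state machine that also keeps an audit trail:

```python
from enum import Enum


class Status(Enum):
    # Hypothetical registration states; the source does not name them.
    DRAFT = "draft"
    SUBMITTED = "submitted"
    REGISTERED = "registered"
    RETIRED = "retired"


# Allowed transitions between states (an assumption for illustration).
TRANSITIONS = {
    Status.DRAFT: {Status.SUBMITTED},
    Status.SUBMITTED: {Status.DRAFT, Status.REGISTERED},
    Status.REGISTERED: {Status.RETIRED},
    Status.RETIRED: set(),
}


class MetadataElement:
    def __init__(self, name: str) -> None:
        self.name = name
        self.status = Status.DRAFT
        self.history = [Status.DRAFT]  # audit trail of every state reached

    def move_to(self, new_status: Status) -> None:
        """Change state, rejecting transitions the workflow does not allow."""
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(
                f"{self.name}: cannot move from {self.status.value} to {new_status.value}"
            )
        self.status = new_status
        self.history.append(new_status)


element = MetadataElement("Age of person")
element.move_to(Status.SUBMITTED)
element.move_to(Status.REGISTERED)
```

Keeping the transition table as data, rather than in `if` statements, means the workflow itself can be held and changed as metadata.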

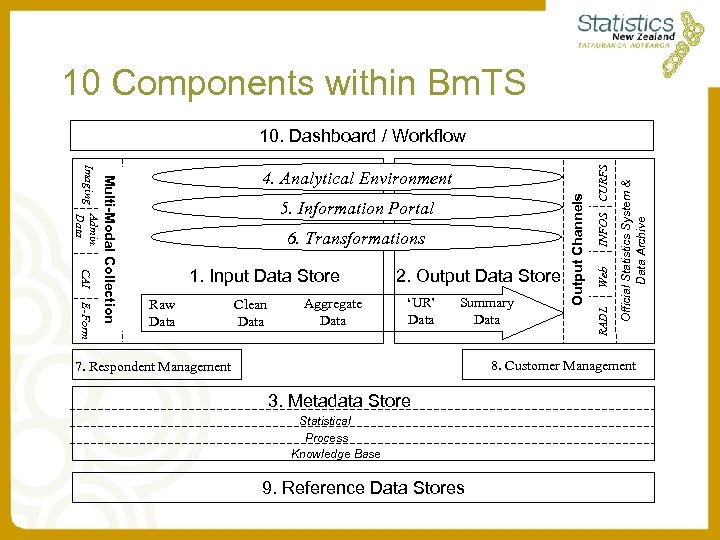

How do we get from A to B?
1. Identified the 10 key components of our information model.
2. Service Oriented Architecture.
3. Developed a Generic Business Process Model.
4. Changed the development approach from 'stove-pipes' to 'components' and 'core' teams.
5. Governance: Architectural Reviews & a Staged Funding Model.
6. Re-use of components.

10 Components within BmTS
1. Input Data Store
2. Output Data Store
3. Metadata Store
4. Analytical Environment
5. Information Portal
6. Transformations
7. Respondent Management
8. Customer Management
9. Reference Data Stores
10. Dashboard / Workflow
Other diagram labels: E-Form, Web, CAI, Imaging, Admin. Data, Multi-Modal Collection, Raw Data, Clean Data, Aggregate Data, 'UR' Data, Summary Data, Official Statistics System & Data Archive, RADL, INFOS, CURFS, Output Channels, Statistical Process Knowledge Base.

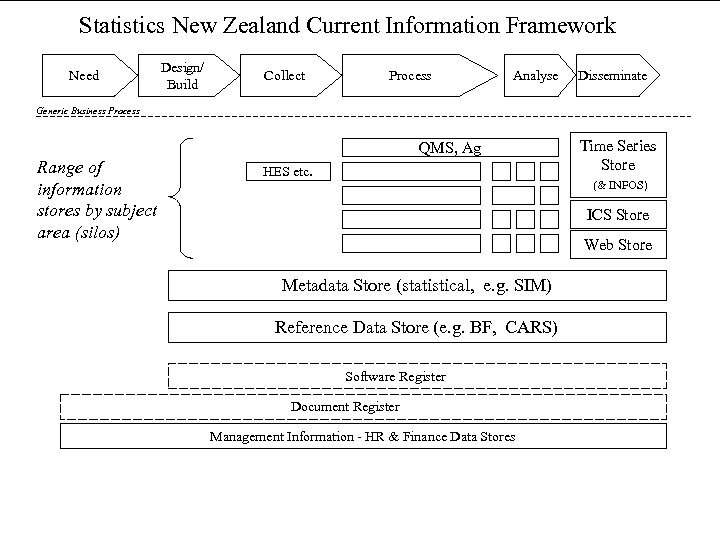

Statistics New Zealand Current Information Framework
• Generic Business Process: Need, Design/Build, Collect, Process, Analyse, Disseminate
• Range of information stores by subject area (silos): QMS, Ag, HES etc.
• Time Series Store (& INFOS)
• ICS Store
• Web Store
• Metadata Store (statistical, e.g. SIM)
• Reference Data Store (e.g. BF, CARS)
• Software Register
• Document Register
• Management Information: HR & Finance Data Stores

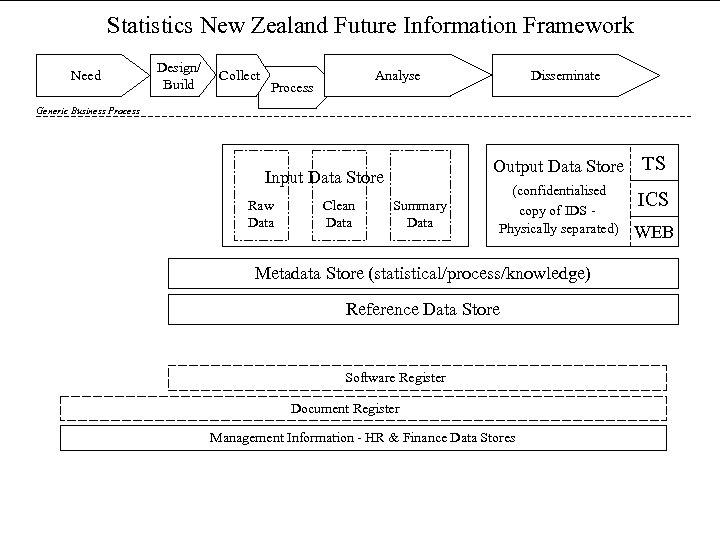

Statistics New Zealand Future Information Framework
• Generic Business Process: Need, Design/Build, Collect, Process, Analyse, Disseminate
• Input Data Store
• Raw Data, Clean Data, Summary Data
• Output Data Store
• TS (confidentialised copy of IDS, physically separated)
• ICS
• WEB
• Metadata Store (statistical/process/knowledge)
• Reference Data Store
• Software Register
• Document Register
• Management Information: HR & Finance Data Stores

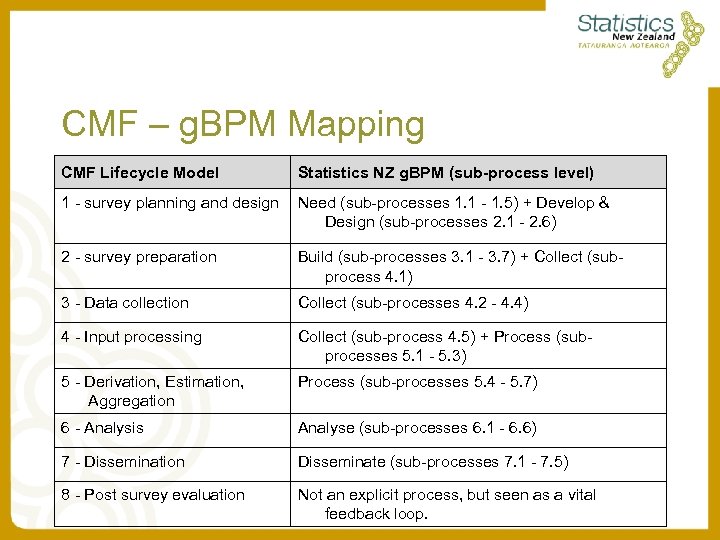

CMF – gBPM Mapping

| CMF Lifecycle Model | Statistics NZ gBPM (sub-process level) |
|---|---|
| 1 - Survey planning and design | Need (sub-processes 1.1 - 1.5) + Develop & Design (sub-processes 2.1 - 2.6) |
| 2 - Survey preparation | Build (sub-processes 3.1 - 3.7) + Collect (sub-process 4.1) |
| 3 - Data collection | Collect (sub-processes 4.2 - 4.4) |
| 4 - Input processing | Collect (sub-process 4.5) + Process (sub-processes 5.1 - 5.3) |
| 5 - Derivation, estimation, aggregation | Process (sub-processes 5.4 - 5.7) |
| 6 - Analysis | Analyse (sub-processes 6.1 - 6.6) |
| 7 - Dissemination | Disseminate (sub-processes 7.1 - 7.5) |
| 8 - Post-survey evaluation | Not an explicit process, but seen as a vital feedback loop |
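
The CMF-to-gBPM mapping is essentially a lookup from ranges of gBPM sub-processes to CMF lifecycle phases. A minimal sketch that encodes it as data, assuming sub-process codes are written as "major.minor" strings such as "4.5"; the names `CMF_TO_GBPM` and `cmf_phase_for` are illustrative, not part of any Statistics NZ system:

```python
from typing import Optional

SubProcess = tuple[int, int]  # (major, minor), e.g. (4, 5) for sub-process 4.5

# CMF phase -> (phase name, inclusive ranges of gBPM sub-processes)
CMF_TO_GBPM: dict[int, tuple[str, list[tuple[SubProcess, SubProcess]]]] = {
    1: ("Survey planning and design", [((1, 1), (1, 5)), ((2, 1), (2, 6))]),
    2: ("Survey preparation", [((3, 1), (3, 7)), ((4, 1), (4, 1))]),
    3: ("Data collection", [((4, 2), (4, 4))]),
    4: ("Input processing", [((4, 5), (4, 5)), ((5, 1), (5, 3))]),
    5: ("Derivation, estimation, aggregation", [((5, 4), (5, 7))]),
    6: ("Analysis", [((6, 1), (6, 6))]),
    7: ("Dissemination", [((7, 1), (7, 5))]),
    # Post-survey evaluation is a feedback loop with no explicit gBPM sub-processes.
    8: ("Post-survey evaluation", []),
}


def parse_subprocess(code: str) -> SubProcess:
    """Turn a code such as '4.5' into the tuple (4, 5)."""
    major, minor = code.split(".")
    return int(major), int(minor)


def cmf_phase_for(code: str) -> Optional[int]:
    """Return the CMF phase covering a gBPM sub-process, or None if none does."""
    target = parse_subprocess(code)
    for phase, (_name, ranges) in CMF_TO_GBPM.items():
        for first, last in ranges:
            # Tuples compare element by element, so (4, 2) <= (4, 3) <= (4, 4).
            if first <= target <= last:
                return phase
    return None
```

Parsing codes into integer tuples, rather than floats, keeps a code such as "5.10" from being confused with "5.1".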

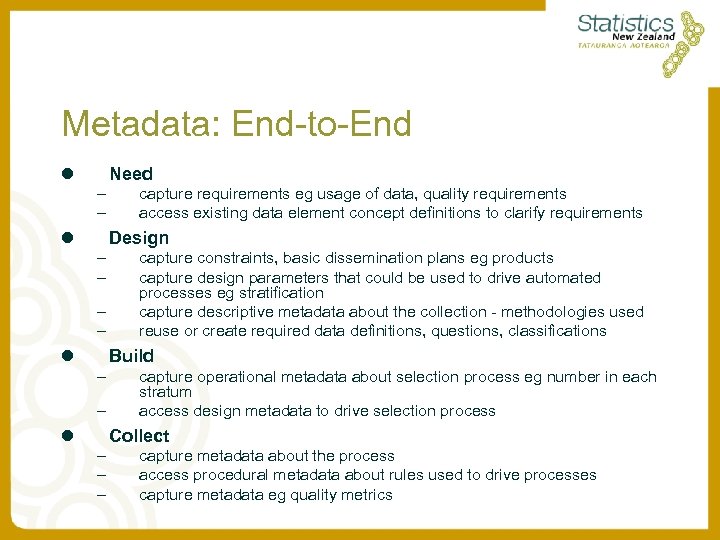

Metadata: End-to-End
• Need
  – capture requirements, e.g. usage of data, quality requirements
  – access existing data element concept definitions to clarify requirements
• Design
  – capture constraints and basic dissemination plans, e.g. products
  – capture design parameters that could be used to drive automated processes, e.g. stratification
  – capture descriptive metadata about the collection: methodologies used
  – reuse or create required data definitions, questions and classifications
• Build
  – capture operational metadata about the selection process, e.g. number in each stratum
  – access design metadata to drive the selection process
• Collect
  – capture metadata about the process
  – access procedural metadata about rules used to drive processes
  – capture metadata, e.g. quality metrics
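
In Design and Build, design parameters such as stratification drive the selection process, and operational metadata such as the number in each stratum is captured as a by-product. A minimal sketch, assuming a hypothetical frame of (unit id, stratum) pairs and simple random sampling within each stratum; `select_sample` is an illustrative name:

```python
import random
from collections import defaultdict


def select_sample(frame, design, seed=0):
    """Draw a stratified simple random sample driven by design metadata.

    frame:  list of (unit_id, stratum) pairs (hypothetical frame layout)
    design: dict mapping stratum -> number of units to select (design metadata)
    Returns the selected unit ids and operational metadata about the run.
    """
    rng = random.Random(seed)
    units_by_stratum = defaultdict(list)
    for unit_id, stratum in frame:
        units_by_stratum[stratum].append(unit_id)

    selected, operational = [], {}
    for stratum, wanted in design.items():
        units = units_by_stratum.get(stratum, [])
        take = min(wanted, len(units))  # cannot select more units than exist
        selected.extend(rng.sample(units, take))
        # Operational metadata captured as a by-product, e.g. number in each stratum.
        operational[stratum] = {"population": len(units), "selected": take}
    return selected, operational


frame = [(f"A{i}", "A") for i in range(10)] + [(f"B{i}", "B") for i in range(3)]
design = {"A": 4, "B": 5}
selected, operational = select_sample(frame, design, seed=1)
```

The selection logic contains no survey-specific numbers: changing the design metadata changes the sample without touching the code.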

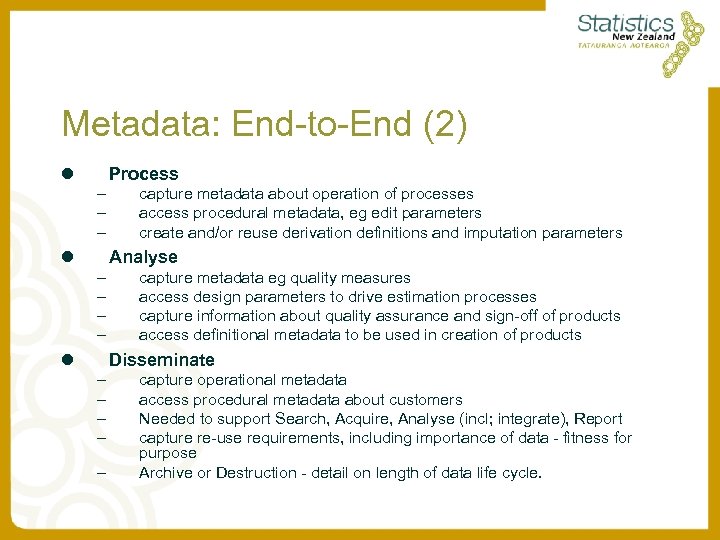

Metadata: End-to-End (2)
• Process
  – capture metadata about the operation of processes
  – access procedural metadata, e.g. edit parameters
  – create and/or reuse derivation definitions and imputation parameters
• Analyse
  – capture metadata, e.g. quality measures
  – access design parameters to drive estimation processes
  – capture information about quality assurance and sign-off of products
  – access definitional metadata to be used in the creation of products
• Disseminate
  – capture operational metadata
  – access procedural metadata about customers
  – needed to support Search, Acquire, Analyse (incl. integrate), Report
  – capture re-use requirements, including importance of data: fitness for purpose
• Archive or Destruction: detail on the length of the data life cycle
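
Under Analyse, design parameters drive estimation. As one illustration (not necessarily the estimator Statistics NZ uses), a stratified expansion estimate of a total weights each stratum's sample sum by N_h / n_h, with both sizes read from design metadata. The sketch assumes full response, and `estimate_total` is an illustrative name:

```python
from collections import defaultdict


def estimate_total(records, design):
    """Stratified expansion estimate of a population total.

    records: list of (stratum, value) pairs from sampled units
    design:  dict mapping stratum -> (N_h population size, n_h sample size)
    Assumes full response, so n_h is also the number of records in stratum h.
    """
    sample_sums = defaultdict(float)
    for stratum, value in records:
        sample_sums[stratum] += value

    total = 0.0
    for stratum, (pop_size, sample_size) in design.items():
        weight = pop_size / sample_size  # design weight N_h / n_h
        total += weight * sample_sums[stratum]
    return total


design = {"A": (100, 4), "B": (3, 3)}
records = [("A", 1.0), ("A", 2.0), ("A", 3.0), ("A", 4.0), ("B", 5.0), ("B", 5.0), ("B", 5.0)]
total = estimate_total(records, design)  # 25 * 10 + 1 * 15
```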

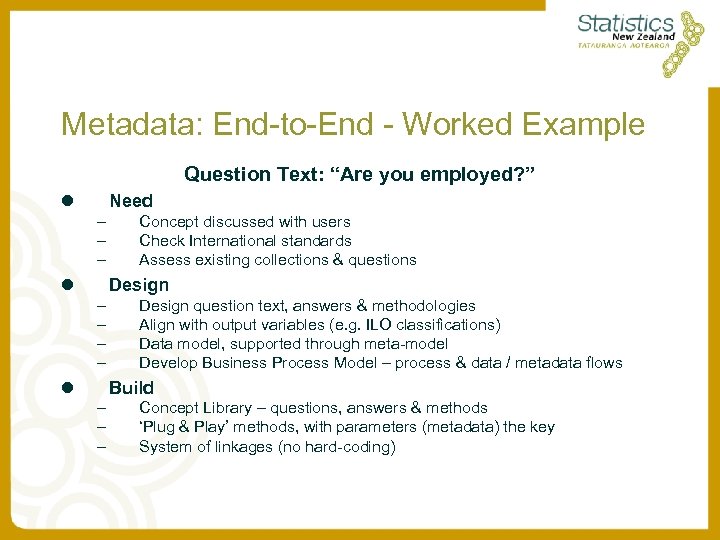

Metadata: End-to-End - Worked Example
Question text: "Are you employed?"
• Need
  – concept discussed with users
  – check international standards
  – assess existing collections & questions
• Design
  – design question text, answers & methodologies
  – align with output variables (e.g. ILO classifications)
  – data model, supported through a meta-model
  – develop Business Process Model: process & data / metadata flows
• Build
  – Concept Library: questions, answers & methods
  – 'Plug & Play' methods, with parameters (metadata) the key
  – system of linkages (no hard-coding)
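
The Build step relies on a concept library of questions, answers and methods, where 'plug & play' methods are driven by parameters held as metadata and linked by name rather than hard-coded. A minimal sketch, assuming a hypothetical in-process registry (`METHODS`, `register` and `derive` are illustrative names); the output categories are placeholders, not the ILO classification:

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical registry of reusable ("plug & play") methods, looked up by name.
METHODS: dict[str, Callable[..., object]] = {}


def register(name: str):
    """Decorator that adds a function to the method registry under `name`."""
    def wrap(fn):
        METHODS[name] = fn
        return fn
    return wrap


@register("recode")
def recode(answer: str, mapping: dict[str, str]) -> str:
    """Map a questionnaire answer to an output category."""
    return mapping[answer]


@dataclass
class Question:
    question_id: str
    text: str
    answers: list[str]
    method: str  # a link to a registered method, not hard-coded logic
    params: dict = field(default_factory=dict)  # method parameters held as metadata


def derive(question: Question, answer: str):
    """Resolve the question's method by name and apply it with its metadata parameters."""
    return METHODS[question.method](answer, **question.params)


employed = Question(
    question_id="Q_EMPLOYED",  # hypothetical identifier
    text="Are you employed?",
    answers=["Yes", "No"],
    method="recode",
    params={"mapping": {"Yes": "Employed", "No": "Not employed"}},  # placeholder categories
)
```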

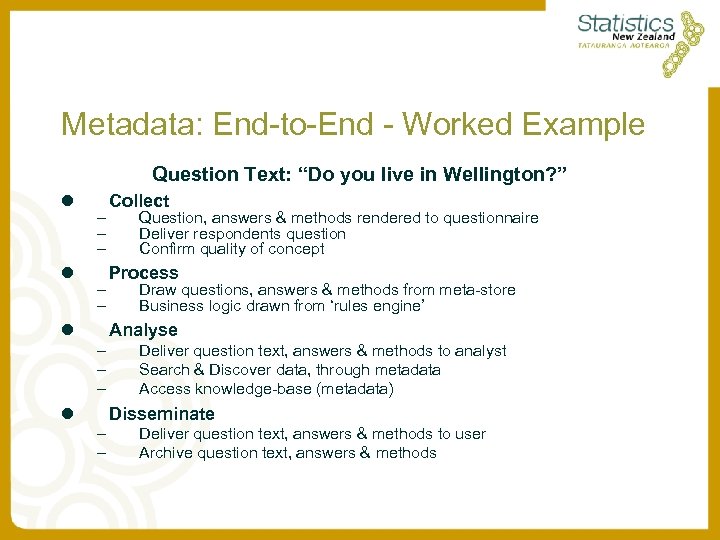

Metadata: End-to-End - Worked Example
Question text: "Do you live in Wellington?"
• Collect
  – question, answers & methods rendered to questionnaire
  – deliver question to respondents
  – confirm quality of concept
• Process
  – draw questions, answers & methods from the meta-store
  – business logic drawn from the 'rules engine'
• Analyse
  – deliver question text, answers & methods to analyst
  – search & discover data, through metadata
  – access knowledge base (metadata)
• Disseminate
  – deliver question text, answers & methods to user
  – archive question text, answers & methods
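
In the Collect step, question text and answers are rendered to a questionnaire from the metadata store rather than being hard-coded in the instrument. A minimal sketch, assuming a hypothetical in-memory `META_STORE` keyed by question id and a plain-text output format:

```python
# Hypothetical meta-store: question id -> question metadata.
META_STORE = {
    "Q1": {"text": "Do you live in Wellington?", "answers": ["Yes", "No"]},
    "Q2": {"text": "What is your age?", "answers": []},  # free-response numeric
}


def render_questionnaire(question_ids, store=META_STORE):
    """Render a plain-text questionnaire from question metadata (no hard-coded wording)."""
    lines = []
    for number, qid in enumerate(question_ids, start=1):
        question = store[qid]
        lines.append(f"{number}. {question['text']}")
        for answer in question["answers"]:
            lines.append(f"   [ ] {answer}")
    return "\n".join(lines)
```

The same metadata could be delivered to analysts and users in later phases, so the wording seen by respondents and by data users cannot drift apart.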

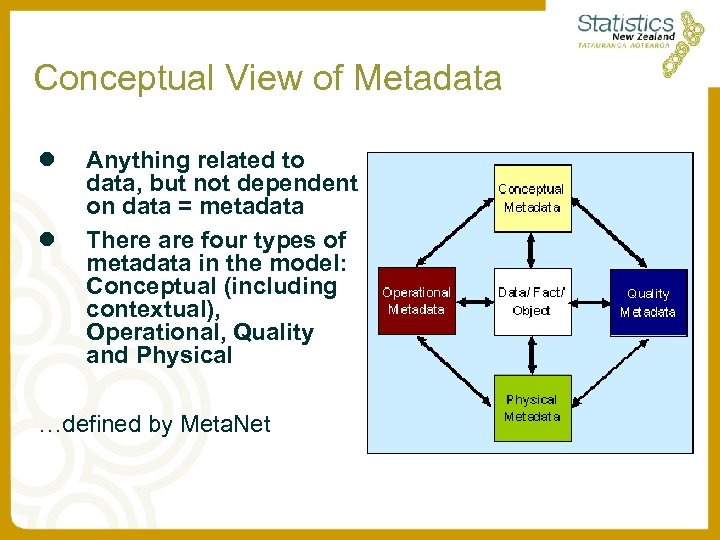

Conceptual View of Metadata
• Anything related to data, but not dependent on data, is metadata
• There are four types of metadata in the model: Conceptual (including contextual), Operational, Quality and Physical, as defined by MetaNet
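
The four metadata types can be carried as a tag on each metadata item so that tools can filter by type. A minimal sketch; the example items and values are hypothetical, and the assignment of each item to a type is an illustration rather than a MetaNet ruling:

```python
from dataclasses import dataclass
from enum import Enum


class MetadataType(Enum):
    CONCEPTUAL = "conceptual"  # includes contextual metadata
    OPERATIONAL = "operational"
    QUALITY = "quality"
    PHYSICAL = "physical"


@dataclass(frozen=True)
class MetadataItem:
    name: str
    kind: MetadataType
    value: object


def of_kind(items, kind):
    """Return the items of one metadata type, preserving their order."""
    return [item for item in items if item.kind is kind]


# Hypothetical items and values, for illustration only.
items = [
    MetadataItem("question text", MetadataType.CONCEPTUAL, "Are you employed?"),
    MetadataItem("number in stratum A", MetadataType.OPERATIONAL, 4),
    MetadataItem("response rate", MetadataType.QUALITY, 0.85),
    MetadataItem("storage location", MetadataType.PHYSICAL, "ide/raw/census2006"),
]
```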

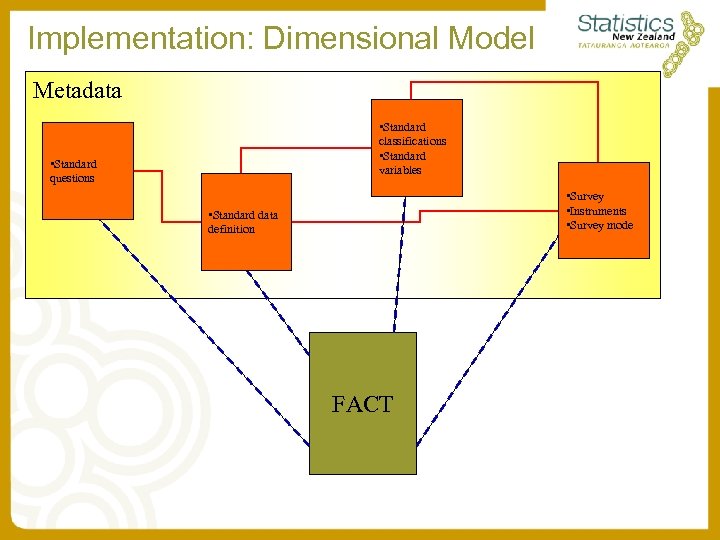

Implementation: Dimensional Model
A central FACT is linked to metadata dimensions:
• Standard classifications
• Standard variables
• Standard questions
• Survey instruments
• Survey mode
• Standard data definition
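
The dimensional model places a FACT at the centre with foreign keys to standard metadata dimensions. A minimal sketch using Python's built-in `sqlite3`, assuming hypothetical table and column names and showing only three of the six dimensions (the others would follow the same pattern):

```python
import sqlite3

SCHEMA = """
CREATE TABLE dim_question (question_id INTEGER PRIMARY KEY, text TEXT NOT NULL);
CREATE TABLE dim_classification (category_id INTEGER PRIMARY KEY,
                                 classification TEXT NOT NULL, category TEXT NOT NULL);
CREATE TABLE dim_survey_mode (mode_id INTEGER PRIMARY KEY, mode TEXT NOT NULL);
CREATE TABLE fact (
    fact_id INTEGER PRIMARY KEY,
    question_id INTEGER NOT NULL REFERENCES dim_question(question_id),
    category_id INTEGER NOT NULL REFERENCES dim_classification(category_id),
    mode_id INTEGER NOT NULL REFERENCES dim_survey_mode(mode_id),
    value REAL
);
"""

QUERY = """
SELECT q.text, c.classification, c.category, m.mode, f.value
FROM fact f
JOIN dim_question q ON q.question_id = f.question_id
JOIN dim_classification c ON c.category_id = f.category_id
JOIN dim_survey_mode m ON m.mode_id = f.mode_id
"""


def build_example():
    con = sqlite3.connect(":memory:")
    con.execute("PRAGMA foreign_keys = ON")  # SQLite does not enforce them by default
    con.executescript(SCHEMA)
    con.execute("INSERT INTO dim_question VALUES (1, 'Do you live in Wellington?')")
    con.execute("INSERT INTO dim_classification VALUES (1, 'NZ Island', 'NTH ISL')")
    con.execute("INSERT INTO dim_survey_mode VALUES (1, 'CAI')")
    con.execute("INSERT INTO fact VALUES (1, 1, 1, 1, 1.0)")  # hypothetical measure
    return con


rows = build_example().execute(QUERY).fetchall()
```

Because every fact row points at shared dimensions, data from different collections stays integrable through those dimensions without first being merged into one store.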

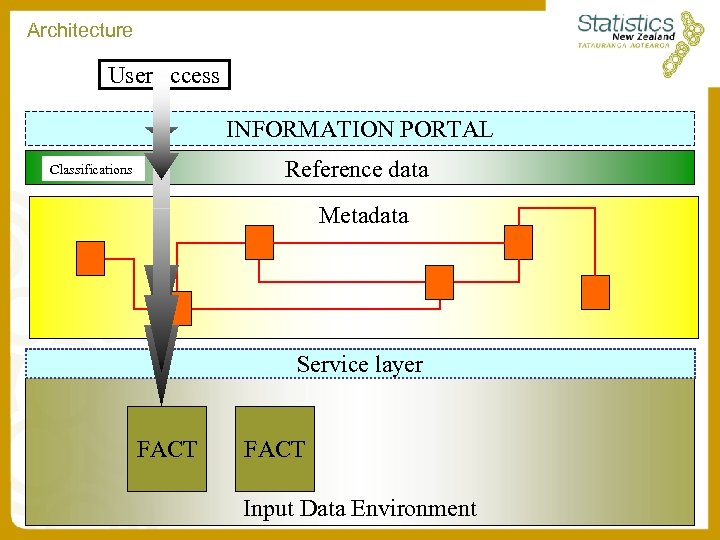

Architecture
Diagram elements: User access via the Information Portal; Reference data, Classifications and Metadata; Service layer; FACT (Input Data Environment)
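
The architecture puts a service layer between user access (the Information Portal) and the underlying stores. A minimal sketch of that separation, assuming a hypothetical `MetadataService` interface and an in-memory stand-in implementation; a real service would sit in front of the reference data and classification stores:

```python
from typing import Protocol


class MetadataService(Protocol):
    """Service interface the portal depends on (hypothetical)."""

    def categories(self, classification: str) -> list[str]: ...


class InMemoryMetadataService:
    """Stand-in implementation backed by a dictionary."""

    def __init__(self, classifications: dict[str, list[str]]) -> None:
        self._classifications = classifications

    def categories(self, classification: str) -> list[str]:
        return list(self._classifications[classification])


class InformationPortal:
    """User-facing component that reaches metadata only through the service layer."""

    def __init__(self, metadata: MetadataService) -> None:
        self._metadata = metadata

    def describe(self, classification: str) -> str:
        return f"{classification}: {', '.join(self._metadata.categories(classification))}"


portal = InformationPortal(InMemoryMetadataService({"NZ Island": ["NTH ISL"]}))
```

Because the portal depends only on the interface, the store behind it can be replaced without changing user-facing code.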

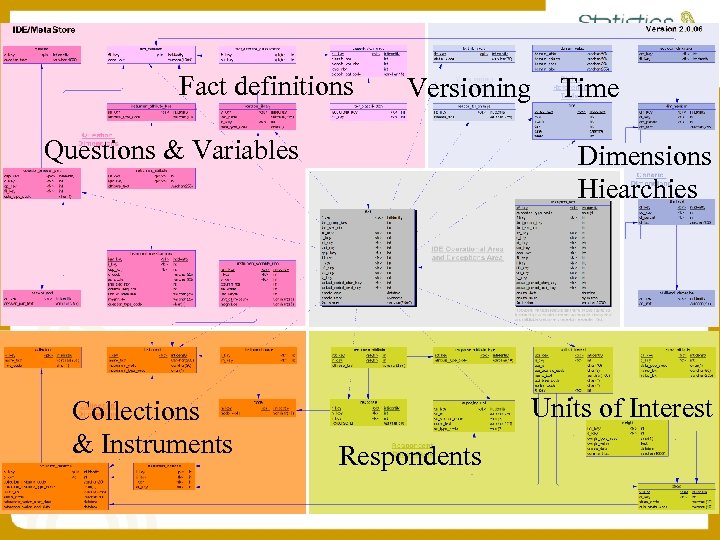

Diagram elements: Fact definitions, Versioning, Questions & Variables, Collections & Instruments, Time, Dimensions, Hierarchies, Units of Interest, Respondents

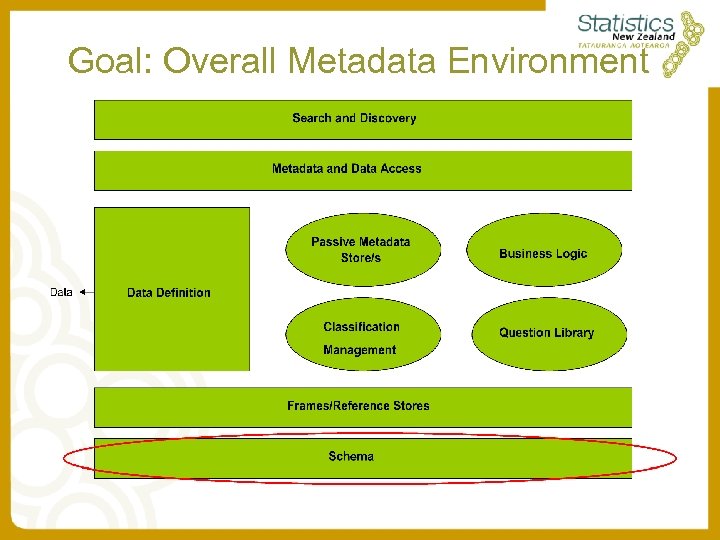

Goal: Overall Metadata Environment

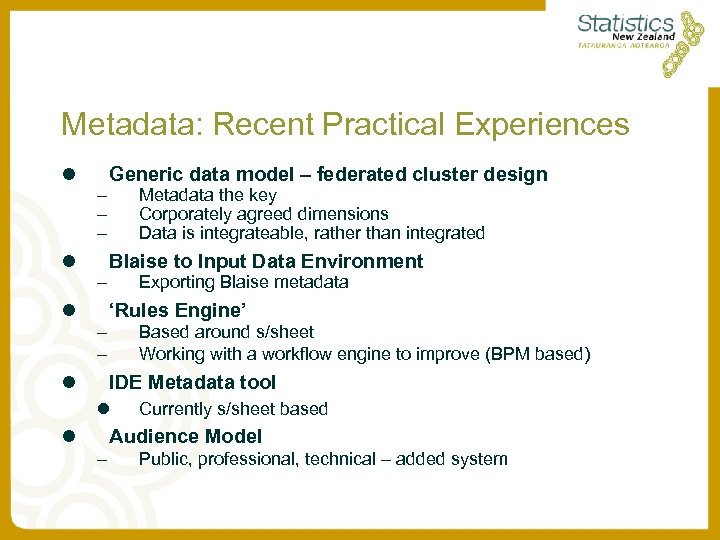

Metadata: Recent Practical Experiences
• Generic data model: federated cluster design
  – metadata the key
  – corporately agreed dimensions
  – data is integrable, rather than integrated
• Blaise to Input Data Environment
  – exporting Blaise metadata
• 'Rules Engine'
  – based around a spreadsheet
  – working with a workflow engine to improve it (BPM based)
• IDE metadata tool
  – currently spreadsheet based
  – Audience Model: public, professional, technical, added system
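
The 'rules engine' is described as spreadsheet-based. A minimal sketch of that idea, assuming the sheet is exported as CSV with hypothetical columns `rule_id`, `variable`, `min` and `max`, and that every rule is a simple range edit:

```python
import csv
import io

# Hypothetical rule sheet, as it might be exported from a spreadsheet to CSV.
RULES_CSV = """rule_id,variable,min,max
R1,age,0,120
R2,hours_worked,0,168
"""


def load_rules(text):
    """Parse range-edit rules from CSV text into dictionaries."""
    return [
        {
            "rule_id": row["rule_id"],
            "variable": row["variable"],
            "min": float(row["min"]),
            "max": float(row["max"]),
        }
        for row in csv.DictReader(io.StringIO(text))
    ]


def apply_rules(record, rules):
    """Return the ids of the rules that a record fails."""
    failures = []
    for rule in rules:
        value = record.get(rule["variable"])
        if value is None:
            continue  # missing values would need their own rule type
        if not rule["min"] <= value <= rule["max"]:
            failures.append(rule["rule_id"])
    return failures


rules = load_rules(RULES_CSV)
```

Edit parameters then live with the metadata, and subject-matter staff can change them in the sheet without a code release.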

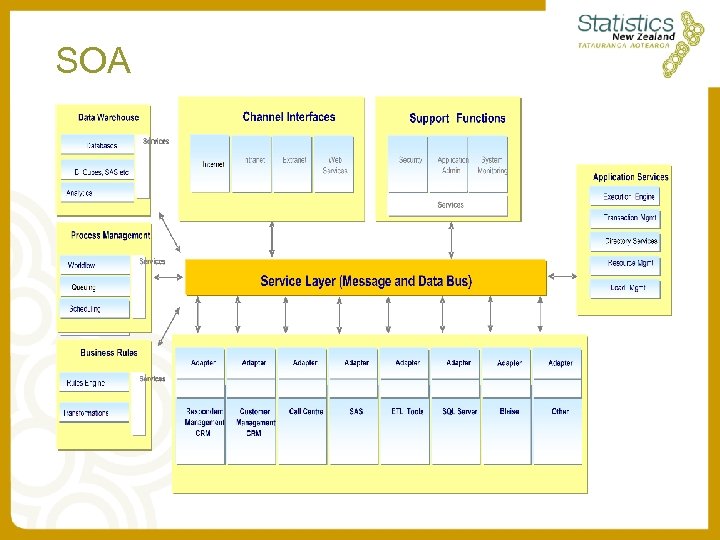

SOA

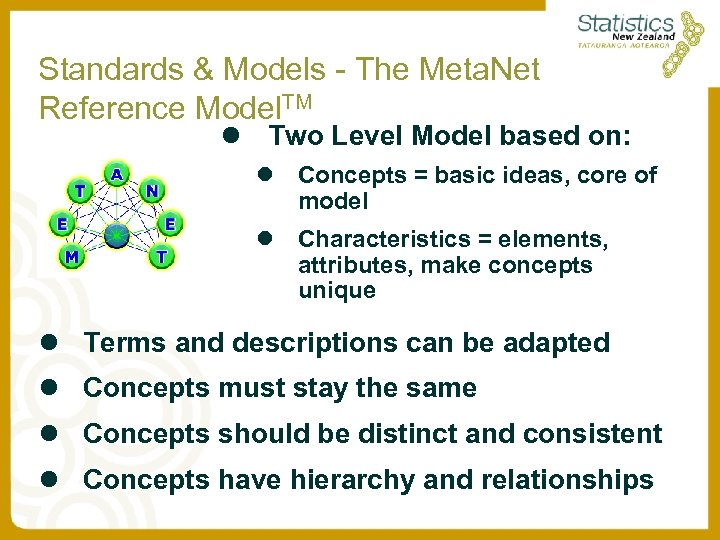

Standards & Models - The MetaNet Reference Model™
• Two-level model based on:
  – Concepts: basic ideas, the core of the model
  – Characteristics: elements and attributes that make concepts unique
• Terms and descriptions can be adapted
• Concepts must stay the same
• Concepts should be distinct and consistent
• Concepts have hierarchy and relationships
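
The two-level model separates stable concepts (with their characteristics and hierarchy) from the local terms and descriptions that may be adapted. A minimal sketch of that separation, assuming illustrative concept names and a hypothetical local term; it is not MetaNet's actual concept list:

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class Concept:
    """Level 1: a stable concept. Frozen, because concepts must stay the same."""
    concept_id: str
    characteristics: tuple[str, ...] = ()  # Level 2: attributes that make the concept unique
    parent: Optional["Concept"] = None  # position in the concept hierarchy


@dataclass(frozen=True)
class LocalTerm:
    """An organisation-specific term and description attached to a concept."""
    concept: Concept
    term: str
    description: str


def lineage(concept: Optional[Concept]) -> list[str]:
    """List concept ids from a concept up to the root of its hierarchy."""
    path = []
    while concept is not None:
        path.append(concept.concept_id)
        concept = concept.parent
    return path


collection = Concept("Collection", characteristics=("frequency",))
instance = Concept("Collection Instance", characteristics=("reference period",), parent=collection)
term = LocalTerm(instance, "Survey round", "One run of a collection")  # hypothetical local wording
renamed = replace(term, term="Survey cycle")  # adapting the term leaves the concept untouched
```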

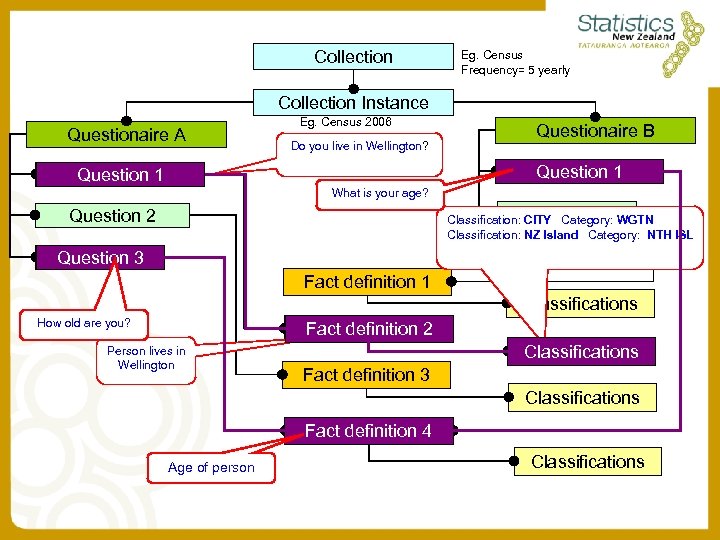

Example (diagram): a Collection (e.g. Census, frequency = 5-yearly) has Collection Instances (e.g. Census 2006), each with Questionnaires (A, B) containing Questions such as "Do you live in Wellington?", "What is your age?" and "How old are you?". Questions link to Fact definitions (e.g. "Person lives in Wellington", "Age of person"), and fact definitions link to Classifications and Categories (e.g. classification NZ Island, category NTH ISL; category WGTN CITY).
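
The example links differently worded questions to shared fact definitions, which is what makes re-use possible. A minimal sketch, assuming which questionnaire carries which question (the slide's layout does not make this fully recoverable) and omitting classifications for brevity; all class and function names are illustrative:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FactDefinition:
    name: str


@dataclass(frozen=True)
class Question:
    text: str
    fact: FactDefinition  # each question is linked to the fact it measures


@dataclass
class CollectionInstance:
    collection: str
    frequency: str
    instance: str
    questionnaires: dict[str, list[Question]]


AGE = FactDefinition("Age of person")
LIVES_IN_WGTN = FactDefinition("Person lives in Wellington")

census_2006 = CollectionInstance(
    collection="Census",
    frequency="5-yearly",
    instance="Census 2006",
    # Assumed placement of questions on questionnaires, for illustration only.
    questionnaires={
        "Questionnaire A": [
            Question("Do you live in Wellington?", LIVES_IN_WGTN),
            Question("What is your age?", AGE),
        ],
        "Questionnaire B": [Question("How old are you?", AGE)],
    },
)


def questions_for(fact: FactDefinition, ci: CollectionInstance) -> list[str]:
    """All question wordings, across questionnaires, that feed one fact definition."""
    return sorted(
        q.text for qs in ci.questionnaires.values() for q in qs if q.fact == fact
    )
```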

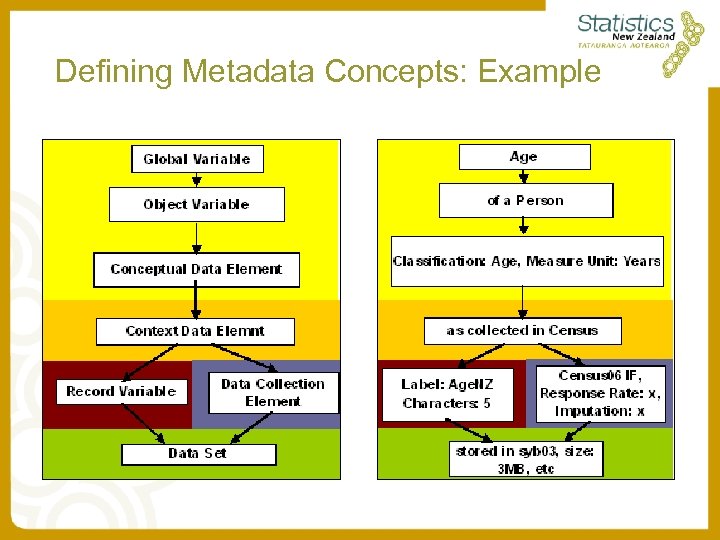

Defining Metadata Concepts: Example

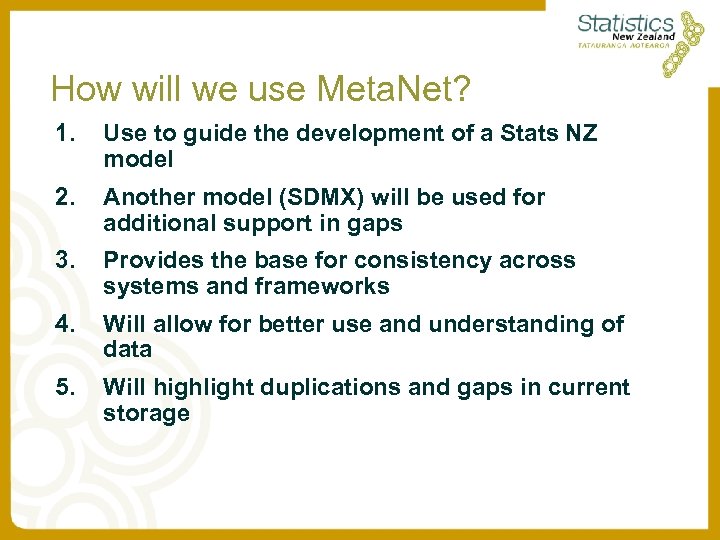

How will we use MetaNet?
1. To guide the development of a Stats NZ model
2. Another model (SDMX) will be used to fill the gaps
3. It provides the base for consistency across systems and frameworks
4. It will allow better use and understanding of data
5. It will highlight duplications and gaps in current storage

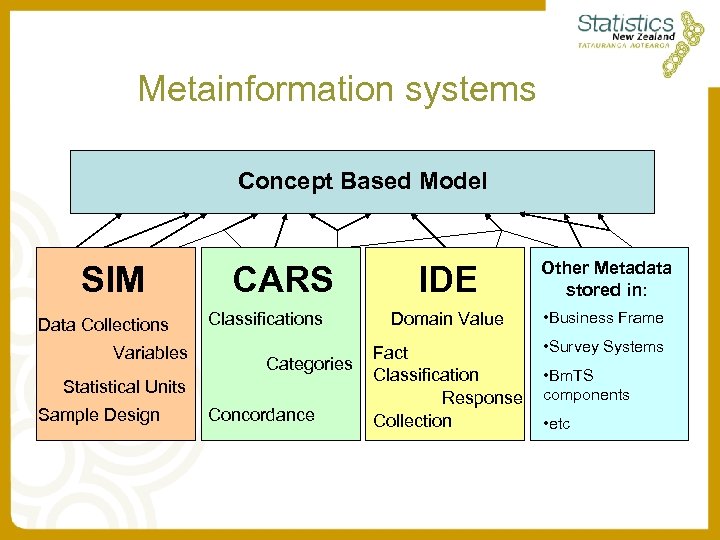

Metainformation systems
Concept-based model linking SIM, CARS and IDE. Concepts shown in the diagram: Data Collections, Variables, Classifications, Categories, Statistical Units, Sample Design, Concordance, Domain Value, Fact, Classification, Response, Collection.
Other metadata stored in:
• Business Frame
• Survey Systems
• BmTS components
• etc.

Metadata Users - External
• Government
• Public
• External Statisticians (incl. international organisations)

Metadata Users - Internal
• Statistical Analysts
• IT Personnel (business analysts, IT designers & technical leads, developers, testers etc.)
• Management
• Data Managers / Custodians / Archivists
• Statistical Methodologists
• External Statisticians (researchers etc.)
• Architects: data, process & application
• Respondent Liaison
• Survey Developers
• Metadata and Interoperability Experts
• Project Managers & Teams
• IT Management
• Product Development and Publishing
• Information Customer Services

Lessons Learnt – Metadata Concepts
• Apart from the 'basic' principles, metadata principles are quite difficult to understand well, and this makes communicating them even harder.
• Everyone has a view on what metadata they need: the list of metadata requirements / elements can be endless.
• Given the breadth of metadata, an incremental approach to the delivery of storage facilities is fundamental.
• Establish a metadata framework, upon which discussions can be based, that best fits your organisation: we have agreed on MetaNet, supplemented with SDMX.

Lessons Learnt – BPM
• To make data re-use a reality, there is a need to go back to first principles, i.e. what is the concept behind the data item? Surprisingly, some subject-matter areas may find it difficult to identify these first principles, particularly if the collection has been in existence for some time.
• Be prepared for survey-specific requirements: the BPM exercise is essential for defining the common processes and identifying any survey-specific features that may be required.

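The BPM lesson (shared common processes, plus room for survey-specific features) can be illustrated with a small sketch. This is not Statistics NZ's design: the names `CommonProcess`, `drop_incomplete` and `cap_turnover`, and the turnover cap itself, are hypothetical. It assumes each common step can carry optional survey-specific steps that run immediately after it.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical record and step types, for illustration only.
Record = dict[str, Optional[float]]
Step = Callable[[list[Record]], list[Record]]


@dataclass
class CommonProcess:
    """A generic process: shared steps, each with optional survey-specific add-ons."""
    steps: list[tuple[str, Step]]
    # Survey-specific steps, keyed by the name of the common step they follow.
    extensions: dict[str, list[Step]] = field(default_factory=dict)

    def run(self, records: list[Record]) -> list[Record]:
        for name, step in self.steps:
            records = step(records)  # common behaviour, shared by all surveys
            for extra in self.extensions.get(name, []):
                records = extra(records)  # survey-specific behaviour
        return records


def drop_incomplete(records: list[Record]) -> list[Record]:
    """Common step: discard records that have any missing (None) value."""
    return [r for r in records if all(v is not None for v in r.values())]


def cap_turnover(records: list[Record]) -> list[Record]:
    """Survey-specific step (hypothetical rule): cap 'turnover' at 1,000,000."""
    return [{**r, "turnover": min(r["turnover"], 1_000_000.0)} for r in records]


# One survey reuses the common 'edit' step and adds its own rule after it.
business_survey = CommonProcess(
    steps=[("edit", drop_incomplete)],
    extensions={"edit": [cap_turnover]},
)
edited = business_survey.run([{"turnover": 2e6}, {"turnover": None}, {"turnover": 5.0}])
```

The design choice is that survey-specific behaviour is attached to a named common step rather than copied into a separate pipeline, which keeps the common process the single point of change.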

Lessons Learnt – Implementation
• Without significant governance, it is very easy to start with a generic service concept and still deliver a silo solution. Ongoing upgrading of all generic services is needed to avoid this.
• Expecting input/output-specific projects to deliver generic services leads to significant tensions, particularly when scope is added within fixed resource schedules. Delivering business services while also developing and delivering the underlying architecture services adds significant complexity to implementation.

Lessons Learnt – Implementation
• A well-defined relationship between data and metadata is very important. Directly connecting each data element, defined as a statistical fact, to its metadata dimensions proved successful, because it let us test and use the concept before the (costly) development of metadata management systems.

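A minimal sketch of what "a data element defined as a statistical fact, directly connected to metadata dimensions" can mean in practice. The `Dimension`/`Fact` structures, the `validate` helper and the region and period codes are hypothetical illustrations, not the actual Statistics NZ data model. It assumes each fact carries exactly one code per dimension, which is enough to test the linkage before any metadata management system exists.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dimension:
    """A metadata dimension (e.g. a classification) and its valid codes."""
    name: str
    codes: frozenset[str]


@dataclass(frozen=True)
class Fact:
    """A statistical fact: one value located by one code per dimension."""
    value: float
    coordinates: tuple[tuple[str, str], ...]  # (dimension name, code) pairs


def validate(fact: Fact, dimensions: dict[str, Dimension]) -> list[str]:
    """List the ways a fact fails to link to the dimension metadata."""
    problems = []
    given = dict(fact.coordinates)
    for name in dimensions:
        if name not in given:
            problems.append(f"missing dimension {name}")
    for name, code in given.items():
        dimension = dimensions.get(name)
        if dimension is None:
            problems.append(f"unknown dimension {name}")
        elif code not in dimension.codes:
            problems.append(f"code {code!r} not in {name}")
    return problems


# Illustrative dimensions and codes only.
dims = {
    "region": Dimension("region", frozenset({"NI", "SI"})),
    "period": Dimension("period", frozenset({"2007Q1", "2007Q2"})),
}
ok = Fact(123.0, (("region", "NI"), ("period", "2007Q1")))
bad = Fact(45.0, (("region", "NI"), ("period", "2007Q3")))
```

Here `validate(ok, dims)` returns an empty list, while `bad` is rejected because its period code is not defined in the `period` dimension.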

Lessons Learnt – SOA
• Adopting and implementing SOA as a Statistical Information Architecture requires a significant mind shift: from data processing to enabling enterprise business processes through the delivery of enterprise services.
• Skilled resources familiar with SOA concepts and their application are very difficult to recruit, and equally difficult to grow.

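The shift from data processing to enterprise services can be sketched as consumers depending on a shared service contract rather than on their own copy of the logic. This is an in-process illustration only; a real SOA would expose the contract over a network. The names `ClassificationService`, `InMemoryClassificationService` and `code_responses`, and the code `IND-01`, are hypothetical.

```python
from typing import Optional, Protocol


class ClassificationService(Protocol):
    """Hypothetical enterprise service contract: code free text to a classification."""

    def code(self, classification: str, text: str) -> Optional[str]: ...


class InMemoryClassificationService:
    """One shared implementation of the contract, backed by lookup tables."""

    def __init__(self, lookups: dict[str, dict[str, str]]) -> None:
        self._lookups = lookups

    def code(self, classification: str, text: str) -> Optional[str]:
        # Normalise the response text, then look it up; None means "could not code".
        return self._lookups.get(classification, {}).get(text.strip().lower())


def code_responses(service: ClassificationService, classification: str,
                   responses: list[str]) -> list[Optional[str]]:
    """A consumer (e.g. one survey's pipeline) that depends only on the contract."""
    return [service.code(classification, r) for r in responses]


# Illustrative lookup data; the codes are made up.
shared = InMemoryClassificationService({"industry": {"dairy farming": "IND-01"}})
survey_a = code_responses(shared, "industry", [" Dairy farming ", "space mining"])
survey_b = code_responses(shared, "industry", ["DAIRY FARMING"])
```

Both surveys get identical coding from one service, so an improvement to the shared lookup reaches every consumer at once; a silo solution would duplicate the lookup inside each survey.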

Lessons Learnt – Governance
• The move from ‘silo systems’ to a BmTS-type model is a major challenge that should not be underestimated.
• Having an active Standards Governance Committee, made up of senior representatives from across the organisation (ours includes the three DGSs), is very useful. This forum provides an environment in which standards can be discussed and agreed, and the Committee can act as the 'authority to answer to' if need be.

Lessons Learnt – Other
• There is a need to consider the audience of the metadata.
• Some metadata is better than no metadata, as long as it is of good quality.
• Do not expect to get it 100% right the very first time.

Questions?