- Number of slides: 30

Standardized Survey Tools for Assessment in Archives and Special Collections
Elizabeth Yakel, University of Michigan
Library Assessment Conference, August 4th, 2008
SCHOOL OF INFORMATION. UNIVERSITY OF MICHIGAN

Archival Metrics
• Drs. Wendy Duff and Joan Cherry, University of Toronto
• Dr. Helen Tibbo, University of North Carolina
• Aprille McKay, J.D., University of Michigan
• Andrew W. Mellon Foundation Grant (June 2005 – March 2008)
• Toward the Development of Archival Metrics in College and University Archives and Special Collections
• Archival Metrics
• http://archivalmetrics.org

Archival Metrics Toolkits
• On-site Researcher
• Archival Website
• Online Finding Aids
• Student Researcher
• Teaching Support

Archives and Special Collections
• Weak culture of assessment
• Need the ability to perform user-based evaluation on concepts that are specific to archives and special collections
• No consistency in user-based evaluation tools
• No reliability in any survey tools
• No means of comparing data across repositories

Developing the Archival Metrics Toolkits
• Analysis of other survey instruments
• Interviews with archivists, faculty, and students to identify core concepts for evaluation
• Concept map
• Creation of the questionnaires
• Testing the questionnaires, survey administration procedures, and the instructions for use and analysis

Analysis of Other Instruments
• LibQUAL+
• E-metrics (Charles McClure and David Lankes, Florida State University)
• Definitions
• Question phraseology

Interviews
• Develop concepts for evaluation
• Identify areas of greatest concern
• Understand the feasibility of deploying surveys using different methods

Interviews: Availability (1)
• Archivist: This is a very big place and people are busy. If somebody doesn’t stop you to ask you a question and you don’t even see that they’re there because they don’t… we’re actually thinking about finding some mechanism like a bell rings when somebody walks in the door because you get so focused on your computer screen that you don’t even know somebody’s there. We understand why they’re a little intimidated. (MAM 02, lines 403-408)

Interviews: Availability (2)
• Student: I’ve said this a million times, but the access to someone who is open to helping. So not just someone who is supposed to be doing it, but who actually wants to. (MSM 02, lines 559-561)
• Professor: And they'll even do it after hours, almost every one of them. In fact they'd rather do it after hours because they don't have a crush of business in there. (MPM 04, lines 320-322)

Defining terms
• Accessibility of service
• Accessibility of Service is a measure of how easily potential users are able to avail themselves of the service and includes (but is certainly not limited to) such factors as: availability (both time and day of the week); site design (simplicity of interface); ADA compliance; ease of use; placement in website hierarchy if using web submission form or email link from the website; use of metatags for digital reference websites (indexed in major search tools, etc.); or multilingual capabilities in both interface and staff, if warranted based on target population (E-Metrics)

Conceptual Framework
• Quality of the Interaction
• Access to systems and services
• Physical Information Space
• Learning Outcomes

Quality of the Interaction
• Perceived expertise of the archivist
• Availability of staff to assist researchers
• Instructors and students in the interviews returned to these dimensions again and again as important in a successful visit.

Learning Outcomes
• Student Researcher
• Cognitive and affective outcomes
• Confidence

Testing of the tools
• Iterative design and initial user feedback
• Small-scale deployment
• Larger-scale testing

Pilot Testing
• Two phases
• Single archives / special collections
• Walked people through the questionnaire
• Post-test interview
• Clarity of questions
• Length of instrument
• Comprehensiveness of questions
• Willingness to complete a questionnaire of this type
• Single implementations of the questionnaire and administration procedures until the instrument was stable

Pilot Testing Outcomes
• Lots of feedback on question wording
• Decision to include a definition of finding aids
• Decision not to pursue a question bank approach
• Move away from expectation questions
• Decisions about how to administer the surveys
• On-site researcher and student researcher surveys became paper-based
• Online finding aids and website surveys: online

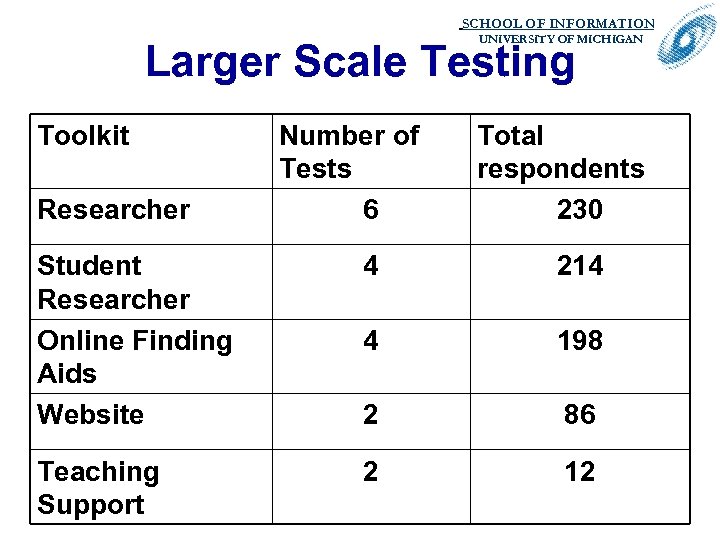

Larger Scale Testing

| Toolkit | Number of Tests | Total Respondents |
| --- | --- | --- |
| Researcher | 6 | 230 |
| Student Researcher | 4 | 214 |
| Online Finding Aids | 4 | 198 |
| Website | 2 | 86 |
| Teaching Support | 2 | 12 |

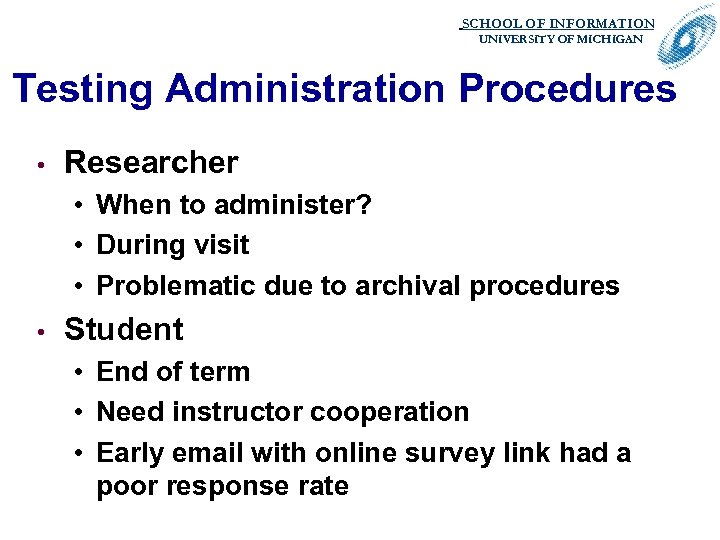

Testing Administration Procedures
• Researcher
  • When to administer?
  • During visit
  • Problematic due to archival procedures
• Student
  • End of term
  • Need instructor cooperation
  • Early email with online survey link had a poor response rate

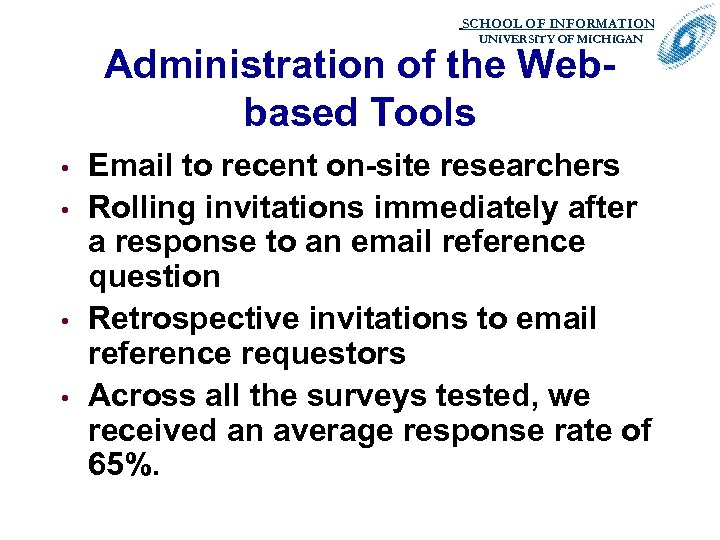

Administration of the Web-based Tools
• Email to recent on-site researchers
• Rolling invitations immediately after a response to an email reference question
• Retrospective invitations to email reference requestors
Across all the surveys tested, we received an average response rate of 65%.

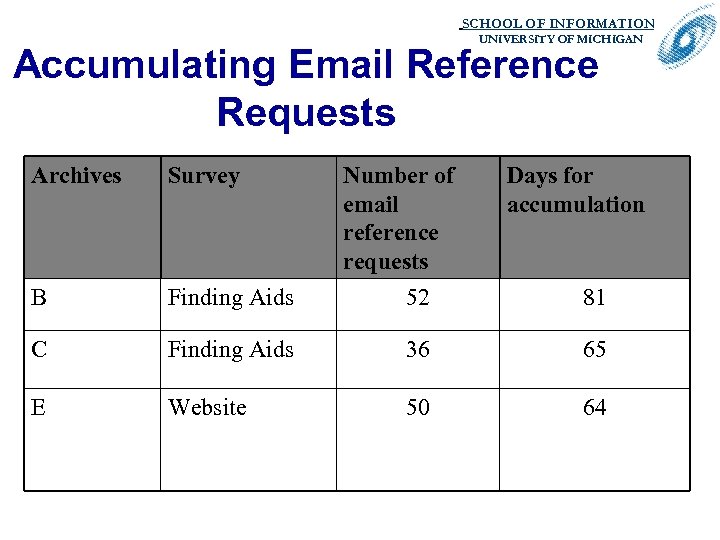

Accumulating Email Reference Requests
Archives Survey Number of email reference requests 52 Days for accumulation B Finding Aids C Finding Aids 36 65 E Website 50 64 81

Administration Recommendations
• Email reference and on-site researchers represent two different populations
• Few email reference requestors had visited the repository in person

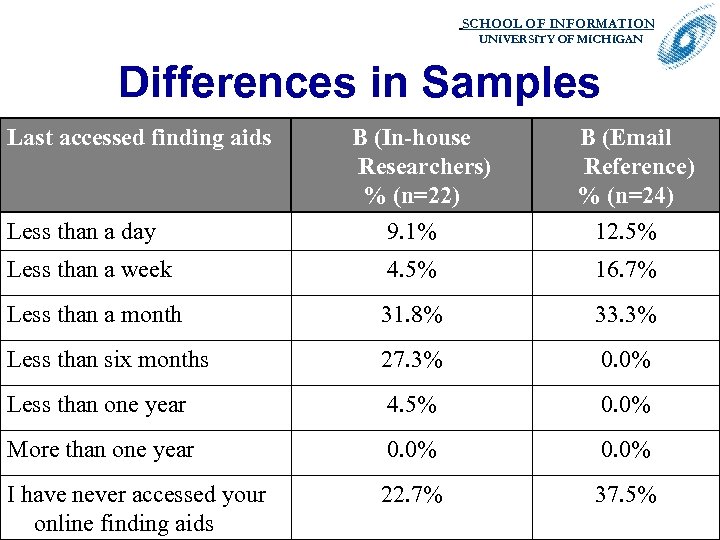

Differences in Samples

| Last accessed finding aids | B (In-house Researchers) % (n=22) | B (Email Reference) % (n=24) |
| --- | --- | --- |
| Less than a day | 9.1% | 12.5% |
| Less than a week | 4.5% | 16.7% |
| Less than a month | 31.8% | 33.3% |
| Less than six months | 27.3% | 0.0% |
| Less than one year | 4.5% | 0.0% |
| More than one year | 0.0% | 0.0% |
| I have never accessed your online finding aids | 22.7% | 37.5% |

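As a rough sanity check on the two samples above, the percentages can be converted back into respondent counts. This is only a sketch: the function name `percents_to_counts` is hypothetical, and the "More than one year" row is assumed to be 0.0% in both columns, because the slide text shows a single value there and the other rows already account for every respondent.

```python
def percents_to_counts(percents, n):
    """Convert rounded percentages back to whole respondent counts."""
    return [round(p * n / 100) for p in percents]

# Row order: less than a day, week, month, six months, one year,
# more than one year (assumed 0.0 in both columns), never accessed.
in_house_pct = [9.1, 4.5, 31.8, 27.3, 4.5, 0.0, 22.7]   # n = 22
email_pct = [12.5, 16.7, 33.3, 0.0, 0.0, 0.0, 37.5]     # n = 24

in_house_counts = percents_to_counts(in_house_pct, 22)
email_counts = percents_to_counts(email_pct, 24)

print(in_house_counts, sum(in_house_counts))
print(email_counts, sum(email_counts))
```

If the recovered counts add up to the stated sample sizes, the percentages are internally consistent.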

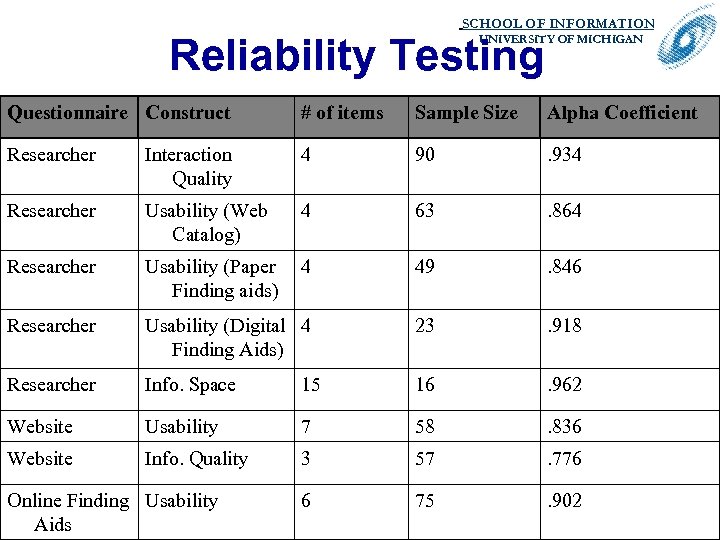

Reliability Testing

| Questionnaire | Construct | # of items | Sample Size | Alpha Coefficient |
| --- | --- | --- | --- | --- |
| Researcher | Interaction Quality | 4 | 90 | .934 |
| Researcher | Usability (Web Catalog) | 4 | 63 | .864 |
| Researcher | Usability (Paper Finding Aids) | 4 | 49 | .846 |
| Researcher | Usability (Digital Finding Aids) | 4 | 23 | .918 |
| Researcher | Info. Space | 15 | 16 | .962 |
| Website | Usability | 7 | 58 | .836 |
| Website | Info. Quality | 3 | 57 | .776 |
| Online Finding Aids | Usability | 6 | 75 | .902 |

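The alpha coefficients reported above are presumably Cronbach's alpha, the usual internal-consistency statistic for multi-item scales; the slides do not say how they were computed. The sketch below shows the standard formula, alpha = k/(k-1) × (1 − Σ item variances / variance of total scores), on made-up data. The function name `cronbach_alpha` and the `responses` matrix are hypothetical and are not taken from the toolkit data.

```python
from statistics import variance

def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two respondents")
    # Variance of each item (column) across respondents.
    item_variances = [variance(column) for column in zip(*rows)]
    # Variance of each respondent's summed scale score.
    total_variance = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)

# Hypothetical answers from five respondents to a four-item, 5-point scale.
responses = [
    [4, 5, 4, 4],
    [3, 3, 2, 3],
    [5, 5, 5, 4],
    [2, 2, 3, 2],
    [4, 4, 4, 5],
]

alpha = cronbach_alpha(responses)
print(f"alpha = {alpha:.3f}")
```

Values closer to 1 indicate that the items in a construct move together, which is how the table's coefficients are usually read.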

Reliability of Other Questions
• Free text and multiple choice questions generate consistent responses
• Student survey focuses on learning outcomes, confidence, and development of transferable skills
• Contradictory results in Questions 12 (‘Is archival research valuable for your goals?’) and 13 (‘Have you developed any skills by doing research in archives that help you in other areas of your work or studies?’)
• Moderately correlated: in a chi-square test, the phi coefficient was .296; χ²(1, N = 426) = 37.39, p < .05.

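For a 2×2 table, the phi coefficient follows from the chi-square statistic as phi = sqrt(χ² / N), and the figures on the slide are consistent with that relationship. The sketch below assumes an uncorrected Pearson chi-square (no Yates correction); the helper names and the example cell counts are hypothetical, since the slides do not give the underlying 2×2 table.

```python
import math

def chi_square_2x2(a, b, c, d):
    """Uncorrected Pearson chi-square for the 2x2 table [[a, b], [c, d]]."""
    n = a + b + c + d
    numerator = n * (a * d - b * c) ** 2
    denominator = (a + b) * (c + d) * (a + c) * (b + d)
    return numerator / denominator

def phi_from_chi_square(chi2, n):
    """Phi coefficient (effect size) for a 2x2 table."""
    return math.sqrt(chi2 / n)

# Values reported on the slide: chi-square = 37.39 with N = 426.
phi = phi_from_chi_square(37.39, 426)
print(f"phi = {phi:.3f}")  # about 0.296, matching the slide

# Made-up cell counts, only to show the chi-square helper in use.
example_chi2 = chi_square_2x2(10, 20, 30, 40)
print(f"example chi-square = {example_chi2:.3f}")
```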

Archival Metrics Toolkits
1. Questionnaire
   • Word document
   • PDF
   • Can transfer Survey Monkey version to other Survey Monkey accounts
2. Administering the Survey (Instructions)
3. Preparing your data for analysis
4. Excel spreadsheet pre-formatted for data from the Questionnaire
5. Pre-coded questionnaire
6. SPSS file pre-formatted for data from the Website Questionnaire
7. Sample report

Administration Instructions
• How the questionnaires can and cannot be amended
• Identifying a survey population
• Sampling strategies
• Soliciting subjects
• Applying to their university’s Institutional Review Board (IRB) or ethics panel

Analysis Instructions
• Instructions
• Preformatted Excel spreadsheet
• Codebook
• Preformatted SPSS file

Archival Metrics Toolkits
• Free and available for download
• Creative Commons License
• Must “register” but this information will not be shared
• We will be doing our own user-based evaluation of their use

Thank you and Questions
